Abstract

Objective: To evaluate the benefit of a wireless remote microphone (MM) for speech recognition in noise in adult bimodal cochlear implant (CI) users, both in a test setting and in daily life. Design: This prospective study measured speech reception thresholds (SRTs) in noise in a repeated-measures design with two factors: bimodal hearing and MM use. Participants also completed a 3-week home trial with the MM. Study sample: Thirteen post-lingually deafened adult bimodal CI users. Results: The SRT improved significantly, by 5.4 dB, when the MM was used with the CI compared with the CI alone without the MM. Pairing the MM to the hearing aid (HA) as well yielded a further 2.2 dB improvement in SRT compared with pairing it to the CI alone. In daily life, participants reported better speech perception in various challenging listening situations when using the MM in the bimodal condition. Conclusion: When advanced wireless remote microphone techniques are applied to improve speech understanding in adult bimodal CI users, bimodal listening (CI and HA) offers a clear advantage over the CI alone.

Introduction

In recent years, an increasing number of patients with residual hearing have received a cochlear implant (CI). These patients are good candidates for using a CI in one ear and a hearing aid (HA) in the other, which is referred to as bimodal hearing. Compared with unilateral CI use alone, bimodal hearing has been shown to improve speech recognition in quiet and in noise, as well as sound localisation (Morera et al, 2012; Illg et al, 2014; Blamey et al, 2015; Dorman et al, 2015). However, speech comprehension remains a challenge in acoustically complex, real-life environments, where reverberation and background noise degrade the understanding of conversation (Lenarz et al, 2012; Srinivasan et al, 2013).

The introduction of directional microphones for CIs has significantly improved hearing in noise (Spriet et al, 2007; Hersbach et al, 2012). Directional microphones work best in near-field situations, where the speech source is close to and in front of the listener and the background noise is behind. In daily life, however, the full benefit of directional microphones is often not achieved, because most listening conditions do not meet these requirements. First, the speech source may be far from the CI microphone while the background noise is closer, so that the signal-to-noise ratio (SNR) remains too low for speech understanding despite the directional microphone. Second, reverberation can reduce the benefit of directional microphones. Third, background noise does not come only from behind the listener but may also arrive from the side or the front, which diminishes the effect of directional microphones. Adaptive beamforming has been introduced to address this last limitation, as the direction and shape of the beam can be adjusted depending on the locations of the talkers and the background noise (Kreikemeier et al, 2013; Picou et al, 2014).
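To illustrate why noise arriving from the side or front reduces the benefit, the sketch below computes the relative sensitivity of an idealised first-order cardioid, a textbook directional pattern. This is an assumption for illustration only: the actual polar patterns of the CI processor's fixed and adaptive directional modes are not described here and will differ.

```python
import math


def cardioid_gain_db(angle_deg: float) -> float:
    """Sensitivity of an idealised first-order cardioid, in dB relative to 0 degrees.

    0 degrees is straight ahead (the talker); 180 degrees is directly behind.
    This is a textbook idealisation, not the pattern of any specific processor.
    """
    gain = 0.5 * (1.0 + math.cos(math.radians(angle_deg)))
    if gain < 1e-12:
        return float("-inf")  # theoretical null directly behind the listener
    return 20.0 * math.log10(gain)


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180):
        print(f"{angle:>3} deg: {cardioid_gain_db(angle):6.1f} dB")
```

Under this idealisation, noise from directly behind is nulled, whereas noise at 90° is attenuated by only about 6 dB and noise at 45° by less than 2 dB, which is why noise from the side or front limits the benefit.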

Another way to improve hearing in demanding listening situations is to use a wireless remote microphone system. Typically, such a system consists of a microphone placed near the talker's mouth, which picks up the speech, converts it to an electrical waveform and transmits the signal by digital radio frequency (RF) transmission directly to a receiver worn by the listener. Because the signal is acquired at or near the source, the SNR at the listener's ear is improved, and the negative effects of ambient noise, distance and reverberation are reduced. Previous research has shown considerable improvements in speech recognition in noise for unilateral CI users with RF systems (Schafer & Thibodeau, 2004; Schafer et al, 2009; de Ceulaer et al, 2015). These were laboratory studies using multiple-loudspeaker setups, with noise presented from behind or beside the participants. In the study of de Ceulaer et al (2015), a diffuse noise field was created with four loudspeakers, while speech was presented from three loudspeakers; bimodal users were instructed to remove their HA during testing. Depending on the test setup, these studies reported SNR improvements of 6–14 dB.

A new wireless remote microphone technology based on the 2.4 GHz frequency band has been developed. Using this technology, several HA manufacturers have developed wireless assistive listening devices, such as remote microphones and TV streamers. Cochlear Ltd. and Resound Ltd. developed the Cochlear Wireless Mini Microphone, also sold as the Resound Mini Microphone: a small personal streaming device that transmits sound from its microphone, or the output of any external audio source, directly to a Cochlear sound processor and to a Resound HA. The microphone can be clipped onto the talker's clothing and provides a wireless link between talker and listener that can potentially improve the SNR. In a study by Wolfe et al (2015), a significant improvement in speech recognition in quiet and in noise was found for both unilateral and bilateral CI users when using this wireless microphone. Here too, bimodal users were instructed to remove their HA during testing.

Recently, Cochlear Ltd. and Resound Ltd. introduced an upgraded version of the system, called the Wireless Mini Microphone 2+ (Cochlear) or Wireless Multi Microphone (Resound), hereafter abbreviated as MM. The upgrade adds directional microphones to the design and extends the operating range of the MM to 25 m.

In all adult studies describing the effect of RF systems or the Cochlear Mini Microphone in CI users, these devices were connected only to the CI and not to the contralateral HA. The potential additional benefit of also enhancing contralateral acoustic hearing with a remote microphone system has not yet been investigated in adult CI users. The objective of this study was therefore to evaluate the potential benefit of an advanced wireless remote microphone system, used in a fixed omnidirectional microphone mode, in the bimodal situation: a CI in one ear, a HA in the contralateral ear, and the remote microphone signal streamed to both the CI and the HA. We investigated the effect on speech recognition in noise in adult bimodal CI users, both in a test setting and in daily life.

Methods

Participants

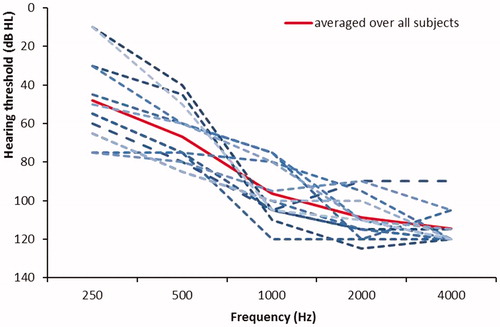

A total of 13 post-lingually deafened adults participated in this study. Participants ranged in age from 19 to 83 years (group mean = 56 years; standard deviation = 20 years). All were experienced bimodal users, unilaterally implanted with the Nucleus CI24RE or CI422 implant by surgeons of the Rotterdam Cochlear Implant team at the Erasmus MC hospital in the Netherlands. Only subjects whose unaided hearing threshold at 250 Hz in the non-implanted ear was better than 75 dB HL were included. Figure 1 shows the unaided audiograms of the non-implanted ear for the individual participants. All subjects used a HA (Phonak Naida SP or UP) before the study, which was replaced with a Resound Enzo 998 HA for the duration of the study. This HA was first fitted using the NAL-NL2 or Audiogram+ prescription rule, depending on the subject's preference. Real-ear measurements were used to verify the fitting: an ISTS signal (Holube et al, 2010) was presented at 55, 65 and 75 dB SPL, and gains were adjusted to the fitting rule where needed. The fitting was then fine-tuned with a loudness-balancing procedure to balance the perceived loudness between the CI and the HA. All subjects had used their CI for at least 1 year before this study (range 1–8 years; group mean = 4.1 years; standard deviation = 2.1 years; see Table 1), and all had used the Nucleus 6 (CP910) sound processor for at least 2 months. In addition, all had open-set speech recognition of at least 60% correct phonemes at 65 dB SPL with the CI alone on the clinically used Dutch consonant–vowel–consonant word lists (Bosman & Smoorenburg, 1995). All participants were native Dutch speakers and signed an informed consent form before taking part. The study was approved by the Ethics Committee of the Erasmus Medical Centre (protocol number METC253366).

Figure 1. The hearing thresholds of the individual subjects for the ear with the HA. The solid line displays the mean hearing loss.

Table 1. Participant demographics, including details of hearing losses and HA and CI experience.

Study design and procedures

This prospective study used a within-subjects repeated-measures design with two factors: bimodal (yes/no) and MM (yes/no). The study consisted of one visit in which speech-in-noise tests were performed for the four combinations of these factors: (i) CI only (other ear blocked), no MM; (ii) CI and HA, no MM; (iii) CI only (other ear blocked), with MM; and (iv) CI and HA, both paired to the MM. The order of the four conditions was randomised to minimise order effects. The noise reduction algorithms of the CI (SCAN, SNR-NR and WNR) and the HA (SoundShaper, WindGuard and Noise Tracker II) were turned off during the test session in the clinic.
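The text does not specify how the condition order or the sentence lists were randomised. A minimal sketch, assuming a seeded shuffle of the four conditions per subject and four different lists drawn without replacement from the 28 lists described under Test environment and materials; the helper name `plan_session` and the seed are hypothetical.

```python
import random
from typing import List, Tuple

# The four test conditions of the 2 x 2 design (bimodal yes/no x MM yes/no).
CONDITIONS = (
    "CI only, no MM",
    "CI + HA, no MM",
    "CI only, with MM",
    "CI + HA, both paired to MM",
)


def plan_session(subject_id: str, n_lists: int = 28, seed: int = 0) -> List[Tuple[str, int]]:
    """Return (condition, sentence-list number) pairs in randomised test order.

    Hypothetical helper: a generator seeded per subject makes the plan reproducible,
    and random.sample draws distinct lists so no list is repeated within a subject.
    """
    rng = random.Random(f"{seed}:{subject_id}")
    order = list(CONDITIONS)
    rng.shuffle(order)
    lists = rng.sample(range(1, n_lists + 1), k=len(order))
    return list(zip(order, lists))


if __name__ == "__main__":
    for condition, list_no in plan_session("S01"):
        print(f"{condition:<28} list {list_no}")
```

Seeding per subject keeps the plan auditable: rerunning with the same subject identifier reproduces the same order and lists.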

At the end of the test session, subjects received a diary to evaluate the effect of the MM in daily life over 3 weeks, with the MM paired to both the CI and the HA. During the 3-week home evaluation, the noise reduction algorithms of both the sound processor (SCAN, SNR-NR and WNR) and the HA (SoundShaper, WindGuard and Noise Tracker II) were activated to provide optimal hearing in the subjects' daily life situations.

Test environment and materials

Dutch speech material developed at the VU Medical Centre (Versfeld et al, 2000) was used to test speech recognition in noise; from this material, unrelated sentences were selected. For each test condition, a list of 18 sentences was presented at a fixed level of 70 dB SPL, which is representative of a raised voice in background noise (Pearsons et al, 1977). The sentences were presented in steady-state, speech-shaped noise, and the number of correctly repeated words was scored for each sentence. An adaptive procedure was used to find the SNR yielding 50% correct words, the speech reception threshold (SRT). For each condition and each subject, the 18-sentence list was randomly selected from a total of 28 lists. A detailed description of the speech-reception-in-noise test is given by Dingemanse & Goedegebure (2015).
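The exact adaptive rule is described by Dingemanse & Goedegebure (2015) and is not reproduced here. As a rough, hypothetical sketch of how such a track converges on the 50%-correct point, the code below assumes a simple one-up/one-down rule with a fixed 2 dB step applied to the proportion of words repeated correctly, and averages the SNRs from the fifth sentence onward, including the level the next sentence would have received (a common convention in Plomp-style procedures, assumed here). Speech stays fixed at 70 dB SPL, so the SNR is changed by adjusting the noise level. The simulated listener is a toy model, not study data.

```python
import math
from typing import Callable, List

SPEECH_LEVEL_DB = 70.0  # fixed speech level in dB SPL; the noise level is adapted


def run_srt_track(
    score_sentence: Callable[[float], float],
    n_sentences: int = 18,
    start_snr: float = 0.0,
    step_db: float = 2.0,
    first_scored: int = 5,
) -> float:
    """Estimate the SNR giving 50% words correct with an assumed 1-up/1-down track.

    score_sentence(snr) returns the proportion of words repeated correctly (0..1)
    for one sentence presented at that SNR.
    """
    snrs: List[float] = []
    snr = start_snr
    for _ in range(n_sentences):
        snrs.append(snr)  # noise is presented at SPEECH_LEVEL_DB - snr
        if score_sentence(snr) >= 0.5:
            snr -= step_db  # at least half the words correct: make it harder
        else:
            snr += step_db  # fewer than half correct: make it easier
    snrs.append(snr)  # level the next (hypothetical) sentence would have received
    scored = snrs[first_scored - 1:]
    return sum(scored) / len(scored)


def simulated_listener(true_srt: float, slope: float = 0.5, n_words: int = 6) -> Callable[[float], float]:
    """Deterministic toy listener: logistic psychometric function, rounded to whole words."""
    def score(snr: float) -> float:
        p = 1.0 / (1.0 + math.exp(-slope * (snr - true_srt)))
        return round(p * n_words) / n_words
    return score


if __name__ == "__main__":
    estimate = run_srt_track(simulated_listener(true_srt=-2.5))
    print(f"estimated SRT: {estimate:.1f} dB SNR")
```

With a toy listener whose true SRT is −2.5 dB, this track settles into alternating between −2 and −4 dB and returns about −3.1 dB; the fixed 2 dB step limits the precision of any single track.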

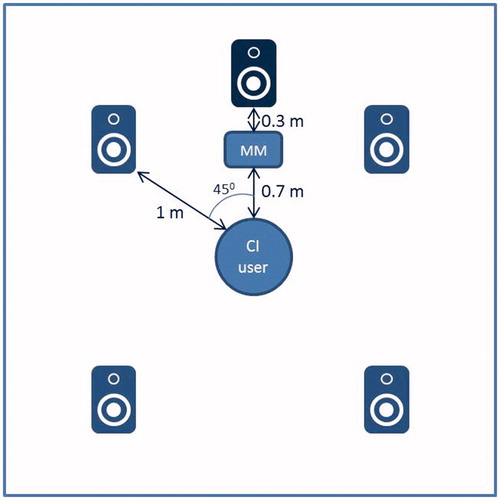

Sentences were presented from a loudspeaker located 1 m away at 0° azimuth. Four uncorrelated speech-shaped noises were presented from four loudspeakers located at −45°, 45°, −135° and 135° azimuth. This arrangement was chosen to simulate the diffuse, uncorrelated noise found in typical noisy everyday situations. During testing, the MM (in omnidirectional mode) was positioned horizontally at 30 cm from the centre of the cone of the speech loudspeaker. Because of the loudspeaker's radiation pattern in the vertical plane, we decided not to place the MM closer than 30 cm. At this distance from the loudspeaker, the speech level was 77.5 dB SPL, 7.5 dB higher than the 70 dB SPL at the listener's position, which corresponds to a 7.5 dB better SNR at the MM. Figure 2 displays a schematic of the test environment.
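As a rough check on the 7.5 dB figure, the sketch below compares the measured speech-level difference between the listener (1 m, 70 dB SPL) and the MM (0.3 m, 77.5 dB SPL) with the 20·log10(distance ratio) prediction of the inverse-square law for a point source in a free field. It assumes, as the text does, that the diffuse noise level is the same at both positions, so the speech-level difference equals the SNR difference. The free-field model ignores loudspeaker directivity and room reflections, so it is only an idealised reference, not a model of this booth.

```python
import math

SPEECH_AT_LISTENER_DB = 70.0  # dB SPL at the listener position (1 m)
SPEECH_AT_MM_DB = 77.5        # dB SPL measured at the MM position (0.3 m)


def free_field_gain_db(far_m: float, near_m: float) -> float:
    """Level increase predicted by the inverse-square law when moving from far_m to near_m."""
    return 20.0 * math.log10(far_m / near_m)


# Equals the SNR gain only if the noise level is identical at both positions.
measured_gain = SPEECH_AT_MM_DB - SPEECH_AT_LISTENER_DB
predicted_gain = free_field_gain_db(1.0, 0.3)

if __name__ == "__main__":
    print(f"measured: {measured_gain:.1f} dB, free-field prediction: {predicted_gain:.1f} dB")
```

The measured 7.5 dB is about 3 dB below the roughly 10.5 dB free-field prediction, a gap that is plausible for a real loudspeaker in a real room.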

Figure 2. A schematic representation of the test environment. The CI user is in the middle of five loudspeakers, all at a distance of 1 m. The MM is placed 0.3 m from the loudspeaker presenting the speech material; the other four loudspeakers present uncorrelated speech-shaped noises.

All testing was performed in a sound-attenuated booth. Participants sat 1 m in front of a loudspeaker. For the speech-in-noise tests, the research equipment consisted of a Madsen OB822 audiometer, a Behringer UCA202 soundcard and a MacBook Pro laptop.

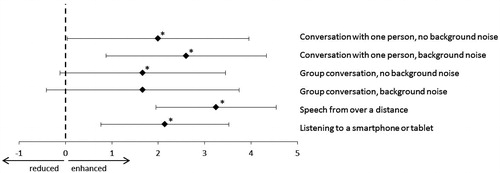

The subjects received a diary to evaluate the effect of the MM in daily use in different listening situations. Subjects were asked to indicate on a visual analogue scale (VAS) whether the MM reduced or enhanced their speech recognition in a particular situation. The scale ranges from −5 to +5 and compares the condition with the MM to the condition without it: −5 indicates "much worse", +5 indicates "much better" and the midpoint (0) indicates no change. Subjects were asked to rate six listening situations: a conversation with one person with and without background noise, a group conversation with and without background noise, speech from a distance, and listening to a smartphone or tablet.
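The diary ratings are compared against the scale midpoint of 0 with a one-sample Wilcoxon signed-rank test (see Statistical analysis). A minimal sketch of the large-sample normal approximation, assuming zero ratings are discarded (Wilcoxon's convention), tied absolute values receive average ranks and the variance is tie-corrected; SPSS may differ in details such as exact p-values for small samples. The ratings in the example are invented for illustration and are not study data.

```python
import math
from typing import List, Sequence, Tuple


def one_sample_signed_rank(ratings: Sequence[float], mu: float = 0.0) -> Tuple[float, float]:
    """Normal approximation to the one-sample Wilcoxon signed-rank test against mu.

    Returns (z, two-sided p). Positive z means ratings tend to lie above mu.
    """
    diffs = [r - mu for r in ratings if r != mu]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("every rating equals mu; the test is undefined")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks: List[float] = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # mean of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += (t ** 3 - t) / 48.0  # variance correction for this tie group
        i = j + 1
    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4.0
    var_w = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


if __name__ == "__main__":
    example_ratings = [3, 2, 4, 0, 1, 3, 5, 2, -1, 3]  # invented VAS scores, not study data
    z, p = one_sample_signed_rank(example_ratings)
    print(f"z = {z:.2f}, p = {p:.3f}")
```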

Statistical analysis

Data were analysed with SPSS (v23; SPSS Inc., Chicago, IL). Because of the small number of subjects, non-parametric statistical methods were used. For speech recognition in noise, the Friedman test was used to compare SRTs across all listening conditions, followed by post hoc comparisons with the Wilcoxon signed-rank test. The Benjamini–Hochberg method was used to control the false-discovery rate for multiple comparisons (Benjamini & Hochberg, 1995). The diary data were analysed with the one-sample Wilcoxon signed-rank test.
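For readers unfamiliar with the Benjamini–Hochberg step-up procedure, a minimal sketch follows: sort the m p-values, find the largest rank k with p(k) ≤ (k/m)·q, and reject the hypotheses with the k smallest p-values. The function name and the q = 0.05 default are illustrative assumptions. The paper does not report the full set of post hoc p-values, so the example uses only the two reported ones, which understates the true family size.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Return a reject (True) or keep (False) decision per p-value, controlling the FDR at q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    largest_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            largest_k = rank  # step-up: remember the largest rank that passes
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        reject[idx] = rank <= largest_k
    return reject


if __name__ == "__main__":
    # Only the two uncorrected p-values reported in the Results; the real family was larger.
    print(benjamini_hochberg([0.002, 0.028]))
```

Because the rule steps up from the largest passing rank, a p-value that misses its own threshold can still be rejected when a larger one passes: at q = 0.05, the pair [0.03, 0.04] is rejected in full.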

Results

Speech recognition in noise

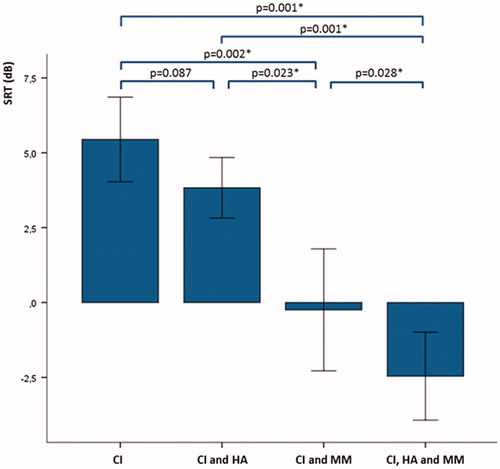

The results of the speech-recognition-in-noise test are presented in Figure 3. SRTs differed significantly across the four listening conditions [Friedman test: χ²(3) = 27.4, p < 0.001]. Post hoc Wilcoxon signed-rank tests showed a significant 5.4 dB improvement in SRT when the MM was used with the CI compared with the CI without the MM (Z = −3.11, p = 0.002, r = −0.86). Pairing the MM to the HA as well yielded a further 2.2 dB improvement in SRT (Wilcoxon signed-rank test, Z = −2.20, p = 0.028, r = −0.61). The reported p values are uncorrected; both differences remained significant after correction for multiple comparisons with the Benjamini–Hochberg method. No correlation was found between the degree of hearing loss and the benefit of the MM.
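The effect sizes reported next to each Z appear to follow the common convention r = Z/√N with N = 13 participants; this is our reading, as the formula is not stated. The sketch below recomputes the two-sided p-value from Z under the normal approximation, and the corresponding r, using only the reported statistics.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard-normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def effect_size_r(z: float, n: int) -> float:
    """Effect size r = Z / sqrt(N), commonly reported alongside Wilcoxon tests."""
    return z / math.sqrt(n)


if __name__ == "__main__":
    for label, z in (("CI + MM vs CI", -3.11), ("CI + HA + MM vs CI + MM", -2.20)):
        print(f"{label}: p = {two_sided_p(z):.3f}, r = {effect_size_r(z, 13):.2f}")
```

Both recomputed pairs agree with the reported p and r values, which is consistent with reading the effect sizes as r.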

Figure 3. Results of the speech perception in noise test for the four listening conditions. p values are uncorrected p values of Wilcoxon signed-rank tests. Asterisks denote significant differences after correction for multiple comparisons. Error bars represent the standard errors of the mean.

Additionally, we compared the benefit of bimodal hearing without the MM, SRT(CI + HA) − SRT(CI), with the benefit of bimodal hearing with the MM, SRT(CI + HA + MM) − SRT(CI + MM). No significant difference was found, suggesting that the benefit of bimodal hearing is preserved when the MM is used.

Results of the diary

Ten subjects completed the diary to evaluate the use of the MM. The diary results are presented in Figure 4. Use of the MM led to a significant improvement for a conversation with one person (both with and without background noise), a group conversation without background noise, speech from a distance and listening to a smartphone or tablet (one-sample Wilcoxon signed-rank test, p = 0.02, p = 0.01, p = 0.02, p = 0.01 and p = 0.03, respectively). For a group conversation with background noise, the improvement was of borderline statistical significance (one-sample Wilcoxon signed-rank test, p = 0.05). Examples of the places and situations in which participants used the MM are shown in Table 2. To examine whether the SRT differed between the 10 subjects who completed the diary and the three who did not, we applied a Mann–Whitney U-test to the SRT for the CI with the MM. The two groups did not differ significantly (U = 9, p = 0.31, r = 0.28).
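The group comparison can be traced with the large-sample normal approximation to the Mann–Whitney U test, assuming no tie or continuity correction and taking r = |Z|/√N. The helper below is a hypothetical sketch, not the SPSS implementation; with U = 9 and group sizes of 10 and 3 it gives values consistent with those reported.

```python
import math
from typing import Tuple


def mann_whitney_normal(u: float, n1: int, n2: int) -> Tuple[float, float, float]:
    """Return (z, two-sided p, r) for a Mann-Whitney U under the normal approximation."""
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)  # no tie correction applied
    z = (u - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2.0))
    r = abs(z) / math.sqrt(n1 + n2)
    return z, p, r


if __name__ == "__main__":
    z, p, r = mann_whitney_normal(9, 10, 3)
    print(f"z = {z:.2f}, p = {p:.2f}, r = {r:.2f}")
```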

Figure 4. The results for the diary for the six different listening situations. Asterisks denote significant differences. The error bars represent the standard errors of the mean.

Table 2. Places where the MM was used during the home evaluation, for the different listening situations.

Discussion

This study showed a large, statistically significant and clinically relevant benefit of an advanced wireless remote microphone system connected to a CI in one ear and a HA in the contralateral ear. This large improvement in speech perception in noise reflects the combined effect of the two factors we investigated: the MM itself and the bimodal connection of the MM. The MM itself accounted for the largest part of the improvement, an effect already known from previous studies. At the location of the MM, the speech level was higher, giving a better SNR in the signal transmitted to the CI and HA. In our setup, the SNR at the position of the MM was 7.5 dB better than at the position of the listener. The improvement in SRT due to the MM was 5.7 dB in the CI-only condition and 6.3 dB in the bimodal condition, both relatively close to this maximum value.
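Expressed as a fraction of the 7.5 dB SNR advantage available at the MM position, the SRT improvements of 5.7 dB and 6.3 dB stated in this paragraph correspond to roughly three-quarters and over four-fifths of that advantage, assuming the acoustic SNR gain at the microphone is the ceiling:

```latex
\frac{5.7\ \text{dB}}{7.5\ \text{dB}} = 0.76 \quad \text{(CI only)}, \qquad
\frac{6.3\ \text{dB}}{7.5\ \text{dB}} = 0.84 \quad \text{(bimodal)}
```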

In our study, bimodal hearing improved the SRT by 1.6–2.2 dB. This is comparable to the findings of Ching et al (2007), who reported improvements ranging from 1 to 2 dB across the studies included in their review of bimodal hearing.

An interesting finding is that connecting the MM bimodally gave an additional improvement over connecting it to the CI alone. With the MM connected to both hearing devices, both devices received the same input signal. This signal was processed independently by the HA (acoustic and cochlear processing) and the CI (purely electrical stimulation), resulting in two different patterns of auditory nerve stimulation, one in each ear, that provide both similar and complementary information to the central auditory system. Central auditory processing can use these differences and similarities to improve speech intelligibility in noise, through the complementarity effect and binaural redundancy (Ching et al, 2007).

This is the first study to evaluate the performance of the MM for speech recognition in adult bimodal CI users. The only previous study of the earlier version of the MM, the Cochlear Mini Microphone, focussed on its connection to the CI alone, in unilateral as well as bilateral CI users (Wolfe et al, 2015). Wolfe et al (2015) also found a significant improvement in speech recognition in noise, but they measured word scores at different fixed SNRs, which makes a direct comparison with our results difficult. Possible differences in the effect of the Mini Microphone between unilateral and bimodal CI users were not investigated in that study.

The average SRT with the MM in the bimodal condition was −2.5 dB in our study sample. With this speech material, the SRT for normal-hearing subjects is −7 dB, so even with the MM the CI users performed worse than normal-hearing listeners. However, in our study the MM was 30 cm from the speech source. In daily life, the SNR can be improved further by placing the MM closer to the talker. The clinically recommended distance is 15 cm, which may give an additional improvement of up to 6 dB compared with our setup, bringing the SRT close to that of normal-hearing subjects. In this study, the MM was used in omnidirectional mode; using its directional mode may yield an even better SRT. A limitation of the study is that all testing was completed in a sound-attenuated booth. Performance and benefit with the MM are probably greater in a sound booth than in real-world environments, because of the greater reverberation in the latter.
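The "up to 6 dB" figure follows from the inverse-square law for a point source in a free field, where halving the distance raises the level by about 6 dB. Room reflections may make the real gain smaller, so this is an idealised upper estimate; it can be set against the 4.5 dB gap between the bimodal SRT with the MM and the normal-hearing SRT quoted in this paragraph:

```latex
\Delta L = 20\log_{10}\!\left(\frac{0.30\ \text{m}}{0.15\ \text{m}}\right) = 20\log_{10} 2 \approx 6.0\ \text{dB},
\qquad
\text{SRT}_{\text{CI+HA+MM}} - \text{SRT}_{\text{NH}} = -2.5 - (-7) = 4.5\ \text{dB}
```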

The subjects' diaries showed a perceived improvement due to the MM in all reported listening situations but one: for group conversations with background noise, no significant benefit of the MM was found. This is probably because in such situations the microphone is placed in the middle of the group. The greater distance between the talkers and the MM lowers the SNR, so that speech perception remains difficult even with the MM. This is consistent with the results of de Ceulaer et al (2015), who used a multi-talker network test setup with three speech sources to simulate a group conversation. They found only a limited improvement in SRT when using one Phonak Roger Pen, but a considerable improvement when using three Roger Pens.

The diary results also showed that the MM can easily be used in many different places. Only 10 of the 13 subjects completed the diary, so it is possible that mainly the participants who perceived a benefit from the MM did so. However, in the speech tests in the booth, no difference was found between the subjects who completed the diary and those who did not.

This study has some limitations. First, the study sample is relatively small. Second, all participants were evaluated with a single model of sound processor, HA and wireless remote microphone; results may differ for other sound processors, HAs or remote microphones.

Conclusion

To conclude, the use of the MM in combination with the Nucleus 6 sound processor and the Resound Enzo 998 HA provided significantly better sentence recognition in noise than was obtained without the MM. Furthermore, using the MM in the bimodal situation provided additional benefit compared with using it with the CI alone. Participants also reported significantly better speech perception in daily life in several listening situations. Application of advanced wireless remote microphone systems in bimodal users is therefore an effective way to deal with challenging listening conditions, as it makes optimal use of bimodal hearing capacities while enhancing the SNR.

Declaration of interest

This study was supported by Cochlear Ltd. and Resound Ltd. The authors report no conflicts of interest. The authors alone are responsible for the content and writing of this article.

| Abbreviation | Definition |
|---|---|
| RF | radio frequency |
| HA | hearing aid |
| SRT | speech reception threshold |
| MM | wireless remote microphone |
| CI | cochlear implant |
| SNR | signal-to-noise ratio |

References

- Benjamini, Y. & Hochberg, Y. 1995. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol, 57, 289–300.

- Blamey, P.J., Maat, B., Başkent, D., Mawman, D., Burke, E., et al. 2015. A retrospective multicenter study comparing speech perception outcomes for bilateral implantation and bimodal rehabilitation. Ear Hear, 36, 408–416.

- Bosman, A.J. & Smoorenburg, G.F. 1995. Intelligibility of Dutch CVC syllables and sentences for listeners with normal hearing and with three types of hearing impairment. Audiology, 34, 260–284.

- Ching, T.Y.C., van Wanrooy, E. & Dillon, H. 2007. Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: A review. Trends Amplif, 11, 161–191.

- de Ceulaer, G., Bestel, J., Mülder, H.E., Goldbeck, F., de Varebeke, S.P.J. & Govaerts, P.J. 2015. Speech understanding in noise with the Roger Pen, Naida CI Q70 processor and integrated Roger 17 receiver in a multi-talker network. Eur Arch Otorhinolaryngol, 273, 1107–1114.

- Dingemanse, J.G. & Goedegebure, A. 2015. Application of noise reduction algorithm ClearVoice in cochlear implant processing: Effects on noise tolerance and speech intelligibility in noise in relation to spectral resolution. Ear Hear, 36, 357–367.

- Dorman, M.F., Cook, S., Spahr, A., Zhang, T., Loiselle, L., et al. 2015. Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hear Res, 322, 107–111.

- Hersbach, A.A., Arora, K., Mauger, S.J. & Dawson, P.W. 2012. Combining directional microphone and single-channel noise reduction algorithms: A clinical evaluation in difficult listening conditions with cochlear implant users. Ear Hear, 33, e13–e23.

- Holube, I., Fredelake, S., Vlaming, M. & Kollmeier, B. 2010. Development and analysis of an International Speech Test Signal (ISTS). Int J Audiol, 49, 891–903.

- Illg, A., Bojanowicz, M., Lesinski-Schiedat, A., Lenarz, T. & Buchner, A. 2014. Evaluation of the bimodal benefit in a large cohort of cochlear implant subjects using a contralateral hearing aid. Otol Neurotol, 35, e240–e244.

- Kreikemeier, S., Margolf-Hackl, S., Raether, J., Fichtl, E. & Kiessling, J. 2013. Comparison of different directional microphone technologies for moderate-to-severe hearing loss. Hear Rev, 20, 44–45.

- Lenarz, M., Sonmez, H., Joseph, G., Buchner, A. & Lenarz, T. 2012. Cochlear implant performance in geriatric patients. Laryngoscope, 122, 1361–1365.

- Morera, C., Cavalle, L., Manrique, M., Huarte, A., Angel, R., et al. 2012. Contralateral hearing aid use in cochlear implanted patients: Multicenter study of bimodal benefit. Acta Otolaryngol, 132, 1084–1094.

- Pearsons, K.S., Bennett, R.L. & Fidell, S. 1977. Speech levels in various noise environments (Report No. EPA-600/1-77-025). Washington (DC): U.S. Environmental Protection Agency.

- Picou, E., Aspell, E. & Ricketts, T. 2014. Potential benefits and limitations of three types of directional processing in hearing aids. Ear Hear, 35, 339–352.

- Schafer, E.C. & Thibodeau, L. 2004. Speech recognition abilities of adults using cochlear implants with FM systems. J Am Acad Audiol, 15, 678–691.

- Schafer, E.C., Wolfe, J., Lawless, T. & Stout, B. 2009. Effects of FM-receiver gain on speech-recognition performance of adults with cochlear implants. Int J Audiol, 48, 196–203.

- Spriet, A., Van Deun, L., Eftaxiadis, K., Laneau, J., Moonen, M., et al. 2007. Speech understanding in background noise with the two-microphone adaptive beamformer BEAM in the Nucleus Freedom cochlear implant system. Ear Hear, 28, 62–72.

- Srinivasan, A.G., Padilla, M., Shannon, R.V. & Landsberger, D.M. 2013. Improving speech perception in noise with current focusing in cochlear implant users. Hear Res, 299, 20–36.

- Versfeld, N.J., Daalder, L., Festen, J.M. & Houtgast, T. 2000. Method for the selection of sentence materials for efficient measurement of the speech reception threshold. J Acoust Soc Am, 107, 1671–1684.

- Wolfe, J., Morais, M. & Schafer, E. 2015. Improving hearing performance for cochlear implant recipients with use of a digital, wireless, remote-microphone, audio-streaming accessory. J Am Acad Audiol, 26, 532–539.