Abstract

The aim of this study was to evaluate whether, in bimodal cochlear implant (CI) users, speech recognition in noise differs according to whether a wireless remote microphone is connected to the CI only or to both the CI and the hearing aid (HA). The second aim was to evaluate the additional benefit of the directional microphone mode of the wireless microphone compared with its omnidirectional mode. In this prospective study, Speech Recognition Thresholds (SRTs) in babble noise were measured in a 'within-subjects repeated measures design' for different listening conditions. The study sample comprised eighteen postlingually deafened adult bimodal CI users. In the bimodal listening condition, no difference in speech recognition in noise was found between connecting the wireless microphone to the CI only and connecting it to both the CI and the HA. Switching from the omnidirectional to the directional microphone mode improved the SRT by 4.1 dB in the CI only condition. The use of a wireless microphone improved speech recognition in noise for bimodal CI users, and the directional microphone mode led to a substantial additional improvement in speech perception in noise for situations with one target signal.

Introduction

One of the main challenges for cochlear implant (CI) users is understanding speech in acoustically complex real-life environments, owing to reverberation and interfering background noise (Lenarz et al. 2012; Srinivasan et al. 2013). Data logs from the sound processors of 1000 adult CI users showed that many spent a large part of their day in noisy environments, on average more than four hours a day (Busch, Vanpoucke, and Van Wieringen 2017). Although speech perception in quiet is generally good, the remaining impairment in difficult listening situations can limit quality of life, professional development and social participation (Gygi and Ann Hall 2016; Ng and Loke 2015).

The introduction of directional microphones for CIs has provided a significant improvement in speech recognition in noise (Hersbach et al. 2012; Spriet et al. 2007). However, their benefit is limited to near-field situations in which the target talker is close by and in front of the listener while the background noise comes from behind.

Another way to improve hearing in demanding listening situations is a wireless remote microphone system. Typically, such a system consists of a microphone placed near the talker's mouth, which picks up the speech, converts it to an electrical signal and transmits it directly to a receiver worn by the listener. Because the signal is acquired at or near the source, the signal-to-noise ratio (SNR) at the listener's ear is improved, which reduces the negative effects of ambient noise, distance and reverberation. Previous research has shown considerable improvement in speech recognition in noise for unilateral CI users (De Ceulaer et al. 2016; Razza et al. 2017; Schafer and Thibodeau 2004; Schafer et al. 2009; Vroegop et al. 2017; Wolfe et al. 2015a; Wolfe, Morais, and Schafer 2015b). A directional mode of the wireless microphone could improve speech recognition even further, but comparative data are lacking. The studies mentioned above used either an omnidirectional mode of the wireless microphone (Razza et al. 2017; Vroegop et al. 2017; Wolfe, Morais, and Schafer 2015b), an adaptive mode that switches between omnidirectional and directional depending on the level of background noise (De Ceulaer et al. 2016), or a directional mode (Schafer et al. 2009).

Owing to expanding CI selection criteria (Dowell, Galvin, and Cowan 2016; Leigh et al. 2016), the use of a CI in one ear and a hearing aid (HA) in the contralateral ear, referred to as bimodal hearing, has become standard care. Bimodal hearing has been shown to improve speech recognition in noise compared with unilateral CI use alone (Blamey et al. 2015; Dorman et al. 2015; Ching, Van Wanrooy, and Dillon 2007; Illg et al. 2014; Morera et al. 2012). Only one study (Vroegop et al. 2017) has described the combined effect of a wireless microphone and bimodal hearing. Its results showed that using the wireless microphone in the bimodal situation, connected to both the CI and the HA, provided additional benefit compared with using the wireless microphone with the CI alone. However, that study could not determine whether this benefit resulted from connecting the wireless microphone to the HA or simply from adding the HA. The present study therefore investigated whether speech recognition in noise differs according to whether the wireless microphone is connected to the CI only or to both the CI and the HA. The second aim was to evaluate the effect of a directional microphone mode of the wireless microphone.

Methods

Participants

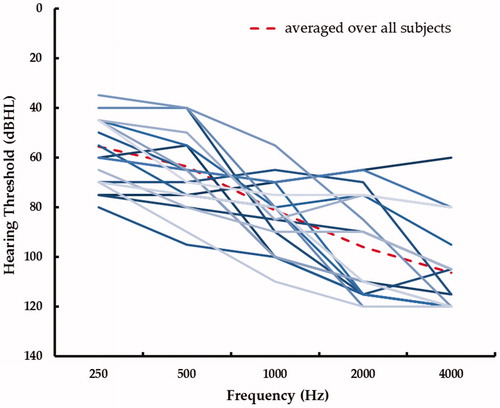

A total of 18 postlingually deafened adults participated in this study; see Table 1 for participant demographics. Participants ranged in age from 32 to 81 years (mean age = 62 years; SD = 15 years). All were experienced bimodal users, unilaterally implanted with an Advanced Bionics (AB) HiRes 90K implant by surgeons from five different cochlear implant teams in the Netherlands and Belgium. All participants had used their cochlear implant for at least 6 months prior to this study (mean = 4 years, SD = 3.6 years). All participants used either an AB Naida Q70 or an AB Naida Q90 sound processor; for the study, all were provided with a new AB Naida Q90 sound processor. In addition, all had open-set speech recognition with the cochlear implant alone of at least 70% phonemes correct at 65 dB SPL on the clinically used Dutch consonant-vowel-consonant word lists (Bosman and Smoorenburg 1995). Only participants with unaided hearing thresholds in the non-implanted ear of 80 dB HL or better at 250 Hz were included. Figure 1 shows the unaided audiograms of the non-implanted ear of the individual participants. All participants used a hearing aid prior to the study, which was replaced by a new Phonak Naida Link UP HA for the tests. For the test conditions with the wireless microphone, the Phonak Roger Pen was used, with integrated Roger 10 and Roger 17 receivers for connection to the HA and the CI, respectively. All participants were native Dutch speakers and gave written informed consent before participating. Approval of the Ethics Committee of the Erasmus Medical Centre was obtained (protocol number METC306849).

Figure 1. The hearing thresholds of the individual participants for the ear with the hearing aid. The dashed line displays the mean hearing loss averaged over all participants.

Table 1. Participant demographics, including details of hearing losses and HA and CI experience.

HA, CI and wireless microphone settings

The HA was fitted with the Phonak bimodal fitting formula, a prescriptive fitting formula developed specifically for bimodal hearing with the Phonak Naida Link hearing aid. This formula differs from more standard fitting formulas in three respects: the frequency response, the loudness growth and the dynamic compression. First, the formula targets the frequency response by optimising low-frequency gain and frequency bandwidth. Low-frequency gain optimisation uses the model of effective audibility to ensure audibility of speech in quiet environments (Ching et al. 2001). Bandwidth is optimised by making it as wide as possible, based on a study by Neuman and Svirsky (2013), who investigated the effect of HA frequency bandwidth on bimodal auditory performance and found that narrower HA bandwidths resulted in poorer performance in the bimodal condition than a wider bandwidth. In addition, the formula ensures that frequencies between 250 and 750 Hz are audible (Sheffield and Gifford 2014) and that amplification does not extend into presumed dead regions (Zhang et al. 2014). To achieve the latter, the gain bandwidth is reduced if the slope of the hearing loss is more than 35 dB per octave and the high-frequency hearing loss exceeds 85 dB HL, or if the hearing loss is more than 110 dB HL. Second, the input-output function of the CI is implemented in the hearing aid (compression kneepoint = 63 dB SPL, compression ratio = 12:1) with the aim of improving the loudness balance between HA and CI. Third, the dynamic compression behaviour is aligned by integrating the Naída CI dual-loop AGC into the hearing aid (Veugen et al. 2016). Neither fine-tuning nor volume adjustment of the HA was performed.
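The two quantitative rules in the fitting formula can be illustrated with a minimal sketch. It assumes a simple broken-stick static input-output curve (1:1 below the kneepoint, 12:1 above it) and treats the bandwidth rule as a yes/no decision on three scalar inputs. How the actual Phonak formula measures the slope, the high-frequency loss and the overall loss is not stated in the source, so all function names and inputs here are hypothetical and for illustration only.

```python
import math

KNEEPOINT_DB_SPL = 63.0   # compression kneepoint reported for the CI input-output function
COMPRESSION_RATIO = 12.0  # 12:1 above the kneepoint

def static_io_curve(input_db_spl: float) -> float:
    """Broken-stick static compression: 1:1 below the kneepoint, 12:1 above it.
    A simplified illustration, not the manufacturer's implementation."""
    if input_db_spl <= KNEEPOINT_DB_SPL:
        return input_db_spl
    return KNEEPOINT_DB_SPL + (input_db_spl - KNEEPOINT_DB_SPL) / COMPRESSION_RATIO

def steepest_slope_db_per_octave(audiogram: dict) -> float:
    """Hypothetical helper: steepest threshold increase between adjacent audiogram
    frequencies, in dB per octave. audiogram maps frequency (Hz) to threshold (dB HL)."""
    freqs = sorted(audiogram)
    return max(
        (audiogram[hi] - audiogram[lo]) / math.log2(hi / lo)
        for lo, hi in zip(freqs, freqs[1:])
    )

def needs_reduced_bandwidth(slope_db_per_octave: float,
                            high_freq_loss_db_hl: float,
                            max_loss_db_hl: float) -> bool:
    """Bandwidth-reduction rule as described in the text:
    (slope > 35 dB/octave AND high-frequency loss > 85 dB HL) OR loss > 110 dB HL."""
    steep_and_severe = slope_db_per_octave > 35 and high_freq_loss_db_hl > 85
    return steep_and_severe or max_loss_db_hl > 110

if __name__ == "__main__":
    print(static_io_curve(75.0))  # 63 + 12/12 = 64.0
    audiogram = {250: 40, 500: 50, 1000: 90, 2000: 100}     # illustrative thresholds only
    slope = steepest_slope_db_per_octave(audiogram)         # 40.0 dB/octave (500 -> 1000 Hz)
    print(needs_reduced_bandwidth(slope, audiogram[2000], max(audiogram.values())))  # True
```

The high compression ratio means that a 12 dB increase in input above the kneepoint produces only a 1 dB increase in output, which is the behaviour the formula copies from the CI to balance loudness between the two devices.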

For the test session, the participant's current 'daily' CI programme was used. The Phonak Roger Pen is part of the Phonak Roger system, which uses digital signal transmission and digital signal processing with adaptive gain adjustment. For the conditions with the Roger Pen, the CI programme was modified by changing the signal input from 100% microphone input to a 70:30 mix of the Roger 17 auxiliary input and the processor microphone, respectively. Wolfe and Schafer (2008) recommended a 50:50 mixing ratio, based mainly on soft speech in quiet surroundings. De Ceulaer et al. (2016) also used a 50:50 ratio but found no benefit of a single Roger Pen in a diffuse noise field. Because we investigated the effect of the Roger Pen in different listening conditions in background noise, we needed a setting in which an effect of the Roger Pen could be expected; otherwise we would not be able to distinguish between listening conditions. A 100% Roger setting would presumably produce the largest effect; however, CI users generally do not prefer it in daily life because it disconnects them from surrounding sounds. We therefore chose the 70:30 setting, in which 70% of the signal comes from the Roger Pen and 30% from the participant's CI processor microphone.

Noise reduction algorithms on the cochlear implant (ClearVoice, WindBlock, SoundRelax) and the HA (NoiseBlock, SoundRelax, WindBlock) were turned off during the test sessions. In all listening conditions, the microphones of both the CI and the HA were set to omnidirectional mode.

Study design

This prospective study used a 'within-subjects repeated measures' design. The study consisted of one visit in which speech-in-noise tests were performed in six different listening conditions: (1) CI and HA; (2) CI and HA, Roger Pen paired to the CI; (3) CI and HA, Roger Pen paired to both; (4) CI only; (5) CI and Roger Pen, omnidirectional; (6) CI and Roger Pen, directional (see Table 2). The order of the six conditions was randomised to control for order effects.

Table 2. Different test conditions.

Test environment and materials

Dutch sentence material spoken by a single female talker, developed at the VU Medical Centre (Versfeld et al. 2000), was used to test speech recognition in noise. From this material, unrelated sentences were selected. For each test condition, a list of 20 sentences was presented at a fixed level of 70 dB SPL, which is representative of a raised voice in background noise (Pearsons, Bennet, and Fidell 1977). The first list of sentences was used for practice and adaptation to the test. The sentences were presented in reception babble noise with an average spectrum similar to the international long-term average speech spectrum (ILTASS). The number of correctly repeated words per sentence was scored. An adaptive procedure was used to find the signal-to-noise ratio corresponding to a score of 50% words correct (the Speech Recognition Threshold [SRT]). For each condition and each participant, a list of 20 sentences was randomly selected without replacement from a total of 25 lists. The adaptive procedure was a stochastic approximation method (Robbins and Monro 1951) with step size 4 · (Pc(n − 1) − target_Pc), where Pc(n − 1) is the percent correct score on the previous trial. An extensive description of the speech recognition in noise test is given by Dingemanse and Goedegebure (2015).
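The adaptive rule can be sketched as a short deterministic simulation. In this sketch Pc is treated as a proportion (0 to 1), so that the step 4 · (Pc(n − 1) − 0.5) is in dB; the SNR is lowered after a score above target and raised after a score below it. The listener is modelled by a hypothetical logistic psychometric function whose slope at the 50% point is 6.4%/dB (the average slope quoted in the statistical analysis), and the expected score replaces a random one so the run is reproducible. Taking the SRT as the mean SNR of the last ten sentences is also an assumption for illustration; the source refers to Dingemanse and Goedegebure (2015) for the exact procedure.

```python
import math

def logistic_score(snr_db: float, srt_db: float, slope_per_db: float = 0.064) -> float:
    """Hypothetical listener: expected proportion of words correct at a given SNR.
    The factor 4 makes the slope at the 50% point equal to slope_per_db."""
    return 1.0 / (1.0 + math.exp(-4.0 * slope_per_db * (snr_db - srt_db)))

def robbins_monro_track(score_fn, start_snr_db: float, n_sentences: int = 20,
                        target: float = 0.5, gain_db: float = 4.0) -> list:
    """Return the SNRs presented for one list of n_sentences sentences.
    Each new SNR = previous SNR - gain_db * (score on previous sentence - target)."""
    snrs = [start_snr_db]
    for _ in range(n_sentences - 1):
        pc = score_fn(snrs[-1])                      # score on the sentence just presented
        snrs.append(snrs[-1] - gain_db * (pc - target))
    return snrs

if __name__ == "__main__":
    true_srt = 6.5  # arbitrary value for the simulated listener
    track = robbins_monro_track(lambda snr: logistic_score(snr, true_srt), start_snr_db=16.5)
    srt_estimate = sum(track[-10:]) / 10             # illustrative estimator (assumption)
    print(f"presented {len(track)} sentences, estimated SRT {srt_estimate:.2f} dB")
```

Because the step is proportional to the distance from the target score, the procedure takes large steps when the SNR is far from the SRT and progressively smaller ones as it converges.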

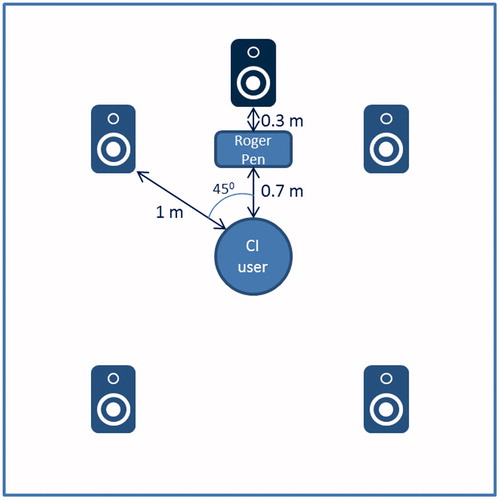

Sentences were presented from a loudspeaker located 1 m from the listener at 0° azimuth. Four uncorrelated reception babble noises were presented from four loudspeakers located at −45°, 45°, −135° and 135° azimuth, also at 1 m from the listener. The rationale for this loudspeaker set-up was to simulate the diffuse, uncorrelated noise of typical noisy daily-life situations. During testing, the Roger Pen was positioned horizontally (in omnidirectional mode) at 30 cm from the centre of the cone of the loudspeaker presenting the sentences. Because of the radiation pattern of the loudspeaker in the vertical plane, we decided not to place the Roger Pen closer than 30 cm to the loudspeaker. At this distance the sound level was 77.5 dB SPL, meaning that the SNR at the Roger Pen was 7.5 dB better than at the participant's position. Figure 2 displays a schematic of the test environment.

Figure 2. A schematic representation of the test environment. The CI user is in the middle of five loudspeakers, all at a distance of 1 m. The target signal is coming from S0. The Roger Pen is placed at 30 cm from the target signal.

All testing was performed in a sound-attenuated booth. For the speech-in-noise tests, the research equipment consisted of a Roland UA-1010 soundcard and a fanless Amplicon PC.

Statistical analysis

A power analysis was performed with the G*Power software, with a required power of 0.8 and an alpha level of 0.05. For speech perception, we considered a difference of ≥15% relevant, as this is the smallest difference that can be measured with this test in one person. With an average psychometric-function slope of 6.4%/dB (Dingemanse and Goedegebure 2015), the difference between two test conditions must be ≥3 dB to be significant. A repeated measures ANOVA was planned. The sample size calculation indicated that 12 subjects would be needed to detect a significant difference. Because 18 suitable participants were willing to take part, all were included.
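The conversion from the 15% criterion to an SNR difference is a division by the average slope, assuming the psychometric function is roughly linear around the SRT. The bare conversion gives about 2.3 dB; the source does not say how this was rounded to the 3 dB criterion, so the helper below (a hypothetical name) only reproduces the arithmetic.

```python
def percent_difference_to_db(delta_percent: float, slope_percent_per_db: float) -> float:
    """Convert a difference in percent correct into an SNR difference in dB,
    assuming a locally linear psychometric function with the given slope."""
    return delta_percent / slope_percent_per_db

if __name__ == "__main__":
    print(round(percent_difference_to_db(15.0, 6.4), 2))  # 2.34
```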

Data analyses were performed with SPSS (v23). Speech recognition in noise was analysed with a repeated measures ANOVA, followed by post hoc testing with Bonferroni correction. We used the Benjamini–Hochberg method to control the false discovery rate for multiple comparisons. This method controls the expected proportion of falsely rejected hypotheses among all rejected hypotheses and is described by Benjamini and Hochberg (1995).
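The Benjamini–Hochberg step-up procedure can be sketched in a few lines. The function below returns BH-adjusted p-values in the original order; a hypothesis is rejected when its adjusted value is at most the chosen false discovery rate q. The function name and the example p-values are hypothetical and do not come from this study.

```python
def benjamini_hochberg_adjust(p_values: list) -> list:
    """Return Benjamini-Hochberg adjusted p-values in the original order.
    adjusted p_(k) = min over j >= k of min(1, m * p_(j) / j), with j the rank of sorted p."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])   # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):                           # walk from largest p to smallest
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted

if __name__ == "__main__":
    example = [0.01, 0.04, 0.03, 0.20]    # illustrative p-values only
    adj = benjamini_hochberg_adjust(example)
    print([round(a, 4) for a in adj])     # [0.04, 0.0533, 0.0533, 0.2]
    print([a <= 0.05 for a in adj])       # [True, False, False, False]
```

The running minimum enforces monotonicity, so a smaller raw p-value never receives a larger adjusted value than a bigger one.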

Results

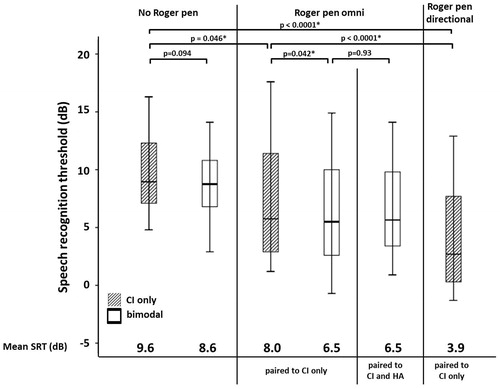

Figure 3 displays the results of the speech recognition in noise test. A normality check of the SRTs indicated normally distributed data for all listening conditions. A repeated measures ANOVA showed that the SRT differed significantly between listening conditions (F(5, 85) = 17.923, p < 0.0001).
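A one-way repeated measures ANOVA partitions the total variability into a condition effect, a between-subject effect and a residual (condition × subject) term, and the F ratio compares the condition mean square with the residual mean square. The pure-Python sketch below (hypothetical function name, toy data) shows the computation and confirms that 18 participants and six conditions yield the degrees of freedom reported here, (5, 85). It applies no sphericity correction.

```python
def rm_anova_oneway(data: list) -> tuple:
    """One-way repeated measures ANOVA.
    data[i][j] is the score of subject i in condition j (complete data, no missing cells).
    Returns (F, df_conditions, df_error)."""
    n = len(data)          # subjects
    k = len(data[0])       # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj      # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error

if __name__ == "__main__":
    toy = [[1, 2, 3], [2, 3, 5], [3, 4, 4]]   # 3 subjects x 3 conditions, toy values
    print(rm_anova_oneway(toy))               # F is approximately 9.0 with df (2, 4)
```

Removing the between-subject sum of squares from the error term is what makes the within-subjects design more sensitive than comparing independent groups.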

Figure 3. Box-and-whisker plots of the speech recognition threshold for the no Roger Pen condition, the Roger Pen omnidirectional condition and the Roger Pen directional condition. Boxes represent the median (thick horizontal line) and the lower and upper quartiles (ends of boxes); whiskers extend to the minimum and maximum values. Values at the bottom of the figure denote the average SRT per listening condition. The p-values are corrected for multiple comparisons. Asterisks denote significant differences.

Pairwise comparison between the bimodal listening condition with the Roger Pen paired to the CI only (SRT = 6.5 dB) and the condition with the Roger Pen paired to both the CI and HA (SRT = 6.5 dB) revealed no significant difference (p = 0.93). The average SRT in the bimodal conditions with the Roger Pen was 1.4 dB better than in the CI only condition with the Roger Pen (without the HA).

The best SRT was found for the directional mode of the Roger Pen, which improved the SRT by 4.1 dB compared with the CI only condition with the Roger Pen in omnidirectional mode (p < 0.0001).

Pairwise comparison between the bimodal (SRT = 8.6 dB) and CI only (SRT = 9.6 dB) conditions without the Roger Pen revealed no significant bimodal benefit (p = 0.094), although there was a trend toward slightly better performance in the bimodal condition.

Discussion

In most previous studies on the effect of the Roger Pen, the device was used with the CI only (De Ceulaer et al. 2016; Razza et al. 2017; Wolfe et al. 2015a). Another wireless microphone, the Cochlear Minimic 2+, has also been tested in bimodal CI users: Vroegop et al. (2017) showed that using the wireless microphone in the bimodal situation provided additional benefit compared with using it with the CI alone. However, they did not include a bimodal condition in which the wireless microphone was connected to the CI only, so the benefit they found may have resulted not from connecting the wireless microphone to the HA but simply from adding the HA. Our study design addressed this limitation and showed no difference, in the bimodal condition, between connecting the Roger Pen to the CI alone and connecting it to both the CI and HA. SRTs did improve, however, when participants added the HA while already using the Roger Pen with the CI. This is probably the result of central bimodal processes, namely the complementarity effect and binaural redundancy (Ching, Van Wanrooy, and Dillon 2007). As also connecting the Roger Pen to the HA provided no additional benefit, these bimodal processes were apparently not influenced by it in our set-up. However, the SNR improvements obtained with the wireless microphone in our set-up were relatively small, and these central processes might be influenced if better SNRs were achieved at the HA side.

In our set-up, the SNR at the position of the Roger Pen was 7.5 dB better than at the position of the listener. With a 70:30 mixing ratio, an improvement of about 5 dB would theoretically be expected from using the Roger Pen in the CI only condition. However, we found a relatively small improvement of only 1.6 dB for this condition compared with the CI only condition without the Roger Pen. The exact factors accounting for this difference are not known. However, the theoretical calculation assumes a perfectly uniform omnidirectional pickup pattern and no loss of signal quality due to transmission or to mixing the Roger signal with the CI microphone signal. There may also be an interfering effect of the high compression ratio of the CI.
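The source does not show how the theoretical figure of about 5 dB was obtained. One simple calculation that reproduces it, under the assumption that the mixing ratio acts as a linear weighting of the SNR advantage in decibels (70% of the 7.5 dB Roger advantage plus 30% of the 0 dB advantage of the processor microphone), is sketched below. This is a rough heuristic rather than a signal-level model: it ignores the pickup-pattern, transmission, mixing and compression effects listed above, and the function name is hypothetical.

```python
def weighted_snr_gain_db(roger_advantage_db: float, roger_weight: float) -> float:
    """Heuristic expected SNR gain from mixing: weight the Roger SNR advantage by the
    Roger share of the mix; the processor microphone contributes a 0 dB advantage."""
    mic_weight = 1.0 - roger_weight
    mic_advantage_db = 0.0  # the processor microphone sits at the listener's position
    return roger_weight * roger_advantage_db + mic_weight * mic_advantage_db

if __name__ == "__main__":
    expected = weighted_snr_gain_db(7.5, 0.70)   # about 5.25 dB, i.e. roughly 5 dB
    measured = 1.6                                # improvement reported in the text
    print(f"expected {expected:.2f} dB, measured {measured} dB, "
          f"shortfall {expected - measured:.2f} dB")
```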

Because we used a 70:30 mixing ratio, we were at least able to find a small benefit, in contrast to De Ceulaer et al. (2016), who used a less favourable mixing ratio of 50:50 and a greater distance between the Roger Pen and the loudspeaker.

The average SRT in the best condition (Roger Pen in directional microphone mode) was 3.9 dB in our study sample, an improvement of 4.1 dB compared with the Roger Pen in omnidirectional mode with the CI only. In our study, the distance from the Roger Pen to the speech source was 30 cm. In daily life it will likely be important to improve the SNR further by shortening this distance. The clinical recommendation is a distance of 15 cm, which will give an additional improvement in SNR.
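The expected gain from moving the microphone closer can be approximated with the free-field inverse-square law, under which the direct-sound level changes by 20 · log10(d1/d2) dB, about 6 dB per halving of distance. This assumes an idealised point source and a noise level that is the same at every position. In the present set-up the same law predicts about 10.5 dB for moving from 1 m to 30 cm, whereas 7.5 dB was measured, so the 15 cm figure below should be read as a rough and probably optimistic estimate. The function name is hypothetical.

```python
import math

def level_change_db(d_from_m: float, d_to_m: float) -> float:
    """Free-field inverse-square law: change in direct-sound level (dB) when the
    microphone moves from d_from_m to d_to_m metres from a point source."""
    return 20.0 * math.log10(d_from_m / d_to_m)

if __name__ == "__main__":
    # With a diffuse noise field whose level is assumed equal at all positions,
    # the level change of the target speech equals the SNR change.
    print(f"1 m -> 30 cm: {level_change_db(1.0, 0.30):.1f} dB predicted (7.5 dB measured)")
    print(f"30 cm -> 15 cm: {level_change_db(0.30, 0.15):.1f} dB predicted (idealised)")
```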

We chose to evaluate the effect of the Roger Pen with the Phonak Naída Link HA set according to the manufacturer's clinical recommendations, in order to mimic daily clinical practice as closely as possible. One of these recommendations is the use of the specially developed bimodal fitting rule, which we used in this study. However, although each component of this fitting rule is based on scientific research (Ching et al. 2001; Neuman and Svirsky 2013; Sheffield and Gifford 2014; Veugen et al. 2016; Zhang et al. 2014), the effect of the bimodal fitting formula as a whole on auditory functioning has not been tested before. We found no significant effect of bimodal hearing compared with the CI only condition without the Roger Pen. One possible explanation is that this fitting formula is not optimal for all participants. Another constraint is that the participants in our study were not accustomed to this fitting formula. Further investigation of this specially developed HA fitting formula and its effect on bimodal hearing is needed.

For some listening conditions, we found only small differences, and it is questionable whether these are clinically significant. Another limitation of the study is that testing was completed in a sound-attenuated booth. Performance and benefits with the Roger Pen will probably be greater in a sound booth than in a real-world environment, because of the greater influence of reverberation in the latter. A further limitation is that all participants were evaluated with one model of sound processor, hearing aid and wireless remote microphone; results may differ for other sound processors, hearing aids or remote microphones.

Conclusion

To conclude, the use of a wireless microphone improved speech recognition in noise for bimodal CI users. In this study it seemed sufficient to connect the wireless microphone to the CI only in the bimodal condition. The use of the directional microphone mode of the Roger Pen led to a substantial additional improvement of speech perception in noise. Therefore, application of the Roger Pen is advised for bimodal CI users to optimise their hearing in difficult listening conditions and the directional microphone mode is advised for situations with one target signal.

Acknowledgements

The authors gratefully acknowledge all participants of the research project. They also wish to thank Gertjan Dingemanse, Teun van Immerzeel and Marian Rodenburg-Vlot for their contribution to the experimental work and data collection. Advanced Bionics provided the HAs for use in the study. The authors declare no other conflict of interest.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Benjamini, Y., and Y. Hochberg. 1995. “Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing.” Journal of the Royal Statistical Society: Series B (Methodological) 57: 289–300.

- Blamey, P. J., B. Maat, D. Başkent, D. Mawman, E. Burke, N. Dillier, A. Beynon, et al. 2015. “A Retrospective Multicenter Study Comparing Speech Perception Outcomes for Bilateral Implantation and Bimodal Rehabilitation.” Ear and Hearing 36 (4): 408–416. doi:10.1097/AUD.0000000000000150

- Bosman, A. J., and G. F. Smoorenburg. 1995. “Intelligibility of Dutch CVC Syllables and Sentences for Listeners with Normal Hearing and with Three Types of Hearing Impairment.” Audiology: Official Organ of the International Society of Audiology 34 (5): 260–284. doi:10.3109/00206099509071918

- Busch, T., F. Vanpoucke, and A. Van Wieringen. 2017. “Auditory Environment across the Life Span of Cochlear Implant Users: Insights from Data Logging.” Journal of Speech Language and Hearing Research 60 (5): 1362–1377. doi:10.1044/2016_JSLHR-H-16-0162

- Ching, T. Y., H. Dillon, R. Katsch, and D. Byrne. 2001. “Maximizing Effective Audibility in Hearing Aid Fitting.” Ear and Hearing 22 (3): 212–224. doi:10.1097/00003446-200106000-00005

- Ching, T. Y. C., E. Van Wanrooy, and H. Dillon. 2007. “Binaural-Bimodal Fitting or Bilateral Implantation for Managing Severe to Profound Deafness: A Review.” Trends in Amplification 11 (3): 161–192. doi:10.1177/1084713807304357

- De Ceulaer, G., J. Bestel, H. E. Mulder, F. Goldbeck, S. P. De Varebeke, and P. J. Govaerts. 2016. “Speech Understanding in Noise with the Roger Pen, Naida CI Q70 Processor, and Integrated Roger 17 Receiver in a Multi-Talker Network.” European Archives of Oto-Rhino-Laryngology 273 (5): 1107–1114. doi:10.1007/s00405-015-3643-4

- Dingemanse, J. G., and A. Goedegebure. 2015. “Application of Noise Reduction Algorithm ClearVoice in Cochlear Implant Processing: Effects on Noise Tolerance and Speech Intelligibility in Noise in Relation to Spectral Resolution.” Ear and Hearing 36 (3): 357–367. doi:10.1097/AUD.0000000000000125

- Dorman, M. F., S. Cook, A. Spahr, T. Zhang, L. Loiselle, D. Schramm, J. Whittingham, and R. Gifford. 2015. “Factors Constraining the Benefit to Speech Understanding of Combining Information from Low-Frequency Hearing and a Cochlear Implant.” Hearing Research 322: 107–111. doi:10.1016/j.heares.2014.09.010

- Dowell, R., K. Galvin, and R. Cowan. 2016. “Cochlear Implantation: Optimizing Outcomes through Evidence-Based Clinical Decisions.” International Journal of Audiology 55: S1–S2. doi:10.1080/14992027.2016.1190468

- Gygi, B., and D. Ann Hall. 2016. “Background Sounds and Hearing-Aid Users: A Scoping Review.” International Journal of Audiology 55 (1): 1–10. doi:10.3109/14992027.2015.1072773

- Hersbach, A. A., K. Arora, S. J. Mauger, and P. W. Dawson. 2012. “Combining Directional Microphone and Single-Channel Noise Reduction Algorithms: A Clinical Evaluation in Difficult Listening Conditions with Cochlear Implant Users.” Ear and Hearing 33 (4): e13–e23. doi:10.1097/AUD.0b013e31824b9e21

- Illg, A., M. Bojanowicz, A. Lesinski-Schiedat, T. Lenarz, and A. Büchner. 2014. “Evaluation of the Bimodal Benefit in a Large Cohort of Cochlear Implant Subjects Using a Contralateral Hearing Aid.” Otology & Neurotology 35 (9): e240–e244. doi:10.1097/MAO.0000000000000529

- Leigh, J. R., M. Moran, R. Hollow, and R. C. Dowell. 2016. “Evidence-Based Guidelines for Recommending Cochlear Implantation for Postlingually Deafened Adults.” International Journal of Audiology 55: S3–S8. doi:10.3109/14992027.2016.1146415

- Lenarz, M., H. Sonmez, G. Joseph, A. Buchner, and T. Lenarz. 2012. “Cochlear Implant Performance in Geriatric Patients.” Laryngoscope 122 (6): 1361–1368. doi:10.1002/lary.23232

- Morera, C., L. Cavalle, M. Manrique, A. Huarte, R. Angel, A. Osorio, L. Garcia-Ibañez, E. Estrada, and C. Morera-Ballester. 2012. “Contralateral Hearing Aid Use in Cochlear Implanted Patients: Multicenter Study of Bimodal Benefit.” Acta Oto-Laryngologica 132 (10): 1084–1094. doi:10.3109/00016489.2012.677546

- Neuman, A. C., and M. A. Svirsky. 2013. “Effect of Hearing Aid Bandwidth on Speech Recognition Performance of Listeners Using a Cochlear Implant and Contralateral Hearing Aid (Bimodal Hearing).” Ear and Hearing 34 (5): 553–561. doi:10.1097/AUD.0b013e31828e86e8

- Ng, J. H., and A. Y. Loke. 2015. “Determinants of Hearing-Aid Adoption and Use among the Elderly: A Systematic Review.” International Journal of Audiology 54 (5): 291–300. doi:10.3109/14992027.2014.966922

- Pearsons, K. S., R. L. Bennet, and S. Fidell. 1977. Speech Levels in Various Noise Environments. (Report No. EPA-600/1-77-025). Washington, DC: U.S. Environmental Protection Agency.

- Razza, S., M. Zaccone, A. Meli, and E. Cristofari. 2017. “Evaluation of Speech Reception Threshold in Noise in Young Cochlear™ Nucleus® System 6 Implant Recipients Using Two Different Digital Remote Microphone Technologies and a Speech Enhancement Sound Processing Algorithm.” International Journal of Pediatric Otorhinolaryngology 103: 71–75. doi:10.1016/j.ijporl.2017.10.002

- Robbins, H., and S. Monro. 1951. “A Stochastic Approximation Method.” The Annals of Mathematical Statistics 22 (3): 400–407. doi:10.1214/aoms/1177729586

- Schafer, E. C., and L. M. Thibodeau. 2004. “Speech Recognition Abilities of Adults Using Cochlear Implants with FM Systems.” Journal of the American Academy of Audiology 15 (10): 678–691. doi:10.3766/jaaa.15.10.3

- Schafer, E. C., J. Wolfe, T. Lawless, and B. Stout. 2009. “Effects of FM-Receiver Gain on Speech-Recognition Performance of Adults with Cochlear Implants.” International Journal of Audiology 48 (4): 196–203. doi:10.1080/14992020802572635

- Sheffield, S. W., and R. H. Gifford. 2014. “The Benefits of Bimodal Hearing: Effect of Frequency Region and Acoustic Bandwidth.” Audiology & Neuro-Otology 19 (3): 151–163. doi:10.1159/000357588

- Spriet, A., L. Van Deun, K. Eftaxiadis, J. Laneau, M. Moonen, B. van Dijk, A. van Wieringen, and J. Wouters. 2007. “Speech Understanding in Background Noise with the Two-Microphone Adaptive Beamformer BEAM in the Nucleus Freedom Cochlear Implant System.” Ear and Hearing 28 (1): 62–72. doi:10.1097/01.aud.0000252470.54246.54

- Srinivasan, A. G., M. Padilla, R. V. Shannon, and D. M. Landsberger. 2013. “Improving Speech Perception in Noise with Current Focusing in Cochlear Implant Users.” Hearing Research 299: 29–36. doi:10.1016/j.heares.2013.02.004

- Versfeld, N. J., L. Daalder, J. M. Festen, and T. Houtgast. 2000. “Method for the Selection of Sentence Materials for Efficient Measurement of the Speech Reception Threshold.” The Journal of the Acoustical Society of America 107 (3): 1671–1684. doi:10.1121/1.428451

- Veugen, L. C., J. Chalupper, A. F. Snik, A. J. Opstal, and L. H. Mens. 2016. “Matching Automatic Gain Control across Devices in Bimodal Cochlear Implant Users.” Ear and Hearing 37 (3): 260–270. doi:10.1097/AUD.0000000000000260

- Vroegop, J. L., J. G. Dingemanse, N. C. Homans, and A. Goedegebure. 2017. “Evaluation of a Wireless Remote Microphone in Bimodal Cochlear Implant Recipients.” International Journal of Audiology 56 (9): 643–649. doi:10.1080/14992027.2017.1308565

- Wolfe, J., M. M. Duke, E. Schafer, C. Jones, H. E. Mulder, A. John, and M. Hudson. 2015a. “Evaluation of Performance with an Adaptive Digital Remote Microphone System and a Digital Remote Microphone Audio-Streaming Accessory System.” American Journal of Audiology 24 (3): 440–450. doi:10.1044/2015_AJA-15-0018

- Wolfe, J., M. Morais, and E. Schafer. 2015b. “Improving Hearing Performance for Cochlear Implant Recipients with Use of a Digital, Wireless, Remote-Microphone, Audio-Streaming Accessory.” Journal of the American Academy of Audiology 26 (6): 532–539. doi:10.3766/jaaa.15005

- Wolfe, J., and E. C. Schafer. 2008. “Optimizing the Benefit of Sound Processors Coupled to Personal FM Systems.” Journal of the American Academy of Audiology 19 (8): 585–594. doi:10.3766/jaaa.19.8.2

- Zhang, T., M. F. Dorman, R. Gifford, and B. C. Moore. 2014. “Cochlear Dead Regions Constrain the Benefit of Combining Acoustic Stimulation with Electric Stimulation.” Ear and Hearing 35 (4): 410–417. doi:10.1097/AUD.0000000000000032