ABSTRACT

Technological advancements create new ways of informing and persuading citizens in the political advertising context. Insights are limited regarding how citizens deal with data-driven political advertising (DDPA). This is problematic because the collection and combination of large amounts of data render them vulnerable to information and power asymmetries. Using multidisciplinary perspectives, this article discusses the digital campaign competence of voters. We look at the interplay of literacy components, offer a typology, and predict campaign behavior, such as ad engagement and ad avoidance. We use data from a multiple-wave panel survey (NW1 = 1914, NW3 = 1303) conducted during the 2021 German federal elections. A latent profile analysis reveals five voter profiles with varying levels of DDPA literacy (i.e. conceptual understanding and evaluative perceptions). People mostly evaluate DDPA as neutral or negative, highly differ in their level of objective conceptual understanding, and underestimate the effectiveness of DDPA. We find no differences between the five profiles in their ad engagement but find differences concerning ad avoidance. The results deepen our understanding of a digitally campaign-competent electorate and highlight areas in which citizen empowerment is needed in light of the inequalities that DDPA has produced.

Political advertising is a crucial part of political communication processes between political actors and the electorate (Kaid, Citation2006, p. 52). It helps to develop an informed electorate, which is important for a well-functioning democracy because it allows voters to critically evaluate the political information to which they are exposed (Strömbäck, Citation2005). However, arguably, the priority of political advertising is to persuade citizens to vote for a particular party (Goldstein & Ridout, Citation2004). In an increasingly digital media environment, these ways of persuading and informing the electorate have changed, for example through data-driven political advertising (DDPA; Tufekci, Citation2014). DDPA refers to a digital campaigning practice that uses individual data and new technologies in a digital infrastructure to enable voter segmentation, aggregation, or sophisticated voter targeting (Roemmele & Gibson, Citation2020). Hence, the fusion of politics and commercial data brokering has transformed personal data into a valuable political resource as a tool for political influence. While some forms of DDPA are not new, the fourth era of campaigning (Roemmele & Gibson, Citation2020) enabled political actors to reach out to voters in unprecedented ways (Dommett, Citation2019). Therefore, concerns are voiced about its impact on voters and the health of democracy (Roemmele & Gibson, Citation2020), but insights into how citizens should deal with DDPA are limited. Voter attitudes and behaviors toward DDPA have been researched (e.g., Kruikemeier et al., Citation2016), but due to fast technological developments, these observations are somewhat outdated.

The lack of knowledge among citizens about how these new forms of advertising function renders individuals vulnerable to a variety of detrimental effects for democracy. First, while advertising is an integral part of society today, DDPA functions within the political (not private) sphere, thereby amplifying information asymmetries among citizens (Bayer, Citation2020; Tufekci, Citation2014). This means that not everyone has access to the same sort of political information which furthers divisiveness. For example, parties may send different ads to target different voters (i.e., dark ads). These may be intentionally unevenly distributed, possibly resulting in distorted political realities with dire consequences for social trust (Mazarr et al., Citation2019), fragmentation, polarization, or citizen misinformation (Kozyreva et al., Citation2020).

Second, DDPA transforms campaigning tactics and gives power to online platforms, resulting in a power asymmetry between citizens and political or private market players (Kefford et al., Citation2022). Hence, competitive advantages between players are maintained or enhanced. Third, DDPA is shaped by commercial advertising techniques but not as heavily regulated (Helberger et al., Citation2021). While consumers may expect exaggeration in product advertising, political advertising is expected to be factual and plausible (Scammell & Langer, Citation2006). Furthermore, as political advertising is political speech, commercial benchmarks of fairness do not apply (Helberger et al., Citation2021, p. 296). Hence, citizens may have difficulties assessing the trustworthiness and truthfulness of political ads (Nelson et al., Citation2021) as political ads do not need to adhere to the same standards as product advertising. This uncertainty may decrease positive engagement with the ads.

Fourth, with the increased use of DDPA, discussions around citizens’ privacy have surged. Scholars highlight the need for individuals to be aware of the necessity to protect themselves online (Masur, Citation2020, p. 265). Minimizing susceptibilities to persuasion effects, increasing autonomy in decision-making, boosting cognitive resistance, and fostering coping responses are desirable outcomes of heightened literacy (Boerman et al., Citation2012; Rozendaal et al., Citation2011) and are, therefore, crucial for a digitally competent electorate.

This study focuses on the empowerment of citizens in the context of the inequalities that data-driven political advertising has created. The study (a) identifies the components of DDPA literacy and investigates their interplay, (b) elaborates a typology based on these components, and (c) uses this typology to distinguish forms of DDPA campaign (dis-) engagement.

Two components of data-driven political advertising literacy

To understand DDPA, one needs to have a grasp of advances in advertising techniques in the political sphere. DDPA exposes citizens to persuasion attempts by a political actor facilitated by new technological infrastructures (Roemmele & Gibson, Citation2020). Therefore, we can learn much from other disciplines that have studied the impact of data-driven ads for years, such as advertising and media effects research. Following this research, we can develop an understanding of DDPA literacy using existing studies on knowledge about and resistance to persuasion (Boerman et al., Citation2018; Rozendaal et al., Citation2011), political knowledge (Fowler et al., Citation2022; Nelson et al., Citation2021), and literacy terminologies (Masur, Citation2020; Sander, Citation2020).

To understand how individuals comprehend and interpret persuasive messages we can learn from two conceptually related but not identical concepts: Persuasion Knowledge (Friestad & Wright, Citation1994), and Advertising Literacy (Malmelin, Citation2010). By focusing on the infrastructures and techniques that use data to effectively target political ads from a political entity to citizens, along with the related consequences, we extend these concepts to the political domain. While there are no standard measures of persuasion knowledge in the advertising context (Ham & Nelson, Citation2019, p. 136), how individuals deal with persuasion attempts through ads has previously been described and tested based on two components, namely, conceptual and attitudinal dimensions (Boerman et al., Citation2012) or conceptual and affective advertising literacy components (Rozendaal et al., Citation2011, Citation2016). Applying this to DDPA literacy means that there is likely individual-level variation in conceptual understanding (CU or “understanding”) as well as evaluative perceptions (EPs or “evaluation”) of DDPA. While CU refers to low or high levels of knowledge about DDPA, EPs refer to positive, negative, or neutral sentiments toward DDPA. Hence, a DDPA-literate electorate processes targeted political ads based on (a) their understanding of DDPA strategies but also on (b) their evaluations of DDPA. Insights into how understanding and evaluation covary are limited. However, the advantage of distinguishing between these two components is that it allows for more nuanced observations regarding their respective roles in behavioral responses (Ham & Nelson, Citation2019, p. 133). For example, the ability to understand the persuasive intent of a message or to recognize its sender is important for the receivers to judge its appropriateness and decide on a response strategy (Friestad & Wright, Citation1994). 
Hence, equipped with both CU and EP, citizens should be able to identify DDPA attempts as persuasion and respond how they see fit—for instance, through ad avoidance (Ham, Citation2017) or ad engagement.

Conceptual understanding refers to the ability to understand DDPA and its implications. Persuasion knowledge of sponsored content (Boerman et al., Citation2018), an understanding of the economic model behind DDPA (Kruschinski & Haller, Citation2017), and awareness of the regulation of DDPA (Dobber et al., Citation2019) are crucial for citizens. Since DDPA is specifically concerned with the accumulation of personal online data, components of online privacy literacy (Masur, Citation2020) must also be included in CU. A lack of understanding might impede people’s ability to critically assess the given information, thereby making them more vulnerable (Nelson et al., Citation2021). For example, this means that citizens could remain unaware of the possibility that advertisers may zoom in on their preferences, personal vulnerabilities, or biases to deliver targeted content and ad formats. Sax et al. (Citation2018) point out that targeting a specific audience could violate their autonomy in making independent choices. While digital marketplaces might exploit digital vulnerabilities (Helberger et al., Citation2021), they may also offer opportunities to users to get more relevant content. Thus, a heightened understanding as part of DDPA literacy could be regarded as a potential solution to lessen digital vulnerabilities and increase the ability to perceive opportunities and benefits in the digital sphere.

Understanding a persuasion intent is seen as an antecedent of evaluating targeted advertising (Boerman & van Reijmersdal, Citation2016; Friestad & Wright, Citation1994; Ham, Citation2017; Hudders et al., Citation2017; Kirmani & Campbell, Citation2004). Once individuals become aware of persuasion tactics, a change-of-meaning process (Friestad & Wright, Citation1994) commences. On the one hand, this means that they become able to evaluate the persuasive tactics of the sender; on the other hand, it means that they can evaluate the advertising, the product, and the brand itself. In the DDPA context, this could refer to an evaluation of political ads, a political campaign, a politician, a party, or a (social media) platform.

Evaluative perception refers to the attitudinal assessment of DDPA. Along with the conceptual understanding, evaluative perceptions of DDPA might play a crucial role in determining citizens’ behavioral responses. Evaluating information as either positive or negative might affect the attitudes toward a political party (Eagly & Chaiken, Citation2005). In the context of DDPA, this could mean that people who evaluate data-driven strategies or tactics positively would have more positive attitudes toward targeted campaign ads. Evaluations are thus important for assessing DDPA and can take many different forms. The most frequently assessed evaluative dimensions are the appropriateness of the ad, disliking of targeted ads, and skepticism toward the ad (Boerman et al., Citation2018; Rozendaal et al., Citation2011). General feelings of distrust and critical attitudes (Boerman et al., Citation2017) as well as evaluations of content as creepy due to privacy infringements (Smit et al., Citation2014) have been observed. We additionally assess the perceived relevance of DDPA.

The relationship between understanding and evaluation

Scholars have shown that understanding ad personalization techniques precedes evaluation in contexts like targeted political advertising (Kruikemeier et al., Citation2016) or sponsored Facebook posts (Boerman et al., Citation2017). Contradicting findings exist regarding the relationship between knowledge and attitudes, with some studies showing higher levels of knowledge about political behavioral targeting are related to more positive attitudes (Dobber et al., Citation2019), and others showing the opposite in the case of online behavioral advertising (OBA; Smit et al., Citation2014). The differences in measuring related concepts using items of varying difficulty levels across different contexts may explain the contrasting results regarding understanding and evaluation. However, given the rapid evolution of the digital media environment, it is possible that respondents gathered experiences with data-driven advertising techniques and were thus able to update their benefit and risk assessment accordingly.

Toward a typology of DDPA literacy

To explore DDPA literacy among citizens, typologies can be useful starting points as they enable the categorization of individuals and identification of potential variations within the general population. Typologies thus offer a full set of theoretically possible deductive categories. Gran et al. (Citation2021) used this approach to detect distinct digital divides among internet users, based on different levels of awareness and attitudes toward algorithms. Likewise, this study aims to distinguish groups based on their level of understanding and evaluation of DDPA. To guide our thinking, we look at Norris’s (Citation2000) work and Zaller’s R-A-S model (Citation1992) on political sophistication and apply them to the context of today’s data-driven politics. Both argue that highly sophisticated people are more likely to encounter persuasive messages and are better equipped to reject or accept these messages than less sophisticated people. We adapt their idea of this reinforcing circle to fit today’s data-driven campaigning environment by additionally considering citizens’ evaluation of DDPA. This is important because the combination of emotions or evaluations with different levels of sophistication can lead to distinct political behaviors (Lamprianou & Ellinas, Citation2019). As such, higher sophistication levels might be related to more engagement if the evaluation is positive, and to more avoidance if the evaluation is negative. The same might be true for lower sophistication levels. However, individuals with lower levels of sophistication may also have neutral evaluative perceptions due to their lack of awareness, which may not be related to their behavioral responses. Hence, in our study, we argue that depending on citizens’ high or low understanding (perceived and factual) and their positive, negative, or neutral evaluation, citizens fall within different sub-groups of the typology, as shown in Table 1.

Table 1. DDPA literacy typology.

In this a priori typology, some citizens may be considered fully literate. These are people with higher levels of CU and a clear evaluative perception (EP) of DDPA, which is either positive or negative. In other words, these are citizens who have a solid understanding of targeting techniques and their implications but dislike them (fully literate negative) or are more open and accepting (fully literate positive) toward their use. Citizens with lower levels of understanding but a clear evaluative perception are regarded as affectively literate. This means that they are not particularly knowledgeable about DDPA but do have a strong sentiment toward it which is either positive (affectively literate positive) or negative (affectively literate negative). A fifth category of orientation toward DDPA are conceptually literate individuals. This group is characterized by a high level of conceptual understanding of DDPA but lacks a clear view as to whether it is a good or bad practice. Finally, citizens who possess limited knowledge and have no clear evaluation of DDPA as positive or negative are classified as DDPA illiterate.
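The six categories of this a priori typology can be written out as a small lookup, which may help when reading Table 1. The sketch below is only illustrative: the function name, the boolean high/low split for understanding, and the three string labels for evaluation are assumptions made for this example and are not part of the study's measurement.

```python
def classify_ddpa_literacy(high_understanding: bool, evaluation: str) -> str:
    """Map a (CU level, EP valence) pair onto the a priori typology.

    `high_understanding` and the labels "positive", "negative", "neutral"
    are hypothetical simplifications of the CU and EP dimensions.
    """
    if evaluation not in {"positive", "negative", "neutral"}:
        raise ValueError(f"unknown evaluation: {evaluation!r}")

    if evaluation == "neutral":
        # No clear evaluative perception: the label depends on understanding only.
        return "conceptually literate" if high_understanding else "illiterate"

    # Clear positive or negative evaluation.
    base = "fully literate" if high_understanding else "affectively literate"
    return f"{base} ({evaluation})"


if __name__ == "__main__":
    for cu in (True, False):
        for ep in ("positive", "negative", "neutral"):
            print(cu, ep, "->", classify_ddpa_literacy(cu, ep))
```

Two levels of understanding crossed with three evaluations yield the six cells described in the paragraph above.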

This categorization allows us to explore how prevalent the sub-groups are and, in the next step, how they behave in the context of DDPA (e.g., who is more likely to engage with campaign ads or avoid them). Given that behaviors are determined by the interplay of cognitive (CU) and affective (EP) processes (Malhotra, Citation2005), we anticipate differences in behavioral outcomes, although the extent of the variation remains uncertain.

How DDPA literacy predicts campaign ad (dis-) engagement

Following the literature on persuasion knowledge (e.g., Boush et al., Citation2009) and political knowledge (Fowler et al., Citation2022), we expect that different types of DDPA literate citizens will engage in different behaviors during a political campaign. Other variables may influence people’s behavioral responses, such as privacy concerns, and (political) self-efficacy. Given the study’s focus, we outline our expectations in which interacting levels of conceptual understanding and evaluative perceptions matter for ad engagement or avoidance.

Once individuals become aware of a persuasion attempt, they evaluate it based on their beliefs, which subsequently influences their behavioral response (Friestad & Wright, Citation1994; Kirmani & Campbell, Citation2004). In the context of online behavioral advertising, this evaluation might refer to a risk/benefit assessment (Ham, Citation2017). We argue that this same assessment applies to data-driven election campaigns. We focus on commonly studied advertising responses like ad avoidance (e.g., Baek & Morimoto, Citation2012) and ad engagement (Voorveld et al., Citation2018), which represent opposite ends of a continuum (Kelly et al., Citation2020). Ad avoidance refers to a behavior where the recipient aims to escape the advertisement (Speck & Elliott, Citation1997), while ad engagement involves actively being attracted to the ads (Calder et al., Citation2009).

Based on previous literature, we can formulate expectations for some typology sub-groups. Our insights, however, are limited. For example, for fully literate people, ad (dis-) engagement may be explained by high levels of CU. While we would expect that higher political sophistication levels are connected with more engagement (Norris, Citation2000), previous research has shown that those who understand the persuasion intent of an ad (i.e., those with higher levels of understanding; van Noort et al., Citation2012), or those who have activated their persuasion knowledge (Kruikemeier et al., Citation2016), are less likely to distribute or engage with political ads on social media. Furthermore, the political knowledge literature suggests that if citizens have a high level of CU of advertising techniques, they are more likely to evaluate the ads negatively (Fowler et al., Citation2022, p. 152). Hence, we would expect less engagement. In sum, we are unsure about the (dis-) engagement of fully literates but would expect higher CU levels to be connected with negative DDPA evaluations.

Meanwhile, affectively literate citizens have strong feelings about DDPA and their orientations will be influenced primarily by their EPs of DDPA. Insights from political advertising suggest that attitudes shape perceptions of politicians and impact voting intentions (Dermody & Scullion, Citation2005). Positive perceptions (e.g., relevance) of Facebook ads were related to more ad engagement, while the opposite can lead to ad avoidance (Van den Broeck et al., Citation2020). Additionally, attitudes toward social media advertising (e.g., the tactics or strategies) in general are drivers of ad engagement (Zhang & Mao, Citation2016). More in-depth research is needed on the extent to which attitudes toward DDPA impact ad (dis-) engagement (Ham & Nelson, Citation2019).

Conceptually literate and illiterate citizens share a neutral feeling toward DDPA, which may explain their (dis-) engagement. Previous studies (e.g., Kruikemeier et al., Citation2020) showed that most internet users feel neutral about personal data collection and usage, suggesting that their privacy concerns may not be strong enough to influence their behavior. This indifference toward DDPA may make citizens less likely to alter their behavior, regardless of their level of understanding.

Since we could not define clusters of DDPA-literate citizens beforehand, this research is exploratory. Based on the insights provided by the literature, we formulated the following research question instead of proposing hypotheses.

RQ1:

To what extent do citizens with different levels of conceptual understanding and evaluative perceptions of DDPA cluster in different groups and to what extent do these different clusters predict engagement with and avoidance of political ads?

Methods

Sample and procedure

To identify a voter typology based on varying levels of CU and EPs and describe how they are linked to levels of ad engagement and ad avoidance, we gathered data in Germany during the federal elections in August and September 2021. The study was reviewed by the Ethics Review Board of the University of Amsterdam (filed as 2021-PCJ-13845), and the data were collected by Respondi AG. We relied on wave 1 and wave 3 of a four-wave panel survey. Both are pre-election waves, all relevant questions were asked in these two waves, and the waves were conducted roughly one month apart. The survey was part of a larger project: NORFACE DATADRIVEN. Quotas were used for age, gender, and education. A total of 1,914 (Nw1) respondents completed the online survey in wave 1, and 1,303 (Nw3) respondents participated in wave 3. This corresponds to a response rate of 68% and an attrition rate of 32%. On average, respondents were 47 years old (SD = 15.3), and 50.7% were female. The sample is fairly representative of the German population at large.
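As a quick check of the reported rates, the share of wave 1 respondents who also took part in wave 3 can be recomputed from the two sample sizes. The variable names are chosen for this illustration only.

```python
n_wave1 = 1914  # respondents completing wave 1
n_wave3 = 1303  # respondents participating in wave 3

retention = n_wave3 / n_wave1  # share of wave 1 respondents retained
attrition = 1 - retention      # share lost between the waves

print(f"retention: {retention:.1%}, attrition: {attrition:.1%}")
# prints "retention: 68.1%, attrition: 31.9%"
```

Rounded to whole percentages, this matches the 68% and 32% reported above.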

Measures

All items were measured in both waves. In this section, they are reported as measured in wave 1 because most analyses were conducted with variables from wave 1. The exact measures and the measures in wave 3 are available in the online supplementary materials.

Conceptual understanding of DDPA

Informed by different disciplines, the survey contains many questions on citizens’ conceptual understanding and evaluative perceptions and thereby improves our understanding of DDPA literacy. Based on previous literature (e.g., Masur, Citation2020; Rozendaal et al., Citation2016; Smit et al., Citation2014), we measured CU of DDPA with the following nine components:

(1) understanding the persuasive intent of targeted political campaign advertisements,

(2) recognizing the political source,

(3) understanding the persuasive tactics,

(4) understanding the economic model, and

(5) reflective awareness of the effectiveness of targeted political campaign advertisements for (a) oneself and (b) others.

These components were each measured with one to four items on a Likert scale ranging from 1 = strongly disagree to 7 = strongly agree. Responses thus reflect subjective self-assessments.

The remaining four components of CU were based on Masur’s (Citation2020, p. 262) conceptual model of online privacy literacy, and were assessed with one or two statements each. The specific items were self-developed to make sure they applied to our study’s DDPA context. The respondents were asked to indicate whether they thought that the statements were true (1 = incorrect, 2 = correct, 3 = do not know). They concerned:

(6) knowledge about data collection and surveillance practices of political parties during election campaigns,

(7) knowledge about privacy and data protection laws with regard to targeted political campaigns,

(8) knowledge about data collection, analysis, and sharing practices of online service providers for targeted political campaign advertisements, and

(9) technical knowledge related to privacy and data protection regarding targeted political campaign advertisements.

Correct answers were coded 1, incorrect answers and do not know were coded 0. These responses, therefore, reflect objective or factual knowledge.
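The recoding of the knowledge items can be sketched as follows. This is a minimal illustration, assuming that each statement has a known truth value and that a response counts as a correct answer when the respondent's judgment matches it; the function name and the `statement_is_true` answer key are hypothetical and do not come from the questionnaire.

```python
# Response options as described in the text.
INCORRECT, CORRECT, DONT_KNOW = 1, 2, 3


def score_item(response: int, statement_is_true: bool) -> int:
    """Return 1 for a correct answer, 0 for a wrong answer or "do not know"."""
    if response not in (INCORRECT, CORRECT, DONT_KNOW):
        raise ValueError(f"unexpected response code: {response}")
    if response == DONT_KNOW:
        return 0
    # Assumed answer key: judging a true statement as correct, or a false
    # statement as incorrect, counts as the right answer.
    keyed_response = CORRECT if statement_is_true else INCORRECT
    return 1 if response == keyed_response else 0


# Hypothetical respondent answering three statements (true, false, true).
answers = [CORRECT, CORRECT, DONT_KNOW]
answer_key = [True, False, True]
scores = [score_item(r, k) for r, k in zip(answers, answer_key)]
print(scores)  # [1, 0, 0]
```

Treating "do not know" as 0 means the resulting score reflects demonstrated knowledge only, which is in line with the description of these items as objective or factual knowledge.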

Evaluative perception of DDPA

To account for the EP dimension of DDPA literacy, we used a seven-point semantic differential to gauge respondents’ views regarding appropriateness, relevance, likability, and distrust of targeting people with political campaign information. EP measures are based on Boerman et al. (Citation2018, p. 675), in which appropriateness refers to a moral evaluation of sponsored content, and liking refers to an overall attitude toward advertisements. We assess appropriateness with four items (e.g., unacceptable or acceptable), and liking with two items (e.g., annoying or pleasant). We refer to the tendency to question claims of the sponsored content as distrust (see Footnote 1) and measure it with one item (i.e., untrustworthy–trustworthy). Additionally, we added relevance as a DDPA context-specific concept, which we measured with three items (e.g., irrelevant or relevant). We computed scales when CU or EP sub-components were measured with more than one item.

Dependent variables

Political ad engagement

To measure ad engagement, we relied on self-reports and asked respondents how often they had shared or clicked on political ads on social media in the last three months. The variable was reverse-coded for intuitive interpretation (1 = never, 5 = daily), and a scale variable was computed (inter-item correlation = .63, MW1 = 1.36, SDW1 = 0.75).
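Reverse-coding and scale construction of this kind can be illustrated with a short sketch. It assumes that the raw items ran from 1 = daily to 5 = never and that the scale is the mean of the two items (sharing and clicking); neither detail is stated explicitly in the text, and the function names are hypothetical.

```python
def reverse_code(value: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Flip a rating so that the lowest raw value becomes the highest."""
    if not scale_min <= value <= scale_max:
        raise ValueError(f"value {value} outside {scale_min}-{scale_max}")
    return scale_min + scale_max - value


def scale_score(items: list[int]) -> float:
    """Average the (already reverse-coded) items into one scale value."""
    return sum(items) / len(items)


# Hypothetical respondent: never shares (raw 5), rarely clicks (raw 4).
raw_shared, raw_clicked = 5, 4
engagement = scale_score([reverse_code(raw_shared), reverse_code(raw_clicked)])
print(engagement)  # 1.5
```

After reverse-coding, higher values indicate more frequent engagement, so a value of 1.5 sits close to "never".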

Political ad avoidance

To measure self-reported avoidance of political online advertisements, we asked respondents to indicate for eight statements how often they avoid ads (1 = never, 7 = very often), for example, “I have refrained from visiting certain websites because they pass on my data to political parties” (scale developed by Noetzel et al., Citation2022, based on Boerman et al., Citation2021, and Penney, Citation2017). No multicollinearity was detected between ad avoidance and the independent variables. The items were combined into a scale (eigenvalue = 5.28, explained variance = 66%, Cronbach’s α = .93, MW1 = 3.17, SDW1 = 1.78).

Analytical strategy

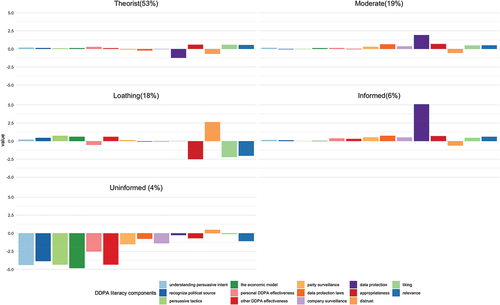

First, to identify a voter typology according to different levels of CU and EPs, we used a latent profile analysis (LPA) on the responses from wave 1 (N = 1914; see Footnote 2). Using a mix of various measurement level variables, LPA aims to identify latent subpopulations based on certain variables (Spurk et al., Citation2020). LPA thereby assumes that, based on probabilities, people can be sorted into categories or types that have different attributes. In our study, the different attributes refer to the varying levels of understanding and evaluation, resulting in different profiles. We used Latent Gold (version 6.0) and included all CU and EP variables mentioned earlier. We ran eight rounds of LPAs to fit a model to our data and entered 5,000 random sets of starting values to ensure validity and robustness in each of our solutions. To identify the best model fit, we relied on commonly used fit indices and applied the parsimony principle, which means that the solution with the fewest classes is preferred. We decided that the five-profile solution was most appropriate according to its fit indices (in the online supplementary materials). Moreover, the five-profile solution offers substantively adequate profiles that are relatively different in content. We stored the best solution of group membership as a new variable (nominal measurement level) and computed dummy variables for each profile.
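The last step, turning probabilistic profile membership into a nominal variable and a set of dummy variables, might look like the sketch below. It assumes modal assignment (each respondent is placed in the profile with the highest posterior probability), which is a common convention but is not confirmed by the text; the posterior probabilities are made up, and the profile labels are the ones introduced in the Results section.

```python
PROFILES = ["theorist", "moderate", "loathing", "informed", "uninformed"]


def modal_profile(posteriors: list[float]) -> str:
    """Assign the profile with the highest posterior probability (assumed rule)."""
    if len(posteriors) != len(PROFILES):
        raise ValueError("expected one probability per profile")
    best = max(range(len(posteriors)), key=lambda i: posteriors[i])
    return PROFILES[best]


def dummy_code(profile: str) -> dict[str, int]:
    """Create one 0/1 indicator per profile for a single respondent."""
    if profile not in PROFILES:
        raise ValueError(f"unknown profile: {profile!r}")
    return {name: int(name == profile) for name in PROFILES}


# Hypothetical posterior probabilities for one respondent.
membership = modal_profile([0.10, 0.20, 0.60, 0.05, 0.05])
print(membership)              # loathing
print(dummy_code(membership))  # only the "loathing" indicator equals 1
```

In a regression, one of the five indicators is left out as the reference category, which is why alternating the omitted profile changes the comparisons that the coefficients express.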

Second, we investigated the extent to which belonging to a specific voter profile affects ad engagement and ad avoidance. To make use of the panel-survey structure, we first looked at the mean differences between the two waves for each voter profile and later calculated a lagged dependent variable model to predict ad engagement and ad avoidance in wave 3.
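A lagged dependent variable model of this kind can be written, in generic form, as the equation below. The notation is introduced here for illustration and is not taken from the article: $Y_{i,W3}$ and $Y_{i,W1}$ stand for respondent $i$'s ad engagement (or ad avoidance) in waves 3 and 1, $D_{ik}$ is the dummy for membership in profile $k$, $r$ is the omitted reference profile, and $\mathbf{X}_i$ collects the control variables.

```latex
Y_{i,W3} = \beta_0 + \beta_1 \, Y_{i,W1}
         + \sum_{k \neq r} \gamma_k \, D_{ik}
         + \boldsymbol{\delta}^{\top} \mathbf{X}_i
         + \varepsilon_i
```

Because the wave 1 score is held constant, each $\gamma_k$ can be read as the difference in the wave 3 outcome between profile $k$ and the reference profile among respondents with the same starting level.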

Last, we regressed the resulting voter profiles on socio-demographics and other individual predictors to obtain more nuanced insights into the voter profiles. To do so, we used a multiple logistic regression.

Results

This study used Latent Profile Analysis to identify latent sub-groups based on DDPA-literacy sub-scales. Where possible, composite variables were employed instead of single items to simplify the model’s interpretation. Table A2.6 in the online supplementary materials shows the descriptive statistics for the understanding and evaluation sub-scales.

Interpretation of the extracted profiles

Answering the first part of the overarching research question, the LPA distinguished five voter profiles. They exhibit the most variation on the different components of CU and less on the evaluative perceptions of DDPA as shown in , hence most profiles are referred to by conceptual understanding peculiarities. The first extracted profile represents the biggest group at 53%. These individuals can be characterized as theorists because their self-reported understanding is high, but they score low on questions regarding applied knowledge. Hence, in practice, they might not be able to put their theoretical knowledge to use. They are neutral toward DDPA. The second extracted profile, moderate (19%), demonstrates high to medium self-reported understanding levels, along with medium levels of actual knowledge. They acknowledge the relevance of DDPA while simultaneously expressing distrust toward it. The third profile, loathing (18%), has knowledge about DDPA but detests it and is highly distrustful. Individuals in the fourth profile, informed (6%), distinguish themselves from other citizens through their high levels of knowledge (both self-reported and applied knowledge). They see the relevance of DDPA but are otherwise neutral toward it. Finally, the fifth profile (4%), uninformed, has virtually no knowledge about DDPA, and evaluates DDPA negatively.

Mapping the five voter groups

Next, we examined the overlap between these five groups and the previously conceptualized dimensions of DDPA literacy. The most striking observation to emerge from this comparison was that no voter profile evaluates data-driven political advertising positively. Most people seem to be rather neutral (see Note 3). Hence, these five voter profiles only map onto some of the literacy dimensions, namely fully literate (negative), affectively literate (negative), and conceptually literate. More than three-quarters of the respondents could be regarded as conceptually literate because the largest share of citizens (theorist, moderate, and informed) report at least medium levels of knowledge about DDPA (irrespective of nuances in subjective vs. objective knowledge within a voter profile) and a neither positive nor negative evaluation of data-driven political advertising. Conceptually literate groups follow a common structure of medium self-perceived (subjective) understanding levels but differ greatly in their actual (objective) understanding levels. This means that the largest variation in our data appeared in the responses to the correct/incorrect statements in the CU sub-scale, and suggests that the majority struggle with actively protecting their online privacy. Table A2.4 in the online supplementary materials includes exact wording for the CU sub-scale. Loathing individuals fit into the fully literate (negative) dimension because they show high levels of CU but evaluate DDPA negatively. Lastly, uninformed citizens fit into the affectively literate (negative) dimension because of their low CU levels and negative perceptions of DDPA. This means that only loathing and uninformed individuals dislike DDPA, think it is inappropriate, and are highly distrustful of it. Both profiles report low awareness of the effectiveness of DDPA on themselves. Hence, they do not believe that targeted political ads considerably influence them personally. Finally, no profile could be categorized as illiterate, as we did not find low understanding levels paired with indifference to DDPA in our sample.
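The mapping logic described in this section can be sketched in a few lines of Python. This is an illustrative sketch only, not the procedure used in the study: the function names, the 7-point scale, and the standard deviation are hypothetical, indifference is treated as a neutral evaluation, and the "(positive)" types are inferred by symmetry because no profile in the sample fell into them. The neutral band follows the cutoff rule stated in Note 3 (one standard deviation above and below the mid-point).

```python
def evaluation_category(score: float, midpoint: float, sd: float) -> str:
    """Label an evaluation score relative to the scale mid-point.

    Scores within one standard deviation of the mid-point count as neutral
    (the cutoff rule stated in Note 3).
    """
    if score > midpoint + sd:
        return "positive"
    if score < midpoint - sd:
        return "negative"
    return "neutral"


def literacy_type(high_understanding: bool, evaluation: str) -> str:
    """Map understanding (high/low) and evaluation onto the six-type typology."""
    if evaluation == "neutral":
        # Assumption: "indifference" in the text is treated as a neutral evaluation.
        return "conceptually literate" if high_understanding else "illiterate"
    # Assumption: the "(positive)" variants mirror the "(negative)" ones.
    base = "fully literate" if high_understanding else "affectively literate"
    return f"{base} ({evaluation})"


# Hypothetical values: 7-point scale (mid-point 4.0) and a sample SD of 1.2.
loathing = literacy_type(True, evaluation_category(2.1, midpoint=4.0, sd=1.2))
uninformed = literacy_type(False, evaluation_category(2.5, midpoint=4.0, sd=1.2))
theorist = literacy_type(True, evaluation_category(4.3, midpoint=4.0, sd=1.2))
```

Under these assumed values, the three example profiles land in the fully literate (negative), affectively literate (negative), and conceptually literate types, respectively.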

Next, we regressed the five voter profiles on socio-demographics and on the variables that we deemed the most impactful based on the literature: privacy concerns, political self-efficacy, and political knowledge. Most notably, the results revealed that privacy concerns can have adverse effects. The more privacy concerns one has, the more likely one is to belong to the loathing group, while fewer privacy concerns seem to increase the likelihood of corresponding to the uninformed profile. As the two groups evaluate DDPA negatively but differ strongly in their CU levels, it may be that this difference in privacy concerns is linked to knowledge. While it is not surprising that lower levels of formal education appear to be connected with membership in the uninformed profile, they are also associated with being part of the loathing group. Furthermore, low political self-efficacy increases the likelihood of belonging to the uninformed profile. This may imply that if people feel like they have no say in the political landscape, they may be less inclined to educate themselves about the implications of data-driven election campaigns. Lastly, young people tend to be part of the moderate and informed profiles, while older people cluster in the loathing group. The members of the moderate, informed, and loathing profiles share a high conceptual understanding of DDPA but differ in their evaluations of DDPA. This suggests a potential generational gap in DDPA acceptance as shown in .

Table 2. Logistic regression describing the five voter profiles.

Predicting ad engagement and ad avoidance

To answer the second part of the research question, that is, to what extent the different voter profiles predict ad engagement and ad avoidance, we first ran ten regression models with lagged dependent variables. Each regression included the lagged score of either ad engagement or ad avoidance. This method reduces selection bias, reverse causation, and other common problems in cross-sectional studies because we explain changes in the dependent variable between the two waves at the individual level. As both dependent variables are positively skewed, we report findings based on 5,000 bootstrap samples. No multicollinearity was detected, and variance inflation factor (VIF) values were below 10 (Tabachnick et al., 2019). Control variables that may explain variance in ad engagement and ad avoidance were included to increase the robustness of our findings. To explore the data and avoid reducing the predictive power of the independent variables, we alternated voter profiles as reference categories (see Note 4). By doing so, we observe how the other voter profiles differ from the respective reference profile.
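To make the modeling approach concrete, the following Python sketch fits a lagged-dependent-variable regression and computes a percentile bootstrap confidence interval. It is a simplified illustration under stated assumptions, not the models reported here: it uses a single dummy (loathing versus a moderate reference category), omits all control variables, and runs on synthetic data. The variable names (`avoid_w1`, `avoid_w3`), the helper `make_data`, and the coefficient values are hypothetical.

```python
import random


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def design(rows, reference="moderate"):
    """Columns: intercept, lagged DV (wave 1), dummy for the non-reference profile."""
    X = [[1.0, r["avoid_w1"], 0.0 if r["profile"] == reference else 1.0]
         for r in rows]
    y = [r["avoid_w3"] for r in rows]
    return X, y


def bootstrap_ci(rows, n_boot=5000, alpha=0.05, seed=1):
    """Percentile bootstrap interval for the profile dummy coefficient."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        sample = [rng.choice(rows) for _ in rows]  # resample respondents
        estimates.append(ols(*design(sample))[2])
    estimates.sort()
    lower = estimates[int(alpha / 2 * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def make_data(n=200, noise=0.3, seed=0):
    """Synthetic two-wave data (hypothetical coefficients 0.5, 0.6, 0.4)."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        profile = "loathing" if i % 2 == 0 else "moderate"
        w1 = rng.uniform(1, 7)
        dummy = 1.0 if profile == "loathing" else 0.0
        w3 = 0.5 + 0.6 * w1 + 0.4 * dummy + rng.gauss(0, noise)
        rows.append({"profile": profile, "avoid_w1": w1, "avoid_w3": w3})
    return rows
```

Calling `ols(*design(make_data()))` returns the intercept, the lagged coefficient, and the profile coefficient, and `bootstrap_ci(make_data())` returns an interval for the profile coefficient. Alternating the `reference` argument corresponds to switching the reference category. Entering a dummy for every profile alongside the intercept would make the design matrix singular, which is the problem described in Note 4.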

The most surprising finding is that we do not find significant differences between the five voter profiles in ad engagement, but we do find that the loathing group is more likely to avoid campaign ads compared to the moderate group. While these two groups are similar in size, the other profiles vary in size. Hence, using small voter profiles as a reference category might not reveal significant effects (see Note 5), which should be taken into consideration when interpreting the results as shown in and .

Table 3. Predicting ad engagement in wave 3.

Table 4. Predicting ad avoidance in wave 3.

The lagged values of ad engagement and ad avoidance in wave 1 explain most of the variance in wave 3. Furthermore, political self-efficacy significantly predicts engagement with political ads, while people who worry more about their privacy and are less knowledgeable about politics seem to avoid political ads online.

Discussion and conclusion

In this exploratory study, we provide deeper insights into the DDPA literacy of citizens. Informed by political and persuasion knowledge literature, we first draft a typology based on conceptual understanding and evaluative perceptions of DDPA and then examine how belonging to a typological sub-group may be related to political ad (dis)engagement.

The proposed conceptual DDPA literacy typology had two goals: to sort respondents based on theory and contrast the categorization with reality, and to serve as a starting point to understand the DDPA literacy of the electorate. The typology consists of six groups with either high or low understanding and positive, negative, or neutral evaluations. The results of the latent profile analysis indicate that real-world voter groups partially fit into this conceptualization. The value of the typology thus lies in its ability to ascertain which theoretically deduced types are observable in real-world data. Results imply that DDPA literacy is a nuanced concept and distinct from the idea, often studied during the era of pre-online data-driven campaigns, that higher political sophistication leads to greater engagement (e.g., Norris, 2000).

First, and most notably, our data show that none of the five profiles evaluates DDPA positively. Citizens seem unanimously neutral or negatively inclined toward DDPA. This is in line with the findings of Kruikemeier et al. (2020) on users’ neutrality toward data collection. Loathing and uninformed are the only groups that clearly evaluate DDPA negatively. While the former show higher levels of CU, the latter report virtually no understanding of DDPA. It thus seems that DDPA is evaluated negatively irrespective of high or low understanding levels. Thus, we partially confirm the finding of Fowler et al. (2022) that higher understanding levels are connected to negative ad evaluations, and our results contrast with the finding of Dobber et al. (2019) that higher knowledge levels are associated with more favorable attitudes toward DDPA. By investigating CU and EP levels using extensive measurement scales, we make a valuable contribution to the literature. However, future research should validate our proposed DDPA literacy components and compare data protection regulations across different countries to ascertain if positive assessments of data-driven political advertising remain absent.

Second, most people can be considered conceptually literate about DDPA, which allows for two observations: The overall level of DDPA literacy may no longer be as concerning as suggested in prior studies on behavioral commercial advertising (Ham, 2017; Smit et al., 2014), and the electorate does not appear as differentiated in their conceptual understanding (and evaluative perceptions) as one might anticipate. Thus, voters do learn about new campaigning techniques, albeit not to the extent that scholars wish they would.

Although conceptually literate, all respondents show higher subjective than objective understanding of data-driven campaigning. This means voters feel like they have more knowledge than they actually have. This has been observed in previous research on political advertising literacy (Nelson et al., 2021), and our study reveals a similar pattern among citizens in the data-driven political advertising context.

Third, people across all profiles seem to think that DDPA does not influence them as much as it influences others. This finding of the third-person effect (Perloff, 1999) is in line with past research on response strategies to online behavioral advertising (Ham & Nelson, 2016). In the DDPA context, this means that voters may find it difficult to accurately assess its potential influence. In particular, because no one evaluates DDPA positively, there is reason to believe that citizens want to limit its influence on themselves. However, since most people do not know about technological possibilities to restrict DDPA influence, they may not be able to act. This finding underscores the importance of increasing the competence of citizens in reacting to data-driven advertising.

The findings of the multiple logistic regressions help us identify who belongs to which voter profile. The results reveal important differences across some fringe groups such as loathing and informed. Interestingly, younger citizens are more likely to be part of the informed profile, whereas older people tend to fall within the loathing cluster. The main difference between the two profiles is the rather neutral evaluation of DDPA by the former while the latter despises it and is highly distrustful. This generational gap in acceptance of data-driven advertising techniques is in line with the finding that younger generations more readily accept surveillance (Ham & Nelson, 2016). Furthermore, citizens with lower political self-efficacy and lower privacy concerns are more likely to belong to the uninformed profile. This finding strongly suggests the importance of fostering digital campaign competence by enhancing citizens’ feelings of control in the data-driven realm.

The second part of this research aimed to enhance our understanding of how these voter profiles behave in election campaigns. We did not find significant differences across voter profiles concerning ad engagement, but two profiles differ regarding ad avoidance. It may be that we did not find additional differences due to a lack of power and floor effects, especially for ad engagement. We anticipated finding different (dis)engagement behaviors between the profiles because previous research indicated that higher levels of understanding are linked to lower engagement with ads (van Noort et al., 2012). We find that the loathing group, with high understanding levels and pronounced distrust toward data-driven campaigns, tends to avoid campaign ads more than the moderate group, which has medium understanding levels and evaluates DDPA neutrally overall. Interestingly, low understanding levels coupled with negative evaluations present in the uninformed profile do not affect their ad avoidance behavior. Our study is one of the first to investigate the interplay of understanding and evaluation with extensive scales on behavioral responses in the context of DDPA. This sets it apart from previous research.

Limitations and future research

The generalizability of these results is subject to certain limitations. First, although we adhered to common thresholds of group sizes when conducting the LPA, the fringe groups, in particular, are small. This may imply that subsequent analyses were underpowered, and we may not have been able to detect small effects for predicting ad engagement and ad avoidance. Most respondents neither engaged with nor avoided political ads, which skewed both variables. Positive skewness, most pronounced in the floor effect of ad engagement, is common, but our data may have been heavily influenced by small sample sizes and large standard errors. Given that we lacked this information beforehand, future research should conduct a power analysis using effect sizes, mean differences, and standard errors to determine the required sample size for detecting significant effects in each voter profile.
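As a rough illustration of such a power analysis, the following Python sketch applies the standard normal-approximation formula for comparing two group means, n per group = 2((z_{1-α/2} + z_{power}) / d)², where d is the mean difference divided by the standard deviation. The function name and the input values are hypothetical and are not estimates from this study; an exact calculation based on the t distribution would give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(mean_diff: float, sd: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided comparison of two means."""
    d = mean_diff / sd  # standardized effect size (Cohen's d)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


# Hypothetical inputs: mean differences of 0.5 and 0.2 with SD = 1.0.
medium = n_per_group(0.5, 1.0)  # 63 respondents per group
small = n_per_group(0.2, 1.0)   # 393 respondents per group
```

The sketch shows how quickly the required group size grows as the expected difference shrinks, which is why small fringe profiles are the first to lose the ability to detect small effects.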

Second, using true/false statements to measure understanding may have triggered acquiescence among respondents. If respondents tend to agree with the researcher by answering that the statements are correct rather than incorrect, our findings may be biased. The technological understanding measure in particular might have been affected, which may have led us to conclude that most respondents lack this understanding. Although this bias is possible, studies have shown that people generally struggle to use technology to protect their online privacy and lack empowerment (Boerman et al., 2021).

Third, as this study integrates concepts from different disciplines, we could not control for all potentially relevant explanatory variables. Citizens’ privacy cynicism may have further explained the absence of behavioral responses of the loathing group despite their pronounced dislike. Feelings of frustration or hopelessness might explain why people do not act on their negative evaluations of DDPA (van Ooijen et al., 2022). For future studies that, unlike ours, expose respondents to political advertisements, Binder et al. (2022) recommend controlling for partisanship as an explanatory variable because voters tend to respond defensively if they receive data-driven ads from non-favored parties. These variables may be important for analyzing the influence of CU and EP on behavior.

Our findings have important implications. First, with its exploratory nature, this study offers insights into how citizens with different levels of CU and EPs of DDPA cluster. We thereby highlight to what extent variations among citizens exist. Future research might be inspired to investigate DDPA literacy in other European multi-party systems to assess the consistency of these voter profiles.

Second, we could not find the reinforcing link between knowledge and engagement of previous research (e.g., Friestad & Wright, 1994). How citizens relate to new technologies in data-driven political advertising seems to be complex and tainted by a pronounced dislike among some groups. The common doomsday attitude that surrounds data-driven advertising in politics might contribute to this. Political parties might be impacted if citizens’ negative perceptions of data-driven strategies spill over to the advertising message (Strycharz & Segijn, 2022). While DDPA has the potential to exploit vulnerabilities, exclusively perceiving its threats may hinder people from harnessing the full potential of DDPA for citizens. To some extent, voters could decide to receive personally relevant information. However, we argue that citizens’ cost/benefit appraisal should happen consciously. Future research may thus investigate whether behavioral intentions to use privacy-enhancing technology may be influenced by raising awareness about the potential positive sides of DDPA.

Third, political self-efficacy and privacy concerns appear to be important predictors of group membership. For example, individuals with heightened privacy concerns are more likely to be part of the loathing profile and avoid ads more compared to the moderate profile. Future studies might benefit from examining DDPA literacy in terms of what leads to ad (dis)engagement. For instance, they could consider factors like perceived issue severity or personal susceptibility (Strycharz et al., 2019). Moving forward, educational efforts to enhance self-efficacy and the ability to assess privacy concerns in the form of NGO-led workshops, educational games, or informational guides may prove crucial to building citizen competencies.

Citizens’ digital campaign competence is becoming increasingly important as the fourth era of political campaigning is in full bloom, and potentially deceptive strategies should be detected, evaluated, and responded to. Hence, educators should consider the interaction of understanding and evaluation, thereby shedding light on two components of voters’ data-driven political advertising literacy.

Open scholarship

This article has earned the Center for Open Science badge for Open Data. The data are openly accessible at https://osf.io/q5zuh/.

Acknowledgments

The authors would like to thank Fabio Votta as well as the anonymous reviewers and editorial staff for their excellent feedback, which greatly improved this manuscript. This research was funded in whole or in part by the Austrian Science Fund (FWF) 10.55776/I4818.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are available in OSF at https://osf.io/q5zuh/.

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/15205436.2024.2312202.

Notes on contributors

Sophie Minihold

Sophie Minihold is a joint Ph.D. candidate in Communication Science at the University of Vienna (Political Communication Research Group) and at the University of Amsterdam (Amsterdam School of Communication Research). She studied Communication Science at the University of Vienna (Bakk. Phil.) and at the University of Amsterdam (research master; MSc). She researches the digital campaign competence of citizens in data-driven election campaigns in European multi-party systems.

Sophie Lecheler

Sophie Lecheler is Professor of Political Communication at the Department of Communication at the University of Vienna. She previously worked at the Amsterdam School of Communication Research (ASCoR) at the University of Amsterdam, and the Department of Government at the London School of Economics and Political Science (LSE). She previously acted as chair to the Political Communication Division at the International Communication Association (ICA), and is currently associate editor of two journals, the International Journal of Press/Politics and Human Communication Research. Her research focuses on the impact of technology on political media and political journalism.

Rachel Gibson

Rachel Gibson is a Professor of Politics at the University of Manchester, having joined the Department of Politics and Institute for Social Change in December 2007. Between 2016 and 2019, she served as Director of the Cathie Marsh Institute for Social Research. She is currently running a five-year international project, Digital Campaigning and Electoral Democracy, that is funded by the European Research Council as an Advanced Investigator Grant. She completed her dissertation on the rise of anti-immigrant parties in Western Europe in the late twentieth century at Texas A&M University.

Claes de Vreese

Claes de Vreese is a Distinguished University Professor of AI & Society at the University of Amsterdam with a special focus on AI along with media and democracy. He is the incoming scientific director of the Digital Democracy Center at SDU and a member of the Danish Institute for Advanced Studies. He was the founding director of the Center for Politics and Communication and co-directs the University of Amsterdam initiatives Information, Communication & the Data Society (ICDS), Human(e) AI, and the Digital Media Methods Lab. He is a member of the ICA Executive Committee and served as president in 2020-21. His research interests span from media, public opinion, and electoral behavior to the role of data and AI in democratic processes.

Sanne Kruikemeier

Sanne Kruikemeier is a Professor in Digital Media & Society in the Strategic Communication Group of Wageningen University and Research. She is co-chair of the political communication division of the Netherlands-Flanders Communication Association. Her research focuses on the consequences and implications of online communication for individuals and society. Her research received funding from several science foundations, including an ERC starting grant, a NORFACE grant, as well as grants from various programs of the Netherlands Organization for Scientific Research. She also received awards from the International Communication Association, such as the CAT Dordick Dissertation Award.

Notes

1 The term skepticism is more commonly employed. However, because we use only one item for this concept, we label it as distrust, though the underlying conceptualization corresponds to skepticism.

2 An LPA for wave 3 showed voter profiles similar to those found for wave 1.

3 We define the cutoff points for neutral evaluations as one standard deviation above and below the mid-point.

4 Including all five voter profiles at once produced a singularity error, indicating multicollinearity (Plonsky & Ghanbar, 2018).

5 We ran both a zero-inflated Poisson regression and a negative binomial regression to account for the skew of ad engagement and ad avoidance. While the confidence intervals between the voter profiles shrank, the results did not change.

References

- Baek, T. H., & Morimoto, M. (2012). Stay away from me. Journal of Advertising, 41(1), 59–76. https://doi.org/10.2753/JOA0091-3367410105

- Bayer, J. (2020). Double harm to voters: Data-driven micro-targeting and democratic public discourse. Internet Policy Review, 9(1). https://doi.org/10.14763/2020.1.1460

- Binder, A., Stubenvoll, M., Hirsch, M., & Matthes, J. (2022). Why Am I getting this ad? How the degree of targeting disclosures and political fit affect persuasion knowledge, party evaluation, and online privacy behaviors. Journal of Advertising, 51(2), 206–222. https://doi.org/10.1080/00913367.2021.2015727

- Boerman, S. C., Kruikemeier, S., & Zuiderveen Borgesius, F. J. (2017). Online behavioral advertising: A literature review and research agenda. Journal of Advertising, 46(3), 363–376. https://doi.org/10.1080/00913367.2017.1339368

- Boerman, S. C., Kruikemeier, S., & Zuiderveen Borgesius, F. J. (2021). Exploring motivations for online privacy protection behavior: Insights from panel data. Communication Research, 48(7), 953–977. https://doi.org/10.1177/0093650218800915

- Boerman, S. C., & van Reijmersdal, E. A. (2016). Informing consumers about “hidden” advertising: A literature review of the effects of disclosing sponsored content. In P. De Pelsmacker (Ed.), Advertising in new formats and media (pp. 115–146). Emerald Group Publishing Limited. https://doi.org/10.1108/978-1-78560-313-620151005

- Boerman, S. C., van Reijmersdal, E. A., & Neijens, P. C. (2012). Sponsorship disclosure: Effects of duration on persuasion knowledge and brand responses: Sponsorship disclosure. Journal of Communication, 62(6), 1047–1064. https://doi.org/10.1111/j.1460-2466.2012.01677.x

- Boerman, S. C., van Reijmersdal, E. A., Rozendaal, E., & Dima, A. L. (2018). Development of the Persuasion Knowledge Scales of Sponsored Content (PKS-SC). International Journal of Advertising, 37(5), 671–697. https://doi.org/10.1080/02650487.2018.1470485

- Boush, D. M., Friestad, M., & Wright, P. (2009). Deception in the marketplace: The psychology of deceptive persuasion and consumer self-protection. Routledge.

- Calder, B. J., Malthouse, E. C., & Schaedel, U. (2009). An experimental study of the relationship between online engagement and advertising effectiveness. Journal of Interactive Marketing, 23(4), 321–331. https://doi.org/10.1016/j.intmar.2009.07.002

- Dermody, J., & Scullion, R. (2005). Young people’s attitudes towards British political advertising: Nurturing or impeding voter engagement? Journal of Nonprofit & Public Sector Marketing, 14(1–2), 129–149. https://doi.org/10.1300/J054v14n01_08

- Dobber, T., Fathaigh, R. Ó., & Zuiderveen Borgesius, F. J. (2019). The regulation of online political micro-targeting in Europe. Internet Policy Review, 8(4). https://doi.org/10.14763/2019.4.1440

- Dobber, T., Trilling, D., Helberger, N., & de Vreese, C. (2019). Spiraling downward: The reciprocal relation between attitude toward political behavioral targeting and privacy concerns. New Media & Society, 21(6), 1212–1231. https://doi.org/10.1177/1461444818813372

- Dommett, K. (2019). Data-driven political campaigns in practice: Understanding and regulating diverse data-driven campaigns. Internet Policy Review, 8(4). https://doi.org/10.14763/2019.4.1432

- Eagly, A. H., & Chaiken, S. (2005). Attitude research in the 21st century: The current state of knowledge. In D. Albarracín, B. T. Johnson, & M. P. Zanna (Eds.), The handbook of attitudes (pp. 743–767). Lawrence Erlbaum Associates Publishers.

- Fowler, E. F., Franz, M., & Ridout, T. N. (2022). Political advertising in the United States (2nd ed.). Taylor & Francis.

- Friestad, M., & Wright, P. (1994). The persuasion knowledge model: How people cope with persuasion attempts. Journal of Consumer Research, 21(1), 1. https://doi.org/10.1086/209380

- Goldstein, K., & Ridout, T. N. (2004). Measuring the effects of televised political advertising in the United States. Annual Review of Political Science, 7(1), 205–226. https://doi.org/10.1146/annurev.polisci.7.012003.104820

- Gran, A.-B., Booth, P., & Bucher, T. (2021). To be or not to be algorithm aware: A question of a new digital divide? Information, Communication & Society, 24(12), 1779–1796. https://doi.org/10.1080/1369118X.2020.1736124

- Ham, C.-D. (2017). Exploring how consumers cope with online behavioral advertising. International Journal of Advertising, 36(4), 632–658. https://doi.org/10.1080/02650487.2016.1239878

- Ham, C.-D., & Nelson, M. R. (2016). The role of persuasion knowledge, assessment of benefit and harm, and third-person perception in coping with online behavioral advertising. Computers in Human Behavior, 62, 689–702. https://doi.org/10.1016/j.chb.2016.03.076

- Ham, C.-D., & Nelson, M. R. (2019). The reflexive persuasion game: The persuasion knowledge model (1994–2017). In S. Rodgers & E. Thorson (Eds.), Advertising theory (2nd ed., pp. 124–140). Routledge. https://doi.org/10.4324/9781351208314-8

- Helberger, N., Dobber, T., & de Vreese, C. (2021). Towards unfair political practices law: Learning lessons from the regulation of unfair commercial practices for online political advertising. Journal of Intellectual Property, Information Technology and Electronic Commerce Law, 12(3), 273–296.

- Hudders, L., De Pauw, P., Cauberghe, V., Panic, K., Zarouali, B., & Rozendaal, E. (2017). Shedding new light on how advertising literacy can affect children’s processing of embedded advertising formats: A future research agenda. Journal of Advertising, 46(2), 333–349. https://doi.org/10.1080/00913367.2016.1269303

- Kefford, G., Dommett, K., Baldwin-Philippi, J., Bannerman, S., Dobber, T., Kruschinski, S., Kruikemeier, S., & Rzepecki, E. (2022). Data-driven campaigning and democratic disruption: Evidence from six advanced democracies. Party Politics, 29(3), 448–462. https://doi.org/10.1177/13540688221084039

- Kelly, L., Kerr, G., & Drennan, J. (2020). Triggers of engagement and avoidance: Applying approach-avoid theory. Journal of Marketing Communications, 26(5), 488–508. https://doi.org/10.1080/13527266.2018.1531053

- Kirmani, A., & Campbell, M. C. (2004). Goal seeker and persuasion sentry: How consumer targets respond to interpersonal marketing persuasion. Journal of Consumer Research, 31(3), 573–582. https://doi.org/10.1086/425092

- Kozyreva, A., Lewandowsky, S., & Hertwig, R. (2020). Citizens versus the internet: Confronting digital challenges with cognitive tools. Psychological Science in the Public Interest, 21(3), 103–156. https://doi.org/10.1177/1529100620946707

- Kruikemeier, S., Boerman, S. C., & Bol, N. (2020). Breaching the contract? Using social contract theory to explain individuals’ online behavior to safeguard privacy. Media Psychology, 23(2), 269–292. https://doi.org/10.1080/15213269.2019.1598434

- Kruikemeier, S., Sezgin, M., & Boerman, S. C. (2016). Political microtargeting: Relationship between personalized advertising on Facebook and voters’ responses. Cyberpsychology, Behavior, and Social Networking, 19(6), 367–372. https://doi.org/10.1089/cyber.2015.0652

- Kruschinski, S., & Haller, A. (2017). Restrictions on data-driven political micro-targeting in Germany. Internet Policy Review, 6(4). https://doi.org/10.14763/2017.4.780

- Lamprianou, I., & Ellinas, A. A. (2019). Emotion, sophistication and political behavior: Evidence from a laboratory experiment. Political Psychology, 40(4), 859–876. https://doi.org/10.1111/pops.12536

- Kaid, L. L. (2006). Political advertising in the United States. In L. L. Kaid & C. Holtz-Bacha (Eds.), The SAGE handbook of political advertising (pp. 37–64). Sage Publications, Inc. https://us.sagepub.com/en-us/nam/the-sage-handbook-of-political-advertising/book227468

- Malhotra, N. K. (2005). Attitude and affect: New frontiers of research in the 21st century. Journal of Business Research, 58(4), 477–482. https://doi.org/10.1016/S0148-2963(03)00146-2

- Malmelin, N. (2010). What is advertising literacy? Exploring the dimensions of advertising literacy. Journal of Visual Literacy, 29(2), 129–142. https://doi.org/10.1080/23796529.2010.11674677

- Masur, P. K. (2020). How online privacy literacy supports self-data protection and self-determination in the age of information. Media and Communication, 8(2), 258–269. https://doi.org/10.17645/mac.v8i2.2855

- Mazarr, M., Bauer, R., Casey, A., Heintz, S., & Matthews, L. (2019). The emerging risk of virtual societal warfare: Social manipulation in a changing information environment. RAND Corporation. https://doi.org/10.7249/RR2714

- Nelson, M. R., Ham, C. D., & Haley, E. (2021). What do we know about political advertising? Not much! Political persuasion knowledge and advertising skepticism in the United States. Journal of Current Issues & Research in Advertising, 42(4), 329–353. https://doi.org/10.1080/10641734.2021.1925179

- Noetzel, S., Stubenvoll, M., Binder, A., & Matthes, J. (2022). How to avoid targeted campaign ads. Predicting reactive and preventive avoidance behaviors toward targeted political advertising [Manuscript submitted for publication]. Department of Communication, University of Vienna.

- Norris, P. (2000). A virtuous circle: Political communications in postindustrial societies. Cambridge University Press.

- Penney, J. W. (2017). Internet surveillance, regulation, and chilling effects online: A comparative case study. Internet Policy Review, 6(2). https://doi.org/10.14763/2017.2.692

- Perloff, R. M. (1999). The third person effect: A critical review and synthesis. Media Psychology, 1(4), 353–378. https://doi.org/10.1207/s1532785xmep0104_4

- Plonsky, L., & Ghanbar, H. (2018). Multiple regression in L2 research: A methodological synthesis and guide to interpreting R2 values. The Modern Language Journal, 102(4), 713–731. https://doi.org/10.1111/modl.12509

- Roemmele, A., & Gibson, R. (2020). Scientific and subversive: The two faces of the fourth era of political campaigning. New Media & Society, 22(4), 595–610. https://doi.org/10.1177/1461444819893979

- Rozendaal, E., Lapierre, M. A., van Reijmersdal, E. A., & Buijzen, M. (2011). Reconsidering advertising literacy as a defense against advertising effects. Media Psychology, 14(4), 333–354. https://doi.org/10.1080/15213269.2011.620540

- Rozendaal, E., Opree, S. J., & Buijzen, M. (2016). Development and validation of a survey instrument to measure children’s advertising literacy. Media Psychology, 19(1), 72–100. https://doi.org/10.1080/15213269.2014.885843

- Sander, I. (2020). Critical big data literacy tools—Engaging citizens and promoting empowered internet usage. Data & Policy, 2. https://doi.org/10.1017/dap.2020.5

- Sax, M., Helberger, N., & Bol, N. (2018). Health as a means towards profitable ends: mHealth apps, user autonomy, and unfair commercial practices. Journal of Consumer Policy, 41(2), 103–134. https://doi.org/10.1007/s10603-018-9374-3

- Scammell, M., & Langer, A. I. (2006). Political advertising: Why is it so boring? Media Culture & Society, 28(5), 763–784. https://doi.org/10.1177/0163443706067025

- Smit, E. G., Van Noort, G., & Voorveld, H. A. M. (2014). Understanding online behavioural advertising: User knowledge, privacy concerns and online coping behaviour in Europe. Computers in Human Behavior, 32, 15–22. https://doi.org/10.1016/j.chb.2013.11.008

- Speck, P. S., & Elliott, M. T. (1997). Predictors of advertising avoidance in print and broadcast media. Journal of Advertising, 26(3), 61–76. https://doi.org/10.1080/00913367.1997.10673529

- Spurk, D., Hirschi, A., Wang, M., Valero, D., & Kauffeld, S. (2020). Latent profile analysis: A review and “how to” guide of its application within vocational behavior research. Journal of Vocational Behavior, 120, 103445. https://doi.org/10.1016/j.jvb.2020.103445

- Strömbäck, J. (2005). In search of a standard: Four models of democracy and their normative implications for journalism. Journalism Studies, 6(3), 331–345. https://doi.org/10.1080/14616700500131950

- Strycharz, J., van Noort, G., Smit, E., & Helberger, N. (2019). Protective behavior against personalized ads: Motivation to turn personalization off. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 13(2), Article 2. https://doi.org/10.5817/CP2019-2-1

- Strycharz, J., & Segijn, C. M. (2022). The future of dataveillance in advertising theory and practice. Journal of Advertising, 51(5), 574–591. https://doi.org/10.1080/00913367.2022.2109781

- Tabachnick, B. G., Fidell, L. S., & Ullman, J. B. (2019). Using multivariate statistics (7th ed.). Pearson.

- Tufekci, Z. (2014). Engineering the public: Big data, surveillance and computational politics. First Monday, 19(7). https://doi.org/10.5210/fm.v19i7.4901

- Van den Broeck, E., Poels, K., & Walrave, M. (2020). How do users evaluate personalized Facebook advertising? An analysis of consumer- and advertiser controlled factors. Qualitative Market Research: An International Journal, 23(2), 309–327. https://doi.org/10.1108/QMR-10-2018-0125

- van Noort, G., Antheunis, M. L., & van Reijmersdal, E. A. (2012). Social connections and the persuasiveness of viral campaigns in social network sites: Persuasive intent as the underlying mechanism. Journal of Marketing Communications, 18(1), 39–53. https://doi.org/10.1080/13527266.2011.620764

- van Ooijen, I., Segijn, C. M., & Opree, S. J. (2022). Privacy cynicism and its role in privacy decision-making. Communication Research, 009365022110609. https://doi.org/10.1177/00936502211060984

- Voorveld, H. A. M., van Noort, G., Muntinga, D. G., & Bronner, F. (2018). Engagement with social media and social media advertising: The differentiating role of platform type. Journal of Advertising, 47(1), 38–54. https://doi.org/10.1080/00913367.2017.1405754

- Zaller, J. R. (1992). The nature and origins of mass opinion. Cambridge University Press. https://doi.org/10.1017/CBO9780511818691

- Zhang, J., & Mao, E. (2016). From online motivations to ad clicks and to behavioral intentions: An empirical study of consumer response to social media advertising. Psychology & Marketing, 33(3), 155–164. https://doi.org/10.1002/mar.20862