Given the abundance of textual data, such as consumer reviews, online discussion forums, and research surveys, researchers in marketing, management, and information systems ought to use the available toolbox for analyzing textual data quantitatively. Yang and Subramanyam (2023) note that topic models can be notorious for their instability; that is, the generated results can be inconsistent and irreproducible across runs, even on the same dataset. To alleviate this instability and extract actionable insights from textual data, these authors proposed a Stable LDA (Latent Dirichlet Allocation) method, which incorporates topical word clusters into the topic model to steer model inference toward consistent results, and they demonstrated it on four textual datasets. The idea is to go beyond cursorily eliciting information from subjects’ textual responses and to analyze this so-called qualitative data quantitatively using the myriad tools available.
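Stable LDA itself is not reproduced here. As a minimal sketch of what "instability" means in practice, assuming each run of a topic model is summarized by the top words of each topic, one simple diagnostic matches every topic from one run to its most similar topic in another run by Jaccard similarity and averages those best-match scores. The function names and example word lists are hypothetical.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union of two word sets."""
    if not a and not b:
        return 1.0  # two empty topics are treated as identical
    return len(a & b) / len(a | b)


def topic_stability(run_a: list[list[str]], run_b: list[list[str]]) -> float:
    """Average, over the topics of run_a, of each topic's best Jaccard match in run_b.

    1.0 means every topic in run_a reappears exactly in run_b; lower values
    indicate that the runs disagree. This is a generic diagnostic, not the
    Stable LDA method.
    """
    sets_b = [set(topic) for topic in run_b]
    best = [max(jaccard(set(topic), s) for s in sets_b) for topic in run_a]
    return sum(best) / len(best)


# Hypothetical top-3 words per topic from two runs on the same corpus
# that differ only in their random seed.
run1 = [["price", "cheap", "value"], ["staff", "friendly", "helpful"]]
run2 = [["staff", "rude", "helpful"], ["price", "cheap", "value"]]

print(topic_stability(run1, run2))
```

Here one topic is reproduced exactly and the other only half-matches, giving 0.75. Because best matches are searched only on the run_b side, the score is not symmetric; averaging both directions is one straightforward variant.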

Quantitative textual analysis refers to methods that extend beyond (though sometimes incorporating) traditional humanistic “close readings.” For example, whereas a traditional Latinist might spend years pursuing answers to a set of research questions about Ovid’s corpus, the quantitative analyst might instead process that corpus and run a topic model on it to address the same or similar research questions. In the field of social research, there have been quantitative analyses of various kinds of textual data, such as newspapers or open-ended survey questions. In most of these cases, either the correlational approach or the dictionary-based approach has been employed. In the former approach, multivariate analyses are utilized to examine word correlations or co-occurrences and discover themes. For example, cluster analysis of words is used to find groups of words that appear frequently in the same document. In the latter approach, words or documents are assigned to preexisting categories following rules that are created by the researchers, and categorization results are analyzed quantitatively.
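The two approaches can be contrasted in a short Python sketch. The keyword dictionary, documents, and function names below are hypothetical, and the sketch deliberately omits stemming, stopword removal, and multi-word phrases that a real study would need to handle.

```python
import re
from collections import Counter
from itertools import combinations


def tokenize(text: str) -> list[str]:
    """Lowercase a document and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def dictionary_code(doc: str, dictionary: dict[str, set[str]]) -> set[str]:
    """Dictionary-based approach: assign every preexisting category whose
    researcher-defined keyword list matches at least one token in the document."""
    tokens = set(tokenize(doc))
    return {category for category, words in dictionary.items() if tokens & words}


def cooccurrence(docs: list[str]) -> Counter:
    """Correlational approach: count how many documents contain each word pair."""
    counts: Counter = Counter()
    for doc in docs:
        vocab = sorted(set(tokenize(doc)))  # sorted so each pair has one canonical order
        counts.update(combinations(vocab, 2))
    return counts


# Hypothetical open-ended responses and a hypothetical coding dictionary.
docs = [
    "The instructor was helpful and the slides were clear",
    "Slides were clear but the quizzes were stressful",
    "Helpful instructor, stressful quizzes",
]
dictionary = {
    "instructor": {"instructor", "professor", "helpful"},
    "assessment": {"quiz", "quizzes", "exam"},
}

for doc in docs:
    print(sorted(dictionary_code(doc, dictionary)))
print(cooccurrence(docs)[("clear", "slides")])
```

The dictionary approach imposes the researcher's categories up front, whereas the co-occurrence counts are the raw material from which clusters of words (and hence themes) can be discovered.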

Textual analysis involves analyzing not only the content but also the structure or design of a text: how its parts are organized into sentences, paragraphs, and sequences within a larger context. Textual content comes in many forms: semantically in paragraphs, headings, lists, and tables; visually in images, infographics, and fliers; and programmatically in alternative text, closed captions, and transcriptions. The purpose of textual analysis is to describe the content, structure, and functions of the messages contained in texts. In a business enterprise, textual analysis techniques such as sentiment analysis, topic detection, and urgency identification help organizations gain a deeper understanding of customers, improve the customer experience, and listen to customers at every step of their journey. According to Smith (2017), textual analysis is a transdisciplinary method present in a number of social science and humanities disciplines, including sociology, psychology, political science, health, history, and media studies. Textual analysis can include specialized methods within these disciplines, such as content analysis, semiotics, interactional analysis, and rhetorical criticism. Semiotics is the study of signs and symbols and how meaning is ascribed to them; it is similar to interactional analysis, which attempts to understand the ways texts are used by actors in various contexts. Rhetorical criticism focuses more on a single text and assesses how its constructed messages can be improved.

Content analysis is a major subset of overall textual analysis. By interpreting and coding textual material (documents, oral communication, and graphics), content analysis converts qualitative data into quantitative data and has been used to make replicable and valid inferences. In the era of big data, content analysis can be the most powerful tool in the researcher’s kit, and it is versatile enough to apply to textual, visual, and audio data. Given the massive explosion in permanent, archived linguistic, photographic, video, and audio data arising from the proliferation of technology, content analysis appears to be on the verge of a renaissance (Stemler, 2015). Smith (2017) notes that content analysis varies depending on whether a quantitative or qualitative approach is taken, but it broadly deals with assessing the material in a given text, whether by merely counting occurrences or by searching for deeper meaning.
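To illustrate the "counting occurrences" end of content analysis, the Python sketch below assumes each response has already been assigned a set of codes by a human coder and converts those codes into a 0/1 document-by-code matrix plus the share of documents mentioning each code. The response IDs and codes are hypothetical.

```python
from collections import Counter


def indicator_matrix(coded: dict[str, set[str]]) -> tuple[list[str], dict[str, list[int]]]:
    """Convert qualitative codes into a 0/1 document-by-code matrix.

    Returns the sorted list of codes (the column order) and one row per document.
    """
    codes = sorted(set().union(*coded.values()))
    matrix = {doc: [int(c in doc_codes) for c in codes] for doc, doc_codes in coded.items()}
    return codes, matrix


def code_prevalence(coded: dict[str, set[str]]) -> dict[str, float]:
    """Share of documents in which each code appears at least once."""
    counts = Counter(code for doc_codes in coded.values() for code in doc_codes)
    n = len(coded)
    return {code: counts[code] / n for code in sorted(counts)}


# Hypothetical coded responses: response ID -> codes assigned by a coder.
coded_docs = {
    "r1": {"knowledge", "humor"},
    "r2": {"knowledge"},
    "r3": {"engagement", "knowledge"},
    "r4": set(),  # a response that received no codes still counts in the denominator
}

codes, matrix = indicator_matrix(coded_docs)
print(codes, matrix)
print(code_prevalence(coded_docs))
```

Once in this form, the coded data can be fed to any standard quantitative procedure, which is the sense in which content analysis turns qualitative material into quantitative data.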

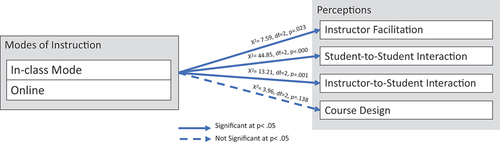

This study employs a systematic methodology to evaluate the effectiveness, influence, and consequences of different instructional approaches (specifically, traditional versus online education) within an introductory business analytics curriculum. The primary aim is to clarify their specific impact on student learning outcomes. The data were collected through a survey containing several questions, which captured student perceptions both on a five-point Likert scale and through open-ended responses. The quantitative analysis of these data has been published previously (Matta & Palvia, 2022). Figure 1 shows a high-level summary of the analysis of four student perceptions of the instruction modes.

Figure 1. Results of a comparison between perceptions of online and in-class modes of instruction using an Omnibus Friedman Test.
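For readers unfamiliar with the omnibus Friedman test named in Figure 1: each student rates every mode, the ratings are ranked within each student, and the test asks whether the mean ranks differ across modes. The Python sketch below assumes three conditions (in-class, online synchronous, online asynchronous) and uses hypothetical ratings, not the study's data. It omits the tie correction, so library routines such as SciPy's friedmanchisquare may report a somewhat different statistic when ties are present. With k = 3, the chi-square reference distribution has 2 degrees of freedom, whose upper-tail probability is exactly exp(-Q/2).

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Rank values within one subject (1 = lowest), averaging ranks for ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def friedman_k3(rows: list[list[float]]) -> tuple[float, float]:
    """Friedman statistic Q and p-value for n subjects rating k = 3 conditions.

    Q = 12 / (n k (k + 1)) * sum_j R_j^2 - 3 n (k + 1), without tie correction,
    where R_j is the rank sum of condition j.
    """
    k = 3
    if any(len(row) != k for row in rows):
        raise ValueError("each row must contain exactly three ratings")
    n = len(rows)
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    q = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    p = math.exp(-q / 2)  # chi-square survival function for 2 degrees of freedom
    return q, p


# Hypothetical five-point ratings from five students; columns are
# (in-class, online synchronous, online asynchronous).
ratings = [
    [5, 3, 4],
    [5, 4, 3],
    [4, 2, 3],
    [5, 3, 3],
    [4, 4, 2],
]

q, p = friedman_k3(ratings)
print(f"Q = {q:.2f}, p = {p:.3f}")
```

For these five hypothetical students the rank sums are 14.5, 8, and 7.5, giving Q = 6.1 and p ≈ 0.047. With so few subjects the chi-square approximation is rough, and a significant omnibus result would normally be followed by pairwise post-hoc comparisons, which are not shown.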

This study extends that work by systematically analyzing the open-ended comments collected from students on four perceptions: Instructor Facilitation, Student-to-Student Interaction, Instructor-to-Student Interaction, and Course Design.

Methodology

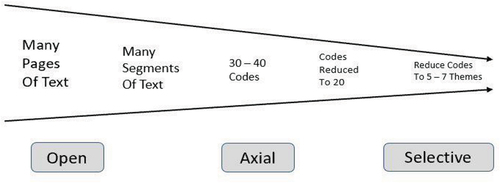

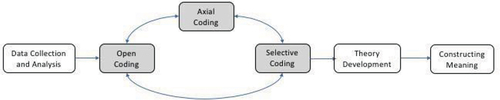

Williams and Moser (2019) state, “While quantitative research methods seek to count and provide statistical relevance related to how often a phenomenon occurs and then generalize the findings, qualitative research methods provide opportunities to delve into the phenomenon and determine its meaning while and after it occurs.” This research delves into the qualitative analysis of detailed comments from students who experienced the three modes of instruction, to improve our understanding of student perceptions and satisfaction. A key data-organizing structure in qualitative research is coding. Saldaña (2021) defines a code as follows: “A code in qualitative inquiry is most often a word or short phrase that symbolically assigns a summative, salient, essence-capturing, and/or evocative attribute for a portion of language-based or visual data.” Coding is essentially a three-part schema of codes, categories, and theory. Open, axial, and selective coding of the collected data facilitate the creation of categories and then theory. These are described in the figures above (Williams & Moser, 2019).
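The three-part schema of codes, categories, and theory can be modeled as a small hierarchy that keeps every higher-level abstraction traceable to the raw data segments that support it. The Python sketch below is one possible representation, not a feature of any QDA package named in this paper; the class names and example segments are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Code:
    """A word or short phrase attached to segments of language-based data."""
    label: str
    segments: list[str] = field(default_factory=list)


@dataclass
class Category:
    """A grouping of related codes (the product of axial coding)."""
    name: str
    codes: list[Code] = field(default_factory=list)

    def segment_count(self) -> int:
        """Number of raw data segments behind this category."""
        return sum(len(c.segments) for c in self.codes)


@dataclass
class Theme:
    """A high-level theme built from categories (the product of selective coding)."""
    statement: str
    categories: list[Category] = field(default_factory=list)

    def supporting_segments(self) -> list[str]:
        """Trace the theme back to the raw segments that support it."""
        return [s for cat in self.categories for c in cat.codes for s in c.segments]


# Hypothetical example: two codes grouped into one category and one theme.
timely = Code("prompt e-mail replies", ["Replied to my e-mails quickly"])
feedback = Code("homework feedback", ["Feedback on homework was meaningful",
                                      "Comments helped me fix errors"])
communication = Category("instructor communication", [timely, feedback])
theme = Theme("Instructor responsiveness", [communication])

print(communication.segment_count())
print(theme.supporting_segments())
```

Keeping the links explicit in this way lets a reader audit any high-level theme by walking back down to the student comments it summarizes.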

A myriad of computer-assisted tools (Yin, 2009), such as Atlas.ti, HyperRESEARCH, NVivo, and Ethnograph, are available for extensive analysis of textual data. These tools can help in coding and categorizing large amounts of narrative text. Other software that can conduct more extensive and sophisticated analysis of textual data includes proprietary packages such as A.nnotate, Aquad, MAXQDA, XSight, and Qiqqa; open-source tools such as Coding Analysis Toolkit (CAT), RQDA, Compendium, and Transana; and the web-based Dedoose.

We analyzed over 3000 words of open-ended student responses to eight questions intended not only to draw lessons for online education but also to elicit ideas for improving existing theories of online learning. For each of the four perceptions of instructional modes (instructor facilitation, student-to-student interaction, instructor-to-student interaction, and course design), students were asked what impressed them the most and what could be improved. We summarized their responses and developed patterns using open coding, in three steps. First, based on multiple reads of respondents’ answers to the survey questions, we aligned themes using color coding; to adjust for investigator bias, the second author then verified the codes and adjusted them as necessary. Second, we used the “5W1H” (who, what, where, when, why, and how) questions as a foundational way of exploring and examining the data to “list characterizing codes and categories attached to the text” (Flick, 2009, p. 311). Third, we counted the number of times each theme (code) appeared in the responses to each of the eight questions; these counts, or frequencies, point to the importance or criticality of the various themes. On completion of open coding, we engaged in axial coding to reduce the number of themes. At this second level of coding, we sifted through the data and refined and categorized it to create distinct thematic categories in preparation for selective coding. At the final level, selective coding, we further collapsed the identified categories into a few high-level themes relevant to our research context. In the next section, we use these high-level themes to propose emergent lessons for online modes of instruction. For each perception, we discuss key findings, the aspects that impressed students most, and what the instructor could have done better.
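The verification of codes by the second author is reported qualitatively. If two coders label the same segments independently, one common way to quantify their agreement before reconciling codes is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The Python sketch below assumes each segment receives exactly one code; the labels are hypothetical, and the paper does not state that a kappa was computed.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who each assign one code per segment.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each coder's code frequencies.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty list of segments")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # both coders used one identical code for every segment
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes assigned by two coders to the same ten segments.
coder_a = ["knowledge", "engagement", "knowledge", "humor", "engagement",
           "knowledge", "humor", "engagement", "knowledge", "engagement"]
coder_b = ["knowledge", "engagement", "engagement", "humor", "engagement",
           "knowledge", "knowledge", "engagement", "knowledge", "engagement"]

print(round(cohens_kappa(coder_a, coder_b), 2))
```

Here the coders agree on 8 of 10 segments (p_o = 0.8), chance agreement is p_e = 0.38, and kappa ≈ 0.68; disagreements flagged this way are the natural starting point for the adjustment discussion between coders.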

Instructor facilitation

This component captured whether the instructor stimulated intellectual effort and was involved in facilitating student learning. According to Baber (2020), Garrison (2016), and Eom and Ashill (2016), instructor facilitation has a significant impact on student satisfaction and perceived learning outcomes. Students discussed aspects that impressed them and those that needed improvement. Although appreciative of the instructor’s facilitation and effort, students complained about content overload in the online modes.

Analysis of the quantitative data for this factor showed that students perceived instructor facilitation of learning to be significantly better in the in-class mode than in either of the two online modes. As the emerging themes show, they cited the instructor’s passion, knowledge, engagement, and teaching style as salient.

Aspects that impressed students most

Students appreciated the instructor’s facilitation of instruction in the online mode: the organization, deliberation, dedication, engagement, involvement, responsiveness to e-mail messages, flexibility, adjustment (to calm the nerves), encouragement of class participation by all students through discussion boards (they noted that in face-to-face (F2F) instruction, class participation was dominated by extroverted students, putting introverted students at a disadvantage), provision of discussion forum summaries, demonstrated care for student learning and grades, sense of humor, good knowledge of the subject, adroit use of detailed PowerPoint slides during lectures, and patience. Selective coding of the detailed comments yielded the following pattern.

Caring, passionate, flexible, effective, and organized (7)

Knowledge (6)

Engagement (6)

Humor and teaching style (2)

Discussion board (2)

What the instructor could have done better

While students appreciated what the instructor did to facilitate learning, their commentary revealed that online teaching required additional scaffolding in the form of more checkpoints, more explanations with real-world examples, clearer communication, and more systematic, structured work to instill discipline among students. They also noted the need for better preparation for online synchronous teaching, with additional features such as audio clips on the PowerPoint slides. Additionally, students expressed a strong preference for proctored synchronous quizzes over timed asynchronous quizzes.

Nothing (12)

Need more reminders/explanations (5)

Need more real-life examples (3)

Have synchronous quizzes (instead of timed asynchronous quizzes) with all video cameras turned on (2)

Needed more preparation/effectiveness in using Zoom (2)

Difficult to fully comprehend in online mode (2)
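Because the methodology treats frequencies as an indicator of a theme's importance, counts like those above can be ranked directly. The Python sketch below uses the counts from this list (labels abbreviated) and, as an assumption, excludes "Nothing" so that shares are computed over substantive suggestions only. The counts are mentions rather than distinct students, so shares indicate relative emphasis, not proportions of respondents; the function name is hypothetical.

```python
# Counts from the "what the instructor could have done better" list above
# (labels abbreviated).
improvements = {
    "Nothing": 12,
    "Need more reminders/explanations": 5,
    "Need more real-life examples": 3,
    "Have synchronous quizzes with cameras on": 2,
    "Needed more preparation/effectiveness in using Zoom": 2,
    "Difficult to fully comprehend in online mode": 2,
}


def rank_themes(counts: dict[str, int],
                exclude: tuple[str, ...] = ("Nothing",)) -> list[tuple[str, int, float]]:
    """Rank themes by frequency (ties broken alphabetically) and report each
    theme's share of the mentions that remain after excluding `exclude`."""
    kept = {theme: c for theme, c in counts.items() if theme not in exclude}
    total = sum(kept.values())
    ranked = sorted(kept.items(), key=lambda item: (-item[1], item[0]))
    return [(theme, count, count / total) for theme, count in ranked]


for theme, count, share in rank_themes(improvements):
    print(f"{count:>2}  {share:6.1%}  {theme}")
```

With these counts, "Need more reminders/explanations" accounts for 5 of the 14 substantive mentions (about 35.7%).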

Student-to-student interaction

This component captured whether students considered their interactions with one another to be frequent, positive, constructive, and helpful in improving the quality of their learning outcomes. Interactions among students appeared to decline somewhat from the F2F mode but remained steady at that lower level in the online mode. Students were required to make presentations on selected topics in both the F2F and online synchronous modes. Presentations required judgment calls about content, style, and format, and included multiple-choice questions to be answered by other students; this facilitated student interaction in both the F2F and online synchronous modes. Students who were shy in class and in the synchronous mode felt more empowered to contribute and interact in the asynchronous mode. This phenomenon was also noted by Busteed (2021), who found that 32% of online students were more comfortable sharing opinions, compared with 17% of students in class. In the asynchronous mode, students particularly appreciated the online discussion forum for commenting on chapter contents. When a few motivated students posted useful information on almost every chapter of the textbook, interactions among students increased as fellow students responded with counter-comments. Such interactions facilitated the formation of virtual study groups, promoting peer-to-peer learning somewhat akin to that of physical study groups in F2F learning.

As expected, analysis of the quantitative data revealed that students perceived the interactions among themselves to be significantly better in class than in either of the online modes. They missed face-to-face interactivity, which was further diminished in the online synchronous mode when several students kept their video cameras off. Interestingly, although students perceived no significant difference between the two online modes, they rated student-to-student interaction higher in the asynchronous mode than in the synchronous mode. We believe this resulted from discussion forum activity and the creation of informal virtual study groups.

Aspects that impressed students most

Students liked the interactions that the online asynchronous discussion forum facilitated among them, even though the forum was driven mostly by a couple of motivated students. The instructor’s discussion of salient points raised in the forum was effective, particularly because the forum was student-driven: it carried issues that students faced and was therefore directly relevant to them. Student presentations in both in-class and online modes enabled useful interactions. Selective coding of detailed student comments tends to confirm these assertions:

Interaction and learning through student presentations in both modes (9)

Increased interactions through the discussion forum (5)

Interactions through e-mails and informal study groups beyond discussion forum (3)

What the instructor could have done better

Although most students disliked having their cameras on, they noted that doing so facilitated interaction among them. Eye contact, even virtual, fosters familiarity, which in turn encourages interaction. Without cameras, students hesitated to interact with unfamiliar peers and even with peers they knew. One emerging theme was a preference for group projects over the individual projects that had been adopted to avoid inequitable sharing of the workload. The patterns that emerged through selective coding were as follows:

Nothing (9)

Have a group project rather than individual presentations (6)

Need more interaction during synchronous Zoom classes, by requiring all videos to be on and through group chats (4)

Instructor-to-student interaction

This component captured whether students considered their interactions with the instructor to be frequent, positive, constructive, and helpful in improving the quality of their learning outcomes. Students reported that they missed the ability to ask questions in the online synchronous mode and recommended having virtual office hours.

Once again, the data suggested that students perceived the instructor’s interaction with students to be significantly better in the in-class mode than in both online modes. As with instructor facilitation, students perceived no significant difference between the two online modes.

Aspects that impressed students most

Students appreciated prompt, open, honest, and caring replies to their e-mail messages and meaningful feedback on homework assignments (items (i), (iii), and (iv) below), although these were apparently better in the in-class mode. Although item (ii) is listed as a positive, student commentary also listed it as an area for improvement. Students also attributed their positive interactions to an inclusive teaching style that involved them, such as addressing students by name and encouraging participation. Other notable attributions included discussion forum summaries, detailed coverage of course material, and a knowledgeable professor with a sense of humor. Emerging themes in response to this question also included the instructor’s empathy for students who were underprepared for online instruction.

(i) Effective prompt communication by the instructor (15)

(ii) Discussion board feedback and summaries (7)

(iii) Motivate with humor and care for student learning (5)

(iv) Knowledge (2)

What the instructor could have done better

Students requested adequate office hours and fairness in the selection of team members. Their commentary showed awareness of their own need to be proactive and responsive and to participate more. They cited some challenges due to inferior audio and video quality in the online mode. Some students did not turn on their cameras in the synchronous online mode, which contributed to reduced interaction with the professor. Students pointed to the need for themselves to be more proactive and for the professor to provide more support in the form of explicit instructions. Selective coding of their commentary revealed the following themes for improvement:

Nothing (16)

Students to be more proactive in asking questions (8)

More explanations by instructor (5)

No online teaching (2)

While the majority of students did not offer any suggestions for improvement, the last three themes pertain to the inadequacy of the online mode: students could not be as proactive in asking questions, and the instructor could not provide as many explanations of course components. These items reflect the need for improvement in online modes of instruction.

Course design

This component referred to the course objectives, procedures, grading components, and policies noted in the syllabus. Course elements included instructional tools and technologies such as discussion forums, class participation systems, and lecture slides. Although the instructor was no longer physically present in the online modes, the instructional tools remained the same. Students did not perceive course design as significantly different across the three modes but expressed a slight preference for course design in the in-class mode. This is consistent with Piccoli et al. (2020), who note that even before the pandemic, course design had been gradually progressing toward digitizing the instructor’s role. Students and instructors had already been shifting toward online rather than face-to-face interactions, with fewer personal visits to instructor offices. This trend may explain the lack of significant differences in perceptions of course design between online and in-class modes of instruction.

Aspects that impressed students most

Students appreciated the organization, relevance, and delivery of course components in all modes, including a clear correlation between content discussed in class and content tested. Course components included Excel as a tool, class engagement in person and through discussion forums, student presentations in-class and online, lectures and their alignment with the homework and tests, clarity of the homework assignments’ instructions, and the ability of technology to support instruction. The following themes emerged in terms of what impressed students:

Learned Excel well (12)

Discussion Forum was effective (4)

Lectures were aligned to tests and homework assignments (3)

Class participation points (3)

Student presentations were helpful (3)

Assignment instructions were detailed (2)

Lecture slides were effective (2)

Technology (Blackboard, discussion forum, video conferencing tools) was effective (2)

What the instructor could have done better

While most students appeared satisfied, some recognized that explanations were more effective in person, consistent with the common perception that information is harder to retain when learning online. Students also requested more explanations of assignments, and some suggested making participation in discussion boards mandatory. Better connectivity and faster internet speeds were needed to improve learning in the online synchronous mode with video and screen sharing. One instructional element was not appreciated by some students: the timed asynchronous quizzes instituted with the move to online instruction. The following themes emerged from student commentary:

Nothing (4)

Better instructions and explanations (4)

Technology (New computer, better connection, stronger server) (3)

More discussion board and student presentations (2)

In summary, we analyzed student perceptions of four key instructional factors across in-class and online modes of instruction: instructor facilitation, student-to-student interaction, instructor-to-student interaction, and course design. Students greatly appreciated the passion, knowledge, engagement, and teaching style demonstrated by the instructor in both in-class and online modes. However, they desired additional scaffolding, real-world examples, and structure in the online environment to aid comprehension and learning. In-class interactions were highly valued by students for enabling engagement, yet online discussion forums gave quieter students the flexibility to participate more fully. Prompt communication and feedback from the instructor were effective, but students wanted more virtual office hours and more detailed explanations in online settings. Lastly, students were largely satisfied with course design across modes but sought enhanced instructions and technology support for online learning. In conclusion, while in-class instruction was still preferred overall for satisfaction and learning outcomes, online modes provided beneficial flexibility for participation, though improvements in scaffolding and instructor interaction are needed.

In this way, we have demonstrated a multi-step coding process for analyzing over 3000 words of open-ended student responses. We followed a five-step process that began with open coding, aligning themes and color coding them based on multiple reads of the textual data. In the second step, we used the 5W1H technique to further characterize and categorize the codes. Third, we counted code frequencies to identify critical themes. Fourth, we conducted axial coding to reduce and refine the codes into distinct categories. Finally, through selective coding, we collapsed the categories into a few high-level themes relevant to the research context. We recommend that researchers who collect textual responses from subjects analyze them using one or more of the available quantitative approaches.
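The bookkeeping behind the counting, axial, and selective steps can be sketched in Python, assuming open codes have already been assigned by hand (color coding and the 5W1H characterization remain interpretive steps that code does not replace). Frequencies are counted per question and then rolled up through an axial map (code to category) and a selective map (category to theme). All codes, categories, themes, and function names below are hypothetical placeholders, not the study's coding frame.

```python
from collections import Counter

# Hypothetical open codes assigned by hand to responses, keyed by survey question.
open_codes = {
    "Q1": ["passion", "knowledge", "humor", "knowledge"],
    "Q2": ["more examples", "more reminders", "more reminders"],
}

# Hypothetical axial-coding map: open code -> category.
axial_map = {
    "passion": "instructor disposition",
    "humor": "instructor disposition",
    "knowledge": "instructor expertise",
    "more examples": "scaffolding",
    "more reminders": "scaffolding",
}

# Hypothetical selective-coding map: category -> high-level theme.
selective_map = {
    "instructor disposition": "instructor presence",
    "instructor expertise": "instructor presence",
    "scaffolding": "structure for online learning",
}


def count_codes(codes_by_question: dict[str, list[str]]) -> dict[str, Counter]:
    """Count how often each code appears in the responses to each question."""
    return {q: Counter(codes) for q, codes in codes_by_question.items()}


def roll_up(counts: Counter, mapping: dict[str, str]) -> Counter:
    """Aggregate counts from a finer level to a coarser one using a mapping.

    Unmapped labels are kept as-is so that gaps in the coding frame stay visible.
    """
    rolled: Counter = Counter()
    for label, n in counts.items():
        rolled[mapping.get(label, label)] += n
    return rolled


per_question = count_codes(open_codes)
all_codes = sum(per_question.values(), Counter())  # pool counts across questions
categories = roll_up(all_codes, axial_map)
themes = roll_up(categories, selective_map)
print(categories)
print(themes)
```

With these placeholders, five open codes collapse into three categories (instructor disposition 2, instructor expertise 2, scaffolding 3) and two themes (4 and 3 mentions). Keeping unmapped labels in roll_up, rather than dropping them, makes it obvious when a code has not yet been assigned to any category.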

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Shailendra Palvia

Shailendra C. Palvia is Professor Emeritus of MIS at Long Island University (LIU) Post. At LIU, he was Director of MIS from 1997 to 2004. He received his M.B.A. from the University of Minnesota and his B.S. in Chemical Engineering from the Indian Institute of Technology, New Delhi, India. He has published over 150 articles in refereed journals and conference proceedings, including Decision Sciences, Communications of the ACM, MIS Quarterly, Information & Management, and Communications of AIS. He is the Founding Editor (1999-2007 and 2013-2020) of the Journal of IT Case and Application Research (JITCAR). From 2002 to 2013, he chaired eleven annual international smart-sourcing conferences held in the USA, India, and South Korea. He was nominated by LIU Post to receive the Robert Krasnoff Lifetime Scholarship Award in 2012 and 2016. Dr. Palvia has co-edited four books on Global IT Management and one book on Global Sourcing Management. He spent four months, from January to April 2017, at the Indian Institute of Management, Ahmedabad, India, as a Fulbright-Nehru Senior Scholar. As a global scholar, he conducted Case Research Methodology classes for Ph.D. students of the business school at Kennesaw State University in 2022 and 2023. He has been an invited speaker (including as keynote speaker) in Germany, India, Italy, Russia, Singapore, Thailand, and the USA.

Vic Matta

Vic Matta is an Associate Professor in the Analytics and Information Systems (AIS) Department at the College of Business, Ohio University, Athens, Ohio. He has ten years of information systems industry experience and a Ph.D. from the Russ College of Engineering and Technology. He teaches courses in Business Analytics, Visualization, and Strategic Use of Analytics. He conducts business consulting and project management sessions in the college’s executive education workshops. He is an accomplished teacher and has won several teaching awards at the college and university levels. He has many editorial engagements and is an Industry Editor for JITCAR. He is an active member of the Association for Information Systems (AIS) and its Special Interest Group on Decision Support and Analytics.

References

- Baber, H. (2020). Determinants of students’ perceived learning outcome and satisfaction in online learning during the pandemic of COVID-19. Journal of Education and E-Learning Research, 7(3), 285–292. https://doi.org/10.20448/journal.509.2020.73.285.292

- Busteed, B. (2021). This may be the biggest lesson learned from online education during the pandemic. Forbes (Education). Retrieved from https://www.forbes.com/sites/brandonbusteed/2021/03/03/this-may-be-the-biggest-lesson-learned-from-online-education-during-the-pandemic/?sh=23a092165086

- Eom, S. B., & Ashill, N. (2016). The determinants of students’ perceived learning outcomes and satisfaction in University online education: An update. Decision Sciences Journal of Innovative Education, 14(2), 185–215. https://doi.org/10.1111/dsji.12097

- Garrison, D. R. (2016). E-Learning in the 21st Century: A community of inquiry framework for research and practice. Taylor & Francis.

- Matta, V., & Palvia, S. (2022). A comparison of student perceptions and academic performance across three instructional modes. Information Systems Education Journal, 20(2), 38–48. https://eric.ed.gov/?id=EJ1342025

- Piccoli, G., Bartosiak, M. Ł., Palese, B., & Rodriguez, J. (2020). Designing scalability in required in-class introductory college courses. Information & Management, 57(8), 103263. https://doi.org/10.1016/j.im.2019.103263

- Saldaña, J. (2021). The coding manual for qualitative researchers. SAGE.

- Smith, J. A. (2017). Textual analysis. The International Encyclopedia of Communication Research Methods, 1–7. http://doi.org/10.1002/9781118901731.iecrm0248

- Stemler, S. E. (2015). Content analysis. Emerging Trends in the Social and Behavioral Sciences: An Interdisciplinary, Searchable, and Linkable Resource, 1–14.

- Williams, M., & Moser, T. (2019). The art of coding and thematic exploration in qualitative research. International Management Review, 15(1), 45–55.

- Yang, Y., & Subramanyam, R. (2023). Extracting actionable insights from text data: A stable topic model approach. MIS Quarterly, 47(3), 923–954. https://doi.org/10.25300/MISQ/2022/16957

- Yin, R. K. (2009). Case study research: Design and methods (4th ed., Applied Social Research Methods Series). SAGE.