Abstract

Objective: The human–machine interface (HMI) is a crucial part of every automated driving system (ADS). In the near future, it is likely that—depending on the operational design domain (ODD)—different levels of automation will be available within the same vehicle. The capabilities of a given automation level as well as the operator’s responsibilities must be communicated in an appropriate way. To date, however, there are no agreed-upon evaluation methods that can be used by human factors practitioners as well as researchers to test this.

Methods: We developed an iterative test procedure that can be applied during the product development cycle of ADS. The test procedure is specifically designed to evaluate whether minimum requirements as proposed in NHTSA’s automated vehicle policy are met.

Results: The proposed evaluation protocol includes (a) a method to identify relevant use cases for testing on the basis of all theoretically possible steady states and mode transitions of a given ADS; (b) an expert-based heuristic assessment to evaluate whether the HMI complies with applicable norms, standards, and best practices; and (c) an empirical evaluation of ADS HMIs using a standardized design for user studies and performance metrics.

Conclusions: Each component can be used as a stand-alone method or in combination with the others to generate objective, reliable, and valid evaluations of HMIs, focusing on whether they meet minimum requirements. However, we also emphasize that other evaluation aspects, such as controllability, misuse, and acceptance, are not within the scope of the evaluation protocol.

Introduction

Partially automated driving (SAE level 2; Society of Automotive Engineers [SAE] 2018), in which the user is still required to control the vehicle in case of system limits and failures, is already offered by most manufacturers. Higher levels of automation, in which the driver is merely required to provide fallback performance of the driving task (conditional automation; SAE level 3), will be brought to the market in the next few years. This will likely create situations in which different levels of automation are present in the same vehicle, depending on the operational design domain (ODD; e.g., level 3 on the highway, level 2 on rural roads). It must therefore be ensured that the human–machine interface (HMI) appropriately communicates the level of automation that is operational at any given time and the driver’s responsibilities. In driver distraction research, methods for investigating and ensuring the suitability of in-vehicle HMIs have been established. For this purpose, standardized and generally accepted test and evaluation methods such as heuristic evaluations (e.g., Campbell et al. 2016) and simulator study protocols (e.g., Alliance of Automobile Manufacturers 2006; NHTSA 2014) are available.

However, with the change in the driver’s role from manually controlling the vehicle (level 0) to supervising the vehicle automation (level 2) or acting as a fallback performer (level 3), these methods can no longer be readily applied. Furthermore, recent research on level 3 automation has uncovered new challenges for HMI design, such as overtrust or mode confusion (Feldhütter et al. 2017). The results of these studies, however, vary widely, with reaction times to requests to intervene ranging from 1 s to as much as 15 s (Eriksson and Stanton 2017). This variation may well be attributable to differences in the experimental protocols (e.g., selection of participants or instructions). This example shows that there is an urgent need to develop and standardize test methods for assessing automated driving system (ADS) HMIs that can be employed by the technical and scientific community (Burns 2018).

This article outlines a newly developed test procedure designed to advance standardization in the testing of HMIs for automated driving. With the Federal Automated Vehicles (AV) Policy, NHTSA (2017) has provided a valuable framework that can be used to guide the development and validation of ADS as part of a voluntary self-assessment. Acknowledging that the HMI, identified as 1 of 12 priority safety design elements in NHTSA’s AV Policy, will be crucial for the success of ADS, we developed a 3-step iterative test procedure that can be employed to evaluate ADS HMIs. The current article describes the underlying considerations and structure as well as methodological details of the test procedure and an exemplary application for the evaluation of SAE level 3 HMIs. The proposed test procedure consists of 3 steps:

1. Use case catalogue: A standardized set of use cases has to be defined to ensure that the test procedure generates comprehensive and comparable results. Accordingly, a use case catalogue was developed based on combinatorics of SAE levels of driving automation (Naujoks et al. 2018). The resulting set of use cases was narrowed down by theoretical and practical considerations derived from an extensive literature review of published research, standards, processes, (voluntary) guidance, and design principles (Naujoks et al. 2018).

2. Heuristic evaluation: Human factors experts can use a heuristic evaluation to assess the compliance of an HMI with common standards, guidelines, and best practices. Designers can also use it as guidance in early stages of product development.

3. Empirical test protocol: A protocol for empirical studies can be employed in driving simulator, test track, and on-road studies. Specifics of the study protocol as well as guidance for practitioners and researchers are presented below.

Methods and results

Objective

In the automotive context, the evaluation of HMIs has a long tradition. For manual driving, evaluation has focused on the distraction potential of in-vehicle information systems, making the assessment of the visual workload associated with these systems the central goal (e.g., measuring eyes-off-road time; Alliance of Automobile Manufacturers 2006; NHTSA 2014). For automated driving, a variety of theoretical constructs related to safe driver–vehicle interaction (DVI), such as trust, controllability, mode awareness, or usability, could be used as criteria, because research has shown that they all pose challenges to the design and evaluation of HMIs for ADS.

The present procedure takes a more practical approach. The goal of our proposed method is to ensure that the minimum HMI requirements defined by NHTSA (2017) are met; these requirements are related to, and possibly even prerequisites for, the above-mentioned criteria. Following NHTSA’s (2017) voluntary guidance,

at minimum, an ADS should be capable of informing the human operator or occupant through various indicators that the ADS is: (1) functioning properly; (2) currently engaged in ADS mode; (3) currently “unavailable” for use; (4) experiencing a malfunction; and/or (5) requesting control transition from the ADS to the operator. (p. 10)

Thus, the test procedure described in the next sections should be able to differentiate between HMIs in which these requirements are or are not fulfilled.
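To make this pass/fail distinction concrete, the 5 minimum states quoted above can be written down as an explicit checklist. The following is a minimal sketch, not part of the published procedure: the enum and function names are hypothetical, and the check only covers whether an indicator exists at all, whereas the procedure described below also asks whether users actually perceive and interpret it.

```python
from enum import Enum, auto


class MinimumIndicator(Enum):
    """States an ADS should at minimum be able to communicate (NHTSA 2017, p. 10)."""
    FUNCTIONING_PROPERLY = auto()
    ADS_MODE_ENGAGED = auto()
    ADS_UNAVAILABLE = auto()
    MALFUNCTION = auto()
    REQUEST_TO_INTERVENE = auto()


def missing_indicators(provided: set) -> set:
    """Return the minimum indicators that the HMI under test does not provide."""
    return set(MinimumIndicator) - set(provided)


def meets_minimum(provided: set) -> bool:
    """Coarse presence screen: passes only if no minimum indicator is missing."""
    return not missing_indicators(provided)


if __name__ == "__main__":
    # Hypothetical HMI that never signals a malfunction.
    hmi = set(MinimumIndicator) - {MinimumIndicator.MALFUNCTION}
    print(meets_minimum(hmi))  # False
    print(missing_indicators(hmi))
```

A presence screen of this kind corresponds roughly to what an expert can check from specifications alone; whether the indicators are effective is left to the heuristic and empirical steps.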

As such, the test protocol is intentionally limited to assessing the usability of the HMI, with the aim of evaluating the effectiveness (i.e., users of ADS are able to perceive the minimum required mode indicators accurately and completely) and efficiency (i.e., mode indicators are designed so that users of ADS can perceive the AV mode with minimum effort) of HMI indicators (International Organization for Standardization [ISO] 2018). Thus, the HMI test protocol covers regular usage of the ADS, such as switching the automation on, checking the status of the ADS, or taking back manual control from the automation (either driver-initiated or system-initiated). It is intended to ensure that the necessary mode indicators are present and that they adequately support users of ADS in noticing them (e.g., by placing visual interfaces close to the driver’s expected line of sight) and interpreting them (e.g., by intuitive color coding). Assessing the DVI beyond that, in related domains such as controllability (including the time budget needed to safely take over control from an ADS in case of system limits), misuse, or acceptance, will require different methods.

Use case catalogue for assessing the HMI of ADS

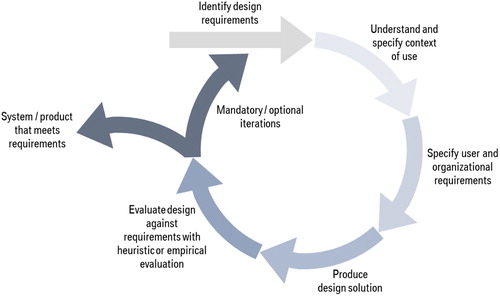

According to Nielsen (1994), there are 2 main approaches to evaluating HMIs during the product development cycle: (1) expert-based assessments involving human factors specialists and (2) empirical evaluations involving potential users of the product. The aim of these complementary approaches is to uncover usability problems and misunderstandings that may arise during interaction with the product via the HMI. Because the 2 methods tend to uncover different kinds of usability issues, both should be pursued to arrive at a comprehensive assessment (ISO 2018; see Figure 1).

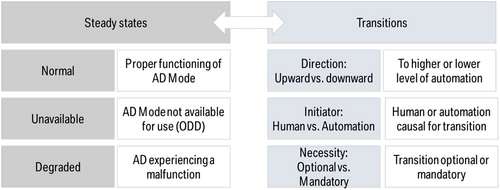

However, both methods require that relevant use cases (i.e., interaction sequences between the user and the product to achieve a desired state; see ISO 2008a) are completed by study participants or walked through by expert assessors, which makes the use cases the core of each evaluation procedure. In the context of ADS, the challenge is to select a comprehensive set of use cases encompassing all scenarios that are relevant to safe and efficient DVI. Although research in this area has traditionally focused on the transition of control from level 3 to the human operator (i.e., level 0; see, for example, the overview by Eriksson and Stanton 2017), other types of mode transitions have rarely been investigated. Figure 2 provides an overview of modes and steady states (i.e., driving within the same SAE level) and types of transitions (i.e., switching to a lower or higher level of automation) in the context of ADS.

Figure 2. Steady states and transitions forming the basis of the proposed use case catalogue for evaluating ADS HMIs.

Against this background, and with the goal of evaluating the minimum requirements requested by NHTSA, we proposed a stepwise selection procedure resulting in the use case set depicted in Table 1 (see Naujoks et al. [2018] and Appendix I, online supplement, for a more detailed description). The approach can be applied to test different ADS with similar or even varying specifications. For example, a vehicle might only incorporate L0 (level 0) and L3 (level 3) automation, resulting in fewer use cases. The main advantage of this procedure is that it provides a clear and reproducible way of deriving the use cases to be tested, regardless of potential variations between actual systems.
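The combinatorial starting point of this selection procedure can be illustrated with a short sketch. It assumes, as our own simplification rather than a rule taken from the catalogue, that upward transitions are always driver-initiated, whereas downward transitions can be driver- or system-initiated. The candidate set then follows from all steady states plus all ordered pairs of the levels a vehicle offers. The function name is hypothetical, and the output is the unfiltered candidate space, not Table 1, which is further narrowed down by theoretical and practical considerations (Naujoks et al. 2018).

```python
from itertools import permutations


def candidate_use_cases(levels):
    """Enumerate steady states and mode transitions for the SAE levels a vehicle offers.

    Simplifying assumption (for illustration only): upward transitions are
    driver-initiated, downward transitions can be driver- or system-initiated.
    Returns a list of (category, description) tuples.
    """
    levels = sorted(set(levels))
    cases = [("steady state", f"L{lvl}") for lvl in levels]
    for src, dst in permutations(levels, 2):
        if dst > src:
            cases.append(("upward transition, driver-initiated", f"L{src}->L{dst}"))
        else:
            for initiator in ("driver", "system"):
                cases.append((f"downward transition, {initiator}-initiated", f"L{src}->L{dst}"))
    return cases


if __name__ == "__main__":
    print(len(candidate_use_cases([0, 2, 3])))  # 12
    for category, description in candidate_use_cases([0, 3]):
        print(f"{description:8} {category}")
```

For a vehicle offering L0, L2, and L3, this yields 3 steady states, 3 upward transitions, and 6 downward transitions (12 candidates); for a vehicle offering only L0 and L3, it yields 5, which mirrors the reduction described above.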

Table 1. Use case set for the assessment of minimum HMI requirements based on NHTSA’s (2017) Federal AV Policy. Note that some use cases might not be applicable if a vehicle is not equipped with a respective system.

The final step in the selection process consists of identifying test cases, which can be defined as instances of use cases resulting from the interaction architecture and ODD of the specific ADS. For example, reaching the boundaries of the ODD might generate different instances in which the request to intervene is displayed in different ways, making them different test cases of the same use case (see Appendix I for more examples). Thus, a comprehensive HMI assessment requires collecting all possible test cases (i.e., instances of the use cases presented in Table 1) and using them as the basis for the subsequent evaluation.
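The step from use cases to test cases is essentially an expansion: each use case is repeated once for every situation that produces a distinct HMI presentation. A minimal sketch, assuming the instances per use case have already been collected for the specific ADS; the class, the function, and the example situations are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HmiTestCase:
    use_case: str   # a use case from the catalogue, e.g. "L3->L0, system-initiated"
    instance: str   # a situation that leads to a distinct HMI presentation


def expand_test_cases(instances_by_use_case):
    """Create one test case per (use case, instance) pair.

    A use case without any listed instance still yields a single "default"
    test case, so that no applicable use case drops out of the evaluation.
    """
    return [
        HmiTestCase(use_case, instance)
        for use_case, instances in instances_by_use_case.items()
        for instance in (instances or ["default"])
    ]


if __name__ == "__main__":
    # Hypothetical instances; real ones come from the interaction architecture and ODD of the ADS.
    catalogue = {
        "L3->L0, system-initiated": ["ODD ends: motorway exit", "ODD ends: construction zone"],
        "L0->L3, driver-initiated": [],
    }
    for tc in expand_test_cases(catalogue):
        print(tc)
```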

Heuristic evaluation protocol

As an initial evaluation step, we propose a heuristic evaluation conducted by HMI experts (Naujoks et al. 2019) to check whether the ADS HMI contains all necessary mode indicators and is in line with “voluntary guidance, best practices, and design principles published by SAE International, ISO, NHTSA …” (NHTSA 2017, p. 10). In principle, at least 2 assessors complete all defined test cases (i.e., all instances of the use cases defined in Table 1) and verify, with the help of a standardized checklist, whether basic HMI requirements are met. To compile these requirements, we reviewed applicable standards, best practices, and guidelines and combined them into a short and easy-to-use checklist (see Table 2; Appendix II, online supplement, contains an extended version with references and detailed evaluation steps).

Table 2. Checklist items.

A checklist sheet with positive and negative examples for each item, as well as a reporting sheet, can also be found in Naujoks et al. (2019). One example of a checklist item is “the system mode should be displayed continuously” (p. 123). This recommendation must be checked for all possible system states. The item is rated as acceptable if the minimum set of HMI indicators is present. A negative rating should be given if some indicators are completely missing, if indicators are not distinguishable from each other, or if indicators are displayed only for a very short period of time.
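A reporting sheet for this item can be mirrored in a few lines of code. The sketch below is hypothetical: it rates the item for every system state using the 3 failure conditions named above and, because at least 2 assessors walk through the test cases, it also lists the items on which their ratings differ. How such disagreements are resolved is not specified here; flagging them for discussion is our assumption.

```python
from dataclasses import dataclass


@dataclass
class IndicatorObservation:
    """What an assessor recorded for one system state (hypothetical fields)."""
    present: bool
    distinguishable: bool      # can it be told apart from the other mode indicators?
    shown_only_briefly: bool   # displayed only for a very short period of time


def rate_continuous_mode_display(observations):
    """Rate 'the system mode should be displayed continuously' across all system states.

    observations: dict mapping a system state to an IndicatorObservation.
    Returns (acceptable, reasons); any listed reason leads to a negative rating.
    """
    reasons = []
    for state, obs in observations.items():
        if not obs.present:
            reasons.append(f"{state}: indicator missing")
        elif not obs.distinguishable:
            reasons.append(f"{state}: not distinguishable from other indicators")
        elif obs.shown_only_briefly:
            reasons.append(f"{state}: displayed only briefly")
    return (not reasons, reasons)


def items_needing_discussion(ratings_by_assessor):
    """ratings_by_assessor: {assessor: {item: bool}}.

    Return the checklist items on which the assessors' ratings differ
    (an item that one assessor did not rate also counts as a difference).
    """
    items = set().union(*ratings_by_assessor.values())
    return sorted(
        item for item in items
        if len({ratings.get(item) for ratings in ratings_by_assessor.values()}) > 1
    )
```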

Test protocol in driving simulation

The last step of the test procedure consists of an empirical evaluation in which test participants interact with an HMI in a standardized manner. The aim of the test is to evaluate whether the basic HMI requirements formulated by NHTSA are met by observing participants’ interactions with the HMI and their self-reported understanding of the mode indicators presented during transitions and steady states (i.e., the test cases). In essence, the purpose of the empirical test protocol is to investigate whether the hypotheses in Table 3 are falsified on the basis of observations made under the specified experimental conditions presented in this article. For example, the assumption that the “HMI is capable of communicating that L3 cannot be activated when driving outside the ODD” would be falsified if contrary empirical observations were made. Although the exact implementation of such a test protocol will vary with the specifics of the human–machine interaction (e.g., the verification of a speech- and gesture-based interaction might differ from that of a visual–manual interaction), some common principles should be followed to guarantee the comparability of confirmation tests. The proposed characteristics of the study concept are supported by studies conducted by the authoring team.

Test protocol specifics

To achieve comparable and valid results, several specifics of the experimental protocol need to be taken into account. The following list provides a first set of preliminary criteria to ensure internal and external validity of evaluations:

Sample: A sufficiently large sample should be tested. At minimum, N = 20 participants should take part in the confirmation study (ISO 2008b; RESPONSE Consortium 2006). Furthermore, participants should cover a broad age range reflecting the target population, as required by the NHTSA visual–manual distraction protocol (NHTSA 2014; age groups: 18–24: n = 5; 25–39: n = 5; 40–54: n = 5; >54: n = 5). Preferably, their prior experience with assistance systems should be comparable. Lastly, participants should be recruited from a sample of potential users of the ADS and should not be affiliated with the entity that is carrying out the test (e.g., they should not work for the original equipment manufacturer [OEM] performing the test).

Instructions: The instructions provided to participants regarding their responsibilities and the capabilities of the ADS can have a major impact on the quality of DVI (Hergeth et al. 2017). Because it cannot be guaranteed that all users of ADS will be comprehensively trained or familiarized prior to using the ADS, instructions should be kept at a basic level. To avoid instruction effects, participants should merely be informed that they will be using an automated driving system and driver assistance systems during the upcoming trial. No information about the respective mode indicators or the driver responsibilities associated with the automation levels should be provided.

Use cases: A comprehensive set of use cases should be completed by the participants, either by asking the drivers to perform the respective control input (in the case of driver-initiated transitions) or by triggering the control transitions externally (i.e., via the driving simulation; e.g., in the case of requests to intervene). All use cases in Table 1 that are possible with a specific ADS should be included (e.g., when a vehicle is only capable of L3, a smaller set of use cases is used than when the vehicle also has an L2 system). An exemplary application of the use case catalogue to driver-initiated transitions from L0 to L3 automation is reported in Forster et al. (2018).

Exposure and learning effects: The test should provide enough time to interact with the ADS. Although first-time usage situations might be the most crucial to investigate, because they could create the most misunderstandings and usability problems, sustained use of the system over a longer period might uncover different kinds of usability and understanding issues. Forster et al. (2019) investigated learning effects in interaction with HMIs for automated driving and found that performance increases and stabilizes over a testing time of at least 30–40 min. The test should therefore be long enough for the use cases to be completed repeatedly, so that both initial-experience and repeated-exposure situations are included. In our experience, completing the proposed number of use cases at least twice should take no longer than approximately 1 h.

Test environment fidelity: Sufficiently high realism should be achieved to ensure the external validity of the results. Driving simulators offer the opportunity for controlled testing in a hazard-free environment, but external validity might be an issue. We therefore recommend a high-fidelity simulator that can simulate the automatic execution of lateral and longitudinal control, with a realistic mock-up that allows the driver to intervene by steering, braking, or accelerating, hands-on detection at the steering wheel, and the ability to implement the relevant HMI elements such as visual displays and control devices. A motion-based driving simulator might be desirable but is not strictly necessary for a valid observation of the quality of DVI (Wang et al. 2010).

Driving situation complexity: Because research has demonstrated that the quality of DVI (e.g., takeover time) is sensitive to situational variations (e.g., Gold et al. 2018), we argue that, for the purpose at hand, the test situations should be realistic but not so complex that they create unnecessary variance caused by the experimental setup rather than by the HMI under investigation (i.e., low traffic density, no need for route guidance, no intersections, etc.). We propose triggering system-initiated transitions without any environmental cues, because the HMI should be able to convey the information the driver needs to take action (e.g., deactivating a system) entirely on its own. For example, when a request to intervene is tested in poor weather conditions, a participant might take back vehicle control from the ADS because of the situational circumstances and not as a result of correctly perceiving and interpreting the HMI. The test situations outlined by the Alliance of Automobile Manufacturers (2006) could serve as a template for this.
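Of the criteria above, the sample requirements are the easiest to check mechanically before data collection starts. A minimal sketch, assuming each recruited participant is recorded with an age and a flag for affiliation with the testing entity; we read the per-group n = 5 as a minimum, and the field and function names are hypothetical.

```python
from collections import Counter

MIN_N = 20          # minimum sample size cited in the text
MIN_PER_GROUP = 5   # n = 5 per age group, read here as a minimum
AGE_GROUPS = ("18-24", "25-39", "40-54", ">54")


def age_group(age):
    """Map an age in years to the age groups listed in the text (None if under 18)."""
    if age < 18:
        return None
    if age <= 24:
        return "18-24"
    if age <= 39:
        return "25-39"
    if age <= 54:
        return "40-54"
    return ">54"


def sample_problems(participants):
    """participants: list of dicts with 'age' (int) and 'affiliated' (bool, works for
    the entity carrying out the test). Returns a list of human-readable problems."""
    eligible = [p for p in participants
                if not p["affiliated"] and age_group(p["age"]) is not None]
    problems = []
    if len(eligible) < MIN_N:
        problems.append(f"only {len(eligible)} eligible participants (minimum {MIN_N})")
    counts = Counter(age_group(p["age"]) for p in eligible)
    for group in AGE_GROUPS:
        if counts[group] < MIN_PER_GROUP:
            problems.append(f"age group {group}: {counts[group]} (minimum {MIN_PER_GROUP})")
    return problems
```

An empty list means the recruited sample satisfies the stated minimums; affiliated participants are excluded before counting, so replacing an eligible participant with an employee of the testing entity surfaces as a shortfall.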

Evaluation criteria

Table 3 provides an overview of the criteria used to decide whether the HMI complies with the basic HMI requirements. The criteria are deliberately formulated at an intermediate level of abstraction that allows them to be adapted to the specific HMI. For example, we propose that the indicators associated with each automated driving mode should be identified correctly. Demonstrating that this requirement is met could be achieved in several ways, such as interviewing participants during or after the test drives or observing their behavior during the drive. Behavioral observations could consist of observing how drivers engage with a non-driving-related task and/or whether they notice HMI indicators when these are presented. Both methods have their own constraints, such as memory effects in interviews or the ambiguity of behavioral observations. Therefore, we propose that a combination of self-report and observational measures be used (see Appendix III, online supplement, for more details) and that the decision be documented as a pass–fail logic. To decide whether a criterion is fulfilled, we propose following the RESPONSE Code of Practice (ISO 2008b; RESPONSE Consortium 2006). The Code of Practice argues that “practical testing experience revealed that a number of 20 valid data sets per scenario can supply a basic indication of validity” (p. 13), because 20 out of 20 participants passing the defined criteria in the user study supports the assumption that at least 85% of the target population will pass the criteria as well (ISO 2008b).
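One way to read the 20-of-20 rule is as a one-sided binomial argument: if the true pass rate in the target population were only 85%, the probability that all 20 participants pass would be 0.85^20 ≈ 0.039, below the conventional 5% level; equivalently, after 20 passes out of 20, the one-sided 95% lower confidence bound on the pass rate is 0.05^(1/20) ≈ 0.861. The sketch below encodes this reading together with a pass–fail decision per participant. Requiring both self-report and observation to indicate a pass is our assumption for illustration; the actual combination rule is described in Appendix III, and all function names are hypothetical.

```python
def participant_passes(self_report_ok: bool, observation_ok: bool) -> bool:
    """Hypothetical combination rule: a participant passes a criterion only if
    both the self-report and the behavioral observation indicate a pass."""
    return self_report_ok and observation_ok


def criterion_fulfilled(passes: list, n_required: int = 20) -> bool:
    """Pass-fail decision for one criterion: at least n_required valid data
    sets are available and every one of them is a pass."""
    return len(passes) >= n_required and all(passes)


def prob_all_pass(true_rate: float, n: int = 20) -> float:
    """Probability that all n independent participants pass if the true pass rate is true_rate."""
    return true_rate ** n


def lower_bound_after_all_pass(n: int = 20, alpha: float = 0.05) -> float:
    """One-sided (1 - alpha) lower confidence bound on the pass rate after n of n passes."""
    return alpha ** (1.0 / n)


if __name__ == "__main__":
    results = [participant_passes(True, True) for _ in range(20)]
    print(criterion_fulfilled(results))            # True
    print(round(prob_all_pass(0.85), 3))           # 0.039
    print(round(lower_bound_after_all_pass(), 3))  # 0.861
```

Under this reading, a single failing participant among 20 already means the criterion is not fulfilled, which makes the rule strict but easy to document.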

Table 3. Evaluation criteria.

Discussion

By providing an overview of relevant research and proposing a methodological framework, the described test procedure should help to stimulate discussion and advance the development of ADS evaluation approaches. Because it relies on complete and disjoint combinations of SAE levels of driving automation, the method can be adapted to evaluate every conceivable ADS HMI and combinations thereof, making it a valuable tool for researchers and practitioners alike. The proposed framework was applied in a pilot study comparing 2 different HMI concepts for ADS (see Appendix IV, online supplement). This exemplary evaluation of an SAE L3 ADS HMI shows how the test procedure could be employed to test a specific system and helps to identify areas that require further research. The procedure can be employed in a variety of settings to test ADS HMIs and can be expanded to higher levels of automation such as level 4 automation (with a transition to a minimal risk state).

At this stage, the proposed test procedure focuses on the interaction between an ADS-equipped vehicle and its human operator. The procedure is designed to evaluate whether the minimum HMI requirements proposed in NHTSA’s automated vehicle policy are met. As such, it focuses on the indicators used to communicate relevant system modes; however, a comprehensive HMI evaluation should also assess whether the operation logic of the user interface (e.g., the control design for switching between automation modes) is easily understood by users (Burns 2018). We suggest that both the heuristic evaluation and the empirical test protocol presented in this article be employed to arrive at a comprehensive HMI assessment. At the same time, we are aware of the limitations of the method. It must be emphasized that this article focuses on the functionality and usability aspects of the HMI. Related evaluation aspects such as user acceptance and controllability of system limits are also important when evaluating HMIs of ADS, but they are not within the scope of this method, and they likely require very different testing methods than the one proposed here (including the evaluation of other or additional use cases, more complex research environments, and different evaluation criteria). Another limitation of the approach is that levels of automation might not be as distinct as suggested by the SAE definitions. For example, an L2 feature might allow hands-free driving below a certain speed but require hands on the steering wheel above that speed. Depending on the specific HMI design, it might thus be necessary to include these as different sublevels in the test protocol.

Acknowledgments

We thank Katja Mayr, Sarah Fürstberger, and Viktoria Geisel for their input and support in drafting this article.

References

- Alliance of Automobile Manufacturers. Statement of Principles, Criteria and Verification Procedures on Driver Interactions with Advanced In-Vehicle Information and Communication Systems. Washington, DC: Author; 2006.

- Burns P. Human factors: unknowns, knowns and the forgotten. Paper presented at: 2018 SIP-adus Workshop Human Factors; 2018; Tokyo, Japan.

- Campbell JL, Brown JL, Graving JS, et al. Human Factors Design Guidance for Driver–Vehicle Interfaces. Washington, DC: NHTSA; 2016. Report No. DOT HS 812 360.

- Eriksson A, Stanton NA. Takeover time in highly automated vehicles: noncritical transitions to and from manual control. Hum Factors. 2017;59:689–705.

- Feldhütter A, Segler C, Bengler K. Does shifting between conditionally and partially automated driving lead to a loss of mode awareness? In: Stanton NA, ed. International Conference on Applied Human Factors and Ergonomics. Cham, Switzerland: Springer; 2017:730–741.

- Forster Y, Hergeth S, Naujoks F, Beggiato M, Krems J, Keinath A. Learning to use automation: behavioral changes in interaction with automated driving systems. Transp Res Part F Traffic Psychol Behav. 2019;62:599–614.

- Forster Y, Hergeth S, Naujoks F, Krems JF. Unskilled and unaware: subpar users of automated driving systems make spurious decisions. In: Adjunct Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. New York, NY: Association for Computing Machinery; 2018:159–163.

- Gold C, Happee R, Bengler K. Modeling take-over performance in level 3 conditionally automated vehicles. Accid Anal Prev. 2018;116:3–13.

- Hergeth S, Lorenz L, Krems JF. Prior familiarization with takeover requests affects drivers’ takeover performance and automation trust. Hum Factors. 2017;59:457–470.

- International Organization for Standardization. Intelligent Transport Systems—System Architecture—“Use Case” Pro-forma Template. Geneva, Switzerland: Author; 2008a. ISO/TR 25102.

- International Organization for Standardization. Road Vehicles Functional Safety. Geneva, Switzerland: Author; 2008b. ISO 26262.

- International Organization for Standardization. Ergonomics of Human–System Interaction—Part 11: Usability: Definitions and Concepts. Geneva, Switzerland: Author; 2018. ISO 9241-11.

- Naujoks F, Hergeth S, Wiedemann K, Schömig N, Keinath A. Use cases for assessing, testing, and validating the human machine interface of automated driving systems. Proc Hum Factors Ergon Soc Annu Meet. 2018;62:1873–1877.

- Naujoks F, Wiedemann K, Schömig N, Hergeth S, Keinath A. Towards guidelines and verification methods for automated vehicle HMIs. Transp Res Part F Traffic Psychol Behav. 2019;60:121–136.

- NHTSA. Visual–Manual NHTSA Driver Distraction Guidelines for In-Vehicle Electronic Devices. Washington, DC: Author, Department of Transportation; 2014.

- NHTSA. Federal Automated Vehicles Policy 2.0. Washington, DC: Author, Department of Transportation; 2017.

- Nielsen J. Usability inspection methods. In: Conference Companion on Human Factors in Computing Systems. New York, NY: Association for Computing Machinery; 1994:413–414.

- RESPONSE Consortium. Code of Practice for the Design and Evaluation of ADAS. 2006. RESPONSE 3: A PReVENT Project.

- Society of Automotive Engineers. Taxonomy and Definitions for Terms Related to On-Road Motor Vehicle Automated Driving Systems. Warrendale, PA: SAE International; 2018.

- Wang Y, Mehler B, Reimer B, Lammers V, D’Ambrosio L, Coughlin J. The validity of driving simulation for assessing differences between in-vehicle informational interfaces: a comparison with field testing. Ergonomics. 2010;53:404–420.