Abstract

Teachers consider learning goals as they implement new technologies like Virtual Reality in the classroom, but may overlook or struggle with verifying whether new learning actually occurred. We interviewed six US high school teachers and surveyed 277 of their students to understand the pedagogical implications and challenges of coordinating assessments with the integration of VR in their classrooms. Some teachers devised new assessment strategies to capture the deep, constructivist learning they saw occurring in their students as a result of VR. Students’ responses suggest they needed more explicit goals for the VR class activities, but students provided insights about the benefits they perceived for their retention of relevant information. Future work should continue to explore the alignment of learning objectives, technology, and assessment.

Supplemental data for this article is available online at https://doi.org/10.1080/15391523.2021.1950083.

As emerging technologies like virtual reality (VR) become more prominent in K-12 classrooms, it is imperative that we study the value these tools can bring to schools from the perspective of both educators and students (Pantelidis, 2010; Southgate et al., 2019; Zhu, 2016). Research suggests that VR experiences and simulations can benefit students’ learning outcomes (Hu-Au & Lee, 2017; Merchant et al., 2014; Pantelidis, 2010; Vogel et al., 2006). However, research on the educational benefits of VR has largely come from experimental studies focused on students’ learning outcomes, often in very specific domains such as medical procedures or place-based recall (Aggarwal et al., 2006; Bursztyn et al., 2017; Freina & Ott, 2015; Krokos et al., 2019; Psotka, 1995). There is a need to conduct more applied research focusing on how educators approach assessment around virtual learning in natural classroom settings (Elmqaddem, 2019; O’Connor & Domingo, 2017; Roussos, 1997; Velev & Zlateva, 2017).

In our prior research, only three teachers out of 33 were actively thinking about how to assess learning after students used VR content (Castaneda et al., 2018). This finding suggests that assessment may not be at the forefront of educators’ minds when they incorporate VR into the classroom. We wondered why this was the case and decided to explore teachers’ approaches to VR and assessment. As noted in Shifflet and Weilbacher (2015), identifying the complexities and contradictions in the actual classroom practice of applying technology is an informative and useful exercise. In the present study, we worked with a group of high school teachers to explore how they integrated VR into their classrooms and developed assessments related to those virtual experiences for their students. This research will help educators and administrators to further consider the value, as well as the complexity, of incorporating emerging technologies in the classroom and developing assessments to evaluate student learning with those technologies.

Literature review

Educators are eager to provide students with engaging ways to learn, and VR provides a medium to explore virtual scenarios and make meaning through virtual experiences (Castaneda et al., 2017). Research from a variety of fields has demonstrated the value of virtual simulations for learning information and skills as a result of immersion (Chen et al., 2020; Chernikova et al., 2020; Harman et al., 2017; Kaplan et al., 2021; Krokos et al., 2019; Pantelidis, 2010). Immersive VR enables students to physically engage with new concepts and environments (Bailenson, 2018; Hu-Au & Lee, 2017; Lau & Lee, 2015; Prensky, 2013; Regian et al., 1992; Roussou & Slater, 2005; Winn, 1993), leads to a deeper sense of presence and engagement in real or surreal environments (Coelho et al., 2006; Heeter, 1992; Makransky & Lilleholt, 2018), and thus likely enables them to more actively construct their own knowledge (Koohang et al., 2009; Roussou & Slater, 2005). There is even evidence that, with proper structure, students may benefit vicariously from observing their peers during a VR experience (Gholson & Craig, 2006; Mayes, 2015).

Though these types of findings are exciting, VR does not lead to significant learning gains in all contexts (e.g., Parong & Mayer, 2021). Moreover, there are open questions around whether such findings generalize to everyday classrooms, where applying newer technologies is more complex than in controlled research settings (Koehler & Mishra, 2009; Merchant et al., 2014; Roussos, 1997; Syverson & Slatin, 2010). Though there are many examples of teachers using technology in innovative ways, it is not always clear whether these uses actually enhance student learning (Bursztyn et al., 2017; Kolb, 2017; Price & Kirkwood, 2010), and learning outcomes are often not the primary focus when a new technology is introduced (Kopcha et al., 2020). It is vital that educators examine the alignment of desired learning outcomes with the technology they are implementing and think critically, in advance, about how they will assess the extent to which learning has occurred.

Unfortunately, many of the mainstream technology implementation frameworks (e.g., TPACK [Koehler & Mishra, 2009], TIM [Harmes et al., 2016] and SAMR [Romrell et al., 2014]) do not strongly emphasize the role of classroom assessment and student learning gains. Although these important topics are mentioned in Kolb’s Triple E framework, no concrete steps are provided for assessment of learning gains, leaving teachers to grapple with this task as they simultaneously work to integrate a new technology (Kolb, 2017). The ISTE Standards for Educators do include standards for assessment, and highlight the importance of connecting data to student learning goals (International Society for Technology in Education, 2017).

How can teachers assess what students learn from VR?

Much of the existing research examining VR and student learning does not address the merits, challenges, or process of having teachers design assessments that work in concert with this technology. How do teachers know if the technology facilitated the acquisition of the desired learning outcome, especially if the focus has solely been on implementing the technology itself?

When a technology is implemented into classrooms without clear intention, it may be situated in ways that do not center students and their learning goals (Ertmer & Ottenbreit-Leftwich, 2010; Loveless & Dore, 2002). In part, this is because these technologies were not designed with the classroom context in mind and therefore may be challenging to incorporate into the classroom and curriculum to begin with (Koehler & Mishra, 2009; Voogt & Roblin, 2012). When it comes to assessment, Kolb (2017) notes that teachers’ enthusiasm for a new technological tool may overshadow the need to check for learning. Furthermore, teachers may not have the skills or appropriate framework to conceptualize and measure whether the tool is helping students meet learning objectives (Koehler & Mishra, 2009; Ross et al., 2010). Though VR is an inherently engaging technology, educators need to be cautious that there is not a false equivalence of student engagement with enhanced student learning (Kolb, 2017). Prior work has shown that while student engagement can increase with the introduction of a new technological tool, this does not always result in increased performance on assessments (Baker & O’Neil, 1994; Sandholtz et al., 1997). In order to understand student learning gains, educators must first have assessments that effectively capture the learning that may be occurring from the virtual experience.

Classroom settings can be challenging places to gather data, especially given the range of environmental variables, human variation, and level of disruption that can occur, yet assessment of student learning is a necessary element of technology implementation and teachers should be equipped to carry it out (Price & Kirkwood, 2010). Gathering evidence of learning when introducing a new technology into a classroom requires reflection on how one defines learning, and how technology is integrated to achieve learning goals (Dwyer et al., 1991; Ottenbreit-Leftwich & Kimmons, 2018). Therefore, aligning technology integration with student learning outcomes sets the stage for an evidence-based evaluation of when and how technology has an impact on student learning.

The practice of directly asking for student feedback (Popham, 2008; Wolpert-Gawron, 2017) is a simple but overlooked method of gathering evidence (Ferguson, 2012). Providing students with opportunities for feedback in course activities and assessments is an important way for educators to connect with students as individuals who have an investment in their own learning (Ertmer & Ottenbreit-Leftwich, 2010; Washor & Mojkowski, 2013).

The current study

Our aim is to understand how teachers construct assessments to evaluate students’ learning with VR. The study was an open-ended and exploratory case study (Creswell & Creswell, 2018), allowing teachers the flexibility to design and implement assessments that were relevant to their classroom contexts and specific learning objectives. Although many studies have begun investigating the impacts of VR on student learning (Chen et al., 2005; Johnson, 2019; Krokos et al., 2019; Makransky & Lilleholt, 2018; Merchant et al., 2014), we examine the pedagogical implications and challenges teachers encountered as they approached the integration of VR, developing assessments, and implementing those assessments to evaluate student learning. We also include students’ perceptions of the assessments in order to capture their impressions of the alignment of their virtual experiences and the assessments.

Research questions

How do teachers design assessments to capture students’ learning as a result of using VR in the classroom?

What challenges do teachers encounter as they seek to assess student learning from VR experiences?

After using VR in their classroom, how do teachers perceive the efficacy of the assessment strategy they used?

What are students’ perceptions about the assessments’ ability to evaluate their learning from VR?

Method

Teacher interviews

Participants and recruitment

We used a multi-site approach to recruitment (e.g., Grimes & Warschauer, 2008), inviting teachers from six diverse schools who had participated in our previous VR research as well as additional educator contacts involved in VR teacher groups. We selected this sample because their familiarity with similar classroom technologies meant they had a high likelihood of getting past the basic implementation of VR and onto assessment considerations. We sent emails to six high school instructors in Missouri, Massachusetts, Washington, D.C., Washington, and Georgia who planned to use VR during the 2018–2019 school year. All six teachers expressed interest in participating in this research. Teachers signed informed consent forms for the study, and any VR equipment donated to the classrooms was documented via a Memorandum of Understanding (treated as donations to the school regardless of continued participation in the research) or, if equipment was not needed, teachers received a $250 gift card for purchasing VR content. All recruitment and study procedures were approved by an independent IRB before beginning this research.

Four of the teachers had previously participated in exploratory studies about VR, and two were new to using VR but expressed confidence in using emerging technologies. Most teachers (4) worked in traditional public schools, one in a private school, and one in a public charter school. See Table 1 for student, teacher, and school characteristics (names are pseudonyms).

Table 1. Description of classrooms and students.

Interview procedures

Three researchers with extensive experience studying virtual reality implementation in the classroom conducted the interviews. Each researcher was assigned two teachers to interview throughout the study. We conducted two 45–60 minute semi-structured interview sessions with each teacher, one at the start of the school year and another just after their VR unit. We used semi-structured interview protocols (see Supplementary material Appendix A) to elicit teachers’ subjective experiences around planning, designing, and implementing assessments to capture students’ learning with VR. This interview format provided the researchers with a consistent set of questions for every participant while maintaining flexibility to rephrase, probe, or omit questions when needed to obtain coverage of teachers’ recalled experiences without redundancy (McIntosh & Morse, 2015).

During some of the initial interviews, one researcher led the interview while the other two listened and took notes to test the interview protocol and prepare for subsequent interviews. The assigned researcher transcribed participant responses during each interview. One teacher requested to complete the post interview via email. In addition to the interviews, which were the primary data sources in this study, we asked teachers to provide copies of the assignments and tests they developed so that we could accurately describe their assessment approach in the Results. The three researchers met monthly throughout the school year to provide updates on participants’ VR use and to discuss specific points related to the research questions from any recent interviews.

Thematic analysis

We analyzed the teacher interview responses using thematic analysis, a qualitative methodology used to identify, organize, analyze, describe, and report patterns or themes within a data set in rich detail (Braun & Clarke, 2006). We used a bottom-up, or inductive, approach without a preexisting coding frame and without preconceived notions about what the data set should reveal. This means the themes we identified are strongly linked to the data themselves rather than a larger theory that describes the phenomena revealed by the data (Patton, 1990). The themes that emerged from this process are semantic in that they describe the explicit meanings in the data without theorizing about what has shaped or informed participants’ responses (Braun & Clarke, 2006). The analysis focused on cataloguing assessment approaches and challenges teachers faced.

Our thematic analysis process involved a series of six iterative steps. First, the researchers independently reviewed all of the teachers’ pre and post interview notes to familiarize themselves with the data. In the second step, each researcher focused on the pre and post interview notes only for the two teachers they had interviewed. In this phase, we pulled quotes and examples with the research questions in mind, logging them individually as reflection notes. The researchers then came together for the third step which was to discuss, compare, and contrast the individual teachers’ responses and assessment approaches, creating a shared document with a compilation of examples and quotes that we grouped into preliminary categories (e.g., challenges to assessment) across teachers.

In the fourth stage, the primary investigator refined the broad categories of interview excerpts and examples, and organized the material according to preliminary themes. Next, the three researchers discussed the meaning of these themes before returning to their assigned transcripts to identify further examples and quotes that aligned with each one. Finally, the primary investigator reviewed the content within each preliminary theme, summarizing them into final themes with supporting data, which served as the first draft of the findings as they appear in this report. As we prepared the findings for publication, we consulted several times with a qualitative research expert to ensure that the conclusions were appropriate given the data analysis strategy used.

Student surveys

Sample

We designed a mixed method survey (Supplementary material Appendix B) to collect feedback about the assessments from all of the students across all of the classrooms. Teachers sent opt-in consent forms home so that a guardian could provide informed consent for the student to participate in the research. Students with guardian consent were asked to complete a 10-minute online survey at the end of their class VR unit. Students were informed that they could opt out of the research at any time with no effect on their course grades. Across all of the classrooms, a total of 277 students, ranging in age from 14 to 17 years old, responded to our survey.

Survey design

The 16-item online survey asked students about their VR use, the value of VR as a learning tool, and their perceptions of the assessments their teachers used with their VR unit. Only student feedback about the assessments is reported here. Likert scale and forced choice items were accompanied by open-ended prompts where students could provide context for their forced-choice responses (Creswell & Creswell, 2017). This is an efficient mixed method for collecting data from a large number of students while also making sure students can provide answers in their own words, with minimal social desirability effects (Creswell & Creswell, 2017; Groves et al., 2011).

Data analysis

To analyze broad trends across all student responses (n = 277), we did not disaggregate student data by class. Students’ Likert scale responses were summarized by calculating descriptive statistics (i.e., total counts, averages, and percentages) of students who chose each response. In the results, we summarize the forced choice response data and facilitate comparison of items by reporting the percentage of students who agreed to some extent with the given statements. We coded students’ responses to the open-ended questions using a summative content analysis approach (Hsieh & Shannon, 2005), identifying certain recurring words, phrases, or content in the text of students’ responses and using that recurring content to create codes. The researchers then read back through the open-ended responses to quantify the occurrences of these codes. Counting is used to identify patterns in the data and to contextualize the codes (Hsieh & Shannon, 2005). The summative analysis went beyond a simple quantitative report of the frequency of certain codes to latent content analysis of each of the codes. In this step, the researchers gathered student quotes under each code to interpret the contextual use of the code. This process allowed us to identify and report the reasons students gave for their agreement or disagreement with the survey statements about the assessments they took in their classes.
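For readers who want to reproduce this kind of summary on their own classroom data, the two quantitative steps described above (the percentage of students agreeing to some extent, and counts of recurring codes in open-ended answers) might be sketched as follows. This is only an illustrative sketch, not the procedure or software used in the study: the scale labels, the keyword codebook, and the sample responses are all hypothetical, and the study's codes were created and checked by researchers reading the responses rather than by keyword matching alone.

```python
from collections import Counter

# Hypothetical agreement labels; the survey's actual response options are not listed in the text.
AGREE = {"Agree", "Strongly agree"}

def percent_agree(responses):
    """Percentage of non-missing responses that agree to some extent."""
    answered = [r for r in responses if r is not None]
    if not answered:
        return 0.0
    return 100.0 * sum(r in AGREE for r in answered) / len(answered)

def count_codes(texts, codebook):
    """Count how many responses mention each code at least once."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        for code, keywords in codebook.items():
            if any(keyword in lowered for keyword in keywords):
                counts[code] += 1
    return counts

# Made-up example data, for illustration only.
likert = ["Agree", "Strongly agree", "Neutral", "Disagree", None]
open_ended = [
    "I remember more because I saw it.",
    "The goal of the activity was unclear.",
]
# Hypothetical codebook mapping each code to recurring words or word stems.
codebook = {"retention": ["remember", "recall"], "unclear_goals": ["unclear", "confus"]}

print(percent_agree(likert))
print(dict(count_codes(open_ended, codebook)))
```

Counting each code at most once per response keeps the tallies comparable to the number of students, which is one reasonable design choice; counting every occurrence would instead weight longer answers more heavily.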

Results

Table 2 summarizes information about the teachers (with pseudonyms) and their approaches to assessment.

Table 2. VR use and assessment approaches by teacher.

Teacher assessment strategies to capture learning from VR

When prompted to consider assessment as part of their VR curriculum, the educators in this study easily generated ideas regarding how the assessments might be constructed. All six teachers told us that assessments should be both genuine (i.e., consistent with real-world applications) and meaningful (i.e., students understand and buy into the purpose behind the assessment and desired skills) in order to best align with the virtual experience. The assessment types that teachers discussed in their pre-interviews varied from more traditional (e.g., rote assessments, or the same assessments used prior to introducing VR) to new, more open-ended assessments. The teachers told us that they expected to observe positive outcomes on student assessments simply as a result of having the students engage in immersive VR learning.

Two teachers constructed new formative assessments. In Leah’s (T4) Level 3 world language classroom, it was a “passport project” where students wrote, spoke, and practiced concepts as they virtually visited different locales around Central and South America. Students engaged with new vocabulary and cultural artifacts and wrote about products, practices, and perspectives from each location as they moved through the unit. Leah wrote to students and gave them feedback at various points along the geographical and cultural journey. She stated,

This worked really well. It was easy to grade and allowed students to express where they were at with their language skills. It was very clear what students were supposed to do. It obviously did not capture their speaking, but it did capture their writing.

Leah noticed that students were able to stay in the target language while doing the work and could share their opinions and speculations, along with correctly applying the subjunctive, which was one of the primary goals for the unit.

Delaney (T1) altered existing formative assessments in her biology course but used a district-required summative assessment at the end of the year. She relied on a brief formative questionnaire for use immediately after a VR experience she had set up in a station rotation model (students moving from station to station, completing various activities) that targeted specific anatomical systems. Using this “exit ticket,” Delaney hoped to quickly assess whether students had gained a key insight related to hemoglobin transport. She stated,

I have large class sizes, over 25 in most classes. Rotating them through stations is a challenge, but the real-world experience and hands-on techniques is a strength of science. In VR kids can get in there and look around, actually getting able to go into anatomy/physiology in-depth…manipulating things with their hands. I want to know what they understand and reflect upon that and change what I need to.

The other four teachers elected to use summative approaches to assessment. Monica (T2) stated that she regularly used formative assessments with her students and then traditionally did deeper summative reflections at the end of her humanities units. She planned a similar strategy with VR. She was looking to see if reflective work at the conclusion of the VR units prompted students to have a greater depth of understanding than she would normally see. She encouraged her students to challenge their thinking and preconceptions through reflection, comparing their prior knowledge to present learning after experiencing historical content in VR. The project was called “Choice History” and students could select their own content path in the study of world history (e.g., WWI). Students were specifically asked to reflect on learning that occurred as a result of their VR experience.

Naomi (T5) regularly utilized formative assessments of students’ vocabulary use prior to implementing VR. She then had students use 360° VR content about Costa Rica and Chile in order to compare the two cultures. Students completed an open-ended summative assessment scored on language control, text length, language function, comprehensibility, comprehension, and cultural awareness. Although student responses were assessed using a previously developed rubric, she hoped that the virtual experience would inspire students to explore and construct meaning, resulting in more detailed open-ended responses. She theorized that students would be more willing to engage in a broader use of vocabulary on the assessments as a result of the immersive experience. Naomi was very enthusiastic about VR as a tool for students to experience culture first-hand, see the challenges facing communities in other parts of the world, and compare and contrast cultures. She felt that by placing students in immersive VR, she could push them to think more deeply and connect with cultural concepts in new ways.

Tania (T6) used her existing end-of-chapter tests as part of her assessment strategy. The end of chapter tests consisted of matching prompts (e.g., matching historical events with the year in which they occurred) as well as multiple choice questions. Tania added short answer questions that prompted students to consider viewpoints from multiple historical actors (e.g., “In your opinion, to what extent did the United States win the Vietnam War?”). Tania predicted that the VR activities, such as conducting virtual interviews with people who played very different roles during the Vietnam War, would help students form a more complex understanding of the event.

Ami (T3) devised an entirely new assessment method. She used the VR game Keep Talking and Nobody Explodes (Steel Crate Games, 2015) as the final unit assessment for her students. In this unit, students learned new vocabulary and how to state commands in Spanish so they could successfully complete the assessment task in VR. In groups of four, students worked collaboratively, in Spanish, to disarm a virtual bomb while Ami observed and assessed the groups using a rubric focused on language use (e.g., commands) and comprehension. She stated in her pre-interview:

I will work on developing an easy and short checklist to assess success and receive student feedback on the use of content like commands in listening and speaking to defuse the bomb as well as expressions to ask for clarification and paraphrasing skills.

Unanticipated challenges posed difficulties for timely assessment

Although four of the six teachers had used VR prior to this study, nearly all encountered technological challenges that prevented easy implementation of the tool, causing delays that impacted their assessments. Delaney (T1) shared in her post-interview, “The struggle in the beginning really sucked. I wish I had understood the computer needs earlier. I think it could have been amazing.” Due to delays in getting hardware up and running, Delaney had to completely change her initial plan to use a formative assessment with VR in a biology unit at the beginning of the year. Also, due to the delays, she had to find new VR content, change her formative assessments, and use VR much later than planned.

Even when hardware was available, there were challenges in using it. Ami (T3) noted that she had Google Cardboards and an HTC Vive available in her classroom and at the beginning of the study had intended to use both with students. However, she shared that she “couldn’t figure out the Vive on [her] own” and did not have the time to go back to it. Instead, she opted for the cell-phone-based VR experiences only, thus altering the VR activity, student learning experiences, and her approach to assessment.

Monica (T2) and Leah (T4) had equipment available and running in their classrooms. They struggled with the school Wi-Fi connection and the fact that their VR equipment, and the students’ cell phones, were considered nonstandard district equipment. Therefore, VR activities and assessments were often delayed as they navigated technical challenges.

In Tania’s (T6) case, her school was working with outside developers to design a VR experience that aligned exactly to course standards. There were delays in getting the content and she struggled with the process of obtaining a school-district-approved computer capable of running VR. She expressed anxiety about how the VR content was actually going to work in practice with the assessment she planned to give because the content kept getting delayed and she was unable to preview it.

The other major challenge for the instructors, with the exception of Tania, was finding relevant VR content for the courses that aligned with school/district standards and learning objectives. Teachers looked for commercial content that would overlap with course objectives and then adjusted their units, and assessments, to make sure the VR content fit. Delaney noted:

The experiences are not exactly on key with what I need yet. Not as many experiences exist now but the technology will get there. The length of the experiences and trying to get kids to do it in one class period is a challenge.

Teacher perceptions of the efficacy of their assessment strategies

Post-VR and assessments, all teachers interviewed (Footnote 1) saw benefits to using the technology, whether it was enhanced engagement and increased interest in the class material, better subsequent learning outcomes, opportunities for visual learning and a sense of “being there” (i.e., presence), or new types of collaboration with peers. In all classes, VR was an option, so there were students who opted not to use VR, and several teachers compared VR and non-VR students in their interviews. Two major findings were that teachers noticed a great deal of subjectivity in their assessments and, relatedly, it was difficult for teachers to single out any improvements specifically from VR because of other changes they had made as a result of introducing the technology.

Naomi (T5) constructed a rubric for her students’ responses to the cultural observations and noted: “I find [the VR group’s] responses more holistic in text type, length, narrating, describing and giving opinions. I also find their recall is more accurate.” However, upon further reflection, she commented that, in practice, the rubric was “too general” and she should be more specific in the future to better assess whether students were really meeting the requirements of each criterion.

When asked, “How do you know if this tool was effectively assessing student knowledge gains?” Monica (T2) remarked: “In some ways, I don’t. I will say the conversations and reflections with students made me much more aware of what the students felt was important.” Monica felt that using VR as a supplementary resource enabled students to provide more interesting details and perspectives in their written reflections relative to other students who did not choose to use VR. She also felt that the assessments showed that students were clearing up possible misconceptions. She shared a student reflection to illustrate her point:

I felt like I was in that trench, and it looked a lot different than I thought it would. In books and even in movies it feels not anywhere near as real as when you are actually standing in it. It was smaller in there, and I felt sorry for the men that were suffering in there.

Leah (T4) stated that she felt VR provided her students with a visual connection to the vocabulary they were learning and described it as “an almost real experience with the vocabulary.” Through observations and work with students in VR, she believed she saw faster grammar acquisition and improved cultural consideration, but her grading rubric was not specifically designed to capture these changes. She stated,

They see things and experience the same things, so they really can describe what they see to one another…There is rich content for them to engage with that has an impact on their ability to remember the vocab and engage in conversation.

Ami (T3) had designed a very specific rubric for observing student problem solving, in the target language, while immersed in VR. She felt that the assessment was well-designed to capture the knowledge students gained throughout the unit because she “could see students as they negotiated meaning, applied their knowledge and vocabulary” and she “watched them give commands, and heard them describe a situation.” She could give specific, real-time feedback to the students, as they were immersed, about their command of Spanish. This was a new format and presentation of assessment for Ami and her students. She described enhanced student engagement, as well as increased interaction with peers, improved use of the target language, and more descriptive language usage (both word-type and greater length and complexity of phrases) as a result of VR and this was captured in her observations and on her rubric.

Five teachers noted that incorporating VR led them to change their curriculum in other ways that they felt were beneficial to student learning. Delaney attributed observed learning gains to increased motivation and interest as a result of using VR and she felt the curriculum was enhanced by the implementation of the station rotation model, a new strategy for her classroom.

Monica shared that using VR may have helped with student engagement and their ability to connect more deeply with the learning process in their reflections:

Student engagement went through the roof and the reflections seemed to be deeper, and certainly were longer for the people who did the VR experience. The students seemed more able (or willing?) to chase down more content, to ask more questions. In turn, the students who did the VR experience gave more detailed answers with examples, particularly in the class discussion.

Ami and Leah remarked that they were teaching different content now and the inclusion of VR had shifted their overall curricular focus. Ami said that although she observed positive developments in her students, it was “difficult to compare [these improvements] to previous years” due to the significant changes in the curriculum as a whole. Naomi had previously used photographs of locations, such as Machu Picchu, with students for this unit. She would pass photos around to the class or project images on the screen up front and have students write responses while seated at their desks. With VR, students were up and moving around the classroom; she even allowed them to be in small groups in the hallway. She described how VR brought a new physical element to her classroom activities, which represented another change, in addition to the virtual content. The improvements that teachers made to their curriculum as they integrated VR created a confound as they tried to understand whether the VR experiences offered unique benefits for student learning.

Students’ perceptions of the assessments

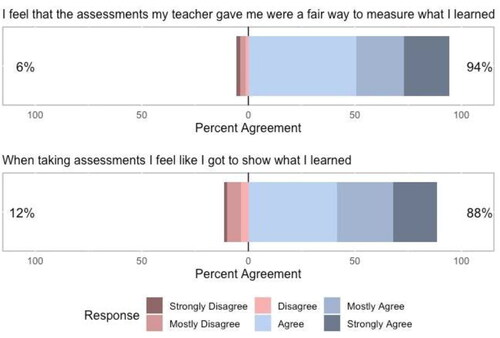

Of the students who responded to the student survey (n = 277), nearly all agreed to some extent with the statement: “I feel that the assessments my teacher gave me were a fair way to measure what I learned.” The majority of students also agreed to some extent with the follow-up statement: “When taking assessments I feel like I got to show what I learned.”

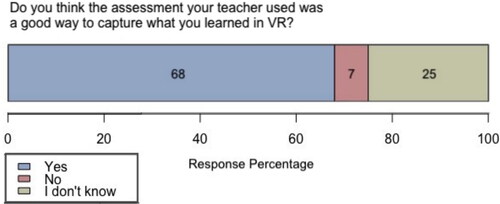

Most students responded with a “yes” to the question: “Do you think the assessment your teacher used was a good way to capture what you learned in VR?” (Figure 2). In open-ended follow-up responses, students who responded “yes” to this question provided a few reasons why they believed the assessments did a good job of capturing what they learned: because they were based on what they had encountered and practiced in VR, because the VR activities resembled real-world scenarios, or because VR facilitated an assessment experience where they were challenged to apply what they know in a unique and enjoyable way.

Figure 2. Student response proportions to a question about VR assessment quality. Note. Numbers on the bars indicate the number of students who selected each response.

A quarter of students responded with “I don’t know.” These students’ open-ended responses revealed that some students were unsure about how exactly they were being assessed through the different assignments and activities they were asked to complete. One student said, “I loved the VR but I would need a little bit more than just those interactions, like a summary of the assignment or activity.” Students in some classes felt that teachers simply introduced VR as a class activity and did not clearly communicate that there would be a follow-up assessment where they would be asked to demonstrate what they had learned through VR. For example, one student noted, “The tests/quizzes or other assessments seemed like a normal assessment and not something special for virtual reality. When we did projects, however, I think it was easier to show what I learned.” Other students reported that they did not feel like the assessment appropriately captured their learning because the pace of the VR activity was too fast, the VR activity was more entertaining than it was educational, and/or because they believed another method would have been more effective.

Discussion

In this study, we used a qualitative approach to study six North American high school teachers as they designed assessments to capture their students’ learning from VR experiences in the classroom. Our goal with this exploratory case study was to expand understanding (Yin, Citation2014) around the challenges teachers face as they attempt to assess learning after implementing VR. We documented their challenges, their approaches to assessment, and their perceptions of success. We complemented teachers’ responses with survey data from their students around their experiences with the assessment process. The findings provide insights into the challenges of assessing learning from emergent technology in the classroom, with implications for both educators and researchers to further investigate this process and provide support for teachers as they approach this challenging and important task.

Teacher assessment strategies to capture learning from VR

The teachers were optimistic that the VR units and related assessments would feel more meaningful to students compared to their usual lessons. Teachers considered a range of assessment types for this study, from standard multiple choice tests and open-ended writing to a fully immersive VR assessment.

In the interviews, teachers used constructivist language to talk about students creating their own knowledge (Swan, Citation2005) by engaging in authentic, immersive learning and assessment experiences (Hu-Au & Lee, Citation2017; Wiggins, Citation1990). The teachers felt that VR enabled students to both construct new knowledge and connect with their prior knowledge to engage in a deeper application of the material. However, the enthusiasm the teachers displayed for their assessment ideas at the outset belied some of the challenges they would face in design, implementation, and evaluation of their assessment practices.

Five of the six teachers used either their assessment strategy from before incorporating VR (e.g., an end-of-unit reflection/presentation, a rubric-graded series of notes, a written unit test) or a slightly modified version (e.g., inserting an exit ticket, adding a few open-response questions to an existing assessment). This aligns with similar findings from research on technology in the classroom (Mama & Hennessy, Citation2013), wherein, due to the complexities of implementing the technology, teachers may be inclined to use more familiar approaches to instruction and assessment than they had intended until they feel more comfortable with the technology itself (Ertmer & Ottenbreit-Leftwich, Citation2010).

Only one teacher, Ami (T3), totally altered her traditional unit pedagogy and assessment design to fully integrate VR into both the curriculum and the assessment. Her use of the VR content as the assessment, with real-time feedback, group collaboration, and active application of vocabulary, modeled a more complex integration of technology and assessment. This type of application of VR assessment aligns with constructivist pedagogical styles that are grounded in student experience where students actively demonstrate their knowledge through an application of concepts (Brooks & Brooks, Citation1999; Honebein, Citation1996). If students had not mastered the proper vocabulary and commands, they would not be able to effectively disarm the virtual bomb, thus losing points in this “applied” assessment.

Unanticipated challenges posed difficulties for timely assessment

As emergent technologies such as VR make their way into classroom settings, it is common to encounter implementation hurdles. In our study, when teachers planned to use VR as a key component of their lessons and assessments, delays or issues derailed their pedagogical goals. Four out of six teachers experienced technological issues that impacted their ability to use VR, and thereby detracted from their time to develop assessments. We specifically chose the participants in this study because they were technologically savvy or had used VR before, so we find it all the more interesting that it was still a challenge for this group to implement both VR and assessments.

Research has demonstrated that teachers spend extra time and energy implementing a new technology (Koehler & Mishra, Citation2009; Vannatta & Fordham, Citation2004). The teachers were surprised and dismayed by the delays and hurdles they experienced in using VR. Surprisingly, the delays were not necessarily a function of the complexity of the VR hardware. Though we did hear from teachers that they encountered issues with their IT support and districts when using the more advanced hardware, even those using cell-phone-based experiences ran into challenges in classroom application.

We focus on technical challenges to demonstrate how teachers’ plans for assessment became less important than the difficulties they experienced with the technology. Given teachers’ plans to use VR in conjunction with particular units of study, delays in functionality often meant the class had already moved on to new units; this was particularly frustrating for teachers.

All of the teachers found it challenging to locate relevant and appropriate VR content, with the exception of Tania (T6), who worked with an outside company that created a VR experience (which came with its own delays and struggles). Proper alignment between traditional course curricula and the commercially available VR content was at the forefront of the teachers’ minds and presented a continual challenge. Educators have to invest significant time and energy to find and select virtual content (Cook et al., Citation2019; Johnson, Citation2019).

The challenges teachers faced making the technology work drew time and energy away from their assessment development. General assumptions about implementation strategies may not hold true in actual classrooms; those gaps in our understanding may make a significant difference in our ability to improve practice (Roblyer, Citation2005). Our findings indicate that while assessment should be a fundamental component of the introduction of VR to the classroom, we cannot overlook the technical barriers that the technology presents for teachers and how those barriers may result in assessment becoming an afterthought with regard to overall implementation. The fact that all teachers persevered suggests that the appeal and potential value of VR for students was a strong motivator.

Teacher perceptions of the efficacy of their assessment strategies

VR’s ability to engage learners in immersive content is an appealing aspect of the technology (Baker & O’Neil, Citation1994; Ladendorf et al., Citation2019; Prensky, Citation2013; Sandholtz et al., Citation1997; Winn, Citation1993). The teachers in our study all noted enhanced engagement with the material and were enthusiastic to share improvements they observed as a result of introducing their assessments of VR learning. Teachers observed qualitatively different behaviors and representations of learning from students compared to past years, both as a result of having used VR and of the changes to their pedagogy necessitated by VR. At times, teachers observed improvements that they felt were occurring as a result of VR exposure but were not well-captured by their assessments. Being able to capture these shifts in learning is vital, and the teachers in this study reflected that there were ways they could have done this better.

Teachers who used multiple choice tests, for instance, found basic recall to be present but the more nuanced or advanced concepts included in the VR content were not necessarily reflected within the test’s questions or the students’ responses. This makes sense given that some types of learning that VR might be most intended for in a classroom setting (i.e., more abstract, higher-level thinking and perspective taking; Chen et al., Citation2005; Krokos et al., Citation2019) may not be as strongly emphasized on multiple choice assessments.

From the interviews, it appeared that more open-ended assessments enabled teachers to better understand what students were learning from the virtual experience. This was evident with Delaney (T1), who found her formative assessments captured more of the higher-level concepts than the multiple choice test used by the district. Similarly, Monica (T2) noticed enhanced perspective-taking on open response reflections, as did Naomi (T5) in both student discussions and written reflections. Though it was encouraging to hear teachers reflect on enhanced student discussions, improved writing, and faster skill acquisition, those specifics were not captured in most of the assessment designs; they surfaced mainly in teachers’ subjective observations.

When VR itself was the assessment, the findings were less subjective than when another assessment mode was used to retrospectively assess learning from a virtual experience. Because the content was the assessment, and the teacher was actively observing student interaction in the virtual space, she could provide clear feedback about students’ contextual understanding and vocabulary.

Shifts in pedagogy and instruction commonly occur upon the introduction of a new technology (Cook et al., Citation2019; Johnson, Citation2019), and VR was no exception. Teachers noted that it was difficult to compare the current assessments to students’ prior work because of the combination of shifts in pedagogy and new technology. This type of shift in practice is documented in previous work, which suggests that teachers’ concrete experiences with technology inform their attitudes and beliefs about integration and vice versa (Ruggiero & Mong, Citation2015). Bringing in a new technology such as VR does not represent an isolated step; it becomes embedded in the learning context and, if well-connected to the teacher’s pedagogical knowledge, offers opportunities for the continued evolution of classroom practice (Hughes, Citation2005). That benefit, independent of the possible gains of immersive learning, might be a great opportunity for teachers to continue to evolve and refine how students demonstrate understanding.

Students’ VR assessment experience

We wanted to understand students’ perceptions of the assessments developed by their teachers. We asked students to reflect on what they learned using VR and how well they were able to demonstrate that learning on their assessments. The majority of students felt that the assessments were a fair way to measure and show their learning. Though these are encouraging findings, a subset of students were not sure whether the assessments were well-aligned with the VR unit or the content they learned through VR. Student responses indicated that at times they did not realize they were being assessed, misunderstood the skills being emphasized (e.g., speed vs. content), and/or were not entirely sure what skill or content knowledge was being assessed. These responses suggest that not having the proper structure and framing around the VR activities can detract from students’ learning experience, retention of relevant information, and the overall effectiveness of assessments as a reflection of learning outcomes for students.

The nuance of the student responses to these exploratory questions serves as an important reminder that student input can provide practical insights on the quality of the technology-facilitated learning experience and whether the assessments captured their learning. There was alignment between the teachers’ remarks about the challenges of capturing VR learning through conventional assessment methods and the student responses, suggesting that assessing knowledge gained through VR experiences is an area that would benefit from further exploration.

Limitations

We conducted an exploratory case study of six educators to better understand how teachers would approach developing assessments to capture students’ learning from VR. This design yielded rich data but did not cover a wide range of teachers and subjects. Moreover, teachers selected their own units, types of assessments, and measurement tools and had prior interest in using the technology, which may have influenced their decisions throughout the study. Compared to a controlled experimental design focused on measuring students’ pre-post learning outcomes, our case study focused on gaining insights into how teachers think about and develop assessments when using an emerging technology in the classroom.

Future work

It is vitally important as new technologies enter the classroom that dialogue does not solely focus on the technology itself, but also on how educators know that the technology is bringing value to student learning. Continuing to delineate whether and how VR adds value to student learning, as well as providing students new opportunities to showcase their learning that align well with the technology, will be crucial as its usage grows. Because the teachers in our study felt that VR was fostering learning in a new way, it will be important that assessment strategies keep pace with newer approaches to student learning. Additionally, inviting students to give input in the design of the assessments would provide more evidence for teachers to further align their technology and pedagogy.

Conclusion

There were things they learned in VR that weren’t on the test. The extra things they learned from VR were in the right direction and sometimes more advanced than what they are required to learn this year… and that is really beneficial for them in the future even if it’s not something they’re required to know. – Delaney (T1)

Our work with educators highlights the unique potential of VR to actively engage learners in new, immersive, and interactive ways. It is important to note the teachers experienced hurdles implementing VR that at least temporarily impeded their ability to focus on assessment. These hurdles demanded a significant amount of time and attention on external challenges, as well as additional time on instruction and assessment.

Incorporating VR into their classrooms prompted teachers to make changes to their assessment approaches and overall pedagogy. Given the changes occurring as a result of the technology and the pedagogical shifts, it was difficult to compare the new methodologies and experiences to the conventional curriculum. The challenges teachers encountered when trying to use traditional assessments to capture the learning they felt they observed in students underscore the importance of looking beyond VR implementation and finding practical and objective ways to help teachers assess this kind of learning.

Students provided an important source of information. Student accounts emphasized the need for setting explicit learning goals around VR activities and articulating how the VR experience is meant to achieve learning goals.

Overall, teachers struggled to find clear evidence that learning was truly improved as a result of VR, mainly because they had to rethink their classroom practice and assessments while overcoming many technical challenges. An important step forward would be to co-develop assessment strategies with teachers that can feasibly be used to assess the learning value a new technology brings when implemented in a real classroom. Supporting teachers to collect clear evidence indicating whether learning was truly improved as a result of VR (or any technology) will provide them with crucial information to decide whether the time and effort is worthwhile.

Notes on contributors

Lisa M. Castaneda has a Master’s degree in Education and was a classroom teacher for 10 years, in grades K-8. She is a Co-founder and the CEO of foundry10, a philanthropic educational research organization based in Seattle, WA. She has led larger scale VR research in classrooms across the U.S. and Canada. Her work has been featured in research journals, educator-oriented conferences and press, as well as in popular press and developer conferences.

Samantha W. Bindman is a Senior Researcher at foundry10 who earned her PhD in Developmental Psychology from the University of Michigan. Before coming to foundry10, Sam worked on two IES researcher-practitioner partnership grants at Seattle Public Schools focusing on racial and ethnic disproportionality in school discipline and parent involvement in students’ transition from middle to high school. Sam’s current projects include exploring the potential for emergent technologies to foster learning both in and out of the classroom and the role of technology in family life.

Riddhi A. Divanji is pursuing a Master’s degree in Measurement and Statistics at the University of Washington. She is interested in evaluating youth outcomes, exploring how learning takes place within and across settings, and building community partnerships to better support young people as they move through their education. Riddhi’s current projects involve exploring adaptive learning technologies, K-12 interventions, and use of games in learning environments.

Notes

1 Tania (T6) was unable to complete all of her final interview and is omitted from this portion of the results.

References

- Aggarwal, R., Grantcharov, T. P., Eriksen, J. R., Blirup, D., Kristiansen, V. B., Funch-Jensen, P., & Darzi, A. (2006). An evidence-based virtual reality training program for novice laparoscopic surgeons. Annals of Surgery, 244(2), 310.

- Bailenson, J. (2018). Experience on demand: What virtual reality is, how it works, and what it can do. WW Norton & Company.

- Baker, E. L., & O’Neil, H. F. (Eds.). (1994). Technology assessment in education and training. (Vol. 1). Lawrence Erlbaum Associates.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Brooks, M. G., & Brooks, J. G. (1999). The courage to be constructivist. Educational Leadership, 57(3), 18–24.

- Bursztyn, N., Walker, A., Shelton, B., & Pederson, J. (2017). Assessment of student learning using augmented reality Grand Canyon field trips for mobile smart devices. Geosphere, 13(2), 260–268. https://doi.org/10.1130/GES01404.1

- Cadiz Province Tourism. (2017, Jan 26). 360° VR: Puenting en Algodonales [Video]. YouTube. https://www.youtube.com/watch?v=QZbj84J1VOE

- Capitol Interactive & Digital History Studios. (2021a). I was there [Virtual reality content]. Capitol Interactive. https://www.vrexplorer.net/I-Was-There/103794/Oculus-Go

- Capitol Interactive & Digital History Studios. (2021b). Through the eyes [Virtual reality content]. Capitol Interactive. https://www.vrexplorer.net/Through-The-Eyes/104170/Oculus-Go

- Capitol Interactive & Digital History Studios. (2021c). Timelines: Cold War, Vietnam & 1968 [Virtual Reality Content]. Capitol Interactive. https://www.vrexplorer.net/Timelines-Cold-War-Vietnam-1968/103792/Oculus-Go

- Castaneda, L., Cechony, A., & Bautista, A. (2017). Applied VR in the Schools, 2016-2017 Aggregated Report. foundry10. http://fineduvr.fi/wp-content/uploads/2017/10/All-School-Aggregated-Findings-2016-2017.pdf

- Castaneda, L. M., Bindman, S. W., Cechony, A., & Sidhu, M. (2018). The disconnect between real and virtually real worlds: The challenges of using VR with adolescents. Presence: Teleoperators and Virtual Environments, 26(4), 453. https://doi.org/10.1162/pres_a_00310

- Chen, C. J., Toh, S. C., & Ismail, W. M. F. W. (2005). Are learning styles relevant to virtual reality? Journal of Research on Technology in Education, 38(2), 123–141. https://doi.org/10.1080/15391523.2005.10782453

- Chen, F. Q., Leng, Y. F., Ge, J. F., Wang, D. W., Li, C., Chen, B., & Sun, Z. L. (2020). Effectiveness of virtual reality in nursing education: Meta-analysis. Journal of Medical Internet Research, 22(9), e18290. https://doi.org/10.2196/18290

- Chernikova, O., Heitzmann, N., Stadler, M., Holzberger, D., Seidel, T., & Fischer, F. (2020). Simulation-based learning in higher education: A meta-analysis. Review of Educational Research, 90(4), 499–541. https://doi.org/10.3102/0034654320933544

- Coelho, C., Tichon, J. G., Hine, T. J., Wallis, G. M., & Riva, G. (2006). Media presence and inner presence: The sense of presence in virtual reality technologies. In G. Riva, M. T. Anguera, B. K. Wiederhold, & F. Mantovani (Eds.), From communication to presence: Cognition, emotions and culture towards the ultimate communicative experience (pp. 25–45). IOS Press.

- Cook, M., Lischer-Katz, Z., Hall, N., Hardesty, J., Johnson, J., McDonald, R., & Carlisle, T. (2019). Challenges and strategies for educational virtual reality. Information Technology and Libraries, 38(4), 25–48. https://doi.org/10.6017/ital.v38i4.11075

- Creswell, J. W., & Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. SAGE Publications, Inc.

- Creswell, J. W., & Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). SAGE Publications, Inc.

- Dwyer, D. C., Ringstaff, C., & Sandholtz, J. H. (1991). Changes in teachers’ beliefs and practices in technology-rich classrooms. Educational Leadership, 48(8), 45–52.

- Elmqaddem, N. (2019). Augmented and virtual reality in education. Myth or reality? International Journal of Emerging Technologies in Learning, 14(03), 234–242. https://doi.org/10.3991/ijet.v14i03.9289

- Ertmer, P. A., & Ottenbreit-Leftwich, A. T. (2010). Teacher technology change: How knowledge, confidence, beliefs, and culture intersect. Journal of Research on Technology in Education, 42(3), 255–284. https://doi.org/10.1080/15391523.2010.10782551

- Ferguson, R. F. (2012). Can student surveys measure teaching quality? Phi Delta Kappan, 94(3), 24–28. https://doi.org/10.1177/003172171209400306

- Freina, L., & Ott, M. (2015). A literature review on immersive virtual reality in education: State of the art and perspectives. The International Scientific Conference Elearning and Software for Education, 1, 133–141.

- Gholson, B., & Craig, S. D. (2006). Promoting constructive activities that support vicarious learning during computer-based instruction. Educational Psychology Review, 18(2), 119–139. https://doi.org/10.1007/s10648-006-9006-3

- Google LLC. (2016). Google Earth VR [Virtual Reality Content]. Google LLC. https://arvr.google.com/earth/

- Grimes, D., & Warschauer, M. (2008). Learning with laptops: A multi-method case study. Journal of Educational Computing Research, 38(3), 305–332. https://doi.org/10.2190/EC.38.3.d

- Groves, R. M., Fowler Jr, F. J., Couper, M. P., Lepkowski, J. M., Singer, E., & Tourangeau, R. (2011). Survey methodology (Vol. 561). John Wiley & Sons.

- Harman, J., Brown, R., & Johnson, D. (2017). Improved memory elicitation in virtual reality: new experimental results and insights. In R. Bernhaupt, G. Dalvi, A. Joshi, D. K. Balkrishan, & J. O’Neil (Eds.), IFIP conference on human-computer interaction (pp. 128–146). Springer.

- Harmes, J. C., Welsh, J. L., & Winkelman, R. J. (2016). A framework for defining and evaluating technology integration in the instruction of real-world skills. In Y. Rosen, S. Ferrara, & M. Mosharraf (Eds.), Handbook of research on technology tools for real-world skill development (pp. 137–162). IGI Global.

- Heeter, C. (1992). Being there: The subjective experience of presence. Presence: Teleoperators and Virtual Environments, 1(2), 262–271. https://doi.org/10.1162/pres.1992.1.2.262

- Honebein, P. C. (1996). Seven goals for the design of constructivist learning environments. In B. G. Wilson (Ed.), Constructivist learning environments: Case studies in instructional design (pp. 11–24). Educational Technology Publications.

- Hsieh, H. F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

- Hu-Au, E., & Lee, J. J. (2017). Virtual reality in education: A tool for learning in the experience age. International Journal of Innovation in Education, 4(4), 215–226. https://doi.org/10.1504/IJIIE.2017.10012691

- Hughes, J. (2005). The role of teacher knowledge and learning experiences in forming technology-integrated pedagogy. Journal of Technology and Teacher Education, 13(2), 277–302.

- Immersive VR Education Ltd. (2018). Titanic VR [Virtual Reality Content]. Immersive VR Education Ltd. http://titanicvr.io/

- Inspyro Ltd. (2016). Trench experience VR [Virtual Reality Content]. Inspyro Ltd. https://play.google.com/store/apps/details?id=com.computeam.sommevr

- International Society for Technology in Education. (2017). ISTE standards for educators. https://www.iste.org/standards/iste-standards-for-teachers

- Johnson, J. (2019). Jumping into the world of virtual & augmented reality. Knowledge Quest, 47(4), 22–28.

- Kaplan, A. D., Cruit, J., Endsley, M., Beers, S. M., Sawyer, B. D., & Hancock, P. A. (2021). The effects of virtual reality, augmented reality, and mixed reality as training enhancement methods: A meta-analysis. Human Factors, 63(4), 706–726. https://doi.org/10.1177/0018720820904229

- Koehler, M., & Mishra, P. (2009). What is technological pedagogical content knowledge (TPACK)? Contemporary Issues in Technology and Teacher Education, 9(1), 60–70.

- Kolb, L. (2017). Learning first, technology second: The educator’s guide to designing authentic lessons. International Society for Technology in Education.

- Koohang, A., Riley, L., Smith, T., & Schreurs, J. (2009). E-learning and constructivism: From theory to application. Interdisciplinary Journal of E-Learning and Learning Objects, 5(1), 91–109.

- Kopcha, T. J., Neumann, K. L., Ottenbreit-Leftwich, A., & Pitman, E. (2020). Process over product: The next evolution of our quest for technology integration. Educational Technology Research and Development, 68(2), 729–749. https://doi.org/10.1007/s11423-020-09735-y

- Krokos, E., Plaisant, C., & Varshney, A. (2019). Virtual memory palaces: Immersion aids recall. Virtual Reality, 23(1), 1–15. https://doi.org/10.1007/s10055-018-0346-3

- Ladendorf, K., Schneider, D. E., & Xie, Y. (2019). Mobile-based virtual reality: Why and how does it support learning. In Y. Zhang & D. Cristol (Eds.), Handbook of mobile teaching and learning (pp. 1353–1371). Springer.

- Lau, K., & Lee, P. (2015). The use of virtual reality for creating unusual environmental stimulation to motivate students to explore creative ideas. Interactive Learning Environments, 23(1), 3–18. https://doi.org/10.1080/10494820.2012.745426

- Loveless, A., & Dore, B. (Eds.). (2002). ICT in the primary school. Open University Press.

- Makransky, G., & Lilleholt, L. (2018). A structural equation modeling investigation of the emotional value of immersive virtual reality in education. Educational Technology Research and Development, 66(5), 1141–1164. https://doi.org/10.1007/s11423-018-9581-2

- Mama, M., & Hennessy, S. (2013). Developing a typology of teacher beliefs and practices concerning classroom use of ICT. Computers & Education, 68, 380–387. https://doi.org/10.1016/j.compedu.2013.05.022

- Mayes, J. (2015). Still to learn from vicarious learning. E-Learning and Digital Media, 12(3-4), 361–371. https://doi.org/10.1177/2042753015571839

- McIntosh, M. J., & Morse, J. M. (2015). Situating and constructing diversity in semi-structured interviews. Global Qualitative Nursing Research, 2, 1–12.

- Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., & Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Computers & Education, 70, 29–40. https://doi.org/10.1016/j.compedu.2013.07.033

- Muséum National d’Histoire Naturelle & The Orange Foundation. (2017). Journey Into the Heart of Evolution [Virtual Reality Content]. Muséum National d’Histoire Naturelle & The Orange Foundation. https://www.mnhn.fr/en/explore/virtual-reality/journey-into-the-heart-of-evolution

- O’Connor, E. A., & Domingo, J. (2017). A practical guide, with theoretical underpinnings, for creating effective virtual reality learning environments. Journal of Educational Technology Systems, 45(3), 343–364. https://doi.org/10.1177/0047239516673361

- Ottenbreit-Leftwich, A., & Kimmons, R. (2018). The K-12 educational technology handbook (1st ed.). EdTech Books. https://edtechbooks.org/k12handbook

- Pantelidis, V. S. (2010). Reasons to use virtual reality in education and training courses and a model to determine when to use virtual reality. Themes in Science and Technology Education, 2(1–2), 59–70.

- Parong, J., & Mayer, R. E. (2021). Cognitive and affective processes for learning science in immersive virtual reality. Journal of Computer Assisted Learning, 37(1), 226–241. https://doi.org/10.1111/jcal.12482

- Patton, M. Q. (1990). Qualitative evaluation and research methods. SAGE Publications, Inc.

- Popham, W. J. (2008). Transformative assessment. Association for Supervision and Curriculum Development.

- Prensky, M. (2013). Our brains extended. Educational Leadership, 70(6), 22–27.

- Price, L., & Kirkwood, A. (2010). Technology enhanced learning—Where’s the evidence. In C. Steel, M. Keppell, P. Gerbic, & S. Housego (Eds.), Curriculum, technology & transformation for an unknown future. Proceedings ascilite Sydney 2010 (pp. 772–782). The University of Queensland.

- Psotka, J. (1995). Immersive training systems: Virtual reality and education and training. Instructional Science, 23(5–6), 405–431. https://doi.org/10.1007/BF00896880

- Regian, J. W., Shebilske, W. L., & Monk, J. M. (1992). Virtual reality: An instructional medium for visual-spatial tasks. Journal of Communication, 42(4), 136–149. https://doi.org/10.1111/j.1460-2466.1992.tb00815.x

- Roblyer, M. D. (2005). Educational technology research that makes a difference: Series introduction. Contemporary Issues in Technology and Teacher Education, 5(2), 192–201.

- Romrell, D., Kidder, L. C., & Wood, E. (2014). The SAMR model as a framework for evaluating mLearning. Journal of Asynchronous Learning Networks, 18(2), 1–15.

- Ross, S. M., Morrison, G. R., & Lowther, D. L. (2010). Educational technology research past and present: Balancing rigor and relevance to impact school learning. Contemporary Educational Technology, 1(1), 17–35.

- Roussos, M. (1997). Issues in the design and evaluation of a virtual reality learning environment [Unpublished master’s thesis]. University of Illinois at Urbana-Champaign.

- Roussou, M., & Slater, M. (2005). A virtual playground for the study of the role of interactivity in virtual learning environments [Paper presentation]. Presence 2005 8th Annual International Workshop on Presence.

- Ruggiero, D., & Mong, C. J. (2015). The teacher technology integration experience: Practice and reflection in the classroom. Journal of Information Technology Education: Research, 14, 161–178. https://doi.org/10.28945/2227

- Sandholtz, J. H., Ringstaff, C., & Dwyer, D. C. (1997). Teaching with technology: Creating student-centered classrooms. Teachers College Press.

- Shifflet, R., & Weilbacher, G. (2015). Teacher beliefs and their influence on technology use: A case study. Contemporary Issues in Technology and Teacher Education, 15(3), 368–394.

- Southgate, E., Smith, S. P., Cividino, C., Saxby, S., Kilham, J., Eather, G., Scevak, J., Summerville, D., Buchanan, R., & Bergin, C. (2019). Embedding immersive virtual reality in classrooms: Ethical, organisational and educational lessons in bridging research and practice. International Journal of Child-Computer Interaction, 19, 19–29. https://doi.org/10.1016/j.ijcci.2018.10.002

- Steel Crate Games. (2015). Keep talking and nobody explodes [Virtual Reality Content]. Steel Crate Games. https://keeptalkinggame.com/

- Swan, K. (2005). A constructivist model for thinking about learning online. In J. Bourne & J. C. Moore (Eds.), Elements of quality online education: Engaging communities (pp. 13–31). The Sloan Consortium.

- Syverson, M. A., & Slatin, J. (2010). Evaluating learning in virtual environments. Learning Record. http://www.learningrecord.org/caeti.html

- The Body VR LLC. (2016). The Body VR: Journey inside a cell [Virtual Reality Content]. The Body VR. https://thebodyvr.com/

- Vannatta, R. A., & Fordham, N. (2004). Teacher dispositions as predictors of classroom technology use. Journal of Research on Technology in Education, 36(3), 253–271. https://doi.org/10.1080/15391523.2004.10782415

- Velev, D., & Zlateva, P. V. (2017). Virtual reality challenges in education and training. International Journal of Learning and Teaching, 3(1), 33–37.

- Vogel, J. J., Vogel, D. S., Cannon-Bowers, J., Bowers, C. A., Muse, K., & Wright, M. (2006). Computer gaming and interactive simulations for learning: A meta-analysis. Journal of Educational Computing Research, 34(3), 229–243. https://doi.org/10.2190/FLHV-K4WA-WPVQ-H0YM

- Voogt, J., & Roblin, N. P. (2012). A comparative analysis of international frameworks for 21st century competences: Implications for national curriculum policies. Journal of Curriculum Studies, 44(3), 299–321. https://doi.org/10.1080/00220272.2012.668938

- Washor, E., & Mojkowski, C. (2013). Leaving to learn: How out-of-school learning increases student engagement and reduces dropout rates. Heinemann.

- Wiggins, G. (1990). The case for authentic assessment. Practical Assessment, Research & Evaluation, 2(2), 2.

- Winn, W. (1993, August). A conceptual basis for educational applications of virtual reality. Human Interface Technology Laboratory. http://www.hitl.washington.edu/research/learning_center/winn/winn-paper.html~

- Wolpert-Gawron, H. (2017). Just ask us: Kids speak out on student engagement. Corwin Press.

- Yin, R. K. (2014). Case study research: Design and methods (5th ed.). SAGE Publications, Inc.

- Zhu, K. (2016). Virtual reality and augmented reality for education: Panel. In M. Aoki & Z. Pan (Eds.), SIGGRAPH ASIA 2016 symposium on education: Talks (pp. 1–2). Association for Computing Machinery.