ABSTRACT

LncRNA plays an important role in many biological processes and in disease progression by binding to related proteins. However, experimental methods for studying lncRNA-protein interactions are time-consuming and expensive. Although a few models have been designed to predict ncRNA-protein interactions, they share some common drawbacks that limit their predictive performance. In this study, we present a model called HLPI-Ensemble designed specifically for human lncRNA-protein interactions. HLPI-Ensemble adopts an ensemble strategy based on three mainstream machine learning algorithms, Support Vector Machines (SVM), Random Forests (RF) and Extreme Gradient Boosting (XGB), to generate HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble, respectively. The results of 10-fold cross-validation show that HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble achieved AUCs of 0.95, 0.96 and 0.96, respectively, in the test dataset. Furthermore, we compared the performance of the HLPI-Ensemble models with that of previous models on an external validation dataset. The results show that the HLPI-Ensemble models produce far fewer false positives (FPs) than the previous models, and that the other evaluation indicators of the HLPI-Ensemble models are also higher than those of the previous models. This further shows that the HLPI-Ensemble models are superior to previous models in predicting human lncRNA-protein interactions. HLPI-Ensemble is publicly available at: http://ccsipb.lnu.edu.cn/hlpiensemble/.

Introduction

Recent studies have shown that only a small number of human transcripts are involved in protein translation. RNAs that lack open reading frames and therefore cannot be translated into proteins are called noncoding RNA (ncRNA) [Citation1]. Long non-coding RNA (lncRNA) is a type of ncRNA with a length between 200 nt and 100,000 nt, and it is the main component of the transcripts. Many studies have shown that lncRNA plays an important role in many biological and pathological processes, for example, regulation of gene expression [Citation2], transcription and post-translational regulation [Citation3], chromatin modification [Citation4,Citation5], disease progression [Citation6] and development [Citation7–Citation10]. In general, lncRNA performs its biological function by binding to the relevant protein [Citation11,Citation12]. Some experimental methods have been developed to identify lncRNA-protein interactions. For example, RNA immunoprecipitation and mass spectrometry are used to identify lncRNA-binding proteins [Citation13]. However, the experimental study of lncRNA-protein interactions requires a great deal of resources. Fortunately, accumulated experimental data have made it possible to predict lncRNA-protein interactions by computational methods.

In recent years, several computational methods have been proposed to predict lncRNA-protein interactions. In 2011, Bellucci et al. developed a computational model called CatRAPID [Citation14]. The model introduced biological properties of RNA and protein, such as the secondary structure of RNA, the three-dimensional structure of protein, the hydrogen bonds between RNA and protein and the van der Waals forces. In the same year, Muppirala et al. introduced a model called RPISeq [Citation15], which contained two sub-models trained by support vector machine (SVM) [Citation16] and random forest (RF) [Citation17], respectively. RPISeq applied only sequence information to predict RNA-protein interactions. In 2013, Wang et al. proposed a classifier based on naive Bayes and extended naive Bayes [Citation18]. The model introduced the properties of RNA and protein to produce triple features. Later, Lu et al. proposed a model called lncPro, which predicted lncRNA-protein interactions by Fisher's linear discriminant analysis of amino acid and nucleotide sequences [Citation19]. Recently, Suresh et al. developed an SVM classification model called RPI-Pred, which extracted RNA secondary structure features and protein three-dimensional structural features from RNA and protein sequences, respectively [Citation20]. In 2016, Ge et al. developed a lncRNA-protein bipartite network inference (LPBNI) method. LPBNI used only the known lncRNA-protein interactions to extrapolate potential lncRNA-protein interactions [Citation21]. In addition, Pan et al. developed a model called IPMiner, which applies a stacked autoencoder to learn high-level features for predicting RNA-protein interactions from raw sequence composition features [Citation22].

Although several models can predict lncRNA-protein interactions, they share some common drawbacks. First, previous studies attempted to construct a single model to predict the RNA-protein interactions of all species. However, the homology of lncRNA is very weak, and lncRNAs differ greatly between species. Only 12% of human ncRNA can be found in other species. In addition, the training data for most previous models contained large amounts of mRNA. mRNA is a class of RNA that encodes proteins, which makes it completely different from lncRNA. Training a model on mRNA-protein data to predict lncRNA-protein interactions may reduce the accuracy of the model. Second, most of the previous models had a high false positive risk. The experimentally identified lncRNA-protein interactions account for only a small fraction of all possible pairs. However, most previous models predicted almost all of the unknown lncRNA-protein pairs as positive, which indicates that many of these predictions are false positives (see “External validation” section). Third, the accuracy of previous prediction models was not high enough. Most of them applied only one type of lncRNA feature and one type of protein feature, which limited the comprehensiveness of the predictions.

In this study, we propose a new lncRNA-protein interaction prediction model called HLPI-Ensemble to solve the above problems. HLPI-Ensemble is specially designed for predicting human lncRNA-protein interactions and is trained on human lncRNA-protein interaction data. We apply a random pairing strategy to generate negative samples for lncRNA-protein interactions. HLPI-Ensemble is based on the ensemble strategy, which not only improves the prediction performance of the model but also prevents the model from overfitting. Furthermore, HLPI-Ensemble employs three mainstream machine learning algorithms, SVM, RF and Extreme Gradient Boosting (XGB) [Citation23], within the ensemble strategy to generate HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble, respectively. HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble achieve AUCs of 0.95, 0.96 and 0.96, respectively, in the test dataset. Moreover, we compare the performance of the HLPI-Ensemble models with that of previous models by external validation. The results show that the HLPI-Ensemble models produce far fewer false positives (FPs) than the previous models. In addition, the other evaluation indicators of the HLPI-Ensemble models are higher than those of the previous models. HLPI-Ensemble is publicly available at: http://ccsipb.lnu.edu.cn/hlpiensemble/.

Results

Performance evaluation

In order to evaluate the performance of HLPI-Ensemble comprehensively, we use the area under the curve (AUC), Accuracy (ACC), Recall (REC), Specificity (SPEC), Positive Predictive Value (PPV) and F1 score as the evaluation indicators of HLPI-Ensemble.

AUC is the area under the ROC curve, which is an evaluation indicator dedicated to the classification model [Citation24]. The ROC curve is based on the true category of the sample and the probability of prediction [Citation25]. The greater the value of AUC, the better the predictive power of the model. If AUC = 1, it indicates that the model has perfect predictive performance. If AUC = 0.5, it implies that the model's predictions are random. The reasonable range of AUC is between 0.5 and 1.
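As an illustration of this definition, the AUC can be computed directly from labels and predicted probabilities as the fraction of positive-negative pairs in which the positive sample receives the higher score. The following is a minimal sketch and not the code used in this study; the function name `auc_from_scores` is hypothetical, and ties are counted as half a win by assumption.

```python
def auc_from_scores(labels, scores):
    """Rank-based AUC: probability that a random positive outscores a random negative."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # assumption: a tie counts as half a win
    return wins / (len(positives) * len(negatives))
```

A perfectly separating score vector gives 1, and a constant score vector gives 0.5, matching the two reference values discussed above.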

In addition to AUC, common evaluation indicators for classification models are ACC, REC, SPEC, PPV and F1 score. The formulas for these indicators are shown below.
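The typeset formulas are not reproduced in this version of the text. The standard definitions of these indicators, written with the symbols P, N, TP, TN, FP and FN used in this section, are given here as a reconstruction:

```latex
\[
\begin{aligned}
\mathrm{ACC}  &= \frac{TP + TN}{P + N}, \\
\mathrm{REC}  &= \frac{TP}{P} = \frac{TP}{TP + FN}, \\
\mathrm{SPEC} &= \frac{TN}{N} = \frac{TN}{TN + FP}, \\
\mathrm{PPV}  &= \frac{TP}{TP + FP}, \\
F_1           &= \frac{2 \cdot \mathrm{PPV} \cdot \mathrm{REC}}{\mathrm{PPV} + \mathrm{REC}}
               = \frac{2\,TP}{2\,TP + FP + FN}.
\end{aligned}
\]
```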

In the above formulas, P represents the number of positive samples, and N represents the number of negative samples. In our study, we define known interacting lncRNA-protein pairs as positive samples, whereas randomized lncRNA-protein pairs are negative samples. TP represents true positives, the number of positive samples correctly classified by the classifier. TN represents true negatives, the number of negative samples correctly classified by the classifier. FP stands for false positives, the number of negative samples incorrectly classified as positive. Similarly, FN stands for false negatives, the number of positive samples incorrectly classified as negative. ACC is the proportion of all samples that are classified correctly [Citation26]. REC, also known as sensitivity, measures the proportion of positive samples that are correctly identified [Citation27]. Similarly, SPEC measures the proportion of negative samples that are correctly identified [Citation27]. PPV, also known as precision (PRE), is the proportion of positive predictions that are true positives [Citation27]. F1 score is the harmonic mean of precision and sensitivity [Citation27]. Together, these evaluation indicators measure the performance of the model comprehensively.
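The indicators defined in this section can be computed from the four confusion counts. The sketch below is illustrative only and is not the evaluation code of this study; the function names `binarize` and `classification_metrics` are hypothetical. The default cut-off of 0.5 follows the convention described in the "External validation" section, where a probability greater than 0.5 is treated as positive.

```python
def binarize(scores, threshold=0.5):
    """Label a score as positive (1) only when it is strictly above the threshold."""
    return [1 if s > threshold else 0 for s in scores]


def classification_metrics(y_true, y_pred):
    """Return confusion counts and ACC, REC, SPEC, PPV, F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(numerator, denominator):
        # Undefined ratios (empty denominator) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "TP": tp, "TN": tn, "FP": fp, "FN": fn,
        "ACC": ratio(tp + tn, len(pairs)),
        "REC": ratio(tp, tp + fn),
        "SPEC": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
        "F1": ratio(2 * tp, 2 * tp + fp + fn),
    }
```

For example, `classification_metrics(y_true, binarize(probabilities))` returns all indicators for one model at the 0.5 cut-off.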

Model performance

HLPI-Ensemble includes HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble. Each ensemble model contains nine types of sub-models trained on different feature combinations. Table 1 shows the prediction results of each HLPI-Ensemble sub-model in NPTest1.

Table 1. The prediction results of SVM, RF and XGB by Pse-in-One feature combinations in NPTest1.

From Table 1 we can see that different feature combinations have different advantages. Taking HLPI-SVM Ensemble as an example, the model trained on the combination of the lncRNA's DAC and the protein's PC-PseAAC-General has the highest AUC of all SVM models. However, the model with the highest F1 score is trained on the combination of the lncRNA's Kmer and the protein's PC-PseAAC-General, and the model with the highest REC is trained on the combination of the lncRNA's DAC and the protein's Kmer. In short, different feature combinations improve different indicators. Therefore, an ensemble of these models trained on different feature combinations should further improve the overall performance [Citation28].

Next, we apply ensemble strategies to each class of HLPI-Ensemble sub-models. There are two types of ensemble strategies: the average strategy and the linear combination strategy. To get more reliable results, we use 10-fold cross-validation to compare the performance of these two strategies in NPTest2. Table 2 shows the performance of the average strategy and the linear strategy, verified by 100 times 10-fold CV in NPTest2.

Table 2. The performance of average strategy and linear strategy that verified by 100 times 10-fold CV in NPTest2.

All three types of models are used to compare the ensemble strategies in order to avoid chance results. From Table 2 we can see that all the indicators of the two strategies are very close in all HLPI-Ensemble models. The AUC is adopted as the main evaluation indicator of the ensemble models. The AUC sd is the standard deviation of the AUC over the 100 times 10-fold cross-validation. The other indicators are calculated at the best threshold. Although the two strategies perform similarly across the three types of ensemble models, the AUC of the average ensemble strategy is higher than that of the linear ensemble strategy in HLPI-SVM Ensemble and HLPI-XGB Ensemble. However, in HLPI-RF Ensemble, the linear ensemble strategy outperforms the average ensemble strategy. This shows that different algorithms are suited to different ensemble strategies. Based on the performance of all the indicators, the ensemble strategies chosen for HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble are the average ensemble strategy, the linear ensemble strategy and the average ensemble strategy, respectively. Finally, HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble obtained AUCs of 0.9557, 0.9629 and 0.9644, respectively.

External validation

The performance of sub-models and HLPI-Ensemble models

Next, we introduce the external validation dataset to compare the performance of the HLPI-Ensemble models with that of the sub-models. The external validation dataset is extracted from the lncRNome database [Citation29]. We remove the overlap between lncRNome and NPInter v2.0. Table 3 shows the performance of all sub-models, and Table 4 shows the performance of all HLPI-Ensemble models in external validation.

Table 3. The performance of all sub-models in external validation.

From the comparison between Table 3 and Table 4, we can see that the AUCs of HLPI-SVM Ensemble and HLPI-XGB Ensemble are higher than those of their corresponding sub-models. Although the AUC of HLPI-RF Ensemble is lower than that of its sub-model, the ACC, SPEC, PPV and F1 of HLPI-RF Ensemble are all higher than those of its sub-models. This indicates that the HLPI-Ensemble models have better prediction performance than a single sub-model. In addition, from Table 3 we find that the REC of the sub-models is too high and the SPEC of the sub-models is too low. In short, the sub-models are biased towards predicting unknown data as positive. Further, the high FP confirms that the sub-models are at risk of false positives. A high FP will mislead researchers and lead to a waste of manpower and material resources. Comparing Table 3 and Table 4, we find that the REC of the HLPI-Ensemble models is within a reasonable range and the SPEC of the HLPI-Ensemble models is significantly higher than that of the sub-models. The FP of the HLPI-Ensemble models is significantly lower than that of the sub-models, so the HLPI-Ensemble models have a lower false positive rate. In addition, the PPV, F1 score, TP and TN of the HLPI-Ensemble models are superior to those of the sub-models. This shows that applying ensemble strategies to multiple single models can improve the overall performance of the model. In summary, the ensemble strategy can improve the performance of the model and reduce the risk of false positives. In addition, the scope of the ensemble strategy is not limited to the three machine learning algorithms mentioned here, and it has a wide range of applications in various fields.

Table 4. The performance of all HLPI-Ensemble models in external validation.

The comparison of HLPI-Ensemble models with previous models

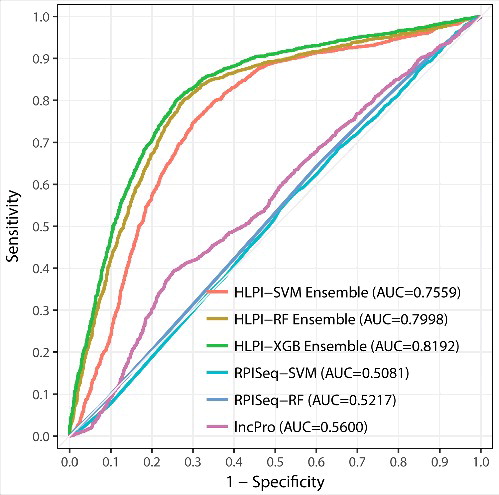

Moreover, we compare the HLPI-Ensemble models with three popular computational models: RPI-Pred [Citation20], lncPro [Citation19] and RPISeq [Citation15]. Of these models, RPISeq contains both an SVM model (RPISeq-SVM) and an RF model (RPISeq-RF), which we test separately [Citation15]. Figure 1 shows the performance of the HLPI-Ensemble models and the previous models tested on lncRNome [Citation29].

Figure 1. The ROC curves of HLPI-SVM Ensemble, HLPI-RF Ensemble, HLPI-XGB Ensemble, RPISeq-SVM, RPISeq-RF and lncPro are expressed in red, brown, green, blue, navy blue and pink, respectively. The maximum area under the curve (AUC) represents the best performance of the model.

In Figure 1, we can see that the AUC values of HLPI-SVM Ensemble, HLPI-RF Ensemble, HLPI-XGB Ensemble, RPISeq-SVM, RPISeq-RF and lncPro are 0.7559, 0.7998, 0.8192, 0.5081, 0.5217 and 0.5600, respectively. It is clear that the AUCs of the HLPI-Ensemble models are much higher than those of the other prediction methods on the lncRNome dataset. Unfortunately, RPI-Pred only provides results in binary classification format, so we cannot draw its ROC curve. We convert the probability-format results provided by RPI-Pred, lncPro, RPISeq-SVM and RPISeq-RF into binary classification format with 0.5 as the boundary: a probability greater than 0.5 is treated as positive and a probability less than 0.5 as negative [Citation15]. Based on these binary classification results, we calculate all the indicators of HLPI-Ensemble and the other models (see Table 5).

Table 5. The performance of HLPI-Ensemble, RPI-Pred, lncPro, RPISeq-SVM and RPISeq-RF in lncRNome database.

As shown in Table 5, the three HLPI-Ensemble models perform better than the other methods, which indicates that HLPI-Ensemble has a clear advantage in predicting human lncRNA-protein interactions. Furthermore, we find that all models except the HLPI-Ensemble models have a serious false positive problem. The FP values show that the previous models are biased toward predicting unknown lncRNA-protein pairs as positive. In addition, the extreme imbalance between REC and SPEC also shows that there is a high false positive risk in the previous models. The high FP is the main reason for their poor prediction performance. Compared to the previous models, the three HLPI-Ensemble models greatly reduce false positives. The REC and SPEC of the HLPI-Ensemble models are better than those of the previous models. Further, the AUC of the HLPI-Ensemble models surpasses that of all previous models, which suggests that the HLPI-Ensemble models have strong applicability in predicting potential human lncRNA-protein interactions. In addition, the other indicators of the HLPI-Ensemble models are higher than those of the previous models, which further shows the superiority of the ensemble strategy. In short, the results of the external validation confirm the robustness of the ensemble strategy and the practical value of HLPI-Ensemble in predicting human lncRNA-protein interactions.

Discussion

The success of HLPI-Ensemble may come from the following factors. First, HLPI-Ensemble is specially designed for human lncRNA-protein interaction prediction. None of the previous models distinguishes the source of the RNA. They try to build a model that can predict the RNA-protein associations of all species. Unlike the previous models, HLPI-Ensemble focuses on human lncRNA-protein interaction prediction. The 8120 experimentally validated human lncRNA-protein interactions used to construct HLPI-Ensemble are extracted from NPInter. Furthermore, we apply three types of feature extraction algorithms each for lncRNA and protein, which produce nine types of feature combinations. These nine types of feature combinations summarize the association between human lncRNA and protein. Compared with the previous models, HLPI-Ensemble has stronger specificity in predicting human lncRNA-protein interactions.

Second, the ensemble strategy and the random pairing strategy are applied to effectively reduce the false positive rate. As shown in the external validation, previous models predict most human lncRNA-protein pairs as positive. The reason for the high false positive rate may be the negative sample selection of the previous models. Most of the previous models chose RNAs that could not bind to proteins and proteins that could not bind to RNAs as negative samples. Biology researchers, however, are more concerned with the interactions between RNAs capable of binding proteins and RNA-binding proteins, which are different from the negative samples of previous models. This results in the high false positive rate of the previous models. HLPI-Ensemble differs from the previous models in that it introduces the random pairing strategy to generate negative samples. The positive samples of HLPI-Ensemble are experimentally confirmed interaction pairs of lncRNA and protein. Although the negative samples provided by the random pairing strategy are not necessarily accurate, they can effectively reduce the risk of false positives. Researchers are more concerned with positive results than with negative results, and false positive results waste experimental resources or even mislead research. HLPI-Ensemble can help researchers avoid false positives and correctly predict unknown human lncRNA-protein relationships. Finally, the ensemble strategy improves the performance of the overall model. It can be seen from Table 3 and Table 4 that most HLPI-Ensemble models perform better than their corresponding sub-models. Although the sub-models in Table 3 also have a false positive problem, the comparison between Table 3 and Table 4 shows that the FP of all HLPI-Ensemble models is significantly lower than that of the corresponding sub-models. This suggests that the ensemble strategy can effectively reduce false positives. Because the ensemble strategy integrates the advantages of sub-models trained on different feature combinations, the ensemble models perform better than the previous single models. In summary, compared to the previous models, HLPI-Ensemble has good robustness in the prediction of human lncRNA-protein interactions.

Of course, HLPI-Ensemble has some limitations that need to be addressed in the future. For example, the known human lncRNA-protein interaction data are still insufficient. More human lncRNA-protein interaction data can further improve the performance of HLPI-Ensemble. In addition, the ensemble strategy is based on multiple sub-models, which requires substantial computational resources. As hardware continues to improve, this problem will be alleviated.

To help researchers further study human lncRNA-protein interactions, we have created a free website for HLPI-Ensemble (http://ccsipb.lnu.edu.cn/hlpiensemble/). In addition, all the datasets of this study can be downloaded from the website. It should be noted that HLPI-Ensemble is designed to predict human lncRNA-protein interactions and does not support lncRNA data or protein data from other species.

Materials and methods

Dataset

We use the NPInter v2.0 database [Citation30] as the benchmark dataset and the lncRNome database [Citation29] as the external validation dataset. The construction of these two datasets is described below.

Benchmark dataset

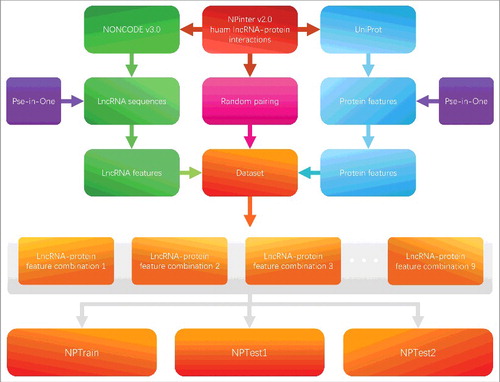

The data used to construct HLPI-Ensemble come from the NPInter v2.0 database [Citation30]. NPInter is a database that integrates experimentally determined interactions between ncRNA and multiple biomolecules. NPInter covers the majority of known human lncRNA-protein interactions. Figure 2 shows the process of constructing the benchmark dataset from NPInter v2.0.

As shown in Figure 2, the human lncRNA-protein interactions are extracted from the NPInter v2.0 database. The process of filtering and cleaning the data is strictly based on three principles. First, the species of the lncRNA-protein entry must be human. Second, the lncRNA-protein interaction type must be “binding.” Finally, the entry must have a PubMed ID that can be queried, meaning that the entry is backed by a published experiment. After data cleaning, we obtain 8120 experimentally validated human lncRNA-protein interactions. The lncRNA data of NPInter are extracted from the NONCODE v3.0 database [Citation31]. The NONCODE database provides one of the most complete collections and annotations of lncRNA. The protein data of NPInter are extracted from the UniProt database [Citation32]. UniProt covers almost all known protein information. We extract lncRNA sequences and protein sequences from NONCODE and UniProt, respectively. Next, the lncRNA features and protein features are extracted from the lncRNA sequences and protein sequences, respectively, by Pse-in-One. At the same time, the random pairing strategy is applied to generate negative samples from the known human lncRNA-protein interactions. In the random pairing strategy, the experimentally validated lncRNA-protein interaction pairs are split up and randomly re-paired. Then, the randomly matched pairs that duplicate positive samples (known interaction pairs) are removed. The remaining random pairs are treated as the negative dataset. To keep the data balanced, the sample size of the negative dataset generated by the random pairing strategy is equal to the sample size of the positive dataset. Finally, we divide the data equally into three parts: NPTrain, NPTest1 and NPTest2. NPTrain is the training dataset. NPTest1 is used to test the performance of the sub-models. NPTest2 is used to compare the performance of the different ensemble strategies.
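The random pairing strategy described above can be sketched as follows. This is an illustrative reading of the description, not the script used in this study: the function name `random_pairing_negatives`, the `seed` and `max_rounds` parameters, and the choice to reshuffle until the negative set matches the positive set in size are all assumptions.

```python
import random


def random_pairing_negatives(positive_pairs, seed=0, max_rounds=1000):
    """Shuffle the protein column of the known pairs and keep pairs that are not known positives."""
    rng = random.Random(seed)
    positives = set(positive_pairs)
    target = len(positives)              # balanced data: as many negatives as positives
    lncrnas = [lnc for lnc, _ in positive_pairs]
    proteins = [prot for _, prot in positive_pairs]

    negatives = set()
    for _ in range(max_rounds):
        rng.shuffle(proteins)            # break up the known pairs and re-pair at random
        for pair in zip(lncrnas, proteins):
            if pair not in positives:    # drop random pairs that duplicate a positive sample
                negatives.add(pair)
                if len(negatives) == target:
                    return sorted(negatives)
    raise RuntimeError("could not generate enough negative pairs")
```

Pairs produced this way are only presumed negatives, which is consistent with the caveat in the Discussion that randomly paired negative samples are not necessarily accurate.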

External validation dataset

The dataset used for external validation is extracted from the lncRNome database [Citation29]. The lncRNome database is a comprehensive knowledge base for human lncRNA. The process of generating the external validation dataset is similar to the process of generating the benchmark dataset, with the additional step of removing the overlap between lncRNome and NPInter v2.0. First, we run a crawler script that we wrote ourselves to collect the human lncRNA-protein entries in the lncRNome database. Next, we find each lncRNA's corresponding entry in the NPInter v2.0 database by converting the Ensembl ID stored in the lncRNome database through the NONCODE database. The lncRNome entries that can be converted to NPInter entries are overlapping data. The overlapping data are removed to ensure the reliability and fairness of the test results. In addition, some lncRNome entries are incomplete, for example lacking an Ensembl ID or sequence information. These entries are invalid and can be used neither for building models nor for evaluating them, so they are also removed. After filtering out the invalid data, we extract 2729 pairs of human lncRNA-protein interactions from lncRNome and generate 2729 negative samples by applying the random pairing strategy.

Data features

The data features are generated by Pse-in-One. Pse-in-One is a popular feature extraction tool that extracts the features of DNA, RNA and protein sequences by pseudo components [Citation33]. We use Pse-in-One to extract lncRNA features and protein features. The features of lncRNA and protein are matched to produce nine types of feature combinations for sub-model training. The process of lncRNA feature and protein feature extraction is described in the following paragraphs. For details, see Supplementary Materials 1.

LncRNA features

A variety of feature extraction methods are integrated in Pse-in-One. We apply three of them: Kmer, DAC and PC-PseDNC-General. Of these, Kmer is the simplest way to represent lncRNA. Kmer represents the frequency of occurrence of k adjacent nucleotides in the lncRNA sequence [Citation34]. A total of 16 lncRNA features are extracted by Kmer. Another algorithm for generating lncRNA features is dinucleotide-based auto covariance (DAC) [Citation35, Citation36]. DAC measures the correlation of the same physicochemical index between two dinucleotides separated by a distance of lag along the lncRNA sequence. We extract 44 lncRNA features with DAC. The last algorithm for generating lncRNA features is the general parallel correlation pseudo dinucleotide composition (PC-PseDNC-General) [Citation37]. Pseudo dinucleotide composition is a common class of feature extraction algorithms for nucleic acid sequences, based on various physicochemical indices of the sequence. We extract the 22 physicochemical indices built into PC-PseDNC-General as lncRNA features.
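To make the Kmer representation concrete, the sketch below counts overlapping k-mers and normalizes them to frequencies. It is a stand-alone illustration, not Pse-in-One itself; the function name `kmer_frequencies` is hypothetical, and k = 2 is an assumption inferred from the feature count (4^2 = 16 for a four-letter RNA alphabet). Windows containing characters outside the alphabet are skipped by assumption.

```python
from itertools import product


def kmer_frequencies(sequence, k=2, alphabet="ACGU"):
    """Return the frequency of every possible k-mer, in lexicographic order of the alphabet."""
    seq = sequence.upper()
    kmers = ["".join(letters) for letters in product(alphabet, repeat=k)]
    counts = dict.fromkeys(kmers, 0)

    total = 0
    for i in range(len(seq) - k + 1):
        window = seq[i:i + k]
        if window in counts:          # skip windows with letters outside the alphabet
            counts[window] += 1
            total += 1

    if total == 0:
        return [0.0] * len(kmers)
    return [counts[kmer] / total for kmer in kmers]
```

With the 20-letter amino acid alphabet and k = 2, the same function yields 20^2 = 400 values, which matches the number of protein Kmer features reported in the next subsection.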

Protein features

The process of generating protein features is similar to the process of generating lncRNA features. We apply three protein sequence feature extraction methods: Kmer, AC and PC-PseAAC-General. The principle of the Kmer algorithm is described above and is not repeated here [Citation34]. A total of 400 protein features are extracted by Kmer. The AC approach measures the correlation of the same property between two residues separated by a distance of lag along the protein sequence [Citation38]. We extract 1094 protein features with AC. General parallel correlation pseudo amino acid composition (PC-PseAAC-General) is a feature extraction algorithm for measuring the physicochemical properties of amino acid sequences [Citation38]. We extract 22 protein features from PC-PseAAC-General based on the default parameters of Pse-in-One.

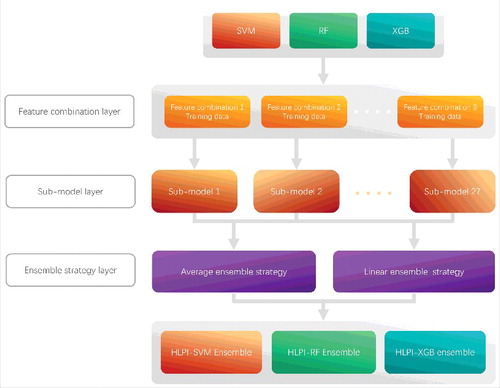

Ensemble model

The performance of a single predictive model is limited by the features of the model and its own predictive ability. Applying ensemble strategies to multiple single models can improve the overall performance of the model. In addition, the ensemble model covers a wider range of features and is more robust than a single model. Here, we introduce the average ensemble strategy and the linear ensemble strategy to further improve the model performance. HLPI-Ensemble employs three machine learning algorithms, Support Vector Machines (SVM), Random Forests (RF) and Extreme Gradient Boosting (XGB), to implement the ensemble strategy and construct HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble. Figure 3 shows the process of the ensemble strategy implemented in these three models.

As shown in Figure 3, HLPI-Ensemble consists of three parts: the Feature combination layer, the Sub-model layer and the Ensemble strategy layer. First, the three types of lncRNA features and the three types of protein features are combined in the Feature combination layer, which generates nine types of feature combinations. Next, each of the three machine learning algorithms, SVM, RF and XGB, is trained on each of the nine feature combinations, which generates a total of 27 sub-models in the Sub-model layer. Finally, in the Ensemble strategy layer, we choose either the average ensemble strategy or the linear ensemble strategy for each HLPI-Ensemble model according to the performance of the two strategies.
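The three layers can be sketched in a few lines. The code below enumerates the feature combinations and sub-models named in this section and shows one plausible reading of the two ensemble strategies. It is a sketch under assumptions: the function names are hypothetical, and the `weights` and `intercept` of the linear strategy are placeholders, since how the linear combination is fitted is not described in this section.

```python
from itertools import product

LNCRNA_FEATURES = ["Kmer", "DAC", "PC-PseDNC-General"]
PROTEIN_FEATURES = ["Kmer", "AC", "PC-PseAAC-General"]
ALGORITHMS = ["SVM", "RF", "XGB"]

# Feature combination layer: 3 lncRNA feature types x 3 protein feature types.
FEATURE_COMBINATIONS = list(product(LNCRNA_FEATURES, PROTEIN_FEATURES))
# Sub-model layer: each algorithm is trained on every feature combination.
SUB_MODELS = list(product(ALGORITHMS, FEATURE_COMBINATIONS))


def average_ensemble(sub_model_probs):
    """Average strategy: mean of the sub-model probabilities for each sample."""
    n_models = len(sub_model_probs)
    return [sum(column) / n_models for column in zip(*sub_model_probs)]


def linear_ensemble(sub_model_probs, weights, intercept=0.0):
    """Linear strategy: weighted sum of sub-model probabilities (weights are hypothetical)."""
    if len(weights) != len(sub_model_probs):
        raise ValueError("one weight per sub-model is required")
    return [
        intercept + sum(w * p for w, p in zip(weights, column))
        for column in zip(*sub_model_probs)
    ]
```

Here `sub_model_probs` holds one list of predicted probabilities per sub-model, all over the same samples. With equal weights of 1/9 and a zero intercept, the linear strategy reduces to the average strategy.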

Then, we apply 10-fold cross-validation (10-fold CV) to test the performance of the models. 10-fold CV is a common evaluation method for classification models. It randomly divides the dataset into 10 parts, uses nine of them to train the model and uses the remaining one as the test set. This process is repeated until each part has served as the test set once. Finally, the average of the 10 prediction results is taken as the final evaluation result. In addition, we also apply 10-fold CV in the parameter tuning process. However, the result of a single 10-fold CV depends on the random partition. In order to obtain stable results, we repeat each 10-fold CV 100 times.
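The repeated 10-fold CV procedure can be sketched with index bookkeeping alone. The following is a minimal illustration, not the code used in this study; the function names and the `evaluate` callback (which would train on the training indices and return, for example, the AUC on the test indices) are hypothetical.

```python
import random


def repeated_kfold_indices(n_samples, n_folds=10, n_repeats=100, seed=0):
    """Yield (repeat, fold, train_indices, test_indices) for repeated k-fold CV."""
    if n_samples < n_folds:
        raise ValueError("need at least one sample per fold")
    rng = random.Random(seed)
    for repeat in range(n_repeats):
        order = list(range(n_samples))
        rng.shuffle(order)                                  # new random partition per repeat
        folds = [order[i::n_folds] for i in range(n_folds)]
        for fold_id, test_idx in enumerate(folds):
            train_idx = [i for j, fold in enumerate(folds) if j != fold_id for i in fold]
            yield repeat, fold_id, train_idx, test_idx


def mean_cv_score(n_samples, evaluate, **kwargs):
    """Average a user-supplied score over every fold of every repeat."""
    scores = [
        evaluate(train_idx, test_idx)
        for _, _, train_idx, test_idx in repeated_kfold_indices(n_samples, **kwargs)
    ]
    return sum(scores) / len(scores)
```

The standard deviation of the per-repeat averages would give a stability measure comparable to the "AUC sd" reported in the Results.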

Sub-model and tuning parameters

HLPI-Ensemble consists of HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble. Each class of ensemble model is supported by nine types of sub-models. All sub-model parameters are optimized.

The sub-models of HLPI-SVM Ensemble are based on the SVM algorithm. The core idea of SVM is to construct a hyperplane or a set of hyperplanes in a high- or infinite-dimensional space that can be used for classification, regression or other tasks [Citation16]. The sub-models of HLPI-SVM Ensemble use the radial basis function (RBF) kernel. In addition, random search is applied to tune the parameters. Further, the tuned parameters of each model are verified by 100 times 10-fold CV to ensure the reliability of the results. The parameter set with the highest AUC value is chosen as the final parameter set of the sub-model. All the sub-models of HLPI-SVM Ensemble go through the above optimization process.

The sub-models of HLPI-RF Ensemble are based on the RF algorithm. RF is a classifier that uses multiple decision trees to train on and predict samples [Citation17]. The parameter tuning process of the HLPI-RF Ensemble sub-models is similar to that of the HLPI-SVM Ensemble sub-models, except that grid search is used to find the optimal combination of parameters. Each parameter combination is validated by 100 repetitions of 10-fold CV, and the combination with the highest AUC value is chosen as the final parameter setting of the sub-model. All sub-models of HLPI-RF Ensemble undergo this optimization process.

The sub-models of HLPI-XGB Ensemble are based on the XGB algorithm. XGB has become popular in recent years; it improves on the gradient boosting algorithm and achieves a good improvement in performance [Citation23, Citation39]. The XGB algorithm has several parameters that need to be adjusted, and traversing all combinations of parameters takes a lot of computational time. To shorten the search time, a fixed-parameter technique is used to quickly narrow the search range of the parameters. First, the XGB hyperparameters are initialized based on our past modeling experience. Then, grid search is used to find the optimal value of the first parameter while the other parameters remain fixed. All search results are validated by 3 repetitions of 10-fold CV. Once the optimal value of the first parameter is found, the first parameter is set to that value. The second parameter is then searched in the same way, and the search stops when an optimal value has been found for every parameter, which yields a collection of the optimal parameter values. However, the parameter combination obtained by the fixed-parameter technique may not be a globally optimal solution. To further enhance the reliability of the model parameters, we carry out a grid search within the narrowed parameter ranges. In this process, all results are verified by 100 repetitions of 10-fold CV. The combination of parameters with the highest AUC value is chosen as the final parameter setting of the sub-model. All sub-models of HLPI-XGB Ensemble undergo this optimization process.
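The two-stage search described above can be sketched as a one-parameter-at-a-time pass followed by a small grid search around its result. The parameter names (`max_depth`, `eta`), the candidate grids, the "neighbouring values" definition of the narrowed range, and the toy objective are all assumptions for illustration; in practice the first stage would score candidates with 3 repetitions of 10-fold CV and the second with 100.

```python
from itertools import product


def coordinate_search(evaluate, initial, grids):
    """Tune one parameter at a time while all other parameters stay fixed."""
    params = dict(initial)
    for name, candidates in grids.items():
        params[name] = max(candidates, key=lambda v: evaluate({**params, name: v}))
    return params


def local_grid_search(evaluate, center, grids):
    """Full grid search over each parameter's optimum and its immediate neighbours in the grid."""
    local = {}
    for name, candidates in grids.items():
        i = candidates.index(center[name])
        local[name] = candidates[max(0, i - 1): i + 2]
    names = list(local)
    combos = [{**center, **dict(zip(names, values))}
              for values in product(*(local[n] for n in names))]
    return max(combos, key=evaluate)


def toy_objective(p):
    """Stand-in for a CV-based AUC; peaks at max_depth = 6 and eta = 0.1."""
    return -((p["max_depth"] - 6) ** 2) - 100 * (p["eta"] - 0.1) ** 2


GRIDS = {"max_depth": [3, 4, 5, 6, 7, 8], "eta": [0.01, 0.05, 0.1, 0.3]}
INITIAL = {"max_depth": 3, "eta": 0.3}  # initial guess "from experience"

tuned = coordinate_search(toy_objective, INITIAL, GRIDS)
refined = local_grid_search(toy_objective, tuned, GRIDS)
print(tuned, refined)
```

The first stage evaluates only the sum of the grid sizes (here 6 + 4 = 10 settings) instead of their product (24), which is where the time saving comes from; the second stage guards against interactions between parameters that the one-at-a-time pass cannot see.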

Ensemble strategy

HLPI-Ensemble introduces the average ensemble strategy and the linear ensemble strategy, which are applied to generate HLPI-SVM Ensemble, HLPI-RF Ensemble and HLPI-XGB Ensemble.

The core idea of the average ensemble strategy is to take the average of the sub-models' prediction results as the final result. The average ensemble strategy is defined as follows.

$$P_{\mathrm{avg}} = \frac{1}{n}\sum_{i=1}^{n} p_i$$

In the above formula, $n$ represents the number of integrated sub-models and $p_i$ represents the prediction result of the $i$-th sub-model. The prediction result of a sub-model is the probability of interaction for each lncRNA-protein pair. The advantage of the average ensemble strategy is that it reduces the influence of anomalous results on the overall prediction, which improves the robustness of the ensemble model. Take HLPI-SVM Ensemble as an example. HLPI-SVM Ensemble covers nine types of sub-models, each with a different feature combination, and different feature combinations tend to yield different predictions. Assume that the nine sub-models predict an unknown human lncRNA-protein pair and that their results are 0.96, 0.90, 0.88, 0.20, 0.86, 0.88, 0.92, 0.89 and 0.96. Clearly, 0.20 is anomalous among these values. Discarding 0.20 is unwise, however, because it may reflect factors that are ignored by the other sub-models; the contribution of each sub-model should be considered. Applying the average ensemble strategy to these results gives a final prediction of 0.83, which is close to the predictions of most sub-models.
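The worked example above can be reproduced in a few lines. The function name is illustrative only.

```python
from statistics import mean


def average_ensemble(predictions):
    """Average the sub-model probabilities for one lncRNA-protein pair."""
    return mean(predictions)


scores = [0.96, 0.90, 0.88, 0.20, 0.86, 0.88, 0.92, 0.89, 0.96]
print(round(average_ensemble(scores), 2))  # 0.83

# For comparison, the mean of the other eight sub-models without the anomalous 0.20:
without_outlier = [s for s in scores if s != 0.20]
print(round(average_ensemble(without_outlier), 2))  # 0.91
```

The anomalous sub-model lowers the ensemble score from about 0.91 to 0.83 but does not overturn the consensus of the other eight.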

Another ensemble strategy for HLPI-Ensemble is the linear ensemble strategy, which integrates the sub-models using a multivariable linear regression model (MLRM). The linear ensemble strategy is defined as follows.

$$y = \beta_0 + \sum_{i=1}^{n} \beta_i x_i$$

In the above formula, $n$ represents the number of integrated sub-models, $\beta_0, \beta_1, \dots, \beta_n$ denote the model coefficients, $x_i$ represents the predicted probability from the $i$-th sub-model, and $y$ denotes the prediction result of the linear ensemble strategy. The MLRM takes the NPTest1 prediction results of all sub-models as its training dataset. Table 6 shows the coefficients of each HLPI-Ensemble model trained by the linear ensemble strategy.

Table 6. The coefficients of HLPI-Ensemble models trained by linear ensemble strategy.

From Table 6 we can see that the linear ensemble strategy assigns different weights to the different sub-models. In HLPI-SVM Ensemble, the sub-model associated with the largest coefficient contributes the most to the ensemble, and the largest coefficient in HLPI-RF Ensemble has the same interpretation. In HLPI-XGB Ensemble, the most influential coefficient is the one with the largest absolute value.
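To make the linear ensemble strategy concrete, the sketch below fits a multivariable linear regression on sub-model probabilities by ordinary least squares and uses the fitted coefficients to combine new predictions. It is a plain-Python illustration under stated assumptions: an intercept term is included, the normal equations are solved directly, and the tiny two-sub-model dataset is made up for the example (it is not NPTest1).

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A must be non-singular)."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_linear_ensemble(sub_preds, labels):
    """Least-squares fit via the normal equations; returns [intercept, coef_1, ..., coef_n]."""
    rows = [[1.0] + list(p) for p in sub_preds]
    m = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(m)] for i in range(m)]
    xty = [sum(r[i] * y for r, y in zip(rows, labels)) for i in range(m)]
    return solve_linear_system(xtx, xty)


def predict_linear_ensemble(coefs, preds):
    """Weighted sum of sub-model probabilities plus the intercept."""
    return coefs[0] + sum(b * p for b, p in zip(coefs[1:], preds))


# Made-up example: two sub-models, targets generated from known weights 0.1, 0.5, 0.3.
sub_preds = [(0.1, 0.9), (0.4, 0.2), (0.8, 0.5), (0.3, 0.7), (0.9, 0.1)]
labels = [0.1 + 0.5 * a + 0.3 * b for a, b in sub_preds]
coefs = fit_linear_ensemble(sub_preds, labels)
print([round(c, 6) for c in coefs])
```

A sub-model with a larger fitted coefficient moves the ensemble output more per unit change in its predicted probability, which is the sense in which the text reads the coefficients as contributions.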

Disclosure of potential conflicts of interest

No potential conflicts of interest were disclosed.

Acknowledgment

This work was supported by the National Natural Science Foundation of China under Grant No. 31570160, Innovation Team Project of Education Department of Liaoning Province under Grant No. LT2015011, the Doctor Startup Foundation from Liaoning Province under Grant No. 20170520217, Important Scientific and Technical Achievements Transformation Project under Grant No. Z17-5-078, Large-scale Equipment Shared Services Project under Grant No. F15165400 and Applied Basic Research Project under Grant No. F16205151.

References

- Ørom UA, Derrien T, Beringer M, et al. Long noncoding RNAs with enhancer-like function in human cells. Cell. 2010;143(1):46–58.

- Guttman M, Amit I, Garber M, et al. Chromatin signature reveals over a thousand highly conserved large non-coding RNAs in mammals. Nature. 2009;458(7235):223.

- Crick FHC, Barnett L, Brenner S, et al. General Nature of the Genetic Code for Proteins. Nature. 1961;192(4809):1227–1232.

- Yanofsky C. Establishing the triplet nature of the genetic code. Cell. 2007;128(5):815–8.

- Bertone P, Stolc V, Royce TE, et al. Global identification of human transcribed sequences with genome tiling arrays. Science. 2004;306(5705):2242.

- Chen X. Predicting lncRNA-disease associations and constructing lncRNA functional similarity network based on the information of miRNA. Sci Rep. 2015;5:13186.

- ENCODE Project Consortium, Birney E, Stamatoyannopoulos JA, et al. Identification and analysis of functional elements in 1% of the human genome by the ENCODE pilot project. Nature. 2007;447(7146):799–816.

- Carninci P, Sandelin A, Lenhard B, et al. Genome-wide analysis of mammalian promoter architecture and evolution. Nat Genet. 2006;38(6):626–35.

- Chen X, You ZH, Yan GY, et al. IRWRLDA: improved random walk with restart for lncRNA-disease association prediction. Oncotarget. 2016;7(36):57919.

- Chen X. KATZLDA: KATZ measure for the lncRNA-disease association prediction. Sci Rep. 2015;5:16840.

- Chen X, Huang YA, Wang XS, et al. FMLNCSIM: fuzzy measure-based lncRNA functional similarity calculation model. Oncotarget. 2016;7(29):45948–45958.

- Huang YA, Chen X, You ZH, et al. ILNCSIM: improved lncRNA functional similarity calculation model. Oncotarget. 2016;7(18):25902–25914.

- Moran VA, Niland CN, Khalil AM. Co-Immunoprecipitation of long noncoding RNAs. Methods Mol Biol. 2012;925(925):219.

- Bellucci M, Agostini F, Masin M, et al. Predicting protein associations with long noncoding RNAs. Nat Methods. 2011;8(6):444.

- Muppirala UK, Honavar VG, Dobbs D. Predicting RNA-Protein Interactions Using Only Sequence Information. BMC Bioinformatics. 2011;12(1):489.

- Cortes C, Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297.

- Ho TK. The Random Subspace Method for Constructing Decision Forests. IEEE Transactions on Pattern Analysis & Machine Intelligence. 1998;20(8):832–844.

- Wang Y, Chen X, Liu ZP, et al. De novo prediction of RNA–protein interactions from sequence information. Molecular Biosystems. 2013;9(1):133.

- Lu Q, Ren S, Lu M, et al. Computational prediction of associations between long non-coding RNAs and proteins. BMC Genomics. 2013;14(1):651.

- Suresh V, Liu L, Adjeroh D, et al. RPI-Pred: predicting ncRNA-protein interaction using sequence and structural information. Nucleic Acids Res. 2015;43(3):1370.

- Ge M, Li A, Wang M. A Bipartite Network-based Method for Prediction of Long Non-coding RNA-protein Interactions. Genomics Proteomics Bioinformatics. 2016;14(1):62–71.

- Pan X, Fan Y, Yan J, et al. IPMiner: hidden ncRNA-protein interaction sequential pattern mining with stacked autoencoder for accurate computational prediction. BMC Genomics. 2016;17:582.

- Chen T, Guestrin C. XGBoost: A Scalable Tree Boosting System. ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016.

- Ling CX, Huang J, Zhang H. AUC: A Better Measure than Accuracy in Comparing Learning Algorithms. Canadian Society for Computational Studies of Intelligence Conference on Advances in Artificial Intelligence. 2003.

- Fawcett T. An introduction to ROC analysis. Pattern Recognition Letters. 2006;27(8):861–874.

- Diebold FX, Mariano RS. Comparing Predictive Accuracy. NBER Technical Working Papers. 1995;13(3):253–263.

- Powers DMW. Evaluation: From Precision, Recall and F-Factor to ROC, Informedness, Markedness & Correlation. Journal of Machine Learning Technologies. 2011;2:2229–3981.

- Li Z, Ai H, Wen C, et al. CarcinoPred-EL: Novel models for predicting the carcinogenicity of chemicals using molecular fingerprints and ensemble learning methods. Sci Rep. 2017;7(1):2118.

- Bhartiya D, Pal K, Ghosh S, et al. lncRNome: a comprehensive knowledgebase of human long noncoding RNAs. Database. 2013;2013:bat034.

- Yuan J, Wu W, Xie C, et al. NPInter v2.0: an updated database of ncRNA interactions. Nucleic Acids Res. 2014;42(Database issue):104–8.

- Bu D, Yu K, Sun S, et al. NONCODE v3.0: integrative annotation of long noncoding RNAs. Nucleic Acids Res. 2012;40(Database issue):210–5.

- Gane PJ, Bateman A, Mj M, et al. UniProt: A hub for protein information. Nucleic Acids Res. 2015;43(Database issue):204–12.

- Liu B, Liu F, Wang X, et al. Pse-in-One: a web server for generating various modes of pseudo components of DNA, RNA, and protein sequences. Nucleic Acids Res. 2015;43(Web Server issue):W65–W71.

- Wei L, Liao M, Gao Y, et al. Improved and Promising Identification of Human MicroRNAs by Incorporating a High-Quality Negative Set. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2014;11(1):192–201.

- Dong Q, Zhou S, Guan J. A new taxonomy-based protein fold recognition approach based on autocross-covariance transformation. Bioinformatics. 2009;25(20):2655–62.

- Guo Y, Yu L, Wen Z, et al. Using support vector machine combined with auto covariance to predict protein-protein interactions from protein sequences. Nucleic Acids Res. 2008;36(9):3025–30.

- Chen W, Zhang X, Brooker J, et al. PseKNC-General: a cross-platform package for generating various modes of pseudo nucleotide compositions. Bioinformatics. 2015;31(1):119–20.

- Cao DS, Xu QS, Liang YZ. Propy: a tool to generate various modes of Chou's PseAAC. Bioinformatics. 2013;29(7):960–2.

- Efron B, Hastie T, Johnstone I, et al. Least Angle Regression. Annals of Statistics. 2004;32(2):407–451.