ABSTRACT

This research reports on a co-design project to improve creative engagement tools with academics and public sector organisations in the northwest UK. Creative engagement (which is a staple of co-design activities but also used widely outside design) is often supported by tools and resources. However, there is a need to tailor tools for specific contexts to accommodate the skills and practices of creative engagement professionals and the contexts in which they work. While there is a literature examining tools in co-design and to a lesser extent in wider creative engagement activities, there is a lack of research on how tools can be improved. This article presents a framework that enables engagement practitioners to improve the tools they use in their practice. Following a Participatory Action Research approach, three case studies document the application and testing of the improvement framework. The paper discusses the insights and lessons learnt from this process and the impact of the new improvement activities on the practices of the creative engagement professionals. The research outcomes include building improvement capabilities in participants and understanding of how the framework works in practice and how it could be more widely applied to tool improvement within and beyond co-design.

1. Introduction

In this paper, we describe research in which we worked with creative engagement professionals to conceive and test a framework aimed at improving the tools and resources they use in their professional activities. In essence, any creative engagement professional (including co-designers) should be able to use this framework to help them reflect on and improve the tools and resources they use.

Creative engagement (CE) involves enabling an expressive dialogue, often between communities and public bodies, using creative acts (e.g. film, photography and storytelling). There is a close relationship between co-design and CE, for example, with advocacy planning (Davidoff 1965) and open philosophies (e.g. open source, design) that have been in the spotlight of design studies in the past decades (Lee 2008; Cruickshank, Coupe, and Hennessy 2013; Baibarac and Petrescu 2019). In advocacy planning, Davidoff (1965) asserts that an urban planner should support the development of alternative renewal approaches that could advocate for communities’ interests and include the voices of those affected by a policy in public decision-making processes. Non-designers, such as community organisers and student groups, have a long history of conducting such planning processes with communities since the 1960s. Similarly, co-design processes can be initiated by anyone interested in improving their current situations and may or may not involve professional designers (Zamenopoulos and Alexiou 2018). While CE practice and co-design use similar approaches, CE is also used beyond co-design, for example, where straightforward information gathering is the aim (e.g. in patient participation in the health service) or where engagement will contribute to decision-making but there is no requirement for the abductive, creative leaps common to co-design (Manzini 2015; Cramer-Petersen, Christensen, and Ahmed-Kristensen 2019).

Both co-design and CE are often supported by tools to enhance the creative abilities of those involved in engagement activities. Co-designers often use toolkits and handbooks with methods that might not address a particular problem but provide principles and instructions that guide them to design their own engagement approaches, such as the Community Planning Handbook (Wates 2000) and the resources of NESTA, the National Endowment for Science, Technology and the Arts (2014). However, many tools and methods assume that anyone has the skills to employ them easily. For example, some tools are translations of designerly methods into popular versions taken out of context (e.g. IDEO tools), but the person using the tools must have the knowledge and skill to understand how to use them (Johansson-Sköldberg, Woodilla, and Mehves 2013).

Drawing on scaffolds for experiencing (Sanders 2002) and toolkit approaches that encourage democratic innovation (Von Hippel 2005), we also appropriate the concept of ‘convivial tools’ (Illich 1973) and good design (Norman and Draper 1986) to define tools as means of enhancing skills, giving people control when conducting tasks and enabling them to constructively apply tools in their own practice. Here we define methods as a coherent set of principles that includes guidelines in terms of tools, techniques and principles for organisation (Bratteteig et al. 2013). Techniques are specific ways to perform an activity and are how tools are put into action (Sanders, Brandt, and Binder 2010); tools are concrete instruments that support techniques and skills, such as pencils and pens as tools for sketching, drawing and annotating. Tools could be physical, digitally downloaded and printed or entirely digital in nature. We believe tools can be fitted into a larger set of methods more or less at will (Brandt, Binder, and Sanders 2012), enabling people to freely deploy tools in their creative practice. Some tools have crossed over between practices, acquiring joint identity across fields (Levina and Vaast 2005), and have been improved in the process (Sanders and Stappers 2012).

The design of tools that respond to a specific project and envision their use before use has been criticised, as stakeholders and potential users will appropriate tools in unpredictable ways, requiring more flexibility in use (Ehn 2008). Each CE project needs to be designed according to an understanding of the context, where tools should accommodate multiple design languages and skills of communities to support them in the design and decision-making processes, enabling those involved to appropriate tools in their practices. For example, a co-design study required adapted approaches and tools to enable people with communication impairment to participate in the project (Wilson et al. 2015). While there is a literature examining design, adaptation, and evaluation of tools in co-design, there is a lack of research on how these can be improved. Peters, Loke, and Ahmadpour (2020) argue that tools should ideally be evaluated within a rigorous context to support their improvement, because evaluation often relies on observations made by the tool designers themselves in a lab environment. They reviewed 76 tools and concluded that user involvement in tool design and evaluation would benefit the co-design community, as tools could then be made appropriate to the context of real-life practices.

In more recent tool design approaches, researchers have co-designed flexible tools with experts in urban spatial, health and social care settings for ongoing use and appropriation in other contexts (Baibarac and Petrescu 2019; Whitham et al. 2019). However, appropriating tools designed elsewhere to be applied in different fields requires tailoring them for local conditions such as healthcare (Donetto et al. 2015), urban planning (Iaione 2016), or social services (Cruickshank et al. 2017). Our approach here is to acknowledge the expertise of CE practitioners in their own CE practice. We worked with a wide range of CE practitioners, both inside and outside co-design, to develop together an approach that allows any CE practitioner to reflect on and improve the tools they use in their own engagement practice. This supports the improvement of CE practice without claiming a hierarchical position or imposing our values or practices as co-designers. This is in sympathy with Lee’s ‘Design Choices’ research (2018); for example, Lee calls for engagement with the preconditions of co-design or a focus on co-creation events. Our concern here is to help practitioners examine and improve what they do with their own understanding. Here, the improvement framework could be brought to bear by practitioners to improve these aspects (and others) of their CE practices through an examination of the tools used and how that practitioner has appropriated them into their own practice.

Appropriation may involve adaptation of a tool or improving it to create new versions. Although there are some overlaps in what constitutes improvement and adaptation, these concepts are not the same. Adaptation processes happen in a non-deliberate manner to fit a tool better to an existing framework (De Waal and Knott 2013), whereas improvement processes involve identifying issues and proposing positive changes to a framework in a deliberative way. In this paper, improvement is an activity that consists of a cycle of critical observation and creative design inputs that lead to agreed positive changes in life and work situations, as well as attempts to increase knowledge. Design knowledge is not intrinsically linked with the development of artefacts but rather tied to practice, where reflection in action is how knowledge is generated (Swann 2002). Tool design practices include stages for future iterations and expansions to gain wider relevance (Baibarac and Petrescu 2019) or continuous adaptations to suit practitioners’ applications as they arise (Morris and Cruickshank 2013).

This research follows a Participatory Action Research approach to address the research question: how can CE practitioners be supported to improve their own tools? To guide this project, we draw on PD methods and principles (Bratteteig et al. 2013) and those of co-design (Zamenopoulos and Alexiou 2018) to underpin our improvement actions and on the case study framework (Yin 2018) to conduct research. The first step to address the research question was to build a proposition used for improving tools. In an approach similar to Cockton’s ‘meta-principles’ (2014), we identify fundamental properties of all CEs and propose the use of three dimensions (Instruction, Functionality and Flexibility). We developed a framework that builds on the co-design practice landscape of planning, enabling and the actual doing activities in workshop-like events. The second step was to design activities around the framework to help understand how they are related to each other. These activities include questions in the form of tasks to encourage participants to work through the dimensions, where engagement practitioners worked together to learn and reflect on the propositions to understand how tool improvement occurs in practice. Lastly, we shared the research findings with experts in tools and participatory approaches to raise awareness and provide insights, discussing their implications in CE practices. In the following section, we review guidelines for designing physical and digital artefacts and activities involved in co-design to build a framework as a meta-tool to improve CE practices.

2. Building a framework for improving tools for CE: bringing tool design practice and theory together

We adapted Hooper’s (1986) architectural guidelines for interface design, considering functionality, instruction (interface), and flexibility and adaptability, to build a framework for tool design. Below we describe these three key dimensions of the framework, drawing a parallel between architectural/interface design and tool design.

(1) Interface (Instruction): Hooper argues that computer interfaces are like façades, which people experience primarily when they face interface designs, or like entranceways that are designed to inform the whole place in a systematic way, like European cathedrals and formal Japanese gardens. In interface design, the inside and the outside of an interface are the relationship between design concept and purpose. In general, the design specification is articulated with a briefing that guides the concept, providing essential information about the design of a building, interface or tool. A good tool can be designed with specific colours, shapes and words that enable people to familiarise themselves with activities and things.

(2) Functionality: The function is the primary consideration in the design of digital interfaces, buildings and tools. The function of a design is to fulfil the purpose for which it was intended, i.e. a design that works. Beyond this level, the final form depends on the people’s needs and the context as different forms may represent the same functionality. For example, the form of a tool used for engaging with young people should be full of colours, but the tool might not have the same form when engaging with young adults as they might feel they are being treated as children.

(3) Flexibility and adaptability: In vernacular architecture, buildings are adapted to contain larger families, or they are changed to improve on the earlier effort. Hooper highlights that mechanisms for change are critical to flexibility and adaptability. For instance, double-click timing on operating systems is a local control that enables personalisation to users’ computing skills. In another example, a paper-based tool can provide different layouts or editable headings in the digital file or provide blank spaces to allow extra information. Hooper concludes that:

‘flexibility in personalisation may not necessarily provide adaptable systems. One may want to rely on expert judgment of a best system as a first approximation, making changes available from this base level. One might [want] to prevent the moving of walls, for example, but encourage the rearrangement of furniture’ (Hooper 1986, 15)

These three dimensions were applied to three layers of practice in CE: a) planning (activity before events); b) facilitation of human interactions that enable the process of CE; and c) application or doing for the practical use of tools with participants.

(a) Planning activities involve considering the aims and objectives, audience and actions used for engaging with participants, where tools are often adopted to assist this practice. These elements compose a collaborative structure for a common action that enables the emergence of new designs, also referred to in the literature as negotiation spaces (Pedersen 2020), design spaces (Marttila and Botero 2013), or solution space (Von Hippel 2005).

(b) Enabling activities involve implementing the plan within a collaborative space, where a facilitator uses methods, techniques, and tools to facilitate a creative exchange between participants. The facilitator’s role is to make sure everyone can contribute to an activity, making the most out of the expertise and creativity of participants. Tassoul defines the job of facilitation as ‘setting the right conditions for a group of people to do a good session, highly inspired and a high quality of interactions and concept generation’ (Tassoul 2009, 33). In this practice, known as creative facilitation, a facilitator formulates mechanisms that have specific functions (e.g. energising participants, generating ideas) and uses approaches developed in and on practice (Forester 1999) to draw participants into design processes.

(c) Doing activities involve exchanging expertise and ideas with participants through tools for making, telling and enacting activities or a combination of them (Brandt, Binder, and Sanders 2012) to collaborate in the design and decision-making processes. In telling activities, visual tools assist participants in expressing their experiences. In making activities, tools give people the ability to create things that externalise ideas and embodied knowledge in the form of artefacts. In enacting activities, tools can support people to imagine and act out possible futures by experiencing a design setting and exploring activities that are likely to take place.

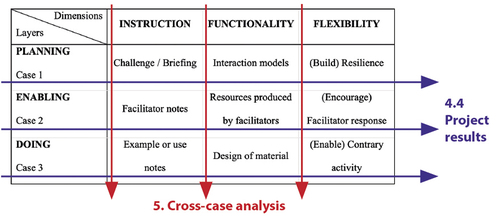

Building on these overlapping practices, we map these layers and dimensions into a framework called the Improvement Matrix (Table 1). The proposition we tested is that this matrix of nine considerations allows CE professionals to dissect the tools they use in terms of their conception, introduction to participants and practical application, and to use these categories to improve their tools.

Table 1. Building the improvement matrix.

3. The improvement matrix: a framework for improving CE tools

This section summarises the content of the framework and looks at how the improvement of tools using the design propositions predicts positive changes in each co-design practice and activity.

(a) Planning (Design)

The improvement matrix suggests three components that support the practice of planning, known as the design layer (Table 2), as shown below.

(a.1) Challenge/Briefing: the briefing formalises mutual and coherent understanding of objectives, drivers, and issues, which can be framed in a dynamic and participative process by those involved in a CE project (Murphy and Hands 2012). The briefing guides the concept, providing essential information about the design of a tool. It describes the frame in which a tool addresses a particular engagement challenge. For example, tools for engaging with people with aphasia would be framed to deal with the challenge of engaging with people who have difficulty with spoken and written language, presenting non-verbal elements that would enable them to participate in the design process of computer-based therapy tools (Wilson et al. 2015).

(a.2) Interaction models: The function is the primary consideration in the design of a space as part of an interaction model. The interaction model describes how an interface should work to enhance the use of digital products and how a system is organised and operates. The interaction model binds the intentions and engagement context which a tool is designed for. It is how a tool and inputs that are part of an activity interrelate, in ways that support real-life interactions (i.e. practical use). Interactions are inputs people have to perform when they are addressing their engagement challenges. For example, a tool that collects drawings as responses about young people’s preferences enables practitioners to take decisions based on evidence, where drawing is the interaction model that satisfies the intention of collecting young people’s voice in the engagement process.

(a.3) (Build) Resilience: Building tool resilience to deal with unforeseen applications involves designing tools that allow appropriation. Dix (2007) discusses the design for appropriation, where designers can design to allow for the unexpected by, for instance, not making systems or products with a fixed meaning. For example, in a CE project called Make it Stick (Cruickshank et al. 2017), the researchers developed a tool to enable CE without the need for participants to write, which was initially designed to be customised, downloaded, and printed. However, they noticed that the tool was not meeting users’ needs due to the limited customisation. As a result, they designed an interactive template that allowed people to customise the sticker template, enabling people to use it in unexpected ways.

The improvement of tools within this layer of practice will develop the CE practice of planning collaborative spaces, providing engagement practitioners with new ideas to address their challenges in current and future projects.

(b) Enabling (Facilitation)

The second layer of the matrix, related to the practice of enabling CE and known as the facilitation layer (Table 3), suggests three components that support a creative facilitation practice with tools, as shown below.

(b.1) Facilitator notes: Facilitators identify priorities and expectations of stakeholders, which can be formalised in an agreed briefing with a group of people affected by a project, similar to a typical design process as inputs for creating facilitation frameworks (Cruickshank and Evans 2012), and then establish a facilitation approach. Facilitator notes include the plans for implementing a session and the activities that participants will follow through on in a project using specific tools. These notes describe to a facilitator how a tool should be introduced to participants and include instructions about the space, duration, requirements, examples, techniques, activities, etc. This kind of information can be provided in a guideline sheet, website or handbook to support facilitators in assisting and assigning tools in their practice. A tool that instructs facilitators on how to draw out ideas from their participants can be improved to provide more appropriate guidelines for a particular context or by adding extra information for facilitators.

(b.2) Resources produced by facilitators: Once the approach is established, facilitators assign or produce resources and tools to support the facilitation of activities. The function of tools should fulfil the purposes of supporting the facilitation, enabling participants to achieve desired outcomes. These resources produced by facilitators include maps, visual materials, and inspirational exercises that support them to engage with participants, guiding their actions and collecting information needed for learning and evaluation in a planned session. For example, a tool that supports facilitators to gather collective ideas from a group of entrepreneurs can be improved to work with local residents by giving specific actions to promote better creativity and problem-solving skills that fit residents’ expertise.

(b.3) (Encourage) Facilitator response: This approach can be associated with improvising sessions within a planned structure at the time of project delivery. Flexibility in facilitation is about designing a session as a type of conceptual prototype, where role-playing the planned ideas for activities and analysing the implications of these lead to a practical facilitation session. Once the facilitation approach is designed to respond to an agreed briefing and facilitator resources and notes are created to support CE, the session delivery requires flexibility and adaptability of the plan. A responsive facilitation is not about what a facilitator has to do to follow the plan, but what facilitation options are available for them to achieve an agreed objective. A way to improve facilitator response can be achieved by providing different ways to facilitate activities around a tool. Describing experiences and stories about facilitation strategies using a tool can enhance facilitators’ response and their ability to improvise. For example, a tool can be improved by providing examples of uses and tips to engage participants, focusing on different situations where it might not work as expected, providing ways to change the facilitation approach and afford flexibility to new facilitators.

The improvement of tools in this layer of practice will develop the CE practice of enabling people to exchange ideas and inputs in design processes, providing facilitators ways to assist participants’ understanding and their contribution to projects with their expertise.

(c) Doing (Application)

The third layer of the matrix, related to the practice of doing CE and known as the application layer (Table 4), suggests three components that support the practical use of a tool by participants, which are described below:

(c.1) Example or use notes: Once basic requirements are met, other attributes contribute to making the tool work. The instructions (interface) are the words and examples used for guiding participants on how to complete a tool. They comprise the wording that presents and introduces a tool and suggests its uses, as well as examples or use notes that give participants inspiration on how to fill in the blank spaces. For example, a tool for engaging with young people can be improved by replacing the word ‘meeting’ with ‘visit’, which reads as more informal and approachable.

(c.2) Design of material: The form depends on a relationship between the users’ needs and skills and the social context in which it is designed. This relationship and context will define the form of the design. For instance, an A6-sized tool designed to gather ideas can be improved in a bigger format to support more detailed ideas or extra notes in a lengthy activity.

(c.3) (Enable) Contrary activity: This component refers to the non-deliberative action of adaptability, where participants fit existing tools into their practice in CE activities. For example, a tool could be improved with blank text boxes instead of lines. In this way, participants would not feel obliged to complete all the lines and could draw if they so wish.

The improvement of tools in this layer of practice will develop the practice of doing CE through writing, making, and enacting activities by redesigning tools to be user-friendly for the participants of a project.

3.1. Framework overview

Building on these nine elements, we tested the dimensions for improving tools through co-design workshops to develop a deep understanding of how the Improvement Matrix (Table 5) works in practice. In the following section, we present three case studies, where we delivered workshops with CE practitioners from different organisations and backgrounds and developed improved tools as the practical outputs of this study.

Table 2. Design layer of practice.

Table 3. Facilitation layer of practice.

Table 4. Application layer of practice.

Table 5. The improvement matrix.
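
As an illustration only, the nine components described in Section 3 can be laid out as a grid of layers by dimensions. The short Python sketch below is not part of the study: the layer, dimension and component names are taken from the text, while the data structure, the `build_matrix` function and the assumption that components x.1, x.2 and x.3 correspond to Instruction, Functionality and Flexibility (in that order) are ours.

```python
from itertools import product

# Names follow the paper. The index-based mapping of each component to a
# dimension (x.1 -> Instruction, x.2 -> Functionality, x.3 -> Flexibility)
# is an assumption made for this sketch.
LAYERS = ("Planning (Design)", "Enabling (Facilitation)", "Doing (Application)")
DIMENSIONS = ("Instruction", "Functionality", "Flexibility")

COMPONENTS = (
    ("Challenge/Briefing", "Interaction models", "(Build) Resilience"),
    ("Facilitator notes", "Resources produced by facilitators",
     "(Encourage) Facilitator response"),
    ("Example or use notes", "Design of material", "(Enable) Contrary activity"),
)


def build_matrix():
    """Return a dict mapping (layer, dimension) to the matching component."""
    return {
        (layer, dimension): COMPONENTS[i][j]
        for (i, layer), (j, dimension) in product(
            enumerate(LAYERS), enumerate(DIMENSIONS)
        )
    }


matrix = build_matrix()
print(matrix[("Doing (Application)", "Flexibility")])  # (Enable) Contrary activity
```

A practitioner could walk such a grid row by row (one layer of practice at a time) or column by column (one dimension at a time) when reviewing a tool.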

4. Working together to improve CE tools

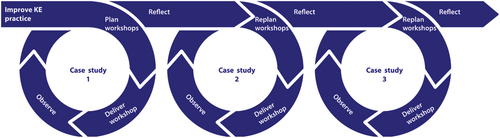

We developed the Improvement Matrix through a series of three co-design workshops with engagement practitioners, where each workshop consisted of testing and analysing the framework in a real-world context with little control of events. The methodology is a multiple-case study that forms part of the self-reflective spiral of action research cycles; the research itself formed part of a larger project called Leapfrog (2015–2018). Each case constitutes a PAR cycle of planning, acting, observing and reflecting (Kemmis and Robin 2005).

The co-design workshops were documented through materials produced by participants, audio recordings of the discussion at the implementation phase, photographs, and researchers’ personal accounts, such as reports and reflective blog posts. The analysis of the evidence follows a bricolage of general techniques (Yin 2018) that ‘play’ with data in order to search for relevant patterns, insights, or concepts, and rely on the theoretical propositions (Functionality, Instruction, and Flexibility) in order to develop a case description. In this combination of techniques, various codes were assigned to evidence, and then examined, categorised, tabulated, tested, and recombined with the assistance of the researchers’ memos and diagrams to draw empirical conclusions. In this paper, we use the four categories of design choices (Lee et al. 2018) to describe each case study.

4.1. Project preconditions

The overall objective of the workshops was to explore tools that we preselected for each engagement situation and improve tools to suit participants’ ways of working. As part of a larger project, we chose tools that were co-designed with practitioners who engage with their communities on a daily basis (e.g. young people, library users) to be improved and adopted in other fields, such as health care.

Each case study considers the improvement of tools to develop an understanding of each layer of CE practice (Planning, Enabling and Doing), and a cross-case analysis provides an understanding of each of the dimensions of tools (Instruction, Functionality, and Flexibility). In each case study, we aimed to test three components of the improvement matrix across each layer to improve the tools and resources practitioners use in their engagement activities.

4.2. Participants

In these workshops, groups of engagement practitioners who work with tools collaborated with us through experimenting, learning and reflecting on the improvement process as co-researchers, providing evidence to test the Improvement Matrix. These practitioners included designers and non-designers (who nevertheless work in a creative manner) and aim at involving their communities in public decision-making processes or other CE activities. They were recruited from either a pool of motivated and existing contacts from the Leapfrog project or researchers who work with CE from a design research conference.

4.2.1. Case 1

We invited design research participants, who work with groups of non-designers or are experts in tools and participatory practices, to attend the workshop to improve their CE practices. Eight DRS2018 delegates attended the workshop during the Ph.D. By Design event prior to the main conference at Limerick School of Art and Design.

4.2.2. Case 2

We worked together with Children’s Champions, a team from a joint health and care system called Integrated Care Communities in Northwest England. The team is a group of multidisciplinary healthcare practitioners responsible for engaging with children and young people (YP) in their local communities so that their needs and voices are heard.

4.2.3. Case 3

We worked together with the staff members of the Quality Improvement Team from Lancashire Care NHS Foundation Trust. The team is composed of multidisciplinary healthcare practitioners that deal with complaints at diverse levels.

4.3. Co-design events

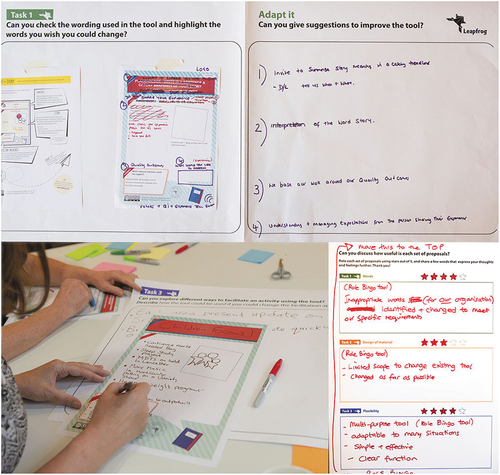

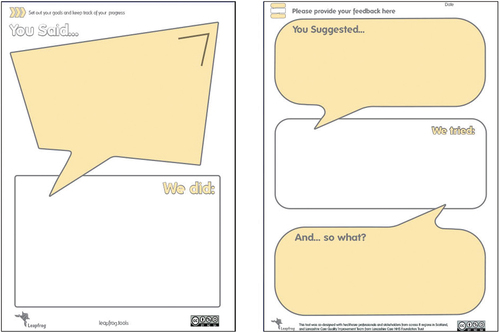

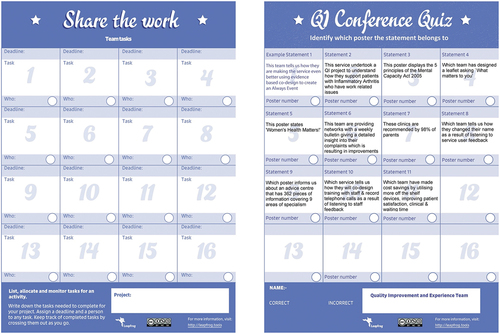

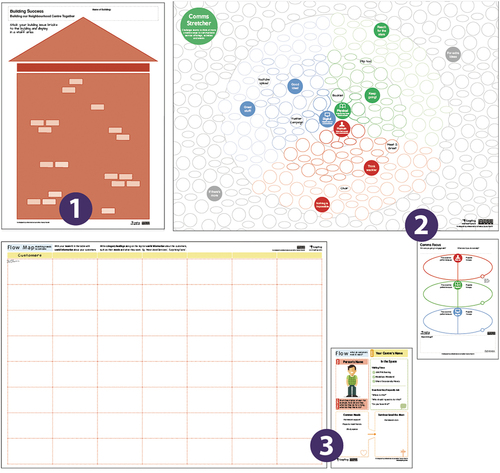

We tested the framework with engagement practitioners through co-design workshops, using the future workshop (Kensing and Madsen 1992) and bricolage (Büscher et al. 2001) PD techniques under the conceptual idea of toolkits for innovation (Von Hippel 2001). In these workshops, we provided participants with many copies of the chosen tools, basic materials (e.g. sticky notes, sharpies, paper) and proformas to support them to create something new and evaluate their proposals. This approach enabled participants to co-design improvements directly on tools through a cycle of trial and error, where they (1) critiqued the present, (2) envisioned the future and (3) implemented designs through testing and evaluating the effects of their decisions. They conducted this improvement cycle three times, looking at the instruction, functionality and flexibility of tools in turn, and then reflected at the end on which proposals could lead to the improvement of their CE practices. We planned each of these steps to last around 10–30 minutes within a half-day workshop (1.5–3 hours).
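
The structure of the improvement cycle described above (three rounds, each with three steps) can be sketched as a simple agenda. The Python snippet below is a hypothetical illustration rather than a record of how the workshops were run: the function name `build_agenda`, the fixed step length and the omission of the final reflection are assumptions made for the sketch.

```python
# Rounds and steps follow the text; everything else is illustrative.
ROUNDS = ("Instruction", "Functionality", "Flexibility")
STEPS = ("critique the present", "envision the future", "implement and evaluate")


def build_agenda(step_minutes):
    """Return (agenda, total_minutes) for three rounds of three steps.

    Each agenda entry is (start_minute, dimension, step). The closing
    reflection mentioned in the text is not included (an assumption).
    """
    agenda = []
    start = 0
    for dimension in ROUNDS:
        for step in STEPS:
            agenda.append((start, dimension, step))
            start += step_minutes
    return agenda, start


agenda, total = build_agenda(step_minutes=10)
for start, dimension, step in agenda:
    print(f"{start:>3} min  {dimension}: {step}")
print(f"total: {total} min")  # total: 90 min
```

With nine steps of 10 minutes each, the cycle alone fills 90 minutes, which corresponds to the shortest workshop length reported (1.5 hours).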

We worked as neutral facilitators supporting participants to improve tools, minimising our inputs and working as boundary spanners (Levina and Vaast 2005) who empowered participants not only to express their views but also to perform direct changes on the tools to transform them into boundary objects-in-use, i.e. tools usefully deployed in different fields. In each workshop, we preselected tools to be improved in the workshop according to the needs and context of each group of participants described as follows.

4.3.1. Case 1

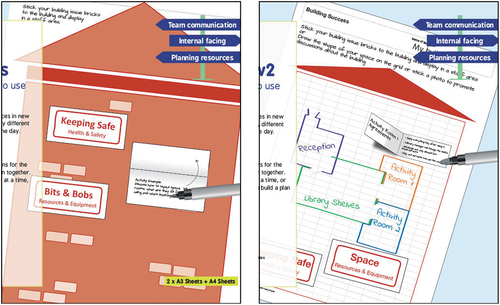

We invited DRS2018 delegates to attend the workshop and learn how to improve their CE practices, which required a scenario to provide context to participants. We presented a Leapfrog project in which we collaborated with Lancashire County Council library practitioners following a massive budget cut to Lancashire libraries and museums in November 2015 that led the libraries to become community multi-service centres. The main challenge of this project was to create a set of tools to enable the best possible transition to Neighbourhood Centres, i.e. tools that help each centre to address challenges in their own way. We conducted a series of workshops with small groups of library practitioners, where they co-designed tools to address their challenges.

In the DRS2018 workshop, we introduced to participants the same challenges our Leapfrog partners had to face to provide the context and intentions for which the tools presented at the workshop were co-designed. We preselected three of these tools () and asked participants to improve them according to their CE practices. This case study presents a challenging workshop that we conducted with one facilitator in a shorter period of time compared to the other cases due to the 1.5-hour time frame allocated for pre-conference workshops. Getting settled before the start of the workshop, developing points in more detail, and providing a clearer focus at the beginning could have enhanced the impact of the workshop. Choosing tools used for a specific context helped participants to get their head around the improvement process. Participants concluded the activities on time at the expense of better outcomes.

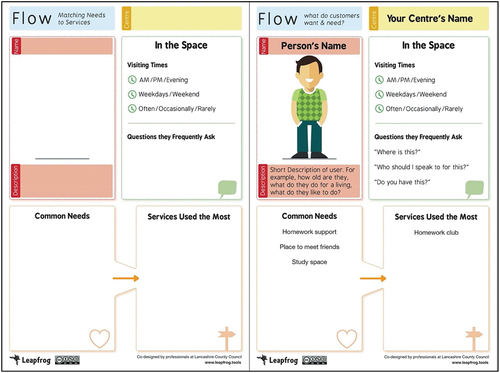

Figure 7. Tools: (1) building success, (2) comms stretcher & comms focus, and (3) flow map & flow cards (visit www.leapfrog.tools for more details).

4.3.2. Case 2

The children’s champions team were looking to obtain better assets, improve engagement, and include YP’s voice in their bimonthly meetings. Considering their practice, we shortlisted five tools that could be used for capturing YP’s voice, translating evidence, and sharing outcomes across teams and organisations. We included a simple consensus activity to enable participants to choose three out of the five preselected tools to be improved in the workshop. In this activity, each participant chose two tools, and the three most voted ones were explored and improved by the group during the workshop.
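The consensus activity amounts to a simple vote tally, which can be sketched as follows. This is a minimal illustration rather than the procedure as actually run: the tool names ("Tool A" to "Tool E"), the participant labels, and the alphabetical tie-break are all assumptions introduced for the example, since the paper does not say how ties were resolved.

```python
from collections import Counter


def select_tools(ballots, picks_per_person=2, n_selected=3):
    """Return the n_selected most-voted tools from participants' ballots.

    ballots maps a participant label to the list of tools they chose.
    Ties are broken alphabetically (an assumption made for this sketch).
    """
    counts = Counter()
    for participant, choices in ballots.items():
        unique_choices = set(choices)
        if len(unique_choices) != picks_per_person:
            raise ValueError(
                f"{participant} must choose exactly {picks_per_person} different tools"
            )
        counts.update(unique_choices)
    # Most votes first; tool name as a deterministic tie-break.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [tool for tool, _ in ranked[:n_selected]]


# Hypothetical ballots: four participants, five shortlisted tools.
example_ballots = {
    "participant 1": ["Tool A", "Tool B"],
    "participant 2": ["Tool A", "Tool C"],
    "participant 3": ["Tool B", "Tool C"],
    "participant 4": ["Tool A", "Tool D"],
}
selected = select_tools(example_ballots)
```

With small groups, ties are likely, so in practice a facilitator would probably settle them through discussion rather than by a fixed rule.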

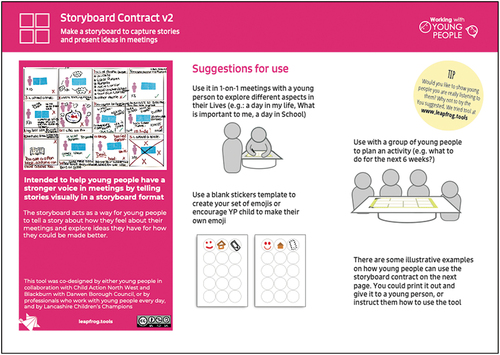

During the workshop, participants shared their expertise on engaging with children and YP, showing how different experiences and perspectives affect the improvement of tools. One participant was excited to use tools in practice, as he mentioned that he employs traditional methods and techniques, such as focus groups and flipcharts. His group mentioned the need to use a tool first before suggesting improvements. Some participants struggled to understand the flow customer tools (, ), which support the creation of personas as a designerly technique to describe service users. By contrast, a simple tool with few instructions, such as the Storyboard contract (), enabled participants to generate good suggestions that could lead to the improvement of their practice.

4.3.3. Case 3

The quality improvement team believed the Leapfrog tools would benefit them and were interested in creating their own tools for their organisation. Considering their practice, before the workshop we shortlisted seven tools that could help them to gather feedback from their communities, to map ideas and opportunities, to enable their communities to respond to their feedback, and to communicate improvements to their communities and wider teams. As in case 2, we included a simple consensus activity to enable participants to choose five of them to be improved in the workshop.

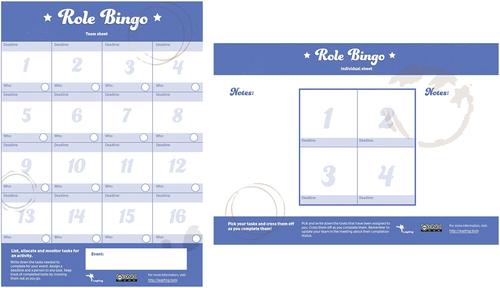

In this workshop, there was disagreement about improving tools to specialise them for a specific activity. Although participants were from the same organisation, they worked in different teams and had distinctive engagement challenges. For instance, when the team were discussing how to improve the tool Role bingo (), one participant suggested redesigning it into a team to-do list, whereas another participant wanted to use it as a project management tool. Many agreements and disagreements occurred when improving contrary activities, which provided a better understanding of this layer of the matrix.

4.4. Results

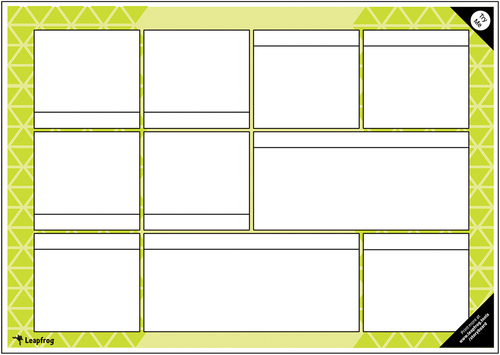

We report two levels of results: outputs, from immediate results and further implementations, and outcomes, as impacts of the project. Based on participants’ reflection on which suggestions led to the improvement of the tools, we designed new versions of the tools (visual examples below) and made them available for download on the Leapfrog website as the tangible outputs for practitioners.

4.4.1. Case 1 outputs

Participants agreed upon a course of improvement for a set of tools: providing more open and flexible design concepts that give CE practitioners more control over the process and more flexibility in using and understanding tools. Their suggestions focused on extending features, providing more instructions, and offering new ideas to address challenges. We implemented these improvements in the new version of a tool as illustrated in .

4.4.2. Case 2 outputs

Participants agreed upon a course of improvement for a set of tools by helping facilitators to design engagement approaches and providing indications of use and practical guidance to participants on how to complete tools throughout an activity in order to enhance skills needed for the job of facilitation. We implemented most of these improvements on the Storyboard contract () by adding an instruction sheet as illustrated in .

4.4.3. Case 3 outputs

Participants agreed upon a course of improvement for a set of tools by tailoring them to suit their community needs and practices, where they focus on improving the content in the tool. Their suggestions focused on improving visual and written communication through changing the words and graphic design of tools and adding flexible features and formats in order to make them more appropriate to their organisation and the communities they work with. We implemented these improvements in the new version of a tool as illustrated in .

4.4.4. Outcomes

Participants learnt how to improve tools through the process, enabling them to creatively deploy tools with understanding in their practice as the learning effect of this project:

‘Some people feel like [tools] quite rigid, and like obviously the more people understand how to use the tool, and all the different aspects like the more you get out of this at the first place, sitting down at the end of task 3, I understand that if I’m working to figure task 1.’ (Case 1 participant)

‘It was also good to think how we can be more inventive in getting our own voices heard in the Integrated Care Communities when we are competing for time and funding for our services.’ (Case 2 participant)

‘From the workshop we’ve done today, it shows how adaptable you can use them [tools], with ideals for people on their own field, and how you could adapt it.’ (Case 3 participant)

After the workshop, one organisation shared with us how they adopted the improved tool in a Quality Improvement conference as illustrated in .

5. How do the design propositions (instruction, functionality, and flexibility) improve tools in practice?

We cross-compared the evidence gathered across cases and drew insights on how each dimension works in practice to improve tools.

5.1. Instruction

To improve the instruction, practitioners highlight the lack of clarity, language issues, and restrictive aspects in the instructions, and then suggest improvements on how the tool should work, indications of use, and adding, removing, or changing the features to make the communication more appropriate for an organisation and audience. The improvement of tools involves providing clear visual design and instructions, indications of use, and friendly and clearer words for their practice.

5.2. Functionality

To improve the functionality of tools, practitioners highlight the lack of clarity, inappropriate design concepts, and restrictive aspects, and then suggest improvements by adding resources or changing the type of interactions/visual design and providing more practical guidance at the introduction and guidance during an engagement activity. The improvement of tools involves providing new ideas to address a challenge, adding or removing features to expand tool applications, clear and friendly graphic design and additional guidance and instructions to enhance the engagement of participants and practitioners in an activity.

5.3. Flexibility

To improve the flexibility of tools, practitioners highlight the restrictive aspects and suggest different uses, and then propose improvements by simplifying/removing and adding/extending features, providing editable headings, formats and instructions, and designing activities as a group. The improvement of tools involves enabling wider applications through different features, providing ideas that give practitioners more flexibility in understanding and use or generating ideas together as a group, in order to build understanding on employing tools in creative activities.

6. Summary and discussion

In this paper, we proposed a framework for improving tools, drawing on co-design practices and theories to support and enhance CE activities. We tested the framework to support potential uses in a specific project as well as in future projects by employing three design propositions (Instruction, Functionality, and Flexibility) within three layers of the practice landscape in CE (Planning, Enabling, and Doing) through co-design workshops.

The cross-case findings provided insights into the improvement process and how the propositions play out in practice to improve CE tools. The insights suggest that the types of participants and tools might affect the improvement process. Selecting tools for a specific context helps participants to understand the improvement process. Simple tools can enable CE practitioners to generate good suggestions that lead to the improvement of tools, whereas complex tools can hinder tool improvement. Participants with less tool experience provide fewer improvement suggestions, but they can still contribute good suggestions whilst learning through the process. The workshop outcomes suggest that participants have developed capabilities to develop their practices through the improvement of tools.

A peer review with experts at EAD2019 has provided some learning points that are prompting more research. If tool flexibility is considered in the design of the methodology when working with different groups, it can enhance transferability to other research contexts. This also involves including flexibility in the Improvement Matrix as a meta-tool. We also identified a challenge in the way CE professionals explored the framework when considering the Facilitation layer. Often, it was seen as embedded in other layers or as less important in some CE practices, where a facilitator is seen more as the specialist or is absent. Other applications involved using it as an empty matrix to think through a particular co-design event, as a template to be populated with participants’ information, and as a visual aid to create participatory tools. Further research involves exploring the Improvement Matrix with larger groups of practitioners and in other design research areas, such as education or informatics, to see how it would work in practice, tracking changes in the improved tools and the framework over time.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Baibarac, C., and D. Petrescu. 2019. “Co-design and Urban Resilience: Visioning Tools for Commoning Resilience Practices.” CoDesign 15 (2): 91–109. doi:10.1080/15710882.2017.1399145.

- Brandt, E., T. Binder, and E. B.-N. Sanders. 2012. “Tools and Techniques: Ways to Engage Telling, Making and Enacting.” In Routledge International Handbook of Participatory Design, 165–201. New York: Routledge.

- Bratteteig, T., K. Bødker, Y. Dittrich, P. H. Mogensen, and J. Simonsen. 2013. Organising Principles and General Guidelines for Participatory Design Projects. New York: Routledge.

- Büscher, M., S. Gill, P. Mogensen, and D. Shapiro. 2001. “Landscapes of Practice: Bricolage as a Method for Situated Design.” Computer Supported Cooperative Work (CSCW) 10 (1): 1–28. doi:10.1023/A:1011293210539.

- Cockton, G. 2014. “You Must Design Design, Co-Design Included.” Wer gestaltet die Gestaltung? 189–213. doi:10.14361/transcript.9783839420386.189.

- Cramer-Petersen, C. L., B. T. Christensen, and S. Ahmed-Kristensen. 2019. “Empirically Analysing Design Reasoning Patterns: Abductive-deductive Reasoning Patterns Dominate Design Idea Generation.” Design Studies 60: 39–70. doi:10.1016/j.destud.2018.10.001.

- Cruickshank, L., G. Coupe, and D. Hennessy. 2013. “Co-Design: Fundamental Issues and Guidelines for Designers: Beyond the Castle Case Study.” Swedish Design Research Journal 10: 48–57. doi:10.3384/svid.2000-964X.13248.

- Cruickshank, L., and M. Evans. 2012. “Designing Creative Frameworks: Design Thinking as an Engine for New Facilitation Approaches.” International Journal of Arts and Technology 5 (1): 73–85. doi:10.1504/IJART.2012.044337.

- Cruickshank, L., R. Whitham, G. Rice, and H. Alter. 2017. “Designing, Adapting and Selecting Tools for Creative Engagement: A Generative Framework.” Swedish Design Research Journal 15: 42–51. doi:10.3384/svid.2000-964X.17142.

- Davidoff, P. 1965. “Advocacy and Pluralism in Planning.” Journal of the American Institute of Planners 31 (4): 331–338. doi:10.1080/01944366508978187.

- De Waal, G. A., and P. Knott. 2013. “Innovation Tool Adoption and Adaptation in Small Technology-based Firms.” International Journal of Innovation Management 17 (03): 1340012. doi:10.1142/S1363919613400124.

- Dix, A. 2007. “Designing for Appropriation.” Paper Presented at the Proceedings of the 21st British HCI Group Annual Conference on People and Computers: HCI... But Not as We Know it-Volume 2, Lancaster, September 3–7.

- Donetto, S., P. Pierri, V. Tsianakas, and G. Robert. 2015. “Experience-based Co-design and Healthcare Improvement: Realizing Participatory Design in the Public Sector.” The Design Journal 18 (2): 227–248. doi:10.2752/175630615X14212498964312.

- Ehn, P. 2008. “Participation in Design Things.” In Proceedings of the Tenth Anniversary Conference on Participatory Design 2008, 92–101. Bloomington, Indiana: Indiana University.

- Forester, J. 1999. The Deliberative Practitioner: Encouraging Participatory Planning Processes. Cambridge: MIT Press.

- Hooper, K. 1986. “Architectural Design: An Analogy.” In User Centered System Design: New Perspectives on Human-computer Interaction, edited by Donald Norman and Stephen Draper, 9–23. Hillsdale, NJ: L. Erlbaum Associates.

- Iaione, C. 2016. “The CO‐City: Sharing, Collaborating, Cooperating, and Commoning in the City.” American Journal of Economics and Sociology 75 (2): 415–455. doi:10.1111/ajes.12145.

- Illich, I. 1973. Tools for Conviviality. London: Calder and Boyars.

- Johansson-Sköldberg, U., J. Woodilla, and M. Çetinkaya. 2013. “Design Thinking: Past, Present and Possible Futures.” Creativity and Innovation Management 22 (2): 121–146. doi:10.1111/caim.12023.

- Kensing, F., and K. H. Madsen. 1992. “Generating Visions: Future Workshops and Metaphorical Design.” In Design at Work, edited by Joan Greenbaum and Morten Kyng, 155–168. Hillsdale, NJ: L. Erlbaum Associates Inc.

- Lee, J.-J., M. Jaatinen, A. Salmi, T. Mattelmäki, R. Smeds, and M. Holopainen. 2018. “Design Choices Framework for Co-creation Projects.” International Journal of Design 12 (2): 15.

- Lee, Y. 2008. “Design Participation Tactics: The Challenges and New Roles for Designers in the Co-design Process.” CoDesign 4 (1): 31–50. doi:10.1080/15710880701875613.

- Levina, N., and E. Vaast. 2005. “The Emergence of Boundary Spanning Competence in Practice: Implications for Implementation and Use of Information Systems.” MIS Quarterly 29 (2): 335–363. doi:10.2307/25148682.

- Manzini, E. 2015. Design, When Everybody Designs: An Introduction to Design for Social Innovation. Cambridge, Massachusetts: The MIT Press.

- Marttila, S., and A. Botero. 2013. “The ‘Openness Turn’ in Co-design. From Usability, Sociability and Designability towards Openness.” In Co-create 2013: The Boundary-Crossing Conference on Co-Design in Innovation, edited by Riitta Smeds and Olivier Irrmann, 99–111. Espoo, Finland: Aalto University.

- Morris, L., and L. Cruickshank. 2013. “New Design Processes for Knowledge Exchange Tools for the New IDEAS Project.” Paper presented at The Knowledge Exchange: An Interactive Conference, Lancaster, UK.

- Murphy, E., and D. Hands. 2012. “Wisdom of the Crowd: How Participatory Design Has Evolved Design Briefing.” Swedish Design Research Journal 8: 28–37. doi:10.3384/svid.2000-964X.12228.

- NESTA, National Endowment for Science Technology and the Arts. 2014. DIY - Development Impact & You: Practical Tools to Trigger & Support Social Innovation. New York: The Rockefeller Foundation.

- Norman, D. A., and S. W. Draper. 1986. User Centered System Design: New Perspectives on Human-computer Interaction. Hillsdale, NJ: L. Erlbaum Associates.

- Pedersen, S. 2020. “Staging Negotiation Spaces: A Co-design Framework.” Design Studies 68: 58–81. doi:10.1016/j.destud.2020.02.002.

- Peters, D., L. Loke, and N. Ahmadpour. 2020. “Toolkits, Cards and Games – a Review of Analogue Tools for Collaborative Ideation.” CoDesign ahead-of-print (ahead-of-print). doi:10.1080/15710882.2020.1715444.

- Sanders, E., E. Brandt, and T. Binder. 2010. “A Framework for Organizing the Tools and Techniques of Participatory Design.” In Proceedings of the 11th Biennial Participatory Design Conference, 195–198. Sydney, Australia: Association for Computing Machinery.

- Sanders, E., and P. Stappers. 2012. Convivial Toolbox: Generative Research for the Front End of Design. Amsterdam: BIS Publishers.

- Sanders, E. 2002. “Scaffolds for Experiencing in the New Design Space.” In Information Design, edited by Institute for Information Design, 1–6. Tokyo: Graphic-Sha Publishing Co.

- Kemmis, S., and R. McTaggart. 2005. “Participatory Action Research: Communicative Action and the Public Sphere.” In The Sage Handbook of Qualitative Research, 3rd ed., edited by Norman K. Denzin and Yvonna S. Lincoln, 559–604. London: SAGE Publications Ltd.

- Swann, C. 2002. “Action Research and the Practice of Design.” Design Issues 18 (1): 49–61. doi:10.1162/07479360252756287.

- Tassoul, M. 2009. Creative Facilitation. 2nd ed. Delft: VSSD.

- Von Hippel, E. 2001. “PERSPECTIVE: User Toolkits for Innovation.” The Journal of Product Innovation Management 18 (4): 247–257. doi:10.1016/S0737-6782(01)00090-X.

- Von Hippel, E. 2005. Democratizing Innovation. Cambridge, MA: MIT Press.

- Wates, N. 2000. The Community Planning Handbook: How People Can Shape Their Cities, Towns and Villages in Any Part of the World. London: Earthscan.

- Whitham, R., L. Cruickshank, G. Coupe, L. E. Wareing, and P. David. 2019. “Health and Wellbeing: Challenging Co-Design for Difficult Conversations, Successes and Failures of the Leapfrog Approach.” The Design Journal 22 (sup1): 575–587. doi:10.1080/14606925.2019.1595439.

- Wilson, S., A. Roper, J. Marshall, J. Galliers, N. Devane, T. Booth, and C. Woolf. 2015. “Codesign for People with Aphasia through Tangible Design Languages.” CoDesign 11 (1): 1–14. doi:10.1080/15710882.2014.997744.

- Yin, R. K. 2018. Case Study Research and Applications: Design and Methods. 6th ed. Thousand Oaks, California; London: SAGE Publications.

- Zamenopoulos, T., and K. Alexiou. 2018. Co-design as Collaborative Research, Connected Communities Foundation Series. Bristol: Bristol University/AHRC Connected Communities Programme.