?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

High dimensional error covariance matrices and their inverses are used to weight the contribution of observation and background information in data assimilation procedures. As observation error covariance matrices are often obtained by sampling methods, estimates are often degenerate or ill-conditioned, making it impossible to invert an observation error covariance matrix without the use of techniques to reduce its condition number. In this paper, we present new theory for two existing methods that can be used to ‘recondition’ any covariance matrix: ridge regression and the minimum eigenvalue method. We compare these methods with multiplicative variance inflation, which cannot alter the condition number of a matrix, but is often used to account for neglected correlation information. We investigate the impact of reconditioning on variances and correlations of a general covariance matrix in both a theoretical and practical setting. Improved theoretical understanding provides guidance to users regarding method selection, and choice of target condition number. The new theory shows that, for the same target condition number, both methods increase variances compared to the original matrix, with larger increases for ridge regression than the minimum eigenvalue method. We prove that the ridge regression method strictly decreases the absolute value of off-diagonal correlations. Theoretical comparison of the impact of reconditioning and multiplicative variance inflation on the data assimilation objective function shows that variance inflation alters information across all scales uniformly, whereas reconditioning has a larger effect on scales corresponding to smaller eigenvalues. We then consider two examples: a general correlation function, and an observation error covariance matrix arising from interchannel correlations. The minimum eigenvalue method results in smaller overall changes to the correlation matrix than ridge regression but can increase off-diagonal correlations. Data assimilation experiments reveal that reconditioning corrects spurious noise in the analysis but underestimates the true signal compared to multiplicative variance inflation.

1. Introduction

The estimation of covariance matrices for large dimensional problems is of growing interest (Pourahmadi, Citation2013), particularly for the field of numerical weather prediction (NWP) (Weston et al., Citation2014; Bormann et al., Citation2016) where error covariance estimates are used as weighting matrices in data assimilation problems (e.g. Ghil, Citation1989; Daley, Citation1991; Ghil and Malanotte-Rizzoli, Citation1991). At operational NWP centres there are typically measurements every 6 h (Bannister, Citation2017), meaning that observation error covariance matrices are extremely high-dimensional. In nonlinear least-squares problems arising in variational data assimilation, the inverses of correlation matrices are used, meaning that well-conditioned matrices are vital for practical applications (Bannister, Citation2017). This is true in both the unpreconditioned and preconditioned variational data assimilation problem using the control variable transform, as the inverse of the observation error covariance matrix appears in both formulations. The convergence of the data assimilation problem can be poor if either the background or observation variance is small; however, the condition number and eigenvalues of background and observation error covariance matrices have also been shown to be important for convergence in both the unpreconditioned and preconditioned case in Haben et al. (Citation2011a, Citation2011b), Haben (Citation2011), CitationTabeart et al. (2018). Furthermore, the conditioning and solution of the data assimilation system can be affected by complex interactions between the background and observation error covariance matrices and the observation operator (Johnson et al., Citation2005; CitationTabeart et al., 2018). The condition number of a matrix,

provides a measure of the sensitivity of the solution

of the system

to perturbations in

The need for well-conditioned background and observation error covariance matrices motivates the use of ‘reconditioning’ methods, which are used to reduce the condition number of a given matrix.

In NWP applications, observation error covariance matrices are often constructed from a limited number of samples (Cordoba et al., Citation2016; Waller, Ballard, et al., Citation2016; Waller, Simonin, et al., Citation2016). This can lead to problems with sampling error, which manifest in sample covariance matrices, or other covariance matrix estimates, that are very ill-conditioned or can fail to satisfy required properties of covariance matrices (such as symmetry and positive semi-definiteness) (Ledoit and Wolf, Citation2004; Higham et al., Citation2016). In some situations, it may be possible to determine which properties of the covariance matrix are well estimated. One such instance is presented in Skøien and Blöschl (Citation2006), which considers how well we can expect the mean, variance and correlation lengthscale of a sample correlation to represent the true correlation matrix depending on different properties of the measured domain (e.g. sample spacing, area measured by each observation). However, this applies only to direct estimation of correlations and will not apply to diagnostic methods (e.g. Desroziers et al., Citation2005), where transformed samples are used and covariance estimates may be poor. We note that in this paper, we assume that the estimated covariance matrices used in our experiments represent the desired correlation information matrix well and that differences are due to noise rather than neglected sources of uncertainty. This may not be the case for practical situations, where reconditioning may need to be performed in conjunction with other techniques to compensate for the underestimation of some sources of error.

Depending on the application, a variety of methods have been used to combat the problem of rank deficiency of sample covariance matrices. In the case of spatially correlated errors, it may be possible to fit a smooth correlation function or operator to the sample covariance matrix as was done in Simonin et al. (Citation2019) and Guillet et al. (Citation2019), respectively. Another approach is to retain only the first k leading eigenvectors of the estimated correlation matrix and to add a diagonal matrix to ensure the resulting covariance matrix has full rank (Stewart et al., Citation2013; Michel, Citation2018). However, this has been shown to introduce noise at large scales for spatial correlations and may be expensive in terms of memory and computational efficiency (Michel, Citation2018). Although localisation can be used to remove spurious correlations, and can also be used to increase the rank of a degenerate correlation matrix (Hamill et al., Citation2001), it struggles to reduce the condition number of a matrix without destroying off-diagonal correlation information (Smith et al., Citation2018). A further way to increase the rank of a matrix is by considering a subset of columns of the original matrix that are linearly independent. This corresponds to using a subset of observations, which is contrary to a key motivation for using correlated observation error statistics: the ability to include a larger number of observations in the assimilation system (Janjić et al., Citation2018). Finally, the use of transformed observations may result in independent observation errors (Migliorini, Citation2012; Prates et al., Citation2016); however, problems with conditioning will manifest in other components of the data assimilation algorithm, typically the observation operator. Therefore, although other techniques to tackle the problem of ill-conditioning exist, they each have limitations. This suggests that for many applications the use of reconditioning methods, which we will show are inexpensive to implement and are not limited to spatial correlations, may be beneficial.

We note that small eigenvalues of the observation error covariance matrix are not the only reason for slow convergence: if observation error standard deviations are small, the observation error covariance matrix may be well-conditioned, but convergence of the minimisation problem is likely to be poor (Haben, Citation2011; Tabeart et al., Citation2018). In this case reconditioning may not improve convergence and performance of the data assimilation routine.

Two methods, in particular, referred to in this work as the minimum eigenvalue method and ridge regression, are commonly used at NWP centres. Both methods are used by Weston (Citation2011), where they are tested numerically. Additionally, in Campbell et al. (Citation2017), a comparison between these methods is made experimentally and it is shown that reconditioning improves convergence of a dual four-dimensional variational assimilation system. However, up to now there has been minimal theoretical investigation into the effects of these methods on the covariance matrices. In this paper, we develop theory that shows how variances and correlations are altered by the application of reconditioning methods to a covariance matrix.

Typically reconditioning is applied to improve convergence of a data assimilation system by reducing the condition number of a matrix. However, the convergence of a data assimilation system can also be improved using multiplicative variance inflation, a commonly used method at NWP centres such as ECMWF (Liu and Rabier, Citation2003; McNally et al., Citation2006; Bormann et al., Citation2015, Citation2016) to account for neglected error correlations or to address deficiencies in the estimated error statistics by increasing the uncertainty in observations. It is not a method of reconditioning when a constant inflation factor is used, as it cannot change the condition number of a covariance matrix. In practice, multiplicative variance inflation is often combined with other techniques, such as neglecting off-diagonal error correlations, which do alter the conditioning of the observation error covariance matrix.

Although it is not a reconditioning technique, in Bormann et al. (Citation2015) multiplicative variance inflation was found to yield faster convergence of a data assimilation procedure than either the ridge regression or minimum eigenvalue methods of reconditioning. This finding is likely to be system-dependent; the original diagnosed error covariance matrix in the ECMWF system has a smaller condition number than the corresponding matrix for the same instrument in the Met Office system (Weston et al., Citation2014). Additionally, in the ECMWF system, the use of reconditioning methods only results in small improvements to convergence, and there is little difference in convergence speed for the two methods. This contrasts with the findings of Weston (Citation2011), Weston et al. (Citation2014), and Campbell et al. (Citation2017) where differences in convergence speed when using each method of reconditioning were found to be large. Therefore, it is likely that the importance of reducing the condition number of the observation error covariance matrix compared to inflating variances will be sensitive to the data assimilation system of interest. Aspects of the data assimilation system that may be important in determining the level of this sensitivity include: the choice of preconditioning and minimisation scheme (Bormann et al., Citation2015), quality of the covariance estimate, interaction between background and estimated observation error covariance matrices within the data assimilation system (Fowler et al., Citation2018; Tabeart et al., Citation2018), the use of thinning and different observation networks. We also note that Stewart et al. (Citation2009, Citation2013) and Stewart (Citation2010) consider changes to the information content and analysis accuracy corresponding to different approximations to a correlated observation error covariance matrix (including an inflated diagonal matrix). Stewart et al. (Citation2013) and Healy and White (Citation2005) also provide evidence in idealized cases to show that inclusion of even approximate correlation structure gives significant benefit over diagonal matrix approximations, including when variance inflation is used.

In this work, we investigate the minimum eigenvalue and ridge regression methods of reconditioning as well as multiplicative variance inflation, and analyse their impact on the covariance matrix. We compare both methods theoretically for the first time, by considering the impact of reconditioning on the correlations and variances of the covariance matrix. We also study how each method alters the objective function when applied to the observation error covariance matrix. Other methods of reconditioning, including thresholding (Bickel and Levina, Citation2008) and localisation (Horn and Johnson, Citation1991; Ménétrier et al., Citation2015; Smith et al., Citation2018) have been discussed from a theoretical perspective in the literature but will not be included in this work. In Section 2, we describe the methods more formally than in previous literature before developing new related theory in detail in Section 3. We show that the ridge regression method increases the variances and decreases the correlations for a general covariance matrix and the minimum eigenvalue method increases variances. We prove that the increases to the variance are bigger for the ridge regression method than the minimum eigenvalue method for any covariance matrix. We show that both methods of reconditioning reduce the weight on observation information in the objective function in a scale-dependent way, with the largest reductions in weight corresponding to the smallest eigenvalues of the original observation error covariance matrix. In contrast, multiplicative variance inflation using a constant inflation factor reduces the weight on observation information by a constant amount for all scales. In Section 4, the methods are illustrated via numerical experiments for two types of covariance structures. One of these is a simple general correlation function, and one is an interchannel covariance arising from a satellite-based instrument with observations used in NWP. We provide physical interpretation of how each method alters the covariance matrix, and use this to provide guidance on which method of reconditioning is most appropriate for a given application. We present an illustration of how all three methods alter the analysis of a data assimilation problem, and relate this to the theoretical conclusions concerning the objective function. We finally present our conclusions in Section 5. The methods are very general and, although their initial application was to observation error covariances arising from NWP, the results presented here apply to any sampled covariance matrix, such as those arising in finance (Higham, Citation2002; Qi and Sun, Citation2010) and neuroscience (Schiff, Citation2011; Nakamura and Potthast, Citation2015).

2. Covariance matrix modification methods

We begin by defining the condition number. The condition number provides a measure of how sensitive the solution of a linear equation

is to perturbations in the data

A ‘well-conditioned problem’ will result in small perturbations to the solution with small changes to

whereas for an ‘ill-conditioned problem’, small perturbations to

can result in large changes to the solution. Noting that all covariance matrices are positive semi-definite by definition, we distinguish between the two cases of strictly positive definite covariance matrices and covariance matrices with zero minimum eigenvalue. Symmetric positive definite matrices admit a definition for the condition number in terms of their maximum and minimum eigenvalues. For the remainder of the work, we define the eigenvalues of a symmetric positive semi-definite matrix

via:

(1)

(1)

Theorem 1.

If is a symmetric positive definite matrix with eigenvalues defined as in Equation(1) we can write the condition number in the L2 norm as

Proof.

See Golub and Van Loan (Citation1996, Sec. 2.7.2). □

For a singular covariance matrix, the convention is to take

(Trefethen and Bau, Citation1997, Sec. 12). We also note that real symmetric matrices admit orthogonal eigenvectors which can be normalised to produce a set of orthonormal eigenvectors.

Let be a positive semi-definite covariance matrix with condition number

We wish to recondition

to obtain a covariance matrix with condition number κmax

(2)

(2)

where the value of κmax is chosen by the user. We denote the eigendecomposition of

by

(3)

(3)

where

is the diagonal matrix of eigenvalues of

and

is a corresponding matrix of orthonormal eigenvectors.

In addition to considering how the covariance matrix itself changes with reconditioning, it is also of interest to consider how the related correlations and standard deviations are altered. We decompose as

where

is a correlation matrix, and

is a non-singular diagonal matrix of standard deviations. We calculate

and

via:

(4)

(4)

We now introduce the ridge regression method and the minimum eigenvalue method, the two methods of reconditioning that will be discussed in this work. We then define multiplicative variance inflation. This last method is not a method of reconditioning, but will be used for comparison purposes with the ridge regression and minimum eigenvalues methods.

2.1. Ridge regression method

The ridge regression method (RR) adds a scalar multiple of the identity to to obtain the reconditioned matrix

The scalar δ is set using the following method.

Define

Set

In the literature (Hoerl and Kennard, Citation1970; Ledoit and Wolf, Citation2004), ‘ridge regression’ is a method used to regularise least squares problems. In this context, ridge regression can be shown to be equivalent to Tikhonov regularisation (Hansen, Citation1998). However, in this paper, we apply ridge regression as a reconditioning method directly to a covariance matrix. For observation error covariance matrices, the reconditioned matrix is then inverted prior to its use as a weighting matrix in the data assimilation objective function. As we are only applying the reconditioning to a single component matrix in the variational formulation, the implementation of the ridge regression method used in this paper is not equivalent to Tikhonov regularisation applied to the variational data assimilation problem (Budd et al., Citation2011; Moodey et al., Citation2013). This is shown in Section 3.5 where we consider how applying ridge regression to the observation error covariance matrix affects the variational data assimilation objective function. The ridge regression method is used at the Met Office (Weston et al., Citation2014).

2.2. Minimum eigenvalue method

The minimum eigenvalue method (ME) fixes a threshold, T, below which all eigenvalues of the reconditioned matrix, are set equal to the threshold value. The value of the threshold is set using the following method.

Set

Define

where κmax is defined in (Equation2

(2)

(2) ).

Set the remaining eigenvalues of

via

(5)

(5)

Construct the reconditioned matrix via

where

Under the condition that where l is the index such that

the minimum eigenvalue method is equivalent to minimising the difference

with respect to the Ky-Fan 1-d norm (the proof is provided in Appendix). The Ky-Fan p – k norm (also referred to as the trace norm) is defined in Fan (Citation1959) and Horn and Johnson (Citation1991), and is used in Tanaka and Nakata (Citation2014) to find the closest positive definite matrix with condition number smaller than a given constant. A variant of the minimum eigenvalue method is applied to observation error covariance matrices at the European Centre for Medium-Range Weather Forecasts (ECMWF) (Bormann et al., Citation2016).

2.3. Multiplicative variance inflation

Multiplicative variance inflation (MVI) is a method that increases the variances corresponding to a covariance matrix. Its primary use is to account for neglected error correlation information, particularly in the case where diagonal covariance matrices are being used even though non-zero correlations exist in practice. However, this method can also be applied to non-diagonal covariance matrices.

Definition 1.

Let be a given variance inflation factor and

be the estimated covariance matrix. Then multiplicative variance inflation is defined by

(7)

(7)

This is equivalent to multiplying the estimated covariance matrix by a constant. The updated covariance matrix is given by

(8)

(8)

The estimated covariance matrix is therefore multiplied by the square of the inflation constant. We note that the correlation matrix,

, is unchanged by application of multiplicative variance inflation.

Multiplicative variance inflation is used at NWP centres including ECMWF (Bormann et al., Citation2016) to counteract deficiencies in estimated error statistics, such as underestimated or neglected sources of error. Inflation factors are tuned to achieve improved analysis or forecast performance, and are hence strongly dependent on the specific data assimilation system of interest. Aspects of the system that might influence the choice of inflation factor include observation type, known limitations of the covariance estimate, and observation sampling or thinning.

Although variance inflation is not a method of reconditioning, as it is not able to alter the condition number of a covariance matrix, we include it in this paper for comparison purposes. This means that variance inflation can only be used in the case that the estimated matrix can be inverted directly, i.e. is full rank. Multiplicative variance inflation could also refer to the case where the constant inflation factor is replaced with a diagonal matrix of inflation factors. In this case, the condition number of the altered covariance matrix would change. An example of a study where multiple inflation factors are used is given by Heilliette and Garand (Citation2015), where the meteorological variable to which an observation is sensitive determines the choice of inflation factor. However, this is not commonly used in practice, and will not be considered in this paper.

3. Theoretical considerations

In this section, we develop new theory for each method. We are particularly interested in the changes made to and

for each case. Increased understanding of the effect of each method may allow users to adapt or extend these methods, or determine which is the better choice for practical applications.

We now introduce an assumption that will be used in the theory that follows.

Main Assumption: Let be a symmetric positive semi-definite matrix with

We remark that any symmetric, positive semi-definite matrix with is a scalar multiple of the identity, and cannot be reconditioned since it is already at its minimum possible value of unity. Hence in what follows, we will consider only matrices

that satisfy the Main Assumption.

3.1. Ridge regression method

We begin by discussing the theory of RR. In particular, we prove that applying this method for any positive scalar, δ, results in a decreased condition number for any choice of

Theorem 2.

Under the conditions of the Main Assumption, adding any positive increment to the diagonal elements of decreases its condition number.

Proof.

We recall that The condition number of

is given by

(9)

(9)

It is straightforward to show that for any completing the proof. □

We now consider how application of RR affects the correlation matrix and the diagonal matrix of standard deviations

Theorem 3.

Under the conditions of the Main Assumption, the ridge regression method updates the standard deviation matrix , and correlation matrix

of

via

(10)

(10)

Proof.

Using (Equation4(4)

(4) ),

Substituting this into the expression for

yields:

(11)

(11)

Considering the components of and the decomposition of

given by (Equation4

(4)

(4) ):

(12)

(12)

as required. □

Theorem 3 shows how we can apply RR to our system by updating and

rather than

We observe, from (Equation10

(10)

(10) ), that applying RR leads to a constant increase to variances for all variables. However, the inflation to standard deviations is additive, rather than the multiplicative inflation that occurs for multiplicative variance inflation. We now show that RR also reduces all non-diagonal entries of the correlation matrix.

Corollary 1.

Under the conditions of the Main Assumption, for

Proof.

Writing the update equation for given by (Equation10

(10)

(10) ), in terms of the variance and correlations of

yields:

(13)

(13)

We consider for

As

and

are diagonal matrices, we obtain

(14)

(14)

From the update Equationequation ((10)

(10) Equation10

(10)

(10) Equation)

(10)

(10) ,

for any choice of i. This means that

for any choice of i. Using this in (Equation14

(14)

(14) ) yields that for all values of i, j with

as required.

For i = j, it follows from (Equation13(13)

(13) ) that

for all values of i. □

3.2. Minimum eigenvalue method

We now discuss the theory of ME as introduced in Section 2.2. Using the alternative decomposition of given by (Equation6

(6)

(6) ) enables us to update directly the standard deviations for this method.

Theorem 4.

Under the conditions of the Main Assumption, the minimum eigenvalue method updates the standard deviations, , of

via

(15)

(15)

This can be bounded by

(16)

(16)

Proof.

(17)

(17)

(18)

(18)

Noting that for all values of k, we bound the second term in this expression by

(19)

(19)

(20)

(20)

This inequality follows from the orthonormality of and by the fact that

by definition. □

Due to the way the spectrum of is altered by ME, it is not evident how correlation entries are altered in general for this method of reconditioning.

3.3. Multiplicative variance inflation

We now discuss theory of MVI that was introduced in Section 2.3. We prove that MVI is not a method of reconditioning, as it does not change the condition number of a covariance matrix.

Theorem 5.

Multiplicative variance inflation with a constant inflation parameter cannot change the condition number or rank of a matrix.

Proof.

Let be our multiplicative inflation constant such that

The eigenvalues of

are given by

If is rank-deficient, then

and hence

is also rank deficient. If

is full rank then we can compute the condition number of

as the ratio of its eigenvalues, which yields

(21)

(21)

Hence, the condition number and rank of are unchanged by multiplicative inflation. □

3.4. Comparing ridge regression and minimum eigenvalue methods

Both RR and ME change by altering its eigenvalues. In order to compare the two methods, we can consider their effect on the standard deviations. We recall from Sections 3.1 and 3.2 that RR increases standard deviations by a constant and the changes to standard deviations by ME can be bounded above and below by a constant.

Corollary 2.

Under the conditions of the Main Assumption, for a fixed value of for all values of i.

Proof.

From Theorems 3 and 4, the updated standard deviation values are given by

(22)

(22)

From the definitions of δ and T, we obtain that

(23)

(23)

We conclude that the increment to the standard deviations for RR is always larger than the increment for ME. □

3.5. Comparison of methods of reconditioning and multiplicative variance inflation on the variational data assimilation objective function

We demonstrate how RR, ME and MVI alter the objective function of the variational data assimilation problem when applied to the observation error covariance matrix. We consider the 3D-Var objective function here for simplicity of notation, although the analysis extends naturally to the 4D-Var case. We begin by defining the 3D-Var objective function of the variational data assimilation problem.

Definition 2.

The objective function of the variational data assimilation problem is given by

(24)

(24)

where

is the background or prior,

is the vector of observations,

is the observation operator mapping from control vector space to observation space,

is the background error covariance matrix, and

is the observation error covariance matrix. Let Jo denote the observation term in the objective function and Jb denote the background term in the objective function.

In order to compare the effect of using each method, they are applied to the observation error covariance matrix in the variational objective function (Equation24(24)

(24) ). We note that analogous results hold if all methods are applied to the background error covariance matrix in the objective function. We begin by presenting the three updated objective functions, and then discuss the similarities and differences for each method together at the end of Section 3.5. We first consider how applying RR to the observation error covariance matrix alters the variational objective function (Equation24

(24)

(24) ).

Theorem 6.

By applying RR to the observation error covariance matrix we alter the objective function (Equation24(24)

(24) ) as follows:

(25)

(25)

where

is a diagonal matrix with entries given by

Proof.

We denote the eigendecomposition of as in (Equation3

(3)

(3) ). Applying RR to the observation error covariance matrix,

we obtain

(26)

(26)

We then calculate the inverse of

and express this in terms of

and an update term:

(27)

(27)

(28)

(28)

(29)

(29)

Substituting (Equation29

(29)

(29) ) into (Equation24

(24)

(24) ), and defining

as in the theorem statement we can write the objective function using the reconditioned observation error covariance matrix as (Equation25

(25)

(25) ). □

The effect of RR on the objective function differs from the typical application of Tikhonov regularisation to the variational objective function (Budd et al., Citation2011; Moodey et al., Citation2013). In particular, we subtract a term from the original objective function rather than adding one, and the term depends on the eigenvectors of as well as the innovations (differences between observations and the background field in observation space). Writing the updated objective function as in (Equation25

(25)

(25) ) shows that the size of the original objective function (Equation24

(24)

(24) ) is decreased when RR is used. Specifically, as we discuss later, the contribution of small-scale information to the observation term, Jo, is reduced by the application of RR.

We now consider how applying ME to the observation error covariance matrix alters the objective function (Equation24(24)

(24) ).

Theorem 7.

By applying ME to the observation error covariance matrix we alter the objective function (Equation24(24)

(24) ) as follows:

(30)

(30)

where

(31)

(31)

Proof.

We begin by applying ME and decomposing as in (Equation6

(6)

(6) ):

(32)

(32)

Therefore, calculating the inverse of the reconditioned matrix yields

(33)

(33)

As this is full rank, we can calculate the inverse of the diagonal matrix

(34)

(34)

(35)

(35)

Defining as in the theorem statement, and we can write

as

(36)

(36)

Substituting this into the definition of the objective function (Equation24

(24)

(24) ) we obtain the result given in the theorem statement. □

As is non-zero only for eigenvalues smaller than the threshold T, the final term of the updated objective function (Equation30

(30)

(30) ) reduces the weight on eigenvectors corresponding to those small eigenvalues. As all the entries of

are non-negative, the size of the observation term in the original objective function (Equation24

(24)

(24) ) is decreased when ME is used.

Finally, we consider the impact on the objective function of using MVI. We note that this can only be applied in the case that the estimated error covariance matrix is invertible as, by the result of Theorem 5, variance inflation cannot change the rank of a matrix.

Theorem 8.

In the case that is invertible, the application of MVI to the observation error covariance matrix alters the objective function (Equation24

(24)

(24) ) as follows

(37)

(37)

Proof.

By Definition 1, for inflation parameter α. The inverse of

is given by

(38)

(38)

Substituting this into (Equation24(24)

(24) ) yields the updated objective function given by (Equation37

(37)

(37) ). □

For both reconditioning methods, the largest relative changes to the spectrum of occur for its smallest eigenvalues. In the case of positive spatial correlations, small eigenvalues are typically sensitive to smaller scales. For spatial correlations, weights on scales of the observations associated with Johnson et al. (Citation2005) showed how, when changing the relative weights of the background and observation terms by inflating the ratio of observation and background variances, it is the complex interactions between the error covariance matrices and the observation operator that affects which scales are present in the analysis. This suggests that in the preconditioned setting, MVI will also alter the sensitivity of the analysis to different scales.

We also see that for RR and ME smaller choices of κmax yield larger reductions to the weight applied to small scale observation information. For RR, a smaller target condition number results in a larger value of δ, and hence larger diagonal entries of Λδ. For ME, a smaller target condition number yields a larger threshold, T, and hence larger diagonal entries of . This means that the more reconditioning that is applied, the less weight the observations will have in the analysis. This reduction in observation weighting is different for the two methods; RR reduces the weight on all observations, although the relative effect is larger for scales corresponding to the smallest eigenvalues, whereas ME only reduces weight for scales corresponding to eigenvalues smaller than the threshold T. In ME, the weights on scales for eigenvalues larger than T are unchanged.

Applying MVI with a constant inflation factor also reduces the contribution of observation information to the analysis. In contrast to both methods of reconditioning, the reduction in weight is constant for all scales and does not depend on the eigenvectors of R. This means that there is no change to the sensitivity to different scales using this method. The analysis will simply pull closer to the background data with the same relative weighting between different observations as occurred for analyses using the original estimated observation error covariance matrix.

We have considered the impact of RR, ME and MVI on the unpreconditioned 3D-Var objective function. For the preconditioned case, Johnson et al. [Citation2005] showed how, when changing the relative weights of the background and observation terms by inflating the ratio of observation and background variances, it is the complex interactions between the error covariance matrices and the observation operator that affects which scales are present in the analysis. This suggests that in the preconditioned setting MVI will also alter the sensitivity of the analysis to different scales.

4. Numerical experiments

In this section, we consider how reconditioning via RR and ME and application of MVI affects covariance matrices arising from two different choices of estimated covariance matrices. Both types of covariance matrix are motivated by NWP, although similar structures occur for other applications.

4.1. Numerical framework

The first covariance matrix is constructed using a second-order auto-regressive (SOAR) correlation function (Yaglom, Citation1987) with lengthscale 0.2 on a unit circle. This correlation function is used in NWP systems (Thiebaux, Citation1976; Stewart et al., Citation2013; Waller, Dance, et al., Citation2016; Fowler et al., Citation2018; Tabeart et al., Citation2018) where its long tails approximate the estimated horizontal spatial correlation structure well. In order to construct a SOAR error correlation matrix, S, on the finite domain, we follow the method described in Haben (Citation2011) and CitationTabeart et al. (2018). We consider a one-parameter periodic system on the real line, defined on an equally spaced grid with N = 200 grid points. We restrict observations to be made only at regularly spaced grid points. This yields a circulant matrix where the matrix is fully defined by its first row. To ensure the corresponding covariance matrix is also circulant, we fix the standard deviation value for all variables to be

One benefit of using this numerical framework is that it allows us to calculate a simple expression for the update to the standard deviations for ME. We recall that RR updates the variances by a constant, δ. We now show that in the case where is circulant, ME also updates the variances of

by a constant.

Circulant matrices admit eigenvectors which can be computed directly via a discrete Fourier transform (Gray, Citation2006) (via where

denotes conjugate transpose). This allows the explicit calculation of the ME standard deviation update given by Equation(15)

(15)

(15) as

(39)

(39)

This follows from (15) because the circulant structure of the SOAR matrix yields

We therefore expect both reconditioning methods to increase the SOAR standard deviations by a constant amount. As the original standard deviations were constant, this means that reconditioning will result in constant standard deviations for all variables. These shall be denoted for RR and

for ME. Constant changes to standard deviations also mean that an equivalent MVI factor that corresponds to the change can be calculated. This will be denoted by α.

Our second covariance matrix comprises interchannel error correlations for a satellite-based instrument. For this, we make use of the Infrared Atmospheric Sounding Instrument (IASI) which is used at many NWP centres within data assimilation systems. A covariance matrix for IASI was diagnosed in 2011 at the Met Office, following the procedure described in Weston (Citation2011) and Weston et al. (Citation2014) (shown in Online Resource 1). The diagnosed matrix was extremely ill-conditioned and required the application of the ridge regression method in order that the correlated covariance matrix could be used in the operational system. We note that we follow the reconditioning procedure of Weston et al. (Citation2014), where the reconditioning method is only applied to the subset of 137 channels that that are used in the Met Office 4D-Var system. These channels are listed in Stewart et al. (Citation2008, Appendix). As the original standard deviation values are not constant across different channels, reconditioning will not change them by a constant amount, as is the case for Experiment 1. We note that the 137 × 137 matrix considered in this paper corresponds to the covariance matrix for one ‘observation’ at a single time and spatial location. The observation error covariance matrix for all observations from this instrument within a single assimilation cycle is a block diagonal matrix, with one block for every observation, each consisting of a submatrix of the 137 × 137 matrix.

In the experiments presented in Section 4.2, we apply the minimum eigenvalue and the ridge regression methods to both the SOAR and IASI covariance matrices. The condition number before reconditioning of the SOAR correlation matrix is 81,121.71 and for the IASI matrix, we obtain a condition number of 2005.98. We consider values of κmax in the range for both tests. We note that the equivalence of the minimum eigenvalue method with the minimiser of the Ky-Fan

norm is satisfied for the SOAR experiment for

and the IASI experiment for

4.2. Results

4.2.1. Changes to the covariance matrix

Example 1:

Horizontal correlations using a SOAR correlation matrix

Due to the specific circulant structure of the SOAR matrix and constant value of standard deviations for all variables Equation(10)(10)

(10) and Equation(39)

(39)

(39) indicate that we expect increases to standard deviations for both methods of reconditioning to be constant. This was found to be the case numerically. In , the computed change in standard deviation for different values of κmax is given as an absolute value and as α, the multiplicative inflation constant that yields the same change to the standard deviation as each reconditioning method. We note that in agreement with the result of Corollary 2 the variance increase is larger for the RR than the ME for all choices of κmax. Reducing the value of κmax increases the change to standard deviations for both methods of reconditioning. The increase to standard deviations will result in the observations being down-weighted in the analysis. As this occurs uniformly across all variables for both methods, we expect the analysis to pull closer to the background. Nevertheless, we expect this to be a rather small effect. For this example, even for a small choice of κmax the values of the equivalent multiplicative inflation constant, α, is small, with the largest value of

occurring for RR for

Table 1 Change in standard deviation for the SOAR covariance matrix for both methods of reconditioning.

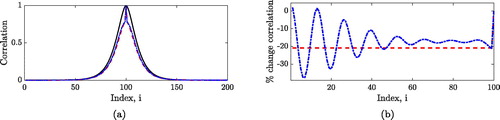

As the SOAR matrix is circulant, we can consider the impact of reconditioning on its correlations by focusing on one matrix row. In , the correlations and percentage change for the 100th row of the SOAR matrix are shown for both methods for These values are calculated directly from the reconditioned matrix. We note that by definition of a correlation matrix,

i for all choices of reconditioning. This is the reason for the spike in correlation visible in the centre of and on the right of . As multiplicative variance inflation does not change the correlation matrix, the black line corresponding to the correlations of the original SOAR matrix also represents the correlations in the case of multiplicative inflation. We also remark that although ME is not equivalent to the minimiser of the Ky-Fan

norm for

the qualitative behaviour in terms of correlations and standard deviations is the same for all values in the range

It is important to note that ME is still a well-defined method of reconditioning even if it is not equivalent to the minimiser of the Ky-Fan

norm.

Fig. 1. Changes to correlations between the original SOAR matrix and the reconditioned matrices for (a) shows

(black solid),

(red dashed),

(blue dot-dashed) (b) shows

(red dashed) and

(blue dot-dashed). As the SOAR matrix is symmetric, we only plot the first 100 entries for (b).

shows that for both methods, application of reconditioning reduces the value of off-diagonal correlations for all variables, with the largest absolute reduction occurring for variables closest to the observed variable. Although there is a large change to the off-diagonal correlations, we notice that the correlation lengthscale, which determines the rate of decay of the correlation function, is only reduced by a small amount. This shows that both methods of reconditioning dampen correlation values but do not significantly alter the overall pattern of correlation information. shows the percentage change to the original correlation values after reconditioning is applied. For RR, although the difference between the original correlation value and the reconditioned correlation depends on the index i, the relative change is constant across all off-diagonal correlations. As MVI does not alter the correlation matrix, it would correspond to a horizontal line through 0 for .

When we directly plot the correlation values for the original and reconditioned matrices in , the change to correlations for ME appears very similar to changes for RR. However, when we consider the percentage change to correlation in , we see oscillation in the percentage differences of the ME correlations, showing that the relative effect on some spatially distant variables can be larger than for some spatially close variables. The spatial impact on individual variables differs significantly for this method. We also note that ME increases some correlation values. These are not visible in due to entries in the original correlation matrix that are close to zero. Although the differences between and

far from the diagonal are small, small correlation values in the tails of the original SOAR matrix mean that when considering the percentage difference we obtain large values, as seen in . This suggests that RR is a more appropriate method to use in this context, as the reconditioned matrix represents the initial correlation function better than ME, where spurious oscillations are introduced. These oscillations occur as ME changes the weighting of eigenvectors of the covariance matrix. As the eigenvectors of circulant matrices can be expressed in terms of discrete Fourier modes, ME has the effect of amplifying the eigenvalues corresponding to the highest frequency eigenvectors. This results in the introduction of spurious oscillations in correlation space.

Both methods reduce the correlation lengthscale of the error covariance matrix. In CitationTabeart et al. (2018), it was shown that reducing the lengthscale of the observation error covariance matrix decreases the condition number of the Hessian of the 3D-Var objective function and results in improved convergence of the minimisation problem. Hence, the application of reconditioning methods to the observation error covariance matrix is likely to improve convergence of the overall data assimilation problem. Fowler et al. (Citation2018) studied the effect on the analysis of complex interactions between the background error correlation lengthscale, the observation error correlation lengthscale and the observation operator in idealised cases. Their findings for a fixed background error covariance, and direct observations, indicate that the effect of reducing the observation error correlation lengthscale (as in the reconditioned cases) is to increase the analysis sensitivity to the observations at larger scales. In other words, more weight is placed on the large-scale observation information content and less weight on the small scale observation information content. This corresponds with the findings of Section 3.5, where we proved that both methods of reconditioning reduce the weight on small scale observation information in the variational objective function. However, the lengthscale imposed by a more complex observation operator could modify these findings.

Example 2:

Interchannel correlations using an IASI covariance matrix

We now consider the impact of reconditioning on the IASI covariance matrix. We note that there is significant structure in the diagnosed correlations (see online resource 1), with blocks of highly correlated channels in the lower right-hand region of the matrix. We now consider how RR, ME and MVI change the variances and correlations of the IASI matrix.

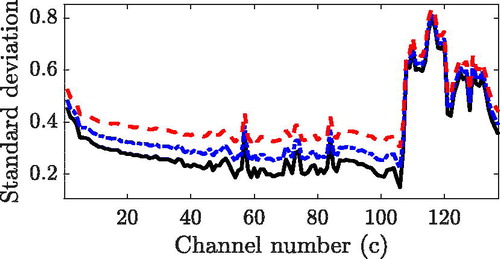

shows the standard deviations and

These are calculated from the reconditioned matrices, but the values coincide with the theoretical results of Theorems 3 and 4. Standard deviation values for the original diagnosed case have been shown to be close to estimated noise characteristics of the instrument for each of the different channels (Stewart et al., Citation2014). We note that the largest increase to standard deviations occurs for channel 106 only and corresponds to a multiplicative inflation factor for this channel of 2.02 for RR and 1.81 for ME. Channel 106 is sensitive to water vapour and is the channel in the original diagnosed covariance matrix with the smallest standard deviation. The choice of

is of a similar size to the value of the parameters used at NWP centres (Weston, Citation2011; Weston et al., Citation2014; Bormann et al., Citation2016). This means that in practice, the contribution of observation information from channels where instrument noise is low is being substantially reduced.

Fig. 2. Standard deviations for the IASI covariance matrix (black solid),

(red dashed),

(blue dot-dashed) for

Channels are ordered by increasing wavenumber, and are grouped by type. We expect different wavenumbers to have different physical properties, and therefore different covariance structures. In particular, larger standard deviations are expected for higher wavenumbers due to additional sources of error (Weston et al., Citation2014), which is observed on the right-hand side of . For RR, larger increases to standard deviations are seen for channels with smaller standard deviations for the original diagnosed matrix than those with large standard deviations. This also occurs to some extent for ME, although we observe that the update term in Equation(15)(15)

(15) is not constant in this case. This means that the reduction in weight in the analysis will not be uniform across different channels for ME. The result of Corollary 2 is satisfied; the increase to the variances is larger for RR than ME. This is particularly evident for channels where the variance from the original diagnosed covariance matrix is small. As MVI increases standard deviations by a constant factor, the largest changes for this method would occur for channels with large standard deviations in the original diagnosed matrix. This is in contrast to RR, where the largest changes occur for the channels in the original diagnosed matrix with the smallest standard deviation.

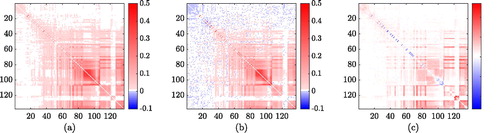

shows the difference between the diagnosed correlation matrix, and the reconditioned correlation matrices

and

As some correlations in the original IASI matrix are negative, we plot the entries of

and

in respectively. Here

denotes the Hadamard product, which multiplies matrices of the same dimension elementwise. This allows us to determine whether the magnitude of the correlation value is reduced by the reconditioning method; a positive value indicates that the reconditioning method reduces the magnitude of the correlation, whereas a negative value indicates an increase in the correlation magnitude. For RR, all differences are positive, which agrees with the result of Theorem 3. As MVI does not change the correlation matrix, an equivalent figure for this method is not given. We also note that there is a recognisable pattern in , with the largest reductions occurring for the channels in the original diagnosed correlation matrix which were highly correlated. This indicates that this method of reconditioning does not affect all channels equally.

Fig. 3. Difference in correlations for IASI (a) (b)

and (c)

where

denotes the Hadamard product. Red indicates that the absolute correlation is decreased by reconditioning and blue indicates the absolute correlation is increased. The colourscale is the same for (a) and (b) but different for (c). Condition numbers of the corresponding covariance matrices are given by

and

For ME, we notice that there are a number of entries where the absolute correlations are increased after reconditioning. There appears to be some pattern to these entries, with a large number occurring in the upper left-hand block of the matrix for channels with the smallest wavenumber (Weston et al., Citation2014). However, away from the diagonal for channels 0–40, where changes by RR are very small, the many entries where absolute correlations are increased by ME are much more scattered. This more noisy change to the correlations could be due to the fact that 96 eigenvalues are set to be equal to a threshold value by the minimum eigenvalue method in order to attain One method to reduce noise was suggested in Smith et al. (Citation2018), which showed that applying localization methods (typically used to reduce spurious long-distance correlations that arise when using ensemble covariance matrices via the Schur product) after the reconditioning step can act to remove noise while retaining covariance structure.

For positive entries, the structure of appears similar to that of RR. There are some exceptions, however, such as the block of channels 121–126 where changes in correlation due to ME are small, but correlations are changed by quite a large amount for RR. The largest elementwise difference between RR and the original diagnosed correlation matrix is 0.138, whereas the largest elementwise difference between ME and the original diagnosed correlation matrix is 0.0036. The differences between correlations for ME and RR are shown in .

For both methods, although the absolute value of all correlations is reduced, correlations for channels 1–70 are eliminated. This has the effect of emphasising the correlations for channels that are sensitive to water vapour. Weston et al. (Citation2014) and Bormann et al. (Citation2016) argue that much of the benefit of introducing correlated observation error for this instrument can be related to the inclusion of correlated error information for water vapour sensitive channels. Therefore, although the changes to the original diagnosed correlation matrix are large it is likely that a lot of the benefit of using correlated observation error matrices is retained.

We also note that it is more difficult to choose the best reconditioning method in this setting, due to the complex structure of the original diagnosed correlation matrix. In particular, improved understanding of how each method alters correlations and standard deviations is not enough to determine which method will perform best in an assimilation system. One motivation of reconditioning is to improve convergence of variational data assimilation algorithms. Therefore, one aspect of the system that can be used to select the most appropriate method of reconditioning is the speed of convergence. As ME results in repeated eigenvalues, we would expect faster convergence of conjugate gradient methods applied to the problem for

for ME than RR. However, Campbell et al. (Citation2017), Weston (Citation2011), Weston et al. (Citation2014) and Bormann et al. (Citation2015) find that RR results in faster convergence than ME for operational variational implementations. This is likely due to interaction between the reconditioned observation error covariance matrix and the observation operator, as the eigenvalues of

are shown to be important for the conditioning of the variational data assimilation problem in CitationTabeart et al. (2018).

Another aspect of interest is the influence of reconditioning on the analysis and forecast performance. We note that this is likely to be highly system and metric-dependent. For example, Campbell et al. (Citation2017) studied the impact of reconditioning on predictions of meteorological variables (temperature, geopotential height, precipitable water) over lead times from 0 to 5 days. In the U.S. Naval Research Laboratory system, ME performed slightly better at short lead times, whereas RR had improvements at longer lead times (Campbell et al., Citation2017). Differences in forecast performance were mixed, whereas convergence was much faster for RR. This meant that the preferred choice was RR. However, in the ECMWF system, Bormann et al. (Citation2015) studied the standard deviation of first-guess departures against independent observations. Using this metric of analysis skill, ME was found to out-perform RR. The effect of RR on the analysis of the Met Office 1D-Var system is studied in CitationTabeart et al. (2019), where changes to retrieved variables sensitive to water vapour (humidity, variables sensitive to cloud) are found to be larger than for other meteorological variables such as temperature.

4.2.2. Changes to the analysis of a data assimilation problem

In Section 3.5, we considered how the variational objective function is altered by RR, ME and MVI. We found that the two methods of reconditioning reduced the weight on scales corresponding to small eigenvalues by a larger amount than MVI, which changes the weight on all scales uniformly. In this section, we consider how the analysis of an idealised data assimilation problem is altered by each of the three methods. We also consider how changing κmax alters the analysis of the problem.

In order to compare the three methods, we study how the solution of a conjugate gradient method applied to the linear system

changes for RR, ME and MVI, where

is the linearised Hessian associated with the 3D-Var objective function Equation(24)

(24)

(24) . To do this we define a ‘true’ solution,

construct the corresponding data

and assess how well we are able to recover

when applying RR, ME and MVI to

Haben (Citation2011) showed that this is equivalent to solving the 3D-Var objective function in the case of a linear observation operator. We define a background error covariance matrix,

which is a SOAR correlation matrix on the unit circle with correlation lengthscale 0.2 and a constant variance of 1. Our observation operator is given by the identity, meaning that every state variable is observed directly.

We construct a ‘true’ observation error covariance given by a 200-dimensional SOAR matrix on the unit circle with standard deviation 1 and lengthscale 0.7. We then sample the Gaussian distribution with zero mean and covariance given by

250 times and use these samples to calculate an estimated sample covariance matrix via the MATLAB function cov. This estimated sample covariance matrix

has condition number

The largest estimated standard deviation is 1.07 and the smallest is 0.90, compared to the true constant standard deviation of 1. RR, ME and MVI are then applied to

with

When applying MVI, we use two choices of α which correspond to changes to the standard deviations

and

The modified error covariance matrices will be denoted

and

We define a true state vector,

(40)

(40)

which has five scales. We then construct

using

and apply the MATLAB 2018b

routine to the problem

for each choice of

We recall that

as

Let

denote the solution that is found using

and

refer to a solution found using a modified version of

namely

or

The maximum number of iterations allowed for the conjugate gradient routine is 200, and convergence is reached when the relative residual is less than

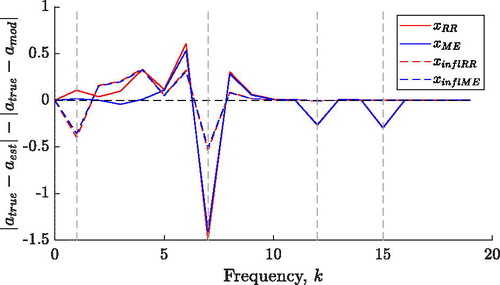

From Section 3.5, we expect RR, ME and MVI to behave differently at small and large scales. We therefore analyse how using each method alters the solution at different scales using the discrete Fourier transform (DFT). This allows us to assess how well each scale of

is recovered for each choice of

As

is the sum of sine functions, only the imaginary part of the DFT will be non-zero. We therefore define

similarly

and

By construction, as given by Equation(40)

(40)

(40) , is the sum of sine functions of period

for

atrue returns a signal with 5 peaks, one for each value of n at frequency k = n. The amplitude for all other values of k is zero. For frequencies larger than 20, all choices of estimated and modified

recover atrue well. shows the correction that is applied by the modified choices of

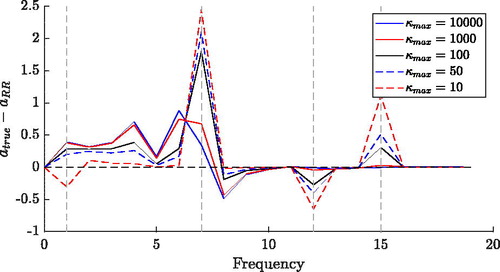

compared to

for the first 20 frequencies. A positive (negative) value shows that amod moves closer to (further from) atrue than aest. The distance from 0 shows the size of this change. For the first true peak (k = 1) RR is able to move closer to atrue than aest. However, both reconditioning methods move further from the truth at the location of true signals k = 7, 12, 15. For frequencies where aest has a spurious non-zero signal RR and ME are able to move closer to atrue than aest. At the location of true signals k = 7, 12, 15, MVI makes smaller changes compared to aest than either method of reconditioning. As all modifications to

move amod further from atrue than aest for k = 7, 12, 15, MVI is therefore better able to recover the value of atrue than RR or ME at these true peaks. However, MVI introduces a larger error for the first peak at k = 1 than RR or ME, and changes for frequencies k > 5 are much smaller than for reconditioning. This agrees with the findings of Section 3.5, that the weight on all scales is changed equally by MVI, whereas both methods of reconditioning result in larger changes to smaller scales and are hence able to make larger changes to amplitudes for higher frequencies. We recall from Section 3.5 that ME changes only the smaller scales, whereas RR also makes small changes to the larger scales. This behaviour is seen in : for frequencies k = 0 to 5 ME results in very small changes, with much larger changes for frequencies

RR makes larger changes for larger values of k, but also moves closer to atrue for

Fig. 4. Change in pointwise difference of discrete Fourier transform (DFT) from to

where aest denotes the vector of coefficients of the imaginary part of

A positive (negative) value indicates that

is closer to (further from)

than

and the amplitude shows how large this change is. Vertical dashed lines show the locations of non-zero values for the true signal.

We now consider how changing κmax alters the quality of As the behaviour for

shown in was similar for both RR and ME, we only consider changes to RR. shows the difference between atrue and aRR for different choices of κmax. Firstly we consider the true signal that occurs at frequencies

For k = 1 the smallest error occurs for

and the largest error occurs for

For k = 7, 12, 15 the error increases as κmax decreases. For all other frequencies, reducing κmax reduces the error in the spurious non-zero amplitudes. For very large values of κmax, we obtain small errors for the true signal, but larger spurious errors elsewhere. Very small values of κmax can control these spurious errors, but fail to recover the correct amplitude for the true signal. Therefore a larger reconditioning constant will result in larger changes to the analysis. This means that there is a balance to be made in ensuring the true signal is captured, but spurious signal is depressed. For this framework a choice of

provides a good compromise between recovering the true peaks well and suppressing spurious correlations.

Fig. 5. Difference between atrue and aRR for different choices of κmax. Vertical dashed lines show the locations of non-zero values for the true signal.

Finally, shows how convergence of the conjugate gradient method is altered by the use of reconditioning and MVI. Using a larger inflation constant for MVI does lead to slightly faster convergence compared to However, reducing κmax leads to a much larger reduction in the number of iterations required for convergence for both RR and ME. This agrees with results in operational data assimilation systems, where the choice of κmax and reconditioning method makes a difference to convergence (Weston, Citation2011; CitationTabeart et al., 2019).

Table 2 Changes to convergence of RR, MI and MVI for different values of κmax.

5. Conclusions

Applications of covariance matrices often arise in high dimensional problems (Pourahmadi, Citation2013), such as NWP (Weston et al., Citation2014; Bormann et al., Citation2016). In this paper we have examined two methods that are currently used at NWP centres to recondition covariance matrices by altering the spectrum of the original covariance matrix: the ridge regression method, where all eigenvalues are increased by a fixed value, and the minimum eigenvalue method, where eigenvalues smaller than a threshold are increased to equal the threshold value. We have also considered multiplicative variance inflation, which does not change the condition number or rank of a covariance matrix, but is used at NWP centres (Bormann et al., Citation2016).

For both reconditioning methods, we developed new theory describing how variances are altered. In particular, we showed that both methods will increase variances, and that this increase is larger for the ridge regression method. We also showed that applying the ridge regression method reduces all correlations between different variables. Comparing the impact of reconditioning methods and multiplicative variance inflation on the variational data assimilation objective function we find that all methods reduce the weight on observation information in the analysis. However, reconditioning methods have a larger effect on smaller eigenvalues, whereas multiplicative variance inflation does not change the sensitivity of the analysis to different scales. We then tested both methods of reconditioning and multiplicative variance inflation numerically on two examples: Example 1, a spatial covariance matrix, and Example 2, a covariance matrix arising from NWP. In Section 4.2, we illustrated the theory developed earlier in the work, and also demonstrated that for two contrasting numerical frameworks, the change to the correlations and variances is significantly smaller for the majority of entries for the minimum eigenvalue method.

Both reconditioning methods depend on the choice of κmax, an optimal choice of which will depend on the specific problem in terms of computational resource and required precision. The smaller the choice of κmax, the more variances and correlations are altered, so it is desirable to select the largest condition number that the system of interest can deal with. Some aspects of a system that could provide insight into reasonable choices of κmax are:

For conjugate gradient methods, the condition number provides an upper bound on the rate of convergence for the problem

(Golub and Van Loan, Citation1996), and can provide an indication of the number of iterations required to reach a particular precision (Axelsson, Citation1996). Hence, κmax could be chosen such that a required level of precision is guaranteed for a given number of iterations.

For more general methods, the condition number can provide an indication of the number of digits of accuracy that are lost during computations (Gill et al., Citation1986; Cheney, Citation2005). Knowledge of the error introduced by other system components, such as approximations in linearised observation operators and linearised models, relative resolution of the observation network and state variables, precision and calibration of observing instruments, may give insight into a value of κmax that will maintain the level of precision of the overall problem.

The condition number measures how errors in the data are amplified when inverting the matrix of interest (Golub and Van Loan, Citation1996). Again, the magnitude of errors resulting from other aspects of the system may give an indication of a value of κmax that will not dominate the overall precision.

For our experiments, we considered choices of κmax in the range For Experiment 2 these values are similar to those considered for the same instrument at different NWP centres e.g. 25, 100, 1000 (Weston, Citation2011), 67 (Weston et al., Citation2014), 54 and 493 (Bormann et al., Citation2015), 169 (Campbell et al., Citation2017). We note that the dimension of this interchannel error covariance matrix in operational practice is small and only forms a small block of the full observation error covariance matrix. Additionally, the matrix considered in this paper corresponds to one observation type; there are many other observation types with different error characteristics.

In this work, we have assumed that our estimated covariance matrices represent the desired correlation matrix well, in which case the above conditions on κmax can be used. This is not true in general, and it may be that methods such as inflation and localisation are also required in order to constrain the sources of uncertainty that are underestimated or mis-specified. In this case, the guidance we have presented in this paper concerning how to select the most appropriate choice of reconditioning method and target condition number will need to be adapted. Additionally, localisation alters the condition number of a covariance matrix as a side effect; the user does not have the ability to choose the target condition number κmax or control changes to the distribution of eigenvalues (Smith et al., Citation2018). This indicates that reconditioning may still be needed in order to retain valuable correlation information whilst ensuring that the computation of the inverse covariance matrix is feasible.

The choice of which method is most appropriate for a given situation depends on the system being used and the depth of user knowledge of the characteristics of the error statistics. The ridge regression method preserves eigenstructure by increasing the weight of all eigenvalues by the same amount, compared to the minimum eigenvalue method which only increases the weight of small eigenvalues and introduces a large number of repeated eigenvalues. We have found that ridge regression results in constant changes to variances and strict decreases to absolute correlation values, whereas the minimum eigenvalue method makes smaller, non-monotonic changes to correlations and non-constant changes to variances. In the spatial setting, the minimum eigenvalue method introduced spurious correlations, whereas ridge regression resulted in a constant percentage reduction for all variables. In the inter-channel case, changes to standard deviations and most correlations were smaller for the minimum eigenvalue method than for ridge regression.

Another important property for reconditioning methods is the speed of convergence of minimisation of variational data assimilation problems. It is well known that other aspects of matrix structure, such as repeated or clustered eigenvalues, are important for the speed of convergence of conjugate gradient minimisation problems. As the condition number is only sensitive to the extreme eigenvalues, conditioning alone cannot fully characterise the expected convergence behaviour. In the data assimilation setting, complex interactions occur between the constituent matrices (Tabeart et al., Citation2018), which can make it hard to determine the best reconditioning method a priori. One example of this is seen for operational implementations in Campbell et al. (Citation2017) and Weston (Citation2011) where the ridge regression method results in fewer iterations for a minimisation procedure than the minimum eigenvalue method, even though the minimum eigenvalue method yields observation error covariance matrices with a large number of repeated eigenvalues. Furthermore, CitationTabeart et al. (2018) found cases in an idealised numerical framework where increasing the condition number of the Hessian of the data assimilation problem was linked to faster convergence of the minimisation procedure. Again, this was due to interacting eigenstructures between observation and background terms, which could not be measured by the condition number alone. Additionally, Haben (Citation2011) and CitationTabeart et al. (2018) find that the ratio of background to observation error variance is important for the convergence of a conjugate gradient problem. In the case where observation errors are small, poor performance of conjugate gradient methods is therefore likely. This shows that changes to the analysis of data assimilation problems due to the application of reconditioning methods are likely to be highly system-dependent, for example due to: quality of estimated covariance matrices, interaction between background and observation error covariance matrices, specific implementations of the assimilation algorithm, and choice of preconditioner and minimisation routine. However, the improved understanding of alterations to correlations and standard deviations for each method of reconditioning provided here may allow users to anticipate changes to the analysis for a particular system of interest using the results from previous idealised and operational studies (e.g. Weston et al., Citation2014; Bormann et al., Citation2016; Fowler et al., Citation2018; Tabeart et al., Citation2018; Simonin et al., Citation2019).

Acknowledgements

Thanks to Stefano Migliorini and Fiona Smith at the UK Met Office for the provision of the IASI matrix.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Axelsson, O. 1996. Iterative Solution Methods. Cambridge: Cambridge University Press.

- Bannister, R. N. 2017. A review of operational methods of variational and ensemble variational data assimilation. Q. J. R. Meteorol. Soc. 143, 607–633. doi:10.1002/qj.2982

- Bickel, P. J. and Levina, E. 2008. Covariance regularization by thresholding. Ann. Stat. 36, 2577–2604. doi:10.1214/08-AOS600

- Bormann, N., Bonavita, M., Dragani, R., Eresmaa, R., Matricardi, M. and co-authors. 2015. Enhancing the Impact of IASI Observations through an Updated Observation Error Covariance Matrix. ECMWF Technical Memoranda. ECMWF, Reading.

- Bormann, N., Bonavita, M., Dragani, R., Eresmaa, R., Matricardi, M. and co-authors. 2016. Enhancing the impact of IASI observations through an updated observation error covariance matrix. Q. J. R. Meteorol. Soc. 142, 1767–1780. doi:10.1002/qj.2774

- Budd, C. J., Freitag, M. A. and Nichols, N. K. 2011. Regularization techniques for ill-posed inverse problems in data assimilation. Comput. Fluids 46, 168–173. doi:10.1016/j.compfluid.2010.10.002

- Campbell, W. F., Satterfield, E. A., Ruston, B. and Baker, N. L. 2017. Accounting for correlated observation error in a dual-formulation 4D variational data assimilation system. Mon. Wea. Rev. 145, 1019–1032. doi:10.1175/MWR-D-16-0240.1

- Cheney, W. 2005. Numerical Mathematics and Computing. 5th ed., International ed. Brooks/Cole Cengage Learning, Pacific Grove, CA.

- Cordoba, M., Dance, S. L., Kelly, G. A., Nichols, N. K. and Waller, J. A. 2016. Diagnosing atmospheric motion vector observation errors for an operational high resolution data assimilation system. Q. J. R. Meteorol. Soc. 143, 333–341.

- Daley, R. 1991. Atmospheric Data Analysis. Cambridge University Press, New York.

- Desroziers, G., Berre, L., Chapnik, B. and Poli, P. 2005. Diagnosis of observation, background and analysis-error statistics in observation space. Q. J. R. Meteorol. Soc. 131, 3385–3396. doi:10.1256/qj.05.108

- Fan, K. 1959. Convex sets and their applications. Lecture notes. Applied Mathematics Division, Argonne National Laboratory, Lemont, IL.

- Fowler, A. M., Dance, S. L. and Waller, J. A. 2018. On the interaction of observation and prior error correlations in data assimilation. Q. J. R. Meteorol. Soc. 144, 48–62. doi:10.1002/qj.3183

- Ghil, M. 1989. Meteorological data assimilation for oceanographers. Part I: description and theoretical framework. Dyn. Atmos. Oceans. 13, 171–218. doi:10.1016/0377-0265(89)90040-7

- Ghil, M. and Malanotte-Rizzoli, P. 1991. Data assimilation in meteorology and oceanography. Adv. Geophys. 33, 141–266. doi:10.1016/S0065-2687(08)60442-2

- Gill, P. E., Murray, W. and Wright, M. H. 1986. Practical Optimization. Academic Press, Amsterdam/London.

- Golub, G. H. and Van Loan, C. F. 1996. Matrix Computations. 3rd ed. The John Hopkins University Press, Baltimore, MD.

- Gray, R. M. 2006. Toeplitz and circulant matrices: a review. Found. Trends Commun. Inform. Theory 2, 155–239.

- Guillet, O., Weaver, A. T., Vasseur, X., Michel, Y., Gratton, S. and co-authors. 2019. Modelling spatially correlated observation errors in variational data assimilation using a diffusion operator on an unstructured mesh. Q. J. R. Meteorol. Soc. 145, 1947–1967. doi:10.1002/qj.3537

- Haben, S. A. 2011. Conditioning and preconditioning of the minimisation problem in variational data assimilation. PhD thesis, Department of Mathematics and Statistics, University of Reading, Reading.

- Haben, S. A., Lawless, A. S. and Nichols, N. K. 2011a. Conditioning and preconditioning of the variational data assimilation problem. Comput. Fluids 46, 252–256. doi:10.1016/j.compfluid.2010.11.025

- Haben, S. A., Lawless, A. S. and Nichols, N. K. 2011b. Conditioning of incremental variational data assimilation, with application to the Met Office system. Tellus A 64, 782–792.

- Hamill, T. M., Whitaker, J. S. and Snyder, C. 2001. Distance-dependent filtering of background error covariance estimates in an ensemble Kalman filter. Mon. Wea. Rev. 129, 2776–2790. doi:10.1175/1520-0493(2001)129<2776:DDFOBE>2.0.CO;2

- Hansen, P. C. 1998. Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. Society for Industrial and Applied Mathematics, Philadelphia, PA.

- Healy, S. B. and White, A. A. 2005. Use of discrete Fourier transforms in the 1D-Var retrieval problem. Q. J. R. Meteorol. Soc. 131, 63–72. doi:10.1256/qj.03.193

- Heilliette, S. and Garand, L. 2015. Impact of accounting for inter-channel error covariances at the Canadian meteorological centre. In: Proceedings of the 2015 EUMETSAT Meteorological Satellite Conference, Toulouse, France, p. 8. Online at: https://www.eumetsat.int/website/home/News/ConferencesandEvents/PreviousEvents/DAT_2305526.html