Abstract

Diverse funding sources, including the government, nonprofit, and industry sectors, support academic research, generally, and gambling research, specifically. This funding allows academic researchers to assess gambling-related problems in populations, evaluate tools designed to encourage responsible gambling behaviors, and develop evidence-based recommendations for gambling-related topics. Some stakeholders have raised concern about industry-funded research. These critics argue that industry funding might influence the research process. Such concerns have led to the development of research guidelines that aim to preserve academic independence. Concurrently and independently, researchers have begun to embrace ‘Open Science’ practices (e.g. pre-registration of research questions and hypotheses, open access to materials and data) to foster transparency and create a valid, reliable, and replicable scientific literature. We suggest that Open Science principles and practices can be integrated with existing guidelines for industry-funded research to ensure that the research process is ethical, transparent, and unbiased. In the current paper, we engage with the aforementioned issues and present a formal framework to guide industry-funded research. We outline Guidelines for Research Independence and Transparency (GRIT), which integrate Open Science practices with existing guidelines for industry-funded research. Specifically, we describe how particular Open Science practices can enhance industry-funded research, including research pre-registration, separation of confirmatory and exploratory analyses, open materials, open data availability, and open access to study manuscripts. We offer our guidelines in the context of industry-funded gambling studies, yet researchers can extend these ideas to the behavioral sciences, more generally, and to funding sources of any type.

Two recent academic discussions have raised important questions about research integrity and transparency in the behavioral sciences. First, the research replication crisis provoked methodological self-reflection among scientists representing numerous research domains, including social psychology, marketing, biomedicine, and more (e.g. Open Science Collaboration 2015; Camerer et al. 2016, 2018; Coiera et al. 2018; Hutson 2018). Such deliberation helped identify a variety of so-called questionable research practices (Simmons et al. 2011; Wicherts et al. 2016) and helped advance a variety of novel open science principles and practices to guide research (Cumming 2014; Nosek et al. 2015). The hope is that such consideration will strengthen research tactics and strategies in a way that increases the likelihood of a more robust and reliable published research literature.

Second, observations about the prevalence of industry funding in addiction-related research, generally, and gambling-related research, specifically, have triggered a vigorous discussion of the potential for research funding sources, particularly industry funding sources, to bias researchers and research products (Adams 2007, 2011; Cassidy 2014; Griffiths and Auer 2015; Wohl and Wood 2015; Collins et al. 2019; Ladouceur et al. 2019). Such dialog frequently calls for bans on industry funding (Catford 2012; Livingstone and Adams 2016; Hancock and Smith 2017), but occasionally has advanced recommendations to guide industry-funded research (e.g. Kim et al. 2016). To date, there has been little consideration of how these academic discussions and their associated sets of recommendations might complement each other and act in concert to advance research integrity and transparency. Recognizing this need, in the current commentary we briefly summarize the debate about gambling industry funding and discuss the importance of supporting transparency in science. We conclude by offering guidelines for healthy, independent funded research that integrate open science practices with research ethics recommendations.

Gambling research and industry funding

Gambling industry support of and involvement with gambling research is common (see Ladouceur et al. 2019; Reilly 2019). This support includes providing researchers with access to gamblers who visit their gambling venues, making data available for research purposes, and direct funding of research. This funding trend is very similar to that in other academic disciplines, such as medicine, engineering, and information technology, which also receive a substantial amount of research funds from industry partners (Atkinson 2018). Industry funding allows for the maintenance and expansion of research programs, technological advancements, and scientific innovation (Bozeman and Gaughan 2007; see review of industry funding of science in Perkmann et al. 2013; Wright et al. 2014), as well as training graduate students and knowledge mobilization. However, some critics have raised concerns that industry funding can bias researchers (e.g. researchers might shift their research design, their data analytic plan, or interpretation of their results in a way that aligns with the gambling industry’s desire to place gambling in a positive light), which taints the integrity of the scientific literature (see Sismondo 2008).

There is good reason to be vigilant about industry-funded research. Documentation of industry research abuses in pharmaceutical (Goldacre 2014), tobacco (Tong and Glantz 2007), and food and nutrition studies (Chartres et al. 2016) supports the notion that, without caution, the research process can be corrupted. For instance, Kearns et al. (2016) reported that the sugar industry during the 1960s and 1970s used financial incentives and contracts to compel researchers to publish studies downplaying the role of sugar in the development of cardiovascular disease. More recently, researchers have warned of research bias in industry-funded concussion research (Fainaru-Wada and Fainaru 2017) and industry-funded research on causes of climate change (Basken 2016). Although it is debatable, observations such as these have led some (e.g. Cassidy 2014) to argue that gambling industry-funded research should be interpreted with skepticism and, by extension, gambling researchers should not accept funds from the gambling industry to conduct their research.

Empirical assessments of actual industry bias in gambling research are scant, and this issue requires further study (e.g. Ladouceur et al. 2019; Shaffer et al. 2019). Despite the limited data available for this issue, there has been considerable debate among gambling public health stakeholders about whether researchers should accept industry funds to conduct their research. Hancock and Smith (2017) are among the researchers who oppose industry funding of gambling research. They argue that gambling industry funding is damaging to the scientific advancement of gambling as a public health issue. Other critics have suggested that industry funding has the potential to create pressure to arrive at conclusions that place gambling and the gambling industry in a positive light (see Orford 2017; Cowlishaw and Thomas 2018). Likewise, Kim et al. (2016) argue that industry funding potentially can influence the topics that researchers address. Specifically, they suggest that industry-funded research tends to neglect studies about the adverse consequences of gambling. Adams (2011) puts forth a particularly damning argument: industry-funded research is inherently unethical because a portion of these funds comes from losses incurred by problem gamblers. These opinions are not, however, universal.

We suggest, as do others (Blaszczynski and Gainsbury 2014; Kim et al. 2016; Ladouceur et al. 2016; Collins et al. 2019), that when properly managed, industry funding can provide significant benefits for researchers, policymakers, and individual gamblers. As a supplement to government, organizational, and other extramural funding sources, or as a replacement in some cases (as in Ontario, where the provincial government has ceased funding gambling research with profits from the gambling industry despite having a mandate to do so), industry funding can allow more scholars to investigate key public health issues and the consequences of gambling for diverse populations across the world. Also, whereas academic research often is misinterpreted (e.g. Lambert 2007; Yamashita and Brown 2017), partnerships between academics and the gambling industry can help avoid the potential spread of inaccurate and/or inappropriate interpretations of the academic literature by nonacademic industry consultants hired to summarize research or design responsible gambling advertisements and programs. Moreover, industry stakeholders can provide researchers with unique datasets of actual gambling records, and with access to subpopulations of individual gamblers from whom it would otherwise be difficult or impossible to obtain data. Partnerships provide an additional pathway through which industry representatives can convey their concerns related to problem gambling and insights regarding gambling more generally. Finally, by leveraging research findings from industry-funded research, stakeholders can work with companies to quickly implement evidence-based policies and practices to reduce problem gambling and also improve corporate responsible gambling programs with empirical evaluations of existing programs.

Guidelines for accepting industry funding

We believe the benefits of accepting research funds from the gambling industry outweigh the real or perceived costs. Perhaps this is because we have first-hand experience with how a well-structured funding relationship with the gambling industry (or other funding bodies) can advance knowledge while avoiding the potential pitfalls. This occurs when there are clear limits and boundaries on that relationship. Our respective research centers are diversely funded research, education, training, and outreach entities. We have accepted funding from federal and state/provincial government sources, from private entities, from nonprofit industry-aligned funding organizations, and directly from gambling industry companies. The diversity of our funding has led some to question our research and our ethics (Schüll 2012; Hancock and Smith 2017). However, members of both research centers have worked hard to ensure that we produce objective and independent research products regardless of funding source (Wohl and Wood 2015; LaPlante et al. 2019). Indeed, some of our most important work has relied on industry collaboration and funding. Consider, for example, the Division on Addiction’s research using actual gambling records from online betting and gaming services (e.g. LaBrie et al. 2007; Nelson et al. 2019) and the Carleton University Gambling Laboratory’s development and testing of responsible gambling tools (Wohl et al. 2010, 2017).

Researchers who engage in collaborations with industry need to adhere to clear and unwavering academic independence principles. What does this mean in practice? Adams' (2007) PERIL model suggests that researchers can evaluate their risk of ‘moral jeopardy’ by considering five primary characteristics of their industry-funded work: Purpose, Extent, Relevant harm, Identifiers, and Link to donor. ‘Purpose’ refers to the suggestion that using funds from the gambling industry would conflict with researchers’ public good goals, but the risk of moral jeopardy is mitigated to some extent when gambling funds come from a funder that also has a public good role, such as gambling funds from the government as opposed to a company. ‘Extent’ refers to researchers’ dependence upon the industry funds for their work, with greater dependence creating greater risk. ‘Relevant harm’ refers to how much harm is associated with the particular product (e.g. lottery tickets versus electronic gaming machines) that generates the funds for research purposes. ‘Identifiers’ refers to the visibility of the funder for a research product. The model suggests that both visibility and invisibility create risk: providing supporting public relations material to the industry and failing to declare pertinent associations, respectively. Finally, ‘Link to donor’ refers to the directness of the association between a researcher and the funder, with stronger links with no intermediary body creating more moral jeopardy.

More recently, Kim et al. (2016) suggested six general guidelines for researchers who intend to receive funding from the gambling industry. First, gambling researchers should ensure that they are familiar with ethical principles of science and potential issues with receiving funding from industry. Second, all research should be conducted autonomously and without future consequences from the funder based on the nature of the results. Third, a risk-benefit analysis should be conducted prior to accepting funding from industry, ensuring that the potential benefits of the research outweigh potential risks. Fourth, any conflicts of interest should be clearly stated and these perceived or actual conflicts of interest must not interfere with the scientific integrity of the research process. Fifth, research funding sources should be made clear in the informed consent process of enrolling research participants. Sixth, any current or past funding sources should be made clear and explicitly stated in any research documents, to mitigate the potential for conflicts of interest or lack of disclosure of such information.

Likewise, an unpublished code of ethics for gambling research and accompanying framework for engaging in low-risk activities, The Auckland Code (Cassidy and Markham 2018), suggests that researchers and other key stakeholders should follow the HEART code of ethics: Honesty, Excellence, Accountability, Rigor, and Transparency. In brief, these principles suggest that research be completed in honest environments that strive for research excellence and integrity by investigators, while maintaining accountability to the public good, experimental rigor of a high order, and the promotion of transparency for the research process. The companion framework proposes low-risk approaches for 12 research indicators. For example, regarding academic freedoms, the framework suggests low risk when a funder places ‘no restrictions on academic freedoms,’ medium risk when ‘academic freedoms [are] limited in some respects,’ and high risk in the cases where there are ‘restrictions placed upon academic freedoms.’

To date, evaluation of gambling industry funding research guidelines such as these is absent from the literature. Therefore, although such guidelines derive from expert opinion, common sense strategies, and conventional wisdom, it is unclear whether they are associated with producing a more objective and trustworthy research literature. Uncritically, these guidelines, and others like them, are plausibly important to establishing effective principles of research conduct; however, for some, questions might remain about whether they go far enough to protect research integrity and whether some contemporary practices might provide added value or even surpass the value of some recommendations. In the remainder of this commentary, we argue that contemporary research practices that advance open science can further protect researcher independence, and the trustworthiness and replicability of the research they produce.

Integrating open science practices and principles into industry funding guidelines

Major gambling industry funding guidelines and recommendations have not explicitly considered the value of different open science practices and what types of protections they might provide to the research process. ‘Open Science’ practices are designed to increase the transparency of scientific research practices (Cumming 2014; Open Science Collaboration 2015). This growing movement is intended to increase the quality of scientific research by improving and standardizing methods, while also ensuring that a robust body of high quality and replicable research findings is created. These practices include research pre-registration (i.e. specifying the research plan before beginning analyses), as well as open data (i.e. posting datasets publicly), open access (i.e. posting scientific articles publicly for free), and open materials (i.e. posting analytic code and/or survey documents publicly on an online archive).

Whereas gambling industry funding research guidelines have yet to be evaluated empirically, some research has evaluated how open science principles and practices shift the published research literature. To illustrate, accounts of publication bias (i.e. when the outcome of a study influences whether it is published, usually with respect to the statistical significance of the findings) in the psychology literature are one of many driving factors for open science advocacy (Simonsohn et al. 2014; Munafò et al. 2017). In opposition to this practice, a core open science principle suggests that publication decisions should not depend on the statistical significance of study outcomes, because such dependence leads to an unbalanced literature (Ioannidis 2005). Registered reports (i.e. a specific publication strategy by which peer review of hypotheses, research methods, and analytic strategy, prior to data collection, is integral to publication decisions) are a type of open science practice that advocates believe will help reduce publication bias. A recent comparison of the standard psychology literature with studies published using registered reports observed that whereas a standard publication process yielded a 96% confirmation rate of the first hypothesis in a published paper, the rate dropped to just 44% in registered reports (Scheel et al. 2020). This pattern of findings suggests that failures to confirm hypotheses are underreported in the published literature; using registered reports could help correct this problem. This finding provides support for the notion that this open science practice can help generate a more accurate body of scientific evidence.

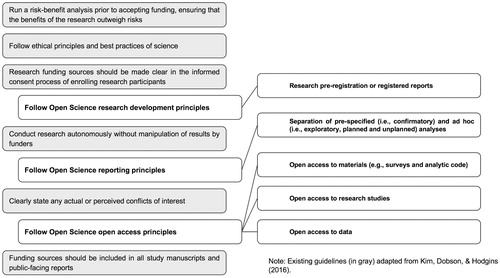

Assuming that open science can contribute to the development of a more replicable, balanced, and trustworthy research literature, it is worth considering how best to integrate open science practices with existing gambling research guidelines. Figure 1 illustrates the Guidelines for Research Independence and Transparency (GRIT) model; this figure displays how open science principles and practices can be integrated into one of the gambling field’s sets of guidelines for appropriate use of industry funding. We contend that three core open science principles, operationalized by five open science practices, integrate well with the Kim et al. (2016) guidelines for using industry funding. More specifically, we suggest that, in addition to the existing six guidelines from Kim et al. (2016), researchers also should follow open science principles related to (1) research development, (2) research reporting, and (3) research open access. Although open science principles and practices are valuable regardless of industry funding status, in the next section we expand on the central ways that specific open science practices might help protect academic-industry research arrangements.

Specific open science practices to protect against funding-related research bias

Gambling researchers have suggested that open science practices can provide several important benefits for gambling research (Blaszczynski and Gainsbury 2019; LaPlante 2019; Wohl et al. 2019). These practices include, but are not limited to, (1) research pre-registration, (2) separation of pre-specified (i.e. confirmatory) and ad hoc (i.e. exploratory) analyses, (3) open access study materials, (4) open data availability, and (5) open access to published research studies. Open science advocates developed these practices to help manage a variety of so-called questionable research practices that are associated with poor reproducibility and replicability (see Wicherts et al. 2016). Some of these questionable research practices include ad hoc or post hoc modification of variables included in statistical models to obtain significant results (i.e. p-hacking), hypothesizing after results are known (i.e. HARKing; Kerr 1998), omitting results from publications after not finding statistical significance, and in extreme cases outright fabrication or falsification of data. This section describes each of the above five open science practices in detail and discusses ways that they could mitigate the potential for direct or indirect funding bias.

First, research pre-registration refers to the public process of posting all planned hypotheses, research protocols and anticipated analytic plans, funding descriptions, and conflict of interest statements prior to initiating any steps of the research process (Yamada 2018). This practice could increase public confidence in industry-funded research because a detailed research plan is posted to a third-party research archive, such as the Open Science Framework (see OSF.io), publicly before beginning the study. Due to time-stamping features of such websites, researchers cannot secretly modify their plans while a study is in progress in response to input from an industry sponsor. Timestamps should provide further confidence that research data are not analyzed in a way that searches for industry-friendly outcomes if initial hypotheses are not confirmed. It is important to note that researchers can change their pre-registrations following their public posting in the form of ‘Transparent Changes’ documents; however, these changes usually are minor and require considerable documentation of their nature, rationale, and potential effects on the results. Open science research development principles themselves preclude large-scale changes to the pre-registered research plan, such as changing research questions or hypotheses, not reporting certain results (e.g. results unfavorable to the industry sponsor), or modifying analyses to increase the significance of desired outcomes (i.e. p-hacking).

Second, open science reporting principles recommend separating and clearly labeling confirmatory and exploratory analyses, and further separating exploratory analyses into planned and unplanned analyses. Doing so accrues several benefits for the quality of the research study itself and for reducing industry bias (Wagenmakers et al. 2012; Mayo 2018; Nosek et al. 2018; Lakens 2019). Identifying hypotheses as confirmatory means that the researcher has a theoretical or empirical justification for specifying the direction of a particular hypothesis. In contrast, exploratory results are those that are based on serendipitous, significant relationships found in the data. By separating these two types of hypotheses and analyses, readers can have greater confidence that the results labeled as confirmatory were based on planned hypotheses, were not the results of HARKing, and were not influenced by industry pressure to modify interpretations of the results. Moreover, any exploratory results that were added due to industry input would be labeled as exploratory. This labeling makes clear that readers should not treat them as final or robust results, but instead as directions for future research and independent replication in a confirmatory study. Hence, these practices provide clarity about which published results hold greater weight at a particular point in time.

Third, open access study materials refers to the practice of public documentation and archiving of researchers’ notebooks, questionnaires, analytic code, and other resources, like experimental research vignettes, photos, videos, etc. Making such resources openly available provides a level of research transparency that is uncommon in behavioral science, regardless of funding source. Because failing to follow such practices creates real limits to research replication and reproducibility, it follows that using such practices mitigates those limits. Therefore, research studies that engage in open materials practices, especially those that also engage in open data practices, quickly can be subjected to independent third-party review for accuracy. Furthermore, archiving of researchers’ notebooks provides an inside look at the decisions and influences that shape research over time. Together, such practices should provide added confidence that funding-related research abuses and tampering are minimized.

Fourth, open data refers to the practice of posting de-identified and anonymized data used to conduct analyses for a particular research project with Institutional Review Board approval and in compliance with privacy regulations (Gewin 2016). This practice enables researchers to better understand how industry funding could influence results by checking the accuracy of reported data analyses and by conducting meta-scientific studies (e.g. scoping reviews and/or meta-analyses). In addition, open data practices make otherwise difficult-to-obtain research datasets (e.g. player records from online gambling services or player card gambling records of land-based gambling services) available to any researcher. Even those researchers who do not use gambling industry funding can access such datasets and judge for themselves whether publications are accurate or evaluate new research questions not yet addressed in the published literature. Ultimately, this accelerates the pace of the science of gambling.

Fifth, open access to research publications through pre-prints (e.g. on PsyArXiv) and open access publishing practices can increase the speed with which results reach the public, other researchers, government representatives, public health stakeholders, and industry funders. This practice also provides free access to study findings to more stakeholders who otherwise might not have access, including policy makers and individual gamblers. Proponents of open access practices have identified other benefits of making research available to all without paywalls, including greater rates of scientific innovation due to more rapid and universal access to the latest research findings (Houghton 2009) and access to articles by practicing clinicians who can quickly translate research findings into improving patient care (Björk 2017). Scientific research that is locked away in professional journals or stored in nonpublic industry reports provides limited health value to society.

Limitations

Although there are clear advantages to using open science practices for minimizing the potential for bias associated with industry funding, open science practices are not a panacea for research bias. First, open science does not prevent the possibility that industry stakeholders can play a role in influencing research agendas, for example, by focusing requests for proposals on safe topics. One way that the research community could protect against this is by educating stakeholders about the value of diverse research questions and by advancing multi-disciplinary programs and projects. Second, researchers who post pre-registrations and pre-prints publicly reveal their plans and findings prior to formal publication. Typically, when using independent scientific research practices, such information should be embargoed from funders to avoid intentional or unintentional influence. However, because such documents are public within an open science model, the transparency of such actions mitigates the potential complications of this quicker timeline for research dissemination. Nonetheless, posting such documentation opens the door for potential influence and researchers should be mindful of this possibility.

Third, researchers also could fail to adhere to their pre-registered research plan and results. This could occur for a variety of reasons, including complying with reviewer requests, exploring the possibility of a new insight, or purposely engaging in questionable research practices. In fact, research shows that adherence to pre-registered plans often is limited (Boccia et al. 2016; Claesen et al. 2019) and researchers still utilize researcher degrees of freedom (RDoF) when conducting pre-registered research (Veldkamp et al. 2017). It is imperative that researchers document any change to the pre-registration plan and provide a detailed explanation for any variance. To this point, researchers need to remember that the issue with pre-registration deviation is not so much whether it is done, but how it is done. Transparent deviations allow readers to understand the motives and impetus behind changes and then judge for themselves what weight to give published findings.

Concluding thoughts

Within the context of diverse funding portfolios that could include industry funding, open science practices provide important protections for the scientific integrity of the research produced. Although previous research has suggested that data transparency and open science might be an important way of buttressing gambling industry-academic collaboration (Shaffer et al. 2009; LaPlante et al. 2019), until now, no one has explained how this might be the case. To fill this gap, in this commentary, we outlined the GRIT guidelines for transparent collaboration between industry and academic researchers. These guidelines integrate key open science practices to provide best practice recommendations and explain how these practices can provide important safeguards in the research process.

In many countries, government support for research continues to fall. By some estimates, less than 50% of active research is publicly funded (Mervis 2017). Industry is providing some funding to supplement government grants, but with it has come considerable concern about the integrity of the resulting research. Of course, as researchers, we would be doing a disservice to our profession if we discounted the possibility that research funded by the gambling industry reports findings that are different from similar research that was not funded by the gambling industry. However, this possibility only can be addressed with empirical data. The few available empirical assessments of the impact of gambling industry funding on published research have not identified any systematic differences (e.g. Ladouceur et al. 2019), but more work is needed in this area to confirm these findings. Nonetheless, adding open science practices as an essential tool for best research practices will provide added protection against such a possibility. We call on all researchers in the gambling field to adopt open science practices regardless of whether they accept gambling industry funds. These practices become critically important when investigators accept gambling industry funds to conduct research.

At the same time, simply advocating for or using the GRIT guidelines is not enough. Future studies should evaluate the use of the guidelines and their influence on research quality, similar to evaluations of new open science practices (e.g. Anvari and Lakens 2018; Scheel et al. 2020). By working together to better understand and refine guidelines for industry-funded research, researchers both within and outside of the field of gambling studies can produce high-quality, unbiased, and impactful scholarly work.

Lastly, although this commentary has focused on the concerns associated with gambling industry funding, the arguments for regular use of open science principles and practices logically extend to any funding source (e.g. nonprofit, government). Researchers should protect their independence, autonomy, objectivity, and commitment to transparency regardless of the funding source. Along with creativity, innovation, systematic reflection, and empirical rigor, research funding is part of the lifeblood of academia. Without funds to remunerate participants, purchase equipment, provide stipends for graduate students, and hire research staff, it becomes difficult for academics to conduct empirical research and disseminate their findings. We suggest that balancing funding guidelines with open science principles and practices can help researchers to better navigate their way to producing replicable research.

Acknowledgments

We are grateful to Dr. Howard J. Shaffer and Mr. Silas Xuereb for their comments on and editing of this manuscript.

Disclosure Statements

The Division on Addiction currently receives funding from the Addiction Treatment Center of New England via the Substance Abuse and Mental Health Services Administration (SAMHSA); The Foundation for Advancing Alcohol Responsibility (FAAR); DraftKings; the Gavin Foundation via SAMHSA; GVC Holdings, PLC; The Healing Lodge of the Seven Nations via the Indian Health Service with funds approved by the National Institute of General Medical Sciences, National Institutes of Health; The Integrated Centre on Addiction Prevention and Treatment of the Tung Wah Group of Hospitals, Hong Kong; St. Francis House via the Massachusetts Department of Public Health Bureau of Substance Addiction Services; and the University of Nevada, Las Vegas via MGM Resorts International.

During the past five years, the Division on Addiction has also received funding from Aarhus University Hospital with funds approved by The Danish Council for Independent Research; ABMRF – The Foundation for Alcohol Research; Caesars Enterprise Services, LLC; Cambridge Police Department with funds approved by the Office of Juvenile Justice Delinquency Prevention; the David H. Bor Library Fund, Cambridge Health Alliance; DraftKings; Fenway Community Health Center, Inc.; Heineken USA, Inc.; Massachusetts Department of Public Health, Bureau of Substance Addiction Services; Massachusetts Gaming Commission, Commonwealth of Massachusetts; National Center for Responsible Gaming; and University of Nevada, Las Vegas via MGM Resorts International.

During the past five years, Dr. Eric Louderback has received research funding from a grant issued by the National Science Foundation (NSF), a government agency based in the United States. His research has been financially supported by a Dean's Research Fellowship from the University of Miami College of Arts & Sciences, which also provided funds to present at academic conferences. He has received travel support funds from the Hebrew University of Jerusalem to present research findings.

During the past five years, the Carleton University Gambling Laboratory has received research funding from federal granting agencies in Canada and Australia unconnected to Dr. Michael Wohl's gambling research. In relation to his gambling research, he has received research funds from provincial granting agencies in Canada. He has also received direct and indirect research funds from the gambling industry in Canada, United States of America, United Kingdom, Australia, and Sweden. Additionally, he has served as a consultant for the gambling industry in Canada, United States of America, United Kingdom, and New Zealand. A detailed list can be found on his curriculum vitae (http://carleton.ca/bettermentlabs/wp-content/uploads/CV.pdf).

During the past five years, Dr. Debi LaPlante has received speaker honoraria and travel support from the National Center for Responsible Gaming (NCRG) and the National Collegiate Athletic Association. She has served as a paid grant reviewer for NCRG and received honoraria funds for preparation of a book chapter from Université Laval. She has received royalties for Harvard Medical distance learning courses and the American Psychiatric Association's Addiction Syndrome Handbook. She was a non-paid board member of the New Hampshire Council on Problem Gambling. She currently is a non-paid board member of the New Hampshire Council for Responsible Gambling.

Notes

1 Other researchers have debated these findings on industry bias from the sugar industry (Johns and Oppenheimer 2018), instead suggesting that Kearns et al.'s (2016) findings should be understood within the scientific discourse and norms during that historical period.

2 In the spirit of these efforts to provide objective and influential research, the Division on Addiction demonstrated an early commitment to transparency and open science practices through data sharing, especially for its industry-funded research output (see Shaffer et al. 2009; www.thetransparencyproject.org).

3 Notably, this commentary restricts its discussion to published transdisciplinary guidelines for gambling industry funding; however, other discipline-specific professional codes of ethics, such as for the American Psychological Association (https://www.apa.org/ethics/code/), also have some relevance to this discussion.

4 Some models have highlighted the importance of transparency in industry-researcher collaborations (e.g., the HEART model), yet no research to our knowledge has directly identified benefits of specific open science practices for industry-funded studies in the gambling domain.

5 For a detailed discussion of replicability and the importance of replication in the addiction sciences more broadly, see Heirene (in press; DOI: 10.1080/16066359.2020.1751130).

6 Open data provides some general benefits, as well. Unfortunately, reporting errors in published scientific studies do occur (Nuijten et al. 2016). Making data open allows others to reproduce published findings and identify such errors. There is also more than one way a data set can be analyzed, and the data analytic choices a researcher makes can influence results (for a discussion see Silberzahn et al. 2018).

7 Although open science principles and practices support the public posting of research pre-registrations, there are tools available on the Open Science Framework that allow for a temporary embargo period for a pre-registration. By embargoing a pre-registration, the research plan and metadata (e.g., timestamp) are preserved, yet third parties, such as industry funders, are not able to view the research plan for a specified period of time (e.g. 6 months).

8 Very recently (as of writing this article during May 2020), levels of gambling industry funding have declined due to the COVID-19 pandemic. For example, the International Center for Responsible Gaming (ICRG) cancelled all funding opportunities for the year 2020. Researchers should closely follow these developments, because they will likely influence industry-funded research for years to come.

References

- Adams PJ. 2007. Assessing whether to receive funding support from tobacco, alcohol, gambling and other dangerous consumption industries. Addiction. 102(7):1027–1033.

- Adams PJ. 2011. Ways in which gambling researchers receive funding from gambling industry sources. Int Gambl Stud. 11(2):145–152.

- Anvari F, Lakens D. 2018. The replicability crisis and public trust in psychological science. Comprehens Res Soc Psychol. 3(3):266–286.

- Atkinson RD. 2018. Industry funding of university research: which states lead? [accessed 2020 May 11]. http://www2.itif.org/2018-industry-funding-university-research.pdf.

- Basken P. 2016. A year after a climate-change controversy, Smithsonian and journals still seek balance on disclosure rules. The Chronicle of Higher Education; [accessed 2020 May 11]. https://www.chronicle.com/article/A-Year-After-a-Climate-Change/235838.

- Blaszczynski A, Gainsbury S. 2014. Editor’s notes. Int Gambl Stud. 14(3):354–356.

- Blaszczynski A, Gainsbury S. 2019. Editor’s note: replication crisis in the social sciences. Int Gambl Stud. 19(3):359–361.

- Boccia S, Rothman KJ, Panic N, Flacco ME, Rosso A, Pastorino R, Manzoli L, La Vecchia C, Villari P, Boffetta P. 2016. Registration practices for observational studies on ClinicalTrials.gov indicated low adherence. J Clin Epidemiol. 70:176–182.

- Bozeman B, Gaughan M. 2007. Impacts of grants and contracts on academic researchers’ interactions with industry. Res Policy. 36(5):694–707.

- Björk B. 2017. Open access to scientific articles: a review of benefits and challenges. Intern Emerg Med. 12(2):247–253.

- Camerer CF, Dreber A, Forsell E, Ho T-H, Huber J, Johannesson M, Kirchler M, Almenberg J, Altmejd A, Chan T. 2016. Evaluating replicability of laboratory experiments in economics. Science. 351(6280):1433–1436.

- Camerer CF, Dreber A, Holzmeister F, Ho T-H, Huber J, Johannesson M, Kirchler M, Nave G, Nosek BA, Pfeiffer T. 2018. Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nat Hum Behav. 2(9):637–644.

- Cassidy R. 2014. Fair game? Producing and publishing gambling research. Int Gambl Stud. 14(3):345–353.

- Cassidy R, Markham F. 2018. The Auckland Code: a code of ethics for gambling researchers. Derived from the International Think Tank on Research, Policy, and Practice, Banff, Canada 2017.

- Catford J. 2012. Battling big booze and big bet: Why we should not accept direct funding from the alcohol or gambling industries. Health Promot Int. 27(3):307–310.

- Chartres N, Fabbri A, Bero LA. 2016. Association of industry sponsorship with outcomes of nutrition studies: a systematic review and meta-analysis. JAMA Intern Med. 176(12):1769–1777.

- Claesen A, Gomes S, Tuerlinckx F, Vanpaemel W. 2019. Preregistration: comparing dream to reality. [accessed 2020 May 11].

- Coiera E, Ammenwerth E, Georgiou A, Magrabi F. 2018. Does health informatics have a replication crisis? J Am Med Inform Assoc. 25(8):963–968.

- Collins P, Shaffer HJ, Ladouceur R, Blaszczynski A, Fong D. 2019. Gambling research and industry funding. J Gambl Stud. 35(3):875–886.

- Cowlishaw S, Thomas SL. 2018. Industry interests in gambling research: lessons learned from other forms of hazardous consumption. Addict Behav. 78:101–106.

- Cumming G. 2014. The new statistics: Why and how. Psychol Sci. 25(1):7–29.

- Fainaru-Wada M, Fainaru S. 2017. NFL-NIH research partnership set to end with $16M unspent. [accessed 2020 May 11]. http://www.espn.com/espn/otl/story/_/id/20175509/nfl-donation-brain-research-falls-apart-nih-appears-set-move-bulk-30-million-donation.

- Gewin V. 2016. Data sharing: an open mind on open data. Nature. 529(7584):117–119.

- Goldacre B. 2014. Bad Pharma: How drug companies mislead doctors and harm patients. New York (NY): Macmillan.

- Griffiths MD, Auer M. 2015. Research funding in gambling studies: some further observations. Int Gambl Stud. 15(1):15–19.

- Hancock L, Smith G. 2017. Critiquing the Reno Model I–IV international influence on regulators and governments (2004–2015)— the distorted reality of “responsible gambling”. Int J Ment Health Addict. 15(6):1151–1176.

- Heirene R. In press. A call for replications of addiction research: which studies should we replicate and what constitutes a ‘successful’ replication? Addict Res Theory. DOI:10.1080/16066359.2020.1751130

- Houghton JW. 2009. Open access: What are the economic benefits? A comparison of the United Kingdom, Netherlands and Denmark. DOI: 10.2139/ssrn.149257

- Hutson M. 2018. Artificial intelligence faces reproducibility crisis. Science. 359(6377):725–729.

- Ioannidis JP. 2005. Why most published research findings are false. PLoS Med. 2(8):e124.

- Johns D, Oppenheimer G. 2018. Response-the sugar industry’s influence on policy. Science. 360(6388):501–502.

- Kearns CE, Schmidt LA, Glantz SA. 2016. Sugar industry and coronary heart disease research: a historical analysis of internal industry documents. JAMA Intern Med. 176(11):1680–1685.

- Kerr NL. 1998. HARKing: hypothesizing after the results are known. Pers Soc Psychol Rev. 2(3):196–217.

- Kim HS, Dobson KS, Hodgins DC. 2016. Funding of gambling research: ethical issues, potential benefit and guidelines. J Gambl Stud. 32(32):111–132.

- LaBrie RA, LaPlante DA, Nelson SE, Schumann A, Shaffer HJ. 2007. Assessing the playing field: a prospective longitudinal study of internet sports gambling behavior. J Gambl Stud. 23(3):347–362.

- Ladouceur R, Blaszczynski A, Shaffer HJ, Fong D. 2016. Extending the Reno model: responsible gambling evaluation guidelines for gambling operators, public policymakers, and regulators. Gaming Law Rev Econ. 20(7):580–586.

- Ladouceur R, Shaffer P, Blaszczynski A, Shaffer HJ. 2019. Responsible gambling research and industry funding biases. J Gambl Stud. 35(2):725–730.

- Lakens D. 2019. The value of preregistration for psychological science: a conceptual analysis. [accessed 2020 May 11].

- Lambert M. 2007. The misuse of science. S Afr J Sports Med. 19(1):2–2.

- LaPlante DA. 2019. Replication is fundamental, but is it common? A call for scientific self-reflection and contemporary research practices in gambling-related research. Int Gambl Stud. 19(3):362–368.

- LaPlante DA, Gray HM, Nelson SE. 2019. Should we do away with responsible gambling? In: Shaffer HJ, Blaszczynski A, Ladouceur R, Collins P, Fong D, editors. Responsible gambling: primary stakeholder perspectives. Oxford: Oxford University Press; p. 35–57.

- Livingstone C, Adams PJ. 2016. Clear principles are needed for integrity in gambling research. Addiction. 111(1):5–10.

- Mayo DG. 2018. Statistical inference as severe testing: How to get beyond the statistics wars. Cambridge: Cambridge University Press.

- Mervis J. 2017. Data check: U.S. government share of basic research funding falls below 50%. Science. https://www.sciencemag.org/news/2017/03/data-check-us-government-share-basic-research-funding-falls-below-50.

- Munafò MR, Nosek BA, Bishop DVM, Button KS, Chambers CD, Percie Du Sert N, Simonsohn U, Wagenmakers E-J, Ware JJ, Ioannidis JPA. 2017. A manifesto for reproducible science. Nat Hum Behav. 1(1):1–9.

- Nelson SE, Edson TC, Singh P, Tom M, Martin RJ, LaPlante DA, Gray HM, Shaffer HJ. 2019. Patterns of daily fantasy sport play: tackling the issues. J Gambl Stud. 35(1):181–204.

- Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ, Buck S, Chambers CD, Chin G, Christensen G, et al. 2015. Scientific Standards. Promoting an open research culture. Science. 348(6242):1422–1425.

- Nosek BA, Ebersole CR, DeHaven AC, Mellor DT. 2018. The preregistration revolution. Proc Natl Acad Sci USA. 115(11):2600–2606.

- Nuijten MB, Hartgerink CH, van Assen MA, Epskamp S, Wicherts JM. 2016. The prevalence of statistical reporting errors in psychology (1985–2013). Behav Res Methods. 48(4):1205–1226.

- Open Science Collaboration. 2015. Estimating the reproducibility of psychological science. Science. 349(6251):aac4716.

- Orford J. 2017. The gambling establishment and the exercise of power: a commentary on Hancock and Smith. Int J Ment Health Addict. 15(6):1193–1196.

- Perkmann M, Tartari V, McKelvey M, Autio E, Broström A, D’Este P, Fini R, Geuna A, Grimaldi R, Hughes A. 2013. Academic engagement and commercialisation: a review of the literature on university–industry relations. Res Policy. 42(2):423–442.

- Reilly C. 2019. Responsible gambling: organizational perspective. In: Shaffer HJ, Blaszczynski A, Ladouceur R, Collins P, Fong D, editors. Responsible gambling: primary stakeholder perspectives. Oxford: Oxford University Press; p. 211–219.

- Scheel AM, Schijen M, Lakens D. 2020. An excess of positive results: comparing the standard psychology literature with Registered Reports. [accessed 2020 May 11]. https://psyarxiv.com/p6e9c.

- Schüll ND. 2012. Addiction by design: machine gambling in Las Vegas. Princeton (NJ): Princeton University Press.

- Shaffer PM, Ladouceur R, Williams PM, Wiley RC, Blaszczynski A, Shaffer HJ. 2019. Gambling research and funding biases. J Gambl Stud. 35(3):875–886.

- Shaffer HJ, LaPlante DA, Chao YE, Planzer S, LaBrie RA, Nelson SE. 2009. Division on addictions creates new data repository. World Online Gambling Law Report. http://www.thetransparencyproject.org/publications/World_Online_Gambling_Law_Report_Volume_8_Issue_3_March_2009.pdf.

- Silberzahn R, Uhlmann EL, Martin DP, Anselmi P, Aust F, Awtrey E, Bahník Š, Bai F, Bannard C, Bonnier E, et al. 2018. Many analysts, one data set: making transparent how variations in analytic choices affect results. Adv Methods Pract Psychol Sci. 1(3):337–356.

- Simmons JP, Nelson LD, Simonsohn U. 2011. False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol Sci. 22(11):1359–1366.

- Simonsohn U, Nelson LD, Simmons JP. 2014. p-curve and effect size: correcting for publication bias using only significant results. Perspect Psychol Sci. 9(6):666–681.

- Sismondo S. 2008. How pharmaceutical industry funding affects trial outcomes: causal structures and responses. Soc Sci Med. 66(9):1909–1914.

- Tong EK, Glantz SA. 2007. Tobacco industry efforts undermining evidence linking secondhand smoke with cardiovascular disease. Circulation. 116(16):1845–1854.

- Veldkamp CLS, Bakker M, van Assen MALM, Crompvoets EAV, Ong HH, Soderberg CK, Wicherts JM. 2017. Restriction of opportunistic use of researcher degrees of freedom in pre‐registrations on the Open Science Framework. [accessed 2020 May 11]. https://psyarxiv.com/g8cjq.

- Wagenmakers EJ, Wetzels R, Borsboom D, van der Maas HL, Kievit RA. 2012. An agenda for purely confirmatory research. Perspect Psychol Sci. 7(6):632–638.

- Wicherts JM, Veldkamp CL, Augusteijn HE, Bakker M, Van Aert R, Van Assen MA. 2016. Degrees of freedom in planning, running, analyzing, and reporting psychological studies: a checklist to avoid p-hacking. Front Psychol. 7:1832.

- Wohl MJA, Davis CG, Hollingshead SJ. 2017. How much have you won or lost? Personalized behavioral feedback about gambling expenditures regulates play. Comput Hum Behav. 70:437–445.

- Wohl MJ, Wood RT. 2015. Is gambling industry-funded research necessarily a conflict of interest? A reply to Cassidy (2014). Int Gambl Stud. 15(1):12–14.

- Wohl MJ, Tabri N, Zelenski JM. 2019. The need for open science practices and well-conducted replications in the field of gambling studies. Int Gambl Stud. 19(3):369–376.

- Wohl MJ, Christie KL, Matheson K, Anisman H. 2010. Animation-based education as a gambling prevention tool: correcting erroneous cognitions and reducing the frequency of exceeding limits among slots players. J Gambl Stud. 26(3):469–486.

- Wright BD, Drivas K, Lei Z, Merrill SA. 2014. Technology transfer: industry-funded academic inventions boost innovation. Nature. 507(7492):297–299.

- Yamada Y. 2018. How to crack pre-registration: toward transparent and open science. Front Psychol. 9:1831.

- Yamashita T, Brown JS. 2017. Does cohort matter in the association between education, health literacy and health in the USA? Health Promot Int. 32(1):16–24.