Abstract

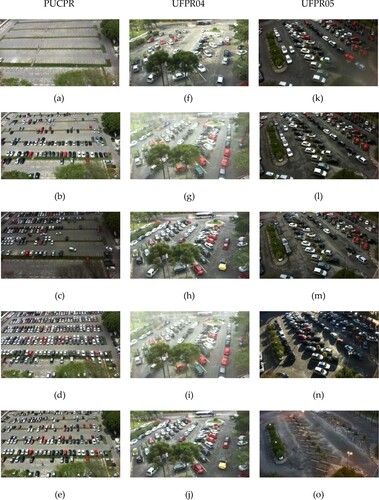

Smart cities are a new concept in urban development, and mobility is one of their defining characteristics. Finding a parking space is a major mobility problem in such cities, which makes an intelligent parking system necessary. This work aims to detect vacant outdoor parking spaces using an advanced saliency detection method and an efficient feature extraction model. Experiments were conducted on the publicly available "PKLot" dataset, which consists of 695,899 segmented images. The images were taken from three camera positions in two parking lots in Brazil under different weather conditions, including sunny, cloudy, and rainy days. The experimental results show that the hybrid feature extraction model improves the performance of parking detection systems. On the three subsets PUCPR, UFPR04, and UFPR05, we obtain accuracies of 99.93%, 99.89%, and 99.87%, respectively. The hybrid feature extraction model therefore achieved its highest accuracy on the PUCPR subset.

1. Introduction

Smart cities offer great opportunities in terms of technological development and accessibility. With modernization, vehicle ownership has grown enormously, and in many towns and cities drivers in densely populated areas struggle to find parking spaces. Because parking space is scarce, drivers cannot travel as conveniently as they would like, and the problem gives rise to others such as traffic congestion, long searches for a suitable parking space, and poor parking accessibility [Citation1].

Many researchers have contributed to this area. Al-Kharusi et al. [Citation2] proposed an intelligent system that detects parking spaces using image processing techniques: images of the parking lot are captured and processed, and drivers use the processed information to find an available space. A parking management system based on License Plate Recognition (LPR) was introduced in [Citation3]. This system retrieves accurate vehicle information and records the vehicle's access time. It also offers video streaming, which provides the latest information about a vehicle quickly. The authors report that the LPR model achieves a success rate of 95% in real-time operation.

A more promising parking space detection system based on image processing was introduced in [Citation4]. Detecting moving objects is less efficient and less accurate than detecting objects in still images. The system relies on image processing rather than sensors, because sensor-based systems are less effective and less cost-efficient. The survey in [Citation5] reviewed existing parking services, discussed their economic viability, and highlighted problems of current parking methods such as a lack of reliability, modernity, and efficiency. A secure parking system based on video analytics that detects human activity and user interaction was proposed in [Citation6]. Incoming and outgoing vehicles are registered with an image registration algorithm to keep track of available parking slots, and support vector machine classification is used to monitor activities in the parking area.

An efficient car parking system based on a wireless sensor network was developed in [Citation7], in which sensor nodes detect and monitor the availability of parking spaces. The staff who manage the parking system use the collected data to learn about vacant spaces, security, and usage statistics. The system was implemented with CrossBow motes. In [Citation8], the authors described a method that uses a static surveillance camera to estimate the size of unmeasured free parking areas. Each of these parking systems has its own merits and limitations.

After careful observation, it is clear that a new intelligent outdoor parking system is needed. Many existing car parks have no parking management system and are handled manually, without the support of any technology. As a result, people often spend a long time finding a suitable parking space for their vehicles. The problem is more severe in metropolitan cities than in rural areas, mainly because modern technologies have not been installed in parking areas.

The present work aims to develop an intelligent parking system based on hybrid feature extraction techniques rather than sensors. A feature extraction-based approach avoids the cost of sensors and the difficulty of wiring them. Rising population, industrialization, and poorly managed parking are making parking problems worse every day. An intelligent outdoor parking system is therefore needed that can tackle these problems efficiently, accurately, and securely.

The remainder of the paper is organized into four sections. Section 2 reviews related work, and Section 3 describes the proposed optimized model and the preprocessing of the dataset used in the experiments. Section 4 presents the experimental results, and Section 5 concludes the paper with directions for future work.

2. Related works

Many studies have addressed the development of efficient parking systems. Almeida et al. [Citation9] presented a parking lot dataset for researchers, consisting of 105,837 images captured from different angles in various parking areas. A parking lot dataset of 480,000 images taken under different climatic conditions was proposed in [Citation10] and used with a convolutional neural network. These collected images highlight the primary challenges that different classification techniques must address. Other studies use features such as colour histograms [Citation11] and colour spaces [Citation12].

Delibaltov et al. [Citation13] developed a framework based on 3D models that can handle the occlusions encountered when computing the probability that a parking space is vacant. The study focused mainly on removing occlusions, which occur frequently with traditional methods, and used Support Vector Machines (SVM) to do so. To overcome problems caused by illumination changes, cars were detected with a Bayesian classifier based on edge, corner, and wavelet features [Citation14]. A method that uses aerial images as input to detect empty spaces in parking lots was proposed in [Citation15]. Other authors proposed algorithms that learn a set of canonical parking spaces and automatically estimate the parking lot structure from a pre-trained model [Citation16–23]. In [Citation24], the authors used image-based methods to distinguish empty spaces from cars. A video-based monitoring approach for outdoor parking lots was developed in [Citation25,Citation26].

Horprasert et al. [Citation27] presented a background subtraction method for static camera images that estimates the background at specific time intervals and statistically arranges the data to calculate parking lot occupancy. In [Citation28,Citation29], Gabor filters were used to train a classifier on images of unoccupied parking slots captured under different lighting conditions, so that the system can detect whether a vehicle is present in a slot.

A Bayesian framework for detecting vacant parking spaces that works in both day and night conditions was proposed in [Citation30]. Masmoudi et al. [Citation31] focused on occlusions in parking lots, explaining that the gaps between parked cars are often missed, which affects the accuracy of the system. In [Citation32,Citation33], a sensor-based solution was provided for collecting real-time parking availability data. Jermsurawong et al. [Citation34] presented a customized neural network that determines the occupancy of parking spaces from basic visual features. Other recent work on IoT-based techniques, image processing, and fog removal has also been studied [Citation35–39]. A summary of the literature and the reported performance is presented in Table 1.

Table 1. Literature review of existing parking systems.

A smart parking system for smart cities based on ultrasonic and radar detection was implemented in [Citation40]. It reduces traffic jams, air pollution, and the waiting time to park, and it operates autonomously. Bluetooth technology lets users identify vacant slots. The system is suitable for shopping malls, cinema halls, universities, and similar venues. Two variants of a parking system are discussed in [Citation41]; the authors concluded that the Internet of Things (IoT) is a suitable technology and used IoT level 1 to implement their smart parking system. In [Citation42], users can identify empty parking slots from a smartphone or laptop. Wireless sensors, computer vision, and Android are used to identify available parking spaces in [Citation43].

Numerous authors have proposed Parking Detection Systems (PDS) based on sensor networks or on vision. Sensor networks are expensive to install, so it is impractical to equip large parking lots with an individual sensor for every space. Vision-based systems are therefore more attractive than sensor-based systems in terms of cost and maintenance. Accordingly, this paper proposes a framework that optimizes parking systems using advanced feature extraction and machine learning techniques.

3. Materials and methods

A critical review of the literature on parking systems, and on the techniques and tools used for video and image processing, shows that no efficient and effective system exists for managing outdoor parking. Finding a vacant parking space in urban areas is tedious: it wastes time and frustrates visitors and customers. An efficient car-park routing system could help drivers find a suitable parking space immediately. Existing systems rely either on sensors, which are expensive, or on video-based technologies that do not work reliably across sunny, cloudy, and rainy weather. This study designs and implements a hybrid model to detect vacant slots in outdoor parking lots. Its main aim is to evaluate more advanced feature extractors for classification so that correct information about the observed outdoor parking lots can be obtained.

3.1. Dataset

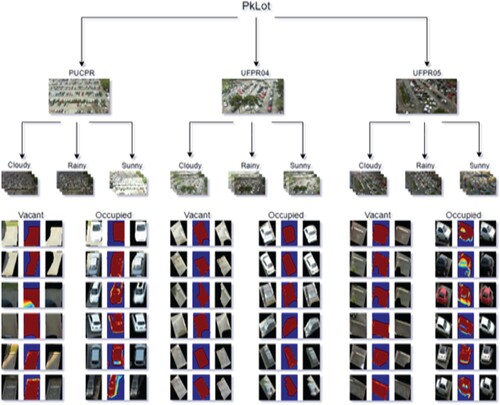

The absence of a standard, stable dataset is one of the biggest obstacles researchers face in parking space classification. "PKLot" is a robust image dataset for detecting parking spaces in parking lots. It consists of 12,417 images of parking lots and 695,899 segmented images of parking spaces. The images were captured at the parking areas of the Federal University of Parana (UFPR), from the 4th and 5th floors, and from the 10th floor of the administration building of the Pontifical Catholic University of Parana (PUCPR), in Curitiba, Brazil. They were captured at 5-minute intervals with a low-cost full High Definition (HD) camera (Microsoft LifeCam HD-5000) mounted at the top of a building to minimize occlusion between adjacent vehicles. Images were collected in this way for more than 30 days in order to cover various weather conditions. The captured images were stored in JPEG (Joint Photographic Experts Group) format with lossless compression at a resolution of 1280 × 720 pixels and were grouped into three subsets named PUCPR, UFPR04 and UFPR05. The dataset has become popular in the computer vision research community because it contains images captured under varied weather and illumination conditions from different angles. The figure above illustrates challenges in the dataset such as shadows, sunlight overexposure, and low light on rainy days.

3.2. Experimental framework

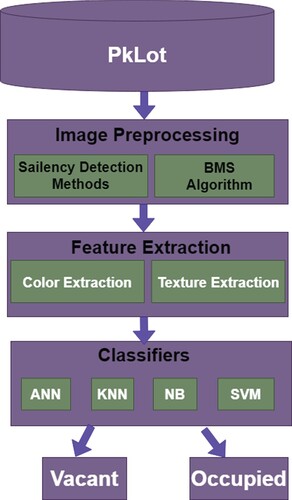

The proposed outdoor parking detection system begins by collecting parking lot images, either from a standard dataset or from an input device such as a CCTV (Closed Circuit Television) camera, and ends with a detection stage in which the status of each parking space (vacant or occupied) is determined using the hybrid feature extraction model. The main stages are preprocessing, feature extraction, classification, and testing of the proposed model on various performance measures. The figure above shows the proposed design of the outdoor parking detection system; each stage is explained in the following subsections.

3.3. Image pre-processing

Images must be pre-processed to remove noise, and image enhancement is one of the most important pre-processing steps. Various image enhancement algorithms have been developed [Citation44–48]; after surveying them, we found Contrast Limited Adaptive Histogram Equalization (CLAHE) to be the most suitable for this task. Image segmentation then splits an image into foreground and background, or into regions or categories. Many image segmentation techniques exist in the literature [Citation49–60], such as edge detection-based, region-based, watershed-based, clustering-based and saliency-based methods. First, the input images are resized before the pre-processing steps are applied. Saliency detection, which finds the most noticeable object in an image, has recently come to play a central role in computer vision applications. In this study, a saliency detection method is therefore used to focus on the foreground object without interference from the background.
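CLAHE is only named above, so the following is a minimal NumPy sketch of how it works, not the implementation used in this study. The tile grid (8 × 8), the clip limit (2.0, relative to the mean bin count) and the single-pass redistribution of clipped counts are our assumptions; the paper does not state its CLAHE parameters. Each tile receives a clipped-histogram mapping, and every pixel is mapped by bilinearly interpolating the mappings of its four nearest tiles, which avoids visible seams between tiles.

```python
import numpy as np


def clahe(img, tiles=(8, 8), clip_limit=2.0, nbins=256):
    """Contrast Limited Adaptive Histogram Equalization of a 2-D uint8 image.

    Simplified sketch (assumed parameters): height and width must be divisible
    by the tile grid, and clipped counts are redistributed in a single pass.
    """
    img = np.asarray(img)
    h, w = img.shape
    ty, tx = tiles
    sh, sw = h // ty, w // tx
    if sh * ty != h or sw * tx != w:
        raise ValueError("image size must be divisible by the tile grid")
    v = img.astype(np.intp)
    luts = np.empty((ty, tx, nbins))
    for i in range(ty):
        for j in range(tx):
            tile = v[i * sh:(i + 1) * sh, j * sw:(j + 1) * sw]
            hist = np.bincount(tile.ravel(), minlength=nbins).astype(float)
            # Clip each bin at clip_limit times the mean bin count ...
            limit = max(clip_limit * tile.size / nbins, 1.0)
            excess = np.maximum(hist - limit, 0.0).sum()
            # ... and spread the clipped excess evenly over all bins.
            hist = np.minimum(hist, limit) + excess / nbins
            # Tile mapping = scaled cumulative histogram (ends at nbins - 1).
            luts[i, j] = np.cumsum(hist) * (nbins - 1) / tile.size

    def axis_weights(n, size, count):
        # Pixel position in units of tile centres, plus the two nearest tiles.
        pos = (np.arange(n) + 0.5) / size - 0.5
        lo = np.clip(np.floor(pos).astype(int), 0, count - 1)
        hi = np.clip(lo + 1, 0, count - 1)
        frac = np.clip(pos - lo, 0.0, 1.0)
        return lo, hi, frac

    y0, y1, fy = axis_weights(h, sh, ty)
    x0, x1, fx = axis_weights(w, sw, tx)
    y0, y1, fy = y0[:, None], y1[:, None], fy[:, None]
    x0, x1, fx = x0[None, :], x1[None, :], fx[None, :]
    # Bilinear interpolation between the mappings of the four nearest tiles.
    top = (1 - fx) * luts[y0, x0, v] + fx * luts[y0, x1, v]
    bottom = (1 - fx) * luts[y1, x0, v] + fx * luts[y1, x1, v]
    out = (1 - fy) * top + fy * bottom
    return np.clip(np.round(out), 0, nbins - 1).astype(np.uint8)
```

On a low-contrast image (for example, grey levels between 100 and 119), the output spreads the intensities over a wider range, while the clip limit keeps noise in flat regions from being over-amplified.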

3.3.1. Saliency detection method

Saliency detection is the automatic process of locating the most important parts of an image, that is, of determining what stands out in it. Its main application is object detection in images, and its main advantage is that it requires relatively few resources and little time. Saliency detection also refines inaccurate estimates of the salient regions of an image. It combines colour and spatial information to compute features of image regions: each pixel is represented by its average colour features and its coordinates. Saliency detection highlights the objects in an image and increases their contrast with the surrounding area, so that the background is suppressed and the salient parts (the foreground) stand out with high contrast. Objectness saliency draws bounding boxes around objects in the image, and it is used here to identify vacant places in the parking lot: when a car is identified in the image, a bounding box is drawn and the features discussed in the following section are extracted.

The steps used for saliency detection are as follows.

Transform the image into the colour space

Find the number of levels $n = \log_2(\min(w, h)/10)$, where $w$ and $h$ are the width and height of the original image, and build a Gaussian pyramid of the image with $n$ levels.

Calculate the contrast map of the image, i.e. the difference between each pixel $(x, y)$ and its neighbouring pixels.

Construct the saliency map by summing the contrast maps over all scales of the pyramid.

Calculate the saliency value of each region as the average saliency value of its pixels. This highlights the salient regions with accurate boundaries.
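The five steps above can be sketched in Python with NumPy as follows. This is an illustrative sketch under several assumptions not stated in the text: the image is already in the target colour space (step 1), the Gaussian is approximated by a 5-tap binomial kernel, the level count $n$ is rounded down, contrast is the sum of squared colour differences to the 8 neighbours, and the region labels for step 5 are supplied by the caller (for example, from a segmentation step).

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # binomial approximation of a Gaussian


def blur(img):
    """Separable 5-tap Gaussian-like blur of an (h, w, c) array with edge padding."""
    out = img
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (2, 2)
        p = np.pad(out, pad, mode="edge")
        n = out.shape[axis]
        out = sum(k * np.take(p, np.arange(i, i + n), axis=axis) for i, k in enumerate(KERNEL))
    return out


def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(blur(pyr[-1])[::2, ::2])  # blur, then halve the resolution
    return pyr


def contrast_map(img):
    """Sum of squared colour differences between each pixel and its 8 neighbours."""
    p = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    c = np.zeros((h, w))
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                nb = p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
                c += np.sum((img - nb) ** 2, axis=-1)
    return c


def upsample(m, shape):
    """Nearest-neighbour resize of a 2-D map to `shape`."""
    rows = np.minimum(np.arange(shape[0]) * m.shape[0] // shape[0], m.shape[0] - 1)
    cols = np.minimum(np.arange(shape[1]) * m.shape[1] // shape[1], m.shape[1] - 1)
    return m[rows[:, None], cols[None, :]]


def saliency_map(img, labels=None):
    """Multi-scale contrast saliency normalised to [0, 1].

    img: (h, w, c) array, assumed to be already in the chosen colour space.
    labels: optional (h, w) integer region map for step 5 (hypothetical input).
    """
    img = np.asarray(img, dtype=float)
    if img.ndim == 2:
        img = img[..., None]
    h, w = img.shape[:2]
    # Step 2: n = log2(min(w, h) / 10), rounded down here (assumption), at least 1.
    levels = max(1, int(np.floor(np.log2(min(w, h) / 10))))
    # Steps 3-4: contrast map per pyramid level, resized and summed over scales.
    sal = sum(upsample(contrast_map(lvl), (h, w)) for lvl in gaussian_pyramid(img, levels))
    if labels is not None:
        # Step 5: each region takes the average saliency of its pixels.
        flat = labels.ravel()
        means = np.bincount(flat, weights=sal.ravel()) / np.maximum(np.bincount(flat), 1)
        sal = means[labels]
    peak = sal.max()
    return sal / peak if peak > 0 else sal
```

For a bright square on a dark background, the square's boundary receives high saliency while distant background pixels stay at zero; passing a region map spreads the boundary response over the whole square.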

3.3.2. Saliency detection method to extract region of interest (ROI)

Humans always focus on the most important and informative region of an image when they see it. We therefore use a saliency detection method to focus on the foreground object without interference from the background. In this work, we used the Boolean Map-based Saliency (BMS) algorithm [Citation61] for salient object detection.

3.3.3. Parameters used in BMS algorithm

Image: An image is characterized by a set of binary images. These binary images are generated by randomly thresholding the image’s colour channels.

Gestalt principle: Gestalt is a German word meaning "shape" or "form". The Gestalt principles [Citation62,Citation63] aim to formulate the regularities according to which perceptual input is organized into unitary forms. These principles are applied mainly to vision. In visual perception, such forms are regions of the visual field whose portions are perceived as grouped or joined together and are thus segregated from the rest of the visual field. According to these principles, several factors influence figure-ground segregation, such as size, surroundedness, convexity and symmetry.

Boolean Maps: A Boolean map $B$ represents an observer's momentary conscious awareness of a scene. Boolean maps are generated from randomly selected feature channels. For example, given an image $I$, a set of Boolean maps $\mathcal{B} = \{B_1, B_2, \dots, B_n\}$ is generated.

Attention Map: An attention map $A(B)$ describes the influence of a Boolean map $B$ on visual attention, highlighting the regions of $B$ that attract attention. It is computed efficiently with binary image processing techniques that activate the regions with closed outer contours on a given Boolean map.

Mean Attention Map: A mean attention map $\bar{A}$ is obtained through a linear combination of the resulting attention maps.

Saliency: The saliency is modelled by the mean attention map $\bar{A}$ over randomly generated Boolean maps. Finally, post-processing is applied to the mean attention map to output a saliency map $S$.

3.3.4. Working of BMS algorithm

The Boolean map concept is used to obtain a saliency model. BMS characterizes the topological structure of Boolean maps to compute a saliency map based on the Gestalt principle of figure-ground segregation [Citation61]; specifically, it uses the surroundedness cue. This cue is chosen because it captures the topological relationship between figure and ground and is robust to various image transformations. BMS measures surroundedness on a set of Boolean maps: binary image processing techniques compute an attention map that activates the regions with closed outer contours on each Boolean map. The saliency $S$ is then modelled by the mean attention map over the set of randomly sampled Boolean maps. This mean (expected) attention map can also serve as a full-resolution preliminary saliency map $S$ for specific tasks.
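The surroundedness idea behind BMS can be illustrated with a short sketch. It is a simplified version, not the reference BMS implementation of [Citation61]: thresholds are drawn uniformly at random for each channel, regions are 4-connected, and the dilation and normalisation steps of the original algorithm are omitted. A pixel receives attention from a Boolean map (or its complement) only if its connected region does not touch the image border, and the mean attention map is rescaled to [0, 1] as the saliency map.

```python
import numpy as np


def border_connected(mask):
    """Pixels of a Boolean mask that are 4-connected to the image border."""
    seed = np.zeros_like(mask)
    seed[0, :], seed[-1, :] = mask[0, :], mask[-1, :]
    seed[:, 0], seed[:, -1] = mask[:, 0], mask[:, -1]
    while True:  # grow inside the mask until nothing changes
        grown = seed.copy()
        grown[1:, :] |= seed[:-1, :]
        grown[:-1, :] |= seed[1:, :]
        grown[:, 1:] |= seed[:, :-1]
        grown[:, :-1] |= seed[:, 1:]
        grown &= mask
        if np.array_equal(grown, seed):
            return seed
        seed = grown


def attention_map(boolean_map):
    """Surroundedness: keep regions of B and of its complement that do not touch the border."""
    att = np.zeros(boolean_map.shape)
    for b in (boolean_map, ~boolean_map):
        att += b & ~border_connected(b)
    return att


def bms_saliency(img, n_thresholds=8, seed=0):
    """Mean attention map over randomly thresholded channels, rescaled to [0, 1]."""
    img = np.asarray(img, dtype=float)
    if img.ndim == 2:
        img = img[..., None]
    rng = np.random.default_rng(seed)
    maps = []
    for c in range(img.shape[-1]):
        ch = img[..., c]
        # Assumed sampling scheme: thresholds uniform over the channel's range.
        for t in rng.uniform(ch.min(), ch.max(), size=n_thresholds):
            maps.append(attention_map(ch > t))
    mean_attention = np.mean(maps, axis=0)
    peak = mean_attention.max()
    return mean_attention / peak if peak > 0 else mean_attention
```

A bright object fully enclosed by background is marked salient, whereas the same object touching the image border receives no attention, which is exactly the figure-ground behaviour the surroundedness cue encodes.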

3.4. Feature extraction

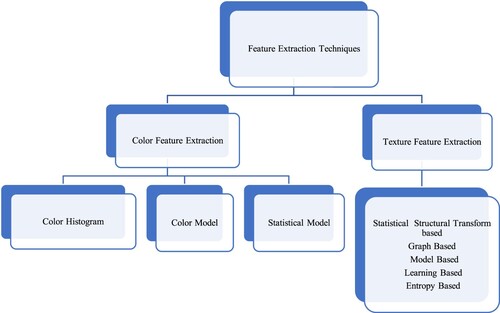

Feature extraction is applied after the images are pre-processed. It transforms an image into a set of features that uniquely represents it, also called a feature vector. Many feature extraction techniques have been discussed in the literature [Citation64–74]; the most important image features are colour, texture, and shape. Since the objective of this study is to detect the status of a parking space (vacant or occupied), the model uses two classes of feature descriptors: colour and texture. Some of these techniques are summarized in the figure above for basic understanding.

Saliency detection finds physical objects but does not distinguish a shadow from a car, so feature extraction techniques are used to identify the cars in the images. The colour features considered in this paper are standard deviation, skewness, colour histogram, and average RGB. The statistical features are range, mean, variance, and HOG. The texture features are entropy, contrast, correlation, energy, homogeneity, coarseness, directionality, regularity, roughness, and line-likeness. After the HOG features are extracted and correlated, a graph model processes them to produce a geometric car model. This model is used to find the distance between adjacent detected cars and hence to classify a region as a vacant parking spot; in this way, the graph model discriminates vacant spots from occupied spots in the observed parking lot.

3.5. Proposed hybrid feature extraction

A hybrid feature extraction model based on colour and texture features is proposed. The authors use a statistical model (range, mean, variance) for colour feature extraction and the HOG algorithm for texture feature extraction. The two feature sets are then combined into a hybrid feature set, as described in Algorithms 1–3.
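The combination step can be pictured as a simple concatenation of per-image vectors. The sketch below assumes the colour and texture features have already been computed (one row per parking-space image) and also shows a 70/30 hold-out split of the kind used later for testing; stratifying by class and the fixed random seed are our assumptions, and the function names are hypothetical.

```python
import numpy as np


def build_hybrid_features(colour_feats, texture_feats):
    """Concatenate per-image colour and texture vectors into one hybrid matrix."""
    colour_feats = np.atleast_2d(np.asarray(colour_feats, dtype=float))
    texture_feats = np.atleast_2d(np.asarray(texture_feats, dtype=float))
    if colour_feats.shape[0] != texture_feats.shape[0]:
        raise ValueError("need one colour and one texture vector per image")
    return np.hstack([colour_feats, texture_feats])


def stratified_split(X, y, test_fraction=0.3, seed=0):
    """Hold out `test_fraction` of each class (vacant/occupied) for testing."""
    X, y = np.asarray(X), np.asarray(y)
    rng = np.random.default_rng(seed)
    test_idx = []
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        test_idx.extend(idx[: int(round(test_fraction * len(idx)))])
    test_mask = np.zeros(len(y), dtype=bool)
    test_mask[np.asarray(test_idx, dtype=int)] = True
    return X[~test_mask], y[~test_mask], X[test_mask], y[test_mask]
```

Stratifying keeps the vacant/occupied ratio of the test set close to that of the full data, which matters when one class dominates.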

Colour features are computed with a statistical method: the range, mean and variance of each of six colour channels (three RGB channels and three HSV channels), giving a total of 18 features, F1–F18, as described in Algorithm 1.
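A possible implementation of the 18 colour features is sketched below. The ordering (six ranges, then six means, then six variances), the use of population variance and the scaling of all channels to [0, 1] are assumptions, since Algorithm 1 itself is not reproduced here; the HSV conversion follows the standard hexcone formulas.

```python
import numpy as np


def rgb_to_hsv(rgb):
    """Vectorised RGB -> HSV for floats in [0, 1]; H, S and V are returned in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)
    delta = v - rgb.min(axis=-1)
    s = np.where(v > 0, delta / np.where(v > 0, v, 1.0), 0.0)
    d = np.where(delta > 0, delta, 1.0)  # avoid division by zero
    h = np.where(v == r, ((g - b) / d) % 6.0,
                 np.where(v == g, (b - r) / d + 2.0, (r - g) / d + 4.0)) / 6.0
    h = np.where(delta > 0, h, 0.0)  # hue is undefined for greys: use 0
    return np.stack([h, s, v], axis=-1)


def colour_features(rgb_uint8):
    """18 colour features: range, mean and variance of R, G, B, H, S and V."""
    rgb = np.asarray(rgb_uint8, dtype=float) / 255.0
    channels = np.concatenate([rgb, rgb_to_hsv(rgb)], axis=-1).reshape(-1, 6)
    ranges = channels.max(axis=0) - channels.min(axis=0)
    means = channels.mean(axis=0)
    variances = channels.var(axis=0)  # population variance (assumption)
    return np.concatenate([ranges, means, variances])  # F1..F18 (order assumed)
```

For a uniformly red patch, all ranges and variances are zero and the six means are (1, 0, 0) for RGB and (0, 1, 1) for HSV.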


Algorithm 2 extracts the texture features using the HOG descriptor. Given a truecolor or grayscale input image I, it returns the extracted HOG features as a 1-by-N vector, where N is the HOG feature length.
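For readers unfamiliar with HOG, the following is a simplified NumPy sketch of the descriptor for a grayscale image. It uses 8 × 8-pixel cells, 2 × 2-cell blocks overlapping by one cell, nine unsigned orientation bins and L2 block normalisation (common choices, assumed here). It omits refinements such as interpolated voting that library implementations typically use, so its output will not match MATLAB's extractHOGFeatures exactly; only the 1-by-N output shape is mirrored.

```python
import numpy as np


def hog_features(gray, cell=8, block=2, nbins=9, eps=1e-6):
    """Simplified HOG: unsigned gradients, hard binning, overlapping L2-normalised blocks."""
    g = np.asarray(gray, dtype=float)
    gx = np.zeros_like(g)
    gy = np.zeros_like(g)
    gx[:, 1:-1] = g[:, 2:] - g[:, :-2]  # centred [-1, 0, 1] derivatives
    gy[1:-1, :] = g[2:, :] - g[:-2, :]
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    bins = np.minimum((ang / (180.0 / nbins)).astype(int), nbins - 1)
    ncy, ncx = g.shape[0] // cell, g.shape[1] // cell
    hist = np.zeros((ncy, ncx, nbins))
    for i in range(ncy):  # magnitude-weighted orientation histogram per cell
        for j in range(ncx):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            hist[i, j] = np.bincount(bins[sl].ravel(), weights=mag[sl].ravel(), minlength=nbins)
    feats = []
    for i in range(ncy - block + 1):  # blocks overlap by one cell
        for j in range(ncx - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats)[None, :]  # 1-by-N row vector
```

For a 64 × 64 patch this gives 7 × 7 blocks of 36 values, so N = 1764; a constant patch has no gradients and yields an all-zero vector.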

Finally, Algorithm 3, the proposed hybrid feature extraction based on the combined colour and texture features, is described below.

Algorithm 3 is the hybrid feature extraction algorithm. Line 1 determines whether the image of a parking space is occupied or vacant: if it is vacant, control jumps to line 8; otherwise, control jumps to line 17. The method features_extraction_color() extracts the colour features and builds the vehicle's colour model; a colour model for the vacant case is built in the same way. Unimportant features are discarded to reduce time complexity, and HOG is finally applied to extract the critical features. This method analyses the occupancy context to determine whether a spot is occupied. It examines the neighbouring pixels of initial seed points and determines which neighbours belong to the region of interest; this procedure continues until the difference between the segment and a new pixel exceeds the specified threshold. The method features_extraction_texture() extracts the texture features and uses them for edge detection to determine whether a car is present. All extracted features are stored in the HYBRID_FEATURES vector. An SVM, identified in the literature as one of the most promising machine learning classifiers [Citation75,Citation76], is then applied to the hybrid feature vector. 30% of the data is reserved for testing, and the test set is evaluated with the hybrid model built in the training phase. The model is evaluated on accuracy, sensitivity, specificity, false-positive rate, and false-negative rate [Citation77], and these parameters are finally compared with existing state-of-the-art techniques.
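The seed-based region growing described in the paragraph above can be sketched as follows. Comparing each candidate pixel with the current region mean and using 4-connectivity are our assumptions; the text states only that growth is governed by a threshold on the difference between the segment and a new pixel. The function name is hypothetical.

```python
from collections import deque

import numpy as np


def region_grow(img, seeds, threshold):
    """Grow a region from seed pixels over 4-connected neighbours.

    A neighbour joins while |pixel - current region mean| <= threshold (assumed
    criterion); growth stops once every candidate differs by more than that.
    """
    img = np.asarray(img, dtype=float)
    region = np.zeros(img.shape, dtype=bool)
    for y, x in seeds:
        region[y, x] = True
    total, count = sum(img[y, x] for y, x in seeds), len(seeds)
    queue = deque(seeds)
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            inside = 0 <= ny < img.shape[0] and 0 <= nx < img.shape[1]
            if inside and not region[ny, nx] and abs(img[ny, nx] - total / count) <= threshold:
                region[ny, nx] = True
                total += img[ny, nx]
                count += 1
                queue.append((ny, nx))
    return region
```

Using the running region mean, rather than the seed value alone, lets the region follow gradual intensity changes such as soft shading across a car body.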

Each image is normalized with respect to global parameters (average, variance and a reference image). After features are extracted from each frame, a Support Vector Machine (SVM) is applied to identify vacant spots in the parking lot. Because the exact position and size of the cars are not known, the vehicle position cannot be specified during classification. Instead, the HOG feature vector is passed to the SVM classifier, which predicts the class as either vacant or occupied. A binary SVM is trained to classify segments into these two classes based on the pixel values of each segment. Summing the results over all segments gives the classification score, so repeating this for multiple segments lets the SVM decide accurately whether an object is a car. The SVM also produces probabilities for its predictions.

Model performance is measured in terms of accuracy and the time taken to classify a single image. Accuracy is the proportion of correctly classified images in the whole image dataset:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where

TP: True positive (an occupied space correctly recognized as occupied)

TN: True negative (a vacant space correctly recognized as vacant)

FP: False positive (a vacant space not recognized as vacant, i.e. classified as occupied)

FN: False negative (an occupied space not recognized as occupied, i.e. classified as vacant).
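The accuracy formula, together with the other measures reported later (sensitivity, specificity, false-positive rate and false-negative rate), follows directly from the four counts. A minimal sketch, taking "occupied" as the positive class; the function name is hypothetical.

```python
def classification_metrics(tp, tn, fp, fn):
    """Metrics from confusion-matrix counts (positive class = occupied)."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # true-positive rate (recall)
        "specificity": tn / (tn + fp),  # true-negative rate
        "fpr": fp / (fp + tn),          # false-positive rate = 1 - specificity
        "fnr": fn / (fn + tp),          # false-negative rate = 1 - sensitivity
    }
```

For example, the hypothetical counts TP = 90, TN = 95, FP = 5 and FN = 10 (not results from this study) give an accuracy of 185/200 = 0.925, a sensitivity of 0.9 and a specificity of 0.95.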

3.6. Occupancy detection

Occupancy detection requires highlighting the changes in each region of interest with respect to the image background. To detect changes in a frame, the regions of interest are first determined; each region of interest corresponds to a parking spot, and the pixel-wise difference is accumulated within each region. Vacant spots are detected by subtracting the background from the current frame: if the difference exceeds a predefined threshold, a vehicle is detected. The difference is scaled and stored to detect changes in the region of interest, and comparing the current pixel values with their historical values identifies the pixels whose change exceeds the threshold.
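A minimal sketch of the per-ROI background subtraction described above. The static background frame, the rectangular ROI layout and both thresholds (a pixel counts as changed above 30 grey levels; a space is occupied when more than 20% of its pixels changed) are hypothetical values chosen for illustration; in practice the background would be maintained from historical frames as described.

```python
import numpy as np


def occupancy_from_background(frame, background, rois, pixel_thresh=30.0, fraction_thresh=0.2):
    """Return {space_id: True if occupied} using background subtraction.

    frame, background: 2-D grayscale arrays of equal shape.
    rois: {space_id: (row0, row1, col0, col1)}, a hypothetical rectangular layout.
    pixel_thresh, fraction_thresh: hypothetical thresholds.
    """
    diff = np.abs(np.asarray(frame, dtype=float) - np.asarray(background, dtype=float))
    changed = diff > pixel_thresh  # pixel-wise change mask
    return {space: bool(changed[r0:r1, c0:c1].mean() > fraction_thresh)
            for space, (r0, r1, c0, c1) in rois.items()}
```

Deciding on the fraction of changed pixels, rather than on any single pixel, makes the decision less sensitive to isolated noise.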

4. Results

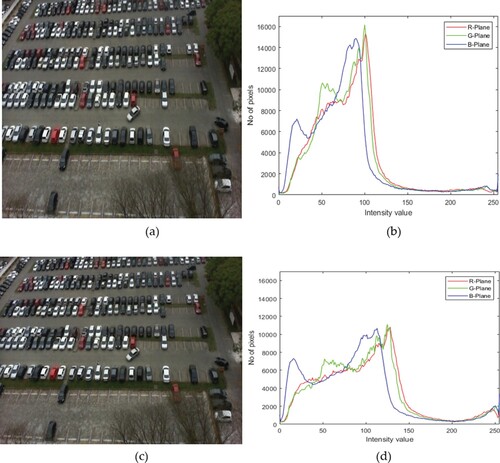

The experiments were conducted on the three subsets (PUCPR, UFPR04 and UFPR05), covering rainy, sunny, and cloudy conditions, using [Citation78]. Figure 4 shows an image before and after applying the CLAHE algorithm, together with the corresponding histograms.

Figure 4. (a) Original Cloudy image, (b) Histogram of original Image, (c) Image after CLAHE and (d) Histogram of Enhanced Image.

Next, saliency detection was applied to discard the unnecessary background from the images of vacant and occupied parking spaces. Small segments of the original dataset images were used to monitor parking occupancy. Figure 5 shows the ROI extracted with the saliency detection method, which was used to achieve a relatively significant improvement in accuracy.

Figure 5. Arrangement of dataset challenges (a) original frame (b) Saliency (c) vacant or occupied frame.

We also applied several existing machine learning classifiers, namely k-nearest neighbours (KNN), artificial neural networks (ANN), the naive Bayes classifier (NBC) and SVM, to all three subsets and compared them with the proposed model; the results are given in Table 2. For the PUCPR subset, the proposed model achieved an accuracy of 99.93% with a processing time of 10.35 ms. The other classifiers took longer. The proposed hybrid feature extraction model achieved the highest accuracy and the shortest processing time on all three subsets.

Table 2. Comparison of proposed with the different existing models.

Table 2 shows that SVM is a powerful classifier that performs well for binary classification. SVM maximizes the distance between the samples and the boundary that separates the classes (occupied or vacant). Its robustness, its handling of outliers, and the kernel trick, which maps a non-linearly separable dataset into a higher-dimensional space where a separating hyperplane can be found, make it more effective here than the other classifiers.

The SVM binary classification algorithm searches for an optimal hyperplane that separates the data into two classes. For separable classes, the optimal hyperplane maximizes a margin surrounding itself, which creates boundaries for the positive and negative classes. For inseparable classes, the objective is the same, but the algorithm imposes a penalty on the length of the margin for every observation that is on the wrong side of its class boundary.
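The margin-and-penalty idea can be made concrete with a small soft-margin linear SVM trained by Pegasos-style stochastic sub-gradient descent. This illustrates the objective only; it is not the MATLAB fitcsvm solver or the Gaussian-kernel model used in this study. The regularisation strength, the epoch count and the folding of the bias into the weight vector (so the bias is also regularised) are our assumptions, and the function names are hypothetical.

```python
import numpy as np


def _augment(X):
    """Append a constant feature so the bias is learned as an extra weight."""
    return np.hstack([X, np.ones((X.shape[0], 1))])


def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Soft-margin linear SVM via Pegasos-style stochastic sub-gradient descent.

    Minimises lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w.x_i)) for y_i in {-1, +1}.
    """
    Xa = _augment(np.asarray(X, dtype=float))
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    w = np.zeros(Xa.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)              # decreasing step size
            violates = y[i] * (Xa[i] @ w) < 1  # inside the margin or on the wrong side
            w *= 1.0 - eta * lam               # step on the lam/2 * ||w||^2 (margin) term
            if violates:
                w += eta * y[i] * Xa[i]        # hinge penalty pushes the point outward
    return w


def predict(X, w):
    return np.where(_augment(np.asarray(X, dtype=float)) @ w >= 0, 1, -1)
```

Only observations inside the margin or on the wrong side of it contribute to the update, which mirrors the penalty described above for inseparable classes.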

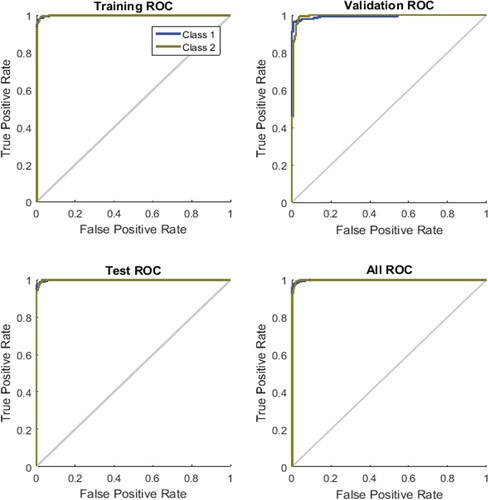

SVM algorithms use a mathematical function called a kernel, which takes data as input and transforms it into the required form. Three kernel functions commonly used in SVM are the Gaussian or Radial Basis Function (RBF), linear, and polynomial kernels. The Gaussian kernel provides the best results on all three data subsets, as shown in Table 3.

Table 3. Comparison of SVM models on proposed system based on different Kernel Functions.
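The three kernels compared in Table 3 can be written as follows. The scale parameter of the Gaussian kernel, the offset and degree of the polynomial kernel, and the function names are illustrative assumptions; they are not taken from the paper or from MATLAB's implementation.

```python
import numpy as np


def linear_kernel(X, Z):
    """K(x, z) = x . z"""
    return X @ Z.T


def polynomial_kernel(X, Z, degree=3, offset=1.0):
    """K(x, z) = (x . z + offset) ** degree"""
    return (X @ Z.T + offset) ** degree


def gaussian_kernel(X, Z, scale=1.0):
    """K(x, z) = exp(-||x - z||^2 / scale^2), the Gaussian (RBF) kernel."""
    sq_dist = (np.sum(X ** 2, axis=1)[:, None]
               + np.sum(Z ** 2, axis=1)[None, :]
               - 2.0 * X @ Z.T)
    return np.exp(-np.maximum(sq_dist, 0.0) / scale ** 2)  # clamp tiny negative round-off
```

The Gaussian kernel depends only on the distance between two samples, so nearby feature vectors receive similarities close to 1 and distant ones close to 0; the scale controls what counts as "nearby".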

We used MATLAB's fitcsvm function to train the Support Vector Machine with the following parameters:

```matlab
SVMModel = fitcsvm(train_features_svm, train_targets_svm, ...
    'KernelScale', 'auto', 'Standardize', true, 'OutlierFraction', 0.05);
```

The Standardize parameter is a flag that controls whether the predictor data are standardized. Setting 'Standardize', true centres and scales each predictor variable by the corresponding weighted column mean and standard deviation.

The OutlierFraction parameter sets the expected proportion of outliers in the training data as a numeric scalar in the interval [0,1). Setting 'OutlierFraction', 0.05 assumes that 5% of the observations are outliers.
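What 'Standardize', true does to the predictors can be sketched as a (weighted) z-score. This sketch assumes population (biased) weighted standard deviations and leaves constant predictors unscaled; the function name is hypothetical. The key design point is that the statistics are computed on the training set only and then reused for the test set.

```python
import numpy as np


def standardize(X_train, X_test, weights=None):
    """Centre and scale each predictor column by the weighted training mean and std.

    Statistics come from the training data only and are reused for the test data.
    """
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    w = np.ones(len(X_train)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    mu = w @ X_train                          # weighted column means
    sigma = np.sqrt(w @ (X_train - mu) ** 2)  # weighted (population) standard deviations
    sigma[sigma == 0] = 1.0                   # leave constant columns unscaled
    return (X_train - mu) / sigma, (X_test - mu) / sigma
```

Standardizing matters for the Gaussian kernel in particular, because features with large numeric ranges (for example, raw HOG magnitudes versus colour variances) would otherwise dominate the distance.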

The experimental results of the proposed hybrid feature extraction framework with the SVM classifier, in terms of accuracy, sensitivity, specificity, false-positive rate (FPR) and false-negative rate (FNR), are shown in Table 4. The PUCPR subset gives the highest accuracy, sensitivity and specificity, compared with the UFPR04 and UFPR05 subsets. The average accuracy of the proposed model over the complete dataset (PUCPR, UFPR04 and UFPR05) is 99.89%, an improvement in detecting the status of a parking space.

Table 4. Experimental results of Proposed Hybrid Feature Extraction framework using SVM Classifier.

For a better understanding of the experimental results, the Receiver Operating Characteristic (ROC) curves of the proposed system are plotted (see the ROC figure). The ROC curve of the classifier lies very close to the top-left corner of the graph, which means that a very high true-positive rate can be achieved at a low false-positive rate. Hence, the proposed model admits a decision threshold that yields both a high true-positive rate and a low false-positive rate. Class 1 and class 2 represent the vacant and occupied classes, respectively.
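An ROC curve is traced by sweeping the decision threshold over the classifier scores and recording (FPR, TPR) at each step; a curve that hugs the top-left corner has an area under it (AUC) close to 1. The sketch below uses hypothetical scores and labels, with 1 marking the positive class. In MATLAB, the perfcurve function computes such curves.

```python
def roc_curve(scores, labels):
    """Return ROC points (FPR, TPR) as the threshold sweeps from high to low.

    labels: 1 for the positive class, 0 for the negative class.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]  # hypothetical classifier scores
labels = [1, 1, 0, 1, 0, 0]
points = roc_curve(scores, labels)
print(round(auc(points), 4))  # 0.8889
```

A classifier that ranks every positive above every negative produces a curve through (0, 1) and an AUC of exactly 1; the closer a measured curve comes to that corner, the easier it is to pick a threshold with both a high TPR and a low FPR.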

Further, a comparison of the proposed hybrid model with existing state-of-the-art techniques in terms of accuracy is presented in Table 5. The table shows that the proposed hybrid feature extraction framework achieves an accuracy of 99.89%, higher than that of the other state-of-the-art techniques compared. It can therefore be concluded that, with pre-processing and the advanced hybrid feature extraction techniques, an optimized classification model for detecting vacant outdoor parking spaces can be designed.

Table 5. Accuracy comparison of different feature extraction techniques with proposed hybrid feature extraction model.

Table 6 compares the present work with existing parking methodologies. The results show that the hybrid feature extraction framework achieves the highest accuracy among the compared methodologies.

Table 6. Comparison of proposed with the other existing parking Methodologies.

5. Conclusion and future recommendations

An intelligent outdoor parking system improves the quality of life in urban areas and plays an important role in the development of smart cities. As the number of vehicles increases, particularly in urban areas, people find it difficult to locate a vacant parking space. Therefore, the authors have proposed an optimized parking system based on a hybrid feature extraction framework. For experimentation and validation, the publicly available and widely used “PKLot” dataset has been used. The popular SVM classifier has been applied for classification on a few thousand samples selected randomly from the dataset. The experiments have been conducted on three data subsets, namely PUCPR, UFPR04 and UFPR05, of which PUCPR is comparatively less noisy and provides the highest accuracy. Further, the experimental results of the proposed hybrid model of COLOR and TEXTURE feature extractors have been compared with existing state-of-the-art techniques. The proposed system achieves an accuracy of 99.89%, an improvement over those techniques, which makes it a promising solution to the real-life problem of finding parking spaces in smart cities. In future, the work can be extended by including night-time images of parking areas in addition to the rainy, sunny and cloudy images in the dataset, and by applying various deep learning techniques to the dataset and comparing the results in terms of accuracy and processing time.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Zoika S, Panagiotis GT, Stefanos T, et al. Causal analysis of illegal parking in urban roads: the case of Greece. Case Stud Transp Policy. 2021;9(3):1084–1096.

- Al-Kharusi H, Al-Bahadly I. Intelligent parking management system based on image processing. World J Eng Technol. 2014;2:55–67.

- Tian Q, Guo T, Qiao S. Design of intelligent parking management system based on license plate recognition. J Multimed. 2014;9(6):774–780.

- Yusnita R, Norbaya F, Basharuddin N. Intelligent parking space detection system based on image processing. Int J Innov Manag Technol. 2012;3(3):232–235.

- Faheem F, Mahmud SA, Khan GM, et al. A survey of intelligent car parking system. J Appl Res Technol. 2013;11(5):714–726.

- Rizwan M, Habib HA. Video analytics framework for automated parking. Tech J, Univ Eng Technol (UET) Taxila, Pakistan. 2015;20(4):87–94.

- Alharbi A, Ahlkias G, Yamin M, et al. Web based frame work for smart parking system. Int J Inf Technol. 2021;13:1495–1502.

- Postigo CG, Torres J, Menendez JM. Vacant parking area estimation through background subtraction and transience map analysis. IET Intell Transp Syst. 2015;9(9):835–841.

- Almeida P, Oliveira LS, Silva E, et al. Parking space detection using textural descriptors. In: Systems, man, and cybernetics (SMC), IEEE international conference, 2013. p. 3603–3608.

- Do H, Kim J, Lee K, et al. Implementation of CNN based parking slot type classification using around view image. In: Proceedings of the 2020 IEEE international conference on consumer electronics (ICCE), 2020.

- Tschentscher M, Koch C, König M, et al. Scalable real-time parking lot classification: an evaluation of image features and supervised learning algorithms. In: Proceedings of the international joint conference on neural networks, vol. 15, 2015.

- Ahrnbom M, Astrom K, Nilsson M. Fast classification of empty and occupied parking spaces using integral channel features. In: IEEE computer society conference on computer vision and pattern recognition workshops, 2016. p. 1609–1615.

- Delibaltov D, Wu W, Loce RP, et al. Parking lot occupancy determination from lamp-post camera images. In: IEEE conference on intelligent transportation systems, proceedings, ITSC, 2013. p. 2387–2392.

- Wu Q, Huang C, Wang S, et al. Robust parking space detection considering inter-space correlation. In: IEEE International conference on multimedia and expo, 2007. p. 659–662.

- Seo YW, Urmson C. Utilizing prior information to enhance self-supervised aerial image analysis for extracting parking lot structures. In: Proceedings of IEEE/RSJ international conference on intelligent robots and systems, 2009. p. 339–344.

- Lu R, Lin X, Zhu H, et al. SPARK: a new VANET-based smart parking scheme for large parking lots. In: Proceedings – IEEE INFOCOM, 2009. p. 1413–1421.

- Geng Y, Cassandras CG. A new ‘smart parking’ system based on optimal resource allocation and reservations. In: International IEEE conference on intelligent transportation systems, Washington, DC, USA, vol. 14, 2011.

- Tang C, Wei X, Zhu C, et al. Towards smart parking based on fog computing. IEEE Access. 2018;6:70172–70185.

- Badii C, Nesi P, Paoli I. Predicting available parking slots on critical and regular services by exploiting a range of open data. IEEE Access. 2018;6:44059–44071.

- Wolff J, Heuer T, Gao H, et al. Parking monitor system based on magnetic field sensors. In: IEEE intelligent transportation systems conference, Toronto, Canada, 2006. p. 1275–1279.

- Chunhe Y, Jilin L. A type of sensor to detect occupancy of vehicle berth in carpark. In: Proceedings of international conference on signal processing, vol. 3, 2004. p. 2708–2713.

- Kotb AO, Shen YC, Huang Y. Smart parking guidance, monitoring and reservations: a review. IEEE Intell Transp Syst Mag. 2017;9(2):6–16.

- Safi QGK, Luo S, Pan L, et al. SVPS: cloud-based smart vehicle parking system over ubiquitous VANETs. Comput Netw. 2018;138:18–30.

- Huang CC, Wang SJ. A hierarchical Bayesian generation framework for vacant parking space detection. IEEE Trans Circuits Syst Video Technol. 2011;20(12):1770–1785.

- Funck S, Mohler N, Oertel W. Determining car-park occupancy from single images. In: Proceedings of IEEE intelligent vehicles symposium, 2004. p. 325–328.

- Lee CH, Wen MG, Han CC, et al. An automatic monitoring approach for unsupervised parking lots in outdoors. In: Proceedings of international carnahan conference on security technology, 2005. p. 271–274.

- Horprasert T, Harwood D, Davis LS. A statistical approach for real-time robust background subtraction and shadow detection. In: IEEE ICCV, 1999.

- Schneiderman H, Kanade T. Object detection using the statistics of parts. Int J Comput Vision. 2004;56:151–177.

- Viola P, Jones M. Robust real-time face detection. Int J Comput Vision. 2004;57(2):137–154.

- Huang CC, Tai YS, Wang SJ. Vacant parking space detection based on plane-based Bayesian hierarchical framework. IEEE Trans Circuits Syst Video Technol. 2013;23(9):1598–1610.

- Manase DK, Zainuddin Z, Syarif S, et al. Car detection in roadside parking for smart parking system based on image processing. In: 2020 international seminar on intelligent technology and its applications (ISITIA), IEEE, 2020. p. 194–198.

- Caicedo F, Blazquez C, Miranda P. Prediction of parking space availability in real time. Expert Syst Appl. 2012;39(8):7281–7290.

- Saharan S, Kumar N, Bawa S. An efficient smart parking pricing system for smart city environment: a machine-learning based approach. Future Gener Comput Syst. 2020;106(1):622–640.

- Jermsurawong J, Ahsan U, Haidar A, et al. One-day long statistical analysis of parking demand by using single-camera vacancy detection. J Transp Syst Eng Inf Technol. 2014;14(2):33–44.

- Kaur S, Bansal RK, Mittal M, et al. Mixed pixel decomposition based on extended fuzzy clustering for single spectral value remote sensing images. J Indian Soc Remote Sens. 2019;47(3):427–437. doi:10.1016/j.compeleceng.2018.08.018.

- Mittal M, Kumar M, Verma A, et al. FEMT: a computational approach for fog elimination using multiple thresholds. Multimed Tools Appl. 2021;80(1):227–241.

- Singh R, Gahlot A, Mittal M. Iot based intelligent robot for various disasters monitoring and prevention with visual data manipulating. Int J Tomogr Simul. 2019;32(1):90–99.

- Sanjeewa EDG, Herath KKL, Madhusanka BGDA, et al. Visual attention model for mobile robot navigation in domestic environment. GSJ. 2020;8(7):1960–1965.

- Ghansiyal A, Mittal M, Kar AK. Information management challenges in autonomous vehicles: a systematic literature review. J Cases Inf Technol. 2021;23(3):58–77.

- Kumar I, Manuja P, Soni Y, et al. An integrated approach toward smart parking implementation for smart cities in India. In: Kolhe M, Tiwari S, Trivedi M, et al., editors. Advances in data and information sciences. Vol. 94. Singapore: Springer; 2020. p. 343–349. (Lecture notes in networks and systems).

- Nath A, Konwar HN, Kumar K, et al. Technology enabled smart efficiency parking system (TESEPS). In: Sarma H, Bhuyan B, Borah S, et al., editors. Trends in communication, cloud, and big data. Vol. 99. Singapore: Springer; 2020. p. 141–150. (Lecture notes in networks and systems).

- da Cruz MAA, Rodrigues JJ, Gomes GF, et al. An IoT-based solution for smart parking. In: Singh P, Pawłowski W, Tanwar S, et al., editors. Proceedings of first international conference on computing, communications, and cyber-security (IC4S 2019). Vol. 121. Singapore: Springer; 2020. p. 213–224. (Lecture notes in networks and systems).

- Sarangi M, Mohapatra S, Tirunagiri SV, et al. IoT aware automatic smart parking system for smart city. In: Mallick P, Balas V, Bhoi A, et al., editors. Cognitive informatics and soft computing. Vol. 1040. Singapore: Springer; 2020. p. 469–481. (Advances in intelligent systems and computing).

- Irmak E, Ertas AH. A review of robust image enhancement algorithms and their applications. In: IEEE International conference on smart energy grid engineering, 2016. p. 371–375.

- Jaya VL, Gopikakumari R. IEM: a new image enhancement metric for contrast and sharpness measurements. Int J Comput Appl. 2013;79(9):1–9.

- Saenpaen J, Arwatchananukul S, Aunsri N. A comparison of image enhancement methods for lumbar spine X-ray image. In: International conference on electrical engineering/electronics, computer, telecommunications and information technology (ECTI-CON), 2018. p. 798–801.

- Jintasuttisak T, Intajag S. Color retinal image enhancement by Rayleigh contrast-limited adaptive histogram equalization. Int Conf Control Autom Syst. 2014;10:692–697.

- Yadav G, Maheshwari S, Agarwal A. Multi-domain image enhancement of foggy images using contrast limited adaptive histogram equalization method. In: Proceedings of international conference on recent cognizance in wireless communication & image processing, Springer, 2014. p. 31–38.

- Elaziz MA, Bhattacharyya S, Lu S. Swarm selection method for multilevel thresholding image segmentation. Expert Syst Appl. 2019;138:1–24.

- Yu C-y, Zhang W-s, Wang C-l. A saliency detection method based on global contrast. Int J Signal Process Image Process Pattern Recognit. 2015;8(7):111–122.

- Bali A, Singh SN. A review on the strategies and techniques of image segmentation. In: IEEE international conference on advanced computing & communication technologies, 2015. p. 113–120.

- Kang WX, Yang QQ, Liang RR. The comparative research on image segmentation algorithms. In: IEEE conference on education technology and computer science, 2009. p. 703–707.

- Yogamangalam R, Karthikeyan B. Segmentation techniques comparison in image processing. Int J Eng Technol. 2013;5:307–313.

- Min H, Jia W, Wang XF, et al. An intensity-texture model based level set method for image segmentation. Pattern Recognit. 2015;48:1547–1562.

- Zhang J, Sclaroff S. Saliency detection: a Boolean map approach. In: Proceedings of IEEE international conference on computer vision, 2013. p. 153–160.

- Karch P, Zolotova I. An experimental comparison of modern methods of segmentation. In: IEEE international symposium on applied machine intelligence and informatics (SAMI), 2010. p. 247–252.

- Qi W, Han J, Zhang Y, et al. Graph-Boolean map for salient object detection. Signal Process Image Commun. 2016;49:9–16.

- Cong R, Lei J, Fu H, et al. Review of visual saliency detection with comprehensive information. IEEE Trans Circuits Syst Video Technol. 2018;29(10):2941–2959.

- Zhang L, Chen J, Qiu B. Region of interest extraction in remote sensing images by saliency analysis with the normal directional lifting wavelet transform. Neurocomputing. 2016;179:186–201.

- Zhang F, Wu T, Zheng G. Video salient region detection model based on wavelet transform and feature comparison. EURASIP J Image Video Process. 2019;58.

- Zhang J, Sclaroff S. Saliency detection: a Boolean map approach. In: Proceedings of IEEE international conference on computer vision, 2013. p. 153–160.

- Koffka K. Principles of Gestalt psychology. London: Lund Humphries; 1935.

- Kootstra G, Kragic D. Fast and bottom-up object detection, segmentation, and evaluation using Gestalt principles. In: Proceedings of IEEE international conference on robotics and automation, 2011. p. 3423–3428.

- Lin CH, Chen RT, Chan YK. A smart content-based image retrieval system based on color and texture feature. Image Vis Comput. 2009;27(6):658–665.

- Chen WT, Liu WC, Chen MS. Adaptive color feature extraction based on image color distributions. IEEE Trans Image Process. 2010;19(8):2005–2016.

- Aptoula E, Lefevre S. Morphological description of color images for content-based image retrieval. IEEE Trans Image Process. 2009;18(11):2505–2517.

- Alqaisi A, Altarawneh M, Alqadi ZA, et al. Analysis of color image features extraction using texture methods. Telecommun Comput Electron Control. 2019;17(3):1220–1225.

- Humeau-Heurtier A. Texture feature extraction methods: a survey. IEEE Access. 2019;7:8975–9000.

- Navarro CF, Perez CA. Color–texture pattern classification using global–local feature extraction, an SVM classifier, with bagging ensemble post-processing. Appl Sci. 2019;9(15):3130.

- Singha M, Hemachandran K. Content based image retrieval using color and texture. Signal Image Process: An Int J. 2012;3(1):39–57.

- Wang X, Bai X, Liu W, et al. Feature context for image classification and object detection. In: Proceedings of IEEE international conference of computer vision and pattern recognition, 2011. p. 961–968.

- Wu CM, Chen YC. Statistical feature matrix for texture analysis. CVGIP, Graph Models Image Process. 1992;54(5):407–419.

- Haralick RM. Statistical and structural approaches to texture. Proc IEEE. 1979;67(5):786–804.

- Ahmad WSHMW, Fauzi MFA. Comparison of different feature extraction techniques in content-based image retrieval for CT brain images. IEEE, 2008.

- Huang S, Cai N, Pacheco PP, et al. Applications of support vector machine (SVM) learning in cancer genomics. Cancer Genomics Proteom. 2018;15:41–51.

- Yu W, Liu T, Valdez R, et al. Application of support vector machine modeling for prediction of common diseases: the case of diabetes and pre-diabetes. BMC Med Inform Decis Mak. 2010;10(16).

- Zhu W, Zeng N, Wang N. Sensitivity, specificity, accuracy, associated confidence interval and ROC analysis with practical SAS® implementations. Health Care and Life Sciences, NESUG 2010.

- MATLAB R2018a on Windows 7 Professional, 64-bit Operating System, Processor Intel (R) Core (TM) i3-5005U CPU @ 2.00GHz, RAM 12.0 GB.