Abstract

This paper studies an inverse problem of recovering an unknown diffusion coefficient in a parabolic equation. We adopt a total variation regularization method to deal with the ill-posedness. This method is well suited to problems whose solution is non-smooth or discontinuous. By transforming the problem into an optimal control problem, we derive a necessary condition for the minimizer of the control functional. Using a priori estimates for the direct problem, we obtain the uniqueness and stability of the minimizer. In the numerical part, a Gauss–Jacobi iteration scheme is used to deal with the non-linear term. Some numerical examples are presented to illustrate the performance of the proposed algorithm.

1. Introduction

Mathematical models describing diffusion processes are of great importance in many scientific fields, such as heat conduction, groundwater flow and oil reservoir simulation. The aim of the direct problem is to determine the diffusion field from the system parameters. However, in many practical situations, the diffusion parameters may not be known. The purpose of the inverse problem is to reconstruct these parameters from observation data of the diffusion field. In this paper, we consider a simple model based on the following parabolic equation

(1)

where

,

and

are two given functions,

is an unknown diffusion coefficient. Assume that the final observation data, i.e.

(2)

is given. The goal of the inverse problem is to reconstruct

in Equation (1). The mathematical model (1) arises in many engineering and physical settings, for example, heat conduction in a rod. Unlike the corresponding direct problem, the inverse problem is ill-posed [Citation1]. In many practical applications, the observation data

in (2) is obtained by measurements. A small amount of noise in

may cause a large change in the reconstruction of

, which may lead to a meaningless result [Citation2,Citation3].
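As a concrete illustration of the direct problem, the following minimal sketch computes final-time data from a given coefficient. It assumes the divergence form u_t = (k(x) u_x)_x + f(x, t) on the unit interval with zero-flux boundary conditions; the boundary conditions, the grid, the implicit Euler time stepping and all function names are choices made for this illustration only.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def forward_solve(k, phi, f, h, tau, steps):
    """Implicit Euler with cell-centred finite volumes and zero-flux ends (assumed)."""
    n = len(phi)
    r = tau / h ** 2
    lower, diag, upper = [0.0] * n, [1.0] * n, [0.0] * n
    for i in range(n - 1):
        k_face = 0.5 * (k[i] + k[i + 1])  # coefficient on the face between cells i and i+1
        upper[i] = -r * k_face
        lower[i + 1] = -r * k_face
        diag[i] += r * k_face
        diag[i + 1] += r * k_face
    u = list(phi)
    for m in range(1, steps + 1):
        t = m * tau
        rhs = [u[i] + tau * f((i + 0.5) * h, t) for i in range(n)]
        u = solve_tridiagonal(lower, diag, upper, rhs)
    return u


# Hypothetical test data: a discontinuous coefficient and a linear initial state.
n = 50
h = 1.0 / n
x = [(i + 0.5) * h for i in range(n)]
k_true = [1.0 if xi < 0.5 else 2.0 for xi in x]
phi = list(x)
u_final = forward_solve(k_true, phi, lambda xi, t: 0.0, h, tau=0.01, steps=20)
```

With a zero source term and zero-flux ends, this scheme conserves the discrete total of u, which is a convenient sanity check on the solver before it is used to generate observation data.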

There have been many works related to this inverse problem. In [Citation4], a semigroup method is used to analyse the existence of the solution of the inverse problem, and a local representation of the unknown diffusion coefficient is obtained at the end points. In [Citation5] the uniqueness and conditional stability of the inverse problem are studied by transforming the problem into a non-linear operator equation. The numerical solution is obtained by an iteratively regularized Gauss–Newton method. In [Citation6] an optimization scheme with both $H^1$-regularization and BV-regularization is discussed. The existence and convergence of the approximate solution to the problem are obtained in the framework of finite element analysis, and the Armijo algorithm is applied for the numerical solution. In [Citation7] the problem is transformed into an optimal control problem, and the uniqueness and stability of the minimizer of the control functional are proved. A stable numerical solution is obtained by the gradient iteration method. For other related works, see [Citation8–16] and the references therein.

The optimization method is a powerful tool for dealing with the ill-posed problems [Citation17–20]. The basic idea is to restrict the problem to some compact set, and then get the solution by minimizing the following functional [Citation21]

where

is a forward map,

is a regularization parameter and

is a penalty term. According to the prior knowledge of the inversion solution,

can be chosen differently. The well-known Tikhonov regularization method [Citation22] is to use the

norm as a penalty term, and this method usually works well for continuous models. However, in many practical applications, the solution of the problem may not be continuous. For example, in image processing, a common problem of Tikhonov regularization is that it smooths out the sharp edges of an image and cannot preserve detailed information well [Citation23]. In [Citation24] a total variation regularization method was proposed by Rudin et al., and good results were achieved in the field of image processing. The definition of total variation [Citation25,Citation26] can be expressed as

The total variation of k is also known as the bounded variation of k. The space of all functions in

with bounded variation is denoted by

and the corresponding BV-norm is given by

It can be proved that

is complete under the BV-norm and thus a Banach space. In addition, if Ω is a bounded open set with Lipschitz boundary in

, then

(see [Citation27] for more details). Total variation regularization is one of the most effective tools to solve problems when the solution is non-smooth or discontinuous.
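The difference between a total variation penalty and a smoothness-enforcing penalty can be seen on a grid. The sketch below is only an illustration: it uses the discrete total variation (sum of absolute jumps) and, as a stand-in for a Tikhonov-type term, the squared L2 norm of the difference quotient. The grid, the two test profiles and the function names are assumptions made here.

```python
def total_variation(k):
    """Discrete total variation: sum of absolute jumps between neighbours."""
    return sum(abs(b - a) for a, b in zip(k, k[1:]))


def quadratic_penalty(k, h):
    """Squared L2 norm of the difference quotient (Tikhonov-type stand-in)."""
    return sum(h * ((b - a) / h) ** 2 for a, b in zip(k, k[1:]))


n = 100
h = 1.0 / n
step = [1.0 if i * h < 0.5 else 2.0 for i in range(n + 1)]  # discontinuous profile
ramp = [1.0 + i * h for i in range(n + 1)]                   # smooth profile, same rise

tv_step, tv_ramp = total_variation(step), total_variation(ramp)
q_step, q_ramp = quadratic_penalty(step, h), quadratic_penalty(ramp, h)
```

Both profiles rise from 1 to 2, so their total variation is the same (1 up to rounding), while the quadratic penalty of the step is larger than that of the ramp by a factor of about 1/h and grows without bound as the grid is refined. This is why a quadratic penalty discourages jumps and the total variation does not.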

Compared with the intensive study of the total variation regularization method in image processing, its applications to reconstructing an unknown coefficient of a partial differential equation are far fewer, see [Citation28–34]. The total variation regularization method also poses difficulties both theoretically and numerically [Citation35,Citation36]. For example, the non-differentiability of the TV-term complicates the theoretical analysis, and in numerical calculation its nonlinearity and degeneracy make the algorithm more complex. In this paper, from the point of view of theoretical analysis, we adopt an optimal control framework to discuss this problem. By introducing a smoothed TV-term, we derive a necessary condition for the minimizer of the control functional. The necessary condition is a variational inequality coupled with a system of parabolic equations. Unlike the previous work in [Citation7], the variational inequality in this paper includes a non-linear term. In order to get the uniqueness and stability results, we also need an additional condition

(3)

where κ is a positive constant. In the numerical part, we modify this necessary condition into an equality form and adopt a Gauss–Jacobi iteration scheme to deal with the nonlinear term. The numerical results show that this modification is effective and can reconstruct non-smooth solutions very well.

The organization of this paper is as follows: Section 2 transforms the problem into an optimal control problem and derives a necessary condition for the control functional. Section 3 studies the well-posedness of the optimal control problem based on the necessary condition. Section 4 discretizes the system and gives a numerical algorithm. Section 5 presents some numerical examples to illustrate the effectiveness of the algorithm.

2. Optimal control problem

It is well known that the original problem is ill-posed and cannot be solved directly. The most widely used method for solving this problem is Tikhonov regularization. However, Tikhonov regularization often requires some smoothness of the solution. In many practical applications, the solution of the problem may not be continuous. To overcome this difficulty, we consider the following optimal control problem based on total variation regularization.

Problem P. Define an admissible domain

(4)

where

are two given positive constants. Assume that the observation data

in (2) satisfies the following condition

(5)

The optimal control problem in this study is to find

such that

(6)

(7)

where

(to avoid confusion with the divergence notation, we use the notation

instead of

in the TV-penalty term, because our problem is one-dimensional),

is the solution of Equation (1) corresponding to

and

is a regularization parameter.

Following [Citation36], we modify the penalty term as

(8)

where β is a small positive parameter, which in this paper is taken as

. This modification provides certain computational advantages, such as differentiability of

when

. According to (8), the optimal control problem (6) and (7) can be approximated by

(9)

(10)

Definition 2.1

cf.[Citation37]

A function , with

is called a weak solution of Equation (1), if

(11)

for any test function

and a.e. time

and

Lemma 2.2

cf.[Citation38,Citation39]

For Equation (1) we have the following estimate

(12)

where C is independent of ϕ and f.

Now, we are going to prove the existence of minimizers of problem P. To this end, we first establish a continuity property in the following lemma.

Lemma 2.3

Assume that

and

as

then we have

(13)

Proof.

Let and choosing the test function φ in (11) as

(for abbreviation, we denote it by

), which depends on x, we obtain

According to Gronwall's inequality and Cauchy's inequality, we have

(14)

From (14), we know that

is uniformly bounded for all

. Therefore, we can extract a subsequence of

, still denoted by

, such that

(15)

Now we will show that

. Multiplying both sides of (11) by a function

with

and taking

, then we have

Integrating by parts, we obtain

(16)

From (15) and taking

in (16), we deduce

(17)

Notice that (17) is valid for any

with

, so we have

and

. Hence,

by the definition of

.

Through the weak convergence of , next we will show the convergence of

in the sense of

topology. By taking

and

, then from (11) we have

(18)

By a similar argument for

, we have

(19)

Combining (18) and (19), we obtain

(20)

Furthermore, by using equation (11) for

and

, we deduce

(21)

Substituting (21) into (20) yields

(22)

Rewriting the second term on the left-hand side of (22), after a simple calculation we have

(23)

Integrating (23) with respect to t, we obtain

(24)

By the weak convergence of

and the convergence of

, it is easy to see that

Hence

(25)

In addition, according to Hölder's inequality, we obtain

(26)

Then from (5), (14), (25) and (26), we derive the final result

(27)

This completes the proof.

From Lemma 2.3, we can easily get the following theorem.

Theorem 2.4

There exists a minimizer of

i.e.

Proof.

It is easy to see that J is non-negative, and thus

has the greatest lower bound

. Let

be a minimizing sequence, namely

Since

and thanks to the particular structure of J, we have

By noticing the boundedness of

, we also have

Therefore, we can extract a subsequence, which is still denoted by

, such that

By the Sobolev embedding theorem, we obtain

Then, from the Lebesgue dominated convergence theorem and Lemma 2.3, we deduce

Hence,

. This completes the proof.

Theorem 2.4 shows the existence of the minimizer of the optimal control problem. Next, we will establish a necessary condition for the control functional. On the basis of the necessary condition, we will prove the uniqueness and stability of the optimal control problem in the next section.

Theorem 2.5

Let k be the solution of problem P. Then for any by Equation (1) we have the following variational inequality

(28)

where

and w satisfies the following equation

(29)

Proof.

For any ,

, let

then from (10) we have

(30)

Differentiating both sides of (30) with respect to η, we have

(31)

where

is the solution of Equation (1) corresponding to

. Let

; then a direct calculation from (1) gives

(32)

Let

and suppose w satisfies the following equation

(33)

where

is the adjoint operator of

. Then from (33) we obtain

(34)

From (32) we have

(35)

Furthermore, by using Green's formula we have

(36)

Substituting (34) and (35) into (36) gives

(37)

Let

then combining (31) and (37), we have the final result

(38)

This completes the proof.

3. Local uniqueness and stability

This section studies the uniqueness and stability of the optimal control problem P. Since the optimal control problem P is non-convex, one may not expect to get the global uniqueness. However, under the assumption that T is relatively small, we can prove that the minimizer of problem P is locally unique. This is also a main contribution of this study.

In the following lemmas and theorem, we use the symbol C (independent of T) to denote different constants unless stated otherwise.

Lemma 3.1

cf.[Citation38,Citation39]

For Equation (29) the following estimate holds

(39)

Lemma 3.2

For any bounded continuous function we have

(40)

where

is a fixed point.

Proof.

For , we have

This completes the proof.

Suppose are two sets of observation data that satisfy (2) and

(41)

where

is an error bound. Let

be two minimizers of the optimal control problem and

(42)

where κ is a positive constant.

and

are solutions of Equations (1) and (29) corresponding to

, respectively.

Taking , then from (1) and (29) we can obtain

(43)

and

(44)

Lemma 3.3

For Equation (43), we have the following estimate

(45)

Proof.

From (43) we obtain

Integrating by parts, we have

According to Young's inequality and noticing (4), we have

thus

This completes the proof.

Lemma 3.4

For Equation (44), we have the following estimate

(46)

Proof.

From (44) we have

This yields

From (45) and using Young's inequality, we obtain

thus

This completes the proof.

Theorem 3.5

Suppose are two observation data, and

are the corresponding minimizers of problem P. If there exists a point

such that

(47)

then for

, we have the following estimate

where C is independent of λ and T.

Proof.

Let when

and let

when

, from (28) we have

(48)

(49)

Combining (48) and (49), we get

(50)

According to the mean value theorem, we have

(51)

By noticing (42), we obtain

(52)

From (50)–(52) and using Cauchy's inequality, we have

(53)

where we have used the estimates in Lemma 3.3 and Lemma 3.4. From (12), (39) and noticing (5) we have

(54)

Combining (53) and (54), we have

(55)

According to Lemma 3.2 and assumption (47), we derive

(56)

Choose T such that

(57)

then combining (56) and (57), we can easily obtain

This completes the proof.

Remark 3.6

It should be pointed out that the uniqueness and stability of the optimal solution are established on the basis of condition (42). Theoretically speaking, we cannot take

too large when we need to solve problem (9) by numerical methods. However, we do not use this condition in the numerical simulation. In our numerical experiments, it seems that the condition

can be automatically satisfied when we discretize the system by the central difference scheme, as can be seen in the numerical simulations.

4. Discretization and numerical algorithm

We have already proved the well-posedness of the optimization problem (9). In this section, we adopt a Jacobi iterative algorithm to minimize the cost functional. The algorithm is based on the following theorem.

Theorem 4.1

Let k be the solution of the optimal control problem P. Then from Equations (1) and (29), we have

(58)

Remark 4.2

The proof of this theorem is similar to that of Theorem 2.5, so we omit it here.

Next, we adopt a Jacobi iteration scheme to approximate the solution of (58). The advantage of this algorithm is that it looks for the steady solution directly and is easy to implement. Now, let us discretize the term

. As shown in Figure , at a given point o, let E and W denote the two adjacent points, and let e and w be the corresponding two midway points.

Define , then

can be written as

. By applying the central difference scheme to Z, we have

(59)

where h is the spatial step size. Next, we generate further approximations for Z at the two midway points

here

and

are approximated by

Then, at a target point o, can be discretized by

(60)

Furthermore, by substituting (60) into (58), we obtain

(61)

Let

then (61) is equivalent to

(62)

Let

and substituting it into (62), we have

(63)

Then the Gauss–Jacobi iteration scheme [Citation23] can be applied to (63). At each step n, we update

to

by

(64)

where

. The integral term on the right-hand side

can be computed by Simpson's rule or trapezoidal rule.
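For reference, both quadrature rules can be written in a few lines for samples on a uniform grid. The sketch below is generic: the sampled integrand (here t squared on [0, 1]) is a hypothetical stand-in, not the integrand of the iteration scheme, and the function names are chosen for this illustration.

```python
def trapezoid(values, dt):
    """Composite trapezoidal rule for samples on a uniform grid."""
    return dt * (0.5 * values[0] + sum(values[1:-1]) + 0.5 * values[-1])


def simpson(values, dt):
    """Composite Simpson rule; needs an even number of subintervals."""
    if len(values) < 3 or len(values) % 2 == 0:
        raise ValueError("Simpson's rule needs an even number of subintervals")
    odd = sum(values[1:-1:2])    # interior points with odd index
    even = sum(values[2:-1:2])   # interior points with even index
    return dt / 3.0 * (values[0] + 4.0 * odd + 2.0 * even + values[-1])


# Hypothetical integrand sampled at 11 points (10 subintervals) on [0, 1].
m = 10
dt = 1.0 / m
samples = [(j * dt) ** 2 for j in range(m + 1)]
approx_trap = trapezoid(samples, dt)
approx_simp = simpson(samples, dt)
```

Simpson's rule is exact for this quadratic integrand, while the trapezoidal rule carries an error of order dt squared. Simpson's rule is therefore the more accurate choice when the number of time steps is even.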

From the analysis above, we propose the following algorithm:

Step 1. Choose a function

as an initial value of iteration.

Step 2. Solve Equation (1) to obtain

, where

.

Step 3. Solve Equation (29) to obtain

, where

and compute

.

Step 4. Compute

to obtain the next value of iteration, where the boundary points of k are handled by the Neumann boundary condition.

Step 5. Select an arbitrarily small positive constant α, compute

and compare it with α. If

stop and take

. Otherwise, let n = n + 1, go to Step 2 and continue the iteration.
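The structure of such a Jacobi-type update can be illustrated on a simpler model problem. The sketch below is not the scheme of this paper: it replaces the coupled parabolic system by a one-dimensional denoising problem with a smoothed TV penalty, so that the data term is just k minus g. What it shares with the algorithm above is the use of weights at the two midway points frozen at the old iterate, the Neumann treatment of the end points, and the stopping test on the change between iterates. All names and parameter values are assumptions.

```python
import math
import random


def total_variation(k):
    return sum(abs(b - a) for a, b in zip(k, k[1:]))


def tv_jacobi_denoise(g, h, lam, beta, tol=1e-6, max_iter=500):
    """Jacobi-type iteration for the stationarity condition of
    sum_i h*(k_i - g_i)^2/2 + lam * sum_i h*sqrt(((k_{i+1} - k_i)/h)^2 + beta)."""
    n = len(g)
    c = lam / h ** 2
    k = list(g)
    for _ in range(max_iter):
        # weights 1/sqrt(k_x^2 + beta) at the midway points, from the old iterate
        w = [1.0 / math.sqrt(((k[i + 1] - k[i]) / h) ** 2 + beta)
             for i in range(n - 1)]
        new = []
        for i in range(n):
            # Neumann ends: a missing neighbour contributes no flux
            w_w, k_w = (w[i - 1], k[i - 1]) if i > 0 else (0.0, 0.0)
            w_e, k_e = (w[i], k[i + 1]) if i < n - 1 else (0.0, 0.0)
            new.append((g[i] + c * (w_w * k_w + w_e * k_e))
                       / (1.0 + c * (w_w + w_e)))
        change = max(abs(a - b) for a, b in zip(new, k))
        k = new
        if change < tol:  # stopping test on the change between iterates
            break
    return k


rng = random.Random(0)
n = 50
h = 1.0 / n
exact = [1.0 if (i + 0.5) * h < 0.5 else 2.0 for i in range(n)]
g = [e + 0.1 * rng.uniform(-1.0, 1.0) for e in exact]
k_rec = tv_jacobi_denoise(g, h, lam=0.01, beta=1e-4)
```

Each update is a convex combination of the data value and the two neighbouring values, so the iterates stay within the range of the data. Because only old values enter the right-hand side, the sweep is a Jacobi sweep rather than a Gauss–Seidel one.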

5. Numerical experiments

In this section, we carry out some numerical experiments to test the theory and algorithm presented in the previous sections.

The problems (1) and (29) are solved by the finite volume method. In all the numerical experiments, we take

where τ is the time step size, h is the spatial step size and δ is the noise level. The regularization parameters used in this paper are chosen by the Morozov discrepancy principle (see, e.g. [Citation1,Citation40]). The synthetic data is generated in the following form

where random

denotes the uniform distribution in

. The source term

and the initial term

in Equation (1) are taken as

and

respectively.
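A minimal sketch of how noisy synthetic data of this kind might be generated is given below. It assumes a multiplicative perturbation of relative size δ with noise drawn uniformly from [-1, 1]; the form of the perturbation, the interval, the sample profile and the function name are all assumptions made for this illustration.

```python
import random


def add_relative_noise(g, delta, seed=0):
    """Perturb each sample by a relative error of at most delta (assumed form)."""
    rng = random.Random(seed)  # fixed seed so that the experiment is reproducible
    return [gi * (1.0 + delta * rng.uniform(-1.0, 1.0)) for gi in g]


# Hypothetical exact final-time data on 21 grid points.
g_exact = [1.0 + 0.5 * i / 20 for i in range(21)]
g_noisy = add_relative_noise(g_exact, delta=0.01)
```

With this form the pointwise relative error never exceeds δ, which gives a known noise bound for use in a discrepancy-type parameter choice.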

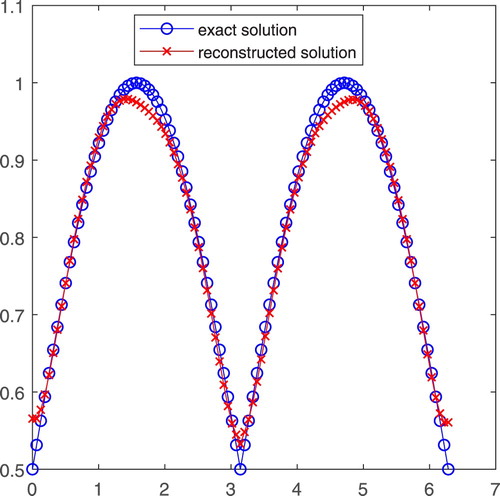

Example 5.1

In the first example, we take

The initial value of iteration

and the regularization parameter

.

Figure shows the reconstructions of , where the '–o–' line is the exact coefficient and the '–x–' line is the inversion coefficient. It can be seen that the diffusion coefficient

is non-smooth at

, and the inversion algorithm can give a satisfactory result.

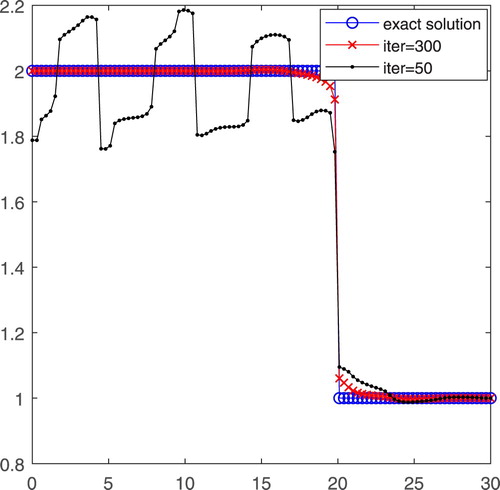

Example 5.2

In the second example, we take

The initial value of iteration

and the regularization parameter

.

In this case, the change of is mainly reflected at the discontinuous point. To show the inversion process of the total variation algorithm, we compare the numerical results for different numbers of iterations. As shown in Figure , the '–o–' line is the exact coefficient, and the '–•–' line and the '–x–' line are the inversion coefficients after 50 iterations and 300 iterations, respectively. From the numerical results, we can see that our algorithm works well for the discontinuous case.

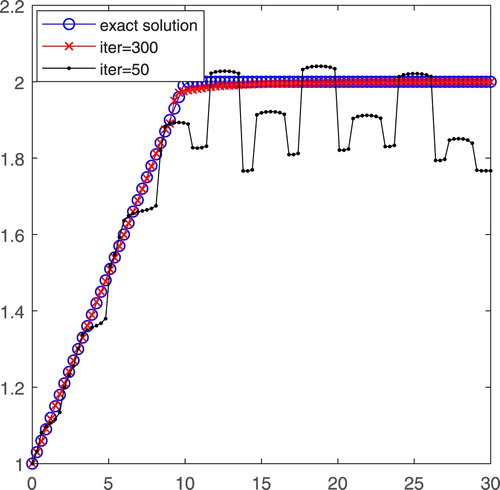

Example 5.3

In the third example, we take

The initial value of iteration

and the regularization parameter

.

Figure shows the numerical results for different numbers of iterations. The '–o–' line is the exact coefficient . The '–•–' line is the inversion coefficient

after 50 iterations. The '–x–' line is the inversion coefficient

after 300 iterations. It can be seen that after 300 iterations the coefficient

is recovered very well.

6. Conclusions

In this paper, we investigated an inverse problem of identifying an unknown coefficient of a parabolic equation. A total variation regularization method is proposed to deal with the ill-posedness. By transforming the problem into an optimal control problem, we established the existence of a minimizer of the control functional, a necessary condition for it, and its local uniqueness and stability. By modifying the necessary condition into an equality form, we designed a Jacobi iteration scheme to obtain the numerical solution. The numerical experiments show that this algorithm is effective and stable for reconstructing non-smooth solutions.

Disclosure statement

No potential conflict of interest was reported by the author(s).


References

- Kirsch A. An introduction to the mathematical theory of inverse problems. New York: Springer; 2011.

- Deng Z, Yang L, Yu J, et al. An inverse problem of identifying the coefficient in a nonlinear parabolic equation. Nonlinear Anal Theory Methods Appl. 2009;71(12):6212–6221. doi: 10.1016/j.na.2009.06.014

- Sun J. An eigenvalue method using multiple frequency data for inverse scattering problems. Inverse Problems. 2012;28(2):025012. doi: 10.1088/0266-5611/28/2/025012

- Demir A, Hasanov A. Identification of the unknown diffusion coefficient in a linear parabolic equation by the semigroup approach. J Math Anal Appl. 2008;340(1):5–15. doi: 10.1016/j.jmaa.2007.08.004

- Isakov V, Kindermann S. Identification of the diffusion coefficient in a one-dimensional parabolic equation. Inverse Problems. 2000;16(3):665–680. doi: 10.1088/0266-5611/16/3/309

- Keung YL, Zou J. Numerical identifications of parameters in parabolic systems. Inverse Problems. 1998;14(1):83–100. doi: 10.1088/0266-5611/14/1/009

- Deng Z, Yang L, Yu J, et al. Identifying the diffusion coefficient by optimization from the final observation. Appl Math Comput. 2013;219(9):4410–4422.

- Chen Q, Liu JJ. Solving an inverse parabolic problem by optimization from final measurement data. J Comput Appl Math. 2006;193(1):183–203. doi: 10.1016/j.cam.2005.06.003

- Cheng J, Liu JJ. A quasi Tikhonov regularization for a two-dimensional backward heat problem by a fundamental solution. Inverse Problems. 2008;24(6):065012. doi: 10.1088/0266-5611/24/6/065012

- Deng Z, Yang L. An inverse problem of identifying the radiative coefficient in a degenerate parabolic equation. Chin Ann Math Ser B. 2014;35(1):355–382. doi: 10.1007/s11401-014-0836-x

- Egger H, Engl HW. Tikhonov regularization applied to the inverse problem of option pricing: convergence analysis and rates. Inverse Problems. 2005;21(3):1027–1045. doi: 10.1088/0266-5611/21/3/014

- Yang L, Dehghan M, Yu J, et al. Inverse problem of time-dependent heat sources numerical reconstruction. Math Comput Simul. 2011;81(8):1656–1672. doi: 10.1016/j.matcom.2011.01.001

- Yang L, Yu JN, Deng Z. An inverse problem of identifying the coefficient of parabolic equation. Appl Math Model. 2008;32(10):1984–1995. doi: 10.1016/j.apm.2007.06.025

- Deng Z, Yang L, Yao B, et al. An inverse problem of determining the shape of rotating body by temperature measurements. Appl Math Model. 2018;59:464–482. doi: 10.1016/j.apm.2018.02.002

- Yang L, Deng Z, Hon Y. Simultaneous identification of unknown initial temperature and heat source. Dyn Syst Appl. 2016;25(1–4):583–602.

- Yamamoto M, Zou J. Simultaneous reconstruction of the initial temperature and heat radiative coefficient. Inverse Problems. 2001;17(4):1181–1202. doi: 10.1088/0266-5611/17/4/340

- Isakov V. Inverse parabolic problems with the final overdetermination. Commun Pure Appl Math. 1991;44(2):185–209. doi: 10.1002/cpa.3160440203

- Jiang D, Feng H, Zou J. Convergence rates of Tikhonov regularizations for parameter identification in a parabolic-elliptic system. Inverse Problems. 2012;28(10):104002. doi: 10.1088/0266-5611/28/10/104002

- Deng Z, Hon Y, Yang L. An optimal control method for nonlinear inverse diffusion coefficient problem. J Optim Theory Appl. 2014;160(3):890–910. doi: 10.1007/s10957-013-0302-z

- Deng Z, Yu J, Yang L. Identifying the coefficient of first-order in parabolic equation from final measurement data. Math Comput Simul. 2008;77(4):421–435. doi: 10.1016/j.matcom.2008.01.002

- Isakov V. Inverse problems for partial differential equations. New York: Springer; 2006.

- Tikhonov A, Goncharsky AV, Stepanov VV, et al. Numerical methods for the solution of ill-posed problems. Dordrecht: Springer; 1995.

- Shen J, Chan T. Mathematical models for local nontexture inpaintings. SIAM J Appl Math. 2002;62(3):1019–1043. doi: 10.1137/S0036139900368844

- Rudin LI, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Phys D. 1992;60(1):259–268. doi: 10.1016/0167-2789(92)90242-F

- Hào DN, Quyen TN. Convergence rates for total variation regularization of coefficient identification problems in elliptic equations I. Inverse Problems. 2011;27(7):075008. doi: 10.1088/0266-5611/27/7/075008

- Wunderli T. A partial regularity result for an anisotropic smoothing functional for image restoration in BV-space. J Math Anal Appl. 2008;339(2):1169–1178. doi: 10.1016/j.jmaa.2007.07.013

- Giusti E. Minimal surfaces and functions of bounded variation. Boston: Birkhäuser; 1984.

- Bachmayr M, Burger M. Iterative total variation schemes for nonlinear inverse problems. Inverse Problems. 2009;25(10):105004. doi: 10.1088/0266-5611/25/10/105004

- Chan TF, Tai X. Identification of discontinuous coefficients in elliptic problems using total variation regularization. SIAM J Sci Comput. 2003;25(3):881–904. doi: 10.1137/S1064827599326020

- Dou Y, Han B. Total variation regularization for the reconstruction of a mountain topography. Appl Numer Math. 2012;62(1):1–20. doi: 10.1016/j.apnum.2011.09.004

- Hào DN, Quyen TN. Convergence rates for total variation regularization of coefficient identification problems in elliptic equations II. J Math Anal Appl. 2012;388(1):593–616. doi: 10.1016/j.jmaa.2011.11.008

- Li L, Han B. A new iteratively total variational regularization for nonlinear inverse problems. J Comput Appl Math. 2016;298:40–52. doi: 10.1016/j.cam.2015.11.033

- Pan B, Xu D, Xu Y, et al. TV-like regularization for backward parabolic problems. Math Methods Appl Sci. 2017;40(4):957–969. doi: 10.1002/mma.4027

- Wang L, Liu JJ. Total variation regularization for a backward time-fractional diffusion problem. Inverse Problems. 2013;29(11):115013. doi: 10.1088/0266-5611/29/11/115013

- Osher S, Burger M, Goldfarb D, et al. An iterative regularization method for total variation-based image restoration. Multiscale Model Simul. 2005;4(2):460–489. doi: 10.1137/040605412

- Acar R, Vogel CR. Analysis of bounded variation penalty methods for ill-posed problems. Inverse Problems. 1994;10(6):1217–1229. doi: 10.1088/0266-5611/10/6/003

- Evans L. Partial differential equations. 2nd ed. Graduate Studies in Mathematics; Providence: American Mathematical Society; 2010.

- Friedman A. Partial differential equations of parabolic type. Englewood Cliffs, NJ: Prentice-Hall; 1964.

- Ladyzhenskaia OA, Solonnikov VA, Ural'ceva NN. Linear and quasi-linear equations of parabolic type. Providence (RI): American Mathematical Society; 1968.

- Xiao T, Yu S, Wang Y. Numerical solution for inverse problems. Beijing: Science Press; 2003.