?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

A solution method for an inverse problem of determining the time-dependent lowest order coefficient of the 2D bioheat Pennes equation with nonlocal boundary conditions and total energy integral overdetermination condition recently appeared in literature is analysed and improved. Improvements include convective boundary condition into the model, the development of an accurate forward solver, accurate determination of total energy, and the proposal of a method for the numerical treatment of the inverse problem from noisy data. In the method, the 2D bioheat Pennes equation is solved by the method of lines based on a highly accurate pseudospectral approach, and sought coefficient values are estimated by the Levenberg–Marquardt method with the discrepancy principle as stopping rule. Numerical experiments are reported to illustrate effectiveness of the proposed method.

2010 Mathematics Subject Classification:

1. Introduction

In this paper, we consider an inverse boundary value problem (IBVP) associated with a 2D linear second-order parabolic partial differential equation under convective and nonlocal boundary conditions, assuming an integral energy overdetermination condition. The model is intended to describe the bioheat transfer process in a rectangular perfused living tissue by means of the so-called Pennes equation for the temperature U of the tissue [Citation1–3],

(1)

(1) with boundary conditions

(2)

(2)

(3)

(3)

(4)

(4) and initial condition

(5)

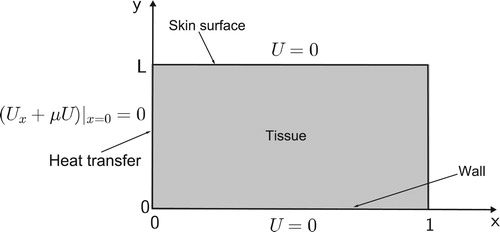

(5) In the above model, the tissue is supposed to have thickness equals to L and width equals to 1; the upper skin is located at y = L and there is a wall between the tissue and an adjoint blood vessel at y = 0 (Figure ). Equation (Equation1

(1)

(1) ) relies on the Fourier law for heat conduction assuming that the tissue is homogeneous and has isotropic thermal properties. The effects of the presence of the microcirculatory system are collectively accounted to avoid local anatomic issues so that all the blood perfusion information is concentrated in the term

, where

is the so called blood perfusion coefficient [Citation2–4]. Besides, the source term f accounts for the volumetric rate of external irradiation and metabolic heat. The convective boundary condition (Equation3

(3)

(3) ) attempts to simulate the heat transfer by convection between the boundary of the tissue at x = 0 and the external environment, which is supposed to be at zero temperature [Citation5]. Assumption (Equation4

(4)

(4) ) means that the upper skin and the wall are kept at zero temperature; in turn, the nonlocal periodic boundary condition (Equation2

(2)

(2) ) states that the boundary of the tissue at x = 0 and x = 1 are kept at the same (unknown) temperature. Nonlocal boundary conditions are characterized by the specification of values of the solution or its derivatives on a fixed part of the boundary together with a relationship between these values and the values of the same functions on other internal or boundary manifolds [Citation6,Citation7]. They are often observed in connection with a bioheat transfer model describing the relation between tissue temperature and arterial blood perfusion [Citation8,Citation9]. Note that the case

is the so called Ionkin boundary condition [Citation10,Citation11] that describes an insulated condition at x = 0. For other bioheat models with application in distinct scenarios the reader is referred to [Citation5,Citation12–16].

For simplicity from here on, we will take L = 1 which does not loss generality (see Remark 2.1 in [Citation17])). Let and

. We denote by

the set of all continuous functions on

and by

the set of all differentiable functions on

. Also

In the definitions above, the overline symbol denotes the closure of a set.

The inverse problem investigated in this work consists of finding a pair satisfying (Equation1

(1)

(1) )–(Equation5

(5)

(5) ) as well as the total integral overdetermination condition

(6)

(6) where

stands for thermal energy. In particular, motivated by practical problems, the main goal of the paper is to estimate the unknowns from noise corrupted discrete data

, measured at time steps

. Thus, the study presented in this work complements an approach reported in [Citation11], where the reconstruction of the unknowns is based on exact data.

Techniques for inverse blood perfusion determination in one-dimensional (1D) case based on initial and classical boundary conditions (Dirichlet, Neumann, Robin) and additional classical measurements are found in [Citation18] and references therein; related works including existence and uniqueness results are also found in [Citation19–22]. On the other hand, determination of blood perfusion in 1D cases based on nonlocal or nonclassical initial/boundary conditions and integral overdetermination data can be found in [Citation8,Citation23–26]. Moreover, such type of inverse problems in multidimensional cases have been studied in [Citation10,Citation11,Citation27–30]. A 2D bioheat transfer model which considers the coefficient p to be space-dependent and includes a given temperature on the upper skin, adiabatic conditions at left and right walls, and convective heat transfer between the tissue and an adjoint large blood vessel is studied both theoretically and numerically in [Citation1,Citation31]. Based on these studies, the inverse problem of estimating the space-dependent perfusion coefficient in this type of model is addressed in [Citation32] using a method of lines coupled with a nonlinear minimization protocol based on the regularized Gauss–Newton method. Identification procedures based on well-recognized approximation methods are available in several places. For instance, identification strategies based on Trefftz method can be found in [Citation13,Citation14], finite differences are used in [Citation11,Citation29], and the boundary element method is employed in [Citation15].

The goal of the present paper is to introduce a stable numerical reconstruction method for the time-dependent perfusion coefficient satisfying (Equation1

(1)

(1) ) – (Equation5

(5)

(5) ) under the condition (Equation6

(6)

(6) ). As in [Citation17], where the authors deal with the one dimensional reconstruction problem, the forward problem is approached by means of the method of lines, where the spatial derivatives in (Equation1

(1)

(1) ) are discretized through a highly accurate Chebyshev pseudospectral (CPS) approach [Citation33,Citation34]. This procedure results in a semidiscrete model involving an ODE system with respect to the variable t, which is then solved by the Crank–Nicolson method. The reconstruction problem is posed as a minimization problem whereby approximations for

are obtained. To solve the minimization problem, the Levenberg–Marquardt method [Citation35,Citation36] in conjunction with the discrepancy principle as stopping rule is considered. Such an approach requires accurate approximations to energy values at each iteration, this is the reason why we use the Clenshaw–Curtis quadrature rule, which is based on the Chebyshev points as nodes [Citation34, chapter 12].

The outline of the paper is the following. In Section 2, we discuss the well-posedness of the inverse reconstruction problem. In Section 3, the numerical scheme for the forward problem is addressed. In Section 4, we describe the estimation procedure. In Section 5, we present and justify the use of the Clenshaw–Curtis quadrature rule to evaluation of energy values. In Section 6, we present some numerical results. The paper ends with concluding remarks.

2. Mathematical background

In order to ensure global well-posedness of the inverse problem, we first use the generalized Fourier method to obtain a formal solution to (Equation1(1)

(1) )–(Equation5

(5)

(5) ) in series form. We follow the line of analysis reported in [Citation10,Citation11], which addresses the case

. Proceeding in this way, the first step is to consider the following 2D spectral problem for

,

(7)

(7)

(8)

(8)

(9)

(9) where σ is the separation parameter. Using the variable separation method, we look for solutions of the form

(10)

(10) Substitution of (Equation10

(10)

(10) ) into (Equation7

(7)

(7) )–(Equation9

(9)

(9) ) yields the following Sturm–Liouville problems

(11)

(11)

(12)

(12) Problem (Equation11

(11)

(11) ) is standard and has eigenvalues given by

and respective eigenfunctions

,

. On the other hand, (Equation12

(12)

(12) ) requires a special treatment since it is associated with a nonself-adjoint type problem. However, it is not difficult to check [Citation17,Citation26] that the eigenvalues

are given by

where

,

, are monotone increasing positive solutions of the equation

for

and

, respectively; in turn,

is the unique positive solution of the nonlinear equation

In addition, the system of eigenfunctions

,

, is given by

Now it is easy to check that the product ,

,

are eigenfunctions of the two dimensional spectral problem (Equation7

(7)

(7) )–(Equation9

(9)

(9) ) for the case

. Also, it can be shown that

,

,

, and

are biorthogonal in

, where

(13)

(13) are eigenfunctions of adjoint spectral problem to (Equation12

(12)

(12) ). The system of roots functions of (Equation7

(7)

(7) )–(Equation9

(9)

(9) ) is then given by

jointly with the system

of root functions of the corresponding adjoint problem. This comes as no surprise since it is characteristic for 2D problems that the product of eigenfunctions of two 1D spectral problems becomes eigenfunction of a 2D spectral problem. However, note that the boundary condition in (Equation11

(11)

(11) ) is self adjoint since it is a Dirichlet boundary condition, but the boundary condition in (Equation12

(12)

(12) ) is not. They are regular (but not strongly regular) [Citation37] and unlike the case of

, for the case

, the eigenvalues of the auxiliary spectral problem are the zeros of multiplicity two of the characteristic determinant. The expansion theorem in terms of eigenfunctions for the problem with regular boundary conditions [Citation37] is not applicable to the case

. In the case

the corresponding system of eigenfunctions and associated eigenfunctions is Riesz basis in

[Citation7]. The spectral problem (Equation12

(12)

(12) ) with the boundary conditions in the case

has the system of eigenfunctions which is a basis with parenthesis in

, [Citation11].

In addition, it is well known that the products of two orthonormal eigenfunctions corresponding of two 1D self adjoint boundary conditions are also an orthonormal basis in [Citation38], but generally it is not characteristic for other boundary conditions: Even if the eigenfunction of two 1D spectral problems generate bases, this may not happen with the products [Citation39]. For this reason, the business property of the products

,

,

of eigenfunctions (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ) in

has to be studied. However, this system is complete since

,

is an orthogonal basis and

,

, is basis with parenthesis in

[Citation38]. Then

is also a basis with parentheses in

, since for arbitrary

the series

converges in

, where

.

In the case , the system of eigenfunctions and associated eigenfunctions of problem (Equation7

(7)

(7) )–(Equation9

(9)

(9) ) has the form

whereas the system of eigenfunctions and associated eigenfunctions of the adjoint problem is given by

Then, the sequences

and

form a biorthogonal system of functions on

. Besides, each of these sequence form a Riesz basis in

[Citation7].

The solution of the forward problem (Equation1

(1)

(1) )–(Equation5

(5)

(5) ) for arbitrary

is then sought in Fourier series form in terms of

. We will not enter into details here; instead, we refer the reader to [Citation11]. The associated inverse problem can be addressed by means of such representation, taking into account the overdetermination condition (Equation6

(6)

(6) ) with

as unknown. Indeed, in this case, the inverse problem can be reformulated as a Volterra integral equation of the second kind with respect to

and whose solution depends on smoothness assumptions on the data. This leads to existence and uniqueness of the solutions for the inverse problem. More precisely, provided such r exists on

, the coefficient p can be determined from the equation

(14)

(14) As the details are quite similar to the proof of the case

presented in [Citation11], we omit the proof. However, for convenience of the reader the existence and uniqueness of solution to the inverse problem is stated below under the following assumptions on φ, f and E:

with

,

with

,

The main result on existence and uniqueness of the solution of the inverse problem (Equation1

(1)

(1) )–(Equation6

(6)

(6) ) is presented as follows.

Theorem 2.1

Under the conditions –

the inverse problem (Equation1

(1)

(1) )–(Equation6

(6)

(6) ) has a unique solution pair

and this solution depends continuously upon the data

.

Theorem 2.1 states that the inverse problem under investigation is well-posed in appropriate spaces of regular input data functions. However, in practice, the input data are inaccurate and the energy E is not smooth. Hence the solution of the inverse problem based on numerical differentiation following (Equation14(14)

(14) ) as done in various papers, see, e.g. [Citation8,Citation11,Citation29], becomes unstable and the calculated coefficient p loses its physical significance. This motivates the inverse problem method to be presented in Section 4 based on discrete data and the CPS collocation method.

3. Highly accurate forward solver

In this section, we will derive an efficient numerical approach for solving the bioheat model (Equation1(1)

(1) )–(Equation5

(5)

(5) ) that we will use in the numerical treatment of the inverse problem of interest. For this purpose, we will use the CPS method in which the discretization of spatial derivatives is done using the Chebyshev differentiation matrix, giving rise to a system of time-dependent ordinary differential equations that can be solved in in different ways. For the sake of clarity, before describing details of the proposed approach, let us introduce some generalities and notation about Chebyshev's differentiation matrix. Let

denote a vector with function values

as entries, where

are the so called Chebyshev nodes (also known as Chebyshev–Gauss–Lobatto nodes) within the interval

,

(15)

(15) and let the associated

Chebyshev differentiation matrix be denoted by D. Assuming that the rows and columns of D are indexed from 0 to n, the entries of D are given by [Citation34]

(16)

(16) where

if i = 0 or i = n and

otherwise. Provided v is sufficiently smooth, it is well known that matrix D yields highly accurate approximations to

,

, simply by taking

,

, and so on [Citation33,Citation34]. Further, to approximate the derivative

at Chebyshev nodes

distributed within an interval

, observe that introducing the change of variable

,

, and setting

, we have

. This implies that the Chebyshev differentiation matrix with nodes within

is simply

times matrix D defined in (Equation16

(16)

(16) ).

With regard to the discretization of the bioheat problem, for simplicity we will consider a mesh consisting of grid points on the unit square based on

Chebyshev points within

in each direction:

(17)

(17) and assume that grid points are numbered in lexicographic ordering. As the standard differentiation matrix is no longer used in this work, to discretize spatial derivatives, assume that the Chebyshev differentiation matrix with nodes within

is also denoted by D and partitioned as

(18)

(18) where the subscript

stands for transpose of a vector or matrix. For future use, let

,

denote the vectors formed by the first and last n components of

, respectively. Also, set

(19)

(19) Then second-order partial derivatives with respect to x on the grid can be approximated as

(20)

(20) where the last equality is by virtue of (Equation3

(3)

(3) ). Now noting that

using (Equation20

(20)

(20) ) and the boundary condition (Equation2

(2)

(2) ), the approximation becomes

where

(21)

(21) Since only unknowns

matter, with

denoting the first canonical vector of

, we conclude that

(22)

(22) where we have introduced

Therefore, an approximation to second-order derivatives with respect to x, for grid points associated to the unknowns only, can be written as follows:

(23)

(23) where ⊗ denotes Kronecker product and

denotes the

identity matrix. A similar approximation for second-order derivatives with respect to y on the whole grid can readily be written. In fact, if we use Matlab notation and introduce

we deduce that

(24)

(24) Neglecting approximation errors, combination of (Equation23

(23)

(23) ) and (Equation24

(24)

(24) ) together with the collocation method yield a semidiscrete model described as

(25)

(25) where

is a long vector containing block component

,

(26)

(26) with I denoting the identity matrix of appropriate order,

(27)

(27) and

The initial value problem (IVP) (Equation25

(25)

(25) ) can be solved by a variety of methods, including Runge–Kutta methods, predictor-corrector methods, etc. In this work, approximate solutions are calculated using the Crank–Nicolson method which means, for timestep h, at time

,

approximations

to

are calculated according to

or, equivalently

(28)

(28) where

(29)

(29) Numerical results that illustrate the effectiveness of the forward solver we have just described are postponed to Section 6.

4. Estimation method

Let be a family of continuous linearly independent functions on

such as polynomials, splines, etc., and let

. Let us construct approximations to the perfusion coefficient

in the subspace finite-dimensional

. That is, the idea is to determine

, with

,

(30)

(30) where

,

, are unknown coefficients to be determined by requiring that the integral overdetermination condition (Equation6

(6)

(6) ) is satisfied approximately in a sense to be described below. For now notice that if the perfusion coefficient is as in (Equation30

(30)

(30) ), then the semidiscrete forward problem becomes

(31)

(31) so that, for each collection of coefficients

, there is a solution

. Let

be an approximation to

(

),

,

, obtained by some numerical method, and let the associated approximation to the solution

be denoted by

. Now assume that energy values are calculated by using

in conjunction with some quadrature rule and that such values are denoted by

. Obviously, the quality of energy values calculated in this way depends on α. Thus, the purpose of the estimation method we have in mind is to determine a proper α such that predicted energy values

are close to measured values

, i.e.

(32)

(32) in a sense to be specified latter. To this end, if the discrepancy between predicted and measured data

is denoted by

(33)

(33) the estimation method proposed in this work looks for coefficients

which minimizes the sum of square discrepancies

(34)

(34) where

denotes the 2-norm in

,

is the vector of predicted energy values and

is the vector of measured data.

From here on, we will focus on the minimization problem. The convergence analysis of the approximations is beyond the scope of the present work and is left for future research. As a matter of fact, it is not difficult to see that the first-order necessary condition for minimization of

,

, reads

(35)

(35) where

is the Jacobian matrix (also referred to as the sensitivity matrix) whose entries are

(36)

(36) For practical calculation of

we note that as

is obtained by integrating

, partial derivatives

are also obtained by integrating

. But since

solves (Equation31

(31)

(31) ), it is straightforward to see that

(37)

(37) Now since the initial value

does not depend on the vector of parameters α, then

As a result, partial derivatives of temperature

with respect to

can be computed by solving the initial value problem

(38)

(38) To summarize, the determination of

can be done by first solving the auxiliary initial value problem (Equation38

(38)

(38) ) and then integrating the solution to determine partial derivatives

.

In practice, measured data are corrupted by noise and the challenge is to estimate a vector of parameters by approximately minimizing (Equation34

(34)

(34) ) with

instead of

such that

(39)

(39) With the Jacobian matrix at hand the nonlinear problem (Equation34

(34)

(34) ) can be handled in several ways by using iterative methods. For instance, by linearizing predicted energy

around a current estimate

at iteration k we obtain

(40)

(40) where

and

denote the Jacobian matrix and the predicted energy at iteration k. The Gauss–Newton method for minimizing (Equation34

(34)

(34) ) with data satisfying (Equation39

(39)

(39) ) substitutes the linear model (Equation40

(40)

(40) ) into (Equation35

(35)

(35) ), and defines a sequence of iterative approximations

given as

(41)

(41) where

is assumed to be nonsingular. Problems for which

is rank-deficient or close to singular generate difficulties and sequence (Equation41

(41)

(41) ) may lead to useless results. The Levenberg-Marquardt method (LMM) alleviates such difficulty by constructing a sequence of approximations of the form

(42)

(42) where

is the so called damping parameter and

is a diagonal matrix with positive entries.

A number of implementations of LMM are available in the literature which depend on the way the damping parameters are updated and on the choice of the diagonal matrix

[Citation35,Citation36,Citation40,Citation41]. In this work, we follow an approach by Yamashita et al. [Citation42] who consider LMM with line search and Armijo stepsize rule, with

chosen as the identity matrix and the damping parameter

chosen as the squared residual norm at iteration k. Regardless the chosen LMM implementation, a crude algorithm to estimate the desired coefficient can go as follows

Set k = 0 and choose an initial guess

.

Set

and solve the IVP (Equation31

(31)

(31) ) to calculate energy values

.

Calculate the residual

. If

meets a termination criterion, calculate

and stop, otherwise,

(3.1) For

, solve the IVP (Equation38

(38)

(38) ) to calculate the Jacobian

,

(3.2) Choose

and calculate

based on (Equation42

(42)

(42) ),

(3.3) Do j = j + 1 and go back to step 2.

For an LMM implementation with chosen according to Yamashita et al. [Citation42], applied to the estimation of the perfusion coefficient of a 1D bioheat problem, the reader is referred to [Citation17]. In addition, it is worth noting that the matrix inverse in (Equation42

(42)

(42) ) should not be is explicitly calculated to obtain

. Instead, just use a matrix decomposition to solve the system

for z and set

. Efficient calculation of energy values and corresponding derivatives is addressed in the next section.

5. Numerical calculation of energy and derivatives

For an efficient implementation of the optimization method, we have in mind energy values and the corresponding derivatives must be calculated by using highly accurate quadrature rules. If temperature values are known exactly, energy values can be calculated by using Gaussian quadrature following standard procedures. In fact, if

and

denote nodes and weights of Gaussian quadrature on

, in calculating energy values

we first approximate the integral with respect to x as

and then take

with

. Taking into account that the sums above can be regarded as standard inner product in

, it is straightforward to see that energy values can readily be approximated as

(43)

(43) where

denotes the

matrix with entries

,

, and

denotes the vector of weights.

Approximations obtained with Gaussian quadrature with m + 1 points is known to be exact for polynomials of degree up to 2m + 1. However, the calculation of energy values, as described above, cannot be done in our context since, what we have at our disposal are approximate temperature values at Chebyshev points not at Gauss quadrature nodes. To circumvent this difficulty we chose to use Clenshaw–Curtis quadrature rule based on Chebyshev points as nodes which integrates polynomials of degree m exactly. That is, Clenshaw–Curtis quadrature is half as efficient as Gaussian quadrature, although in practice it is not uncommon to see that both quadratures essentially provide the same precision or cases where Clenshaw–Curtis quadrature is more accurate than Gaussian quadrature [Citation43]. So, to calculate approximations to energy values

we use (Equation43

(43)

(43) ) where

is replaced by the vector of Clenshaw–Curtis quadrature weights,

are replaced by Chebyshev points, and

is replaced by matrix

with entries

calculated by the CPS method. In other words, in the numerical implementation of the reconstruction method proposed in this work, energy values are calculated as

(44)

(44) where w denotes the vector of Clenshaw–Curtis quadrature weights and

is the matrix with approximations to the solution at time

on the Chebyshev mesh. Clenshaw–Curtis quadrature weights can be readily calculated in several ways, see, e.g. [Citation34]. Obviously, a similar procedure can be followed to calculate partial derivatives required in (Equation36

(36)

(36) ).

6. Numerical results

Numerical results reported in what follows start with an example that illustrates both the effectiveness of the CPS method and the accuracy of Clenshaw–Curtis quadrature rule in calculating energy values. Then, three examples that illustrate the reconstruction method proposed in this work are described.

6.1. Assessing CPS method and Clenshaw–Curtis quadrature rule

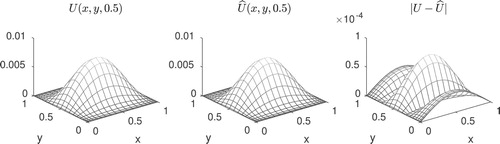

To demonstrate numerically the effectiveness of the forward solver developed in Section 3 we use a benchmark problem for the case , with source function

chosen so that the unique solution to (Equation1

(1)

(1) )–(Equation5

(5)

(5) ) is

(45)

(45) with perfusion coefficient defined by

. It is not difficult to show that for this test problem the energy function is given by

(46)

(46) Calculation of the forward problem is made using

Chebyshev grid points and the auxiliary initial value problem (Equation25

(25)

(25) ) is solved by using Crank–Nicolson method with timestep

(i.e.with N = 200). Exact solution

, numerical solution

and the corresponding error at t = 0.5,

, all are displayed in Figure . This test problem is also considered in [Citation11] (see Example 4.1) and solved by using finite differences with stepsize

. The accuracy obtained in this way is approximately of the order

. This contrasts significantly with our results obtained with much fewer grid points and with pointwise error of the order

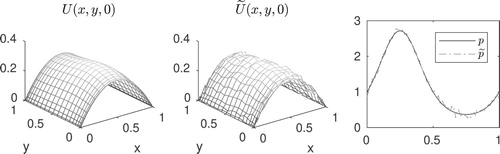

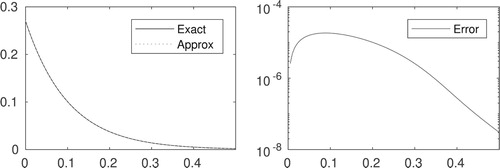

.

Figure 2. Exact solution and numerical solution of model (Equation1(1)

(1) )and corresponding error.

![Figure 2. Exact solution and numerical solution of model (Equation1(1) Ut=Uxx+Uyy−p(t)U(x,y,t)+f(x,y,t),(x,y,t)∈]0,1[×]0,L[×]0,T],(1) )and corresponding error.](/cms/asset/cc74b672-d1db-4535-812b-4a1e29903053/gipe_a_1846034_f0002_ob.jpg)

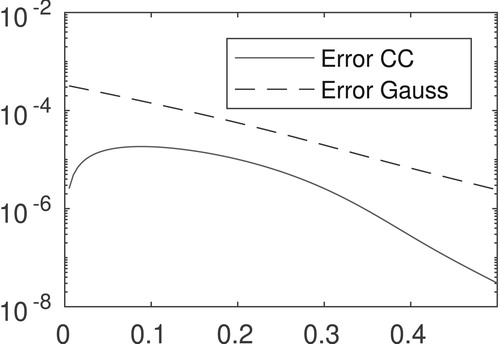

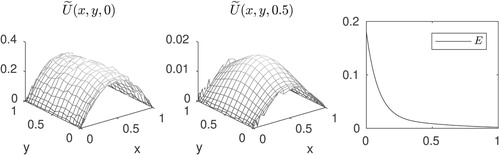

Next, to assess the approximation error of Clenshaw–Curtis quadrature rule we use the approximate solution W to calculate energy values according to (Equation44(44)

(44) ) with n + 1 = 21 Chebyshev points. Outcome of the calculations, as well as the corresponding pointwise error, are displayed in Figure . Note that the approximation error in energy values is approximately

.

Figure 3. Left: Energy values and their Chebyshev-Clenshaw–Curtis based approximations. Right: Pointwise error.

To further evaluate the approximation error of Clenshaw–Curtis quadrature rule, energy values were calculated using Gaussian quadrature with the same number of points. Results displayed in Figure show what is often observed in practice: both quadrature rules have essentially the same accuracy, see again [Citation43].

6.2. Reconstruction examples

In our examples, the forward solver is implemented as described in the previous subsection and the Levenberg method looks for a parametrized coefficient based on a linear spline basis [Citation44] associated with m + 1 = 21 equally distributed points. Thus the Jacobian matrix

at iteration k is of order

and highly sparse due to the local properties of the spline basis being used. Noisy measurements are simulated by adding Gaussian random noise to the exact data

,

, such that

where

are zero-mean random numbers scaled in such a way that relative noise level in data is specified as

Constant

stands for relative noise level whereas

and

denote vectors containing noise-free and noisy measurements. To stop the iterative process, we use the discrepancy principle, i.e. the iterations stop at the first k such that

In all computations, the error norm was used as input data and the discrepancy principle was implemented with

We consider two numerical examples to illustrate the effectiveness of the estimation method.

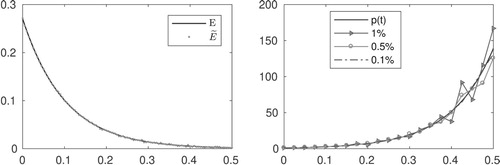

6.2.1. Example 1

This example, taken from [Citation11], considers the case and has a solution given by the pair

The source term and energy are, respectively,

To evaluate the robustness of the estimation method to noise, we consider data as described above with three noise levels, namely

and for each noise level we calculate the relative error in the reconstruction defined as

where

and

denote the vectors containing pointwise values of exact and estimated

, respectively. Exact data and noisy data with noise level

as well as the results of the numerical inversion for all tested noise levels are displayed in Figure . Relative errors are displayed in Table . Note that the pointwise estimation error improves as the noise level decreases and few iterations were required for the stopping criterion to be satisfied, e.g. for NL=

the criterion was satisfied after k = 9 iterations.

Figure 5. Left: Exact and noisy data for numerical inversion. Noisy data in this illustration corresponds to noise level . Right: Exact coefficient

and recovered ones.

Table 1. Relative error in reconstructing .

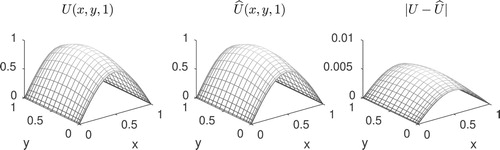

To further evaluate the effectiveness of the proposed inversion method, the recovered perfusion coefficient, the source function and initial data in model (Equation1(1)

(1) ) – (Equation5

(5)

(5) ) were used as input data to predict temperature values through our forward solver. The predicted computed values, denoted here by

, and pointwise errors

for the case

at the time level t = 0.5 are displayed in Figure . Notice that the error in the recovered

for this case is about

.

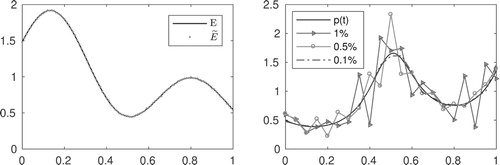

6.2.2. Example 2

We now address the reconstruction problem using a test problem with . In this case, the solution to the inverse problem is

In addition, the source term, the initial condition and the energy function are, respectively,

For this example the forward solver also uses

grid points but the time step is h = 0.05 and the semidiscrete model (Equation25

(25)

(25) ) is solved on

As in Example 1, to evaluate the reconstruction method we also consider noise data with three noise levels,

Numerical results displayed in Figure together with relative errors presented in Table show that the quality of the reconstruction improves as the noise level decreases and indicate therefore that the method is robust. As for the number of iterations spent by the whole process, in this example, for

the stopping index was k = 5.

Figure 7. Left: Exact and noisy data for numerical inversion. Noisy data in this illustration corresponds to noise level . Right: Exact coefficient

and recovered ones.

Table 2. Relative error in reconstructing .

Finally, as in Example 2, we also use the recovered coefficient in order to calculate predicted temperature values

. These values and the corresponding pointwise error for

are displayed in Figure .

6.2.3. Example 3: Hard test

We consider a test problem using data that can be accepted as experimental and that allow us to objectively challenge the effectiveness of the proposed method. The data set we have in mind consists of energy values corresponding to a bioheat transfer problem (Equation1(1)

(1) )-(Equation5

(5)

(5) ), with

, initial condition

(47)

(47) and source function

(48)

(48) For the moment, the perfusion coefficient p is an arbitrary function in

and therefore, no solution to the bioheat transfer problem is available. In this example we use

.

For ease of exposition, we will start with an analysis of the input data described above to ensure that the inverse problem of interest has a unique solution. Next, we will describe how the data set is generated and, finally, we will describe results of the reconstruction.

6.2.4. Analysis

We will show that the data set we have in mind arises from a well-posed problem by checking that the input data and the associated energy E meet the conditions

–

in Theorem 2.1. In fact, it is easy to check that φ satisfies the boundary conditions in

and that

(49)

(49) Clearly, the first integral in (Equation49

(49)

(49) ) is positive. As for the second, integration by parts shows that

so that

. We can argue analogously to show that

(50)

(50) Therefore, since φ is sufficiently regular, all conditions in

are satisfied. In turn, due to the definition of function f in (Equation48

(48)

(48) ), it follows that the boundary conditions in

are satisfied and that

holds true. Thus, since f is sufficiently regular, all conditions in

holds true.

Now since both and

are satisfied, for given

, p>0, we are allowed to conclude that the forward problem (Equation1

(1)

(1) )–(Equation5

(5)

(5) ) has a unique solution

, which, as already mentioned, is expressed in series form in terms of the basis

[Citation11]. Therefore, to check that

holds true it is sufficient to show that the associated energy E defined in (Equation6

(6)

(6) ) satisfies

. For this we will show that

on

. Indeed, assume the contrary and set

. As

on

, it is clear that U does not attain

in

. From the maximum principle for parabolic equations [Citation45, Theorem 1.5, p. 53], if U attains

at some point in

then

throughout

; clearly, this contradicts (Equation1

(1)

(1) ), as both p and f given by (Equation48

(48)

(48) ) are positive on

. As a consequence, taking into account (Equation2

(2)

(2) )–(Equation4

(4)

(4) ), U attains

at a point

, 0<y<1 or at a point

, 0<y<1,

. Assuming that U attains

at a point

, 0<y<1, using again the maximum principle, it follows that

, i.e.

, which contradicts (Equation3

(3)

(3) ). From condition (Equation2

(2)

(2) ), assuming that U attains

at a point

, 0<y<1, U attains

also at

, so we have the same contradiction. Thus, we conclude that

on

and from (Equation6

(6)

(6) ) that

. Now, for each

fixed, as f is not identically null and taking into account that

satisfies (Equation1

(1)

(1) ), it is clear that

is not identically null on

. Then, from the continuity of U in

, we conclude that

for all

.

In our numerical experiments we will use

(51)

(51)

6.2.5. Generating data

Roughly speaking, to simulate experimental energy data for the bioheat transfer problem of interest we will rely on temperature data obtained from the numerical solution of a nearby problem. For this, we first use the Crank–Nicolson method to calculate approximate solutions to the forward problem (Equation1

(1)

(1) )–(Equation5

(5)

(5) ), as described in (Equation28

(28)

(28) ), assuming that neither the initial condition

given in (Equation47

(47)

(47) ) nor the coefficient

in (Equation51

(51)

(51) ) are exactly known. The source term, on the other hand, is assumed known and given in (Equation48

(48)

(48) ). Then, to calculate discrete energy values

we simply integrate the approximate solution at

on

using the composite trapezoidal quadrature rule. However, we observe that, since the CPS method works on a grid based on Chebyshev points, to calculate energy values in this way the approximate solutions at each time step have to be interpolated on a regular grid. In our numerical calculations, to interpolate the data we use the built-in Matlab function interp2. In practice, the temperature data used in our calculations come from solving the forward problem with initial condition

and perturbed coefficient

, where

and

denote random numbers with specified intensities. For the reconstruction problem, we will always use temperature data on a spatial grid of

Chebyshev points at time steps of size h = 0.05. As an example of input data that we use to generate energy values, exact and perturbed initial condition, φ,

, as well as exact and perturbed coefficient, p,

, with a noise level of

in both cases, are displayed in Figure .

On the other hand, the initial condition , the approximate numerical solution obtained by Crank–Nicolson method at t = 0.5, as well as the calculated energy values, are all displayed in Figure . It is worth emphasizing here that, since the Crank-Nicolson method is unconditionally stable, provided input errors are small, the approximate solutions

will not depart so much from

. This justifies why we regard the actual temperature data as experimental.

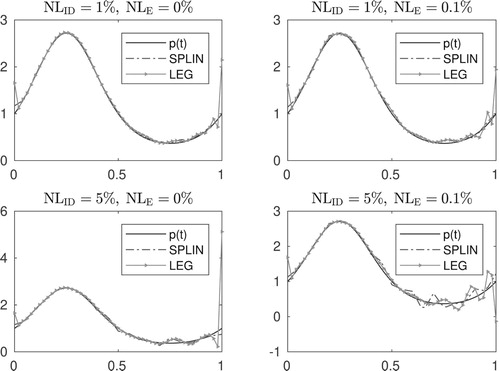

6.2.6. Reconstruction results

We report numerical results obtained with the proposed method from data , generated as described above, assuming that both

,

(the input data) are affected by perturbations with the same intensity. We consider input data with three noise levels,

. Before proceeding we observe that, since the purpose of the present example is to challenge the proposed method, in addition to addressing the reconstruction problem with the calculated energy values

as input data, we also consider extra uncertainty in

coming from additive random disturbances in

; the numerical experiment with this type of data is conducted considering two noise levels:

. Further, for comparison purposes, in addition to the results obtained with the use of the spline basis, we also report results obtained with the method under the assumption that the perfusion coefficient is parameterized as a linear combination of Legendre polynomials on [0,1] of degree less than or equal to m (a subset of the Legendre basis of

). That is, we also look for

with

,

(52)

(52) where

denotes the shifted Legendre polynomial

(53)

(53) being

the classical Legendre polynomial of degree k. In our numerical calculations both bases (spline and polynomial) have dimension 21. Taking into account that we consider disturbances in the input data with three noise levels (

) and disturbances in the calculated energy also with three noise levels (

), the numerical results of the experiment can be organized into 9 cases, each of them associated to a pair

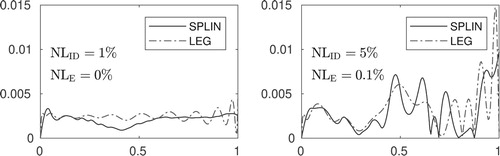

. In Figure , we present results of four cases, which we consider as the most representative ones and that correspond to the combinations (S, S), (S, L), (L, S) and (L, L), where S and L stand for smallest and largest noise levels respectively.

As we can see, except for the case (S,S), i.e.(), the quality of the reconstructions obtained with the Legendre basis look significantly inferior when compared to that obtained with the spline basis. To assess the results in a more efficient way, we calculate relative errors in the reconstructions for both approaches. Results are shown in Table not only confirm what we visually concluded from Figure , but also that, for this test problem, the reconstruction quality obtained with the spline basis is superior.

Table 3. Relative error in estimated coefficients.

To close this comparison, we mention that we also performed other experiments using the Legendre basis with dimensions 11 (m = 10) and 16 (m = 15). The quality of the results followed the same trend as those presented above and, therefore, are not reported here.

In the last part of the numerical experiment, we first solve the semidiscrete problem with noise-free input data and consider the numerical solution at as exact temperature data. Then, with

and the estimated coefficient

as input data, we solve the semidiscrete problem to predict temperature values at the same time steps and compare them with the exact ones through relative errors in the Frobenius norm. The results of such a comparison for the cases (S, S) and (L, L) are shown in Figure . From this figure, it can be seen that the errors in the predicted temperatures do not exceed

and

for the cases (S,S) and (L,L), respectively, which is quite notable since in these cases the largest error in

is about

. We believe that the reason of this is the ill-posed nature of the problem: very different input parameters can lead to very similar results.

7. Concluding remarks

The paper considers the determination of a time-dependent lowest order coefficient of a 2D parabolic equation with Ionkin nonlocal boundary condition and total energy measurement. Existence and uniqueness and global well-posedness of the problem are studied by using a series expansion method based on eigenfunctions of a nonself-adjoint spatial differential operator. The system of eigenfunctions is proved to be a basis with parenthesis of the solution space. For numerically solving the problem, the sought coefficient was determined by a nonlinear least-squares method combined with the CPS collocation method to solve the forward problem. Numerical results showed that the method is able to provide accurate and stable numerical reconstructions.

As future work, we plan to study an inverse problem associated with a 2D parabolic equation described as

(54)

(54) with nonclassical boundary conditions

(55)

(55)

(56)

(56)

(57)

(57) and initial condition

(58)

(58) The above mathematical model is intended to describe the bioheat transfer process of a rectangular perfused tissue with thickness and width equal to 1, upper skin at y = 1, a wall between the tissue and an adjoint blood vessel at y = 0 under adiabatic condition at x = 0 and the Wentzell boundary condition at x = 1, which is describing a situation like the Robin boundary condition, with the additional feature that the boundary has the capacity of storing heat.

The inverse problem that we plan to study consists of finding a pair satisfying (Equation54

(54)

(54) ) – (Equation58

(58)

(58) ) and the total integral overdetermination condition

(59)

(59) The mathematical analysis of the problem (Equation54

(54)

(54) )–(Equation59

(59)

(59) ) requires solving the 2D spectral problem

where σ is the separation parameter. This kind of 2D spectral problems is new and there is not enough literature available [Citation39,Citation46]. Theoretical and numerical analysis of (Equation54

(54)

(54) )–(Equation59

(59)

(59) ) are the subject of ongoing research.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Bedin L, Bazán FSV. On the 2D bioheat equation with convective boundary conditions and its numerical realization via a highly accurate approach. Appl Math Comp. 2014;236:422–436.

- Fan J, Wang L. Analytical theory of bioheat transport. J Appl Phys. 2011;109:104702.

- Pennes HH. Analysis of tissue and arterial blood temperatures in the resting human forearm. J Appl Physiol. 1948;1:93–122.

- Lakhssassi A, Kengne E, Semmaoui H. Modified Pennes' equation modelling bioheat transfer in living tissues: analytical and numerical analysis. Nat Sci. 2010;2:1375–1385.

- Cao L, Qin QH, Zhao N. An RBMFS model for analysing thermal behavior of skin tissues. Int J Heat Mass Transfer. 2010;53:1298–1307.

- Azizbavov EI. The nonlocal inverse problem of the identification of the lowest coefficient and the right-hand side in a second-order parabolic equation with integral conditions. Bound Value Probl. 2019;2019:1–19.

- Ionkin NI. Solution of a boundary-value problem in heat conduction with a non-classical boundary condition. Differ Equ. 1977;13:294–304.

- Hazanee A, Lesnic D. Determination of a time-dependent coefficient in the bioheat equation. Int J Mech Sci. 2014;88:259–266.

- Hochmuth R. Homogenization for a non-local coupling model. Appl Anal. 2008;87:1311–1323.

- Ionkin NI, Morozova VA. The two-dimensional heat equation with nonlocal boundary conditions. Differ Equ. 2000;36:982–987.

- Ismailov MI, Erkovana S. Inverse problem of finding the coefficient of the lowest term in two-dimensional heat equation with Ionkin-type boundary condition. Comput Math Math Phys. 2019;59:791–808.

- Ciesielski M, Duda M, Mochnacki B. Comparison of bioheat transfer numerical models based on the Pennes and Cattaneo–Vernotte equations. J Appl Math Comput Mech. 2016;15:33–38.

- Grysa K, Maciag A. Trefftz method in solving the Pennes' and single-phase-lag heat conduction problems with perfusion in the skin. Int J Numer Methods Heat Fluid Flow. 2019;30:3231–3246.

- Grysa K, Maciag A. Identifying heat source intensity in treatment of cancerous tumor using therapy based on local hyperthermia – The Trefftz method approachs. J Therm Biol. 2019;84:16–25.

- Iljaz J, Skerget L. Blood perfusion estimation in heterogeneous tissue using BEM based algorithm. Eng Anal Bound Elem. 2014;39:75–87.

- Majchrzak E, Turchan L. The general boundary element method for 3D dual-phase lag model of bioheat transfer. Eng Anal Bound Elem. 2015;50:76–82.

- Ismailov MI, Bazán FSV, Bedin L. Time-dependent perfusion coefficient estimation in a bioheat transfer problem. Comput Phys Commun. 2018;230:50–58.

- Grabski JK, Lesnic D, Johansson BT. Identification of a time-dependent bioheat blood perfusion coefficient. Int Commun Heat Mass Transfer. 2016;75:18–22.

- Lesnic D, Ivanchov MI. Determination of the time-dependent perfusion coefficient in the bioheat equation. Appl Math Lett. 2015;39:96–100.

- Lin Y. An inverse problem for a class of quasi-linear parabolic equations. SIAM J Math Anal. 1991;22:146–156.

- Prilepko AI, Solov'ev VV. On the solvability of inverse boundary value problems for the determination of the coefficient preceding the lower derivative in a parabolic equation. Differ Equ. 1987;23:136–143.

- Yousefi SA. Finding a control parameter in a one-dimensional parabolic inverse problem by using the Bernstein Galerkin method. Inverse Probl Sci Eng. 2009;17:821–828.

- Ismailov MI, Kanca F. An inverse coefficient problem for a parabolic equation in the case of nonlocal boundary and overdetermination conditions. Math Methods Appl Sci. 2011;34:692–702.

- Ivanchov MI, Pabyrivska NV. Simultaneous determination of two coefficients of a parabolic equation in the case of nonlocal and integral conditions. Ukr Math J. 2001;53:674–684.

- Kerimov NB, Ismailov MI. Direct and inverse problems for the heat equation with a dynamic-type boundary condition. IMA J Appl Math. 2015;80:1519–1533.

- Kerimov NB, Ismailov MI. An inverse coefficient problem for the heat equation in the case of nonlocal boundary conditions. J Math Anal Appl. 2012;396:546–554.

- Cannon JR, Lin Y, Wang S. Determination of source parameter in parabolic equation. Meccanica. 1992;27:85–94.

- Daoud DS, Subasi D. A splitting up algorithm for the determination of the control parameter in multi-dimensional parabolic problem. Appl Math Comput. 2005;166:584–595.

- Dehghan M. Numerical methods for two-dimensional parabolic inverse problem with energy overspecification cation. Int J Comput Math. 2000;77:441–455.

- Pyatkov SG. Solvability of some inverse problems for parabolic equations. J Inv Ill-Posed Probl. 2004;12:397–412.

- Bedin L, Bazán FSV. A note on existence and uniqueness of solutions for a 2D bioheat problem. Appl Anal. 2017;96:590–605.

- Bazán FSV, Bedin L, Borges LS. Space-dependent perfusion coefficient estimation in a 2D bioheat transfer problem. Comput Phys Commun. 2017;214:18–30.

- Fornberg B. A practical guide to pseudospectral methods. Cambridge: Cambridge University Press; 1996.

- Trefethen LN. Spectral methods in matlab. Philadelphia (PA): SIAM; 2000.

- Levenberg K.A. Method for the solution of certain non-linear problems in least squares. Quart Appl Math. 1944;2:164–168.

- Marquardt DW. An algorithm for least squares estimation of nonlinear parameters. J Soc Ind Appl Math. 1963;11:431–441.

- Naimark MA. Linear differential operators: elementary theory of linear differential operators. New York (NY): Frederick Ungar Publishing Co.; 1967.

- Lo CY. Boundary value problems. Singapore: World Scientfic; 2000.

- Yu. Kapustin N, Moiseev EI. A spectral problem for the Laplace operator in the square with a spectral parameter in the boundary condition. Differ Equ. 1998;34:663–668.

- Beck JV, Arnold KJ. Parameter estimation in engineering and science. New York (NY): Wiley; 1977.

- Dennis J, Schnabel R. Numerical methods for unconstrained optimization and nonlinear equations. Englewood Cliffs (NJ): Prentice Hall; 1983.

- Yamashita N, Fukushima M. On the rate of convergence of the Levenberg–Marquardt method. Comput (Suppl). 2001;15:239–249.

- Trefethen LN. Is gauss quadrature better than Clenshaw–Curtis? SIAM Rev. 2008;50:67–87.

- Golub HH, Ortega JM. Scientific computing and differential equations-an introduction to numerical methods. San Diego (CA): Academic Press; 1992.

- Pao CV. Nonlinear parabolic and elliptic equations. New York (NY): Plenum Press; 1992.

- Yu. Kapustin N. Basis properties of a problem for the Laplace operator on the square with spectral parameter in a boundary condition. Differ Equ. 2017;53:563–565.