Abstract

In this paper, the problem of identifying an unknown source for the space-time fractional diffusion equation is studied. The time fractional derivative in this equation is a recently introduced one, namely the Caputo-Fabrizio fractional derivative. We show that this problem is ill-posed. Under an a priori bound assumption, we obtain the optimal error bound of the problem under the source condition. Moreover, we use a modified quasi-boundary regularization method and the Landweber iterative regularization method to solve this ill-posed problem. Based on a priori and a posteriori regularization parameter selection rules, the corresponding convergence error estimates of the two regularization methods are obtained. In contrast to the modified quasi-boundary regularization method, the convergence error estimate of the Landweber iterative regularization method is order-optimal. Finally, the advantages, stability and effectiveness of the two regularization methods are illustrated by examples with different properties.

1. Introduction

In the past few decades, the fractional diffusion equation has attracted much attention from many scholars. Different fractional diffusion equations use different fractional derivatives, and there are many kinds, such as the Caputo fractional derivative, the Riesz fractional derivative, the Grünwald-Letnikov fractional derivative, the Riemann-Liouville fractional derivative and so on. To overcome the problem of the singular kernel of these fractional derivatives, Caputo and Fabrizio developed in 2015 a new fractional derivative with a smooth kernel, called the fractional Caputo-Fabrizio derivative (FCFD) [Citation1]. It can describe the inhomogeneity of materials and fluctuations at different scales. There are many research results on the direct problem for equations with the FCFD. Losada and Nieto studied some properties of the FCFD [Citation2]. Several scholars have studied well-posedness questions for differential equations with the FCFD, such as the Cauchy problem and the existence and uniqueness of solutions. In [Citation3], the authors studied the Cauchy problem of the space-time fractional diffusion equation in which the time fractional derivative is the Caputo-Fabrizio fractional derivative. First, the expression of the solution of the linear diffusion equation was obtained by the Laplace transform, and the uniqueness of the solution was proved. Second, the regularity of weak solutions in the linear and nonlinear cases was studied under a global Lipschitz assumption, and the existence of local mild solutions was established under a local Lipschitz assumption. In [Citation4], Xiangcheng Zheng and Hong Wang studied a class of nonlinear fractional ordinary differential equations with variable-order FCFD and proved that the problem is well-posed.
The method was further extended, and well-posedness results for linear partial differential equations were proved. In [Citation5], Al-Salti considered a class of boundary value problems for time fractional heat conduction equations with the FCFD. The existence and uniqueness of the solution were proved by the method of separation of variables together with the initial value problem for a fractional differential equation. The Caputo-Fabrizio fractional heat conduction equation was transformed into a Volterra integral equation, and different solutions were given for different parameters. As a differential operator, the FCFD has a wide range of applications in many fields, such as dynamical systems, biomedicine, electromagnetics and so on. In [Citation6], Baleanu proposed a new FCFD model with an exponential kernel. The uniqueness of the solution was proved and the convergence was analysed. Numerical experiments showed that the new fractional order model performed better than the integer order model with the ordinary time derivative. In [Citation7], Al-khedhairi proposed a financial model with non-smooth FCFD. The existence and uniqueness of the solution of the model were proved, the local stability was analysed, and the key dynamic properties of the model were studied. In [Citation8], the authors considered the case of linear fractional differential equations:

where

,

and

, and studied the explicit form of the general solution of fixed point. On this basis, the uniqueness of the solution to the initial value problem of nonlinear differential equations was studied. In [Citation9,Citation10], numerical solutions of differential equations with the FCFD and the corresponding algorithms were studied. In [Citation9], the authors introduced the second-order scheme for the distribution of particle anomalous motion function and derived a spatial fractional diffusion equation with time FCFD based on a continuous time random walk model

The scheme was shown to be unconditionally stable, and an optimal error estimate was obtained by theoretical proof and confirmed by numerical verification. In [Citation10], based on the FCFD, the authors introduced a Crank-Nicolson difference scheme for solving the fractional Cattaneo equation:

where

,

,

. On a uniform partition, an a priori estimate of the discrete

error with optimal convergence order

was established. Numerical experiments verified the applicability and accuracy of the scheme and supported the theoretical analysis.

In recent years, the Caputo fractional derivative and the Riemann-Liouville fractional derivative have been the most common fractional derivatives in the inverse problem literature on fractional differential equations. Moreover, most fractional differential equations are fractional only in time, not in space. To our knowledge, few papers so far have discussed inverse problems involving the Caputo-Fabrizio fractional derivative, and no literature uses a regularization method to deal with ill-posed problems for differential equations containing the Caputo-Fabrizio fractional derivative.

Dealing with ill-posed problems by regularization is currently a very active topic in the field of inverse problems, and many regularization methods are available. In the early days, most scholars used the Tikhonov regularization method [Citation11,Citation12], one of the oldest regularization methods, to deal with inverse problems. Later, building on the Tikhonov regularization method, some scholars studied the modified Tikhonov regularization method [Citation13], the fractional Tikhonov regularization method [Citation14,Citation15] and the simplified Tikhonov regularization method [Citation16]. For inverse problems in bounded domains, the regularization methods include the quasi-boundary regularization method [Citation17–19], the truncation regularization method [Citation20,Citation21], a modified quasi-boundary regularization method [Citation22], a mollification regularization method [Citation23], the quasi-reversibility regularization method [Citation24,Citation25], the Landweber iterative regularization method [Citation26–28], etc. For inverse problems in unbounded domains, the common regularization method is the Fourier truncation regularization method [Citation29–32]. In [Citation33], Liu and Feng considered a backward problem of a spatial fractional diffusion equation and constructed a regularization method based on the improved ‘kernel’ idea, namely the improved kernel method. The modified kernel method can also deal with other ill-posed problems [Citation34–37].

In this paper, we first prove the uniqueness of the solution of problem (1), and illustrate the ill-posedness of the inverse problem (1), in other words, the solution does not depend continuously on the measured data. Secondly, we use the modified quasi-boundary regularization method to solve the ill-posed problem (1). Based on a priori and a posteriori regularization parameter selection rules, we give the convergence error estimates between the exact solution and the regularization solution. However, the convergence error estimates obtained by the modified quasi-boundary regularization method exhibit a saturation phenomenon. We therefore propose a second regularization method, the Landweber iterative regularization method, to solve this ill-posed problem. We find that the error estimates obtained by the Landweber iterative regularization method do not saturate, and that the convergence error estimates under the a priori and a posteriori regularization parameter choice rules are order-optimal.

In this paper, we consider the following problem:

(1)

(1) where

,

, Ω is a bounded domain in

with sufficiently smooth boundary

.

is the Caputo-Fabrizio operator for fractional derivatives of order α which is defined as follows: assume a>0,

, and

, for a function with a first derivative ν, its Caputo-Fabrizio fractional derivative is defined as [Citation5]:
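As a purely illustrative aid, the sketch below evaluates a widely used form of the Caputo-Fabrizio derivative numerically. It assumes the normalization factor equals 1 and the exponential kernel exp(-α(t-s)/(1-α)), so that the derivative is 1/(1-α) times the integral of ν'(s) against that kernel from the lower terminal to t; this form may differ in detail from the displayed definition of [Citation5]. The function names, the lower terminal `t0` and the number of quadrature nodes `n` are hypothetical choices made for the example.

```python
import math

def caputo_fabrizio_derivative(dv, t, alpha, t0=0.0, n=2000):
    """Approximate the Caputo-Fabrizio derivative of order alpha at time t.

    dv    : callable returning the first derivative v'(s)
    alpha : fractional order, assumed to satisfy 0 < alpha < 1
    t0    : lower terminal of the integral (assumed, hypothetical name)
    n     : number of trapezoidal sub-intervals (illustrative choice)
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    if t <= t0:
        return 0.0
    step = (t - t0) / n
    rate = alpha / (1.0 - alpha)

    def integrand(s):
        # v'(s) times the smooth (non-singular) exponential kernel
        return dv(s) * math.exp(-rate * (t - s))

    # composite trapezoidal rule on [t0, t]
    total = 0.5 * (integrand(t0) + integrand(t))
    for k in range(1, n):
        total += integrand(t0 + k * step)
    return total * step / (1.0 - alpha)

def cf_derivative_of_identity(t, alpha):
    """Closed form for v(t) = t with t0 = 0 under the same assumptions."""
    return (1.0 - math.exp(-alpha * t / (1.0 - alpha))) / alpha
```

For v(t) = t the quadrature can be compared against the closed form, which is a convenient sanity check for the kernel and the prefactor.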

In problem (1), when

are known, the problem is well-posed. If

is unknown, using the additional condition

we can get the space-dependent source term

. But in practice,

can only be obtained by measurement, so there will be errors between the measured data and the exact data.

Assumption 1.1

We assume that the measured data function and the exact data function

satisfy

(2)

(2) where the constant

represents a noise level and

denotes the

norm.

Definition 1.1

Without loss of generality, admit a family of eigenvalues

, with the function set

constituting an orthonormal basis in space

.

Denote the eigenvalues of the fractional power operators as

and the corresponding eigenfunctions as

, then we can get

According to the result in [Citation3], the solution of problem (1) is

(3)

(3) where

,

, and

are the Fourier coefficients. Applying additional condition

and combining with formula (Equation3

(3)

(3) ), we can get

(4)

(4) Moreover, we have

(5)

(5) where

, and

are the Fourier coefficients.

Let , then

Therefore, (4) and (5) can be rewritten as

(6)

(6)

(7)

(7) where

are the Fourier coefficients.

Consequently, we obtain the exact solution of the source term as follows

(8)

(8) Due to

, then

. Consequently,

Therefore, according to (2), we have

(9)

The remainder of this paper is organized as follows. In Section 2, we analyse the uniqueness of the solution and the ill-posedness of problem (1), and give the conditional stability result. In Section 3, we first give some preliminary results and then use them to obtain the optimal error bound of problem (1). In Section 4, we use the modified quasi-boundary regularization method to solve the inverse problem (1) and obtain the convergence error estimates between the regularized solution and the exact solution under the a priori and a posteriori regularization parameter selection rules. In Section 5, we present a second regularization method, the Landweber iterative regularization method, to deal with this ill-posed problem. In Section 6, we compare the error estimates obtained by the two regularization methods for the inverse problem (1). In Section 7, three numerical examples with different properties are given. In Section 8, we give a brief summary of the whole paper.

2. Conditional stability results, uniqueness and ill-posed analysis for inverse problem (1)

In this section, we discuss the uniqueness of the solution, the ill-posedness and the conditional stability of the unknown source identification problem (1).

Definition 2.1

For any and

, defined

(10)

(10) where

is the inner product in

. Then

is a Hilbert space with the norm

(11)

(11)

The lemmas and theorems given below provide the conditional stability results and some auxiliary conclusions, which help us analyse the ill-posedness of inverse problem (1). They are used throughout the paper.

Lemma 2.1

For any and

, we obtain

(12)

(12) where

Proof.

Due to , according to function

is an increasing function, we can get

(13)

Combining (13) and

, we obtain

If

, we have

where

.

If , we have

where

.

Therefore, we can get

where

The proof of Lemma 2.1 is completed.

Theorem 2.1

Let satisfy

for all

. Then the solution

and

of inverse problem (1) is unique.

Proof.

According to , if

, we can get

According to Lemma 2.1, we have

(14)

(14) According to

and

, we know

(15)

(15) where

.

From (Equation7(7)

(7) ), if

, that is

, we have

Further, according to (Equation3

(3)

(3) ), we know

The proof of Theorem 2.1 is completed.

Due to ,

, i.e.

. Then it can be concluded from formula (8) that a small perturbation of

will cause a large change in

, so the problem is ill-posed. Next, we give the conditional stability result for the source term

. In order to discuss the convergence of errors, we make the following assumption:

Assumption 2.1

It is assumed that the source term satisfies the following a priori bound condition

(16)

(16) where

, both E and p are positive constants.

Theorem 2.2

Suppose the source term satisfies the a priori bound condition (16). Then the following result holds

(17)

(17) where

.

Proof.

According to the formula for the solution of source term and using the Hölder inequality, we can get

(18)

(18) According to Lemma 2.1, (Equation15

(15)

(15) ) and (Equation16

(16)

(16) ), we obtain

(19)

Substituting formula (19) into formula (18), we have

Therefore,

where

. We have finished the proof of Theorem 2.2.

3. Preliminary results and optimal error bound of the problem (1)

3.1. Preliminaries

Suppose that X and Y are infinite dimensional Hilbert spaces. Let K: X → Y be a linear injective operator between X and Y, where K has a non-closed range

.

Consider the following operator equation [Citation38–42]:

(20)

All operators

can be seen as a special method for solving the inverse problem (20). The approximate solution of operator equation (20) is expressed by

. Assume that

is the noise data obtained by measurement and satisfies

(21)

(21) where δ is the noise level.

Let M be a bounded set. For , based on assumption (21), the worst case error

of identifying x in the set M from

is defined as follows [Citation39–41,Citation43]:

(22)

(22) The definition of the optimal error bound (or the best possible error bound) is the infimum of all mappings

:

(23)
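For orientation, in the framework of the cited references the worst case error and the optimal error bound are commonly written in the following form. The symbols used here (an arbitrary reconstruction mapping R from Y to X, the exact solution x, the noisy data y^δ, and the names Δ and ω) are assumed notation and may differ in detail from the displayed formulas (22) and (23).

```latex
\Delta(\delta, R) := \sup\bigl\{ \|R y^{\delta} - x\| \;:\; x \in M,\ y^{\delta} \in Y,\ \|Kx - y^{\delta}\| \le \delta \bigr\},
\qquad
\omega(\delta) := \inf_{R} \Delta(\delta, R).
```

In this notation the worst case error measures how badly a fixed method R can fail over all admissible solutions and data, and the optimal error bound is the smallest such worst case error over all methods.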

Next, let us review some optimality results.

Set is considered as a set of elements that satisfy the source condition, i.e.

(24)

where the operator function

is defined by the spectral representation [Citation41,Citation44,Citation45]

(25)

In (25),

is the spectral decomposition of operator

,

is the spectral family of operator

, a denotes a constant and satisfies

.

is a strictly convex function, and

,

. The spectrum of operator

is denoted by

.

A method is called [Citation38,Citation46]:

Optimal on the set M if

.

Order optimal on the set M if

with

.

In order to obtain the optimal error bound (or the best possible error bound) for the worst case error defined in formula (22), we need some assumptions on the function φ. Suppose the function φ in formula (24) satisfies the following assumptions:

Assumption 3.1

([Citation38,Citation41,Citation45]) In formula (24),

is a continuous function with the following properties:

.

φ is strictly monotonically increasing on

.

is convex.

According to the above assumptions, the following theorem estimates give the general formula for finding the optimal error bound.

Theorem 3.1

[Citation38,Citation41,Citation43,Citation45]

Let the source set be given by formula (24), and let Assumption 3.1 hold and

, then

(26)

(26)

From Theorem 3.1, we can get the best possible error bound. Although this result is optimal, two difficulties may arise when it is applied to a concrete problem. First, the convexity of ρ is hard to verify and is sometimes violated. Second, even for small may not belong to

. We will use the following two conclusions to deal with these two difficulties.

Lemma 3.1

[Citation47]

If ρ is not necessarily convex, we have

for

.

for

.

Lemma 3.2

[Citation47]

Let be compact and let

be the ordered eigenvalues of

. If there exists a constant k>0 such that

for all

, then

for

where

.

3.2. Optimal error bound for problem (1)

In this section, we study the optimal error bound of problem (1). We only discuss the identification of the space-dependent source term

, where

,

is as follows

(27)

(27) where

is a norm in Sobolev space.

Problem (1) is transformed into an operator equation by the operator

:

(28)

(28) From

we have

(29)

(29) where

.

So, we obtain

For the convenience of subsequent discussion, in this section, let

. So,

can be written as follows

(30)

(30) where

are the eigenvalues of the operator L.

Due to then

(31)

Now let us rewrite (27) in the form (24), which gives the following proposition:

Proposition 3.1

Considering the operator equation in formula (28), the set M given in formula (27) is equivalent to the general source set:

(32)

(32) where

is given in the form of the following parameters

(33)

(33)

Proof.

By a priori bound condition we get the following inequality

(34)

(34) From (Equation32

(32)

(32) ) and (Equation34

(34)

(34) ), we obtain the expression of operator function

:

(35)

(35) Combining with (Equation31

(31)

(31) ), we obtain the function

in (Equation33

(33)

(33) ).

We have finished the proof of Proposition 3.1.

Proposition 3.2

The function defined by (Equation33

(33)

(33) ) is continuous and has the following properties:

.

φ is a strictly monotonically increasing function.

is a strictly monotonically increasing function and expressed in the form of parameters:

(36)

(36)

is a strictly monotonically increasing function and expressed in the form of parameters:

(37)

(37)

The inverse function

satisfies:

(38)

(38) where

Proof.

Conclusions (i), (ii), (iii) and (iv) are obvious, so we only prove conclusion (v). Formula (38) can be proved by showing

, where

We have finished the proof of Proposition 3.2.

Theorem 3.2

Suppose the noise assumption (9) and (27) hold. Then the optimal error bounds of the inverse problem (1) are as follows:

If

and

, we obtain

(39)

(39)

If

and

, we obtain

(40)

(40)

Proof.

From (38), we have

(41)

Consequently, according to Lemma 3.1, we obtain conclusion (i).

According to (33), we have

(42)

(42) In Lemma 3.2, take

, we can get conclusion (ii). We have finished the proof of Theorem 3.2.

In the next section, we introduce the first regularization method for the inverse problem (1), namely the modified quasi-boundary regularization method.

4. A modified quasi-boundary regularization method and convergence estimation

In this section, we first give the regularization solution of the modified quasi-boundary regularization method corresponding to the exact solution of the inverse problem (1). Secondly, Hölder-type convergence error estimates are given under a priori and a posteriori regularization parameter selection rules.

We can transform the ill-posed problem (1) into the following integral equation for analysis:

where

Obviously kernel function

, so

is a self-adjoint operator.

Since the eigenfunctions form an orthonormal basis in

, it is easy to see that

(43)

are the singular values of operator K.

The main idea of the standard quasi-boundary regularization method is to add a penalty term to the final data, that is, , from which the regularization solution of the inverse problem can be obtained. In this paper, in order to solve the inverse problem (1), we use a modified quasi-boundary regularization method, i.e. replacing

with

. The regularization solution

can be obtained by the following system of equations:

(44)

(44) where

is a regularization parameter.

Next, we will show that is the regularization solution of the source term

in problem (1). By separation of variables, we obtain the following form of

(45)

(45) where

According to

we obtain

(46)

(46) where

.

Thus, according to and (Equation46

(46)

(46) ), we have

(47)

(47) where

.

Consequently, we have

(48)

The regularization solution with exact measured data is as follows:

(49)

(49)

4.1. The error convergent estimate with an a priori regularization parameter choice rule

Theorem 4.1

Suppose satisfy

,

. Assume that the a priori bound condition (16) and the noise assumption (9) hold, and that the exact solution of problem (1) is given by (6). Let the regularization solution of the modified quasi-boundary regularization method be given by (48). Then the following Hölder-type convergence error estimates hold:

If 0<p<4 and choose

, we obtain the following error estimate result:

(50)

(50)

If

and choose

, we obtain the following error estimate result:

(51)

(51)

where

.

Proof.

By the triangle inequality, we know

(52)

We first estimate the first term in inequality (52). According to (48), (49) and (9), we obtain the following result

(53)

(53) where

.

Using Lemma 2.1 and (Equation15(15)

(15) ), we have

Consequently, according to (Equation9

(9)

(9) ), we can get

(54)

Next, we estimate the second term in formula (52).

From (49), we can deduce that

(55)

(55) By a priori bound condition (Equation16

(16)

(16) ), we have

where

.

Using Lemma 2.1 and (Equation15(15)

(15) ), we can get the following result

Let

where

.

If 0<p<4, due to and

.

Consequently,

where

, and

satisfies

.

According to the expression of , we have

i.e.

Then,

(56)

(56) If

,

, we have

(57)

According to (56) and (57), we obtain

(58)

(58) where

Consequently,

(59)

The regularization parameter μ is selected as follows:

(60)

Combining (52), (54), (59) and (60), we can get the convergence error estimate

(61)

(61) We have finished the proof of Theorem 4.1.

4.2. The error convergent estimate with an a posteriori parameter choice rule

In this section, we give an a posteriori selection rule for the regularization parameter, that is, we choose the regularization parameter μ in the regularization solution by Morozov's discrepancy principle [Citation43]. Using the conditional stability result of Theorem 2.2, we obtain the convergence error estimate between the regularization solution (48) and the exact solution.

Morozov's discrepancy principle for our case is to find μ such that

(62)

(62) where

is constant.

Lemma 4.1

Let then the following properties are established

is a continuous function;

is a strictly increasing function over

Proof.

According to

it can be seen that these four properties are obvious.

Remark 4.1

By Lemma 4.1, if , the parameter μ selected by formula (62) is unique.

Lemma 4.2

If , we can get

(63)

(63)

where

Proof.

According to (Equation62(62)

(62) ) and (Equation9

(9)

(9) ), we have

By a priori bound condition (Equation16

(16)

(16) ), we obtain

where

.

According to , we have

(64)

(64) where

.

Using Lemma 2.1 and (Equation64(64)

(64) ) to reduce

, we obtain

Let

where

.

If 0<p<2, let .

According to the expression of , we have

Let

, we can get

Then,

(65)

(65) If

,

due to

, so

(66)

(66) Consequently,

(67)

(67) Then,

Therefore, we have

(68)

(68) where

We have finished the proof of Lemma 4.2.

Theorem 4.2

Suppose satisfy

,

. Let the a posteriori regularization parameter be selected by Morozov's discrepancy principle (62), and assume that the a priori bound condition (16) and the noise assumption (9) hold. Then the following two results hold:

If 0<p<2, we obtain the following error estimation result:

(69)

(69)

If

, we obtain the following error estimation result:

(70)

(70)

Proof.

We prove the conclusion through the following three steps:

Firstly, by triangle inequality, we have

(71)

Secondly, we estimate the second term in formula (71). From (55), we can get

From (9) and (62), we have

By the a priori bound condition (16), we know

By Theorem 2.2, we deduce that

(72)

Finally, we estimate the first term in formula (71). According to the estimate of the first term in the proof of Theorem 4.1, we have

(73)

(73) According to Lemma 4.2, we can get

(74)

From (71), (72) and (74), we have

(75)

(75) We have finished the proof of Theorem 4.2.

In the next section, we propose the second regularization method for solving inverse problem (1), namely, the Landweber iterative regularization method. The convergence error estimates are given under both a priori and a posteriori regularization parameter selection rules.

5. Landweber iterative regularization method and convergence analysis

We now construct the Landweber iterative regularization solution for inverse problem (1). If we replace the equation Kf = h with the operator equation

, we can obtain the regularization solution

. The iterative scheme is as follows:

(76)

where a is called the relaxation factor, I is the identity operator, m is the number of iteration steps,

is called the regularization parameter and satisfies

.
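The following minimal sketch illustrates how such an iteration behaves when the operator is diagonal in its singular system. It assumes the standard Landweber recursion, in which each step adds a times the adjoint applied to the current residual, started from the zero initial guess, and it assumes that the relaxation factor satisfies 0 < a·σ² < 1 for every singular value σ. The function names and the sample values are hypothetical and are not taken from the paper.

```python
def landweber_coefficients(sigma, h, a, m):
    """Run m Landweber steps on the spectral coefficients, starting from zero.

    sigma : singular values of the (assumed diagonalized) operator K
    h     : spectral coefficients of the data
    a     : relaxation factor, assumed to satisfy 0 < a * max(sigma)**2 < 1
    m     : number of iteration steps (acts as the regularization parameter)
    """
    f = [0.0] * len(sigma)
    for _ in range(m):
        # f <- f + a * K^*(h - K f), written coefficient by coefficient
        f = [fn + a * s * (hn - s * fn) for fn, s, hn in zip(f, sigma, h)]
    return f

def landweber_filter(sigma, h, a, m):
    """Closed form of the same iterate: (1 - (1 - a*sigma^2)^m) / sigma * h."""
    return [(1.0 - (1.0 - a * s * s) ** m) / s * hn for s, hn in zip(sigma, h)]
```

Summing the geometric series generated by the recursion gives the closed-form filter, so the two functions agree up to rounding. Small singular values are damped strongly for small m, which is the regularizing effect of stopping the iteration early.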

Let operator be as follows:

So, by direct calculation, we obtain

By the singular values (Equation43

(43)

(43) ) of the operator

and (Equation76

(76)

(76) ), we obtain the Landweber iterative regularization solution

(77)

(77) where

.

The regularization solution with exact measured data is as follows

(78)

Next, we discuss the a priori and a posteriori convergence error estimates for the Landweber iterative regularization method. The number of iteration steps

must be known. Based on two kinds of regularization parameter selection rules, we give the Hölder-type convergence estimates between the regularization solution and the exact solution, respectively.

5.1. The convergent error estimate with an a priori parameter choice rule

Theorem 5.1

Suppose satisfy

,

. Assume that the a priori bound condition (16) and the noise assumption (9) hold, and that the exact solution of problem (1) is given by (6). The corresponding Landweber iterative regularization solution

is given by formula (77). If we select the regularization parameter

, where

(79)

(79) then we obtain the following convergence error estimate

(80)

(80) where

denotes the largest integer less than or equal to b and

is positive constant.

Proof.

By the triangle inequality, we have

(81)

On the one hand, from (77), (78) and (9), we obtain

where

.

Because is the singular value of operator K, and

, so we obtain

.

If 0<x<1, we have inequality

Consequently,

Applying Bernoulli inequality

, we can get

i.e.

So, we have

(82)

On the other hand, by Lemma 2.1, (15) and (78), we have

where

.

According to Lemma 2.1 and (Equation15(15)

(15) ), we have

Let

,

.

Assume satisfies

, according to the

expression, we can get

Let

, we have

So,

then,

Therefore,

(83)

Combining (79), (81), (82) and (83), we obtain

where

. The proof of Theorem 5.1 is finished.

5.2. The convergent error estimate with an a posteriori parameter choice rule

In this section, based on the Morozov discrepancy principle [Citation43], we give the a posteriori regularization parameter selection rule and obtain the convergence error estimate under the a posteriori regularization parameter selection rule.

Let be a given constant. The method of selecting the a posteriori regularization parameter

is that the iteration stops when

satisfies

(84)

(84) for the first time, where

is constant.
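The stopping rule can be sketched as follows for an operator that is diagonal in its singular system. The sketch assumes that the criterion is the usual discrepancy test, namely that the iteration stops at the first step whose residual norm is at most τδ with a constant τ > 1, and that the initial residual exceeds this level. All names and sample values are hypothetical.

```python
import math

def landweber_step(f, sigma, h_delta, a):
    """One Landweber update per spectral coefficient: f <- f + a*sigma*(h - sigma*f)."""
    return [fn + a * s * (hn - s * fn) for fn, s, hn in zip(f, sigma, h_delta)]

def residual_norm(f, sigma, h_delta):
    """Discrete l2 norm of K f - h_delta for a diagonal operator K."""
    return math.sqrt(sum((s * fn - hn) ** 2 for fn, s, hn in zip(f, sigma, h_delta)))

def landweber_discrepancy(sigma, h_delta, a, delta, tau=1.1, max_iter=100000):
    """Return (m, f), where m is the first step with residual <= tau * delta.

    delta    : noise level of the data
    tau      : constant larger than 1 (assumed form of the stopping rule)
    max_iter : safety cap on the number of steps (illustrative choice)
    """
    f = [0.0] * len(sigma)
    for m in range(1, max_iter + 1):
        f = landweber_step(f, sigma, h_delta, a)
        if residual_norm(f, sigma, h_delta) <= tau * delta:
            return m, f
    raise RuntimeError("discrepancy level not reached within max_iter steps")
```

Because the residual of this iteration decreases monotonically in m when 0 < a·σ² < 1, the first index at which the test succeeds is well defined, which is why the rule selects a unique stopping step.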

Lemma 5.1

Let so we can get the following properties

is a continuous function;

is a strictly decreasing function for any

Proof.

We can easily get these properties by formula

Remark 5.1

By Lemma 5.1, the regularization parameter selected by formula (84) is unique.

Lemma 5.2

For a given constant , it is assumed that the a priori bound condition (16), the regularization solution of inverse problem (1) and the noise assumption (9) hold. If the regularization parameter is chosen based on Morozov's discrepancy principle (84), then the regularization parameter

satisfies the inequality

(85)

(85)

Proof.

Owing to , combining (Equation9

(9)

(9) ) and (Equation77

(77)

(77) ), we obtain

(86)

(86) According to (Equation16

(16)

(16) ), we have

where

.

According to Lemma 2.1, (Equation15(15)

(15) ) and (Equation64

(64)

(64) ), we have

(87)

(87) Let

,

.

Assume satisfies

, according to the

expression, we have

Let

, we obtain

So

Consequently,

Therefore, we obtain

(88)

(88) Combining (Equation86

(86)

(86) ) and (Equation88

(88)

(88) ), we obtain

i.e.

The proof of Lemma 5.2 is completed.

Lemma 5.3

According to (Equation9(9)

(9) ) and (Equation84

(84)

(84) ), we have

(89)

(89)

Proof.

We note

i.e.

(90)

(90) The proof of Lemma 5.3 is completed.

Theorem 5.2

Assume satisfy

,

. Let

be the Landweber iterative regularization solution corresponding to the exact solution

of inverse problem (1). Assume that the noise assumption (9) and the a priori bound condition (16) hold, and that the regularization parameter

satisfies the iteration stopping criterion (84). Then we obtain the following convergence error estimate:

(91)

(91) where

is positive constant.

Proof.

According to the triangle inequality, we obtain

(92)

Using Lemma 5.2 and (82), we have

(93)

According to (16), we have

Moreover, according to Theorem 2.2 and Lemma 5.3, we have

(94)

Combining (92), (93) and (94), we obtain

where

.

The proof of Theorem 5.2 is completed.

6. Analysis and comparison of the optimal approximation

In Sections 4 and 5, we use the modified quasi-boundary regularization method and the Landweber iterative regularization method, respectively, to obtain the regularization solution of problem (1). Based on the a priori bound condition (16), the a priori and a posteriori error estimates are obtained under different regularization parameter selection rules. Next, we compare the convergence error estimates of the two regularization methods.

For some regularization methods, the convergence error estimates do not hold for all p, but only for p in a restricted range. This phenomenon is called the saturation effect. If a regularization method exhibits the saturation effect, the order of the error estimate between the regularized solution and the exact solution cannot improve further as the smoothness assumed of the solution increases.
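To make the notion concrete, the following worked bounds contrast two classical filters. This is an illustration under stated assumptions, not the estimates derived in this paper: we assume a compact operator K with singular values σ in (0, ‖K‖], a source condition of order p, the classical Tikhonov filter with parameter μ > 0 (used here only as a familiar example of a saturating method; the filter of the modified quasi-boundary method may differ), and the Landweber filter with relaxation factor 0 < a < 1/‖K‖² after m ≥ 1 steps. The symbols μ, σ, a, m and c_p are introduced for this sketch only.

```latex
% Approximation error (bias) factor of classical Tikhonov regularization:
\sup_{0<\sigma\le\|K\|} \frac{\mu\,\sigma^{p}}{\mu+\sigma^{2}}
\;\le\;
\begin{cases}
c_p\,\mu^{p/2}, & 0<p<2,\\[2pt]
\|K\|^{p-2}\,\mu, & p\ge 2,
\end{cases}
\qquad
c_p=\frac{2-p}{2}\Bigl(\frac{p}{2-p}\Bigr)^{p/2}.

% Approximation error (bias) factor of Landweber iteration,
% using (1-a\sigma^{2})^{m}\le e^{-a m \sigma^{2}}:
\sup_{0<\sigma\le\|K\|} (1-a\sigma^{2})^{m}\,\sigma^{p}
\;\le\;
\Bigl(\frac{p}{2ae}\Bigr)^{p/2} m^{-p/2},
\qquad p>0 .
```

In this classical setting the Tikhonov bound stops improving beyond p = 2, which is the saturation effect, whereas the Landweber bound retains the factor m^{-p/2} for every p > 0.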

We take (16) as the a priori bound condition (where p > 0). From Tables 1 and 2 and Theorem 3.2, under both the a priori and the a posteriori parameter choice, the modified quasi-boundary regularization method produces a saturation effect, whereas the Landweber iterative regularization method does not. Accordingly, the error estimates obtained by the Landweber iterative regularization method are order-optimal.

Table 1. A priori convergence error estimates of two regularization methods.

Table 2. A posteriori convergence error estimates of two regularization methods.

From the above analysis, we know that under the a priori bound condition (16), the error estimate obtained by the Landweber iterative regularization method is order-optimal.

7. Numerical experiments

In this section, we illustrate the stability and effectiveness of the two regularization methods and the superiority of the Landweber iterative regularization method through several numerical examples with different properties.

The analytic solution of problem (1) is difficult to obtain. Therefore, we construct the final-time data

by solving the following well-posed problem

(95)

with the given data

,

.

In general, we assume and

. In the finite difference algorithm, we make the grid size of space variable and time variable be

and

respectively. In space interval

, grid points are marked as

, and in time interval

, grid points are marked as

,

. For the function u on the grid point, we can approximately express it as

.

To simplify the calculation, let . Then the space has the following discrete form:

(96)

The Caputo-Fabrizio fractional derivative is approximated by [Citation48]:

(97)

where

,

and

. Using the initial and boundary conditions in (95), we obtain a numerical solution of the well-posed problem (95) from the finite difference scheme

(98)

Denote

, then according to scheme (98), we obtain the following iterative scheme

where

,

.

A is a tridiagonal matrix given by

For the regularization problem (44) corresponding to the modified quasi-boundary regularization method, we use the above finite difference scheme to discretize the first equation in (44) and obtain

where

with

is the approximate value of

and

with

is the approximate value of regularization solution

.

Moreover, we can infer the following relationship

(99)

where D is a matrix.

In addition, according to the fourth formula in (44), we have

(100)

Writing (100) in matrix form, we have

(101)

where

, and Y is a tridiagonal matrix given by

From (99) and (101), the unknown source vector satisfies

(102)

For the Landweber iterative regularization method, as for the modified quasi-boundary regularization method, solving inverse problem (1) numerically requires a matrix K such that

holds. The matrix K satisfies the following conditions:

where

Therefore, the regularization solution is obtained by the following formula:

By adding a random perturbation to the data

, the noisy data are obtained,

where

generates random numbers with mean 0 and variance 1, and ε represents the relative error level. The absolute error level is given by

(103)

The a priori regularization parameter depends on the smoothness condition of the exact solution, which is generally difficult to obtain. Therefore, for both regularization methods, the a posteriori regularization parameter selection rule is used to demonstrate their stability and effectiveness. By direct calculation, we obtain

and

for

. Let T = 1,

,

, p = 2,

. Choosing M = 100, N = 40, we give three examples of different properties.
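The noise model described above can be sketched as follows. This is a minimal illustration under assumptions: the perturbation is taken to be additive, `g + eps * randn`, and the absolute error level is taken to be the discrete root-mean-square difference between the noisy and the exact data. Both forms, as well as the names `add_noise`, `g`, `eps` and `rng`, are assumptions of this sketch and may differ from the expressions used in (103).

```python
import numpy as np


def add_noise(g, eps, rng=None):
    """Perturb data g with standard normal noise scaled by eps (illustrative sketch).

    Returns the noisy data and an absolute error level delta, here assumed to be
    the root-mean-square difference over the grid points.
    """
    rng = np.random.default_rng() if rng is None else rng
    g = np.asarray(g, dtype=float)
    # Assumed additive model: zero-mean, unit-variance noise scaled by eps.
    g_delta = g + eps * rng.standard_normal(g.shape)
    # Assumed absolute error level: discrete RMS of the perturbation.
    delta = np.sqrt(np.mean((g_delta - g) ** 2))
    return g_delta, delta
```

With M = 100 the spatial grid has 101 points, so `g` would be a vector of length 101; passing a seeded generator such as `np.random.default_rng(0)` makes the experiment reproducible.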

Example 7.1

Consider smooth function

Example 7.2

Consider piecewise smooth function

Example 7.3

Consider non-smooth function

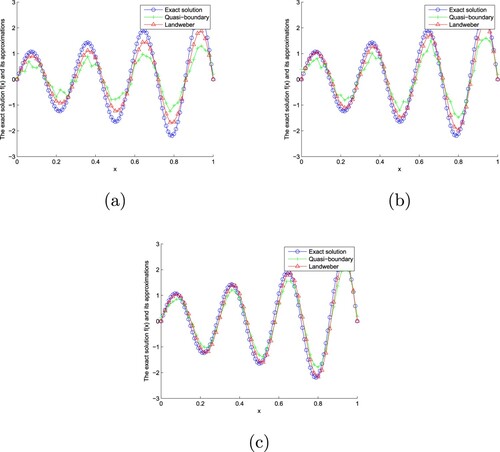

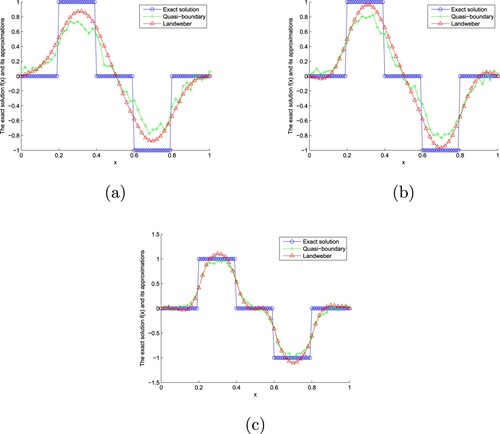

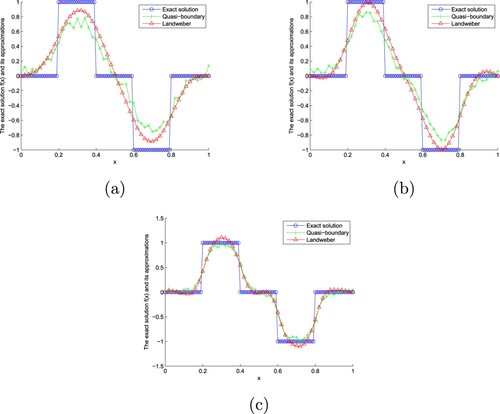

Figure shows the exact solution and its approximation solution under a modified quasi-boundary regularization method and Landweber iterative regularization method for at different error level

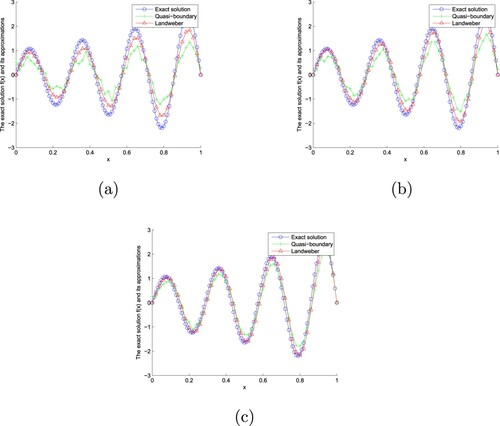

with Example 7.1. Figure shows the exact solution and its approximation solution under a modified quasi-boundary regularization method and Landweber iterative regularization method for

at different error level

with Example 7.1. Table shows the comparison of absolute error of two regularization methods of Example 7.1 for different α with

. Table shows the comparison of two regularization methods of the CPU time of Example 7.1 for different α with

.

Figure 1. The exact solution and the approximate solutions of the two regularization methods for Example 7.1; panels (a), (b) and (c) correspond to different error levels ε.

Figure 2. The exact solution and the approximate solutions of the two regularization methods for Example 7.1; panels (a), (b) and (c) correspond to different error levels ε.

Table 3. Absolute error of the two regularization methods for Example 7.1 for different α.

Table 4. CPU time of the two regularization methods for Example 7.1 for different α.

For both regularization methods, Figures 1 and 2 show that the smaller the error level ε, the better the fit. Moreover, the Landweber iterative regularization method fits the exact solution better than the modified quasi-boundary regularization method. Table 3 shows that the smaller α and ε are, the smaller the absolute error is, and that the error of the Landweber iterative regularization method is smaller than that of the modified quasi-boundary regularization method. Table 4 shows that the CPU time grows as α increases and ε decreases, and that the modified quasi-boundary regularization method requires less CPU time than the Landweber iterative regularization method.

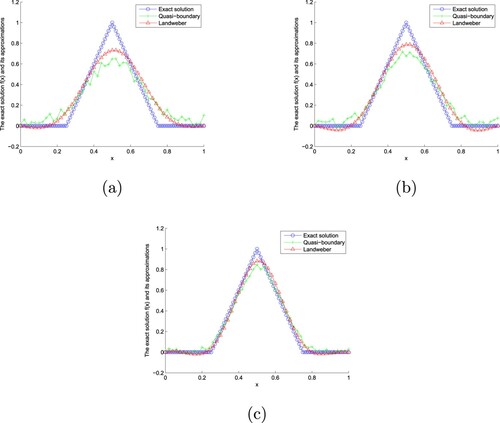

Figure shows the exact solution and its approximation solution under a modified quasi-boundary regularization method and Landweber iterative regularization method for at different error level

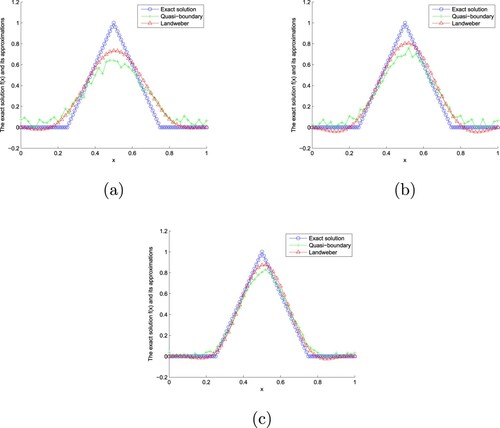

with Example 7.2. Figure shows the exact solution and its approximation solution under a modified quasi-boundary regularization method and Landweber iterative regularization method for

at different error level

with Example 7.2. Table shows the comparison of absolute error of two regularization methods of Example 7.2 for different α with

. Table shows the comparison of two regularization methods of the CPU time of Example 7.2 for different α with

.

Figure 3. The exact solution and the approximate solutions of the two regularization methods for Example 7.2; panels (a), (b) and (c) correspond to different error levels ε.

Figure 4. The exact solution and the approximate solutions of the two regularization methods for Example 7.2; panels (a), (b) and (c) correspond to different error levels ε.

Table 5. Absolute error of the two regularization methods for Example 7.2 for different α.

Table 6. CPU time of the two regularization methods for Example 7.2 for different α.

Figures 3 and 4 show that the smaller ε is, the better the fit. In addition, the Landweber iterative regularization method is more effective than the modified quasi-boundary regularization method. Table 5 shows that the smaller α and ε are, the smaller the absolute error is, and that the error of the Landweber iterative regularization method is smaller than that of the modified quasi-boundary regularization method. Table 6 shows that the CPU time grows as α increases and ε decreases, and that the modified quasi-boundary regularization method requires less CPU time than the Landweber iterative regularization method.

Figures 5 and 6 show the exact solution and its approximations by the modified quasi-boundary regularization method and the Landweber iterative regularization method for Example 7.3 at different error levels ε, each figure for a different parameter setting. Table 7 compares the absolute errors of the two regularization methods for Example 7.3 for different α, and Table 8 compares their CPU times.

Figure 5. The exact solution and the approximate solutions of the two regularization methods for Example 7.3; panels (a), (b) and (c) correspond to different error levels ε.

Figure 6. The exact solution and the approximate solutions of the two regularization methods for Example 7.3; panels (a), (b) and (c) correspond to different error levels ε.

Table 7. Absolute error of the two regularization methods for Example 7.3 for different α.

Table 8. CPU time of the two regularization methods for Example 7.3 for different α.

Figures 5 and 6 show that the smaller ε is, the better the fit. In addition, the Landweber iterative regularization method fits the exact solution better than the modified quasi-boundary regularization method. Table 7 shows that the absolute error decreases as α increases and as ε decreases, and that the error of the Landweber iterative regularization method is smaller than that of the modified quasi-boundary regularization method. Table 8 shows that the CPU time grows as α increases and ε decreases, and that the modified quasi-boundary regularization method requires less CPU time than the Landweber iterative regularization method.

The three examples above, which have different properties, show that for given α and ε the absolute error of the Landweber iterative regularization method is smaller than that of the modified quasi-boundary regularization method. Moreover, Figures 1–6 show that the Landweber iterative regularization method fits the exact solution better than the modified quasi-boundary regularization method. Therefore, the Landweber iterative regularization method is more effective than the modified quasi-boundary regularization method. In terms of CPU time, however, the modified quasi-boundary regularization method is faster than the Landweber iterative regularization method.

8. Conclusion

In this paper, the problem of unknown source identification for the space-time fractional diffusion equation is studied. In this equation, the time fractional derivative is a new fractional derivative, the Caputo-Fabrizio fractional derivative. We show that this problem is ill-posed. Under an a priori assumption, the optimal error bound of the problem under the source condition is obtained. In addition, we solve the inverse problem (1) by two different regularization methods. The convergence error estimates of the two regularization methods are obtained under a priori and a posteriori regularization parameter selection rules. In contrast to those of the modified quasi-boundary regularization method, the convergence error estimates obtained by the Landweber iterative regularization method are shown to be order-optimal. Finally, numerical examples with different properties are given to demonstrate the stability and effectiveness of the two regularization methods, together with their respective advantages and disadvantages, in dealing with the inverse problem.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Caputo M, Fabrizio M. A new definition of fractional derivative without singular kernel. Progr Fract Differ Appl. 2015;1(2):73–85.

- Losada J, Nieto JJ. Properties of a new fractional derivative without singular kernel. Progr Fract Differ Appl. 2015;1(2):87–92.

- Tuan NH, Zhou Y. Well-posedness of an initial value problem for fractional diffusion equation with Caputo-Fabrizio derivative. Chaos Soliton Fract. 2020;138:109966.

- Zheng XC, Wang H, Fu HF. Well-posedness of fractional differential equations with variable-order Caputo-Fabrizio derivative. Chaos Soliton Fract. 2020;138:109966.

- Al-Salti N, Karimov E, Kerbal S. Boundary-value problems for fractional heat equation involving Caputo-Fabrizio derivative. New Trends Math Sci. 2016;4(4):79–89.

- Baleanu D, Jajarmi A, Mohammadi H, et al. A new study on the mathematical modelling of human liver with Caputo-Fabrizio fractional derivative. Chaos Soliton Fract. 2020;134:109705.

- Al-khedhairi A. Dynamical analysis and chaos synchronization of a fractional-order novel financial model based on Caputo-Fabrizio derivative. Eur Phys J Plus. 2019;134(10):532.

- Al-Salti N, Karimov E, Sadarangani K. On a differential equation with Caputo-Fabrizio fractional derivative of order 1<β≤2 and application to mass-spring-damper system. Progr Fract Differ Appl. 2016;2(4):257–263.

- Shi JK, Chen MH. A second-order accurate scheme for two-dimensional space fractional diffusion equations with time Caputo-Fabrizio fractional derivative. Appl Numer Math. 2020;151:246–262.

- Liu ZG, Cheng AJ, Li XL. A second order Crank-Nicolson scheme for fractional Cattaneo equation based on new fractional derivative. Appl Math Comput. 2017;311:361–374.

- Wang JG, Wei T, Zhou YB. Tikhonov regularization method for a backward problem for the time-fractional diffusion equation. Appl Math Model. 2013;37(18–19):8518–8532.

- Yang F, Zhang P, Li XX, et al. Tikhonov regularization method for identifying the space-dependent source for time-fractional diffusion equation on a columnar symmetric domain. Adv Differ Equ. 2020;2020:128.

- Yang F, Fu CL, Li XX. A modified Tikhonov regularization method for the Cauchy problem of Laplace equation. Acta Math Sci. 2015;35(6):1339–1348.

- Xiong XT, Xue X. A fractional Tikhonov regularization method for identifying a space-dependent source in the time-fractional diffusion equation. Appl Math Comput. 2019;349:292–303.

- Yang F, Pu Q, Li XX. The fractional Tikhonov regularization methods for identifying the initial value problem for a time-fractional diffusion equation. J Comput Appl Math. 2020;380:112998.

- Wang JG, Wei T, Zhou Y. Optimal error bound and simplified Tikhonov regularization method for a backward problem for the time-fractional diffusion equation. J Comput Appl Math. 2015;279(18–19):277–292.

- Feng XL, Eldén L. Solving a Cauchy problem for a 3D elliptic PDE with variable coefficients by a quasi-boundary-value method. Inverse Probl. 2014;30(1):015005.

- Yang F, Wang N, Li XX, et al. A quasi-boundary regularization method for identifying the initial value of time-fractional diffusion equation on spherically symmetric domain. J Inverse Ill-Posed Probl. 2019;27(5):609–621.

- Yang F, Sun YR, Li XX, et al. The quasi-boundary regularization value method for identifying the initial value of heat equation on a columnar symmetric domain. Numer Algor. 2019;82(2):623–639.

- Yang F, Zhang P, Li XX. The truncation method for the Cauchy problem of the inhomogeneous Helmholtz equation. Appl Anal. 2019;98(5):991–1004.

- Yang F, Pu Q, Li XX, et al. The truncation regularization method for identifying the initial value on non-homogeneous time-fractional diffusion-wave equations. Mathematics. 2019;7:1007.

- Wei T, Wang JG. A modified quasi-boundary value method for an inverse source problem of the time-fractional diffusion equation. Appl Numer Math. 2014;78:95–111.

- Yang F, Fu CL, Li XX. A mollification regularization method for unknown source in time-fractional diffusion equation. Int J Comput Math. 2014;91(7):1516–1534.

- Zhang HW, Qin HH, Wei T. A quasi-reversibility regularization method for the Cauchy problem of the Helmholtz equation. Int J Comput Math. 2011;88(4):839–850.

- Yang F, Fu CL. The quasi-reversibility regularization method for identifying the unknown source for time fractional diffusion equation. Appl Math Model. 2015;39(5–6):1500–1512.

- Yang F, Ren YP, Li XX. Landweber iteration regularization method for identifying unknown source on a columnar symmetric domain. Inverse Probl Sci Eng. 2018;26(8):1109–1129.

- Yang F, Liu X, Li XX. Landweber iteration regularization method for identifying unknown source of the modified Helmholtz equation. Bound Value Probl. 2017;2017(1):1–16.

- Yang F, Zhang Y, Li XX. Landweber iteration regularization method for identifying the initial value problem of the time-space fractional diffusion-wave equation. Numer Algor. 2020;83:1509–1530.

- Xiong XT, Fu CL, Li HF. Fourier regularization method of a sideways heat equation for determining surface heat flux. J Math Anal Appl. 2006;317(1):331–348.

- Li XX, Lei JL, Yang F. An a posteriori Fourier regularization method for identifying the unknown source of the space-fractional diffusion equation. J Inequal Appl. 2014;2014(1):1–13.

- Yang F, Fu CL, Li XX, et al. The Fourier regularization method for identifying the unknown source for the modified Helmholtz equation. Acta Math Sci. 2014;34(4):1040–1047.

- Yang F, Fan P, Li XX, et al. Fourier truncation regularization method for a time-fractional backward diffusion problem with a nonlinear source. Mathematics. 2019;7(9):865.

- Liu SS, Feng LX. A posteriori regularization parameter choice rule for a modified kernel method for a time-fractional inverse diffusion problem. J Comput Appl Math. 2019;353:355–366.

- Liu SS, Feng LX. Optimal error bound and modified kernel method for a space-fractional backward diffusion problem. Adv Differ Equ. 2018;2018:268.

- Liu SS, Feng LX. A modified kernel method for a time-fractional inverse diffusion problem. Adv Differ Equ. 2015;2015:342.

- Yang F, Fu JL, Li XX. A potential-free field inverse Schrödinger problem: optimal error bound analysis and regularization method. Inverse Probl Sci Eng. 2020;28(9):1209–1252.

- Zhang ZQ, Ma YJ. A modified kernel method for numerical analytic continuation. Inverse Probl Sci Eng. 2013;21(5):840–853.

- Tautenhahn U. Optimality for ill-posed problems under general source conditions. Numer Funct Anal Optim. 1998;19(3–4):377–398.

- Ivanchov MI. The inverse problem of determining the heat source power for a parabolic equation under arbitrary boundary conditions. J Math Sci. 1998;88(3):432–436.

- Schröter T, Tautenhahn U. On the optimal regularization methods for solving linear ill-posed problems. Z Anal Anwend. 1994;13(4):697–710.

- Tautenhahn U. Optimal stable approximations for the sideways heat equation. J Inverse Ill-Posed Probl. 1997;5(3):287–307.

- Vainikko G. On the optimality of methods for ill-posed problems. Z Anal Anwend. 1987;6(4):351–362.

- Hohage T. Regularization of exponentially ill-posed problems. Numer Funct Anal Optim. 2000;21(3–4):439–464.

- Engl HW, Hanke M, Neubauer A. Regularization of inverse problems. Dordrecht: Kluwer Academic Publishers; 1996.

- Tautenhahn U, Gorenflo R. On optimal regularization methods for fractional differentiation. J Anal Appl. 1999;18(2):449–467.

- Tautenhahn U. Optimal stable solution of Cauchy problems for elliptic equations. Z Anal Anwend. 1996;15:961–984.

- Hofmann B, Tautenhahn U, Hämarik U. Conditional stability estimates for ill-posed PDE problems by using interpolation. Preprint 2011–16, 2011. Available from: http://nbn-resolving.de/urn:nbn:de:bsz:ch1-qucosa-72654.

- Atangana A, Alqahtani RT. Numerical approximation of the space-time Caputo-Fabrizio fractional derivative and application to groundwater pollution equation. Adv Differ Equ. 2016;2016(1):156.