?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

This study deals with the identification of source function from final time state observation in a two-dimensional hyperbolic problem. The solution to the direct problem is obtained by the weak solution approach and finite element method. In the part of the inverse problem, the trust-region method and Levenberg–Marquardt method, which are nonlinear least-squares optimization methods, are used for the identification of source function. The findings are presented with numerical examples.

1. Introduction

In abstract and applied mathematics, the hyperbolic problems related to wave equations have attracted the attention of many scientists. The desire to get some knowledge about the internal dynamics of the wave from its traceable behaviour has been the reason for this interest. In hyperbolic equations, solutions of the corresponding direct problems may have discontinuities, singular components of complicated structure. As it is known, the more stable an operator is, the harder it is to work with its inverse. In this respect, inverse problems for hyperbolic equations have always been popular.

Considering the spatial variables in the region and time variable in the interval

, let us write the hyperbolic equation on the domain

in the form

(1.1)

(1.1)

(1.2)

(1.2)

(1.3)

(1.3) where

(1.4)

(1.4) In vibration modelling, the external forces with the form of separation of variables

have special meanings. For example, the selection of

describes a harmonic spatial force. Generally, the problem (1.1)–(1.3) is admitted as a model for flexible waves corresponding to a point slipping source. This kind of point source can be connected with models in ground-penetrating radar, reflection seismology, oil and gas exploration and many other physical systems [Citation1].

For a given source , obtaining the solution

of the initial boundary value problem (1.1)–(1.3) is defined as the direct problem. We can conclude from [Citation2] that under the conditions (1.4), the problem (1.1)–(1.3) has a weak solution

with the weak derivatives

and

. Also, this solution satisfies the following estimate:

(1.5)

(1.5) Besides, the identification of any unknown data from a given observation related to the solution is defined as the inverse problem.

In this study, we consider the following inverse problem:

(1.6)

(1.6) where

represents the solution of the direct problem for a given source

.

In the last decades, inverse source problems with the final time observation for hyperbolic problems have attracted great attention due to both their significance in engineering applications and significance in the theory of inverse problems [Citation3–10].

The solution of the (1.6) inverse problem is achieved by minimizing the following least-squares functional

(1.7)

(1.7) On the other hand, these types of inverse problems are ill-posed according to Hadamard requirements which are existence, uniqueness and stability of the solution. There are many studies to overcome the ill-posedness by regularization techniques [Citation4,Citation11–14]

The layout of this article is as follows. In Section 2, we have applied the finite element method for weak solution to direct problem. In Section 3, we have implemented a trust-region algorithm and Levenberg–Marquardt method, which are interrelated methods, except for one point, for the identification problem. In the last section, we have tested the proposed methods on two numerical examples.

2. Weak formulation and finite element method for direct problem

Multiplying the equality of (1.1) by a test function , which is zero on the boundary and integrating over the domain, we get the following integral equality for

:

and by Green’s formula, we have

(2.1)

(2.1) By the spaces

and

, the variational formulation of the considered problem is:

find such that for every fixed

and

(2.2)

(2.2) Let us apply the finite element method to the direct problem. The domain

is approximated by the union of

elements

where

is the

th element. The union of the elements is called

, and it is the finite element mesh for the domain

. The number

indicates the size of the elements.

We seek the approximate solution in the form

(2.3)

(2.3) where

is the number of the nodes. The basis function

is the second-order Lagrangian shape functions

The six coefficients

of this polynomial are determined by requiring

With these six parameters, we consider constructing a quadratic Lagrange polynomial by placing nodes at the vertices and mid-sides of a triangular element [Citation15].

Now, considering the approximate solution in (2.2)

We get

Choosing

to be each of the basis functions

, we write

So, we have

unknowns and

equations above, which will give a unique solution

.

For solution vector , we can write the following time-dependent matrix equations:

(2.4)

(2.4) where

and

The equality of (2.4) is the system of second-order ordinary differential equation and the initial conditions come from (1.2) such as

and by vector form

Hence, we have the system

(2.5)

(2.5) This system can be solved on the time interval

by any of the numerical methods like Euler's method, Runge–Kutta method or an adaptive scheme. Although the requirement on

is not so stringent by an implicit method when solving the hyperbolic problems, we must not forget stability issues. In applying (2.5) to approximate a solution to the hyperbolic equation, we realize that the approximate solution is growing implausible in amplitude, and then the time step must be decreased.

The approximate solution satisfies the a priori estimate [Citation15]

(2.6)

(2.6)

3. Solution of inverse identification problem

In order to get the unknown source function from the given final time observation by (1.7), we will use the nonlinear least-squares optimization for the problem

(3.1)

(3.1) where

and the components of

are defined as

.

Considering the compatibility conditions, the structure of identified function is estimated and appropriate parameters

are included to this function. So, it is assumed that we want to get

, which is the minimum of the objective function

Namely, the problem (3.1) is reformulated as follows:

(3.2)

(3.2) Let us write the Taylor approximation by the degree 2 of

around any

:

where

is the quadratic approximation of

around

,

the gradient of

computed at

,

an approximation of the Hessian matrix

of

at

and

the real hessian matrix of

at

.

Also, we know, from the optimization theory that if is a local minimizer, then

(Necessary condition for a local minimizer) and

(Positive definiteness – Sufficient condition for a local minimizer), with some number

. So, we must always construct the positive definite

matrix.

In the remaining of the paper, we use the 2-norm, .

On the other hand, we know, from the vector analysis that the gradient at is

where

is the vector whose components are defined as follows:

Hessian at

is

and approximation of Hessian at

is

. Here,

is the partial derivative (Jacobian) of

with respect to

.

According to the Gauss–Newton method, the computation of the step involves the solution of the linear system

(3.3)

(3.3) One difficulty of using the Gauss–Newton step is that the Jacobi matrix

may be ill-conditioned, which normally leads to a very big step

. And a very long step

usually causes the algorithm to break down, because of either numerical overflows or failure in line searches. So, when the Gauss–Newton method is used for ill-conditioned problems, it is not efficient about convergence relations. The main difficulty is that the step size

is too large and goes in a ‘bad’ direction that gives little reduction in the function.

We can handle this issue via the use of a Lagrange multiplier and thus replace the problem

(3.4)

(3.4) where

is the Lagrange multiplier for the constraint at the

th iteration.

The parameter affects both the direction and the length of the step

. Depending on the size of

, the correction

can vary from a Gauss–Newton step for

to a very short step approximately in the steepest descent direction for large values of

. As we see from these considerations, this parameter acts similar to the step control for the damped Gauss–Newton method, but it also changes the direction of the correction.

There are two effective methods for solving such nonlinear equations: Levenberg–Marquardt method, first given by Levenberg [Citation16] and re-derived by Marquardt [Citation17], and trust-region method [Citation18,Citation19]. They are both Gauss–Newton-based methods and expose quadratic speed of convergence near .

The Levenberg–Marquardt step of (3.4) can be interpreted as solving the normal equations used in the Gauss–Newton method, but ‘shifted’ by a scaled identity matrix, so as to convert the problem from having an ill-conditioned (or positive semi-definite) matrix into a positive definite one. Notice that the positive definiteness implies that the Levenberg–Marquardt direction has always descent and, therefore, the method is well defined. During the process, the size of

is updated by controlling the gain ratio

A large value of

implies that

is a good approximation to

and we can decrease

so that the next Levenberg–Marquardt step is closer to the Gauss–Newton step. If

is small or negative then

is a poor approximation and we must increase

to get steepest descent direction and reduce the step length.

The Levenberg–Marquardt method’s algorithm is as follows;

Levenberg–Marquardt method’s algorithm

Table

On the other hand, reduction in the step size is important. It can be proven that, if we apply a proper limitation on the step size

, we sustain global convergence even if

is an indefinite matrix. The trust-region algorithm is based on this rule (

is called the region radius).

In a trust-region method, it is assumed that the model is sufficiently accurate inside a ball with radius (trust-region radius) centred at

and the step

is to solution to a constrained optimization problem

(3.5)

(3.5) Indeed, a trust-region algorithm for nonlinear least-squares and Levenberg–Marquardt method are similar, apart from the bound

is updated from iteration to iteration directly instead of updating parameter

.

Trust-region method’s algorithm is as follows:

Trust-region method’s algorithm

Table

4. Numerical illustrations

Now, we aim to test the explained process by giving on two problems. In solving these problems, we have used the MATLAB-2019 software.

Example 1 Consider the material occupying .

On the time interval , we have the problem

(4.1)

(4.1)

(4.2)

(4.2)

(4.3)

(4.3)

and want to identify the source function from the final time state information

(4.4)

(4.4) The exact source function is

.

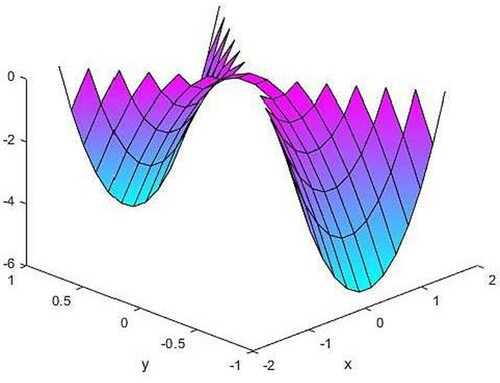

Firstly, let us compute the solution of direct problem by the finite element method.

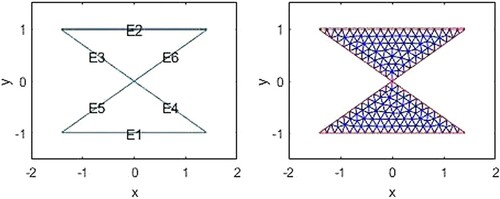

The domain is approximated by the union of

elements such as

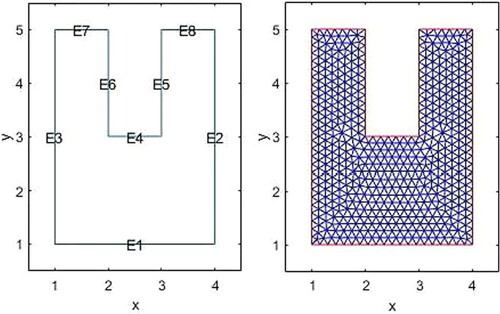

In the following, there are the figures of the domain and a finite element mesh for the problem.

The numbers of elements and nodes are 1033 and 2188, respectively, for the mesh size .

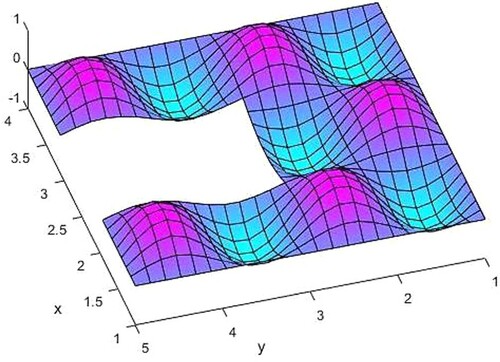

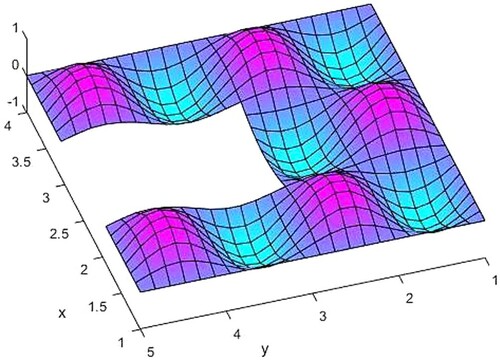

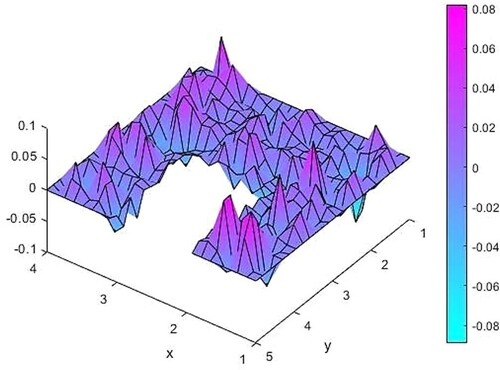

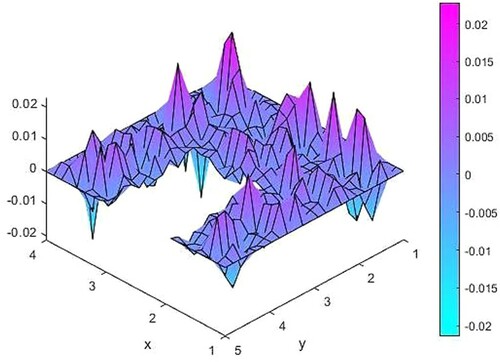

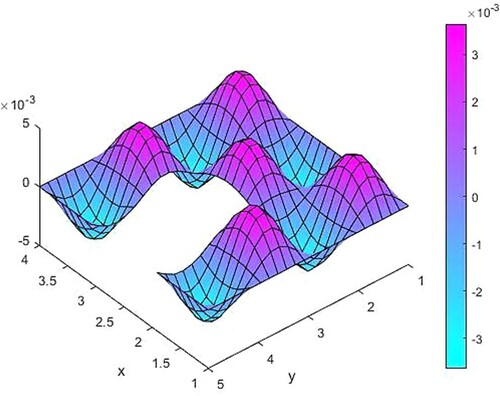

The exact solution and the calculated solution of the finite element method

by (2.5) are presented in Figures .

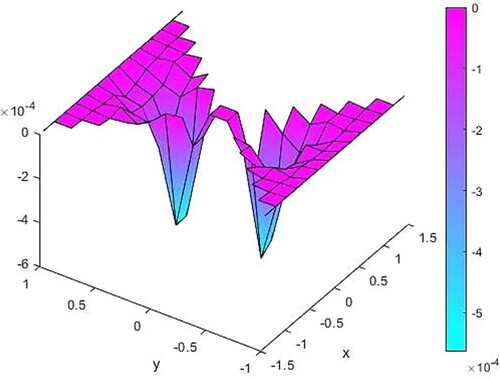

Some error norm values corresponding different maximum mesh size are given in Table . Due to ill-conditioning of some matrices in (2.5), it can be seen by

and

in Table that a smaller

value gives a bigger error norm. Hence, we take the

mesh size for this example. Figure shows the error of the computation for this mesh size.

Table 1. Some and corresponding error norm values.

Secondly, we deal with the solution of the inverse identification problem by nonlinear least-squares optimization techniques.

From the given final time state function , we can estimate that the source function may have the form

(4.5)

(4.5) On the other hand, the exact source function leading to the given final time state function is

The minimization is carried out by the single-parameter functional

(4.6)

(4.6) If minimization of this functional by the Levenberg–Marquardt method and trust-region method is carried out using MATLAB then the following outcomes are obtained.

For the Levenberg–Marquardt method, we get Table .

Table 2. Levenberg–Marquardt method.

Optimization is completed by the Levenberg–Marquardt method since the relative norm of the current step is less than the value of the step tolerance

. The found optimal parameter is

. Hence, the identified source function is

(4.7)

(4.7) The residual norm given by (4.6) for this function is

.

For the trust-region method, we get Table .

Table 3. Trust-region method.

Optimization is completed by the trust-region method since the size of the gradient , which is the first-order optimality measure, is less than the value of the optimality tolerance

. The found optimal parameter is

. Hence, the identified source function is

(4.8)

(4.8) The residual norm given by (4.6) for this function is

.

The difference between the optimal parameter values of two methods is

As seen the parameters of these methods are too close to each other. If we take the optimal parameter as

then we get the following error norm

(4.9)

(4.9) As seen in this example, the Levenberg–Marquardt method was able to calculate the result with the same success using less iteration.

Now, we will examine that the problem needs regularization process or not. To do this, we generate random noisy data . The noisy data are simulated as

(4.10)

(4.10) where

are random variables with the mean zero and standard deviation

given by

, where

represents the percentage of noise. The random variables

is generated by normrnd MATLAB function.

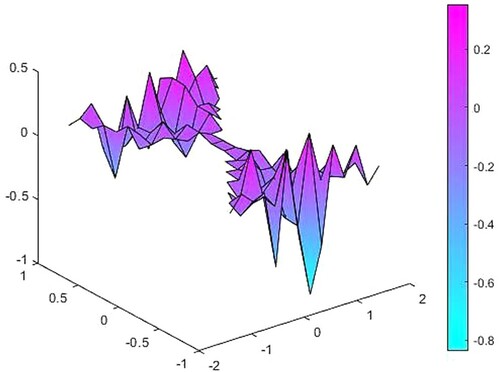

Firstly, we generate random noisy data with the percentage of 10% in the observation function and measure the difference between the source functions caused by this noise.

The optimal parameters accompanying with noisy source function are and

.

The 10% noise causes the norm between observation functions and

norm between source functions.

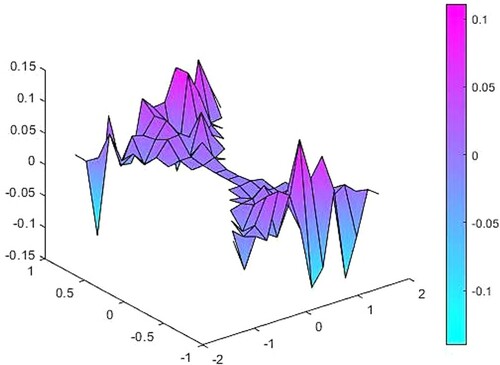

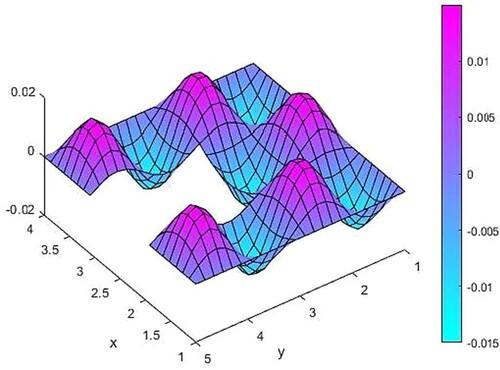

Secondly, we generate random noisy data with the percentage of 2% in the observation function and measure the difference between the source functions caused by this noise (Figures ).

Figure 6. The difference between source functions corresponding to the exact and 10% noisy observation function.

Figure 8. The difference between source functions corresponding to the exact and 2% noisy observation function.

The optimal parameters accompanying with noisy source function are and

.

The 2% noise causes the norm between observation functions and

norm between source functions.

In Table , we present norms of some noisy observations and corresponding source function differences.

Table 4. Some noisy observations and source function differences.

As seen from Table , while noise levels decrease, the corresponding source function differences also decrease. Hence, there is no need for regularization process.

Example 2 Consider the material occupying the domain bounded by the lines and

.

On the time interval , we have the problem

(4.11)

(4.11)

(4.12)

(4.12)

(4.13)

(4.13)

and want to identify the source function from the final time state information

(4.14)

(4.14) The exact source function is

.

Firstly, let us compute the solution of direct problem by finite element method.

In the following, there are the figures of domain and a finite element mesh for the problem (Figure ).

The numbers of elements and nodes are 292 and 671, respectively, for the mesh size .

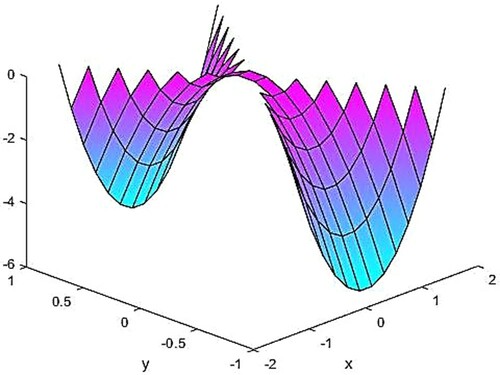

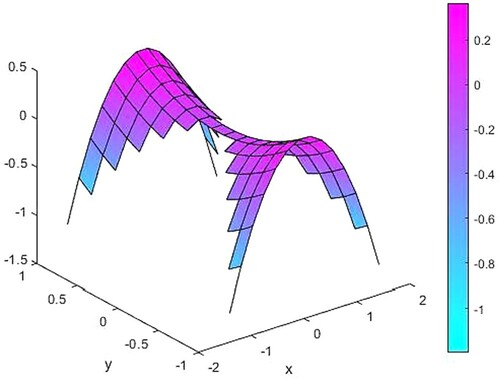

The exact solution and the calculated solution of the finite element method

by (2.5) are presented in Figures .

Figure 14. The difference between source functions corresponding to the exact and 10% noisy observation function.

Figure 16. The difference between source functions corresponding to the exact and 2% noisy observation function.

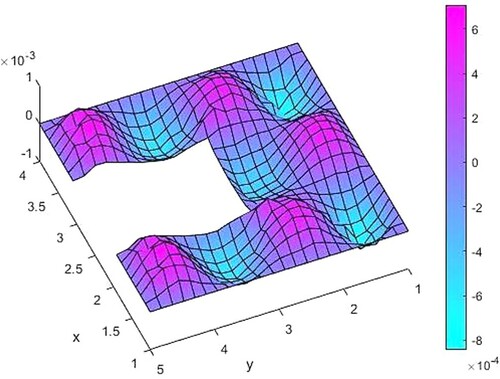

Some error norm values corresponding different maximum mesh size are given in Table . Due to ill-conditioning of some matrices in (2.5), it can be seen by

and

in Table that a smaller

value gives a bigger error norm. Hence, we take the

mesh size for this example. Figure shows the error of the computation for this mesh size .

Table 5. Some and corresponding error norm values.

Secondly, we deal with the solution of inverse identification problem by nonlinear least-squares optimization techniques.

From a given final time state function , we can estimate that the source function may have the form

(4.15)

(4.15) On the other hand, the exact source function leading to a given final time state function is

(4.16)

(4.16) The minimization is carried out by the single-parameter functional

(4.17)

(4.17) If minimization of this functional by the Levenberg–Marquardt method and trust-region method is carried out using MATLAB then the following outcomes are obtained.

For the Levenberg–Marquardt method, we get Table .

Table 6. Levenberg–Marquardt method.

Optimization is completed by the Levenberg–Marquardt method since the final change in the sum of squares relative to its initial value is less than the value of the function tolerance . The found optimal parameter is

. Hence, the identified source function is

(4.18)

(4.18) The residual norm given by (4.16) for this function is

.

For the trust-region method, we get Table .

Table 7. Trust-region method.

Optimization is completed by the trust-region method since the final change in the sum of squares relative to its initial value is less than the value of the function tolerance . The found optimal parameter is

. Hence, the identified source function is

(4.19)

(4.19) The residual norm given by (4.6) for this function is

.

The difference between the optimal parameter values of two methods is

As seen, the parameters of these methods are too close to each other. If we take the optimal parameter as

then we get the following error norm

(4.20)

(4.20) As seen in this example, the trust-region method was able to calculate the result with the same success using less iteration.

Now, we generate random noisy data by (4.10) to decide the regularization process is needed or not (Figures ).

Firstly, we generate random noisy data with the percentage of 10% in the observation function and measure the difference between the source functions caused by this noise.

The optimal parameters accompanying with noisy source function are and

.

The 10% noise causes the norm between observation functions and

norm between source functions (for the trust-region method).

Secondly, we generate random noisy data with the percentage of 2% in the observation function and measure the difference between the source functions caused by this noise.

The optimal parameters accompanying with noisy source function are and

.

The 2% noise causes the norm between observation functions and

norm between source functions (for the trust-region method).

In Table , we present norms of some noisy observations and corresponding source function differences.

Table 8. Some noisy observations and source function differences

As seen from Table , while noise levels decrease, the corresponding source function differences also decrease. Hence, there is no need for regularization process.

5. Conclusions

The trust-region and Levenberg–Marquardt methods which are nonlinear least-squares optimization methods are powerful tools for identification problems. The methods known to give successful results in one-dimensional problems have also yielded successful results in two-dimensional problems. The fact that the regularization operations are carried out within the methods eliminates the need for adding an extra regularization term to the objective function. This situation can be seen from Tables and .

Although the difference between two methods is not much in the first example with one identified parameter, in the second example where two identified parameters are included, the trust-region method yielded less iterations than the Levenberg–Marquardt method, in compatible with general predictions.

Acknowledgements

We are very grateful to the reviewers for their comments and suggestions.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Aki K, Richards PG. Quantitative seismology theory and methods. New York: Freeman; 1980.

- Evans LC. Partial differential equations, 2nd edn. Graduate studies in mathematics, vol. 19. New York: American Mathematical Society; 2002.

- Kuliev GF. Problem of optimal control of the coefficients for hyperbolic equations. Izvestiya Vysshikh Uchebnykh Zavedenii. Matematika. 1985;3:39–44.

- Feng X, Sutton B, Leuhart S, et al. Identification problem for the wave equation with Neumann data input and Dirichlet data abservating. Nonlinear Anal. 2003;52(7):1777–1795.

- Maciąg A. The usage of wave polynomials in solving direct and inverse problems for two-dimensional wave equation. Int J Numer Method Biomed Eng. 2011;27(7):1107–1125.

- Tagiyev RK. On optimal control of the hyperbolic equation coefficients. Autom Remote Control. 2012;73(7):1145–1155.

- Hasanov A, Mukanova B. Relationship between representation formulas for unique regularized solutions of inverse source problems with final overdetermination and singular value decomposition of input–output operators. IMA J Appl Math. 2015;80:676–696.

- Subaşı M, Kaçar A. A variational technique for optimal boundary control in a hyperbolic problem. Appl Math Comput. 2012;218:6629–6636.

- Deiveegan A, Prakash P, Nieto JJ. Optimization method for identifying the source term in an inverse Wave equation. Electron J Diff Equat. 2017;2017(200):1–15.

- Subaşı M, Araz S. Numerical regularization of optimal control for the coefficient function in a wave equation. Iran J Sci Technol Trans A Sci. 2019;43:2325–2333.

- Eng HW, Scherzer O, Yamamato M. Uniqueness and stable determination of forcing terms in linear partial differential equations with overspecified boundary data. Inverse Probl. 1994;10:1253–1276.

- Yamamato M. On ill-posedness and a Tikhonov regularization for a multidimensional inverse hyperbolic problem. J Math Kyoto Univ. 1996;36:825–856.

- Cheng J, Yamamato M. One new strategy for a priori choice of regularization parameters in Tikhonov’s regularization. Inverse Probl. 2000;16:L31–L38.

- Kabanikhin SI, Satybaev AD, Shishlenin MA. Direct methods of solving multidimensional inverse hyperbolic problems. Utrecht: VSP Science Press; 2005.

- Larson MG, Bengzon F. The finite element method: theory, implementation, and applications. Berlin: Springer; 2010.

- Levenberg K. A method for the solution of certain nonlinear problems in least squares. Qart Appl Math. 1944;2:164–166.

- Marquardt DW. An algorithm for least-squares estimation of nonlinear inequalities. SIAM J Appl Math. 1963;11:431–441.

- Goldfeld SM, Quandt RE, Trotter HF. Maximization by quadratic hill-climbing. Econometrica. 1966;34(3):541–551.

- Sorensen DC. Newton's method with a model trust region modification. SIAM J Numer Anal. 1982;19(2):409–426.

- Conn AR, Gould NIM, Toint PL. Trust-region methods. Philadelphia: Society for Industrial and Applied Mathematics; 2000.

- Madsen K, Nielsen HB, Tingleff O. Methods for non-linear least squares problems, informatics and mathematical modelling. Kopenhag: Technical University of Denmark; 2004.