Abstract

In this paper, we study an inverse transmission scattering problem of a time-harmonic acoustic wave from the viewpoint of Bayesian statistics. In Bayesian inversion, the solution of the inverse problem is the posterior distribution of the unknown parameters conditioned on the observational data. The shape of the scatterer is reconstructed from full-aperture and limited-aperture far-field measurement data. We first prove a well-posedness result for the posterior distribution in the sense of the Hellinger metric. Then, we employ the Markov chain Monte Carlo method based on the preconditioned Crank-Nicolson algorithm to extract information about the posterior distribution. Numerical results are given to demonstrate the effectiveness of the proposed method.

1. Introduction

Transmission problems have important applications in various fields of physics, engineering, and the mathematical sciences [Citation1]. Such problems have been studied extensively by many researchers in the context of acoustic, elastic, and electromagnetic wave scattering. One problem that has attracted particular interest is the inverse scattering problem of determining the physical and geometric properties of the scatterer. In this paper, we are concerned with an inverse transmission scattering problem for time-harmonic acoustic waves.

In the literature, there are many deterministic methods for solving the inverse acoustic transmission scattering problem numerically. These methods can be divided into two categories: iterative methods (see [Citation2–4]) and non-iterative methods (see [Citation5–8]). A main difficulty of iterative methods is finding a global minimizer, since the objective functional may have many local minima. Non-iterative methods, such as the linear sampling method [Citation9], need to calculate the scattered fields corresponding to a large number of sampling points in order to determine the scatterer.

Recently, many researchers have studied inverse problems from the viewpoint of Bayesian statistics [Citation10–12], and Bayesian inference has been developed for inverse scattering problems [Citation13–19]. In the Bayesian framework, all quantities are viewed as random variables, and the inverse problem is reformulated as a problem of statistical inference. Compared with deterministic approaches, which only provide a point estimate of the solution, the Bayesian method not only yields point estimates, such as the maximum a posteriori (MAP) estimate and the conditional mean (CM) estimate, but also quantifies the uncertainty of the solution, for example through credible intervals.

The primary purpose of this paper is to use the Bayesian approach to reconstruct the shape of the scatterer from full-aperture and limited-aperture far field measurement data. We choose a Gaussian measure as the prior distribution, since it is tractable for theoretical analysis and computation in infinite-dimensional settings [Citation12]. With such priors [Citation12], we prove the well-posedness of the posterior distribution for this problem. By Bayes' formula, the solution of the inverse problem is the posterior distribution, which is analytically intractable due to the high dimensionality of the integral and the nonlinearity of the forward model. There are two primary techniques for extracting information from the posterior distribution. One is sampling with Markov chain Monte Carlo (MCMC) methods [Citation20–23]. The other is the variational Gaussian approximation, which approximates the posterior distribution by a Gaussian measure that minimizes the Kullback-Leibler divergence [Citation24–26]. In this paper, we sample the posterior distribution with an MCMC method based on the preconditioned Crank-Nicolson (pCN) algorithm, whose performance is independent of the discretization dimension [Citation27].

The rest of the paper is organized as follows. In Section 2, we present the forward transmission scattering problem, which is reduced to a system of coupled boundary integral equations. In Section 3, we apply the Bayesian approach to the inverse transmission problem and prove the well-posedness of the posterior distribution. In Section 4, we describe the Gaussian prior and how to draw samples from it. Numerical results are provided in Section 5 to demonstrate the effectiveness of the proposed method, and conclusions are given in Section 6.

2. Direct and inverse scattering problem

In this section, we describe the direct transmission scattering problem and its solution by boundary integral equation methods, and then formulate the inverse transmission scattering problem.

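We assume that $\Omega \subset \mathbb{R}^2$ is a bounded, simply connected domain with sufficiently smooth boundary $\Gamma = \partial\Omega$. The direct scattering problem is to find the total field $u$ and the scattered field $u^s$ satisfying

$$\Delta u + k_2^2 u = 0 \quad \text{in } \Omega, \tag{1}$$

$$\Delta u^s + k_1^2 u^s = 0 \quad \text{in } \mathbb{R}^2 \setminus \overline{\Omega}, \tag{2}$$

and the transmission conditions

$$u = u^s + u^i \quad \text{on } \Gamma, \tag{3}$$

$$\frac{\partial u}{\partial \nu} = \frac{\partial u^s}{\partial \nu} + \frac{\partial u^i}{\partial \nu} \quad \text{on } \Gamma, \tag{4}$$

where $u^i$ is the incident wave, $k_j > 0$, $j = 1, 2$, are the wave numbers, and $\nu$ denotes the unit outward normal to $\Gamma$. In addition, the scattered field $u^s$ is required to satisfy the Sommerfeld radiation condition

$$\lim_{r \to \infty} \sqrt{r}\left(\frac{\partial u^s}{\partial r} - i k_1 u^s\right) = 0, \quad r = |x|, \tag{5}$$

uniformly with respect to all directions $\hat{x} = x/|x|$. According to the Green representation formula [Citation1], we seek a solution in the form of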

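$$u(x) = \int_\Gamma \left\{ \Phi_{k_2}(x,y) \frac{\partial u^-}{\partial \nu}(y) - \frac{\partial \Phi_{k_2}(x,y)}{\partial \nu(y)} u^-(y) \right\} ds(y), \quad x \in \Omega, \tag{6}$$

$$u^s(x) = \int_\Gamma \left\{ \frac{\partial \Phi_{k_1}(x,y)}{\partial \nu(y)} u^{s,+}(y) - \Phi_{k_1}(x,y) \frac{\partial u^{s,+}}{\partial \nu}(y) \right\} ds(y), \quad x \in \mathbb{R}^2 \setminus \overline{\Omega}, \tag{7}$$

where the superscripts $+$ and $-$ denote the limits (traces) on $\Gamma$ from outside and inside of $\Gamma$, respectively. The function

$$\Phi_{k_j}(x,y) = \frac{i}{4} H_0^{(1)}(k_j|x-y|), \quad x \neq y,$$

is the fundamental solution of the two-dimensional Helmholtz equation with wave number $k_j$, $j = 1, 2$. Here $H_0^{(1)}$ is the Hankel function of the first kind of order zero.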
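As an illustration, the fundamental solution $\Phi_{k_j}(x,y) = \frac{i}{4}H_0^{(1)}(k_j|x-y|)$ can be evaluated with SciPy's `scipy.special.hankel1`. The Python sketch below is not the authors' code: it evaluates $\Phi_{k_j}$ and checks, with a five-point finite-difference Laplacian, that it satisfies the Helmholtz equation away from the source point. The helper names and the wave numbers $k_1 = 2$, $k_2 = 3$ are illustrative assumptions, not the values used in Section 5.

```python
import numpy as np
from scipy.special import hankel1


def fundamental_solution(x, y, k):
    """Phi_k(x, y) = (i/4) H_0^{(1)}(k |x - y|) in R^2, for x != y."""
    r = np.linalg.norm(np.asarray(x, dtype=float) - np.asarray(y, dtype=float), axis=-1)
    return 0.25j * hankel1(0, k * r)


def helmholtz_residual(x, y, k, h=1e-3):
    """Five-point finite-difference approximation of (Delta + k^2) Phi_k(., y) at x."""
    x = np.asarray(x, dtype=float)
    e1, e2 = np.array([h, 0.0]), np.array([0.0, h])
    lap = (fundamental_solution(x + e1, y, k) + fundamental_solution(x - e1, y, k)
           + fundamental_solution(x + e2, y, k) + fundamental_solution(x - e2, y, k)
           - 4.0 * fundamental_solution(x, y, k)) / h**2
    return lap + k**2 * fundamental_solution(x, y, k)


k1, k2 = 2.0, 3.0  # illustrative wave numbers only
x, y = np.array([0.7, 0.4]), np.array([0.0, 0.0])
print(abs(helmholtz_residual(x, y, k1)), abs(helmholtz_residual(x, y, k2)))
```

The residual is of the size of the finite-difference truncation error, $O(h^2)$, rather than exactly zero.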

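Define the boundary integral operators $V_j$, $K_j$, $K_j'$, and $W_j$, for $j = 1, 2$ and $x \in \Gamma$:

$$(V_j\varphi)(x) = \int_\Gamma \Phi_{k_j}(x,y)\varphi(y)\,ds(y), \qquad (K_j\varphi)(x) = \int_\Gamma \frac{\partial \Phi_{k_j}(x,y)}{\partial \nu(y)}\varphi(y)\,ds(y),$$

$$(K_j'\varphi)(x) = \int_\Gamma \frac{\partial \Phi_{k_j}(x,y)}{\partial \nu(x)}\varphi(y)\,ds(y), \qquad (W_j\varphi)(x) = -\frac{\partial}{\partial \nu(x)}\int_\Gamma \frac{\partial \Phi_{k_j}(x,y)}{\partial \nu(y)}\varphi(y)\,ds(y),$$

with density $\varphi$. Then, from the jump relations, we see that $u$ and $u^s$ defined by (6) and (7) solve the boundary value problem (1)–(5), provided $u^-$, $\partial u^-/\partial\nu$, $u^{s,+}$, and $\partial u^{s,+}/\partial\nu$ satisfy the system of integral equations [Citation28]

$$u^- = \left(\tfrac{1}{2}I - K_2\right)u^- + V_2\frac{\partial u^-}{\partial \nu}, \qquad \frac{\partial u^-}{\partial \nu} = W_2 u^- + \left(\tfrac{1}{2}I + K_2'\right)\frac{\partial u^-}{\partial \nu},$$

$$u^{s,+} = \left(\tfrac{1}{2}I + K_1\right)u^{s,+} - V_1\frac{\partial u^{s,+}}{\partial \nu}, \qquad \frac{\partial u^{s,+}}{\partial \nu} = -W_1 u^{s,+} + \left(\tfrac{1}{2}I - K_1'\right)\frac{\partial u^{s,+}}{\partial \nu}.$$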

Combining these equations with the transmission conditions (3) and (4), we have

(8)

(9)

The uniqueness and existence results for the system of boundary integral equations (8) and (9) have been established in [Citation28]. The direct transmission scattering problem can then be solved by substituting the solutions of (8) and (9) into the representations (6) and (7) to obtain $u$ and $u^s$.

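Moreover, the scattered field has the asymptotic representation (see [Citation1])

$$u^s(x) = \frac{e^{ik_1|x|}}{\sqrt{|x|}}\left\{u^\infty(\hat{x}) + O\left(\frac{1}{|x|}\right)\right\}, \quad |x| \to \infty,$$

uniformly for all directions $\hat{x} = x/|x|$. The far field pattern $u^\infty$ of the scattered field $u^s$ is then given by

$$u^\infty(\hat{x}) = \frac{e^{i\pi/4}}{\sqrt{8\pi k_1}}\int_\Gamma \left\{ \frac{\partial e^{-ik_1\hat{x}\cdot y}}{\partial \nu(y)} u^{s,+}(y) - e^{-ik_1\hat{x}\cdot y}\frac{\partial u^{s,+}}{\partial \nu}(y) \right\} ds(y), \quad \hat{x} \in \mathbb{S}^1. \tag{10}$$

Define the forward mapping $\mathcal{F}$ from the boundary $\Gamma$ to the far field pattern $u^\infty$, i.e.

$$\mathcal{F}: \Gamma \mapsto u^\infty,$$

where the operator $\mathcal{F}$ is determined by the integral equations (8) and (9) and the far-field formula (10). This relation makes it possible to reconstruct the scatterer's boundary from the far field pattern, which in practice often contains non-negligible errors and uncertainties.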
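The far-field integral can be approximated by the trapezoidal rule, which converges rapidly for smooth periodic integrands. The Python sketch below is an illustration under stated assumptions and not the boundary element solver used by the authors: given the exterior Cauchy data $u^{s,+}$ and $\partial u^{s,+}/\partial\nu$ at equispaced nodes of a closed curve, it evaluates $\frac{e^{i\pi/4}}{\sqrt{8\pi k_1}}\int_\Gamma\{\partial_{\nu(y)}e^{-ik_1\hat x\cdot y}\,u^{s,+} - e^{-ik_1\hat x\cdot y}\,\partial_\nu u^{s,+}\}\,ds(y)$. As a check, it uses the radiating field $u^s = \Phi_{k}(\cdot, 0)$ on a circle, whose far field pattern is the constant $e^{i\pi/4}/\sqrt{8\pi k}$. The function name `far_field` is hypothetical.

```python
import numpy as np
from scipy.special import hankel1


def far_field(xhat_angles, k, pts, normals, ds, u, dudn):
    """Trapezoidal-rule evaluation of the 2D far-field integral.

    pts, normals: (M, 2) boundary nodes y_m and unit outward normals nu(y_m);
    ds: (M,) quadrature weights |x'(t_m)| * 2*pi/M; u, dudn: exterior Cauchy data of u^s.
    """
    xhat = np.stack([np.cos(xhat_angles), np.sin(xhat_angles)], axis=-1)  # (L, 2)
    phase = np.exp(-1j * k * xhat @ pts.T)                                 # e^{-ik xhat.y}, (L, M)
    dphase_dnu = -1j * k * (xhat @ normals.T) * phase                      # d/dnu(y) of e^{-ik xhat.y}
    integral = (dphase_dnu * u - phase * dudn) @ ds
    return np.exp(1j * np.pi / 4) / np.sqrt(8 * np.pi * k) * integral


# Check on a circle of radius R with u^s = Phi_k(., 0) = (i/4) H_0^{(1)}(k|x|).
k, R, M = 2.0, 1.0, 64
t = 2 * np.pi * np.arange(M) / M
pts = R * np.stack([np.cos(t), np.sin(t)], axis=-1)
normals = pts / R
ds = np.full(M, 2 * np.pi * R / M)
u = 0.25j * hankel1(0, k * R) * np.ones(M)
dudn = -0.25j * k * hankel1(1, k * R) * np.ones(M)   # d/dr H_0^{(1)}(kr) = -k H_1^{(1)}(kr)
angles = np.linspace(0, 2 * np.pi, 8, endpoint=False)
print(np.round(far_field(angles, k, pts, normals, ds, u, dudn), 6))
```

For the point source every direction gives the same value, which makes this a convenient sanity test before applying the quadrature to Cauchy data produced by a boundary integral solver.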

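Given the wave numbers $k_1$, $k_2$ and the incident wave $u^i$, the inverse transmission scattering problem can be formulated as

$$y = \mathcal{G}(\Gamma) + \eta, \tag{11}$$

where $\Gamma$ is to be determined, $\mathcal{O}$ is the measurement operator, $\mathcal{G} = \mathcal{O} \circ \mathcal{F}$ denotes the mapping from the boundary $\Gamma$ to the noise-free observations, $y$ is the noisy observation of $\mathcal{G}(\Gamma)$, and $\eta$ is an $N$-dimensional zero-mean Gaussian noise with covariance matrix $\Sigma$, i.e. $\eta \sim \mathcal{N}(0, \Sigma)$.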

3. Bayesian approach

In this section, we reformulate the inverse problem as a problem of statistical inference and establish the well-posedness of the posterior distribution.

Assume that the scatterer Ω is starlike with respect to the origin. The boundary Γ of the scatterer Ω can be parameterized as

where

. Let

and using the above parameterization, we can rewrite (11) as a statistical model

$$y = \mathcal{G}(q) + \eta, \tag{12}$$

where $\mathcal{G}$ is defined on $X$ for some suitable Banach space $X$. In this paper, we choose $X$ to be a Hölder space, i.e.

,

, with a norm defined by

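We view the quantities involved in (12) as random variables, so that the Bayesian formulation recasts the inverse problem as a problem of statistical inference. The solution of the inverse problem is the posterior distribution $\mu^y$ of the random variable $q\,|\,y$. Denote the prior distribution of $q$ by $\mu_0$. Since the noise is additive and Gaussian, i.e. $\eta \sim \mathcal{N}(0, \Sigma)$, the distribution of $y$ conditional on $q$ is

$$\mathbb{P}(y \,|\, q) \propto \exp\big(-\phi(q; y)\big), \tag{13}$$

where the potential $\phi$ is defined by

$$\phi(q; y) = \frac{1}{2}\left|\Sigma^{-1/2}\big(y - \mathcal{G}(q)\big)\right|^2,$$

with $|\cdot|$ denoting the Euclidean norm. In infinite dimensions, Bayes' theorem is expressed through the Radon-Nikodym derivative of the posterior measure $\mu^y$ with respect to the prior measure $\mu_0$:

$$\frac{d\mu^y}{d\mu_0}(q) = \frac{1}{Z(y)}\exp\big(-\phi(q; y)\big), \qquad Z(y) = \int_X \exp\big(-\phi(q; y)\big)\,\mu_0(dq). \tag{14}$$

In the rest of this section, we discuss the well-posedness of the posterior distribution in the sense of the Hellinger metric.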
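To make (13) and (14) concrete, here is a minimal Python sketch that assumes a toy linear forward map in place of $\mathcal{G}$ (the true forward map requires solving (8) and (9)). It evaluates the potential $\phi(q;y) = \frac12|\Sigma^{-1/2}(y - \mathcal{G}(q))|^2$ through a Cholesky factor of $\Sigma$ and uses the density $d\mu^y/d\mu_0 \propto \exp(-\phi)$ to reweight prior samples. This self-normalized importance sampling is chosen here only for illustration; it is not the MCMC method used in this paper. For a linear-Gaussian problem the posterior mean is known in closed form, which provides a check. The names `potentials` and `reweighted_mean` are hypothetical.

```python
import numpy as np


def potentials(preds, y, noise_cov):
    """phi(q; y) = 0.5 * |noise_cov^{-1/2} (y - G(q))|^2 for a batch of predictions.

    preds: (S, N) array whose rows are G(q^(s)); y: (N,) data; noise_cov: (N, N) SPD matrix.
    """
    L = np.linalg.cholesky(noise_cov)               # noise_cov = L L^T
    r = np.linalg.solve(L, (y[None, :] - preds).T)  # whitened residuals, shape (N, S)
    return 0.5 * np.sum(r**2, axis=0)


def reweighted_mean(prior_samples, preds, y, noise_cov):
    """Self-normalized estimate of the posterior mean with weights proportional to exp(-phi)."""
    phi = potentials(preds, y, noise_cov)
    w = np.exp(-(phi - phi.min()))                  # shift by min(phi) for numerical stability
    w /= w.sum()
    return w @ prior_samples


# Hypothetical toy problem: scalar q ~ N(0, 1), G(q) = (q, 2q), Sigma = 0.25 I.
rng = np.random.default_rng(0)
q = rng.standard_normal(200_000)
preds = np.stack([q, 2 * q], axis=1)
y, Sigma = np.array([0.5, 1.2]), 0.25 * np.eye(2)
est = reweighted_mean(q, preds, y, Sigma)
exact = (np.array([1.0, 2.0]) @ y / 0.25) / (1.0 + 5.0 / 0.25)  # conjugate Gaussian posterior mean
print(est, exact)
```

Note that the normalizing constant $Z(y)$ in (14) never has to be computed: it cancels in the self-normalized weights, just as it cancels in the MCMC acceptance ratio.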

Lemma 3.1

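For every $\varepsilon > 0$, there exists $C = C(\varepsilon) \in \mathbb{R}$ such that

$$|\mathcal{G}(q)| \le \exp\big(\varepsilon\|q\|_X^2 + C\big) \tag{15}$$

for all $q \in X$.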

Proof.

From the definition of the operator $\mathcal{G}$, we need to prove

(16)

that is,

(17)

Set

,

,

. Parameterizing the boundary integral equation (17), we arrive at the estimate, for

,

(18)

It is known that

for

. Due to the well-posedness of equations (8) and (9) [Citation29], we know that

and

are bounded, which means

Thus, we obtain

(19)

When

, it is clear that

(20)

When

, according to Young's inequality, we have

(21)

In addition, we have

(22)

Combining the estimates (20), (21), and (22) yields

which completes the proof.
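For readers following the proof, the display below shows one standard way in which Young's inequality turns a bound growing like $\exp(C\|q\|_X)$ into the form required in (15). It is a sketch under the assumption that the preceding estimate has this exponential-of-linear form, not a reproduction of (21); $C$ and $C'$ are generic constants. For any $\varepsilon > 0$,

$$C\|q\|_X \le \varepsilon\|q\|_X^2 + \frac{C^2}{4\varepsilon} \quad\Longrightarrow\quad e^{C\|q\|_X + C'} \le \exp\Big(\varepsilon\|q\|_X^2 + \frac{C^2}{4\varepsilon} + C'\Big),$$

which follows from $\big(\sqrt{\varepsilon}\,\|q\|_X - \frac{C}{2\sqrt{\varepsilon}}\big)^2 \ge 0$. The constant $\frac{C^2}{4\varepsilon} + C'$ then plays the role of $C(\varepsilon)$ in (15).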

Remark

Unless otherwise stated, the constant C at different places may have different values and depend on different variables.

The Lipschitz continuity of the operator $\mathcal{G}$ is given in the following lemma [Citation15].

Lemma 3.2

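For every $r > 0$, there exists $L = L(r) > 0$ such that

$$|\mathcal{G}(q_1) - \mathcal{G}(q_2)| \le L\|q_1 - q_2\|_X \tag{23}$$

for all $q_1, q_2 \in X$ with $\max\{\|q_1\|_X, \|q_2\|_X\} < r$.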

Theorem 3.1

Consider the problem (12) with the terms defined as above. Assume that $\mu_0$ is a Gaussian measure satisfying $\mu_0(X) = 1$. Then the posterior distribution given by (14) is well defined and $\mu^y$ is absolutely continuous with respect to $\mu_0$ ($\mu^y \ll \mu_0$). Furthermore, the posterior measure $\mu^y$ is Lipschitz continuous with respect to the data $y$ in the Hellinger distance: for every $r > 0$ there exists $C = C(r) > 0$ such that, for all $y$, $y'$ with $\max\{|y|, |y'|\} \le r$,

$$d_{\mathrm{Hell}}(\mu^y, \mu^{y'}) \le C|y - y'|. \tag{24}$$

Proof.

With the boundedness and continuity of the forward mapping established in Lemmas 3.1 and 3.2, we can verify that the associated potential $\phi$ satisfies Assumption 2.6 in [Citation12]. The first result then follows from Theorem 6.29 in [Citation12]. Furthermore, the posterior $\mu^y$ is Lipschitz continuous with respect to the data $y$ by Theorem 4.2 in [Citation12].

Remark

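Let $\nu$ be a reference measure such that the measures $\mu$ and $\mu'$ are both absolutely continuous with respect to $\nu$. Then the Hellinger distance between $\mu$ and $\mu'$ is defined by

$$d_{\mathrm{Hell}}(\mu, \mu') = \left(\frac{1}{2}\int \left(\sqrt{\frac{d\mu}{d\nu}} - \sqrt{\frac{d\mu'}{d\nu}}\right)^2 d\nu\right)^{1/2}.$$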
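For two one-dimensional Gaussian measures, the Hellinger distance has a closed form, which gives a simple way to check an implementation of the definition above. The Python sketch below is an illustration, not part of the paper's method. It evaluates the definition by quadrature with Lebesgue measure as the reference measure $\nu$, assuming the convention with the factor $\frac12$ under the square root, and compares the result with $d_{\mathrm{Hell}}^2 = 1 - \sqrt{\frac{2\sigma_1\sigma_2}{\sigma_1^2+\sigma_2^2}}\exp\big(-\frac{(m_1-m_2)^2}{4(\sigma_1^2+\sigma_2^2)}\big)$. The function names are hypothetical.

```python
import numpy as np


def gaussian_pdf(x, m, s):
    """Density of N(m, s^2) with respect to Lebesgue measure."""
    return np.exp(-0.5 * ((x - m) / s) ** 2) / (s * np.sqrt(2.0 * np.pi))


def hellinger_quadrature(m1, s1, m2, s2, n=200_001, width=12.0):
    """d_Hell = sqrt(0.5 * int (sqrt(p1) - sqrt(p2))^2 dx), trapezoidal rule on a wide grid."""
    lo = min(m1 - width * s1, m2 - width * s2)
    hi = max(m1 + width * s1, m2 + width * s2)
    x = np.linspace(lo, hi, n)
    f = (np.sqrt(gaussian_pdf(x, m1, s1)) - np.sqrt(gaussian_pdf(x, m2, s2))) ** 2
    dx = x[1] - x[0]
    integral = dx * (f.sum() - 0.5 * (f[0] + f[-1]))  # trapezoidal rule
    return np.sqrt(0.5 * integral)


def hellinger_gaussian(m1, s1, m2, s2):
    """Closed form for the same convention: d^2 = 1 - Bhattacharyya coefficient."""
    bc = np.sqrt(2.0 * s1 * s2 / (s1**2 + s2**2)) * np.exp(-(m1 - m2) ** 2 / (4.0 * (s1**2 + s2**2)))
    return np.sqrt(1.0 - bc)


print(hellinger_quadrature(0.0, 1.0, 0.5, 1.5), hellinger_gaussian(0.0, 1.0, 0.5, 1.5))
```

With the factor $\frac12$, the distance always lies in $[0, 1]$, since $\frac12\int(\sqrt{p_1} - \sqrt{p_2})^2 = 1 - \int\sqrt{p_1 p_2}$.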

4. Samples from the prior

It is well known that the prior distribution plays an important role in Bayesian inference. In this section, we describe the Gaussian prior used in this paper and how samples are drawn from it.

One often assumes that the prior is a Gaussian distribution with mean $m_0$ and covariance operator $\mathcal{C}_0$, i.e. $\mu_0 = \mathcal{N}(m_0, \mathcal{C}_0)$, where $\mathcal{C}_0$ is symmetric, positive, and of trace class,

. Here the operator

is assumed to be Laplacian-like in the sense of Assumption 2.9 in [Citation12]. This choice of covariance operator is motivated by the fact that

is a trace class operator on

, which can guarantee bounded variance and almost surely pointwise well-defined samples as

holds (see [Citation12]).
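Because the covariance operator is of trace class, a Gaussian prior can be sampled through a truncated Karhunen-Loève expansion. The following Python sketch illustrates this for a hypothetical periodic covariance on $[0, 2\pi)$ with eigenfunctions $\cos(n\theta)/\sqrt{\pi}$, $\sin(n\theta)/\sqrt{\pi}$ and eigenvalues $\lambda_n = n^{-2s}$, $n \ge 1$. These eigenvalues, the truncation level, and the function name are assumptions for illustration and are not the prior specified below. Since $\sum_n \lambda_n < \infty$ requires $2s > 1$, the pointwise variance $\sum_{n\le N}\lambda_n/\pi$ stays bounded as $N\to\infty$; the usage check compares it with the empirical variance.

```python
import numpy as np


def sample_prior_kl(theta, n_terms, s, n_samples, rng, mean=0.0):
    """Truncated KL samples of a Gaussian field on [0, 2*pi) (hypothetical covariance).

    q(theta) = mean + sum_{n=1}^{n_terms} sqrt(lambda_n) (xi_n cos(n theta) + zeta_n sin(n theta)) / sqrt(pi),
    with lambda_n = n^(-2 s) and xi_n, zeta_n i.i.d. N(0, 1).
    """
    n = np.arange(1, n_terms + 1, dtype=float)
    sqrt_lam = n ** (-s)                                      # sqrt(lambda_n) = n^(-s)
    xi = rng.standard_normal((n_samples, n_terms))
    zeta = rng.standard_normal((n_samples, n_terms))
    cos_basis = np.cos(np.outer(n, theta)) / np.sqrt(np.pi)   # (n_terms, len(theta))
    sin_basis = np.sin(np.outer(n, theta)) / np.sqrt(np.pi)
    return mean + (xi * sqrt_lam) @ cos_basis + (zeta * sqrt_lam) @ sin_basis


rng = np.random.default_rng(0)
theta = np.linspace(0.0, 2.0 * np.pi, 129)
n_terms, s = 50, 1.5
samples = sample_prior_kl(theta, n_terms, s, 20_000, rng)
expected_var = np.sum(np.arange(1, n_terms + 1, dtype=float) ** (-2 * s)) / np.pi
print(samples.shape, samples[:, 0].var(), expected_var)
```

Larger $s$ makes the eigenvalues decay faster and the samples smoother, which is the mechanism behind the almost-sure regularity of prior samples.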

We specify the Gaussian prior which is consistent with the above theory [Citation13]:

where

with the definition domain

Here

is the standard Sobolev space with the periodic boundary condition

, i.e. if

, then

where

's are the coefficients of Fourier expansion series of v. The eigenvalues of

are

,

, and the corresponding eigenfunctions are

and

(we take

[Citation13]). According to the Karhunen-Loève (KL) expansion [Citation12], we have

(25)

where

and

are independent and identically distributed (i.i.d.) sequences with

. Integrating

, we obtain

(26)

where

is a Gaussian random variable. Since the integral operator is linear and continuous, the prior distribution of $q$ is indeed Gaussian (see [Citation30]). Moreover, it follows that $q$ is

-Hölder continuous almost surely under the Gaussian measure

[Citation13]. In the numerical implementation, we approximate samples from the prior by the truncated Karhunen-Loève (KL) expansion

(27)

Before discussing the numerical implementation of Bayesian inference with the Gaussian prior, we briefly present the MCMC method used to draw samples from the posterior distribution. Here we adopt the pCN-MCMC algorithm [Citation27], whose proposal is

$$v = \sqrt{1 - \beta^2}\, q + \beta\,\xi, \qquad \xi \sim \mathcal{N}(0, \mathcal{C}_0),$$

where $q$ is the current position, $v$ is the proposed position, and $\beta \in (0, 1]$ is the jump parameter controlling the step size.
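The pCN step can be sketched in a few lines. The Python code below is an illustrative implementation of the standard pCN sampler of [Citation27] for a centered Gaussian prior, with acceptance probability $\min\{1, \exp(\phi(q;y) - \phi(v;y))\}$; it is not the authors' code, and the names `pcn_mcmc`, `phi`, and `sample_prior` are hypothetical. It is checked on a one-dimensional linear-Gaussian toy problem whose posterior is known exactly: prior $\mathcal{N}(0,1)$, $y = q + \eta$ with noise variance $0.5$ and observed $y = 1$, so the posterior is $\mathcal{N}(2/3, 1/3)$.

```python
import numpy as np


def pcn_mcmc(phi, sample_prior, q0, beta, n_iter, rng):
    """pCN-MCMC for d(mu^y)/d(mu_0) proportional to exp(-phi(q)), with mu_0 = N(0, C_0).

    phi: potential q -> phi(q; y); sample_prior: rng -> one draw from N(0, C_0);
    beta in (0, 1]: jump parameter. Returns the chain and the acceptance rate.
    """
    q = np.array(q0, dtype=float)
    phi_q = phi(q)
    chain = np.empty((n_iter,) + q.shape)
    n_accept = 0
    for i in range(n_iter):
        v = np.sqrt(1.0 - beta**2) * q + beta * sample_prior(rng)  # pCN proposal
        phi_v = phi(v)
        # The proposal is reversible w.r.t. the prior, so only phi enters the acceptance ratio.
        if np.log(rng.uniform()) < phi_q - phi_v:
            q, phi_q = v, phi_v
            n_accept += 1
        chain[i] = q
    return chain, n_accept / n_iter


# Hypothetical toy problem: prior N(0, 1), y = q + eta, eta ~ N(0, 0.5), observed y = 1.
Y_OBS, NOISE_VAR = 1.0, 0.5


def phi(q):
    return 0.5 * float(np.sum((Y_OBS - q) ** 2)) / NOISE_VAR


rng = np.random.default_rng(0)
chain, rate = pcn_mcmc(phi, lambda r: r.standard_normal(1), np.zeros(1), 0.5, 60_000, rng)
post = chain[5_000:, 0]                # discard burn-in
print(rate, post.mean(), post.var())   # exact posterior: N(2/3, 1/3)
```

In practice $\beta$ is adjusted so that the acceptance rate falls in a target range: smaller $\beta$ raises the acceptance rate but makes consecutive states more correlated.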

5. Numerical results

In the following, we present numerical results to show the performance of the proposed method. We take the Gaussian distribution discussed above as the prior and truncate the series in (27) with

, and

,

, and

obey the same distribution

. Fix the wavenumbers

,

, and the incident field

with incident direction

. The synthetic data are generated by solving the forward scattering problem (1)–(5) with the boundary element method [Citation28] and adding Gaussian noise to the resulting far-field data, where the noise covariance is assumed to be

. The pCN-MCMC algorithm is run for

iterations, with the first

samples discarded as burn-in. The step size β is tuned so that the acceptance probability is about

. Define the limited aperture

The far-field pattern data is given by

.

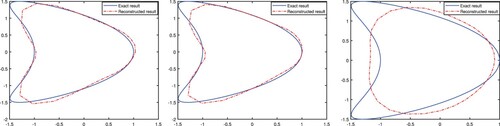

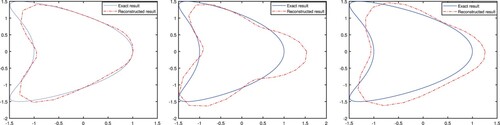

Example 5.1

A kite-shaped obstacle:

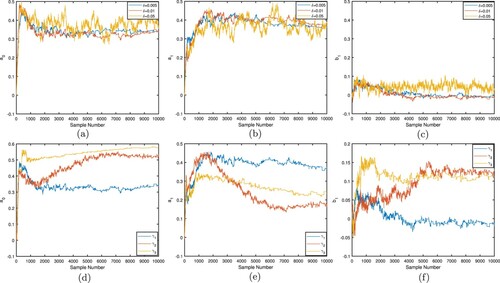

In Figure 1, the conditional mean estimates of the posterior distribution obtained from full-aperture observations are displayed for noise levels

. These numerical results show that the proposed method effectively recovers the shape of the scatterer. Moreover, the noise level in the data strongly affects the reconstruction: as expected, the reconstructions are better for a smaller noise level δ. Fix the noise level

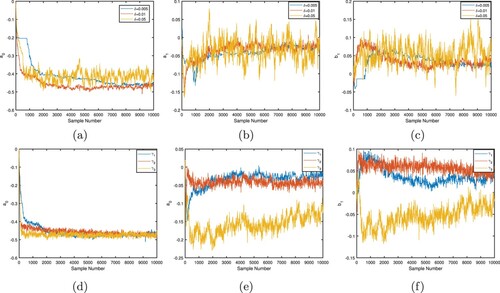

. We present the reconstructions from different limited-aperture data in Figure 2, which shows the effect of the observation aperture on the posterior distribution. The reconstructions deteriorate as the aperture becomes smaller. Moreover, as one would expect, the part of the scatterer's boundary close to the observation directions is captured well. Finally, we present some statistical information. Without loss of generality, we show the Markov chains of the coefficients

,

and

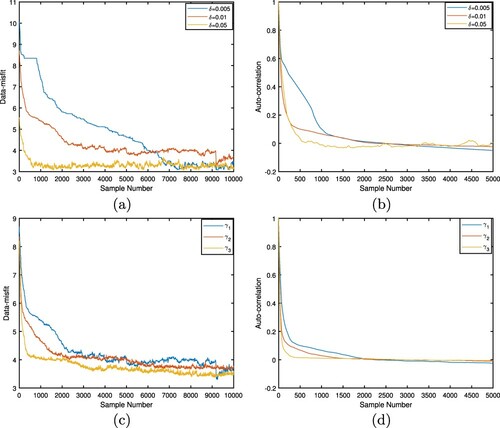

in Figure 3 and omit the other coefficients. As shown in Figure 3, for different noise levels and different apertures, the Markov chains appear to reach stationarity. Figure 4 shows the trace plots of the negative log-likelihood

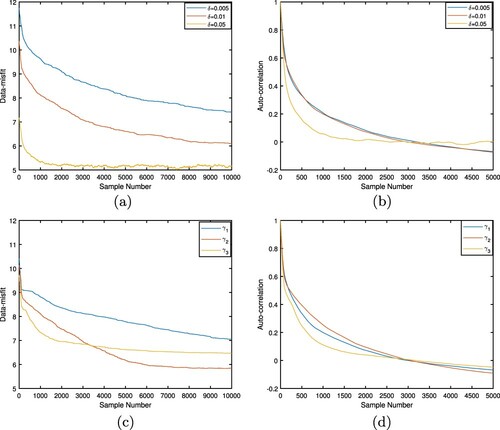

(or data misfit) and the corresponding autocorrelation functions for different noise levels and different apertures. After a few iterations, the pCN chains appear to reach their stationary regime, and the corresponding autocorrelation functions decay to zero.
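Autocorrelation functions like those shown for the negative log-likelihood can be estimated from a scalar chain $x_1, \dots, x_n$ with the standard empirical estimator $\hat\rho(\ell) = \sum_{i}(x_i - \bar x)(x_{i+\ell} - \bar x)/\sum_i (x_i - \bar x)^2$. The Python sketch below is illustrative: the function name is hypothetical, and the paper does not state which estimator was used. It computes $\hat\rho$ and checks it on an AR(1) sequence, whose true autocorrelation at lag $\ell$ is $0.9^\ell$.

```python
import numpy as np


def autocorrelation(chain, max_lag):
    """Empirical autocorrelation rho(l), l = 0..max_lag, of a scalar Markov chain."""
    x = np.asarray(chain, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[: len(x) - lag], x[lag:]) / denom for lag in range(max_lag + 1)])


# Check on an AR(1) chain x_i = 0.9 x_{i-1} + e_i, whose true autocorrelation is 0.9**lag.
rng = np.random.default_rng(1)
x = np.zeros(100_000)
for i in range(1, len(x)):
    x[i] = 0.9 * x[i - 1] + rng.standard_normal()
acf = autocorrelation(x, 50)
print(np.round(acf[:4], 3))
```

The faster $\hat\rho(\ell)$ decays to zero, the more nearly independent the retained samples are, which is why these plots are used to judge mixing.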

Figure 3. The first row shows the Markov chains of the coefficients ,

and

with

. The second row shows the Markov chains of the coefficients

,

and

with

,

.

Figure 4. Trace plots of the negative log-likelihood and the corresponding autocorrelation functions for the kite-shaped scatterer.

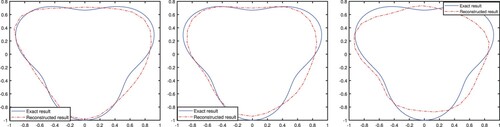

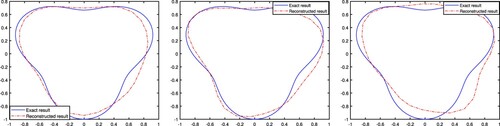

Example 5.2

A pear-shaped obstacle:

where

. In Figure 5, we present the reconstructions from full-aperture data with noise levels

. Clearly, the proposed method effectively reconstructs the shape of the scatterer for different noise levels, and the reconstruction from data with a smaller noise level is better than that from data with a larger noise level. Figure 6 shows the reconstructed scatterer from different limited-aperture data; the proposed method is also able to recover the boundary of the scatterer from limited-aperture data. In Figure 7, the Markov chains of the coefficients

,

and

are displayed for different noise levels and different limited apertures. As shown in Figure 7, these chains essentially reach a stationary state. Figure 8 displays the trace plots of the negative log-likelihood

and the corresponding autocorrelation functions. We observe that the autocorrelation functions decay to zero faster for a larger noise level or a smaller aperture.

6. Conclusion

In this work, we considered Bayesian inference for reconstructing the shape of the scatterer in an inverse transmission scattering problem. We chose a Gaussian measure as the prior and proved the well-posedness of the posterior distribution. The numerical results show the effectiveness of the proposed method. In future work, we would like to use the variational Gaussian approximation instead of sampling methods to extract information about the posterior distribution. Moreover, the Bayesian method can be developed for more complex models, such as transmission eigenvalue problems and elastic and electromagnetic scattering problems.

Acknowledgments

This work is supported by the National Natural Science Foundation of China under grants No.11771068 and No.11501087.

Disclosure statement

No potential conflict of interest was reported by the author(s).


References

- Colton D, Kress R. Inverse acoustic and electromagnetic scattering theory. 2nd ed. Berlin: Springer-Verlag; 1998. (Applied mathematical sciences).

- Hohage T, Schormann C. A Newton-type method for a transmission problem in inverse scattering. Inverse Probl. 1998;14:1207–1227.

- Lee KM. Inverse transmission scattering problem via a Dirichlet-to-Neumann map. Eng Anal Bound Elem. 2019;101:214–220.

- Ghosh Roy DN, Warner J, Couchman LS, et al. Inverse obstacle transmission problem in acoustics. Inverse Probl. 1998;14:903–929.

- Colton D, Coyle J, Monk P. Recent developments in inverse acoustic scattering theory. SIAM Rev. 2000;42:369–414.

- Colton D, Kirsch A. A simple method for solving inverse scattering problems in the resonance region. Inverse Probl. 1996;12:383–393.

- Colton D, Piana M, Potthast R. A simple method using Morozov's discrepancy principle for solving inverse scattering problems. Inverse Probl. 1997;13:1477–1493.

- Yang J, Zhang B, Zhang R. A sampling method for the inverse transmission problem for periodic media. Inverse Probl. 2012;28:Article ID: 035004.

- Colton D, Haddar H, Piana M. The linear sampling method in inverse electromagnetic scattering theory. Inverse Probl. 2003;19:S105–S137.

- Dashti M, Stuart AM. The Bayesian approach to inverse problems. In: Handbook of uncertainty quantification. Cham: Springer; 2017.

- Kaipio JP, Somersalo E. Statistical and computational inverse problems. New York: Springer-Verlag; 2005. (Applied mathematical sciences).

- Stuart AM. Inverse problems: A Bayesian perspective. Acta Numer. 2010;19:451–559.

- Bui-Thanh T, Ghattas O. An analysis of infinite dimensional Bayesian inverse shape acoustic scattering and its numerical approximation. SIAM/ASA J Uncertain Quantification. 2014;2:203–222.

- Huang J, Deng Z, Xu L. Bayesian approach for inverse interior scattering problems with limited aperture. Appl Anal. 2020;1–14. https://doi.org/10.1080/00036811.2020.1781828.

- Li Z, Deng Z, Sun J. Extended-sampling-Bayesian method for limited aperture inverse scattering problems. SIAM J Imag Sci. 2020;13:422–444.

- Li Z, Liu Y, Sun J, et al. Quality-Bayesian approach to inverse acoustic source problems with partial data. SIAM J Sci Comput. 2021;43:A1062–A1080.

- Liu J, Liu Y, Sun J. An inverse medium problem using Stekloff eigenvalues and a Bayesian approach. Inverse Probl. 2019;35:Article ID: 094004.

- Wang Y. Seismic inversion: theory and applications. Hoboken: John Wiley and Sons; 2016.

- Wang Y, Ma F, Zheng E. Bayesian method for shape reconstruction in the inverse interior scattering problem. Math Probl Eng. 2015;2:1–12.

- Bui-Thanh T, Ghattas O, Martin J, et al. A computational framework for infinite-dimensional Bayesian inverse problems part I: the linearized case, with application to global seismic inversion. SIAM J Sci Comput. 2013;35:A2494–A2523.

- Cui T, Law KJH, Marzouk YM. Dimension-independent likelihood-informed MCMC. J Comput Phys. 2016;304:109–137.

- Martin J, Wilcox LC, Burstedde C, et al. A stochastic Newton MCMC method for large-scale statistical inverse problems with application to seismic inversion. SIAM J Sci Comput. 2012;34:A1460–A1487.

- Petra N, Martin J, Stadler G, et al. A computational framework for infinite-dimensional Bayesian inverse problems, part II: Stochastic Newton MCMC with application to ice sheet flow inverse problems. SIAM J Sci Comput. 2014;36:A1525–A1555.

- Archambeau C, Cornford D, Opper M, et al. Gaussian process approximations of stochastic differential equations. In: JMLR: Workshop and Conference Proceedings, Bletchley Park, UK; 2007; 1:1–16.

- Arridge SR, Ito K, Jin B, et al. Variational Gaussian approximation for poisson data. Inverse Probl. 2018;34:Article ID: 025005.

- Challis E, Barber D. Gaussian Kullback-Leibler approximate inference. J Mach Learn Res. 2013;14:2239–2286.

- Cotter SL, Roberts GO, Stuart AM, et al. MCMC methods for functions: modifying old algorithms to make them faster. Statist Sci. 2013;28:424–446.

- Hsiao GC, Xu L. A system of boundary integral equations for the transmission problem in acoustics. Appl Numer Math. 2011;61:1017–1029.

- Hsiao GC, Wendland WL. Boundary integral equations. Berlin: Springer-Verlag; 2008.

- Hairer M. Introduction to stochastic PDEs. 2009. arXiv:0907.4178.