ABSTRACT

This paper develops a critical perspective on the use of conversational agents (CAs) with children at home. Drawing on interviews with eleven parents of pre-school children living in Norway, we illustrate the ways in which parents resisted the values epitomised by CAs. We problematise CAs’ attributes in light of parents’ ontological perceptions of what it means to be human and outline how their attitudes correspond to Bourdieu’s [1998a. Acts of Resistance. New York: New Press] concept of acts of resistance. For example, parents saw artificial conversation designed for profit as a potential threat to users’ autonomy and the instant gratification of CAs as a threat to children’s development. Parents’ antecedent beliefs map onto the ontological tensions between human and non-human attributes and challenge the neoliberal discourse by demanding freedom and equality for users rather than productivity and economic gain. Parents’ comments reflect the belief that artificial conversation with a machine inappropriately and ineffectively mimics a nuanced and intimate human-to-human experience in service of profit motives.

Conversation is an exchange of minds. When people converse, they share their thoughts, they provide information to each other, and thus contextualise an observed event or phenomenon. Conversation is essential for facilitating learning and maintaining social relationships (Howe et al. 2019), and ultimately, for healthy communities and effective civic lives. Conversation is increasingly mediated by, or directed to, high-speed, multi-channel and multimedia conversational partners. For example, when children converse with each other they use social media platforms such as Snapchat (Piwek and Joinson 2016), and when they play games, they communicate through avatars and digital story characters (Kafai, Fields, and Cook 2010). While human face-to-face conversations occur through the engagement of all six senses (vision, hearing, touch, taste, olfaction and proprioception), digital conversations are currently limited to one or two modalities. In this study, we examine experience with Conversational Agents (CAs) that rely on the audio modality only.

Conversational agents

CAs are variously referred to in the literature, including with terms such as voice assistants, voice-assisted technology, smart speakers, and virtual assistants. We adapt the term conversational agents from human–computer interaction studies and define CAs as ‘natural language interaction interfaces designed to simulate conversation with a real person’ (Rubin, Chen, and Thorimbert 2010, 496). CAs rely on natural language processing and artificial intelligence to respond to voice commands. A voice command can trigger the CA to play music or an audiobook, to provide public information (e.g., announcing the weather forecast), or to take a private action (e.g., setting an alarm). Originally designed to increase the accessibility of screen interactions for disabled people, today’s CAs are designed to support learning and everyday interactions through information retrieval.

CAs are embedded in many commonly used commercial products. For example, iOS devices include the Siri voice assistant, and Android smartphones come with the Google Assistant. Smart speakers have also gained widespread adoption and are used as both home automation systems and as portable voice assistants shared by several family members (Lopatovska et al. 2019). Examples include the Amazon Alexa voice assistant embedded in the Amazon Echo and Echo Dot speakers and the Google Assistant embedded in Google Home. Voice assistants can also be embodied and take the form of robots, smart tangibles for adults, or smart toys for children. Typically studied as Internet of Things or Internet of Toys devices (see e.g., McReynolds et al. 2017), these CAs are part of connected systems that are increasingly common in modern households. As the name suggests, CAs are specifically designed to support voice-based interaction between humans and machines.

Families’ and children's use of CAs

Conversation is one of the most frequently studied forms of human communication, with studies examining the socio-cultural (e.g., Wegerif 1996) and multimodal nature of conversation (e.g., Jewitt, Bezemer, and O'Halloran 2016). Research concerned with the use of CAs in families has thus far mostly relied on the socio-cultural understanding of communication and has been predominantly framed in the ‘social ecology’ framework. Social ecology emphasises the societal reasons (socio-economic, cultural, political or historical) for positive and negative attitudes towards CAs’ perceived and actual attributes. For example, within a social ecology framework, Beneteau et al. (2020) studied the interaction of ten urban families living in the USA with the Echo Dot and organised their observations under the socio-cultural theme of access democratisation. CAs, with their reliance on speech rather than, for example, the writing mode, democratised ease of use and access to information for both children and parents in the study. Based on the ways in which the CAs fostered but also disrupted communication practices in the families, Beneteau et al. (2020) formulated design recommendations that could complement and augment parenting skills. In this and other studies with CAs, the parents reflected on, revealed, and revised their understanding of the role of conversation in children’s lives, and they projected this understanding onto the attributes of the CAs.

Other research has also examined families’ attitudes to derive design recommendations and implications for future CAs. For example, exploring the actual and perceived attributes of CAs designed for family co-use, Lin et al. (2021) encouraged 14 parents of 2- to 5-year-olds in the USA to use a robot for their children’s story time and subsequently interviewed them about their expectations regarding the robot’s skills. Families’ values of literacy education, family bonding, and habit cultivation were not always met by the limited social intelligence of the robot, leading to parents’ reservations regarding the robot’s role in the home. Correspondingly, Lin et al. (2021) recommend that designers consider the context of use and parents’ perceptions of a robot’s intelligence when designing the technologies to increase family acceptance.

We expand this rich literature with a different focus: we aim to understand the antecedent beliefs behind adults’ attitudes towards non-use of CAs at home. This focus is important from our critical technology design perspective, which aims to understand the factors that inhibit and resist CAs’ deployment in families. Our approach is motivated by the literature on technology resistance, critical technology studies in schools and the theoretical framework of Bourdieu’s Acts of Resistance.

Technology acceptance and resistance models

The Technology Acceptance Model (TAM) has been a dominant theory in technology, digital media and human–computer interaction studies since the 1980s and has significantly contributed to the evidence base in digital media design (e.g., Tsai, Wang, and Lu 2011). It has had a strong influence on the field, with applications in both quantitatively and qualitatively oriented studies that aimed to identify attitudes, viewpoints and beliefs towards the adoption and acceptance of various technologies, including CAs (see Chen, Li, and Li 2012 for an overview). The underlying concepts of TAM are rooted in theories of behavioural intentions, perceived behavioural control and behaviour prediction models (see Marangunić and Granić 2015 for a review). Davis (1986) first proposed to conceptualise acceptance of technologies along two dimensions: (1) perceived ease of use and (2) perceived usefulness. These two dimensions have been used to interpret attitudes, views and beliefs concerning diverse technologies introduced to families and classrooms since the 1980s. With more interactive media and more complex ecologies of media use, additional belief factors, adults’ attitudes toward technology use, contextual factors and measures of actual system usage (King and He 2006) were added to the model. This revised model has been applied to diverse technologies, including information systems in web-based learning (e.g., Gong, Xu, and Yu 2004), digital libraries in Africa, Asia, and Central/Latin America (Park et al. 2009) or digital learning tools such as interactive whiteboards (e.g., Önal 2017) or iPads in schools (Do et al. 2022), as well as numerous studies with CAs (e.g., Lovato and Piper 2015; Kory-Westlund and Breazeal 2019; Chocarro, Cortiñas, and Marcos-Matás 2021).

In parallel to the technology acceptance studies, a contrasting body of empirical and theoretical literature has evolved with an explicit focus on technology resistance. Although less numerous and less explicitly part of human–computer interaction studies, the technology resistance studies have aggregated a number of socio-cultural reasons for technology resistance. The evidence re-localises acceptance reasons at the opposite end of parents’ attitudes (Venkatesh and Brown 2001). Notably, researchers have found that novelty (and fear of the unknown), high cost and the rapid change of technology are key barriers to technology adoption in families (Cannizzaro et al. 2020).

Venkatesh and colleagues’ work on technology resistance (e.g., Venkatesh 2000; Venkatesh and Davis 2000) identified key variables that could extend and refine the TAM. Building on Venkatesh’s perspective, Laumer, Maier, and Eckhardt (2009) conceptualised the key reasons for resistance, and Laumer and Eckhardt (2010) proposed a Technology Resistance Model. The Technology Resistance Model is based on inverse propositions of TAM, i.e., perceived difficulty to use rather than perceived usefulness and the intention to resist a particular technology. Like TAM and other models of acceptance, technology resistance models have been subsequently revised and expanded with the intention to understand users’ intentions and to inform the design of technologies (Laumer and Eckhardt 2012).

In contrast to these models, the technology resistance model proposed by Cenfetelli (2004a) focuses on variables that are qualitatively different from acceptance perceptions. These perceptions can discourage technology use even though their absence does not encourage use (Cenfetelli 2004a), and are therefore additional, but not opposite, perceptions to acceptance attitudes. Positioned against the ‘positive outlook’ of user acceptance, satisfaction and innovation models, Cenfetelli (2004b) argues that the inhibitors of technology use are qualitatively different from technology acceptance beliefs and ought to be studied with different theoretical approaches. Our work acknowledges the significant predictive value of the Technology Acceptance and Resistance Models and Cenfetelli’s (2004a) useful provocation of reconceptualising the inhibitory factors of technology use. We build on this work and connect it to the perspective of critical studies of education and technology.

Critical technology studies

The Technology Acceptance and Resistance Models can be criticised for insufficient attention to the situational and contextual factors shaping users’ perceptions of technologies. In particular, the Technology Acceptance and Resistance Models have paid less attention to the disruptive role that technology has played in education, notably the negative consequences of technology adoptions and adaptations in public education systems (see for example Facer 2011; Lupton and Williamson 2017; Selwyn, Campbell, and Andrejevic 2022). The far-reaching consequences of commerce-driven technology development and deployment have been recently highlighted in studies of data governance (Hillman 2022) and criticised for pushing performativity agendas in schools based on digitised and quantified student outcomes (Daliri-Ngametua, Hardy, and Creagh 2022). These studies showed that significant technology investments do not justify a positivist outlook on technology acceptance and proposed that technology research should not only focus on ‘what works’ but also deeply and critically examine at what cost a technology might work for diverse types of learners (Macgilchrist, Potter, and Williamson 2021).

In this article, we aimed to critically engage with parents’ attitudes regarding CAs at home and better understand the antecedent reasons for any negative attitudes towards CAs in their children’s lives. In theorising the reasons for taken-for-granted assumptions in Technology Acceptance Models, we drew on Bourdieu’s (1998a, 1998b) theoretical framework of Acts of Resistance.

Acts of resistance: theoretical framework for the study

We consider Bourdieu’s comprehensive social theory as the most useful frame in identifying the social antecedents to adults’ beliefs regarding a new technology. In particular, we draw on the theoretical concept of active resistance against socio-politico-economic trends, which Bourdieu discussed in terms of active resistance against ‘doxas’ (a term adopted from Husserl 1973). Doxas are orthodoxies in social territories (Grenfell 2004), which can be understood as sites that can oppose oppression and provide a basis for questioning internalised norms that have become accepted as given truths in society. In his volume ‘Acts of Resistance’, Bourdieu described how neoliberalism became a doxa, in that it became accepted as the objective truth by individuals as well as by society (Bourdieu 1998b). He highlights, for example, how the neoliberal notion of progress has been universally described as always desirable. Yet, this progress is also part of ‘habitus’ (Bourdieu 1977), that is, the progress is part of investments in the established social order. Although the habitus exists objectively through economic and conceptual structures (King 2000), doxas are part of a person’s own habitus (Chopra 2003) and are thus worldviews that get reproduced in symbols and resources used by individuals.

Bourdieu’s epistemic frame is useful for conceptualising what comes to the surface when CAs provoke technology resistance. To understand parents’ attitudes from this perspective, we need to go beyond themes relevant for anticipated or actual use and delve deeper into ontologies, or the cognitive belief systems that individuals hold about realities. CAs epitomise ontologies about what it means to be human and what it means to be a human using technologies in a modern society, which we frame as personal and social ontologies.

Personal ontologies

With artificially intelligent technologies such as CAs, people can have ontologies that allow them to distinguish between humanoid and non-humanoid attributes, and that thus provide insights into individuals’ understanding of what it means to be human. For personified robots, Severson and Carlson (2010) proposed that children might perceive them as ‘possessing a unique constellation of social and psychological attributes that cut across prototypic ontological categories’ (1101). CAs are different in that they do not have a human appearance and, with their reliance on audio communication, are restricted in semiotic meaning-making. As such, the technologies not only extend but also contradict natural human conversations.

Social ontologies

Attitudes and individuals’ dispositions towards specific behaviours can be explained by the situations and contexts in which they occur (Gilbert and Malone 1995). The zeitgeist of the modern societies for which CAs are developed is that of the neoliberal economy, which, from Bourdieu’s (1998a) perspective and the critical technology perspective adopted in this article, is exploitative at its roots. Given neoliberalism’s commitment to the free market as a self-regulating force (independent of government interference), the ideal learner is an autonomous, rational and free individual. Yet, not all learners have, or can have, these characteristics. Education that follows the neoliberal economy positions learners as economic entrepreneurs, ‘who are tightly governed and who, at the same time, define themselves as free’ (Davies and Bansel 2007, 249). The push for learning is not driven by a desire for social good but rather by the expectation that all individuals increase their market value and be responsible for the human capital they add to the job market. ‘Through discourses of inevitability and globalisation, and through the technology of choice, responsibilized individuals have been persuaded to willingly take over responsibility for areas of care that were previously the responsibility of government’ (ibid., 251).

Neoliberalism’s tendency to commodify and fragment knowledge has been discussed in relation to formal education (e.g., Selwyn 2016) but not yet in studies of CAs, a gap addressed by our study.

Study aims and research questions

In this paper, we report on interview data with parents of pre-school children who discussed their existing or possible future use of CAs at home. Each family represents a subculture within a larger culture (Gillis 1997) and through their cultures, they practise or limit the societal doxas (Pang, Macdonald, and Hay 2015). Unlike previous literature on CAs, our analysis aimed to examine the antecedent beliefs underlying parents’ inhibition of, and resistance to, CA use. We asked: How do personal and social ontologies manifest in parents’ accounts of CAs? Do human and neoliberal values constitute sites of resistance or acceptance of CAs?

Methods

The data we draw on in this article were collected as part of a larger international project designed to examine parents’ attitudes towards CAs for use with their pre-school-aged children in Japan, the USA and Norway. The project was couched in human–computer interaction terms with the commitment to derive design recommendations that address the benefits and shortcomings of current CA design. In this article, we draw only on data obtained during the interviews conducted in Norway.

Study participants

Eleven parents of children aged between 3 and 5 years living in Norway participated in the study. The parents were recruited through university mailing lists and advertisement spaces. We did not require that parents have CAs at home or be active users of the technology. Our invitation letter was deliberately phrased in an open-ended way, explaining that we were interested in understanding parents’ views on the technologies and that we were inviting their honest reflections and reactions. Table 1 lists the ages and family composition for each interviewed parent, along with information on which CA(s) they use (if any) and for what purpose(s).

Table 1. Interviewees’ characteristics in relation to children and CA ownership and use.

Study procedure

The interviews followed an interview protocol that was developed by Author 2 in their previous study with US parents and adapted to the Norwegian context through translation and contextualisation of the questions. A project team member established a time suitable for each parent and conducted one-to-one interviews through Zoom. The Zoom video files were deleted immediately after each interview, and the audio recordings were professionally transcribed in Norwegian and analysed in the original language. The transcripts were coded using a theoretically driven thematic analysis (Clarke, Braun, and Hayfield 2015), which proceeded in three broad steps of data familiarisation, theme development and coding, following the steps described by Braun and Clarke (2006). We used sites of resistance and socio-personal norms as ‘sensitizing concepts’ when analysing the data (Tracy 2019). The development of themes was flexible and organic, consisting of the researchers’ deep engagement with the data. The epistemological approach to data was contextualist and critical realist, in that we aimed to understand the parents’ ‘experiences as lived realities that are produced, and exist, within broader social contexts’ (Terry et al. 2017, 21). Selected quotes were translated into English and are presented in Findings.

Ethical considerations

The study was approved by the Norwegian Centre for Research Data (NSD) and followed standard ethical procedure for educational research: the participants were free to withdraw their consent to participate at any point in the study and were offered the possibility to discuss the nature of the research with the principal investigators (a possibility taken up by one of the participants). The parents were informed about the purpose of the study. An earlier draft of this article was shared with them for comment, and the feedback of one parent was incorporated into the final version of the manuscript. All data were anonymised and handled in line with the Norwegian personal data protection laws.

Findings

Personal ontologies

Cognitive–affective deficiencies in CAs

This theme conveys the parents’ perception of communication as a human attribute tapping into cognitive and affective functions, which a machine cannot replicate. The parents also expressed the concern that frequent use of CAs could negatively influence how humans talk to each other.

So what I thought of immediately is that of being able to convey thoughts and feelings and talk with empathy. This is something I cannot quite imagine that a mobile or computer will be able to achieve. There is something that is a little lost in the empathy piece. If they [children] hear a somewhat mechanical voice that over time becomes in many ways part of communication, then I think about whether it can affect the way how you speak yourself, and how you manage to convey things and such. (Participant nr. 5)

It's almost a bit silly, because an assistant will never be able to create the situations you get into. Imagine if the assistant had said that if you do “it”, you get “that”, then you also get the reality that this does not happen as the assistant had intended. You never know, people may give you different responses to the same request. Then it is very difficult if you interpret everything literally. I do not think you can compare that, I do not think you can prepare a child’s social skills with such an assistant. I believe that they must have social situations, and we must support them in those situations when the situations arise. Because we cannot know in advance what happens between toddlers and school children, there is so much variety. (Participant nr. 4)

You have to say “thank you”, or “please” or something like that; building and developing these habits is really worth their weight in gold. At the same time, the idea with the prosocial is that it should not be just a habit. There must also be a thought, a feeling, an intention behind it. The question then is whether that intention and feeling can be a bit absent when it's a technological gadget you relate to. It is really just a system that expects something from you versus that there are other people who get it with the expression around what you are saying. (Participant nr. 9)

Intentional boundaries on socio-cultural conversation

The key theme in parents’ accounts of the non-human attributes of CAs was tied to CAs’ utilitarian value. The few parents in our sample who had a CA at home and actively used it commented that they deployed the technology for information retrieval:

… like when wondering what the weather is like. They [family members] want to know what the degrees are in London. These are the kinds of things they use it for. A bit like fact-checking, things they kind of wonder about. (Participant nr. 6)

We can quickly forget the messages between us when it comes to swimming, and whether the ballet starts at four, or a quarter past four, and things like that. So yes, for logistics and for the practical things, but when it goes as deep as with social skills, then no, I do not think I would have used such an assistant. (Participant nr. 4)

The parents acknowledged machines’ ability to support language stimulation and execution of practical tasks, but they rejected the idea that CAs could contribute to conversations that require complex, non-standardised and flexible language use.

So if you have to make it fit into exactly that pattern to be understood, for the assistant to do what you want it to do or what it is then it can be frustrating, but it can also go over the, in a way, dynamic development of the language to some extent. (Participant nr. 9)

But that assistant could also have said “remember to put on a warm jacket today; it's cold outside,” or “put on a rain jacket; it's raining.”

No, no, no. They [the children] have to look out the window and judge for themselves, I do not want them to need a voice that says what to do. Because that's what we do as parents, we say it, and in the end we want them to consider it for themselves.

Thus, parents found value in CAs but wanted to maintain boundaries that would confine devices to operational arenas within family life and exclude them from social and personal conversational spaces. Connecting this finding to Bourdieu’s critique of neoliberal values, we notice that parents are resisting the automatisation and monetisation of personal and social aspects of life and refusing to allow CAs to intrude on that part of life. Individual responsibility and free choice are presented by the neoliberal frame as meritocratic sources of progress and growth that can be automatised. There was a sense in parents’ accounts that this is not what they want for their children, and they indirectly critiqued technological progress that promotes such values. Naubauer criticised the ‘emancipatory informational-neoliberalism, specifically the rise of flexible, globalised production chains made possible through new ICTs and integrated global markets.’ Brown (2015) further adds to the debate by describing neoliberal changes as a ‘stealth revolution’ that positioned students as consumers and education as training, replacing the ideal of education as a process that forms sovereign citizens through free knowledge provision. Our findings resonate with these critical accounts of neoliberal values embedded in conversational agents’ design.

Lack of multisensory dimensions to conversation

Another site of tension appeared in relation to the difference between human and non-human ways of communicating. On the one hand, the parents regretted that CAs were not multisensory; on the other hand, they appreciated the focused attention on voice as a primary, quick and effective mode of communication:

We use it when we are looking for something, and it's mostly about efficiency. That we speak into it instead of write something. (Participant nr. 1)

If the assistant asks the right questions, then of course [it’s helpful]. And [if it is] able to discuss with children, so that they do not just ask questions, but also give answers. It's kind of like reflecting on something together.

True, if you dehumanize, then you may not have the same ability to be empathetic in a way. If one is to learn morality, one must be touched in a way. And I think you do that more by seeing faces and seeing what unfolds. (Participant nr. 6)

In other words, it is mimicry that happens in microseconds in our faces that we interpret without necessarily thinking about it, as we get reflected in the other in a way. And in a way it cannot be replaced. (Participant nr. 4)

Social ontologies

Concerns about instant gratification and dependence

The Instant Gratification theme refers to instances in parents’ accounts that touched on the readiness, accessibility, and constant ability of CAs to respond to human commands. This continuous 24/7 availability is a non-humanoid capability, which could be accessed by any human, including young children who might struggle to access other interfaces.

However, the parents resisted this discourse in several ways. They commented, for example, that CAs should not always give children what they want and that the perpetual compliance and responsiveness go against their parenting values and boundary setting:

The other thing that I think is negative about this is; you always get what you want. In other words, these are made so that they will fulfil our wishes … don’t you think? That “I want to do this, turn on the light”, “turn on the music”, or “find that TV channel”. And that's really not how it works in real life. (Participant nr. 10)

I'm very sceptical about it, not directly because of the technology, but because of those who own the technology and implement it. Like if there is something that captures everything that is going on around a child and in a house … . They collect data about you continuously. (Participant nr. 9)

I think it is a little bit scary. That you become addicted to something that can remind you of things. You probably do not need someone who says it all the time, the idea is that you should grow up with the value that you should be kind to others. And if you are going to become dependent on someone to remind you of that, we are actually creating a need that is not necessary. So the question is, how much should you have of such reminders? (Participant nr. 4)

Against this doxa, one has to try to defend oneself, I believe, by analysing it and trying to understand the mechanisms through which it is produced and imposed. But that is not enough, although it is important, and there are a certain number of empirical observations that can be brought forward to counter it. (31).

Disempowering families and undermining their autonomy

There was a clear desire among parents to take control, assume autonomy and use technologies that reflect their family and cultural values: ‘Is it [the technology] something you can make yourself or is it something that is made in advance. And in that case; For whom is it made? For which kind of culture?’ (Participant nr. 6)

Parents also felt that a heavy-handed intervention could pose a threat to children’s own agency and freedom, as captured by Participant nr. 9:

But for the child's own sake, it is limited for how often they want to receive corrective messages. Whether it comes from parents or friends or a virtual assistant. So the third time I say “now you have to be kind” or “or remember the jacket” it gets annoying for the kids, and if the assistant pushes on right away it is just even more on top of all the corrections they already receive.

I'm also very afraid, in relation to the digital, that one should be stimulated too much. That one should get too many inputs. There is too little that comes from within … It is conceivable that it is better to create things yourself and come up with things with the risk of forgetfulness than it is that everything should be stimulated from the outside so you remember to do everything correctly. (Participant nr. 11)

… to somehow feel like you are being monitored all the time. And someone says to you: remember that, remember that, you have to say thank you for the food, you have to put on that … I do not know. It feels a bit like … , it feels a bit strange. (Participant nr. 10)

Importantly, parents saw the erosion of children’s freedom as a potential threat to their development and wellbeing, emphasising that:

… the worry is that the language becomes more rigid. That the freedom to … that they [the children] cannot develop naturally … So if you have to make it fit into that exact pattern to be understood, for the assistant to do what you want it to do or whatever it is, it can be frustrating, but it can also affect the, in a way, dynamic development of the language to a certain degree. (Participant nr. 9)

Discussion

The advances in AI design and the increased availability of smart technologies on the global market require a consideration of the ontologies around which reality is co-constructed with new technologies. We examined the antecedents to parents’ attitudes towards CAs within the broader arenas of human and social ontologies.

The parents in our interviews outlined what Porcheron et al. (2018) explicitly argued, namely that ‘conversational’ agents do not live up to their name because the devices cannot carry on a true conversation. The parents outlined how the capabilities of CAs fall short of the attributes of conversation between humans. CAs rely only on speech-based conversation, whereas human conversations involve a fully embodied language and the orchestration of multimodal semiotic signs, including subtle yet important modes such as ‘slight shifts in posture; gaze; an outstretched hand; joint looking at the screen’ that get activated in contemporary conversations (Kress 2011, 240). Furthermore, conversation is generated and mediated in a context, which manifests through the socio-personal background individuals bring to the exchange (Littleton and Mercer 2013). Parents did not perceive CAs to facilitate such conversations and highlighted the importance of socio-cultural cues in facilitating the establishment of what conversation scholars refer to as ‘common ground,’ that is, shared understanding and ‘a common belief about what is accepted’ (Stalnaker 2002, 25) that emerges during conversations between people.

The parents perceived CAs as lacking the critical, reflexive, and moral capacities of human beings. They did not perceive this design shortcoming as an opportunity for further development but rather as a reason for rejecting the technology. In contrast, parents valued the machine-like attributes of CAs and their utilitarian functionalities, which they saw as useful for families. This clear delineation of human versus non-human attributes corresponds to previous work on the lived experiences of modern families, where digital media use has been repeatedly documented to be characterised by mixed acceptance and rejection intentions and behaviours.

For example, research shows that US parents have mixed views about their children’s use of screens (Strouse, Newland, and Mourlam 2019) and British parents support but also strictly limit children’s digital reading habits (Kucirkova and Littleton 2016). Kucirkova and Flewitt (2020) analysed interview and observation data of British families using digital books and concluded that parents conceptualised the media along three dichotomous themes of trust/mistrust, agency/dependency and nostalgia/realism. On the one hand, the parents trusted the artificial intelligence of the technology in providing fact-based learning moments for the child and, on the other hand, they mistrusted the AI in monitoring and surveilling their children. The same technology attribute can thus elicit contrasting and conflicting attitudes, which can be reconciled with what Lin et al. (2021) referred to as a ‘cognitive dissonance’ between the facilitating and inhibitory reasons for technology. In Lin et al.’s (2021) observations, parents facilitated robots’ use in family storytelling at home, and at the same time, they reported being uneasy about situations where robots replace humans in family storytelling times: ‘Some parents seemed disturbed by the thought of a robot guiding a child through emergent, uncertain states of development. Their concern runs counter to the expectation that a storytelling robot serve as parent double’ (9).

The study participants’ social ontologies led them to reject and resist CAs, which speaks to the neoliberal, market-related values that encompass the ideals of rational market exchange and overt monetisation of knowledge and skills. Bourdieu (1989) discussed four types of capital: economic, cultural, symbolic and social capital. Capital can be understood as the accumulation of resources or goods that reproduce and over time become a powerful tool. Capital can be symbolic or material and both kinds of capital give power to individuals. In a neoliberal society, economic capital is the most prominent cultural production that individuals possess and exchange. The economic capital owned by the designers and developers of these technologies includes the skills these developers have (for example, coding knowledge), which position them on top of the human capital hierarchy (see Bourdieu 1989). CAs are produced by companies that have accrued enormous capital and have become economic elites who pose a threat to the autonomy of states and individuals (Livingstone and Pothong 2022). In this context, a commonly perceived and real risk is a loss of agency – a theme apparent in our data when parents referred to the design of CAs and the lack of cultural responsiveness and possibility to self-design them.

The parents in Lin et al.’s (2021) interviews referred to insufficiently intelligent robots and predicted that making them more intelligent would lead to greater acceptance among parents. However, reflecting on the same findings from a critical technology perspective, we propose that viewing CAs’ inferior intelligence as a shortcoming in design reflects the neoliberal ideal of perpetual innovation, expansion and growth. In the neoliberal free market economy, rapid prototype development is typical as the market values large-scale production rather than precision in design. Indeed, as argued before, perpetual innovation is part of neoliberal tenets where market economics push for fast product generation and exchange (Reynolds and Szerszynski 2012). The market allocates resources and opportunities because the market is considered the most efficient mechanism for capital exchange (Olssen and Peters 2005): ‘This new modality of social engineering positions human beings and knowledge as management resources exploited to obtain exchangeable and marketable value’ (Moltó Egea 2014, 268). Bourdieu openly criticised the neoliberal ideology for its damage to social relations and cited neoliberal economic science as the main theoretical weakness of a neoliberal movement (Bourdieu and Darbel 1966). The discourse embraced by neoliberalism is that of globalisation, or ‘mondialization’ (Mitrović 2005), and in the context of learning, that of educational policies (Lingard, Rawolle, and Taylor 2005), which reduce the autonomy of shared responsibility. The by-product of the global economy operating under neoliberalism in relation to CAs is thus a push away from a common good concern to a private profit concern. The negative effects of the global neoliberal economy are most acutely felt by disadvantaged groups, which was not directly highlighted by our data but was alluded to by the parents.

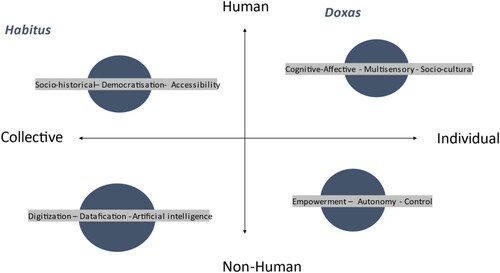

Our findings indicate that parents were not necessarily looking for more intelligent and capable CAs and that the desire for more intelligent systems reflects neoliberal values rather than families’ needs and desires. We argue that the values exposed in parents’ accounts reframe the role of CAs as learning and social agents around the societal ideals of perpetual availability, emphasis on products versus processes and individual-centred meritocracy. If we accept neoliberalism as a doxa, we can see how CAs frame the learning process as a ‘market form’ that has a calculable economic value, and thus can be exchanged on the market. CAs are not human teachers; they are products which are being constantly innovated as part of market economics, which, on the surface, favour the habitus of collective practices but in reality advance individual dispositions. These themes and their mapping on doxas and habitus, together with the two poles of the continuum of human and non-human and personal and social ontologies, are summarised in Figure 1.

Figure 1. Acts of resistance captured through parents’ ontologies about CAs in families.

Study limitations and implications

There is a close, empirically demonstrated connection between users’ antecedent beliefs, perceptions and attitudes and actual behaviour (Ajzen and Fishbein 1975). Nevertheless, it would be important to examine how actual use of CAs maps onto the concerns and preferences expressed by the parents. The empirical part of our study drew on small-scale interviews with a homogeneous group of parents, and it would be interesting to examine how family ontologies vary in relation to diverse adults’ and children’s attributes. In particular, diverse families’ relationships with technologies at home (e.g., whether they generally restrict or facilitate use, whether they are first or late adopters) might play a role.

Overall, our study expands the conversation about resistance reasons and inhibitory factors concerning modern technologies in society. Addressing ontological questions in the context of CAs is a new research area that carries implications for artificial intelligence studies and can contribute to expanding the horizons of learning technology studies.

Conversational agents collect authentic and, at times, intimate data that reflect family values, habits, routines and interests. These unique data could be mined to understand children’s language development, linguistic and cultural patterns or identify family structures and dynamics. However, the data of commercially produced conversational agents are aggregated for commercial uses and researchers are missing out on insights that could fill empirical gaps and expand theoretical models. Our study contributes a conceptual extension to Bourdieu’s and technology resistance theories with a documentation of the ways in which neoliberal thinking permeates CAs’ design and gets reflected in parents’ attitudes.

Acknowledgements

The authors would like to thank the participating parents and the Norwegian Research Council, CIFAR and Jacobs Foundation for supporting this work. We thank Dr Janik Festerling and Professor Iram Siraj for their comments on an earlier version of this manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Ajzen, I., and M. Fishbein. 1975. “A Bayesian Analysis of Attribution Processes.” Psychological Bulletin 82 (2): 261–277. doi:10.1037/h0076477.

- Beneteau, E., A. Boone, Y. Wu, J. A. Kientz, J. Yip, and A. Hiniker. 2020. “Parenting with Alexa: Exploring the Introduction of Smart Speakers on Family Dynamics.” Paper Presented at the Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems.

- Bourdieu, P., and A. Darbel. 1966. L’amour de l’art, les musées d’art et leur public. Paris: Minuit.

- Bourdieu, P. 1977. “The Economics of Linguistic Exchanges.” Social Science Information 16 (6): 645–668.

- Bourdieu, P. 1989. “Social Space and Symbolic Power.” Sociological Theory 7 (1): 14–25. doi:10.2307/202060.

- Bourdieu, P. 1998a. Acts of Resistance. New York: New Press.

- Bourdieu, P. 1998b. “Neo-liberalism, the Utopia (Becoming a Reality) of Unlimited Exploitation.” In Acts of Resistance: Against the Tyranny of the Market, 94–105. New York: The New Press.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. doi:10.1191/1478088706qp063oa.

- Brown, W. 2015. Undoing the Demos. Neoliberalism’s Stealth Revolution. Boston: MIT Press.

- Cannizzaro, S., R. Procter, S. Ma, and C. Maple. 2020. “Trust in the Smart Home: Findings from a Nationally Representative Survey in the UK.” PLoS One 15 (5): e0231615. doi:10.1371/journal.pone.0231615.

- Cenfetelli, R. 2004a. “An Empirical Study of the Inhibitors of Technology Usage.” ICIS 2004 Proceedings, 13.

- Cenfetelli, R. T. 2004b. “Inhibitors and Enablers as Dual Factor Concepts in Technology Usage.” Journal of the Association for Information Systems 5 (11): 472–492. doi:10.17705/1jais.00059.

- Chen, S.-C., S.-H. Li, and C.-Y. Li. 2012. “Recent Related Research in Technology Acceptance Model: A Literature Review.” Australian Journal of Business and Management Research 1 (9): 124. doi:10.52283/NSWRCA.AJBMR.20110109A14.

- Chocarro, R., M. Cortiñas, and G. Marcos-Matás. 2021. “Teachers’ Attitudes Towards Chatbots in Education: A Technology Acceptance Model Approach Considering the Effect of Social Language, Bot Proactiveness, and Users’ Characteristics.” Educational Studies, 1–19.

- Chopra, R. 2003. “Neoliberalism as Doxa: Bourdieu's Theory of the State and the Contemporary Indian Discourse on Globalization and Liberalization.” Cultural Studies 17 (3-4): 419–444. doi:10.1080/0950238032000083881.

- Clarke, V., V. Braun, and N. Hayfield. 2015. “Thematic Analysis.” In Qualitative Psychology: A Practical Guide to Research Methods, edited by Jonathan A. Smith, 222–248. London: SAGE.

- Daliri-Ngametua, R., I. Hardy, and S. Creagh. 2022. “Data, Performativity and the Erosion of Trust in Teachers.” Cambridge Journal of Education 52 (3): 391–407. doi:10.1080/0305764X.2021.2002811.

- Davies, B., and P. Bansel. 2007. “Neoliberalism and Education.” International Journal of Qualitative Studies in Education 20 (3): 247–259. doi:10.1080/09518390701281751.

- Davis, F. D. 1986. “A Technology Acceptance Model for Empirically Testing New End-user Information Systems: Theory and Results.” Doctoral diss., MIT Sloan School of Management.

- Do, D. H., S. Lakhal, M. Bernier, J. Bisson, L. Bergeron, and C. St-Onge. 2022. ““More than Just a Medical Student”: A Mixed Methods Exploration of a Structured Volunteering Programme for Undergraduate Medical Students.” BMC Medical Education 22 (1): 1–12. doi:10.1186/s12909-021-03037-4.

- Facer, K. 2011. Learning Futures: Education, Technology and Social Change. London: Routledge.

- Festerling, J., and I. Siraj. 2020. “Alexa, What are You? Exploring Primary School Children’s Ontological Perceptions of Digital Voice Assistants in Open Interactions.” Human Development 64 (1): 26–43. doi:10.1159/000508499.

- Gilbert, D. T., and P. S. Malone. 1995. “The Correspondence Bias.” Psychological Bulletin 117 (1): 21.

- Gillis, J. R. 1997. A World of Their Own Making: Myth, Ritual, and the Quest for Family Values. Harvard: Harvard University Press.

- Gong, M., Y. Xu, and Y. Yu. 2004. “An Enhanced Technology Acceptance Model for Web-Based Learning.” Journal of Information Systems Education 15 (4).

- Grenfell, M. J. 2004. Pierre Bourdieu: Agent Provocateur. New York: A&C Black.

- Hillman, V. 2022. “Bringing in the Technological, Ethical, Educational and Social-Structural for a New Education Data Governance.” Learning, Media and Technology, 1–16.

- Howe, C., S. Hennessy, N. Mercer, M. Vrikki, and L. Wheatley. 2019. “Teacher–Student Dialogue During Classroom Teaching: Does it Really Impact on Student Outcomes?” Journal of the Learning Sciences 28 (4-5): 462–512. doi:10.1080/10508406.2019.1573730.

- Husserl, E. 1973. Experience and Judgement: Investigations in a Genealogy of Logic. New York: Routledge & Kegan Paul.

- Jewitt, C., J. Bezemer, and K. O'Halloran. 2016. Introducing Multimodality. London: Routledge.

- Kafai, Y. B., D. A. Fields, and M. S. Cook. 2010. “Your Second Selves.” Games and Culture 5 (1): 23–42. doi:10.1177/1555412009351260.

- King, A. 2000. “Thinking with Bourdieu Against Bourdieu: A ‘Practical’ Critique of the Habitus.” Sociological Theory 18 (3): 417–433. doi:10.1111/0735-2751.00109.

- King, W. R., and J. He. 2006. “A Meta-Analysis of the Technology Acceptance Model.” Information & Management 43 (6): 740–755. doi:10.1016/j.im.2006.05.003.

- Kory-Westlund, J. M., and C. Breazeal. 2019. “A Long-Term Study of Young Children's Rapport, Social Emulation, and Language Learning with a Peer-Like Robot Playmate in Preschool.” Frontiers in Robotics and AI 6: 81.

- Kress, G. 2011. “‘Partnerships in Research’: Multimodality and Ethnography.” Qualitative Research 11 (3): 239–260. doi:10.1177/1468794111399836.

- Kucirkova, N., and R. Flewitt. 2020. “Understanding Parents’ Conflicting Beliefs About Children’s Digital Book Reading.” Journal of Early Childhood Literacy, 1468798420930361. doi:10.1080/03004430.2018.1458718

- Kucirkova, N., and K. Littleton. 2016. The Digital Reading Habits of Children. A National Survey of Parents’ Perceptions of and Practices in Relation to Children’s Reading for Pleasure with Print and Digital Books, 380–387.

- Laumer, S., and A. Eckhardt. 2010. “Why do People Reject Technologies?–Towards an Understanding of Resistance to IT-Induced Organizational Change.” ICIS 2010 Proceedings, 151. https://aisel.aisnet.org/icis2010_submissions/151.

- Laumer, S., and A. Eckhardt. 2012. “Integrated Series in Information Systems.” Information Systems Theory 28: 63–86. doi:10.1007/978-1-4419-6108-2_4.

- Laumer, S., C. Maier, and A. Eckhardt. 2009. “Towards an Understanding of an Individual's Resistance to Use an Information System-empirical Examinations and Directions for Future Research.” DIGIT 2009 Proceedings, 9.

- Lin, C., S. Šabanović, L. Dombrowski, A. D. Miller, E. Brady, and K. F. MacDorman. 2021. “Parental Acceptance of Children’s Storytelling Robots: A Projection of the Uncanny Valley of AI.” Frontiers in Robotics and AI 8: 49.

- Lingard, B., S. Rawolle, and S. Taylor. 2005. “Globalizing Policy Sociology in Education: Working with Bourdieu.” Journal of Education Policy 20 (6): 759–777. doi:10.1080/02680930500238945.

- Littleton, K., and N. Mercer. 2013. Interthinking: Putting Talk to Work. London: Routledge.

- Livingstone, S., and K. Pothong. 2022. “Imaginative Play in Digital Environments: Designing Social and Creative Opportunities for Identity Formation.” Information, Communication & Society 25 (4): 485–501. doi:10.1080/1369118X.2022.2046128.

- Lopatovska, I., K. Rink, I. Knight, K. Raines, K. Cosenza, H. Williams, P. Sorsche, D. Hirsch, Q. Li, and A. Martinez. 2019. “Talk to Me: Exploring User Interactions with the Amazon Alexa.” Journal of Librarianship and Information Science 51 (4): 984–997. doi:10.1177/0961000618759414.

- Lovato, S., and A. M. Piper. 2015, June. ““Siri, is this you?” Understanding young children’s interactions with voice input systems.” In Proceedings of the 14th International Conference on Interaction Design and Children, 335–338.

- Lupton, D., and B. Williamson. 2017. “The Datafied Child: The Dataveillance of Children and Implications for Their Rights.” New Media & Society 19 (5): 780–794. doi:10.1177/1461444816686328.

- Macgilchrist, F., J. Potter, and B. Williamson. 2021. Shifting Scales of Research on Learning, Media and Technology. Vol. 46, pp. 369–376. London: Taylor & Francis.

- Marangunić, N., and A. Granić. 2015. “Technology Acceptance Model: A Literature Review from 1986 to 2013.” Universal Access in the Information Society 14 (1): 81–95.

- McReynolds, E., S. Hubbard, T. Lau, A. Saraf, M. Cakmak, and F. Roesner. 2017. “Toys That Listen: A Study of Parents, Children, and Internet-Connected Toys.” Paper Presented at the Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems.

- Mitrović, L. R. 2005. “Bourdieu's Criticism of the Neoliberal Philosophy of Development, the Myth of ‘Mondialization’ and the New Europe.” FACTA UNIVERSITATIS-Philosophy, Sociology, Psychology and History 4 (1): 37–49.

- Moltó Egea, O. 2014. “Neoliberalism, Education and the Integration of ICT in Schools. A Critical Reading.” Technology, Pedagogy and Education 23 (2): 267–283. doi:10.1080/1475939X.2013.810168.

- Olssen, M., and M. A. Peters. 2005. “Neoliberalism, Higher Education and the Knowledge Economy: From the Free Market to Knowledge Capitalism.” Journal of Education Policy 20 (3): 313–345. doi:10.1080/02680930500108718.

- Önal, N. 2017. “Use of Interactive Whiteboard in the Mathematics Classroom: Students’ Perceptions within the Framework of the Technology Acceptance Model.” International Journal of Instruction 10 (4): 67–86. doi:10.12973/iji.2017.1045a.

- Pang, B., D. Macdonald, and P. Hay. 2015. “‘Do I Have a Choice?’ The Influences of Family Values and Investments on Chinese Migrant Young People's Lifestyles and Physical Activity Participation in Australia.” Sport, Education and Society 20 (8): 1048–1064. doi:10.1080/13573322.2013.833504.

- Park, N., R. Roman, S. Lee, and J. E. Chung. 2009. “User Acceptance of a Digital Library System in Developing Countries: An Application of the Technology Acceptance Model.” International Journal of Information Management 29 (3): 196–209. doi:10.1016/j.ijinfomgt.2008.07.001.

- Piwek, L., and A. Joinson. 2016. ““What do They Snapchat About?” Patterns of use in Time-Limited Instant Messaging Service.” Computers in Human Behavior 54: 358–367. doi:10.1016/j.chb.2015.08.026.

- Porcheron, M., J. E. Fischer, S. Reeves, and S. Sharples. 2018. “Voice Interfaces in Everyday Life.” In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 1–12, April.

- Reynolds, L., and B. Szerszynski. 2012. “Neoliberalism and Technology: Perpetual Innovation or Perpetual Crisis.” Neoliberalism and Technoscience: Critical Assessments, 27–46.

- Rubin, V. L., Y. Chen, and L. M. Thorimbert. 2010. “Artificially Intelligent Conversational Agents in Libraries.” Library Hi Tech 28 (4): 496–522. doi:10.1108/07378831011096196.

- Selwyn, N. 2016. “Digital Downsides: Exploring University Students’ Negative Engagements with Digital Technology.” Teaching in Higher Education 21 (8): 1006–1021. doi:10.1080/13562517.2016.1213229.

- Selwyn, N., L. Campbell, and M. Andrejevic. 2022. “Autoroll: Scripting the Emergence of Classroom Facial Recognition Technology.” Learning, Media and Technology, 1–14.

- Severson, R. L., and S. M. Carlson. 2010. “Behaving as or Behaving as If? Children’s Conceptions of Personified Robots and the Emergence of a New Ontological Category.” Neural Networks 23 (8–9): 1099–1103. doi:10.1016/j.neunet.2010.08.014.

- Stalnaker, R. 2002. “Common Ground.” Linguistics and Philosophy 25 (5/6): 701–721. doi:10.1023/A:1020867916902.

- Strouse, G. A., L. A. Newland, and D. J. Mourlam. 2019. “Educational and Fun? Parent Versus Preschooler Perceptions and Co-use of Digital and Print Media.” AERA Open 5 (3): 233285841986108. doi:10.1177/2332858419861085.

- Terry, G., N. Hayfield, V. Clarke, and V. Braun. 2017. “The SAGE Handbook of Qualitative Research in Psychology.” The SAGE Handbook of Qualitative Research in Psychology 2: 17–36. doi:10.4135/9781526405555.n2.

- Tracy, S. J. 2019. Qualitative Research Methods: Collecting Evidence, Crafting Analysis, Communicating Impact. London: John Wiley & Sons.

- Tsai, C. Y., C. C. Wang, and M. T. Lu. 2011. “Using the Technology Acceptance Model to Analyze Ease of Use of a Mobile Communication System.” Social Behavior and Personality: An International Journal 39 (1): 65–69. doi:10.2224/sbp.2011.39.1.65.

- Venkatesh, V. 2000. “Determinants of Perceived Ease of Use: Integrating Control, Intrinsic Motivation, and Emotion into the Technology Acceptance Model.” Information Systems Research 11 (4): 342–365. doi:10.1287/isre.11.4.342.11872.

- Venkatesh, V., and S. A. Brown. 2001. “A Longitudinal Investigation of Personal Computers in Homes: Adoption Determinants and Emerging Challenges.” MIS Quarterly 25: 71–102. doi:10.2307/3250959.

- Venkatesh, V., and F. D. Davis. 2000. “A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies.” Management Science 46 (2): 186–204. doi:10.1287/mnsc.46.2.186.11926.

- Wegerif, R. 1996. “Using Computers to Help Coach Exploratory Talk Across the Curriculum.” Computers & Education 26 (1-3): 51–60. doi:10.1016/0360-1315(95)00090-9.