ABSTRACT

Extended reality technologies – mixed, augmented, and virtual reality, and future-related technologies – are rapidly expanding in many fields, with underexplored potentials for multimodal composition in digital media environments. This research generates new knowledge about the novel wearable technology – smart glasses – to support elementary students’ multimodal story authoring with 3D virtual objects or holograms. The researchers and teachers implemented learning experiences with upper elementary students from three classrooms to compose and illustrate written narratives before retelling the story with Microsoft HoloLens 2 smart glasses, selecting 3D holograms to illustrate the settings, characters, and events from the 3D Viewer software. The findings analyse how smart glasses supported students’ multimodal composition, and relatedly, the new modal resources available to students wearing smart glasses to compose 3D stories. The findings have significance for educators and researchers to understand and utilise the multimodal affordances of augmented and mixed reality environments for composing and storytelling.

Introduction

Literacy and digital media practices, such as narrative composition, are now often digitally mediated by new technologies, producing more than digital substitutes of print texts (Mills and Stone 2020). Additionally, extended reality (XR) technologies offer radically different features than other digital technologies to communicate with audiences in ways that were previously inconceivable (Mills, Scholes, and Brown 2022a). This research examines the potential of smart glasses, referred to either as mixed reality devices (see Smole 2022) or as augmented reality smart glasses (ARSG) to differentiate them from smartphone-based augmented reality (see Ro, Brem, and Rauschnabel 2018).

The research presented here examines the use of smart glasses with school students to retell their written stories interactively, using 3D holograms as virtual story illustrations assembled and viewed within the real classroom. It is predicted that over the next decade smart glasses will become the next leading mobile technology for mixed and augmented reality after the smartphone (Lee and Hui 2018), becoming more affordable and enabling widescale utilisation (Southgate, Smith, and Cheers 2016).

Smart glasses have a see-through optical display worn on the user’s eye-line, with a built-in processor in the head-mounted device, and a touchless input interface. These devices, such as Microsoft HoloLens, Google Glass, and Vuzix Blade, support multimodal input from the user, such as head movement, voice command, and in-air haptic gesture recognition (Nichols and Jackson 2022). Research on wearable technologies, such as smart glasses, in digital media studies and education is timely and urgent, given the growth of extended reality technologies associated with Industry 4.0 and the future world of work – of which smart glasses are an under-researched subset (Australian Council of Learned Academies [ACOLA] 2020).

Extended reality: mixed, virtual, and augmented reality

Extended reality (XR) technologies include three main types: (i) augmented reality or AR, which overlays virtual imagery on the real world; (ii) mixed reality, or MR, in which virtual and real world are blended while virtual objects respond to the physical actions of the user; and (iii) virtual reality, or VR, offering fully immersive experiences in virtual worlds, with the real world blocked from view. Extended reality also includes related technologies still in development (Mills 2022; Palmas and Klinker 2020).

Educational research that compares VR, MR, and AR in classrooms points to MR applications as having fewer studies to date, given their recency. Additionally, existing MR studies have been critiqued for reporting ad hoc and informal uses, rather than authentic integration with the curriculum for classroom use (Ali, Dafoulas, and Augusto 2019; Munsinger, White, and Quarles 2019). Notably, existing research also currently includes fewer MR applications with children than with adult learners in higher education (Munsinger, White, and Quarles 2019). Extended reality (XR) technology, including MR, is anticipated to peak in development this decade, increasing affordances for collaborative, recreational, and industry use, and improving affordability for educational use (Mills 2022).

Existing research on smart glasses for learning

Most of the research on smart glasses for learning has been in contexts beyond schooling, such as medical and health science in higher education (Birt et al. 2018; Hofmann, Haustein, and Landeweerd 2017). Among the few studies that have begun to research the use of smart glasses in schooling, the focus has not been on textual practices, but on the basic functionality of the devices with children, such as differences between voice, gesture, and clicker-based interaction (Munsinger, White, and Quarles 2019).

An early study of smart glasses for multimodal composition (that is, composition using multiple modes such as words, images, and audio) examined the use of Google Glass to provide video feedback by peers on written compositions among adult learners (Tham 2017). While such studies with smart glasses are rare, related AR technologies – AR books – have been shown to support textual practices by connecting words, sounds, and 3D virtual objects with locations in the real world (Bursali and Yilmaz 2019). The benefits of AR technology for early literacy learning have been shown through its advantage for situated learning, leading to better retention of new vocabulary than conventional didactic methods, while increasing young learners’ attention and satisfaction (Santos et al. 2016). Research on other AR content-area applications has shown improved academic outcomes by stimulating the students’ attention (Tosto et al. 2021). In sum, the few studies of extended reality technologies point to new pedagogical possibilities for textual practices, of which smart glass use is currently an under-researched subset, particularly in K-12 schooling.

Extending social semiotics to using smart glasses for multimodal narratives

This research applies and extends multimodal social semiotics – a theory of meaning making that attends to communication modes to represent ideas and shape power relations, seen as social and cultural resources (Jewitt, Bezemer, and O'Halloran 2016). While research on multimodal authoring (that is, textual composition combining multiple modes such as images, words, audio, gestures, and movement) is advancing, researchers have yet to understand the affordances of smart glasses for multimodal textual practices (Mills and Stone 2020).

Research has previously shown that practices of multimodal story reading in digital spaces are markedly different from reading books, involving skills that have been found to be deficient in fourth- and fifth-grade students. This deficiency has been attributed to an absence of scaffolding of digital reading in the school curriculum, even in common online reading situations (Golan, Barzillai, and Katzir 2018). For example, readers of multimodal, digital media texts require new reading behaviours, greater focus, and different reading pathways (Kieffer, Vukovic, and Berry 2013). Reading comprehension in many digital contexts, such as the internet and e-book apps, can be interrupted by distracting hyperlinks as users navigate texts that are connected in multiple directions (Mills, Unsworth, and Scholes 2022b). In contrast, well-designed AR books can support a high level of reading comprehension, aiding longer-term memory of material compared with control groups using conventional or print-based reading (Bursali and Yilmaz 2019).

Smart glasses open an expanded array of new possibilities for mobile digital storytelling and story reading that warrants urgent attention, because little is known about the multimodal compositional and text processing skills required in these hybrid, MR spaces (Schleser and Berry 2018). Smart glasses display virtual content while gathering information about the user’s real world via sensors and input devices, such as eye-tracking, GPS, accelerometers, microphones, and magnetometers. Connecting to Wi-Fi and capable of displaying and recording mixed reality video shared to a computer, smart glasses can record and store video or data while on the move (Hofmann, Haustein, and Landeweerd 2017).

While research on 3D multimodal composition using smart glasses is currently rare, related research from a social semiotic perspective of 3D multimodal composition has examined immersive virtual reality (VR) painting with Google Tilt Brush (e.g., Mills and Brown 2021; Mills, Scholes, and Brown 2022a). Multimodal composition in virtual reality environments involves a range of new haptic and proprioceptive skills (Mills and Brown 2021), technical skills (Mills 2022), spatial orientations, and body movements (Mills, Scholes, and Brown 2022a), while teachers who integrate these technologies encounter new pedagogical and technological challenges that can be guided by classroom research (see Hofmann, Haustein, and Landeweerd 2017). Despite the challenges of technological innovation in schooling, wearable technology should be researched now in writing instruction ‘while it is still in its infancy’ (Tham 2017, 23).

Materials and methods

This section presents the methods of the qualitative, classroom-based application of multimodal composing with smart glasses, including the research questions, participant description, learning experiences, research ethics approval, data collection methods, and multimodal analysis applied to the digital data sets.

Research questions

Given the need to understand the potentials of smart glasses for supporting upper elementary students’ composition of 3D multimodal narratives, the research reported here addressed two key research questions:

How can smart glasses support students’ multimodal composition in the classroom?

What multimodal resources are available to students wearing smart glasses to compose narratives?

These questions were asked in the context of research with upper elementary students using the Microsoft HoloLens 2 smart glasses and 3D Viewer software. The activity structure involved the students first writing their stories and illustrating them on paper, before narrating their stories to the researchers, teachers, and peers. Stories were narrated verbally and illustrated by interacting haptically with virtual 3D holograms that were superimposed over the real classroom. The aim was to understand the students’ experiences of this novel storytelling process, and its similarities and distinctions with conventional forms of story authoring at school. An additional aim was to understand, from the perspective of social semiotics (e.g., Kress and Van Leeuwen 2021), the kinds of novel 3D modal resources that are readily available to young users for narrative composing using smart glasses.

Site and participant description

The public K-12 school was located in a district in south-east Queensland, Australia. This location has a lower socioeconomic status than the national average, including lower rates of tertiary qualifications and higher unemployment (Australian Bureau of Statistics 2016). The population was also culturally diverse, with 31% born overseas and 69% born in Australia (ABS 2016). The students were recruited from three Year 6 classrooms (n = 27), ages 10–11 years, with a consent rate of 90%.

Learning experiences

The mixed reality research activity targeted the Australian Curriculum in English and Media Arts, where Year 6 students should learn to compare texts across media, and design story settings, characters, events, and ideas using words, images, and sounds (Australian Curriculum, Assessment & Reporting Authority [ACARA] 2017a). The learning experiences in the study also targeted the General Capability goals in the Australian Curriculum, which aim to develop students’ Information and Communication Technology (ICT) capability. This involves utilising ICT to realise creative ideas and intentions and communicating with ICT to share and exchange knowledge (ACARA 2017b).

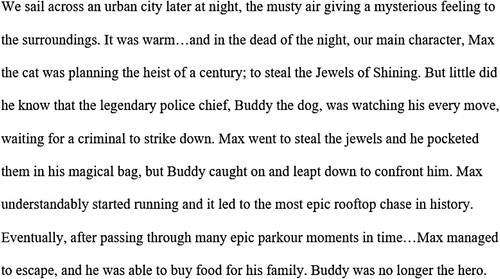

The research team collaborated with the Year 6 teachers of the three classes to facilitate the smart glasses activity, which applied the students’ knowledge of ecosystems and wildlife – communities of organisms that live in different ecological environments. The research activity involved writing, drawing, and then using smart glasses, to compose multimodal stories that centred the narrative in a particular biome. The students completed a template created by the researchers and teachers to plan the components of story writing, including their story setting, main and secondary characters, key events, complications, resolution, and coda (see Figure 1 below). The teachers taught integrated content-area lessons on ecosystems and biomes, narrative genre, grammar, vocabulary, spelling, and story writing.

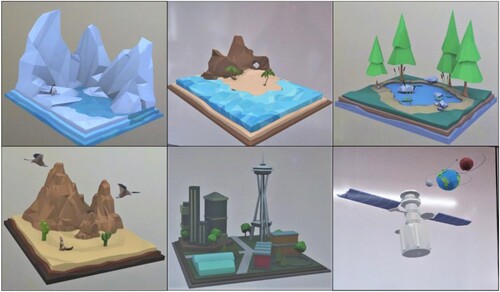

Figure 1. Sample of 3D Viewer catalogue options for the story planning activity.

Students viewed available options in the 3D Viewer application, choosing a story setting or biome – arctic, tropical island, forest, desert, or urban (see Figure 2 below).

Figure 2. Setting/biome options from 3D Viewer application.

Students could select satellite and planet holograms to create a sixth environment. They also chose characters and at least three key imaginative objects to indicate story events (see Figure 3).

Figure 3. 3D Viewer options using HoloLens 2 in classroom.

Because the 3D holograms were selected from a catalogue of existing virtual objects, the students’ ideas for their narratives were shaped in some way by the available options, while the resulting stories were unique. Selections could be made through HoloLens 2 interactive input facilities, including haptics, eye tracking of the user’s gaze, and voice control.

Research ethics

Ethical clearance was granted by the Australian Catholic University Human Research Ethics Committee (ethics approval ACU 2018-97H) following guidelines for research with minors in the National Statement on Ethical Conduct in Human Research (National Health and Medical Research Council 2018). The students and their caregivers provided voluntary, informed, understood, and written consent to participate in the research study, and for students to be interviewed and digitally recorded using video, with student names and images published anonymously using pseudonyms.

Data collection

Over two weeks of data collection, the research team conducted the smart glasses activity using Microsoft HoloLens 2 and collected students’ written stories and drawings. Each visit involved full days of recording and interviewing students as they completed the learning experiences. The students’ view from the smart glasses was livestreamed to a computer using a 4G modem and Mixed Reality Capture, and recorded using Open Broadcaster Software (OBS Studio). Once the students had finished arranging their holograms, they used voice-activated video recording to capture their storytelling, supported by 3D imagery. The following data were collected:

Student drawings, written story, and holographic story. View sample: https://drive.google.com/file/d/1jTq8uxYuhK7tXh-q6OSddTNsMQXCh-D5/view?usp=sharing

Video recordings of students using smart glasses with the corresponding HoloLens 2 storytelling recording. View sample: https://drive.google.com/file/d/1-Vmxv3NBIsDdXJ7ZrQ7UFiYWagfJG7_4/view?usp=sharing

Screen-capture (OBS Studio) of the holographic story-making process. View sample: https://drive.google.com/file/d/14JMq3BT4H5M4caF8JG6NDNWXSagKhA1v/view?usp=sharing

Video recorded think-aloud interviews of the design process. View sample: https://drive.google.com/file/d/1XtZZ4v7T8rlIqi1f_c0viVd6i55MVpoi/view?usp=sharing

A think-aloud interview protocol was used to understand the students’ experiences of the smart glasses, because this method illuminates the users’ thought-processes while engaging with new technology, without having to rely on memory of a past activity (Hevey 2020). The interview schedule addressed the research questions by gaining participant perspectives of the story content, ease or difficulty of the haptic controls, use of storytelling media, and affordances of the smart glasses for storytelling. For example, students were asked: ‘How does it feel to move the virtual holograms around?’ and ‘How is this different to other story making activities that you have done at school?’

Data analysis

This section describes, in two sub-sections, the analysis of the multimodal datasets and the think-aloud interviews.

Analysis of multimodal datasets

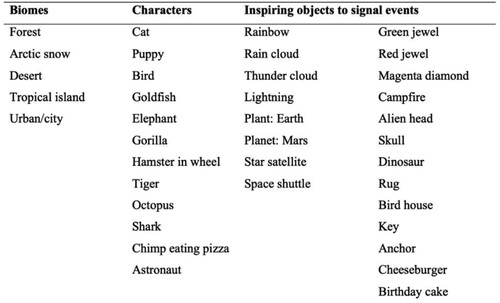

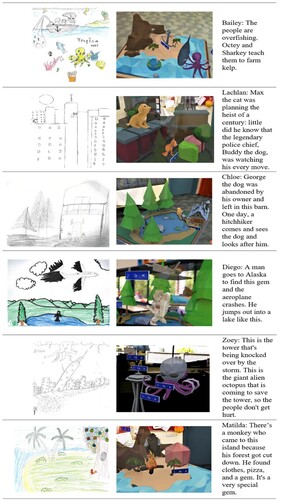

The students’ drawings, written story, and videorecorded/screen-captured holographic stories (imagery and narration) were compiled and analysed to compare the ideational meanings – how the stories make sense through different semiotic resources (Halliday et al. 2014; see Figure 4).

Figure 4. Sample of student drawings, 3D holographic scenes, and written story excerpts.

The initial analysis of the stories across written, hand-drawn, and virtual textual formats identified adaptations of story settings, writing point of view (e.g., first person, third person), character development, and story complexity. The significant visual meaning systems in the stories were then compared, realised through the interpersonal metafunction, including how the representational elements were organised to set up relations between the depicted characters and the viewer (Painter, Martin, and Unsworth 2013). Key aspects of the textual metafunction were also analysed, concerning the coherence and organisation or weighting of elements within the visual layout to manage the viewers’ attention (Painter, Martin, and Unsworth 2013). Visual meaning comparisons included the 3D placement of characters and objects, and the composition of elements working together in the scene. The interactive relationships of signifiers to each other or to the viewer were also analysed, including vectors in transactional images, point of view, and visual representational structures (Kress and Van Leeuwen 2021; Painter, Martin, and Unsworth 2013).

Analysis of student think-aloud interviews and video recording

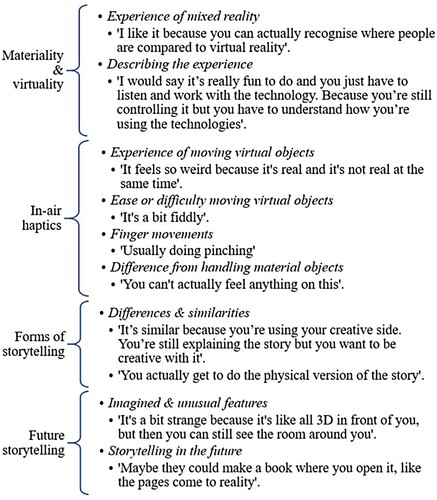

Student think-aloud interviews and video recordings of their multimodal storytelling were transcribed and analysed using NVivo 12 software, moving between deductive coding from the multimodal theory-informed interview framework, and inductive analysis from themes emerging in informants’ discourse (Guest et al. 2017). The resulting themes address the research questions about how smart glasses can support students’ multimodal composition, and the modal resources available to students: blending virtual and material, in-air haptics as modal resource, comparing forms of storytelling, and future storytelling (see ). Haptics are human interactions with the environment using hand and finger movements (Minogue and Gail Jones 2006). Haptics were coded into subthemes: grab (pinch, closed hand, drag, release), and point (touch, scroll). See for coded themes with examples.

Results

The two key research questions will be addressed to understand how mixed reality smart glasses can support students’ multimodal composition in the classroom, and to identify the modal resources available to students for 3D story making. This section describes the students’ experiences with smart glasses for story authoring following the analytic themes from the combined data sets: blending virtual and material, in-air haptics as modal resources, 3D visual modal resources, and comparing story formats, including future storytelling.

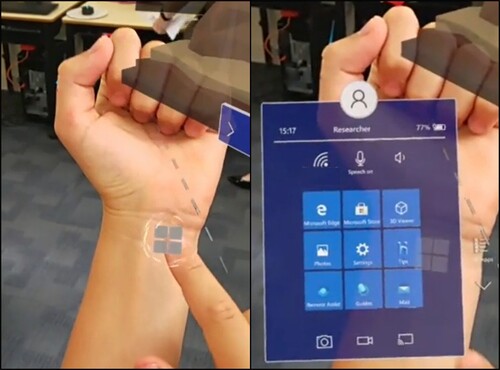

Blending virtual and material with smart glasses

The changing materiality or the physical medium of textual practices, whether of stone, parchment, paper, or screen, is of key interest to researchers of digital composition, as is the more recent immateriality of certain virtual texts that lack tangibility (Mills and Brown 2021). During the smart glasses story composition, the students’ interactions between virtual and material worlds for multimodal authoring were observed. The students noted that the holograms looked and felt real because they responded to the students’ hand movements without mediating tools, such as game controllers (see Figure 6).

Figure 6. HoloLens 2 Start menu viewed over student’s wrist with finger tapping.

Bailey explained: ‘It’s really nice not to have those clanky, big controls … whereas it’s pretty annoying to have the big controllers with my normal gaming gear – it takes away from the immersion. But with this it’s very immersive.’ The HoloLens 2 uses real-time hand and eye-tracking through infrared cameras that capture the user’s manipulation of digital objects (Smole 2022).

Students talked about the novel relationship between the virtual texts and the materiality of their physical interaction, as Lachlan noted: ‘It’s really interesting, because it’s there, but you don’t need game controls – it just senses where your hands are.’ Some were confused about whether the visible hands were their own or a digital projection, since they blended interactively with the virtual animations: ‘Oh, so I have the hands’ and ‘They actually look animated … or are they real?’ (see Figure 7).

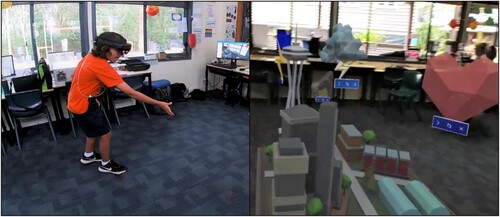

Figure 7. Video of Jayden (left) interacting with virtual text (right).

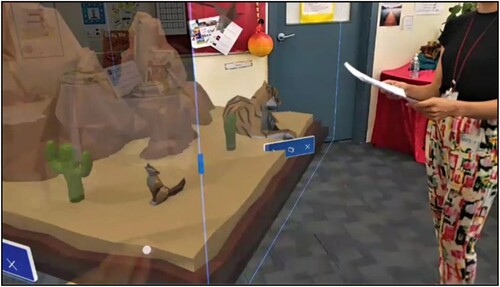

The multimodal textual affordances, including sound effects and images (still and animated), appeared as virtual, dynamically interactive texts suspended within the real classroom and its actors (teachers, researchers, students). These gravity-defying virtual models were responsively controlled by students’ hand movements, gaze, and voices (see Figure 8). Students took up the layered multimodal affordances (Blevins 2018), adapting their knowledge of narratives to the new storytelling context.

Figure 8. Student makes a holographic scene while the teacher observes.

The blending of the virtual and real was a distinctive feature of the textual practice, as Georgia described: ‘It's kind of weird when you first start, because everything looks very different and you can see the world around you, but once you get used to it, it’s actually really cool, because you can move things around relatively easily.’ Some of the students described the novelty of holographic texts: ‘It’s really artistic and futuristic’ and ‘It feels so real, like a story.’ Blevins (2018) theorises the confluence of the virtual and material in augmented and mixed reality texts as compositional layers that add meaning to each other, and which authors need to consider in terms of the text’s location and relationship with the surrounding space. For example, some students enlarged the 3D models to striking proportions, others positioned medium-sized models at waist height for ease of manipulation, placing key characters and objects where they and others could view them.

The students compared the activity with their previous authoring using immersive virtual reality (VR), where unlike smart glasses the real world is blocked from view (Mills and Brown 2021). Students noted the safety advantage when working with smart glasses compared to VR head-mounted displays, as Jayden described: ‘It’s cool because you can see around instead of walking into stuff [in VR]. It’s really different!’ Emily agreed: ‘In a way this makes you feel a bit safer [than VR].’ Bailey elaborated: ‘Sometimes VR would feel claustrophobic, like, I’m almost trapped in the VR, because it’s a whole virtual world.’

The holographic stories were more connected to the real-world location: ‘It makes it feel a bit more real, because last time we did VR, it felt like you were in a different place. But here you’re in the same place.’ This is an advantage of smart glasses over VR for learning because students don’t need to hold a mental image of their physical environment while interacting with the virtual texts, having immediate access to the virtual and the real (Oranç and Küntay 2019). Researchers here observed that the blending of virtual and real in the storytelling practice supported, rather than interfered with, text making and social interaction.

Additionally, smart glasses support interactions between realities given that the virtual texts responded to the users’ real movement in a real location, with visible and co-present class members. One student explained: ‘I like it because you can actually recognise where people are, compared to virtual reality.’ This is helpful for creating a sense of a learning community (Ali, Dafoulas, and Augusto 2019).

In-air haptics as modal resources

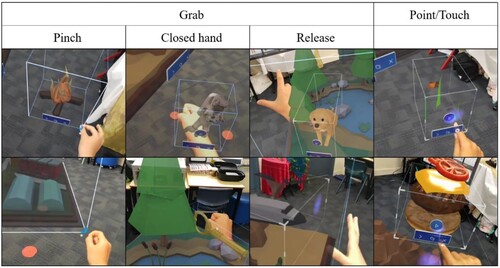

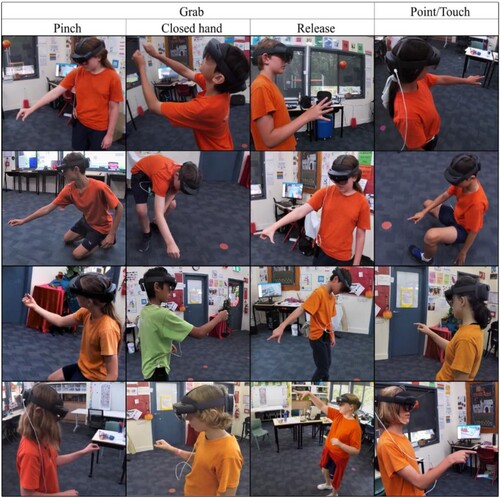

A key feature of smart glasses for enabling students’ multimodal composition in the classroom was in-air haptics to manipulate the 3D virtual models. Haptics refers to human interactions with the environment using hand and finger movements (Mills, Unsworth, and Scholes 2022b; Minogue and Gail Jones 2006). The HoloLens is programmed to follow a haptic gesture recognition or cuing system (Nichols and Jackson 2022). The following haptic gestures were used by the students to manipulate the 3D virtual objects in the story composing: grab (pinch, closed hand, drag, release), and point (touch, scroll) (see Figure 9).

Figure 9. Haptic gestures to manipulate holograms in 3D Viewer with HoloLens 2.

The first set of haptic gestures relates to ‘grab,’ which is used to grip, move, and release objects intuitively through distinct, but related, actions: (i) Pinch: Touch the tips of the index finger and thumb together to select, resize, rotate, or relocate an object, (ii) Closed hand: Sustain the pinch, but use a tightened fist to hold, drag, and relocate an object, and (iii) Release: Separate the thumb and index finger widely to release hold of an object (see Figure 10). This haptic gesture recognition system of HoloLens 2 is supported by voice-enabled commands, head movement (with accelerometer), and sensor eye tracking (Lopez et al. 2021).

Figure 10. Students’ haptic gestures.

The second main category of haptic interaction is the use of an extended index finger or ‘point’ to animate an object, or to scroll the index finger in an upward or downward motion, used by students to select objects from the hologram menu in 3D Viewer (see ). The term ‘point’ distinguishes this haptic category from the broader concept of human touch – the bodily sense to perceive texture, vibration, and temperature (Friend and Mills 2021).

Student descriptions of hand movements included: ‘pinching,’ ‘grabbing,’ ‘ball your hand up into kind of like a fist shape,’ ‘move your hand,’ ‘rotating,’ ‘dropping,’ ‘use your pointy finger’ and ‘tapping.’ These responses were consistent with the video observations and the haptic gesture recognition feature of the touchless input interface, which tracks the users’ mid-air, free-hand movement. Haptic interaction with the visual holograms was supported by sound effects and animations that began when virtual buttons were tapped, blending the physicality of their haptic gestures with corresponding translations of the virtual 3D objects.

In terms of the haptic functionality for supporting students’ multimodal composing, the students mostly found that the haptic gestural interaction was natural and intuitive. The 3D holograms do not mimic the real-world behaviour of physical objects, since the holograms are weightless, textureless, and immaterial. For example, Carter described: ‘It feels really trippy, because it doesn’t feel like you’re actually grabbing it. The graphics are really cool so it works from your pinch, and you can move it.’ While students initially found the sensory illusion somewhat surreal, given the novelty, their haptic gestures quickly became fluid given the frequency of repeated actions. Others, like Savannah, elaborated why the haptic interaction felt unusual or weird: ‘It feels weird because you’re not actually feeling it. Still – you’re making it move.’ Amelia’s perception was similar: ‘It feels kind of weird, because you can just put your hand through the hologram, but you can then grab it.’

Using smart glasses, the user sees the virtual overlay, but they can also physically and visually interact with and modify the virtual objects, as aptly expressed by Lachlan: ‘You’re moving something, but not feeling it. It’s kind of like telekinetically moving things around.’ The ability to interact with superimposed information on the real world is what differentiates MR from AR. However, while the virtual objects move, resize, rotate, and so on in response to user haptics, there were no cutaneous or tactile stimuli, pressure, or vibrations – unlike haptic feedback from devices worn against the skin.

When asked about the ease or difficulty of manipulating the virtual texts for multimodal composing, some students found the touchless input interface of the smart glasses effortless compared to game controllers in other digital applications. Carter suggested: ‘It's pretty easy, because you won't find a lot of video games, or any type of VR, that would be this easy to move stuff around.’ An advantage of the weightlessness of moving large virtual objects around the classroom was expressed by Liam: ‘It's a lot easier to move than what it would be in real life.’ Highlighted in these experiences were the unusual sensory affordances of virtual texts that were responsive to the students’ physical movements.

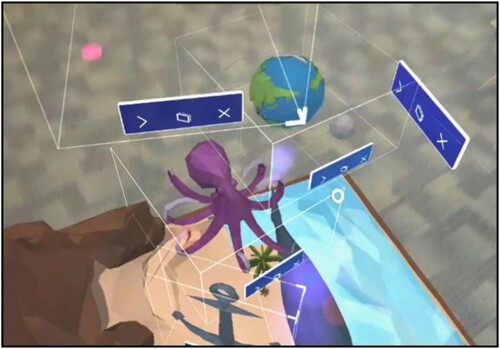

However, some students, such as Logan, experienced difficulties releasing the holograms: ‘Sometimes it's easy, sometimes it's difficult, because the holograms get stuck and follow you.’ This occurred when students did not open their thumb and index finger clearly enough to signal the release of virtual objects. Others, such as Ella, found resizing (reducing) the 3D models tricky, which was signalled by pinching the vertices of the 3D shapes: ‘It’s all sort of easy. But sometimes shrinking it down is a little bit harder.’ Students were often observed to experience difficulties when manipulating virtual objects that were intersecting or near each other, which the students were informed would make movement harder. Figure 11 below illustrates overlapping objects.

Figure 11. Student view of 3D holographic objects near each other.

Olivia described her haptic interaction with intersecting virtual models: ‘It’s tricky just trying to pinch it, because usually you have one object to pinch, but this corner is kind of invisible.’ In sum, observations and dialogue with the students suggested that the touchless interface required initial instruction and practice for precision and efficiency, while still presenting only moderate frustration for users in the first few minutes, or when performing a new function (e.g., scrolling).

3D Visual modal resources

The visual mode was critical for communicating meaning with smart glasses when narrating holographic stories, as the task required overlaying different static and animated 3D models to create settings, characters, and to signal events with key objects, accompanied by their audio-recorded (verbal) narration. The 3D holographic models were used by the students to focus the audiences’ visual attention on the location of the action, along with key actors and events in the narrative, with particular animated holograms also serving to bring the stories to life.

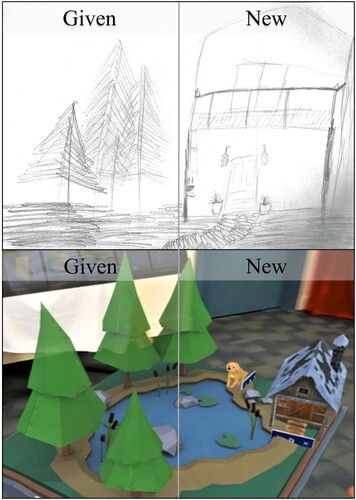

While there are multiple points of difference between 2D drawings and 3D holographic imagery, the analysis which follows highlights visual representational structures of the holograms that were not present in two dimensions, considering each in turn: given-new, ideal-real, triptych, and relations between compositional elements.

Given-new in 2D drawings and 3D holograms

Drawings and 3D holographic story scenes could both be read, like most images in western cultures, as a left-to-right or ‘given-new’ visual structure, also known as ‘past-present’ (see Figure 12). This is the horizontal juxtaposition of two contrasting elements: on the left, the given or agreed upon departure for the message, and on the right, the new or focal information (Kress and Van Leeuwen 2021).

Figure 12. Chloe’s 2D drawing and 3D holographic scene.

In both the drawing and the 3D holographic scene in Figure 12 above, the pine trees depicted on the left constitute the ‘given,’ while the ‘new’ information – where the viewer should focus their attention – is the house on the right-hand side of the images. In both 2D and 3D story scenes, the entrance to the house is facing towards the intended viewer. In the 3D holographic scene, the main character – the puppy – is positioned centrally between the ‘given’ and the ‘new,’ facing the viewer, since the puppy is crucial to the narrative – the lost puppy in the forest discovers an old house.

A key difference between the 2D drawing and the 3D holographic scene, however, is that the viewer positioning is fixed in the drawing – the author has decided to show the scene from a mid-level frontal view. In contrast, viewers of the original 3D holographic scene could view the representation from multiple angles on the horizontal plane – provided that the placement of the 3D objects in the physical context allowed the viewer to move around it, for example, viewing the puppy and the house from behind (on 3D sculpture, see Kress and Van Leeuwen 2021). In this study, the scenes were viewed in a large empty classroom, which provided multiple viewing options on the horizontal plane, with only some limits due to the walls and others in the room.

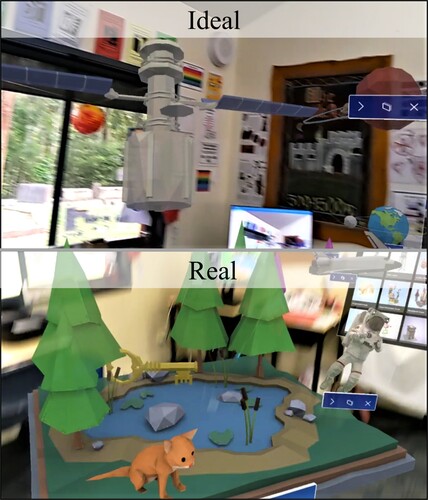

Ideal-real in 3D holograms

The vertical dimension of students’ 3D holographic story scenes, called the ‘ideal-real’ and also present in 2D visual imagery, was analysed. Here the bottom or lower element – the real – grounds the image with the factual reality, while the upper half of the visual structure contrasts the idealised future or promised essence (Kress and Van Leeuwen 2021). This vertical conjunction can be discerned in Liam’s holographic representation of an astronaut whose spacecraft crashes on the ‘real’ earth. The astronaut’s hardship is resolved when he transcends into his home in outer space – the ‘ideal’ environment (Figure 13).

Figure 13. Liam’s 3D holographic scene: ideal and real.

Unlike the relations between the given and the new along the horizontal plane, which can be altered significantly by the viewer moving interactively around the 3D holograms, the relations between ideal and real, and between centre and margins, cannot be as easily inverted, which holds true for other three-dimensional compositions (see Kress and Van Leeuwen 2021). For example, to enable viewers to see holograms easily from underneath, students would need to climb a ladder to elevate the holograms. Unlike the horizontal dimension, which maximises multiple positioning and viewer interactivity, the vertical dimension and centrality are less easily altered by the viewer positioning. Viewing key story illustrations three-dimensionally allowed students to walk around the story scene and view the story from a 360-degree perspective, which is distinct from two-dimensional story illustrations, as Emily appreciated: ‘Well, it allows you to see things and look at it from different angles.’

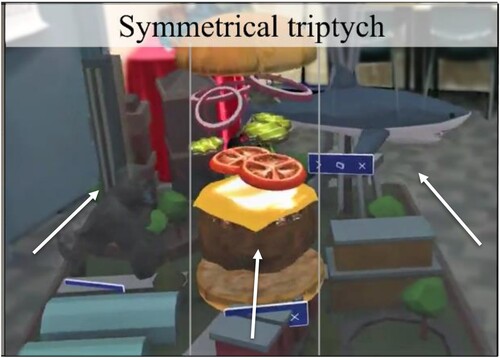

Triptych

Researchers observed the presence of the triptych in the visual representational structure of some 3D holographic scenes. A triptych is composed of a central element, called the mediator, flanked on either side by two symmetrical elements, which are not conceptually opposing (Kress and Van Leeuwen 2021). For example, Figure 14 is a holographic scene of an animated burger that can be read as a symmetrical triptych.

Figure 14. Joseph’s 3D holographic scene read as symmetrical triptych.

The taller buildings form a backdrop which positions the viewer on the ‘front’ or more open side of the urban biome. In the centre is the mediator, which the student named the ‘Sacred Cheeseburger.’ The symmetrical triptych shows two flanking elements, the gorilla on the left and the flying shark on the right, who are together trying to obtain the cheeseburger – which is the mediator between the ‘given’ on the left and the ‘new’ on the right.

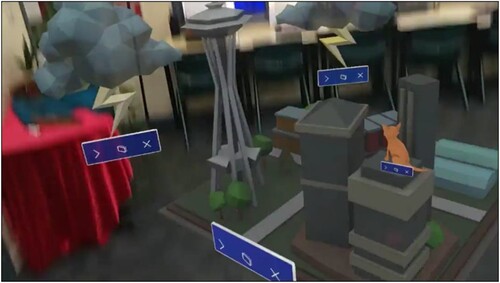

Relations between visual compositional elements

Many of the holographic scenes constructed meaningful relations between the visual compositional elements, such as between the characters, and between the characters and the viewers. For example, Lachlan composed the following story about Buddy the Police Dog set in the city (Figure 15).

Figure 15. Lachlan’s story: relations between character, objects, and viewer.

In Figure 15, Lachlan has positioned Buddy the Dog facing Max the Cat – the character of interest in the dog’s pursuit. The positioning of the cat forms a vector that points toward the giant shining jewels (green and red), with the dog behind – the cat seemingly unaware of the dog. Both characters are positioned from a side angle to the viewer, making them easy to observe, but creating the sense that they can’t observe the viewer. Lachlan narrated his holographic story to the interviewer (see ).

The position of Buddy the Dog is significant, both on the vertical and horizontal plane, since horizontally, the cat is in the dog’s view, and the gems are similarly in the dog’s view beyond the cat. Along the vertical plane, Buddy is positioned on a building at mid-level height to support his surveillance of, and dominance over, the cat, who is positioned on a lower vertical plane.

Finally, researchers observed the opportunity for 3D holograms to present multiple viewer perspectives, such as a frontal angle, oblique, back view, or over-the-shoulder, as demonstrated in Georgia’s composition below (see Figure 17). The student here views the central character – the cat – from back view, so that the viewer’s perspective is aligned with the cat, who is puzzled by the lightning bolts that are mysteriously emitted from the building. The lightning is given visual salience by elevated placement on the vertical plane. As the story unfolds, the cat becomes the hero, uncovering the evil plans of the antagonist.

Figure 17. Viewing from behind the character.

In sum, the smart glasses activity provided new affordances to construct different visual representational structures, placement of characters, and viewer positioning for 3D modelling. The 3D virtual holograms, like other 3D visual displays, may not always contrast the relations between left-right, centre-margins, or top–bottom. However, when they are used, 3D holograms can communicate meaning in a similar way to 2D images, but with some additional affordances for viewer positioning, particularly on the highly interactive horizontal plane, where the point-of-view can be easily altered.

Comparing and contrasting storytelling media

In understanding the affordances of multimodal composition with smart glasses, one aim was to compare the students’ previous school-based experiences with narrative composing. For example, in addition to analysing their transmedia stories – drawing, writing, and storytelling with smart glasses – researchers asked the students to make comparisons with other school-based story making activities.

In terms of similarities, the students noted that irrespective of the new media, at the heart of the activity is the fundamental art of storytelling. Lachlan considered: ‘I guess it’s still about storytelling – tell a story. You need to at least have a clear definition of story in some way.’ However, he further noted: ‘Because this is visual, it can help you imagine how it’s looking.’ Unlike story writing with pen or pencils on paper, the virtual holograms superimposed on the real world supported story imagining, providing visual anchors which drive the narrative forward. The students also recognised that creativity and the quality of the ideas are important in all forms of storytelling. Layla observed: ‘It’s really similar in some ways because you still need creativity, and you still have to work out what you want to do, and how you want to tell it.’ This was confirmed by the analysis of the drawings, written stories, and holographic stories (imagery and verbal), which contained the same story essence, but the virtual stories provided greater visual detail, supported by verbal description of the action as adapted versions of the written stories.

When asked about the differences between authoring stories across media, students drew attention to the heightened interactivity offered in the XR activity, as Layla observed: ‘In other activities, it’s more – you write and then read it – and draw it or paint it. But this one’s really cool, because it’s interactive.’ Similarly, Riley concurred: ‘I think it’s different because it’s hands-on. Compared to just writing stories, you get to see it come to life!’ The immersive interactivity brought their stories to life virtually, using giant manipulable holograms situated in the classroom.

Importantly, the students drew attention to the three-dimensionality and quality of these visuals as a key point of difference. Matilda compared:

It makes it like more 3D, and it makes it feel so real, whereas, if you tried to draw something like this it would take, like, hours of practice and work … I could never draw this even if I tried!

Students saw the XR activity as providing greater scaffolding than composing with a blank piece of paper. Carter explained: ‘I liked actually making it real instead of just having it on a piece of paper – where you don’t have as much control.’ Some noted greater options in terms of the scale of the illustrations compared to drawing on paper, as Savannah noted: ‘It allows you to make it different sizes. It gives you more options.’ Likewise, students appreciated that the holographic text was essentially viewed privately during construction, while those walking past the classroom and away from the computer screencast were not privy to what the students were making with the haptic gestures: ‘I liked how I could see through it, through the glasses, but other people can’t see it.’

Significantly, multimodal storytelling with smart glasses drew on different skills and knowledge than drawing and writing on paper. The additional technical knowledge was identified by the students as central. For example, Bailey noted: ‘I think you need both technology skills and knowledge of stories, because you really have to focus on the technology part of it, but also your story throughout – what you’re actually telling.’ Similarly, Savannah noted: ‘I would say it’s really fun to do, but you just have to listen and work with the technology. Because you’re still controlling it, you have to understand how you’re using the technologies.’

Others, such as Layla, saw technology as important, but also identified creativity as central: ‘I think definitely technology is important, but also creativity as well … to be able to work out what you want to do with different choices.’ Creativity was a repeated theme in their responses, as Jayden said: ‘You have to be more creative with what you’re doing.’ Likewise, others referenced creative judgement: ‘It involves creating things and thinking about where they really look good.’

The students considered a range of potential uses of smart glasses for future forms of digital storytelling. One student, Riley, noted the benefits for focusing young learners on stories through animations, which have movement: ‘People are intrigued by the story, and the animation can keep their focus – kids even. For example, this fish is moving.’ Similarly, Savannah saw the potentials of the visual mode for non-readers or for those beginning to read: ‘It’s more visual. If someone can’t read, then this would be a good idea to use.’ The potential uses of reading with smart glasses were raised by students, as Archie suggested: ‘Maybe they could make a book where you open it, and the pages come to reality.’

Others noted the affordances for multiple points of view. For example, Henry explained, ‘You could have all the writing, and then you could have your smart glasses to help people once they've read the story to be able to see it from someone else's perspective or see the writer's point of view.’ Jayden suggested translating virtual models to material ones: ‘They could maybe make 3D models like this, but then they can make it handmade.’

When asked if they had ever imagined storytelling in this way, most students had not, as Zoey said: ‘No, honestly. I don't think I ever have!’ The students were not only engaged, but they were mesmerised, as Jayden described: ‘It feels really amazing – it’s like nothing you’ve ever really seen before.’ Others described their encounter with 3D holograms as something inconceivable: ‘I wouldn’t have thought this would even exist. I wouldn’t have thought this was possible.’ Another commented: ‘Amazing, because it's something that you'd see in a movie – just pops up!’ The teachers in this study reported that these learning experiences enabled ‘students to individually bring to life their stories by making them in 3D.’ They emphasised that the authentic connection to the curriculum ‘enhanced their related personal learning projects and ideas.’

Discussion

The findings support the value of using mixed reality to enhance print-based reading and writing, extending the notion of transmedia narratives – that is, literary narratives that exist as both printable hardcopy and digital multimedia versions, each with different modal affordances (Unsworth 2013). Compared to written stories, the holographic storytelling enabled students to make a stronger visual commitment to the fictional worlds and story settings, such as the 360-degree, visual details of the physical landscape that served as constitutive for the action. Unlike 2D drawings, the 3D virtual stories offered multiple, interactive points of view.

This research extends beyond previous studies of augmented reality storytelling using widely accessible mobile phones, where AR experiences typically require users to synthesise story pieces distributed in the environment – videos, interviews, photographs, and news stories (Dunleavy and Dede 2014). In those narrative creation formats, a disconnect occurred between highly fictionalised virtual landscapes and the real environment (Dunleavy and Dede 2014). In the present study, storytelling with smart glasses supported story creation that blended the virtual and the material in meaningful ways. Students drew on their knowledge of narrative genre and content to create virtual illustrations that interacted with the corporeality of the participants’ haptic gestures and were located in the physical classroom environment.

In terms of the haptic affordances, while the haptic gesture recognition system of the touchless interface was initially tricky for some students and specific functions (e.g., scrolling), the haptic manipulation of virtual 3D models in the storytelling experience focused the students’ attention on 3D compositional elements that were absent in the drawing and writing activities. AR has advantages for conceptualising, creating, and manipulating 3D models and objects, and for improving students’ spatial abilities (Diegmann et al. 2015). Likewise, AR applications that combine different modes, including animated images, background music, and sound effects, can enhance learning (Yeh and Tseng 2020). Alternative technologies, called haptic technologies, may in the future provide users with additional tactile feedback to simulate weight and gravitational pull – found useful for teaching abstract physics concepts (Civelek et al. 2014).

Conclusion

The majority of research on AR, VR, and MR in education has focused on students’ consumption of learning material (Maas and Hughes 2020); however, this research was driven by a recognition that students need to create narratives, rather than simply consume them, working with transmedia narratives across modes. Future research is needed to investigate the growing possibilities for multimodal narratives in XR environments, including newer and less-researched technologies, such as smart glasses and other wearables. While extended reality technologies, such as MR, AR, and VR, are predicted to have a significant impact on education, research on advanced XR devices that respond to the users’ interactions and the environment is still emergent, due to the early high costs and the scarcity of suitable content developed for schools (Maas and Hughes 2020). Emerging research with primary and secondary teachers has found that the majority would support the use of smart glasses in their classrooms if given the opportunity, while technical and pedagogical training and support from the school leadership are noted as central (Kazakou and Koutromanos 2022).

This high-growth technology market requires educational research to guide technology development and curriculum applications across disciplines and levels of schooling from K-12 (Lopez et al. 2021; Pellas, Kazanidis, and Palaigeorgiou 2020). Importantly, we need to understand extended reality environments as new media for textual practices. For example, how do different kinds of concepts, information, visualisations, abstractions, and multimodal textual and image formats (e.g., 2D, 3D), support students’ reading comprehension with mixed reality? Similarly, how do children understand the virtual and the real in MR textual practices at different stages of development, such as in early childhood (Oranç and Küntay 2019)?

The next generation of wearable technology for media production has arrived, with an urgent need to understand its pedagogical uses in classrooms at all education levels. Wearable technology is not a passing fad but is recognised as a key advancement in communication media – the cusp of a key technological advancement for digital media practices since the advent of computers and smartphones (Tham 2017). Given the importance of remote learning options – as highlighted by the pandemic – and the emerging opportunities to use smart glasses to connect and interact anywhere in the world, smart glasses are set to intensify multimodal information, immersion, and social interaction (Pellas, Kazanidis, and Palaigeorgiou 2020; Stott 2020).

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- ACOLA. 2020. “Media Release Nov 2020: A Year of Rapid Digital Change.” ACOLA, November 4. https://acola.org/media-release-nov-2020-iot-rapid-digital-change/.

- Ali, Almaas A., Georgios A. Dafoulas, and Juan Carlos Augusto. 2019. “Collaborative Educational Environments Incorporating Mixed Reality Technologies: A Systematic Mapping Study.” IEEE Transactions on Learning Technologies 12 (3): 321–332. doi:10.1109/tlt.2019.2926727.

- Australian Bureau of Statistics (ABS). 2016. “Census QuickStats Search”. ABS. Accessed August 4, 2022. https://www.abs.gov.au/census/find-census-data/quickstats/2016/0.

- Australian Curriculum, Assessment and Reporting Authority. 2017b. “Information and Communication Technology (ICT) Capability (Version 8.4).” Australian Curriculum. Accessed August 4, 2022. https://www.australiancurriculum.edu.au/f-10-curriculum/general-capabilities/information-and-communication-technology-ict-capability/.

- Australian Curriculum, Assessment and Reporting Authority (ACARA). 2017a. “F-10 Curriculum (Version 8.4).” Australian Curriculum. Accessed August 4, 2022. https://www.australiancurriculum.edu.au/f-10-curriculum/.

- Birt, James, Zane Stromberga, Michael Cowling, and Christian Moro. 2018. “Mobile Mixed Reality for Experiential Learning and Simulation in Medical and Health Sciences Education.” Information 9 (2): 31. doi:10.3390/info9020031.

- Blevins, Brenta. 2018. “Teaching Digital Literacy Composing Concepts: Focusing on the Layers of Augmented Reality in an Era of Changing Technology.” Computers and Composition 50 (December): 21–38. doi:10.1016/j.compcom.2018.07.003.

- Bursali, Hamiyet, and Rabia Meryem Yilmaz. 2019. “Effect of Augmented Reality Applications on Secondary School Students’ Reading Comprehension and Learning Permanency.” Computers in Human Behavior 95 (June): 126–135. doi:10.1016/j.chb.2019.01.035.

- Civelek, Turhan, Erdem Ucar, Hakan Ustunel, and Mehmet Kemal Aydin. 2014. “Effects of a Haptic Augmented Simulation on K–12 Students’ Achievement and Their Attitudes Towards Physics.” EURASIA Journal of Mathematics, Science & Technology Education 10 (6): 565–574. doi:10.12973/eurasia.2014.1122a.

- Diegmann, Phil, Manuel Schmidt-Kraepelin, Sven Eynden, and Dirk Basten. 2015. “Benefits of Augmented Reality in Educational Environments - a Systematic Literature Review.” Wirtschaftsinformatik Proceedings 2015 103. https://aisel.aisnet.org/wi2015/103.

- Dunleavy, Matt, and Chris Dede. 2014. “Augmented Reality Teaching and Learning.” In Handbook of Research on Educational Communications and Technology, edited by J. Michael Spector, M. David Merrill, Jan Elen, and M. J. Bishop, 735–745. New York: Springer.

- Friend, Lesley, and Kathy A. Mills. 2021. “Towards a Typology of Touch in Multisensory Makerspaces.” Learning, Media and Technology 46 (4): 465–482. doi:10.1080/17439884.2021.1928695.

- Golan, Danielle Dahan, Mirit Barzillai, and Tami Katzir. 2018. “The Effect of Presentation Mode on Children's Reading Preferences, Performance, and Self-Evaluations.” Computers & Education 126: 346–358. doi:10.1016/j.compedu.2018.08.001.

- Guest, Greg, Emily Namey, Jamilah Taylor, Natalie Eley, and Kevin McKenna. 2017. “Comparing Focus Groups and Individual Interviews: Findings from a Randomized Study.” International Journal of Social Research Methodology 20 (6): 693–708. doi:10.1080/13645579.2017.1281601.

- Halliday, Michael Alexander Kirkwood, and Christian M. I. M. Matthiessen. 2014. An Introduction to Functional Grammar. London: Routledge.

- Hevey, David. 2020. “Think-Aloud Methods.” In Encyclopedia of Research Design, edited by Neil J. Salkind, 1505–1506. New York: SAGE.

- Hofmann, Bjørn, Dušan Haustein, and Laurens Landeweerd. 2017. “Smart-glasses: Exposing and Elucidating the Ethical Issues.” Science & Engineering Ethics 23 (3): 701–721. doi:10.1007/s11948-016-9792-z.

- Jewitt, Carey, Jeff Bezemer, and Kay O'Halloran. 2016. Introducing Multimodality. London: Routledge. doi:10.4324/9781315638027

- Kazakou, Georgia, and George Koutromanos. 2022. “Augmented Reality Smart Glasses in Education: Teachers’ Perceptions Regarding the Factors That Influence Their Use in the Classroom.” In New Realities, Mobile Systems and Applications, edited by M. E. Auer and T. Tsiatsos, 145–155. Cham: Springer.

- Kieffer, Michael J., Rose K. Vukovic, and Daniel Berry. 2013. “Roles of Attention Shifting and Inhibitory Control in Fourth-Grade Reading Comprehension.” Reading Research Quarterly 48 (4): 333–348. doi:10.1002/rrq.54.

- Kress, Gunther, and Theo Van Leeuwen. 2021. Reading Images: The Grammar of Visual Design. 3rd ed. London: Routledge.

- Lee, Lik-Hang, and Pan Hui. 2018. “Interaction Methods for Smart Glasses: A Survey.” IEEE Access 6: 28712–28732. doi:10.1109/ACCESS.2018.2831081.

- Lopez, Miguel Angel, Sara Terron, Juan Manuel Lombardo, and Rubén Gonzalez-Crespo. 2021. “Towards a Solution to Create, Test and Publish Mixed Reality Experiences for Occupational Safety and Health Learning: Training-MR.” International Journal of Interactive Multimedia and Artificial Intelligence 7 (2): 212–223. doi:10.9781/ijimai.2021.07.003.

- Maas, Melanie J., and Janette M. Hughes. 2020. “Virtual, Augmented and Mixed Reality in K–12 Education: A Review of the Literature.” Technology, Pedagogy, and Education 29 (2): 231–249. doi:10.1080/1475939X.2020.1737210.

- Mills, Kathy A. 2022. “Potentials and Challenges of Extended Reality Technologies for Language Learning.” Anglistik 33 (1): 147–163. doi:10.33675/ANGL/2022/1/13.

- Mills, Kathy A., and Alinta Brown. 2021. “Immersive Virtual Reality for Digital Media Production: Transmediation is Key.” Learning, Media and Technology 47 (2): 1–22. doi:10.1080/17439884.2021.1952428.

- Mills, Kathy A., Laura Scholes, and Alinta Brown. 2022a. “Virtual Reality and Embodiment in Multimodal Meaning Making.” Written Communication 39 (3): 335–369. doi:10.1177/07410883221083517.

- Mills, Kathy A., and Bessie Stone. 2020. “Multimodal Attitude in Digital Composition: Appraisal in Elementary English.” Research in the Teaching of English 55 (2): 160–186.

- Mills, Kathy A., Len Unsworth, and Laura Scholes. 2022b. Literacy for Digital Futures: Mind, Body, Text. New York: Routledge.

- Minogue, James, and M. Gail Jones. 2006. “Haptics in Education: Exploring an Untapped Sensory Modality.” Review of Educational Research 76 (3): 317–348. doi:10.3102/00346543076003317.

- Munsinger, Brita, Greg White, and John Quarles. 2019. “The Usability of the Microsoft Hololens for an Augmented Reality Game to Teach Elementary School Children.” In 2019 11th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games): 1–4. doi:10.1109/VS-Games.2019.8864548

- National Health and Medical Research Council. 2018. “National Statement on Ethical Conduct in Human Research (2007) - Updated 2018”. NHMRC. July 18. https://www.nhmrc.gov.au/about-us/publications/national-statement-ethical-conduct-human-research-2007-updated-2018.

- Nichols, Greg, and Sean Jackson. 2022. “The 4 Best AR Glasses: Pro-level AR and XR Headsets.” ZDNet. July 29. https://www.zdnet.com/article/best-ar-glasses/.

- Oranç, Cansu, and Aylin C. Küntay. 2019. “Learning from the Real and the Virtual Worlds: Educational Use of Augmented Reality in Early Childhood.” International Journal of Child-Computer Interaction 21: 104–111. doi:10.1016/j.ijcci.2019.06.002.

- Painter, Claire, Jim Martin, and Len Unsworth. 2013. Reading Visual Narratives: Image Analysis of Children’s Picture Books. Sheffield: Equinox.

- Palmas, Fabrizio, and Gudrun Klinker. 2020. “Defining Extended Reality Training: A Long-term Definition for all Industries.” In 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), 6-9 July: 322–344. doi:10.1109/ICALT49669.2020.00103

- Pellas, Nikolaos, Ioannis Kazanidis, and George Palaigeorgiou. 2020. “A Systematic Literature Review of Mixed Reality Environments in K-12 Education.” Education and Information Technologies 25 (4): 2481–2520. doi:10.1007/s10639-019-10076-4.

- Ro, Young K., Alexander Brem, and Philipp A. Rauschnabel. 2018. “Augmented Reality Smart Glasses: Definition, Concepts and Impact on Firm Value Creation.” In Augmented Reality and Virtual Reality, edited by Timothy Hyungsoo Jung and M. Claudia tom Dieck, 169–181. Cham: Springer. doi:10.1007/978-3-319-64027-3_12

- Santos, Marc Ericson C., Takafumi Taketomi, Goshiro Yamamoto, T. Mercedes Ma Rodrigo, Christian Sandor, and Hirokazu Kato. 2016. “Augmented Reality as Multimedia: The Case for Situated Vocabulary Learning.” Research and Practice in Technology Enhanced Learning 11 (1): 1–23. doi:10.1186/s41039-016-0028-2.

- Schleser, Max, and Marsha Berry. 2018. Mobile Story Making in an Age of Smartphones. Cham: Springer International Publishing. doi:10.1007/978-3-319-76795-6

- Smole, S. 2022. “Use Microsoft Hololens 2 To Transform Training with Wearable Mixed Reality”. Roundtable Learning. Accessed 01 August 2022. https://roundtablelearning.com/microsoft-hololens-2-to-train-with-wearable-mixed-reality/.

- Southgate, Erica, Shamus P. Smith, and Hayden Cheers. 2016. Immersed in the Future: A Roadmap of Existing and Emerging Technology for Career Exploration. Report Series Number 3. Newcastle: DICE Research. http://dice.newcastle.edu.au/DRS_3_2016.pdf.

- Stott, Lee. 2020. “Augmented Reality Remote Learning with Hololens 2”. Microsoft. August 24. https://techcommunity.microsoft.com/t5/educator-developer-blog/augmented-reality-remote-learning-with-hololens2/ba-p/1608687.

- Tham, Jason Chew Kit. 2017. “Wearable Writing: Enriching Student Peer Review with Point-of-View Video Feedback Using Google Glass.” Journal of Technical Writing and Communication 47 (1): 22–55. doi:10.1177/0047281616641923.

- Tosto, Crispino, Tomonori Hasegawa, Eleni Mangina, Antonella Chifari, Rita Treacy, Gianluca Merlo, and Giuseppe Chiazzese. 2021. “Exploring the Effect of an Augmented Reality Literacy Programme for Reading and Spelling Difficulties for Children Diagnosed with ADHD.” Virtual Reality 25 (3): 879–894. doi:10.1007/s10055-020-00485-z.

- Unsworth, Len. 2013. “Point of View in Picture Books and Animated Movie Adaptations.” Scan: The Journal for Educators 32 (1): 28–37.

- Yeh, H. C., and Sheng-Shiang Tseng. 2020. “Enhancing Multimodal Literacy Using Augmented Reality.” Language Learning & Technology 24 (1): 27–37. 10125/44706.