ABSTRACT

Innovative methods for remote data collection, developed during the COVID-19 pandemic, also carry value for extending the reach of future research. Using the case study of an evaluation of children’s learning from Sesame Street, this methodological paper discusses challenges of assessing preschool children’s hands-on problem solving remotely via video chat, as well as techniques that were used to overcome these challenges, to yield rich, reliable data. These techniques pertained to every phase of the research: materials and preparatory work prior to data collection, procedural and technical considerations during sessions (including enlisting assistance from the children’s parents), managing children’s behavior, preventing parents from influencing their children’s responses, and considerations during data analysis. Although remote methods should not replace in-person research in all cases, they hold the potential for increasing the geographic and demographic diversity of samples, thus producing more representative and generalizable data.

The COVID-19 pandemic and resulting social isolation posed severe challenges for conducting research with children. In response, researchers were forced to develop innovative methods for remote data collection, and techniques to ensure the validity and reliability of those data. Many of these methodological techniques were not only essential to research conducted during social isolation, but also hold significant value for expanding the scope of future research.

Some traditional research methods are always conducted remotely, or translate easily to remote data collection, such as surveys, diaries, or content analysis (e.g., Hensen et al., 2021; Van Nuil et al., 2023), although they may be more challenging to conduct remotely if the sample consists of young children. Other methods, such as eye-tracking research to measure attentional skills, require greater adaptation if they are to be employed remotely, but can be facilitated by creative uses of advances in technology (e.g., Eschman et al., 2022).

One example of the latter is the assessment of critical thinking skills via task-based interviews. Task-based interviews have a long history in research on problem solving and critical thinking with both adults and children, from classic studies such as those by Jerome Bruner and Jean Piaget (e.g., Bruner et al., 1956; Inhelder & Piaget, 1958) to more contemporary research in areas such as STEM education and (particularly relevant to the papers in this special section) playful learning (e.g., Evans et al., 2021; Goldin, 2000). However, task-based interviews are typically conducted via in-person sessions in which the participant and researcher interact one-on-one in the same room, rather than remotely across a distance of hundreds or even thousands of miles.

This methodological paper considers issues and challenges in the remote collection of observational data with preschoolers, along with practical techniques to overcome these challenges. These issues and techniques are illustrated through a case study of research on Sesame Street’s impact on children’s process skills for critical thinking and STEM-based, hands-on problem solving. (For empirical data regarding impact, see the companion paper in this special section by Fisch, Fletcher, and colleagues.)

At the time data were collected (pilot study conducted in March 2022 and the full study in October and November 2022), home visits for data collection were not possible because of constraints stemming from the COVID-19 pandemic (see Footnote 1). Thus, all interviews and observations of children’s problem solving were conducted online, via Zoom.

We begin by addressing the challenges of attempting to assess process skills for critical thinking among young children, regardless of whether research is conducted in person or remotely. Next, we turn to the additional issues that arise when adapting such measures for remote data collection.

Assessing process skills for critical thinking and problem solving

Process skills for critical thinking and problem solving include the use of strategies and heuristics such as gathering information, testing hypotheses, using or changing materials, employing trial and error, and reapproaching problems if an initial attempt at a solution is unsuccessful. Measuring such skills among preschool children – particularly with sufficient precision to reveal growth between a pretest and posttest – is challenging even when research is conducted in person. On the most basic level, there is the need to create assessment tasks that are pedagogically substantive, provide opportunities for children to engage in rich exploration and experimentation, and allow for more than one possible correct solution (e.g., Goldin, 2000). Second, when evaluating the impact of educational materials, there is the need for alignment between the content of the curriculum that is being evaluated and the assessment (e.g., Martone & Sireci, 2009); after all, if there is a mismatch between what an assessment measures and what the target curriculum is intended to teach, the assessment is unlikely to be valid. Assessments also must be age-appropriate, taking into account the developmental constraints of target-age children’s attention span, hand-eye coordination, limited articulation, and cognitive abilities. Otherwise, children’s thinking or understanding may be masked by the demands of a task that requires (for example) a level of hand-eye coordination that is not reasonable to expect from children of a given age.

Once appropriate hands-on tasks have been devised, it is equally crucial to create detailed coding schemes that align well with the target curriculum. For the present study, we were interested not only in the answers children produced, but also in the processes of thinking, reasoning, and experimentation through which they arrived at their answers. That is, our tasks and coding schemes assessed both children’s process skills and the solutions they produced.

Process skills (which we operationalize as the strategies and heuristics a child employs while working on a task) should be coded objectively, relying more heavily on empirical observation than on researchers’ subjective impressions (e.g., Goldin, 2000). They also must be coded at a fine enough level of detail to yield a rich portrait of the child’s approach, and to reveal any pretest-posttest change. Children’s solutions, too, should be coded objectively, in ways that reflect a range of sophistication, rather than merely assigning answers as “right” or “wrong.” Naturally, coding schemes for both process and solution also must be valid (construct and content validity), and capable of being employed reliably, both across raters (interrater reliability) and from pretest to posttest (test-retest reliability).

The present study

For the present study of children’s learning from Sesame Street’s playful problem solving curriculum, validity was supported by the use of established methodological approaches and close alignment between assessments and the curriculum materials being evaluated. The development of measures was grounded in methodological approaches and coding schemes that had been used successfully in past research on children’s learning from educational television. These previous studies had assessed the impact of educational media on STEM-based process skills and solutions among older, school-age children (e.g., Fisch et al., 2014; Hall et al., 1990), as well as the sophistication of solutions among preschool children (e.g., Grindal et al., 2019). Since the curriculum underlying the Sesame Street episodes in the treatment was grounded in its Active Playful Learning theoretical foundation (Fletcher et al., this issue), it was also important for our approach to be consistent with task-based methods used in past studies of playful learning outside the realm of media (e.g., Evans et al., 2021). However, none of these studies (nor, to our knowledge, any others) had previously measured the impact of media on process skills among preschool children, requiring us to adapt the approach for Sesame Street’s young audience.

To achieve proper alignment with the content of the Sesame Street episodes used in the treatment, we designed three pairs of hands-on tasks that addressed the same engineering concepts as three of the Sesame Street episodes in the 12-episode treatment. However, the hands-on tasks were embedded in new contexts, different from those seen in Sesame Street, so that children’s performance would represent near transfer of learning to novel problems (e.g., Bransford et al., 1999; Fisch et al., 2005), rather than simply direct comprehension of what had been seen. Since the curriculum underlying these episodes was grounded in Active Playful Learning, the tasks were also consistent with the sorts of hands-on tasks used in past research on playful learning (see Fisch et al., this issue).

The three episodes were selected because their content was clear and concrete, lending themselves well to hands-on tasks. Two tasks were designed for each topic, one for the pretest and one for the posttest, with the order of the tasks counterbalanced across children. The tasks were: Slides/Chutes (adjusting the speed of a ramp via slope and friction), Tower/Giraffe (building towers with nonstandard materials), and Roof/Goldfish (properties of materials, to build a roof that is sturdy and waterproof). Detailed descriptions of these tasks can be found in the Appendix to this paper, and further information about the tasks is presented in Fisch et al. (this issue).

Each pair of parallel tasks was essentially isomorphic, but the tasks were embedded in different contexts with goals that were the inverse of each other. For example, the Slides task presented children with two identical toy animals (in different colors) and two identical slides made of blocks and cardboard. Children were asked to change the slides so that one of the animals would always slide more quickly than the other. The Chutes task was the same, but the two animals were balls with animal faces, the cardboard slides were replaced with cardboard chutes, and the goal was to make one animal roll more slowly than the other. Each task could be solved in numerous ways, such as changing the slope of one or both ramps, or placing a textured object (such as fur or a non-skid mat) on a ramp to increase friction. Children were also given a “toolkit” of materials (e.g., markers, a ruler, scissors, sticky notes, cotton balls) that they could use while working on the tasks if they wished, although they were not required to use the additional materials.
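As a rough illustration of the counterbalancing described above, the following Python sketch gives each child one member of every task pair at pretest and the other member at posttest. The alternating assignment rule, the function name `assign_task_order`, and the child IDs are assumptions made for illustration only; the paper does not specify how task order was assigned.

```python
from typing import Dict, List

# Task pairs as named in the paper; each pair targets one engineering topic.
TASK_PAIRS = [("Slides", "Chutes"), ("Tower", "Giraffe"), ("Roof", "Goldfish")]


def assign_task_order(child_ids: List[str]) -> Dict[str, Dict[str, List[str]]]:
    """Alternate which member of each pair is given at pretest (hypothetical rule)."""
    schedule = {}
    for index, child_id in enumerate(child_ids):
        flip = index % 2 == 1  # every second child receives the reversed order
        pretest = [b if flip else a for a, b in TASK_PAIRS]
        posttest = [a if flip else b for a, b in TASK_PAIRS]
        schedule[child_id] = {"pretest": pretest, "posttest": posttest}
    return schedule


schedule = assign_task_order(["child_01", "child_02"])
print(schedule["child_01"]["pretest"])  # ['Slides', 'Tower', 'Roof']
print(schedule["child_02"]["pretest"])  # ['Chutes', 'Giraffe', 'Goldfish']
```

Under this assumed rule, no child sees the same task twice, and each task appears at pretest for about half of the sample.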

The coding scheme for process skills was adapted from Hall et al. (1990) and Fisch et al. (2014). Each of the child’s actions or utterances while working on the task was coded via a list of more than a dozen strategies and heuristics that children might employ (e.g., gathering information, changing materials, testing hypotheses). To adapt the scheme for this study and age group, our initial round of modifications entailed cutting those heuristics that were either inapplicable to the current tasks or not age-appropriate for preschoolers (e.g., making arithmetic computations, considering probability), as well as specifying concrete examples of ways in which each heuristic might appear in the child’s work, to facilitate consistent coding across researchers. To illustrate how the process coding scheme worked, Table 1 presents an excerpt of one five-year-old’s work on the Chutes task, along with the related coding. (For a complete list of the heuristics coded in process scores, see Fisch et al., this issue.)

Table 1. Sample of process coding: Approximately 30-second excerpt of one child’s work on the Chutes task.

Hierarchical coding schemes for solution scores were built upon characteristics of each task, to reflect different levels of sophistication, such as whether the child succeeded in making one ramp faster than the other, and whether the child’s solution involved changing slope and/or friction. Here, too, the scheme included specific examples of potential responses. (See Fisch et al., this issue, for an example of a solution coding scheme for one task.) Refinements to both the process and solution coding schemes were made on the basis of pilot test data, as will be discussed below.

Online observations of hands-on problem solving

Considerations regarding the design of tasks and coding schemes would be as relevant to in-person research as to research conducted remotely. In remote research, however, these considerations are compounded. For the purposes of this discussion, we divide the challenges inherent in remote data collection into three broad categories: materials and preparation prior to data collection, ensuring that children’s behavior will be observable and codable, and managing the testing session.

Materials and preparation

Typically, hands-on materials are an integral part of task-based interviews to explore children’s cognition. Consider, for example, classic cases such as Piaget’s pendulum problem in which children manipulate the weight and length of a pendulum (Inhelder & Piaget, 1958), or more recent investigations in which children use common objects such as pipe cleaners or chopsticks to create tools to accomplish a goal (e.g., Beck et al., 2011; Evans et al., 2021).

This reliance on hands-on materials can pose challenges when research is conducted remotely, rather than in person. On one level, there is simply the practical issue that far more sets of materials are required. In our own in-person research, we usually create one set of materials for each researcher to use, plus several extra sets to replace any materials that children damage in the course of data collection – approximately one dozen sets in all. By contrast, when the same research is conducted remotely, one set of materials must be created and shipped to each participant, rather than to each researcher. Thus, since over 100 children participated in the present study, and each required one set of materials for the pretest and one for the posttest, preparation for the study entailed assembling and shipping more than 200 sets of materials – roughly 20 times the number we would ordinarily create. In planning the study, then, we had to allow for much more staff time and a longer lead time for preparation prior to data collection. A related practical issue is the added cost of purchasing, assembling, and shipping so many sets of materials. In the case of our Sesame Street study, however, we found that the additional material and shipping costs were mostly offset by savings in local travel, since remote data collection meant that researchers did not have to visit participants’ homes.

To ensure that the materials would be available to the children during their data collection sessions, we shipped the materials to their parents for arrival several days before the session. We notified parents when to expect the package, and enclosed a list of all of the materials. Researchers contacted parents prior to the session, and asked them to cross-check the materials they received against the list, to ensure that everything was present. If parents notified us that a package was delayed or lost, or if any materials were missing from a package (all of which occurred on occasion), we could send replacements prior to the session.

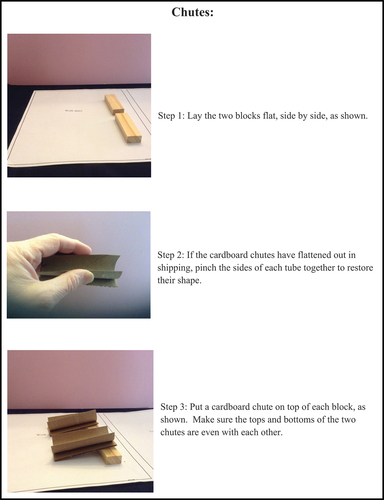

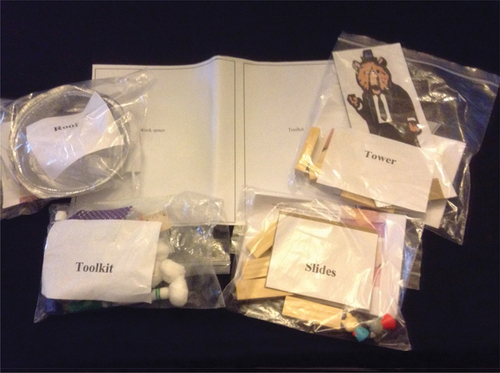

Beyond simply supplying materials, set-up posed additional challenges. During in-person research, the materials for a hands-on task can be set up by a researcher who is highly familiar with both the task and the materials. By contrast, in remote data collection, the researcher is not in the same room as the child’s materials, or perhaps not even in the same geographic region. For this reason (and others, discussed below), we enlisted the children’s parents as assistants who set up materials under our direction. Because the three tasks, plus the toolkit, included dozens of items, we facilitated set-up by dividing the package of materials into separately bagged “kits” for each task (Figure 1). At the beginning of each task, the researcher walked the parent (and sometimes the child) through setting up the relevant materials, step by step, while modeling the set-up procedure with a duplicate set of materials. In addition, each kit included a sheet of printed, illustrated set-up instructions (), in case it was difficult for a parent to see the researcher’s materials on a small screen (e.g., if the parent was using a smartphone). Since the researcher could see the family’s set-up on his or her screen, the researcher could give instructions to correct any errors during set-up, or repeat the demonstration to make it clear.

Figure 1. Sample set of materials sent to participating families. Note separately bagged kits for each task and toolkit, plus work mat.

Through these means, we could be confident that all of the participating children had the necessary materials, and that materials were set up properly and uniformly for each task.

Observable, codable data

Even in person, shyness or limited articulation among young children can pose challenges for the “interview” aspect of task-based interviews, inhibiting children’s ability to explain their reasoning. The challenge increases in remote data collection, when children’s verbal responses are filtered through a microphone and computer speakers. Additionally, since young children may find it difficult to sit still for extended periods of time, some of their work on a task may fall outside camera range. Naturally, children’s behavior can only be observed and coded if it is visible and audible to the researcher.

We took several steps to overcome these challenges in our Sesame Street study. First, as in the set-up of materials, parents again played an important role as assistants. Parents were asked in advance to set up materials on a flat surface (preferably a table) and were assigned the role of “cameraperson” to frame camera angles so that the researcher could see the child’s face and hands, and adjust as needed. Additionally, if a child’s verbal response was inaudible or unclear to the researcher, the researcher could ask the parent to repeat what the child said.

Second, a useful technique to keep the child’s behavior on-camera was to include a “work mat” among the materials that were sent to families (see Figure 1). The work mat was an 11” x 17” sheet of paper marked with two large rectangles; one rectangle outlined a space marked “Work space” (where children worked on the tasks), and the other was marked “Toolkit” (where children kept the toolkit materials so they would be immediately accessible). The mat established a defined area that helped parents frame camera angles, and served to constrain the space in which children worked, so that it would be visible on-screen. Although a few children did not confine their work to the mat, most did.

Technology issues, too, can pose challenges for collecting observable data remotely. High rates of cell phone penetration, even among low-income families, have eased barriers to internet access; Rideout (2017) found that 95% of U.S. families with children aged 0 to 8 years had smartphones, and 78% had tablets. However, low-income families may not have latest-generation technology or high-speed connections, which can cause freezes or interruptions in sessions; for example, Katz and Rideout (2021) found that 20% of low-income families did not have broadband connections, as opposed to only 11% of families above the poverty level. To minimize such issues, our screener included having families watch a Sesame Street video on the YouTube channel we created for the study, to ensure that their technology was sufficient to watch the videos in the treatment and participate in the remote task-based interviews.

Other potential technology issues stem from the nature of the devices and software used for video chat (in this case, Zoom). In Zoom’s speaker mode, the on-screen view shows only the person who is speaking at the moment. As a result, if Zoom defaults to speaker mode, a researcher’s question during a session can cause the on-screen view to shift to show the researcher instead of the child, preventing the researcher from seeing what the child is doing. To avoid this problem, researchers either pinned the child’s image to the screen or set Zoom to gallery mode (showing a split-screen of the child and researcher) so that the child would be visible throughout.

Similarly, an unexpected issue arose when a pilot family connected to Zoom via a Portal device. Portal’s operating software was designed to automatically zoom in on the face of the person speaking, which prevented the researcher from seeing the child’s hands and his or her work on the task. Fortunately, few families in the study owned Portal devices, so we overcame the issue by simply asking parents to use other devices instead. After the time of our study, however, newer generations of iPads have incorporated a similar auto-zoom feature, so avoiding this issue with more ubiquitous devices may require having families deactivate the feature before data collection.

Managing sessions

Some of the greatest challenges for remote data collection stem, not from materials or technology, but from the participants themselves. Young children’s limited attention spans always create constraints for conducting developmental research, but when data are collected in person, researchers can redirect their attention through physical means, such as moving into the child’s line of vision or gently touching the child’s hand. Recapturing and redirecting a child’s attention can be much more difficult when a researcher is present only through a screen (e.g., Shields et al., 2021); indeed, Grootswagers (2020) points to attentional challenges in collecting remote data, even from adults. To minimize these issues, we limited the length of sessions, to keep them within most children’s attention span. As a rule, we set the time for each hands-on task at a maximum of approximately 10 minutes (unless a child was deeply engaged and wanted to continue working), so that the session of three tasks would be no more than 30–40 minutes, including introductory conversation and set-up.

Once again, this was also an area in which parents played an essential role as assistants, helping to redirect children when they were distracted or lost focus. Indeed, because parents knew their own children far better than researchers could, they had the potential to be more effective than researchers in redirecting their child’s attention. Of course, parents are not always successful in redirecting their children’s attention either. In one extreme case during our pilot test, a child lost interest during a session, crawled into a closet, pulled out a toy, and started to play. Neither the researcher nor the parent was able to redirect him, even when the parent attempted to take the toy away. The only choice at that point was to end the task and move on to the next task in hopes that the new task would hold the child’s attention.

Conversely, however, this is also an area in which enlisting parents as assistants carries challenges of its own. When research is conducted in person, researchers can guard against parents’ interfering in their children’s responses by either interviewing the child apart from the parent or requesting that the parent sit quietly so that the child can respond on his or her own. These techniques are not feasible, though, when the parent is involved in the session as an assistant whose aid is needed for the researcher to be able to see and hear children’s responses, or to manage children’s behavior. If parents (with all good intentions) try to scaffold their children’s performance, or even offer hints while children are working on a task, the parents’ input is likely to mask, or even override, children’s own reasoning. (Shields et al., 2021, report similar challenges of parental involvement in their own remote data collection.)

Thus, in our Sesame Street study, we explained to parents up front what their role would be – to manage the camera and set up materials – but cautioned them not to give any hints or help their child in any way (see Footnote 2), so that we could see how the child figured out each task on his or her own. (Indeed, giving parents the job of “cameraperson” had the side benefit of being helpful in this regard, since it gave parents something to do throughout the session.) The vast majority of parents understood the need and were careful not to interfere. If a parent forgot, and started to offer a hint, the researcher immediately interrupted, and reminded the parent of the importance of letting the child figure out the task by himself or herself. This was effective in most cases.

Still, there were several parents who had more difficulty holding themselves back from trying to support their children by giving them hints, even after researchers reminded them repeatedly not to interfere. In those cases, there was little that researchers could do during the session, so we had to compensate during coding and analysis instead. Coders noted these moments, and evaluated whether a child’s behavior after the parent’s interjection followed naturally from what the child was doing before the parent spoke. If so, the child’s actions were coded as usual. But if the child’s behavior shifted in line with the parent’s hint or suggestion, we did not code the behavior that immediately followed the parent’s interjection. Fortunately, there were only a handful of children for whom this was the case, and excluding actions that arose in response to their parents’ prompting preserved our view of their reasoning.
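The exclusion rule described above can be sketched as a simple filter over coded events. This is a minimal illustration, not the study’s actual coding tooling: the `CodedEvent` class, its field names, and the sample session are hypothetical, and the judgment of whether a behavior “followed naturally” is assumed to have been made by a human coder and recorded as a flag.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CodedEvent:
    """One coded action or utterance (field names are hypothetical)."""
    heuristic: str                      # e.g., "testing hypotheses"
    follows_parent_hint: bool = False   # occurred right after a parent's interjection
    consistent_with_prior: bool = True  # coder's judgment: continues what the child was already doing


def events_to_score(events: List[CodedEvent]) -> List[CodedEvent]:
    """Drop only events that followed a parent's hint AND shifted in line with it."""
    return [
        e for e in events
        if not (e.follows_parent_hint and not e.consistent_with_prior)
    ]


session = [
    CodedEvent("gathering information"),
    CodedEvent("changing materials", follows_parent_hint=True, consistent_with_prior=False),
    CodedEvent("testing hypotheses", follows_parent_hint=True, consistent_with_prior=True),
]
print([e.heuristic for e in events_to_score(session)])
# ['gathering information', 'testing hypotheses']
```

Keeping the two flags separate mirrors the two-step decision in the text: a parent’s interjection alone does not remove a behavior from coding; only a resulting shift in the child’s approach does.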

Pilot testing

Because there was little precedent for conducting task-based interviews remotely via video chat, an extensive pilot test was conducted with a sample that was nearly half as large as in the subsequent study. Whereas the subsequent full study was conducted with a sample of 116 children (with the sample size based on a power analysis assuming a moderate effect size; see Fisch et al., this issue), the pilot study was conducted with 53 children (28 girls and 25 boys) from 21 different states in the United States. The sample included 14 three-year-olds, 20 four-year-olds, and 19 five-year-olds, with a mix of SES and ethnicity. All of the children and their families were either native English speakers or bilingual, so that there would be no language barriers to their understanding the English-language Sesame Street episodes. However, if any children or parents were more comfortable speaking Spanish, their pretest and posttest sessions were conducted in Spanish by bilingual researchers, so that they could express themselves more easily.

The procedure was largely identical to that employed in the subsequent full study. Children were divided into three viewing conditions, each of which watched 12 episodes of Sesame Street at home, over the course of one month. Children in two of the conditions watched episodes from Sesame Street’s playful problem solving curriculum (see Truglio & Seibert Nast, this issue), with some variation of episodes between the two groups. The third group was a control group that watched Sesame Street episodes designed to promote social and emotional learning (SEL) instead. Task-based interviews were administered remotely as a pretest and posttest, before and after the viewing period, and recorded for later coding. (For more details on the procedure, see Fisch et al., this issue.)

The sample for the pilot study was not large enough to expect highly significant pretest-posttest differences between the three conditions, but the results were in the expected direction (i.e., positive change from pretest to posttest, and larger gains among viewers of the playful problem solving episodes) and suggestive enough to serve as proof of concept for the subsequent full study. More important for the purposes of this paper, though, data and experiences from the pilot test were invaluable in informing changes in the design of the tasks, techniques for data collection, and the coding schemes for process skills and the sophistication of children’s solutions.

Tasks and coding

Overall, the tasks were effective in that they were engaging to children, and yielded rich data, prompting a variety of approaches as children worked toward solutions. They were neither too simple for children, which would have produced ceiling effects, nor too difficult, which would have produced floor effects. Changes stemmed more from the need to streamline the amount of time necessary for each task, so that sessions could be conducted without exceeding most children’s attention span. In particular, each task originally included asking children if they wanted to try to find another, different solution after they solved the task correctly, as a measure of motivation. However, pilot data showed that the question did not serve as an effective indicator of motivation, because there were numerous reasons why children did or did not try to find an alternate solution. In addition, the question often was not asked, either because children did not find a correct first solution, or because the session ran out of time. Thus, the question was omitted from the full study, although if children chose to pursue a second, alternate solution on their own, those data were coded as well (as in the example in Table 1).

Pilot data also informed the refinement of the coding schemes for both process and solutions. When children produced unexpected approaches or solutions in the pilot test, they were added as examples to the coding schemes, so that coders would have clear direction as to how to classify them if they also appeared in the full study. A few heuristics were removed from the process score coding scheme because they did not appear more than once or twice in the pilot data, and coders did not code them consistently. With these refinements, the coding schemes proved to be highly reliable across two independent coders in the pilot test (Cronbach’s alpha = .87 for process scores and .89 for solution scores, averaged across the tasks). Indeed, even when the number of coders was doubled for the full study, agreement among the four coders remained high (Cronbach’s alpha = .76 for process scores and .84 for solution scores, averaged across the tasks), as reported by Fisch et al. (this issue).
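For readers less familiar with the statistic, the sketch below shows one standard way to compute Cronbach’s alpha when each coder is treated as an “item” and each child as a case. The paper does not describe how its alphas were computed, so the data layout, the function name `cronbach_alpha`, and the scores are assumptions; the numbers are invented and are not study data.

```python
from statistics import variance
from typing import List


def cronbach_alpha(scores_by_coder: List[List[float]]) -> float:
    """Cronbach's alpha, treating each coder as an 'item'.

    scores_by_coder[j][i] is coder j's score for child i.
    """
    k = len(scores_by_coder)
    if k < 2:
        raise ValueError("need at least two coders")
    # Sum of each coder's (sample) score variance across children.
    coder_variances = sum(variance(coder) for coder in scores_by_coder)
    # Variance of the per-child totals, summed across coders.
    totals = [sum(child_scores) for child_scores in zip(*scores_by_coder)]
    return (k / (k - 1)) * (1 - coder_variances / variance(totals))


# Invented scores for five children from two coders (not study data).
coder_a = [4, 7, 5, 9, 6]
coder_b = [5, 7, 4, 9, 6]
print(round(cronbach_alpha([coder_a, coder_b]), 2))  # 0.97
```

Alpha approaches 1 as coders’ scores rise and fall together across children, and it equals exactly 1 when all coders assign identical scores.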

Techniques for remote data collection

It was during pilot testing that we refined many of the techniques for data collection discussed earlier. Guidelines were clarified for the length of tasks, and researchers shared techniques that they found to be effective in making set-up clear for families, or in managing children or parents when necessary. Through these discussions, procedures for data collection were made stronger and more uniform across researchers.

Pilot testing was also invaluable in revealing technology issues that needed to be overcome. While piloting our methodology, we discovered the auto-zoom issue on Portal devices discussed above. Because few families had Portal devices, we asked families not to use Portal in the full study. During pilot testing, we also discovered the need to use gallery view in Zoom (creating a split-screen of the child and researcher) instead of speaker view, to keep the child visible on-screen throughout the session.

In these ways (and others), the pilot test provided an empirical basis for developing methods for remote data collection that, although grounded in established methods for in-person task-based interviews, had little precedent when conducted through a screen. The result was a set of methods and reliable measures that yielded rich, meaningful data which lent deep insight into children’s processes of critical thinking and problem solving.

Discussion

To summarize, in addition to challenges inherent in both in-person and remote research with preschool children, such as devising tasks that are age-appropriate, align well with the targeted curriculum, allow for multiple approaches, and can be coded reliably and at a sufficient level of precision to detect change, we can add the following challenges for remote data collection:

Challenge #1: Increased need for materials, and replacing delayed or missing materials from a distance. Solutions: Create enough sets of materials to ship to all families, have parents take inventory of materials in advance, send replacements when needed.

Challenge #2: Setting up materials for tasks properly, without a researcher present to do so. Solutions: Have parents assist in set-up, walk families through set-up step-by-step online, provide illustrated instruction sheets as backup.

Challenge #3: Ensuring that children’s actions are visible on screen, and that verbal responses are clearly audible. Solutions: Enlist parents as “camerapeople,” have parents repeat any inaudible responses, provide work mat to frame camera angles and constrain work space.

Challenge #4: The need for families to have devices and internet connections that are sufficient for the demands of the study. Solutions: Provide sample video during recruiting as a test of technological capacity, limit technological demands to the capacity of an older smartphone.

Challenge #5: Preventing video chat devices and software from switching away from children, and auto-zoom features from hindering researchers’ view of children’s work. Solutions: Use gallery mode, avoid or deactivate auto-zoom devices.

Challenge #6: Keeping task demands within the constraints of children’s limited attention span, and recapturing or redirecting children’s attention when necessary. Solutions: Limit length of tasks and sessions, enlist parents as assistants.

Challenge #7: Involving parents to support their children and data collection while also preventing parental prompts that influence children’s behavior or mask their reasoning. Solutions: Assign parents defined roles, explain importance of children’s responding independently, give reminders when needed.

Certainly, we would not argue that remote observation of children’s hands-on problem solving is a perfect substitute for in-person research. However, as this case study demonstrates, the challenges of remote data collection can be overcome, not only in response to a global pandemic, but in future research as well.

Perhaps the greatest limitations of remote data collection lie in the increased difficulty of managing children’s behavior if they lose focus, and managing the behavior of those parents who repeatedly attempt to scaffold their children’s performance. In our study, both of these challenges were greatly reduced through the techniques discussed above, but could not be eliminated completely from a distance.

Despite these limitations, though, when remote data collection is conducted thoughtfully, it also holds the potential for benefits over in-person research, even beyond the constraints of a pandemic. By freeing researchers from the practical constraints of travel, remote data collection enables researchers to employ samples that are much more geographically and demographically diverse than would be possible if data were collected from each participant in person (a point echoed by Shields et al., 2021). Children from 21 different states participated in our pilot test, and the full study included children from 30 states – a far wider reach than would have been possible otherwise. The sample for our full study was also economically and ethnically diverse: Annual family incomes ranged from below $25,000 to over $100,000, and 28% of families identified as multiracial, 28% White, 21% Black/African-American, 21% Hispanic/Latinx, and one family apiece as Asian, Native American, or Albanian (Fisch et al., this issue). Admittedly, the sample for this study probably would have been diverse even if the research had been conducted in person, since we would have recruited in the New York and Philadelphia metropolitan areas, which offer access to highly diverse populations. But the increase in diversity facilitated by remote methods would likely be more pronounced among researchers who are based in areas with less heterogeneous populations.

Naturally, remote data collection is not a cure-all to ensure the representativeness of samples, since low-income families may be limited by lack of access to latest-generation technology or connections. However, as noted earlier, the near-ubiquitous reach of smartphones (Rideout, 2017) has made access issues less daunting than they were a decade ago. Supported by the techniques described above, and others that are sure to emerge in the future, remote methods can be a valuable complement to in-person research, making data more representative of – and thus more generalizable to – the population at large.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Shalom M. Fisch

Shalom M. Fisch is President of MediaKidz Research & Consulting. For over 35 years, he has applied educational practice and empirical research to help create engaging, impactful educational media for children. Prior to founding MediaKidz in 2001, he served as Vice President of Program Research at Sesame Workshop.

Kathy Hirsh-Pasek

Kathy Hirsh-Pasek, a Professor of Psychology at Temple University and a senior fellow at the Brookings Institution, was declared a “scientific entrepreneur” by the American Association of Psychology. The author of 17 books and 250+ publications, she is a leading professor in the science of learning who is known for translating research into actionable impact in schools (Activeplayfullearning.com), digital and screen media, and community spaces (Playfullearninglandscapes.com).

Gavkhar Abdurokhmonova

Gavkhar Abdurokhmonova is a doctoral student in the Human Development and Quantitative Methodology program (concentration in Developmental Science, concentration in Neuroscience and Cognitive Science) at the University of Maryland, working with Dr. Rachel Romeo. Her research moves beyond documenting deleterious outcomes of socioeconomic disparities, and instead explores the neural mechanisms by which environments shape development, with the goal of highlighting the importance of individual differences in children’s language experiences.

Lacy Davis

Lacy Davis, Nachum Fisch, Susan Fisch, and Carolyn Volpe are Researchers at MediaKidz Research & Consulting.

Katelyn Fletcher

Katelyn Fletcher is a former postdoctoral research fellow at Temple University’s Infant and Child Lab and consultant for the Brookings Institution Center for Universal Education. Her research interests focus on playful learning and the design, implementation, and evaluation of educational programs. Katelyn currently works in the field of education philanthropy.

Annelise Pesch

Annelise Pesch is a postdoctoral research fellow working at Temple University. Her research investigates social cognitive development in the preschool years including social learning, trust, play, and the impact of technology on learning and development. She leverages her research to inform the design of high-quality informal learning spaces through the Playful Learning Landscapes initiative.

Jennifer Tomforde

Jennifer Tomforde has worked in the field of educational research and consulting for the past 13 years and currently coordinates a literacy outreach program.

Charlotte Anne Wright

Charlotte Anne Wright is a Learning Designer and Research Specialist at Begin Learning. She led the LEGO Foundation’s Playful Learning and Joyful Parenting project as a research fellow at Temple Infant and Child Lab.

Notes

1. At the time this research was planned in 2021, it appeared that COVID-19 restrictions might lift sufficiently in time to collect data in person. However, the emergence of the Omicron variant at the end of 2021 caused COVID-19 restrictions to be extended, and made it clear that data would have to be collected remotely.

2. The only exception was if a child needed help with a low-level action that he or she found physically difficult, such as tearing a piece of tape off a roll. Parents were allowed to help with such basic actions, so that children’s reasoning would not be masked by largely unrelated issues of physical coordination.

References

- Beck, S. R., Apperly, I. A., Chappell, J., Guthrie, C., & Cutting, N. (2011). Making tools isn’t child’s play. Cognition, 119(2), 301–306. https://doi.org/10.1016/j.cognition.2011.01.003

- Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (1999). How people learn: Brain, mind, experience, and school. National Academy Press.

- Bruner, J. S., Goodnow, J. J., & Austin, G. A. (1956). A study of thinking. John Wiley & Sons.

- Eschman, B., Todd, J. T., Sarafraz, A., Edgar, E. V., Petrulla, V., McNew, M., Gomez, W., & Bahrick, L. E. (2022). Remote data collection during a pandemic: A new approach for assessing and coding multisensory attention skills in infants and young children. Frontiers in Psychology, 12, 1–14. https://doi.org/10.3389/fpsyg.2021.731618

- Evans, N. S., Todaro, R. D., Schlesinger, M. A., Golinkoff, R. M., & Hirsh-Pasek, K. (2021). Examining the impact of children’s exploration behaviors on creativity. Journal of Experimental Child Psychology, 207, 105091. https://doi.org/10.1016/j.jecp.2021.105091

- Fisch, S. M., Fletcher, K., Abdurokhmonova, G., Davis, L., Fisch, N., Fisch, S. R. D., Jurist, M., Kestin, R., Pesch, A., Seguì, I., Shulman, J., Silton, N., Tomforde, J., Volpe, C., Wright, C. A., & Hirsh-Pasek, K. (this issue). “I wonder, what if, let’s try”: Sesame Street’s playful learning curriculum impacts children’s engineering-based problem solving. Journal of Children and Media, 18(3), 334–350.

- Fisch, S. M., Kirkorian, H., & Anderson, D. R. (2005). Transfer of learning in informal education: The case of television. In J. P. Mestre (Ed.), Transfer of learning from a modern multidisciplinary perspective (pp. 371–393). Information Age Publishing.

- Fisch, S. M., Lesh, R., Motoki, E., Crespo, S., & Melfi, V. (2014). Cross-platform learning: How do children learn from multiple media? In F. C. Blumberg (Ed.), Learning by playing: Video gaming in education (pp. 207–219). Oxford University Press.

- Goldin, G. A. (2000). A scientific perspective on structured, task-based interviews in mathematics education research. In A. E. Kelly & R. A. Lesh (Eds.), Handbook of research design in mathematics and science education (pp. 517–545). Lawrence Erlbaum Associates.

- Grindal, T., Silander, M., Gerard, S., Maxon, T., Garcia, E., Hupert, N., Vahey, P., & Pasnik, S. (2019). Early science and engineering: The impact of The Cat in the Hat Knows a Lot About That! on learning. Education Development Center, Inc. & SRI International.

- Grootswagers, T. (2020). A primer on running human behavioural experiments online. Behavior Research Methods, 52(6), 2283–2286. https://doi.org/10.3758/s13428-020-01395-3

- Hall, E. R., Esty, E. T., & Fisch, S. M. (1990). Television and children’s problem-solving behavior: A synopsis of an evaluation of the effects of Square One TV. The Journal of Mathematical Behavior, 9(2), 161–174.

- Hensen, B., Mackworth-Young, C. R. S., Simwinga, M., Abdelmagid, N., Banda, J., Mavodza, C., Doyle, A. M., Bonell, C., & Weiss, H. A. (2021). Remote data collection for public health research in a COVID-19 era: Ethical implications, challenges and opportunities. Health Policy and Planning, 36(3), 360–368. https://doi.org/10.1093/heapol/czaa158

- Inhelder, B., & Piaget, J. (1958). The growth of logical thinking: From childhood to adolescence. Basic Books. https://doi.org/10.1037/10034-000

- Katz, V., & Rideout, V. (2021). Learning at home while under-connected: Lower-income families during the COVID-19 pandemic. New America. https://www.newamerica.org/education-policy/reports/learning-at-home-while-underconnected/

- Martone, A., & Sireci, S. G. (2009). Evaluating alignment between curriculum, assessment, and instruction. Review of Educational Research, 79(4), 1332–1361. https://doi.org/10.3102/0034654309341375

- Rideout, V. (2017). The common sense census: Media use by kids age zero to eight. Common Sense Media.

- Shields, M. M., McGinnis, M. N., & Selmeczy, D. (2021). Remote research methods: Considerations for work with children. Frontiers in Psychology, 12, article 703706. https://doi.org/10.3389/fpsyg.2021.703706

- Truglio, R. T., & Seibert Nast, B. (this issue). Playful problem solving: Positive approaches to learning on Sesame Street. Journal of Children and Media, 18(3), 322–333.

- Van Nuil, J. I., Schmidt-Sane, M., Bowmer, A., Brindle, H., Chambers, M., Dien, R., Fricke, C., Hong, Y. N. T., Kaawa-Mafigiri, D., Lewycka, S., Rijal, S., & Lees, S. (2023). Conducting social science research during epidemics and pandemics: Lessons learnt. Qualitative Health Research, 33(10), 815–827. https://doi.org/10.1177/10497323231185255

Appendix

Three pairs of hands-on tasks were created to assess children’s critical thinking skills in the context of problem solving:

Slides/Chutes (aligned with the “Ramp Racers” episode): In the Slides task, children were shown two identical toy animals (in different colors) and two identical slides made of blocks and cardboard. Children were asked to change the slides so that one of the animals would always slide more quickly than the other. The Chutes task was the same, but the two animals were balls with animal faces, the cardboard slides were replaced with cardboard chutes, and the goal was to make one animal roll more slowly than the other. Each task could be solved, for example, by changing the slope of one or both ramps, or by placing a textured object (either fur or a non-skid mat) on a ramp to increase friction. Other solutions were possible as well.

Tower/Giraffe (aligned with the “Tallest Block Tower Ever” episode): In the Tower task, children were shown a small cardboard cutout of Baby Bear (not the Sesame Street character) and a block tower that was the same height. Children were asked to build a larger tower that would be the same height as a cutout of Papa Bear. In the Giraffe task, children were shown a “feeder” that was too tall for a cutout giraffe to reach the leaf at the top of the feeder. They were asked to build a smaller tower that would be just the right height to put the leaf next to the giraffe’s mouth. In both the Tower and Giraffe tasks, it was possible to build towers with blocks alone, but difficult to keep the blocks balanced. To build a stable tower, it was more effective to combine blocks with other, nonstandard materials that were on hand (such as a pack of Post-It notes, a box of paperclips, or cotton balls, provided in the toolkit that is discussed in the body of this paper).

Roof/Goldfish (aligned with the “Rainy Day Play” episode): In the Roof task, children were shown a toy cat or dog in a clear plastic cup that served as its “house.” They were asked to build a roof for the house that would keep the animal dry in the rain. The Goldfish task was identical, but the animal in the cup was a goldfish who wanted a roof that would let water through to let the goldfish get wet in the rain. In both tasks, children were given several pieces of material (some sturdy, some not; some waterproof, some not) that they could use to make their roofs, or they could use other materials from the toolkit instead. The task materials also included a small bottle of water so that children could test their roofs if they wanted, although we did not ask them to do so.