Abstract

Parkinson’s is a neurodegenerative condition associated with several motor symptoms, including tremor and slowness of movement. Freezing of gait (FOG), the sensation of one’s feet being “glued” to the floor, is one of the most debilitating symptoms of advanced Parkinson’s. FOG not only contributes to falls and related injuries but also compromises quality of life, as people often avoid engaging in functional daily activities both inside and outside the home. In the current study, we describe a novel system designed to detect FOG and falls in people with Parkinson’s (PwP), and to monitor and improve their mobility using laser-based visual cues cast by an automated laser system. The system uses an RGB-D sensor (Microsoft Kinect v2) and a laser casting system consisting of two servo motors and an Arduino microcontroller. The system was evaluated by 15 PwP with FOG. Here, we present details of the system along with a summary of the feedback provided by PwP. Despite limitations regarding outdoor use, feedback was very positive in terms of domestic usability and convenience, with 12 of the 15 PwP expressing interest in installing and using the system in their homes.

Implications for Rehabilitation

- Providing an automatic and remotely manageable monitoring system for gait analysis and fall detection in PwP.

- Providing an automatic, unobtrusive and dynamic visual cue system for PwP based on laser line projection.

- Gathering feedback from PwP about the practical use of the implemented system through focus group events.


Introduction

Parkinson’s disease (PD), caused by the depletion of dopamine in the substantia nigra, is a degenerative neurological condition affecting the initiation and control of movement, particularly walking [Citation1,Citation2]. Motor symptoms associated with PD include akinesia, hypokinesia and bradykinesia [Citation3]. Another major symptom is freezing of gait (FOG), which usually presents in advanced Parkinson’s [Citation4–7]. FOG is one of the most debilitating and least understood symptoms of Parkinson’s. It is exacerbated by several factors, including walking through narrow spaces, turning, and stressful situations [Citation7,Citation8]. FOG and associated falls often incapacitate people with Parkinson’s (PwP) and, as such, can have a significant detrimental impact at both a physical and a psychosocial level [Citation6]. Consequently, patients’ quality of life decreases, and health care and treatment expenditures increase substantially [Citation9]. A study conducted at the University of Rochester’s Strong Memorial Hospital [Citation10] showed that ∼30% of PwP experience sudden, unexpected freezing episodes, highlighting how many PwP depend on physical or psychological strategies that may alleviate FOG and help them start walking again.

Many studies suggest that auditory [Citation11–14] and visual cues [Citation13–26] can improve gait performance in PwP, especially during FOG. Rubinstein et al. [Citation14] observed that in the presence of an external “movement trigger” (i.e., a sensory cue), a patient’s self-paced actions, such as walking, can be significantly improved, a phenomenon known as “kinesia paradoxica”.

PD monitoring

Many previous studies have developed methods for monitoring FOG and intervening to improve motor symptoms with external visual cues. Many of these use computer vision technologies to minimize the need for patients to wear measurement devices, which can be cumbersome and may also alter a person’s movement characteristics. Since the release of the Microsoft Kinect camera (Microsoft, Redmond, WA), a peripheral 3D camera designed as an alternative to conventional gamepads for Xbox gaming consoles, several attempts have been made to use the Kinect sensor as a noninvasive approach for monitoring PD-related gait disorders, with many studies focusing on rehabilitation outcomes and experimental methods for monitoring patients’ activities. For instance, Takač et al. [Citation27] developed a home tracking system using Microsoft Kinect sensors to help PwP who experience regular FOG. Multiple Kinect sensors were interconnected to provide wider coverage of the test environment, and data from all sensors were gathered on a central computer and stored in a centralized database for further analysis and processing. The approach employed a model based on each subject’s color histogram and height, together with the known average movement delays between cameras. However, because each Kinect produces a raw RGB data stream, computing color histograms from multiple streams in real time requires a powerful processor and a significant amount of memory, and synchronizing the camera feeds adds further computation.

Mobility improvement

Previous research has demonstrated that dynamic visual cues (such as laser lines projected on the floor) can profoundly improve walking in PwP [Citation14]. Furthermore, strong evidence now suggests that it is not merely the presence of sensory information (or an external “goal” for movement) that drives these improvements (kinesia paradoxica), but rather the presence of continuous and dynamic sensory information. This was first demonstrated by Azulay et al. [Citation23], who showed that the significant gait benefits of walking on visual stepping targets were lost when patients walked on the same targets in a room illuminated by stroboscopic lighting, which made the visual targets appear static. Similar observations have also been made in the auditory domain [Citation3].

In Zhao et al. [Citation28], a visual cue system to improve gait performance in PwP was implemented as a wearable device mounted on the subjects’ shoes. Laser pointers providing the visual cues were fitted to a pair of modified shoes using a 3D-printed caddy, and pressure sensors detected the stance phase of gait and triggered the laser pointers when a freeze occurred. While effective and intuitive to use, any reliance on attachable or wearable apparatus has clear potential to be cumbersome, and it requires users to remember to attach the appropriate devices even around the house, where many people experience significant problems with FOG at times when they are not wearing shoes. In another wearable approach [Citation29], the effect of a subject-mounted light device (SMLD) projecting visual step-length markers on the floor was evaluated. The study showed that an SMLD induced a statistically significant improvement in subjects’ gait performance. Nevertheless, it was suggested that the requirement to wear an SMLD might lead to practical difficulties, both in terms of comfort and the potential for the device to affect patients’ movement characteristics. The visual cue system of Velik et al. [Citation22], comprising an SMLD and a backpack containing a remotely controlled laptop that subjects must carry, inevitably has comparable shortcomings. Moreover, as with the aforementioned technologies, the laser visual cues are always switched on, regardless of the subject’s FOG status or gait performance. McAuley et al. [Citation25] and Kaminsky et al. [Citation24] proposed virtual cueing spectacles (VCS) which, similar to approaches that project targets on the floor, project virtual visual targets onto a user’s spectacles. The use of VCS might eliminate the major disadvantages of SMLDs (and other wearable approaches), but these systems must still either detect FOG onset or remain switched on constantly, even when not required.

Fall detection

Similar to the technological developments described above, several attempts have been made to design automated methods for detecting falls in older adults, based on techniques such as wearable devices [Citation30–33] and computer vision [Citation34–36]. As falls are a major problem for PwP with FOG (identified in 2017 as a top research priority by Parkinson’s UK), such developments are particularly relevant and should ideally be integrated with attempts to provide sensory cues for movement. The Microsoft Kinect has previously been used as a noninvasive approach for fall detection. For instance, in Mastorakis et al. [Citation35], the user’s body velocity and inactivity were analyzed within a 3D bounding box (the active area of interest), which made floor detection unnecessary and removed the need for any prior knowledge of the environment, such as the floor’s position or height. In Stone et al. [Citation34], an algorithm was developed that determines a subject’s vertical state in each frame and uses feature extraction and a decision tree to flag a fall; the study used 454 simulated falls and nine real fall incidents. Finally, in Rusko et al. [Citation37], different machine learning techniques, including naive Bayes, decision trees and support vector machines (SVM), were compared for fall detection, with the decision tree proving the most accurate, detecting true positive fall incidents with a success rate of over 93.3%.

In the current study, we describe a novel integrated system that not only provides an unobtrusive tool for monitoring falls and FOG incidents using the Microsoft Kinect v2 camera, but also implements an ambient-assisted living (AAL) environment designed to improve patients’ mobility during a FOG incident through automatic projection of laser-based visual cues. Because the laser lines are cast dynamically according to the patient’s orientation and position in the room, the system can deliver sensory information tailored to each user while eliminating any need to wear body-worn sensors.

In conjunction with this system, we have developed a companion smartphone application and client software that enable doctors, healthcare providers and family members to monitor the patient and receive notifications of possible incidents. Upon detecting a fall, the system can automatically capture the event with a time stamp and notify a relevant person via email, a live video feed (through the smartphone companion app), a Skype conversation or the developed client software.

Methods

FOG detection and algorithm

The approach for FOG detection builds on previously published work by the authors [Citation38,Citation39]. An algorithm was developed that monitors the subject’s gait cycle and the number of footsteps within a given time in order to estimate the occurrence of FOG. A foot-off event is considered to have occurred when the knee angle of one leg decreases below a specific threshold; following extensive pilot testing, this threshold was set to 170°. A foot contact is then triggered when the knee angle of the same leg returns to its original range (170° < θ ≤ 180°) after more than 200 ms. This 200 ms threshold was set to avoid false positives caused by inconsistencies and noise in the Kinect data.
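To make the foot-off and foot-contact rules concrete, a minimal Python sketch follows. It assumes the knee angle is the angle at the knee joint between the hip and ankle joint positions reported by the Kinect, and it reads “more than 200 ms” as the minimum time between a foot-off and the following foot contact. The `fog_suspected` rule, with its 2 s window and one-step minimum, is a hypothetical placeholder: the text does not state how step counts are turned into a FOG estimate.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) joint position in metres

FOOT_OFF_DEG = 170.0   # foot-off when the knee angle drops below this (value from the text)
MIN_SWING_S = 0.200    # contact only counted if more than 200 ms after foot-off (from the text)


def knee_angle_deg(hip: Point, knee: Point, ankle: Point) -> float:
    """Angle at the knee between the thigh (knee->hip) and shank (knee->ankle) vectors."""
    u = [h - k for h, k in zip(hip, knee)]
    v = [a - k for a, k in zip(ankle, knee)]
    cos_theta = sum(p * q for p, q in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


@dataclass
class LegEventDetector:
    """Tracks one leg and emits 'foot_off' / 'foot_contact' events frame by frame."""
    in_swing: bool = False
    foot_off_time: float = 0.0
    contact_times: List[float] = field(default_factory=list)

    def update(self, t: float, knee_deg: float) -> Optional[str]:
        if not self.in_swing and knee_deg < FOOT_OFF_DEG:
            self.in_swing = True
            self.foot_off_time = t
            return "foot_off"
        if (self.in_swing and FOOT_OFF_DEG < knee_deg <= 180.0
                and t - self.foot_off_time > MIN_SWING_S):
            self.in_swing = False
            self.contact_times.append(t)
            return "foot_contact"
        return None


def fog_suspected(contact_times: List[float], now: float,
                  window_s: float = 2.0, min_steps: int = 1) -> bool:
    """Hypothetical rule: flag possible FOG if too few foot contacts occurred recently."""
    recent = [t for t in contact_times if now - window_s <= t <= now]
    return len(recent) < min_steps


# Usage: simulated knee angles for one leg at a few frame times (seconds).
leg = LegEventDetector()
events = [leg.update(t, a) for t, a in [(0.0, 178.0), (0.1, 160.0), (0.2, 175.0), (0.35, 176.0)]]
```

Here the return to 175° at 0.2 s is ignored because only 100 ms have passed since foot-off, and the contact is registered at 0.35 s. A step-count rule on its own cannot tell a freeze from a deliberate pause, so it would presumably need to be combined with other context in practice.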

Vertical and horizontal servos’ angle calculation

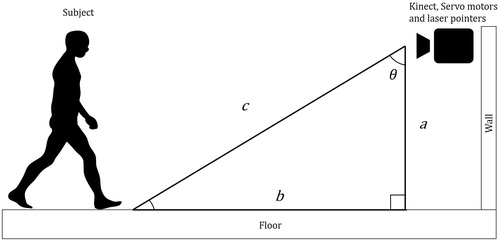

As mentioned above, the system was designed to provide dynamic visual cues using a set of two laser lines projected in front of the patient. The position and orientation of these lines are controlled by two servo motors mounted perpendicular to each other. Once the location of the subject’s ankle joints in the room has been determined from the Kinect v2 skeletal data stream, the appropriate angle for the vertical servo can be calculated using the Pythagorean theorem, as depicted in Figure 1.

Here, “a” is the height of the Kinect camera above the floor and “c” is the hypotenuse of the right triangle, which equals the distance along the Z-axis from the Kinect camera to the subject’s closest foot joint. θ is the calculated vertical angle for the servo motor. The position offsets in the X and Y axes between the servo motor and the center of the Kinect’s lens were taken into account when calculating the correct angle for the vertical servo motor and laser pointer.
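A minimal sketch of the vertical-angle calculation follows. It assumes the Kinect is mounted level, so the foot’s Z coordinate is treated as the horizontal leg b of the right triangle and the hypotenuse c is obtained from the Pythagorean theorem; with the working range reaching down to 1.38 m, below the 1.57 m mounting height, a Z distance shorter than a could not itself be the hypotenuse. θ is measured from the vertical, and the servo offsets and the cue offset in front of the foot are hypothetical parameters.

```python
import math


def vertical_servo_angle_deg(kinect_height_m: float, foot_z_m: float,
                             servo_dy_m: float = 0.0, servo_dz_m: float = 0.0,
                             cue_offset_m: float = 0.0) -> float:
    """Tilt of the laser from straight down, in degrees, to hit a point on the floor.

    Assumes a level Kinect, so the foot's Z (depth) is a horizontal distance.
    servo_dy_m / servo_dz_m: hypothetical pivot offsets relative to the Kinect lens.
    cue_offset_m: hypothetical distance in front of the foot (towards the camera).
    """
    a = kinect_height_m + servo_dy_m            # pivot height above the floor
    b = foot_z_m - servo_dz_m - cue_offset_m    # horizontal distance from pivot to target
    if b < 0:
        raise ValueError("target lies behind the laser pivot")
    c = math.hypot(a, b)                        # hypotenuse, c = sqrt(a^2 + b^2)
    return math.degrees(math.acos(a / c))       # cos(theta) = adjacent / hypotenuse
```

For instance, with the 1.57 m mounting height and a foot 1.57 m away, the laser is tilted 45° from the vertical; the mapping from θ to the servo’s own command angle depends on how the servo is mounted.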

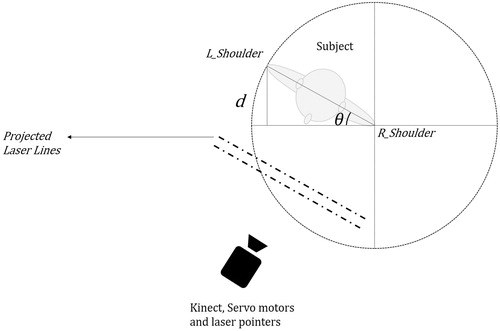

To determine the horizontal servo angle, the difference between the positions of the subject’s left and right shoulders along the Z-axis (depth) was calculated, as shown in Figure 2 (variable d).

Figure 2. Horizontal angle determination (note that the Kinect sees a mirrored image, so the shoulders appear reversed).

The subject’s body rotation angle θ can then be determined by calculating the inverse sine of d divided by the distance between the two shoulder joints. Depending on whether the subject rotates to the left or to the right, θ is subtracted from or added to 90°, respectively, to obtain the horizontal servo angle. This is because, in order to cast the laser lines in front of the subject, the horizontal servo motor must rotate in the opposite direction to the subject’s body rotation.
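The horizontal angle can be sketched in the same way. This assumes the shoulder span used in the inverse sine is the shoulder-to-shoulder distance in the horizontal (X-Z) plane, and that a positive θ corresponds to the turn direction for which θ is subtracted from 90°; because the Kinect image is mirrored, the actual sign would need to be checked on the hardware. The clamp to 0 to 180° reflects a typical hobby servo’s range and is also an assumption.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, Kinect camera space


def body_rotation_deg(left_shoulder: Point, right_shoulder: Point) -> float:
    """Signed rotation of the shoulder line away from squarely facing the camera."""
    dx = right_shoulder[0] - left_shoulder[0]
    d = right_shoulder[2] - left_shoulder[2]    # depth difference ('d' in Figure 2)
    span = math.hypot(dx, d)                    # shoulder-to-shoulder distance in the X-Z plane
    return math.degrees(math.asin(max(-1.0, min(1.0, d / span))))


def horizontal_servo_angle_deg(left_shoulder: Point, right_shoulder: Point) -> float:
    """Servo angle around a 90-degree centre, turned opposite to the body rotation."""
    theta = body_rotation_deg(left_shoulder, right_shoulder)
    # Assumed sign convention: a positive theta is subtracted and a negative one
    # is effectively added, covering both cases from the text with one formula.
    return max(0.0, min(180.0, 90.0 - theta))
```

A subject squarely facing the camera gives d = 0 and therefore the 90° centre position; a 45° turn one way or the other moves the servo to 45° or 135°.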

Fall detection and algorithm

Fall detection using the Microsoft Kinect has previously been shown to be highly accurate [Citation34–36,Citation39]. This research employed the heuristic fall detection algorithm from [Citation40], which achieved 97% true positive detection accuracy for fall incidents. The approach is based on the distance of the subject’s head “joint” (as detected by the Kinect) from the floor, together with its velocity and acceleration relative to the floor. The implemented algorithm continuously tracks the subject’s head in 3D Cartesian coordinates, as well as its distance to the floor, in 1 s time bins, which enables the head’s velocity and acceleration to be calculated. By combining these with the head’s distance to the floor, the system can eliminate most false positives, such as low-velocity events (e.g., lying down) and events in which the head remains high above the ground (e.g., sitting on a chair). The system can also distinguish between recoverable and unrecoverable fall incidents based on a user-defined threshold.
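The heuristic can be outlined as below. This is an illustrative sketch, not the algorithm of [Citation40]: all thresholds are hypothetical placeholders, head-to-floor distances are assumed to be sampled once per 1 s bin, and the user-defined threshold for an unrecoverable fall is assumed to be the time the head stays near the floor. The floor plane could, for example, be taken from the floor clip plane that the Kinect v2 SDK reports with each body frame.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Plane = Tuple[float, float, float, float]  # floor plane (A, B, C, D): A*x + B*y + C*z + D = 0


def head_floor_distance(head: Tuple[float, float, float], plane: Plane) -> float:
    """Perpendicular distance from the head joint to the floor plane."""
    a, b, c, d = plane
    return (a * head[0] + b * head[1] + c * head[2] + d) / math.sqrt(a * a + b * b + c * c)


@dataclass
class FallThresholds:
    """All values here are hypothetical placeholders, not the published ones."""
    min_down_speed: float = 1.0     # m/s, downward head speed between 1 s bins
    min_down_accel: float = 0.5     # m/s^2, downward change in that speed
    max_floor_dist: float = 0.4     # m, head must end up this close to the floor
    unrecoverable_s: float = 30.0   # s spent down before the fall counts as unrecoverable


def detect_fall(heights: Sequence[float], thr: FallThresholds, dt: float = 1.0) -> Optional[int]:
    """Return the index of the 1 s bin in which a fall is detected, or None."""
    for i in range(2, len(heights)):
        v_prev = (heights[i - 1] - heights[i - 2]) / dt
        v = (heights[i] - heights[i - 1]) / dt      # negative means the head is descending
        accel = (v - v_prev) / dt
        if (v <= -thr.min_down_speed and accel <= -thr.min_down_accel
                and heights[i] <= thr.max_floor_dist):
            return i
    return None


def fall_status(heights: Sequence[float], thr: FallThresholds, dt: float = 1.0) -> str:
    """Classify a sequence of per-bin head-to-floor distances (oldest first)."""
    i = detect_fall(heights, thr, dt)
    if i is None:
        return "no_fall"        # e.g. slow lying down, or sitting with the head kept high
    if any(h > thr.max_floor_dist for h in heights[i + 1:]):
        return "recovered"      # head rose again after the fall
    time_down = (len(heights) - 1 - i) * dt
    return "unrecoverable" if time_down >= thr.unrecoverable_s else "fall_ongoing"
```

Requiring a high downward speed, a downward acceleration and a low final head position together is what lets slow lying down and sitting on a chair pass without an alert.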

Experimental setup

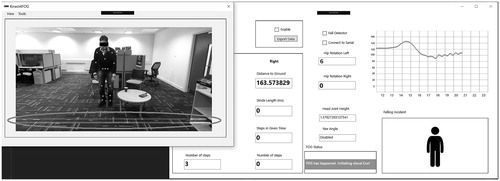

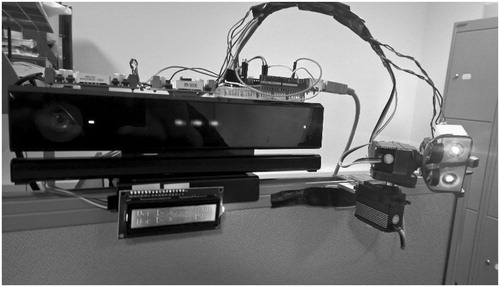

The developed visual cue system consisted of two servo motors controlling the vertical and horizontal movements of two class 3B, 10 mW, 532 nm green line laser projectors. The servo motors are controlled by an Arduino Uno microcontroller, which receives its inputs from a PC running bespoke algorithms on the Kinect v2 data. An LCD on the prototype displays basic information about the vertical and horizontal angle adjustments to the user.
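The text does not describe the message format between the PC and the Arduino Uno, so the sketch below uses a hypothetical fixed-width ASCII frame carrying both servo angles and a laser on/off flag. On the PC side, the bytes could be written to the serial port with pySerial’s `Serial.write()`; on the Arduino side, each parsed angle could be passed to the Servo library’s `write()`, which takes an angle in degrees.

```python
def clamp_servo(deg: float) -> int:
    """Round and clamp an angle to the 0-180 degree range of a standard hobby servo."""
    return int(round(max(0.0, min(180.0, deg))))


def encode_command(vertical_deg: float, horizontal_deg: float, lasers_on: bool) -> bytes:
    """Build a hypothetical ASCII frame such as 'V045H090L1' plus newline for the Arduino."""
    v = clamp_servo(vertical_deg)
    h = clamp_servo(horizontal_deg)
    # Fixed-width fields keep parsing on the microcontroller trivial.
    return f"V{v:03d}H{h:03d}L{int(lasers_on)}\n".encode("ascii")
```

A fixed-width frame with a newline terminator lets the microcontroller read one complete command at a time and reject anything of the wrong length.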

Figure 3. A view of the prototype system. The two servo motors controlling vertical and horizontal movement are on the left of the image (between the Kinect and lasers).

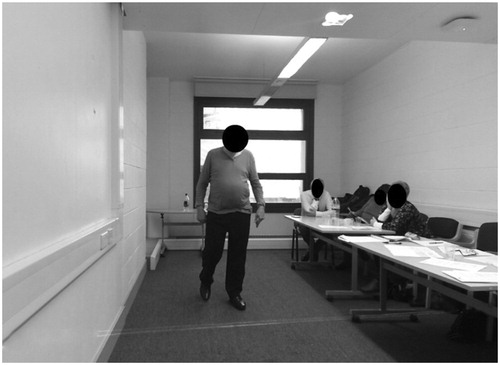

During development, the horizontal servo motor was given a 150 ms delay. This latency was introduced to minimize motor jitter, as a subject is unlikely to change body orientation within that timeframe. The vertical servo motor showed a 33 ms delay owing to the 30 Hz sampling frequency of the Kinect v2. During the focus group sessions, participants were instructed to walk towards the Kinect camera along predefined paths, within a distance range of 4.33 m to 1.38 m, while their gait and locomotion were tracked by the system. The Kinect was placed perpendicularly, at a height of 1.57 m above the ground.
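One way to realize the 150 ms horizontal delay, assumed here to be a minimum interval between horizontal servo updates (the text does not say how it was implemented), is sketched below; the vertical servo simply follows every Kinect frame, which at 30 Hz corresponds to the roughly 33 ms lag reported above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

KINECT_FRAME_S = 1 / 30   # Kinect v2 body frames arrive at about 30 Hz (~33 ms apart)


@dataclass
class ServoScheduler:
    """Decide which servo angles to send for each Kinect frame."""
    horizontal_period_s: float = 0.150   # 150 ms hold on the horizontal servo (from the text)
    last_horizontal_t: Optional[float] = None

    def step(self, t: float, vertical_deg: float,
             horizontal_deg: float) -> Tuple[float, Optional[float]]:
        # The vertical angle is sent every frame, so its lag is one frame.
        send_horizontal = (self.last_horizontal_t is None
                           or t - self.last_horizontal_t >= self.horizontal_period_s)
        if send_horizontal:
            self.last_horizontal_t = t
        return vertical_deg, (horizontal_deg if send_horizontal else None)


# Usage: ten consecutive frames; collect the frame indices where the horizontal servo moves.
sched = ServoScheduler()
moved = [i for i in range(10) if sched.step(i * KINECT_FRAME_S, 30.0, 90.0)[1] is not None]
```

With frames every 1/30 s, the horizontal servo moves on frames 0 and 5 of the first ten, i.e. at most about six times per second, while the vertical angle is refreshed on every frame.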

The developed software provides real-time information about the patient, including stride performance, fall tracking and the subject’s location in the room. When a FOG incident occurs, it is flagged in the “FOG Status” section, and a red circle indicates the laser-based visual cue.

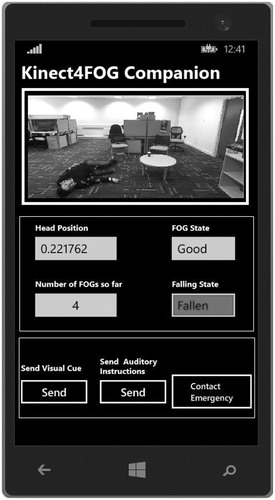

The system’s smartphone companion application was designed for healthcare providers, doctors and carers. It provides information about the subject, including the number of estimated FOG incidents, and notifies a carer if a critical fall incident occurs. It also allows the carer to send visual or auditory cues during a FOG incident or to contact the emergency services. Depending on user preference, the system can contact a relevant person via email or via notifications in the companion application, including a live stream of the incident and a time stamp. Once notified, a user can also initiate a Skype conversation to talk to the patient and provide further support.

The developed software, including its behavior when a fall incident occurs, was designed as an open-ended solution that can provide alternative visual or auditory cues. However, while feasible, such additions are beyond the scope of this study.

User interface and doctor–patient communication

The developed system provides a comprehensive graphical user interface (GUI) that enables doctors and healthcare providers to gather important information about a patient’s gait performance in real time, such as stride time, the number of steps in a given time and the total number of steps. These data can be recorded and exported to the patient’s database profile for later analysis and evaluation.
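The gait measures listed above can be derived from foot-contact times such as those produced by the knee-angle rule. The sketch assumes stride time is the interval between successive contacts of the same foot, that every foot contact counts as one step, and that the 10 s default window is an arbitrary, hypothetical choice.

```python
from typing import Dict, List, Optional, Sequence


def stride_times(contacts: Sequence[float]) -> List[float]:
    """Stride time: interval between successive contacts of the same foot."""
    return [b - a for a, b in zip(contacts, contacts[1:])]


def steps_in_window(contacts: Sequence[float], now: float, window_s: float) -> int:
    """Number of foot contacts (either foot) within the last window_s seconds."""
    return sum(1 for t in contacts if now - window_s < t <= now)


def gait_summary(left: Sequence[float], right: Sequence[float],
                 now: float, window_s: float = 10.0) -> Dict[str, Optional[float]]:
    """Summarize sorted per-foot contact times (seconds) into GUI-style gait measures."""
    all_contacts = sorted([*left, *right])
    strides = stride_times(left) + stride_times(right)
    return {
        "total_steps": len(all_contacts),
        "steps_in_window": steps_in_window(all_contacts, now, window_s),
        "mean_stride_time_s": sum(strides) / len(strides) if strides else None,
    }


summary = gait_summary(left=[0.0, 1.1, 2.2], right=[0.55, 1.65], now=2.2, window_s=1.0)
```

For example, contacts at 0.0, 1.1 and 2.2 s (left) and 0.55 and 1.65 s (right) give five steps in total, two steps in the last second, and a mean stride time of about 1.1 s.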

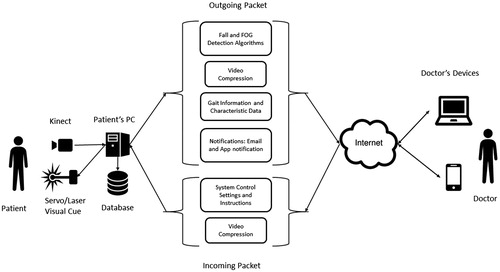

The developed software also enables a user to log in, observe the patient’s status and provide support should the patient require it. Figure 6 illustrates the network diagram that relays video streams via the internet to the smartphone and client applications.

Figure 6. Network connection diagram including outgoing and incoming data packets over the internet.

A smartphone companion application was also developed that provides notifications and a live video stream, allowing a patient to be monitored regardless of the location of, and time difference between, the patient and the healthcare provider. As these features were also demonstrated during the focus group, participants were asked in their questionnaires to appraise the smartphone companion app, the live video stream and the remote visual cue trigger.

Focus group and participants

The aim of the focus group was to review the functionality and performance of the developed system. Fifteen PwP (12 male, 3 female) participated in the focus group, of whom seven volunteered to interact directly with and evaluate the system. The 15 volunteers were split into three groups of five.

Participants were recruited from local support groups run by Parkinson’s UK. Potential participants were given written information about the study and were invited to participate. They were reminded that they were under no obligation to take part and could withdraw at any time. All participants provided written informed consent. All investigations were carried out according to the principles laid down by the Declaration of Helsinki of 1975, revised in 2008. Ethical approval for the research was granted by Brunel University London’s ethics committee.

Participants were given the opportunity to experience the system themselves. They were asked to walk towards the camera while being monitored, to assess the system’s visual cue projection and to observe the dynamic laser lines in action. In addition, falls were simulated by a healthy adult to demonstrate the system’s fall detection and live support capabilities. Seven of the 15 participants tested the system, and all 15 observed the prototype in operation. At the end of each session, participants answered a series of questions about their experience and opinions of the system; their feedback is summarized in the Results.

Table 1. Patient participants.

Results

Figure 7 shows a volunteer trialling the system. As can be seen, upon the occurrence of a FOG incident, the system casts laser lines as visual cues in front of the patient according to his direction and location in the room. The participants’ feedback from the focus group is summarized below.

Figure 7. A PD patient volunteering to try out the system’s capabilities in detecting FOG. Visual cues are projected on the floor based on his whereabouts.

Table 2. Test subjects’ characteristics (n = 15; 12 males, 3 females).

When asked how the healthcare provider should communicate with the patient remotely during a critical fall incident, eight patients suggested a telephone call, six suggested a Skype video call, and one remained neutral. While the prototype cost £137.69 to build (excluding the controlling PC), patients suggested that they would be willing to pay between £150 and £500 to have the system installed in their homes. Nevertheless, if the prototype were released as a commercial device, other economic factors, including insurance, maintenance and the need to install multiple systems in different rooms, would inevitably increase the price. Finally, when asked about possible improvements to the final product, eight patients suggested that a hybrid/portable device that could also provide visual cues outdoors would be very beneficial, whereas seven wanted it kept as simple as possible to keep the cost down, with a separate device for outdoor use.

Discussion

The aim of this study was to develop and evaluate an integrated system capable of detecting falls and FOG, providing visual cues oriented to a user’s position, and offering a range of communication options. Based on the patients’ feedback, and in accordance with previous research, we conclude that our system can be helpful and could replace alternative, potentially less capable technologies such as laser canes and laser-mounted shoes. Because the system is an open-ended proof of concept, its coverage is limited to a single axis. Future improvements could remove this constraint by mounting the lasers, servo motors and Kinect camera on a ceiling-mounted circular rail capable of moving and rotating according to the subject’s position and direction in the room. Although this research focused solely on the automatic projection of dynamic visual cues, the system was designed to accommodate additional features, such as auditory cues, in future developments.

Overall, based on the patients’ feedback, the system represents a viable solution for detecting fall incidents and providing help during a critical fall when the patient is unattended. Participants’ feedback also indicates that the system can provide unobtrusive, automatic visual cue projection at home when needed during a FOG episode.

Conclusion

The results of this research demonstrate the viability of an automatic and unobtrusive system, based on the Microsoft Kinect camera, for monitoring and improving the mobility of PwP. A visual cueing system based on laser lines for alleviating FOG incidents in PwP has been developed and reviewed by 15 PwP. Feedback on the utility of the system was promising: all participants either “agreed” or “strongly agreed” that the system’s visual cues help increase their mobility and walking performance. Of those who tested the system, 86.6% were satisfied with its FOG detection, whereas 13.3% neither agreed nor disagreed about its competency in detecting FOG incidents. Nevertheless, the system has shortcomings, such as indoor-only coverage and the need to install it in each of the most commonly used areas of a house.

Overall, compared with currently available commercial devices, this system provides a broadly affordable solution that, theoretically, offers a means of improving patients’ mobility unobtrusively. Moreover, it is one of the few solutions that can function in an automated fashion in terms of event detection, cue provision and communication with third parties. Its ease of use and simple installation process compared with other available solutions could make the system desirable for indoor assistance, as suggested by participants in the current study.

Acknowledgements

We would like to thank Parkinson’s UK for facilitating the recruitment of PD patients and for advising us on the recruitment process.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Yarnall A, Archibald N, Brun D. Parkinson’s disease. Lancet. 2015;386:896–912.

- Dirkx MF, den Ouden HEM, Aarts E, et al. Dopamine controls Parkinson’s tremor by inhibiting the cerebellar thalamus. Brain. 2017;140:721–734.

- Young WR, Shreve L, Quinn EJ, et al. Auditory cueing in Parkinson’s patients with freezing of gait. What matters most: action-relevance or cue-continuity? Neuropsychologia. 2016;87:54–62.

- Pickering RM, Grimbergen YAM, Rigney U, et al. A meta-analysis of six prospective studies of falling in Parkinson’s disease. Mov Disord. 2007;22:1892–1900.

- Johnson L, James I, Rodrigues J, et al. Clinical and posturographic correlates of falling in Parkinson’s disease. Mov Disord. 2013;28:1250–1256.

- Bloem BR, Hausdorff JM, Visser JE, et al. Falls and freezing of gait in Parkinson’s disease: a review of two interconnected, episodic phenomena. Mov Disord. 2004;19:871–884.

- Okuma Y. Freezing of gait in Parkinson’s disease. J Neurol. 2006;253:27–32.

- Beck EN, Ehgoetz Martens KA, Almeida QJ. Freezing of gait in Parkinson’s disease: an overload problem? PLoS One. 2015;10:e0144986.

- Nutt JG, Bloem BR, Giladi N, et al. Freezing of gait: moving forward on a mysterious clinical phenomenon. Lancet Neurol. 2011;10:734–744.

- University of Rochester. Laser pointer helps Parkinson’s patients take next step. [Internet]. 1999 [cited 2017 May 24]. Available from: https://www.rochester.edu/news/show.php?id=1175

- Rochester L, Hetherington V, Jones D, et al. The effect of external rhythmic cues (auditory and visual) on walking during a functional task in homes of people with Parkinson’s disease. Arch Phys Med Rehabil. 2005;86:999–1006.

- Khan AA. Detecting freezing of Gait in Parkinson’s disease for automatic application of rhythmic auditory stimuli. Berkshire, UK: University of Reading; 2013.

- Suteerawattananon M, Morris GS, Etnyre BR, et al. Effects of visual and auditory cues on gait in individuals with Parkinson’s disease. J Neurol Sci. 2004;219:63–69.

- Rubinstein TC, Giladi N, Hausdorff JM. The power of cueing to circumvent dopamine deficits: a review of physical therapy treatment of gait disturbances in Parkinson’s disease. Mov Disord. 2002;17:1148–1160.

- Carrel AJ. The effects of cueing on walking stability in people with Parkinson’s disease [dissertation]. Iowa, USA: Iowa State University; 2007.

- Jiang Y, Norman KE. Effects of visual and auditory cues on gait initiation in people with Parkinson’s disease. Clin Rehabil. 2006;20:36–45.

- Velik R. Effect of on-demand cueing on freezing of Gait in Parkinson’s patients. Int J Med Pharm Sci Eng. 2012;6:10–15.

- Azulay JP, Mesure S, Blin O. Influence of visual cues on gait in Parkinson’s disease: contribution to attention or sensory dependence? J Neurol Sci. 2006;248:192–195.

- Donovan S, Lim C, Diaz N, et al. Laserlight cues for gait freezing in Parkinson’s disease: an open-label study. Parkinsonism Relat Disord. 2011;17:240–245.

- Dvorsky BP, Elgelid S, Chau CW. The Effectiveness of utilizing a combination of external visual and auditory cues as a Gait training strategy in a pharmaceutically untreated patient with Parkinson’s disease: a case report. Phys Occup Ther Geriatr. 2011;29:320–326.

- Griffin HJ, Greenlaw R, Limousin P, et al. The effect of real and virtual visual cues on walking in Parkinson’s disease. J Neurol. 2011;258:991–1000.

- Velik R, Hoffmann U, Zabaleta H, et al. The effect of visual cues on the number and duration of freezing episodes in Parkinson’s patients. Conf Proc IEEE Eng Med Biol Soc. 2012;2012:4656–4659.

- Azulay J, Mesure S, Amblard B, et al. Visual control of locomotion in Parkinson’s disease. Brain. 1999;122:111–120.

- Kaminsky TA, Dudgeon BJ, Billingsley FF, et al. Virtual cues and functional mobility of people with Parkinson’s disease: a single-subject pilot study. J Rehabil Res Dev. 2007;44:437–448.

- McAuley JH, Daly PM, Curtis CR. A preliminary investigation of a novel design of visual cue glasses that aid gait in Parkinson’s disease. Clin Rehabil. 2009;23:687–695.

- Lebold CA, Almeida QJ. An evaluation of mechanisms underlying the influence of step cues on gait in Parkinson’s disease. J Clin Neurosci. 2011;18:798–802.

- Takač B, Chen W, Rauterberg M. Toward a domestic system to assist people with Parkinson’s. SPIE Newsroom. 2013;19:871–884.

- Zhao Y, Ramesberger S, Fietzek UM, et al. A novel wearable laser device to regulate stride length in Parkinson’s disease. Conf Proc IEEE Eng Med Biol Soc. 2013;2013:5895–5898.

- Lewis GN, Byblow WD, Walt SE. Stride length regulation in Parkinson’s disease: the use of extrinsic, visual cues. Brain. 2000;123:2077–2090.

- Kang JM, Yoo T, Kim HC. A wrist-worn integrated health monitoring instrument with a tele-reporting device for telemedicine and telecare. IEEE Trans Instrum Meas. 2006;55:1655–1661.

- Nyan MN, Tay FEH, Murugasu E. A wearable system for pre-impact fall detection. J Biomech. 2008;41:3475–3481.

- Pierleoni P, Belli A, Palma L, et al. A high reliability wearable device for elderly fall detection. IEEE Sensors J. 2015;15:4544–4553.

- Nguyen TT, Cho MC, Lee TS. Automatic fall detection using wearable biomedical signal measurement terminal. Conf Proc IEEE Eng Med Biol Soc. 2009;2009:5203–5206.

- Stone E, Skubic M. Fall detection in homes of older adults using the Microsoft Kinect. IEEE J Biomed Health Inform. 2015;19:290–301.

- Mastorakis G, Makris D. Fall detection system using Kinect’s infrared sensor. J Real-Time Image Proc. 2012;9:635–646.

- Gasparrini S, Cippitelli E, Spinsante S, et al. A depth-based fall detection system using a Kinect sensor. Sensors. 2014;14:2756–2775.

- Rusko M, Korauš A. Faculty of materials science and technology in Trnava. Mater Sci Technol. 2010;22:157–162.

- Amini A, Banitsas K, Hosseinzadeh S. A new technique for foot-off and foot contact detection in a gait cycle based on the knee joint angle using Microsoft Kinect v2. 2017 IEEE EMBS International Conference on Biomedical & Health Informatics; 16–19 February 2017. Orlando: IEEE; 2017. p. 153–156.

- Bigy AAM, Banitsas K, Badii A, et al. Recognition of postures and freezing of gait in Parkinson’s disease patients using Microsoft Kinect sensor. 7th Annual International IEEE EMBS Conference on Neural Engineering; 22 April 2015; Montpellier, France: EMBS. 2015. p. 731–734.

- Amini A, Banitsas K, Cosmas J. A comparison between heuristic and machine learning techniques in fall detection using Kinect v2. 2016 IEEE International Symposium on Medical Measurements and Applications; 15–18 May 2016. Benevento, Italy: IEEE; 2016. p. 1–6.