Abstract

Purpose

Assistive Technologies encompass a wide array of products, services, healthcare standards, and the systems that support them. Product/market fit is necessary for a technology to be transferred successfully. Current tools lack variables that are key to technology transfer, and current trainings have no validated tool to assess how effectively they increase innovators’ readiness for technology transfer. The goal was to develop a tool that evaluates the readiness of a technology by incorporating other models and by looking beyond commercialization alone.

Materials and Methods

The development involved five stages: 1. Review of current tools used in technology transfer in academic, government, and industry settings; 2. Development of the draft version of the tool with internal review; 3. Alpha version review and refinement; 4. Content validation of the tool’s beta version; 5. Assessment of the readiness tool for reliability and preparedness for wide-use dissemination.

Results

The tool was revised and validated to 6 subscales and 25 items. The assistive technology subscale was removed from the final version to eliminate repetitive questions and because the tool could be used across technologies.

Conclusions

We developed a flexible assessment tool that looks beyond commercial success alone and considers the problem being solved, implications for or input from stakeholders, and the sustainability of a technology. The resulting product, the Technology Translation Readiness Assessment Tool (TTRAT)™, has the potential to be used to evaluate a broad range of technologies and to assess the success of training programs.

IMPLICATIONS FOR REHABILITATION

Quality of life can be substantially impacted when an assistive technology does not meet the needs of an end-user. Thus, effective Assistive Technology Tech Transfer (ATTT) is needed.

The use of the TTRAT may help to inform NIDILRR and other funding agencies that invest in rehabilitation technology development not only about the overall readiness of a technology but also about the impact of their funding on technology readiness.

The TTRAT may help to educate novice rehabilitation technology innovators on appropriate considerations for not only technology readiness but also general translation best practices, such as assembling a diverse team with appropriate skillsets, understanding the market and its size, and developing sustainability strategies.

Introduction

Collaborative design in assistive technologies and tech for disabilities

Assistive Technologies (ATs), an umbrella term covering the systems and services related to the delivery of assistive products and services [Citation1], are needed by an estimated 1 billion individuals worldwide to live active, independent lives [Citation2–4]. In addition, the use of ATs is linked to a decreased need for services and a reduction in service-related healthcare expenditures [Citation5]. In contrast, individuals who do not have access to appropriate ATs are known to be less healthy, more isolated, and to have a lower quality of life [Citation4]. While the World Health Organization (WHO) defines ATs broadly, the application and motivation for this study concern assistive products specifically.

As the global population grows and ages, the number of individuals who need ATs is expected to double, from 1 to 2 billion people, by 2050 [Citation6]. Spending on ATs is estimated to grow in parallel with this need, from $15 billion in 2015 to over $26 billion by 2024 [Citation5]. The potential for substantial impact on quality of life and the size of the market opportunity associated with ATs call for attention to the effectiveness of Assistive Technology Tech Transfer (ATTT) activities. Poorly defined and fragmented markets and a lack of clear regulations are cited as barriers to successful ATTT and are a likely cause of the high rate at which end-users abandon devices (20–30%). This abandonment rate indicates poorly designed or fitted devices that do not meet end-users’ needs [Citation7–9]. In addition, there is concern that declining quality and reliability are reducing the likelihood of reimbursement for AT and related services [Citation10–13].

ATs encompass a wide array of products, services, healthcare standards, and the systems that support them. They challenge the one-size-fits-all approach of research, clinical trials, and the translation of technology into meaningful interventions, from idea to impactful solution [Citation14]. There is a growing need to streamline ATTT and improve its efficiency, which requires interdisciplinary collaboration among healthcare stakeholders, researchers, industry, and the community, as well as the adoption of user-centered design [Citation15]. However, why should universities, government agencies, and entrepreneurs care about ATTT? This collaborative design approach is also applicable to other technology development sectors and agencies, lending itself to a more uniform mindset for addressing the needs and demands of a given market. Improving the success of ATTT can benefit companies and society while meeting a growing market need.

Technology transfer and product/market fit

Product/market fit, a term common in the commercial sector but less used among researchers who are not focused on commercial markets, is defined as the degree to which a product (or technology) satisfies a strong market demand [Citation16]. The factors that determine product/market fit are complex and technology-specific but generally include whether the technology: (1) meets the needs of customer segments; (2) offers a value proposition over existing products; and (3) can be sold at the required profit margin. Put simply, when product/market fit is not achieved, the technology will not be transferred successfully; product/market fit therefore serves as a binary (yes/no) indicator of technology transfer (TT) success.

Achieving product/market fit is challenging for most technologies. However, tools such as TT training [Citation17] and readiness assessment tools can help innovators overcome the barriers to achieving product/market fit. There are systematic approaches for determining whether product/market fit is possible and for identifying what changes to the technology could achieve it. These approaches have become common in academia through the Lean LaunchPad (LLP) training developed by Steve Blank [Citation16,Citation18,Citation19] and first adopted by the National Science Foundation (NSF), which developed the Innovation Corps (I-Corps) Program to train people involved in tech transfer in the LLP process [Citation20]. The LLP process has been used broadly in the academic literature, with over 2,200 citations. Both the National Institutes of Health (NIH) and the Department of Defense (DOD) use it to support TT from grantees. Most recently, the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR) supported the IMPACT Center (Initiative to Mobilize Partnerships for Successful Assistive Technology Transfer) to run IMPACT Start-Up, a program similar to I-Corps that applies this method to AT (see www.idea2impact.org).

Other tools

Other tools help to assess product/market fit but lack variables that are key to technology translation. The IMPACT Center’s operational definition of technology translation is ensuring that new products resulting from research and development are ultimately used by those who need them, through various means, rather than treating commercialization as the only pathway to, and endpoint of, user adoption. These tools [Citation21] may also not be tailored, or tailorable, to AT. For example, the Technology Readiness Levels [Citation22] scale was developed by the U.S. National Aeronautics and Space Administration (NASA) to measure a technology’s readiness for space [Citation23] and was later adopted as a planning tool for innovation management in the European Union [Citation24], but it lacks discipline-specific tailoring [Citation23]. The same is true of the Manufacturing Readiness Levels, developed by the DOD to assess a product’s maturity and identify risk during acquisition and translation [Citation25]. The Need to Knowledge Model [Citation26], Lean LaunchPad Startup Canvas [Citation17], and the Cloverleaf Model [Citation27] are all specific to commercialization rather than general translation.

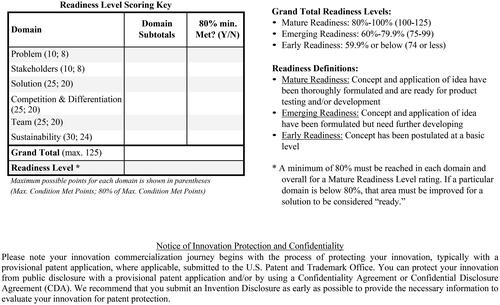

Another training tool, the Pitt Translational Canvas (Figure 1; [Citation28]), was developed by a group of researchers with experience in TT, including one of this paper’s authors, in response to the often poor fit of the Business Model Canvas (BMC) or Lean Startup Canvas for academic TT. Instead of encouraging researchers to focus on cost structure, revenue streams, and channels, for example, the Pitt Translational Canvas encourages researchers to follow a sequential process that starts with clearly identifying the problem. Other aspects of the model are analogous to the BMC, such as benefits (value proposition on the BMC) and stakeholders (customer segments on the BMC). The Solution box incorporates ‘key resources’ and ‘activities.’ The Pitt Translational Canvas’ unique feature is its linearity, with each box building on the previous one. For example, without first understanding the problem (box 1) and viewing the rest of the canvas through that lens (e.g., box 2: how do the stakeholders perceive the problem?; box 3: how does the solution solve the problem for the stakeholders?), an innovator cannot understand how a solution benefits each of the stakeholders (box 4), what the competition for a given solution is (box 5), and how the solution differs from the competition (box 6). While this is a helpful guiding framework for translation, it does not include a rating scale and therefore cannot be used to track readiness.

Figure 1. Pitt translational canvas. Step by step technology translational canvas that includes the following domains: Problem, Stakeholders, Solution, Benefits, Competition, Differentiation and Action Plan.

A readiness assessment tool is needed in tech transfer

The AT domain is just one example of where a readiness assessment tool can help identify gaps in the translational pathway. Such a tool can also assess the effectiveness of existing training interventions like the NSF and NIH I-Corps programs and IMPACT Start-Up. Studies show that choosing a particular model may not guarantee TT success [Citation29], and existing models provide no real measure of a training intervention’s impact against which learning gains can be compared. The Cloverleaf Model for Technology Transfer could be adapted into a training tool (i.e., one that identifies which tech transfer steps may be required and builds awareness of them), both to compare improvements across training interventions and to determine where a product or technical standard sits on the pathway to success.

Research investigating TT success from universities demonstrates that success rates vary and that incentives to perform TT, such as intramural grants and high royalty percentages for inventors, improve success rates [Citation30]. Universities are also starting to include patenting and TT activities in promotion and tenure reviews, providing further incentives [Citation31]. These changes in academic culture, together with the success of training programs such as NSF I-Corps in catalyzing tech transfer from research institutions [Citation20], provide an optimistic outlook for increasing researchers’ and entrepreneurs’ TT success rates.

Product case study to demonstrate a readiness assessment tool’s utility and ultimate benefit for people with disabilities (PWD)

A distinctive case study of CreateAbility Inc., from the IMPACT Center’s technical assistance portfolio, demonstrates how a readiness assessment tool could improve a product’s readiness for translation. CreateAbility Inc.’s mission is to create innovative products that help clients, primarily individuals with intellectual disabilities, flourish beyond their current capabilities, with a focus on interactive health and safety monitoring software solutions. The AT-focused product CreateAbility brought to the IMPACT training was a tool to promote a more accessible work environment for people with intellectual disabilities. Such a product is much needed, as people with intellectual disabilities have a higher unemployment rate [Citation32] and difficulty finding employment well matched to their interests and skills [Citation33]. When CreateAbility Inc. approached the IMPACT Center, the company was unsure about sustainability pathways for its product. To develop an action plan, it needed a tool that could help assess the product’s overall readiness and the case for additional funding.

Thus, the purpose of this study was to develop a TT readiness assessment tool applicable to the AT entrepreneurs and innovators the IMPACT Center serves, ultimately to promote the translation of AT to PWD so that they can maintain or improve functioning and independence. A secondary objective, based on the gaps in assessing technology readiness identified in the literature, was to consider other technology domains in constructing the tool, increasing its generalizability to technologies outside the AT field. This study’s specific aims were to review current tools, draft a new tool, and assess the tool for content validity and reliability.

Methods

Qualitative and quantitative research methodologies were used to develop the TTRAT. The item pool was compiled from a literature review and a subject matter expert review. The TTRAT was assessed for face and content validity, and its reliability was tested using Cronbach’s alpha. The development involved five distinct stages: 1. Review of current tools used in TT in academic, government, and industry settings; 2. Development of the draft version of the tool with internal review; 3. Alpha version review and refinement; 4. Content validation of the tool’s beta version; 5. Assessment of the readiness tool for reliability and preparation for wide-use dissemination.

Stage 1 – review of current tools used in TT in academic, government, and industry settings

A team led by a member of the original Pitt Translational Canvas development team of TT experts, with additional contributors experienced in translating AT products, reviewed key literature on current models of technology translation and readiness levels [Citation26,Citation30,Citation34,Citation35] using relevant keywords (Table 1) in online databases such as PubMed and Google Scholar and in relevant journals such as the Journal of Technology Transfer. The content was evaluated for the principles observed, domains addressed, stakeholder involvement, awareness of the TT steps needed in various settings (e.g., academia to market, small business to market), and whether different products, including standards, could be assessed by the tool.

Table 1. Table of Key Words used in the literature review of current models of technology translation and readiness levels. Publications were searched for in PubMed, Google Scholar, and relevant journals such as the Journal of Technology Transfer.

Stage 2 – development of the draft version of the tool with internal review

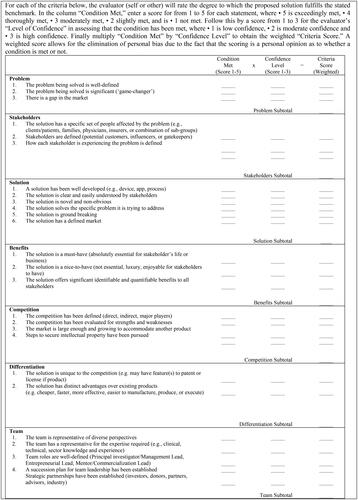

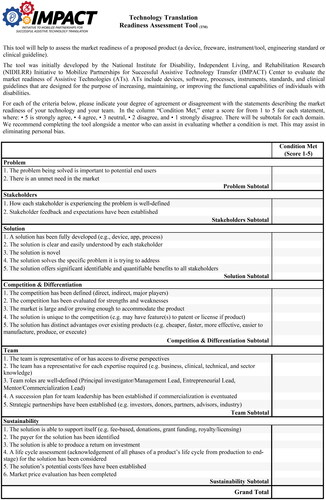

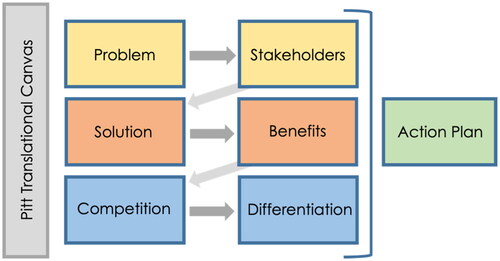

The nine domains drafted (Problem, Stakeholders, Solution, Benefits, Competition, Differentiation, Team, Sustainability, Assistive Technology) were based on the validated Cloverleaf Model of Technology Transfer [Citation28] and the Pitt Translational Canvas (Figure 1), and they went beyond addressing commercialization alone. The number of items (N = 34) was comparable to that of the Cloverleaf Model (N = 30), keeping the tool concise, with an average completion time under 15 min. The tool was designed to be completed by either an innovator or a mentor at various time points during technology development to assess progress and readiness. The draft version of the tool (Figure 2) was developed in Microsoft Word and converted to PDF. Like the Cloverleaf Model, it includes weighted scoring criteria that reduce the influence of personal bias and the likelihood of rating items from a high or low baseline.

Figure 2. Pre-alpha (Pre-Version 1) Development - AT readiness assessment tool. Tool included Condition Met × Confidence Level = Criteria Score (weighted). Domains included: Problem, Stakeholders, Solution, Benefits, Competition, Differentiation, Team, Sustainability and Assistive Technology.

Internal reviewers, recruited from academia and small business startups, had experience in TT and standards development, and one of the six had experience using an AT. Reviewers were instructed to view the tool through the lens of a technology or product standard they had developed or were familiar with, and to answer a series of questions (Table 2) to help the developers understand how to improve the tool. The tool was refined based on the internal reviewers’ feedback, and the alpha version of the AT readiness tool was developed.

Table 2. Questions the developers asked the internal review team when assessing the AT Readiness Assessment Tool.

Stage 3 – alpha version review and refinement

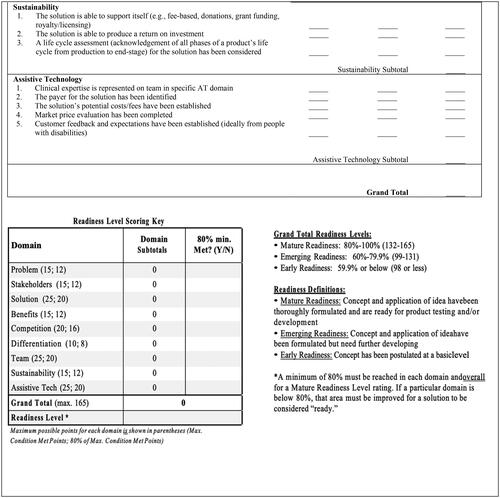

To assess the tool’s face validity, usability, and sensitivity to change over time in the different domains and across various levels of TT experience, the alpha version of the readiness tool (Figure 3), named the AT Readiness Assessment Tool, was reviewed by subject matter experts (experienced AT entrepreneurs) and by pilot cohorts of students in two settings: novice TT students in academia and learners in a massive open online course (MOOC) with mixed TT experience, from novice to expert.

Figure 3. Alpha version (Version 1) - AT Readiness Assessment Tool. Tool was developed to assess the market readiness of the proposed technical solution (a device, freeware, instrument/tool, engineering standard or clinical guideline). Domains include: Problem, Stakeholders, Solution, Benefits, Competition, Differentiation, Team, Sustainability and Assistive Technology.

The first group, a Development Panel of experts and entrepreneurs in the field of AT, was asked to test the tool using a technology or standard they had developed or were familiar with. One panel member, a visually impaired screen-reader user, provided feedback on the tool’s accessibility. Based on this feedback, the tool was transferred into Qualtrics XM, an online experience management survey platform, to comply with the Americans with Disabilities Act (ADA) and increase the tool’s accessibility; this online version became the beta version.

To test whether the tool was appropriate for novice users, we assessed the alpha version of the AT Readiness Assessment Tool with students from the MOOC “Idea 2 IMPACT: An Introduction to Translating Assistive Health Technologies and Other Products,” developed and run on the Coursera platform, and with a group of students (N = 9) in a graduate-level design class, “Fundamentals of Rehabilitation & Assistive Technology Design.” MOOC participants were mainly non-students (57%), and most resided in Asia (45.30%) or North America (23.42%). The design class also answered additional open-ended feedback questions about the tool’s instructions, domains, scoring, and usability. Data for both user groups were collected and stored online in Qualtrics XM.

Stage 4 – content validation of the beta version

It was vital to assess the tool’s content validity so that practitioners and researchers would have a strong basis for understanding its relevance to particular kinds of technology assessments [Citation36–39]. Content validity is "the degree to which elements of an assessment instrument are relevant to and representative of the targeted construct for a particular assessment purpose" [Citation40]. To establish overall validity in line with best practices, we measured the Content Validity Index (CVI), a commonly used method [Citation37,Citation41–44], to capture key evidence supporting the validity of our readiness assessment tool.

We assessed the content validity of the readiness tool by recruiting six experts who had not been involved in evaluating the initial draft. The Item-Content Validity Index (I-CVI) was calculated to determine the appropriateness of each question for the survey, and the Scale-Content Validity Index (S-CVI) was determined by averaging the I-CVI scores across items [Citation44,Citation45]. For acceptable validity, it is suggested that each item have an I-CVI of at least 0.78 and that the overall S-CVI be 0.90 or higher [Citation44].

Prior to computing the CVI, expert reviewers must be carefully selected [Citation44,Citation46]. Davis [Citation46] provides criteria for selecting the expert review panel. For example, the number of experts for content validation should be between six and ten, because fewer than six requires universal agreement among the experts and more than ten seems unnecessary [Citation40,Citation47]. Expert reviewers are vital for researchers to secure valuable consultation in the content domain [Citation46]. We formed a review panel of six content experts and provided them with clear instructions [Citation36]. Because more than five experts were involved in our evaluation, the lower limit of the acceptable S-CVI/Ave is 0.8 [Citation36,Citation45]. Moreover, items with an I-CVI below 0.8 should be modified or removed [Citation37].

Next, the six-expert panel rated the relevance of each item on a four-point ordinal scale: "Highly Agree," "Agree," "Somewhat Agree," and "Do Not Agree" [Citation46]. Relevance ratings must be recorded as 1 or 0 [Citation40]. We collected each expert’s rating for every item and then assigned a value of 1 to "Highly Agree" and "Agree" and a value of 0 to "Somewhat Agree" and "Do Not Agree."
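The dichotomization rule is simple enough to state as code. The sketch below is a minimal, hypothetical Python helper (the names `dichotomize` and `dichotomize_panel` are ours, not part of the authors’ workflow) that maps the four agreement labels to 1/0 relevance scores for a matrix with one row per item and one column per expert.

```python
# Hypothetical helper (not from the study): dichotomize four-point agreement
# ratings into the 1/0 relevance scores used for CVI.
RELEVANT = {"Highly Agree", "Agree"}
NOT_RELEVANT = {"Somewhat Agree", "Do Not Agree"}


def dichotomize(rating: str) -> int:
    """Map one expert rating to 1 (relevant) or 0 (not relevant)."""
    if rating in RELEVANT:
        return 1
    if rating in NOT_RELEVANT:
        return 0
    raise ValueError(f"unexpected rating: {rating!r}")


def dichotomize_panel(ratings: list[list[str]]) -> list[list[int]]:
    """Convert an items x experts matrix of labels into a 0/1 matrix."""
    return [[dichotomize(r) for r in row] for row in ratings]


if __name__ == "__main__":
    # Made-up ratings for a single item from a six-expert panel.
    one_item = ["Highly Agree", "Agree", "Agree", "Somewhat Agree", "Highly Agree", "Do Not Agree"]
    print(dichotomize_panel([one_item]))  # [[1, 1, 1, 0, 1, 0]]
```

Rejecting unknown labels, rather than silently scoring them 0, keeps a typo in exported survey data from lowering an item’s I-CVI.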

The CVI calculation involved two levels of computation: the I-CVI at the item level and the S-CVI at the scale level [Citation44]. We first calculated the I-CVI as the proportion of expert reviewers who rated an item "Highly Agree" or "Agree" [Citation40]. I-CVIs were computed for each of the 32 questions along three criteria, (1) concise, (2) relevant, and (3) clear, which yields 96 items (32 × 3). For example, all six experts agreed on item 1, so the sum of its relevance scores is 1 + 1 + 1 + 1 + 1 + 1 = 6, and its I-CVI is 6 divided by 6 experts, or 1. Then, based on the I-CVI, we computed two S-CVI variants: S-CVI/Ave and S-CVI/UA. S-CVI/Ave uses the averaging method, which measures the average scale-level content validity [Citation37].
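For readers reproducing the computation, a minimal Python sketch of I-CVI and S-CVI/Ave follows. It assumes ratings have already been dichotomized to 0/1 and arranged as one row per item and one column per expert; the function names and the three-item matrix are illustrative, not the study’s data.

```python
def item_cvi(scores: list[int]) -> float:
    """I-CVI: share of experts who scored the item as relevant (1)."""
    return sum(scores) / len(scores)


def scale_cvi_ave(matrix: list[list[int]]) -> float:
    """S-CVI/Ave: mean I-CVI over all items (rows) in the matrix."""
    values = [item_cvi(row) for row in matrix]
    return sum(values) / len(values)


if __name__ == "__main__":
    # Hypothetical 0/1 scores: rows are items, columns are six experts.
    matrix = [
        [1, 1, 1, 1, 1, 1],  # unanimous, like item 1 above: I-CVI = 6/6 = 1.0
        [1, 1, 1, 1, 1, 0],  # five of six experts: I-CVI = 5/6
        [1, 1, 1, 0, 0, 0],  # three of six experts: I-CVI = 3/6
    ]
    print([round(item_cvi(row), 2) for row in matrix])  # [1.0, 0.83, 0.5]
    print(round(scale_cvi_ave(matrix), 2))  # 0.78
```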

The panel of six experts was also given the opportunity to provide open feedback on the overall assessment of the tool.

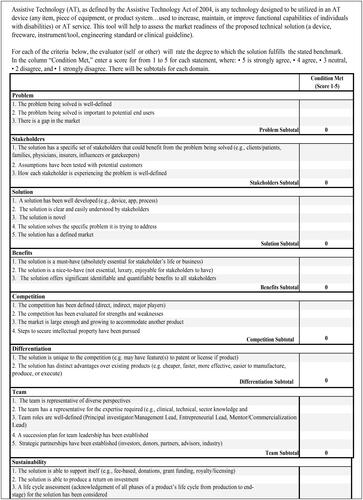

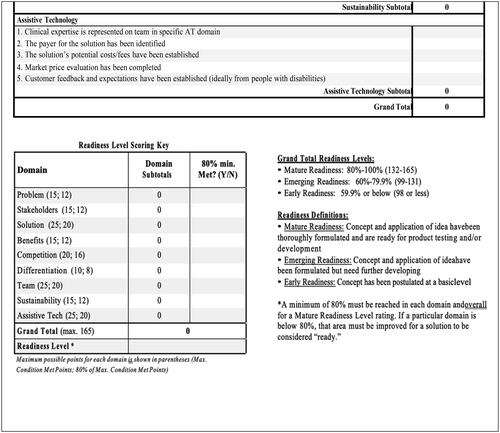

Stage 5 – assessment of the readiness tool for reliability and preparedness for wide-use dissemination

We recruited 24 participants, including experts with experience in AT and/or TT, taking into account the feasibility of subject recruitment and retention. Item-level statistics for the validated TTRAT subscales, including Cronbach’s alpha and item-total correlations, were calculated using SPSS (v.26). Item reliability testing reduced the overall tool to 6 subscales with 25 items (Figure 4, Table 4). Items that did not meet an item-total correlation threshold of at least 0.30 were eliminated from their subscale unless the developers deemed them necessary for understanding a technology’s readiness. Additionally, a few subscales were combined to improve interpretability, which shortened the tool and eliminated repetitive items. Internal consistency reliability of α ≥ 0.70 was considered acceptable for a newly developed tool [Citation47,Citation48].
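The reliability statistics above were computed in SPSS. As a rough, hypothetical cross-check, the Python sketch below implements Cronbach’s alpha and a corrected item-total correlation (each item correlated with the sum of the remaining items in its subscale; we assume this is the variant intended) and flags items below the 0.30 threshold. The ratings are made up, and this is not SPSS’s own implementation.

```python
from math import sqrt
from statistics import fmean, variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix with k >= 2 items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout (the ratio is unaffected by the choice).
    """
    k = len(scores[0])
    columns = list(zip(*scores))
    totals = [sum(row) for row in scores]
    item_var_sum = sum(variance(col) for col in columns)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


def pearson(x, y) -> float:
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def corrected_item_total(scores: list[list[float]]) -> list[float]:
    """Correlate each item with the sum of the other items in the subscale."""
    columns = list(zip(*scores))
    totals = [sum(row) for row in scores]
    return [pearson(col, [t - v for t, v in zip(totals, col)]) for col in columns]


def items_below(scores: list[list[float]], threshold: float = 0.30) -> list[int]:
    """Zero-based indices of items whose corrected item-total r is below threshold."""
    return [i for i, r in enumerate(corrected_item_total(scores)) if r < threshold]


if __name__ == "__main__":
    # Made-up 1-5 ratings: five respondents (rows) x three items (columns).
    subscale = [
        [5, 5, 3],
        [4, 4, 2],
        [3, 3, 4],
        [2, 2, 3],
        [1, 2, 3],
    ]
    print(round(cronbach_alpha(subscale), 3))  # 0.584
    print(items_below(subscale))  # [2]
```

With these invented ratings, the first two items track each other closely while the third moves against them, so only the third item (index 2) falls below 0.30; in the study, such an item would be dropped unless the developers judged it necessary.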

Figure 4. Beta Version (Version 2) – Technology Translation Readiness Assessment Tool (TTRAT). This tool will help to assess the market readiness of a proposed product (a device, freeware, instrument/tool, engineering standard or clinical guideline). The tool was initially developed by the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR) Initiative to Mobilize Partnerships for Successful Assistive Technology Transfer (IMPACT) Center to evaluate the market readiness of Assistive Technologies (ATs). ATs include devices, software, processes, instruments, standards, and clinical guidelines that are designed for the purpose of increasing, maintaining, or improving the functional capabilities of individuals with disabilities. Domains include: Problem, Stakeholders, Solution, Competition & Differentiation, Team, and Sustainability.

Table 3. Content validation. CVI was computed at the item level and scale level from the ratings of six experts (N = 6).

Table 4. Item reliability statistics for Revised TTRAT.

Based on the reliability testing results, the tool’s final version (version 3, ) was developed for dissemination.

Results

Our structured development and validation processes resulted in a tool applicable to innovators, users, funders, and supporters (e.g., technology transfer offices) of AT and other technology domains. The sections that follow describe how we iteratively developed the TTRAT.

Calculating domain totals and defining readiness in the draft version

Each domain had a subtotal equal to the sum of its items’ Criteria Scores. Each Criteria Score was calculated by multiplying the “Condition Met” score (1 to 5), where 5 is exceedingly met, 4 thoroughly met, 3 moderately met, 2 slightly met, and 1 not met, by the “Confidence Level” score (1 to 3), where 1 is low confidence, 2 moderate confidence, and 3 high confidence. A Grand Total was calculated as the sum of the domain subtotals, with a maximum score of 500, and a readiness level was determined from it.

Grand Total Readiness Levels were: Mature Readiness, 80–100% (Criteria Score 400–500); Emerging Readiness, 60–79.9% (Criteria Score 300–399); and Developing Readiness, 59.9% or below (Criteria Score 299 or less). Readiness definitions were as follows. Mature Readiness: the concept and application of the idea have been thoroughly formulated and are ready for product testing and/or development. Emerging Readiness: the concept and application of the idea have been formulated but need further development. Developing Readiness: the concept has been postulated at a basic level. A Mature Readiness rating also required a minimum of 80% in each domain; any domain totaling below 80% had to be improved before the solution could be considered ready.
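The draft scoring rules can be summarized as a small function. This is a hypothetical Python sketch, not the authors’ PDF form. It multiplies Condition Met (1–5) by Confidence Level (1–3), sums domain subtotals into a Grand Total, assigns a band from the percentage, and applies the 80%-per-domain condition for Mature Readiness. Because the 500-point maximum cannot be reconstructed item by item here, percentages are taken relative to the maximum attainable score (15 per item) for whatever items are supplied; that normalization is our assumption.

```python
MAX_PER_ITEM = 5 * 3  # highest Condition Met (5) x highest Confidence Level (3)

# Lower cutoffs (percent of maximum) for each Grand Total band, highest first.
BANDS = [
    (80.0, "Mature Readiness"),
    (60.0, "Emerging Readiness"),
    (0.0, "Developing Readiness"),
]


def criteria_score(condition_met: int, confidence: int) -> int:
    """Weighted Criteria Score for one item in the draft tool."""
    if not 1 <= condition_met <= 5:
        raise ValueError("Condition Met must be 1-5")
    if not 1 <= confidence <= 3:
        raise ValueError("Confidence Level must be 1-3")
    return condition_met * confidence


def assess(domains: dict[str, list[tuple[int, int]]]) -> dict:
    """Score each domain, then assign a readiness band to the Grand Total.

    Assumption: percentages are relative to the maximum attainable score
    for the items supplied, not a fixed 500-point cap.
    """
    subtotals = {d: sum(criteria_score(c, k) for c, k in items) for d, items in domains.items()}
    domain_pct = {d: 100 * subtotals[d] / (MAX_PER_ITEM * len(items)) for d, items in domains.items()}
    n_items = sum(len(items) for items in domains.values())
    grand_total = sum(subtotals.values())
    total_pct = 100 * grand_total / (MAX_PER_ITEM * n_items)
    band = next(label for cutoff, label in BANDS if total_pct >= cutoff)
    below_80 = [d for d, pct in domain_pct.items() if pct < 80]
    return {
        "grand_total": grand_total,
        "percent": total_pct,
        "band": band,
        "domains_below_80": below_80,
        # Mature only if the Grand Total is >= 80% AND every domain is >= 80%.
        "mature": band == "Mature Readiness" and not below_80,
    }


if __name__ == "__main__":
    # Hypothetical ratings: (Condition Met, Confidence Level) per item.
    example = {
        "Problem": [(5, 3), (5, 3), (5, 3)],
        "Team": [(2, 2)],
    }
    print(assess(example))
```

In this example the Grand Total is 49 of 60 possible points (about 81.7%), which falls in the Mature band, but the Team domain scores only 4 of 15, so the sketch reports `mature` as False and lists Team as the domain to improve.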

Changes to draft versions of the readiness tool based on internal review

The alpha version of the tool was developed based on feedback from the panel of internal reviewers of the draft readiness tool. The weighted score was removed, and only a ‘Condition Met’ score was required for each item, because ‘Confidence Level’ was viewed as still introducing personal bias. ‘Condition Met’ was rated on a scale of 1 to 5 (5, strongly agree; 4, agree; 3, neutral; 2, disagree; 1, strongly disagree) instead of the previous ‘exceedingly met’ to ‘not met’ scale. Each domain still had a subtotal, and the subtotals were summed for a ‘Grand Total’ score. Item wording was clarified where necessary, and redundant items were removed. Still geared toward assistive technologies, the domains remained Problem, Stakeholders, Solution, Benefits, Competition, Differentiation, Team, Sustainability, and Assistive Technology. Readiness definitions remained unchanged, as did the requirement of a minimum of 80% in each domain and overall for a Mature Readiness Level rating.

Alpha version review and refinement

Overall, feedback from novice users was positive. Instructions were “clear,” and readiness definitions were “clearly explained.” Suggestions were made to use the tool multiple times throughout the development of a product, expand to include clinical and healthcare standards and policy, and customize it to suit a developer’s needs.

Content validation

The content validation of our tool was computed by CVI, a best practice and commonly used method, at both the item level and the scale level based on ratings from a group of experts (N = 6).

In our case, the 96 items span eight categories, and the I-CVIs sum to 75.83, so the S-CVI/Ave is 75.83/96 = 0.79, close to the satisfactory threshold of 0.8. Thirty-one items (relating to 18 questions) had I-CVIs below 0.8, and the remaining 65 items reached an acceptable I-CVI. We deleted seven of the 18 questions and modified another seven.
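A hypothetical Python sketch of this screening step follows. It checks the reported S-CVI/Ave arithmetic and shows how item-level flags (I-CVI below 0.8) roll up to the questions that need revision, assuming each item is keyed by its question number and one of the three criteria (concise, relevant, clear). The example I-CVIs are invented for illustration.

```python
CRITERIA = ("concise", "relevant", "clear")  # three criteria rated per question


def flag_low_items(i_cvi: dict[tuple[int, str], float], threshold: float = 0.8):
    """Return (flagged items, affected question numbers) for I-CVI below threshold.

    Keys are (question number, criterion) pairs; criteria must be in CRITERIA.
    """
    for _, criterion in i_cvi:
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion!r}")
    flagged = sorted(key for key, value in i_cvi.items() if value < threshold)
    questions = sorted({question for question, _ in flagged})
    return flagged, questions


if __name__ == "__main__":
    # Reported totals: 96 items (32 questions x 3 criteria), I-CVIs summing to 75.83.
    print(round(75.83 / 96, 2))  # 0.79

    # Hypothetical I-CVIs for two questions from a six-expert panel.
    example = {
        (1, "concise"): 6 / 6, (1, "relevant"): 6 / 6, (1, "clear"): 6 / 6,
        (2, "concise"): 4 / 6, (2, "relevant"): 5 / 6, (2, "clear"): 3 / 6,
    }
    print(flag_low_items(example))  # ([(2, 'clear'), (2, 'concise')], [2])
```

Grouping by question matters because revisions are made to whole questions: one question can contribute up to three flagged items, which is how 31 flagged items map onto 18 questions.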

S-CVI/UA is based on the Universal Agreement (UA) method, a conservative method requiring agreement among all experts: an item’s UA score is 1 if 100% of the experts agree on it and 0 otherwise [Citation37]. For example, all six experts agreed on item 1 (Table 3), so its UA score is 1. Averaging the UA scores across all 96 items gave an S-CVI/UA of 0.10, indicating that the readiness tool achieved universal agreement on 10% of items (Table 3).
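The UA method is easy to express in code. The minimal, hypothetical Python function below assumes a 0/1 matrix with one row per item and one column per expert (the same layout used for the I-CVI), and counts only items that every expert scored as relevant.

```python
def scale_cvi_ua(matrix: list[list[int]]) -> float:
    """S-CVI/UA: proportion of items that every expert scored as relevant (1)."""
    ua = [1 if all(score == 1 for score in row) else 0 for row in matrix]
    return sum(ua) / len(ua)


if __name__ == "__main__":
    # Hypothetical six-expert panel: one unanimous item out of ten.
    matrix = [[1, 1, 1, 1, 1, 1]] + [[1, 1, 1, 1, 1, 0] for _ in range(9)]
    print(scale_cvi_ua(matrix))  # 0.1
```

The contrast with the averaging method is the point: in this made-up matrix, every non-unanimous item still has an I-CVI of 5/6, so S-CVI/Ave would be 0.85 while S-CVI/UA is only 0.1, which is why UA is described as the conservative method.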

In summary, after applying the CVI procedure and adjusting the content, our readiness tool is expected to demonstrate acceptable item-level (I-CVI greater than 0.8) and scale-level (S-CVI/Ave of 0.8 or higher) content validity.

Based on feedback from the six experts in the open-comment portion of the assessment, we determined that the tool could be used to assess the readiness of any new technology, not only ATs, and renamed it the IMPACT Center’s Technology Translation Readiness Assessment Tool™ (TTRAT).

Reliability of tool and development of the final beta version

The tool’s alpha version (version 1) contained nine subscales and 33 items (). All subscales of the revised tool had a Cronbach’s alpha of at least 0.624, which approaches adequate reliability for a newly developed tool. Problem, Competition & Differentiation, Team, and Sustainability all scored 0.753 or higher, which is considered appropriate [Citation47,Citation48].

The Assistive Technology subscale was removed to eliminate repetitive questions and because the tool could be used across technologies rather than being limited to AT. Questions pertaining to the unique needs of ATTT, such as “Clinical expertise is represented on team in specific AT domain” and “Customer feedback and expectations have been established (ideally from people with disabilities),” were revised to fit into other subscales, such as Team: “The team has a representative for each expertise required (e.g., business, clinical, technical, and sector knowledge).” No changes were made to the beta version based on the novice user group’s use of the tool.

Discussion and conclusion

Case study to illustrate tool’s utility in promoting effective translation

We return to our case study to demonstrate how the TTRAT was used in practice in our IMPACT training to highlight gaps or areas for improvement that could affect how, whether, or how quickly the technology is translated to users, and that can influence cost, resources, and commitment to the innovation process itself. CreateAbility’s mentors and the IMPACT teaching team used the TTRAT to highlight several gaps, including how the product offers significant, identifiable, and quantifiable benefits to all stakeholders (‘Solution’ domain); understanding of market size (‘Competition & Differentiation’); and how the product will support itself in subsequent phases, whether it can produce a return on investment, and whether cost and market price evaluations have been completed (‘Sustainability’). The TTRAT findings helped inform CreateAbility’s Commercialization Plan, and the company was awarded a Small Business Innovation Research Phase II grant. This case study highlights how gaps identified in early training and development stages can catalyze action plans that help accelerate technology translation. Common early barriers, like a vague understanding of the problem or gap that the solution fills, can disrupt dissemination or cause innovators to miss opportunities for appropriate dissemination strategies.

Similarly, failing to identify, in the early stages, the skills the team lacks may result in technology that is ill-suited to its intended users. Thus, the TTRAT may help innovators save costs, identify other resources, and get their technology to market faster, while ensuring that users receive technology that is affordable, accessible, and fits their needs. Technology that meets users’ criteria is less likely to be abandoned and more likely to have a positive impact on their health, function, and participation. The TTRAT may also help funders decide where to invest and gauge the return on that investment based on how the technology’s readiness has evolved.

Other uses for the tool

We developed a flexible assessment tool that builds on the Cloverleaf Model by looking beyond commercial success to consider the problem being solved, implications for or input from stakeholders, and the sustainability of a technology. The tool should be appropriate for translating technology standards, open-source technologies, and technologies commercialized through traditional methods. Although the tool was initially developed to assess the readiness of ATs, content validation, reliability testing, and reviews of our model have shown that challenges typically associated with the AT sector, such as small and divided markets and device abandonment, are also observed in other technology and innovation sectors. We have therefore adapted the tool so that it can be used in settings beyond AT development and ATTT.

The Lean LaunchPad and I-Corps™ models have been adopted in academic and government settings for commercializing a variety of technologies. The TTRAT can also be applied to standards: although a standard may have no direct competitor in the sense of an existing product, a standard of care (SOC) may already exist that needs to be modified or improved. For instance, “The competition has been evaluated for strengths and weaknesses” can be read as “The existing SOC has been evaluated for strengths and weaknesses.” The tool may also help developers think more broadly about stakeholder demands and the usefulness of a technology at a very early stage, before TT begins, whereas Lean LaunchPad begins with a search to discover customers and does not provide a tool for identifying unmet needs.

University TT and innovation offices can use this readiness tool to help academic researchers transfer their ideas out of the university setting. Steps typically taken by a TT office, such as establishing a technology’s developmental stage, licensing, and patenting, can be aided and tracked with the TTRAT. Venture capital investors can use it to inform funding decisions, and judges and mentors in innovation design competitions can use it to quickly gauge a technology’s readiness.

Domain score vs. Grand total score and success

Considering the interests of diverse stakeholders and looking beyond commercialization prepares innovators to identify gaps that should be addressed to increase the likelihood of getting a technology into the hands of its intended users. Even when a high Grand Total score classifies a technology’s readiness level as Mature, a technology that lacks sustainability or a well-developed team plan is less likely to be transferred. The 80% minimum in each domain therefore remains a requirement, and innovators can focus their efforts on any domain that falls below it.
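The interplay between the Grand Total score and the per-domain minimum can be illustrated with a short sketch. It assumes each domain is scored as (points earned, points possible); the domain names are taken from the text, but the point values and the grand-total cut-off for “Mature” (85% here) are hypothetical placeholders, not the TTRAT’s published scoring rules.

```python
# Sketch of a domain-minimum readiness check. Assumes each domain is scored
# as (points earned, points possible). Domain names come from the text; the
# point values and the "Mature" cut-off below are hypothetical.

DOMAIN_MINIMUM_PCT = 80   # per-domain minimum stated in the text
MATURE_CUTOFF_PCT = 85    # hypothetical grand-total cut-off for "Mature"


def assess_readiness(domain_scores: dict[str, tuple[int, int]]) -> dict:
    """Combine a grand-total check with the per-domain 80% minimum."""
    earned = sum(e for e, _ in domain_scores.values())
    possible = sum(p for _, p in domain_scores.values())

    # Integer comparison avoids floating-point edge cases at the threshold.
    meets_grand_total = earned * 100 >= MATURE_CUTOFF_PCT * possible

    # Any domain below the minimum is reported as a gap to work on.
    gaps = [
        name
        for name, (e, p) in domain_scores.items()
        if e * 100 < DOMAIN_MINIMUM_PCT * p
    ]

    return {
        "grand_total_pct": 100 * earned / possible,
        "meets_grand_total": meets_grand_total,
        "gaps": gaps,
        # A high grand total alone is not enough: every domain must pass.
        "ready": meets_grand_total and not gaps,
    }


if __name__ == "__main__":
    example = {
        "Solution": (18, 20),
        "Competition & Differentiation": (20, 20),
        "Sustainability": (12, 20),
        "Team": (19, 20),
    }
    print(assess_readiness(example))
```

With these hypothetical scores, the grand total is 86.25%, above the assumed cut-off, yet Sustainability sits at 60%, so the technology would not be considered ready and Sustainability would be flagged as the domain to improve.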

Limitations of the tool

Although the tool’s validity and reliability have been tested and established, it has other limitations. The authors did not systematically collect demographic details of the tool’s users and may therefore have made assumptions about users’ demographics or missed other important information about them. In addition, the sample of users during tool development was skewed toward novices, which may limit the generalizability of the tool. Unlike the Cloverleaf Model, the TTRAT does not use a weighted scoring system to help curb bias; this may introduce bias, or leave gaps unaddressed, when innovators use the tool to evaluate their own technology. A novice innovator may believe they have asked all the right questions and interviewed enough customers and stakeholders, and so score themselves higher in certain domains than an experienced innovator or entrepreneur would consider appropriate. The authors have accounted for this with the innovators to whom they provide technical assistance by using the tool within a technology translation training course. For users not enrolled in a TT course such as Lean LaunchPad or I-Corps™, completing the tool with a mentor or someone experienced in commercialization or translation may help curb personal bias. This suggestion has been added to the tool’s directions but is not required for completion.

As with other tools for understanding TT, generalizability is a concern. Although the authors have attempted to create a tool that can be used across a variety of domains, a single tool may not suit every case. Other researchers may wish to validate the tool in other technology domains to further test its generalizability, for example by testing its applicability outside the academic context, such as when universities work with corporate research sponsors or licensees.

Future use of the TTRAT tool

A second cohort of trainees in the multi-level TT training will be the first to use the validated Technology Translation Readiness Assessment Tool. Teams of NIDILRR grantees from various grant funding mechanisms (e.g., SBIR, FIP, RERC, DRRP) and other entrepreneurs enrolled in the training will use the tool to assess the readiness of their innovation three times during phase 1 (pre-course, mid-course, and post-course) and once at the end of phase 2. With this diverse group of researchers and entrepreneurs, who are developing a variety of technologies and standards, we can determine whether there are technological, organizational, or sectoral (e.g., academia, small business, and government) boundaries to the usefulness of the Technology Translation Readiness Assessment Tool™. Future work may also include IRB review of research collecting tool users’ demographics, to better understand how the tool functions in other sectors and groups and to increase generalizability with a larger sample. Evidence generated to date indicates that the tool can help innovators assess their technology’s readiness for commercialization throughout the development process and achieve successful ATTT to meet the growing need.
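Repeated administrations lend themselves to a simple descriptive comparison of readiness over time. The sketch below, offered only as an illustration, computes the change in percentage points per domain between the earliest and latest administration recorded for a team. The timepoint labels follow the schedule described above; the scores, the (earned, possible) scoring format, and the function names are hypothetical, and the result is a descriptive difference rather than a statistical test.

```python
# Sketch: change in per-domain readiness across the administrations described
# in the text. All scores and names are hypothetical; the TTRAT itself does
# not prescribe this calculation.

TIMEPOINTS = ["pre-course", "mid-course", "post-course", "end of phase 2"]


def domain_pct(earned: int, possible: int) -> float:
    """Percentage of available points earned in one domain."""
    return 100 * earned / possible


def readiness_change(
    history: dict[str, dict[str, tuple[int, int]]]
) -> dict[str, float]:
    """Change in percentage points per domain between the earliest and the
    latest administration present in `history`, ordered by TIMEPOINTS."""
    recorded = [t for t in TIMEPOINTS if t in history]
    if len(recorded) < 2:
        raise ValueError("need at least two administrations to compare")
    first, last = history[recorded[0]], history[recorded[-1]]
    # Only domains scored at both administrations are compared.
    return {
        domain: domain_pct(*last[domain]) - domain_pct(*first[domain])
        for domain in first
        if domain in last
    }


if __name__ == "__main__":
    team_history = {
        "pre-course": {"Sustainability": (8, 20), "Team": (15, 20)},
        "post-course": {"Sustainability": (14, 20), "Team": (18, 20)},
    }
    print(readiness_change(team_history))
```

For this hypothetical team, Sustainability rises from 40% to 70% and Team from 75% to 90%, giving changes of 30 and 15 percentage points.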

The TTRAT may also help assess, subjectively, the pre- and post-translation readiness of technology funded by the National Institutes of Health, the National Science Foundation, private foundations, accelerators, and incubators, and thereby the impact of that investment. As previously described, the TTRAT may also help assess the impact of innovation training interventions run by these groups and be similarly useful for university innovation courses and technology transfer offices. Evidence for the tool’s psychometric properties will grow as the tool is used more widely. Expanding beyond ATs will allow us to assess its fit with, and promote its use in, other technology domains.

Acknowledgements

This project was supported by National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR DRRP: 90DPKT0002), a Center within the Administration for Community Living (ACL), Department of Health and Human Services (HHS). These results do not necessarily represent the policy of NIDILRR, ACL, or HHS, and you should not assume endorsement by the Federal Government.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Data are available upon request.

References

- World Health Organization. Assistive technology fact sheet. 2018. https://www.who.int/news-room/fact-sheets/detail/assistive-technology

- United Nations, Department of Economic and Social Affairs, Population Division. World Population Ageing 2015 (ST/ESA/SER.A/390). 2015. https://www.un.org/en/development/desa/population/publications/pdf/ageing/WPA2015_Report.pdf

- Officer A, Posarac A. World report on disability. Geneva: World Health Organization; 2011. https://www.pfron.org.pl/fileadmin/files/0/292_05_Alana_Officer.pdf

- Tebbutt E, Brodmann R, Borg J, et al. Assistive products and the sustainable development goals (SDGs). Global Health. 2016;12(1):79.

- Bickenbach J. The world report on disability. Disabil Soc. 2011;26(5):655–658.

- Algeria, Costa Rica, Ethiopia, et al. Improving access to assistive technology. Report No.: EB142/21. World Health Organization. 2018. http://apps.who.int/gb/ebwha/pdf_files/EB142/B142_CONF2-en.pdf

- Phillips B, Zhao H. Predictors of assistive technology abandonment. Assist Technol. 1993;5(1):36–45.

- Hocking C. Function or feelings: factors in abandonment of assistive devices. Technol Disabil. 1999;11(1-2):3–11.

- Sugawara AT, Ramos VD, Alfieri FM, et al. Abandonment of assistive products: assessing abandonment levels and factors that impact on it. Disabil Rehabil Assist Technol. 2018;13(7):716–723.

- Toro ML, Worobey L, Boninger ML, et al. Type and frequency of reported wheelchair repairs and related adverse consequences among people with spinal cord injury. Arch Phys Med Rehabil. 2016;97(10):1753–1760.

- Worobey L, Oyster M, Nemunaitis G, et al. Increases in wheelchair breakdowns, repairs, and adverse consequences for people with traumatic spinal cord injury. Am J Phys Med Rehabil. 2012;91(6):463–469.

- Worobey L, Oyster M, Pearlman J, et al. Differences between manufacturers in reported power wheelchair repairs and adverse consequences among people with spinal cord injury. Arch Phys Med Rehabil. 2014;95(4):597–603.

- McClure L, Boninger M, Oyster M, et al. Wheelchair repairs, breakdown, and adverse consequences for people with traumatic spinal cord injury. Arch Phys Med Rehabil. 2009;90(12):2034–2038. PMID: 19969165.

- Wang RH, Kenyon LK, McGilton KS, et al. The time is now: a FASTER approach to generate research evidence for Technology-Based interventions in the field of disability and rehabilitation. Arch Phys Med Rehabil. 2021;102(9):1848–1859.

- Lane JP. Bridging the persistent gap between R&D and application: a historical review of government efforts in the field of assistive technology. Assistive Technology Outcomes & Benefits (ATOB). 2015. https://www.atia.org/wp-content/uploads/2015/10/ATOBV9N1.pdf

- Blank S, Dorf B. The startup owner’s manual: the step-by-step guide for building a great company. BookBaby; 2012.

- Canaria CA, Portilla L, Weingarten M. I-Corps at NIH: entrepreneurial training program creating successful small businesses. Clin Transl Sci. 2019;12(4):324–328.

- Blank S, Engel J, Hornthal J. Lean LaunchPad evidence-based entrepreneurship educators guide. 6th ed. VentureWell. 2014. https://venturewell.org/wp-content/uploads/Educators-Guide-Final-w-cover-PDF.pdf

- Blank S. Steve Blank: The Class That Changed How Entrepreneurship Is Taught. Poets and Quants. 2021. https://poetsandquants.com/2021/07/12/steve-blank-the-class-that-changed-how-entrepreneurship-is-taught/

- National Science Foundation. National science foundation - where discoveries begin. US NSF - I-Corps. 2021. https://www.nsf.gov/news/special_reports/i-corps/

- US Government Accountability Office. GAO technology readiness assessment guide: best practices for evaluating the readiness of technology for use in acquisition programs and projects–exposure draft. 2016. https://www.gao.gov/products/gao-16-410g

- Banke J. Technology readiness levels demystified. NASA. 2010. https://www.nasa.gov/topics/aeronautics/features/trl_demystified.html

- Heder M. From NASA to EU: the evolution of the TRL scale in public sector innovation. Innov J. 2017;22(2):2–23. https://www.innovation.cc/discussion-papers/2017_22_2_3_heder_nasa-to-eu-trl-scale.pdf

- EARTO. The TRL scale as a research & innovation policy tool, EARTO recommendations. European Association of Research & Technology Organisations. 2014. https://www.earto.eu/wp-content/uploads/The_TRL_Scale_as_a_R_I_Policy_Tool_-_EARTO_Recommendations_-_Final.pdf

- US Department of Defense. Manufacturing Readiness Level (MRL) Deskbook v2020. United States Department of Defense Manufacturing Technology Program. 2020. http://www.dodmrl.com/MRL%20Deskbook%20V2020.pdf

- Flagg JL, Lane JP, Lockett MM. Need to Knowledge (NtK) Model: an evidence-based framework for generating technological innovations with socio-economic impacts. Implement Sci. 2013;8:21.

- Heslop LA, McGregor E, Griffith M. Development of a technology readiness assessment measure: the cloverleaf model of technology transfer. J Technol Transf. 2001;26(4):369–384.

- Goldberg M, Kapoor W, Maier J, et al. Idea 2 Impact: fostering clinician scientists’ innovation skills. In: Proceedings of the VentureWell OPEN Conference, Austin, TX. 2018.

- Choi HJ. Technology transfer issues and a new technology transfer model. JOTS. 2009;35(1):49–57.

- Friedman J, Silberman J. University technology transfer: do incentives, management, and location matter? J Technol Transf. 2003;28(1):17–30.

- Sanberg PR, Gharib M, Harker PT, et al. Changing the academic culture: valuing patents and commercialization toward tenure and career advancement. Proc Natl Acad Sci USA. 2014;111(18):6542–6547.

- Emerson E. Poverty and people with intellectual disabilities. Ment Retard Dev Disabil Res Rev. 2007;13(2):107–113.

- Flores N, Jenaro C, Begoña Orgaz M, et al. Understanding quality of working life of workers with intellectual disabilities. J Appl Res Intellect Disabil. 2011;24(2):133–141.

- Bauer SM. Demand pull technology transfer applied to the field of assistive technology. J Technol Transf. 2003;28(3/4):285–303.

- Lane JP, Flagg JL. Translating three states of knowledge–discovery, invention, and innovation. Implement Sci. 2010;5:9.

- Lynn M. Determination and quantification of content validity. Nurs Res. 1986;35(6):382–386.

- Hadie S, Hassan A, Ismail Z, et al. Anatomy education environment measurement inventory: a valid tool to measure the anatomy learning environment. Anat Sci Educ. 2017;10(5):423–432.

- Cook DA, Beckman TJ. Current concepts in validity and reliability for psychometric instruments: theory and application. Am J Med. 2006;119(2):166.e7–166.e16.

- Haynes S, Richard D, Kubany E. Content validity in psychological assessment: a functional approach to concepts and methods. Psychol Assess. 1995;7(3):238–247.

- Yusoff M. ABC of content validation and content validity index calculation. EIMJ. 2019;11(2):49–54.

- Ozair M, Baharuddin K, Mohamed S, et al. Development and validation of the knowledge and clinical reasoning of acute asthma management in emergency department (K-CRAMED). EIMJ. 2017;9(2):1–17.

- Lau A, Yusoff M, Lee Y, et al. Development and validation of a Chinese translated questionnaire: a single simultaneous tool for assessing gastrointestinal and upper respiratory tract related illnesses in pre-school children. J Taibah Univ Med Sci. 2018;13(2):135–141.

- Quamar A. Systematic development and test-retest reliability of the electronic instrumental activities of daily living satisfaction assessment (EISA) outcome measure [dissertation]. ProQuest Dissertations Publishing; 2018.

- Polit DF, Beck CT. The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res Nurs Health. 2006;29(5):489–497.

- Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–467.

- Davis LL. Instrument review: getting the most from your panel of experts. Appl Nurs Res. 1992;5(4):194–197.

- Nunnally J, Bernstein I. Psychometric theory. 3rd ed. New York: McGraw-Hill; 1994.

- Portney L, Watkins M. Power and sample size. In: Foundations of clinical research. New Jersey: Prentice Hall Health; 2000. p. 705–730.