ABSTRACT

Digital tools have the potential to semi-automate science journalism tasks, including the incorporation of more diverse voices in science journalism. This paper reports new design science research that developed, then elicited first feedback on, one digital prototype with algorithms that semi-automated selected science journalism tasks. The feedback revealed that almost all of the participating science journalists were comfortable with increased automation of at least some of their tasks, as long as opportunities to control and personalize this automation remained. The feedback also revealed the potential value of automation for discovering and incorporating a wider range of expert voices, depending on geographical location. The paper ends with preliminary design implications for future semi-automation that provides digitized support for science journalism tasks, followed by a discussion of the paper's wider research contributions.

Introduction

Reporting about science faces multiple challenges in what many describe as a post-truth environment (Davies 2017). Post-truth activities are defined as the public burial of objective facts by media content intended to appeal to emotion and personal belief (Kaiser et al. 2014; Wardle 2020). These activities often target the credibility and reliability of science, which is challenged by the dissemination of misinformation and unreliable material. High-profile examples include the conflicting communications about the effectiveness, or otherwise, of hydroxychloroquine to treat COVID-19. Post-truth activities have serious implications for how more effective science communication and journalism might take place (Castell et al. 2014).

It is evident that a post-truth world requires robust science journalism. However, the overall number of science journalists has been falling (Guenther, Jenny Bischoff, and Marzinkowski 2017; Rosen, Guenther, and Froehlich 2016; Schäfer 2011), and science has long been reported to be a lower priority for most media than other subjects such as politics (e.g., Schäfer 2011). The resources available to interrogate science policy, challenge fake science, and reach audiences who need to be informed by science when making democratic decisions (Davies 2009) have all been reduced (Goepfert 2007). Although some argue that science has had a higher media profile since the late 1990s, and that science journalism has increased in proportion to coverage of some other subjects (Schäfer 2011; Schünemann 2013), the COVID-19 pandemic highlighted frequent failures to counter misleading content, leading to renewed calls for more trustworthy news on scientific matters (e.g., Lang and Drobac 2020).

One means of increasing trustworthy science journalism is for science journalists to exploit new forms of digitized support. Digitized support for science journalism differs from its full automation. Digitization converts previously hard-copy content into digital formats to be processed by computers. Digitized support manipulates this content to facilitate its use by humans during tasks. E.g., this support might guide a journalist to discover and contact scientists with expertise related to a new story. By contrast, full automation manipulates digital scientific content without human intervention. E.g., it might automatically generate the text of a story based on the published work of a scientist. In this paper, we investigated whether science journalists might use, and perceive value in, new forms of digitized support that semi-automate some of their current and future tasks.

Furthermore, previous research has revealed sizeable audience segments that are disengaged from, or only moderately interested in, science news. People in these segments often form incorrect views about science from a growing multiplicity of alternative and often unreliable sources (e.g., Besley 2018; Dawson 2018; Schäfer et al. 2018). One reason for this disengagement is that reporting about science features too few experts who are women and/or from diverse ethnic backgrounds (e.g., Franks et al. 2019). We argue that digitized support has the potential to help science journalists discover more diverse experts who can engage disengaged audience segments. However, existing support, digital or otherwise, for journalists to connect more effectively with audiences by including more diverse scientific voices has been limited. Therefore, in this paper, we also investigated whether science journalists might use digitized support that semi-automates the discovery and incorporation of more diverse scientific voices in their articles.

The remainder of the paper is in four parts. After defining science journalism and its challenges, it identifies gaps in research on digitalizing and supporting science journalism, then makes a simple case for design science research to innovate and develop new digitized support that implements existing software algorithms. It then outlines a new prototype of digital support that semi-automates selected science journalism tasks, including the incorporation of more diverse scientific voices in stories. The paper then reports first feedback on this digital support from science journalists working in two different cultures, Scandinavia and Southern Africa, to explore whether such support can be designed to be usable and add value, and whether it might be acceptable to science journalists. The paper ends by answering three exploratory research questions, reviewing the limitations of the reported design science, and outlining implications for new digital support for science journalism.

Science Communication, Journalism and Digital Support Tools

Science communication is defined as the organized, explicit and intended actions to communicate scientific knowledge, methodology, processes, or practices in settings where non-scientists are a recognized part of the audience (Horst, Davies, and Irwin 2016). It includes efforts to communicate the culture of science (Chimba and Kitzinger 2010), to enable laypeople and others with expertise outside of science to communicate about science (Marsh 2018), to challenge science (Dunwoody 2014; Goepfert, Bauer, and Bucchi 2007), and to engage through new and emerging formats such as social media, science festivals and storytelling (Kaiser et al. 2014). Most communication of science does not flow directly from scientists to the public. Instead, according to reported models (Secko, Amend, and Friday 2013), it passes through communicators, including journalists using mainstream media channels: channels that remain important portals through which science news is consumed and trusted (Angler 2017; Davies et al. 2021).

The arrival of new digital technologies has challenged these traditional forms of information exchange with audiences (Dunwoody 2014). In science, as elsewhere, social media technologies have opened up new channels through which people receive news (e.g., Ginosar, Zimmerman, and Tal 2022). As a consequence, post-truth groups such as Stop Mandatory Vaccination and agencies like the Heartland Institute have used these direct means of communication to circumvent mainstream media and share disinformation that contradicts scientific evidence and undermines scientific process (Goldenberg 2012). To counter these challenges, we argue that journalists need to evolve their practices to produce science journalism that communicates more effectively, especially to more diverse audiences who might be vulnerable to inaccurate information.

This section expands on the existing challenges facing science journalism, to provide our rationale for the design research that follows. These challenges include insufficient numbers of trained science journalists, barriers to effective journalist-scientist communication, the lack of digital support for journalists to report science more effectively, and the observed need for journalists to communicate science to more diverse audiences.

Resources for Science Journalism Are Lacking

Trained science journalists are relatively scarce in the journalism profession. Of an estimated 400,000 journalists in the European Union in 2018, only 2500 were represented by the EU's Science Journalists’ Association (Nanodiode 2022). Similar ratios can be found in other regions. One consequence has been that non-science journalists are often required to report on science-related topics, as demonstrated by the regular coverage of the COVID-19 pandemic by journalists who previously specialized in politics, economics and even sports. Unsurprisingly, most of these journalists, on their own, lack the awareness, breadth and depth of scientific knowledge needed to research and communicate most science stories effectively. This shortfall can be treated as one version of the knowledge-deficit gap (Miller 1983) that sometimes exists between journalists and the scientific knowledge that needs to be communicated.
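As a rough worked check of the scale involved, using only the two figures quoted above (and noting that association membership is not the same as the number of trained science journalists):

```latex
\frac{2\,500}{400\,000} = 0.00625 \approx 0.6\,\%,
\qquad
\frac{400\,000}{2\,500} = 160
```

That is, roughly one association member for every 160 journalists in the EU.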

One obvious way for journalists to fill this gap and acquire more relevant scientific knowledge is to talk more with scientists. However, multiple barriers to this communication persist. Reasons for these barriers include the perceived roles of scientists in science communication (e.g., Peters 2013), the need to train scientists to communicate more effectively (e.g., Smith and Morgoch 2022), and the lack of time that journalists have to engage with scientists in the hyper-competitive age of digitalized news production (Maiden et al. 2020b). Indeed, the work of most non-science journalists continues to be based more on news values than on scientific values. These news values promote working to short deadlines (e.g., Dunwoody 2014; Hansen 2009; Lehmkuhl and Peters 2016), and favor more dramatic conclusions, positive results, bias and sensationalism (e.g., Davies 2009; Schäfer 2011; Schünemann 2013) over understanding more complicated science-related stories (Estelle Smith et al. 2020). Senior figures in science have spoken about the paucity of effective reporting of science-related stories. E.g., the Head of UK Research Councils drew attention to the way that science was reported when general journalists were involved, and called for improvements (Castell et al. 2014). Rather than seek to challenge these entrenched values, this paper argues that an effective solution is for the smaller number of science journalists to become more effective and productive. New forms of bespoke digital support that semi-automate science journalism tasks might increase this effectiveness and productivity.

Existing Digital Support Does Not Address Specific Science Journalism Challenges

Of course, the growing digitization of news production and consumption has already enabled full automation of some journalism tasks (e.g., Thurman, Lewis, and Kunert 2019), including some specific to science journalism. Full automation has been applied to, e.g., verify social media sources (e.g., Tolmie et al. 2017), detect deep-fakes (e.g., Sohrawardi et al. 2020), and generate algorithmic journalism that reports more quantitative news such as sport and finance (e.g., Linden 2018). Digital automation can also empower news consumers, e.g., to personalize their consumption to specific sources and topics (e.g., Bodó 2019). By contrast, however, little dedicated automation of, or digital support for, science journalism tasks has been reported. Exceptions include digital tools that summarize academic papers for non-scientific audiences (Tatalovic 2018), and that automatically read research articles, reports and book chapters and break them down into bite-sized chunks so that journalists can quickly assess their relevance (Scholarcy 2022). Other science journalism examples include content verification tools that check scientific facts. E.g., SciCheck is a feature of FactCheck.org that focuses exclusively on false and misleading scientific claims made by partisans to influence public policy (SciCheck 2022). Although useful, we argue that these tools on their own are unlikely to enable science journalists to produce science stories more productively.

Nor has much digital support for science journalism tasks emerged from academic research. One exception (Spangher et al. 2021) developed an ontological labelling system for science journalists’ human sources based on each source's affiliation and role. Estelle Smith et al. (2018) reported different barriers in the current process of scientific media production, barriers that afford opportunities to innovate. They found that miscommunication occurs at four stages in the production of science news (e.g., during interviews and due to media incentives). Furthermore, interviews with experienced science journalists and communicators about their challenges revealed that many lacked the time and other resources to write about science-related topics, and none expected more resources to be forthcoming in their organizations (Maiden et al. 2020b). This surprising paucity of research into digital support for science journalism tasks led us to conclude that more design science research was needed to inform the design of new digital support for science journalism.

Existing Design Science Research for General-Purpose and Science Journalism

Most design science research has sought to inform digital support for general-purpose journalism. E.g., it has sought to uncover design implications for digital support for discovering local news information sources (Garbett et al. 2014), for correcting news misinformation using high-ranking crowd-sourced entries (Liaw et al. 2013), and for sourcing user-generated content and interactively filtering and ranking this content based on users’ example content (Wang and Diakopoulos 2021). More generally, ethnographic studies of journalist practices have been used to determine the requirements for a new prototype social media verification dashboard (Tolmie et al. 2017), and a research-through-design study of uncivil commenting on online news was used to generate designs that support emotion regulation by facilitating self-reflection (Kiskola et al. 2021). Of course, the COVID-19 pandemic raised the profile of science journalism. It increased, e.g., computational support for science journalism using large datasets, as sites such as the COVID-19 dashboard at Johns Hopkins University (Johns Hopkins University’s Coronavirus Resource Center 2021) demonstrated. New tools also emerged, e.g., new-data alert spreadsheets shared between journalist teams in different time zones to automate the generation of news about the pandemic (Danzon-Chambaud 2021), and interactive visualizations that generate narrative maps to help journalists understand the wider COVID-19 news landscape (Norambuena, Mitra, and North 2021). Overall, however, this design science research has contributed little to the design of digital support that could be applied, either directly or indirectly, to science journalism tasks.

Diverse Science Journalism Audiences

Another documented challenge facing science journalism is its failure to engage with some potential audiences, e.g., people from low-income, minority ethnic backgrounds (Dawson 2018). Recent studies have revealed that women continue to be quoted less often than men in high-profile journals (e.g., Davidson and Greene 2021), and that authors with non-European names were less likely to be mentioned or quoted than comparable authors with European-origin names (e.g., Peng, Teplitskiy, and Jurgens 2020). These biases can reduce the number of scientific voices and role models for science audiences. Reported interviews with experienced science journalists and communicators about their challenges revealed difficulties in discovering diverse sources to provide the content for their science journalism (Maiden et al. 2020b). The biases that might result from such reporting can limit not only the reach of science reporting but also the science role models available to people in under-represented communities. The reported failure to prioritize science journalism (e.g., Schäfer 2011) is likely to exacerbate these biases, because science journalists have limited time and other resources to discover and incorporate more diverse scientific voices.

Conclusions from Previous Research

Our review revealed that science journalism continues to face pressures. In spite of a step-change increase in the volume of disseminated misinformation about, e.g., the climate crisis or the COVID-19 pandemic, the number of professional science journalists has not risen, budgets for science journalism have not increased, and non-science journalists often lack the knowledge and contacts needed to report science-related stories effectively. In this paper, we argue that these constraints require the sector to explore alternative solutions. Digitized support has the potential to semi-automate some tasks in ways that still enable science journalists to express their editorial voices (e.g., Lopez et al. 2022). However, little bespoke support is available for science journalists to use.

Therefore, this paper reports new design science research undertaken to prototype, then evaluate, one new form of digitized support that science journalists could use both to be more productive and to discover more diverse scientific voices, which the public seeks in science communication (Nguyen and McIlwaine 2011). Rather than use ethnographic techniques (e.g., Lopez et al. 2022) or qualitative research interviews (e.g., Linden 2017) to collect data about current practices and attitudes, we implemented a design science approach: one that designed, then investigated, an artefact that interacted with a problem context to improve something in that context (Wieringa 2014). Design science provides tried-and-tested approaches for exploring technological advances in different work contexts. Accordingly, this paper reports how an existing digital tool was extended to generate a new digital prototype, which was used to explore whether its support might be usable by science journalists and add value to science journalism tasks. The design science approach was formative rather than summative, and used the digital prototype as an artefact to probe journalists’ responses to possible future digital tools. It sought to make science journalists aware of the semi-automation now possible through the emergence of new technologies, in a form tailored to their work tasks.

Prototype Digitized Support for Science Journalism Tasks

This section reports two versions of one prototype that provided digital support for journalism. The first provided existing digitized support for general-purpose journalism that was the baseline for the reported design science research. The second was an extension to this prototype that provided digitized support for science journalism tasks.

The existing digitized support for general-purpose journalism tasks was delivered using a tool called JECT.AI. Two of this paper's authors were directly responsible for this tool's design and implementation. JECT.AI was a research-based tool designed to support general-purpose journalists using semi-automation that discovered new content and angles for articles. As such, it was an example of a computational news discovery tool (Diakopoulos 2020). It automated some, but not all, of the tasks that journalists might undertake when researching and writing new stories. The automated tasks included the retrieval of related published news articles, the generation of possible new angles for stories, and the presentation of sources with which to explore each new angle. To deliver this automation, JECT.AI integrated natural language processing, creative search, and interactive guidance to discover information in published news stories and support journalists to form new associations with it (Maiden et al. 2018, 2020a). The tool had already been developed using design science techniques that had implemented, evaluated and refined earlier versions of the tool in different newsroom contexts (Maiden et al. 2020b). Like most forms of digitized support, it was designed to support specific tasks. Other tasks, such as identifying a scientific consensus on a topic, were not supported.

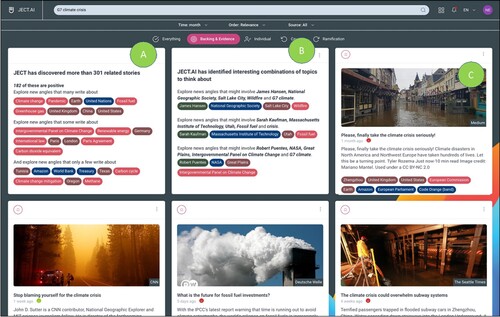

Consider the following typical example of JECT.AI use by a general-purpose journalist. The journalist was asked by her editor to produce an article that took an unusual angle on a forthcoming G7 climate crisis event, to be published on the eve of the event. This schedule gave the journalist some time to research the story and explore novel angles for it, so she chose to use JECT.AI. She could access it either via a web browser from a bookmarked URL, as shown in Figure 1, or, if she wrote her stories in Google Docs, by choosing the JECT.AI sidebar that appeared on the right side of the text editor. She could then enter some terms describing her current topic (G7 climate crisis) and browse different interactive cards that presented the content and angles generated for those terms. JECT.AI enabled her to drill down into this content and these angles and, when needed, copy source URLs and angle ideas across into her Google Docs file to develop later.

Figure 1. Screengrab from the existing JECT.AI digitized prototype showing the generated landing card (A), combinations card (B) and multiple article cards (C) generated for the topic term “G7 climate crisis”.

An example of this interactive digital support is depicted in Figure 1. The fictitious journalist had entered natural language terms describing the topic of interest (e.g., "G7 Climate Crisis") into the top search bar. In response, JECT.AI’s algorithms automatically generated guidance to augment the journalist's thinking about the topic. The journalist was able to direct this guidance using interactive features that controlled the strategies (e.g., evidence-based or human angles), time periods (e.g., over the last month or year), and types of information to manipulate (e.g., published news sources or scientific publications). Each of these features was designed based on practices reported by experienced journalists for discovering angles on stories. In response to the topic terms and feature settings, the tool presented the results of its automation using different interactive cards, shown as (A) to (C) in Figure 1.
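To make the three interactive controls concrete, the following is a minimal sketch of how such settings might restrict the indexed items that the automation works on. It is not JECT.AI's code: the class names `GuidanceSettings` and `IndexedItem`, the function `select_items`, and the label strings are all hypothetical, and the strategy setting is deliberately left unused by the filter.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class GuidanceSettings:
    strategy: str      # e.g. "evidence-based" or "human" angles (hypothetical labels)
    period_days: int   # e.g. 30 for "last month", 365 for "last year"
    source_type: str   # e.g. "news" or "scientific" (hypothetical labels)


@dataclass(frozen=True)
class IndexedItem:
    title: str
    published: date
    source_type: str


def select_items(items, settings, today):
    """Keep items of the chosen source type published within the chosen period.

    The strategy setting is not used for filtering here; in this sketch it
    would be handed on to a later angle-generation step.
    """
    cutoff = today - timedelta(days=settings.period_days)
    return [
        item for item in items
        if item.source_type == settings.source_type and item.published >= cutoff
    ]
```

With `period_days=30` and `source_type="news"`, for instance, only news items from the 30 days before `today` would be passed on to angle generation.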

The landing card (A) presented possible new angles for stories generated by the tool's automation. It reported the total number of discovered articles out of a sample total, the number rated as having positive sentiment by an automated sentiment analysis of each story, and the angles covered in most, some, and a few of the discovered articles. Each of these discovered angles was grouped using frequency counts across the total number of discovered articles. E.g., angles covered by most articles had high counts, and angles covered in only a few had lower counts. By clicking on one of these angles, the journalist could view more information to support her discovery of new ideas for angles. The combinations card (B) presented different possible combinations of these same angles, and was generated in response to the entered topic terms. Each of the individual article cards (C) presented content from one automatically-discovered article, including the title, publication, date, summary text and 10 selected possible angles. Clicking on the title opened the original article at source, and clicking on each angle generated further interactive guidance for the journalist to consider.
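The frequency-count grouping described above can be illustrated with a short sketch. This is an assumption-laden illustration rather than the tool's implementation: the share thresholds (`most=0.6`, `some=0.3`), the article dictionary keys, the "sentiment above zero counts as positive" rule and the pairing logic for the combinations card are all hypothetical choices.

```python
from collections import Counter
from itertools import combinations


def landing_summary(articles, most=0.6, some=0.3):
    """Summarize discovered articles in the spirit of the landing card.

    `articles` is a list of dicts with hypothetical keys "angles" (a set of
    angle terms) and "sentiment" (a score where > 0 counts as positive).
    `most` and `some` are hypothetical share thresholds.
    """
    total = len(articles)
    buckets = {"most": [], "some": [], "few": []}
    if total == 0:
        return {"total": 0, "positive": 0, "angles": buckets}
    # Count, for each angle, how many discovered articles cover it.
    counts = Counter(angle for article in articles for angle in article["angles"])
    for angle, count in counts.most_common():
        share = count / total
        if share >= most:
            buckets["most"].append(angle)
        elif share >= some:
            buckets["some"].append(angle)
        else:
            buckets["few"].append(angle)
    positive = sum(1 for article in articles if article["sentiment"] > 0)
    return {"total": total, "positive": positive, "angles": buckets}


def angle_combinations(buckets, size=2, limit=10):
    """Combine the more frequent angles, as a combinations card might."""
    candidates = buckets["most"] + buckets["some"]
    return list(combinations(candidates, size))[:limit]
```

Grouping by share of articles, rather than raw counts, keeps the "most/some/few" labels meaningful whether a topic retrieves ten articles or ten thousand.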

To undertake these more sophisticated and divergent creative searches, JECT.AI was designed to pre-process selected information about each of the news stories that it discovered, and to store this information in what was called a creative news index. This index was populated by indexing millions of verified news stories, drawn from selected open RSS feeds and accessible newspapers’ archives, as possible starting points for discovering new angles on stories. At selected times each day, the tool automatically read each new story in over 1200 RSS feeds, generated index terms for it, and added these terms to the index. These feeds pushed news content from over 400 different news titles, all selected by the JECT.AI editorial team. In March 2022, the index stored semantic information from over 23 million news stories published over six years. It was this indexed information that JECT.AI manipulated to automate the generation of new angles and content for journalists.
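The paragraph above describes a periodically updated index of terms extracted from news stories. A common way to build such a structure is an inverted index (term to story identifiers); the sketch below assumes that design. The stopword list, tokenization, class and function names, and the feed-item keys are hypothetical, and the real creative news index stored richer semantic information than bare tokens.

```python
import re
from collections import defaultdict

# Hypothetical stopword list; the real JECT.AI term extraction is not described here.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "on", "for", "is", "at"}


def index_terms(text):
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {tok for tok in re.findall(r"[a-z0-9]+", text.lower()) if tok not in STOPWORDS}


class CreativeNewsIndex:
    """Minimal inverted index: term -> ids of stories containing that term."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.stories = {}

    def add_story(self, story_id, title, text, source):
        """Index one story; return False if an earlier run already indexed it."""
        if story_id in self.stories:
            return False
        self.stories[story_id] = {"title": title, "source": source}
        for term in index_terms(f"{title} {text}"):
            self.postings[term].add(story_id)
        return True

    def search(self, query):
        """Return story ids ranked by the number of query terms they contain."""
        scores = defaultdict(int)
        for term in index_terms(query):
            for story_id in self.postings.get(term, ()):
                scores[story_id] += 1
        return sorted(scores, key=lambda sid: (-scores[sid], sid))


def run_batch(index, feed_items):
    """One scheduled pass over items already fetched from RSS feeds (hypothetical keys)."""
    return sum(index.add_story(i["id"], i["title"], i["summary"], i["source"]) for i in feed_items)
```

Skipping already-indexed story ids lets the same feeds be re-read at each scheduled pass without duplicating postings.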

How the New Prototype Discovered Scientific Content

To develop a new version of this prototype to automate science journalism tasks, the existing JECT.AI tool was extended to index scientific papers that might support science journalists in their work. As with the existing JECT.AI tool, the extension was designed and implemented by two of this paper's authors. This time, our fictitious journalist was asked to produce the article with both unusual angles and more diverse voices. Again, the schedule gave the journalist time to explore novel angles and voices, so she chose to use the extended version of JECT.AI. To support this science journalism task, the creative news index was extended to include information about over 175,000 scientific articles and papers published in over 50 scientific titles. This technical extension was designed to semi-automate at least four tasks that science journalists would otherwise have undertaken themselves with existing digital tools offering less support specific to science journalism: (1) discovering news articles and scientific papers related to their stories; (2) comparing discovered articles and papers to determine their relevance to each other; (3) reading each article and paper to extract key themes and qualities such as sentiment; and (4) generating candidate angles for their story. Moreover, the extension was implemented so that science journalists remained the dominant agents in the production of science journalism, consistent with other recent studies revealing meta-requirements on digital support for science journalism (Milosavljević and Vobič 2019).
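Task (2), comparing discovered news articles and scientific papers to judge their relevance to each other, can be illustrated with a simple bag-of-words cosine similarity. The paper does not say which comparison method the extension used, so this is a swapped-in, illustrative technique; the function names and the `threshold=0.2` cut-off are hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Bag-of-words term counts for a piece of text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def related_papers(article_text, papers, threshold=0.2):
    """papers maps a paper title to its abstract; return (title, score) pairs, best first."""
    article = term_vector(article_text)
    scored = [(title, cosine(article, term_vector(abstract))) for title, abstract in papers.items()]
    return sorted([pair for pair in scored if pair[1] >= threshold], key=lambda pair: -pair[1])
```

A production system would more likely compare lemmatized terms or embeddings, but the shape of the task (score every article-paper pair, keep the most related) is the same.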

How the New Prototype Discovered More Diverse Science Voices

In addition, to support science journalists to discover more diverse scientific voices, a new interactive intelligence card and its related automation were designed and implemented. Each card presented up to 20 people (typically journalists) who had published news articles related to the science journalist's topic, and up to another 20 people (typically scientists) who had published relevant scientific papers and articles. Rather than list these people according to relevance or number of publications, the card recommended more diverse voices by presenting lists computed to have equal numbers of male and female first names and of European and non-European surnames. It was assumed that these lists would encourage and enable science journalists to overcome at least some of the reported gender biases (e.g., Davidson and Greene 2021) and ethnicity biases (e.g., Peng, Teplitskiy, and Jurgens 2020) in science journalism.

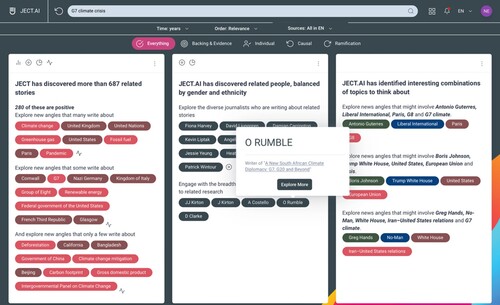

The intelligence card was implemented in the new version of the prototype next to the revised landing card that presented the science angles and content (see Figure 2). It presented the two lists of names, plus a list of discovered Twitter accounts related to the entered topic terms. If the science journalist clicked on one of these names, the card presented the title of one story or paper that the named person had written, as also shown in Figure 2. Furthermore, if the journalist clicked on the Explore More button, the prototype launched a Google web search using the name if the person was a journalist, or a Google Scholar search if the person was a scientist, to discover their publications and profile.
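The Explore More behaviour amounts to choosing a search endpoint by role and passing the person's name as the query. The sketch below assumes the public Google and Google Scholar search URL patterns and wraps the name in quotation marks for an exact-phrase search; the function name `explore_more_url` and the role labels are hypothetical, and the paper does not say how the prototype formatted its queries.

```python
from urllib.parse import urlencode


def explore_more_url(name, role):
    """Build a search URL for a discovered person (hypothetical helper).

    Journalists go to Google web search and scientists to Google Scholar;
    quoting the name as an exact phrase is an assumption of this sketch.
    """
    if role == "journalist":
        base = "https://www.google.com/search"
    elif role == "scientist":
        base = "https://scholar.google.com/scholar"
    else:
        raise ValueError(f"unknown role: {role!r}")
    query = f'"{name}"'
    return f"{base}?{urlencode({'q': query})}"
```

For example, `explore_more_url("Ada Lovelace", "scientist")` produces `https://scholar.google.com/scholar?q=%22Ada+Lovelace%22`, because `urlencode` percent-encodes the quotation marks and encodes the space as `+`.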

Figure 2. The new prototype's intelligence card, presented to the right of the landing card, and showing pop-up information about one of the discovered scientists.

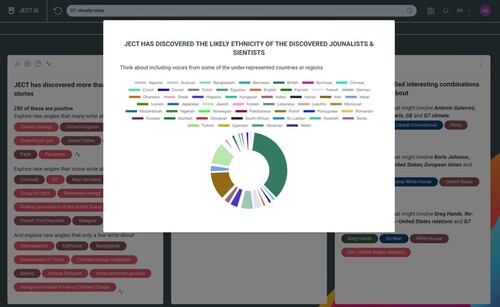

The card also offered features that allowed the science journalist to view different interactive visualizations of the ethnicities and genders of the discovered names. E.g., Figure 3 shows a pie chart visualization of the genders and ethnicities of the wider sets of discovered journalists and scientists writing about the entered topic.
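A pie chart of this kind needs only each label's share of the discovered names. As a minimal sketch, assuming each name has already been reduced to a single label (for example, its most probable gender or cultural origin), the shares could be computed as follows. The function name `label_shares` is hypothetical, and the rendering step is left to whichever charting library is used.

```python
from collections import Counter


def label_shares(labels):
    """Return each label's share of the discovered names (fractions summing to 1)."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: count / total for label, count in counts.items()}
```

For instance, `label_shares(["european", "european", "non-european", "european"])` returns `{"european": 0.75, "non-european": 0.25}`, which a charting library could then draw as two slices.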

Figure 3. The new prototype's intelligence card's visualization features, revealing the different ethnicities of names of scientists writing on the entered topics.

The names of the publishing journalists and scientists presented on the intelligence card were computed using a new automated algorithm. Use of this algorithm was a deliberate decision to increase the tool's level of digitized support, albeit for a task that too few journalists were undertaking regularly. First, the new algorithm extracted the names of journalists who had written the discovered news articles by identifying proper names that explicitly designated the author(s) of an article. The result was an unordered set of names, each composed of a first name and surname. The names of scientific authors were extracted from retrieved academic papers using SerpApi's Google Scholar service (SerpApi 2022), which used the query to search for scholarly literature and extracted the authors of the top 20 academic papers. The result was also an unordered set of names composed of first name and surname. A third service, called NamSor (Namsor 2022), then attributed probabilities for the gender and cultural origin of each name in each list, using a dataset of over 5 million names. Finally, using the most probable gender and cultural origin of each name, the algorithm generated two lists, one of retrieved journalists and one of retrieved scientific authors. Each list was composed of up to 20 different names, and was intended to contain equal numbers of female and male first names, and equal numbers of European and non-European surnames. It was these lists that were presented on the card to support science journalists to find more diverse voices for their stories.
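The final balancing step can be sketched as stratified round-robin selection. The paper does not specify how the equal numbers were achieved, so the interleaving strategy, the stratum order and the input format below are assumptions. Each person is a dict with a `"name"` and two label-to-probability mappings, of the kind a name-classification service might return; the field names and label strings are hypothetical and no real NamSor or SerpApi call is made.

```python
from itertools import zip_longest

# Hypothetical stratum order: each round's first two picks already balance
# both axes (one female/European name, one male/non-European name).
STRATA = [
    ("female", "european"),
    ("male", "non-european"),
    ("female", "non-european"),
    ("male", "european"),
]


def most_probable(probabilities):
    """Return the label with the highest probability."""
    return max(probabilities, key=probabilities.get)


def balanced_names(people, limit=20):
    """Interleave names across gender x origin strata, up to `limit` names.

    `people` is a list of dicts with hypothetical keys "name", "gender"
    (label -> probability) and "origin" (label -> probability). When every
    stratum has enough names, each full round of four adds two female and
    two male names, and two European and two non-European names.
    """
    strata = {key: [] for key in STRATA}
    for person in people:
        key = (most_probable(person["gender"]), most_probable(person["origin"]))
        if key in strata:  # skip labels outside the two binary categories
            strata[key].append(person["name"])
    selected = []
    for round_of_names in zip_longest(*(strata[key] for key in STRATA)):
        for name in round_of_names:
            if name is not None and len(selected) < limit:
                selected.append(name)
    return selected
```

Because names keep their input order within each stratum, an upstream relevance ranking survives the balancing. If one stratum runs short, the remaining strata still fill the list, which is why balance in this sketch is a target rather than a guarantee, matching the "intended to contain" wording above.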

The new card increased the digital support for at least three tasks that science journalists otherwise often undertook without specialist support: (1) discovering journalists and scientists who have published work related to their stories; (2) reviewing these journalists and scientists, both individually and as a group, to filter them according to gender and ethnicity; and (3) discovering articles and papers written by these journalists and scientists related to their stories. As with the original version of JECT.AI, the science journalists were expected to remain the dominant agents in the production of the science journalism (Milosavljević and Vobič 2019).

Having designed the new prototype for use in the context of science journalism, we then investigated how science journalists might interact with it to improve their completion of the identified science journalism tasks.

Feedback on the Prototype Digitized Support for Science Journalists

The new digitized prototype was made available for use by science journalists. In this paper, we report feedback from experienced science journalists working in two different regions: Scandinavia and Southern Africa. We deliberately collected feedback from science journalists working in different contexts that might provide different perspectives on digital support and its role in their work. Due to the paucity of time available to most science journalists (Maiden et al. 2018), we ran two workshops, the first with three science journalists working in Scandinavia and the second with 11 science journalists in Southern Africa. These workshops, rather than a larger number of interviews with individual journalists, offered a more cost-effective and more valuable form of first engagement for the science journalists, who were able to share and engage in constructive interactions with each other as well as with the researchers. Each workshop transcript was analyzed to investigate three exploratory research questions about the prototype's automation and digitized support for science journalists during the identified tasks:

RQ1: Is it acceptable to experienced science journalists?

RQ2: Does it have the potential to support experienced science journalists in their work?

RQ3: Does it have the potential to support these science journalists to discover more diverse scientific voices?

Feedback from the Science Journalists Working in Scandinavia

First of all, three science journalists working in Norway, Sweden and Denmark, each with a minimum of ten years of professional experience, participated in one online workshop to explore and discuss the prototype’s digitized support for their work.

Workshop Method

One of the authors ran the online workshop to present, demonstrate and elicit feedback about the automation and the interactive cards. The workshop was held on Zoom and lasted 90 min. Several days beforehand, the science journalists (respondents A, D and R) were emailed a link to the prototype and asked to create user accounts and explore the tool in their work. During the workshop, the author presented the prototype's digitized support and demonstrated the landing, news and intelligence cards using examples about Chernobyl in Ukraine and chemical weapons in Ukraine. The audio transcript was analyzed for feedback about each presented card and the semi-automation of the tasks. A simple thematic analysis of the transcript revealed topics such as reactions to automation, likes, dislikes and desired new features related to each card. In this paper, we report selected participant comments on these themes related to the audience and intelligence cards.

Workshop Findings

Two of the respondents, A and R, had reported using the digitized prototype prior to the workshop, and their experiences were positive. Respondent R reported: “I typed in some keywords and got a few a few results and it looks, it looks nice, the interface was intuitive and okay.”, and respondent A said: “So it's really nice to follow and also is there, like so what when it, so the AI technology is searching for relevant articles, like all around the Internet, I guess.”

All three respondents talked about advantages of the landing card and its visualizations: “I think this is a nice tool, because it gives me a lot of source material, it has been sorted for me, and in a very understandable and reasonable way and, if I can quickly go to the different sources and have a look, I sort of get this overview” (R), “I think I like it, especially the concrete suggestions for other stories directly to the media, I think that's and, of course, citizens on new angles” (P), and “I guess you can avoid a lot of biases due to, you know, due to the charts, which is nice. You can also use it in you know you can use it as a reference as well” (A). The respondents were also positive about the automation underlying the revised landing card. Respondent R said: “In my view, it's a search engine. We do this, we use sort of AI all the time, using Google searches so I don't view it as fundamentally different” and “For some time, this is how we did this is how we work. I’ve been working as a journalist for many years and we had this mechanical sort of a way to start to dig into to a topic”. Respondent A made similar comments: “I’m not afraid of AI technology … You know people have different opinions on it. As R has already said we’re already dealing with AI on daily basis, and obviously this one seems very helpful”. However, A was still concerned about how other journalists might perceive the automation: “Maybe a traditional journalist could argue that it kind of repressing the human-to-human view of that article on that issue. But I don't think personally needed”, and: “Obviously, like, if you want to [write] more on-field articles, then the interviews … this should be also included and they say, I cannot do. But, as a research tool, it really is really helpful”.

Respondent R expressed a strong need for a more personalized version of digital support: “Personalization. That's top of my list. I can modify the source list and the sources that you are looking into and that they can that they can keep some of that result, it gives me in a personal bin on record”, “ … if this tool could sort of learn what I’m looking for by my way of using it, and on and on that you’re having a list option that it's more tailored to how I feel about this topic”, and: “after working with a topic for some time, you tend to want more personal views … . So it might be that that after using this tool for a while that would feel the same as on other than that … but for the time being, being new to this. I don't I don't see that as a problem”. The other respondents supported this need for more personalization of the prototype's digitized support.

All three respondents also made positive comments about the prototype intelligence card, e.g., “I like it.” (R) and “But you know yeah, the thing is, is can be hard, a lot of time spent on finding sources that are willing and having the time to talk to you. When you have experts are active on Twitter, usually there are some of the more easily accessible” (P). All three reported that the problem the card was designed to overcome, namely finding science sources to include in stories, was one that they faced regularly in their work.

However, respondent R also reported the need to record new angles and voices during a session: “Can I sort of make my own little bin on top and drag interesting names, interesting angles into a little bin and keep it keep it for later? … The way I do this that I have my sources in a number of tabs on top of my window, and I jumped from tab to tab … . But if it could have more not only one article or one source, but have a have a bunch of articles written by an interesting person, for instance, or an angle that you have come up with sort of relatively a hand it would be perfect would really like that.” Respondent A supported R's request: “if you can save articles for later, or is there any option is. As you know that I can come back to the article, and that would be nice”.

Feedback from the Science Journalists in Southern Africa

To provide an alternative geographical perspective on the prototype’s digitized support, science journalists in Southern Africa participated in a second workshop that used a similar method to the first.

Workshop Method

Two of the authors ran the second workshop. A total of 11 experienced science journalists from South Africa participated; all but one were based in that country at the time. Most were highly experienced, with long-standing backgrounds in science reporting. Again, the workshop was held on Zoom, and it lasted just over 2 h. Several weeks beforehand, each journalist was emailed a link to the prototype and asked to create a user account and explore the tool in their work; several reported having done so. During the workshop, one of the authors presented the prototype and demonstrated how the landing, intelligence and news cards could support their work, using an example about deforestation in Africa. The workshop audio transcript was then analyzed for the same themes as in the first workshop.

Workshop Findings

Again, none of the journalists raised concerns about the task automation behind the prototype's cards. To better understand the nature of the automation, several asked questions about the tool's algorithms, e.g., which news sources it processed and how it compared with searches in Google and Google Scholar.

Unlike in the first workshop, the demonstration of the intelligence card generated considerable interest and feedback. Many of the journalists recognized the science journalism challenge that the card had originally been designed to solve. However, from their perspective in Southern Africa, several reported that the pool of scientists discoverable through existing journal publications and tools such as Google Scholar was too European-centric. For example, respondent M reported: “One of the things that I find frustrating, and I’m sure other people do to is, when you go looking for African science about an African subject, you’re constantly getting shunted back to the mainstream establishment … the global north publications, and the global north sciences. … [But] there is a hell of a lot science done on African soil, and I think that it is important that we highlight that”. Indeed, this European-centric focus was seen to create a degree of misinformation about which scientists are available. For example, respondent A stated: “We all think that there is a dearth of African scientists. And actually, that's not true, it's just that they’ve not been brought into the fold. They’ve not been seen as a priority. And, in fact, just to underscore what everyone has been saying, that, you know, when I just think about South African countries and South African scientists, it is incredible the amount of knowledge that is here, and the amount of work that is being done”. These challenges were described as general ones that also affect science journalists in other regions; e.g., respondent M said: “And I’m fairly sure from discussions I’ve had that people such as science journalists in South America, and in places like Malaysia, would agree, that you would like something that enabled them to say, specifically, I’m looking for people in my area”.
The authors concluded that these experienced science journalists, as a group, recognized the need for the intelligence card, although the algorithms generating its content needed to be extended and refined.

Most of the science journalists reported that the card's information about discovered scientists and their Twitter feeds was more valuable than the information about journalists reporting on similar topics. Respondent A said: “Just to say, I think that Twitter connections are great. I think that's excellent.”, and: “There is an incredible need for journalists on the ground in these other countries that are looking to connect with scientists on the continent.” Likewise, respondent M stated: “I don't only want people from the global north, I want people from Africa talking about deforestation in Africa. So that I get to look at papers that are not all written by African scientists, but there are African scientists in that paper, as authors, that we can then get hold of. It is much better to be talking to people from your own area, and continent. It is also one of the reasons that African science does not get highlighted.” Other journalists extended this need to science journalists from other regions, e.g., respondent S: “I don't see why that's just for African journalists. I think that, if people are going to be writing about the topic of African continent and African countries, European writers should be speaking to African scientists, should be speaking to people on the ground. Because that makes journalism better. Their journalism is poorer for only seeing people who are only in their context, and science only done by people in their context. In fact, that is the great benefits that this could have”. Respondent S finished by describing the prototype as “a discovery tool of non-European scientists and journalists.” The authors interpreted this concluding remark as broadening the intended remit of the intelligence card, because it recognized the card's value for non-European science journalists seeking to connect with local scientists.

Given this feedback, it was not surprising that some of the journalists argued that the card's current automation and interactive features needed to be extended. One such extension was to discover and present journalists based not only on their names but also on their locations. Respondent A summarized this emerging need: “I think that location is really important … so scientists gathered in Kemri, e.g., in Kenya, or UCT in Cape Town, or the various institutes, I think it's really important”. The workshop participants also discussed different scenarios that future versions of the card should support. One was to discover scientists who are originally from Africa but now work on local topics at institutions beyond the continent. Another was to discover non-African scientists who now work on local topics at African institutions. During the discussion, it was recognized that new automated algorithms would be needed to combine the probabilities that names originate from particular regions with the locations of institutions in the same or different regions.
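
The scenarios raised in this discussion can be made concrete with a small probabilistic sketch. It assumes that a name classifier yields a probability that a scientist's name originates in the target region, and that an affiliation parser yields a probability that their institution lies in that region. Treating the two as independent (a simplifying assumption, not something the paper states), each scenario's joint probability is a simple product. All function names and candidates are hypothetical.

```python
from typing import Dict, List, Tuple

def scenario_probabilities(p_local_name: float,
                           p_local_institution: float) -> Dict[str, float]:
    """Joint probability of each name-origin / institution-location scenario.

    Assumes the two inputs are independent, so each joint is a product;
    the four values always sum to 1.
    """
    for p in (p_local_name, p_local_institution):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    n, i = p_local_name, p_local_institution
    return {
        "local name, local institution": n * i,
        "local name, institution abroad": n * (1 - i),      # e.g. diaspora scientists
        "non-local name, local institution": (1 - n) * i,    # e.g. non-Africans at African institutes
        "non-local name, institution abroad": (1 - n) * (1 - i),
    }

def rank_for_scenario(candidates: List[Tuple[str, float, float]],
                      scenario: str) -> List[Tuple[str, float]]:
    """Rank (name, p_local_name, p_local_institution) tuples by one scenario."""
    scored = [(name, scenario_probabilities(n, i)[scenario])
              for name, n, i in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical candidates: (display name, P(local name), P(local institution)).
candidates = [
    ("Scientist 1", 0.9, 0.0),
    ("Scientist 2", 0.3, 0.0),
    ("Scientist 3", 0.9, 1.0),
]
ranked = rank_for_scenario(candidates, "local name, institution abroad")
print(ranked)
```

For the diaspora-style query above, `ranked` orders Scientist 1 (0.9), Scientist 2 (0.3) and Scientist 3 (0.0). A production version would probably need to model correlation between name origin and institution location rather than assume independence.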

Finally, the feedback also revealed existing challenges when connecting with discovered scientists. For example, respondent A said: “It is because I think that is about ‘connecting’. So it is that thing about connections to people in the same area, or across borders and so on. You know, for me, to try and get hold of a scientist in the United States is an incredibly frustrating process. I think that it has also got something to do with ease-of-connection. Especially for young journalists”. Several respondents argued that “Google Scholars is a good page to land on.”, but then identified the need for more information that would connect journalists to scientists more easily.

Conclusions, Design Implications and Discussion

The applied and design research reported in this paper has revealed that the existing digitized support for science journalists is surprisingly limited (e.g., Smith et al. 2018, 2020; Tatalovic 2018). In response, new design science research was undertaken to semi-automate some science journalism tasks, so that science journalists might work more efficiently and effectively and discover more diverse scientific voices. This design science approach, which developed digitized prototypes for science journalists using existing algorithms from beyond journalism research, such as NamSor (NamSor 2022), provided a valuable probe with which to contextualize and explore possible new forms of digitized support. These forms offered capabilities different from those of both the existing digital automation available to support journalists (e.g., Sohrawardi et al. 2020; Tolmie et al. 2017) and tools that support journalists to undertake science journalism tasks, such as Scholarcy and FactCheck.org. Despite conducting a literature review, the authors were unaware of any other digital tools that support journalists to discover either new angles on stories or more diverse voices for these stories.

The results from a first investigation into how 14 experienced science journalists might interact with a new prototype enabled us to draft tentative first answers to the three research questions:

RQ1: The prototype's semi-automation that supported the identified science journalism tasks was acceptable to the experienced science journalists;

RQ2: This semi-automation had the potential to support the experienced science journalists in their work;

RQ3: The digitized prototype that offered this semi-automation had the potential to support science journalists to discover diverse scientific voices, although interestingly, the roles of the semi-automation varied by journalist locations.

We were initially surprised by the extent to which the science journalists reported the prototype's digital support to be acceptable. Some of them reported already using tools such as Google, Google Translate and Nero AI, and the workshops revealed that the prototype had the potential to assist science journalists who have limited support, time and resources (Guenther et al. 2017; Rosen, Guenther, and Froehlich 2016; Schäfer 2011). That said, as reported by Linden (2017), journalists have shown surprisingly strong capacities for adapting to and mitigating new technologies, and the science journalists' responses to the digitized prototype might be another example of this. One possible reason for this acceptance is that the semi-automation of selected tasks had been designed to allow science journalists to retain control over the outcomes it produced, consistent with principles outlined by Milosavljević and Vobič (2019). Beyond journalism, Shneiderman (2020) reported that effective human-centred artificial intelligence should provide users with high levels of control alongside high levels of automation. Our digitized prototype's design was consistent with these principles, as it excluded algorithms that automated writing or could be perceived as robot journalism (e.g., Linden 2018). The research also suggested how new forms of digital support might contribute to increased audience engagement (e.g., Besley 2018; Dawson 2018; Schäfer et al. 2018), by discovering scientists who are better placed to engage with different science journalism audiences.

Furthermore, beyond these initial findings, the authors sought to understand whether the prototype's digitized support might be consistent with established values of science in science journalism, such as objectivity, honesty, openness, accountability, fairness and stewardship. Reflecting on the reported findings, the authors judged that regular future use of the prototype could contribute to fairness, by reinforcing a system in which trust among the parties, and in science, can be maintained through easier access to one another. Such use could also contribute to stewardship of the dynamics of relationships between different people in research, by surfacing, and then supporting the management of, the more diverse networks that science journalists need to maintain. It might also contribute to greater openness, access and transparency by presenting more information relevant to scientific decisions or conclusions.

Threats to Validity

The reported design science research is preliminary, and there are clear threats to the validity of the conclusions drawn from it. For example, the semi-automation of the selected science journalism tasks was implemented in a single prototype, so our conclusions are limited to that prototype's design and implementation. Only some science journalism tasks were supported, so our conclusions cannot be extended to other tasks such as networking or interviewing scientists about their research. Moreover, most of the workshop feedback was collected from a self-selecting group of science journalists: ones who were more experienced and willing to provide feedback on digital support. Feedback from less-experienced science journalists, and from those perhaps more resistant to automation, is also needed to broaden our results and validate our conclusions. Most importantly, the workshop format did not collect data about first-hand tool use, which is more likely to reveal possible limitations of digital support for science journalism tasks in work contexts. Instead, this research sought first evidence about the designed semi-automation ahead of planned longer-term studies of its use by science journalists, which will examine its strengths, weaknesses and emergent outcomes.

Design Implications for Future Versions of the Prototype

The design science research also revealed new requirements for the digitized prototype: to allow science journalists to customize both the sources and the semi-automation used to discover angles and voices, and to enable them to save discovered content, angles and voices. In simple terms, these requirements revealed that the prototype was expected to support the contextual model of science journalism (Secko, Amend, and Friday 2013) and to inform communities and individuals about science as it relates to particular contexts. Furthermore, to overcome the perceived bias towards the “global north”, the prototype will also need to be extended to discover scientists with local ethnicities working in local institutions. This could be achieved by, e.g., linking each user to one or more regions about which the card discovers information. The current automated algorithms could be refined to discover information specific to regions and countries, using NamSor's (Namsor 2022) fine-grained name ethnicities (e.g., South African, Cameroonian, Burkina Faso) within predefined UN regions such as Southern Africa and Western Africa. Moreover, the algorithms could link author addresses to these regions and extract the email addresses and university webpage URLs of discovered scientists, to support quicker connections.
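
The proposed refinements, linking author addresses to UN regions and extracting contact details, might look like the minimal sketch below. It assumes plain-text author addresses and a small hand-written excerpt of the UN M49 geoscheme; the country-to-region table, the naive substring matching and the regular expressions are illustrative assumptions, not the prototype's code or NamSor's output. Note that in the M49 scheme Cameroon falls under Middle Africa, whereas Burkina Faso falls under Western Africa.

```python
import re
from typing import Dict, List, Optional

# Hypothetical excerpt of the UN M49 geoscheme: country -> sub-region.
UN_SUBREGION = {
    "South Africa": "Southern Africa",
    "Botswana": "Southern Africa",
    "Namibia": "Southern Africa",
    "Cameroon": "Middle Africa",
    "Burkina Faso": "Western Africa",
    "Ghana": "Western Africa",
    "Nigeria": "Western Africa",
    "Kenya": "Eastern Africa",
}

# Simple patterns; real-world emails and URLs need more robust handling.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_PATTERN = re.compile(r"https?://[^\s,;)]+")

def region_of_address(address: str) -> Optional[str]:
    """Link an author address to a UN sub-region by naive country-name matching."""
    for country, region in UN_SUBREGION.items():
        if country in address:
            return region
    return None

def contact_details(text: str) -> Dict[str, List[str]]:
    """Extract email addresses and webpage URLs from free text."""
    return {
        "emails": EMAIL_PATTERN.findall(text),
        "urls": URL_PATTERN.findall(text),
    }

def scientists_in_region(affiliations: Dict[str, str], region: str) -> List[str]:
    """Return names whose affiliation address maps to the requested sub-region."""
    return [name for name, address in affiliations.items()
            if region_of_address(address) == region]

# Hypothetical affiliations for two discovered scientists.
affiliations = {
    "Scientist 1": "KEMRI, Nairobi, Kenya",
    "Scientist 2": "UCT, Cape Town, South Africa",
}
print(scientists_in_region(affiliations, "Eastern Africa"))
print(contact_details("Contact: j.doe@uct.ac.za; profile https://www.uct.ac.za/staff/jdoe"))
```

Substring matching is deliberately simple; a fuller version would need to handle overlapping country names (such as Niger and Nigeria), abbreviations and addresses in other languages.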

Towards a Better Understanding of Science Journalism Diversity

Finally, whilst the journalists in Scandinavia recognized the need to connect more with experts who are women and/or from diverse ethnic backgrounds (Franks et al. 2019), the journalists in Southern Africa reported an important “global north” bias arising from the algorithm's crude distinction between scientists with European and non-European names. Other definitions of diversity were also reported, recognizing factors such as a scientist's background, education and work location. Our automated algorithms can be adapted, but new research will first be needed to capture how science journalists in different geographical and cultural contexts define voices that are diverse and/or engage with local audiences. Although some research has reported science journalism challenges in the “global south”, including dependencies on foreign sources (Nguyen and Tran 2019), and the potential for automation to serve science journalism in Africa (Tatalovic 2018), it does not define types of diverse or more engaging audiences. The new research will intersect with themes of indigenous journalism. Whilst gender differences in the required on-air appearance of news anchors have been reported (Newton and Steiner 2019), indigenous journalists have reported difficulties when working in mainstream outlets that have erased and misrepresented indigenous voices, communities and concerns (e.g., Callison and Young 2020). New definitions of diversity need to include indigenous scientists and journalists. Therefore, in the next phase of this research, we will also use online questionnaires and workshops with science journalists globally to define voices that are perceived as diverse and/or as engaging with local audiences, before designing more advanced algorithms to semi-automate as part of the prototype's digitized support.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

References

- Angler, Martin W. 2017. Science Journalism: An Introduction. Abingdon, Oxon: Routledge.

- Besley, John C. 2018. “Audiences for Science Communication in the United States.” Environmental Communication 12 (8): 1005–1022. https://doi.org/10.1080/17524032.2018.1457067.

- Bodó, Balázs. 2019. “Selling News to Audiences – A Qualitative Inquiry Into the Emerging Logics of Algorithmic News Personalization in European Quality News Media.” Digital Journalism 7 (8): 1054–1075. https://doi.org/10.1080/21670811.2019.1624185.

- Callison, Candis, and Mary Lynn Young. 2020. Reckoning: Journalism’s Limits and Possibilities. Oxford Scholarship Online. https://doi.org/10.1093/oso/9780190067076.003.0007.

- Castell, Sarah, Anne Charlton, Michael Clemence, Nick Pettigrew, Sarah Pope, Anna Quigley, Jayesh Navin Shah, and Tim Silman. 2014. Public Attitudes to Science, UK Department for Business, Innovation and Skills, accessed 9/4/20.

- Chimba, Mwenya, and Jenny Kitzinger. 2010. “Bimbo or Boffin? Women in Science: An Analysis of Media Representations and How Female Scientists Negotiate Cultural Contradictions.” Public Understanding of Science 19 (5): 609–624. https://doi.org/10.1177/0963662508098580.

- Danzon-Chambaud, Samuel. 2021. “Covering COVID-19 with Automated Journalism: Leveraging Technological Innovation in Times of Crisis.” Proceedings Computation + Journalism’2021 Conference, Northeastern University, February 2021.

- Davidson, Natalie, and Casey Greene. 2021. Analysis of Scientific Journalism in Nature Reveals Gender and Regional Disparities in Coverage. bioRxiv preprint, Accessed August 17, 2021. https://www.biorxiv.org/content/10.1101/2021.06.21.449261v1.full.pdf. https://doi.org/10.1101/2021.06.21.449261.

- Davies, Nick. 2009. Flat Earth News. London: Vintage Books.

- Davies, Evan. 2017. Post Truth: Why we Have Reached Peak Bullshit and What we Can do About it. Great Britain: Little Brown.

- Davies, Sarah, Suzanne Franks, Joseph Roche, Ana Schmidt, Rebecca Wells, and Fabiana Zollo. 2021. “The Landscape of European Science Communication.” Journal of Science Communication 20 (3): A01. https://doi.org/10.22323/2.20030201.

- Dawson, Emily. 2018. “Reimagining Publics and (Non) Participation: Exploring Exclusion from Science Communication Through the Experiences of low-Income, Minority Ethnic Groups.” Public Understanding of Science 27 (7): 772–786. https://doi.org/10.1177/0963662517750072.

- Diakopoulos, Nicholas. 2020. “Computational News Discovery: Towards Design Considerations for Editorial Orientation Algorithms in Journalism.” Digital Journalism 8 (7): 945–967. https://doi.org/10.1080/21670811.2020.1736946.

- Dunwoody, Sharon. 2014. “Science Journalism: Prospects in the Digital Age.” In Handbook of Public Communication of Science and Technology, edited by Massimiano Bucchi, and Brian Trench, 27–39. 2nd ed. New York: Routledge.

- Estelle Smith, C., Eduardo Nevarez, and Haiyi Zhu. 2020. “Disseminating Research News in HCI: Perceived Hazards, How-To’s, and Opportunities for Innovation.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’20). New York: ACM Press.

- Estelle Smith, C., Xinyi Wang, Raghav Pavan Karumur, and Haiyi Zhu. 2018. “[Un] Breaking News: Design Opportunities for Enhancing Collaboration in Scientific Media Production.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’18), 1–13. New York, NY: ACM Press.

- Franks, Suzanne, and Lis Howell. 2019. “Seeking Women’s Expertise in the UK’s Broadcast News Media.” In Journalism, Gender and Power, edited by Cynthia Carter, Linda Steiner, and Stuart Allan, 13–22. Abingdon, Oxon: Routledge.

- Garbett, Andrew, Rob Comber, Paul Egglestone, Maxine Glancy, and Patrick Olivier. 2014. “Finding “Real People": Trust and Diversity in the Interface Between Professional and Citizen Journalists.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’14), 3015–3024. New York: ACM Press. https://doi.org/10.1145/2556288.2557114.

- Ginosar, Avshalom, Ifat Zimmerman, and Tali Tal. 2022. “Peripheral Science Journalism: Scientists and Journalists Dancing on the Same Floor.” Journalism Practice, 1–20. https://doi.org/10.1080/17512786.2022.2072368.

- Goepfert, Winfried. 2007. “The Strength of PR and the Weakness of Science Journalism.” In Journalism, Science and Society, edited by Martin W. Bauer and Massimiano Bucchi, 215–226. New York: Routledge. https://doi.org/10.4324/9780203942314.

- Goldenberg, Suzanne. 2012. “Leak Exposes How Heartland Institute Works to Undermine Climate Science.” Accessed April 25, 2020. https://www.theguardian.com/environment/2012/feb/15/leak-exposes-heartland-institute-climate.

- Guenther, Lars, Jenny Bischoff, A. Löwe, and Hanna Marzinkowski. 2017. “Scientific Evidence and Science Journalism: Analysing the Representation of (un)Certainty in German Print and Online Media.” Journalism Studies 20 (1): 40–59. https://doi.org/10.1080/1461670X.2017.1353432.

- Hansen, Anders, et al. 2009. “Science, Communication and Media.” In Investigating Science Communication in the Information Age, edited by Richard Holliman, 105–127. Oxford: Oxford University Press.

- Horst, Maja, Sarah Davies, and Alan Irwin. 2016. “Reframing Science Communication.” In The Handbook of Science and Technology Studies, edited by Clark A. Miller, Laurel Smith-Doerr, and Ulrike Felt. 4th ed., 881–907. Cambridge: MIT Press.

- Johns Hopkins University’s Coronavirus Resource Center. 2021. https://coronavirus.jhu.edu/map.html. Accessed August 18, 2021.

- Kaiser, David, John Durant, Thomas Levenson, Ben Wiehe, and Peter Linett. 2014. Report of Findings: September 2013 Workshop. MIT and Culture Kettle. www.cultureofscienceengagement.net.

- Kiskola, Joel, Thomas Olsson, Heli Väätäjä, Aleksi H. Syrjämäki, Anna Rantasila, Poika Isokoski, Mirja Ilves, and Veikko Surakka. 2021. “Applying Critical Voice in Design of User Interfaces for Supporting Self-Reflection and Emotion Regulation in Online News Commenting.” Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, New York, Article 88, 1–13. https://doi.org/10.1145/3411764.3445783.

- Lang, Trudie, and Peter Drobac. 2020. How Journalists Can Help Stop the Spread of the Coronavirus Outbreak. Reuters. RISJ, https://reutersinstitute.politics.ox.ac.uk/risj-review/how-journalists-can-help-stop-spread-coronavirus-outbreak.

- Lehmkuhl, Markus, and Hans Peter Peters. 2016. “Constructing (Un-)Certainty: An Exploration of Journalistic Decision-Making in the Reporting of Neuroscience.” Public Understanding of Science 25 (8): 909–926. https://doi.org/10.1177/0963662516646047.

- Liaw, Raymond, Ari Zilnik, Mark Baldwin, and Stephanie Butler. 2013. “Maater: Crowdsourcing to Improve Online Journalism.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ‘13), 2549–2554. New York, NY: ACM Press. https://doi.org/10.1145/2468356.2468828.

- Linden, Carl-Gustav. 2017. “Decades of Automation in the Newsroom.” Digital Journalism 5 (2): 123–140. https://doi.org/10.1080/21670811.2016.1160791.

- Linden, Carl-Gustav. 2018. “Algorithms for Journalism: The Future of News Work.” Journal of Media Innovation 4 (1): 60–76. https://doi.org/10.5617/jmi.v4i1.2420.

- Lopez, Marisela Gutierrez, Colin Porlezza, Glenda Cooper, Stephann Makri, Andrew MacFarlane, and Sondess Missaoui. 2022. “A Question of Design: Strategies for Embedding AI-Driven Tools Into Journalistic Work Routines.” Digital Journalism 11 (3): 484–503. https://doi.org/10.1080/21670811.2022.2043759.

- Maiden, Neil, George Brock, Konstantinos Zachos, Amanda Brown, Lars Nyre, Dimitris Apostolou, and Jeremy Evans. 2018. “Making the News: Digital Creativity Support for Journalists.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ‘18). New York, NY: ACM Press, Paper No 475. https://doi.org/10.1145/3173574.3174049.

- Maiden, Neil, Konstantinos Zachos, Amanda Brown, Dimitris Apostolou, Balder Holm, Lars Nyre, Aleksander Tonheim, and Arend van den Beld. 2020a. “Digital Creativity Support for Original Journalism.” Communications of the ACM 63 (8): 46–53. https://doi.org/10.1145/3386526.

- Maiden, Neil, Konstantinos Zachos, Suzanne Franks, Rebecca Wells, and Samantha Stallard. 2020b. “Designing Digital Content to Support Science Journalism.” In Proceedings of 11th Nordic Conference on Human-Computer Interaction: Shaping Experiences, Shaping Society (NordiCHI ‘20), 1–13. New York: ACM, Article 80. https://doi.org/10.1145/3419249.3420124.

- Marsh, Oliver. 2018. ““Nah, Musing Is Fine. You don’t have to be ‘doing science’": Emotional and Descriptive Meaning-Making in Online Non-professional Conversations about Science.” PhD thesis, Department of Science and Technology Studies, University College London, UK.

- Miller, J. D. 1983. “Scientific Literacy: A Conceptual and Empirical Review.” Daedalus 112 (2): 29–48.

- Milosavljević, Marko, and Igor Vobič. 2019. “Human Still in the Loop.” Digital Journalism 7 (8): 1098–1116. https://doi.org/10.1080/21670811.2019.1601576.

- Namsor. 2022. Namsor, Name Checker for Gender, Origin and Ethnicity Classification. https://www.namsor.com.

- Nanodiode. 2022. European Union of Science Journalists’ Associations EUSJA. http://www.nanodiode.eu/partner/eusja/.

- Newton, April, and Linda Steiner. 2019. “Pretty in Pink: The Ongoing Importance of Appearance in Broadcast News.” In Journalism, Gender and Power, edited by Cynthia Carter, Linda Steiner, and Stuart Allan, 1–17. Abingdon, Oxon: Routledge.

- Nguyen, An, and Steve McIlwaine. 2011. “Who Wants a Voice in Science Issues—And Why?” Journalism Practice 5 (2): 210–226. https://doi.org/10.1080/17512786.2010.527544.

- Nguyen, A., and M. Tran. 2019. “Science Journalism for Development in the Global South: A Systematic Literature Review of Issues and Challenges.” Public Understanding of Science 28 (8): 973–990. https://doi.org/10.1177/0963662519875447.

- Norambuena, Brian Keith, Tanushree Mitra, and Chris North. 2021. “Exploring the Information Landscape of News Using Narrative Maps.” In Proceedings of the Computation + Journalism 2021 Conference, Northeastern University, February 2021.

- Peng, Hao, Misha Teplitskiy, and David Jurgens. 2020. “Author Mentions in Science News Reveal Wide-Spread Ethnic Bias.” arXiv:2009.01896 [cs.CY]. Accessed August 17, 2021.

- Peters, Hans Peter. 2013. “Gap Between Science and Media Revisited: Scientists as Public Communicators.” Proceedings of the National Academy of Sciences 110 (supplement_3): 14102–14109. https://doi.org/10.1073/pnas.1212745110.

- Rosen, Cecilia, Lars Guenther, and Klara Froehlich. 2016. “The Question of Newsworthiness: A Cross-Comparison among Science Journalists’ Selection Criteria in Argentina, France, and Germany.” Science Communication 38 (3): 328–355. https://doi.org/10.1177/1075547016645585.

- Schäfer, Mike. 2011. “Sources, Characteristics and Effects of Mass Media Communication on Science: A Review of the Literature, Current Trends and Areas for Future Research.” Sociology Compass 5 (6): 399–412. https://doi.org/10.1111/j.1751-9020.2011.00373.x.

- Schäfer, Mike, Tobias Füchslin, Julia Metag, Silje Kristiansen, and Adrian Rauchfleisch. 2018. “The Different Audiences of Science Communication: A Segmentation Analysis of the Swiss Population’s Perceptions of Science and Their Information and Media Use Patterns.” Public Understanding of Science 27 (7): 836–856. https://doi.org/10.1177/0963662517752886.

- Scholarcy. 2022. The AI-Powered Article Summarizer. https://www.scholarcy.com.

- Schünemann, Sophie. 2013. “Science Journalism.” In Specialist Journalism, edited by Barry Turner and Richard Orange, 134–146. London: Routledge. https://doi.org/10.4324/9780203146644.

- SciCheck. 2022. SciCheck. https://www.factcheck.org/scicheck/.

- Secko, David M., Elyse Amend, and Terrine Friday. 2013. “Four Models of Science Journalism.” Journalism Practice 7 (1): 62–80. https://doi.org/10.1080/17512786.2012.691351.

- SerpApi. 2022. Google Search API. https://serpapi.com.

- Shneiderman, Ben. 2020. “Human-Centered Artificial Intelligence: Three Fresh Ideas.” AIS Transactions on Human-Computer Interaction 12 (3): 109–123. https://doi.org/10.17705/1thci.00131.

- Smith, Hollie, and Meredith L. Morgoch. 2022. “Science & Journalism: Bridging the Gaps Through Specialty Training.” Journalism Practice 16 (5): 883–900. https://doi.org/10.1080/17512786.2020.1818608.

- Sohrawardi, Saniat Javid, Sovantharith Seng, Akash Chintha, Bao Thai, Andrea Hickerson, Raymond Ptucha, and Matthew Wright. 2020. “DeFaking Deepfakes: Understanding Journalists’ Needs for Deepfake Detection.” In Proceedings of the Computation + Journalism 2020 Conference.

- Spangher, Alexander, Nanyun Peng, Jonathan May, and Emilio Ferrara. 2021. “‘Don’t Quote Me on That’: Finding Mixtures of Sources in News Articles.” arXiv:2104.09656 [cs.CL].

- Tatalovic, Mico. 2018. “AI Writing Bots are About to Revolutionise Science Journalism: We Must Shape how This is Done.” Journal of Science Communication 17 (1). https://doi.org/10.22323/2.17010501.

- Thurman, Neil, Seth C. Lewis, and Jessica Kunert. 2019. “Algorithms, Automation, and News.” Digital Journalism 7 (8): 980–992. https://doi.org/10.1080/21670811.2019.1685395.

- Tolmie, Peter, Rob Procter, Mark Rouncefield, Dave Randall, Christian Burger, Geraldine Wong Sak Hoi, Arkaitz Zubiaga, and Maria Liakata. 2017. “Supporting the Use of User Generated Content in Journalistic Practice.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’17). New York: ACM Press.

- Wang, Yixue, and Nicholas Diakopoulos. 2021. “Journalistic Source Discovery: Supporting the Identification of News Sources in User Generated Content.” In Proceedings of 2021 CHI Conference on Human Factors in Computing Systems. New York: ACM, Article 447, 1–18. https://doi.org/10.1145/3411764.3445266.

- Wardle, Claire. 2020. Understanding Information Disorder: Essential Guides. https://firstdraftnews.org/long-form-article/understanding-information-disorder/.

- Wieringa, R. 2014. Design Science Methodology for Information Systems and Software Engineering. Berlin-Heidelberg: Springer-Verlag.