ABSTRACT

Considering the opportunities and challenges raised by generative AI (GenAI) technologies, many universities have been developing policies to guide the integration of GenAI in their academic communities. Focusing on Asian universities, this paper presents a scoping review of policy development regarding GenAI integration. Guided by the theoretical framework of technology integration, this study examined the GenAI policies of 30 universities from the QS top 60 Asian universities with inductive content analysis. The review reveals that these policies prioritized text generation applications, student management, and academic integrity, suggesting an effort to uphold traditional academic values and encourage informed adoption. While the universities were at different stages of understanding and managing GenAI, they tended towards a comprehensive approach in formatting their guidance for internal stakeholders and assessment policies. The review also uncovered gaps in policymaking, such as the exclusion of non-academic staff, limited use of evidence-based practices, international misalignments, and a strong adherence to traditional academic paradigms. This scoping review provides a comprehensive and multifaceted overview of policy development in Asian universities, laying the groundwork for global discussions about the role of GenAI in higher education.

1. Introduction

Generative AI (GenAI) is a type of artificial intelligence (AI) technology that creates various forms of new content (Baidoo-Anu & Ansah, 2023). GenAI is believed to significantly impact the higher education (HE) sector, bringing about both opportunities and risks (Chan & Hu, 2023). Considering its far-reaching impacts, universities have been developing policies to guide its integration and address relevant challenges (Michel-Villarreal et al., 2023). A recent survey of over 450 universities worldwide found that fewer than 10% had developed institutional policies or formal guidance on GenAI integration (UNESCO, 2023). While this statistic shows a critical gap in the university governance of GenAI technologies, it also raises questions about what the overall landscape of university policymaking looks like. Understanding these published policies is crucial for identifying best practices and common challenges in GenAI integration, thus informing future policy development and implementation strategies (Chan, 2023).

To fulfil this need, this paper presents a scoping review to examine the existing landscape of university policies regarding GenAI integration. Of particular interest are Asian universities, which present a unique context for observing the institutional response to GenAI (The World Bank, 2023). Through the scoping review, we aim to understand the extent, range, and nature of existing policies and to identify promising practices, gaps, and challenges (Pham et al., 2014). The findings are expected to offer actionable insights into the future directions of GenAI policy development in Asian universities and globally.

2. Literature review

2.1. The integration of GenAI tools in HE

The emergence of ChatGPT in late 2022 marked the beginning of a GenAI era in the field of AI. GenAI is a category of AI models capable of generating new content from a massive volume of training data (Baidoo-Anu & Ansah, 2023). There are multiple types of generative AI models, such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers, each with its unique applications and characteristics. For example, ChatGPT is built upon the GPT (Generative Pre-trained Transformer) architecture, which focuses on natural language processing (NLP) and the generation of coherent and contextually relevant texts (Heidari et al., 2024). Other applications of GenAI include image generation (e.g. Midjourney), music creation, video generation, and simulation.

The unique affordances of GenAI technologies have presented new opportunities and challenges for HE (Dai, Liu, et al., 2023; Fauzi et al., 2023). Education scholars argue that GenAI integration is expected to revolutionize teaching, learning, research, and administration processes, as well as the overall approach to education (Imran & Almusharraf, 2023; Papyshev, 2024; Yang, 2023). For example, GenAI may enhance students’ self-directed and self-regulated learning by providing tailored explanations, feedback, and support (Fauzi et al., 2023). It may also act as a writing assistant, aiding with language editing and idea generation (Imran & Almusharraf, 2023; Li et al., 2024). However, this potential has also led to wide concerns and debates about its risks and ethical implications, such as plagiarism, academic integrity, and the dilution of original thought (Baidoo-Anu & Ansah, 2023; Chan & Hu, 2023). Given the double-edged nature of GenAI technologies, educators and HE institutions call for new perspectives, practices, and policies to prepare relevant stakeholders for the incoming changes and challenges (Chan, 2023; UNESCO, 2023).

2.2. Institutional responses and policymaking on GenAI integration

The new opportunities and challenges brought about by GenAI require HE institutions to develop respective policies for guidance, administration, and regulation (UNESCO, 2023). For example, Sciences Po in France and RV University, Bengaluru in India, directly banned ChatGPT in early 2023 as a temporary solution to address its challenges (Castillo, 2023). In contrast, universities like ETH Zürich and University College London (UCL) adopted a more proactive approach to guide the effective and responsible use of GenAI among students and faculty (ETH Zürich, 2024; UCL, n.d.). Given the diverse stances of university policies, researchers have examined the status quo of policymaking from multiple perspectives, seeking to identify patterns, challenges, and best practices. For instance, Luo (2024), by reviewing GenAI policies at 20 world-leading universities, found that the primary problem represented in the policies was the originality of student work. Moore and Lookadoo (2024) examined 102 AI-related course syllabi and identified different sentiments and common practices among the syllabi (e.g. academic dishonesty and consequences). Moorhouse et al. (2023), by examining the assessment guidelines of the world’s 50 top-ranking universities, identified common emphases on academic integrity, assessment design, and communication with students.

While these studies offer valuable findings about the existing GenAI policies, they often remain limited in scope, focusing primarily on specific aspects such as assessment and academic integrity (Malik et al., 2023; Perera & Lankathilaka, 2023). Such a specific focus does not provide a comprehensive understanding of the broader technological integration and administrative strategies necessary for effectively incorporating GenAI in higher education. Moreover, the analyses tend to be broad, encompassing a wide range of institutions worldwide without delving into the regional nuances that significantly influence policy development and implementation (Arbo & Benneworth, 2007). Given this context, Asian universities are of particular interest. Asian countries have invested heavily in AI and are home to leading AI companies and research universities (The World Bank, 2023). Therefore, a focused examination targeting Asian universities could uncover the unique status and trends regarding GenAI integration in this region, providing more relevant and actionable insights for policy development.

In examining the integration of digital technologies in educational settings, a variety of theoretical frameworks have been proposed, such as the SAMR (Substitution, Augmentation, Modification and Redefinition) and ADDIE (Analyse, Design, Develop, Implement, and Evaluate) models (Hamilton et al., 2016; Shibley et al., 2011). While these models focus on instructional design and classroom implementation, the present study requires a broader institutional perspective at the level of university policies. As such, we adopt the theoretical framework of technology integration by Lim et al. (2019). This framework categorizes the institutional strategic planning of technology integration in HE into multiple dimensions, including visions and narratives of policy alignment, infrastructure and facilities, stakeholder engagement, and others. This comprehensive approach allows for a more holistic understanding of how universities can effectively integrate GenAI at the institutional level. In alignment with the objectives of this scoping review, this multifaceted framework can be synthesized into five aspects:

Vision, narrative, and goal. They involve the development of visions and goals to guide the overall integration of GenAI tools in the university and a narrative to justify policymaking efforts. This aspect serves as a foundational guide to all other aspects of the plan.

Technology-centric support. It refers to the material tool and digital infrastructure required for GenAI integration. This includes hardware (e.g. networking equipment and databases), software (e.g. servers and educational apps), facilities (e.g. computer labs and internet gateways), and ongoing support and maintenance.

Human-centric support. It refers to the support provided to the individuals within the university – instructors and students – in adapting to and leveraging technology. This includes professional development programmes, student training resources, and efforts for creating a culture that embraces change and innovation.

Curriculum. It is about teaching, learning, and assessment activities. It involves updating and designing curricula, adapting teaching and assessment methods, and redefining learning outcomes for students, in alignment with the changing expectations in an increasingly AI-driven world.

Stakeholder engagement and partnership. It refers to the involvement of and collaboration with internal and external stakeholders. Internal stakeholders include administrators, staff, students, and others, and external stakeholders can be technology providers, industry partners, and the broader community. Effective stakeholder engagement often begins by understanding stakeholders’ needs and perspectives and incorporating them into the planning and implementation process.

The framework proposed by Lim et al. (2019) provides a comprehensive lens to examine university policymaking in GenAI integration holistically. Meanwhile, GenAI integration is still an emerging phenomenon with many undefined agendas in most universities. For example, Chiu (2024) found that many universities, though recognizing the importance of AI-related training for students and staff, were still at an early stage of envisioning and planning such curriculum resources. Therefore, in analysing the university policies, we focus on immediate, available actions (e.g. assessment policies and usage guidance) over endeavours that require a long time of preparation (e.g. curriculum changes). Informed by Lim et al.’s (2019) framework and relevant literature, we propose five research questions:

RQ1. What narratives and visions on GenAI are presented in the university policies?

RQ2. What GenAI tools and technical supports are targeted in the policies?

RQ3. What human-related support has been provided in the policies?

RQ4. How is the curriculum assessment defined in the policies?

RQ5. What stakeholders are considered in the policy documents? What guidance has been provided for these stakeholders in the policies?

3. Method

Considering that GenAI is a newly emerging phenomenon, we adopted a scoping review method that allows for an exploratory and flexible examination, without being constrained by the rigid inclusion criteria and methodological limitations of systematic reviews (Arksey & O’Malley, 2005). Specifically, we followed the PRISMA-ScR (Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews) protocol to guide the review process (Tricco et al., 2018).

We chose the top 60 Asian universities in the 2024 QS World University Rankings (QS, 2023) as the pool for policy search, since these universities are academic leaders in the region. For each of these 60 universities, we started by searching through their official websites with the terms ‘Generative AI’, ‘AI’, ‘Artificial intelligence’, or ‘ChatGPT’ in English and the official languages of its country/region. Then, we used the Google search engine to search each university with key search terms – (‘Generative AI’ OR ‘AI’ OR ‘ChatGPT’) AND (‘policy’ OR ‘guideline’ OR ‘announcement’ OR ‘notice’ OR ‘principle’) AND (University Name) – in English and the official languages of their countries/regions, to further exhaust the search results and enhance credibility. This search string was designed for broad coverage: it could identify AI-specific policies as well as general digital technology policies, as long as AI was mentioned. In searching and reviewing the university documents, we developed inclusion and exclusion criteria to guide the decision-making, as specified in Table 1.
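The Boolean search string described above can be assembled programmatically for each university. The following is a minimal sketch, not the authors' actual tooling: the function names, the use of double quotes for exact-phrase matching, and the placeholder university name are all assumptions made for illustration. Translated term lists for other official languages would need to be supplied by hand.

```python
from typing import Sequence

# Term groups taken from the search string reported in the text.
TOOL_TERMS = ("Generative AI", "AI", "ChatGPT")
DOC_TERMS = ("policy", "guideline", "announcement", "notice", "principle")


def or_group(terms: Sequence[str]) -> str:
    """Join terms into a parenthesised OR group, quoting each term."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(university: str,
                tool_terms: Sequence[str] = TOOL_TERMS,
                doc_terms: Sequence[str] = DOC_TERMS) -> str:
    """Compose (tool terms) AND (document terms) AND "university".

    Quoting the university name is an assumption of this sketch; the
    source only gives "(University Name)" as a placeholder.
    """
    return f'{or_group(tool_terms)} AND {or_group(doc_terms)} AND "{university}"'


if __name__ == "__main__":
    # "Example University" is a hypothetical placeholder, not a sampled institution.
    print(build_query("Example University"))
```

Passing translated tuples as `tool_terms` and `doc_terms` would produce the corresponding query in another language, which mirrors the bilingual search described in the text.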

Table 1. Inclusion and exclusion criteria.

The accompanying figure illustrates the search and selection process as guided by the PRISMA-ScR protocol. From this process, we identified 30 publicly available policy documents for the scoping review. The basic information and characteristics of the selected policy documents are summarized in Appendix 1.

We adopted the inductive content analysis (ICA) method to systematically explore, interpret, and compare these 30 policy documents to answer the RQs (Armat et al., 2018). While ICA includes inductive coding as a critical step within its analytical framework, it represents a holistic process of qualitative data analysis from data collection to interpretation (Vears & Gillam, 2022). By treating these policy documents as texts, ICA allowed us to identify emerging patterns without being constrained by preconceived categories. The analysis started with a coding exercise, in which 15 documents (50% of the data) were randomly selected. Two researchers (the first and second authors) independently conducted open coding, in which each researcher iteratively read a document line by line to generate initial codes that characterized key ideas and practices regarding GenAI integration (Glaser & Strauss, 2017). Meanwhile, theoretical memos were taken to record the researchers’ ongoing thoughts about and reflections on emerging patterns or questions (Strauss & Corbin, 1994). Following this method, more documents were analysed iteratively to modify or expand existing codes to accommodate supporting or discrepant evidence, or to add codes for newly observed patterns. After the initial reading and coding, the two researchers met with the whole research team to compare the codes and collaboratively developed a coding scheme through discussion and negotiation. Using this agreed-upon scheme, the two researchers then proceeded to (re)code all the documents.

For each RQ, the researchers followed the above ICA method to inductively develop the codes that categorized a certain dimension of policy development. Appendix 2 exemplifies the generated codes regarding RQ1. For the coding, the overall inter-rater agreement was approximately 87%, and any disagreement was resolved through collaborative discussion. After the coding, the frequency of codes across documents was quantified using descriptive statistics for each RQ (Kaur et al., 2018). One exception was RQ5, which asked about the categories of stakeholders and the content of guidance issued to each stakeholder. The latter half of RQ5 required a synthesis-oriented approach to analyse and summarize how each stakeholder was addressed in the documents. For each identified stakeholder, this involved extracting the specific guidance provided, categorizing these recommendations, and comparing the approaches across different universities. This comprehensive synthesis provided a nuanced understanding of stakeholder engagement and support in GenAI integration.
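The two quantitative steps mentioned above, inter-rater agreement and code frequency across documents, can be illustrated with a short sketch. The text does not state which agreement statistic was used, so simple percent agreement is assumed here; the function names, document identifiers, and code labels are hypothetical and do not reproduce the study's data.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Set


def percent_agreement(coder_a: Sequence[Hashable],
                      coder_b: Sequence[Hashable]) -> float:
    """Share of coding units to which both coders assigned the same code.

    Assumption: simple percent agreement, not a chance-corrected
    statistic such as Cohen's kappa.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty set of units.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def code_frequency(doc_codes: Dict[str, Set[str]]) -> Counter:
    """Count, for each code, the number of documents in which it appears."""
    counts: Counter = Counter()
    for codes in doc_codes.values():
        counts.update(codes)  # a set, so each code counts once per document
    return counts


if __name__ == "__main__":
    # Hypothetical labels for ten coding units (illustration only).
    a = ["p", "p", "q", "r", "p", "q", "q", "r", "p", "p"]
    b = ["p", "p", "q", "r", "p", "q", "r", "r", "p", "p"]
    print(f"agreement = {percent_agreement(a, b):.0%}")

    # Hypothetical documents and codes (illustration only).
    docs = {"doc01": {"principle", "guideline"}, "doc02": {"principle"}}
    print(code_frequency(docs).most_common())
```

Percent agreement is easy to interpret but does not correct for chance; a chance-corrected measure would be a reasonable alternative if the coding categories are few.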

4. Key findings

In addressing the research questions, the findings are presented progressively from the big picture, including the narrative, focal tools, and content of support, to more specific guidance on assessment and stakeholders.

4.1. Narratives of GenAI

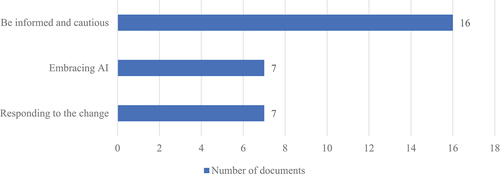

Almost all the reviewed policies began with a narrative or story to explain their specific responses to GenAI. The narrative connected the rationale, policy, and strategy. From the analysis, we identified three major types of narratives in the university policies and their distribution, as shown in the accompanying figure. These three narratives, while interconnected, place distinct emphases on university responses to GenAI.

4.1.1. Be informed and cautious

This was the dominant narrative, which emphasized the dual-edged nature of GenAI tools (e.g. Keio, NYCU, and UI). This narrative acknowledged GenAI as a transformative force that might reshape modern society and redefine teaching and assessment practices (CU and UNIST). However, it also warned against adopting it wholesale without careful consideration (UM and UPM). In this narrative, GenAI was referred to as ‘inevitable’, ‘not simply forbidden’, or ‘not uniformly prohibited’, with emphasis on its ‘double-edged’ nature. Consequently, it stressed the importance of educators and students being well-informed about and prepared for the capabilities and limitations of GenAI (SNU, UTokyo, CUHK, and Waseda). Thus, this narrative advocated for a balanced approach, actively exploring the potential of AI while maintaining continuous dialogue and reflection on its use, precautions, and long-term implications (KoreaU, KyotoU, KyushuU, NagoyaU, and OsakaU, 2023).

4.1.2. Embracing AI

It encapsulated a proactive and forward-thinking approach to integrating GenAI into the academic realm (e.g. HKUST, PolyU, and NCKU). This narrative was characterized by the keywords ‘embrace’, ‘open and forward-looking stance’, and ‘game-changer’. Essentially, this narrative conveyed the idea that GenAI was an inevitable future trend (NTU Taiwan and UMac) and, as a result, advocated for AI literacy/competence as a fundamental competency for both educators and learners (HKU, HKUST, and SKKU). This narrative went beyond simply adopting GenAI tools or accepting their presence; it was about deeply understanding them and actively integrating them into the fabric of teaching, learning and assessment (HKUST, SKKU, and UMac). By embracing AI, universities were actively equipping their communities with the necessary skills and foresight to contribute significantly to an AI-integrated future (NTU Taiwan).

4.1.3. Responding to the change

It was a realistic approach to coping with GenAI tools (e.g. HokkaidoU, NTHU, and TohokuU). Similar to the first two narratives, this one acknowledged the swift changes brought about by GenAI advancements. However, it differed in its emphasis on the responsive, pragmatic adjustments required in the face of AI’s rapid development (CityU, NTU Singapore, and Tokyo Tech). Rather than focusing on preparing students for an AI-infused world, this narrative emphasized managing the unfolding reality and relevant consequences of technological development. It acknowledged the unpredictable nature of GenAI’s impact, suggesting that institutions must remain agile and open to continuous change instead of focusing solely on future-oriented strategies.

The three narratives represent a spectrum of institutional stances towards GenAI in Asian universities, ranging from responsive to proactive stances. Collectively, these narratives encapsulate a dynamic and multifaceted dialogue within universities, each contributing to an approach that embraces AI’s potential while navigating its complexities with thoughtful caution.

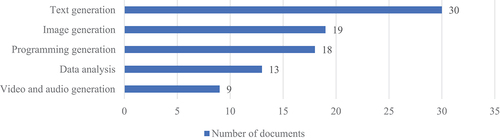

4.2. Focal GenAI tools

The analysis shows that current university policies focused on GenAI tools developed by commercial tech companies, such as OpenAI’s ChatGPT or Google’s BERT, rather than tools developed by universities or educational organizations for educational purposes. Meanwhile, GenAI encompasses a range of applications used to generate text, images, code, and other content. Different universities mentioned various applications in their policies, indicating their respective interests and concerns. The accompanying figure shows the types of GenAI applications mentioned in the reviewed policies. All the universities sampled mentioned text generation, and some (n = 11) even equated GenAI with text generators. In addition to text generation, many universities also included image and code generation, reflecting the recognition of diverse GenAI applications.

Despite the diversity of the mentioned GenAI applications, further analysis revealed a focus on text generators in the policymaking of these universities. For example, universities such as NTU Taiwan and NUS placed significant emphasis on the use of GenAI tools in coursework and essays. This focus may be attributed to the direct and immediate impact that text generators have on essay assignments and academic writing, both of which are central to university teaching, learning, and research. This overemphasis on text generation also indicates a lag in addressing the comprehensive range of challenges and opportunities presented by other forms of GenAI.

4.3. Content of support

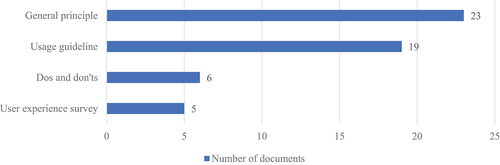

Besides specifying the material tools, the universities developed and presented a wide range of content aimed at supporting the effective integration of GenAI tools. This support content came in different forms, including general principles, usage guidelines, and dos and don’ts. Besides these three types of support-oriented content, some policies also included a user experience survey, through which they justified their efforts in designing the respective support. Each policy document incorporated one or more of these four types. The accompanying figure shows the frequency of each type within the 30 university policies.

4.3.1. General principle

It was the foundational statement that articulated the core values and ethical standards regarding the use of GenAI (e.g. CityU and NTU Singapore). It served as an overarching framework for interpreting and applying more detailed regulations, guiding decision-making in situations where specific rules may not apply. The principle was often aligned with the mission and vision of the universities.

4.3.2. Dos and don’ts

This category included explicit and clear statements regarding what behaviours, actions, or practices were acceptable (‘dos’) and prohibited (‘don’ts’). It served as a quick reference to guide user behaviours without ambiguity and provide clear-cut rules for the implementation. It was typically more specific than the general principle and could be easily communicated.

4.3.3. Usage guideline

It encompassed detailed instructions on how to use tools, systems, or processes (e.g. NTU Taiwan and UPM). The guideline was a practical, step-by-step directive that facilitated appropriate and responsible usage. It often included examples, scenarios, or case studies to illustrate proper use, and some included troubleshooting advice or pointers to additional resources for support. This guideline offered clarity on the application of the policy in day-to-day operations and reduced the likelihood of misuse or errors by providing clear instructions.

4.3.4. User experience survey

The survey was a systematic collection and analysis of data obtained from internal stakeholders regarding their experiences of and perspectives on GenAI use. It encompassed quantitative metrics, such as rating scales, and qualitative feedback, providing a comprehensive understanding of the user experience. The results of the survey were crucial for ensuring alignment between policymaking and stakeholders’ needs.

The accompanying figure shows that most of the reviewed policies (n = 23) involved general principles, indicating a strong emphasis on establishing an ethical framework as a foundation for GenAI use (NUS and PolyU). Further analysis shows that the most common form of policy organization was a hybrid mode (n = 17), where general principles were combined with dos and don’ts and/or usage guidelines (e.g. NUS and UTokyo). Additionally, eight policies included only general principles (e.g. HokkaidoU and NagoyaU), while five policies solely incorporated usage guidelines (e.g. UI and UPM). The popularity of the hybrid mode suggests a preference for a comprehensive approach – including the ethical and practical aspects – to policy formulation among Asian universities.

Moreover, five documents included key findings from user experience surveys in their universities, where the opinions of internal stakeholders were referenced in their policymaking (e.g. HKUST and UNIST). Utilizing survey results implies a data-driven approach to policy formulation. This method helped ensure that policies were not merely theoretical or based on assumptions, but were grounded in the actual experiences and needs of the university community.

4.4. Assessment policy

Assessment policy emerged as a critical area of policy content specified for the internal stakeholders, considering the multifaceted implications of GenAI on academic integrity. Within the spectrum of assessment policies, the review identified two types of strategies:

4.4.1. University-level policy

It was a framework for assessment practices, outlining fundamental principles that ensure fairness, transparency, and academic integrity across all departments (e.g. HKU and NUS). This type of policy served as the bedrock upon which all assessment strategies were built, ensuring a cohesive standard of evaluation while accommodating the diverse spectrum of academic disciplines.

4.4.2. Course-specific policy

This framework delegated a degree of autonomy to individual instructors, granting them the discretion to craft their course-specific assessment policies (e.g. CityU, UTokyo, and NTU Taiwan). This policy represents a decentralized approach, recognizing the unique nature of each subject and the pedagogical expertise of faculty members. This approach allowed them to design assessments that aligned closely with the learning objectives, content, and pedagogical strategies of their courses.

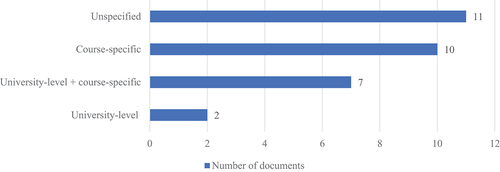

The accompanying figure shows the distribution of assessment policies in the reviewed GenAI policies. More than half of the universities (n = 17) granted a degree of autonomy to course instructors in designing the course-level assessment scheme. Of these 17 universities, 10 assigned full autonomy to course instructors (e.g. CityU and UTokyo), and seven combined course-level with university-level policies (e.g. CUHK and HKUST). By granting autonomy to instructors, these universities acknowledged the diverse nature of academic disciplines and the varying needs that come with them. They placed significant trust in their faculty’s expertise to develop subject-specific assessment methods (e.g. HKUST).

Meanwhile, two universities (HKU and NUS) retained a centralized approach, relying on university-level policies. This approach suggests a preference for a uniform standard across all departments and disciplines. The standardized protocol may simplify administrative processes and ensure a consistent application of assessment criteria. Besides, 11 universities (e.g. NagoyaU and UM) did not specify their positions regarding assessment policies. This lack of specification could indicate that these universities were still in the process of developing their policies or that they preferred to maintain flexibility.

4.5. Involved stakeholders and respective guidance

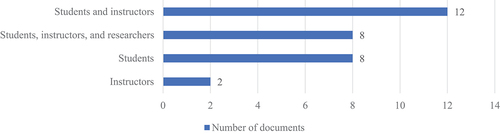

The review shows that all the reviewed policies targeted only internal stakeholders, aligning with their current operational goals. The accompanying figure shows the distribution of targeted stakeholders. Of the 30 university policies, 12 targeted students and instructors (e.g. KyushuU and NCKU), eight targeted students, instructors, and researchers (e.g. NTU Singapore and UNIST), eight targeted only students (e.g. CUHK and NUS), and two targeted only instructors (UM and UPM). The comparison reveals that universities’ primary concern was student use of GenAI tools, reflecting the broad concerns about academic integrity and corresponding efforts to establish clear, ethical guidelines. Meanwhile, the inclusion of instructors indicates recognition of GenAI’s impact on teaching and assessment practices, suggesting that instructors were expected to take relevant actions to address the challenges.
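The four audience groups reported above determine how many policies address each stakeholder type, and the arithmetic can be checked with a short tally. This is a minimal sketch using only the counts stated in the text; the variable and function names are hypothetical.

```python
# Audience combinations and policy counts as reported in the text.
GROUPS = {
    frozenset({"students", "instructors"}): 12,
    frozenset({"students", "instructors", "researchers"}): 8,
    frozenset({"students"}): 8,
    frozenset({"instructors"}): 2,
}

TOTAL_POLICIES = sum(GROUPS.values())


def policies_addressing(stakeholder: str, groups=GROUPS) -> int:
    """Number of policies whose target audience includes the stakeholder."""
    return sum(n for audience, n in groups.items() if stakeholder in audience)


def share(count: int, total: int) -> float:
    """Percentage of the total, rounded to two decimal places."""
    return round(100 * count / total, 2)


if __name__ == "__main__":
    for who in ("students", "instructors", "researchers"):
        n = policies_addressing(who)
        print(f"{who}: {n} of {TOTAL_POLICIES} ({share(n, TOTAL_POLICIES)}%)")
```

Under these counts, the tally gives 28 policies addressing students and 22 addressing instructors, which agrees with the totals reported in Sections 4.5.1 and 4.5.2.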

4.5.1. Guidance for students

The review shows that students were a major target audience in the university policies, with 28 out of 30 policies containing specific content for them. This policy content primarily addressed their GenAI use in coursework. The relevant content was reviewed and synthesized under three topics.

4.5.1.1. Protocol of use

The protocol set formal guidelines or rules for the appropriate, ethical use of GenAI tools. About half of the policies included these rules: to acknowledge and make transparent the GenAI use in the assignment (e.g. CityU and NUS), to actively communicate with course instructors for GenAI use permission (e.g. CUHK and Tokyo Tech), and to be cautious about data security and avoid plagiarism (e.g. TohokuU and Waseda). These rules reflect a common emphasis on student accountability, with students expected to understand and adhere to academic and technological codes of conduct (NCKU and NYCU). Additionally, most universities categorized the act of asking GenAI to do the assignment or submitting GenAI outputs in assignments as academic dishonesty (PolyU and UTokyo). Though this kind of misconduct was a recent phenomenon, these universities referred to existing, conventional protocols in handling the misconduct cases. The reliance on established principles of academic integrity suggests consistent ethical considerations, such as originality, authenticity, and fairness, in line with traditional academic values (HKUST and KyushuU).

4.5.1.2. Recommended usage

Many universities directly suggested that students adopt GenAI as a learning tool to assist their study and provided recommendations on its usage (e.g. UNIST and Waseda). The most recommended usage was to leverage GenAI tools, especially ChatGPT, as a personal tutor capable of explaining complex concepts, clarifying confusion, and providing feedback (NagoyaU and UMac). Other frequently suggested usages included ideation and brainstorming, language editing and proofreading, and practising thinking skills (HKUST and NTHU). As indicated by these recommendations, these universities were not merely passively observing but actively guiding students on how to integrate these tools into their learning processes (KoreaU). They recognized GenAI as a legitimate learning tool and acknowledged its potential to complement traditional teaching methods.

4.5.1.3. Student competency

While recognizing the potential of GenAI, these university policies delineated certain competencies or skills that students would need to develop to harness the potential. More than two-thirds of the reviewed policies explicitly stated relevant expectations (e.g. KyotoU and NUS). The most prominent expectation for students was a general understanding of the pros and cons of GenAI, which was seen as a prerequisite for students’ informed engagement with GenAI tools (HKUST and UI). Another salient expectation was critical thinking. This skill was highlighted largely because GenAI outputs often included less accurate, current, and reliable information (OsakaU and PolyU). As such, students were encouraged to critically evaluate the generated content, verify the content with external sources, and interpret the content in a reflective way (HKUST). Besides, AI literacy was included in some policies as a more comprehensive framework for student knowledge, skill, and ethical awareness (HKU and UMac). Additionally, some policies mentioned the importance of appropriate prompts and questions in eliciting quality outputs from GenAI tools and, therefore, encouraged students to develop prompting skills and refine their prompts (CUHK and NTU Taiwan).

4.5.2. Guidance for instructors

The review reveals that 22 out of 30 policies (73.33%) included specific guidance and information for instructors. This content focused on the use of GenAI tools by instructors in teaching, assessing, and supporting students. It usually included a general protocol for using GenAI tools and offered specific suggestions on how to adjust assessment and teaching methods in response to these tools.

4.5.2.1. Protocol of use

Regarding instructors’ use of GenAI tools, a common concern was data privacy and cyber security (NYCU and TohokuU). Many universities warned that existing GenAI tools were often open, commercial platforms, where the inputs of users (instructors) could be stored for model training and analytics (e.g. HokkaidoU). In particular, some policies explicitly forbade instructors from entering any personal or private student information into GenAI platforms (e.g. TohokuU and UNIST). These rules seem to hold instructors accountable for protecting sensitive and personal information and intellectual property rights (KyushuU). This protocol represents an attempt to balance the potential of GenAI in education with the need to protect the academic community from vulnerabilities associated with these digital tools.

4.5.2.2. Adjusting assessment practices in response to GenAI tools

Given the widespread adoption of GenAI tools among students, it was suggested that instructors adjust their assessment methods and practices accordingly, especially for essay-type assignments (e.g. KyotoU, NYCU, and SKKU). As such, instructors were encouraged to overhaul traditional assessment practices by adopting alternative methods and diverse modalities such as oral exams, live demonstrations, and peer assessments, which were less susceptible to AI manipulation (HKU, UNIST, and UPM). When designing assignments, priority was placed on critical thinking and engagement with the material (HKUST). There was a clear preference for assignments that required higher-order thinking, such as analysis, evaluation, and personal interpretation (NTHU and UPM). Meanwhile, instructors were advised to broaden the assessment criteria to consider students’ progress or cumulative results over time, instead of focusing solely on a single final assignment (NTU Taiwan). These suggestions collectively indicate a shift towards more diverse, engaging, and process-oriented assessment practices in universities.

4.5.2.3. Leveraging GenAI tools to enhance teaching

Instructors were not only adapting to changes brought about by GenAI tools but, more importantly, were encouraged to actively leverage GenAI to enhance their teaching practices (e.g. HKUST and SNU). Some universities encouraged instructors to utilize GenAI tools to craft and curate teaching materials, construct lesson plans, and generate comprehensive explanations, examples, questions, and assignments tailored to their students (NTHU and NTU Taiwan). Instructors were also prompted to rethink the unique, irreplaceable value of classroom teaching and instructor-student interactions (HKU and UMac). As such, more interactive, collaborative, and personalized activities for students were recommended. In particular, as the education landscape changes, instructors were advised to solicit and incorporate student opinions into their classroom activities, ensuring the responsiveness and adaptiveness of teaching (NYCU and UPM). Moreover, instructors could incorporate AI content into their courses for interdisciplinary learning, as an effort to prepare students to adopt AI tools in their professional practices (HKUST and UNIST).

4.5.3. Guidance for researchers

The review shows that eight out of 30 policies included specific guidance and instructions for researchers conducting academic or scientific research, who could be either postgraduate students or staff (e.g. NagoyaU and NTU Singapore). In comparison with the detailed instructions for students and instructors, the guidance for researchers was usually more concise, focusing primarily on the protocol of use.

4.5.3.1. Protocol of use

In these university policies, researchers were held accountable for fully comprehending and managing the GenAI tools they used, while taking full ownership of their work’s integrity (e.g. CU and NYCU). In particular, the importance of research ethics in complying with data privacy and protection was highlighted (NTU Singapore and TohokuU). The policies outlined a strict prohibition on uploading confidential or personal information into GenAI systems without consent, prioritizing the ethical handling of research data (CU and TohokuU). These regulations reflect a broader institutional commitment to the principles of confidentiality, accuracy, originality, and fairness in research (UNIST). In addition, the policies gently reminded researchers about the dual nature of GenAI tools, such as the need to balance research efficiency and originality and to avoid over-reliance on GenAI (Waseda). Meanwhile, researchers were encouraged to critically assess the extent to which they incorporated GenAI tools into their research work, ensuring that these tools served as an aid rather than a substitute for their intellectual contribution (NYCU).

5. Conclusion and discussion

This scoping review of GenAI policies across 30 Asian universities offers an initial foray into the region’s institutional responses to emerging technologies. The review shows that, while the universities were at different stages of understanding, managing, and integrating GenAI, they demonstrated some promising practices and gaps in their policy development. Based on the findings, we discuss the implications for future policymaking and education policy research.

5.1. Implications for HE policymaking

5.1.1. Promising practices in policymaking

The research findings show that universities commonly set up regulations and offer specific guidance to different stakeholders in university-level policies. Based on the above findings, we identify the following promising practices:

5.1.1.1. Recognition of GenAI’s double-edged nature

While the potential of GenAI was widely recognized, there was a recurring theme of caution against its downsides and misuse, such as incorrect content and over-reliance on GenAI. This shows a cautiously optimistic approach to GenAI among Asian universities, demonstrating an effort to balance productivity and originality. The goal was to harness the benefits of these tools while safeguarding academic values and ethical standards.

5.1.1.2. Adherence to traditional academic values

The predominance of policies targeting students and text generation indicates a primary concern about GenAI’s potential impact on the authenticity and originality of academic work. The fact that many universities categorized undisclosed AI-generated assignments as academic dishonesty highlights a dedication to preserving the traditional values of originality and authenticity. Similarly, the instructions given to instructors to ensure data privacy demonstrate a commitment to the fundamental principle of confidentiality. These measures show universities’ efforts to uphold essential scholarly values in the digital age. By reinforcing these values, universities strive to balance the benefits of GenAI with the intellectual rigour central to academic excellence.

5.1.1.3. Comprehensive policy formulation

The integration of various content organization methods and the inclusion of multiple internal stakeholders reveal a notable trend towards a comprehensive policy formulation. These documents include overarching principles that establish the ethical frameworks for the entire university as well as nuanced protocols for specific groups within the academic community. This granularity in policy design demonstrates an awareness that the impact of GenAI varies across different academic roles and activities and that a one-size-fits-all approach is insufficient to address its multifaceted implications.

5.1.2. Gaps and directions for future policymaking

By juxtaposing the research findings with the theoretical framework by Lim et al. (2019), we further identify gaps in the integration and coordination among various sections and stakeholders, pointing out directions for future policymaking.

5.1.2.1. Inclusion of non-academic staff for inclusive policy development

None of the policies specifically targeted non-academic staff, such as administrative staff, IT personnel, and other employees. This absence might be due to the initial focus of GenAI policies on core functions of the HE institutions (teaching, assessment, and research), where the impact of GenAI is perceived to be most direct and significant (Dai et al., 2023). However, non-academic staff play crucial roles in executing, maintaining, and troubleshooting these systems, which is essential for the smooth integration of GenAI across university operations (Kopcha, 2010). Including non-academic staff in the policies would ensure a more comprehensive and well-rounded approach and foster an inclusive environment where all members are prepared and empowered to engage with GenAI responsibly.

5.1.2.2. Redefining academic integrity in the age of GenAI collaboration

As GenAI becomes more sophisticated, it has the potential to fundamentally redefine authorship and originality (Luo, 2024). GenAI tools like ChatGPT are increasingly used by researchers and students in academic writing, which is believed to disrupt and transform scientific publishing (Conroy, 2023). The collaborative interaction between humans and GenAI is blurring the lines of intellectual ownership, raising complex questions about creativity and contribution in the digital age. The current emphasis on disclosure in GenAI-mediated writing may not be sufficient, given the rise in misuse cases. Thus, policies must evolve to reflect these complexities by establishing criteria for acceptable AI involvement and redefining academic integrity.

5.1.2.3. Evidence-based policymaking

A limited number of universities conducted systematic, formal surveys before policymaking, while others relied more on consultation and discussions with selected participants. This highlights a gap in the data-driven, evidence-based approach to policymaking. Considering the novelty and rapid evolution of AI technology, stakeholders’ engagement with GenAI tools might change over time. This dynamic nature highlights the urgent need to systematically document the evolving perceptions, expectations, and struggles of HE stakeholders, and echoes the call for an evidence-based approach to policymaking (Sanderson, 2002). Universities should adopt a more empirical approach, leveraging data-driven insights to inform their regulatory frameworks. Establishing regular feedback mechanisms, such as surveys, focus groups, and open forums, can capture the perspectives and experiences of their communities.

5.1.2.4. Continuous professional development and curriculum reform

Providing instructions to students, instructors, and researchers is a critical step but may fall short of fully equipping the academic community to navigate the complexities and ethical considerations of GenAI integration. Effective communication and ongoing support mechanisms are essential to address these uncertainties and facilitate a smoother transition towards GenAI integration (Lim et al., 2019). Future directions should include implementing AI literacy curricula for students and professional development programmes and training modules for instructors and researchers (Cha et al., 2024).

5.1.2.5. International collaboration and knowledge transfer

The variation in the stages of policy development across Asian universities highlights a potential misalignment in academic standards. These disparities can pose challenges, especially in collaborative research, joint academic programmes, and international student exchange. Scientific publishing and knowledge generation are inherently global enterprises, requiring international collaboration in policymaking to ensure cohesive standards, facilitate shared advancements, and address cross-border challenges effectively (Vrontis & Christofi, 2021). By sharing resources, expertise, and best practices, universities can collectively tackle the challenges posed by GenAI. Such joint efforts are likely to result in comprehensive policies that meet individual institutional needs and advance the overall management of GenAI in the global academic community.

5.2. Implications for research on university policies on GenAI

In terms of theoretical implications, this study enriches our understanding of HE policymaking regarding GenAI. In accordance with previous studies, this study confirms the emphasis on academic integrity and student management in Asian universities (Luo, 2024; Moorhouse et al., 2023; Moore & Lookadoo, 2024). However, this emphasis was not delivered merely via assessment policies; it was backed by a comprehensive set of policymaking efforts, including narrative framing, content organization, and stakeholder involvement. In this regard, this study, compared with previous studies, offers a more comprehensive overview of the multifaceted approach Asian universities are taking towards GenAI integration. In particular, rather than being limited to singular aspects like assessment, this study expands the scope of observation by situating GenAI integration in a broad context of institutional planning and strategic development. This approach provides a more holistic view of institutional responses to GenAI, while echoing a long-standing call for holistic policymaking in education (Ben-Peretz, 2009; Craig et al., 2023).

Additionally, the study’s focus on Asian universities adds a regional dimension to the existing body of literature, shedding light on how cultural, social, and technological contexts shape policy development. Meanwhile, within the Asian universities, the diversity in institutional stances and practices was still salient. Such diversity might have multiple causes, such as the university’s history, available resources, and leadership styles (Altbach, 2004). In particular, a cluster effect appears to emerge, whereby universities in the same region tend to exhibit similar patterns in the development, or lack thereof, of GenAI policies (Aziz & Norhashim, 2008). For example, within mainland China, there appear to be no universities that have developed relevant policies. Conversely, in other regions like Hong Kong, universities appear to be consistent in embracing GenAI and encouraging informed adoption. This regional clustering indicates the importance of regional contexts in shaping the institutional approach to GenAI, which creates a pattern of policy development or absence thereof within geographic or cultural clusters (Sohn & Kenney, 2007). In this regard, this study can pave the way for comparative studies and contribute to a global understanding of GenAI integration in HE.

6. Limitations

There are some limitations to this scoping review. Firstly, the review was limited to publicly available policy documents, which may not encompass the complete spectrum of strategies and implementations. In particular, some universities might rely on existing, broader digital technology policies to guide GenAI integration, without specifically mentioning GenAI technologies in the documents. Therefore, the findings might not fully capture the intricate and multifaceted approaches institutions adopt towards GenAI. Furthermore, the review’s focus on top-ranking universities introduces a selection bias, potentially overlooking the innovative or unique policies of smaller or less renowned institutions. Another significant limitation is the dynamic nature of GenAI itself, which might lead to ongoing updates to policies. The review, therefore, represents a temporal snapshot, capturing policies at a specific moment. This temporal constraint highlights the necessity for continuous monitoring and updating of policy reviews to keep pace with the fast-evolving field of GenAI and to ensure that they remain relevant to and representative of the current state of affairs in HE institutions.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Yun Dai

Yun Dai is an Assistant Professor at the Department of Curriculum and Instruction, at the Chinese University of Hong Kong.

Sichen Lai

Sichen Lai is a doctoral student at the Department of Curriculum and Instruction, at the Chinese University of Hong Kong.

Cher Ping Lim

Cher Ping Lim is the Chair Professor of Learning Technologies and Innovation at the Education University of Hong Kong.

Ang Liu

Ang Liu is an associate professor of Engineering Design at the School of Mechanical and Manufacturing Engineering, University of New South Wales.

Notes

1. All universities mentioned in this manuscript are referred to by their abbreviations, including Keio, NYCU, and UI. Detailed information and the full names of these universities can be found in Appendix 1. These abbreviations will be used throughout the remainder of this manuscript.

References

- Altbach, P. G. (2004). The past and future of Asian universities: Twenty-first century challenges. In P. G. Altbach & T. Umakoshi (Eds.), Asian universities: Historical perspectives and contemporary challenges (pp. 13–32). Brill.

- Arbo, P., & Benneworth, P. (2007). Understanding the regional contribution of higher education institutions: A literature review. OECD Education Working Papers, No. 9. OECD Publishing. https://doi.org/10.1787/161208155312

- Arksey, H., & O’Malley, L. (2005). Scoping studies: Towards a methodological framework. International Journal of Social Research Methodology, 8(1), 19–32. https://doi.org/10.1080/1364557032000119616

- Armat, M. R., Assarroudi, A., Rad, M., Sharifi, H., & Heydari, A. (2018). Inductive and deductive: Ambiguous labels in qualitative content analysis. The Qualitative Report, 23(1). https://doi.org/10.46743/2160-3715/2018.2872

- Aziz, K. A., & Norhashim, M. (2008). Cluster‐based policy making: Assessing performance and sustaining competitiveness. The Review of Policy Research, 25(4), 349–375. https://doi.org/10.1111/j.1541-1338.2008.00336.x

- Baidoo-Anu, D., & Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. Journal of AI, 7(1), 52–62. https://doi.org/10.61969/jai.1337500

- Ben-Peretz, M. (2009). Policy-making in education: A holistic approach in response to global changes. Rowman & Littlefield Education.

- Castillo, E. (2023, March 27). These schools and colleges have banned chat GPT and similar AI tools. Retrieved April 3, 2024, from https://www.bestcolleges.com/news/schools-colleges-banned-chat-gpt-similar-ai-tools/

- Cha, Y., Dai, Y., Lin, Z., Liu, A., & Lim, C. P. (2024). Empowering university educators to support generative AI-enabled learning: Proposing a competency framework. Procedia CIRP.

- Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. International Journal of Educational Technology in Higher Education, 20(1), 38. https://doi.org/10.1186/s41239-023-00408-3

- Chan, C. K. Y., & Hu, W. (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(1), 43. https://doi.org/10.1186/s41239-023-00411-8

- Chiu, T. K. (2024). Future research recommendations for transforming higher education with generative AI. Computers and Education: Artificial Intelligence, 6, 100197. https://doi.org/10.1016/j.caeai.2023.100197

- Conroy, G. (2023). How ChatGPT and other AI tools could disrupt scientific publishing. Nature, 622(7982), 234–236. https://doi.org/10.1038/d41586-023-03144-w

- Craig, C. J., Flores, M. A., & Orland-Barak, L. (2023). A “life of optimism” in curriculum, teaching, and teacher education: The legacy of Miriam Ben-Peretz. Journal of Curriculum Studies, 55(6), 734–745. https://doi.org/10.1080/00220272.2023.2257259

- Dai, Y., Lai, S., Lim, C. P., & Liu, A. (2023). ChatGPT and its impact on research supervision: Insights from Australian postgraduate research students. Australasian Journal of Educational Technology, 39(4), 74–88. https://doi.org/10.14742/ajet.8843

- Dai, Y., Liu, A., & Lim, C. P. (2023). Reconceptualizing ChatGPT and generative AI as a student-driven innovation in higher education. Procedia CIRP, 119, 84–90. https://doi.org/10.1016/j.procir.2023.05.002

- ETH Zürich. (2024, January 19). ChatGPT. Retrieved April 3, 2024, from https://ethz.ch/en/the-eth-zurich/education/educational-development/ai-in-education/chatgpt.html

- Fauzi, F., Tuhuteru, L., Sampe, F., Ausat, A., & Hatta, H. (2023). Analysing the role of ChatGPT in improving student productivity in higher education. Journal on Education, 5(4), 14886–14891. https://doi.org/10.31004/joe.v5i4.2563

- Glaser, B., & Strauss, A. (2017). Discovery of grounded theory: Strategies for qualitative research. Routledge. https://doi.org/10.4324/9780203793206

- Hamilton, E. R., Rosenberg, J. M., & Akcaoglu, M. (2016). The substitution augmentation modification redefinition (SAMR) model: A critical review and suggestions for its use. TechTrends, 60(5), 433–441. https://doi.org/10.1007/s11528-016-0091-y

- Heidari, A., Navimipour, N. J., Zeadally, S., & Chamola, V. (2024). Everything you wanted to know about ChatGPT: Components, capabilities, applications, and opportunities. Internet Technology Letters, e530. https://doi.org/10.1002/itl2.530

- Imran, M., & Almusharraf, N. (2023). Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Contemporary Educational Technology, 15(4), ep464. https://doi.org/10.30935/cedtech/13605

- Kaur, P., Stoltzfus, J., & Yellapu, V. (2018). Descriptive statistics. International Journal of Academic Medicine, 4(1), 60–63. https://doi.org/10.4103/IJAM.IJAM_7_18

- Kopcha, T. J. (2010). A systems-based approach to technology integration using mentoring and communities of practice. Educational Technology Research & Development, 58(2), 175–190. https://doi.org/10.1007/s11423-008-9095-4

- Li, H., Wang, Y., Luo, S., & Huang, C. (2024). The influence of GenAI on the effectiveness of argumentative writing in higher education: Evidence from a quasi-experimental study in China. Journal of Asian Public Policy, 1–26. https://doi.org/10.1080/17516234.2024.2363128

- Lim, C. P., Wang, T., & Graham, C. (2019). Driving, sustaining and scaling up blended learning practices in higher education institutions: A proposed framework. Innovation and Education, 1(1), 1. https://doi.org/10.1186/s42862-019-0002-0

- Luo, J. (2024). A critical review of GenAI policies in higher education assessment: A call to reconsider the “originality” of students’ work. Assessment & Evaluation in Higher Education, 1–14. https://doi.org/10.1080/02602938.2024.2309963

- Malik, T., Dettmer, S., Hughes, L., & Dwivedi, Y. K. (2023, December). Academia and generative artificial intelligence (GenAI) SWOT analysis-higher education policy implications. International Working Conference on Transfer and Diffusion of IT (pp. 3–16). Springer Nature Switzerland, Cham.

- Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., & Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Education Sciences, 13(9), 856. https://doi.org/10.3390/educsci13090856

- Moore, S., & Lookadoo, K. (2024). Communicating clear guidance: Advice for generative AI policy development in higher education. Business and Professional Communication Quarterly. https://doi.org/10.1177/23294906241254786

- Moorhouse, B. L., Yeo, M. A., & Wan, Y. (2023). Generative AI tools and assessment: Guidelines of the world’s top-ranking universities. Computers and Education Open, 5, 100151. https://doi.org/10.1016/j.caeo.2023.100151

- Papyshev, G. (2024). Situated usage of generative AI in policy education: Implications for teaching, learning, and research. Journal of Asian Public Policy, 1–18. https://doi.org/10.1080/17516234.2024.2370716

- Perera, P., & Lankathilaka, M. (2023). Preparing to revolutionize education with the multi-model GenAI tool Google Gemini? A journey towards effective policy making. Journal of Advances in Education and Philosophy, 7(8), 246–253. https://doi.org/10.36348/jaep.2023.v07i08.001

- Pham, M. T., Rajić, A., Greig, J. D., Sargeant, J. M., Papadopoulos, A., & McEwen, S. A. (2014). A scoping review of scoping reviews: Advancing the approach and enhancing the consistency. Research Synthesis Methods, 5(4), 371–385. https://doi.org/10.1002/jrsm.1123

- QS. (2023). QS world university rankings 2024: Top global universities. Retrieved January 4, 2024, from https://www.topuniversities.com/world-university-rankings?region=Asia

- Sanderson, I. (2002). Evaluation, policy learning and evidence‐based policy making. Public Administration, 80(1), 1–22. https://doi.org/10.1111/1467-9299.00292

- Shibley, I., Amaral, K. E., Shank, J. D., & Shibley, L. R. (2011). Designing a blended course: Using ADDIE to guide instructional design. Journal of College Science Teaching, 40(6). https://www.learntechlib.org/p/111210/

- Sohn, D. W., & Kenney, M. (2007). Universities, clusters, and innovation systems: The case of Seoul, Korea. World Development, 35(6), 991–1004. https://doi.org/10.1016/j.worlddev.2006.05.008

- Strauss, A., & Corbin, J. (1994). Grounded theory methodology: An overview. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 273–285). Sage Publications, Inc.

- Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., & Tunçalp, Ö. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine, 169(7), 467–473. https://doi.org/10.7326/M18-0850

- UCL. (n.d.). Engaging with AI in your education and assessment. Retrieved April 3, 2024, from https://www.ucl.ac.uk/students/exams-and-assessments/assessment-success-guide/engaging-ai-your-education-and-assessment

- UNESCO. (2023, September 6). UNESCO survey: Less than 10% of schools and universities have formal guidance on AI. Retrieved April 3, 2024, from https://www.unesco.org/en/articles/unesco-survey-less-10-schools-and-universities-have-formal-guidance-ai

- Vears, D. F., & Gillam, L. (2022). Inductive content analysis: A guide for beginning qualitative researchers. Focus on Health Professional Education: A Multi-Professional Journal, 23(1), 111–127. https://doi.org/10.11157/fohpe.v23i1.544

- Vrontis, D., & Christofi, M. (2021). R&D internationalization and innovation: A systematic review, integrative framework and future research directions. Journal of Business Research, 128, 812–823. https://doi.org/10.1016/j.jbusres.2019.03.031

- The World Bank. (2023, November 27). Research and development expenditure (% of GDP). Retrieved January 7, 2024, from https://data.worldbank.org/indicator/GB.XPD.RSDV.GD.ZS?most_recent_value_desc=true

- Yang, J. (2023). Challenges and opportunities of generative artificial intelligence in higher education student educational management. Advances in Educational Technology and Psychology, 7(9), 92–96. https://doi.org/10.23977/aetp.2023.070914