ABSTRACT

Background

Throughout the ongoing COVID-19 pandemic, debunking misinformation has been one of the most frequently used strategies to address vaccine hesitancy. We investigated whether – and for whom – debunking is effective or even counterproductive in decreasing misinformation belief and vaccination hesitancy.

Method

We conducted a randomized controlled trial (N = 588) utilizing a real-world debunking campaign from the German Ministry of Health. We considered the condition (debunking vs. control) as between-subjects factor, assessed misinformation belief (pretest vs. posttest) as a repeated-measures factor and vaccination intention as a dependent variable. Preregistered subgroup analyses were conducted for different levels of a priori misinformation belief and general vaccination confidence.

Results

The analyses revealed differential effects on misinformation belief and vaccination intention in participants with low, medium, and high a priori belief: A debunking effect on misinformation belief (dRM = –0.80) was only found in participants with a medium a priori belief and did not extend to these participants’ vaccination intentions. Among participants with a high a priori misinformation belief, explorative analysis revealed a small unintended backfiring effect on vaccination intentions (ηp2 = 0.03).

Conclusions

Our findings suggest that debunking is an effective communication strategy to address moderate levels of misinformation beliefs, but it does not constitute a one-size-fits-all strategy to reduce vaccination hesitancy among the general public. Although countering misinformation should certainly be an integral part of public health communication, additional initiatives, which address individual concerns with targeted and authentic communication, should be taken to enhance the impact on hesitant populations and avoid backfiring effects.

Background

Within the COVID-19 pandemic, online media has become the major informational source regarding healthcare information for people around the world (e.g. [Citation1]). However, the ‘virtual tsunami of data and advice’ ([Citation2], p. 1) generated by the pandemic has included not only helpful and trustworthy advice but an increasing amount of misinformation about COVID-19 vaccines [Citation3,Citation4]. As a result, combating COVID-19 vaccine-related misinformation to prevent vaccine hesitancy has become one of the largest challenges in fighting the pandemic (e.g. [Citation5,Citation6]).

Correcting misinformation through debunking

One strategy that has been extensively applied to counteract misinformation within the COVID-19 pandemic [Citation7] is to ‘debunk’ it by means of a corrective message, enabling people to appropriately update their health belief model [Citation8–10]. Meta-analyses suggest that debunking messages are generally effective in stimulating belief update with medium [Citation11] to large [Citation8] effect sizes. Walter and Murphy’s [Citation11] analysis, however, raises concerns (1) about the existence of a publication bias in the literature on debunking, (2) that real-world misinformation was more difficult to successfully debunk compared to constructed misinformation and (3) that debunking messages are more effective in homogeneous student samples compared to more heterogeneous populations [Citation12]. These issues motivated our first research question: how effectively can debunking decrease misinformation belief in the applied setting of COVID-19 vaccination?

Downstream effects of debunking on intentions

When assessing the effectiveness of debunking, participants’ behavioural intentions have also been often addressed as dependent variables [Citation12]. In a recent investigation on COVID-19 communication, MacFarlane et al. [Citation13] found debunking messages to successfully decrease individuals’ willingness to pay for an inappropriate COVID-19 treatment and their promotion intentions (e.g. sharing misinformation via social media). Other studies, however, found debunking to be successful in decreasing misinformation belief but not effective in increasing behavioural intentions, thereby not evoking downstream effects [Citation14–18]. This effect was also found for the COVID-19 vaccine [Citation19]. We therefore considered COVID-19 vaccination intentions as a second behavioural dependent variable.

Debunking in a politicised context

Even though the strategy of debunking is often recommended and applied, its application is sometimes influenced by reservations about potential unintended backfiring effects. A backfiring effect is an unintended increase in misinformation belief resulting from the exposure to a debunking intervention [Citation20]. One subtype is the ‘worldview backfiring effect’, potentially driven by the psychological mechanism of motivated reasoning [Citation21], whereby preexisting beliefs and attitudes determine information processing in terms of an attitude disconfirmation and confirmation bias [Citation22,Citation23]. Unintended worldview backfiring effects after debunking misinformation have been described in the field of politics [Citation24] but also in relation to vaccination communications [Citation15,Citation17,Citation25,Citation26].

These backfiring effects have been reported mainly in post-hoc tests in studies that neither preregistered their analyses nor identified subgroups properly, a procedure that is susceptible to Type I error inflation [Citation12,Citation27]. A direct replication of the study of Nyhan and Reifler [Citation17] by Haglin [Citation14] and other more recent studies (e.g. [Citation28]) failed to find any backfiring effect in a broad range of politicized topics. Schmid and Betsch [Citation29] found no worldview backfiring effect after debunking vaccination misinformation in a large study. Porter et al. [Citation19] showed that debunking messages about the COVID-19 vaccine were effective in decreasing misinformation beliefs in a large U.S. sample, even and especially in participants with high a priori scepticism against the vaccine.

Thus, some researchers have concluded that the backfiring effect is a robust and systematic bias arising from debunking (e.g. [Citation30]), but others question whether the effect exists at all, results from measurement error or occurs only in certain ‘hot topics’ (e.g. [Citation12]).

Given that the discourse about vaccination has become very politicized within the COVID-19 pandemic [Citation31,Citation32], especially in German-speaking countries [Citation33], it might be one of these rare ‘hot topics’ for which debunking has differential effects in certain groups in which backfiring effects could arise. We therefore aimed to understand how effective debunking messages are in decreasing misinformation beliefs in different groups in the polarized COVID-19 vaccination debate in Germany. We addressed two factors in our research design that might be relevant in triggering worldview backfiring effects: participants’ belief in misinformation and their general confidence in vaccinations, measured before the intervention. Both factors might have become part of participants’ identity and might therefore undermine processing of the debunking campaign in terms of motivated reasoning [Citation34,Citation35], especially in participants with high misinformation belief or low general vaccination confidence.

The present study

In our study, we refer to misinformation as ‘information considered incorrect based on the best available evidence from relevant experts at the time’ ([Citation36], p. 140). We conducted an experimental online survey in early spring 2021 in Germany, investigating the effectiveness of a governmental debunking campaign [Citation37] in decreasing misinformation belief and increasing vaccination intentions. We expected participants’ misinformation belief in the debunking condition to decrease from pre- to posttest (H1a) and more strongly compared to a control group (H1b). We also expected that participants in the debunking condition would report higher vaccination intentions after the intervention compared to the control group (H2). Finally, we assumed that the debunking effect might differ between subgroups. Participants with a high a priori misinformation belief (H3a) and low general vaccination confidence (H3b) would reveal increased misinformation belief (i.e. backfiring effect) after exposure to debunking, which was not expected in the other subgroups.

Method

Design

We conducted a randomized controlled trial with a 2 (debunking vs. control condition, between-subjects) x 2 (pre- vs. posttest misinformation belief, within-subjects) mixed design. The dependent variables were posttest misinformation belief for H1 and H3, and vaccination intention for H2. For H3, we additionally considered preregistered subgroups (low vs. medium vs. high) of a priori misinformation belief and vaccination confidence as between-subjects factors.

Preregistration

The study was preregistered (https://osf.io/zgmhj).

Sample size rationale

We conducted an a priori power analysis with G*Power version 3.1.9.2 [Citation38] for the most complex effect, that is, for detecting a medium debunking effect (d = 0.5) in each of the three a priori misinformation belief subgroups with a power of 1 – β = .95. The analysis revealed a minimum sample size of n = 78 for each of six planned comparisons (dependent t tests), comparing pre- and posttest scores in each condition (i.e. debunking, control) in each of the three subgroups (low, medium, high misinformation belief) (Pcrit = .008, accounting for multiple testing), resulting in a total minimum sample size of N = 78×3×2 = 468. Accounting for dropouts, 480 participants were targeted. However, within the first 100 cases, we noticed that more participants were familiar with the debunking campaign than expected, which was an exclusion criterion. Therefore, we increased our target sample size to a minimum of 600 participants to maintain power even after excluding these cases.
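As a rough plausibility check of the numbers above, the following sketch recomputes the Bonferroni-corrected threshold and the total minimum sample size, and approximates the per-comparison n with a normal approximation. It is not the G*Power computation: the two-sided test and the helper name `approx_paired_n` are assumptions made for illustration, and because the normal approximation ignores the heavier tails of the t distribution, it is expected to land somewhat below the reported n = 78.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_n(d, alpha, power):
    """Normal-approximation sample size for a dependent t test.

    Assumes a two-sided test (an assumption; the text does not state it).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the corrected alpha
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    return ceil(((z_alpha + z_beta) / d) ** 2)


n_comparisons = 6                        # 2 conditions x 3 belief subgroups
alpha_corrected = 0.05 / n_comparisons   # about .008, as reported
n_approx = approx_paired_n(d=0.5, alpha=alpha_corrected, power=0.95)

n_reported = 78                          # per-comparison n reported in the text
total_n = n_reported * 3 * 2             # 3 subgroups x 2 conditions

print(f"corrected alpha: {alpha_corrected:.4f}")
print(f"approximate n per comparison: {n_approx}")
print(f"total minimum N: {total_n}")
```

An exact calculation based on the noncentral t distribution, as G*Power performs it, would add a few participants to the approximate value.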

Sample

Participants were recruited in March 2021 mainly via Facebook. As an incentive, 4×50 euros were raffled among all participants who registered for it. From a total of 982 participants having started the questionnaire, 732 completed it. Participants who were already vaccinated (n = 55), familiar with the debunking campaign (n = 68), or who responded too fast to provide valid data (i.e. relative speed index above 2.0; [Citation39]; n = 22) were excluded. The final dataset consisted of N = 588 participants (debunking condition: n = 260, control condition: n = 328). Participants were aged between 18 and 81 years (M = 43.8 years; SD = 12.5), mostly female (83%) and employed (62%), and 43% reported having a higher risk for severe COVID-19 disease progression (for further demographic data, see Appendix A, Table A1).

Materials

The questionnaire was created using the software SoSci Survey version 3.2.23.

Experimental manipulation

Participants in the debunking condition were exposed to seven infographics from the German Ministry of Health [Citation37] in random order (Appendix B1). On each infographic, a piece of misinformation was introduced and refuted with a short debunking message. Participants in the control condition read seven information vignettes about the procedure of getting vaccinated in a COVID-19 vaccination centre ([Citation40]; Appendix B2). The infographics in both conditions consisted of verbatim text from the governmental webpages. Presenting the infographics online to the participants was in line with the manner in which the Ministry of Health provided this information (i.e. via advertisements on social media channels and on its homepage). Participants in both conditions were informed that the material was obtained from governmental institutions.

Misinformation belief (Pretest and posttest)

We developed a scale consisting of the seven incorrect claims addressed in the debunking campaign ([Citation37]; see Appendix C). Approval or disapproval for each item (presented in a randomized order) was indicated on a Likert scale ranging from 1 (do not agree at all) to 7 (fully agree). The scale revealed an excellent internal consistency in the pretest (Cronbach’s α = 0.95) and in the posttest (Cronbach’s α = 0.96).
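For readers unfamiliar with the internal consistency measure reported here, the following is a minimal sketch of how Cronbach's α can be computed from item responses. The function name `cronbach_alpha` and the toy response matrix are hypothetical and only illustrate the formula; they are not the study data or the authors' analysis code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per participant)."""
    k = len(responses[0])  # number of items
    # Sample variance of each item across participants
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the participants' sum scores
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings of four participants on seven items (1-7 Likert scale)
toy_responses = [
    [1, 2, 1, 1, 2, 1, 1],
    [4, 4, 5, 4, 3, 4, 4],
    [7, 6, 7, 7, 6, 7, 6],
    [2, 3, 2, 2, 3, 2, 3],
]
alpha_toy = cronbach_alpha(toy_responses)
print(f"alpha for the toy data: {alpha_toy:.2f}")
```

Values close to 1 indicate that the items vary together almost perfectly, which is how the reported values of 0.95 and 0.96 should be read.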

Vaccination intention

We adopted three items that were typically applied in previous research to assess participants’ vaccination intention (see Appendix C). Again, a 7-point Likert scale was used, and items were presented in a random order. However, the item ‘I would rather like to wait before getting vaccinated’ was ambiguous for those without any intention to get vaccinated. We therefore removed this item, even though the internal consistency of the three-item scale was good (Cronbach’s α = 0.87). This resulted in a two-item scale with an excellent internal consistency (Cronbach’s α = 0.96).

Subgroups of a priori misinformation belief

Following Hainmueller et al. [Citation41], participants were categorized into subgroups of low, medium, or high pretest misinformation beliefs by a tercile split of their mean belief in the pretest. After linearly transforming the scale to potentially range from 0 (no misinformation belief) to 1 (very high misinformation belief), the cut-off scores were x < 0.14 and x > 0.43.
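The transformation and categorization described above can be sketched as follows. The function names are hypothetical, and the handling of the boundaries (values below 0.14 are low, values above 0.43 are high, everything else is medium) is our reading of the reported cut-off scores. The cut-offs are hard-coded as reported rather than derived from the data.

```python
def rescale_likert(mean_score, low=1, high=7):
    """Linearly map a mean score on a 1-7 scale onto the range 0-1."""
    return (mean_score - low) / (high - low)


def belief_subgroup(x, lower_cut=0.14, upper_cut=0.43):
    """Assign a rescaled pretest score to a subgroup using the reported cut-offs."""
    if x < lower_cut:
        return "low"
    if x > upper_cut:
        return "high"
    return "medium"


# Hypothetical mean pretest scores on the original 1-7 scale
for raw in (1.5, 3.0, 4.0):
    x = rescale_likert(raw)
    print(f"raw = {raw}, rescaled = {x:.2f}, subgroup = {belief_subgroup(x)}")
```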

Subgroups of vaccination confidence

Participants’ general vaccination confidence was assessed with the ‘Vaccination Confidence’ subscale from the 5C General Vaccination Antecedents Scale [Citation42]. Participants rated the three items on a 7-point Likert scale ranging from 1 (do not agree at all) to 7 (fully agree). After linear transformation, they were categorized into subgroups of low, medium, or high general vaccination confidence as described above. Cut-off scores were x < 0.56 and x > 0.78.

Control, filter and demographic variables

We assessed participants’ age, gender, risk group membership, and a former COVID-19 infection as potential covariates, and familiarity with the material and a completed COVID-19 vaccination as filter variables. Further demographic variables included participants’ educational attainment, occupation, occupational field, and vaccination priority.

Distractor I: vaccination attitudes

To partially disguise the aim of our study, we also presented the remaining subscales of the 5C General Vaccination Antecedents Scale [Citation42], which were not analysed.

Distractor II: evaluation of information campaign

To maintain participants’ attention during the intervention and for exploratory analysis, we assessed their evaluation in terms of helpfulness of the campaigns on a 5-point emoticon scale ranging from 1 (very negative) to 5 (very positive).

Procedure

The study was performed online on participants’ personal electronic devices. Participants initially read the terms and conditions. They were informed that the study aimed to examine people’s attitudes and intentions regarding COVID-19 vaccination by means of questionnaires and that they would be exposed to governmental infographics on COVID-19 vaccination. They were also informed that they could terminate their participation at any time without encountering negative consequences. In addition, participants were assured that data collection was fully anonymous and that their data would be stored for research purposes for a maximum of 10 years. Participants provided their informed consent to take part in the study under these conditions by clicking on the ‘next’ button.

After answering the demographic, control and filter questions, participants who had reported being vaccinated were directly forwarded to the last page and thanked for their participation. The others started the pretest, assessing vaccination confidence and pretest misinformation belief. Participants were then exposed either to the seven infographics of the debunking campaign [Citation37] or to those of the control campaign [Citation40]. Each infographic was presented on a separate page and was evaluated on an emoticon scale beneath. In the debunking group, participants were subsequently asked if they had been familiar with the material. Finally, all participants responded to the posttest, capturing their posttest misinformation belief and their COVID-19 vaccination intention. Participants were then fully debriefed and thanked before we provided contact information to register for the raffle.

Results

Descriptive statistics and preceding analyses

For reasons of clarity and interpretability, we linearly transformed all scales to range from 0 to 1. Descriptive statistics and correlations between the different measures are reported in Appendix D (Tables D1 and D2). All subsequently reported analyses were conducted with an alpha level of 0.05, applying Bonferroni correction for multiple testing.

Hypothesis 1: general effect of debunking on misinformation belief

Initially, a global 2 (debunking condition: debunking, control) x 2 (measurement timepoint: pretest, posttest) x 3 (a priori misinformation belief: low, medium, high) x 3 (vaccination confidence: low, medium, high) mixed ANOVA was run as an omnibus test (see Appendix D, Table D3 for the complete results).

Since misinformation belief in the pretest did not differ between debunking conditions (P = 0.25), the hypotheses that debunking reduces participants’ misinformation belief from pre- to posttest (H1a) and more strongly in the debunking than in the control condition (H1b) would be confirmed by a significant interaction of debunking condition and measurement timepoint in this test (Pcrit = 0.025). Independently of the debunking condition, there was a general decrease in misinformation belief from pre- (M = 0.33; SD = 0.26) to posttest (M = 0.31; SD = 0.27) in both groups (ηp2 = 0.03) but no significant interaction (P = .14).

Hypothesis 2: downstream effect of debunking on vaccination intentions

Next, we tested whether the debunking message had a positive (downstream) effect on vaccination intentions, even though this result was not very plausible given the lack of a general effect of debunking on misinformation belief.

We conducted a one-factorial ANCOVA with the debunking condition as between-subjects factor, vaccination intention as the dependent variable, and participants’ general vaccination confidence as covariate because this variable differed significantly in the pretest between the debunking (M = 0.60; SD = 0.28) and control condition (M = 0.65; SD = 0.26), t(586) = 2.05, P = .041, d = 0.19, and because vaccination confidence correlated positively with participants’ vaccination intentions (see Appendix D, Table D2). Likewise, belonging to a risk group was statistically controlled because it correlated significantly with vaccination intentions. However, intentions to get vaccinated against COVID-19 were not higher in the debunking than in the control group after the intervention (P = 0.87). Instead, we found a significant, large effect of the covariate general vaccination confidence, F(1, 584) = 1,047.37, P < .001, ηp2 = 0.64, and a small effect of belonging to a risk group, F(1, 584) = 7.66, P = .006, ηp2 = 0.01, on participants’ vaccination intention.
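The reported partial eta squared values can be recovered from the F statistics and their degrees of freedom with the standard conversion ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the two covariate effects; the function name `partial_eta_squared` is chosen here for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Convert an F statistic and its degrees of freedom to partial eta squared."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Covariate effects reported in the text
confidence_effect = partial_eta_squared(1047.37, 1, 584)
risk_group_effect = partial_eta_squared(7.66, 1, 584)

print(f"general vaccination confidence: {confidence_effect:.2f}")
print(f"risk group membership: {risk_group_effect:.2f}")
```

The same conversion can be applied to the other F tests reported in the Results section.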

Hypothesis 3: differential (backfiring) effects of debunking

Finally, we tested whether participants reacted differently to the debunking campaign, that is, whether participants with lower a priori misinformation belief and higher vaccination confidence showed a reduced misinformation belief after debunking (i.e. debunking effect), whereas participants with higher a priori misinformation belief (H3a) and lower general vaccination confidence (H3b) revealed an unintended increase in misinformation belief (i.e. backfiring effect) from pre- to posttest after exposure to the debunking campaign.

Threefold interactions of debunking condition, measurement timepoint, and a priori misinformation belief (H3a) or general vaccination confidence (H3b) in the omnibus test would be a first indication in support of the hypotheses (Pcrit = 0.025). In line with H3a, the ANOVA revealed a significant threefold interaction of debunking condition, measurement timepoint and a priori misinformation belief, F(2, 570) = 3.86, P = .022, ηp2 = 0.01. In contrast to H3b, we found no interaction involving general vaccination confidence (P = .10, see Appendix D, Table D3).

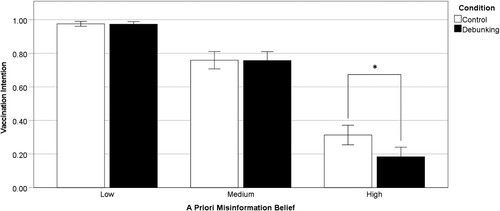

Subsequent to the significant threefold interaction involving a priori misinformation belief, we conducted three repeated-measures ANOVAs, one for each of the three subgroups of a priori misinformation belief, including the debunking condition and measurement timepoint as independent variables. A debunking and a backfiring effect would yield a significant interaction of the two factors (Pcrit = 0.016). No interaction was found in participants with low (P = 0.58) or high misinformation belief (P = 0.06), but one was found in participants with a medium a priori misinformation belief, F(1, 194) = 14.11, P < .001, ηp2 = 0.07 (see Figure 1).

Figure 1. Misinformation belief before and after the intervention in the control condition (n = 103) and the debunking condition (n = 93) in the subgroup with medium a priori misinformation belief (N = 196). Error bars indicate the 95% confidence interval. *P < .025.

We then computed paired-samples t tests comparing the pre- and posttest misinformation belief in the debunking and the control condition of this group, respectively (Pcrit = 0.025). Among participants with a medium a priori misinformation belief, misinformation belief significantly decreased from pretest (M = 0.30; SD = 0.09) to posttest (M = 0.24; SD = 0.13) in the debunking condition, t(92) = 5.60, P < .001, dRM = -0.80, which can be considered a large debunking effect. In the control condition, by contrast, misinformation belief only slightly decreased from pre- (M = 0.28; SD = 0.09) to posttest (M = 0.27; SD = 0.11), t(102) = 2.29, P = 0.024, dRM = -0.20, suggesting a small effect. To compare these effects, we exploratively computed a difference score between pretest and posttest misinformation belief. An independent t test for this difference score revealed that misinformation belief decreased more from pre- to posttest in the debunking (M = −0.06, SD = 0.10) than in the control condition (M = −0.01, SD = 0.06), t(194) = −3.76, P < .001, d = 0.66, which can be considered a medium effect.

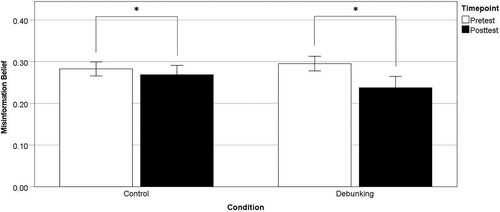

Exploratory analyses I: differential (backfiring) effects on vaccination intentions

We exploratively investigated whether participants’ a priori misinformation belief differentially affected the debunking campaign’s effectiveness concerning participants’ vaccination intentions. This effect would be indicated by a significant interaction between a priori misinformation belief and debunking condition in a 2 (condition: debunking versus control) x 3 (a priori misinformation belief: low, medium, high) ANCOVA, controlling for vaccination confidence and risk group membership as justified above. The analysis revealed a significant interaction (see Appendix D, Table D4). Three follow-up one-factorial ANCOVAs were conducted, one for each subgroup of a priori misinformation belief, with the debunking condition as between-subjects factor and the vaccination intention as dependent variable, controlling for vaccination confidence and belonging to a risk group (Pcrit = .016). The analysis revealed no significant difference in vaccination intentions between the two conditions in participants with low (P = .64) and medium a priori misinformation belief (P = .74). In participants with high a priori misinformation belief, however, vaccination intentions were significantly lower in participants who had been exposed to the debunking campaign (M = 0.18; SD = 0.27) than in participants in the control condition (M = 0.31; SD = 0.31), F(1, 191) = 6.74, P = .010, ηp2 = 0.03 (see Figure 2). We had neither planned nor preregistered this analysis because of sample size considerations. Thus, given the low power (1 – β = .69) of these analyses, the results should be interpreted cautiously. They suggest, however, that in participants with high a priori misinformation belief, the debunking campaign might indeed have caused a small backfiring effect on their vaccination intentions.

Exploratory analysis II: perceived helpfulness of the material

Finally, we explored whether participants’ evaluation of the helpfulness of the campaigns depended on their a priori misinformation belief or their general vaccination confidence. We conducted a 2 (condition: debunking versus control) x 3 (a priori misinformation belief: low, medium, high) x 3 (vaccination confidence: low, medium, high) ANOVA. Descriptive and statistical results can be found in Appendix D, Table D5. We found a significant twofold interaction of debunking condition and a priori misinformation belief, F(2, 586) = 13.36, P < .001, ηp2 = 0.04. Two-factorial follow-up ANOVAs were conducted, one for the debunking and one for the control condition, with subgroups of a priori misinformation belief and vaccination confidence as between-subjects factors and participants’ evaluation as dependent variable (Pcrit = .025). The analysis revealed that the control campaign was evaluated as similarly helpful across the levels of a priori misinformation belief (P = .41) and vaccination confidence (P = .06). The debunking campaign, in contrast, was evaluated differently between the subgroups of a priori misinformation belief, F(2, 251) = 37.19, P < .001, ηp2 = 0.23, but not between the subgroups of vaccination confidence, P = .09. Independent t tests (Pcrit = .016) revealed that participants with low a priori misinformation belief (M = 0.80; SD = 0.17) evaluated the campaign as more helpful than participants with medium a priori belief (M = 0.64; SD = 0.19), and participants who strongly believed in misinformation evaluated the campaign as least helpful (M = 0.31; SD = 0.24), all Ps < .001.

Discussion

Firstly, our findings suggest that debunking can reduce belief in misinformation about COVID-19 vaccines, at least in people who are neither fully convinced by the misinformation nor fully reject it.

Secondly, we conclude that this change in misinformation belief is not necessarily generalized to behavioural intentions in terms of a downstream effect. No positive debunking effect emerged on participants’ intentions to get vaccinated, not even in the subgroup of participants with moderate a priori misinformation belief. The null effect of debunking on vaccination intention, even after successful belief update, aligns with other research findings of absent downstream effects [Citation14–17,Citation19].

Thirdly, the finding of differential subgroup-dependent effects on misinformation belief update is in line with the observation of Walter and Murphy [Citation11] that correcting real-world misinformation, for example, in a more heterogeneous, nonstudent sample, is challenging. It is also consistent with the meta-analytic finding of Walter and Tukachinsky [Citation43] that misinformation belief is rarely fully diminished after debunking. Interestingly, in contrast to Porter et al. [Citation19], misinformation belief in our sample did not decrease in the subsample with the highest a priori misinformation belief but only in participants with a moderate level of belief. Our exploratory findings suggest that applying debunking to people who strongly believe in misinformation can be counterproductive because it might evoke small backfiring effects on vaccination intentions. In participants with high a priori misinformation belief, motivated reasoning might have prevented belief update. Participants might have processed the information in a manner that was biased by their a priori beliefs, triggering a backfiring effect on vaccination intention. We had assumed a causal relationship from misinformation belief to intentions, but we cannot rule out that people refusing COVID-19 vaccination are in turn more open to misinformation to justify their scepticism [Citation44]. In this case, the backfiring effect on vaccination intention might be explained by a reactance effect [Citation45] against the message or its messenger (see also [Citation46,Citation47]), a side effect that could be mitigated by modifying communications [Citation48]. One might, for example, ask whether governmental institutions should debunk health-related misinformation or whether the debunks should rather be shared by more trusted scientific institutions (e.g. the WHO), which might evoke less of a reactance effect.

In sum, our findings emphasize the caveats that vaccine safety concerns cannot be treated with information only [Citation49] and that debunking campaigns, though a welcome tool in fighting misinformation, are not enough on their own to address vaccine hesitancy [Citation50]. Instead, individuals’ concerns should be taken seriously and be addressed with targeted and authentic communication. For example, the social listening tool of the WHO [Citation51] could be useful to adapt communication strategies to the current concerns about the vaccine circulating among the public.

Limitations and future research

Our study applied a very simple design, neglecting that the effectiveness of debunking might be determined by more than just information, for example by more subtle reactions such as sharing intentions or source checking [Citation52]. To better understand the effectiveness of debunking campaigns, it would be informative to assess health literacy (e.g. [Citation53]), the source credibility of such campaigns (e.g. [Citation54]), and trust in the institutions developing and approving the vaccine [Citation50]. The messages in our study were delivered by a governmental source. Upcoming studies should clarify whether health-related debunking messages are more effective when provided by other messengers, such as scientific or health organisations.

Our study design would have profited from also applying a pre–post control design for vaccination intentions and from including a longer time gap between the intervention and the posttest. Backfiring effects might occur after a delay or increase over time (e.g. [Citation25,Citation30]). We cannot rule out that, once the corrective message has been forgotten, misinformation might be falsely remembered as true again.

Future research should thus specifically address the question under which conditions belief update generalizes to behaviour and how this transfer can be supported.

Conclusions

Debunking misinformation remains a key factor in the ongoing ‘infodemic’ and beyond, given that many people still feel overwhelmed by identifying fake news (e.g. in Germany: 42%; [Citation55]). We found that debunking can be an effective part of public health communications, at least for target groups with moderate misinformation beliefs. Disseminating misinformation debunks to support correct belief formation and decision making should therefore be one important principle in effective public health communication within the pandemic. However, effectively fighting vaccination hesitancy on a behavioural level needs to be addressed with a more complex communication strategy that goes beyond only refuting misinformation.

Appendices

The Appendices can be found under https://osf.io/g65ef/ at https://osf.io/qg6xu/.

Authors’ contributions

AH developed the study, collected the data, conducted the statistical analyses and wrote large parts of the manuscript. ME supervised AH in these processes and revised the manuscript in multiple stages.

Data availability statement

The dataset for this study is accessible under https://osf.io/e39dy/ at https://osf.io/qg6xu/.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes on contributors

Anna Helfers

Anna Helfers (corresponding author) received her master’s degree in Psychology from the University of Kassel. She is currently pursuing a PhD on the topic of risk perception and behaviour during the COVID-19 pandemic and works as a lecturer at the University of Kassel.

Mirjam Ebersbach

Mirjam Ebersbach is a full professor of Developmental Psychology and is interested in cognitive development. In addition, she and her team conduct research on risk perception and risk behaviour with regard to environmentalism, climate change, and COVID-19.

References

- Cinelli M, Quattrociocchi W, Galeazzi A, Valensise CM, Brugnoli E, Schmidt AL, et al. The COVID-19 social media infodemic. Sci Rep. 2020;10(1):1–10.

- WHO. An ad hoc WHO technical consultation managing the COVID-19 infodemic: call for action. 7–8 April 2020. Geneva: World Health Organization; 2020. Licence: CC BY-NC-SA 3.0 IGO. https://www.who.int/publications/i/item/9789240010314.

- Bonnevie E, Gallegos-Jeffrey A, Goldbarg J, Byrd B, Smyser J. Quantifying the rise of vaccine opposition on Twitter during the COVID-19 pandemic. J Commun Healthc. 2021;14(1):12–19. doi:10.1080/17538068.2020.1858222.

- Puri N, Coomes EA, Haghbayan H, Gunaratne K. Social media and vaccine hesitancy: new updates for the era of COVID-19 and globalized infectious diseases. Hum Vaccines Immunother. 2020;16(11):2586–2593. doi:10.1080/21645515.2020.1780846.

- Dror AA, Eisenbach N, Taiber S, Morozov NG, Mizrachi M, Zigron A, et al. Vaccine hesitancy: the next challenge in the fight against COVID-19. Eur J Epidemiol. 2020;35(8):775–779. doi:10.1007/s10654-020-00671-y.

- Islam MS, Sarkar T, Khan SH, Mostofa Kamal A-H, Hasan SMM, Kabir A, et al. COVID-19-related infodemic and its impact on public health: a global social media analysis. ASTMH. 2020;103(4):1621–1629. doi:10.4269/ajtmh.20-0812.

- Brennen JS, Simon FM, Howard PN, Nielsen RK. (2020). Types, sources, and claims of COVID-19 misinformation. PrimaOnline. http://www.primaonline.it/wp-content/uploads/2020/04/COVID-19_reuters.pdf.

- Chan M-PS, Jones CR, Hall Jamieson K, Albarracín D. Debunking: a meta-analysis of the psychological efficacy of messages countering misinformation. Psychol Sci. 2017;28(11):1531–1546. doi:10.1177/0956797617714579.

- Lewandowsky S, Cook J, Lombardi D. (2020). Debunking handbook 2020. Databrary. [Cited 2021 April 30]. doi:10.17910/b7.1182.

- Lewandowsky S, Cook J, Schmid P, Holford DL, Finn A, Leask J, et al. (2021). The COVID-19 vaccine communication handbook. A practical guide for improving vaccine communication and fighting misinformation. SciBeh. Available from: https://sks.to/c19vax.

- Walter N, Murphy ST. How to unring the bell: a meta-analytic approach to correction of misinformation. Commun Monogr. 2018;85(3):423–441. doi:10.1080/03637751.2018.1467564.

- Swire-Thompson B, DeGutis J, Lazer D. Searching for the backfire effect: measurement and design considerations. JARMAC. 2020;9(3):286–299. doi:10.1016/j.jarmac.2020.06.006.

- MacFarlane D, Tay LQ, Hurlstone MJ, Ecker UKH. Refuting spurious COVID-19 treatment claims reduces demand and misinformation sharing. JARMAC. 2020; Advance online publication. doi:10.1016/j.jarmac.2020.12.005.

- Haglin K. The limitations of the backfire effect. Res Polit. 2017;4(3):205316801771654. doi:10.1177/2053168017716547.

- Nyhan B, Reifler J, Richey S, Freed GL. Effective messages in vaccine promotion: a randomized trial. Pediatrics. 2014;133(4):e835–e842. doi:10.1542/peds.2013-2365.

- Nyhan B, Porter E, Reifler J, Wood TJ. Taking fact-checks literally but not seriously? The effects of journalistic fact-checking on factual beliefs and candidate favorability. Political Behav. 2020;42(3):939–960. doi:10.1007/s11109-019-09528-x.

- Nyhan B, Reifler J. Does correcting myths about the flu vaccine work? An experimental evaluation of the effects of corrective information. Vaccine. 2015;33(3):459–464. doi:10.1016/j.vaccine.2014.11.017.

- Swire B, Berinsky AJ, Lewandowsky S, Ecker UKH. Processing political misinformation: comprehending the Trump phenomenon. R Soc Open Sci. 2017;4(3):160802. doi:10.1098/rsos.160802.

- Porter E, Velez Y, Wood T. (2021). Factual corrections eliminate false beliefs about COVID-19 vaccines. http://nypoliticalpsychology.site44.com/velez.pdf.

- Lewandowsky S, Ecker UKH, Seifert CM, Schwarz N, Cook J. Misinformation and its correction: continued influence and successful debiasing. Psychol Sci Public Interest. 2012;13(3):106–131. doi:10.1177/1529100612451018.

- Kunda Z. The case for motivated reasoning. Psychol Bull. 1990;108(3):480–498. doi:10.1037/0033-2909.108.3.480.

- Taber CS, Lodge M. Motivated skepticism in the evaluation of political beliefs. Am J Pol Sci. 2006;50(3):755–769. doi:10.1111/j.1540-5907.2006.00214.x.

- Wells C, Reedy J, Gastil J, Lee C. Information distortion and voting choices: the origins and effects of factual beliefs in initiative elections. Polit Psychol. 2009;30(6):953–969. doi:10.1111/j.1467-9221.2009.00735.x.

- Ecker UKH, Ang LC. Political attitudes and the processing of misinformation corrections. Polit Psychol. 2019;40(2):241–260. doi:10.1111/pops.12494.

- Pluviano S, Watt C, Della Sala S. Misinformation lingers in memory: failure of three pro-vaccination strategies. PloS One. 2017;12(7):e0181640. doi:10.1371/journal.pone.0181640.

- Pluviano S, Watt C, Ragazzini G, Della Sala S. Parents’ beliefs in misinformation about vaccines are strengthened by pro-vaccine campaigns. Cogn Process. 2019;20(3):325–331. doi:10.1007/s10339-019-00919-w.

- Wang R, Lagakos SW, Ware JH, Hunter DJ, Drazen JM. Statistics in medicine–reporting of subgroup analyses in clinical trials. NEJM. 2007;357(21):2189–2194. doi:10.1056/NEJMsr077003.

- Wood T, Porter E. The elusive backfire effect: mass attitudes’ steadfast factual adherence. Polit Behav. 2019;41(1):135–163. doi:10.1007/s11109-018-9443-y.

- Schmid P, Betsch C. Effective strategies for rebutting science denialism in public discussions. Nat Hum Behav. 2019;3(9):931–939. doi:10.1038/s41562-019-0632-4.

- Peter C, Koch T. When debunking scientific myths fails (and when it does not). Sci Commun. 2016;38(1):3–25. doi:10.1177/1075547015613523.

- Herrmann C. (2021, April 21). Vakzin-Skepsis und Kulturkämpfe: Die USA rennen in eine Impfwand. N-Tv NACHRICHTEN. Available from: https://www.n-tv.de/politik/Die-USA-rennen-in-eine-Impfwand-article22504693.html?utm_source=pocket-newtab-global-de-DE.

- Uscinski JE, Enders AM, Klofstad C, Seelig M, Funchion J, Everett C, et al. Why do people believe COVID-19 conspiracy theories? HKS Misinformation Review. 2020; Advance online publication. doi:10.37016/mr-2020-015.

- Jones S, Chazan G. (2021, November 11). ‘Nein Danke’: the resistance to Covid-19 vaccines in German-speaking Europe. Financial Times. Available from: https://www.ft.com/content/f04ac67b-92e4-4bab-8c23-817cc0483df5.

- Hart PS, Nisbet EC. Boomerang effects in science communication. Communic Res. 2012;39(6):701–723. doi:10.1177/0093650211416646.

- Trevors GJ, Muis KR, Pekrun R, Sinatra GM, Winne PH. Identity and epistemic emotions during knowledge revision: a potential account for the backfire effect. Discourse Process. 2016;53(5-6):339–370. doi:10.1080/0163853X.2015.1136507.

- Vraga EK, Bode L. Defining misinformation and understanding its bounded nature: using expertise and evidence for describing misinformation. Political Commun. 2020;37(1):136–144. doi:10.1080/10584609.2020.1716500.

- German Ministry of Health. (2021, February 1). Corona-Impfung: Mythen und Desinformation. https://www.bundesregierung.de/breg-de/themen/corona-informationen-impfung/mythen-impfstoff-1831898.

- Faul F, Erdfelder E, Lang A-G, Buchner A. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 2007;39(2):175–191. doi:10.3758/BF03193146.

- Leiner DJ. Too fast, too straight, too weird: non-reactive indicators for meaningless data in internet surveys. Surv Res Methods. 2019;13(3). doi:10.18148/srm/2019.v13i3.7403.

- Ministry of North Rhine-Westphalia. (2021, March 30). Corona-Schutzimpfung. Das Landesportal Wir in NRW. https://www.land.nrw/de/corona/impfung.

- Hainmueller J, Mummolo J, Xu Y. How much should we trust estimates from multiplicative interaction models? Simple tools to improve empirical practice. Political Anal. 2019;27(2):163–192. doi:10.1017/pan.2018.46.

- Betsch C, Schmid P, Heinemeier D, Korn L, Holtmann C, Böhm R. Beyond confidence: development of a measure assessing the 5C psychological antecedents of vaccination. PloS One. 2018;13(12):e0208601. doi:10.1371/journal.pone.0208601.

- Walter N, Tukachinsky R. A meta-analytic examination of the continued influence of misinformation in the face of correction: how powerful is it, why does it happen, and how to stop it? Commun Res. 2020;47(2):155–177. doi:10.1177/0093650219854600.

- Bertin P, Nera K, Delouvée S. (2020). Conspiracy beliefs, rejection of vaccination, and support for hydroxychloroquine: a conceptual replication-extension in the COVID-19 pandemic context. PsyArXiv. doi:10.31234/osf.io/rz78k.

- Brehm SS, Brehm JW. Psychological reactance: a theory of freedom and control. New York, NY: Academic Press; 1981.

- Drążkowski D, Trepanowski R. (2021). Reactance and perceived severity of a disease as the determinants of COVID-19 vaccination intention: an application of the theory of planned behavior. https://psyarxiv.com/sghmf/.

- Kim H, Seo Y, Yoon HJ, Han JY, Ko Y. The effects of user comment valence of Facebook health messages on intention to receive the flu vaccine: the role of pre-existing attitude towards the flu vaccine and psychological reactance. International Journal of Advertising. 2021: 1–22. doi:10.1080/02650487.2020.1863065.

- Staunton TV, Alvaro EM, Rosenberg BD. A case for directives: strategies for enhancing clarity while mitigating reactance. Curr Psychol. 2020: 1–11. doi:10.1007/s12144-019-00588-0.

- Reyna VF. A theory of medical decision making and health: fuzzy trace theory. Med Decis Making. 2008;28(6):850–865. doi:10.1177/0272989X08327066.

- Larson HJ, Broniatowski DA. Why debunking misinformation is not enough to change people's minds about vaccines. Am J Public Health. 2021;111(6):1058–1060. doi:10.2105/AJPH.2021.306293.

- WHO. (2021a, January 29). WHO launches pilot of AI-powered public-access social listening tool. https://www.who.int/news-room/feature-stories/detail/who-launches-pilot-of-ai-powered-public-access-social-listening-tool.

- WHO. (2021b, November 23). Let’s flatten the infodemic curve. https://www.who.int/news-room/spotlight/let-s-flatten-the-infodemic-curve.

- Okan O, Bollweg TM, Berens EM, Hurrelmann K, Bauer U, Schaeffer D. Coronavirus-related health literacy: a cross-sectional study in adults during the COVID-19 infodemic in Germany. Int J Environ Res Public Health. 2020;17(15):5503.

- Wertgen AG, Richter T, Rouet JF. The role of source credibility in the validation of information depends on the degree of (im-)plausibility. Discourse Process. 2021;58(5-6):513–528.

- Landesanstalt für Medien NRW. (2017). Forsa Umfrage: Ergebnisbericht Fake News. Medienanstalt NRW. https://www.medienanstalt-nrw.de/fileadmin/user_upload/Ergebnisbericht_Fake_News.pdf.