ABSTRACT

Big Data has emerged in the past few years as a new paradigm providing abundant data and opportunities to improve and/or enable research and decision-support applications with unprecedented value for digital earth applications including business, sciences and engineering. At the same time, Big Data presents challenges for digital earth to store, transport, process, mine and serve the data. Cloud computing provides fundamental support to address the challenges with shared computing resources including computing, storage, networking and analytical software; the application of these resources has fostered impressive Big Data advancements. This paper surveys the two frontiers – Big Data and cloud computing – and reviews the advantages and consequences of utilizing cloud computing to tackling Big Data in the digital earth and relevant science domains. From the aspects of a general introduction, sources, challenges, technology status and research opportunities, the following observations are offered: (i) cloud computing and Big Data enable science discoveries and application developments; (ii) cloud computing provides major solutions for Big Data; (iii) Big Data, spatiotemporal thinking and various application domains drive the advancement of cloud computing and relevant technologies with new requirements; (iv) intrinsic spatiotemporal principles of Big Data and geospatial sciences provide the source for finding technical and theoretical solutions to optimize cloud computing and processing Big Data; (v) open availability of Big Data and processing capability pose social challenges of geospatial significance and (vi) a weave of innovations is transforming Big Data into geospatial research, engineering and business values. This review introduces future innovations and a research agenda for cloud computing supporting the transformation of the volume, velocity, variety and veracity into values of Big Data for local to global digital earth science and applications.

1. Introduction

Big Data refers to the flood of digital data from many digital earth sources, including sensors, digitizers, scanners, numerical modeling, mobile phones, Internet, videos, e-mails and social networks. The data types include texts, geometries, images, videos, sounds and combinations of each. Such data can be directly or indirectly related to geospatial information (Berkovich and Liao Citation2012). The evolution of technologies and human understanding of the data have shifted data handling from the more traditional static mode to an accelerating data arena characterized by volume, velocity, variety, veracity and value (i.e. 5Vs of Big Data; Marr Citation2015). The first V refers to the volume of data which is growing explosively and extends beyond our capability of handling large data sets; volume is the most common descriptor of Big Data (e.g. Hsu, Slagter, and Chung Citation2015). Velocity refers to the fast generation and transmission of data across the Internet as exemplified by data collection from social networks, massive array of sensors from the micro (atomic) to the macro (global) level and data transmission from sensors to supercomputers and decision-makers. Variety refers to the diverse data forms and in which model and structural data are archived. Veracity refers to the diversity of quality, accuracy and trustworthiness of the data. All four Vs are important for reaching the 5th V, which focuses on specific research and decision-support applications that improve our lives, work and prosperity (Mayer-Schönberger and Cukier Citation2013).

The evolution of Big Data, especially its adoption by industry and government, expands the content/meaning of Big Data. The original volume-based definition now encompasses the data itself, relevant technologies and expertise to help generate, collect, store, manage, process, analyze, present and utilize data, as well as the information and knowledge derived. For example, the Big Earth Data Initiative (BEDI, The Whitehouse Citation2014; Ramapriyan Citation2015) designates Big Data as an investment opportunity and a ‘calling card’ for advancing earth science and digital earth using Big Data and relevant processing technologies. For the geospatial domain, Big Data has evolved along a path from purely data to a broader concept including data, technology and workforce. The focus is the geographic aspects of Big Data from Social, Earth Observation (EO), Sensor Observation Service (SOS), Cyber Infrastructure (CI), social media and business. For example, EO generates terabytes (TB) of images daily; climate simulations by the IPCC (Intergovernmental Panel on Climate Change) produce hundreds of peta-bytes (PB) for future climate analyses; and SOS produces even more from sensor web and citizen as sensors (Goodchild Citation2007). Social and business data are generated at a faster pace with specific geographic and temporal footprints (i.e. spatiotemporal data). Tien (Citation2013) investigated the data quality and information to further improve innovation of Big Data to address the 14 engineering challenges identified by National Academy of Engineering in 2008 and advance the 10 breakthrough technologies identified by the Massachusetts Institute of Technology in 2013.

From a business perspective, the Big Data era was envisioned as the next frontier for innovation, competition and productivity in the McKinsey report (Manyika et al. Citation2011) given its potential to drive business revenues and create new opportunities. For example, Najjar and Kettinger (Citation2013) utilized a four-stage strategy to analyze how Big Data can be monetized. Monsanto’s Climate Corporation uses geospatial Big Data to analyze the weather’s complex and multi-layered behavior to help farmers around the world adapt to climate change (Dasgupta Citation2013). Manyika et al. (Citation2011) predicted that Big Data would improve 60% of existing businesses and foster billions of dollars of new business in the next decade. In summary, the Big Data arena ushers in great opportunities and changes in digital earth arena on how we live, think and work (Mayer-Schönberger and Cukier Citation2013), including personalized medicine (Alyass, Turcotte, and Meyre Citation2015), customized product recommendations and travel options. The past few years have witnessed this transformation from concept to reality through a host of technological innovations (e.g. Uber and Wechat).

Past research on processing Big Data focused on the distributed and stream-based processing (Zikopoulos and Eaton Citation2011). While cloud computing emerged a bit earlier than Big Data, it is a new computing paradigm for delivering computation as a fifth utility (after water, electricity, gas and telephony) with the features of elasticity, pooled resources, on-demand access, self-service and pay-as-you-go (Mell and Grance Citation2011). These features enabled cloud services to be Infrastructure as a Service, Platform as a Service and Software as a Service (Mell and Grance Citation2011). While redefining the possibilities of geoscience and digital earth (Yang, Xu, and Nebert Citation2013), cloud computing has engaged Big Data and enlightened potential solutions for various digital earth problems in geoscience and relevant domains such as social science, astronomy, business and industry. The features of cloud computing and their utilization to support characteristics of Big Data are summarized ().

Table 1. Addressing the Big Data challenges with cloud computing (e.g. dust storm forecasting).

Using dust storm forecasting as an example (Xie et al. Citation2010), these storms occur rarely in a year (∼1% of time) and once initiated, they develop rapidly and normally subside in hours to days. These features make it feasible to maintain small-scale forecasting with coarse resolution. But once abnormal dust concentrations are detected, large computing resources need to be engaged quickly (i.e. minutes), to assemble Big Data from weather forecasting and ground observations at different speeds and quality (Huang et al. Citation2013a). Such an application has all features of the 5Vs for Big Data and can be addressed by cloud computing with relevant features (, denoted (‘x’)): (i) data volume processed with a large pooling of computing resource; (ii) velocity of observation and forecasting, handled by elasticity and on-demand features; (iii) variety of multi-sourced inputs addressed by elasticity, pooled resources (computing and analytics) and self-service advantages; (iv) veracity of the Big Data relieved by self-service to select the best-matched services and pay-as-you-go cost model and (v) value represented as accurate forecasting with high resolution, justifiable cost and customer satisfaction with on-demand, elasticity and pay-as-you-go features of cloud computing.

For the adoption of cloud computing for other applications, a table similar to can be constructed. On the other hand, the increasing demand for accuracy, higher resolutions and Big Data will drive the advance of cloud computing and associated technologies. Thus, cloud computing provides a new way to do business and support innovation (Zhang and Xu Citation2013). The integration of cloud computing, Big Data, and economy of goods and digital services have been fostering the discussion of IT-related services, a large share of our daily purchasing consumption (Huang and Rust Citation2013). It is proposed that these Big Data applications with 5V features and challenges are and will be driving the explosive advancements of relevant cloud computing technologies in different directions.

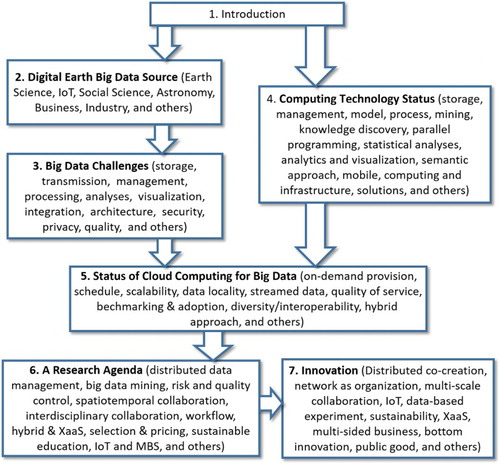

To capture this explosive growth of Big Data and cloud computing in the context of digital earth, this paper presents a comprehensive survey () of the Big Data challenges in different domains (Section 2), Big Data technology challenges (Section 3), cloud computing and relevant technology landscape (Section 4), current status of tackling Big Data problems with cloud computing (Section 5) and a research agenda (Section 6) towards innovation (Section 7).

2. Sources of Big Data

While Big Data has the feature of 5Vs, the feature-based challenges vary in different digital earth relevant domains. This section reviews relevant domain-specific Big Data challenges in the sequence of how closely are they related to geospatial principles and the importance of spatiotemporal thinking in relevant solution developments () as also reflected in the research agenda.

Table 2. The pressing features of Big Data in different domains as denoted with an ‘x’.

2.1. Earth sciences

The advancement of sensing and computing simulation technologies enabled collection and generation of massive data sets every second at different spatiotemporal scales for monitoring, understanding and presenting complex earth systems. For example, EO collects TB of images daily (Yang et al. Citation2011a) with increasing spatial (e.g. sub-meter), temporal (hourly) and spectral (hundreds of bands) resolutions (Benediktsson, Chanussot, and Moon Citation2013). Geospatial models also generate large spatiotemporal data via numerical simulations of the complex earth systems. Climate science is an exemplar representing the Big Data shift across all digital earth domains (Edwards Citation2010; Schnase et al. Citation2014) in using big spatiotemporal data to monitor and describe the complex earth climate system. For example, the IPCC AR5 alone produced 10,000 TB of climate data, and the next IPCC will engage hundreds of PB. It is critical to efficiently analyze these data for detecting global temperature anomalies, identifying geographical regions with similar or disparate climate patterns, and investigating spatiotemporal distribution of extreme weather events (Das and Parthasarathy Citation2009). However, efficiently mining information from PB of climate data is still challenging (Li Citation2015).

2.2. Internet of Things

Advanced sensors and their hosting devices (e.g. mobile phones, health monitors) are connected in a cyber-physical system to measure time and location of humans, movement of automobiles, vibration of machine, temperature, precipitation, humidity and chemical changes in the atmosphere (Lohr Citation2012). The Internet of Things (IoT, Camarinha-Matos, Tomic, and Graça Citation2013) captures this new domain and continuously generates data streams across the globe with geographical footprints from interconnected mobile devices, personal computers, sensors, RFID tags and cameras (Michael and Miller Citation2013; Van den Dam Citation2013). Big Data generated from the various sensors of IoT contains rich spatiotemporal information. The advance of IoT and Big Data technologies presents an array of applications including better product-line management, more effective and timely criminal investigation, boosting agriculture productivity (Jiang, Fang, and Huang Citation2009; Hori, Kawashima, and Yamazaki Citation2010; Xing, Pang, and Zhang Citation2010; Bo and Wang Citation2011), and accelerating the development of smart cities (Belissent Citation2010; Schaffers et al. Citation2011; Gubbi et al. Citation2013) with new architecture (Balakrishna Citation2012; Mitton et al. Citation2012; Theodoridis, Mylonas, and Chatzigiannakis Citation2013; Jin et al. Citation2014).

2.3. Social sciences

Social networks, such as Twitter and Facebook, generate Big Data and are transforming social sciences. As of the time of writing, Twitter users around the world are producing around 6000 tweets per second which corresponds to 500 million tweets per day and around 200 billion tweets per year (Internet Live Stats Citation2016). Economists, political scientists, sociologists and other social scholars use Big Data mining methods to analyze social interactions, health records, phone logs, government records and other digital traces (Boyd and Crawford Citation2012). While such mining methods benefit governments and social studies (Grimmer Citation2015), it is still challenging to quickly extract spatiotemporal patterns from big social data to, for example, help predict criminal activity (Heffner Citation2014), observe emerging public health threats and provide more effective intervention (Lampos and Cristianini Citation2010; Jalali, Olabode, and Bell Citation2012).

2.4. Astronomy

Astronomy is producing a spatiotemporal map of the universe (Dillon Citation2015) by observing the sky using advanced sky survey technologies. Mapping and surveying the universe generates vast amounts of spatiotemporal data. For example, Sloan Digital Sky Survey (SDSS) generated 116 TB data for its Data Release 12 (Alam et al. Citation2015). New observational instruments (e.g. Large Synoptic Survey Telescope) scheduled for operation in 2023 will generate 15 TB data nightly and deliver 200 PB data in total to address the structure and evolution of the universe (http://www.lsst.org). Besides observational data, the Large Hadron Collider is investigating how the universe originated and operates at the atomic level (Bird Citation2011) and produces 60 TB of experimental data per day and 15 PB data per annum (Bryant, Katz, and Lazowska Citation2008). Subsequent to collection, the foremost challenge in this new astronomical arena is managing the Big Data, making sense of the information efficiently, and finding interesting celestial objects and processing in an effective manner (Jagadish et al. Citation2014). The astronomy big data not only records information on how universe evolves, but also can be used to understanding how Earth evolves and protecting Earth from outer space impact, such as planetary defense.

2.5. Business

Business intelligence and analytics are enhanced with Big Data for decisions on strategy, managing optimization and competition (Chen, Chiang, and Storey Citation2012; Gopalkrishnan et al. Citation2012). Business actions (e.g. credit card transitions, online purchases) generate large volume, high velocity and highly unstructured (variety and veracity) data sets. These data contain rich geospatial information, such as where and when a transition occurred. To manage and process these data, the full spectrum of data processing technologies has been developed for the distributed and scalable storage environment (Färber et al. Citation2012; Indeck and Indeck Citation2012; Moniruzzaman and Hossain Citation2013). However, it remains a challenge to efficiently construct spatiotemporal statistical models from business data to optimize product placement, analyze customer transaction and market structure, develop personalized product recommendation systems, manage risks and support timely business decisions (Bryant, Katz, and Lazowska Citation2008; Hsu Citation2008; Duan, Street, and Xu Citation2011).

2.6. Industry

In the fourth industrial revolution (Industry 4.0), products and production systems leverage IoT and Big Data to build ad-hoc networks for self-control and self-optimization (O’Donovan et al. Citation2015). Big Data poses a host of challenges to Industry 4.0, including the following: (i) seamless integration of energy and production; (ii) centralization of data correlations from all production levels; (iii) optimization of performance of scheduling algorithms (Sequeira et al. Citation2014; Gui et al. Citation2016); (iv) storage of Big Data in a semi-structured data model to enable real-time queries and random access without time-consuming operations and data joins (Kagermann et al. Citation2013) and (v) realization of on-the-fly analysis to help organizations react quickly to unanticipated events and detect hidden patterns that compromise production efficiency (Sequeira et al. Citation2014). Cloud computing could be leveraged to tackle these challenges in Industry 4.0 for networking, data integration, data analytics (Gölzer, Cato, and Amberg Citation2015) and intelligence for Cyber-Physical Systems and resiliency and self-adaptation (Krogh Citation2008).

In addition to the reviewed six sources, Big Data challenges may also come from other relevant domains such as medical research, public health, smart cities, security management, emergency response and disaster recovery.

3. Big Data technology challenges

While following the life cycle challenges of traditional data, digital earth Big Data poses other technological challenges because of its 5V features in many different sectors of industry, government and the sciences (McAfee et al. Citation2012). This section reviews the technological challenges posed by Big Data.

3.1. Data storage

Storage challenges are posed by the volume, velocity and variety of Big Data. Storing Big Data on traditional physical storage is problematic as hard disk drives (HDDs) often fail, and traditional data protection mechanisms (e.g. RAID or redundant array of independent disks) are not efficient with PB-scale storage (Robinson Citation2012). In addition, the velocity of Big Data requires the storage systems to be able to scale up quickly which is difficult to achieve with traditional storage systems. Cloud storage services (e.g. Amazon S3, Elastic Block Store or EBS) offer virtually unlimited storage with high fault tolerance which provides potential solutions to address Big Data storage challenges. However, transferring to and hosting Big Data on the cloud is expensive given the size of data volume (Yang, Xu, and Nebert Citation2013). Principles and algorithms, considering the spatiotemporal patterns of data usage, need to be developed to determine the data’s analytical value and its preservation datasets by balancing the cost of storage and data transmission with the fast accumulation of Big Data (Padgavankar and Gupta Citation2014).

3.2. Data transmission

Data transmission proceeds in different stages of data life cycle as follows: (i) data collection from sensors to storage; (ii) data integration from multiple data centers; (iii) data management for transferring the integrated data to processing platforms (e.g. cloud platforms) and (iv) data analysis for moving data from storage to analyzing host (e.g. high performance computing (HPC) clusters). Transferring large volumes of data poses obvious challenges in each of these stages. Therefore, smart preprocessing techniques and data compression algorithms are needed to effectively reduce the data size before transferring the data (Yang, Long, and Jiang Citation2013). For example, Li et al. (Citation2015a) proposed an efficient network transmission model with a set of data compression techniques for transmitting geospatial data in a cyberinfrastructure environment. In addition, when transferring Big Data to cloud platforms from local data centers, how to develop efficient algorithms to automatically recommend the appropriate cloud service (location) based on the spatiotemporal principles to maximize the data transfer speed while minimizing cost is also challenging.

3.3. Data management

It is difficult for computers to efficiently manage, analyze and visualize big, unstructured and heterogeneous data. The variety and veracity of Big Data are redefining the data management paradigm, demanding new technologies (e.g. Hadoop, NoSQL) to clean, store, and organize unstructured data (Kim, Trimi, and Chung Citation2014). While metadata are essential for the integrity of data provenances (Singh et al. Citation2003; Yee et al. Citation2003), the challenge remains to automatically generate metadata to describe Big Data and relevant processes (Gantz and Reinsel Citation2012; Oguntimilehin and Ademola Citation2014). Generating metadata for geospatial data is even challenging due to the data’s intrinsic characteristics of high-dimensionality (3D space and 1D time) and complexity (e.g. space–time correlation and dependency). Besides metadata generation, Big Data also poses challenges to database management systems (DBMSs) because traditional RBDMSs lack scalability for managing and storing unstructured Big Data (Pokorny Citation2013; Chen et al. Citation2014a). While non-relational (NoSQL) databases such as MongoDB and HBase are designed for Big Data (Han et al. Citation2011; Padhy, Patra, and Satapathy Citation2011), how to tailor these NoSQL databases to handle geospatial Big Data by developing efficient spatiotemporal indexing and querying algorithms is still a challenging issue (Whitman et al. Citation2014; Li et al. Citation2016a).

3.4. Data processing

Processing large volumes of data requires dedicated computing resources and this is partially handled by the increasing speed of CPU, network and storage (Bertino et al. Citation2011). However the computing resources required for processing Big Data far exceed the processing power offered by traditional commuting paradigms (Ammn and Irfanuddin Citation2013). Cloud computing offers virtually unlimited and on-demand processing power as a partial solution. However, shifting to the cloud ushers in a number of new issues. First is the limitation of cloud computing’s network bandwidth which impacts the computation efficiency over large data volumes (Bryant, Katz, and Lazowska Citation2008). Second is data locality for Big Data processing (Yang, Xu, and Nebert Citation2013). While ‘moving computation to data’ is a design principle followed by many Big Data processing platforms, such as Hadoop (Ding et al. Citation2013), the virtualization and pooled nature of cloud computing makes it a challenging task to track and ensure data locality (Yang, Long, and Jiang Citation2013), and to support data processing involving intensive data exchange and communication (Huang et al. Citation2013b).

In addition, the veracity of Big Data requires preprocessing before conducting data analysis and mining (e.g. cluster analysis, classification, machine learning) for better quality (LaValle et al. Citation2013; Mayer-Schönberger and Cukier Citation2013). Large, high-dimensional spatiotemporal data cannot be managed by existing data reduction algorithms within a tolerable time frame and acceptable quality (Aghabozorgi, Seyed Shirkhorshidi, and Ying Wah Citation2015; García, Luengo, and Herrera Citation2015). For example, traditional algorithms are not able to preprocess the massive volumes of continuously incoming intelligence and surveillance sensor data in real time. Highly efficient and scalable data reduction algorithms are required for removing the potentially irrelevant, redundant, noisy and misleading data, and this is one of the most important tasks in Big Data research (Zhai, Ong, and Tsang Citation2014).

3.5. Data analysis

Data analysis is an important phase in the value chain of Big Data for information extraction and predictions (Fan and Liu Citation2013; Chen et al. Citation2014b). However, analyzing Big Data challenges the complexity and scalability of the underlying algorithms (Khan et al. Citation2014). Big Data analysis requires sophisticated scalable and interoperable algorithms (Jagadish et al. Citation2014) and is addressed by welding analysis programs to parallel processing platforms (e.g. Hadoop) to harness the power of distributed processing. However, this ‘divide and conquer’ strategy does not work with deep and multi-scale iterations (Chen and Zhang Citation2014) that are required for most geospatial data analysis/mining algorithms. Furthermore, most existing analytical algorithms require structured homogeneous data and have difficulties in processing the heterogeneity of Big Data (Bertino et al. Citation2011). This gap requires either new algorithms that cope with heterogeneous data or new tools for preprocessing data to make them structured to fit existing algorithms. In geospatial domain, optimizing existing spatial analysis algorithms by integrating spatiotemporal principles (Yang et al. Citation2011b) to accelerate geospatial knowledge discovery is challenging and has become a high priority research field of ‘spatiotemporal thinking, computing and applications’ (Cao, Yang, and Wong Citation2009; Yang Citation2011; Yang et al. Citation2014; Li et al. Citation2016a).

3.6. Data visualization

Big Data visualization uncovers hidden patterns and discovers unknown correlations to improve decision-making (Nasser and Tariq Citation2015). Since Big Data is often heterogeneous in type, structure and semantics, visualization is critical to make sense of Big Data (Chen et al. Citation2014b; Padgavankar and Gupta Citation2014). But it is difficult to provide real-time visualization and human interaction for visually exploring and analyzing Big Data (Sun et al. Citation2012; Jagadish et al. Citation2014; Nasser and Tariq Citation2015). The SAS (Citation2012) summarized five key functionalities for Big Data visualization as follows: (i) highly interactive graphics incorporating data visualization best practices; (ii) integrated, intuitive and approachable visual analytics; (iii) web-based interactive interfaces to preview, filter or sample data prior to visualizations; (iv) in-memory processing and (v) easily distributed answers and insight via mobile devices and web portals. Designing and developing these functionalities is challenging because of the many features of Big Data including the fusion of multiple data sources and the high-dimensionality and high spatial resolution of geospatial data (Fox and Hendler Citation2011; Reda et al. Citation2013).

3.7. Data integration

Data integration is critical for achieving the 5th V (value) of Big Data through integrative data analysis and cross-domain collaborations (Chen et al. Citation2013; Christen Citation2014). Dong and Divesh (Citation2015) summarized the data integration challenges of schema mapping, record linkage and data fusion. Metadata is essential for tracking these mappings to make the integrated data sources ‘robotically’ resolvable and to facilitate large-scale analyses (Agrawal et al. Citation2011). However efficiently and automatically creating metadata from Big Data is still a challenging task (Gantz and Reinsel Citation2011). In geospatial domain, geo-data integration has sparked new opportunities driven by ever increasingly collaborative research environment. One example is the EarthCube program initiated by the U.S. NSF’s Geosciences Directorate to provide unprecedented integration and analysis of geospatial data from a variety of geoscience domains (EarthCube Citation2014).

3.8. Data architecture

Big Data is gradually transforming the way scientific research is conducted as evidenced by the increasingly data-driven and the open science approach (Jagadish et al. Citation2014). Such transformations pose challenges to system architecture. For example, seamlessly integrating different tools and geospatial services remain a high priority (Li et al. Citation2011; Wu et al. Citation2011). Additional priority issues include integrating these tools into reusable workflows (Li et al. Citation2015b), incorporating data with the tools to promote functionality (Li et al. Citation2014) and sharing data and analyses among communities. An ideal architecture would seamlessly synthesize and share data, computing resources, network, tools, models and, most importantly, people (Wright and Wang Citation2011). Geospatial cyberinfrastructure is actively investigated in the geospatial sciences (Yang et al. Citation2010). EarthCube, though still in an early development stage, is a good example of such cyberinfrastructure in geospatial domain. Building a similar cyberinfrastructure for other science domains is equally important and challenging.

3.9. Data security

The increasing dependence on computers and Internet over the past decades makes businesses and individuals vulnerable to data breach and abuse (Denning and Denning Citation1979; Abraham and Paprzycki Citation2004; Redlich and Nemzow Citation2006). Big Data poses new security challenges for traditional data encryption standards, methodologies and algorithms (Smid and Branstad Citation1988; Coppersmith Citation1994; Nadeem and Javed Citation2005). Previous studies of data encryption focused on small-to-medium-size data, which does not work well for Big Data due to issues of the performance and scalability (Chen et al. Citation2014b). In addition, data security policies and schemes to work with the structured data stored in conventional DBMS are not effective in handling highly unstructured, heterogeneous data (Villars, Olofson, and Eastwood Citation2011). Thus, effective policies for data access control and safety management need to be investigated in Big Data and these need to incorporate new data management systems and storage structures (Cavoukian and Jonas Citation2012; Chen et al. Citation2014a). In the cloud era, since data owners have limited control on virtualized storage, ensuring data confidentiality, integrity and availability becomes a fundamental concern (Kaufman Citation2009; Wang et al. Citation2009; Feng et al. Citation2011; Chen and Zhao Citation2012).

3.10. Data privacy challenges

The unprecedented networking among smart devices and computing platforms contributes to Big Data but poses privacy concerns where an individual’s location, behavior and transactions are digitally recorded (Cukier Citation2010; Tene Citation2011; Michael and Miller Citation2013; Cheatham Citation2015). For example, social media and individual medical records contain personal health information raising privacy concerns (Terry Citation2012; Kaisler et al. Citation2013; Michael and Miller Citation2013; Padgavankar and Gupta Citation2014). Another example is that companies are using Big Data to monitor workforce performance by tracking the employees’ movement and productivity (Michael and Miller Citation2013). These privacy issues expose a gap between the convention policies/regulations and Big Data and call for new policies to address comprehensively privacy concerns (Khan et al. Citation2014; Eisenstein Citation2015).

3.11. Data quality

Data quality includes four aspects: accuracy, completeness, redundancy and consistency (Chen et al. Citation2014b). The intrinsic nature of complexity and heterogeneity of Big Data makes data accuracy and completeness difficult to identify and track, thus increasing the risk of ‘false discoveries’ (Lohr Citation2012). For example, social media data are highly skewed in space, time and demographics, and location accuracy varies from meters to hundreds of kilometers. In addition, data redundancy control and filtering should be conducted at the point of data collection in real-time (e.g. with sensor networks, Cuzzocrea, Fortino, and Rana Citation2013; Chen et al. Citation2014a). Finally, ensuring data consistency and integrity is challenging with Big Data especially when the data change frequently and are shared with multiple collaborators (Khan et al. Citation2014).

4. Cloud computing and other relevant technology landscape

This section reviews the technology challenges posed by Big Data from 12 different aspects. While some of these challenges (such as analysis, visualization and quality) exist before Big Data era, the 5Vs of Big Data bring the challenges to a new level as discussed above. Big Data poses unique challenges from several aspects including analysis, visualization, integration and architecture, due to the inherent high-dimensionality of geospatial data and the complex spatiotemporal relationships.

To address Big Data challenges (Sections 2 and 3), a variety of methodologies, techniques and tools () are identified to facilitate the transformation of data into value. Computing infrastructure, especially cloud computing, plays a significant role in information and knowledge extraction. Efficient handling of Big Data often requires specific technologies, such as massive parallel processing, distributed databases, data-mining grids, scalable storage systems, and advanced computing architectures, platforms, infrastructures and frameworks (Cheng, Yang, and Rong Citation2012; Zhang, Li, and Chen Citation2012). This section introduces these methodologies and technologies that underpin Big Data handling ().

Table 3. Addressing the Big Data challenges with emerging methodologies, technologies and solutions.

4.1. Data storage, management and model

4.1.1. Distributed file/storage system

To meet the storage challenge, an increasing number of distributed file systems (DFSs) are adapted with storage of small files, load balancing, copy consistency and de-duplication (Zhang and Xu Citation2013) in a network-shared files and storage fashion (Yeager Citation2003). The Hadoop Distributed File System (HDFS; Shvachko et al. Citation2010) is such a system running on multiple hosts, and many IT companies, including Yahoo, Intel and IBM, have adopted HDFS as the Big Data storage technology. Many popular cloud storage services powered by different DFSs, including Dropbox, iCloud, Google Drive, SkyDrive and SugarSync, are widely used by the public to store Big Data and overcome limited data storage on a single computer (Gu et al. Citation2014).

4.1.2. NoSQL database system

While contemporary data management solutions offer limited integration capabilities for the variety and veracity of Big Data, recent advances in cloud computing and NoSQL open the door for new solutions (Grolinger et al. Citation2013). The NoSQL databases match requirements of Big Data with high scalability, availability and fault tolerance (Chen et al. Citation2014a). Many studies have investigated emerging Big Data technologies (e.g. MapReduce frameworks, NoSQL databases) (Burtica et al. Citation2012). A Knowledge as a Service (KaaS) framework is proposed for disaster cloud data management, in which data are stored in a cloud environment using a combination of relational and NoSQL databases (Grolinger et al. Citation2013). Currently, NoSQL systems for interactive data serving environments and large-scale analytical systems based on MapReduce (e.g. Hadoop) are widely adopted for Big Data management and analytics (Witayangkurn, Horanont, and Shibasaki Citation2013). For example, the open-source Hive project integrates declarative query constructs into MapReduce-like software to allow greater data independence, code reusability and automatic query optimization.Footnote1 HadoopDB (Abouzeid et al. Citation2009) incorporates Hadoop and open-source DBMS software for data analysis, achieving the performance and efficiency of parallel databases yet still achieving the scalability, fault tolerance, and flexibility of MapReduce-based systems.

4.1.3. Search, query, indexing and data model design

Performance is critical in Big Data era, and accurately and quickly locating data requires a new generation of search engines and query systems (Miyano and Uehara Citation2012; Aji et al. Citation2013). Zhong et al. (Citation2012) proposed an approach to provide efficient spatial query processing over big spatial data and numerous concurrent user queries. This approach first organizes spatial data in terms of geographic proximity to achieve high Input/Output (I/O) throughput, then designs a two-tier distributed spatial index for pruning the search space, and finally uses an ‘indexing + MapReduce’ data processing architecture for efficient spatial query. Aji et al. (Citation2013) developed Hadoop-GIS, a scalable and high performance spatial data warehousing system on Hadoop, to support large-scale spatial queries. SpatialHadoop (Eldawy and Mokbel Citation2013) is an efficient MapReduce framework for spatial data queries and operations by employing a simple spatial high-level language, a two-level spatial index structure and basic spatial components built inside the MapReduce layer.

In fact, comprehensive and spatiotemporal indices are needed and developed to query and retrieve Big Data (Zhao et al. Citation2012). An example is the global B-tree or spatiotemporal indices for HDFS. Xia et al. (Citation2014) proposed a new indexing structure, the Access Possibility R-tree (APR-tree), to build an R-tree-based index using spatiotemporal query patterns. In a later study, Xia et al. (Citation2015b) further proposed a new indexing mechanism with spatiotemporal patterns integrated to support Big EO global access by defining heterogeneous user queries into different categories based on spatiotemporal patterns of queries, and using different indices for each category.

The spatiotemporal Big Data poses grand challenges in terms of the representation and computation of time geographic entities and relations. Correspondingly, Chen et al. (Citation2015) proposed spatiotemporal data model using a compressed linear reference (CLR) technique to transform time geographic entities of a road network in three-dimensional (3D) (x, y, t) space to two-dimensional (2D) CLR space. As a result, network time geographic entities can be stored and managed in a spatial database and efficient spatial operations and index structures can be employed to perform spatiotemporal operations and queries for network time geographic entities in CLR space.

While progress has been made in designing search engines, parallelizing queries, optimizing indices, and using new data models for better representing and organizing heterogeneous data in various formats, more studies are required to speed up the Big Data representation, access and retrieval under various file systems and database environments.

4.2. Data processing, mining and knowledge discovery

4.2.1. MapReduce (Hadoop) system

MapReduce is a parallel programming model for Big Data with high scalability and fault tolerance. The elegant design of MapReduce has prompted the implementation of MapReduce in different computing architectures, including multi-core clusters, clouds, Cubieboards and GPUs (Jiang et al. Citation2015). MapReduce also has become a primary choice for cloud providers to deliver data analytical services (Zhao et al. Citation2014). Many traditional algorithms and data processing in a single machine environment are transferred to the MapReduce platform (Kim Citation2014; Cosulschi, Cuzzocrea, and De Virgilio Citation2013). For example, Kim (Citation2014) analyzed an algorithm to generate wavelet synopses on the distributed MapReduce framework.

A specially designed MapReduce algorithm with grid index is proposed for polygon overlay processing, one of the important operations in GIS spatial analysis, and associated intersection computation time can be largely reduced by using the grid index (Wang et al. Citation2015). Cary (Citation2011) also showed how indexing spatial data can be scaled in MapReduce for data-intensive problems. To support GIS applications efficiently, Chen, Wang, and Shang (Citation2008) proposed a high performance workflow system MRGIS, a parallel and distributed computing platform based on MapReduce clusters. Nguyen and Halem (Citation2011) proposed a workflow system based on MapReduce framework for scientific data-intensive workflows, and a climate satellite data-intensive processing and analysis application is developed to evaluate the workflow system.

However, many Big Data applications with MapReduce require a rapid response time, and improving the performance of MapReduce jobs is a priority for both academia and industry (Gu et al. Citation2014) for different objectives, including job scheduling, resource allocation, memory issues and I/O bottlenecks (Gu et al. Citation2014; Jiang et al. Citation2015).

4.2.2. Parallel programming languages

While parallel computing (e.g. MapReduce) is widely used and effective in Big Data, an urgent priority is effective programming models and languages (Hellerstein Citation2010; Dobre and Xhafa Citation2014). Alvaro et al. (Citation2010) demonstrated that declarative languages substantially simplify distributed systems programming. Datalog-inspired languages have been explored with a focus on inherently parallel tasks in networking and distributed systems and have proven to be a much simpler family of languages for programming parallel and distributed software (Hellerstein Citation2010). Shook et al. (Citation2016) developed a parallel cartographic modeling language (PCML) implemented in Python to parallelize spatial data processing by extending the widely adopted cartographic modeling framework. However, most of geoscientists and practitioners are lacking of parallel programming skills which requires considering what, when and how to parallelize an application task. Therefore, an associated parallel programming language could be created to automatically produce parallelization code with much simple and less programming work, for example, the dragging and drawing different modules or clicking a set of buttons.

4.2.3. Statistical analyses, machine learning and data mining

Standard statistical and data mining tools for traditional data sets are not designed for supporting statistical and machine learning analytics for Big Data (Triguero et al. Citation2015) because many traditional tools (e.g. R) only run on a single computer. Many scholars have investigated parallel and scalable computing to support the most commonly used algorithms. For example, Triguero et al. (Citation2015) proposed a distributed partitioning methodology for nearest neighbor classification, and Tablan et al. (Citation2013) presented a unique, cloud-based platform for large-scale, natural language processing (NLP) focusing on data-intensive NLP experiments by harnessing the Amazon cloud. Zhang et al. (Citation2015b) proposed three distributed Fuzzy c-means (FCM) algorithms for MapReduce. In Apache Mahout, an open-source machine-learning package for Hadoop, many classic algorithms for data mining (e.g. naïve Bayes, Latent Dirichlet Allocation, LDA, logistic regression) are implemented as MapReduce jobs.

Novel approaches are also developed for big spatial data mining. Du, Zhu, and Qi (Citation2016), for example, introduced an interactive visual approach to detect spatial clusters from emerging spatial Big Data (e.g. geo-referenced social media data) using dynamic density volume visualization in a 3D space (two spatial dimensions and a third temporal or thematic dimension of interest). Liu et al. (Citation2016) proposed an unsupervised land use classification method with a new type of place signature based on aggregated temporal activity variations and detailed spatial interactions among places derived from the emerging Big Data, such as mobile phone records and taxi trajectories. Lary et al. (Citation2014) presented a holistic system called Holistics 3.0 that combines multiple big datasets and massively multivariate computational techniques, such as machine learning, coupled with geospatial techniques, for public health.

However, these systems and tools still lack the features of advanced machine learning, statistical analysis and data mining (Wang, Handurukande, and Nassar Citation2012). To extract meaningful information from the massive data, much effort should be devoted to develop comprehensive libraries and tools that are easy to use, capable of mine massive, multidimensional (especially temporal dimension) data.

4.2.4. Big Data analytics and visualization

Big Data analytics is an emerging research topic with the availability of massive storage and computing capabilities offered by advanced and scalable computing infrastructures. Baumann et al. (Citation2016) introduced the EarthServer, a Big Earth Data Analytics engine, for coverage-type datasets based on high performance array database technology, and interoperable standards for service interaction (e.g. OGC WCS and WCPS). The EarthServer provided a comprehensive solution from query languages to mobile access and visualization of Big Earth Data. Using sensor web event detection and geoprocessing workflow as a case study, Yue et al. (Citation2015) presented a spatial data infrastructure (SDI) approach to support the analytics of scientific and social data.

Visual analytics also emerges as a research topic to support scientific explorations of large-scale multidimensional data. Sagl, Loidl, and Beinat (Citation2012), for example, presented a visual analytics approach for deriving spatiotemporal patterns of collective human mobility from a vast mobile network traffic data set by relying entirely on visualization and mapping techniques.

Several tools and software have also been developed to support visual analytics, and to deliver deeper understanding. For example, the EGAN software was implemented to transform high-throughput, analytical results into a hypergraph visualizations (Paquette and Tokuyasu Citation2011). Gephi (http://gephi.github.io/) is an interactive visualization and exploration tool used to explore and manipulate networks and create dynamic and hierarchical graphs. Zhang et al. (Citation2015c) presented an interactive spatial data analysis and visualization system, TerraFly GeoCloud, to help end users visualize and analyze spatial data and share the analysis results through URLs (Zhang et al. Citation2015b). While progress has been made to leverage cloud infrastructure and data warehouse for Big Data visualization (Edlich, Singh, and Pfennigstorf Citation2013), it remains a challenge to support efficient and effective exploration of Big Data, especially for dynamic and hierarchical graphs, and social media data (Huang and Xu Citation2014).

4.2.5. Semantics and ontology-driven approaches

Semantic and ontologies make computer and web smarter to understand, manipulate and analyze a variety of data. Semantic has emerged as a common, affordable data model that facilitates interoperability, integration and monitoring of knowledge-based systems (Kourtesis, Alvarez-Rodríguez, and Paraskakis Citation2014). In recent years there has been an explosion of interest using semantics for the traditional data analysis since it helps get the real information from data. Especially for cross-domain data, semantic provides advantages to link data and interchange information. Semantic understanding of Big Data is one of the ultimate goals for scientists and is used in Big Data for taxonomies, relationships and knowledge representation (Jiang et al. Citation2016). Currently, research is being conducted to discover and utilize semantic and knowledge database to manage and mine Big Data. For example, Liu et al. (Citation2014) improved the recall and precision of geospatial metadata discovery based on Semantic Web for Earth and Environmental Terminology (SWEET) ontology, concept and relationships. Similar technologies could be used not only for geospatial metadata discover but also in other domains. Dantan, Pollet, and Taibi (Citation2013) developed a semantic-enabled approach to establish a common form to design research models. Choi, Choi, and Kim (Citation2014) proposed an ontology-based, access control model (Onto-ACM) to address the differences in the permitted access control among service providers and users. Semantic and ontological challenges remain for Big Data as the availability of content, ontology, development, evolution, scalability, multi-linguality and visualization are significantly increased (Benjamins et al. Citation2002).

4.3. Mobile data collection, computing and Near Field Communication

Mobile phones are playing a significant role in our lives, and mobile devices allow applications to collect and utilize Big Data and intensive computing resources (Soyata et al. Citation2012). In fact, large volumes of data on massive scales have been generated from GPS-equipped mobile devices, and associated methodologies and approaches are widely developed to incorporate such data for various applications, for example, human mobility studies, route recommendation, urban planning and traffic management. Huang and Wong (Citation2016), for example, developed an approach to integrate geo-tagged tweets posted from smartphones and tradition survey data from the American Community Survey to explore the activity patterns Twitter users with different socioeconomic status. Shelton, Poorthuis, and Zook (Citation2015) also provided a conceptual and methodological framework for the analysis of geo-tagged tweets to explore the spatial imaginaries and processes of segregation and mobility. Sainio, Westerholm, and Oksanen (Citation2015) introduced a fast map server to generate and visualize heat maps of popular routes online based on client preferences from massive track data collected by the Sports Tracker mobile application. Toole et al. (Citation2015) presented a flexible, modular and computationally efficient software system to transform and integrate raw, massive data of call detail records (CDRs) from mobile phones into estimates of travel demand.

Meanwhile, mobile devices are increasingly used to perform extensive computations and store Big Data. Cloud-based Mobile Augmentation (CMA), for example, recently emerged as the state-of-the-art mobile computing model to increase, enhance and optimize computing and storage capabilities of mobile devices. For example, Abolfazli et al. (Citation2014) surveyed the mobile augmentation domain and Soyata et al. (Citation2012) proposed the MObile Cloud-based Hybrid Architecture (MOCHA) for mobile-cloud computing applications including object recognition in the battlefield. Han, Liang, and Zhang (Citation2015) discussed integrating mobile sensing and cloud computing to form the singular idea of mobile cloud sensing. Edlich, Singh, and Pfennigstorf (Citation2013) developed an open-data platform to provide Data-as-a-Service (DaaS) in the cloud for mobile devices. However, mobile devices are still limited by either capacities (e.g. CPU, memory, battery) or network resources. Solutions have been investigated to connect mobile devices to other resources with more powerful computing or network capabilities, including computers and laptops to strengthen their ability to perform computing tasks (Hung, Tuan-Anh, and Huh Citation2013). Near Field Communication (NFC, Coskun, Ozdenizci, and Ok Citation2013) allows data exchange among terminals at close distances, data transmission at low power and with a bi-directional communication and more secure transmission (Joo, Hong, and Kim Citation2012). Most manufacturers (e.g. Apple, Samsung, Nokia) enhance the number of mobile phones with NFC, facilitating visualization of content collected via smart media and multimedia using NFC technology (Joo, Hong, and Kim Citation2012).

The massive growth of mobile devices significantly changes our computer and Internet usage, along with the dramatic development of mobile services, or mobile computing. Therefore, latest networking (e.g. NFC) and computing paradigms (e.g. cloud computing) should be leveraged to enable mobile devices to run applications of Big Data and intensive computing (Soyata et al. Citation2012).

4.4. Big Data computing and processing infrastructure

The features of Big Data drive research to discover comprehensive solutions for both computing and data processing, such as designing advanced architectures, and developing data partition and parallelization strategies to better leverage HPC. Liu (Citation2013) surveyed computing infrastructure for Big Data processing from aspects of architectural, storage and networking challenges and discussed emerging computing infrastructure and technologies. Nativi et al. (Citation2015) discussed the general strategies, focusing on the cloud-based discovery and access solutions, to address Big Data challenges of Global Earth Observation System of Systems (GEOSS). Zhao et al. (Citation2015) reviewed the current state of parallel GIS with respect to parallel GIS architectures, parallel processing strategies, relevant topics, and identified key problems and future potential research directions of parallel GIS. In fact, the widespread adoption of heterogeneous computing architecture (e.g. CPU, GPU) and infrastructure (e.g. local clusters, cloud computing) has substantially improved the computing power and raised optimization issues related to the processing of task streams across different architectures and infrastructures (Campa et al. Citation2014). The following sections discuss various advancements in computing and processing for Big Data.

4.4.1. Computing infrastructure

Parallel computing is one of the most widely used computing solutions to address the computational challenges of Big Data (Lin et al. Citation2013) through HPC cluster, supercomputer, or computing resources geographically distributed in different data centers (Huang and Yang Citation2011). To speed up the computation, this computing paradigm partitions a serial computation task into subtasks and uses multiple resources to run subtasks in parallel by leveraging different levels of parallelization from multi-cores, many cores, server rack, racks in a data center and globally shared infrastructure over the Internet (Liu Citation2013). For example, unmanned aerial vehicles (UAVs) images are processed efficiently to support automatic 3D building damage detection after an earthquake using a parallel processing approach (Hong et al. Citation2015). Pijanowski et al. (Citation2014) redesigned a Land Transformation Model to make it capable of running at large scales and fine resolution (30 m) using a new architecture that employs an HPC cluster.

Distributed computing addresses geographically dispersed Big Data challenges (Cheng, Yang, and Rong Citation2012) where applications are divided into many subtasks. A variety of distributed computing mechanisms (e.g. HPC, Grid) distribute user requests and achieve optimal resource scheduling by implementing distributed collaborative mechanisms (Huang and Yang Citation2011; Gui et al. Citation2016). Volunteer computing has become a popular solution for Big Data, and large-scale computing and support volunteers around the world contribute their computing resources (e.g. personal computers) and storage to help scientists run applications (Anderson and Fedak Citation2006). Compared to HPC clusters or grid computer pool, computing resources from volunteers are free but are much less reliable for tasks with specific deadlines. For no time-constraint tasks (e.g. QMachine, weather@home, Massey et al. Citation2014), computing resources from volunteers is a viable and effective methodology.

Cloud computing enhances the sharing of information, applying modern analytic tools and managing controlled access and security (Radke and Tseng Citation2015). It provides a flexible stack of massive computing, storage and software services in a scalable manner and at low cost. As a result, more scientific applications traditionally handled by HPC or grid facilities are deployed on the cloud. Examples of leveraging cloud computing include supporting geodatabase management (Cary et al. Citation2010), retrieving and indexing spatial data (Wang et al. Citation2009), running climate models (Evangelinos and Hill Citation2008), supporting dust storm forecasting (Huang et al. Citation2013a), optimizing spatial web portals (Xia et al. Citation2015b) and even delivering model as a service (MaaS) for geosciences (Li et al. Citation2014). At the same time, various strategies and experiments are developed to better leverage cloud resources. For example, Campa et al. (Citation2014) investigated the scaling of pattern-based, parallel applications from local CPU/GPU-clusters to a public cloud CPU/GPU infrastructure and demonstrated that CPU-only and mixed CPU/GPU computations are offloaded to remote cloud resources with predictable performance. Gui et al. (Citation2014) recommend a mechanism for selecting the best public cloud services for different applications.

However, only limited geoscience applications have been developed to leverage the parallel computing and emerged cloud platform. Therefore, much effort should be devoted to identify applications of massive impact, of fundamental importance, and requiring the latest parallel programming and computing paradigm (Yang et al. Citation2011a).

4.4.2. Management and processing architecture

Big Data requires high performance data processing tools for scientists to extract knowledge from the unprecedented volume of data (Bellettini et al. Citation2013).To leverage cloud computing for Big Data processing, Kim, Kim, and Kim (Citation2013) offered a framework to access and integrate distributed data in a heterogeneous environment and deployed it in a cloud environment. Somasundaram et al. (Citation2012) proposed a data management framework for processing Big Data in the private cloud infrastructure. Similarly, Big Data processing and analyses platform are widely used for massive data storing, processing, displaying and sharing based on cloud computing (Liu Citation2014) or a combination of traditional clusters and cloud infrastructure (Bellettini et al. Citation2013), MapReduce data processing framework (Ye et al. Citation2012; Lin et al. Citation2013) and open standards and interfaces (e.g. OGC standards; Lin et al. Citation2013). Krämer and Senner (Citation2015), for example, proposed a software architecture that allows for processing of large geospatial data sets in the cloud by supporting multiple data processing paradigms such as MapReduce, in-memory computing or agent-based programming. However, existing data management and processing systems still fall short in addressing many challenges in processing large-scale data, especially stream data (e.g. performance, data storage and fault tolerance) (Zhang et al. Citation2015a). Therefore, an open-data platform, architecture or framework needs to be developed to leverage latest storage, computing and information technologies in managing, processing and analyzing multi-sourced, heterogeneous data in real-time.

4.4.3. Remote collaboration

To process multi-sourced, distributed Big Data, remote collaboration involving Big Data exchange and analysis among distant locations for various Computer-Aided Design and Engineering (CAD/E) applications have been explored (Belaud et al. Citation2014). Fang et al. (Citation2014) discussed the need for innovation in collaboration across multi-domains of CAD/E and introduced a lightweight computing platform for simulation and collaboration in engineering, 3D visualization and Big Data management. To handle massive biological data generated by high-throughput experimental technologies, the BioExtract Server (bioextract.org) is designed as a workflow framework to share data extracts, analytic tools and workflows with collaborators (Lushbough, Gnimpieba, and Dooley Citation2015). Still, more studies would contribute more practical experiences to build a remote collaboration platform provides scientists an ‘easy-to-integrate’ generic tool, thus enabling worldwide collaboration and remote processing for any kind of data.

4.4.4. Cloud monitoring and tracking

Real-time monitoring of cloud resources is crucial for a variety of tasks including performance analysis, workload management, capacity planning and fault detection (Andreolini et al. Citation2015). Progress has been made in cloud system monitoring and tracking. For example, Yang et al. (Citation2015a) investigated the challenges posed by industrial Big Data and complex machine working conditions and proposed a framework for implementing cloud-based, machine health prognostics. To limit computational and communication costs and guarantee high reliability in capturing relevant load changes, Andreolini et al. (Citation2015) presented an adaptive algorithm for monitoring Big Data applications that adapts the intervals of sampling and frequency of updates to data characteristics and administrator needs. Bae et al. (Citation2014) proposed an intrusive analyzer that detects interesting events (such as task failure) occurring in the Hadoop system.

4.4.5. Energy efficiency and cost management

Big Data coupled with cloud computing pose other challenges, notably energy efficiency and cost management. High energy consumption is a major obstacle for achieving green computing with large-scale data centers (Sun et al. Citation2014). Noticeable efforts are proposed to tackle this issue including GreenHDFS, GreenHadoop and Green scheduling (Kaushik and Bhandarkar Citation2010; Kaushik, Bhandarkar, and Nahrstedt Citation2010; Zhu, Shu, and Yu Citation2011; Goiri et al. Citation2012; Hartog et al. Citation2012). However, efficient energy management remains a challenge. While cloud computing provides virtually unlimited storage, computing and networking (Yang et al. Citation2011a; Ji et al. Citation2012; Yang, Xu, and Nebert Citation2013), obtaining optimal cost and high efficiency are elusive (Pumma, Achalakul, and Li Citation2012). Research to address this issue is emerging from different facets, including cloud cost modeling (Gui et al. Citation2014), resource auto- and intelligent-scaling (Röme Citation2010; Jam et al. Citation2013; Xia et al. Citation2015b), location-aware smart job scheduling and workflow (Mao and Humphrey Citation2011; Lorido-Botrán, Miguel-Alonso, and Lozano Citation2012; Li et al. Citation2015b; Gui et al. Citation2016) and hybrid cloud solutions (Shen et al. Citation2011; Bicer, Chiu, and Agrawal Citation2012; Xu Citation2012).

4.5. Big Data and cloud solutions for geospatial sciences

While cloud computing emerged as a potential solution to support computing and data-intensive applications, several barriers hinder transitioning from traditional computing to cloud computing (Li et al. Citation2015b). First, the learning curve for geospatial scientists is steep when it comes to understanding the services, models and cloud techniques. Second, intrinsic challenges brought by cloud infrastructure (e.g. communication overhead, optimal cloud zones) have yet to be addressed. And third, processing massive data and running models involves complex procedures and multiple tools, requiring more flexible and convenient cloud services. This section discusses the solutions used to better leverage cloud computing for addressing Big Data problems in the geospatial sciences.

4.5.1. Anything as a service (XaaS) for geospatial data and models

There are emerging features to ease the challenge of leveraging cloud computing and facilitate the use of widely available models and services. Notable examples are web service composition (Liu et al. Citation2015), web modeling (Geller and Turner Citation2007), application as a service (Lushbough, Gnimpieba, and Dooley Citation2015), MaaS (Li et al. Citation2014) and workflow as a service (WaaS; Krämer and Senner Citation2015). The effectiveness of these features is based on ‘hiding’ from the scientific community the complexity of computing, data and the model and providing an easy-to-use interface for accessing the underlying modeling and computing infrastructure. Web and model service dynamic composition is a key technology and a reliable method for creating value-added services by composing available services and applications (Liu et al. Citation2015). Chung et al. (Citation2014) presented CloudDOE to encapsulate technical details behind a user-friendly graphical interface, thus liberating scientists from performing complicated operational procedures. Uncinus allows researchers easy access to cloud computing resources through web interfaces (Lushbough, Gnimpieba, and Dooley Citation2015). By utilizing cloud computing services, Li et al. (Citation2014) extended the traditional Model Web concept (Geller and Turner Citation2007) by proposing MaaS.

Krämer and Senner (Citation2015) proposed a software architecture containing a web-based user interface where domain experts (e.g. GIS analysts, urban planners) define high-level processing workflows using a domain-specific language (DSL) to generate a processing chain specifying the execution of workflows on a given cloud infrastructure according to user-defined constraints. The proposed architecture is WaaS. Recent advancements in cloud computing provide novel opportunities for scientific workflows. Li et al. (Citation2015b) offered a scientific workflow framework for big geoscience data analytics by leveraging cloud computing, MapReduce and Service-Oriented Architecture (SOA). Zhao et al. (Citation2012) presented the Swift scientific workflow management system as a service in the cloud. Their solution integrates Swift with the OpenNebula Cloud platform. Chung et al. (Citation2014) presented CloudDOE, a platform-independent software in Java to encapsulate technical details behind a user-friendly graphical interface.

However, general cloud platforms are not designed to support and integrate geoscience algorithms, applications and models, which might be data, computation, communication or user intensive, or a combination of two or even three of these intensities (Yang et al. Citation2011a). Therefore, an integrated framework should be developed to enable XaaS that can synthesize computing and model resources across different organizations, and deliver these resources to the geoscience communities to explore, utilize and leverage them efficiently and easily.

4.5.2. Computing resource auto-provision, scaling and scheduling

The large-scale data requirement of collaboration, efficient data processing and the ever increasing disparate forms of user applications are not only making data management more complex but are bringing more challenges for the resource auto-provision, scaling and scheduling of the beneath computing infrastructure (Gui et al. Citation2016). With the development of cloud technologies and extensive deployment of cloud platforms, computing facilities are mostly created in the format of virtualized resources or virtual machines (VMs). The implementation of an efficient and scalable computing platform with such resources is a challenge and important research topic than the traditional computing environments (Wu, Chen, and Li Citation2015; Li et al. Citation2016b). Many strategies and algorithms are proposed for cloud resource optimization, scalability and job/workflow improvement, and these are addressed in Sections 5.1, 5.2 and 5.3.

4.5.3. Optimizing Big Data platforms with spatiotemporal principles

The optimization strategy for designing, developing and deploying geospatial applications using spatiotemporal principles are discussed in Yang et al. (Citation2011a, Citation2011b). Spatiotemporal patterns can be observed from the following: (i) physical location of computing and storage resources; (ii) distribution of data; (iii) dynamic and massive concurrent access of users at different locations and times and (iv) study area of the applications. A key technique for making Big Data applications perform well is location, time and data awareness by leveraging spatiotemporal patterns. Kozuch et al. (Citation2009) demonstrated that location-aware applications outperform those with no location-aware capability by factor of 3–11 in the performance. Based on context awareness of data, platform and services, Feng et al. (Citation2014) presented a tourism service mechanism that dynamically adjusts the service mode based on the user context information and meets the demand for tourism services in conjunction with the individual user characteristics.

The 5V’s Big Data challenges also call for new methods to optimize data handling and analysis. Correspondingly, Xing and Sieber (Citation2016) proposed a land use/land cover change (LUCC) geospatial cyberinfrastructure to optimize Big Data handling at three levels: (1) employ spatial optimization with graph-based image segmentation, (2) use a spatiotemporal Atom Model to temporally optimize the image segments for LUCC and (3) spatiotemporally optimize the Big Data analysis. Other examples include: (1) Yang et al. (Citation2014) proposed a novel technique for processing big graphical data on the cloud by compressing Big Data with its spatiotemporal features; and (2) Xia et al. (Citation2015a) developed a spatiotemporal performance model to evaluate the quality of distributed geographic information services by integrating cloud computing and spatiotemporal principles at a global scale.

5. Current status of tackling Big Data challenges with cloud computing

While the Big Data challenges can be tackled by many advanced technologies, such as HPC, cloud computing is the most elusive and important. This section reviews the status of using cloud computing to address the Big Data challenges.

5.1. On-demand resource provision

The volume and velocity challenges of Big Data require VM creation on-demand. Autonomous detection of the velocity for provisioning VMs is critical (Baughman et al. Citation2015) and should consider both optimal cost and high efficiency in task execution (Pumma, Achalakul, and Li Citation2012). Research is being conducted to understand the applications and relevant Big Data changing patterns to form a comprehensive model to predict system behavior as the usage patterns evolve and working loads change (Castiglione et al. Citation2014). For example, Pumma, Achalakul, and Li (Citation2012) proposed an automatic mechanism to allocate the optimal numbers of resources in the cloud, and Zhang et al. (Citation2015b) proposed a task-level adaptive MapReduce framework for scaling up and down as well as effectively using computing resources. Additionally, many workload prediction and resource-allocation models and algorithms provide or improve auto-scaling and auto-provisioning capability. For example, Zhang et al. (Citation2015a) applied two streaming, workload prediction methods (i.e. smoothing, Kalman filters) to estimate the unknown workload characteristics and achieved a 63% performance improvement. Zhang, Chen, and Yang (Citation2015) offered a nodes-scheduling model based on Markov chain prediction for analyzing big streaming data in real time. Pop et al. (Citation2015) posited the deadline as the limiting constraint and proposed a method to estimate the number of resources needed to schedule a set of aperiodic tasks, taking into account execution and data transfer costs.

However, more research is necessary for improving auto-scaling and auto-provisioning capability under cloud architecture to address Big Data challenges. For example, advanced VM provision strategies should be designed to handle concurrent execution of multiple applications on the same cloud infrastructure.

5.2. Scheduling

Job scheduling effectively allocates computing resources to a set of different tasks. However, scheduling is a challenge in automatic and dynamic resource provisioning for Big Data (Vasile et al. Citation2014; Gui et al. Citation2016). Zhan et al. (Citation2015) proposed several research directions for cloud resource scheduling, including real-time, adaptive dynamic, large-scale, multi-objective, and distributed and parallel scheduling. Sfrent and Pop (Citation2015) showed that under certain conditions one can discover the best scheduling algorithm. Hung, Aazam, and Huh (Citation2015) presented a cost- and time-aware genetic scheduling algorithm to optimize performance. An energy-efficient, resource scheduling and optimization framework is also proposed to enhance energy consumption and performance (Sun et al. Citation2015). As one of the most popular frameworks for Big Data processing, Hadoop MapReduce is optimized (e.g. task partitioning, execution) to accommodate better Big Data processing (Slagter et al. Citation2013; Gu et al. Citation2014; Hsu, Slagter, and Chung Citation2015; Tang et al. Citation2015). Progress on scheduling was made in geospatial fields as well. Kim, Tsou, and Feng (Citation2015), for example, implemented a high performance, parallel agent-based scheduling model that has the potential to be integrated in spatial decision-support systems and to help policy-makers understand the key trends in urban population, urban growth and residential segregation. However, research effort is need on developing more sophisticated scheduling algorithms that can leverage the spatial relationships of data, computing resources, application and users to optimize the task execution process and the resource utilization of the underlying computing infrastructure.

5.3. Scalability

Scalability on distributed and virtualized processors is and has been a bottleneck for leveraging cloud computing to process Big Data. Feller, Ramakrishnan, and Morin (Citation2015) investigated cloud scalability and concluded: (i) co-existing VMs decrease the disk throughput; (ii) performance on physical clusters is significantly better than that on virtual clusters; (iii) performance degradation due to separation of the services depends on the data-to-compute ratio and (iv) application completion progress correlates with the power consumption, and power consumption is application specific. Various cloud performance benchmarks and evaluations (Nazir et al. Citation2012; Huang et al. Citation2013b) demonstrated that balancing the number and size of VMs as a function of the specific applications is critical to achieve optimal scalability for geospatial Big Data applications. Ku, Choi, and Min (Citation2014) analyzed four cloud performance-influencing factors (i.e. number of query statements, garbage collection intervals, quantity of VM resources and virtual CPU assignment types). Accordingly, different strategies improve scalability and achieve cost-effectiveness while handle Big Data processing tasks with scalable and elastic service to disseminate data (Wang and Ma Citation2015). For example, Ma et al. (Citation2015) proposed a scalable and elastic total order service for content-based publish/subscribe using the performance-aware provisioning technique to adjust the scale of servers to the churn workloads.

With the development of various clouds and each cloud has its unique advantages and disadvantages (Huang and Rust Citation2013; Gui et al. Citation2014), the hybrid cloud integrating multiple clouds (e.g. public and private clouds) would be a new trend while adopting cloud solutions to make full use of different clouds. Correspondingly, it would be also necessary to allocate computing resources and distribute the tasks in a way that improves the scalability and performance of networked computing nodes coming from a hybrid environment.

5.4. Data locality

System I/O poses a bottleneck for Big Data processing in the cloud (Kim et al. Citation2014) especially when data and computing are geographically dispersed. Researchers either move data to computing resource or move the computing resources to the data (Jayalath, Stephen, and Eugster Citation2014). Considering the spatiotemporal collocation in the process of scheduling to allocate resources and move data significantly improves system performance and addresses I/O issues (Hammoud and Sakr Citation2011; Rasmussen et al. Citation2012; Yang et al. Citation2013; Kim et al. Citation2014; Feller, Ramakrishnan, and Morin Citation2015). For example, to achieve higher locality and reduce the volume of shuffled data, Kim et al. (Citation2014) developed a burstiness-aware I/O scheduler to enhance the I/O performance up to 23%. Using data locality, grouped slave nodes, and k-means algorithm to hybrid Mapreduce clusters with low intra-communication and high intra-communication, Yang, Long, and Jiang (Citation2013) observed a 35% performance gain. Hammoud and Sakr (Citation2011) described Locality-Aware Reduce Task Scheduler for improving MapReduce performance. Zhang et al. (Citation2014) presented a data-aware programming model by identifying the most frequently shared data (task- and job-level) and replicating these data to each computing node for data locality.

In many scenarios, input data are geographically distributed among data centers, and moving all data to a single center before processing is prohibitive (Jayalath, Stephen, and Eugster Citation2014). In such a distributed cloud system, efficiently moving the data is important to avoid network saturation in Big Data processing. For example, Nita et al. (Citation2013) suggest a scheduling policy and two greedy scheduling algorithms to minimize individual transfer times. Sandhu and Sood (Citation2015b) proposed a global architecture for QoS-based scheduling for Big Data applications distributed over cloud data centers at both coarse and fine grained levels.

5.5. Cloud computing for social media and other streamed data