ABSTRACT

This study investigates misregistration issues between Landsat-8/ Operational Land Imager and Sentinel-2A/ Multi-Spectral Instrument at 30 m resolution, and between multi-temporal Sentinel-2A images at 10 m resolution using a phase-correlation approach and multiple transformation functions. Co-registration of 45 Landsat-8 to Sentinel-2A pairs and 37 Sentinel-2A to Sentinel-2A pairs were analyzed. Phase correlation proved to be a robust approach that allowed us to identify hundreds and thousands of control points on images acquired more than 100 days apart. Overall, misregistration of up to 1.6 pixels at 30 m resolution between Landsat-8 and Sentinel-2A images, and 1.2 pixels and 2.8 pixels at 10 m resolution between multi-temporal Sentinel-2A images from the same and different orbits, respectively, were observed. The non-linear random forest regression used for constructing the mapping function showed best results in terms of root mean square error (RMSE), yielding an average RMSE error of 0.07 ± 0.02 pixels at 30 m resolution, and 0.09 ± 0.05 and 0.15 ± 0.06 pixels at 10 m resolution for the same and adjacent Sentinel-2A orbits, respectively, for multiple tiles and multiple conditions. A simpler 1st order polynomial function (affine transformation) yielded RMSE of 0.08 ± 0.02 pixels at 30 m resolution and 0.12 ± 0.06 (same Sentinel-2A orbits) and 0.20 ± 0.09 (adjacent orbits) pixels at 10 m resolution.

1. Introduction

Many applications in climate change and environmental and agricultural monitoring rely heavily on the exploitation of multi-temporal satellite imagery. Multi-temporal satellite images can help to identify and analyze changes in land cover land use change (LCLUC) (Justice, Gutman, and Vadrevu Citation2015), to capture significant trends in land surface properties, for example, greenness (Ju and Masek Citation2016), or to discriminate specific crop types (Shelestov et al. Citation2017; Skakun et al. Citation2016), that cannot be identified with a single date image. In order to solve these problems more efficiently at high spatial resolutions (30 m), combined use of freely available Landsat-8 and Sentinel-2 images can offer high temporal frequency of about 1 image every 3–5 days globally.

Landsat-8 was launched in 2013 within the Landsat Program, a joint effort between the U.S. Geological Survey (USGS) and NASA (Roy et al. Citation2014). The Operational Land Imager (OLI) and Thermal Infrared Sensor (TIRS) instruments onboard the Landsat-8 satellite capture images of the Earth’s surface in 11 spectral bands of the electromagnetic spectrum at 30 m spatial resolution (15 m for panchromatic band and 100 m for thermal infrared). The swath of Landsat-8 scene is approximately 185 km, allowing global coverage of the Earth’s surface every 16 days. To refine image geolocation, the Landsat-8 processing uses ground control points automatically derived from the Global Land Survey (GLS) Landsat images (Gutman et al. Citation2013). The Sentinel-2A satellite was launched in 2015 within the European Copernicus program (Drusch et al. Citation2012). Sentinel-2A has a Multi-Spectral Instrument (MSI) that acquires images of the Earth’s surface in 13 spectral bands at 10 m, 20 m and 60 m spatial resolution. The swath of a Sentinel-2A scene is approximately 290 km, allowing global coverage of the Earth’s surface every 10 days. The launch of an identical Sentinel-2B satellite will further reduce revisit time to 5 days globally. Both Sentinel-2A/B satellites will use a Global Reference Image (GRI) derived from orthorectified Sentinel-2 cloud-free images to improve geolocation accuracy and repeatability to meet the requirements of multi-temporal registration of 3 m for 10 m bands (Dechoz et al. Citation2015). The GRI dataset is currently under development and is expected to be completed in 2018 (Storey et al. Citation2016).

Recent studies are focusing on the combined use of Landsat-8 and Sentinel-2A images to increase temporal coverage; however misregistration issues between Landsat-8 and Sentinel-2A have already been identified (Storey et al. Citation2016). It has been found that the OLI and MSI misregistration can exceed one 30-meter pixel and, therefore, it is recommended to exploit image registration approaches to further improve alignment between Landsat-8 and Sentinel-2A images (Storey et al. Citation2016). These approaches should be automatic and computationally efficient in order to perform alignment on a global basis, have sub-pixel accuracy and effectively deal with temporal changes, so these approaches can be further applied with GLS and GRI.

There have been many studies carried out to develop automatic satellite image registration methods (e.g. Gao, Masek, and Wolfe Citation2009; Le Moigne, Netanyahu, and Eastman Citation2011; Zitova and Flusser Citation2003). The general image-to-image registration workflow consists of automatic generation of control points (CPs) between the reference (or master) and sensed (or slave) images, building and evaluating a spatial transformation (mapping function) that aligns the reference and sensed images, and warping the sensed image with radiometric transformation. Area-based and feature-based approaches are used to automatically derive CPs. Area-based methods, also referred to as correlation-like or template matching, find correspondence between reference and sensed images through a similarity measure, for example cross-correlation (in spatial or frequency domain) or mutual information. These measures are usually applied on a sliding window basis to derive a dense set of CPs. Feature-based methods aim to find distinctive features on images, for example edges, contours, line intersections, closed boundary regions, and then match the derived features to find correspondences between reference and sensed images. The derived CPs are used to construct and evaluate a mapping function that maps points from the reference image to points in the sensed image. Examples of the mapping function include translation, affine transformation, high-order polynomials, radial basis functions (RBFs), and elastic registration. And finally, radiometric transformation (nearest neighbor, bilinear and splines) should be specified to warp the sensed image to the reference one.

The problem of Landsat-8/OLI and Sentinel-2A/MSI misregistration has already been addressed in several previous studies (Barazzetti, Cuca, and Previtali Citation2016; Yan et al. Citation2016). Yan et al. (Citation2016) used a hierarchical feature-based matching approach to find CPs through construction of image pyramids at various spatial resolutions and an area-based matching approach to further refine and reject non-reliable CPs. Translation, affine transformation and second order polynomial functions were evaluated in the study for three pairs of Landsat-8 and Sentinel-2A images with affine transformation giving the best results in terms of root mean square error (RMSE) of 0.3 pixels at 10 m resolution. Barazzetti, Cuca, and Previtali (Citation2016) utilized standard software packages to study misregistration between Landsat-8 and Sentinal-2A, and achieved RMSE of up to 1.2 pixels at 15 m spatial resolution.

In this paper, we explore a phase-correlation approach to automatically generate a dense grid of CPs when registering Landsat-8 to Sentinel-2A images, as well as multiple mapping functions including those based on machine learning approaches. We also address the issue of multi-temporal misregistration between Sentinel-2A images (for assessment of registration accuracy of multi-temporal Landsat-8 images, we refer readers to Storey, Choate, and Lee (Citation2014)). Our analysis shows misregistration magnitudes of up to 3 pixels at 10 m resolution can be observed. This issue has not been reported in previous studies to our knowledge (except Sentinel-2 Data Quality Reports, see ESA (Citation2016a), and reports delivered at the LCLUC Multi-Source Land Imaging (MuSLI) Science Team Meeting 2016, http://lcluc.umd.edu/meetings/2016-lcluc-spring-science-team-meeting-18-19-april-and-musli-science-team-meeting-20-21), and should be further addressed especially for users dealing with Sentinel-2A time-series at 10 m spatial resolution. As in Yan et al. (Citation2016), we use near-infrared (NIR) bands from Landsat-8 (band 5, 0.85–0.88 um) and Sentinel-2A (band 08, 0.842 um) to find CPs on reference and sensed images, since the NIR provides a wide dynamic range of values for multiple land cover types and is less sensitive to atmospheric effects. Co-registration of Landsat-8 to Sentinel-2A was performed at 30 m spatial resolution while co-registration between Sentinel-2A images was undertaken at 10 m spatial resolution.

2. Data description

2.1. Landsat-8 and Sentinel-2A products description

We used a standard Landsat-8 Level-1 terrain corrected (L1 T) product distributed by USGS through the EarthExplorer system (Roy et al. Citation2014). The product is provided in the World-wide Reference System (WRS-2) of path and row coordinates. The size of the Landsat-8 scene is approximately 185 km × 180 km. The product is provided with the corresponding metafile to convert digital numbers (DNs) into the top-of-atmosphere reflectance (TOA) values. For Sentinel-2, we used a standard Level-1C (L1C) product which is radiometrically and geometrically corrected with ortho-rectification, and provided with the TOA reflectance values (ESA Citation2016b). The product is delivered in tiles, or granules, of approximately 110 km × 110 km size. Both Landsat-8 L1 T and Sentinel-2A L1C products are provided in the Universal Transverse Mercator (UTM) projection with the World Geodetic System 1984 (WGS84) datum. Each Sentinel-2 tile is assigned a UTM zone, and overlapping tiles covering the same geographic region might have different UTM zones assigned. The Sentinel-2 tiling grid is referenced to the U.S. Military Grid Reference System. A tile identifier consists of five signs: two numbers and three letters, for example, 20HNH. The first two numbers in the tile identifier correspond to the UTM zone while the remaining three letters correspond to the tile position. ESA provides a kml file with tile coverage and their identifiers (https://sentinel.esa.int/documents/247904/1955685/S2A_OPER_GIP_TILPAR_MPC__20151209T095117_V20150622T000000_21000101T000000_B00.kml).

It should be also noted that pixel value is for the center of the pixel for the Landsat-8 L1 T product, while it is for the upper left corner of the pixel for the Sentinel-2A L1C product. In this work, we used Sentinel-2 tile system as a reference, that is, Landsat-8 data were subset for the corresponding Sentinel-2 tile with the nearest neighborhood resampling and co-registered to the reference Sentinel-2 scene using the proposed approach. Depending on the application and user needs other reference systems can be specified, for example Web Enabled Landsat Data (Roy et al. Citation2010) and this proposed approach can be easily adapted to it.

2.2. Landsat-8 and Sentinel-2A test data description

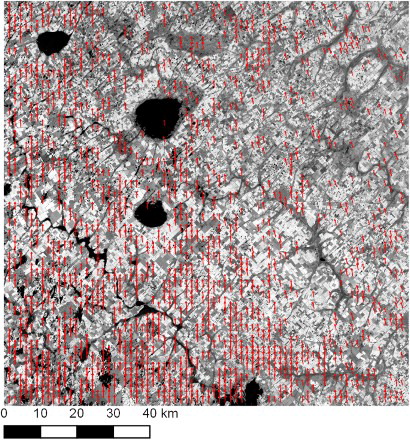

This study was carried out for five Sentinel-2 tiles in three countries: Argentina (Sentinel-2 tile grid numbers 20HNH and 20HPH), U.S.A. (14SKF) and Ukraine (36UUU, 34UFU) (see for examples). The selected tiles cover intensive agriculture regions, where changes are rapid due to seasonal crop development, and a mountain region in the Carpathians (tile 34UFU) where surface elevation varies approximately from 100 m to 1000 m. All selected regions feature considerable difference in Landsat-8 and Sentinel-2A image acquisition dates ranging from 4 July 2015 to 25 July 2016, as well as variable cloud conditions.

Figure 1. Examples of TOA true color images acquired by sentinel-2A/MSI (left) and Landsat-8/OLI (right): (A) tile 20HNH over Argentina, dates of Sentinel-2A/MSI and Landsat-8/OLI acquisitions are 2015358 and 2015361 respectively; (B) tile 20HPH over Argentina, acquisitions dates are 2015358 and 2015258; (C) tile 14SKF over Texas, U.S.A., dates of acquisitions are 2016012 and 2016104; (D) tile T36UUU over Ukraine, acquisition dates are 2016169 and 2016108; (E) tile T34UFU over the Carpathian Mountains, Ukraine, acquisitions dates are 2016198 and 2016063.

Overall co-registration of 45 Landsat-8 to Sentinel-2A pairs and 37 Sentinel-2A to Sentinel-2A pairs were analyzed. gives details on the Landsat-8 and Sentinel-2A imagery used in the study.

Table 1. Description of data used in the study.

3. Methodology

3.1. General overview

The proposed approach follows the general concept of automatic image-to-image registration outlined in (Zitova and Flusser Citation2003). It has the following steps (): image pre-processing; automatic identification of CPs; filtering of CPs; building and evaluating a transformation, and image warping.

3.2. Image pre-processing

Landsat-8 images were converted from DNs to TOA reflectance values using calibration coefficients in the metadata file, so both Landsat-8 and Sentinel-2A images were generated with TOA reflectance values. The steps outlined in this and following subsections will also be valid, should co-registration be applied for atmospherically corrected products (Vermote et al. Citation2016).

For Landsat-8 to Sentinel-2A co-registration, Sentinel-2A band 08 (NIR) was resampled from 10 m to 30 m using averaging, and Landsat-8 data were subset to the corresponding Sentinel-2A tile using the nearest neighborhood resampling technique. Sentinel-2A to Sentinel-2A co-registration was performed at the original 10 m spatial resolution without further resampling.

3.3. Automatic generation of CPs

In this study, we exploited a phase-only correlation image matching method introduced by Guizar-Sicairos, Thurman, and Fienup (Citation2008). It uses a cross-correlation approach in the frequency domain by means of the Fourier transform and exploits a computationally efficient procedure based on non-linear optimization and discrete Fourier transforms to detect sub-pixel shifts between reference and sensed images. For the detailed description of the algorithm, we refer readers to Guizar-Sicairos, Thurman, and Fienup (Citation2008).

The phase-correlation algorithm allows detection of translation between reference and sensed images, and therefore is routinely applied using a moving square window. The size of the window and the step are selected empirically. Window size should be large enough to capture similarities on the reference and sensed images, and small enough to have a dense grid of CPs to accurately construct a transform function. In this study, window size and step were selected 100 and 50 pixels, respectively when co-registering Landsat-8 to Sentinel-2A, and 64 and 32 when co-registering multi-temporal Sentinel-2A images.

Compared to other area-based methods (e.g. cross-correlation in the spatial domain), the phase-correlation image usually contains a sharp peak corresponding to the dominant shift between images, and is usually more robust to temporal changes between reference and sensed images (Kravchenko, Lavrenyuk, and Kussul Citation2014). Compared to feature-based methods, the phase-correlation approach with a moving window allows detection of a dense grid of CPs, especially in cases where features cannot be reliably identified and detected, including at the 30 m spatial resolution imagery.

No matter what method is applied for automatic generation of CPs, filtering is necessary to remove unreliable CPs. First, a peak cross-correlation normalized magnitude is used for initial rejection of CPs. In our study, this value was set to 0.5. After that, a RANdom SAmple Consensus (RANSAC) algorithm (Fischler and Bolles Citation1981) is run for the linear transformation model to detect inliers and outliers. The RANSAC is a widely used algorithm in computer vision and image processing to detect strong outliers with large deviations (Brown and Lowe Citation2007). However, one should be careful to not aggressively remove outliers that can be actually inliers. In our study, we ran a conservative approach for removing outliers, that is, the RANSAC parameters were set in such a way to remove only outliers with a high confidence level. In our particular case, the number of RANSAC trials was set to 100, and a confidence level for selecting outliers was set to 0.99. The detected inlier CPs are further used to construct and evaluate a transformation function.

3.4. Transformation function

The goal of constructing a transformation function F() is to find correspondence between points in the reference image xr = (xr, yr) and points in the sensed image xs = (xs, ys):(1) Function F() is constructed using the set of CPs identified in the previous steps. In this study we compared three different approaches to creating the transformation function: polynomial models, RBFs and random forest (RF) regression trees (see below for details). Regardless of transformation approach, all available CPs were randomly split into a training (calibration) set (80%) and a testing set (20%). The training set was used to build the model and identify its parameters, while the testing set was used to evaluate the model on independent data. We will denote CPs from the testing set with

and

where

and L is the number of points with corresponding shifts:

(2) The quality of the transformation is evaluated using a RMSE between reference values

and estimated values

by transformation function F() using the testing set:

(3)

(4)

3.4.1. Polynomial models

Selection of the type of the transformation function depends on a-priori knowledge of expected geometric deformations and distortions between reference and sensed images, and required registration accuracy (Zitova and Flusser Citation2003). Polynomial functions of the n-th degree have the following form:(5)

(6) In the case of n = 0, the models (5)–(6) are simple translation models where the same values of shift, namely

and

, are applied in x and y directions, respectively:

(7)

(8) A linear model, also referred to as affine, can be reduced to the following form:

(9)

(10) Model parameters

and

are estimated through the ordinary least square method, by minimizing the sum of the squares of the differences between predicted values of the model and reference values.

3.4.2. Radial basis functions

The transformation function based on RBFs has the following form (Zitova and Flusser Citation2003):(11)

(12) where

is the kernel function with parameters (centers)

and

and weights

and

.

In this study, we used two types of kernels, namely Gaussian and thin-plate splines (TPS):(13)

(14) There are several ways of selecting a set of centers

: randomly, on a regular grid, or adaptively through clustering. We used the k-means clustering approach (Forgy Citation1965; Lloyd Citation1982) to adaptively select centers in the models (11)–(12). We varied number of clusters K and found values from 1 to 10 producing best results. Increasing the number over 10 did not improve results.

And finally, weights and

in models (11)–(12) were estimated from training data using the RANSAC algorithm (Fischler and Bolles Citation1981).

As with polynomial models, RBF models are the global mapping functions, however, they are able to account for local non-linear distortions (Zitova and Flusser Citation2003).

3.4.3. RF regression

RF is a machine learning algorithm that represents an ensemble of decision trees (DTs) (Breiman Citation2001). A DT classifier or regression model is built from a set of data using the concept of information entropy. At each node of the tree, one attribute of the data, that most effectively splits its set of samples into subsets enriched in one class or the other, is selected. Its criterion is the normalized information gain that results from choosing an attribute for splitting the data. The attribute with the highest normalized information gain is chosen to make the decision. The algorithm then recurs on the smaller sublists. One disadvantage of the DT classifier is the considerable sensitivity to the input dataset, so that a small change to the training data can result in a very different set of subsets (Bishop Citation2006). In order to overcome disadvantages of a single DT, an ensemble of DTs is used to form a RF. Each DT represents an independent expert (or weak classifier) in the RF that is trained based on different input datasets that are generated through a bagging procedure (Bishop Citation2006). RF can be used for building classification and regression models.

In this study, the RF regression was used to build a transformation function. We used points from the reference image xr = (xr, yr) as inputs to the RF regression model with a polynomial pre-processing. For example, in case of the 2nd degree polynomial function, the following features were input to the RF model: . The RF model was further trained to predict points in the sensed image xs = (xs, ys). In this study, the number of DTs in the RF models was kept low (about 5) to avoid overfitting. The optimal number of DTs in the RF model in terms of RMSE error was identified through the cross-validation procedure.

As with RBFs based mapping functions, RF belongs to the class of global mapping models that can account for local non-linear distortions.

4. Results

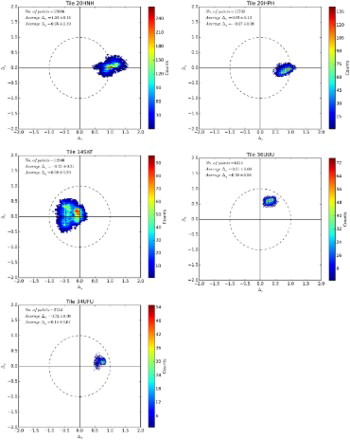

The use of phase correlation allowed us to generate hundreds and thousands of CPs when co-registering Landsat-8 to Sentinel-2A at 30 m spatial resolution (hereafter referred as LandSen30), and when co-registering multi-temporal Sentinel-2A images at 10 m spatial resolution (hereafter referred as SenSen10) (). The average misregistration between Landsat-8 and Sentinel-2A calculated on a tile basis, using identified CPs, varied from 0.11 pixels to 1.35 pixels among tiles considered in the study with the maximum misregistration value varying from 0.25 to 1.59 pixels (per tile). Misregistration between Landsat-8 and Sentinel-2A was stable in time over the same tile (, ) with average standard deviation of the misregistration through the time varying from 0.03 pixels to 0.16 pixels with average of 0.10 pixels (at 30 m resolution).

Figure 3. Location of CPs shown in the form of vectors outlining the direction and magnitude of shifts ( and

(Equation (2)) found between Landsat-8 image acquired on 2016021 (21-Jan-2016), and Sentinel-2A image acquired on 2015358 (24-Dec-2015) and used as a reference image, over the study area in Argentina, tile T20HNH. Vector lengths were multiplied by 100 for visual clarity. Overall, 1634 CPs were found using the phase-correlation approach in this case. The background is a Landsat-8 TOA NIR (band 5) image with TOA reflectance values scaled from 0.05 to 0.65.

Figure 4. Distribution of misregistration values and

(Equation (2)) when co-registering Landsat-8 to Sentinel-2A images for different tiles used in the study. Units are shown in pixel values at 30 m spatial resolution.

Table 2. Results of identifying CPs on the sensed (Landsat-8) and reference (Sentinel-2A) images using phase-correlation approach at 30 m spatial resolution.

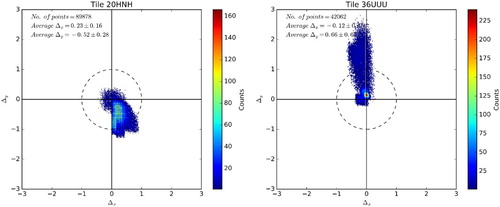

In the SenSen10 case, performance depended on whether the reference and sensed Sentinel-2A images were acquired from the same or different (adjacent) orbits. In case of the same orbits, the average misregistration calculated on a tile basis varied from 0.05 pixels to 0.46 pixels among tiles (with the maximum misregistration up to 1.21 pixels) with average 0.23 ± 0.12 pixels at 10 m resolution (, ). In case of different Sentinel-2 orbits over the same tile, the average misregistration calculated on a tile basis varied from 0.14 pixels to 1.45 pixels among tiles (with the maximum misregistration up to 2.83 pixels) with average 0.61 ± 0.42 pixels at 10 m resolution (, ). This might be the result of the satellite yaw bias that was corrected within the recent baseline processing version 02.04 (ESA Citation2016a). Overall, our estimates of the multi-temporal misregistration in the Sentinel-2A imagery were consistent with the Sentinel-2 Data Quality Reports (ESA Citation2016a).

Figure 5. Distribution of misregistration values and

when co-registering multi-temporal Sentinel-2A images from the same orbits for different tiles used in the study. Units are shown in pixel values at 10 m spatial resolution.

Figure 6. Distribution of misregistration values and

when co-registering multi-temporal Sentinel-2A images from the adjacent orbits for different tiles used in the study. Units are shown in pixel values at 10 m spatial resolution.

Table 3. Results of identifying CPs on the sensed (Sentinel-2A) and reference (Sentinel-2A) images using phase-correlation approach at 10 m spatial resolution.

When building a transformation function, all considered approaches showed a similar performance () with the complex non-linear RF regression slightly outperforming other methods, namely, a simple translation (Equations (7)–(8)), 1st order polynomial (Equations (9)–(10)), Gaussian RBFs (Equations (11)–(12), (13)) and TPS (Equations (11)–(12), (14)). For the RF regression, RMSE values varied from 0.02 to 0.12 pixels for the LandSen30 case at 30 m resolution, and from 0.025 to 0.22 pixels for the SenSen10 case at 10 m resolution for the same orbits and from 0.05 to 0.26 pixels for adjacent orbits. The RF model was built using a 1st order polynomial function for input CP coordinates. Increasing the order of the polynomial function did not improve performance of the RF model. It means that the RF was able to capture non-linearity when building a transformation function between CPs on the reference and sensed images.

Table 4. Average and standard deviation of the RMSE error (Equation (4)) calculated for different transformation functions using CPs from testing set when co-registering Landsat-8 and Sentinel-2A images.

Table 5. Average and standard deviation of the RMSE error (Equation (4)) calculated for different transformation functions using CPs from testing set when co-registering multi-temporal Sentinel-2A images from the same orbit.

Table 6. The same as , but for adjacent Sentinel-2A orbits.

Our results obtained for the 1st order polynomial function were comparable to previous studies by Barazzetti, Cuca, and Previtali (Citation2016) and Yan et al. (Citation2016).

Temporal fluctuations of the RMSE error were analyzed to explore temporal stability of the constructed transformation functions for multi-temporal Sentinel-2A images co-registration. shows dependence of the RMSE error with time for the 1st degree polynomial function for different Sentinel-2A orbits. For the case of the same orbits, there are not too many variations in time, except three cases with RMSE values over 0.2 pixels. These are due to heavy cloud contamination presented in the sensed imagery. As to the adjacent orbits, the tile T20HNH shows a trend which is due to the difference between the sensed and reference images: the difference is up to 103 days. For the T36UUU case, high RMSE error can be attributed to the 70 day difference between the reference and sensed images acquired over highly intensive agriculture region that features a lot of changes within this time period ( (D)). Also, reduction of the RMSE error for 36UUU tile comparing to the 20HNH tile can be related to the improvements made in the baseline processing version 02.04 (ESA Citation2016a).

Figure 7. Changes of RMSE error of building a 1st degree polynomial transformation function when registering multi-temporal Sentinel-2A images over the time for different tiles and different Sentinel-2A orbits: (A) same orbits; (B) adjacent orbits.

Results on correcting misregistration between Landsat-8 and Sentinel-2A are shown in .

Figure 8. A 30 m ‘chessboard’ composed of alternating Landsat-8 (acquired on 20-Dec-2015) and Sentinel-2A (24-Dec-2015) images before (left panel) and after co-registration (right panel). Near-infrared images from band 5 (Landsat-8) and band 8 (Sentinel-2A) were used to produce these ‘chessboard’. TOA reflectance values were scaled from 0.05 to 0.55. This subset covers the area in the south-east part of the tile 20HNH over Argentina ((A)). Misregistrations between satellite images can be seen in the irrigated fields (circles, middle left image) and in the bridge over the lake (bottom left image) with corrections applied and misregistration disappearing in the right images (middle and bottom). Middle and bottom subset images are shown in corresponding boxes on the top images.

The proposed workflow was implemented in Python programming language using the Geospatial Data Abstraction Library (GDAL) for managing geospatial datasets, the ‘skimage package’ for phase-correlation implementation, and the ‘sklearn package’ for the RF regression and RANSAC implementations, and was computationally efficient. Typical processing times on the Dell Laptop with processor Inter® Core™ i7-4810MQ CPU @ 2.80 GHz with 16 Gb RAM are presented in .

Table 7. Typical processing times (in seconds) for the main steps of the proposed approach.

5. Conclusions

Since many applications involving satellite imagery require the use of multi-temporal datasets, misregistration issues can lead to incorrect results. This study investigated misregistration issues between Landsat-8/OLI and Sentinel-2A/MSI at 30 m resolution, and between multi-temporal Sentinel-2A images at 10 m resolution using a phase-correlation approach and multiple transformation functions. Phase correlation proved to be a robust approach that allowed us to identify hundreds and thousands of control points on images acquired more than 100 days apart. Overall, misregistration of up to 1.6 pixels at 30 m resolution between Landsat-8 and Sentinel-2A images, and 1.2 pixels (for the same orbit) and 2.8 pixels (for different orbits) at 10 m resolution between multi-temporal Sentinel-2A images were observed. The non-linear RF regression used for constructing the mapping function showed best results in terms of error, yielding the average RMSE error of 0.07 ± 0.02 pixels at 30 m resolution and 0.09 ± 0.05 and 0.15 ± 0.06 pixels at 10 m resolution for the same and adjacent Sentinel-2A orbits, respectively, for multiple tiles and multiple conditions. On the other hand, a simple linear model such as 1st order polynomial function can provide an error of up to 0.08 ± 0.02 pixels at 30 m resolution and 0.12 ± 0.06 (same Sentinel-2A orbits) and 0.20 ± 0.09 (adjacent orbits) pixels at 10 m resolution.

Disclosure statement

No potential conflict of interest was reported by the authors.

ORCID

Sergii Skakun http://orcid.org/0000-0002-9039-0174

References

- Barazzetti, L., B. Cuca, and M. Previtali. 2016. “Evaluation of Registration Accuracy Between Sentinel-2 and Landsat 8.” In Fourth International Conference on Remote Sensing and Geoinformation of the Environment, 968809–968809. International Society for Optics and Photonics. doi:10.1117/12.2241765.

- Bishop, C. M. 2006. Pattern Recognition and Machine Learning. New York: Springer.

- Breiman, L. 2001. “Random Forests.” Machine Learning 45 (1): 5–32. doi:10.1023/A:1010933404324.

- Brown, M., and D. G. Lowe. 2007. Automatic Panoramic Image Stitching Using Invariant Features.” International Journal of Computer Vision 74 (1): 59–73. doi:10.1007/s11263-006-0002-3.

- Dechoz, C., V. Poulain, S. Massera, F. Languille, D. Greslou, F. de Lussy, A. Gaudel, C. L’Helguen, C. Picard, and T. Trémas. 2015. “Sentinel 2 Global Reference Image.” In SPIE Remote Sensing, pp. 96430A-96430A. International Society for Optics and Photonics. doi:10.1117/12.2195046.

- Drusch, M., U. Del Bello, S. Carlier, O. Colin, V. Fernandez, F. Gascon, B. Hoersch, et al. 2012. “Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services.” Remote Sensing of Environment 120: 25–36. doi:10.1016/j.rse.2011.11.026.

- ESA. 2016a. “ S2 MPC. Data Quality Report.” Ref: S2-PDGS-MPC-DQR, Issue: 08, Date: 05/10/2016. https://earth.esa.int/documents/247904/685211/Sentinel-2-Data-Quality-Report.

- ESA. 2016b. “ Sentinel-2 Products Specification Document.” Ref: S2-PDGS-TAS-DI-PSD, Issue: 14.0, Date: 15/07/2016. https://earth.esa.int/web/sentinel/user-guides/sentinel-2-msi/document-library.

- Fischler, M. A., and R. C. Bolles. 1981. “Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography.” Communications of the ACM 24 (6): 381–395. doi:10.1145/358669.358692.

- Forgy, E. W. 1965. “Cluster Analysis of Multivariate Data: Efficiency Versus Interpretability of Classifications.” Biometrics 21: 768–769.

- Gao, F., J. Masek, and R. E. Wolfe. 2009. “Automated Registration and Orthorectification Package for Landsat and Landsat-Like Data Processing.” Journal of Applied Remote Sensing 3 (1): 033515–033515. doi:10.1117/1.3104620.

- Guizar-Sicairos, M., S. T. Thurman, and J. R. Fienup. 2008. “Efficient Subpixel Image Registration Algorithms.” Optics Letters 33 (2): 156–158. doi:10.1364/OL.33.000156.

- Gutman, G., C. Huang, G. Chander, P. Noojipady, and J. G. Masek. 2013. “Assessment of the NASA–USGS Global Land Survey (GLS) Datasets.” Remote Sensing of Environment 134: 249–265. doi:10.1016/j.rse.2013.02.026.

- Ju, J., and J. G. Masek. 2016. “The Vegetation Greenness Trend in Canada and US Alaska From 1984–2012 Landsat Data.” Remote Sensing of Environment 176: 1–16. doi:10.1016/j.rse.2016.01.001.

- Justice, C., G. Gutman, and K. P. Vadrevu. 2015. “NASA Land Cover and Land Use Change (LCLUC): An Interdisciplinary Research Program.” Journal of Environmental Management 148: 4–9. doi:10.1016/j.jenvman.2014.12.004.

- Kravchenko, O., M. Lavrenyuk, and N. Kussul. 2014. “Orthorectification of Sich-2 Satellite Images Using Elastic Models.” In 2014 IEEE Geoscience and Remote Sensing Symposium, 2281–2284. IEEE. doi:10.1109/IGARSS.2014.6946925.

- Le Moigne, J., N. S. Netanyahu, and R. D. Eastman. 2011. Image Registration for Remote Sensing. Cambridge: Cambridge University Press.

- Lloyd, S. 1982. “Least Squares Quantization in PCM.” IEEE Transactions on Information Theory 28 (2): 129–137. doi:10.1109/TIT.1982.1056489.

- Roy, D. P., J. Ju, K. Kline, P. L. Scaramuzza, V. Kovalskyy, M. Hansen, T. R. Loveland, E. Vermote, and C. Zhang. 2010. “Web-enabled Landsat Data (WELD): Landsat ETM+ Composited Mosaics of the Conterminous United States.” Remote Sensing of Environment 114 (1): 35–49. doi:10.1016/j.rse.2009.08.011.

- Roy, D. P., M. A. Wulder, T. R. Loveland, C. E. Woodcock, R. G. Allen, M. C. Anderson, D. Helder, et al. 2014. “Landsat-8: Science and Product Vision for Terrestrial Global Change Research.” Remote Sensing of Environment 145: 154–172. doi:10.1016/j.rse.2014.02.001.

- Shelestov, A., M. Lavreniuk, N. Kussul, A. Novikov, and S. Skakun. 2017. “Exploring Google Earth Engine Platform for Big Data Processing: Classification of Multi-Temporal Satellite Imagery for Crop Mapping.” Frontiers in Earth Science 5 (17): 1–7. doi:10.3389/feart.2017.00017.

- Skakun, S., N. Kussul, A. Y. Shelestov, M. Lavreniuk, and O. Kussul. 2016. “Efficiency Assessment of Multitemporal C-Band Radarsat-2 Intensity and Landsat-8 Surface Reflectance Satellite Imagery for Crop Classification in Ukraine.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 9 (8): 3712–3719. doi:10.1109/JSTARS.2015.2454297.

- Storey, J., M. Choate, and K. Lee. 2014. “Landsat 8 Operational Land Imager on-Orbit Geometric Calibration and Performance.” Remote Sensing 6 (11): 11127–11152. doi:10.3390/rs61111127.

- Storey, J., D. P. Roy, J. Masek, F. Gascon, J. Dwyer, and M. Choate. 2016. “A Note on the Temporary Misregistration of Landsat-8 Operational Land Imager (OLI) and Sentinel-2 Multi Spectral Instrument (MSI) Imagery.” Remote Sensing of Environment 186: 121–122. doi:10.1016/j.rse.2016.08.025.

- Vermote, E., C. Justice, M. Claverie, and B. Franch. 2016. “Preliminary Analysis of the Performance of the Landsat 8/OLI Land Surface Reflectance Product.” Remote Sensing of Environment 185: 46–56. doi:10.1016/j.rse.2016.04.008.

- Yan, L., D. P. Roy, H. Zhang, J. Li, and H. Huang. 2016. “An Automated Approach for Sub-Pixel Registration of Landsat-8 Operational Land Imager (OLI) and Sentinel-2 Multi Spectral Instrument (MSI) Imagery.” Remote Sensing 8 (6): 520. doi:10.3390/rs8060520.

- Zitova, B., and J. Flusser. 2003. “Image Registration Methods: A Survey.” Image and Vision Computing 21 (11): 977–1000. doi:10.1016/S0262-8856(03)00137-9.