ABSTRACT

In earthquakes and building collapse situations, volumes of people may need to move, suddenly, through spaces that have been destroyed and seem unfamiliar in configuration and appearance. Perception is significant in these cases, determining individual movement, collective egress, and phenomena in between. Alas, exploring how perception shapes critical egress is tricky because perception is both physical and cerebral in genesis and because critical scenarios are often hazardous. In this paper, we describe a computational sandbox for studying urban damage scenarios. The model is built as automata, specialized as human automata and rigid body automata, with interactivity provided by slipstreaming. Our sandbox supports parameterization of synthetic built settings and synthetic humans in fine detail for large interactive collections, allowing flexible analyses of damage scenarios and their determining processes, from micro-perspectives through to the macrocosm of the phenomena that might result. While we have much work to do to improve the model relative to real-world fidelity, our work thus far has produced some meaningful results, supporting practical questions of how urban design and parking scenarios shape egress, and pointing to potential phenomena of perceptual shadowing as a translation mechanism for processes at the built-human interface.

1. Introduction

When critical events befall urban environments, getting people out of harm’s way is imperative (Hofinger, Zinke, and Künzer 2014; Schneider and Könnecke 2010). Consider, for example, situations in which building collapse occurs after structural failure or earthquakes (Kaya, Curran, and Llewellyn 2005). In damage scenarios, people may need to be cleared, urgently, from several buildings or from an entire urban area, with little warning or time for preparation (Hanks and Brady 1991). Egress dynamics in these situations often catch people off guard, force them to act quickly, and constrain their movement to limited degrees of freedom (Shiwakoti et al. 2009). Egress outcomes can also hinge on complex and nuanced interdependence between what people usually do in ordinary contexts, what factual information they can gather from the human and built surroundings, and their perceptions of what is going on and what might be feasible (Filippidis et al. 2006), all in situations that are likely to be unusual in their experience.

For damage scenarios, which are understandably difficult to experiment with in the real world, computer models have traditionally been tasked as a simulation medium for planning and decision support (Petruccelli Citation2003). Considerable modeling expertise has been developed, in particular, for evaluating how people might move through buildings and facilities in an emergency (Gwynne et al. Citation1999). What happens to people once they spill out onto streets beyond buildings is less well-explored in simulation. It is often presumed that the transport system can clear populations from urban areas (Alam and Goulias Citation1999), but in damage scenarios, many people will likely egress by foot because they cannot reach vehicles, or because road infrastructure is damaged. Modeling the dynamics that happen once people are handed-off to the streetscape creates some very thorny challenges. During foot egress, the onus for determining the most efficient and safest egress path is often placed on the individual, abruptly, at a time when the mental map that they might usually rely upon to interpret perceived movement potential is challenged by sudden disruptions to the urban fabric: the sense of space and place that it usually conveys loses currency. In many instances, the built environment may be destroyed and littered with obstacles. At the same time, people’s perception of what is going on around them may be challenged by extraordinary crowding and mass movement phenomena. In short, massive novelty and uncertainty may overshadow emergency scenarios. Uncertainty creates the sort of messy complexity that models generally shy away from handling (Batty and Torrens Citation2001).

Issues about how people fashion their understanding of (and in) emergency events – by reasoning on big obvious signals as well as fleeting nuances in the dynamics around them – perhaps task models with a different job than the one we usually employ them for (Batty and Torrens 2005; Fiedrich and Burghardt 2007). The demands of sweeping through messily complex damage scenarios flex the commonplace use of critical models, from providing actionable metrics to risk and loss software (Schneider and Schauer 2006), to other uses that lean on models to support theoretical inquiries into the behavioral underpinnings of emergency phenomena (Gwynne et al. 1999). This points to an emerging view of simulation as a sandbox, as a medium for exploring systems by means that must necessarily be imaginative in some aspects and firmly anchored to real-world facts in others (Baudrillard 1994). This view of models is coming into focus as large silos of data from urban and personal sensing systems now wait on the sidelines as the substrate from which such models might be built (Pentland 2014; Sharma and Gifford 2005; Swan 2012), and as academic inquiry into human behavior reaches further and further into the dynamics between perception, action, and cognition (Barsalou 2008).

A central argument of this paper is that we can push our models to do more: (1) to support high-resolution parameterization of people’s actions, interactions, and reactions that reach toward the most exquisite detail that theory and data can provide; (2) to handle massive systems of synthetic people and objects that might allow us to trace indelible paths from the micro-scale of critical phenomena up to macro-scale impacts, while treating the thorny dynamics in between; and (3) to generate actionable empirical data at scale. In support of these goals, we describe a model of behavioral and perceptual factors of egress, focusing in particular on movement and the information that people gather to inform their egress. The synthetic people in the model are parameterized as human automata (which we will detail shortly) at the microcosm of their movement and locomotion (millimeters of space and fractions of a second of time), and they are endowed with model behaviors at that resolution. We run the models on a synthetic built environment that is generated from real-world data for a real-world city, using damage scenarios that treat the physics of building collapse. Knock-on phenomena are modeled using schemes that support a free flow of information and processes across dynamic and diverse model components. We introduce an analytical scheme that can extract action, reaction, and interaction data directly from human automata (HA) characters’ cognitive processes, and we describe bounding algorithms that can weave those data into collective mental maps that can be displayed, measured, and queried relative to myriad what-if questions. 
We benchmarked simulated dynamics against real-world data, and while the results show that we have considerable work ahead of us in faithfully representing real movement scenarios, our efforts show that models can be successively tasked to do more to support our exploration of human dynamics in critical scenarios, and our work frames useful avenues for future research and development that might open new possibilities for the community.

2. The automata-based model infrastructure

Our model sandbox aims to flexibly and faithfully represent movement and interaction in egress scenarios. In particular, we focused the model on a diversity of processes that might explain people’s perceptions about how to egress safely in damage settings. The primitives for the model require a variety of data structures to represent entities and processes in simulation. We make use of Geographic Automata Systems (GAS) (Torrens and Benenson Citation2005) to accommodate representational diversity in the model. Our interpretation of perception in the simulation scenarios relies on mental mapping across a range of interpreted geographies, each with different data representations. GAS can work flexibly with diverse forms of geographic information, thereby offering us significant malleability in model construction. We can also interpret geographic automata in different ways to represent diverse processes.

We relied in particular on human automata (HA) and rigid bodies (RBs) in differentiating dynamics of the human and built environments respectively. Both entities can be built as geographic automata, and the interplay between them can be flexibly accommodated in a GAS framework (Figure 1). We used HA to endow automata with synthetic abilities to acquire and process information gleaned from themselves, other automata, and their simulated surroundings, and to frame synthetic spatial actions, interactions, and reactions in simulation. Much of the synthetic human geography and behavioral geography used to specify HA is sourced in theory regarding the perception that might support mental maps for safe egress. It is important to note that we do not necessarily consider HA to be agent-based models, and the distinction here is significant: HA are designed to represent individual human abilities (in this case, spatial abilities) and they are parameterized using human-like components and weights. This differs from agent-based models, which tend to be focused on social abilities.

Figure 1. The main components of the geographic automata system and their interactivity in simulation (hollow boxes indicate data and data structures, filled boxes indicate models).

Buildings provide perceptive guidelines for path-planning, way-finding, and steering, but they also obfuscate movement by imposing physical barriers or occluding what people might see. To represent HA-characters’ (i.e. individually specified HA in their individual context) interactions with the built realm, we need to treat HAs’ visual (perception) and tactile (physical) interplay. We used RBs to represent physicality between the built setting and HA-characters with on-par interactivity. RBs are handled as geographic automata, but they are uniquely susceptible to a sub-model that handles rigid body physics. The data required to calculate physical interactions between RBs is supplied directly between the automata that represent the RBs; the RBs do not proactively acquire those data in the way that HA might in simulation.

The differentiation of automata into diverse entities for simulation necessitates that we establish some framework for handling the flow of processes as ensembles of data and actions. To achieve this, we rely on a technique that we refer to as slipstreaming (details are not relevant to this paper, but are provided in Torrens Citation2015), which transports process fragments from one part of the model to another part, but delivers them in the context that the model component requires to achieve its (own) goals.

2.1. The human automata movement and interaction model

We built movement into the model with interactivity from process components that couple movement behaviors at different characteristic spaces and times for different forms of perception and with details that scale from individual representations of HA-characters’ bodies up to city-wide pathways for trip-making. This requires diversity in behaviors, which we treat using model compartments corresponding to discrete behaviors and discrete spatiotemporal data frameworks to feed them (Torrens Citation2007). The behaviors are sourced in different spatial ‘skills’ that the literature suggests are invoked when people move through urban environments, which we decompose into modules and then integrate to produce the motion that drives egress. We therefore devote specific classes of GA to each specific compartment of behavior (and slipstreaming allows processes to flit across compartment boundaries).

2.1.1. Trip-making

The purpose of the trip-making module is to generate activity sites for HA. We regard HA-characters as being ‘at work’ or ‘on the street’ when the egress event begins. HA that are at work are placed at building entry/exit points at simulation run-time, while those that are engaged in other activities are sited on simulated sidewalks as initial locations. Trip sinks are defined directly to represent the locations of office assembly zones in open spaces and plazas.

2.1.2. Path-planning

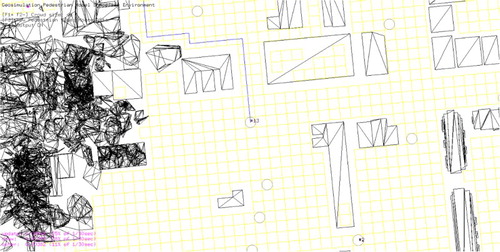

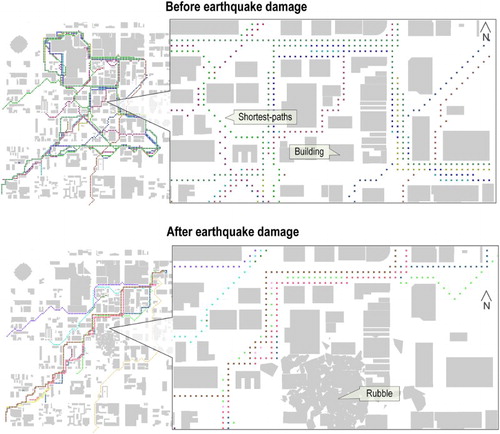

We used path-planning heuristics to build paths between sources and sinks of activity; paths provide a guideline for HA movement. Because of our emphasis on critical egress, we focused on determination of the shortest path. We dynamically built graphs of traversable space by superimposing a regular graph of vertices and edges on the simulated space and subtracting the vertices that have point-in-polygon membership with footprints of built infrastructure (buildings, rubble) and cars (Figure 2). We used the A* heuristic (Hart, Nilsson, and Raphael 1968), which considers Euclidean distance as well as graph distance in settling the shortest path, for two reasons: HA must minimize travel time (which is largely a function of the street network of roads, sidewalks, and alleys, all of which are well-represented in graph-space), and HA must rely on things that they can see as they move (which conceptually matches Euclidean distance).

Figure 2. The path-planner can produce shortest paths between origins and destinations in the simulated space by imposing a graph of traversable area around buildings and building damage. Above, a circle indicates an HA-character’s position in the graph traversal pipeline, blue lines denote trip paths produced by the planning heuristic, the yellow grid denotes the global graph of traversable space, and black polygons denote building and debris envelopes.
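The graph construction and search described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the model: the function names, the unit grid spacing, the 8-connected neighborhood, and the example wall footprint are all assumptions introduced here.

```python
import heapq
import math

def point_in_polygon(x, y, poly):
    """Even-odd ray-casting test; poly is a list of (x, y) vertices."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def build_traversable_vertices(width, height, footprints):
    """Regular grid of vertices, minus those inside any footprint polygon."""
    return {
        (i, j)
        for i in range(width)
        for j in range(height)
        if not any(point_in_polygon(i, j, fp) for fp in footprints)
    }

def a_star(vertices, start, goal):
    """A* over an 8-connected grid graph with a Euclidean heuristic."""
    def h(v):
        return math.dist(v, goal)

    frontier = [(h(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {start: None}
    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = parent[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale queue entry
        cx, cy = current
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                nxt = (cx + dx, cy + dy)
                if nxt == current or nxt not in vertices:
                    continue
                # Note: diagonal moves may clip obstacle corners in this sketch.
                new_cost = cost + math.hypot(dx, dy)
                if new_cost < best_cost.get(nxt, math.inf):
                    best_cost[nxt] = new_cost
                    parent[nxt] = current
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable

# Hypothetical example: a wall footprint that blocks column x = 2 for y = 0..3.
wall = [(1.5, -0.5), (2.5, -0.5), (2.5, 3.5), (1.5, 3.5)]
verts = build_traversable_vertices(5, 5, [wall])
path = a_star(verts, (0, 0), (4, 0))
```

In this toy setting the only gap in the wall is at vertex (2, 4), so the planned path detours through it. Re-running `build_traversable_vertices` with updated footprints (for example, new rubble polygons) would correspond to rebuilding the graph dynamically.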

2.1.3. Way-finding

HA in the model use a three-stage way-finding scheme that considers long-term location goals (LTGs), medium-term location goals (MTGs), and short-term location goals (STGs). LTGs are provided as the source-sink pairs for a trip. We used different waypoint features as MTGs, including building edges and large pieces of rubble from collapsed buildings, as fixed objects that present a phase change in HA movement (e.g. making a 90° turn around a building corner, or negotiating a section of rubble that has interrupted a HA-character’s shortest path). STGs are used to establish right-of-way for HA avoidance of routine fixed obstacles, such as doors and walls. STGs are cast as temporary locations for steering, and they are discarded as location goals once they have been negotiated. (Waypoints can also overlay with the trip graph, as vertices for paths.)
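One way to picture the three-tier goal scheme is as a small data structure in which the most immediate goal always takes priority. The sketch below is an assumption-laden illustration: the class name `GoalStack`, the arrival tolerance, and the rule that MTGs are consumed in order are hypothetical choices, not details taken from the model.

```python
import math
from dataclasses import dataclass, field

@dataclass
class GoalStack:
    """Hypothetical container for one HA-character's location goals."""
    ltg: tuple                                  # long-term goal (the trip sink)
    mtgs: list = field(default_factory=list)    # ordered medium-term waypoints
    stgs: list = field(default_factory=list)    # temporary steering targets

    def current_target(self):
        # The most immediate tier wins: STG, then MTG, then LTG.
        if self.stgs:
            return self.stgs[-1]
        if self.mtgs:
            return self.mtgs[0]
        return self.ltg

    def update(self, position, tolerance=0.5):
        """Discard the active STG or MTG once it has been negotiated."""
        if math.dist(position, self.current_target()) > tolerance:
            return
        if self.stgs:
            self.stgs.pop()
        elif self.mtgs:
            self.mtgs.pop(0)
        # The LTG is never discarded; reaching it ends the trip.

goals = GoalStack(ltg=(10.0, 10.0), mtgs=[(5.0, 0.0)])
goals.stgs.append((1.0, 1.0))   # e.g. a wall endpoint picked up while steering
```

Here an STG temporarily overrides the waypoint sequence and is dropped on arrival, after which the HA resumes its MTG and, finally, its LTG.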

2.1.4. Synthetic awareness via vision and tactile sensing

In egress, people rely on a myriad of schemes for gathering information around them, and how they do this is a broad topic of investigation (Torrens Citation2016a). In our model, we use a tiered visual and tactile awareness scheme that fixes HA information-gathering on specific regions of space (and time) and relays state data to their transition rules. First, we supply a broad field of vision to each HA, which floods the HA with information that it encounters within a limited attentive range. The field is constrained as a sector facing a HA’s forward direction. The sector forms the neighborhood for the HA’s transition calculations: as objects are enveloped by the sector, state data from those objects is passed to the HA. The area of the sector is user-defined and the location of the sector shifts as the HA moves, so that the information that constitutes the HA-character’s ‘surroundings’ also shifts as it courses into and out of the sector. Second, HA cast rays of user-defined length from the mid-leg position of their skeleton, in the direction of travel. The primary purpose of these rays is to provide dynamic detailed data on the distance between the HA (who are mobile) and any fixed objects (non-HA RBs) that the HA might encounter. Where and when the ray is interrupted by a fixed object, the distance and direction to the object is returned to the HA as state data. Also, the RB of the object is returned so that the HA can determine a STG to steer to in avoiding the object. Third, at the finest resolution of space and time, HA use simulated tactile sensing to test immediate collisions within a short distance around them. We encapsulated HA as RBs, affixing collision spheres to key nodes on their simulated body-rigs. These RBs were then used to test for collisions with other HA RBs as well as with RBs that represent the built environment. 
RB-on-RB collisions are physical and take place on the order of a few millimeters to centimeters in space and fractions of a second in representative time in simulation. HA can back-out of very close physical collisions over tiny bubbles of space-time. In this way, they simulate the fleeting, tactile responses that people might develop by bumping into things or by coming into very close contact by brushing against objects.
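The three awareness tiers can be illustrated with simple planar geometry. In the sketch below, the vision sector is a circular sector defined by a radius and half-angle, fixed objects are approximated as circles for the ray test, and tactile sensing is a sphere-overlap check. These simplifications, and all function and parameter names, are assumptions made for illustration; the model itself tests against RB geometry.

```python
import math

def in_vision_sector(ha_pos, heading, target, radius, half_angle):
    """True if target lies inside the forward-facing sector (angles in radians)."""
    dx, dy = target[0] - ha_pos[0], target[1] - ha_pos[1]
    dist = math.hypot(dx, dy)
    if dist > radius:
        return False
    if dist == 0.0:
        return True
    bearing = math.atan2(dy, dx)
    # Wrap the angular difference into [-pi, pi).
    diff = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= half_angle

def ray_hit_distance(origin, heading, ray_length, center, obstacle_radius):
    """Distance along the ray to a circular obstacle, or None if it is missed."""
    ux, uy = math.cos(heading), math.sin(heading)
    ox, oy = origin[0] - center[0], origin[1] - center[1]
    b = ox * ux + oy * uy
    c = ox * ox + oy * oy - obstacle_radius ** 2
    disc = b * b - c
    if disc < 0:
        return None              # the ray's line never meets the circle
    t = -b - math.sqrt(disc)     # nearest intersection along the ray
    if t < 0 or t > ray_length:
        return None              # behind the origin or beyond the ray's reach
    return t

def spheres_collide(c1, r1, c2, r2):
    """Tactile check: do two collision spheres overlap or touch?"""
    return math.dist(c1, c2) <= r1 + r2
```

For example, an HA at the origin heading along the x-axis with a 10 m ray would register a circular obstacle of radius 1 centered at (5, 0) at a distance of 4 m, which could then seed an STG for steering around it.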

2.1.5. Steering to avoid contact with fixed and mobile objects

HA must trade off a range of motivations that shape their movement through the simulated space: they would like to get to their destination, they are motivated to do so via the shortest path and intermediate waypoints of meaning to them, they fastidiously hold to their own preferred speed, they shift to avoid things that they prioritize as interruptions, and they avoid bumping into things where and when it is possible (Figure 3). When HA-walkers have a clear sense of where they are going, and enough space and time to make the trip, they may optimize all of these motivations without sacrifice. However, that is usually not feasible when large volumes of (self-motivated) HA move through the same space and time, as occurs in mass egress scenarios. In those cases, HA must devote a significant amount of their attention and decision-making to identifying collisions and preemptively adjusting their movement to avoid them, while also preserving the competing requirements of their optimal movement trajectory. We enable this decision-making using steering routines (Reynolds 1999) that are well-known in the computer gaming and animation communities, and which we have shown elsewhere to match quite well to our theoretical understanding of real people’s behavioral geography (Torrens 2012, 2014a) and to real metrics of human movement in urban spaces (Torrens et al. 2012). These steering components are well-covered by Reynolds (1999). In brief, HA steering is controlled by updating the linear and angular acceleration of HA-characters’ movement over a half-space representation of the urban scene that has areas that are coded as accessible (sidewalks and roads) or inaccessible (buildings and rubble).
As HA encounter objects in the space, using their synthetic vision, they determine the likelihood of a collision for a small look-ahead bundle of time and space and calculate alternative intermediate and collision-free destinations to pursue should they need to alter their course in a next time-step. Multiple HA are engaged in these calculations, which creates some computational burden for the model, but HA need only assess positioning for a small local window of space and time that their vision affords them, and this reins-in the computational load.
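The look-ahead collision test can be illustrated with a closest-approach calculation in the spirit of the unaligned collision avoidance behavior described by Reynolds (1999). This is a sketch under stated assumptions: both walkers are treated as discs moving at constant velocity over the look-ahead window, and the function names and the simple sidestep rule are hypothetical.

```python
import math

def time_to_closest_approach(p_a, v_a, p_b, v_b):
    """Time (>= 0) at which two constant-velocity walkers are nearest."""
    rx, ry = p_b[0] - p_a[0], p_b[1] - p_a[1]
    vx, vy = v_b[0] - v_a[0], v_b[1] - v_a[1]
    rel_speed_sq = vx * vx + vy * vy
    if rel_speed_sq == 0.0:
        return 0.0               # same velocity: separation never changes
    return max(0.0, -(rx * vx + ry * vy) / rel_speed_sq)

def predicted_positions(p_a, v_a, p_b, v_b, look_ahead):
    """Positions at closest approach, capped at the look-ahead horizon."""
    t = min(time_to_closest_approach(p_a, v_a, p_b, v_b), look_ahead)
    a = (p_a[0] + v_a[0] * t, p_a[1] + v_a[1] * t)
    b = (p_b[0] + v_b[0] * t, p_b[1] + v_b[1] * t)
    return a, b

def predicts_collision(p_a, v_a, p_b, v_b, combined_radius, look_ahead):
    """True if the walkers' discs would overlap within the look-ahead window."""
    a, b = predicted_positions(p_a, v_a, p_b, v_b, look_ahead)
    return math.dist(a, b) < combined_radius

def avoidance_target(p_a, v_a, p_b, v_b, combined_radius, look_ahead):
    """Collision-free intermediate destination for walker A (hypothetical rule)."""
    a, b = predicted_positions(p_a, v_a, p_b, v_b, look_ahead)
    ox, oy = a[0] - b[0], a[1] - b[1]
    norm = math.hypot(ox, oy)
    if norm == 0.0:
        # Exactly coincident prediction: sidestep perpendicular to own velocity.
        ox, oy = -v_a[1], v_a[0]
        norm = math.hypot(ox, oy) or 1.0
    return (a[0] + combined_radius * ox / norm,
            a[1] + combined_radius * oy / norm)
```

Because each HA only needs to evaluate neighbors inside its vision sector and within a short horizon, the per-step cost of checks like these stays local, which is consistent with the point above about reining in computational load.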

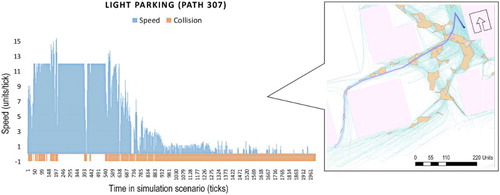

Figure 3. Left: the timeline for walker #307 in the LP scenario is illustrated, showing an extended period of speedy egress (accommodated by a path that proceeds through a SEH), followed by slowing-down at the assembly site. Right (callout): the steering paths for HA in a portion of the urban space for the LP scenario. In the callout, all HA paths through the space are shown in gray, and walker #307’s complete egress path is illustrated with a trail of blue dots (dark to light blue indicates progressive time spent in the scenario). Built structures are shown as light blue polygons, and resolved SEHs are illustrated in orange.

2.1.6. Motion control and kinematics

Trip-making, path-planning, and steering routines deliver long-term, medium-term, and short-term movement suggestions to HA, individually, in the model. HA-walkers must then decide how to act upon those guidelines to generate locomotion. Locomotion is handled in the model by taking a short-burst bubble of space and time resolved via the movement models, and using that as a brief locomotion sequence that is matched to HA-characters’ skeleton-rigs and to motion capture data via inverse and forward kinematics (IK/FK) with motion blending. IK/FK is used to articulate HA-characters’ locomotion, step-by-step, subject to the constraints that the small movement bubble provides and to additional caveats about foot placement and gait. If, in the course of locomotion using IK/FK, a HA-character comes into a close physical collision with another object in the simulation (the collision objects on other HA, or a RB envelope for a piece of the built environment), we use the commonplace technique of rolling the animation back a few sub-steps to calculate a collision-free pose that matches the IK/FK and motion capture data constraints. We follow standard schemes for accomplishing this: general details are available in Badler, Manoochehri, and Walters (1987), while details for our pipeline are covered in Torrens (2012).

2.2. The rigid body damage and collapse model

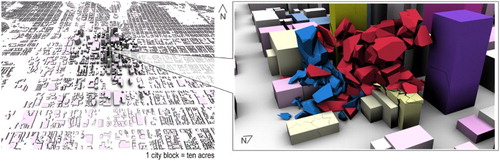

We made use of light detection and ranging (LiDAR) data of a real city (Salt Lake City, Utah, in the USA) to rapidly prototype a volumetric urban space for our simulations (Batty et al. 2001). This space serves as a virtual geographic environment (Lin and Batty 2011; Lin, Chen, and Lu 2013a), that is, a virtual world with realistic geographical foundation and processes (Chen et al. 2013; Lin et al. 2015), in which geographical analyses can be leveraged for what-if experiments (Lin et al. 2013b). The urban space was represented as georeferenced individual RB object-buildings atop a street network. We added further RBs to represent parked cars. Earthquake-induced building damage and resulting dynamics (such as pounding) were modeled by assigning tessellation routines of varying specifications to RBs, to be activated under forces of gravity and inter-object collision, if and when an earthquake event is assigned to them and if and when the RBs come into actual contact during their subsequent dynamic interactions. The physics model also handles formation of new RBs as a by-product of the forces that those interactions produce. Here, we rely on the usual schemes in game engine physics (NVIDIA 2012). The details of the physics and collapse model are beyond the scope of this paper, but are well-treated in Torrens (2014b), where specifics of the tessellation schemes, gravity model, and collision model are given. HA in the model can interact with the built space as RBs. If a building collapses in a simulation scenario, they can also interact with the decomposed RBs that the collapse model produces, by seeing them in their vision, by steering to avoid them, and by negotiating very close contact collisions with them. We should note that the collapse model is deterministic in the simulations discussed in this paper: RBs will always land in the same place and time per scenario (Figure 4).

Figure 4. Left: A LiDAR-sourced volumetric model of Salt Lake City, UT was used to rapidly prototype a synthetic city environment for the simulation scenarios. We applied rolling tessellation and a rigid body physics engine to the volumetric model to produce collapse scenarios (right, inset in graphic above).

3. Mental mapping with alpha shapes

HA in the model act, react, and interact based on the state data that they dynamically and proactively acquire and refresh by self-checking their own states and by gleaning state data from other HA through neighborhood filters and by slipstreaming. HA also continuously deduce and calculate further information as composites atop this base. We use a geographic information system (GIS) to manage what soon becomes a trove of big state data generated within the simulation. Entities in the simulation are cross-referenced by the GIS, collectively forming a live poly-space (Torrens and Nara Citation2013) of geometry (three-dimensional position and vector data), graphs (network components of trips and paths), way-space (waypoints and goals), social space (the space of potential collisions), and built space (RBs and their physics) (Torrens Citation2016b).

A central thesis of this paper is that it is meaningful to slice snapshots of the poly-space that empirically and qualitatively indicate and synthesize perceived and actual egress potential for different situations. As HA-walkers traverse the synthetic built and human environment of the simulation, they acquire state data about what they encounter and they update their own states based on those encounters. Moreover, HA transform state data into information that is meaningful relative to their own situation and context. With many HA in the model, we have in essence access to a massively interactive and distributed apparatus for probing and interpreting scenarios. We can therefore build a collective mental map of perceived safe egress across different simulation scenarios, as perception develops relative to the hyper-local (and hyper-immediate) geography of the built and human tapestry of the simulation scenario. We extract key state features from HA state transitions (trips, paths, waypoints, steering decisions, steps, and collisions) and index them to points in space and time, then we bound the regions of the simulated space that the features encompass, using alpha shapes (Edelsbrunner, Kirkpatrick, and Seidel Citation1983) at different scales.

Taking the point-locations output from a search of the HA state-spaces, we use a set of closed generalized disks, $D$ ($\alpha$ is the radius of the disk $D$), to tessellate the space around the points into sub-regions of the set that those points constitute, $S$. (It is important to highlight that we may search the very large, very diverse, very rich, and very heterogeneous parameter space of states quite flexibly and quite efficiently.) Specifically, we formed the sub-region as an α-shape, following Edelsbrunner, Kirkpatrick, and Seidel’s (1983, 555) algorithm.

We determined whether a point ($p$) from the set of points $S$ extracted from the state-space that HA generate in simulation is α-extreme in $S$, and whether edge-forming points, $p$ and $q$, were α-neighbors in $S$, by evaluating where $p$ and $q$ fell on $D$, and on the convex hull (i.e. the enveloping region) formed by $S$. Specifically, $p$ is α-extreme in $S$ if there is a $D$ that has $p$ on its boundary, and if the disk contains all $p$ in $S$. $p$ and $q$ are determined to be α-neighbors in $S$ if they are found to be α-extreme in $S$ and there is a $D$ with $p$ and $q$ on its boundary, and if the $D$ contains all points in $S$. If these conditions are met, the resulting α-shape can be resolved as the straight-line graph with vertices formed from the α-extreme points and edges formed from α-neighbor connections. Testing the satisfaction of the aforementioned conditions involves examining whether $p$ and $q$ in $S$ are closest-point Voronoi neighbors or furthest-point Voronoi neighbors on the closest-point or furthest-point Voronoi diagrams of $S$; these closest-point and furthest-point Voronoi tessellations provide straight-line duals that can be used to build closest point ($DT_c(S)$) and furthest point ($DT_f(S)$) Delaunay triangulations. Details for evaluating these tests are provided in Edelsbrunner, Kirkpatrick, and Seidel (1983) and examples on movement data are demonstrated in Torrens (2016b).

In the interest of brevity, we will not repeat those details here, but it is important to mention that the key parameters in those details center on the size of the disk, $\alpha$ (what we will heretofore refer to as the alpha value). Small alpha-values will tend to yield hulls (α-shapes) with finer-resolution matching to the underlying bundles of $p$ in $S$ than hulls produced with larger alpha-values, which will tend to yield more generalized regions from collections of $p$ in $S$. Considered substantively, alpha-values may benchmark scales of HA perception. In particular, we chose alpha-values that relate to different vistas of human perception while moving, from ego-centric to allo-centric (Tuan 1975a, 1975b), and to urban (Batty 1997).
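To make the role of the alpha value concrete, the sketch below extracts boundary edges from a small point set by brute force. It uses the commonly applied empty-disk formulation (an edge is kept when a disk of the chosen radius passes through both endpoints and holds no other point in its interior), which is an assumption that may differ in detail from the Voronoi and Delaunay based procedure cited above. The function names are hypothetical, and the cubic running time makes it suitable only for toy inputs.

```python
import math
from itertools import combinations

def disk_centers(p, q, r):
    """Centers of the two radius-r circles through p and q (none if too far)."""
    d = math.dist(p, q)
    if d == 0.0 or d > 2 * r:
        return []
    mx, my = (p[0] + q[0]) / 2, (p[1] + q[1]) / 2
    h = math.sqrt(max(r * r - (d / 2) ** 2, 0.0))  # offset from the midpoint
    nx, ny = -(q[1] - p[1]) / d, (q[0] - p[0]) / d  # unit normal to segment pq
    return [(mx + h * nx, my + h * ny), (mx - h * nx, my - h * ny)]

def alpha_shape_edges(points, r, eps=1e-9):
    """Edges (p, q) for which some radius-r disk through p and q is empty."""
    edges = []
    for p, q in combinations(points, 2):
        for c in disk_centers(p, q, r):
            others = (s for s in points if s != p and s != q)
            if all(math.dist(c, s) >= r - eps for s in others):
                edges.append((p, q))
                break
    return edges

# Toy point set: unit-square corners plus one interior point.
pts = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 0.5)]
outline = alpha_shape_edges(pts, 0.8)   # disk spans neighbors: square outline
nothing = alpha_shape_edges(pts, 0.3)   # disk too small to link any two points
```

With a radius of 0.8 the sketch returns the four sides of the square, while a radius of 0.3 links nothing; intermediate radii trace progressively tighter boundaries, mirroring the fine-to-generalized behavior described above. For point sets on the scale used in this paper, a Delaunay-based implementation would be the practical choice.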

4. Simulation scenarios

The impetus for egress in our simulation scenarios is a hypothetical earthquake, which we presumed as impacting a portion of Salt Lake City, UT that houses the main office district for the downtown of the city. We did not model the earthquake directly, but we did model the building collapse and damage scenarios it might cause. We assumed occupancy levels of the buildings. We seeded the model with a synthetic population of 2000 HA-characters, placed at origins near exits of buildings or on the streetscape outside buildings. Each HA-character in the model was programmed with individual characteristics for height, preferred speed, vision, stride in locomotion, reaction timing, collision potential tolerance, and preference for angular acceleration, through random assignment within user-defined ranges. In each case, state data were obtained from real people in motion capture sessions, or from libraries of motion capture data. For the experiments in this paper, the goal in assignment among available state parameters was to create a broadly heterogeneous adult population. As a result, each HA in the model is specified to be at least slightly different than the other, some more than others. (Elsewhere (Torrens Citation2012), we have shown how a similar state-assignment scheme can be used to specify particular mixes of demographics in a crowd.) We stratified the population into 13 cohorts (color-coded by cohort in the graphics that follow), corresponding to office groups. Within-cohort HA engaged in steering with weak flocking (Reynolds Citation1987) when in the vicinity of other cohort members. HA were pre-assigned to assembly points, which mimics the evacuation plans that most offices prepare. We also ran two variants atop this basic parameterization. In the first scenario (‘light parking’, LP), the time of day was set to before work hours, and we therefore seeded the simulated streets with low volumes of parked cars. 
In the second scenario (‘heavy parking’, HP), we assumed that work had begun and we seeded the model with heavily-parked streets around buildings.
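The random assignment of individual characteristics within user-defined ranges can be sketched as follows. The population size (2000) and number of cohorts (13) come from the text; the numeric ranges, the uniform distribution, the round-robin cohort assignment, and all names are placeholder assumptions, not the values or scheme used in the experiments.

```python
import random

# Hypothetical user-defined ranges (illustrative values only).
HYPOTHETICAL_RANGES = {
    "height_m": (1.50, 1.95),
    "preferred_speed_mps": (1.0, 1.6),
    "vision_range_m": (5.0, 15.0),
    "stride_m": (0.6, 0.9),
    "reaction_time_s": (0.2, 0.8),
}

def seed_population(n, n_cohorts, ranges, seed=0):
    """Draw each trait uniformly within its range; assign cohorts round-robin."""
    rng = random.Random(seed)   # fixed seed so the same population can be reused
    population = []
    for i in range(n):
        traits = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        traits["cohort"] = i % n_cohorts
        population.append(traits)
    return population

population = seed_population(2000, 13, HYPOTHETICAL_RANGES, seed=1)
```

Fixing the seed keeps the synthetic population identical across runs, so that differences between scenarios such as LP and HP can be attributed to the built environment rather than to a different draw of walkers.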

We can flexibly mine HA (and RB) state data from the simulation. Our interest, in this example, was in exploring perception of safe egress. To support this, we trained our queries on (per-HA) states for the point-locations and times in which HA-characters were:

Mobile;

Not at the extremes of their preferred speed;

Looking in a generally forward direction of travel;

And had looked to their surroundings and not detected an actionable collision either by ray-casting or in their vision.

From this ‘sea’ of points (9.048 million point-locations per scenario), we then alpha-shaped boundaries with varying intervals to derive HA-perceived polygons of perceived egress safety. In essence, the HA (individually and collectively) act to filter out the passages of space and time that they traverse through, and report that ‘the way is clear’, which we then capture, measure, and visualize as a composite of all the HA that pass through the space under the given scenario. If we maintain the same HA population and we maintain the same initial locations for HA, by switching-out different configurations of the built environment, we can examine the likely impact on perceived and actual egress.
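The four query criteria listed above can be expressed as a simple filter over per-HA state records. The record layout, the field names, and the thresholds used to interpret 'not at the extremes of their preferred speed' and 'generally forward' are assumptions introduced for illustration.

```python
from dataclasses import dataclass

@dataclass
class HAState:
    """Hypothetical per-time-step state record for one HA-character."""
    x: float
    y: float
    t: float
    speed: float
    preferred_speed: float
    heading_offset_deg: float   # gaze direction relative to direction of travel
    ray_hit: bool               # ray-casting detected an actionable collision
    vision_collision: bool      # vision sector detected an actionable collision

def perceived_safe(state, speed_band=(0.5, 1.5), max_heading_offset_deg=45.0):
    """Apply the four criteria; the band and angle thresholds are assumptions."""
    if state.speed <= 0.0 or state.preferred_speed <= 0.0:
        return False                                   # not mobile
    ratio = state.speed / state.preferred_speed
    if not (speed_band[0] <= ratio <= speed_band[1]):
        return False                                   # at a speed extreme
    if abs(state.heading_offset_deg) > max_heading_offset_deg:
        return False                                   # not looking forward
    return not (state.ray_hit or state.vision_collision)

def extract_safe_points(states):
    """Space-time points at which an HA reported that 'the way is clear'."""
    return [(s.x, s.y, s.t) for s in states if perceived_safe(s)]
```

The resulting list of space-time points is the kind of input that the alpha-shape bounding step consumes.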

5. Results

The collapse and pounding scenarios cause utter destruction to several of the buildings in the simulated space, and damage to others. Large sections of the simulated space became entirely blocked to traversal; in many other areas debris must be negotiated. The two parked car scenarios present further obstacles, as do crowds of mobile HA moving in the same or different directions during egress to assembly sites.

We analyzed the simulations to explore the relationships between HA egress perception and urban design, collapse damage, human traffic, and parking scenarios. We also analyzed HA-characters’ mental maps of egress safety, to explore the geography of those maps, HA-characters’ experiences within and outside perceived areas of safety, and scaling effects in perception of egress safety. Finally, we benchmarked HA movement against real-world data.

5.1. Human automata perception and urban design

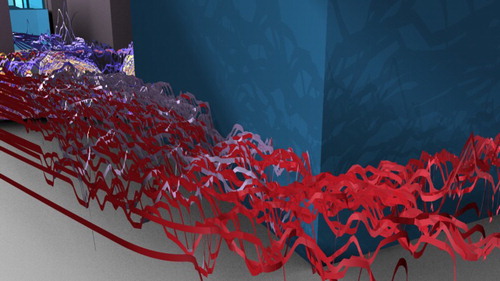

Walls have a distinctive influence on HA perception of egress. First, walls present physical obstacles that cannot be traversed. Second, all walls in our simulation were higher than individual HA-characters and so they occluded HA-characters’ vision. Third, HA-characters were designed to quickly scan the ends of walls and to designate those endpoints as STG waypoints in their way-finding and steering routines. These three conditions invoke dedicated information-gathering that allows HA to acquire a lot of detail about walls. HA were therefore quite adept at not bumping into walls and they were quite successful in proceeding alongside walls. That is, rather than continuously correcting for potential collisions with walls, HA were able to engage in wall-following behavior (Figure 5).

Figure 5. Visualizations of space-speed paths for HA movement alongside walls show HA-characters’ success in avoiding collisions with walls (as shown in the XY plane of movement), while also maintaining reasonable speed (visualized as the height (Z-plane) of the space-speed ribbons; higher ribbons indicate higher speed). Paths are color-coded by office cohort.

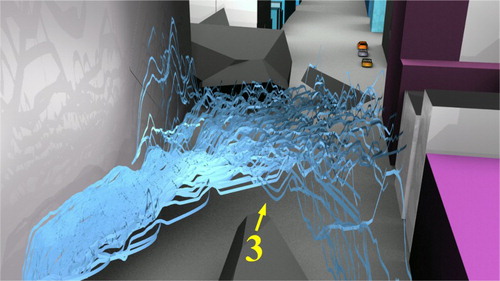

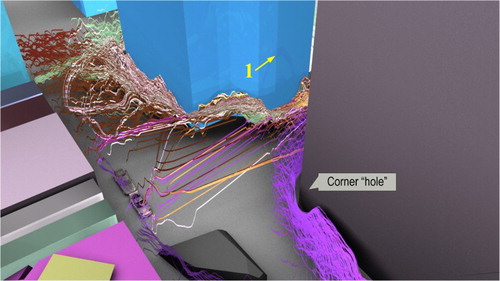

Corners influence HA perception by providing a breakpoint in HA movement. HA-walkers must rotate (often through abrupt and sharp angular acceleration) to negotiate corners. As HA pass a corner, their vision will suddenly and rapidly shift to expose them to new information as the scene around the corner unfolds before them. These two conditions – a transition in movement behavior and an abrupt shift in their state information – introduce considerable novelty for HA at particular places in the built environment. This is evident, for example, in Figure 6: a ‘hole’ forms in the space-speed paths of HA-walkers traversing a building corner. As HA successively encounter the corner, congestion can build up and slow egress, especially when streams of HA encounter the corner at the same time. However, corners can also provide useful interruptions to locked-in patterns of egress (such as slow-moving unidirectional convoys) that in essence jostle HA out of hard-fastened trends of shared direction and speed.

Figure 6. The influence of corners on egress: speed drops dramatically at the corner edge and a chain reaction of sorts forms in successive movement, locked-in at the corner. One can see a hole form in the collective space-speed path around the corner in a teardrop shape, with the collective heading forming the apex of the teardrop. (The yellow number 1 indexes the vantage point in Figure 12, to follow later in the paper.)

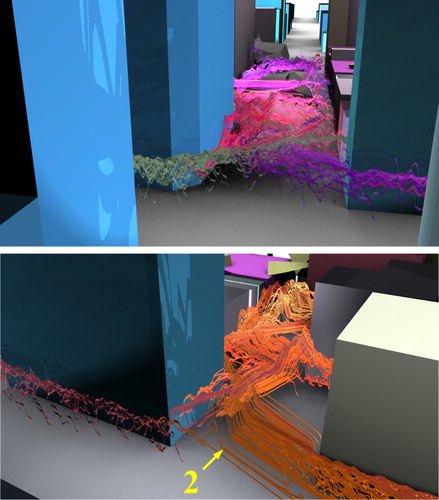

Urban corridors are present, physically, in the space between buildings in Salt Lake City, UT, as alleyways, as access roads to loading and unloading areas, and as rights-of-way. HA in our model are not dissuaded from traversing these spaces to get to their destination. In many instances the corridors provide a shorter path to the assembly points than sidewalks and roadways might otherwise afford. This positive effect of corridors is evident in Figure 7 (top), which shows crisscrossing of long-lived egress streams through corridors, at high speeds and in relatively straight headings. The potential negative influence of corridor morphology is perhaps evident in Figure 7 (bottom), where an irregular corridor presents in the interstitial space between buildings and the design forces egress into a slalom-like pattern of short-lived bursts of relatively high-speed movement interspersed with relatively slow progress.

Figure 7. The influence of urban corridors, showing long-lived persistent movement through alleyways in locations of the urban scene that have linear corridors (top), but sloshing and slaloming effects in others where corridors are irregular (bottom). (The yellow number 2 indexes the vantage point in Figure 12, to follow later in the paper.)

5.2. Human automata perception and collapse damage

The collapse scenario forced three important shocks into the simulation’s human dynamics. First, collapse initiated an evacuation of the buildings and egress to emergency assembly sites. Second, damage altered the urban morphology, so that conduits for movement changed radically and the presence of occluding and collision objects increased dramatically. Third, damage to a few buildings in the center of the simulation space removed critical nodes from the graph of shortest paths, reducing the resulting count of shortest paths and biasing path geography along a southwest-northeast diagonal (Figure 8).

Figure 8. A system-wide view of shortest-paths from a few select origin locations in the simulated space (dots represent paths in the image above, color-coded by HA; gray polygons illustrate built infrastructure and damage debris). Local views are shown in the inset image, and HA perspectives on these views are shown in .

These three shocks had system-wide impacts as well as very localized effects: pieces of debris created visual and locomotive obstacles in HA-characters’ way and HA could interact with them at high resolution. The collapse disruption then fed the dynamics of secondary phenomena. For example, in some simulation instances, HA successively encountered debris obstacles with the result that HA-walkers who initially reacted to the obstacle by steering to avoid it ended up kick-starting short- and long-lived patterns of directional bias and acceleration/deceleration dynamics that became phenomenal responses to the obstacle. Perception played a crucial role in translating that initial shock from the collapse phenomenon into the egress phenomenon, essentially bridging the urban-human interface. But the translation across this divide is quite precarious. For example, an initial HA reaction to steer around an obstacle may be optimal for the first HA-character, may be mistaken, may be efficient, may be costly in travel distance, and so on. Nevertheless, HA can lock in around that reactive behavior and may either reinforce or modify it in their perception, with resulting long-running and wide-forming impacts that promote or discourage safe egress ().

5.3. Human automata perception in human automata traffic

We also examined HA-on-HA dynamics as phenomena in simulation (abstracting from the acknowledgement that HA dynamics are also intertwined with influences from urban design and the collapse scenario).

Lane formation – is apparent in the space-time geography of HA movement in egress, and perception plays a significant part in lane dynamics. HA-characters may form lanes as they share shortest-path segments; as HA align with the same navigational STGs, MTGs, and LTGs; and as HA steer in simpatico to avoid collisions with other HA or obstacles in their vision (i.e. they receive similar geographic information and/or have a similar response in a nearby space and time). Unidirectional lanes of shared movement regularly form in the simulation scenarios, as temporary solutions to physical constraints (such as corridors, or around corners). Bidirectional lanes of movement also form, as a shared reaction to crowding or the impassability of unidirectional lanes, and these can be quite long-lived.

Group movement – also manifests from perception ingredients in simulation. Recall that HA with shared group allegiance will ease up their collision reflex to match heading and speed of HA around them when they recognize fellow group members (weak flocking). This can lead to lane formation when groups self-organize around preferred alignment. For example, in Figure 9, groups of allied HA negotiate a piece of debris blocking the road and they align into a cohesive group movement. We can trace the formation of this pattern through individual HA perception: the HA that first encounter the obstacle veer left to avoid it and most of the other HA in close proximity to them follow suit, partially because their collision tolerance for neighbors of the same cohort is high. In essence, their group membership affords HA some valuable space-time efficiency relative to this obstacle. Once the group steers clear of this collision, HA begin to fan out and separate from the fleeting group peloton that they have formed in response to the obstacle. Once this occurs, they become much more volatile in their speed and steering. (Similar patterns often occur when cars stream out of toll booths on highways, for example.)

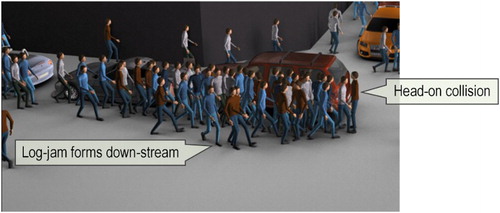

Imprinting by perceptual shadowing – is a recurring by-product of perception in egress. Reactive and interactive steering can create a perceptual shadow at an obstacle; this shadowing in a sense signals to other HA that they should give the obstacle a wide berth, and this is achieved via the event information that prior waves of HA encountering the obstacle have imprinted in the egress phenomenon. These are not permanent shadows, but can be long-lived if the space-time bubble that forms around the obstacle is continually fed with HA that react and interact in the same or a similar fashion. Alas, if a single HA-walker does not follow that behavior (because of a unique situation that they have found or because of a novel reaction to the obstacle), the space-time bubble may pop, and the shadow may disappear, or an entirely different shadow may form around the same event space. This creation and reformation can be positive or negative in egress impact. For example, an exaggerated steering-to-avoid maneuver can give way to a log jam that compounds into broader congestion. As in traffic jams on highways, these initial collision events can propagate back through the phenomenon-event space, even when the initial genesis of the blockage has cleared. Shadowing might also form around fleeting HA maneuvers (such as turning or stopping decisions) and this can be unproductive when a crowd locks in around what is simply a momentary HA-on-HA exchange (see Figure 10, which shows how one HA can hold up a large stream of walkers).

Figure 10. Individual shifts in behavior can catalyze broader phenomena. Above, a stream of successful collision avoidance alongside parked cars is interrupted suddenly when a HA walks, head-on, into the lane of like-moving HA that has formed. This creates an abrupt initial collision that must be resolved, which begets more and more collisions down-stream throughout the lane of moving pedestrians. Down-stream HA have little space to move and do not have information about the collision ahead of them. In slowing-down, successively through the phenomenon event-space, an initial log-jam turns into a larger congestion event, within a few seconds.

5.4. The influence of parking scenarios on egress perception

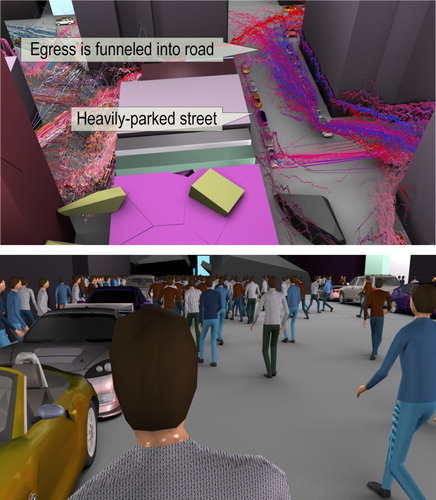

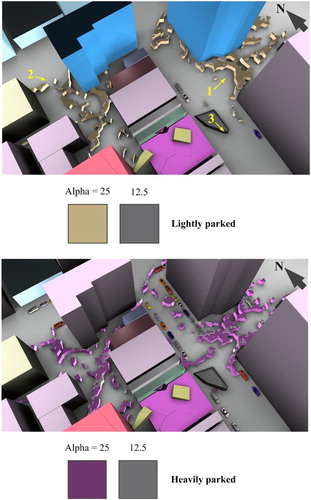

Parked cars create fixed obstacles in specific places, physically and visually blocking the transition space between sidewalks and roads, and cluttering areas of free egress around the buildings that HA-walkers initially evacuate from (Figure 11). We tested two scenarios for parking in simulation: light parking (LP) and heavy parking (HP). There was a distinct and local qualitative difference in egress dynamics between the two scenarios: collectively, the crowd moves away from HP areas of the streetscape (Figure 11). This finding is straightforward: cars block egress. However, a secondary effect was also observed: HP areas funnel egress onto roads, where HA have more degrees of freedom to steer and move, and where they can acquire geographic information (i.e. they can feed their perception) more freely. The HP scenario produced a greater number of alpha-hull patches (103 patches at an alpha scale of 12.5 and 73 patches at an alpha scale of 25) than the LP scenario (85 patches at an alpha scale of 12.5 and 59 patches at an alpha scale of 25), and the HP scenario generated a comparatively larger area of patches, although not by much (Table 1). This is also expressed in the perimeter-area (PA) ratio for the scenarios: the LP scenario produced safe egress patches that had a much higher PA ratio (by a factor of almost 3 at an alpha scale of 12.5 and by a factor of more than 4 at an alpha scale of 25) than the HP scenario yielded. Lower PA ratios are indicative of lower shape complexity, which perhaps lends empirical support to the visual suggestion that parked cars have a funneling effect on egress. HP also moves traffic away from building walls, which, as we already mentioned, present a hard barrier to movement and vision, with the added result that crowds are shifted a significant distance away from the hazard of falling building debris. (However, our simulation did not account for the reality that roadways are usually occupied with moving cars, in which case the funneling/containment effect of parked cars might steer crowds into dangerous collisions in active roadways.) Our analysis showed little empirical difference between the HP and LP scenarios based on the path-based movement metrics that we tested (Table 2). Trips within safe egress zones were more numerous, were faster, and were longer in distance than those outside safe egress zones for both parking scenarios, at all scales of alpha-hull.

Figure 11. Walkers in the model must negotiate collisions with parked cars as they egress; the cars also block their vision, thereby limiting both their movement choices and the information that they can acquire around them. The top image shows space-speed paths of egress in the HP scenario: walkers avoid car-lined streets and their egress is funneled into the road. The bottom image presents a walker-based view of the surroundings, showing the visual blockage that parked cars create in egress.

Table 1. Summary statistics for all SEHs, per alpha value (α), for the parking scenarios.

Table 2. Summary statistics for all walker paths for the parking scenarios.

5.5. Human automata mental maps of perceived egress safety

We have access to each and every piece of information that HA receive in simulation, and to the actions, interactions, and reactions that they form around that information, which means that we can peer into HA-characters’ mental maps as those maps form and resolve individually and collectively and as the maps bend around changes in the built and human environment.

5.5.1. The geography of safe egress hull formation

Through alpha-shaping, we fashioned mental maps as safe egress hulls (SEHs). We then examined the parking scenarios for the location of SEHs as well as SEH morphology. SEHs appeared in roughly the same locations in both the LP and HP scenarios (Figure 12). In each scenario, a large and dominant SEH resolved along the main diagonal shortest-path route, stretching in a northeast-to-southwest direction. Another large SEH was detected on the outskirts of the assembly site in the two scenarios. This is understandable: as HA-walkers assemble they reach a safe space where they can move slowly and deliberately.

Figure 12. The two parking scenarios (LP in the top image, HP in the bottom image) produce different SEHs. (The yellow numbers index vantage points from figures introduced earlier in the paper.)

The location of SEHs varied little between scenarios, but SEHs did manifest with different morphologies for the LP and HP scenarios. The HP scenario produced SEHs with more area than LP did. For an alpha value of 12.5, HP generated 22% more total SEH area than was produced by LP. At an alpha value of 25, HP generated 13% more total SEH area than LP produced. In other words, HA perceived HP as affording more space for safe egress over the broader simulated urban area. HP generated more SEHs (21% more than LP at an alpha value of 12.5, and 24% more at an alpha value of 25). Both HP and LP SEHs yielded similar mean hull area (again, however, HP produced more total hull area), but HP SEHs that were resolved were both larger in size and yielded a much smaller perimeter-area (PA) ratio than LP SEHs did: the HP SEHs were smaller in PA ratio by a factor of 2.73 at an alpha value of 12.5 and by a factor of 4.211 at an alpha value of 25 when compared to LP SEHs. In summary, the HP scenario produced more SEHs and SEHs that were more cohesively connected in space than those yielded by the LP scenario.
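The percentage differences in SEH counts can be checked against the patch counts reported in Section 5.4 (103 versus 85 at an alpha value of 12.5, and 73 versus 59 at an alpha value of 25). The short Python sketch below does that arithmetic and also shows one common way to compute a perimeter-area ratio for a simple polygon, using the shoelace formula. The helper names and the example polygons are illustrative assumptions; the paper does not state how its PA ratios were computed.

```python
import math


def percent_more(a, b):
    """How much larger a is than b, as a percentage of b."""
    return 100.0 * (a - b) / b


def polygon_area_perimeter(vertices):
    """Area (shoelace formula) and perimeter of a simple polygon."""
    n = len(vertices)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


def pa_ratio(vertices):
    area, perimeter = polygon_area_perimeter(vertices)
    return perimeter / area


# SEH counts reported in the text (HP versus LP).
more_seh_alpha_12_5 = percent_more(103, 85)  # about 21%
more_seh_alpha_25 = percent_more(73, 59)     # about 24%

# Two hypothetical hulls with equal area (100 square units).
square = [(0, 0), (10, 0), (10, 10), (0, 10)]  # compact shape
strip = [(0, 0), (100, 0), (100, 1), (0, 1)]   # elongated shape
```

For equal area, the compact square has a lower PA ratio than the elongated strip, which is the sense in which a lower PA ratio is read as lower shape complexity.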

The influence of SEH location and shape on egress is intuitive. SEHs map out areas of egress potential and actuality that integrate shortest paths at a coarse level of geography, bundles of space and time opened up by steering at a medium level of geography, and small bubbles of locomotion at a fine level of geography. Cars present as obstacles to egress at each of these levels and can therefore significantly impact SEH formation. For example, there is a cluster of parked vehicles on both sides of the street near the assembly site (see location 1 in Figure 12). In the HP scenario, this cluster is formed by tightly-packed cars, which has the impact of funneling safe egress around the obstacle that the cars establish. The hull generated by this shift is absent (in the same place and in the same times) in the LP scenario. This particular funnel-like SEH from the HP scenario is important in the collective egress experience, as it continues uninterrupted to the assembly site. In other words, the tight-packing of cars produces a knock-on formation that is expressed in the mental map of the HA, and this perception structure provides HA with a physical conduit through the crowd and buildings to the safety of the assembly site.

5.5.2. Perception and human automata experiences, inside and outside SEHs

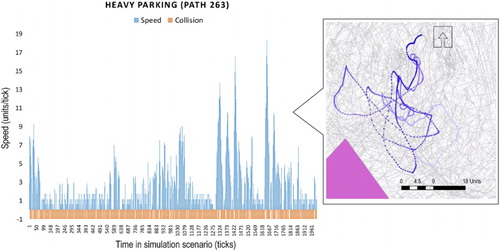

The experience for HA moving within SEHs was dramatically different (along several empirical measures) from that encountered by HA that moved outside SEHs. We would expect to find different experiences inside and outside SEHs, of course, because the SEHs were built atop very specific experiential point-sets. Experiential characteristics were codified from the perspective of individual HA, however, and were defined at very high resolution. In short, HA outside the SEHs were found to detect many more collisions, to encounter higher speed collisions, and to experience more side-on collisions than HA that moved inside SEHs. The experience was detected on a path basis and on an encounter-by-encounter basis ( and ).

The alpha-shaping method allows us to bound individual micro-experiences into collective geographies, and to do so up-scale of the point-indexed experience. When we look at all HA in the simulation from this perspective, we find that on average (mean), HA moved with greater speed inside SEHs (e.g. ) than outside SEHs (e.g. ), and that they did so by a factor of roughly three () in both parking scenarios. In the HP scenario, mean HA speed was 3.8 units/tick outside SEHs (where a unit represents a unit of space in the simulation world and a tick is a discrete unit of time), but HA achieved a mean speed of 10 units/tick inside SEHs at an alpha value of 12.5. HA also had less exposure to side-on collisions (as indexed by collision angle) within SEHs than they had outside SEHs, which means that they had more opportunity to address potential collisions (because they were more likely to be facing straight ahead) within the SEH than they might have had outside the SEH. The mean potential collision speed, as detected across all HA, was also slightly lower for movement within the SEHs than it was for travel outside the SEHs.

Figure 13. Left: the timeline for walker #263 in the HP scenario is illustrated, showing stop-and-go movement relative to persistent collisions over a small range of egress. Right (callout): the movement path for walker #263 is shown in blue (dark to light blue indicates progressive time spent in the scenario; gray lines indicate all HA movement paths; pink polygons represent resolved SEHs). (Compare this to the movement and timeline in .)

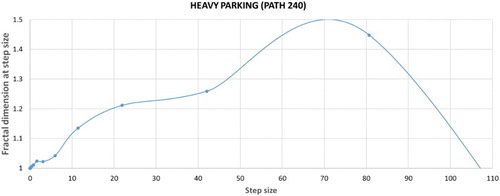

The characteristics of paths do not differ tremendously between the two parking scenarios. For example, we built eight complete space-time paths for a sample of HA (two paths are shown in and ) and calculated a set of sinuosity metrics (Nams 1996; Torrens et al. 2012). Mean fractal dimension (MFD) results were similar across the HP and LP scenarios, as were turning metrics (Table 3). However, HA-characters’ experiences along those paths were quite different across the scenarios. For the LP scenario, collisions per path length outside SEHs were far more numerous than those inside SEHs, by a factor of 245 at an alpha value of 12.5 and by a factor of 55 at an alpha value of 25. Under the HP scenario, those factor values were 280 at an alpha value of 12.5 and 47 at an alpha value of 25. The plenitude of collision potential outside SEHs causes HA to succumb to stop-and-go movement throughout their trip, as can be seen in Figure 13 for a sampled HA path. The sinuosity metrics for these sampled paths also show that movement within SEHs yields a higher probability of turning in the same direction than movement outside SEHs; that is, HA moving within SEHs have a greater likelihood of preserving heading on a step-by-step basis than they have outside SEHs.
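To give a sense of what a path fractal dimension measures, the following Python sketch estimates it with the classic divider (compass-walking) method: the path is re-measured with dividers of several step sizes, and the dimension is taken as one minus the slope of log measured length against log step size. This is a stand-in chosen for illustration; the estimator actually used for the MFD values reported here (following Nams 1996) may differ, and the function names, step sizes, and test paths are assumptions.

```python
import math


def divider_length(path, step):
    """Length of a polyline as measured by walking dividers of span `step`."""
    cx, cy = path[0]  # current divider point
    seg, t0, n_steps = 0, 0.0, 0
    while seg < len(path) - 1:
        (x1, y1), (x2, y2) = path[seg], path[seg + 1]
        dx, dy = x2 - x1, y2 - y1
        fx, fy = x1 - cx, y1 - cy
        # Solve |P1 + t*(P2 - P1) - C|^2 = step^2 for t on this segment.
        a = dx * dx + dy * dy
        b = 2.0 * (fx * dx + fy * dy)
        c = fx * fx + fy * fy - step * step
        hit = None
        if a > 0.0:
            disc = b * b - 4.0 * a * c
            if disc >= 0.0:
                root = math.sqrt(disc)
                for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
                    if t0 < t <= 1.0 + 1e-9:  # first crossing ahead of us
                        hit = min(t, 1.0)
                        break
        if hit is None:
            seg, t0 = seg + 1, 0.0  # no crossing here; try the next segment
        else:
            cx, cy = x1 + hit * dx, y1 + hit * dy
            t0, n_steps = hit, n_steps + 1
    # Add the leftover distance from the last divider point to the path end.
    ex, ey = path[-1]
    return n_steps * step + math.hypot(ex - cx, ey - cy)


def fractal_dimension(path, steps):
    """D = 1 - slope of log(measured length) against log(step size)."""
    xs = [math.log(s) for s in steps]
    ys = [math.log(divider_length(path, s)) for s in steps]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return 1.0 - sxy / sxx


STEPS = [0.5, 1.0, 2.0, 4.0, 8.0]  # illustrative step sizes
straight = [(float(i), 0.0) for i in range(201)]
zigzag = [(float(i), float(i % 2)) for i in range(201)]
d_straight = fractal_dimension(straight, STEPS)
d_zigzag = fractal_dimension(zigzag, STEPS)
```

A perfectly straight path yields a dimension of 1, while the zigzag path yields a value above 1 because small dividers pick up detail that large dividers skip over. Values close to 1, such as those reported for the HA paths, therefore indicate low tortuosity.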

Table 3. Movement metrics for scenario-simulated paths.

5.5.3. Scaling effects

Different alpha values define HA-characters’ resolved mental maps as tapestries of SEHs at different perceptual scales, and to examine the effect of scale we built SEHs at values of 2.5, 12.5, 25, and 50. At alpha values of 2.5 and 50, very few SEHs resolve (they are too small and too large, respectively) and so we do not discuss those results here. At alpha values of 12.5 and 25, many SEHs resolve. By examining HA experiences (i.e. the state data that HA produce as they act, react, and interact within hulls at different scales), we can explore scale effects in the egress conditions that they perceive. In particular, at an alpha value of 12.5, HA move significantly faster than at an alpha value of 25 (at a mean speed of 9.468 units/tick for the LP scenario at an alpha value of 12.5, compared to 6.646 units/tick at an alpha value of 25; and at 10.004 units/tick for the HP scenario at an alpha value of 12.5, compared to 8.156 units/tick at an alpha value of 25), and they have fewer collisions per SEH area (collisions per unit area for the LP scenario were 2.52 at an alpha value of 12.5, and 3.397 for an alpha value of 25; collisions per unit area for the HP scenario were 1.458 at an alpha value of 12.5, and 1.965 for an alpha value of 25). In other words, individual HA have more apparent nimbleness within SEHs over relatively small packets of spatial awareness than they are afforded over coarser areas of space and time. That is, at the micro-scale, HA egress perceptions are well-actuated into actionable movement.
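The scale comparison above can be restated as simple ratios between the fine (alpha of 12.5) and coarse (alpha of 25) hulls. The Python sketch below uses only the values quoted in the text; the dictionary layout and the helper name are illustrative assumptions.

```python
# Mean speed (units/tick) and collisions per unit SEH area, as quoted in the text.
mean_speed = {
    ("LP", 12.5): 9.468, ("LP", 25): 6.646,
    ("HP", 12.5): 10.004, ("HP", 25): 8.156,
}
collisions_per_area = {
    ("LP", 12.5): 2.52, ("LP", 25): 3.397,
    ("HP", 12.5): 1.458, ("HP", 25): 1.965,
}


def fine_to_coarse_ratio(table, scenario):
    """Value at alpha 12.5 divided by the value at alpha 25."""
    return table[(scenario, 12.5)] / table[(scenario, 25)]


speed_ratio = {s: fine_to_coarse_ratio(mean_speed, s) for s in ("LP", "HP")}
collision_ratio = {s: fine_to_coarse_ratio(collisions_per_area, s) for s in ("LP", "HP")}
```

On these figures, mean speed at the finer scale is roughly 1.4 times (LP) and 1.2 times (HP) the speed at the coarser scale, while collisions per unit area at the finer scale are about three-quarters of the coarser-scale value in both scenarios.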

5.6. Real-world comparisons and comparisons to other motion control phenomena

For the purposes of comparing the movement in the two simulation scenarios to movement by other (algorithmic, heuristic, and statistical) motion controllers (Table 4) and to real movement by real people (Tables 5 and 6), we took a sample of eight paths from the simulation (sampled at each alpha hull scale, for each scenario, within and outside resolved SEHs). We then calculated descriptive statistics for point-events along these paths (e.g. and ), as well as sinuosity and allometry metrics along path polylines ( and ). (Metrics for comparison paths generated by shortest-path heuristics alone, by hopping, by random walks, by simple steering behavior, and by social force controllers are listed in Table 4, and details of the algorithms and heuristics used are reported in Torrens et al. (2012).)

Figure 14. Fractal dimension variation at different step-size generalizations for walker #240 in the heavily-parked scenario, outside a SEH at an alpha value of 25. (The data points are not continuous; the connecting line is used solely for illustration because of the varying value range.)

Table 4. Movement metrics for benchmark simulation paths.

Table 5. Data collected for real-world walking paths.

Table 6. Movement metrics for real-world pedestrian paths.

The full-stack movement paths (i.e. paths that combine motion at scales from holistic path-planning all the way down to fine-scale locomotion) that were produced by our model exhibited much less tortuosity than other (popular) model motion control schemes that we have experimented with (Table 4). The MFD (taken as the fractal dimension of path-lines at different scales of generalization from the minimum scale value in to the maximum scale value in the same table) for our HA (across all scenarios) was closest in value-range to the pure steering routines in the comparison-set: around 1.025 for our HA, versus 1.026 for simple steering (Table 4). Our model included a realistic (and very cluttered) built environment, as opposed to the flat and featureless planes that were used as the substrate for movement in the comparison set, and this may explain the relative lack of sinuosity that our earthquake egress scenarios produced.

We also compared sinuosity metrics for our HA movement paths in the simulated downtown Salt Lake City, UT, with real movement of real walkers in the real city, as well as in other cities (Tempe, AZ; Tokyo; and Yokohama) across a range of walking scenarios (browsing at open-air art exhibits, walking along retail streets, pedestrian crossings, following trails in an outdoor light festival) (Tables 5 and 6). Our HA paths were in the same range for MFD as the path that we recorded for a real walker in downtown Salt Lake City, UT (∼1.025 for the modeled world, versus 1.0295 for the real world), but only for very low-density crowding along a sidewalk. Alas, our HA paths were well below the MFD recorded for similar crowding in the real world. There may be a few reasonable explanations for this. Our HA were instructed to move at much higher speeds than the real-world comparison sets (for which people moved quite slowly, browsing, trudging through festival crowds, or shopping). Also, the crowd density in our model was massively high, and the volume of potential collisions was also high: HA therefore had reduced degrees of freedom in movement compared to the options available to people in the real-world scenarios. (It is also important to note that the MFD is a composite of several measures of fractal dimension across different step-size generalizations of the path (Figure 14). Across the eight sampled paths, the fractal dimension at higher step-size values was found to reach 1.7.)

6. Conclusions

Questions of how large volumes of people might egress, safely and efficiently, through cities following some critical event that has damaged the urban setting are of broad and compelling academic and practical relevance. Nevertheless, experimenting with egress scenarios for large populations in the real world is implausibly difficult, and whether people might respond tangibly to simulated hazards in experimental settings is an open question that has not, to our knowledge, been explored, and which would seem difficult to evaluate anyway. Real-world experiments do yield valuable information on people’s movement rates, congestion density, and metrics of egress paths, but they cannot probe other significant behavioral and cognitive factors that explain egress phenomena. Those factors are rooted in unrevealed ego-centric processes that govern the acquisition of information through perception, and in the choice and decision-making schemes that rely on that perception and the action that it affords individuals and groups.

In this paper, we have presented a modeling scheme that, at large, establishes a simulation environment and related analysis schemes as a synthetic sandbox that supports experiments on what people might perceive about their safety and egress potential within critical damage scenarios. Our key innovations in the modeling methodology are a focus on fine details of human automata (HA) and rigid body (RB) specification and behavior in simulation, which supports faithful representation of real-world perception and behavior; and the representation of a complete pipeline of phenomena in simulation, which allows us to trace cause and effect between dynamics of building collapse and infrastructural interruptions through to individual and collective consequences.

Because the HA in our model are synthetic, and because we have assembled all of their behaviors in the model, we have unprecedented access to what they are thinking and doing while in the simulation. Exploration with the model produced several findings that shed light on aspects of egress phenomena at the human-built interface. First, HA are keenly reactive to the built setting – their synthetic perception allows them to traverse broad as well as detailed features of the simulated city-space. Second, the collapse scenario produces system-wide influences with ramifications for egress, as well as local effects that are primary and intuitive. Building collapse also initiates several secondary effects on egress that are subtle and nuanced. Third, events that originate in the built environment are easily translated into the human environment, where they can produce fleeting results or where they can metamorphose into long-running and far-reaching patterns. Fourth, perception is key in mediating many of the phenomena that course across the built-human interface. Perception is also instrumental in codifying individual and local moments of human interaction into crowd-wide phenomena. Fifth, as is the case in many massively interactive phenomena, signatures of complexity can be found in our simulations of egress. Because of the exquisite level of detail in our model and because of our schemes for extracting and mapping HA perception, we can in many cases trace an indelible path from micro-to-macro frames of complex phenomena in our simulations. Sixth, and related to micro-macro linkages, we identified and framed the concept of perceptual shadowing as a mechanism by which critical events permeate across data, scale, and processes in egress phenomena.

Although the match between our simulation scenarios and real-world data is not ideal, we did report some success in generating realistic movement, and we have some ideas about how we might improve the model. We could do a lot more to improve the realism of the built model, to include structural details and synthetic materials that would allow us to produce more realistic urban damage scenarios, as well as soft-body collisions that would better represent dynamics of collapse and pounding. We could also do a much better job of representing streetscapes in the model, including adding street furniture and objects that would better capture some of the egress challenges that people might have when moving along streets. We could also run longer-run simulations that would account for several days of urban motifs, with more temporal resolution of human dynamics in and out of the urban area and perhaps scenarios that would account for aftershocks or delayed building damage. Our alpha-shaping exercise could be better honed to explore different decision points for shaping (our detailed model could theoretically support this), including a mixture of perception ingredients that account for normal movement (pre-collapse) and extraordinary movement (post-collapse). The behavioral model for our HA is a topic of continuing development for us, and immediate targets for improvement include the development of different forms of path-planning and the inclusion of emotional and inter-personal factors between HA-characters. We could also do more work to better represent other dynamics of the built environment, including mobile cars and traffic, as well as environmental effects such as dust produced by building collapse.

While the conclusions that the sandbox produces are subject to the caveats of the data that went into its simulations, and to the fidelity of the synthetic behaviors that it supports, our testing showed reasonable matches between patterns of movement behavior from the model and commensurate movement in the real world. Extensibility and detail are twin goals of our approach, with the result that there are many commonplace factors and phenomena that the reader might see as missing in our approach. We regard the extensibility of our model as an advantage over other, more general and/or simple models. Alas, this means that our model is prone to critiques of the form, ‘you should have added X, Y, and Z to your model’. Although we have some work to do to improve the realism of the model, the research we have discussed in this paper shows some promising avenues by which the research community might build tighter connections between the exhaustive reach and what-if inquiry of computer simulation on one hand, and the small windows of richly realistic data that next-generation observational work with high-resolution video, virtual reality, and neuroscience is advancing on the other. One of our opening arguments in introducing the work centered on the notion that our models could and should push to do more to support theoretical, practical, and what-if inquiry in simulation. We hope that the work that we have presented shines some light on how that cause might be accelerated.

Disclosure statement

No potential conflict of interest was reported by the author.

References

- Alam, Sajjad, and Konstadinos Goulias. 1999. “Dynamic Emergency Evacuation Management System Using Geographic Information System and Spatiotemporal Models of Behavior.” Transportation Research Record: Journal of the Transportation Research Board 1660: 92–99. doi: 10.3141/1660-12

- Badler, Norman I., Kamran H. Manoochehri, and Graham Walters. 1987. “Articulated Figure Positioning by Multiple Constraints.” IEEE Computer Graphics and Applications 7 (6): 28–38. doi: 10.1109/MCG.1987.276894

- Barsalou, Lawrence W. 2008. “Grounded Cognition.” Annual Review of Psychology 59 (1): 617–645. doi: 10.1146/annurev.psych.59.103006.093639

- Batty, Michael. 1997. “Predicting Where We Walk.” Nature 388 (6637): 19–20. doi: 10.1038/40266

- Batty, Michael, David Chapman, Steve Evans, Muki Haklay, Stefan Kueppers, Naru Shiode, Andy Smith, and Paul M. Torrens. 2001. “Visualizing the City: Communicating Urban Design to Planners and Decision-Makers.” In Planning Support Systems in Practice: Integrating Geographic Information Systems, Models, and Visualization Tools, edited by Richard K. Brail and Richard E. Klosterman, 405–443. Redlands, CA: ESRI Press and Center for Urban Policy Research Press.

- Batty, Michael, and Paul M. Torrens. 2001. “Modeling Complexity: The Limits to Prediction.” CyberGeo 201 (1), Online 1035.

- Batty, Michael, and Paul M. Torrens. 2005. “Modeling and Prediction in a Complex World.” Futures 37 (7): 745–766. doi: 10.1016/j.futures.2004.11.003

- Baudrillard, Jean. 1994. Simulacra and Simulation. Ann Arbor, MI: University of Michigan Press.

- Chen, M., H. Lin, L. He, M. Hu, and C. Zhang. 2013. “Real-Geographic-Scenario-Based Virtual Social Environments: Integrating Geography with Social Research.” Environment and Planning B: Planning and Design 40 (6): 1103–1121. doi: 10.1068/b38160

- Edelsbrunner, Herbert, David Kirkpatrick, and Raimund Seidel. 1983. “On the Shape of a Set of Points in the Plane.” IEEE Transactions on Information Theory 29 (4): 551–559. doi: 10.1109/TIT.1983.1056714

- Fiedrich, Frank, and Paul Burghardt. 2007. “Agent-Based Systems for Disaster Management.” Communications of the ACM 50 (3): 41–42. doi: 10.1145/1226736.1226763

- Filippidis, L., E. R. Galea, S. Gwynne, and P. J. Lawrence. 2006. “Representing the Influence of Signage on Evacuation Behavior within an Evacuation Model.” Journal of Fire Protection Engineering 16 (1): 37–73. doi: 10.1177/1042391506054298

- Gwynne, S., Edward R. Galea, M. Owen, P. J. Lawrence, and L. Filippidis. 1999. “A Review of the Methodologies Used in the Computer Simulation of Evacuation from the Built Environment.” Building and Environment 34: 741–749. doi: 10.1016/S0360-1323(98)00057-2

- Hanks, Thomas C., and A. Gerald Brady. 1991. “The Loma Prieta Earthquake, Ground Motion, and Damage in Oakland, Treasure Island, and San Francisco.” Bulletin of the Seismological Society of America 81 (5): 2019–2047.

- Hart, Peter E., Nils J. Nilsson, and Bertram Raphael. 1968. “A Formal Basis for the Heuristic Determination of Minimum Cost Paths.” IEEE Transactions on Systems Science and Cybernetics 4 (2): 100–107. doi: 10.1109/TSSC.1968.300136

- Hofinger, Gesine, Robert Zinke, and Laura Künzer. 2014. “Human Factors in Evacuation Simulation, Planning, and Guidance.” Transportation Research Procedia 2: 603–611. doi: 10.1016/j.trpro.2014.09.101

- Kaya, Ş., P. J. Curran, and G. Llewellyn. 2005. “Post-earthquake Building Collapse: A Comparison of Government Statistics and Estimates Derived from SPOT HRVIR Data.” International Journal of Remote Sensing 26 (13): 2731–2740. doi: 10.1080/01431160500099428

- Lin, Hui, and Michael Batty. 2011. Virtual Geographic Environments: A Primer. Redlands, CA: ESRI Press.

- Lin, H., M. Batty, S. E. Jørgensen, B. Fu, M. Konecny, A. Voinov, P. M. Torrens, et al. 2015. “Virtual Environments Begin to Embrace Process-Based Geographic Analysis.” Transactions in GIS 19 (4): 1–5. doi: 10.1111/tgis.12167

- Lin, Hui, Min Chen, and Guonian Lu. 2013a. “Virtual Geographic Environment: A Workspace for Computer-Aided Geographic Experiments.” Annals of the Association of American Geographers 103 (3): 465–482. doi: 10.1080/00045608.2012.689234

- Lin, Hui, Min Chen, Guonian Lu, Qing Zhu, Jiahua Gong, Xiong You, Yongning Wen, Bingli Xu, and Mingyuan Hu. 2013b. “Virtual Geographic Environments (VGEs): A New Generation of Geographic Analysis Tool.” Earth-Science Reviews 126 (1): 74–84. doi: 10.1016/j.earscirev.2013.08.001

- Nams, Vilis O. 1996. “The VFractal: A New Estimator for Fractal Dimension of Animal Movement Paths.” Landscape Ecology 11 (5): 289–297. doi: 10.1007/BF02059856

- NVIDIA. 2012. “PhysX.” Santa Clara, CA: NVIDIA Corporation.

- Pentland, Alex. 2014. Social Physics: How Good Ideas Spread – The Lessons from a New Science. New York, NY: Penguin.

- Petruccelli, Umberto. 2003. “Urban Evacuation in Seismic Emergency Conditions.” Institute of Transportation Engineers. ITE Journal 73 (8): 34–38.

- Reynolds, Craig W. 1987. “Flocks, Herds, and Schools: A Distributed Behavioral Model.” ACM SIGGRAPH Computer Graphics 21 (4): 25–34. doi: 10.1145/37402.37406

- Reynolds, Craig W. 1999. “Steering Behaviors for Autonomous Characters.” In Proceedings of Game Developers Conference, edited by Miller Freeman Game Group, 763–782. San Francisco, CA: Miller Freeman Game Group.

- Schneider, Volker, and Rainer Könnecke. 2010. “Egress Route Choice Modelling—Concepts and Applications.” In Pedestrian and Evacuation Dynamics 2008, edited by Wolfram W. F. Klingsch, Christian Rogsch, Andreas Schadschneider, and Michael Schreckenberg, 619–625. Berlin: Springer.

- Schneider, P. J., and B. A. Schauer. 2006. “HAZUS—Its Development and Its Future.” Natural Hazards Review 7 (2): 40–44. doi: 10.1061/(ASCE)1527-6988(2006)7:2(40)

- Sharma, S., and S. Gifford. 2005. “Using RFID to Evaluate Evacuation Behavior Models.” In NAFIPS 2005 - 2005 Annual Meeting of the North American Fuzzy Information Processing Society, Detroit, MI, June 26–28, 2005, edited by Dimitar Filev, 804–808. Piscataway, NJ: IEEE.

- Shiwakoti, Nirajan, Majid Sarvi, Geoff Rose, and Martin Burd. 2009. “Enhancing the Safety of Pedestrians during Emergency Egress.” Transportation Research Record: Journal of the Transportation Research Board 2137: 31–37. doi: 10.3141/2137-04

- Swan, Melanie. 2012. “Sensor Mania! The Internet of Things, Wearable Computing, Objective Metrics, and the Quantified Self 2.0.” Journal of Sensor and Actuator Networks 1 (3): 217–253. doi: 10.3390/jsan1030217

- Torrens, Paul M. 2007. “Behavioral Intelligence for Geospatial Agents in Urban Environments.” In IEEE Intelligent Agent Technology (IAT 2007), edited by Tsau Young Lin, Jeffrey M. Bradshaw, Matthias Klusch, and Chengqi Zhang, 63–66. Los Alamitos, CA: IEEE.

- Torrens, Paul M. 2012. “Moving Agent Pedestrians through Space and Time.” Annals of the Association of American Geographers 102 (1): 35–66. doi: 10.1080/00045608.2011.595658

- Torrens, Paul M. 2014a. “High-Fidelity Behaviours for Model People on Model Streetscapes.” Annals of GIS 20 (3): 139–157. doi: 10.1080/19475683.2014.944933

- Torrens, Paul M. 2014b. “High-Resolution Space–Time Processes for Agents at the Built–Human Interface of Urban Earthquakes.” International Journal of Geographical Information Science 28 (5): 964–986. doi: 10.1080/13658816.2013.835816

- Torrens, Paul M. 2015. “Slipstreaming Human Geosimulation in Virtual Geographic Environments.” Annals of GIS 21 (4): 325–344. doi: 10.1080/19475683.2015.1009489

- Torrens, Paul M. 2016a. “Computational Streetscapes.” Computation 4 (3): 37. doi: 10.3390/computation4030037

- Torrens, Paul M. 2016b. “Exploring Behavioral Regions in Agents’ Mental Maps.” The Annals of Regional Science 57 (2): 309–334. doi: 10.1007/s00168-015-0682-0

- Torrens, Paul M., and Itzhak Benenson. 2005. “Geographic Automata Systems.” International Journal of Geographical Information Science 19 (4): 385–412. doi: 10.1080/13658810512331325139

- Torrens, Paul M., and Atsushi Nara. 2013. “Polyspatial Agents for Multi-Scale Urban Simulation and Regional Policy Analysis.” Regional Science Policy and Practice 44 (4): 419–445.

- Torrens, Paul M., Atsushi Nara, Xun Li, Haojie Zhu, William A. Griffin, and Scott B. Brown. 2012. “An Extensible Simulation Environment and Movement Metrics for Testing Walking Behavior in Agent-Based Models.” Computers, Environment and Urban Systems 36 (1): 1–17. doi: 10.1016/j.compenvurbsys.2011.07.005

- Tuan, Yi-Fu. 1975a. “Images and Mental Maps.” Annals of the Association of American Geographers 65 (2): 205–212. doi: 10.1111/j.1467-8306.1975.tb01031.x

- Tuan, Yi-Fu. 1975b. “Place: An Experiential Perspective.” Geographical Review 65: 151–165. doi: 10.2307/213970