ABSTRACT

Cloud computing has been considered the next-generation computing platform, with the potential to address the data and computing challenges in geosciences. However, only a limited number of geoscientists have adopted this platform for their scientific research, mainly because of two barriers: (1) selecting an appropriate cloud platform for a specific application can be challenging, as various cloud services are available; and (2) existing general cloud platforms are not designed to support geoscience applications, algorithms, and models. To tackle these barriers, this research aims to design a hybrid cloud computing (HCC) platform that can utilize and integrate computing resources across different organizations to build a unified geospatial cloud computing platform. This platform can manage different types of underlying cloud infrastructure (e.g., private or public clouds) and enables geoscientists to test and leverage cloud capabilities through a web interface. Additionally, the platform provides geospatial cloud services, such as workflow as a service, on top of the common cloud services (e.g., infrastructure as a service) provided by general cloud platforms. Geoscientists can therefore easily create a model workflow by recruiting the needed models for a geospatial application or task on the fly. An HCC prototype is developed, and dust storm simulation is used to demonstrate the capability and feasibility of such a platform in facilitating geosciences by leveraging cross-organization computing and model resources.

1. Introduction

With the capability of pooling, sharing, and integrating the latest computing technologies, and of deploying physically distributed computing resources, cloud computing has become a promising computing infrastructure to support scientific applications and knowledge discovery. The benefits that cloud computing brings to scientific research are advancing, and even helping to redefine, the possibilities of geosciences and Digital Earth for the twenty-first century (Yang, Goodchild, et al. 2011). For example, cloud computing advances the development of Digital Earth by contributing to the establishment of a flexible system architecture with a thin client and a scalable cloud-based server infrastructure, which manages data and processing resources on remote servers accessed over the Internet (Gould et al. 2008; Yang, Xu, and Nebert 2013; Guo et al. 2014; Li et al. 2014).

Indeed, cloud computing removes the technical barrier of managing traditional computing infrastructure and makes computing more accessible to end users and scientists who conduct computational or data-intensive studies. However, several barriers are hindering geoscientists from transitioning from traditional computing to cloud computing, including:

The development of various cloud solutions raises the challenges of selecting an appropriate cloud that meets the general hardware and software needs of applications and of switching between different clouds when needed. Most commercial computing service providers are now moving from the traditional computing paradigms to the cloud computing paradigm, represented by Amazon Elastic Compute Cloud (EC2), Windows Azure, and Google App Engine, to name a few. Additionally, tools and technologies (e.g. Eucalyptus and CloudStack) have been developed to transform an organization’s existing computing infrastructure into a private cloud computing platform (Sotomayor et al. 2009). As a result, many cloud solutions are available, each with unique strengths and limitations. Different cloud platforms vary in hosting solutions (i.e. public vs. private clouds), functionalities, supported services, performance, usability, etc. Selecting a proper cloud computing service therefore becomes a challenge for cloud consumers (Gui et al. 2014). Besides, the choice of cloud varies with the type of application, because applications have different requirements for computing resources (e.g. central processing unit (CPU), memory, storage, and network bandwidth). Finally, the user interfaces, tools, and application programming interfaces (APIs) through which users access and manipulate the services of different cloud platforms often vary and are not compatible with each other. Therefore, it is challenging to switch from one cloud to another when users intend to explore different cloud solutions.

Geoscience applications have special requirements that are not met by a general cloud platform. Deploying geoscience applications onto the cloud enables users to take advantage of the elasticity, scalability, and high-end computing capabilities offered by cloud computing. However, in order to optimize the deployment, the spatiotemporal patterns and locations of computing resources, storage resources, applications, and users need to be considered (Yang, Goodchild, et al. 2011; Yang, Wu, et al. 2011). Geoscience applications feature different spatiotemporal patterns and different types of computational intensity, such as data, computing, or user access intensity (Yang, Goodchild, et al. 2011; Huang, Li, et al. 2013; Shook et al. 2016). As a result, a key technique for enabling optimal performance of geoscience applications is awareness of location, time, data, and computational intensity, achieved by leveraging spatiotemporal patterns (Yang et al. 2017). Without considering these spatiotemporal patterns and the computational requirements of an individual geospatial application, general cloud platforms are not well positioned to support geoscience algorithms, applications, and models. Correspondingly, spatial cloud services should be developed to handle different scenarios.

A cloud testbed environment in which geoscientists can explore this new computing paradigm is lacking. There is still a learning curve in acquiring enough cloud computing background and techniques to embrace this new computing platform for scientific research (Yang et al. 2017). Deploying a geospatial application onto the cloud may take several days or even weeks (Huang, Yang, Benedict, Chen, et al. 2013). Moreover, public cloud platforms are usually not free for geoscientists to deploy and test applications. At the same time, only a few research groups are able to build a private cloud platform to explore the capabilities of clouds, because sophisticated tools and high-end physical infrastructure are required. Even though emerging cloud-enabling tools and technologies are available to build a community or private cloud platform (Sotomayor et al. 2009), users still need to be familiar with these tools to establish a cloud infrastructure (Nurmi et al. 2009).

Recognizing these barriers, this research designs a framework to integrate the computing and model resources across different organizations for building a hybrid cloud computing (HCC) platform. Such a platform integrates and delivers computing and spatial model resources to geoscientists for convenient exploration and utilization of cloud capabilities. While an HCC mostly includes computing resources from a traditional computing infrastructure (clusters) and public clouds (Zhang et al. 2009; Li, Wang, et al. 2013), the proposed HCC is a cross-computing environment with a mix of private clouds built upon open-source cloud solutions (e.g. Eucalyptus) and public clouds. Note also that geospatial algorithms, tools, libraries, services, and traditional simulation and statistical models are all referred to as models in this paper.

Within this HCC framework, a cloud middleware is implemented to communicate with the different underlying cloud infrastructures and to provide a set of geospatial cloud services, such as geoprocessing as a service (GaaS), model as a service (MaaS), and workflow as a service (WaaS), for geospatial processing and modeling. Different types of services offer varying functions to address different geospatial problems. Through WaaS, for example, geoscientists can easily create a workflow that chains the models needed for a specific application on the fly. At the same time, such an application can leverage elastic and on-demand computing resources, as on a general cloud platform. A proof-of-concept HCC prototype is also developed based on this framework. Additionally, a typical geoscience application, dust storm model simulation and visualization, is used as an example to demonstrate that building such a geospatial cloud platform can facilitate geosciences and address some of the key barriers stated earlier by providing the following functions: (1) integrating different types of clouds in a common platform so that geoscientists can easily choose and adopt a proper cloud solution for different applications without spending much time on learning new clouds, (2) supporting geospatial data processing, web applications, model runs, and decision-making in clouds with predesigned geospatial cloud services, and (3) delivering shared computing and model resources across the geoscience communities to help geoscientists learn, test, explore, and leverage cloud capabilities easily.

2. Literature review

2.1. Cloud computing for geosciences

Traditionally, high-performance computing clusters or large-scale grid computing infrastructures, such as TeraGrid (Beckman 2005) and Open Science Grid (Pordes et al. 2007), have been widely used to help geoscience applications and models meet their computational challenges (Bernholdt et al. 2005; Fernández-Quiruelas et al. 2011; Yang, Wu, et al. 2011). However, these computing infrastructures require dedicated hardware and software with large investment and long-term maintenance, and take a long time to build. Alternatively, geoscientists can run applications (e.g. weather forecasting models; Massey et al. 2015) in a loosely coupled computing resource pool contributed by citizens, known as the citizen (or volunteer) computing paradigm (Anderson and Fedak 2006). While such an infrastructure provides a potential solution for high-throughput tasks, its computing resources are not reliable, as citizens may terminate the assigned tasks at any time. Additionally, it is challenging to collect Big Data (e.g. global climate simulation output) from the citizens because of the limited bandwidth between the public clients and the centralized servers responsible for storing and managing the model output (Li et al. 2017). Therefore, volunteer computing is not suitable for time-critical tasks that must be completed within a tight period.

A common limitation of these traditional computing paradigms (e.g. cluster, grid, and citizen computing) is that users have little ability to customize the underlying computing resources for different applications, which may need completely different system environments. Since these computing paradigms do not provide an option for users to customize the underlying physical machines, a computing pool is often designed to run a specific type of computing task, such as message passing interface-based, MapReduce-based (Gao et al. 2014), or GPU-based tasks (Li, Jiang, et al. 2013; Guan et al. 2016; Tang and Feng 2017; Zhang, Zhu, and Huang 2017), without the agility to adapt to a different type of computing task. Correspondingly, the poor availability, accessibility, reliability, and adaptability of computing resources built upon these major computing paradigms hamper the advancement of geoscience and geospatial technologies.

As a result, cloud computing, characterized by on-demand self-service, availability, and elasticity (Mell and Grance 2009), emerged as a new computing paradigm that has matured and become widely used in the last few years. The forms of cloud service models mostly include Infrastructure as a Service (IaaS), Platform as a Service (PaaS), Software as a Service (SaaS), Data as a Service (DaaS), and MaaS (Li et al. 2017). The first three are defined by the National Institute of Standards and Technology (Mell and Grance 2009), and the last two are essential to geosciences. A very important feature of cloud computing, especially for IaaS, is that users can automatically provision computing resources to run computing tasks for maximum efficiency and scalability in minutes, instead of spending time waiting in a queue for the limited computing pool of the traditional computing paradigms. Therefore, cloud computing is a powerful and affordable alternative for running large-scale simulations and computations (Huang, Yang, Benedict, Chen, et al. 2013; Huang, Cervone, and Zhang 2017).

Many studies have leveraged cloud computing to support geoscience applications and learn how to best adapt to this new computing paradigm (Vecchiola, Pandey, and Buyya 2009; Huang et al. 2010; Yang, Goodchild, et al. 2011; Zhang, Huang, et al. 2016; Huang, Cervone, and Zhang 2017; Tang et al. 2017). Indeed, these studies have well demonstrated that cloud computing brings exciting opportunities for geoscience applications with (Yang, Goodchild, et al. 2011; Huang, Yang, Benedict, Chen, et al. 2013): (1) easy and fast access to computing resources available in seconds to minutes, (2) elastic computing resources to handle spikes in computing loads, (3) high-end computing capacity to address large-scale computing demands, and (4) distributed services and computing to handle distributed geoscience problems, data, and users.

However, previous studies mostly focus on using or developing a specific cloud service, such as MaaS (Li et al. 2017). These cloud services are primarily dedicated to supporting one type of geoscience application, such as spatial web applications (Huang et al. 2010) or climate models (Evangelinos and Hill 2008; Li et al. 2017). Therefore, a more general solution is needed to flexibly handle the computing challenges raised by different types of geoscience applications. Additionally, most geoscientists have not adopted this platform for their scientific research for the three aforementioned reasons (see Section 1). As such, Yang et al. (2017) concluded that an innovative cloud architecture and framework should be developed to synthesize computing and model resources across different organizations and deliver these resources to geoscientists for convenient exploration and use of cloud computing infrastructure and scientific models.

This research, therefore, aims to design a framework that can utilize both the computing and model resources across different organizations to build an HCC platform that can be shared within the geoscience communities. This framework can integrate, manage, and access different underlying cloud infrastructures and geospatial model resources within a common geoscience cloud platform and, at the same time, provide and deliver computing resources in an on-demand fashion. Such a cloud platform will also remove the barriers for geoscientists to learn and explore cloud capabilities, and eventually enhance the use and adoption of cloud computing for geospatial applications.

2.2. Data and model accessibility, interoperability, and integration

There is a rich history of developing accessible and interoperable models to support scientific simulation, predating the wide use of cloud computing in geoscience communities. The concept of the Model Web, an open-ended system of interoperable computer models and databases, was proposed to enable geoscience communities to collaborate in a system consisting of independent but interactive models (Geller and Turner 2007). Since then, it has been considered a potential tool for building geoscience models by combining individual components in complex workflows (Bastin et al. 2013). A variety of studies have also been conducted to promote data and model interoperability (Geller and Melton 2008; Castronova, Goodall, and Elag 2013; Nativi, Mazzetti, and Geller 2013). Progress has also been made in enhancing the accessibility of model output, for example, through the Program for Climate Model Diagnosis and Intercomparison (Williams 1997).

However, the Model Web concept aimed to improve model integration and interoperability across various disciplines and fields. Additionally, research along this line primarily utilized open standards to achieve model interoperability but ignored the computing issues posed by an interactive online modeling system. As an evolution of the Model Web, the MaaS concept was introduced by Roman et al. (2009), but without details about how such a concept can be implemented with the latest information and computing techniques.

To sum up, previous studies have limitations in handling the underlying computing infrastructure requirements of, for example, running a scientific model, and therefore leave computational challenges (e.g. data, computing, and communication intensity) unaddressed. Correspondingly, Li et al. (2017) extended the Model Web concept and implemented the idea of MaaS by utilizing cloud computing services. Different from the Model Web concept, which focuses on model integration and interoperability, the proposed MaaS leverages cloud computing to address the model computability challenges over the Internet for the public. Li et al. (2015) also proposed a scientific workflow framework for big geoscience data analytics by leveraging cloud computing, MapReduce, and Service-Oriented Architecture. However, these studies did not address the interoperability and automatic integration of models across different organizations and knowledge domains.

Therefore, the proposed HCC framework aims to promote the sharing and interoperability of models, enhance geoprocessing capabilities, and ease the procedure of model configuration and runs by building a set of geospatial cloud services (e.g. WaaS). These services are accessible and runnable in a plug-and-play fashion in a user-friendly spatial web portal (SWP). As a result, geoscientists can easily utilize cloud capabilities to support scientific applications within the HCC platform, which bridges the gap between general cloud solutions and the needs of geospatial applications.

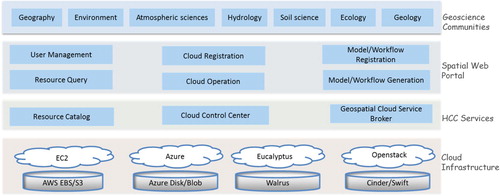

3. An HCC framework

The HCC framework integrates and manages a pool of community computing resources and common geospatial models contributed by different users and organizations. Originally, these computing resources and models are distributed across custodians’ mainframes or data servers and are accessible only internally. With an HCC platform, which is a shared infrastructure, geoscientists, government agencies, and partners, as well as the general public, can contribute and consume these common resources more effectively. The system architecture of the HCC platform is designed to include four independent but collaborative layers (Figure 1): the cloud infrastructure layer, the HCC services layer, the SWP, and the geoscience community in the upper layer.

The cloud infrastructure layer integrates the cloud computing resources (e.g. virtual machine (VM), storage, and networking), which could be created from private or public clouds. Within this framework, all major public or private cloud resources can contribute to building a large computing pool.

The HCC services layer is the key component of the HCC framework, by enabling the computing and model resources to be integrated within a common and shared geoscience cloud platform.

SWP controls the user management, cloud resource registration, cloud computing and model resource query, model workflow generation, common cloud operations, and finally model registration.

Geoscience communities contribute and share both computing and model resources within the HCC platform.

In this architecture, the cloud infrastructure and geoscience community layers are relatively straightforward. The core components are the functions and services developed in the HCC services and SWP layers, which implement and provide the capabilities of efficient computing and modeling in the clouds. These components are elaborated below.

3.1. Spatial web portal

The SWP is a web-based spatial gateway for registering, discovering, managing, and aggregating computing or model resources. With the SWP, public users can search the resource catalog (Figure 1) to discover the currently available computing and geospatial model resources. Cloud consumers can access and manipulate the underlying cloud resources or utilize built-in cloud services for a specific application, for example, building a task workflow by connecting currently available models. The SWP thus acts as a broker between resource providers and consumers. Specifically, the following modules are included within the SWP layer:

User management: The user management module manages the accounts for different types of end users, including cloud resource contributors (i.e. users who register and contribute computing and model resources), cloud resource consumers (i.e. users who submit geospatial tasks), and cloud administrators (i.e. users who can access and manage both computing resources and tasks).

Resource query: A resource query module supports searching the resource catalog (in the HCC services layer) for multiple resources, such as VM types, virtual machine images (VMIs), data centers near a particular location, and geoscience models.

Cloud registration: A cloud resource registration module is designed for users to register various types of cloud computing resources across different organizations to build a large computing resource pool.

Cloud operation: This module provides functions for provisioning, managing, and operating cloud resources and networking. Through the web portal, cloud consumers can easily perform basic cloud operations, such as creating and deleting cloud resources (e.g. VM and VMI), or configuring networking services for VMs and storage. Some advanced services for a cloud platform, such as VM access management, firewall management, load balancing, and elasticity, can also be provided for cloud consumers to operate the cloud resources. The module provides a uniform user interface for performing these basic operations on different clouds, thereby hiding the heterogeneity and complexity of different clouds from the cloud consumers.

Model/workflow generation: This module enables scientists to launch a currently available model, or to create a workflow by chaining a sequence of available models built into the VMIs. The output of one model feeds into another model as input. This module implements functions similar to those of the ArcGIS ModelBuilder, which can create workflows that string together sequences of geoprocessing tools, except that our model generator is capable of leveraging geospatial models created by the entire geoscience community. The computing requirements and constraints for running each model within the workflow can also be specified through the portal.

Model/workflow registration: Finally, after customizing, testing, and validating the generated service workflow, users can register the new workflow to the system, and then share it with the geoscience communities. Additionally, the cloud contributors can also directly register a new model to the system. Upon the registration of the model, proper metadata and configurations about the model should be provided and recorded in the resource catalog. Therefore, other cloud consumers can search and leverage the new model without extra configuration and customization efforts.
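The model chaining performed by the model/workflow generation module can be illustrated with a minimal Python sketch. The `ModelStep` class, the step names, and the toy functions are hypothetical stand-ins introduced only for illustration; they are not part of the HCC prototype. Each step simply consumes the output of the previous step, and `min_cpus` stands in for a per-model computing requirement.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class ModelStep:
    """One model in a workflow (hypothetical stand-in for a model packaged in a VMI)."""
    name: str
    run: Callable[[Any], Any]
    min_cpus: int = 1  # illustrative computing requirement for this step


def run_workflow(steps: List[ModelStep], initial_input: Any) -> Any:
    """Run the steps in order, feeding each output into the next step as input."""
    data = initial_input
    for step in steps:
        data = step.run(data)
    return data


# Toy stand-ins for a preprocessing tool, a simulation model, and a summary step.
workflow = [
    ModelStep("preprocess", lambda xs: [x for x in xs if x is not None]),
    ModelStep("simulate", lambda xs: [x * 2.0 for x in xs], min_cpus=4),
    ModelStep("summarize", lambda xs: sum(xs) / len(xs)),
]

result = run_workflow(workflow, [1.0, None, 3.0])
```

In a real deployment each step would run on a VM launched from the corresponding VMI, and the data handed between steps would be files or service endpoints rather than in-memory lists.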

3.2. HCC Services

The HCC services layer handles the requests and operations (e.g. launching a VM) issued from the SWP layer. This layer interacts with different clouds, and hides their heterogeneity, through the cloud control center module. It provides a unified interface to these different cloud platforms, which are typically implemented with different virtualization solutions and accessed using highly varied user interfaces, command lines, APIs, or plug-ins, for example, the hybridfox plug-in (https://www.openhub.net/p/hybridfox) to access Amazon EC2. The resource catalog records and stores information about the cloud platforms and associated cloud resources voluntarily contributed by geoscience communities, the profiles of users, and the registered geospatial models. The geospatial cloud service broker validates the cloud services requested by cloud consumers and creates a task for each request to the computing pool.

3.2.1. Geospatial cloud service broker

A variety of geoscience applications can be supported with a set of developed geospatial cloud services, including:

Elastic service: This service facilitates the configuration of scalable cloud resources for a geoscience application that needs to elastically bring up more computing resources to satisfy spike or disruptive computing requirements (Huang, Yang, Benedict, Chen, et al. 2013).

GaaS: GaaS supports the processing of geospatial data before they can be used for modeling, data analysis, and data mining.

MaaS: Similar to the solution proposed by Li et al. (2017), the proposed service aims to address the model computability challenges over the Internet for the public.

WaaS: This service eases the procedure of model configuration, and facilitates the scientific model runs.

Except for those of several major providers, most cloud solutions do not directly support elasticity and require users to implement this capability programmatically through the provided APIs. Therefore, advanced cloud services, such as an easy and intuitive graphical user interface that enables cloud consumers to configure the elasticity service, should be developed. The proposed elastic service enables geoscientists to best leverage cloud capabilities by easily configuring the cloud resources to balance the workload between VMs and by automatically scaling the number of VMs up and down according to the computing tasks that arrive dynamically.
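The scaling decision behind such an elastic service can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the rule used in the prototype: the function names, the fixed `tasks_per_vm` capacity, and the `min_vms`/`max_vms` bounds are all hypothetical.

```python
import math


def desired_vm_count(pending_tasks: int, tasks_per_vm: int,
                     min_vms: int = 1, max_vms: int = 10) -> int:
    """Return how many VMs should be running for the current task backlog."""
    if tasks_per_vm <= 0:
        raise ValueError("tasks_per_vm must be positive")
    needed = math.ceil(pending_tasks / tasks_per_vm)
    # Clamp to the configured bounds so the pool never empties or grows unbounded.
    return max(min_vms, min(max_vms, needed))


def scaling_action(current_vms: int, pending_tasks: int, tasks_per_vm: int = 4) -> int:
    """Positive result: VMs to launch; negative: VMs to terminate; zero: no change."""
    return desired_vm_count(pending_tasks, tasks_per_vm) - current_vms
```

For instance, with two running VMs and 20 pending tasks, `scaling_action(2, 20)` asks for three more VMs; with five running VMs and three pending tasks, `scaling_action(5, 3)` asks for four to be terminated. A production rule would typically also consider CPU load and a cool-down period to avoid oscillation.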

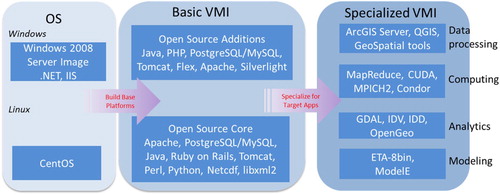

To provide GaaS, common geospatial data processing tools will be recorded and registered as various VMIs, and end users can choose an appropriate one based on the data to be processed and the required output format. To implement MaaS and WaaS, the system will also collect common geospatial models, for example, a dust storm simulation model (Huang, Yang, Benedict, Chen, et al. 2013) and a climate model (Li et al. 2017). Data access and visualization clients (e.g. ParaView clients; Cedilnik et al. 2006; Woodring et al. 2011) and data service brokers can be developed by different organizations and shared within the communities. Within this architecture, geoscience applications can be quickly developed by integrating these available geospatial models as their components in the cloud. These models are also hardened and shared in the format of VMIs. Figure 2 shows the strategy and workflow for developing cloud VMIs.

A bare metal operating system (OS) VMI can be selected for each of two common OSs: a version of Windows Server and a version of enterprise Linux. A set of common libraries can be included as the baseline for creating more advanced images.

Basic VMIs should be built by selecting and adding key open-source components commonly used in geoscience applications on both OSs. Open source is a must at this stage to avoid carrying licensing costs into the next level of specialization.

Each basic VMI can be then further customized to create specialized VMIs for different geoscience applications, forming both an open source and proprietary variant of target platforms. Those specialized VMIs are equipped with varying geospatial open-source libraries, tools, software, and models. Additionally, different open-source computing middleware solutions and libraries, such as Apache Hadoop (https://hadoop.apache.org/), Condor (http://research.cs.wisc.edu/htcondor/), and MPICH (https://www.mpich.org/), are also configured as the specialized VMIs to support different types of computing tasks.

Figure 2. The workflow to build basic and specialized images used for different types of geospatial cloud services.

Based on the functions of the configured software, tools, and models (Figure 2), specialized images can be classified as data processing, computing, analytics, and modeling VMIs. Note that a VMI could belong to two or more categories. A specialized image that includes a specific model can be used alone (i.e. MaaS) to create an application capable of provisioning elastic and distributed computing resources to deal with the intensities of data, computing, and user access. The images can also be combined to develop a complex workflow of services (i.e. WaaS) through the web portal. For example, if geoscientists want to predict the future global temperature with a doubled amount of CO2, or explore the impact of different parameterizations on the climate model output, they can integrate the climate model (a modeling VMI) and ParaView (Cedilnik et al. 2006; an analytics VMI), with the climate model simulating the climate change and ParaView visualizing the model results. If a model image (e.g. climate model) is not directly available, users can select an image (basic or specialized VMI) in which the dependent libraries and software packages are preinstalled, so that minimal customization is needed to deploy the model.

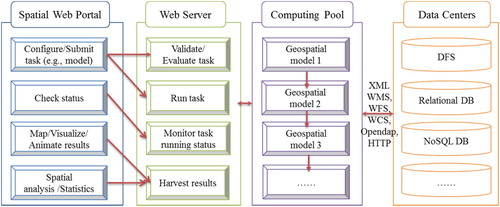

Figure 3 shows the workflow of enabling geospatial cloud services in the HCC services layer. In this workflow, the client is the SWP (Section 3.1), through which users configure, run, and check geospatial tasks. Users from a variety of communities configure and build a job through the web client. The web server accepts and parses users’ requests and forwards them to the computing pool. After a task is submitted to the web server, the task validator and evaluator are invoked to analyze whether the request can be satisfied in terms of both computing constraints and model constraints, based on the user’s input.

Figure 3. Workflow of providing geospatial services in clouds through the geospatial cloud service broker.
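The check performed by the task validator and evaluator can be sketched as follows. The data classes, field names, and the two constraints tested here (model registration, and CPU and memory capacity) are assumptions chosen for illustration; the actual validator may examine further constraints.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass
class VMType:
    """A VM type offered by the computing pool (hypothetical fields)."""
    name: str
    cpus: int
    memory_gb: int


@dataclass
class TaskRequest:
    """A user request parsed by the web server (hypothetical fields)."""
    model: str
    min_cpus: int
    min_memory_gb: int


def validate_task(task: TaskRequest, registered_models: Set[str],
                  vm_types: Iterable[VMType]) -> List[str]:
    """Return a list of problems; an empty list means the request can be satisfied."""
    problems = []
    # Model constraint: the requested model must exist in the resource catalog.
    if task.model not in registered_models:
        problems.append(f"model '{task.model}' is not registered")
    # Computing constraint: at least one VM type must be large enough.
    if not any(vm.cpus >= task.min_cpus and vm.memory_gb >= task.min_memory_gb
               for vm in vm_types):
        problems.append("no VM type satisfies the computing constraints")
    return problems


catalog_models = {"dust_storm", "climate"}
pool = [VMType("small", 2, 4), VMType("large", 16, 64)]
ok = validate_task(TaskRequest("dust_storm", 8, 32), catalog_models, pool)
bad = validate_task(TaskRequest("ocean", 32, 128), catalog_models, pool)
```

Returning the full list of problems, rather than failing on the first one, lets the portal report every unmet constraint to the user in a single response.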

The computing pool, collaboratively contributed and registered by the geoscience communities, is used to run the models. After the server validates a user's request, it generates a model running task and passes the task to the hybrid cloud control center (Section 3.2.2), which initiates the process of selecting and matching computing resources, launching VMs from VMIs in which the selected models are preinstalled, and dispatching the task to the VMs. The cloud control center is in charge of the overall scheduling of all incoming jobs.

The fourth component of the workflow is the data centers, which can communicate with the computing pool through a variety of protocols and standards, such as the Open Geospatial Consortium (OGC) specifications, for example, Web Map Service (WMS) and Web Coverage Service (WCS), depending on the model input and output formats. This workflow (Figure 3) makes extensive use of OGC open standards. Those standards can integrate data from different organizations and locations in different formats, such as NetCDF, GRIB, and HDF-EOS, as input for initialization, and support the running of different models. Within this workflow, standard input and output file formats should be implemented and utilized for the involved models so that the output of one can be used as the input to another. In this case, all the models should be able to ingest data delivered through common service interfaces, such as WMS and WCS, as model inputs. Additionally, they can produce results in commonly used formats (e.g. NetCDF) that can be stored in a distributed file system (Shvachko et al. 2010), or deliver the results into a spatial database managed by either a Not Only SQL (NoSQL) or a relational database management system (DBMS). Those datasets can also be shared as OGC standard services, and therefore can be quickly ingested into an existing workflow and used by other models as input. Section 5 introduces an example of leveraging the HCC services.
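As an illustration of how a model might request its input through an OGC interface, the sketch below builds a WCS 2.0.1 GetCoverage request in key-value-pair form. The endpoint URL, the coverage identifier, and the axis labels `Lat` and `Long` are hypothetical; axis labels and supported output formats depend on the server and the coverage, so they would normally be read from the server's capabilities and coverage descriptions first.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def build_wcs_getcoverage_url(endpoint, coverage_id, lat_range, lon_range,
                              fmt="application/x-netcdf"):
    """Build a WCS 2.0.1 GetCoverage URL that subsets a coverage by latitude and longitude."""
    params = [
        ("service", "WCS"),
        ("version", "2.0.1"),
        ("request", "GetCoverage"),
        ("coverageId", coverage_id),
        # Axis labels are an assumption; real labels come from DescribeCoverage.
        ("subset", f"Lat({lat_range[0]},{lat_range[1]})"),
        ("subset", f"Long({lon_range[0]},{lon_range[1]})"),
        ("format", fmt),
    ]
    return f"{endpoint}?{urlencode(params)}"


# Hypothetical endpoint and coverage name, used only to show the resulting URL.
url = build_wcs_getcoverage_url(
    "https://example.org/wcs", "dust_concentration", (30, 40), (-115, -100)
)
query = parse_qs(urlparse(url).query)
```

The sketch only constructs the URL; fetching the coverage and handing the returned NetCDF file to the next model in the workflow is left out.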

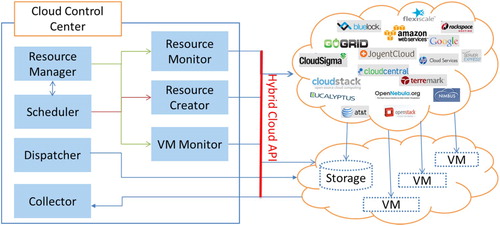

3.2.2. Cloud control center

The cloud control center is responsible for managing the underlying cloud computing resources, such as VMs, machine images, virtual storage, and computing tasks. The hybrid cloud API, which communicates with different clouds in the HCC service layer, is the core of the cloud control center. Typically, each cloud provides APIs for managing its services, and these can be used in several ways: sending requests to the API manually, using a web console, or calling the API programmatically. Whenever an operation on the cloud services (e.g. creating a VM) is performed through the web console, a series of API calls is in fact sent to the cloud provider in the background. In other words, an application can automate any cloud resource operation on a specific cloud platform or provider, from storage access to machine-learning-based image analysis, by calling its APIs (Google Cloud APIs 2017). However, cloud APIs differ considerably from one cloud platform to another. Correspondingly, the hybrid cloud API layer is developed to interact with the APIs of different clouds. Within our work, it is implemented on top of the Dasein Cloud libraries (https://github.com/greese/dasein-cloud-core), Java-based libraries that enable interaction with a variety of clouds. The hybrid cloud API layer provides an abstraction interface and handles the implementation details of the different underlying cloud platforms. The process by which the cloud control center runs a computing task is generally as follows.
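The idea of one abstraction interface in front of several provider-specific APIs can be illustrated with a minimal Python sketch. It is not the Dasein Cloud API and not the prototype's code: the class names (`CloudAdapter`, `InMemoryAdapter`, `HybridCloudAPI`), the provider labels, and the image name are all hypothetical, and the adapter only simulates a provider in memory where a real one would issue that provider's API calls.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from itertools import count


@dataclass
class VM:
    vm_id: str
    provider: str
    image_id: str
    vm_type: str
    state: str = "running"


class CloudAdapter(ABC):
    """Common interface the control center programs against (hypothetical)."""

    name: str

    @abstractmethod
    def create_vm(self, image_id: str, vm_type: str) -> VM: ...

    @abstractmethod
    def terminate_vm(self, vm: VM) -> None: ...


class InMemoryAdapter(CloudAdapter):
    """Stand-in for a provider-specific adapter; a real one would call the provider's API."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._ids = count(1)

    def create_vm(self, image_id: str, vm_type: str) -> VM:
        return VM(f"{self.name}-{next(self._ids)}", self.name, image_id, vm_type)

    def terminate_vm(self, vm: VM) -> None:
        vm.state = "terminated"


class HybridCloudAPI:
    """Routes each operation to the adapter registered for the target cloud."""

    def __init__(self) -> None:
        self._adapters = {}

    def register(self, adapter: CloudAdapter) -> None:
        self._adapters[adapter.name] = adapter

    def create_vm(self, provider: str, image_id: str, vm_type: str) -> VM:
        return self._adapters[provider].create_vm(image_id, vm_type)

    def terminate_vm(self, vm: VM) -> None:
        self._adapters[vm.provider].terminate_vm(vm)


# Usage: the caller never touches provider-specific code.
api = HybridCloudAPI()
api.register(InMemoryAdapter("private-eucalyptus"))
api.register(InMemoryAdapter("public-ec2"))
vm = api.create_vm("public-ec2", image_id="eta-8bin-image", vm_type="large")
```

Under this design, supporting another cloud means writing one more adapter, while the code that schedules and dispatches tasks stays unchanged.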

The resource manager discovers the current state and availability of resources, such as the availability of clouds in which to create VMs, the publicly accessible network, and the private IPs assigned to the VMs so that they can exchange data and model resources privately. The resource monitor provides the functionality to monitor the computing resources from different contributors, and the VM monitor offers the capability to monitor the VMs. If all the resources required to run a task are available, the resource manager passes the task to the scheduler, which puts it into a ready queue. Protocols are used to determine the priority of the tasks according to parameters such as the time the request was received, importance, and emergency. When the task is ready to run, the scheduler invokes the resource creator to create the requested number of VMs, and the VMs are added to the lists of both the resource manager and the VM monitor for management and monitoring. Finally, the task is dispatched to those virtual resources through the network.
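The ready queue described above can be sketched as a priority queue. The ordering used here (emergencies first, then higher importance, then earlier arrival) is an assumption made for illustration; the text names the parameters but does not specify how the prototype combines them. The class and task names are hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class QueuedTask:
    sort_key: tuple
    name: str = field(compare=False)


class Scheduler:
    """Ready queue that pops tasks in priority order."""

    def __init__(self):
        self._queue = []
        self._seq = count()  # tie-breaker that preserves submission order

    def submit(self, name, received_at, importance=0, emergency=False):
        # Smaller keys pop first: emergencies, then higher importance,
        # then earlier arrival time.
        key = (0 if emergency else 1, -importance, received_at, next(self._seq))
        heapq.heappush(self._queue, QueuedTask(key, name))

    def next_task(self):
        """Return the name of the next task to run, or None if the queue is empty."""
        return heapq.heappop(self._queue).name if self._queue else None


scheduler = Scheduler()
scheduler.submit("routine-forecast", received_at=100.0, importance=1)
scheduler.submit("dust-storm-alert", received_at=105.0, importance=1, emergency=True)
scheduler.submit("reanalysis", received_at=90.0, importance=3)
order = [scheduler.next_task() for _ in range(3)]
```

With these inputs the emergency task is dispatched first even though it arrived last, and the remaining tasks follow by importance.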

To sum up, this architecture is flexible and extensible, so the HCC service layer can be continually improved based on the requirements of the geoscience community. During the HCC platform test and validation phase, geoscience communities can build applications on the HCC platform and provide feedback on the adjustments to the VMIs and the SWP interface needed to improve the accessibility and usability of HCC services. In addition, such an architecture can integrate the latest services and tools into the VMIs and serve them to broad geoscience communities.

4. Prototype and demonstration

4.1. System implementation

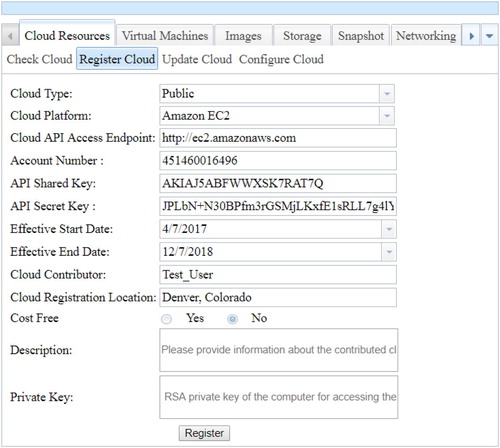

A proof-of-concept prototype has been implemented to demonstrate how to build an HCC platform for geoscience communities based on the proposed framework. Currently, the system supports several functions: registering a cloud computing pool, submitting a geospatial cloud service task, managing computing resources, and checking the running status of tasks. A cloud database stores the resource metadata, such as cloud platform information, model input configurations, and model output descriptions. A cloud resource registration component is designed to integrate various types of cloud resources across different organizations to build a large computing resource pool. Within this prototype, all major public (e.g. EC2 and Azure) or private cloud resources (e.g. Eucalyptus and CloudStack platforms) can be registered to the system. In this work, a private cloud computing platform built on Eucalyptus is used as the initial private cloud test platform, and Amazon EC2 is selected as the primary public cloud computing environment.

4.2. Integrating cloud resources

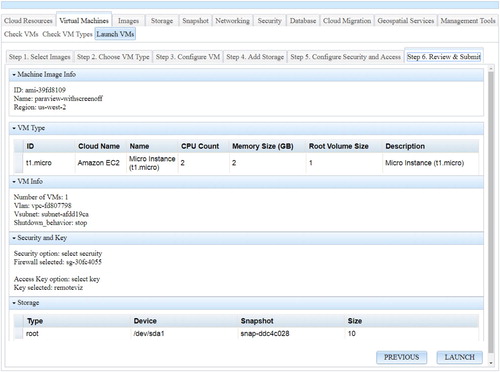

Within this system, there are three types of users: cloud consumers, cloud administrators, and cloud contributors. The cloud administrator supports and maintains the performance and security of the hybrid cloud, web, and database systems. The cloud consumer is the end user who accesses and utilizes the cloud and model resources. The cloud contributor can register a cloud computing pool to the system. In addition to the permission to register and update cloud resources, contributors are also granted all the privileges of a cloud consumer for accessing available cloud and model resources. While a cloud administrator is set up automatically when the system is established, the other two roles are specified by users when they sign up for an account. After a cloud contributor’s account is approved by the administrator, the user can register computing resources through the SWP. When registering a cloud to the system, the contributor should provide several required pieces of information, such as the cloud service type, cloud provider, and API access credentials; all of this metadata about the cloud platform is stored in the cloud catalog database. The contributor can also specify the credits (budget) for the associated cloud resource. The cloud control center automatically calculates the costs of each contributed cloud by using the pricing APIs of the cloud middleware. When the total cost of a cloud's usage exceeds the amount covered by the authorized credits, the cloud resource is disabled and an alert can be sent to the contributor, who in turn can either extend the credits or simply remove the cloud from public use through the update function. This mechanism helps prevent the registered public cloud resources from being overused.
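The credit mechanism can be made concrete with a small sketch. The names (`RegisteredCloud`, `record_usage`, `extend_credits`) and the flat hourly rate are assumptions for illustration; in the prototype the cost is obtained from the pricing APIs, whose details are not given here.

```python
from dataclasses import dataclass


@dataclass
class RegisteredCloud:
    name: str
    credits: float        # budget authorized by the contributor
    spent: float = 0.0
    enabled: bool = True


def record_usage(cloud, vm_hours, hourly_rate, alerts):
    """Add the cost of a usage record; disable the cloud once credits are exceeded."""
    if not cloud.enabled:
        raise RuntimeError(f"{cloud.name} is disabled")
    cloud.spent += vm_hours * hourly_rate
    if cloud.spent > cloud.credits:
        cloud.enabled = False
        alerts.append(f"{cloud.name}: spent {cloud.spent:.2f} of {cloud.credits:.2f} credits")


def extend_credits(cloud, extra):
    """Contributor raises the budget; the cloud is re-enabled if spending is covered."""
    cloud.credits += extra
    cloud.enabled = cloud.spent <= cloud.credits


alerts = []
cloud = RegisteredCloud("contributed-ec2", credits=10.0)
record_usage(cloud, vm_hours=32, hourly_rate=0.25, alerts=alerts)  # spent 8.0, within budget
record_usage(cloud, vm_hours=16, hourly_rate=0.25, alerts=alerts)  # spent 12.0, over budget
```

After the second record the cloud is disabled and one alert is queued for the contributor, who can then call `extend_credits` or remove the cloud.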

4.3. Cloud resource operation and management

There are several basic functionalities that both public and private clouds should support, such as creating and managing VMs, images, snapshots, volumes, and networking. Additionally, advanced services, such as VM access management and firewall management, are available in certain cloud platforms (e.g. Amazon EC2). Firewall management encompasses all security mechanisms related to the access and operation of the VM instances. Typically, all cloud platforms provide basic mechanisms for authenticating the users who manage the VM instances. For example, users can specify rules that affect access to all of their VM instances.

Through the cloud resource operation module, users can create and delete cloud resources such as VMs, images, storage, snapshots, and public IPs. As a practical example, Figure 6 shows the provision of a VM from Amazon EC2. The procedure starts with procuring a VM by choosing a cloud platform, an image with specific models, tools, or applications built in, and a VM type to be started. Several other parameters for storage, security, and VM access permission should be configured as well, such as the disk or storage size attached to the VM, firewall rules, and access keys to the VM. After the VM is running, it can be accessed as a remote computer, in the same way as a physical server. Users can directly run a built-in model or customize the VM by configuring the environment required to support an application.

Figure 6. The hybrid cloud platform enables a user-friendly interaction for resource provision from various cloud providers.
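The parameters collected during VM provisioning (cloud platform, image, VM type, storage size, firewall rules, and access key) can be gathered into a single request object and checked before submission. The sketch below is illustrative only: the field names, the validation rules, and the example values are assumptions, not the prototype's actual form fields.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FirewallRule:
    protocol: str       # e.g. "tcp"
    port: int
    source_cidr: str    # address range allowed to connect


@dataclass
class VMRequest:
    cloud: str
    image: str
    vm_type: str
    disk_gb: int
    access_key: str
    firewall: list = field(default_factory=list)


def check_request(req):
    """Return human-readable problems; an empty list means the request can be submitted."""
    problems = []
    for name in ("cloud", "image", "vm_type", "access_key"):
        if not getattr(req, name):
            problems.append(f"{name} must be set")
    if req.disk_gb <= 0:
        problems.append("disk_gb must be positive")
    for rule in req.firewall:
        if not 1 <= rule.port <= 65535:
            problems.append(f"invalid port: {rule.port}")
    return problems


request = VMRequest(
    cloud="Amazon EC2",
    image="eta-8bin-image",          # hypothetical image name
    vm_type="large",                 # hypothetical VM type label
    disk_gb=50,
    access_key="my-key",
    firewall=[FirewallRule("tcp", 22, "203.0.113.0/24")],
)
problems = check_request(request)
```

Validating the request up front gives the user feedback in the web interface before any provider API call is made.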

The selection of a cloud platform is determined by the supported applications and the improvements made to the application in comparison with a traditional computing platform (Dastjerdi, Garg, and Buyya 2011). Improvements can normally be achieved in application performance, availability, stability, security, and other aspects required by specific applications (Breskovic et al. 2011). However, the processes and steps for deploying a geospatial application or model onto the clouds depend on the selected cloud platform. Typically, the interfaces, command lines, or APIs for accessing the underlying cloud resources are unavoidably different, and therefore the deployment procedures vary across cloud platforms. The uniform interface provided in our framework for operating different clouds can therefore facilitate trial-and-error testing of applications in different clouds, and eventually help geoscientists select the cloud platform that maximizes the improvement. Within an HCC platform, advanced functions, such as migrating applications to a different cloud platform, are necessary.

5. Geospatial cloud services: an example of workflow as a service for geoscience application

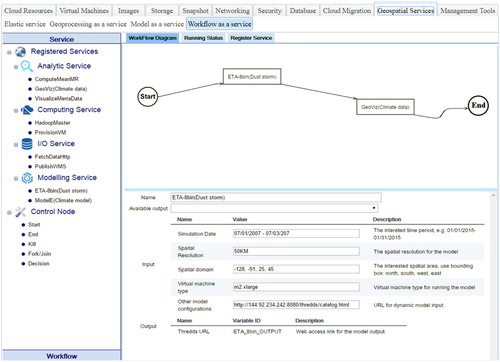

Within this section, we use WaaS as an example to demonstrate how geospatial cloud services can be used to facilitate scientific research and knowledge discovery. WaaS allows geoscientists to build a model workflow, which can recruit the models needed for a scientific application or task on the fly, and submit it to the cloud system. To build a workflow, users first select the relevant models registered as VMIs. As introduced in Section 3.2.1, VMIs encapsulate different open-source tools and scientific models, and are categorized into different types based on their functions, such as analytics and computing. Next, users set up the input parameters for each model within the workflow, such as simulation date, spatial resolution, and spatial domain. To execute the models in parallel, users also need to configure the computing resources that run the models by selecting appropriate VM types. The cloud-based workflow builder (Li et al. 2015) is adapted in this prototype to help users build the workflow in a drag-and-drop fashion.
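A workflow built this way can be thought of as an ordered list of steps, each naming a model, its VMI, a VM type, and input parameters. The sketch below shows one possible representation and a check that each model's output format is accepted by the next model. The `Step` structure, the image and VM type names, the parameter values, and the format labels are hypothetical; only the model names and the NetCDF hand-off come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    model: str                       # model name as registered in the platform
    vmi: str                         # image that has the model built in
    vm_type: str                     # computing resource chosen by the user
    params: dict = field(default_factory=dict)
    accepts: frozenset = frozenset() # input formats or interfaces the model can read
    produces: str = ""               # output format the model writes


def validate(steps):
    """List mismatches between each step's output and the next step's accepted inputs."""
    problems = []
    for upstream, downstream in zip(steps, steps[1:]):
        if upstream.produces not in downstream.accepts:
            problems.append(
                f"{downstream.model} cannot read {upstream.produces!r} from {upstream.model}"
            )
    return problems


workflow = [
    Step(
        model="ETA-8bin",
        vmi="eta-8bin-image",
        vm_type="large",
        params={"date": "2017-01-10", "resolution_km": 50, "domain": (-125, 25, -95, 45)},
        accepts=frozenset({"WCS"}),
        produces="NetCDF",
    ),
    Step(
        model="GeoViz",
        vmi="geoviz-image",
        vm_type="gpu",
        accepts=frozenset({"NetCDF", "HDF"}),
        produces="PNG",
    ),
]
problems = validate(workflow)
```

Running the same check on the steps in reverse order reports a mismatch, which is the kind of error a drag-and-drop builder could surface before the workflow is submitted.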

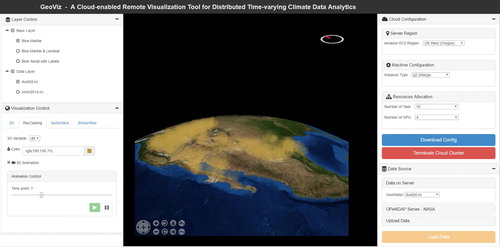

5.1. A workflow of running two models

As shown in Figure 7, a dust storm model, ETA-8bin (Huang, Yang, Benedict, Rezgui, et al. 2013), is selected as the first model to start a model running workflow. This dust storm model is a coarse model with a low spatial resolution of 50 km that can quickly forecast potential dust storms over a large spatial extent. The second model within the workflow, GeoViz (Zhang et al. 2016), is a web-based visualization and analytical tool designed for exploring multidimensional climate datasets. The web version of NASA WorldWind (Bell et al. 2007), a virtual globe for 3D visualization of Earth science data, is incorporated as the environment for displaying visualization results. The tool utilizes the parallel processing capabilities provided by multiple CPUs or GPUs to handle the computing intensity of visualizing large-volume climate datasets.

Figure 7. An example of building a workflow of models by choosing available geoscience models; this workflow includes a dust storm model (ETA-8bin) and data visualization model (GeoViz).

While a job is running, we can also check the job status (e.g. which model is running) and computing resource information, such as CPU utilization, load, memory, and network status, through the resource management function. Once the output of the first model is detected, users are able to visualize its results in GeoViz. Finally, workflows built by the user are saved to the database, where they can be shared with other users, and if the user has modified either of the two models, new VMIs can be created from the running VMs for each model within the workflow. Therefore, users in the geoscience community can easily run a workflow of models (e.g. the dust storm forecasting and visualization systems in our case) based on the currently available VMIs. This would greatly reduce the duplication of effort among geoscience communities, as any user can run the workflow without much effort on compiling and configuring it, which may require domain knowledge from different disciplines. Additionally, when a model workflow is generated for the first time, some customization of the VMs that run each model (e.g. installing dependent software or libraries of the model on the VMs) may be required to support the model runs. If we create VMIs from the VMs and register the workflow so that it can be launched through the web interface, other geoscientists are spared such customization efforts.

Various researchers and scientists need to build a large number of complex tools, models, and applications tailored to their specific studies (Neuschwander and Coughlan 2002). The goals and functions of these tools, models, and applications are not always completely different, despite the differences in research objectives. If we build the functions or applications as cloud services to share with others, duplication can be eliminated. Hence, the proposed hybrid cloud platform would greatly facilitate the reuse and sharing of functionalities and knowledge across geoscience communities.

5.2. Model communication and interoperability

The use of OGC standard service interfaces achieves interoperability between different models by providing interoperable interfaces for acquiring distributed data resources as model input, and facilitates the delivery of model products and data to public users. The coupling of the ETA-8bin and GeoViz models is implemented to enable communication between the two models. The model pre-processor component associated with the ETA-8bin model is designed and developed to readily ingest OGC services as model input. Specifically, the ETA-8bin pre-processor code has been modified to retrieve initialization parameters from remote systems via WCS requests; the returned data files are then processed further to match the input format (Fortran binary grids) expected by the model core (Huang, Yang, Benedict, Rezgui, et al. 2013).
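To illustrate what such a WCS request looks like, the sketch below assembles a WCS 1.0.0 `GetCoverage` URL using the standard key-value parameters. It is not the ETA-8bin pre-processor code: the endpoint, coverage name, bounding box, grid size, and output format are placeholders, and the format names a server accepts depend on its configuration.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def wcs_getcoverage_url(endpoint, coverage, bbox, width, height,
                        fmt="GeoTIFF", crs="EPSG:4326", version="1.0.0"):
    """Build a WCS 1.0.0 GetCoverage request URL (key-value-pair encoding)."""
    params = {
        "service": "WCS",
        "version": version,
        "request": "GetCoverage",
        "coverage": coverage,
        "crs": crs,
        "bbox": ",".join(str(v) for v in bbox),  # minx,miny,maxx,maxy
        "width": width,
        "height": height,
        "format": fmt,
    }
    return f"{endpoint}?{urlencode(params)}"


# Placeholder endpoint and coverage name, for illustration only.
url = wcs_getcoverage_url(
    "https://example.org/wcs",
    coverage="surface_temperature",
    bbox=(-125.0, 25.0, -95.0, 45.0),
    width=600,
    height=400,
)
query = parse_qs(urlsplit(url).query)
```

A pre-processor would fetch this URL, save the returned file, and then convert it to the format the model core expects.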

ETA-8bin produces its output in NetCDF format, allowing standard meteorological data processing tools to access and process these products. The ETA-8bin post-processor is developed to automatically push the model output to a directory in cloud storage, where a Thematic Real-time Environmental Distributed Data Services (THREDDS) service is configured to store the model output and disseminate it in an interoperable way through WCS. THREDDS catalogs provide virtual directories of available data and their associated metadata by supplying information about which datasets are available via which services/protocols (Domenico et al. 2006). Therefore, the data access capabilities are augmented by and integrated with THREDDS catalog services, which provide inventory lists and metadata access. Thus, client applications can first find out what is available on the site via the THREDDS interface, and then access the datasets themselves via the OPeNDAP, ADDE, WCS, WMS, or NetCDF/HTTP protocols (Nativi et al. 2006).
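Once a file is placed under a THREDDS data root, the same dataset is typically reachable through several service endpoints. The sketch below derives those URLs from a dataset path, assuming the conventional default service paths of a THREDDS Data Server (`dodsC`, `fileServer`, `wcs`, `wms`); a given server may configure different paths or enable only some services, and the host and file name here are placeholders.

```python
# Conventional default service paths; an assumption about the server configuration.
THREDDS_SERVICE_BASES = {
    "opendap": "/thredds/dodsC/",
    "http": "/thredds/fileServer/",
    "wcs": "/thredds/wcs/",
    "wms": "/thredds/wms/",
}


def access_urls(server, dataset_path):
    """Map each access service to the URL where the dataset would be exposed."""
    server = server.rstrip("/")
    dataset_path = dataset_path.lstrip("/")
    return {
        name: f"{server}{base}{dataset_path}"
        for name, base in THREDDS_SERVICE_BASES.items()
    }


urls = access_urls("https://example.org/", "dust/eta8bin_output.nc")
```

A client such as a visualization tool could use the `http` URL to download the whole NetCDF file, or the `opendap` URL to read only the variables it needs.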

GeoViz, on the other hand, is developed to support and process the most common Earth science data formats, such as NetCDF and HDF. Once the dust storm model has finished, the system starts one or multiple VMs with GeoViz configured. The GeoViz application hosted on the VM(s) directly accesses and downloads the ETA-8bin output, a NetCDF file, through a URL, and provides interactive visualization functions for the different variables of the model output. Upon retrieving the output, the visualization tool automatically lists all 3D variables so that users can select the variables to visualize. When users select variables and visualization modes, the visualization process starts. Cloud computing resources configured in earlier steps are used to execute the visualization process. The visualization results produced by the tool are then sent to WorldWind for final display, yielding a visualization of the dust storm model output in GeoViz.

5.3. Discussion

Compared to general cloud solutions, the proposed HCC platform includes a set of geospatial cloud services, such as elastic service, GaaS, MaaS, and WaaS, to support geoscience applications with different computing challenges and intensities (e.g. data, computing, and user access; Yang, Goodchild, et al. 2011; Table 1). For example, GaaS aims to address the big data challenges raised in the geoscience domain. Dealing with big data requires high-performance data processing tools that allow scientists to extract knowledge from unprecedented amounts of data (Bellettini et al. 2013). Existing data processing systems fall short in addressing many challenges in processing multi-sourced, heterogeneous, large-scale data, especially stream data (Zhang et al. 2015). Accordingly, big data processing and analytics platforms based on cloud computing (Bellettini et al. 2013), the MapReduce data processing framework (Ye et al. 2012), and open standards and interfaces (e.g. OGC standards) are widely used for massive data processing, display, and sharing. Within the HCC platform, users can easily leverage scalable VMs to build an HPC MapReduce data processing environment based on specialized images. VMIs dedicated to GaaS can isolate and componentize kernel GIS data processing functions (e.g. geospatial data reprojection and format conversion), analysis, and statistics as services. Additionally, community tools from different geoscience users can be recorded as specialized images for handling unique scientific data, such as NetCDF and HDF datasets. By leveraging different types of specialized VMIs, users can perform various manipulations on the raw data, such as pre-processing, analysis, and plotting/rendering, in order to prepare appropriate input data for a scientific model and to represent the model output in an intuitive way (e.g. charts and maps).

Table 1. Geospatial cloud services for dealing with the intensities of data, computing and concurrent user accesses.

6. Conclusion

Geoscience phenomena are complex processes, and geoscience applications often take a variety of data as input and involve long and complex workflows. There is an urgent need to facilitate geosciences by leveraging cloud computing, which can improve performance, enable the computability of scientific problems, and hide the complexity of the computing infrastructure from geoscientists. This paper proposes a framework for developing an HCC platform that eases the challenge of leveraging cloud computing and facilitates the use of widely available geospatial models and services. The HCC platform integrates different types of cloud resources, including computing resources, scientific models, and tools, in a common environment shared among geoscience communities.

Both public and private cloud resources can be registered and managed within this HCC platform. Besides providing the capabilities offered by a general cloud solution (e.g. provision of computing resources), the platform offers a set of geospatial cloud services, such as elastic service, GaaS, MaaS, and WaaS, which support the automatic integration and execution of multi-sourced scientific models. This research addresses the computing demands of geoscience applications by utilizing cloud resources contributed by the geoscience communities so as to improve model performance. The prototype and the use case study have demonstrated great potential for solving complex scientific problems that require leveraging knowledge from multiple disciplines and resources across organizations. Future research includes the following aspects:

Geospatial cloud services: In this work, to illustrate the geospatial cloud services offered by the proposed framework, WaaS is demonstrated by building a workflow of two models, starting with ETA-8bin, a dust storm model, followed by GeoViz, a tool for analyzing and visualizing the output of ETA-8bin. Model interoperability is achieved by enabling independent models to communicate with each other, feeding the results of one model to another as input, and finally disseminating model results in real time with OGC standards. In the future, more models and workflow scenarios should be explored, tested, and registered in the platform. To fill the gaps between a generic cloud solution and geospatial applications, more geospatial cloud services within the context of XaaS should also be developed to address various geospatial data (e.g. social media data and news articles) and computing challenges (Tsou 2015; Hu, Ye, and Shaw 2017; Li and Wang 2017).

Cloud middleware: Scientific workflows for simulation and prediction that involve multiple models, together with a hybrid cloud platform that integrates multiple volunteered cloud resources distributed globally and with different levels of computing capability, complicate the scheduling of computing resources. A middleware that accounts for the computing constraints and requirements of each procedure, and for the locations of computing resources, would enable computing resources to be allocated and used effectively and efficiently for geospatial applications. In the future, it will also be necessary to develop more sophisticated middleware that can schedule tasks in a way that improves the scalability and performance of the networked computing nodes of the different cloud platforms registered within the hybrid cloud system. Such an effort would also help establish a better geospatial cyberinfrastructure (Wang 2010; Yang et al. 2010) and a spatial cloud computing platform (Yang, Goodchild, et al. 2011). Additionally, more cloud resources from different communities and organizations will be registered to test the developed geospatial cloud services and to demonstrate the feasibility and capability of the proposed platform in handling different cloud platforms.

SWP: Within the prototype, a minimum set of core functions is provided for users to manipulate the cloud services and run different applications. In the future, more advanced user interfaces and interactive functions (Roth and MacEachren 2016; Roth et al. 2017) should be developed to facilitate better utilization of such a cloud platform. For example, the performance of cloud solutions in supporting different types of geoscience applications is an essential criterion when selecting a cloud solution. Therefore, a performance evaluator can be developed and integrated into the SWP to facilitate the automatic selection and recommendation of cloud resources for a particular application. Such an evaluator should be able to record the service status, error rates, and computing time of the different registered computing resources while a task is executing. Additionally, cloud consumers can rate and review the computing resources through the SWP after running a task. Such information can then be used to develop an advisory algorithm for cloud resource recommendation.

Across cloud platform collaboration: How to efficiently move data across spatial locations for effective processing has always been a challenging issue for cloud computing infrastructure. In most cloud applications, data have to be located in the cloud and no data transfer is required. Similarly, when processing geospatial data or executing a workflow of models, an HCC platform works best when the data are directly accessible to the VMs running these models (Yang et al. 2017). To leverage different cloud platforms to run a model workflow, the transfer of spatial data, which are often massive, is a bottleneck that should be addressed. Therefore, smart data pre-processing techniques are needed to eliminate redundant and unwanted data before they are transferred. Additionally, effective data compression algorithms can be developed to reduce the data size (Yang, Long, and Jiang 2013). Efficient algorithms that automatically select appropriate cloud platforms (locations) based on spatiotemporal principles, maximizing the data transfer speed while minimizing cost and computing time, will also pave the way for collaboration across cloud platforms.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Anderson, David P., and Gilles Fedak. 2006. “The Computational and Storage Potential of Volunteer Computing.” Paper presented at the Sixth IEEE International Symposium on Cluster Computing and the Grid, Singapore, May 16–19.

- Bastin, Lucy, Dan Cornford, Richard Jones, Gerard B. M. Heuvelink, Edzer Pebesma, Christoph Stasch, Stefano Nativi, Paolo Mazzetti, and Matthew Williams. 2013. “Managing Uncertainty in Integrated Environmental Modelling: The UncertWeb Framework.” Environmental Modelling & Software 39: 116–134. doi:10.1016/j.envsoft.2012.02.008.

- Beckman, Peter H. 2005. “Building the TeraGrid.” Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 363 (1833): 1715–1728. doi:10.1098/rsta.2005.1602.

- Bell, David G., Frank Kuehnel, Chris Maxwell, Randy Kim, Kushyar Kasraie, Tom Gaskins, Patrick Hogan, and Joe Coughlan. 2007. “NASA World Wind: Opensource GIS for Mission Operations.” Paper presented at 2007 IEEE Aerospace Conference, Big Sky, MT, USA, March 3–10.

- Bellettini, Carlo, Matteo Camilli, Lorenzo Capra, and Mattia Monga. 2013. “MaRDiGraS: Simplified Building of Reachability Graphs on Large Clusters.” In Reachability Problems: 7th International Workshop, RP 2013, Uppsala, Sweden, September 24–26, 2013 Proceedings, edited by Parosh Aziz Abdulla, and Igor Potapov, 83–95. Berlin: Springer.

- Bernholdt, David, Shishir Bharathi, David Brown, Kasidit Chanchio, Meili Chen, Ann Chervenak, Luca Cinquini, Bob Drach, Ian Foster, and Peter Fox. 2005. “The Earth System Grid: Supporting the Next Generation of Climate Modeling Research.” Proceedings of the IEEE 93 (3): 485–495. doi:10.1109/JPROC.2004.842745.

- Breskovic, Ivan, Michael Maurer, Vincent C. Emeakaroha, Ivona Brandic, and Schahram Dustdar. 2011. “Cost-efficient Utilization of Public sla Templates in Autonomic Cloud Markets.” Paper presented at the Fourth IEEE International Conference on Utility and Cloud Computing (UCC), Melbourne, Australia, December 5–7.

- Castronova, Anthony M., Jonathan L. Goodall, and Mostafa M. Elag. 2013. “Models as Web Services Using the Open Geospatial Consortium (OGC) Web Processing Service (WPS) Standard.” Environmental Modelling & Software 41: 72–83. doi:10.1016/j.envsoft.2012.11.010.

- Cedilnik, Andy, Berk Geveci, Kenneth Moreland, James P. Ahrens, and Jean M. Favre. 2006. “Remote Large Data Visualization in the ParaView Framework.” Paper presented at the 6th Eurographics Conference on Parallel Graphics and Visualization (EGPGV), Braga, Portugal, May 11–12.

- Dastjerdi, Amir Vahid, Saurabh Kumar Garg, and Rajkumar Buyya. 2011. “Qos-aware Deployment of Network of Virtual Appliances across Multiple Clouds.” Paper presented at the 2011 IEEE Third International Conference on Cloud Computing Technology and Science (CloudCom), Athens, Greece, November 29–December 1.

- Domenico, Ben, John Caron, Ethan Davis, Robb Kambic, and Stefano Nativi. 2006. “Thematic Real-Time Environmental Distributed Data Services (THREDDS): Incorporating Interactive Analysis Tools into NSDL.” Journal of Digital Information 2 (4). Accessed: 01/10/2017. https://journals.tdl.org/jodi/index.php/jodi/article/view/51/54

- Evangelinos, Constantinos, and C. Hill. 2008. “Cloud Computing for Parallel Scientific HPC Applications: Feasibility of Running Coupled Atmosphere-Ocean Climate Models on Amazon’s EC2.” Ratio 2 (2.40): 2–34.

- Fernández-Quiruelas, V., J. Fernández, Antonio S. Cofiño, Lluis Fita, and José Manuel Gutiérrez. 2011. “Benefits and Requirements of Grid Computing for Climate Applications. An Example with the Community Atmospheric Model.” Environmental Modelling & Software 26 (9): 1057–1069. doi:10.1016/j.envsoft.2011.03.006.

- Gao, Song, Linna Li, Wenwen Li, Krzysztof Janowicz, and Yue Zhang. 2014. “Constructing Gazetteers from Volunteered Big Geo-Data Based on Hadoop.” Computers, Environment and Urban Systems 61: 172–186.

- Geller, Gary N., and Forrest Melton. 2008. “Looking Forward: Applying an Ecological Model Web to Assess Impacts of Climate Change.” Biodiversity 9 (3–4): 79–83. doi:10.1080/14888386.2008.9712910.

- Geller, Gary N., and Woody Turner. 2007. “The Model Web: A Concept for Ecological Forecasting.” Paper presented at the 2007 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2007), Barcelona, Spain, July 23–28.

- Google Cloud APIs. 2017. “Google Cloud APIs.” Accessed 08/10/2017. https://cloud.google.com/apis/docs/overview.

- Gould, Michael, Max Craglia, Michael F. Goodchild, Alessandro Annoni, Gilberto Camara, Werner Kuhn, David Mark, Ian Masser, David Maguire, and Steve Liang. 2008. “Next-generation Digital Earth: A Position Paper from the Vespucci Initiative for the Advancement of Geographic Information Science.” International Journal of Spatial Data Infrastructures Research 3: 146–167. doi:10.2902/1725-0463.2008.03.art9.

- Guan, Qingfeng, Xuan Shi, Miaoqing Huang, and Chenggang Lai. 2016. “A Hybrid Parallel Cellular Automata Model for Urban Growth Simulation over GPU/CPU Heterogeneous Architectures.” International Journal of Geographical Information Science 30 (3): 494–514. doi:10.1080/13658816.2015.1039538.

- Gui, Zhipeng, Chaowei Yang, Jizhe Xia, Qunying Huang, Kai Liu, Zhenlong Li, Manzhu Yu, Min Sun, Nanyin Zhou, and Baoxuan Jin. 2014. “A Service Brokering and Recommendation Mechanism for Better Selecting Cloud Services.” PLoS ONE 9 (8): e105297. doi:10.1371/journal.pone.0105297.

- Guo, Huadong, Lizhe Wang, Fang Chen, and Dong Liang. 2014. “Scientific Big Data and Digital Earth.” Chinese Science Bulletin 59 (35): 5066–5073. doi:10.1007/s11434-014-0645-3.

- Hu, Yingjie, Xinyue Ye, and Shih-Lung Shaw. 2017. “Extracting and Analyzing Semantic Relatedness Between Cities Using News Articles.” International Journal of Geographical Information Science 31 (12): 2427–2451. doi:10.1080/13658816.2017.1367797.

- Huang, Qunying, Guido Cervone, and Guiming Zhang. 2017. “A Cloud-Enabled Automatic Disaster Analysis System of Multi-Sourced Data Streams: An Example Synthesizing Social Media, Remote Sensing and Wikipedia Data.” Computers, Environment and Urban Systems 66: 23–37. doi:10.1016/j.compenvurbsys.2017.06.004.

- Huang, Qunying, Zhenlong Li, Jizhe Xia, Yunfeng Jiang, Chen Xu, Kai Liu, Manzhu Yu, and Chaowei Yang. 2013. “Accelerating Geocomputation with Cloud Computing.” In Modern Accelerator Technologies for Geographic Information Science, edited by Xuan Shi, Volodymyr Kindratenko, and Chaowei Yang, 41–51. Boston, MA: Springer.

- Huang, Qunying, Chaowei Yang, Karl Benedict, Songqing Chen, Abdelmounaam Rezgui, and Jibo Xie. 2013. “Utilize Cloud Computing to Support Dust Storm Forecasting.” International Journal of Digital Earth 6 (4): 338–355. doi:10.1080/17538947.2012.749949.

- Huang, Qunying, Chaowei Yang, Karl Benedict, Abdelmounaam Rezgui, Jibo Xie, Jizhe Xia, and Songqing Chen. 2013. “Using Adaptively Coupled Models and High-Performance Computing for Enabling the Computability of Dust Storm Forecasting.” International Journal of Geographical Information Science 27 (4): 765–784. doi:10.1080/13658816.2012.715650.

- Huang, Qunying, Chaowei Yang, Doug Nebert, Kai Liu, and Huayi Wu. 2010. “Cloud Computing for Geosciences: Deployment of GEOSS Clearinghouse on Amazon's EC2.” Paper presented at the ACM SIGSPATIAL International Workshop on High Performance and Distributed Geographic Information Systems, San Jose, California, November 2.

- Li, Jing, Yunfeng Jiang, Chaowei Yang, Qunying Huang, and Matt Rice. 2013. “Visualizing 3D/4D Environmental Data Using Many-Core Graphics Processing Units (GPUs) and Multi-Core Central Processing Units (CPUs).” Computers & Geosciences 59: 78–89. doi:10.1016/j.cageo.2013.04.029.

- Li, Wenwen, and Sizhe Wang. 2017. “PolarGlobe: A Web-Wide Virtual Globe System for Visualizing Multidimensional, Time-Varying, Big Climate Data.” International Journal of Geographical Information Science 31 (8): 1562–1582. doi:10.1080/13658816.2017.1306863.

- Li, Qing, Ze-yuan Wang, Wei-hua Li, Jun Li, Cheng Wang, and Rui-yang Du. 2013. “Applications Integration in a Hybrid Cloud Computing Environment: Modelling and Platform.” Enterprise Information Systems 7 (3): 237–271. doi:10.1080/17517575.2012.677479.

- Li, Zhenlong, Chaowei Yang, Qunying Huang, Kai Liu, Min Sun, and Jizhe Xia. 2017. “Building Model as a Service to Support Geosciences.” Computers, Environment and Urban Systems 61: 141–152. doi:10.1016/j.compenvurbsys.2014.06.004.

- Li, Zhenlong, Chaowei Yang, Baoxuan Jin, Manzhu Yu, Kai Liu, Min Sun, and Matthew Zhan. 2015. “Enabling Big Geoscience Data Analytics with a Cloud-Based, MapReduce-Enabled and Service-Oriented Workflow Framework.” PLoS ONE 10 (3): e0116781. doi:10.1371/journal.pone.0116781.

- Li, Deren, Yuan Yao, Zhenfeng Shao, and Le Wang. 2014. “From Digital Earth to Smart Earth.” Chinese Science Bulletin 59 (8): 722–733. doi:10.1007/s11434-013-0100-x.

- Massey, N., R. Jones, F. E. L. Otto, T. Aina, S. Wilson, J. M. Murphy, D. Hassell, Y. H. Yamazaki, and M. R. Allen. 2015. “weather@Home-Development and Validation of a Very Large Ensemble Modelling System for Probabilistic Event Attribution.” Quarterly Journal of the Royal Meteorological Society 141 (690): 1528–1545. doi:10.1002/qj.2455.

- Mell, Peter, and Tim Grance. 2009. “The NIST Definition of Cloud Computing.” National Institute of Standards and Technology 53 (6): 50.

- Nativi, S., L. Bigagli, B. Domenico, J. Caron, and E. Davis. 2006. “An XML-based Language to Connect NetCDF and Geographic Communities.” Paper presented at the 2006 IEEE International Conference on Geoscience and Remote Sensing Symposium (IGARSS), Denver, CO, USA, July 31–August 4.

- Nativi, Stefano, Paolo Mazzetti, and Gary N. Geller. 2013. “Environmental Model Access and Interoperability: The GEO Model Web Initiative.” Environmental Modelling & Software 39: 214–228. doi:10.1016/j.envsoft.2012.03.007.

- Neuschwander, A., and J. Coughlan. 2002. “Distributed Application Framework for Earth Science Data Processing.” Paper presented at 2002 IEEE International Geoscience and Remote Sensing Symposium (IGARSS'02), Toronto, Ontario, Canada, June 24–28.

- Nurmi, Daniel, Rich Wolski, Chris Grzegorczyk, Graziano Obertelli, Sunil Soman, Lamia Youseff, and Dmitrii Zagorodnov. 2009. “The Eucalyptus Open-Source Cloud-Computing System.” Paper presented at the 2009 9th IEEE/ACM International Symposium on Cluster Computing and the Grid, Shanghai, China, May 18–21.

- Pordes, Ruth, Don Petravick, Bill Kramer, Doug Olson, Miron Livny, Alain Roy, Paul Avery, Kent Blackburn, Torre Wenaus, and W. Frank. 2007. “The Open Science Grid.” Journal of Physics: Conference Series 78 (1): 012057. doi:10.1088/1742-6596/78/1/012057.

- Roman, Dumitru, Sven Schade, A. J. Berre, N. Rune Bodsberg, and J. Langlois. 2009. “Model as a Service (MaaS).” Paper presented at 2009 AGILE Workshop: Grid Technologies for Geospatial Applications, Hannover, Germany, June 2–5.

- Roth, Robert E., Arzu Çöltekin, Luciene Delazari, Homero Fonseca Filho, Amy Griffin, Andreas Hall, Jari Korpi, Ismini Lokka, André Mendonça, and Kristien Ooms. 2017. “User Studies in Cartography: Opportunities for Empirical Research on Interactive Maps and Visualizations.” International Journal of Cartography 1: 1–29.

- Roth, Robert E., and Alan M. MacEachren. 2016. “Geovisual Analytics and the Science of Interaction: An Empirical Interaction Study.” Cartography and Geographic Information Science 43 (1): 30–54. doi:10.1080/15230406.2015.1021714.

- Shook, Eric, Michael E. Hodgson, Shaowen Wang, Babak Behzad, Kiumars Soltani, April Hiscox, and Jayakrishnan Ajayakumar. 2016. “Parallel Cartographic Modeling: A Methodology for Parallelizing Spatial Data Processing.” International Journal of Geographical Information Science 30 (12): 2355–2376. doi:10.1080/13658816.2016.1172714.

- Shvachko, Konstantin, Hairong Kuang, Sanjay Radia, and Robert Chansler. 2010. “The Hadoop Distributed File System.” Paper presented at 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Incline Village, NV, USA, May 3–7.

- Sotomayor, Borja, Rubén S. Montero, Ignacio M. Llorente, and Ian Foster. 2009. “Virtual Infrastructure Management in Private and Hybrid Clouds.” IEEE Internet Computing 13 (5): 14–22. doi:10.1109/MIC.2009.119.

- Tang, Wenwu, and Wenpeng Feng. 2017. “Parallel Map Projection of Vector-based Big Spatial Data: Coupling Cloud Computing with Graphics Processing Units.” Computers, Environment and Urban Systems 61: 187–197. doi:10.1016/j.compenvurbsys.2014.01.001.

- Tang, Wenwu, Wenpeng Feng, Meijuan Jia, Jiyang Shi, Huifang Zuo, Christina E. Stringer, and Carl C. Trettin. 2017. “A Cyber-Enabled Spatial Decision Support System to Inventory Mangroves in Mozambique: Coupling Scientific Workflows and Cloud Computing.” International Journal of Geographical Information Science 31 (5): 907–938. doi:10.1080/13658816.2016.1245419.

- Tsou, Ming-Hsiang. 2015. “Research Challenges and Opportunities in Mapping Social Media and Big Data.” Cartography and Geographic Information Science 42 (Suppl. 1): 70–74. doi:10.1080/15230406.2015.1059251.

- Vecchiola, Christian, Suraj Pandey, and Rajkumar Buyya. 2009. “High-performance Cloud Computing: A View of Scientific Applications.” Paper presented at 2009 10th International Symposium on Pervasive Systems, Algorithms, and Networks (ISPAN), Kaohsiung, Taiwan, December 14–16.

- Wang, Shaowen. 2010. “A CyberGIS Framework for the Synthesis of Cyberinfrastructure, GIS, and Spatial Analysis.” Annals of the Association of American Geographers 100 (3): 535–557. doi:10.1080/00045601003791243.

- Williams, Dean N. 1997. “PCMDI Software System: Status and Future Plans.” Report no. 44. Lawrence Livermore National Laboratory (LLNL), Livermore, CA.

- Woodring, Jonathan, Katrin Heitmann, James Ahrens, Patricia Fasel, Chung-Hsing Hsu, Salman Habib, and Adrian Pope. 2011. “Analyzing and Visualizing Cosmological Simulations with ParaView.” The Astrophysical Journal Supplement Series 195 (1): 11. doi:10.1088/0067-0049/195/1/11.

- Yang, Chaowei, Michael Goodchild, Qunying Huang, Doug Nebert, Robert Raskin, Yan Xu, Myra Bambacus, and Daniel Fay. 2011. “Spatial Cloud Computing: How Can the Geospatial Sciences Use and Help Shape Cloud Computing?” International Journal of Digital Earth 4 (4): 305–329. doi:10.1080/17538947.2011.587547.

- Yang, Chaowei, Qunying Huang, Zhenlong Li, Kai Liu, and Fei Hu. 2017. “Big Data and Cloud Computing: Innovation Opportunities and Challenges.” International Journal of Digital Earth 10 (1): 13–53. doi:10.1080/17538947.2016.1239771.

- Yang, Yang, Xiang Long, and Bo Jiang. 2013. “K-means Method for Grouping in Hybrid Mapreduce Cluster.” Journal of Computers 8 (10): 2648–2655.

- Yang, Chaowei, Robert Raskin, Michael Goodchild, and Mark Gahegan. 2010. “Geospatial Cyberinfrastructure: Past, Present and Future.” Computers, Environment and Urban Systems 34 (4): 264–277. doi:10.1016/j.compenvurbsys.2010.04.001.

- Yang, Chaowei, Huayi Wu, Qunying Huang, Zhenlong Li, and Jing Li. 2011. “Using Spatial Principles to Optimize Distributed Computing for Enabling the Physical Science Discoveries.” Proceedings of the National Academy of Sciences 108 (14): 5498–5503. doi:10.1073/pnas.0909315108.

- Yang, Chaowei, Yan Xu, and Douglas Nebert. 2013. “Redefining the Possibility of Digital Earth and Geosciences with Spatial Cloud.” International Journal of Digital Earth 6 (4): 297–312. doi:10.1080/17538947.2013.769783.

- Ye, Kejiang, Xiaohong Jiang, Yanzhang He, Xiang Li, Haiming Yan, and Peng Huang. 2012. “vHadoop: A Scalable Hadoop Virtual Cluster Platform for Mapreduce-based Parallel Machine Learning with Performance Consideration.” Paper presented at the 2012 IEEE International Conference on Cluster Computing Workshops, Beijing, China, September 24–28.

- Zhang, Guiming, Qunying Huang, A-Xing Zhu, and John H. Keel. 2016. “Enabling Point Pattern Analysis on Spatial Big Data Using Cloud Computing: Optimizing and Accelerating Ripley’s K Function.” International Journal of Geographical Information Science 30 (11): 2230–2252. doi:10.1080/13658816.2016.1170836.

- Zhang, Hui, Guofei Jiang, Kenji Yoshihira, Haifeng Chen, and Akhilesh Saxena. 2009. “Intelligent Workload Factoring for a Hybrid Cloud Computing Model.” Paper presented at 2009 World Conference on Services-I, Los Angeles, CA, USA, July 6–10.

- Zhang, Tong, Jing Li, Qing Liu, and Qunying Huang. 2016. “A Cloud-Enabled Remote Visualization Tool for Time-Varying Climate Data Analytics.” Environmental Modelling & Software 75: 513–518. doi:10.1016/j.envsoft.2015.10.033.

- Zhang, Weishan, Liang Xu, Pengcheng Duan, Wenjuan Gong, Qinghua Lu, and Su Yang. 2015. “A Video Cloud Platform Combing Online and Offline Cloud Computing Technologies.” Personal and Ubiquitous Computing 19 (7): 1099–1110. doi:10.1007/s00779-015-0879-3.

- Zhang, Guiming, A-Xing Zhu, and Qunying Huang. 2017. “A GPU-Accelerated Adaptive Kernel Density Estimation Approach for Efficient Point Pattern Analysis on Spatial Big Data.” International Journal of Geographical Information Science 31 (10): 2068–2097. doi:10.1080/13658816.2017.1324975.