ABSTRACT

The U.S. Department of Agriculture’s Agricultural Research Service (USDA-ARS) maintains seven in situ soil moisture networks throughout the continental United States, some since 2002. These networks are crucial for understanding the spatial and temporal extent of droughts in their historical context, for parameterizing hydrologic models, and for local agricultural decision support. However, the usefulness of these networks depends on their ability to provide reliable soil moisture information at a large scale. It is also not known how many network stations are sufficient to monitor watershed-scale dynamics. Therefore, the objectives of this research were to: (1) determine how temporally stable these networks are, including the relationships between sensors on a year-to-year and seasonal basis, and (2) determine how many sensors within a network are required to approximate the full network average. Using data from the seven in situ networks, we conclude that approximately 12 soil moisture sensors are sufficient in most environments, presuming their locations are distributed to capture the hydrologic heterogeneity of the watershed. It is therefore possible to install a temporary network containing a suitable number of sensors for an appropriate length of time, glean stable relationships between locations, and retain these insights moving forward with fewer sensor resources.

1. Introduction

In situ soil moisture networks play a pivotal role in understanding watershed-scale soil moisture dynamics. Hydrologic models frequently employ estimates of subsurface flows at the watershed scale, and generating these estimates requires time series of soil moisture (e.g. Grayson et al. 1997; Bell, Weng, and Luo 2010). Soil moisture products support applications ranging from agricultural decision support regarding field trafficability (Coopersmith et al. 2014) to the Department of Defense (Jones et al. 2010). Long-term climate assessments made with General Circulation Models (GCMs) require soil moisture inputs to reduce sources of uncertainty (e.g. Koster and Milly 1997; Belair et al. 2005; De Rosnay et al. 2013; Campoy et al. 2013; Joetzjer et al. 2013). Analyses of drought events at multiple spatial and temporal scales also require soil moisture data as an input (Sheffield et al. 2004). Each of these applications requires accurate and reliable soil moisture information over sufficiently long time scales.

It is beneficial to understand whether the relative wetness or dryness of the installed sensors is temporally stable from year to year. This type of analysis was initiated by Vachaud et al. (1985), who analyzed a number of soil moisture monitoring sites and ranked their relative average moisture status. Starks et al. (2006) applied this approach to a watershed-scale network with soil moisture profiles. Cosh et al. (2006, 2014) addressed the spatial distribution of soil moisture across watersheds in Oklahoma that cover different landscapes. These studies found that surface soil moisture patterns were stable, but that this stability did not necessarily translate through the soil profile. Networks have been used for remote sensing validation in a variety of ways, including the study by Martinez-Fernandez and Ceballos (2005) of the REMEDHUS network in Spain, which found that one year of data was sufficient to determine mean soil moisture. However, that study was based on two networks in the same Duero River basin in Spain, with approximately four years of data each, which may not be sufficient for long-term conclusions. These studies calculated the stability of the ordinal rankings of sensors within watershed networks; that stability reveals whether it is plausible to replace a ‘missing’ reading for a given hour with an estimate that is appropriately wetter or drier than the average of the remaining network. In addition to inter-annual temporal stability, seasonal temporal stability is also important. In other words, are the sensors that are wettest or driest in April the same sensors that are wettest or driest in July or October?

Once we have established the stability of seasonal and inter-annual rankings of sensor wetness within a given watershed, we can determine how many sensors are required to characterize a watershed average. Within large in situ networks, it is often an unfortunate reality that individual sensors are damaged or non-responsive and therefore cannot provide an estimate. It is beneficial to understand how many such outages are acceptable before the watershed estimate is no longer valid. Once minimum sensor deployments are understood and temporally stable installations are identified, it may be possible to develop a methodology for deploying temporary extended networks to allow for comparably accurate permanent networks with fewer stations via a scaling function.

2. Study sites

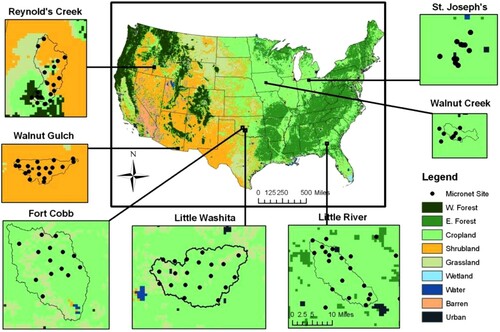

The U.S. Department of Agriculture’s (USDA) Agricultural Research Service (ARS) has installed seven in situ networks containing impedance-based soil moisture probes (Stevens Water Hydra Probes) at a 5 cm depth for soil moisture estimation, as shown in Figure 1. These networks span areas comparable to a moderate-resolution satellite remote sensing footprint (∼25–35 km). They are located in the Little River basin in Georgia (LR), the Little Washita River basin in Oklahoma (LW), Fort Cobb in Oklahoma (FC), the Reynolds Creek basin in Idaho (RC), the South Fork of the Iowa River (SF), the Walnut Gulch basin in Arizona (AZ), and the St. Joseph’s basin in Indiana (SJ). The earliest of these installations have provided hourly soil moisture data since 2001, and the networks have formed the basis of numerous studies comparing in situ estimates to satellite estimates (Jackson et al. 2010; Jackson et al. 2012; Bindlish et al. 2015; Chan et al. 2016) and upscaling soil moisture measurements (Coopersmith et al. 2016; Clewley et al. 2017).

3. Methodology

Prior to more detailed analysis of an individual watershed’s in situ network, we used the full length of the watershed’s record to obtain an ordinal ranking of the average soil moisture values reported at each individual sensor. We defined these values as $R_i$, the rank of sensor $i$ over all years. Additionally, the mean and standard deviation of the weighted network average soil moisture were recorded as $\bar{\theta}_{\mathrm{net}}$ and $\sigma_{\mathrm{net}}$, respectively. The weighted network average was computed by assigning each sensor a weight that roughly corresponds to the proportion of the watershed for which its estimates are representative. For most of these watersheds, the soil moisture distribution was primarily influenced by precipitation patterns, which are assumed to be isotropic at this scale. These simple descriptive statistics provided a basis of comparison for the performance of individual sensors over the temporal intervals selected for further analysis.
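The descriptive statistics above can be sketched as follows. This is a minimal illustration, not the authors’ code: it assumes readings are stored as one list per sensor with `None` for missing hours, that an inactive sensor’s weight is dropped and the remaining weights renormalized, and that rank 1 denotes the driest sensor. The function names, weights, and readings are hypothetical.

```python
from statistics import mean, pstdev


def weighted_network_average(readings, weights):
    """Weighted mean of one timestamp's readings (None marks an inactive sensor).

    The weights of inactive sensors are dropped and the remaining weights renormalized.
    """
    active = [(r, w) for r, w in zip(readings, weights) if r is not None]
    total_weight = sum(w for _, w in active)
    if total_weight == 0:
        return None  # no active sensor at this timestamp
    return sum(r * w for r, w in active) / total_weight


def overall_ranks(series):
    """R_i: rank of each sensor's long-term mean, 1 = driest."""
    means = [mean(v for v in s if v is not None) for s in series]
    order = sorted(range(len(means)), key=lambda i: means[i])
    ranks = [0] * len(means)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return ranks


# Hypothetical readings (m3/m3): one list per sensor, one entry per timestamp
series = [
    [0.20, 0.22, None, 0.18],
    [0.30, 0.31, 0.29, 0.27],
    [0.10, 0.12, 0.11, None],
]
weights = [0.5, 0.3, 0.2]  # hypothetical areal weights

network = [weighted_network_average([s[t] for s in series], weights) for t in range(4)]
theta_net, sigma_net = mean(network), pstdev(network)
print(overall_ranks(series))  # [2, 3, 1]
```

Renormalizing over active sensors mirrors the practice, noted in the Discussion, of presenting the watershed average as the weighted average of the remaining active sensors.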

3.1 Analysis by year

Within a given year $y$, we began by calculating $R_{i,y}$, the rank of sensor $i$ during year $y$, for all sensors $i$. Next, these rankings were correlated with the values of $R_i$, using only the sensors that were active during year $y$; this correlation was quantified with Spearman’s rank correlation coefficient, $\rho$. Finally, we visualized the mean relative difference of each sensor $i$ during year $y$, $\bar{\delta}_{i,y}$, over all values of $y$, to ascertain qualitatively whether a sensor is consistently wetter or drier than the historical average in any given year.
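Spearman’s ρ is the Pearson correlation computed on ranks. A minimal sketch of the annual comparison is shown below, assuming per-year sensor means are already available for the active sensors only; tied values receive average ranks. The function names, sensor IDs, and values are hypothetical, and `scipy.stats.spearmanr` would return the same statistic.

```python
from statistics import mean


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for j in range(pos, end + 1):
            ranks[order[j]] = (pos + end) / 2 + 1
        pos = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def annual_rho(yearly_means, overall_rank):
    """Correlate R_{i,y} with R_i over the sensors active in year y.

    yearly_means: {sensor_id: mean value in year y}, active sensors only.
    overall_rank: {sensor_id: R_i from the full record}.
    """
    ids = sorted(yearly_means)
    return spearman_rho([yearly_means[i] for i in ids],
                        [overall_rank[i] for i in ids])


yearly = {"s1": 0.15, "s2": 0.25, "s4": 0.20}  # hypothetical; s3 inactive this year
overall = {"s1": 1, "s2": 4, "s3": 2, "s4": 3}
print(annual_rho(yearly, overall))  # 1.0
```

Because both rank vectors are recomputed over the active subset, an inactive sensor (s3 above) simply drops out of the comparison.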

3.2 Analysis by season

Next, the analysis was repeated using exclusively the values at each sensor during a specific month (e.g. all estimates in the sensor’s record during the month of June). Thus, for each month $m$, a value of $R_{i,m}$, the rank of sensor $i$ during month $m$, was calculated. Much like the inter-annual analysis, an analogous rank correlation analysis was performed, comparing the ordinal rankings of wetness or dryness during each month $m$ to the values of $R_i$. Next, the mean relative differences $\bar{\delta}_{i,m}$ were visualized over all values of $m$ to ascertain qualitatively whether a sensor’s relative wetness or dryness was a function of the month of the year. To avoid issues of freezing and thawing, only the months from April to October (inclusive) were chosen as values of $m$.
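A sketch of the monthly subsetting, assuming hourly records arrive as hypothetical `(timestamp, sensor_id, value)` tuples: readings from all years are pooled by calendar month, only April through October are kept, and each month’s sensor means are ranked to give the monthly ranks. These ranks could then be correlated with the overall ranks exactly as in the annual analysis. The function names and records are illustrative only.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

WARM_MONTHS = range(4, 11)  # April (4) through October (10), inclusive


def monthly_sensor_means(records):
    """Pool all years by calendar month; return {month: {sensor_id: mean value}}.

    records: iterable of (timestamp, sensor_id, value); months outside
    April-October are skipped to avoid freeze/thaw effects.
    """
    buckets = defaultdict(list)
    for ts, sensor, value in records:
        if ts.month in WARM_MONTHS:
            buckets[(ts.month, sensor)].append(value)
    out = defaultdict(dict)
    for (month, sensor), values in buckets.items():
        out[month][sensor] = mean(values)
    return out


def monthly_ranks(month_means):
    """R_{i,m}: rank of each sensor within one month, 1 = driest."""
    order = sorted(month_means, key=month_means.get)
    return {sensor: rank for rank, sensor in enumerate(order, start=1)}


# Hypothetical records: (timestamp, sensor_id, soil moisture in m3/m3)
records = [
    (datetime(2010, 5, 1, 0), "a", 0.30),
    (datetime(2011, 5, 3, 12), "a", 0.26),
    (datetime(2010, 5, 1, 0), "b", 0.20),
    (datetime(2010, 1, 15, 0), "b", 0.35),  # January: excluded
    (datetime(2010, 7, 1, 0), "a", 0.12),
    (datetime(2010, 7, 1, 0), "b", 0.15),
]
means = monthly_sensor_means(records)
print(monthly_ranks(means[5]))  # {'b': 1, 'a': 2}
print(monthly_ranks(means[7]))  # {'a': 1, 'b': 2}
```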

3.3 Combinatoric removals

To ascertain what proportion of sensors is required to generate a suitable estimate of the network average, subsets of the overall network were chosen and compared to the network average. The analysis began with subsets of size 1, then subsets of size 2, and so on until subsets reached size n – 1, where n is the number of sensors within a given watershed. Where there were not enough timestamps at which n – 1 sensors were active simultaneously, the analysis was halted at smaller subset sizes. Note that the number of unique combinations of size k that can be chosen from a set of size n is given by:

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!} \tag{1}$$
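Equation (1) and the 1000-combination cap described in the next paragraph can be sketched as below. This assumes the random selection draws distinct combinations uniformly; the function name and seed are hypothetical, since the text does not say how the random subset was drawn. `math.comb` requires Python 3.8 or later.

```python
import itertools
import math
import random

MAX_COMBOS = 1000  # cap on evaluated combinations, as in the text


def candidate_subsets(n, k, max_combos=MAX_COMBOS, seed=0):
    """All k-sensor subsets of n sensors, or a random sample of max_combos distinct ones."""
    total = math.comb(n, k)  # Equation (1)
    if total <= max_combos:
        return list(itertools.combinations(range(n), k))
    rng = random.Random(seed)
    chosen = set()
    while len(chosen) < max_combos:
        # sample() draws k distinct sensor indices; sorting makes the tuple canonical
        chosen.add(tuple(sorted(rng.sample(range(n), k))))
    return sorted(chosen)


print(math.comb(33, 16))               # 1166803110
print(len(candidate_subsets(33, 16)))  # 1000
print(len(candidate_subsets(5, 2)))    # 10
```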

In Little River, the network contains n = 33 sensors, and the number of unique combinations of size 16 exceeds one billion (1,166,803,110), which is computationally intractable to evaluate exhaustively. Consequently, whenever the value obtained from Equation (1) exceeded a pre-selected maximum (set to 1000 for this analysis), a random selection of that maximum number of combinations was evaluated instead. Thus, for each value of k < n, we calculated the following:

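$$\overline{RMSE}_k = \frac{1}{N_k}\sum_{i=1}^{N_k}\sqrt{\frac{1}{T}\sum_{t=1}^{T}\left(\bar{\theta}_{n,t}-\bar{\theta}_{k,i,t}\right)^{2}} \tag{2}$$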

In Equation (2), $\overline{RMSE}_k$ represents the average root-mean-square error associated with the use of only $k$ sensors within an $n$-sensor network, $N_k = \min\left\{\binom{n}{k}, 1000\right\}$ is the number of $k$-sensor combinations evaluated, and $T$ is the number of timestamps. The mean of the complete network and the average calculated with the $i$-th subset of $k$ sensors, at each time $t$, are denoted by $\bar{\theta}_{n,t}$ and $\bar{\theta}_{k,i,t}$, respectively. Equation (3), analogous in several respects to Equation (2), calculates the standard deviation of the RMSE values across the $k$-sensor combinations, $\sigma_{RMSE,k}$. This measures how sensitive the $\overline{RMSE}_k$ value from Equation (2) is to the specific combination of $k$ sensors available.

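$$\sigma_{RMSE,k} = \sqrt{\frac{1}{N_k}\sum_{i=1}^{N_k}\left(RMSE_{k,i}-\overline{RMSE}_k\right)^{2}} \tag{3}$$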

The only new term in Equation (3) is $RMSE_{k,i}$, the root-mean-square error for a specific combination $i$ of $k$ sensors.
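A minimal sketch of Equations (2) and (3), assuming a complete matrix of timestamps at which all sensors are active and unweighted subset and network means (the full-network mean in the text may be area-weighted). The population standard deviation (`pstdev`, dividing by the number of combinations) is used for the spread. Names and data are hypothetical.

```python
import math
from statistics import mean, pstdev


def subset_rmse(data, subset):
    """RMSE_{k,i}: error of one k-sensor subset mean against the full-network mean.

    data: list of timestamps, each a list of n readings (all sensors active).
    subset: tuple of sensor indices forming one combination.
    """
    squared_errors = []
    for row in data:
        full_mean = mean(row)                       # full-network mean at time t
        subset_mean = mean(row[j] for j in subset)  # k-sensor mean at time t
        squared_errors.append((full_mean - subset_mean) ** 2)
    return math.sqrt(mean(squared_errors))


def rmse_stats(data, subsets):
    """Mean RMSE over the combinations (Eq. 2) and its population std. dev. (Eq. 3)."""
    errors = [subset_rmse(data, s) for s in subsets]
    return mean(errors), pstdev(errors)


# Hypothetical data: 2 timestamps x 3 sensors (m3/m3), all pairs of sensors (k = 2)
data = [[0.10, 0.20, 0.30], [0.20, 0.20, 0.20]]
subsets = [(0, 1), (0, 2), (1, 2)]
mean_rmse, sd_rmse = rmse_stats(data, subsets)
print(round(mean_rmse, 4), round(sd_rmse, 4))  # 0.0236 0.0167
```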

Previous research evaluating three co-located profiles at U.S. Climate Reference Network (CRN) stations revealed that the average measurement error associated with Stevens Water Hydra Probe measurements in the United States was approximately 0.012 m³/m³ (Coopersmith et al. 2015). As k increased, $\overline{RMSE}_k$ was compared to this inherent measurement error. We then determined how many sensors are needed within the network to achieve $\overline{RMSE}_k$ values lower than 110% of the inherent measurement error ($1.1 \times 0.012$ m³/m³) or, for watersheds where this level of uncertainty was not attained, lower than $1.1\,\overline{RMSE}_{n-1}$, where $n$ is the number of sensors within the network. In other words, we asked how many sensors are required to bring the error to within about 10% of the error obtained with only one missing sensor.
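The stopping rule above can be sketched as follows, assuming the mean RMSE has already been computed for k = 1, …, n − 1. The function name and example values are hypothetical; only the 0.012 m³/m³ error and the 1.1 factor come from the text.

```python
HYDRAPROBE_ERROR = 0.012  # m3/m3, inherent Hydra Probe measurement error cited in the text


def sensors_needed(mean_rmse_by_k, n, inherent_error=HYDRAPROBE_ERROR, factor=1.1):
    """Smallest k whose mean RMSE falls below the threshold described in the text.

    mean_rmse_by_k: {k: mean RMSE_k} for k = 1 .. n-1.
    The threshold is factor * inherent_error if any k attains it;
    otherwise it falls back to factor * RMSE_{n-1}.
    """
    threshold = factor * inherent_error
    if min(mean_rmse_by_k.values()) >= threshold:
        threshold = factor * mean_rmse_by_k[n - 1]
    for k in sorted(mean_rmse_by_k):
        if mean_rmse_by_k[k] < threshold:
            return k
    return None


# Hypothetical RMSE curves for a 5-sensor network
print(sensors_needed({1: 0.05, 2: 0.03, 3: 0.013, 4: 0.008}, n=5))  # 3
print(sensors_needed({1: 0.06, 2: 0.04, 3: 0.025, 4: 0.02}, n=5))   # 4
```

In the second curve no subset reaches the measurement-error threshold, so the fallback relative to the one-missing-sensor error applies.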

4. Results

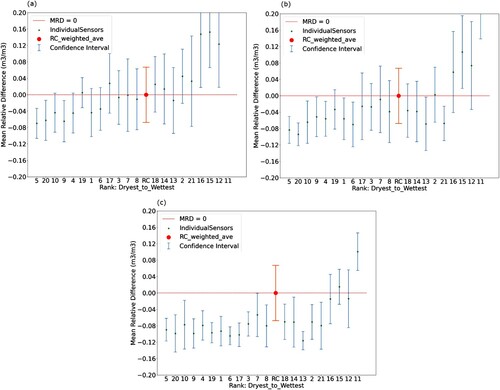

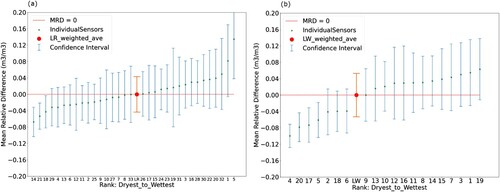

For each of the seven watersheds, we began with an image of the network’s sensors, ranked from driest to wettest from left to right, as shown in Figure 2. At each sensor, the green dot depicts the mean relative difference compared with the overall network mean (the larger red dot). The standard deviation of soil moisture values reported by each sensor over its lifetime is represented by the blue error bars, and the standard deviation of the network average estimates is illustrated by the thicker green error bars about the larger red dot. In Figure 2(a), for Little River, sensors #1 and #5 are considerably wetter than the remaining sensors within the watershed, while sensors #14, #18, and #21 are atypically dry. For these sensors, even a value one full standard deviation above or below the sensor’s mean only marginally approaches the network mean. In Figure 2(b), for Little Washita, it is noteworthy that rather than a smooth, monotonically increasing sequence of sensors, seven sensors are decidedly wetter than the remaining thirteen. This is likely a byproduct of the watershed containing two distinct soil textures (sand and clay), leading to decidedly drier and wetter measurements, respectively.

Figure 2. Temporal stability plots of (a) Little River, Georgia, and (b) Little Washita, Oklahoma. Error bars denote standard deviations of individual sensors’ reported values. The watershed weighted average represents the overall watershed average, as determined by sensor positions, together with its associated confidence interval.
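The per-sensor quantities plotted in Figure 2 can be sketched as below. This assumes the classic mean relative difference of Vachaud et al. (1985), the time average of (θ_i,t − θ̄_t)/θ̄_t, computed only over timestamps at which the sensor reported; the data structures and values are hypothetical.

```python
from statistics import mean, pstdev


def temporal_stability(series, network):
    """Per-sensor mean relative difference (MRD), sorted driest to wettest.

    series: {sensor_id: [value_t, ...]} aligned with network; None = missing.
    network: [network mean at t, ...].
    Returns (sensor_id, MRD, std. dev. of the sensor's own readings) tuples.
    """
    rows = []
    for sensor, values in series.items():
        pairs = [(v, m) for v, m in zip(values, network) if v is not None]
        mrd = mean((v - m) / m for v, m in pairs)
        spread = pstdev([v for v, _ in pairs])
        rows.append((sensor, mrd, spread))
    return sorted(rows, key=lambda row: row[1])


# Hypothetical network means and sensor readings (m3/m3)
network = [0.20, 0.25]
series = {"wet": [0.30, 0.30], "dry": [0.10, None], "mid": [0.20, 0.26]}
for sensor, mrd, spread in temporal_stability(series, network):
    print(sensor, round(mrd, 2), round(spread, 3))
```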

4.1 Analysis by year

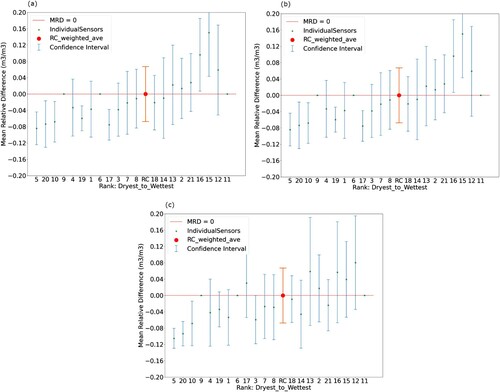

With the overall distributions established, we plotted charts analogous to those presented in Figure 2, maintaining the same sequence of sensors from left to right but presenting the distribution of values within a given year. Three years are presented in this manner in Figure 3. While the ordering of sensors from driest to wettest is not identical to that of the overall distribution, the same general pattern remains. Note that, in certain cases, a sensor did not function during a given year; this is visualized as a single green dot with a mean relative difference of 0 and a null error bar.

Figure 3. Annual distributions in Reynolds Creek, Idaho. (a) 2004, (b) 2005, and (c) 2006. Error bars denote annual standard deviation of reported sensor values.

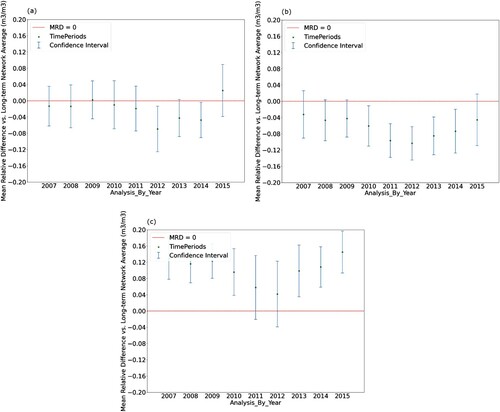

In Table 1, the correlation between the rankings of the active sensors and those sensors’ ranks in the network’s overall distribution is reported for every year in which each watershed’s network was in service. The number of active sensors is noted parenthetically. In total, sixty-seven such years were examined across the seven watersheds. Of these, only ten present a Spearman’s ρ value below 0.8, and seven of those ten occur in the Reynolds Creek watershed. In most of the watersheds examined, these correlations remain consistently above 0.85 and often exceed 0.9. A temporary network left in place for a full growing season is therefore likely to suffice for establishing the relative relationships between sensors.

Table 1. Annual rank correlations for each of the ARS watersheds.

In addition to the rank-based analysis by year, individual sensors’ distributions within watersheds were examined year by year to ascertain whether their behavior is consistent from one year to the next. Figure 4 presents three individual sensors within the Fort Cobb basin (OK).

Figure 4. Fort Cobb, Oklahoma, sensor distributions by year. (a) Sensor #6, (b) Sensor #7, (c) Sensor #13. Error bars denote annual standard deviation of reported sensor values.

In Figure 4, we can distinguish three types of sensors. All three record slightly drier conditions in 2011 and 2012. Figure 4(a) depicts a sensor that is consistently dry throughout the decade for which it was installed, (b) shows a sensor that is consistently near the overall network mean, and (c) shows a sensor that is consistently wetter than the network as a whole. This corroborates the findings of Table 1: these relative relationships (which sensors are wetter or drier) are typically stable over time.

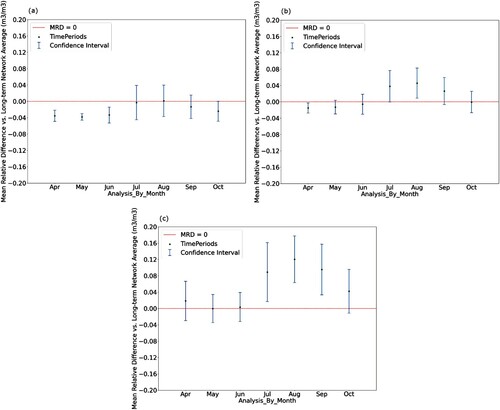

4.2 Analysis by month

Figure 5 presents three images from the Reynolds Creek basin in Idaho, illustrating the distribution of each sensor using only data from May, June, and July, respectively. Because the spring months receive considerably more precipitation each year, soil moisture values are typically higher in May than in July. Although by mid-summer the majority of sensors report values well below the historical network average, the overall pattern remains: sensors that are drier or wetter in one month tend to be the same sensors that are drier or wetter in another. These relationships are more robust in watersheds where rain is received regularly in every month. By contrast, in certain watersheds, meaningful rainfall is received in only one or two months of the year. In the months without rainfall, little distinguishes the soil moisture content at one sensor from that at another: all sensors quickly return to their minimum possible values (residual soil moisture) and remain there until sufficient precipitation arrives.

Table 2 presents findings similar to those in Table 1, with the analytical subsets determined by the month of the year rather than by the year within the watershed network’s history. As in Table 1, the number of available sensors is noted parenthetically, to the right of the calculated Spearman’s ρ correlation.

Table 2. Monthly rank correlation for each ‘warm’ month for each watershed.

These correlations were even stronger than their annual analogs. Of the forty-nine months examined, only seven display a correlation below 0.8. Four of these seven occur during the dry summer in Reynolds Creek (Idaho), and another falls in the dry spring in Walnut Gulch (Arizona). Of the remaining forty-two months, twenty-nine show a correlation above 0.9. As mentioned previously, during months when little, if any, rain occurs, the rankings among sensors are harder to retain, as most or all measurements fall to residual soil moisture values.

Figure 6 presents three images from Walnut Gulch. In southern Arizona, rainfall is generally limited to the monsoon season during late July, August, and September. As a result, all images display the same seasonal pattern. However, in (a), sensor #18 is consistently drier than the network average; in (b), sensor #36 is near the network average; and in (c), sensor #2 is decidedly wetter than the network average throughout the year. Also notable is that the remaining four months, when little rain occurs, assume roughly the same value. Nonetheless, these findings confirm what is presented in Table 2: seasonal relationships are stable for both wetter and drier sensors, and relative wetness is not a function of the season in which the analysis occurs.

4.3 Combinatorics – the stability of incomplete networks

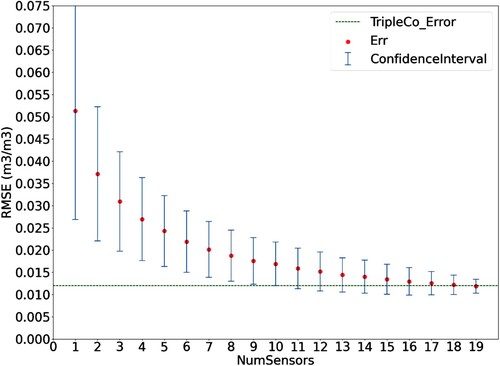

With an understanding from the previous two analyses that the relative relationships between sensors are stable both seasonally and from year to year, the follow-up question is how many sensors are required to approximate the network average. In Figure 7, the network in South Fork, Iowa, was examined by considering all combinations of one sensor, all combinations of two sensors, and so on, until the full network less a single sensor was considered. For simplicity, when more than 1000 combinations are possible, only one random set of 1000 combinations is considered. See Equations (2) and (3) for additional detail.

In Figure 7, although each additional sensor clearly decreases the error associated with the partial network, there are diminishing marginal returns. A combination of 16 of the 20 available sensors produces an average error against the overall network mean that is within 110% of the inherent uncertainty associated with Hydra Probe measurements. Table 3 presents these values for the remaining watersheds.

Table 3. Number of sensors needed to estimate the full network average for each watershed. Walnut Gulch is not included in this analysis because no timestamp with all 54 sensors active was available.

In Table 3, although the six watersheds analyzed contain highly disparate numbers of sensors (from 13 in St. Joseph’s to 33 in Little River), the number of sensors beyond which the marginal utility of each additional instrument becomes small is far more consistent. No network requires more than 16 sensors (South Fork), and the remaining networks allow sufficient estimates with 13 or fewer sensors.

5. Discussion & conclusions

Because purchasing, installing, and maintaining permanent networks requires considerable and sustained investment, this analysis helps determine the viability of, and quantitative requirements for, a temporary network used to scale a permanent network. From Table 3, approximately 12 sensors are sufficient in most environments, presuming their locations are distributed such that they capture the hydrologic heterogeneity of the watershed as well as its average moisture condition. This confirms the findings of Martinez-Fernandez and Ceballos (2005), though over a much longer time frame and a much more diverse set of hydroclimatic conditions. The findings in Table 1 and Table 2 suggest that the relationships between sensors are stable both inter-annually and seasonally. Therefore, if 12 sensors (stations) are deployed temporarily for one calendar year, it may be possible to scale a single permanent station so that it estimates the average soil moisture of the location with confidence similar to that of the temporary network. Enhancing these estimates with models could allow increasingly accurate watershed network approximations with fewer and fewer sensors.

As a result of this temporal stability analysis, when a given sensor’s estimate is unavailable for a brief period, its value can now be replaced based on its expected mean relative difference, rather than presenting the watershed average as the weighted average of the remaining active sensors. For example, if the network’s wettest sensor is unavailable for a day, the current methodology uses the average of the remaining sensors, which necessarily yields a drier watershed-scale estimate.
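One way to implement this replacement is sketched below, under stated assumptions: the mean relative difference follows Vachaud et al. (1985), sensors are equally weighted, and each active sensor’s reading divided by (1 + its MRD) is treated as an estimate of the network mean. The function is hypothetical; the text does not prescribe a specific replacement formula.

```python
from statistics import mean


def fill_missing(readings, mrd):
    """Fill None readings from each sensor's mean relative difference (MRD).

    readings: soil moisture at one timestamp, None where a sensor is missing.
    mrd: per-sensor MRD, delta_i = mean over t of (theta_i,t - net_t) / net_t.
    Each active sensor implies a network mean of theta_j / (1 + delta_j); the
    average of those is scaled by (1 + delta_i) for every missing sensor i.
    """
    implied = [r / (1 + d) for r, d in zip(readings, mrd) if r is not None]
    network_estimate = mean(implied)
    return [r if r is not None else network_estimate * (1 + d)
            for r, d in zip(readings, mrd)]


# Hypothetical 3-sensor network whose wettest sensor (MRD = +0.5) is offline
mrd = [-0.2, 0.0, 0.5]
readings = [0.16, 0.20, None]
filled = fill_missing(readings, mrd)
print(round(filled[2], 3))           # 0.3
print(round(mean(readings[:2]), 3))  # 0.18, the drier remaining-sensor average
```

With the fill, the equal-weight network mean is 0.22 m³/m³ rather than the 0.18 m³/m³ obtained by averaging only the remaining sensors, illustrating the dry bias described above.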

Finally, by comparing results across multiple watersheds, we begin to understand the long-term stability of soil moisture behavior at a given location. This type of analysis illustrates seasonal patterns of soil moisture and inter-annual trends, especially in watersheds whose networks have been installed for 15–20 years. Sensors were found to exhibit stable patterns of soil moisture from one year to the next, as previously established by Cosh et al. (2006), and this is now confirmed for each of the watersheds in question.

Acknowledgement

This research was a contribution from the Long-Term Agroecosystem Research (LTAR) network. LTAR is supported by the United States Department of Agriculture and available through the National Agricultural Library or STEWARDS database. USDA is an equal opportunity employer and provider.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

Notes: Numbers in parentheses refer to the number of sensors available for that year of study. All correlations are significant with a p-value of less than 0.01.

Note: Number of available sensors used in the study for each watershed in parentheses.

References

- Belair, S., G. Balsamo, J.-F. Mahfouf, and G. Deblonde. 2005. “Towards the Inclusion of Hydros Soil Moisture Measurements in Forecasting Systems of the Meteorological Service of Canada.” IGARSS 4: 2741–2743. Art. 1525624.

- Bell, J. E., E. Weng, and Y. Luo. 2010. “Ecohydrological Responses to Multifactor Global Change in a Tallgrass Prairie: A Modeling Analysis.” Journal of Geophysical Research 115: G04042. doi:https://doi.org/10.1029/2009JG001120.

- Bindlish, Rajat, Thomas Jackson, M. H. Cosh, Tianjie Zhao, and Peggy O’Neill. 2015. “Global Soil Moisture from the Aquarius/SAC-D Satellite: Description and Initial Assessment.” IEEE Geoscience and Remote Sensing Letters 12 (5): 923–927.

- Campoy, A., A. Ducharne, F. Cheruy, F. Hourdin, J. Polcher, and J. C. Dupont. 2013. “Response of Land Surface Fluxes and Precipitation to Different Soil Bottom Hydrological Conditions in a General Circulation Model.” Journal of Geophysical Research 118 (19): 10725–10739.

- Chan, Steven K., R. Bindlish, P. E. O’Neill, E. Njoku, T. J. Jackson, A. Colliander, F. Chen, et al. 2016. “Assessment of the SMAP Passive Soil Moisture Product.” IEEE Transactions on Geoscience and Remote Sensing 54 (5): 4994–5007. doi:https://doi.org/10.1109/TGRS.2016.2561938.

- Clewley, Daniel, Jane B. Whitcomb, Ruzbeh Akbar, Agnelo R. Silva, Aaron Berg, Justin R. Adams, Todd Caldwell, Dara Entekhabi, and Mahta Moghaddam. 2017. “A Method for Upscaling In Situ Soil Moisture Measurements to Satellite Footprint Scale Using Random Forests.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 10 (6): 2663–2673. doi:https://doi.org/10.1109/JSTARS.2017.2690220.

- Coopersmith, E. J., M. H. Cosh, J. E. Bell, and W. T. Crow. 2016. “Multi-Profile Analysis of Soil Moisture Within the U.S. Climate Reference Network.” Vadose Zone Journal 1–8. doi:https://doi.org/10.2136/vzj2015.01.0016.

- Coopersmith, E. J., M. H. Cosh, W. Petersen, J. Prueger, and J. Niemeier. 2015. “Soil Moisture Model Calibration and Validation: An ARS Watershed on the South Fork Iowa River.” Journal of Hydrometeorology 1087–1101. doi:https://doi.org/10.1175/JHM-D-14-0145.1.

- Coopersmith, E. J., B. S. Minsker, C. E. Wenzel, and B. J. Gilmore. 2014. “Machine Learning Assessments of Soil Drying for Agricultural Planning.” Computers and Electronics in Agriculture 104: 93–104. doi:https://doi.org/10.1016/j.compag.2014.04.004.

- Cosh, M. H., T. J. Jackson, P. Starks, and G. Heathman. 2006. “Temporal Stability of Surface Soil Moisture in the Little Washita River Watershed and its Applications in Satellite Soil Moisture Product Validation.” Journal of Hydrology 323 (1–4): 168–177. doi:https://doi.org/10.1016/j.jhydrol.2005.08.020.

- Cosh, M. H., P. J. Starks, J. A. Guzman, and D. N. Moriasi. 2014. “Upper Washita River Experimental Watersheds: Multiyear Stability of Soil Water Content Profiles.” Journal of Environmental Quality 43 (4): 1328–1333. doi:https://doi.org/10.2134/jeq2013.08.0318.

- De Rosnay, P., M. Drusch, D. Vasiljevic, G. Balsamo, C. Albergel, and L. Isaksen. 2013. “A Simplified Extended Kalman Filter for the Global Operational Soil Moisture Analysis at ECMWF.” Quarterly Journal of the Royal Meteorological Society 139 (674): 1199–1213.

- Grayson, R. B., A. W. Western, F. H. S. Chiew, and G. Bloschl. 1997. “Preferred States in Spatial Soil Moisture Patterns: Local and Nonlocal Controls.” Water Resources Research 33 (12): 2897–2908. doi:https://doi.org/10.1029/97WR02174.

- Jackson, T. J., R. Bindlish, M. H. Cosh, T. Zhao, P. J. Starks, D. D. Bosch, M. S. Seyfried, et al. 2012. “Validation of Soil Moisture Ocean Salinity (SMOS) Soil Moisture Over Watershed Networks in the U.S.” IEEE Transactions on Geoscience and Remote Sensing 50 (5): 1530–1543.

- Jackson, T. J., M. H. Cosh, R. Bindlish, P. J. Starks, D. D. Bosch, M. S. Seyfried, D. C. Goodrich, M. S. Moran, and J. Du. 2010. “Validation of Advanced Microwave Scanning Radiometer Soil Moisture Products.” IEEE Transactions on Geoscience and Remote Sensing 48: 4256–4272.

- Joetzjer, E., H. Douville, C. Delire, and P. Ciais. 2013. “Present-Day and Future Amazonian Precipitation in Global Climate Models: CMIP5 Versus CMIP3.” Climate Dynamics 41 (11-12): 2921–2936.

- Jones, A. S., J. Cogan, G. Mason, and G. McWilliams. 2010. “Design of a Temporal Variational Data Assimilation Method Suitable for Deep Soil Moisture Retrievals Using Passive Microwave Radiometer Data.” 2010 11th Specialist Meeting on Microwave Radiometry and Remote Sensing of the Environment 60–62. doi:https://doi.org/10.1109/MICRORAD.2010.5559591.

- Koster, R. D., and P. C. D. Milly. 1997. “The Interplay Between Transpiration and Runoff Formulations in Land Surface Schemes Used with Atmospheric Models.” Journal of Climate 10 (7): 1578–1591.

- Martinez-Fernandez, J., and A. Ceballos. 2005. “Mean Soil Moisture Estimation Using Temporal Stability Analysis.” Journal of Hydrology 312: 28–38.

- Sheffield, J., G. Goteti, F. Wen, and E. F. Wood. 2004. “A Simulated Soil Moisture Based Drought Analysis for the United States.” Journal of Geophysical Research 109 (24): 1–19. doi:https://doi.org/10.1029/2004JD005182.

- Starks, P. J., G. C. Heathman, T. J. Jackson, and M. H. Cosh. 2006. “Temporal Stability of Soil Moisture Profile.” Journal of Hydrology 324 (1–4): 400–411. doi:https://doi.org/10.1016/j.jhydrol.2005.09.024.

- Vachaud, G., A. Passerat De Silans, P. Balabanis, and M. Vauclin. 1985. “Temporal Stability of Spatially Measured Soil Water Probability Density Function.” Soil Science Society of America Journal 49: 822–828.