ABSTRACT

Spatiotemporal information extracted from Internet texts is ubiquitous, but its unstructured nature and uncertainty limit its use in spatiotemporal association and analysis. This study uses ST-Voxel modeling to structure and associate the ubiquitous spatiotemporal information found in natural language texts, providing a new approach to associating such information on the Internet and to discovering public opinion. The main contributions of this paper are: (1) It proposes a convolution method for ST-Voxels, which solves the voxel modeling problem for unstructured and uncertain spatiotemporal objects and spatiotemporal relations in natural language texts. Experiments show that this method can effectively model 5 types of spatiotemporal objects and 16 types of uncertain spatiotemporal relations found in texts; (2) It realizes unknown event discovery based on voxelized spatiotemporal information association. Experiments show that this method effectively aggregates ubiquitous spatiotemporal information across multiple natural language texts, which is conducive to discovering spatiotemporal events. The selection of convolution parameters in voxel modeling is also discussed, and a parameter selection method that balances the discovery capability and discovery accuracy of spatiotemporal events is given.

1. Introduction

The rise of various information-sharing software represented by social media has led to the production of many texts containing spatiotemporal information daily (Yang et al. Citation2020). This spatiotemporal information, called ubiquitous spatiotemporal information, is enormous in quantity, inconsistent in structure, and uncertain. After the extraction of such ubiquitous spatiotemporal information from text, including geographical names (Hu et al. Citation2022; Ma and Hovy Citation2016), spatial relation (Qiu et al. Citation2019; Stock et al. Citation2022) and time information (Kim and Myaeng Citation2004), processing and application of the extracted spatiotemporal information is crucial and challenging.

The processing difficulties mainly come from the following two aspects. The first is the unstructured and uncertain nature of ubiquitous spatiotemporal information. The same spatiotemporal area can be described in different ways: by latitude, longitude, and time stamps; by place names and time periods; or by a spatial relation to a specific address and a temporal relation to a particular time (Cadorel, Blanchi, and Tettamanzi Citation2021; Tissot et al. Citation2019). Additionally, the information might be inaccurate. It may contain uncertain descriptive words such as ‘around’. Even seemingly specific expressions, such as ‘east side’ and ‘1 km’, are generally subjective judgments that have not been accurately measured. Ubiquitous spatiotemporal information therefore describes an uncertain range with vague boundaries, that is, a semantic field.

The existing processing of ubiquitous spatiotemporal information is mainly based on membership functions, with quantitative modeling methods designed for various spatiotemporal relations (Peuquet and Ci-Xiang Citation1987; Tissot et al. Citation2019; Y. Wang et al. Citation2018; Z. Wang et al. Citation2018). These methods can effectively obtain the modeling results of ubiquitous spatiotemporal information in the form of coordinates and time scales. However, when similar spatiotemporal areas recorded in this form are analyzed, the spatiotemporal relations must still be calculated to determine whether the coordinates describe the same place. Hence, such modeling has not fundamentally resolved the uncertain nature of the information. In addition, the geometry of the fuzzy semantic field is complex and difficult to describe directly with coordinates or equations.

When it comes to the application step, existing solutions need to access the spatiotemporal information associated with the event (based on event discovery) for positioning and next-step analysis (Bakillah, Li, and Liang Citation2015; Kuflik et al. Citation2017; Zhou, Xu, and Kimmons Citation2015). These methods rely not only on the pre-discovery of the event, but also on searching for information about the event that occurred, such as the timely and correct detection of target events (Sakaki, Okazaki, and Matsuo Citation2010) and the reporting of relevant news (Devès et al. Citation2019). In the case of incorrectly related queries, the spatiotemporal information obtained may not be related to the event itself. It will easily result in an incorrect analysis of the event. Another disadvantage is that discovering unknown events from spatiotemporal information is difficult.

Aiming at spatiotemporal association of ubiquitous spatiotemporal information, existing methods process the modeling results using clustering (Ansari, Prakash, and Mainuddin Citation2018). A related index query method has been proposed (Chen et al. Citation2023), where it is necessary to import many results into the spatiotemporal analysis system to perform association. It is challenging to cope with the theoretically infinite growth of spatiotemporal information.

To solve the processing and association problems, we introduce spatiotemporal voxels (ST-Voxels), a kind of spatiotemporal geometry that realizes the discrete expression of spatiotemporal regions (J. Li et al. Citation2022; Smith and Dragićević Citation2022; Stilla and Xu Citation2023), based on the following two motivations:

When the ST-Voxels determined by the spatiotemporal range are used to represent ubiquitous spatiotemporal information, unstructured text is transformed into a structured set of voxels. Different descriptions of the same spatiotemporal region are then represented by similar sets of ST-Voxels, so there is no need to compare them through complex spatiotemporal relation calculations. Moreover, voxel modeling of fields has been applied to physical fields such as magnetic fields (Odawara et al. Citation2011), and its effectiveness has been confirmed. Therefore, ST-Voxels suit the modeling and expression of spatiotemporal information.

When the event associated with the spatiotemporal information cannot be determined in advance, associating the spatiotemporal information with ST-Voxels causes a large amount of spatiotemporal information to accumulate naturally in a limited number of spatiotemporal voxels, which makes it possible to discover unknown events after the spatiotemporal association. Moreover, studies have shown that the discretization of spatiotemporal data helps extract spatiotemporal information features, which can be used in predictive learning, anomaly detection, and other fields (S. Wang, Cao, and Yu Citation2022). In addition, the number of voxels used in the voxelization modeling results of ubiquitous spatiotemporal information is limited and does not grow without bound as the amount of spatiotemporal information increases. Therefore, the voxelization modeling of ubiquitous spatiotemporal information contributes to information association and event discovery.

Existing ST-Voxel models are generally used to process traditional deterministic spatiotemporal information (Poux and Billen Citation2019; Stilla and Xu Citation2023; Xu, Tong, and Stilla Citation2021), and have limited power to process information that is unstructured and ambiguous. Therefore, it is necessary to expand and supplement the existing ST-Voxel model before it can solve the modeling and application of ubiquitous spatiotemporal information. Our main contributions are:

We introduce and expand the ST-Voxel concepts for the processing of unstructured and uncertain ubiquitous spatiotemporal information;

We propose an ST-Voxel convolution method to handle the unstructured and uncertain nature of ubiquitous spatiotemporal information. The fuzzy semantic field described by ubiquitous spatiotemporal information of various structures and quantities can be modeled as a limited number of deterministic spatial voxel objects.

The application potential of our proposed method has been demonstrated by the information association method, which also enables unknown event discovery.

The article is organized in the following structure: Section 2 introduces the adaptation of the ST-Voxel model, and details the voxel modeling method for spatiotemporal information; Section 3 presents the voxelized information correlation method for event correlation and spatiotemporal correlation; and Section 4 verifies our method’s feasibility and effectiveness.

2. Voxelized modeling method

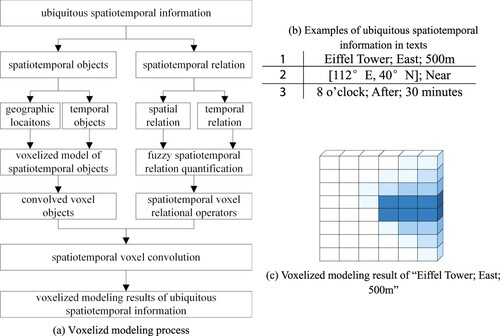

Figure 1(a) illustrates the proposed voxelized modeling process for ubiquitous spatiotemporal information. For spatiotemporal information extracted from texts, the object voxelized model and the voxel relational operator are proposed for the spatiotemporal objects and the spatiotemporal relations, respectively. The convolved voxel object is then obtained from the voxelized result for the spatiotemporal object. The voxelization modeling results are finally obtained through the convolution operation of the convolved voxel objects and the voxel relational operators. Figure 1(b) lists 3 examples of spatiotemporal information extracted from texts, which are the input of the voxelized modeling method. The first example, ‘Eiffel Tower; East; 500 m’, is extracted from the text ‘we held a party 500 meters from the east side of the Eiffel Tower’, and (c) is the voxelized modeling result of that information.

2.1. Adaptation of ST-Voxels

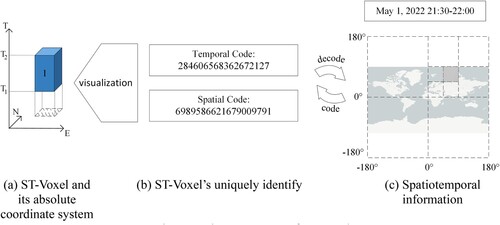

This paper combines spatial position coding (Lei et al. Citation2020) and time coding (Tong et al. Citation2019) to expand the concept of ST-Voxels. The spatial range represented by the spatial code is used as the base of the voxel, representing the voxel’s spatial range. The time range defined by the time code is used as the height of the voxel, representing the voxel’s time range. The expanded concept of ST-Voxels includes three parts: the coordinate system, the identification, and the attributes.

ST-Voxels have absolute and relative coordinate systems. The absolute coordinate system identifies the absolute position of an ST-Voxel, and the relative coordinate system identifies the relative position of each voxel within a given set of ST-Voxels. The absolute coordinate system used in this study adopts the latitude and longitude projection. The E-axis coincides with the equator and points due east. The N-axis coincides with 0° longitude and points due north. The T-axis represents time and points to the future (generally, a specific reference time is the origin of the T-axis). The three axes form a right-handed rectangular coordinate system (‘a’ in Figure 2), the scale of which depends on the voxel resolution (in this article, this is also the segmentation scale of the coding). The origin of the relative coordinate system is at the center of a voxel. The I-axis points east, the J-axis points north, and the K-axis is the time axis pointing to the future; these three axes also form a right-handed rectangular coordinate system, whose scale depends on the voxel resolution.

In this study, a joint code comprising spatial coding (Lei et al. Citation2020) and temporal coding (Tong et al. Citation2019) was used to identify ST-Voxels uniquely. The absolute identification of the ST-Voxel in Figure 2 is 6989586621679009791–284606568362672127 (‘b’ in Figure 2). In a relative reference frame, ST-Voxels can also be identified by three-dimensional coordinates of the form $(i, j, k)$.

The attributes of the ST-Voxels are used to describe the objects, events, information, etc., present in the voxel. The attribute can be deterministic, with 1 or 0 indicating presence or absence. It can also be non-deterministic, represented by the membership degree in fuzzy mathematics, which indicates the probability of occurrence of objects, events, and information in ST-Voxels. The ST-Voxel attribute value shown in (a) is 1, which means that the entity is located in the space–time range.
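The three parts of the expanded ST-Voxel concept can be illustrated with a small data structure. This is only a sketch: the class name `STVoxel` and its field names are hypothetical, and the two integer codes are simply taken as given rather than computed with the coding schemes cited above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class STVoxel:
    """Hypothetical container for one ST-Voxel (names are illustrative)."""
    spatial_code: int         # code of the grid cell that forms the voxel's base
    temporal_code: int        # code of the time interval that forms the voxel's height
    membership: float = 1.0   # attribute: 1 or 0 if deterministic, else a degree in [0, 1]

    def __post_init__(self):
        if not 0.0 <= self.membership <= 1.0:
            raise ValueError("membership must lie in [0, 1]")

    @property
    def identifier(self) -> str:
        # Joint code: spatial code and temporal code joined by a separator.
        return f"{self.spatial_code}-{self.temporal_code}"


# The absolute identification quoted in the text, with a deterministic attribute of 1.
voxel = STVoxel(6989586621679009791, 284606568362672127)
print(voxel.identifier)
```

Keeping the identifier separate from the attribute means that the same voxel can later carry different membership degrees from different texts.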

In this study, we use voxels of different colors to distinguish different voxel models. The gray voxel is the voxelization result of the reference entity when modeling the spatiotemporal relations; the white voxel indicates that the attribute value of the voxel at that location is 0; the blue voxel is the voxelization modeling result of the spatiotemporal information, where the darker the blue, the greater the attribute value of the voxel. Voxels of other specific colors are described in detail when they first appear and will not be repeated here.

2.2. Spatiotemporal objects

Location-related spatial information in natural texts has two forms: geographic locations (Monteiro, Davis, and Fonseca Citation2016) and spatial relations (Stock et al. Citation2022). Temporal information in natural texts usually has four forms: time points, time periods, fuzzy time, and temporal relations (Campos et al. Citation2015). Just as spatial relations are based on geographic locations, temporal relations are based on the other three types of temporal information; by analogy with geographic locations, time points, time periods, and fuzzy time are therefore collectively referred to as temporal objects. Because time and space in voxels are orthogonal to each other, spatial and temporal information can be modeled separately and then combined into a single voxel modeling result. Hence, the voxelized modeling methods for the 5 types of spatiotemporal objects and 16 types of spatiotemporal relations (Allen Citation1983; Deng, Li, and Wu Citation2013) listed in Table 1 are discussed separately here.

Table 1. Ubiquitous spatiotemporal information types in texts.

2.2.1. Geographic locations

The absolute identification of voxels involves a combination of grid and time coding, so geographic location modeling is inseparable from the grid and coding methods of geographic entities (Lei et al. Citation2020; Peterson Citation2011; Sahr Citation2019; Wu et al. Citation2021). A quadtree-based latitude-longitude grid (Hartmann et al. Citation2016; Lei et al. Citation2020) was used to project voxels in space for modeling. The spatial resolution of voxels was related to the application scenario (e.g. for point of interest (POI) data, the resolution was 82 m × 94 m). The attributes of the voxel identified whether the object appeared in the spatiotemporal range, and a value of 1 indicated that the object was located at the voxel position.

Geographic locations include direct coordinates, place names, and other indirect locations (Monteiro, Davis, and Fonseca Citation2016). Indirect location queries have been extensively studied for geographic information retrieval (Baeza-Yates and Ribeiro-Neto Citation1999) and are not repeated here. The voxel modeling method for coordinates and place names in a unit time period is introduced below.

2.2.1.1. Coordinates

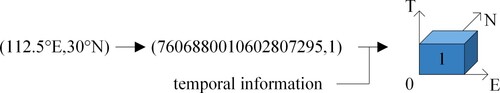

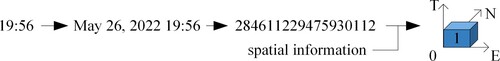

Geographic locations in the form of coordinates can be uniquely identified by a spatial code and then combined with the corresponding time to produce ST-Voxel modeling results (). When fuzzy quantization was not involved, the attribute value of the voxel was 1.
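As an illustration of how a coordinate can be mapped to a grid cell and then to an integer code, the sketch below uses a plain quadtree subdivision of the latitude-longitude plane with bit interleaving. This is a simplified stand-in chosen for illustration, not the spatial coding of Lei et al.; the function names and the `level` parameter are assumptions.

```python
def quadtree_cell(lon, lat, level):
    """Return (row, col) of the cell containing (lon, lat) at a quadtree level."""
    n = 1 << level  # 2**level cells along each axis
    col = min(int((lon + 180.0) / 360.0 * n), n - 1)
    row = min(int((lat + 90.0) / 180.0 * n), n - 1)
    return row, col


def morton_code(row, col, level):
    """Interleave the bits of row and col into a single integer code."""
    code = 0
    for bit in range(level):
        code |= ((col >> bit) & 1) << (2 * bit)
        code |= ((row >> bit) & 1) << (2 * bit + 1)
    return code


# Two nearby coordinates fall into the same cell and share one spatial code.
level = 10
code_a = morton_code(*quadtree_cell(2.2945, 48.8584, level), level)
code_b = morton_code(*quadtree_cell(2.2946, 48.8585, level), level)
print(code_a == code_b)
```

Pairing such a spatial code with a temporal code and an attribute value of 1 gives the deterministic voxel described above.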

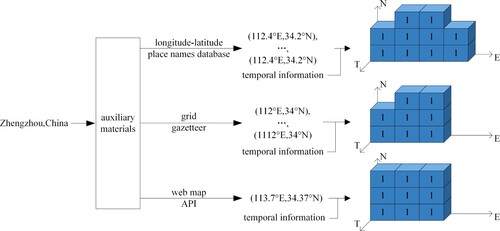

2.2.1.2. Place names

When spatial information as place names was modeled, the following considerations were necessary: (1) when a place name database (Adams Citation2017; Wiki Citation2022) was used as auxiliary data, the corresponding longitude and latitude sets were searched by matching place names; and (2) when a network map was used, its API service (like AutoNavi Map API; AMAP Citation2022) was used to obtain the coordinates of place names. Finally, the spatial modeling results of place names were obtained according to the coordinate modeling method (). Among the three types of auxiliary data, the coordinate data from the longitude-latitude place names database were the most accurate, and the modeling results were closest to the actual geographic range of place names. The coordinate data of the grid gazetteer were less accurate than those of the latitude and longitude place name database owing to the influence of the grid, and some voxelization results were omitted or incorrectly increased. The web map API returned latitude and longitude coordinates for place names, and the voxelization result was generally irrelevant to the real geographic shape.

2.2.2. Temporal objects

Normalized temporal structures like ‘March 21, 2022’ can be directly converted to one-dimensional integer encodings (Tong et al. Citation2019), so converting the 3 types of temporal objects to a standard format enables their voxelized modeling.

2.2.2.1. Time points

Time information in the form of time points needs to be transformed into a normalized time structure considering the context and then converted into integer codes as the modeling results for time points. The height of the voxels was the time interval represented by the integer code, and the attribute value of the voxel was 1 ().

2.2.2.2. Time periods

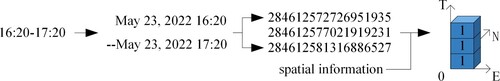

To model time information in the form of time periods, the start and end time points were obtained and converted into temporal codes using the method for modeling time points. Then, all integer codes between the two temporal codes (inclusive) at the current time resolution were used as the modeling results for the time periods ().
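The enumeration of codes for a time period can be sketched as follows. The integer code used here (whole resolution steps since the Unix epoch) is an assumption made for illustration and is not the temporal coding of Tong et al.; `time_code` and `period_codes` are hypothetical helper names.

```python
from datetime import datetime, timezone


def time_code(moment, resolution_s):
    """Integer code of the interval containing `moment` (assumed: steps since epoch)."""
    return int(moment.timestamp()) // resolution_s


def period_codes(start, end, resolution_s):
    """All interval codes from the start code to the end code, inclusive."""
    return list(range(time_code(start, resolution_s),
                      time_code(end, resolution_s) + 1))


# '8:00-18:30 on April 28th' at an hourly resolution.
start = datetime(2022, 4, 28, 8, 0, tzinfo=timezone.utc)
end = datetime(2022, 4, 28, 18, 30, tzinfo=timezone.utc)
codes = period_codes(start, end, 3600)
print(len(codes))  # 11 hourly voxels, from the 08:00 interval to the 18:00 interval
```

Each code in the list becomes the height of one voxel with an attribute value of 1.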

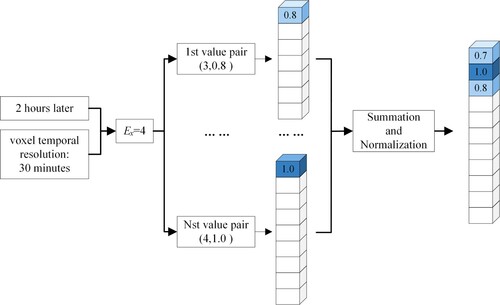

2.2.2.3. Fuzzy time

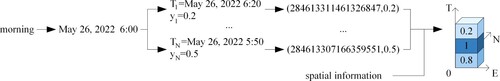

Firstly, the fuzzy times represented by ‘early morning’ and ‘evening’ were converted into time points using a simple mapping method. Then, the membership cloud method (Li et al. Citation1998) was used to solve its ambiguity. This is done as follows. Let

be the smallest scale that is not 0 in

(for example, 6 o’clock in 6:00; 24 min in 00:24). For each value pair

generated, let

(a positive sign for

indicates time delay and a negative sign represents time advance) and assign membership degree

to the time code corresponding to

. After summing and normalizing the

membership degree according to the time code, the value range of each membership degree was from 0 to 1, which was used as the time membership degree of each voxel. If the spatial modeling results had membership degrees, the corresponding membership degrees were multiplied ().
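The fuzzy time procedure can be sketched with a forward normal cloud generator. The parameter names `ex`, `en`, and `he` (expectation, entropy, and hyper-entropy) follow the usual description of the membership cloud method, while the concrete values, the time code (minutes divided by the resolution), and normalizing by the largest summed membership are assumptions made for this illustration.

```python
import math
import random
from collections import defaultdict


def normal_cloud(ex, en, he, n, rng):
    """Forward normal cloud generator: returns n (value, membership) drops."""
    drops = []
    for _ in range(n):
        en_prime = abs(rng.gauss(en, he)) or en  # per-drop entropy, kept positive
        x = rng.gauss(ex, en_prime)
        mu = math.exp(-((x - ex) ** 2) / (2 * en_prime ** 2))
        drops.append((x, mu))
    return drops


def fuzzy_time_membership(ex_min, en_min, he_min, resolution_min, n=2000, seed=0):
    """Sum drop memberships per time code, then scale so the largest value is 1."""
    rng = random.Random(seed)
    totals = defaultdict(float)
    for x, mu in normal_cloud(ex_min, en_min, he_min, n, rng):
        totals[int(x // resolution_min)] += mu
    peak = max(totals.values())
    return {code: value / peak for code, value in sorted(totals.items())}


# 'Early morning' mapped to 06:00 (360 min after midnight), 10-minute voxels.
membership = fuzzy_time_membership(360, 30, 3, 10)
```

Time codes close to the mapped time point receive membership degrees near 1, and the degree falls off for earlier and later codes.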

2.3. Spatiotemporal relation

The spatiotemporal relation can determine the spatiotemporal location of an object through the location of another object and their relation. Therefore, modeling should also follow this rule: the reference object and the spatiotemporal relation are modeled separately, and the spatiotemporal position of the target object is obtained through some combination of these. For different reference objects having the same spatiotemporal relation, no matter how the reference objects change, the target spatiotemporal position can be obtained from the same modeling results for the spatiotemporal relation. This is similar to the concepts of system input and impulse response in convolution. At the same time, in the ST-Voxel relative coordinate system, the position of each voxel is uniquely identified by three-dimensional integer coordinates, which is suitable for linear operations. The convolution operation of ST-Voxels is defined here to realize the modeling of spatiotemporal relation.

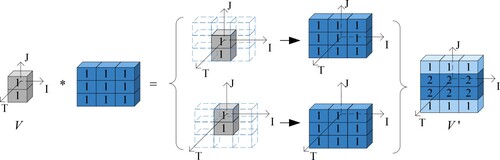

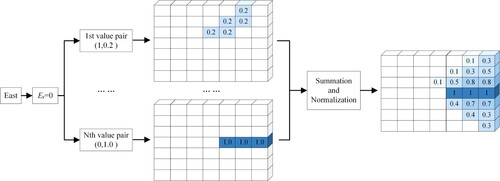

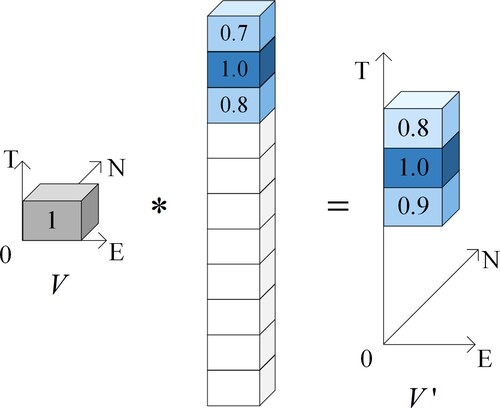

2.3.1. Mathematical principles of ST-Voxel convolution

For real space with

rows,

columns, and

height, if

and

, then their convolution is

(‘

’ is the convolution operator),

where:

(1)

A relative coordinate system can be established with the geometric center of the voxel set,

as the origin, and a direction parallel to the absolute coordinate system. The scale of the relative coordinate system was the resolution of the voxels. For

, we defined

, wherein element

was the attribute value of the voxel whose coordinates were

in the relative coordinate system.

,

, where

. If for the voxel set

, the attribute values of all elements

correspond to

one-to-one, then the attribute value of the voxel is called the spatiotemporal membership degree, which indicates the probability of the object appearing at this position. We call

the convolved (voxel) object, B the (voxel) object matrix, C the (voxel) relation operator, and

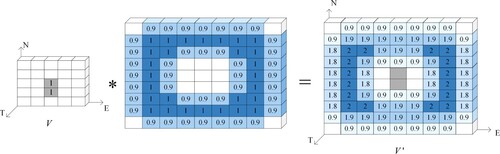

the (voxel) convolution result. presents an intuitive schematic of the voxel convolution process.
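A discrete three-dimensional convolution of an object matrix with a relation operator can be sketched in plain Python. The sketch computes the full convolution, so the result is larger than the object matrix; whether the original method crops or aligns the result differently is not assumed here, and the function name `convolve3d` is hypothetical.

```python
def convolve3d(obj, op):
    """Full discrete convolution of two 3-D nested lists (rows, columns, height)."""
    m, n, h = len(obj), len(obj[0]), len(obj[0][0])
    p, q, r = len(op), len(op[0]), len(op[0][0])
    out = [[[0.0] * (h + r - 1) for _ in range(n + q - 1)]
           for _ in range(m + p - 1)]
    for i in range(m):
        for j in range(n):
            for k in range(h):
                weight = obj[i][j][k]
                if weight == 0:
                    continue  # empty voxels contribute nothing
                # Stamp the relation operator at every occupied voxel.
                for a in range(p):
                    for b in range(q):
                        for c in range(r):
                            out[i + a][j + b][k + c] += weight * op[a][b][c]
    return out


# Object matrix: two occupied voxels in a row. Operator: a 3 x 1 x 1 spread.
object_matrix = [[[1]], [[1]]]
relation_operator = [[[0.25]], [[0.5]], [[0.25]]]
result = convolve3d(object_matrix, relation_operator)
print([layer[0][0] for layer in result])  # [0.25, 0.75, 0.75, 0.25]
```

The example shows why the same relation operator can be reused for any reference object: the operator is applied at every occupied voxel and the contributions are summed.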

2.3.2. Spatial relation

Spatial relation can be divided into three types of basic relation according to the geometric constraints: topological, directional, and distant relation (Deng, Li, and Wu Citation2013), which can also be combined to form a new spatial relation. For example, the directional relation and distant relation can be combined to form an azimuth relation, such as ‘600 m to the east’. The purpose of spatial relation modeling is to obtain the geographic locations of a target entity through the reference object’s geographic locations and their spatial relation.

During spatial relation modeling, the convolved object was obtained from the reference object, and the relational operator was generated according to the spatial relation (because the spatial relation does not involve time, only two scales of the relation operator plane are discussed). The convolution result was processed to obtain the inference result for the spatiotemporal position of the target entity.

2.3.2.1. Generation of convolved objects

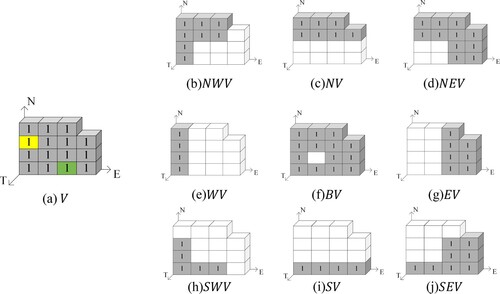

Directional words (such as ‘east’ and ‘south’) in the text not only express the directional relation of the target entity but also restrict the reference entity. For example, for the description ‘The restaurant is 500 m east of the school,’ ‘east’ refers to the directional relation between the ‘restaurant’ and ‘school’, while ‘500 m’ refers to the approximate distance between the ‘restaurant’ and ‘the part of the school located in the east,’ rather than the distance between ‘restaurant’ and ‘the geometric center of the school’. Therefore, the convolved object was obtained by dividing the voxel modeling results of the reference object according to the directional relation and then recombining them. This reduced the workload of subsequent convolution and made the modeling results of the spatial information more accurate.

Note that the voxelized result of the reference object was , and the geometric center of

was

. Let

,

,

,

(

is the rounding symbol). Then, the final convolved object

was obtained according to formula (2), named North Voxel (

), Northeast Voxel (

), East Voxel (

), Southeast Voxel (

), South Voxel (

), Southwest Voxel (

), West Voxel (

), Northwest Voxel (

), and Boundary Voxel (

). If

was observed as in (a), then

was defined as in the dark part of (b)–(j).

(2)

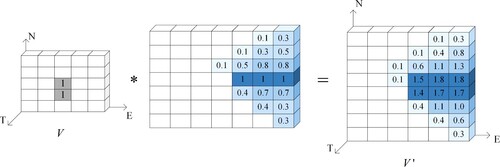

2.3.2.2. From spatial relation to relational operators and convolution operations

Because of the inaccuracy of personal perceptions and descriptions, spatial relation typically has some ambiguity. This study used a random number (pan-normal) generator with a stable tendency (Li et al. Citation1998) to quantify ambiguous qualitative spatial relation. According to the characteristics of the spatial relationship, this paper uses expectation and convolution parameters to calculate other parameters in this method. The higher the uncertainty of the spatial relation, the greater the convolution parameter. The relational operator generation process and convolution operation for three types of basic spatial relation and the complex relation formed by combining basic relation are described. When the distant relation is not included in the text, the magnitude of the relational operator is generally an integer multiple of the diameter of the circumcircle of the convolved object, and it is an odd number.

Topological relation is of three types: adjacent, intersecting and separated. As the topological relation is generally constant, a fixed relational operator was used for modeling. shows an example of the modeling process for topological relation. and

in are 3 × 3 erosion and dilation structuring elements, respectively (Castleman Citation1996).

Table 2. Example of the topological relation modeling process.

Directional relation: A polar coordinate system was established with due east as the positive direction of the polar axis and the center of the relational operator as the origin. Let be the radian of the direction in the polar coordinate system (for example, due east corresponds to

, and due north corresponds to

). For each value pair

generated, a relational operator

was obtained, and

was assigned to all positions where it intersected rays with radians

. The relational operator of the directional relation was obtained by normalizing the sum of

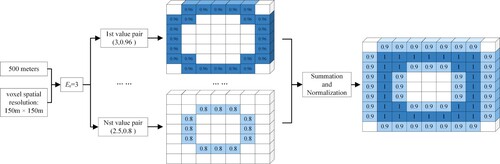

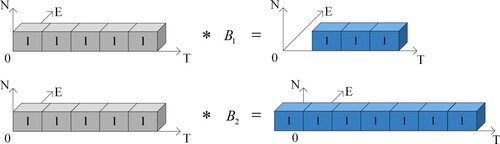

relational operators. The relation operator construction process for the directional relation is shown in . The modeling process for the directional relation is shown in . The gray part of represents the convolved object.

Distant relation: Let be the number of voxels corresponding to the distance at the voxel spatial resolution during modeling (for example, when the spatial resolution of the voxel was 20 m × 20 m, 500 m corresponded to

). For each value pair

generated, a relational operator

with a column of

was obtained, and

was assigned to all positions in the relational operator that intersected the circles whose center was

and whose radius was

. The relational operator of the distant relation was obtained by normalizing the sum of

relational operators. The relation operator construction process for the distant relation is shown in . The modeling process of the distant relation is shown in .
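The construction of a relational operator for the distant relation can be sketched as follows. The cloud parameters `en` and `he`, the half-voxel tolerance used to decide whether a cell intersects a circle, and the normalization by the largest cell value are assumptions made for illustration; the link between these parameters and the convolution parameter of the paper is not reproduced here.

```python
import math
import random


def distance_operator(d_voxels, en, he, n=300, seed=0):
    """Sum ring-shaped contributions of cloud drops into a square, odd-sized operator."""
    rng = random.Random(seed)
    drops = []
    for _ in range(n):
        en_prime = abs(rng.gauss(en, he)) or en
        radius = rng.gauss(d_voxels, en_prime)
        if radius < 0:
            continue  # a negative distance has no meaning
        mu = math.exp(-((radius - d_voxels) ** 2) / (2 * en_prime ** 2))
        drops.append((radius, mu))
    half = math.ceil(max(r for r, _ in drops)) + 1
    size = 2 * half + 1  # odd, so the operator has a central cell
    operator = [[0.0] * size for _ in range(size)]
    for radius, mu in drops:
        for i in range(size):
            for j in range(size):
                # A cell is treated as lying on the circle if its center is within
                # half a voxel of the circle.
                if abs(math.hypot(i - half, j - half) - radius) <= 0.5:
                    operator[i][j] += mu
    peak = max(max(row) for row in operator)
    return [[value / peak for value in row] for row in operator]


# A distance of 5 voxels (for example, 500 m at an assumed 100 m resolution).
op = distance_operator(5, 1.0, 0.1)
center = len(op) // 2
print(op[center][center + 5] > op[center][center])
```

The resulting operator is a fuzzy ring: cells about 5 voxels from the center carry high membership, while the center itself carries almost none.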

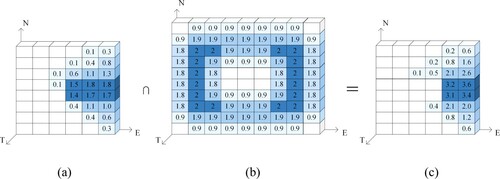

Other spatial relation: This can be regarded as a complex relation formed through a combination of three types of basic spatial relation. The basic spatial relation that comprised this were modeled, and the intersection of the results according to equation (3) was the modeling result for other spatial relation.

(3)

where,

,

, and

are spatial coding, temporal coding, and membership degree, respectively;

and

are the modeling results of two basic spatial relation; and

is the intersection operator. shows the modeling process of a compound relation comprising directional and distant relation.

2.3.3. Temporal relation

The modeling method of 9 types of temporal relation (during; contains; overlaps; overlapped-by; start; started-by; finished; finished-by; equals) is the one-dimensional form of the topological relation modeling method. shows an example of the modeling process for those temporal relation ().

Table 3. Example of the modeling process for 9 types of temporal relation.

The other 4 types of temporal relations (meets; met-by; before; after) are modeled in the same way as the directional and distant relations. During modeling, the convolved object was obtained from the reference temporal object, and the relation operator was generated according to the one-dimensional time span. Let be the number of voxels corresponding to the time span at the voxel time resolution, with the sign of

being negative in the direction of time progression. For each value pair

generated, a relational operator

with

rows and 1 column was obtained as shown in equation (4). The relational operator of the temporal relation was obtained by normalizing the sum of

relational operators. The relation operator construction for the temporal relation ‘after’ is shown in .

(4)

The modeling process of the temporal relation ‘after’ is shown in .

3. Voxelized information association

Voxelized information will naturally aggregate in voxels. The voxelized information association is essential for event association and spatiotemporal association. The voxelized information association is based on the ST-Voxel union method shown in equation (5) and the ST-Voxel intersection method shown in equation (3).

(5)

where,

,

, and

are spatial coding, temporal coding, and membership degree, respectively;

and

are two ubiquitous spatiotemporal information modeling results; and

is the union operator. Owing to the boundedness of ST-Voxels, the intersection and union of a large number of spatiotemporal information modeling results were also bounded, which resolved the problem associated with infinitely increasing spatiotemporal information with an increasing number of objects.

The ST-Voxel union obtained spatiotemporal hot spots through the aggregation of the modeling results. The ST-Voxel intersection was used to extract ubiquitous texts associated with particular voxels. On the one hand, associating the information about a discovered event makes it possible to analyze the event’s features. On the other hand, associating the spatiotemporal information in a region helps to find and predict events.
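The union and intersection of two modeling results can be sketched on dictionaries keyed by (spatial code, temporal code). How the membership degrees are combined is an assumption here: the union adds the degrees of shared voxels so that repeated descriptions accumulate, and the intersection multiplies them. The function names and the sample codes are hypothetical.

```python
def voxel_union(a, b):
    """Keep every voxel of either result; add memberships where both contain it."""
    merged = dict(a)
    for key, mu in b.items():
        merged[key] = merged.get(key, 0.0) + mu
    return merged


def voxel_intersection(a, b):
    """Keep only voxels present in both results; multiply their memberships."""
    return {key: a[key] * b[key] for key in a.keys() & b.keys()}


# Keys are (spatial code, temporal code); values are membership degrees.
first = {(1, 10): 0.5, (2, 10): 1.0}
second = {(2, 10): 0.5, (3, 10): 0.25}

union = voxel_union(first, second)
shared = voxel_intersection(first, second)
most_likely = max(union, key=union.get)
print(most_likely)  # (2, 10)
```

Because both operations work on a finite key space, the size of the result is bounded by the number of voxels and not by the number of texts.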

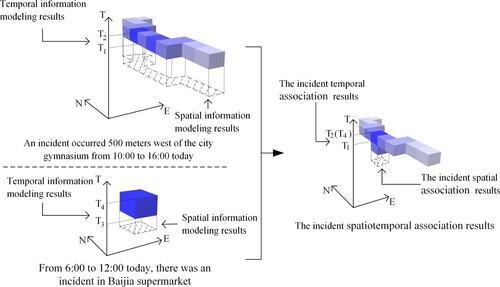

3.1. Event association

When an event is discovered, a large amount of social media text related to the event is obtained using the event name. The association result for the event’s spatiotemporal information is obtained from the union of the modeling results of the spatiotemporal information in the text. The spatiotemporal range corresponding to the ST-Voxel with the largest membership is the location where the event is most likely to have occurred. shows the process of associating the spatiotemporal semantics of the same event described in two sentences. First, the spatiotemporal information in the two sentences is modeled separately to obtain the described positions. Then the union of the two modeling results is taken, and the attributes of the voxels determine the spatiotemporal location of the event. The greater the membership of a voxel, the darker its color, indicating a greater probability that the event happened in that spatiotemporal location.

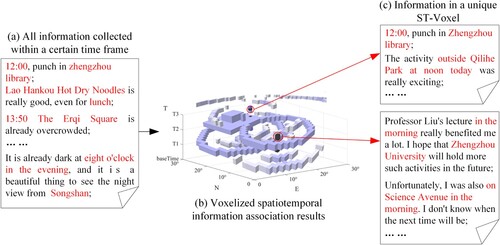

3.2. Spatiotemporal association

When an event occurs or is about to occur but has not been discovered, it is impossible to retrieve relevant information based on the event name. As the spatiotemporal properties of events are unique, the spatiotemporal descriptions of events made by event-related individuals naturally form aggregates in ST-Voxels. Therefore, if the spatiotemporal information in all texts in the time range ((a)) is modeled and incorporated into the voxelized spatiotemporal space ((b)), the potential events and spatiotemporally related texts can be identified as the ST-Voxels with comparatively higher membership ((c)). For example, in the box above (c), the time described in all statements is noon, and the spatial location is near Zhengzhou Library. Then, an event can be confirmed and further recognized using other information (such as attributes and relationships) of the related text in space and time.
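The discovery of potential events from accumulated voxels can be sketched as a simple threshold on the summed membership, keeping track of which texts contributed to each voxel. The threshold value, the use of a plain sum, and the string keys standing in for spatial and temporal codes are assumptions made for illustration.

```python
from collections import defaultdict


def find_hotspots(modeled_texts, threshold):
    """Return voxels whose summed membership reaches the threshold, with their texts."""
    totals = defaultdict(float)
    sources = defaultdict(set)
    for text_id, voxels in modeled_texts.items():
        for key, mu in voxels.items():
            totals[key] += mu
            sources[key].add(text_id)
    return {key: (totals[key], sorted(sources[key]))
            for key in totals if totals[key] >= threshold}


# Hypothetical modeling results for three texts; keys stand in for voxel codes.
modeled = {
    "t1": {("library", "noon"): 1.0, ("park", "noon"): 0.5},
    "t2": {("library", "noon"): 0.75},
    "t3": {("library", "noon"): 0.75, ("mall", "evening"): 1.0},
}
hotspots = find_hotspots(modeled, threshold=2.0)
print(hotspots)
```

The texts listed for each hot-spot voxel are the spatiotemporally related texts that can then be inspected to confirm and characterize the event.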

4. Experiments and discussions

4.1. Experimental setups

The following three experiments were carried out to validate our proposed method.

Spatiotemporal semantic modeling feasibility experiment: Voxelized modeling of various forms of spatiotemporal information. The modeling results were used to verify the feasibility of the modeling method.

Voxelized information association experiment: By modeling a large amount of spatiotemporal information in a region, this experiment verified that the voxelized information association method can be used to discover unknown events.

Modeling parameter comparison experiment: The convolution parameter that affects uncertainty during modeling is usually related to the distant relation. For example, in the text ‘Restaurant 100 meters east of the supermarket’, when the convolution parameter is set to 1/3, the modeling result range is about [60 m,140 m]; when the convolution parameter is set to 1, the modeling result range is about [0 m,300 m]. In this experiment, 6 convolution parameters with an interval of 1/6 were selected for comparison experiments: 1/6, 1/3, 1/2, 2/3, 5/6, and 1. The influence of different convolution parameters on the modeling and association process is explained by comparing the spatiotemporal information modeling with the association results. And a parameter selection method that balanced the capability of spatiotemporal event discovery and the accuracy of discovery was presented.

4.2. Data and the environment

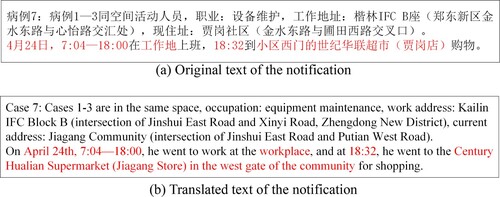

The source of the experimental data was the ‘Notice on 5 Newly Confirmed COVID-19 Cases and 10 Newly Asymptomatic Infections in Zhengzhou City’ series issued by Zhengzhou, Henan, China, from 1 to 10 May 2022, covering 189 COVID-19 cases (Announcement Zhengzhou 2022b). The notifications describe the spatiotemporal trajectory of each patient in the form ‘something was done at a certain time and place’. For example, ‘On April 28th, 8:00–18:30 in the workplace, go shopping at Xinsheng Fruit Hypermarket at 18:41’. The spatiotemporal information in these data has distinct characteristics and includes various forms, such as place names, spatial relation, time points, time periods, and temporal relation. An example of the original (Figure 20(a)) and the translated (Figure 20(b)) text of a notification is shown in Figure 20. The annotated parts are the text containing spatiotemporal information.

Figure 20. Notification example. (a) Original text of the notification; (b) Translated text of the notification.

In this study, the spatial information in the text was extracted using the GitHub open-source library pyltp (HuangFJ 2020), and the latitudes and longitudes corresponding to the place names were obtained using the AutoNavi Map API service (AMAP 2022). The temporal information in the text was extracted using the GitHub open-source library pyunit-time (jtyoui 2021).

Because the location data in the notifications were essentially store-level POI data and the time data were generally time points or time periods accurate to the minute, ST-Voxels with a spatial resolution of 82 m × 94 m and a temporal resolution of 30 min were used to model the spatiotemporal information. In the city-level application scenario, the spatial resolution was ignored to eliminate the orientation deviation caused by size differences, and the temporal resolution was suitable for the voxelization of temporal information on a time scale of several days. At the same time, the voxel resolution was in line with the approximate spread range of the infectious disease, which made it a reasonable choice.

4.3. Experimental results

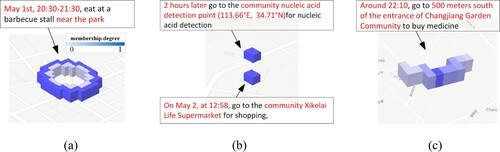

The modeling results of the typical spatiotemporal semantics of 4 sentences are shown in Figure 21. The darker the color of a voxel in the figure, the greater its membership degree.

Figure 21. Visualization of spatiotemporal information modeling. (a) Distant relation and time period. (b) Place name and time point; coordinate and temporal relation ‘after’. (c) Directional relation, distant relation and fuzzy time.

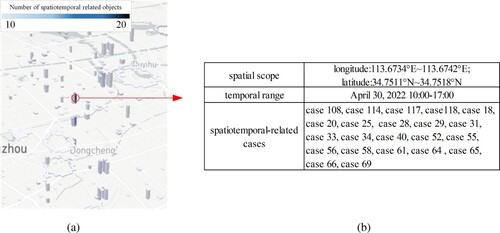

Figure 22(a) shows the ST-Voxel visualization results for voxels with more than 5 overlapping cases in the event discovery experiment. The color of the voxels represents the number of coincident cases: the darker the color, the greater the number. Because the spatiotemporal association among the patients was limited, the voxel distribution was relatively discrete. Figure 22(b) lists the spatial scope, temporal scope, and spatiotemporally related cases of the voxel with the highest attribute value.

Figure 22. Visualization and statistical results of voxelized information association. (a) ST-Voxel visualization results; (b) Coincidence relative information.

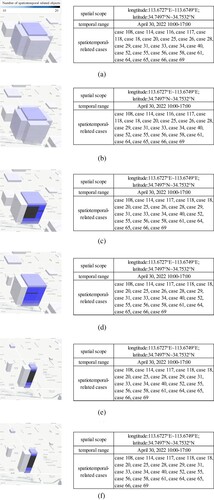

Figure 23 visualizes the comparative results of different modeling parameters. The left side shows all the spatiotemporal voxels in this area. The right side shows the spatiotemporal range of the central spatiotemporal voxel and the spatiotemporally related cases. As the convolution parameters decrease (a–f), all voxels shrink, and the related cases increase.

4.4. Discussions

4.4.1. Feasibility experiment of spatiotemporal semantic modeling

The proposed voxel modeling method can model the 5 types of spatiotemporal objects and 16 forms of spatiotemporal relation contained in the original data. The modeling results of the topological relation ‘near the park’ and the time period ‘May 1st 20:30–21:30’ in ‘Eating at a barbecue stall near the park from 20:30–21:30 on May 1st’ are multiple ST-Voxels (Figure 21(a)); the modeling results of the place name ‘community Xikelai Life Supermarket’ and the time point ‘May 2nd, 12:58’ in ‘May 2nd, 12:58 to go shopping at the community Xikelai Life Supermarket’ are a single ST-Voxel (Figure 21(b)); the modeling results of the coordinates (113.66°E, 34.71°N) and the temporal relation ‘after 2 hours’ in ‘After 2 hours, go to the cell nucleic acid detection point (113.66°E, 34.71°N) for nucleic acid detection’ are a single ST-Voxel (Figure 21(b)); the modeling results of the directional relation ‘south’, distant relation ‘500 m’, and fuzzy time ‘around 22:10’ in ‘Around 22:10, go to 500 meters south of the entrance of Changjiang Garden Community to buy medicine’ are multiple ST-Voxels (Figure 21(c)).

4.4.2. Spatiotemporal information association experiment

This experiment found a spatiotemporal gathering area of 22 cases: ‘10:00–17:00 Qiantang Yicheng, April 30, 2022’. Manual screening and verification confirmed that the spatiotemporal trajectories of all of these patients overlapped in a certain ST-Voxel, while the trajectories of other patients did not overlap there. This verified the feasibility of voxelized information association for discovering the unknown event of an infection chain. The statistical results of the epidemic situation at this geographic location showed no further aggregated cases after 19:00 on 2 May 2022, from which it was inferred that the place had been designated as a separate management area. A manual review of the relevant information determined that the area was zoned on May 3 to control the spread of the epidemic (Announcement Zhengzhou 2022a). This is consistent with the conclusion obtained from the ST-Voxels and verifies the feasibility of discovering the unknown event of control measures through the aggregation of spatiotemporal information in the voxels.

4.4.3. Comparative experiment of modeling parameters

When 1 and 5/6 (Figure 23(a,b)) are used as convolution parameters, the modeling results for fuzzy spatiotemporal information have the largest range, and spatiotemporal information is most likely to form aggregations in ST-Voxels. Although more spatiotemporal hot spots can be found, the spatiotemporal range of the hot spots is also the largest. Irrelevant spatiotemporal information is aggregated as well, which lowers the credibility of the hot spots. These parameters are suitable for modeling spatiotemporal data with poor reliability.

When 2/3 and 1/2 (Figure 23(c,d)) are used as convolution parameters, the range of the modeling results for fuzzy spatiotemporal information is smaller. Although fewer spatiotemporal hot spots are discovered, their range is smaller and their credibility is higher.

When 1/3 and 1/6 (Figure 23(e,f)) are used as convolution parameters, the range of the modeling results for fuzzy spatiotemporal information is the smallest. It is more difficult for spatiotemporal information to aggregate in ST-Voxels, but the spatiotemporal range of the hot spots is the most accurate and their reliability is the highest. These parameters are suitable for modeling high-reliability spatiotemporal data.

Therefore, for data with reliability similar to that of the data used in this study, such as official reports, 1/3 can be used as the convolution parameter for the voxel modeling of spatiotemporal information and spatiotemporal association. If the data are less credible than the data used in this study, such as social media data, convolution parameters of 2/3, 1, or greater can be used to facilitate the spatiotemporal association of information.

Different events can be discovered using spatiotemporal data from different sources. For example, for the epidemic notification data used in this experiment, the spatiotemporal intersections of the cases were identified according to the spatiotemporal aggregation. Discovering possible transmission chains in this way provides a temporal and spatial basis for tracing the origin of the epidemic and delineating epidemic prevention and control areas. For spatiotemporal data in communication texts, users' areas of interest and activity trajectories can be identified based on the spatiotemporal aggregation. Combining these data with other attributes of the corresponding locations can then provide a reference for user identification, personalized recommendations, etc.

5. Conclusions and outlook

This study expands the existing ST-Voxel concept and designs a voxelization modeling method for spatiotemporal information in natural language texts. The modeling of 5 types of spatiotemporal objects and 16 forms of relation is presented separately. In particular, a set of modeling ideas similar to convolution operations is proposed for spatiotemporal relation in voxelized space–time. Unknown event discovery based on voxelized information association is realized. Finally, experiments are designed to verify the feasibility of the modeling method and its application. The influence and selection principle of the model parameters are also analyzed. Motivated by the encouraging application results, we will further extend 3D space–time to 4D space–time to process 3D spatial data and apply the information to spatiotemporal aggregation in different scenarios.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Adams, B. 2017. “Wāhi, a Discrete Global Grid Gazetteer Built Using Linked Open Data.” International Journal of Digital Earth 10: 490–503. doi:10.1080/17538947.2016.1229819.

- Allen, J. F. 1983. “Maintaining Knowledge about Temporal Intervals.” Communications of the ACM 26 (11): 832–843. doi:10.1145/182.358434.

- AMAP. 2022. Main Page. Retrieved from https://lbs.amap.com/.

- Announcement Zhengzhou. 2022a. Circular of Zhengzhou COVID-19 Prevention and Control Headquarters Office on Implementing Classified Management of Some Areas. Administration Committee of Zhengzhou Hi-tech Industrial Development Zone. http://www.zzgx.gov.cn/fkzl/6411727.jhtml.

- Announcement Zhengzhou. 2022b. COVID-19 Prevention and Control Column. Administration Committee of Zhengzhou Hi-tech Industrial Development Zone. http://www.zzgx.gov.cn/fkzl/index.jhtml.

- Ansari, M., A. Prakash, and S. Mainuddin. 2018. “Application of Spatiotemporal Fuzzy C-Means Clustering for Crime Spot Detection.” Defence Science Journal 68: 374–380. doi:10.14429/dsj.68.12518.

- Baeza-Yates, R., and B. Ribeiro-Neto. 1999. Modern Information Retrieval. New York: ACM Press.

- Bakillah, M., R. Y. Li, and S. H. Liang. 2015. “Geo-Located Community Detection in Twitter with Enhanced Fast-Greedy Optimization of Modularity: The Case Study of Typhoon Haiyan.” International Journal of Geographical Information Science 29: 258–279. doi:10.1080/13658816.2014.964247.

- Cadorel, L., A. Blanchi, and A. G. Tettamanzi. 2021. “Geospatial Knowledge in Housing Advertisements: Capturing and Extracting Spatial Information from Text.” Paper presented at the Proceedings of the 11th on Knowledge Capture Conference.

- Campos, R., G. Dias, A. M. Jorge, and A. Jatowt. 2015. “Survey of Temporal Information Retrieval and Related Applications.” ACM Computing Surveys 47: 1–41. doi:10.1145/2619088.

- Castleman, K. R. 1996. Digital Image Processing. Upper Saddle River, NJ: Prentice Hall Press.

- Chen, B. Y., Y.-b. Luo, T. Jia, H.-P. Chen, X.-Y. Chen, J. Gong, and Q. Li. 2023. “A Spatiotemporal Data Model and an Index Structure for Computational Time Geography.” International Journal of Geographical Information Science 37: 550–583. doi:10.1080/13658816.2022.2128192.

- Deng, M., Z. Li, and J. Wu. 2013. Spatial Relationship Theory and Methods. Beijing: China Science Publishing & Media.

- Devès, M. H., M. Le Texier, H. Pécout, and C. Grasland. 2019. “Seismic Risk: The Biases of Earthquake Media Coverage.” Geoscience Communication 2: 125–141. doi:10.5194/gc-2-125-2019.

- Hartmann, A., G. Meinel, R. Hecht, and M. Behnisch. 2016. “A Workflow for Automatic Quantification of Structure and Dynamic of the German Building Stock Using Official Spatial Data.” ISPRS International Journal of Geo-Information 5: 142. doi:10.3390/ijgi5080142.

- Hu, X., H. S. Al-Olimat, J. Kersten, M. Wiegmann, F. Klan, Y. Sun, and H. Fan. 2022. “GazPNE: Annotation-Free Deep Learning for Place Name Extraction from Microblogs Leveraging Gazetteer and Synthetic Data by Rules.” International Journal of Geographical Information Science 36: 310–337. doi:10.1080/13658816.2021.1947507.

- HuangFJ. 2020. pyltp, GitHub Repository. GitHub. https://github.com/HIT-SCIR/pyltp.git.

- jtyoui. 2021. pyunit-time, GitHub Repository. GitHub. https://github.com/PyUnit/pyunit-time.git.

- Kim, P., and S. H. Myaeng. 2004. “Usefulness of Temporal Information Automatically Extracted from News Articles for Topic Tracking.” ACM Transactions on Asian Language Information Processing 3 (4): 227–242. doi:10.1145/1039621.1039624.

- Kuflik, T., E. Minkov, S. Nocera, S. Grant-Muller, A. Gal-Tzur, and I. Shoor. 2017. “Automating a Framework to Extract and Analyse Transport Related Social Media Content: The Potential and the Challenges.” Transportation Research Part C: Emerging Technologies 77: 275–291. doi:10.1016/j.trc.2017.02.003.

- Lei, Y., X. Tong, Y. Zhang, C. Qiu, X. Wu, G. Lai, H. Li, C. Guo, and Y. Zhang. 2020. “Global Multi-Scale Grid Integer Coding and Spatial Indexing: A Novel Approach for Big Earth Observation Data.” ISPRS Journal of Photogrammetry and Remote Sensing 163: 202–213. doi:10.1016/j.isprsjprs.2020.03.010.

- Li, D., D. Cheung, X. Shi, and V. Ng. 1998. “Uncertainty Reasoning Based on Cloud Models in Controllers.” Computers & Mathematics with Applications 35: 99–123. doi:10.1016/S0898-1221(97)00282-4.

- Li, J., C. Wu, C.-A. Xia, P. J.-F. Yeh, B. Chen, W. Lv, and B. X. Hu. 2022. “A Voxel-Based Three-Dimensional Framework for Flash Drought Identification in Space and Time.” Journal of Hydrology 608: 127568. doi:10.1016/j.jhydrol.2022.127568.

- Ma, X., and E. Hovy. 2016. “End-to-End Sequence Labeling via Bi-Directional LSTM-CNNS-CRF.” arXiv preprint arXiv:1603.01354. doi:10.48550/arXiv.1603.01354.

- Monteiro, B. R., C. A. Davis Jr., and F. Fonseca. 2016. “A Survey on the Geographic Scope of Textual Documents.” Computers & Geosciences 96: 23–34. doi:10.1016/j.cageo.2016.07.017.

- Odawara, S., Y. Gao, K. Muramatsu, T. Okitsu, and D. Matsuhashi. 2011. “Magnetic Field Analysis Using Voxel Modeling With Nonconforming Technique.” IEEE Transactions on Magnetics 47: 1442–1445. doi:10.1109/TMAG.2010.2095832.

- Peterson, P. 2011. Close-Packed Uniformly Adjacent, Multiresolutional Overlapping Spatial Data Ordering. Edited by Office, USPT. Washington, DC: Google Patents, U.S.

- Peuquet, D., and Z. Ci-Xiang. 1987. “An Algorithm to Determine the Directional Relationship Between Arbitrarily-Shaped Polygons in the Plane.” Pattern Recognition 20: 65–74. doi:10.1016/0031-3203(87)90018-5.

- Poux, F., and R. Billen. 2019. “Voxel-Based 3D Point Cloud Semantic Segmentation: Unsupervised Geometric and Relationship Featuring vs Deep Learning Methods.” ISPRS International Journal of Geo-Information 8: 213. doi:10.3390/ijgi8050213.

- Qiu, P., L. Yu, J. Gao, and F. Lu. 2019. “Detecting Geo-Relation Phrases from Web Texts for Triplet Extraction of Geographic Knowledge: A Context-Enhanced Method.” Big Earth Data 3: 297–314. doi:10.1080/20964471.2019.1657719.

- Sahr, K. 2019. “Central Place Indexing: Hierarchical Linear Indexing Systems for Mixed-Aperture Hexagonal Discrete Global Grid Systems.” Cartographica: The International Journal for Geographic Information and Geovisualization 54: 16–29. doi:10.3138/cart.54.1.2018-0022.

- Sakaki, T., M. Okazaki, and Y. Matsuo. 2010. “Earthquake Shakes Twitter Users: Real-Time Event Detection by Social Sensors.” Proceedings of the 19th International Conference on World Wide Web, 851–860.

- Smith, A. K., and S. Dragićević. 2022. “Map Comparison Methods for Three-Dimensional Space and Time Voxel Data.” Geographical Analysis 54: 149–172. doi:10.1111/gean.12279.

- Stilla, U., and Y. Xu. 2023. “Change Detection of Urban Objects Using 3D Point Clouds: A Review.” ISPRS Journal of Photogrammetry and Remote Sensing 197: 228–255. doi:10.1016/j.isprsjprs.2023.01.010.

- Stock, K., C. B. Jones, S. Russell, M. Radke, P. Das, and N. Aflaki. 2022. “Detecting Geospatial Location Descriptions in Natural Language Text.” International Journal of Geographical Information Science 36: 547–584. doi:10.1080/13658816.2021.1987441.

- Tissot, H., M. Fabro, L. Derczynski, and A. Roberts. 2019. “Normalisation of Imprecise Temporal Expressions Extracted from Text.” Knowledge and Information Systems 61: 1361–1394. doi:10.1007/s10115-019-01338-1.

- Tong, X., C. Cheng, R. Wang, L. Ding, Y. Zhang, G. Lai, L. Wang, and B. Chen. 2019. “An Efficient Integer Coding Index Algorithm for Multi-Scale Time Information Management.” Data & Knowledge Engineering 119: 123–138. doi:10.1016/j.datak.2019.01.003.

- Wang, S., J. Cao, and P. Yu. 2022. “Deep Learning for Spatio-Temporal Data Mining: A Survey.” IEEE Transactions on Knowledge and Data Engineering 34: 3681–3700. doi:10.1109/TKDE.2020.3025580.

- Wang, Y., H. Fan, R. Chen, H. Li, L. Wang, K. Zhao, and W. Du. 2018. “Positioning Locality Using Cognitive Directions Based on Indoor Landmark Reference System.” Sensors 18: 1049. doi:10.3390/s18041049.

- Wang, Z., Z. Wu, H. Qu, and X. Wang. 2018. “Boolean Matrix Operators for Computing Binary Topological Relations Between Complex Regions.” International Journal of Geographical Information Science 33: 99–133. doi:10.1080/13658816.2018.1527917.

- Wiki. 2022. “Main Page.” In OpenStreetMap Wiki. https://wiki.openstreetmap.org/w/index.php?title=Main_Page&oldid=2013332.

- Wu, X., X. Tong, Y. Lei, H. Li, C. Guo, Y. Zhang, G. Lai, and S. Zhou. 2021. “Rapid Computation of Set Boundaries of Multi-Scale Grids and Its Application in Coverage Analysis of Remote Sensing Images.” Computers & Geosciences 146: 104573. doi:10.1016/j.cageo.2020.104573.

- Xu, Y., X. Tong, and U. Stilla. 2021. “Voxel-Based Representation of 3D Point Clouds: Methods, Applications, and Its Potential Use in the Construction Industry.” Automation in Construction 126: 103675. doi:10.1016/j.autcon.2021.103675.

- Yang, C., K. Clarke, S. Shekhar, and C. V. Tao. 2020. “Big Spatiotemporal Data Analytics: A Research and Innovation Frontier.” International Journal of Geographical Information Science 34 (6): 1075–1088. doi:10.1080/13658816.2019.1698743.

- Zhou, X., C. Xu, and B. Kimmons. 2015. “Detecting Tourism Destinations Using Scalable Geospatial Analysis Based on Cloud Computing Platform.” Computers, Environment and Urban Systems 54: 144–153. doi:10.1016/j.compenvurbsys.2015.07.006.