ABSTRACT

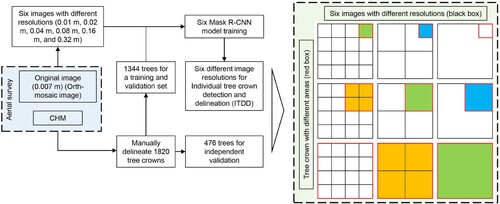

Individual tree detection and delineation (ITDD) is an important subject in forestry and urban forestry. This study is the first to propose the concept of crown resolution to comprehensively evaluate the co-effect of image resolution and crown size on deep learning. Six images with different resolutions were derived from a DJI Unmanned Aerial Vehicle (UAV), and 1344 manually delineated Chinese fir (Cunninghamia lanceolata (Lamb.) Hook.) tree crowns were used to train and validate six mask region-based convolutional neural network (Mask R-CNN) models, while an additional 476 delineated tree crowns were reserved for testing. The overall detection accuracy and the influence of crown size and crown resolution were calculated to evaluate model performance at the different image resolutions for ITDD. Results show that the highest accuracy was achieved when the crown resolution was between 800 and 12800 pixels/tree. The accuracy of ITDD was affected by crown resolution, and the model was unable to effectively identify Chinese fir when the crown resolution was less than 25 pixels/tree or higher than 12800 pixels/tree. The study highlights crown resolution as a critical factor affecting ITDD and suggests selecting the image resolution according to the crown size of the target trees.

1. Introduction

Monitoring individual trees is vital to understanding forest growth and dynamics (Qin et al. 2022; Weinstein et al. 2019). Deep learning approaches and remote sensing techniques have made meaningful progress in the last decade (Goodbody, Coops, and White 2019; Guimarães et al. 2020; Kattenborn et al. 2021). Notably, deep learning approaches combined with unmanned aerial vehicle (UAV) data have been successfully used for individual tree detection and delineation (ITDD) (Chiang et al. 2020; Li et al. 2022a; Sun et al. 2022). UAV images with high spatial resolution allow individual trees to be identified using deep learning (Saltiel et al. 2022; Schiefer et al. 2020). However, acquiring UAV imagery at a resolution higher than required for analysis can result in smaller coverage areas and increased storage and computation requirements (Kattenborn et al. 2021; Swayze et al. 2022). Therefore, it is important to investigate how image resolution affects the ability of convolutional neural networks (CNNs) to provide accurate individual tree detection.

Many studies have focused on the performance of deep learning models with different networks for detecting individual trees. For example, the Mask region-based CNN (Mask R-CNN) developed by He et al. (2017) was used in tropical forests (Braga et al. 2020), plantations (Iqbal et al. 2021; Safonova et al. 2021; Tang, Li, and Wang 2020), cities (Ocer et al. 2020; Sun et al. 2022; Zhang, Lin, and Wang 2022), urban parks (Yang et al. 2022), and drylands (Guirado et al. 2021) to detect and delineate individual trees. Mo et al. (2021) applied the YOLACT model developed by Bolya et al. (2022) to delineate Litchi canopies. Ferreira et al. (2021) and Veras et al. (2022) applied DeepLabv3+, developed by Chen et al. (2018), to identify three palm species (Ferreira et al. 2020), Brazil nut trees (Bertholletia excelsa) (Ferreira et al. 2021), and multiple tree species (Veras et al. 2022) in the Amazon. Related deep learning models, such as the DeepLabv3+-based Multi-Task Encoder-Decoder (MT-EDv3) network, have also been used for individual tree-crown detection (La Rosa et al. 2021; Lassalle et al. 2022). Gan, Wang, and Iio (2023) used DeepForest and Detectree2 to detect and delineate tree crowns in a temperate deciduous forest. However, few studies have reported the influence of image resolution on model performance for ITDD.

Several researchers have highlighted the importance of image resolution for the accuracy of vegetation detection with deep learning, for example in object detection of conifer seedlings (Fromm et al. 2019), semantic segmentation of tree species (Egli and Höpke 2020; Saltiel et al. 2022; Schiefer et al. 2020), and CNN-based identification of target plant species and plant communities (Kattenborn et al. 2020). Most studies have demonstrated that higher spatial resolution improves the accuracy of CNN models. For example, comparing image resolutions of 0.3, 1.5, 2.7, and 6.3 cm, Fromm et al. (2019) reported that an excellent average precision (81%) was achieved for conifer seedlings using a Faster R-CNN with the image resolution of 0.3 cm. Schiefer et al. (2020) employed a U-Net with image resolutions of 2, 4, 8, 16, and 32 cm for tree species mapping and concluded that coarser spatial resolution could decrease the accuracy of the mapping results. Several studies have found that the accuracy of the CNN model improved little beyond a certain spatial resolution level. For example, the average precision improved only from 78% to 81% when the image resolution was refined from 1.5 cm to 0.3 cm (Fromm et al. 2019). Gan, Wang, and Iio (2023) showed that ITDD using Detectree2 achieved 0.67–0.71 precision, 0.39–0.45 recall, and 0.49–0.55 F1 score across resolutions from 0.007 m to 0.1 m. Interestingly, some studies have demonstrated that very high resolutions can reduce the accuracy of CNN models. Comparing the results of ITDD using DeepForest at resolutions from 0.007 m to 0.1 m, Gan, Wang, and Iio (2023) achieved the optimal accuracy at a resolution of 0.02 m. When applying wood filtering as part of terrestrial laser scanning analysis, Xi et al. (2020) found that the mIoU decreased considerably as the voxel size was reduced from 0.04 m to 0.01 m. In an agricultural application, Madec et al. (2019) applied Faster R-CNN to images with resolutions of 0.13, 0.26, 0.39, 0.52, 0.78, and 1.04 mm to detect wheat ear density and reported that higher accuracy was achieved using the image resolution of 0.26 mm rather than 0.13 mm. These studies show that image resolution can influence the detection of vegetation by CNN models; however, there is no consistent conclusion to help identify the optimal image resolution for CNN models.

When using classical remote sensing techniques for ITDD, tree-crown size is a crucial factor to consider in terms of the required spatial resolution for detecting individual trees. Previous studies found that the ratio of crown diameter to resolution is the most important factor influencing the accuracy of tree crown detection using classical remote sensing techniques (e.g. the marker-controlled watershed segmentation algorithm and the seeded region growing algorithm). For example, Yin and Wang (2019) found that image resolution should be more than one-fourth of the crown diameter. Comparing ratios of crown diameter to resolution (17:1, 8:1, 6:1, and 3:1), Pouliot et al. (2002) proposed that the optimal ratio of crown diameter to resolution was less than 3:1 and more than 6:1 for tree crown detection. Therefore, based on the tree crown detection results of these algorithms, it is reasonable to infer that the same image resolution assumptions apply to tree crown detection using deep learning approaches.

Here, we hypothesized that, when deep learning approaches are used for ITDD, the optimal image resolution is related to tree-crown size. We postulated that the number of pixels in the crown area is the key factor influencing ITDD accuracy and that a tree crown with too many or too few pixels will reduce detection accuracy. This study aimed to explore the co-effect of image resolution and crown size on deep learning for ITDD. To quantify the relationship between image resolution and tree-crown size, crown resolution is defined in this study as the ratio of each tree-crown area to the area of a pixel, that is, the number of pixels within the crown (pixels/tree). This study (1) assessed the accuracy of deep learning models trained at various image resolutions for detecting crowns of different sizes and (2) tested the influence of crown resolution on tree crown detection using deep learning to identify the optimal ratio of crown size to pixel resolution.

2. Materials and methods

2.1. Study site

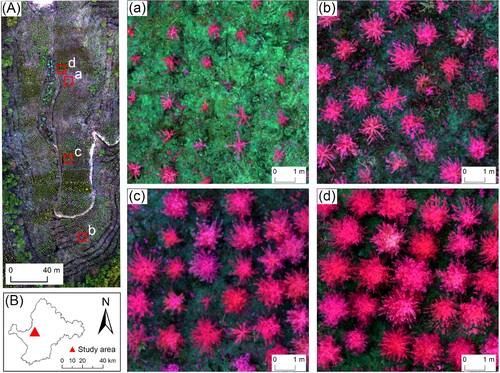

The study area, a Chinese fir plantation forest, is located in Shunchang County, Fujian, China (26.922623°N, 117.756697°E; Figure 1). It covers 4.0 ha, with Chinese fir trees planted at a 2.0 m horizontal distance and 1.5 m base-summit spacing. The site is in a mountain valley with an average slope of 27.8 degrees, at around 174 m to 226 m above sea level. A mid-subtropical marine monsoon climate dominates the site (mean annual precipitation = 1756 mm, mean annual temperature = 18.5 °C). The Chinese fir trees were planted in 2018 in groups of 5–7 columns, with each group separated by a single row of broad-leaved trees running from the valley to the hilltop. Some Chinese fir died and were replanted in January 2019, and weeds were removed from the site in December 2019. Because different types of Chinese fir were planted, the crown sizes of the study trees vary widely.

Figure 1. Location of the study site within Shunchang County, Fujian, China, and UAV image: (A) UAV image with a spatial resolution of 0.02 m, shown with the composition of red, green, and blue bands; (B) Location of the study site in Shunchang County; (a), (b), (c) and (d) show a high-resolution overview of different Chinese fir types.

2.2. Data collection

2.2.1. Image acquisition and preprocessing

The UAV data were acquired in December 2019, after weed removal, using a Phantom4-Multispectral (https://www.dji.com/p4-multispectral). It has an integrated camera with one RGB sensor and five multi-spectral sensors (blue, green, red, red edge, and near-infrared bands). To reduce the impact of tree shadows on the UAV imagery, the flight was conducted on a diffuse light day (Kattenborn, Eichel, and Fassnacht 2019). During the flight, a forward overlap of 85%, a side overlap of 80%, and an altitude of 30 m above ground were maintained. Moreover, to ensure high-precision acquisition of UAV waypoint positions, the UAV's real-time kinematic (RTK) positioning and navigation were aligned using the D-RTK 2 mobile ground GPS station. This resulted in an XY precision of 2 cm and a Z precision of 3 cm. Finally, ortho-mosaics with a 0.76 × 0.76 cm spatial resolution (including blue, green, red, red edge, and near-infrared bands) and a digital surface model (DSM) with a 1.47 × 1.47 cm spatial resolution were generated using DJI Terra software. In addition, a canopy height model (CHM) was created by subtracting the digital terrain model from the DSM (see Hao et al. 2021a for details).

2.2.2. Individual tree crown dataset

Delineating tree crowns accurately and automatically with remote sensing-based software or techniques in order to train a Mask R-CNN model is challenging (Wu et al. 2020a). Therefore, crowns of Chinese fir were manually outlined using ArcGIS Pro 2.6 software (ESRI, Redlands, CA, USA) based on RGB-band mosaics and the CHM (Mohan et al. 2017; Tu et al. 2019). In total, 1820 tree crowns were outlined in the study area. Among them, 1344 tree crowns were randomly selected as the set for model training and validation. The remaining 476 tree crowns were used as a test set to evaluate model performance. Tree crown areas ranged from 0.02 m² to 4.21 m², with an average of 1.01 m² (Table 1).

Table 1. Statistics of tree crown area.

2.3. Training and application of Mask R-CNN model

We evaluated the performance of six different Mask R-CNN models with various image resolutions for ITDD (Figure 2).

Figure 2. Flowchart for this study. The light blue color in the box represents the products derived from UAV imagery; the light green color in the box represents the influence of image resolution, tree-crown size, and crown resolution on individual tree crown detection and delineation (ITDD). The combination of image resolution and tree-crown size in the green, orange, and blue color have the same crown resolution, respectively.

First, six UAV images with different spatial resolutions were acquired.

Second, the same number and location of tree crowns were selected as the labels, and six UAV images were used to convert the labels for the deep-learning training dataset.

Next, the corresponding model was trained based on the six training datasets.

Finally, the performance of six different models was evaluated using image resolution, tree-crown size, and crown resolution metrics.

2.3.1. Image resolution

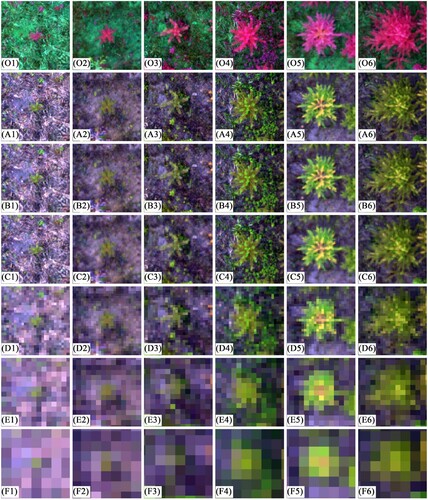

To examine the effect of image resolution on the tree-crown detection performance of Mask R-CNN, the original image of 0.007 m was resampled to generate imagery with 0.01, 0.02, 0.04, 0.08, 0.16, and 0.32 m resolutions (Figure 3). Spatial averaging was performed before image resampling to generate the corresponding spatial resolution. Comparing image resolution with the tree dataset, 58 Chinese fir trees in the study area are smaller than one pixel at a 0.32 m resolution (crown area less than 0.10 m²), and 337 Chinese fir trees are smaller than one pixel at a 0.64 m resolution. Therefore, 0.32 m was chosen as the coarsest resolution to analyze the influence of spatial resolution on the Mask R-CNN performance.
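The spatial averaging step can be illustrated with a minimal sketch. It assumes an integer downsampling factor (for example, 0.02 m to 0.04 m is a factor of 2) and a single band stored as a nested list; the function name `block_average` and the sample values are hypothetical, and the exact resampling procedure used in the study is not specified beyond the description above.

```python
def block_average(image, factor):
    """Downsample a 2-D grid by averaging non-overlapping factor x factor blocks.

    Illustrative only: assumes the image height and width are multiples of
    `factor`; this is not necessarily the resampling tool used in the study.
    """
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of factor")
    coarse = []
    for r in range(0, rows, factor):
        coarse_row = []
        for c in range(0, cols, factor):
            # Collect every fine pixel that falls inside the coarse pixel.
            block = [image[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


# Hypothetical 4 x 4 band, halved in resolution (e.g. 0.02 m -> 0.04 m).
band = [[10, 20, 30, 40],
        [30, 40, 50, 60],
        [0, 0, 8, 8],
        [0, 4, 8, 8]]
coarse_band = block_average(band, 2)
print(coarse_band)
```

Each coarse pixel is the mean of the fine pixels it covers, which is why small crowns gradually blend into the background as the resolution coarsens.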

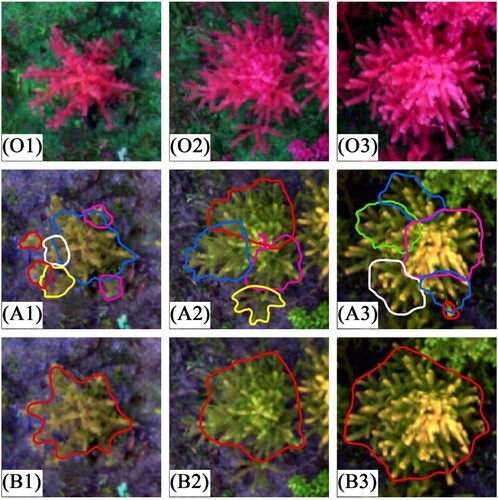

Figure 3. Example of images with different resolutions. The ground distance corresponding to each image was 2.34 m × 2.34 m. The images in the same row have the same resolution. Image resolutions from O, A, B, C, D, E, F are 0.007 m (original image), 0.01, 0.02, 0.04, 0.08, 0.16, and 0.32 m, respectively. The images in the same column show the same Chinese fir at different resolutions. Crown area sizes from numbers 1–6 are <0.10 m², 0.10∼0.20 m², 0.20∼0.40 m², 0.40∼0.80 m², 0.80∼1.60 m², and >1.60 m², respectively.

2.3.2. Training dataset preparation

Initially, six images with different resolutions (Section 2.2.1) were used to convert the tree-crown labels (Section 2.2.2) into a deep-learning training dataset. Then, the six images were split into small tiles to match the input constraints of the Mask R-CNN architecture (Pearse et al. 2020). The tile size in pixels was set for each image so that the ground distance covered by a tile was consistent (5.12 m), avoiding any effect of different tile sizes on the detection accuracy of Chinese fir (Table 2). The stride settings of the six images are shown in Table 2, all with an overlap of 50%. Moreover, the training data were rotated from their original orientation by 90°, 180°, and 270° to compensate for the limited training sample size (Braga et al. 2020).
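The relationship between image resolution, tile size, and stride can be sketched as follows. The 5.12 m ground extent and the 50% overlap come from the text; the helper names are hypothetical, and the printed values are derived from that arithmetic rather than copied from Table 2.

```python
TILE_GROUND_EXTENT_M = 5.12  # ground distance covered by one tile (from the text)
OVERLAP = 0.5                # 50% overlap between neighbouring tiles (from the text)
RESOLUTIONS_M = (0.01, 0.02, 0.04, 0.08, 0.16, 0.32)


def tile_size_px(resolution_m, extent_m=TILE_GROUND_EXTENT_M):
    """Tile edge length in pixels so that every tile spans the same ground distance."""
    return round(extent_m / resolution_m)


def stride_px(tile_px, overlap=OVERLAP):
    """Pixel shift between consecutive tiles for a given fractional overlap."""
    return round(tile_px * (1 - overlap))


tiling = {res: (tile_size_px(res), stride_px(tile_size_px(res)))
          for res in RESOLUTIONS_M}
for res, (tile, stride) in tiling.items():
    print(f"{res:.2f} m: tile {tile} px, stride {stride} px")
```

Under these assumptions the tile edge halves each time the pixel size doubles, so every model sees the same ground footprint per tile regardless of resolution.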

Table 2. Statistics of tile size and stride shift of images with different resolutions in the study area.

2.3.3. Model training and application

The Mask R-CNN model was implemented using the ArcGIS API for Python (Hao et al. 2022). In ArcGIS Pro, the deep learning tools of the ArcGIS Image Analyst extension integrate external deep learning frameworks, such as TensorFlow, PyTorch, and Keras, allowing complete deep learning workflows to be executed. The ResNet-50 architecture was applied for transfer learning because it can be adjusted to learn new features and better solve the task during the learning process (Chadwick et al. 2020; Zhao et al. 2019). Moreover, early stopping was adopted to reduce overfitting: model training stopped if the validation loss did not improve for 5 epochs (Pleșoianu et al. 2020). Each training dataset was divided into 90% for model training and 10% for validation. The six models were run on a Windows 10 laptop with an NVIDIA GeForce RTX 2060 GPU and an AMD Ryzen 9 CPU.
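The early stopping rule can be sketched in plain Python. This is an illustration of the stopping logic only, not the ArcGIS implementation: the function name, the strict "lower than the best so far" criterion, and the loss values are assumptions made for the example.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs (0-based epoch indices).
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience was never exhausted: training ran through all epochs.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation-loss curve: the best value is reached at epoch 2.
losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.70, 0.75, 0.73, 0.9, 0.6]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=5)
print(stop_epoch, best_epoch)
```

In this example the later drop to 0.6 is never reached, which shows the trade-off of a short patience window: it limits overfitting and training time but can stop before a late improvement.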

Finally, the six images with different spatial resolutions were used as inputs for the corresponding Mask R-CNN models to detect individual trees. To remove overlapping and redundant tree-crown detections, the non-maximum suppression algorithm was used in this study (Wu and Li 2021). Additionally, a confidence score threshold of >0.2 and a maximum overlap of 0.2 were selected (Pleșoianu et al. 2020).
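A greedy suppression of overlapping detections with these two thresholds might look like the sketch below. It is a simplified stand-in rather than the tool used in the study: it works on axis-aligned bounding boxes, measures overlap as box IoU, and all function names and sample detections are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def suppress_overlaps(detections, min_score=0.2, max_overlap=0.2):
    """Keep the highest-scoring detections and drop redundant overlapping ones.

    `detections` is a list of (box, score). Detections at or below `min_score`
    are discarded first; the rest are visited from highest to lowest score and
    kept only if they overlap every already-kept box by at most `max_overlap`.
    """
    candidates = sorted((d for d in detections if d[1] > min_score),
                        key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in candidates:
        if all(box_iou(box, kept_box) <= max_overlap for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections: two overlapping crowns, one isolated crown,
# and one low-confidence detection.
detections = [((0, 0, 2, 2), 0.9), ((0.5, 0.5, 2.5, 2.5), 0.6),
              ((3, 3, 5, 5), 0.5), ((6, 6, 7, 7), 0.1)]
kept = suppress_overlaps(detections)
print(kept)
```

A low overlap limit such as 0.2 suits plantation crowns that rarely interlock; a denser canopy with touching crowns could call for a more permissive value.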

2.4. Accuracy evaluation

The influence of image resolution, tree-crown size, and crown resolution on the model performance were compared in this study to analyze the influence of differing crown resolutions on the accuracy of ITDD using Mask R-CNN.

(1) Overall detected accuracy of Chinese fir by Mask R-CNN

The overall detected accuracy was evaluated by calculating the recall, precision, F1 score, and the Intersection over Union (IoU) for six different image resolutions based on the 476 manually delineated tree crowns. Recall is the proportion of all test trees that were correctly identified. Precision is the proportion of trees detected by the Mask R-CNN model that were correctly identified. The F1 score is the harmonic mean of recall and precision. Recall, precision, and F1 score are calculated from the true positives (TP), false negatives (FN), and false positives (FP) (Equations 1–3) to evaluate the accuracy of individual tree detection (Goutte and Gaussier 2005; Sokolova, Japkowicz, and Szpakowicz 2006). IoU is used to evaluate the accuracy of tree-crown delineation by the Mask R-CNN model (Equation 4) (Rezatofighi et al. 2019). It is the ratio of the intersection to the union of the tree-crown areas from the test trees and the trees delineated by the Mask R-CNN model (Pleșoianu et al. 2020; Wu, Sahoo, and Hoi 2020b).

$$\text{Recall} = \frac{TP}{TP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$

$$\text{IoU} = \frac{\left| A_{\text{test}} \cap A_{\text{pred}} \right|}{\left| A_{\text{test}} \cup A_{\text{pred}} \right|} \tag{4}$$

where $TP$ is the number of correctly detected trees (IoU > 50%), $FN$ is the number of omitted trees, including detections with IoU < 50%, $FP$ is the number of misidentifications (e.g. broad-leaved trees or weeds) that were detected, $A_{\text{test}}$ is the tree-crown area from the test set, and $A_{\text{pred}}$ is the predicted tree-crown area with a confidence score >0.2. The intersection ($\cap$) and union ($\cup$) operations give the common area and the combined area of $A_{\text{test}}$ and $A_{\text{pred}}$, respectively.
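The four metrics can be computed with a few lines of Python. In this sketch, crowns are represented as sets of pixel coordinates rather than polygons, and the counts TP = 456, FN = 20, and FP = 39 are not reported in the study: they are back-calculated here, as an assumption, so that the output reproduces the precision, recall, and F1 score reported for the 0.02 m model on the 476 test trees.

```python
def detection_metrics(tp, fn, fp):
    """Precision, recall and F1 score from detection counts."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def crown_iou(reference_pixels, predicted_pixels):
    """IoU of two crowns, each given as a set of (row, col) pixel coordinates."""
    union = reference_pixels | predicted_pixels
    if not union:
        return 0.0
    return len(reference_pixels & predicted_pixels) / len(union)


# Assumed counts (back-calculated, see lead-in); TP + FN = 476 test trees.
precision, recall, f1 = detection_metrics(tp=456, fn=20, fp=39)
print(f"precision={precision:.2%} recall={recall:.2%} F1={f1:.2%}")

# Hypothetical crowns: a 4 x 4 reference crown and a prediction shifted by one row.
reference = {(r, c) for r in range(4) for c in range(4)}
predicted = {(r, c) for r in range(1, 5) for c in range(4)}
iou = crown_iou(reference, predicted)
print(f"IoU={iou:.2f}, counted as TP: {iou > 0.5}")
```

The shifted crown still counts as a true positive because 12 of the 20 pixels in the union are shared, giving an IoU above the 50% threshold.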

(2) The impact of tree-crown sizes on model performance

To assess the detection accuracy for tree crowns of different sizes, the test set of 476 trees was classified into six categories based on crown area: <0.10 m², 0.10∼0.20 m², 0.20∼0.40 m², 0.40∼0.80 m², 0.80∼1.60 m², and >1.60 m² (). Next, the Mask R-CNN models with different image resolutions were applied to evaluate the detection accuracy of each category. The test set of 476 tree crowns was used in this part to calculate TP and FN. Misidentifications detected by the Mask R-CNN model were classified by area category and used to calculate FP. The correctly detected Chinese fir from the test set and the corresponding tree-crown areas detected by the model were used to calculate the IoU of each category. Recall, precision, F1 score, and IoU were calculated for each category (Equations 1–4).

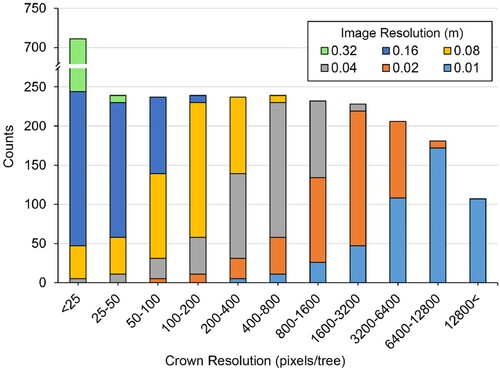

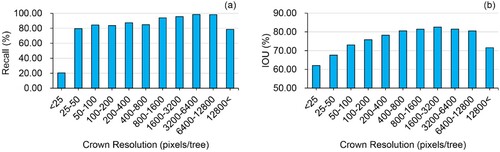

(3) The impact of crown resolution on model performance

To analyze the influence of crown resolution on the accuracy of Chinese fir identification by Mask R-CNN, the crown resolution of each of the 476 manually outlined crowns in the test set was calculated for each image resolution as the number of pixels within the crown polygon, that is, the ratio of the crown area to the pixel area. Because crown resolution depends on both tree-crown size and pixel size, the same crown resolution can be obtained when small tree crowns are imaged at high image resolution and when large tree crowns are imaged at coarse image resolution. Therefore, this study pooled the crown resolutions from the six image resolutions into one analysis set. To compare the impact of different crown resolutions on model performance, the crown resolution was classified into 11 categories based on the crown resolution of the complete dataset (0–50, 50–100, 100–200, 200–400, 400–800, 800–1600, 1600–3200, 3200–6400, 6400–12800, 12800–25600, and 25600–51200 pixels/tree) so that each category contained different crown sizes and image resolutions (). Recall and IoU were calculated to evaluate each category.
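As a minimal sketch of this calculation, crown resolution can be approximated as crown area divided by pixel area and then assigned to one of the 11 categories. The function names are hypothetical, the half-open treatment of category boundaries is an assumption (the text does not say which category a boundary value belongs to), and counting pixels inside the actual crown polygon would give slightly different values than this area-based approximation.

```python
from bisect import bisect_right

# Category edges in pixels/tree, taken from the 11 categories listed above.
BIN_EDGES = [0, 50, 100, 200, 400, 800, 1600, 3200, 6400, 12800, 25600, 51200]


def crown_resolution(crown_area_m2, image_resolution_m):
    """Approximate number of pixels per crown: crown area / pixel area."""
    return crown_area_m2 / image_resolution_m ** 2


def crown_resolution_bin(pixels_per_tree):
    """Return the (lower, upper) category containing the value, or None.

    Assumes half-open intervals [lower, upper).
    """
    if not BIN_EDGES[0] <= pixels_per_tree < BIN_EDGES[-1]:
        return None
    i = bisect_right(BIN_EDGES, pixels_per_tree) - 1
    return BIN_EDGES[i], BIN_EDGES[i + 1]


# A small crown at fine resolution and a large crown at coarse resolution
# can share the same crown resolution (hypothetical crown areas).
small_fine = crown_resolution(0.25, 0.01)    # about 2500 pixels/tree
large_coarse = crown_resolution(4.0, 0.04)   # about 2500 pixels/tree
mean_crown = crown_resolution(1.01, 0.02)    # mean crown area at 0.02 m
print(round(small_fine), round(large_coarse), round(mean_crown))
print(crown_resolution_bin(mean_crown))
```

The mean crown of 1.01 m² at 0.02 m corresponds to roughly 2525 pixels/tree, while a 0.10 m² crown at 0.32 m covers less than one pixel, consistent with the choice of 0.32 m as the coarsest resolution.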

3. Results

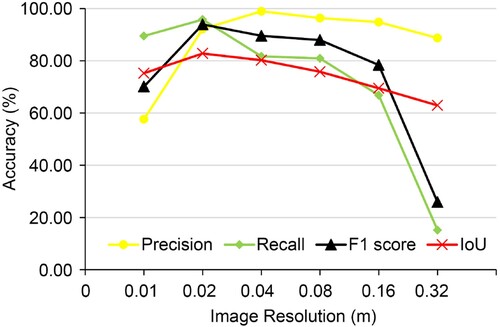

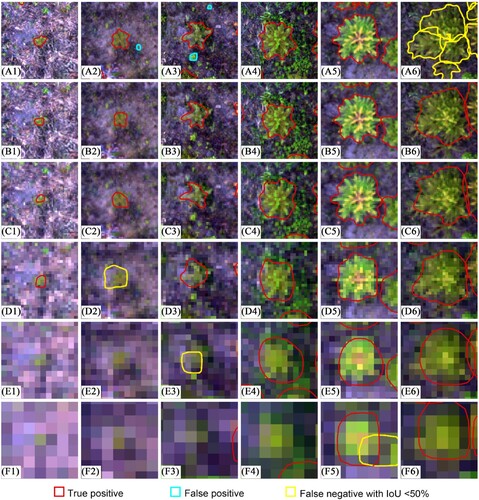

3.1. The effects of spatial resolution on model performance

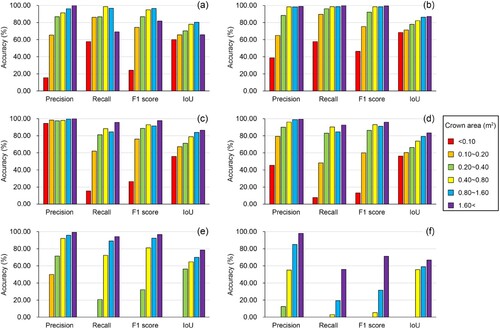

For the six Mask R-CNN models, the accuracy of Chinese fir detection and delineation tended to increase as image resolution became finer (from 0.32 m to 0.02 m) but decreased at the resolution of 0.01 m (). Recall, F1 score, and IoU declined as the resolution coarsened from 0.02 m to 0.32 m. The highest accuracy was achieved at a resolution of 0.02 m, yielding precision = 92.12%, recall = 95.80%, F1 score = 93.92%, and IoU = 82.79%. The lowest accuracy was obtained at a resolution of 0.32 m, yielding precision = 88.68%, recall = 15.13%, F1 score = 25.84%, and IoU = 62.91%. The model's accuracy at the image resolution of 0.01 m (precision = 57.59%, recall = 89.50%, F1 score = 70.09%, and IoU = 75.25%) was inferior to that at 0.02 m.

3.2. The effects of different crown area sizes on model performance

Comparing the co-effect of image resolution and crown size on the accuracy of the Mask R-CNN model, model performance improved with increasing image resolution mainly because of superior detection accuracy for smaller crowns (Figures 6 and 7). In contrast, detection accuracy for larger crowns decreased at the highest image resolution.

Figure 6. Example of different tree-crown detection and delineation results from Mask R-CNN using different image resolutions. Red polygons represent the correctly identified trees; Cyan polygons represent the falsely identified trees (e.g. broad-leaved trees or weeds); Yellow polygons represent some parts of trees that were detected by model, but the IoU value was less than 50%. (E1), (E2), (F1), (F2), (F3), and (F4) are examples of omitted trees. The images in the same row have the same resolution. Image resolutions from O, A, B, C, D, E, F are 0.007 m (original image), 0.01, 0.02, 0.04, 0.08, 0.16, and 0.32 m.

Figure 7. The accuracy of Chinese fir detection with different crown sizes by Mask R-CNN model with different image resolutions. (a) resolution of 0.01 m; (b) resolution of 0.02 m; (c) resolution of 0.04 m; (d) resolution of 0.08 m; (e) resolution of 0.16 m; (f) resolution of 0.32 m. The colors of the histogram represent different categories of Chinese fir with different crown sizes.

When the resolution ranged from 0.02 m to 0.32 m, the accuracy of Chinese fir detection for the different crown sizes gradually decreased as image resolution grew coarser. For Chinese fir with the same tree-crown area, the accuracy of tree-crown detection and delineation by Mask R-CNN tended to increase as image resolution improved. Notably, Chinese fir with small crowns could not be accurately detected in the coarser resolution images but could be detected as image resolution increased. For example, crowns smaller than 0.40 m² could not be detected by the model at the image resolution of 0.32 m, and crowns smaller than 0.20 m² could not be detected at resolutions of 0.16 m or coarser in this study (Figure 7(e) and (f)). For the same image resolution, higher accuracy of tree-crown detection was achieved as crown size increased (higher F1 score and IoU values). In Figure 7(b), the Mask R-CNN model yielded F1 score = 75.34% and IoU = 71.31% when the crown size was between 0.10 m² and 0.20 m², and F1 score = 92.12% and IoU = 77.88% when the crown size was between 0.20 m² and 0.40 m².

However, for all six crown-area categories, the Mask R-CNN model with 0.01 m input image resolution yielded lower tree-crown detection and delineation accuracy than the model with 0.02 m input image resolution. Notably, the accuracy of Chinese fir detection decreased significantly when the crown area was less than 0.10 m² or more than 1.60 m². For crowns of <0.10 m² and >1.60 m², accuracy was higher when the model used the image resolution of 0.02 m (F1 score = 46.33% and 99.46%, respectively) than when it used the image resolution of 0.01 m (F1 score = 24.21% and 81.74%, respectively).

3.3. The effects of crown resolution on model performance

Crown resolution is a critical factor affecting ITDD by Mask R-CNN. This study found a decrease in detection accuracy at low crown resolutions (<50 pixels/tree) and at high crown resolutions (>12800 pixels/tree). Modeling results yielded recall >90.00% and IoU >80.00% when the crown resolution was between 800 pixels/tree and 12800 pixels/tree (). The highest recall was achieved when the crown resolution was between 3200 pixels/tree and 6400 pixels/tree (recall = 98.54% and IoU = 81.51%). The highest IoU was achieved when the crown resolution was between 1600 pixels/tree and 3200 pixels/tree (recall = 95.61% and IoU = 82.54%). For crown resolutions from 100 pixels/tree to 800 pixels/tree, ITDD by the Mask R-CNN models yielded recall between 83.68% and 87.34% and IoU between 73.01% and 80.54%. The accuracy of ITDD decreased sharply when the crown resolution was less than 25 pixels/tree. The minimum crown resolution of a Chinese fir correctly detected by the model was 7 pixels/tree, and the maximum was 27412 pixels/tree.

4. Discussion

4.1. Significance of the study

To our knowledge, this is the first study that proposes the concept of crown resolution and applies it to analyze the co-effect of image resolution and tree-crown size on ITDD using a deep learning approach. Based on the Mask R-CNN performance, this study found that optimal accuracy was achieved for ITDD when the crown resolution was between 3200 pixels/tree and 6400 pixels/tree. This study demonstrates that the optimal image resolution is related to the tree-crown size for ITDD when using a deep-learning approach. In this type of analysis, selecting the appropriate image resolution based on the tree-crown size in forests is important for accurate ITDD.

4.2. Crown resolution

In this study, we found that reasonable accuracy was achieved by the Mask R-CNN model when crown resolution was between 50 pixels/tree and 12800 pixels/tree (recall > 80.00% and IoU > 73.00%), and the best accuracy was achieved when the crown resolution was between 800 pixels/tree and 12800 pixels/tree (recall > 90.00% and IoU > 80.00%). There are few directly comparable studies because this is the first to analyze the influence of crown resolution on the accuracy of a deep learning approach for ITDD. However, for previous studies that reported spatial resolution together with tree-crown area or crown diameter, the crown resolution can be calculated for comparison. For example, accurate detection (Overall Accuracy ≥ 0.93 and Kappa ≥ 0.87) and area estimation (R² ≥ 0.66, NRMSE ≤ 12%) were achieved by ITDD for mangrove forests with crown sizes ranging from <50 to >4000 pixels (Lassalle et al. 2022). A promising accuracy (average precision = 0.98, average recall = 0.85, and average F1 score = 0.91) was achieved for ITDD in regenerating forests (Chadwick et al. 2020), with 51% of tree crown diameters ranging from 1–2 m and a corresponding crown resolution of 872–3488 pixels/tree. Yang et al. (2022) reported a tree number detection rate of 82.8% and a crown area detection rate of 81.8% using Mask R-CNN with 0.27 m Google Earth images in New York's Central Park. In that study, most tree crown areas were between 50 m² and 600 m², with a corresponding crown resolution of 686–8230 pixels/tree. Each of these studies supports the finding that image resolution can be optimized for accurate ITDD depending on tree crown size.
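As a worked check of the comparison with Yang et al. (2022), and assuming that crown resolution is computed as crown area divided by the area of a square pixel, the reported range follows from the 0.27 m image resolution:

$$\frac{50\ \text{m}^2}{(0.27\ \text{m})^2} = \frac{50}{0.0729} \approx 686\ \text{pixels/tree}, \qquad \frac{600\ \text{m}^2}{(0.27\ \text{m})^2} = \frac{600}{0.0729} \approx 8230\ \text{pixels/tree}$$

Both values fall within the 50–12800 pixels/tree range in which the Mask R-CNN models of this study achieved reasonable accuracy.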

In addition, our results can help explain why different image resolutions gave optimal model performance in previous studies. For example, optimal accuracy was achieved for conifer seedling detection using an image with a 0.3 cm resolution (Fromm et al. 2019). High accuracy was reported by Schiefer et al. (2020) for tree species classification at the image resolution of 2 cm. Wetland vegetation species mapping yielded the best accuracy when the image had a 7.6 cm spatial resolution (Saltiel et al. 2022). According to our crown resolution analysis, the optimal image resolution varied among these studies mainly because different target objects have different requirements for image resolution. For smaller target objects, higher resolution images are needed to meet the pixel number requirements, such as the optimal resolution of 1.0–5.0 mm for detecting flower abundance in grasslands (Gallmann et al. 2022) and 0.3 mm for wheat ear density identification (Madec et al. 2019).

4.3. Considerations about the loss of accuracy for ITDD under very-high crown resolution

This study showed that crown resolutions >12800 pixels/tree could reduce Mask R-CNN performance (a), and the models could not detect trees with crown resolutions >27412 pixels/tree. The results also indicate that the accuracy of ITDD does not always improve with an increase in image resolution. This can be explained by branches that were identified as individual tree crowns by the model, which resulted in the over-segmentation of Chinese fir in this study (Figure 9). Over-segmentation occurs because the branches of Chinese fir with large crowns may have crown-like characteristics that closely resemble a small sapling. In contrast, the mixed pixels in a coarser-resolution image can form a transition between branches and land cover classes, which can partially mask these crown-like characteristics and reduce their influence on ITDD by Mask R-CNN.

Figure 9. Example of the over-segmentation and corrected tree-crown identification by Mask R-CNN. O is the original image, A is the detection results by Mask R-CNN using the image with a resolution of 0.01 m, B is the detection results by Mask R-CNN using the image with a resolution of 0.02 m. The ground distance corresponding to each image was 2.34 m × 2.34 m. The images in the same row have the same resolution. For the same column, the images showed the same Chinese fir at the different resolutions.

Currently, few studies have reported that high crown resolution can reduce the accuracy of ITDD when using deep learning, although studies of wheat ear detection using a deep learning model (Madec et al. 2019) and of ITDD using other algorithms (Dash et al. 2017; Miraki et al. 2021; Pouliot et al. 2002; Yin and Wang 2019) reached similar conclusions. Based on Faster R-CNN, the accuracy of wheat ear density estimation decreased when the image resolution was refined from 0.26 mm to 0.13 mm, which was attributed to multiple individual detections being assigned to the same ear (Madec et al. 2019). Yin and Wang (2019) concluded that similar height variation existed within crowns and between crowns, which was attributed to excessive detail in the high-resolution CHM (Heenkenda, Joyce, and Maier 2015). This caused the accuracy of individual mangrove tree detection using the seeded region growing algorithm and the marker-controlled watershed segmentation algorithm to decrease when the image resolution was refined from 1 m to 0.1 m. Dash et al. (2017) found that the detection accuracy for monitoring forest health during a simulated disease outbreak using the random forest algorithm with image resolutions of 0.06 m and 1 m was mainly influenced by the outlined large, contiguous polygon of poisoned trees. The image's background information (e.g. many healthy boughs and pixels) affected the detection of the poisoned trees, which contained additional gaps and understory at the higher image resolution. These studies indicate that excessive detail in high-resolution images can affect detection accuracy.

4.4. Considerations about the loss of accuracy for ITDD under very-low crown resolution

The accuracy of ITDD by Mask R-CNN tended to decrease when the crown resolution was less than 3200 pixels/tree. The recall of ITDD was lower than 80.00% when the crown resolution was between 25 pixels/tree and 50 pixels/tree, and the recall was 20.39% at the crown resolution of 25 pixels/tree. Although few studies have reported the influence of reduced crown resolution on the detection accuracy of deep learning models, several researchers have reached conclusions similar to those in Section 3.2 of our study: (1) the accuracy of a deep learning model decreased with coarser image resolution for the same target object (Gan, Wang, and Iio 2023; Kattenborn et al. 2020; Schiefer et al. 2020); (2) the accuracy of a deep learning model decreased with a smaller target object at the same image resolution (Weinstein et al. 2020). For example, by comparing the performance of Detectree2 and DeepForest for ITDD, Gan, Wang, and Iio (2023) reported that both models had decreased accuracy of ITDD in a temperate deciduous forest when the image resolution was coarser than 0.1 m. Weinstein et al. (2020) used an RGB image with a resolution of 0.1 m to assess four different ecosystems with characteristic tree sizes and reported that small trees yielded inferior detection accuracy. Similar results were also found in studies that detected relatively small objects (e.g. flowers). Gallmann et al. (2022) observed that coarsening the ground sampling distance from 5 mm to 10 mm and 20 mm per pixel significantly reduced the accuracy of small flower detection when using Faster R-CNN, while having little impact on large flower detection. The main reason for the reduced accuracy of target object detection in these studies is likely that the number of pixels in the target object falls below the threshold needed for effective detection by a deep learning approach.
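The loss of pixels per crown with coarser imagery can be illustrated with a minimal sketch. It assumes that crown resolution is approximated as crown area divided by the ground area of one pixel; the crown area of 1.28 m² and the function names are hypothetical and chosen only for illustration, while the six pixel sizes are those used in this study.

```python
# Hypothetical crown area (m^2); an illustrative value, not a measurement from this study.
CROWN_AREA_M2 = 1.28

# The six image resolutions (m/pixel) used in this study.
RESOLUTIONS_M = [0.01, 0.02, 0.04, 0.08, 0.16, 0.32]


def crown_resolution(crown_area_m2: float, pixel_size_m: float) -> float:
    """Approximate pixels per tree: crown area divided by the ground area of one pixel."""
    if crown_area_m2 <= 0 or pixel_size_m <= 0:
        raise ValueError("crown area and pixel size must be positive")
    return crown_area_m2 / (pixel_size_m ** 2)


def crown_resolution_table(crown_area_m2, resolutions_m):
    """Return (pixel size, pixels per tree) pairs for each resolution."""
    return [(r, crown_resolution(crown_area_m2, r)) for r in resolutions_m]


if __name__ == "__main__":
    for res, px in crown_resolution_table(CROWN_AREA_M2, RESOLUTIONS_M):
        print(f"{res:.2f} m/pixel -> {px:10.1f} pixels/tree")
```

Under this assumption, each doubling of the pixel size divides the number of pixels per tree by four, so a crown that is well resolved at 0.01 m can fall below a detection threshold within a few resampling steps.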

In addition, crown resolution can explain the results of previous studies where tree crowns with various small sizes were successfully detected using a deep learning approach. For instance, tree crowns with a size of 3 m² were successfully detected using U-Net with an image resolution of 0.5 m in the West African Sahara, Sahel, and sub-humid zone (Brandt et al. 2020). Braga et al. (2020) reported that a small crown size of 2.75 m² was detected using Mask R-CNN with an image resolution of 0.5 m in a tropical forest. The smallest crown size in these studies is larger than that of the Chinese fir in our study. Combining crown size with image resolution, the lowest crown resolution of these studies was 12 pixels/tree and 11 pixels/tree, respectively. Therefore, the lowest crown resolution that could be detected in our study (7 pixels/tree) was comparable to these studies. Our study's ability to detect crowns at this low number of pixels/tree can be attributed to the clear tree crowns and the weed control at the study site, which reduced the effects of other vegetation in the background of the tree canopy. Moreover, the UAV image was collected on a diffuse-light day, which minimized the impact of weeds (Pearse et al. 2020) and tree shadows (Hao et al. 2021b) on model performance. More pixels/tree may be required to meet model performance requirements in a more complex forest setting.
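The pixels-per-tree values for the two earlier studies can be reproduced with a short worked calculation. It assumes that crown resolution is approximated as crown area divided by the ground area of one pixel; the symbols $R_c$ (crown resolution), $A$ (crown area) and $r$ (pixel size) are introduced here for illustration only.

```latex
R_c = \frac{A}{r^{2}}, \qquad
R_c^{\text{Brandt}} = \frac{3\ \text{m}^2}{(0.5\ \text{m})^{2}} = 12\ \text{pixels/tree}, \qquad
R_c^{\text{Braga}} = \frac{2.75\ \text{m}^2}{(0.5\ \text{m})^{2}} = 11\ \text{pixels/tree}
```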

4.5. The accuracy of tree-crown polygon delineation

This study found that the accuracy of tree-crown polygon delineation first increased and then decreased as crown resolution increased. The highest accuracy of tree-crown polygon delineation was achieved when the crown resolution was between 1600 pixels/tree and 3200 pixels/tree. The IoU values are sensitive to the number of pixels per tree-crown polygon. The accuracy of tree-crown polygon delineation was impacted by crown resolution at both very high and very low crown resolutions. Although some Chinese fir were identified as tree crowns, their IoU value (Equation 4) could be less than 50.00%, in which case they were classified as invalid identifications (D2 and E3). For very high crown resolution, over-segmentation also leads to a decrease in IoU (A1, A2 and A3). For low crown resolution, there were few pixels in the tree-crown polygon.
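The sensitivity of IoU to the number of pixels in a crown polygon can be sketched as follows. This is a minimal illustration that assumes the standard intersection-over-union definition and represents each crown mask as a set of pixel coordinates; the helper names and the toy rectangles are hypothetical and do not come from the study's data.

```python
from typing import Iterable, Set, Tuple

Pixel = Tuple[int, int]

# Validity threshold stated in the text: IoU below 50% counts as an invalid identification.
IOU_THRESHOLD = 0.50


def iou(predicted: Iterable[Pixel], reference: Iterable[Pixel]) -> float:
    """Intersection over union of two masks given as collections of (row, col) pixels."""
    pred: Set[Pixel] = set(predicted)
    ref: Set[Pixel] = set(reference)
    union = pred | ref
    if not union:
        return 0.0
    return len(pred & ref) / len(union)


def is_valid_detection(predicted, reference, threshold: float = IOU_THRESHOLD) -> bool:
    """A detection is kept only if its IoU with the reference crown reaches the threshold."""
    return iou(predicted, reference) >= threshold


def rectangle(row0: int, col0: int, height: int, width: int) -> Set[Pixel]:
    """Toy stand-in for a crown mask: all pixels of an axis-aligned rectangle."""
    return {(r, c) for r in range(row0, row0 + height) for c in range(col0, col0 + width)}


if __name__ == "__main__":
    # Over-segmentation: the prediction covers only a fragment of a 10 x 10 crown.
    crown = rectangle(0, 0, 10, 10)
    fragment = rectangle(0, 0, 10, 4)
    print("fragment IoU:", iou(fragment, crown), "valid:", is_valid_detection(fragment, crown))

    # The same one-pixel shift costs far more IoU on a small crown than on a large one.
    small_ref, small_pred = rectangle(0, 0, 3, 3), rectangle(0, 1, 3, 3)
    large_ref, large_pred = rectangle(0, 0, 30, 30), rectangle(0, 1, 30, 30)
    print("small crown IoU:", iou(small_pred, small_ref))
    print("large crown IoU:", iou(large_pred, large_ref))
```

In this toy setting a fragment covering 40 of 100 crown pixels falls below the 50% threshold, and a one-pixel offset lowers the IoU of a 9-pixel crown much more than that of a 900-pixel crown, which is consistent with the behaviour described for over-segmented and low-resolution crowns.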

4.6. Limitation and application

This study focuses on exploring the influence of crown resolution on model performance. We found that crown resolution can reflect the co-effect of image resolution and tree-crown size on ITDD. When using a deep learning approach, a balance between target object size and image resolution is important for obtaining good accuracy with ITDD methods. In practice, the determination of optimal image resolution needs to take into consideration not only the accuracy of model performance, but also the feasibility of image acquisition and the efficiency of model training (e.g. the time of one single flight, image size, and the difficulty of image mosaicking) (Kameyama and Sugiura 2021; Swayze et al. 2021; Tu et al. 2020). In addition, the tree crown size in forests often varies greatly, even in one area (Gallardo-Salazar and Pompa-García 2020; Kwong and Fung 2020; Panagiotidis et al. 2017). Therefore, it is necessary to consider the different image resolution requirements for small and large tree crowns. In our study, crowns whose areas differ by a factor of up to about 12800/50 (i.e. 256) can be effectively detected by the Mask R-CNN model at a single image resolution, which is sufficient to cover most forest conditions. In practice, it is necessary to ensure that the number of pixels in the smallest crown is higher than 50 and that the number of pixels in the largest crown is less than 12800. This achieves good detection accuracy of ITDD, with recall > 80% and IoU > 73.00%. Many factors (e.g. the interlocking of tree crowns or spacing, bit-depth of imagery used, tree crown architecture, and other image quality factors) can interfere with the accuracy of ITDD.
In order to control the main factors that impact ITDD, the following techniques were used in this study: (1) UAV imagery was acquired on a diffuse-light day to mitigate the impact of tree shadows on ITDD; (2) weeds were removed from the site to minimize the influence of background vegetation; (3) a site with distinct and evenly distributed tree crowns was used to eliminate the effect of crown interlocking; (4) the same UAV image was used for spatial averaging to avoid differences in lighting conditions and wind that could be caused by image acquisition at different times.
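The practical rule above (more than 50 pixels in the smallest crown, fewer than 12800 pixels in the largest) can be turned into a range of admissible pixel sizes. The following is a minimal sketch, assuming that crown resolution is approximated as crown area divided by squared pixel size and treating the two bounds as inclusive; the function name and the example crown areas are hypothetical.

```python
import math

MIN_PIXELS_PER_TREE = 50      # lower bound on crown resolution stated in the text
MAX_PIXELS_PER_TREE = 12800   # upper bound on crown resolution stated in the text


def pixel_size_range(smallest_crown_m2: float, largest_crown_m2: float,
                     min_pixels: float = MIN_PIXELS_PER_TREE,
                     max_pixels: float = MAX_PIXELS_PER_TREE):
    """Return (finest, coarsest) admissible pixel size in metres, or None if none exists.

    Assumes crown resolution = crown area / pixel_size**2 (an approximation).
    """
    if not 0 < smallest_crown_m2 <= largest_crown_m2:
        raise ValueError("expected 0 < smallest_crown_m2 <= largest_crown_m2")
    # Coarsest pixel size at which the smallest crown still holds min_pixels.
    coarsest = math.sqrt(smallest_crown_m2 / min_pixels)
    # Finest pixel size at which the largest crown does not exceed max_pixels.
    finest = math.sqrt(largest_crown_m2 / max_pixels)
    if finest > coarsest:
        return None  # crown areas differ by more than max_pixels / min_pixels
    return finest, coarsest


if __name__ == "__main__":
    # Hypothetical stand with crowns between 0.5 m^2 and 8 m^2.
    print(pixel_size_range(0.5, 8.0))
    # Hypothetical stand whose crown areas differ by more than a factor of 256.
    print(pixel_size_range(0.1, 30.0))
```

When the function returns None, no single image resolution satisfies both bounds under these assumptions, so small and large crowns would need to be handled at different resolutions.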

In a forest with complex vegetation conditions, the detection accuracy decreases when a single image is used. This may be because a single image contains insufficient information for the CNN to effectively identify multiple tree species. It is possible to detect trees with large crown sizes in a single image of a complex forest, such as Amazonian palms in rainforests (Ferreira et al. 2020) and large Brazil nut trees (Bertholletia excelsa) (Ferreira et al. 2021). Combining vegetation phenology features and time-series imagery can help identify multiple tree species. For example, deep learning approaches using a time series of high-resolution drone imagery identified multiple tropical tree species during the flowering period (Lee et al. 2023). Sub-meter phenological spectral features were identified based on the three phenological periods of leafless, greenleaf, and senescence while mapping poplars and willows in an urban environment (Li et al. 2022b). This classification was likely possible because the phenological features (e.g. flowering, fruiting, and leaf falling) extracted from time-series images can provide more information for the CNN to identify trees.

5. Conclusions

Image resolution is essential to consider, as it affects the detection accuracy of ITDD. The concept of crown resolution was first proposed in this study to better understand the influence of image resolution and tree-crown size on ITDD using deep learning models. For model training, the multi-spectral imagery derived from a UAV was resampled to coarser resolutions (0.01, 0.02, 0.04, 0.08, 0.16, and 0.32 m). The overall detection accuracy, the influence of different crown sizes, and the crown resolution were calculated to evaluate the Mask R-CNN performance. We found that the optimal image resolution should be determined according to the target tree-crown size. The optimum resolution (0.02 m) was coarser than the highest spatial resolution imagery (0.01 m) used in this study. This information may help forestry practitioners to select the optimal image resolution to detect Chinese fir trees.
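The resampling step summarized above can be illustrated with a small sketch of spatial averaging. The exact resampling method and software are not given in this section, so the block-mean approach below is an assumption; the function name and the toy 4 × 4 band are hypothetical.

```python
def block_average(band, factor):
    """Downsample a 2D grid by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    rows = len(band) // factor
    cols = len(band[0]) // factor if band else 0
    out = []
    for i in range(rows):
        out_row = []
        for j in range(cols):
            # Collect every pixel of the block that maps to output cell (i, j).
            block = [band[i * factor + di][j * factor + dj]
                     for di in range(factor)
                     for dj in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


if __name__ == "__main__":
    # Toy single-band image; a factor of 2 corresponds to e.g. 0.01 m -> 0.02 m pixels.
    band = [
        [1, 3, 5, 7],
        [1, 3, 5, 7],
        [2, 2, 6, 6],
        [2, 2, 6, 6],
    ]
    print(block_average(band, 2))
```

Applying a factor of 2 repeatedly would produce the 0.02, 0.04, 0.08, 0.16, and 0.32 m series from the 0.01 m image, with every coarser image derived from the same acquisition.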

This study provides insight into the influence of crown resolution on Mask R-CNN performance, which can be useful for improving the accuracy of ITDD. There is a wide range of forest types where ITDD can be accomplished using UAV imagery, so determining the optimal image spatial resolution for precision and accuracy should be standard practice. This can help to operationalize ITDD methods for other tree species and mixed forest types.

Data availability statement

The data used in this study is available by contacting the corresponding author.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Bolya, D., C. Zhou, F. Xiao, and Y. J. Lee. 2022. “Yolact++ Better Real-Time Instance Segmentation.” IEEE Transactions On Pattern Analysis and Machine Intelligence 44 (2): 1108–1121. https://doi.org/10.1109/TPAMI.2020.3014297.

- Braga, J. R., V. Peripato, R. Dalagnol, M. P. Ferreira, Y. Tarabalka, L. E. O. C. Aragão, H. F. De Campos Velho, E. H. Shiguemori, and F. H. Wagner. 2020. “Tree Crown Delineation Algorithm Based on a Convolutional Neural Network.” Remote Sensing 12 (8): 1288. https://doi.org/10.3390/rs12081288.

- Brandt, M., C. J. Tucker, A. Kariryaa, K. Rasmussen, C. Abel, J. Small, J. Chave, et al. 2020. “An Unexpectedly Large Count of Trees in the West African Sahara and Sahel.” Nature 587 (7832): 78–82. https://doi.org/10.1038/s41586-020-2824-5.

- Chadwick, A. J., T. R. H. Goodbody, N. C. Coops, A. Hervieux, C. W. Bater, L. A. Martens, B. White, and D. Röeser. 2020. “Automatic Delineation and Height Measurement of Regenerating Conifer Crowns Under Leaf-off Conditions Using UAV Imagery.” Remote Sensing 12 (24): 4104. https://doi.org/10.3390/rs12244104.

- Chen, L., Y. Zhu, G. Papandreou, F. Schroff, and H. Adam. 2018. “Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation.” In Proceedings of the European Conference on Computer Vision (ECCV), 801–818. Munich, Germany.

- Chiang, C., C. Barnes, P. Angelov, and R. Jiang. 2020. “Deep Learning-Based Automated Forest Health Diagnosis from Aerial Images.” IEEE Access 8: 144064–144076. https://doi.org/10.1109/ACCESS.2020.3012417.

- Dash, J. P., M. S. Watt, G. D. Pearse, M. Heaphy, and H. S. Dungey. 2017. “Assessing Very High Resolution UAV Imagery for Monitoring Forest Health During a Simulated Disease Outbreak.” ISPRS Journal of Photogrammetry and Remote Sensing 131: 1–14. https://doi.org/10.1016/j.isprsjprs.2017.07.007.

- Egli, S., and M. Höpke. 2020. “CNN-based Tree Species Classification Using High Resolution RGB Image Data from Automated UAV Observations.” Remote Sensing 12 (23): 3892. https://doi.org/10.3390/rs12233892.

- Ferreira, M. P., D. R. A. D. Almeida, D. D. A. Papa, J. B. S. Minervino, H. F. P. Veras, A. Formighieri, C. A. N. Santos, M. A. D. Ferreira, E. O. Figueiredo, and E. J. L. Ferreira. 2020. “Individual Tree Detection and Species Classification of Amazonian Palms Using UAV Images and Deep Learning.” Forest Ecology and Management 475: 118397. https://doi.org/10.1016/j.foreco.2020.118397.

- Ferreira, M. P., R. G. Lotte, F. V. D'Elia, C. Stamatopoulos, D. Kim, and A. R. Benjamin. 2021. “Accurate Mapping of Brazil nut Trees (Bertholletia Excelsa) in Amazonian Forests Using Worldview-3 Satellite Images and Convolutional Neural Networks.” Ecological Informatics 63: 101302. https://doi.org/10.1016/j.ecoinf.2021.101302.

- Fromm, M., M. Schubert, G. Castilla, J. Linke, and G. Mcdermid. 2019. “Automated Detection of Conifer Seedlings in Drone Imagery Using Convolutional Neural Networks.” Remote Sensing 11 (21): 2585. https://doi.org/10.3390/rs11212585.

- Gallardo-Salazar, J. L., and M. Pompa-García. 2020. “Detecting Individual Tree Attributes and Multi-Spectral Indices Using Unmanned Aerial Vehicles: Applications in a Pine Clonal Orchard.” Remote Sensing 12 (24): 4144. https://doi.org/10.3390/rs12244144.

- Gallmann, J., B. Schüpbach, K. Jacot, M. Albrecht, J. Winizki, N. Kirchgessner, and H. Aasen. 2022. “Flower Mapping in Grasslands with Drones and Deep Learning.” Frontiers in Plant Science 12: 774965. https://doi.org/10.3389/fpls.2021.774965.

- Gan, Y., Q. Wang, and A. Iio. 2023. “Tree Crown Detection and Delineation in a Temperate Deciduous Forest from UAV RGB Imagery Using Deep Learning Approaches: Effects of Spatial Resolution and Species Characteristics.” Remote Sensing 15 (3): 778. https://doi.org/10.3390/rs15030778.

- Goodbody, T. R. H., N. C. Coops, and J. C. White. 2019. “Digital Aerial Photogrammetry for Updating Area-Based Forest Inventories: A Review of Opportunities, Challenges, and Future Directions.” Current Forestry Reports 5 (2): 55–75. https://doi.org/10.1007/s40725-019-00087-2.

- Goutte, C., and E. Gaussier. 2005. “A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation.” In Proceedings of the 27th European Conference on IR Research, 345–359. Santiago de Compostela, Spain.

- Guimarães, N., L. Pádua, P. Marques, N. Silva, E. Peres, and J. J. Sousa. 2020. “Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities.” Remote Sensing 12 (6): 1046. https://doi.org/10.3390/rs12061046.

- Guirado, E., J. Blanco-Sacristán, E. Rodríguez-Caballero, S. Tabik, D. Alcaraz-Segura, J. Martínez-Valderrama, and J. Cabello. 2021. “Mask R-CNN and OBIA Fusion Improves the Segmentation of Scattered Vegetation in Very High-Resolution Optical Sensors.” Sensors 21 (1): 320. https://doi.org/10.3390/s21010320.

- Hao, Z., L. Lin, C. J. Post, Y. Jiang, M. Li, N. Wei, K. Yu, and J. Liu. 2021a. “Assessing Tree Height and Density of a Young Forest Using a Consumer Unmanned Aerial Vehicle (UAV).” New Forests 52 (5): 843–862. https://doi.org/10.1007/s11056-020-09827-w.

- Hao, Z., L. Lin, C. J. Post, E. A. Mikhailova, M. Li, Y. Chen, K. Yu, and J. Liu. 2021b. “Automated Tree-Crown and Height Detection in a Young Forest Plantation Using Mask Region-Based Convolutional Neural Network (Mask R-CNN).” ISPRS Journal of Photogrammetry and Remote Sensing 178: 112–123. https://doi.org/10.1016/j.isprsjprs.2021.06.003.

- Hao, Z., C. J. Post, E. A. Mikhailova, L. Lin, J. Liu, and K. Yu. 2022. “How Does Sample Labeling and Distribution Affect the Accuracy and Efficiency of a Deep Learning Model for Individual Tree-Crown Detection and Delineation.” Remote Sensing 14 (7): 1561. https://doi.org/10.3390/rs14071561.

- He, K., G. Gkioxari, P. Dollár, and R. Girshick. 2017. “Mask R-CNN.” In Proceedings of the IEEE International Conference on Computer Vision, 2961–2969. Venice, Italy.

- Heenkenda, M. K., K. E. Joyce, and S. W. Maier. 2015. “Mangrove Tree Crown Delineation from High-Resolution Imagery.” Photogrammetric Engineering & Remote Sensing 81 (6): 471–479. https://doi.org/10.14358/PERS.81.6.471.

- Iqbal, M. S., H. Ali, S. N. Tran, and T. Iqbal. 2021. “Coconut Trees Detection and Segmentation in Aerial Imagery Using Mask Region-Based Convolution Neural Network.” IET Computer Vision 15 (6): 428–439. https://doi.org/10.1049/cvi2.12028.

- Kameyama, S., and K. Sugiura. 2021. “Effects of Differences in Structure from Motion Software on Image Processing of Unmanned Aerial Vehicle Photography and Estimation of Crown Area and Tree Height in Forests.” Remote Sensing 13 (4): 626. https://doi.org/10.3390/rs13040626.

- Kattenborn, T., J. Eichel, and F. E. Fassnacht. 2019. “Convolutional Neural Networks Enable Efficient, Accurate and Fine-Grained Segmentation of Plant Species and Communities from High-Resolution UAV Imagery.” Scientific Reports 9 (1): 17656. https://doi.org/10.1038/s41598-019-53797-9.

- Kattenborn, T., J. Eichel, S. Wiser, L. Burrows, F. E. Fassnacht, and S. Schmidtlein. 2020. “Convolutional Neural Networks Accurately Predict Cover Fractions of Plant Species and Communities in Unmanned Aerial Vehicle Imagery.” Remote Sensing in Ecology and Conservation 6 (4): 472–486. https://doi.org/10.1002/rse2.146.

- Kattenborn, T., J. Leitloff, F. Schiefer, and S. Hinz. 2021. “Review on Convolutional Neural Networks (CNN) in Vegetation Remote Sensing.” ISPRS Journal of Photogrammetry and Remote Sensing 173: 24–49. https://doi.org/10.1016/j.isprsjprs.2020.12.010.

- Kwong, I. H. Y., and T. Fung. 2020. “Tree Height Mapping and Crown Delineation Using LIDAR, Large Format Aerial Photographs, and Unmanned Aerial Vehicle Photogrammetry in Subtropical Urban Forest.” International Journal of Remote Sensing 41 (14): 5228–5256. https://doi.org/10.1080/01431161.2020.1731002.

- La Rosa, L. E. C., C. Sothe, R. Q. Feitosa, C. M. de Almeida, M. B. Schimalski, and D. A. B. Oliveira. 2021. “Multi-task Fully Convolutional Network for Tree Species Mapping in Dense Forests Using Small Training Hyperspectral Data.” ISPRS Journal of Photogrammetry and Remote Sensing 179: 35–49. https://doi.org/10.1016/j.isprsjprs.2021.07.001.

- Lassalle, G., M. P. Ferreira, L. E. C. La Rosa, and C. R. de Souza Filho. 2022. “Deep Learning-Based Individual Tree Crown Delineation in Mangrove Forests Using Very-High-Resolution Satellite Imagery.” ISPRS Journal of Photogrammetry and Remote Sensing 189: 220–235. https://doi.org/10.1016/j.isprsjprs.2022.05.002.

- Lee, C. K. F., G. Song, H. C. Muller-Landau, S. Wu, S. J. Wright, K. C. Cushman, R. F. Araujo, et al. 2023. “Cost-effective and Accurate Monitoring of Flowering Across Multiple Tropical Tree Species Over two Years with a Time Series of High-Resolution Drone Imagery and Deep Learning.” ISPRS Journal of Photogrammetry and Remote Sensing 201: 92–103. https://doi.org/10.1016/j.isprsjprs.2023.05.022.

- Li, Y., G. Chai, Y. Wang, L. Lei, and X. Zhang. 2022a. “Ace R-CNN: An Attention Complementary and Edge Detection-Based Instance Segmentation Algorithm for Individual Tree Species Identification Using UAV RGB Images and Lidar Data.” Remote Sensing 14 (13): 3035. https://doi.org/10.3390/rs14133035.

- Li, X., J. Tian, X. Li, L. Wang, H. Gong, C. Shi, S. Nie, et al. 2022b. “Developing a sub-Meter Phenological Spectral Feature for Mapping Poplars and Willows in Urban Environment.” ISPRS Journal of Photogrammetry and Remote Sensing 193: 77–89. https://doi.org/10.1016/j.isprsjprs.2022.09.002.

- Madec, S., X. Jin, H. Lu, B. De Solan, S. Liu, F. Duyme, E. Heritier, and F. Baret. 2019. “Ear Density Estimation from High Resolution RGB Imagery Using Deep Learning Technique.” Agricultural and Forest Meteorology 264: 225–234. https://doi.org/10.1016/j.agrformet.2018.10.013.

- Miraki, M., H. Sohrabi, P. Fatehi, and M. Kneubuehler. 2021. “Individual Tree Crown Delineation from High-Resolution UAV Images in Broadleaf Forest.” Ecological Informatics 61: 101207. https://doi.org/10.1016/j.ecoinf.2020.101207.

- Mo, J., Y. Lan, D. Yang, F. Wen, H. Qiu, X. Chen, and X. Deng. 2021. “Deep Learning-Based Instance Segmentation Method of Litchi Canopy from UAV-Acquired Images.” Remote Sensing 13 (19): 3919. https://doi.org/10.3390/rs13193919.

- Mohan, M., C. Silva, C. Klauberg, P. Jat, G. Catts, A. Cardil, A. Hudak, and M. Dia. 2017. “Individual Tree Detection from Unmanned Aerial Vehicle (UAV) Derived Canopy Height Model in an Open Canopy Mixed Conifer Forest.” Forests 8 (9): 340. https://doi.org/10.3390/f8090340.

- Ocer, N. E., G. Kaplan, F. Erdem, D. Kucuk Matci, and U. Avdan. 2020. “Tree Extraction from Multi-Scale UAV Images Using Mask R-CNN with FPN.” Remote Sensing Letters 11 (9): 847–856. https://doi.org/10.1080/2150704X.2020.1784491.

- Panagiotidis, D., A. Abdollahnejad, P. Surový, and V. Chiteculo. 2017. “Determining Tree Height and Crown Diameter from High-Resolution UAV Imagery.” International Journal of Remote Sensing 38 (8–10): 2392–2410. https://doi.org/10.1080/01431161.2016.1264028.

- Pearse, G. D., A. Y. S. Tan, M. S. Watt, M. O. Franz, and J. P. Dash. 2020. “Detecting and Mapping Tree Seedlings in UAV Imagery Using Convolutional Neural Networks and Field-Verified Data.” ISPRS Journal of Photogrammetry and Remote Sensing 168: 156–169. https://doi.org/10.1016/j.isprsjprs.2020.08.005.

- Pleșoianu, A., M. Stupariu, I. Șandric, I. Pătru-Stupariu, and L. Drăguț. 2020. “Individual Tree-Crown Detection and Species Classification in Very High-Resolution Remote Sensing Imagery Using a Deep Learning Ensemble Model.” Remote Sensing 12 (15): 2426. https://doi.org/10.3390/rs12152426.

- Pouliot, D. A., D. J. King, F. W. Bell, and D. G. Pitt. 2002. “Automated Tree Crown Detection and Delineation in High-Resolution Digital Camera Imagery of Coniferous Forest Regeneration.” Remote Sensing of Environment 82 (2): 322–334. https://doi.org/10.1016/S0034-4257(02)00050-0.

- Qin, H., W. Zhou, Y. Yao, and W. Wang. 2022. “Individual Tree Segmentation and Tree Species Classification in Subtropical Broadleaf Forests Using UAV-Based LIDAR, Hyperspectral, and Ultrahigh-Resolution RGB Data.” Remote Sensing of Environment 280: 113143. https://doi.org/10.1016/j.rse.2022.113143.

- Rezatofighi, H., N. Tsoi, J. Gwak, A. Sadeghian, I. Reid, and S. Savarese. 2019. “Generalized Intersection Over Union: A Metric and a Loss for Bounding box Regression.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 658–666. Long Beach, CA, USA.

- Safonova, A., E. Guirado, Y. Maglinets, D. Alcaraz-Segura, and S. Tabik. 2021. “Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN.” Sensors 21 (5): 1617. https://doi.org/10.3390/s21051617.

- Saltiel, T. M., P. E. Dennison, M. J. Campbell, T. R. Thompson, and K. R. Hambrecht. 2022. “Tradeoffs Between UAS Spatial Resolution and Accuracy for Deep Learning Semantic Segmentation Applied to Wetland Vegetation Species Mapping.” Remote Sensing 14 (11): 2703. https://doi.org/10.3390/rs14112703.

- Schiefer, F., T. Kattenborn, A. Frick, J. Frey, P. Schall, B. Koch, and S. Schmidtlein. 2020. “Mapping Forest Tree Species in High Resolution UAV-Based RGB-Imagery by Means of Convolutional Neural Networks.” ISPRS Journal of Photogrammetry and Remote Sensing 170: 205–215. https://doi.org/10.1016/j.isprsjprs.2020.10.015.

- Sokolova, M., N. Japkowicz, and S. Szpakowicz. 2006. “Beyond Accuracy, F-Score and ROC: A Family of Discriminant Measures for Performance Evaluation.” In Proceedings of the 19th Australian Joint Conference on Artificial Intelligence, 1015–1021. Hobart, Australia: Springer Berlin Heidelberg. https://doi.org/10.1007/11941439_114.

- Sun, Y., Z. Li, H. He, L. Guo, X. Zhang, and Q. Xin. 2022. “Counting Trees in a Subtropical Mega City Using the Instance Segmentation Method.” International Journal of Applied Earth Observation and Geoinformation 106: 102662. https://doi.org/10.1016/j.jag.2021.102662.

- Swayze, N. C., W. T. Tinkham, M. B. Creasy, J. C. Vogeler, C. M. Hoffman, and A. T. Hudak. 2022. “Influence of UAS Flight Altitude and Speed on Aboveground Biomass Prediction.” Remote Sensing 14 (9): 1989. https://doi.org/10.3390/rs14091989.

- Swayze, N. C., W. T. Tinkham, J. C. Vogeler, and A. T. Hudak. 2021. “Influence of Flight Parameters on UAS-Based Monitoring of Tree Height, Diameter, and Density.” Remote Sensing of Environment 263: 112540. https://doi.org/10.1016/j.rse.2021.112540.

- Tang, Z., M. Li, and X. Wang. 2020. “Mapping tea Plantations from VHR Images Using OBIA and Convolutional Neural Networks.” Remote Sensing 12 (18): 2935. https://doi.org/10.3390/rs12182935.

- Tu, Y., K. Johansen, S. Phinn, and A. Robson. 2019. “Measuring Canopy Structure and Condition Using Multi-Spectral UAS Imagery in a Horticultural Environment.” Remote Sensing 11 (3): 269. https://doi.org/10.3390/rs11030269.

- Tu, Y., S. Phinn, K. Johansen, A. Robson, and D. Wu. 2020. “Optimising Drone Flight Planning for Measuring Horticultural Tree Crop Structure.” ISPRS Journal of Photogrammetry and Remote Sensing 160: 83–96. https://doi.org/10.1016/j.isprsjprs.2019.12.006.

- Veras, H. F. P., M. P. Ferreira, E. M. Da Cunha Neto, E. O. Figueiredo, A. P. D. Corte, and C. R. Sanquetta. 2022. “Fusing Multi-Season UAS Images with Convolutional Neural Networks to map Tree Species in Amazonian Forests.” Ecological Informatics 71: 101815. https://doi.org/10.1016/j.ecoinf.2022.101815.

- Weinstein, B. G., S. Marconi, S. Bohlman, A. Zare, and E. White. 2019. “Individual Tree-Crown Detection in RGB Imagery Using Semi-Supervised Deep Learning Neural Networks.” Remote Sensing 11 (11): 1309. https://doi.org/10.3390/rs11111309.

- Weinstein, B. G., S. Marconi, S. A. Bohlman, A. Zare, and E. P. White. 2020. “Cross-site Learning in Deep Learning RGB Tree Crown Detection.” Ecological Informatics 56: 101061. https://doi.org/10.1016/j.ecoinf.2020.101061.

- Wu, D., K. Johansen, S. Phinn, A. Robson, and Y. Tu. 2020a. “Inter-comparison of Remote Sensing Platforms for Height Estimation of Mango and Avocado Tree Crowns.” International Journal of Applied Earth Observation and Geoinformation 89: 102091. https://doi.org/10.1016/j.jag.2020.102091.

- Wu, G., and Y. Li. 2021. “Non-maximum Suppression for Object Detection Based on the Chaotic Whale Optimization Algorithm.” Journal of Visual Communication and Image Representation 74: 102985. https://doi.org/10.1016/j.jvcir.2020.102985.

- Wu, X., D. Sahoo, and S. C. H. Hoi. 2020b. “Recent Advances in Deep Learning for Object Detection.” Neurocomputing 396: 39–64. https://doi.org/10.1016/j.neucom.2020.01.085.

- Xi, Z., C. Hopkinson, S. B. Rood, and D. R. Peddle. 2020. “See the Forest and the Trees: Effective Machine and Deep Learning Algorithms for Wood Filtering and Tree Species Classification from Terrestrial Laser Scanning.” ISPRS Journal of Photogrammetry and Remote Sensing 168: 1–16. https://doi.org/10.1016/j.isprsjprs.2020.08.001.

- Yang, M., Y. Mou, S. Liu, Y. Meng, Z. Liu, P. Li, W. Xiang, X. Zhou, and C. Peng. 2022. “Detecting and Mapping Tree Crowns Based on Convolutional Neural Network and Google Earth Images.” International Journal of Applied Earth Observation and Geoinformation 108: 102764. https://doi.org/10.1016/j.jag.2022.102764.

- Yin, D., and L. Wang. 2019. “Individual Mangrove Tree Measurement Using UAV-Based LIDAR Data: Possibilities and Challenges.” Remote Sensing of Environment 223: 34–49. https://doi.org/10.1016/j.rse.2018.12.034.

- Zhang, L., H. Lin, and F. Wang. 2022. “Individual Tree Detection Based on High-Resolution RGB Images for Urban Forestry Applications.” IEEE Access 10: 46589–46598. https://doi.org/10.1109/ACCESS.2022.3171585.

- Zhao, H., F. Liu, H. Zhang, and Z. Liang. 2019. “Convolutional Neural Network Based Heterogeneous Transfer Learning for Remote-Sensing Scene Classification.” International Journal of Remote Sensing 40 (22): 8506–8527. https://doi.org/10.1080/01431161.2019.1615652.