Abstract

The history of computing cannot be discussed without acknowledging the engineering innovations that paved its way. Today, we carry more computing power in our pockets than it took to put a man on the moon. Across the world, ‘smart fever’ is on the rise, revealing our strong reliance on these smart devices. Designing such powerful devices would have been impossible, however, without an understanding of electricity and how it could be utilised to provide both power and control. This article highlights some key engineering innovations and the brilliant minds behind them. We trace the transformative journey of these concepts through the timeline of technology, from their humble beginnings as mere calculation machines to the powerful smart devices we rely on today.

Introduction

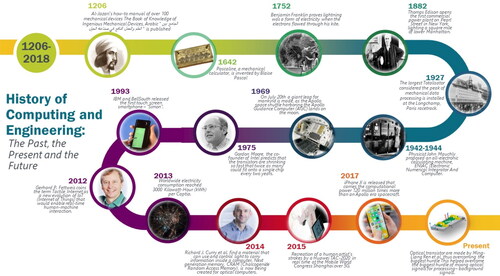

This article provides an overview of the history of computing and engineering, drawing on both primary and secondary sources. It emphasises the significance of historical engineering breakthroughs and their ongoing impact on present and future technologies. This article is divided into five main sections: an exploration of a water clock in Kuwait’s Souq Sharq Mall; the discovery of electricity; the difficult task of defining a computer; the rise of ‘smart fever’; and the spread of embedded systems and their prospects. Each section highlights how advances in engineering built the foundation for advances in the field of computing. A timeline of key events in the history of computing is presented in Figure 1.

Water clock: the elephant in the room

If you visit Sharq Mall in Kuwait, the remarkable design of the water clock, showcased in Figure 2, is guaranteed to capture your attention. This clock utilises the flow of water to measure time. Designed by Professor Bernard Gitton in the late 1970s, the water clock includes a swinging pendulum and a scoop connected to a vessel that enables water flow at regular intervals. Its design was influenced by the inventions of Al-Jazari (Badi’al-Zaman Abū al-'Izz ibn Ismā’īl ibn al-Razāz Al-Jazari, 1136–1206).Footnote1,Footnote2 Al-Jazari worked as the chief engineer at Artuklu Palace, during the Artuqid dynasty that ruled over parts of Turkey, Syria, and Iraq in the 11th and 12th centuries.Footnote3,Footnote4 Throughout his tenure, Al-Jazari was well known as a polymath and inventor within the Muslim world.

FIGURE 2 Professor Bernard Gitton’s water clock at Kuwait’s Sharq Mall. Source: Photograph by Issam Damaj, a co-author.

Al-Jazari is best known for writing ‘The Book of Knowledge of Ingenious Mechanical Devices’ in 1206. This manual details the construction of 100 mechanical devices, 80 of which are trick or novelty vessels of various kinds. The book also describes the incomparable elephant clock. The clock consists of a water-filled bucket inside the elephant, a ball that can be dropped into the mouth of a moving serpent, a system of strings, and an elephant driver that hits a drum to indicate a half or full hour; all fully automated.Footnote6 Figure 3Footnote5 is an illustration of the elephant clock by Al-Jazari himself. It may seem a novelty today, but this, among many of his devices, revolutionised mechanical engineering.

FIGURE 3 Sketch of the elephant water clock by Al-Jazari, from his 1206 work The Book of Knowledge of Ingenious Mechanical Devices. Image accessed through Wikipedia, distributed under a CC-BY 2.0 license.

Al-Jazari used crankshafts, cogwheels, delivery pipes, one-way valves in pumps, an escapement mechanism, segmental gears, and a double-action suction pump with valves and reciprocating piston motion.Footnote7 He constructed a variety of automated mechanisms such as:

moving peacocks powered by hydropower;

the first automatic gates and doors;

a robotic musical band; and,

a castle clock.

The robotic band was one of the first programmable automata; the drum machine’s rhythms and beats could be preset using pegs.Footnote8,Footnote9 His castle clock is the first analogue computer. In the process, he essentially built the first automata and robots while inventing feedback control and closed-loop systems, various types of automatic switching to open and close valves or change the direction of flow, and precursors of fail-safe devices.Footnote10

Donald R. Hill, an English historian specialising in Islamic mechanics, and the English translator of Al-Jazari’s original book, wrote in ‘Studies in Medieval Islamic Technology’:

It is impossible to over-emphasise the importance of Al-Jazari’s work in the history of engineering. Until modern times there is no other document from any cultural area that provides a comparable wealth of instructions for the design, manufacture and assembly of machines … Al-Jazari did not only assimilate the techniques of his non-Arab and Arab predecessors, he was also creative. He added several mechanical and hydraulic devices. The impact of these inventions can be seen in the later designing of steam engines and internal combustion engines, paving the way for automatic control, advances in analogue computers, and other modern machinery. The impact of Al-Jazari’s inventions is still felt in modern contemporary mechanical engineering…Footnote11

Consider what these exceptionally skilled and innovative individuals might have accomplished had they lived in an era in which electricity was understood and readily available to power their creations.

Electricity: the feather and amber

A mysterious force has been observed since ancient times. It is revealed in flashes of lightning; the shock of the electric catfish that inhabited the River Nile; and the ability of amber, Elektron in ancient Greece, to attract flying feathers when rubbed with fur. We now understand that matter is composed of a variety of tiny subatomic parts, some of which are electrons. These negatively charged particles travel easily through metals, and this flow of electrons is what we call electricity.

Benjamin Franklin (1706–1790, America) proved lightning was a form of electricity when electrons flowed through his kite in 1752.Footnote12 The electric catfish of the Nile River can discharge stored electrons to inflict a deadly shock.Footnote13 Electrons gather on the surface of amber when it is rubbed, thus attracting feathers.

In each of the above examples, static electricity requires an appropriate medium to activate its attraction (Charles-Augustin de Coulomb, 1736–1806, France) or discharge. As electrons flow, they are not consumed; they simply run through circuits. They provide energy, exert force, and do work.

Electricity must be generated; it does not simply appear from nowhere. Chemical reactions in batteries (Luigi Galvani, 1737–1798, and Alessandro Volta, 1745–1827, Italy) and rotating magnets near copper windings (Michael Faraday, 1791–1867, United Kingdom (UK)) are two methods that can produce electricity. Chemicals are costly and rotations require effort. Rotations can be produced using hand cranks, steam engines, windmills, falling water, and turbines driven by nuclear fission, among other means.

Great strides in understanding and application were to follow, firstly towards direct current (DC):

André-Marie Ampère (1775–1836, France) studied, clarified and defined the relationship between electricity and magnetism

Georg Simon Ohm (1789–1854, Germany) formulated the relationship between the flow of electrons and the resistance they face in flow

Sir Humphry Davy (1778–1829, UK) invented the first incandescent light as well as a safety lamp used to prevent mine explosions. Joseph Swan (1828–1914, UK) developed an early version of the electric light bulb. On February 3rd, 1879, Mosley Street, Newcastle upon Tyne, England, became the first public road in the world to be illuminated by his incandescent light bulb. In September 1881, the town of Godalming in England was the first to implement a system for lighting both public streets and some private homes using electricity generated from a nearby mill

Thomas Alva Edison (1847–1931, USA) created a long-lasting electrical lamp

In 1882, Edison’s company established the first commercial coal-fired power plants: the first at London’s Holborn Viaduct and a second at New York’s Pearl Street. The electricity generated in Edison’s power plants was Direct Current (DC), i.e., the electrons were flowing in one direction only.Footnote14

The developments did not end there. In July 1888, George Westinghouse (1846–1914, USA) adopted Nikola Tesla’s (1856–1943, Serbia, USA) high-voltage alternating current (AC) motor and transformer. In AC electricity, electrons change their direction of flow many times per second, typically at a frequency of 50 or 60 hertz. Westinghouse built a hydroelectric power plant at Niagara Falls that was able to generate, transmit, and distribute electricity, initially over tens, then hundreds, of miles.

The quest for greater knowledge of electricity continued; Tesla produced artificial lightning that discharged millions of volts into the sky. Thunder from the released energy was heard 15 miles away.

In 2013, average world consumption was 3000 kilowatt-hours (kWh) per capita,Footnote15 sufficient in total to power 67 billion 40-watt lightbulbs. It is doubtful that Franklin or Tesla anticipated this.
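The lightbulb comparison above can be checked with a short calculation. The following is a minimal sketch, assuming the bulbs burn continuously for a full year and assuming a world population of roughly 7.2 billion in 2013; the population figure and the function name `bulbs_powered` are illustrative assumptions, not values taken from the cited source.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def bulbs_powered(kwh_per_capita, population, bulb_watts=40.0):
    """Bulbs that could stay lit all year on the given annual consumption."""
    total_kwh = kwh_per_capita * population
    # Spread the annual energy evenly over the year to get average power.
    average_power_watts = total_kwh * 1000.0 / HOURS_PER_YEAR
    return average_power_watts / bulb_watts


# Assumption: rough 2013 world population, not a figure from the article.
ASSUMED_POPULATION = 7.2e9

bulbs = bulbs_powered(3000, ASSUMED_POPULATION)
print(f"{bulbs / 1e9:.1f} billion 40-watt bulbs")
```

Under these assumptions the result is in the tens of billions, the same order of magnitude as the figure quoted above; the exact count depends on the population and per-capita values used.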

Electricity is where the mystery continues; it is a renewed call for the marvellous interplay between science and engineering.

Computers: an engineering and mathematics blend

“How do computers work?” This question can be a true brainteaser. Computers comprise heterogeneous conceptual and physical abstraction layers, all working together through well-defined connections. The physical layer of computers is referred to as computer hardware.

Ancient computer hardware was mechanical, built from components such as gears and levers. Modern computersFootnotea are electronic and they apply the rules of solid-state physics to calculate, store, and communicate. Electricity flows through the various electronic components; the components operate according to a predefined sequence and communicate with one another, and the user enjoys a quick response.

Building on the historic Hindu-Arabic number system, Muhammad ibn Musa Al-Khwarizmi (Khwarizmi, Iraq, 780–850), known as the Father of Algebra, Leonardo Fibonacci (Italy, 1170–1250), George Boole (UK, 1815–1864), and many other great mathematicians founded the conceptual layer of computing.

The complicated rules, procedures, and real-life applications of mathematics invited automation. This automation could swiftly perform tasks with less mental effort and without the risk of human error. Simply put, applied mathematics led to the development of machines that can compute.

Modern computers, just like their earlier mechanical counterparts, include:

units that enable the provision of information and display the result (input and output),

a computing unit (processor), and

places where information can be internally stored (memory).

For instance, the mechanical calculator shown in Figure 4,Footnote16 used rotating dials and wheels as input and output units, gears and levers to compute, and wheels to memorise.Footnote17 This was created by Blaise Pascal (France, 1642).

FIGURE 4 The Pascaline, a 1652 mechanical calculator by Blaise Pascal, is housed at the Conservatoire National des Arts et Métiers (CNAM). Image accessed through Wikipedia, distributed under a CC BY-SA 3.0 FR/CC BY-SA 2.0 FR license. Photo by Rama.

Pascal opted for the decimal number system, commonly known as base ten, for his mechanical processor. In this system, a digit is one of the ten unique symbols, ranging from 0 through 9, used to represent quantities. A numeral, then, is a symbol or group of symbols that represents a number. Pascal creatively implemented this concept on a sizable wheel, around whose rim these numerals were strategically positioned to indicate values based on their sequence.

A number can be represented in a system with a different base, e.g., base two could be used instead of base ten. Base two is also known as the binary system; in this case, digits are assigned values of 0 or 1 only. While the decimal, base ten, system is most common in our daily lives, theoretically any base could be used.
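The idea that the same quantity can be written in any base can be illustrated with a short sketch. The function name `to_base` and the digit alphabet below are illustrative choices, not part of the original discussion; the method is the standard repeated division by the base.

```python
# Digit symbols for bases up to 36 (0-9, then A-Z).
DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def to_base(value, base):
    """Return the numeral for a non-negative integer in the given base."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 36")
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return "0"
    digits = []
    while value > 0:
        # The remainder is the next digit, least significant first.
        value, remainder = divmod(value, base)
        digits.append(DIGITS[remainder])
    return "".join(reversed(digits))


print(to_base(13, 10))  # 13
print(to_base(13, 2))   # 1101
print(to_base(13, 3))   # 111
```

The quantity thirteen is unchanged throughout; only the numeral that represents it differs from base to base.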

It is the use of the binary system in electronics that has resulted in modern computers being classified as digital systems. In digital electronics, 1 is usually allocated to the higher voltage level and 0 to the lower. The specific voltage that each level represents will vary with the type of digital device and its operating range. Other ways to utilise the two levels are ON/OFF switches, pushbuttons, and charged/discharged capacitors.

The relationship between electricity and mathematics is a key to understanding the deepest processing of a computer, namely executing operations. Operations in a processor range from simple addition and multiplication to the most complicated equations and logic. A simple addition of two single binary bits has four possible scenarios (see Table 1). The addition can result in a maximum value of 2, which is represented in two bits. With basic knowledge of electronics, one can propose a working switching-based circuit that can implement the four scenarios in a lookup table style; this creates a computational unit called a Half-Adder. Larger adders and various functional units can be created by expanding upon this method. These hardware units, which perform individual operations, are the first step in producing automated multi-step procedures.

Table 1 SINGLE DIGIT ADDITION AND THE CORRESPONDING EQUIVALENT DECIMAL VALUE.
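The four scenarios of single-bit addition, and the way a Half-Adder can be expanded into a larger adder, can be sketched in software. This is a minimal illustration rather than a circuit description; the names `HALF_ADDER_TABLE`, `half_adder`, `half_adder_gates`, and `full_adder` are chosen here for illustration.

```python
# Lookup table for single-bit addition: (a, b) -> (carry, sum).
HALF_ADDER_TABLE = {
    (0, 0): (0, 0),
    (0, 1): (0, 1),
    (1, 0): (0, 1),
    (1, 1): (1, 0),
}


def half_adder(a, b):
    """Add two bits by looking up the result, as in a switching table."""
    return HALF_ADDER_TABLE[(a, b)]


def half_adder_gates(a, b):
    """The same behaviour expressed with logic gates: AND for carry, XOR for sum."""
    return a & b, a ^ b


def full_adder(a, b, carry_in):
    """Chain two half-adders to add three bits, the building block of larger adders."""
    carry1, partial = half_adder(a, b)
    carry2, total = half_adder(partial, carry_in)
    return carry1 | carry2, total


for a in (0, 1):
    for b in (0, 1):
        carry, total = half_adder(a, b)
        print(f"{a} + {b} = {carry}{total} (binary) = {2 * carry + total} (decimal)")
```

The loop prints the four cases, with the two-bit result alongside its decimal value; the largest result is binary 10, i.e. decimal 2.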

A modern computer also contains a sequence of instructions to be executed. This sequence of instructions is known as an algorithm.Footnoteb These instructions would typically include: receiving inputs; operating upon these inputs; producing results; and communicating the results to the user (output). All of this is carried out using electronic circuits.

The algorithm must be written in a specific language that is simple to translate into the ones and zeros recognised by computers; here, the written algorithm is called a program. Programs are known as the software layer of computers.

Today, the computer hardware layer refers to physical components, such as the monitor, mouse, keyboard, hard disk drive, graphics cards, sound cards, memory, motherboard, and chips. The computer software layers are composed of systems and applications software. Systems software comprises the operating system and all the utilities that enable the development of computer programs. Applications software includes programs such as word processors, spreadsheets, and internet browsers. Both the hardware and software layers are grounded in mathematical theories and applications.

Great advancements in processing power and speed have been made over the decades. While mechanical computers took a few seconds to compute a single simple addition, ENIAC, a 30-ton computer from the 1940s, could add 5,000 numbers in one second and, now, an Intel i7 processor can add hundreds of millions of numbers a second.

In 1983, Steve Jobs (USA, 1955–2011) made what turned out to be uncannily accurate predictions about today’s state-of-the-art technologies. He envisaged portable computers, networks, human-computer interaction, and voice recognition.Footnote19 In 1987, Bill Gates (USA, 1955–Present) predicted the advent of flat panel displays, various forms of interactive entertainment, sophisticated voice recognition software, and access to vast amounts of information with just a touch.Footnote20 At present, we enjoy being connected without wires, we swipe our phones and tablets, our voice is recognised, and we store our files in the cloud. Given the current research focus, the future seems to be even odder, with body implants, holograms, autopilots, and more on the horizon. Computers are becoming ever more intelligent and ubiquitous.

The smart world fever

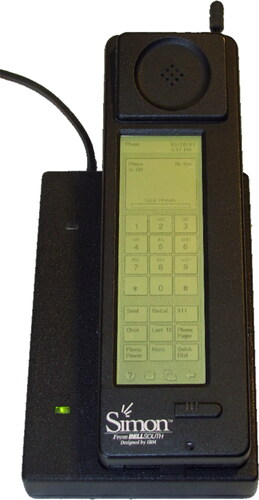

Since the turn of the 21st century, we have witnessed an unprecedented demand to upgrade our daily-life tools and equipment through Internet connectivity, automated features, and smart interactions, to name a few. In 1993, IBM and BellSouth released the first touchscreen smartphone, ‘Simon’ (see Figure 5). Now is the epoch of smart TVs, houses, buildings, cars, and cities. Smart world fever is on the rise. We are in the era of pervasive computing, but the peak of the Internet of Things (IoT) lies ahead of us.

FIGURE 5 The IBM Simon Personal Communicator and its charging base. This device is recognized as one of the first smartphones, integrating mobile phone and PDA functions. Photo taken on June 30, 2012, by Bcos47 and released into the public domain. The image was originally published on October 15, 2009, and accessed through Wikipedia.

The quest to automate our homes and places of work is not a recent endeavour. All eras have aimed to reduce labour requirements, save energy, minimise materials, improve quality of life, increase security, and enhance safety.

Ancient Greek and Arab civilizations contributed float-valve regulators, water clocks, oil lamps, water tanks, water dispensers, and other innovations. During the mechanical revolution, advances like locks, switches, pumps, and shading devices emerged. With the advent of the electrical revolution, technologies such as relays, switches, and programmable controllers were introduced.

More recently, embedded systems such as microcontrollers and programmable devices have enabled the automation of an almost infinite list of gadgets, equipment, vehicles, and tools.

In the mid-nineties, prototyping computer interfacing products required the fabrication of almost all interfacing, port expansions, bus cards, and their software interfaces. While the electronic designs were available, they were not very accessible and off-the-shelf purchases were limited.

Today, a wide range of plug-and-play computer interfacing kits are available, such as the Arduino Project, Phidgets, Raspberry Pi, and National Instruments (NI). These kits are accompanied by a plethora of user-friendly, graphical, and rapid-prototyping software tools, including NI LabVIEW,Footnote21 which support the use of these interfacing kits.

Automation is becoming a core facet of science, technology, engineering, and mathematics (STEM) education. Many automation equipment providers offer academic suites that are tuned to the interests of younger generations. LEGO Mindstorms and similar packages support learning about robotics, machines, and mechanisms. It is surprisingly interesting to use a LabVIEW-based integrated development environment to program the LEGO EV3 robotics kit.

Reviewing the projects produced by young engineering professionals from around the world, one can sense a keen interest in smart solutionsFootnote22,Footnote23,Footnote24:

The HOBOT project takes sensing and intervention assignments to the next level of online user customisation for your home or workplace to control heating, lighting, etc.

MTS and NFC Wallet have developed a universal card machine that can read secure smart bracelets or cards. Its developers hope to make the use of credit cards and physical keys for monetary transactions and access to secure locations redundant.

The RECON project is a long-distance delivery and monitoring drone controlled by a smart device over the Internet.

ChildPOPs is a wearable all-in-one healthcare monitoring system for children.

Smart Cart and SysMART connect supermarket chains with customers to provide easy and safe shopping experiences through traffic monitoring, parking and product availability, indoor navigation and billing, and product track and trace.

AMIE is an IoT system designed to assist blind and visually impaired people to navigate unfamiliar indoor environments safely and effectively.

DEEP is a submerged environment monitoring system which incorporates live streaming, analysis, and remote sea navigation.

AgriSys is a smart Controlled-Environment Agriculture System based on fuzzy inference; it deals with desert-specific challenges, such as dust, infertile soil, wind, low humidity, and extreme variations in temperatures.

Without a doubt, the interest in smart solutions is yet to reach its apogee. Universal translators, tablet computers, tricorders, and communicator badges are all Star Trek gadgets that now exist. The future is revealing more Starfleet-like innovations: transporters, replicators, emergency medical holograms, and air touch technologies. Perhaps we will even be able to fly on a ‘Back to the Future’ hoverboard one day soon. Live long and prosper!

Ultimately, technology never stands still. Do not limit your expectations, for if we had, humans would never have put a man on the moon.

History of embedded system design: from the very first to the present

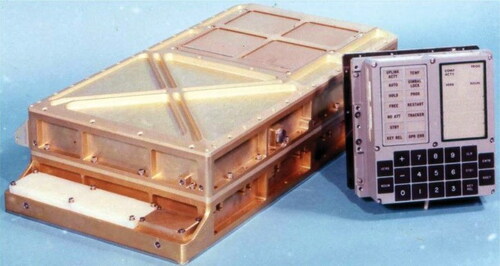

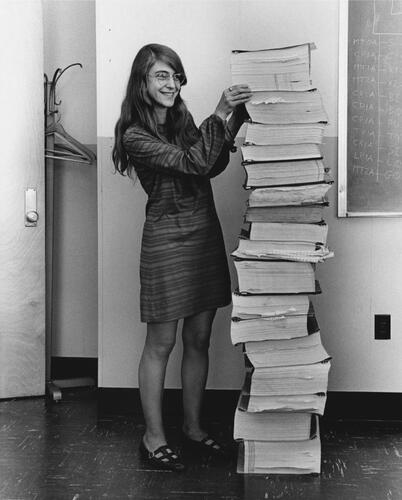

One giant leap for mankind was made on July 20, 1969, with the landing of the Apollo Lunar Module harbouring an Apollo Guidance Computer (AGC) (see Figures 6 and 7).Footnote25 This 70-lb box of integrated circuitry, complete with a control panel, performed real-time guidance and control and ensured a safe descent to the moon.

FIGURE 6 The input module (right) is displayed with the main casing of the AGC (left), an instrumental technology used during the Apollo missions. The image was originally uploaded by Grabert to German Wikipedia on 26 July 2005 and is in the public domain as it was created by NASA.

FIGURE 7 Margaret Hamilton standing beside the stacks of software listings she and her MIT team developed for the Apollo Project. Hamilton was the Director of the Software Engineering Division at the MIT Instrumentation Laboratory, which was responsible for creating the onboard flight software for the Apollo spacecraft. This image is in the public domain, published in the United States without a copyright notice, and digitally restored by Adam Cuerden. The original version of this photograph dates back to 1 January 1969.

Eldon Hall,Footnote26 lead designer of the AGC, noted that if they ‘had known what they learned later, or had a complete set of [design] specifications been available, they probably would have [given up on] the technology of the early sixties.’ Hall’s words shed light on the difficulties and challenges of designing embedded systems with optimal design metrics.

The AGC was composed of two trays, each containing 24 modules. Each module comprised two groups of 60 flat packs and 72-pin connectors. Tray A held the logic circuits, interfaces, and power supply, while tray B included the memory, memory electronics, and analogue alarm devices.Footnote27 Further, there were 36k words of Read-Only Memory (ROM) and 2048 words of Random Access Memory (RAM), both utilising magnetic core technology. The RAM part utilised 15-bit words with one parity bit. Initially, there were two versions of the AGC, with the original Block 1 variant having even less memory.
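To make the word format above concrete, the sketch below computes a parity bit for a 15-bit word and the size of the RAM in modern units. It is an illustration only: the use of odd parity is an assumption made here, and the names `parity_bit` and `check_word` are hypothetical helpers, not part of any AGC documentation.

```python
WORD_DATA_BITS = 15  # data bits per word, as described above
RAM_WORDS = 2048     # words of RAM, as described above


def parity_bit(word, odd=True):
    """Parity bit for a 15-bit word (odd parity is an assumption here)."""
    if not 0 <= word < (1 << WORD_DATA_BITS):
        raise ValueError("word must fit in 15 bits")
    ones = bin(word).count("1")
    # With odd parity, data bits plus parity bit hold an odd number of ones.
    return (ones + 1) % 2 if odd else ones % 2


def check_word(word, parity, odd=True):
    """Return True when the stored parity bit matches the data bits."""
    return parity_bit(word, odd) == parity


stored = 0b000000000000101
stored_parity = parity_bit(stored)
corrupted = stored ^ 0b1  # a single flipped bit

print(check_word(stored, stored_parity))     # True
print(check_word(corrupted, stored_parity))  # False

ram_bits = RAM_WORDS * (WORD_DATA_BITS + 1)
print(ram_bits // 8, "bytes of RAM including parity bits")  # 4096
```

A single parity bit detects any one flipped bit in a word but cannot say which bit changed, which is why it serves as an alarm rather than a correction mechanism.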

The AGC utilised a 2.048 MHz clock as its timing reference. An internal clock operating at 1.024 MHz was generated by dividing the reference clock. A similar division produced a 512 kHz synchronization signal for external systems. As a comparison, the 1989 Nintendo Game Boy had a clock speed of 4.19 MHz.Footnote28 The average instruction execution times ranged from around 12 to 80 µsec. For example, the Add instruction execution time was 23.4 µsec.Footnote29 All this was packed into a volume of 24 by 12.5 by 6 inches, which was a tremendous design feat in the 1960s. The AGC was the first computer to use Integrated Circuits (ICs).Footnote30
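The clock figures above follow from repeated halving of the reference clock, and the Add time translates directly into a throughput figure. The sketch below works through that arithmetic; the helper name `divide_clock` and the assumption that each division is a simple divide-by-two stage are illustrative.

```python
REFERENCE_CLOCK_HZ = 2_048_000  # 2.048 MHz timing reference
ADD_TIME_SECONDS = 23.4e-6      # execution time of the Add instruction


def divide_clock(frequency_hz, stages):
    """Frequency after a number of divide-by-two stages (assumed structure)."""
    return frequency_hz / (2 ** stages)


internal_clock = divide_clock(REFERENCE_CLOCK_HZ, 1)  # 1.024 MHz
sync_signal = divide_clock(REFERENCE_CLOCK_HZ, 2)     # 512 kHz

adds_per_second = 1 / ADD_TIME_SECONDS
clock_periods_per_add = ADD_TIME_SECONDS * internal_clock

print(f"internal clock: {internal_clock / 1e6:.3f} MHz")
print(f"sync signal:    {sync_signal / 1e3:.0f} kHz")
print(f"additions per second: about {adds_per_second:,.0f}")
print(f"internal clock periods per Add: about {clock_periods_per_add:.0f}")
```

At 23.4 µsec per Add, the machine manages a little over forty thousand additions per second, with each addition spanning roughly two dozen periods of the internal clock.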

To put things into perspective, let’s consider the performance of Apple’s iPhone X, released in 2017 (see Figure 8). This device features a hexa-core CPU with two high-performance Monsoon cores clocked at 2.39 GHz and four energy-efficient Mistral cores. It also includes dedicated neural network hardware called a ‘Neural Engine’ by Apple. This neural network hardware can perform up to 600 billion operations per second and is used for security and other machine learning tasks.Footnote31 The most recent iPhone, the 14 Pro Max, boasts a state-of-the-art 16-core Neural Engine, which can perform nearly 17 trillion operations per second.Footnote32 These iPhones can carry out billions more operations per second than an AGC. In terms of raw throughput, it is therefore reasonable to suggest that each has the capacity to guide millions of Apollo-era spacecraft concurrently.
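The scale of this comparison can be estimated with a rough calculation. The sketch below divides the quoted Neural Engine rates by the AGC addition rate implied by its 23.4 µsec Add time; treating a neural-network operation and an AGC addition as comparable units is a crude simplifying assumption, so the result is an order-of-magnitude indication only.

```python
AGC_ADD_TIME_SECONDS = 23.4e-6  # Add instruction time quoted for the AGC
agc_adds_per_second = 1 / AGC_ADD_TIME_SECONDS

# Peak Neural Engine rates quoted in the text, in operations per second.
NEURAL_ENGINE_OPS = {
    "iPhone X": 600e9,
    "iPhone 14 Pro Max": 17e12,
}


def ratio_to_agc(ops_per_second):
    """How many AGC-rate addition streams fit into the given throughput."""
    return ops_per_second / agc_adds_per_second


for name, ops in NEURAL_ENGINE_OPS.items():
    millions = ratio_to_agc(ops) / 1e6
    print(f"{name}: roughly {millions:.0f} million times the AGC addition rate")
```

Under this simplification the ratios come out in the tens to hundreds of millions, which is consistent with the "millions of spacecraft" comparison made above.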

FIGURE 8 The A11 Bionic system on a chip—or SoC—powers the iPhone X, but it can also be found inside the iPhone 8 and iPhone 8 Plus (Courtesy Apple Inc.).

The AGC had a unit cost of approximately USD 200,000, equivalent to about USD 1.5 million in today’s currency. The Non-Recurring Engineering (NRE) cost, or the one-time expense for designing the system, was estimated to be in the millions by today’s standards. The time to prototype was around 5 years and the time to market was 8 years with limited flexibility. The iPhone X’s NRE cost was in the millions, with a starting price of USD 999 for the base model, which included 64 GB of storage. In response to tight competition, the interval for prototyping and commercialization has been substantially reduced. Flexibility has also emerged as a critical means to minimize NRE expenses; software updates on an iPhone are an example of this flexibility in design.

From portable devices like digital watches to home appliances like washing machines, embedded systems are everywhere! These systems are designed with various metrics in mind, such as size, performance, power, and cost, which often conflict with each other. Though they are challenging to optimise, a ‘successful design is not the achievement of perfection but the minimisation and accommodation of imperfection’, as Henry Petroski, a failure analyst, puts it.Footnote33 Without effective and pragmatic design compromises, significant advancements in the realm of computing would never have taken place.

The future of computing: it is bright and quantum

Gordon Moore, the co-founder of Intel, famously said in 1965 and again in 1975 that transistors were shrinking so fast that twice as many could fit onto a single chip every two years.Footnote34,Footnote35 While this held true for many years, today the rate of doubling is slowing down; according to Intel, the doubling period has increased from 2 to 2.5 years. Making transistors smaller no longer guarantees that they will be cheaper or faster, so the nature of progress is changing. The future of computing is now considered to be in areas beyond raw hardware performance: progress is being made in finding alternate computing technologies and improving computer architectures.
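The effect of a longer doubling period can be seen with a small calculation. The sketch below assumes idealised exponential growth, where the transistor count multiplies by 2 raised to the power of elapsed years divided by the doubling period; the function name `growth_factor` and the ten-year horizon are illustrative choices.

```python
def growth_factor(years, doubling_period_years):
    """Multiplication in transistor count after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)


for period in (2.0, 2.5):
    factor = growth_factor(10, period)
    print(f"doubling every {period} years: x{factor:.0f} in a decade")
```

Stretching the doubling period from 2 to 2.5 years halves the growth achieved over a decade, from 32-fold to 16-fold, which illustrates why even a modest slowdown matters.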

Current computers represent information as bits, where a single bit represents a digital 1 or a 0. Quantum computing, which represents the next significant leap in computing, uses quantum bits, also known as qubits.Footnote36 These qubits can store much more information than just 1 or 0 because they can exist in any superposition of these values.Footnote37 Since quantum computing takes advantage of the strange ability of subatomic particles to exist in more than one state at any time, certain operations can be executed much faster and with lower energy consumption compared to conventional computers.

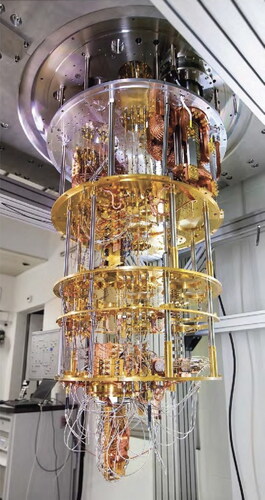

The difference between classical bits and qubits is that we can also prepare qubits in a superposition of 1 and 0 and create nontrivial correlated states of several qubits, also known as entangled states. A traditional digital 0 and 1 can be thought of as the poles of an imaginary sphere, whereas a qubit can take any point on the surface of this sphere. Complex problems, such as breaking cryptographic systems and predicting interactions in chemical reactions, can be solved efficiently using quantum algorithms that exploit entanglement. A quantum algorithm promises significant performance gains compared to its nonquantum counterpart. Figure 9 presents a quantum computer based on superconducting qubits developed by IBM Research in Zurich, Switzerland. The qubits in the device will be cooled to under 1 kelvin using a dilution refrigerator.Footnote38
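The sphere picture of a single qubit can be sketched numerically. The code below uses the standard parametrisation in which a point at polar angle theta and azimuthal angle phi corresponds to the state cos(theta/2)|0> + e^(i phi) sin(theta/2)|1>; the function names are illustrative, and the sketch simulates only one isolated qubit on a classical machine, not entanglement or real quantum hardware.

```python
import cmath
import math
import random


def qubit_state(theta, phi):
    """Amplitudes (alpha, beta) for a point on the sphere at angles theta, phi."""
    alpha = complex(math.cos(theta / 2), 0.0)
    beta = cmath.exp(1j * phi) * math.sin(theta / 2)
    return alpha, beta


def measurement_probabilities(alpha, beta):
    """Probabilities of reading 0 and 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha, beta, rng=random):
    """Simulate one measurement; the outcome is always a plain 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


north_pole = qubit_state(0.0, 0.0)         # behaves like a classical 0
south_pole = qubit_state(math.pi, 0.0)     # behaves like a classical 1
equator = qubit_state(math.pi / 2, 0.0)    # equal superposition of 0 and 1

for name, state in (("north pole", north_pole),
                    ("south pole", south_pole),
                    ("equator", equator)):
    p0, p1 = measurement_probabilities(*state)
    print(f"{name}: P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

The poles reproduce the classical bit values, while every other point on the sphere yields 0 or 1 only with some probability when measured.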

FIGURE 9 A quantum computer based on superconducting qubits. A collaboration between IBM Research in Zurich and IBM Thomas J. Watson Research Center in Yorktown Heights, USA, led to the building of the presented device. Photo by IBM Zurich Lab, dated 28 September 2017. Image distributed under a CC-BY 2.0 license and accessed through Wikipedia.

Quantum computing could solve problems that are difficult for classical computers to handle, and in doing so revolutionise many industries and areas of research. Areas where quantum computing is expected to have a significant impact include cryptography, drug discovery, supply chain optimisation, financial modelling, and Artificial Intelligence (AI). However, it is important to note that quantum computing is still in its infancy with its full potential yet to be realised and understood.Footnote39

The future of computing is no longer limited to a steady reduction in silicon transistor sizes and an increase in speed. The technology itself is accelerating, and the end of the growth of computing may be much more distant than previously anticipated.

The computing industry is enjoying something of a renaissance and is pushing the boundaries of conventional computing as smart machines slowly seep into our daily lives to become a powerful driving force. In terms of advancements in computer architecture, neuromorphic technology is taking the lead in preparing for the next generation of AI. The ultimate goal of neuromorphic computing is to mimic the architecture of the human brain to achieve a human level of computing for human problem-solving while using a thousand times less energy than traditional transistors. Recently, Intel Labs’ new server, Pohoiki Springs, used 768 neuromorphic chips with 100 million neurons to demonstrate the brain capacity of a small mammal. This server was capable of detecting 10 hazardous smells 1,000 times faster than a supercomputer and 10,000 times faster than a traditional computer.Footnote40

We are familiar with DNA as a genetic material that holds the design plans of living things, but it can also be used as storage for data created by living things. Synthetic DNA makes for an attractive storage solution, with its inherent characteristics of stability, writability, and readability. Usually, an algorithm is used to encode the data into adenine (A), thymine (T), cytosine (C), and guanine (G) that make up the DNA molecules, and then the DNA molecules can be synthesised and stored. When access to the data is required, the molecules are sequenced and decoded using the same algorithm.
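The encode and decode cycle described above can be sketched in a few lines. The mapping of two bits to each base (A=00, C=01, G=10, T=11) is an assumption chosen for illustration; practical schemes use more elaborate encodings with error correction, so this is a simplified sketch rather than a real storage codec.

```python
# Assumed mapping for illustration: A=00, C=01, G=10, T=11.
BASES = "ACGT"


def encode(data: bytes) -> str:
    """Encode bytes as a string of bases, two bits per base."""
    strand = []
    for byte in data:
        for shift in (6, 4, 2, 0):  # most significant bit pair first
            strand.append(BASES[(byte >> shift) & 0b11])
    return "".join(strand)


def decode(strand: str) -> bytes:
    """Decode a base string produced by encode() back into bytes."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of four bases")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            # BASES.index raises ValueError for anything other than A, C, G, T.
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


strand = encode(b"Hi")
print(strand)
print(decode(strand))
```

Each byte becomes four bases, and decoding with the same mapping recovers the original data exactly, mirroring the sequencing-and-decoding step described above.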

DNA is extremely information-dense, with 2 bits stored per 0.34 nm; 1 kg of DNA is capable of storing 200,000 petabytes, whereas the same capacity in flash memory would require more than 100 kg of silicon. Were this technology to be successfully exploited, a few tens of kilograms of DNA would be sufficient to meet the entire world’s storage requirements for a century or two.Footnote41,Footnote42

Quantum computing weaves a dream of solving problems that are out of reach of traditional computers, from speeding up the modelling of COVID-19 cases to simulating information flow in a black hole. Combining technologies like quantum computing and neuromorphic technology with DNA storage has the potential to redefine computing as we know it, ushering us into a new era with unimaginable computing power capable of realising applications beyond expectations.

Conclusion

We have traversed a remarkable journey, from the early mechanical engineering innovations of Al-Jazari that laid the foundations of automata and robotics, to the discovery of electricity, and onwards to the advent of computers and the modern electronic era. We have all been infected by the smart fever, and as a result, computers are embedded everywhere from the smartphones in our hands to the largest satellites. Advancements in engineering have pushed the world of computing further and further towards the realm of real-time human-machine interaction. However, we find ourselves standing on the verge of a new era as Moore’s Law approaches its end. We have made significant progress with the existing technology and have even put a man on the moon. We are turning a page into an era where computers and their frustratingly slow electrons will no longer hold us back. We are instead looking forward to processing the information as fast as we receive it; at the speed of light.

Disclosure statement

No potential competing interest was reported by the authors.

Additional information

Notes on contributors

Issam W. Damaj

Issam W. Damaj is a Senior Lecturer and the Programme Director for Computer Science at the Engineering Department, Cardiff School of Technologies, Cardiff Metropolitan University, Cardiff, United Kingdom. He is the Co-Director of the Centre for Engineering Research on Intelligent Sensors and Systems. His research interests include hardware design, smart cities, and engineering education. Correspondence to: Issam W. Damaj.

Palwasha W. Shaikh

Palwasha W. Shaikh is pursuing a PhD in Electrical and Computer Engineering at the University of Ottawa, Canada. She has been a student member of IEEE since 2016. Her research interests include smart connected and autonomous electric vehicles, deep learning, sensor networks, smart grids, and charging infrastructure and schemes for connected and autonomous electric vehicles.

Hussein T. Mouftah

Hussein T. Mouftah is a Distinguished University Professor and Tier 1 Canada Research Chair at the School of Electrical Engineering and Computer Science, University of Ottawa, Canada. His research interests include ad hoc and sensor networks, connected and autonomous electric vehicles, and optical networks. Dr. Mouftah is the recipient of multiple awards and medals of honour.

Notes

1 Britannica, ‘Al-Jazari Arab Inventor,’ https://www.britannica.com/biography/al-Jazari [accessed December 29, 2023]

2 M. Ceccarelli, Distinguished Figures in Mechanism and Machine Science: Their Contributions and Legacies (Springer, 2007).

3 Britannica, ‘Al-Jazari Arab Inventor’.

4 Ceccarelli, II

5 Creative Commons, Attribution 2.0 Generic, https://creativecommons.org [accessed January 29, 2023]

6 P. Hill, The Book of Knowledge of Ingenious Mechanical Devices: (Kitab fi Marifat al-hiyal Al-handasiyya) (Springer Science & Business Media, 2012).

7 J. Challoner and others, 1001 Inventions that Changed the World (Barron’s, 2009)

8 G. Nadarajan, Islamic Automation: A Reading of Al-Jazari’s the Book of Knowledge of Ingenious Mechanical Devices (Cambridge, MA: MIT Press, 2007)

9 D. R. Hill, On the Construction of Water-clocks: Kitab Arshimidas fi amal al-binkamat (London: Turner and Devereux, 1976)

10 E. Savage-Smith, ‘Donald R. Hill, Arabic Water-Clocks (Book Review),’ Technology and Culture, 25 (1984), 325.

11 M. Abattouy, ‘Studies in Medieval Islamic Technology: From Philo to Al-Jazari—From Alexandria to Diyar Bakr, by Donald R. Hill,’ Review of Middle East Studies, 34(2000), 254–255.

12 A. Wolf, History of Science, Technology, and Philosophy in the Eighteenth Century (New York: Macmillan, 1939)

13 C. Wu, ‘Electric fish and the discovery of animal electricity: the mystery of the electric fish motivated research into electricity and was instrumental in the emergence of electrophysiology,’ American Scientist, 72(1984), 598–607.

14 C. Sulzberger, ‘Pearl Street in Miniature: Models of the Electric Generating Station,’ IEEE Power and Energy Magazine, 11(2013), 76–85.

15 World Bank, World Development Indicators, 2013, Electric power consumption (kWh per capita), https://data.worldbank.org/indicator/EG.USE.ELEC.KH.PC [accessed January 29, 2023]

16 Creative Commons Attribution 2.0 Generic License.

17 P. M., ‘Pascal Tercentenary Celebration,’ Nature, 150 (1942), 527.

18 C. Martin, ‘ENIAC: press conference that shook the world,’ IEEE Technology and Society Magazine, 14(1995), 3–10.

19 HuffPost, ‘Steve Jobs’ 1983 Speech Makes Uncanny Predictions About The Future,’ https://www.huffpost.com/entry/steve-jobs-1983-speech n 193581 [accessed January 29, 2023]

20 Smithsonian Magazine, ‘1987 Predictions From Bill Gates: “Siri, Show Me Da Vinci Stuff”,’ https://www.smithsonianmag.com/history/1987-predictions-from-bill-gates-siri-show-me-da-vinci-stuff-500 [accessed January 29, 2023]

21 K. Kodosky, ‘LabVIEW,’ Proceedings of the ACM on Programming Languages, 4(2020), 1–54.

22 I. Damaj and S. Kasbah, ‘Integrated Mobile Solutions in an Internet-of-Things Development Model,’ in Mobile Solutions and Their Usefulness in Everyday Life, ed. by S. Paiva (Springer International Publishing, 2018).

23 F. Hussain, I. Damaj, and I. Abu Doush, ‘ChildPOPS: A Smart Child Pocket Monitoring and Protection System,’ in Smart Technologies for Smart Cities, ed. by M. Banat and S. Paiva (Springer, Cham, 2020).

24 M. Kandil, F. Alattar, R. AlBaghdadi, and I. Damaj, ‘AmIE: An Ambient Intelligent Environment for Blind and Visually Impaired People,’ in Technological Trends in Improved Mobility of the Visually Impaired, ed. by S. Paiva (Springer, Cham, 2020).

25 Creative Commons Attribution 2.0 Generic License.

26 E. Hall, Journey to the Moon: The History of the Apollo Guidance Computer (Reston, Virginia: AIAA, 1996).

27 J. Tomayko, Computers in spaceflight: The NASA experience (NASA, 1988).

28 M. Mattioli, ‘The Apollo Guidance Computer,’ IEEE Micro, 6(2021), 179–182.

29 E. Hall, Journey to the Moon: The History of the Apollo Guidance Computer (Reston, Virginia: AIAA, 1996).

30 D. Mindell, Digital Apollo: Human and Machine in Spaceflight (MIT Press, 2011).

31 Apple, ‘The future is here: iPhone X,’ https://www.apple.com/newsroom/2017/09/the-future-is-here-iphone-x [accessed January 29, 2023]

32 Apple, ‘The future is here: iPhone X,’ Apple debuts iPhone 14 Pro and iPhone 14 Pro Max [accessed January 29, 2023]

33 H. Petroski, Small things considered: Why there is no perfect design (Vintage, 2007).

34 G. Moore, ‘Cramming More Components onto Integrated Circuits,’ Proceedings of the IEEE, 86(1998), 82–85.

35 G. Moore et al., ‘Progress in digital integrated electronics,’ Electron devices meeting, 21(1975), 11–13.

36 B. Schumacher, ‘Quantum Coding,’ Physical Review A, 51(1995), 2738.

37 M. Nielsen and I. Chuang, Quantum Computation and Quantum Information (American Association of Physics Teachers, 2002).

38 Creative Commons Attribution 2.0 Generic License.

39 T. Humble, ‘Consumer Applications of Quantum Computing: A Promising Approach for Secure Computation, Trusted Data Storage, and Efficient Applications,’ IEEE Consumer Electronics Magazine, 6(2018), 8–14.

40 E. P. Frady et al., ‘Neuromorphic Nearest Neighbor Search Using Intel’s Pohoiki Springs,’ in Proceedings of the Neuro-inspired Computational Elements Workshop, New York, United States, March 17–20, 2020.

41 Y. Dong et al., ‘DNA storage: research landscape and future prospects,’ Natl. Sci. Rev., 6(2020), 1092–1107.

42 Potomac Institute for Policy Studies, ‘The Future of DNA Data Storage,’ https://potomacinstitute.org/images/studies/Future of DNA Data Storage.pdf [accessed January 29, 2023]

a John von Neumann (Hungary, USA, 1903 – 1957) is credited with creating the architecture of the modern computing machine.

b A word strongly associated with Al-Khwarizmi, the Father of Algebra.