ABSTRACT

This paper presents visual feedback 3D pose estimation/regulation methodologies in the sampled-data setting imposed by camera frame rates. Vision-based estimation/control problems have been studied by a number of research groups. While most works focus on the limitation of measured outputs, they conduct convergence/performance analysis under the assumption that visual measurements extracted from a camera are continuously available. However, the camera frame rate, including image processing time, often cannot be neglected compared with other computation times. In view of this fact, this paper proposes visual feedback pose estimation/regulation techniques for the situation in which visual measurements are sampled at the camera frame rate. The problem settings are first provided. Then, the pose estimation/regulation methods with sampled visual measurements are proposed. The convergence/performance analysis is conducted by combining a Lyapunov-based approach with an event-triggered control technique. The present analysis scheme provides guidelines for the design of estimation/control gains guaranteeing desired convergence/performance. The effectiveness of the present technique is verified via simulation and an experiment with real hardware.

1. Introduction

Vision sensors have been widely leveraged for situation recognition since they provide rich 2D information projected from 3D relative states [Citation1]. Thanks to this utility, vision sensors are also utilized in the robotics and control engineering communities to develop vision-based autonomous control methods [Citation2–12]. In vision-based control, one of the main issues is how to use 2D visual information to estimate/control the 3D pose (position and orientation) of a target object relative to a camera [Citation5,Citation10–12]. On the other hand, the sampling interval for extracting the desired information from an image, which is determined by the camera frame rate, might not be small enough to be neglected compared with other computation times, especially when complex image processing algorithms are employed. Nonetheless, most research studies, including the references above, focus only on the limitation of measured outputs for estimation/control under the premise that visual information is continuously available.

In view of this fact, this paper investigates visual feedback 3D pose estimation/regulation problems in the sampled-data setting. Here, the objectives are (i) to estimate the 3D pose of the target object relative to the camera, and (ii) to drive the relative pose to a desired one, using only sampled 2D visual information extracted from a monocular camera with a certain frame rate. To achieve these goals, we introduce a visual feedback 3D pose estimation mechanism, called the visual motion observer, presented in [Citation13,Citation14] and also utilized for some extended estimation/control objectives [Citation15–17]. In the visual motion observer, a passivity property of rigid body motion plays a central role, and estimation/control performance is analysed through a Lyapunov-based approach. However, similarly to the references above, the observer assumes continuous measurements of visual information. Under this assumption, the convergence/performance analysis allows arbitrarily large positive estimation/control gains, but high-gain estimation and its control applications often do not work in real experiments with sampled measurements. Therefore, this paper extends the visual motion observer to the case of sampled visual measurements.

Since the observer input is formed from the visual measurements, the input is also sampled when the measurements are sampled. To conduct convergence/performance analysis in the sampled-data setting, this paper employs an event-triggered control technique [Citation18–20]. The merit of event-triggered control is that it can reduce the number of input re-computations and transmissions (i.e. events) while guaranteeing desired performance. Specifically, the inter-event time is analysed to guarantee a monotonic decrease of Lyapunov-like functions for convergence. This technique suits the visual motion observer because its convergence/performance analysis is also based on non-increasing properties of Lyapunov-like functions. As a result of the event-triggered approach, we obtain gain limitations for desired convergence/performance given a certain camera frame rate.

In summary, the main contribution of this work is to propose a novel vision-based pose observer and its control application in the sampled-data setting imposed by camera frame rates. We first provide the problem settings: a 3D relative motion model between a camera and a target object, a visual measurement model, and definitions of the estimation and control objectives. Then, we propose a sampled visual motion observer and give a convergence analysis for the stationary target object case and a tracking analysis for the moving object case. A pose regulation method based on the proposed sampled visual motion observer is proposed next, and the regulation/tracking analysis is also conducted for the stationary/moving object. Here, we provide the relationship between the frame rates and the estimation/control gains that achieves the desired convergence/performance. Specifically, we show that the estimation and control errors are ultimately bounded by a function of the camera frame rate, the estimation/control gains, and the target object velocity. This analysis provides guidelines for gain settings. The effectiveness of the proposed methods is demonstrated via simulation and an experiment with real hardware.

The conference versions of this paper are reported in [Citation21,Citation22]. While [Citation21] considers only estimation problems, this work also tackles pose regulation problems. Compared with [Citation22], we greatly reduce the conservativeness of the gain condition by modifying the control law structure, and newly carry out an experimental demonstration with real hardware.

2. Problem settings

This section formulates 3D visual feedback pose estimation/regulation problems consisting of two rigid bodies (a camera robot and a target object robot) and visual measurements by a monocular camera.

2.1. Relative rigid body motion

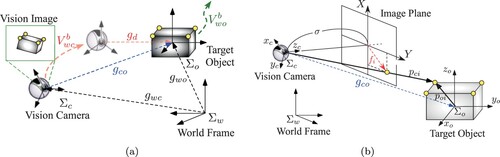

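Throughout this paper, we consider the visual feedback system shown in Figure . Let the world frame, camera frame, and object frame be $\Sigma_w$, $\Sigma_c$, and $\Sigma_o$, respectively (see Figure (a)). Then, the pose of the origin of $\Sigma_c$ relative to $\Sigma_w$ is represented by $g_{wc} = (p_{wc}, e^{\hat\xi\theta_{wc}}) \in SE(3)$, where $p_{wc} \in \mathbb{R}^3$ and $e^{\hat\xi\theta_{wc}} \in SO(3) := \{R \in \mathbb{R}^{3\times3} \mid R^\top R = RR^\top = I_3,\ \det R = +1\}$. ($I_n$ is the n-dimensional identity matrix.) The orientation is represented by the exponential coordinate of the rotation matrix with the unit axis $\xi_{wc} \in \mathbb{R}^3$ and the angle $\theta_{wc} \in (-\pi, \pi]$. The operator $\wedge : \mathbb{R}^3 \to so(3)$ is defined by $\hat a b = a \times b$ for all $a, b \in \mathbb{R}^3$, where “×” denotes the vector cross-product, and $\vee$ is its inverse operator. For ease of representation, $\xi_{wc}\theta_{wc}$ is simply written as $\xi\theta_{wc}$ in this paper. Similarly, the pose of $\Sigma_o$ relative to $\Sigma_w$ is represented by $g_{wo} = (p_{wo}, e^{\hat\xi\theta_{wo}})$.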

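Let us next introduce the body velocity of $\Sigma_c$ relative to $\Sigma_w$ as $V^b_{wc} := [v_{wc}^\top\ \omega_{wc}^\top]^\top \in \mathbb{R}^6$ and that of $\Sigma_o$ as $V^b_{wo} := [v_{wo}^\top\ \omega_{wo}^\top]^\top \in \mathbb{R}^6$. Here, $v_{wc}, v_{wo} \in \mathbb{R}^3$ and $\omega_{wc}, \omega_{wo} \in \mathbb{R}^3$ are the body translational and angular velocities, respectively. A pose $g = (p, e^{\hat\xi\theta})$ and a body velocity $V^b = [v^\top\ \omega^\top]^\top$ can also be written in the homogeneous representation form as follows:

$$g = \begin{bmatrix} e^{\hat\xi\theta} & p \\ 0_{1\times3} & 1 \end{bmatrix}, \qquad \hat V^b = \begin{bmatrix} \hat\omega & v \\ 0_{1\times3} & 0 \end{bmatrix}.$$

($0_{n\times m}$ represents the $n\times m$ zero matrix.) Notice here that another definition of “$\wedge$” is used for the 6D vector $V^b$. Then, $\hat V^b_{wc}$ and $\hat V^b_{wo}$ are respectively given by $\hat V^b_{wc} = g_{wc}^{-1}\dot g_{wc}$ and $\hat V^b_{wo} = g_{wo}^{-1}\dot g_{wo}$ [Citation1].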

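Similarly, the pose of $\Sigma_o$ relative to $\Sigma_c$ and its body velocity are denoted by $g_{co} = (p_{co}, e^{\hat\xi\theta_{co}})$ and $V^b_{co}$, respectively. Then, $g_{co} = g_{wc}^{-1}g_{wo}$ holds, and thus differentiating this relation with respect to time yields the following relative rigid body motion:

$$\dot g_{co} = -\hat V^b_{wc}\,g_{co} + g_{co}\,\hat V^b_{wo} \qquad (1)$$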
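The relative motion (1) can be checked numerically. The sketch below is ours, not the authors' code: it assumes constant body velocities, so that $g(t) = g(0)e^{\hat V^b t}$, builds the 4×4 homogeneous matrices, and compares a central finite difference of $g_{co} = g_{wc}^{-1}g_{wo}$ with the right-hand side of (1). The helper names (`hat`, `twist_hat`, `expm`, `se3_inv`, `relative_motion_residual`) and all numerical values are hypothetical.

```python
def hat(w):
    """Map a 3D vector to its skew-symmetric matrix: hat(a) b = a x b."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def twist_hat(V):
    """Map a body velocity V = [v; w] in R^6 to the 4x4 matrix [[hat(w), v], [0, 0]]."""
    v, W = V[:3], hat(V[3:])
    return [W[0] + [v[0]], W[1] + [v[1]], W[2] + [v[2]], [0.0, 0.0, 0.0, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def expm(A, terms=25):
    """Matrix exponential via a truncated Taylor series (adequate for moderate norms)."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]  # A^k / k!
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

def se3_inv(g):
    """Inverse of a homogeneous pose: (p, R)^{-1} = (-R^T p, R^T)."""
    Rt = [[g[j][i] for j in range(3)] for i in range(3)]
    q = [-sum(Rt[i][k] * g[k][3] for k in range(3)) for i in range(3)]
    return [Rt[0] + [q[0]], Rt[1] + [q[1]], Rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]

def relative_motion_residual(g_wc0, g_wo0, V_wc, V_wo, t=0.3, h=1e-5):
    """Largest entry of |d/dt g_co (finite difference) - (-hat(V_wc) g_co + g_co hat(V_wo))|."""
    def g_co(s):
        g_wc = matmul(g_wc0, expm(twist_hat([c * s for c in V_wc])))
        g_wo = matmul(g_wo0, expm(twist_hat([c * s for c in V_wo])))
        return matmul(se3_inv(g_wc), g_wo)
    gp, gm, g = g_co(t + h), g_co(t - h), g_co(t)
    numeric = [[(a - b) / (2 * h) for a, b in zip(rp, rm)] for rp, rm in zip(gp, gm)]
    left = matmul(twist_hat(V_wc), g)
    right = matmul(g, twist_hat(V_wo))
    model = [[-a + b for a, b in zip(ra, rb)] for ra, rb in zip(left, right)]
    return max(abs(a - b) for ra, rb in zip(numeric, model) for a, b in zip(ra, rb))

g_wc0 = expm(twist_hat([0.1, -0.2, 0.3, 0.2, 0.1, -0.3]))   # arbitrary initial camera pose
g_wo0 = expm(twist_hat([1.0, 0.5, 2.0, -0.4, 0.3, 0.2]))    # arbitrary initial object pose
residual = relative_motion_residual(g_wc0, g_wo0,
                                    [0.2, 0.0, -0.1, 0.05, -0.1, 0.2],
                                    [0.0, 0.3, 0.1, 0.3, 0.0, -0.1])
print(f"max residual: {residual:.2e}")  # near zero, up to finite-difference error
```

The finite difference only confirms the algebra of (1); it says nothing about the observer design that follows.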

2.2. Visual measurements

2D visual measurements extracted from a monocular camera are introduced as the measured outputs for the 3D pose estimation/regulation laws. Although only perspective projection is considered in this paper, the results can be easily extended to the panoramic camera case as in [Citation15].

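Consider the target object with m feature points. The positions of the feature points relative to the object frame $\Sigma_o$ are represented by $p_{oi} \in \mathbb{R}^3$, $i \in \{1, \dots, m\}$. Then, these positions relative to the camera frame $\Sigma_c$ are given by $p_{ci} = g_{co}p_{oi} = [x_{ci}\ y_{ci}\ z_{ci}]^\top$ from the coordinate transformation.Footnote1 We next denote the m feature points on the 2D image plane by $f_i \in \mathbb{R}^2$. Then, the well-known perspective projection [Citation1] yields the following relationship for each $i \in \{1, \dots, m\}$ (see Figure (b)):

$$f_i = \frac{\lambda}{z_{ci}}\begin{bmatrix} x_{ci} \\ y_{ci} \end{bmatrix} \qquad (2)$$

Here, λ is the focal length of the camera.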

This paper considers the situation that the visual measurements f are only available for pose estimation/regulation laws, and supposes that the positions of the feature points in (i.e.

) are known a priori. Then, the visual measurements are given by the function only of the relative pose

, i.e.

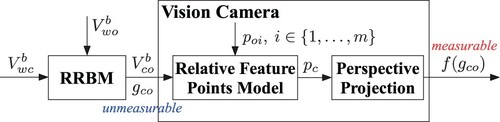

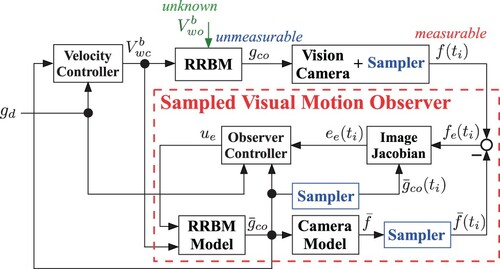

. Figure illustrates the block diagram of the relative rigid body motion (Equation1

(1)

(1) ) with the perspective projection (Equation2

(2)

(2) ).
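For concreteness, the measurement map $f = f(g_{co})$ defined by the coordinate transformation and (2) can be sketched as follows. This is an illustrative sketch under our own assumptions: the pose is given as a 4×4 homogeneous matrix, the feature points are given in $\Sigma_o$, and a point with $z_{ci} \le 0$ (behind the camera) is rejected. The function name `project` and the numbers in the usage lines are hypothetical.

```python
def project(g_co, feature_points_o, focal_length):
    """Stack f = [f_1; ...; f_m] from (2) for feature points p_oi given in the object frame.

    g_co is a 4x4 homogeneous matrix holding the rotation e^{hat(xi) theta_co} and p_co.
    """
    f = []
    for p_o in feature_points_o:
        p_h = list(p_o) + [1.0]                              # homogeneous coordinates of p_oi
        x, y, z = (sum(g_co[i][j] * p_h[j] for j in range(4)) for i in range(3))  # p_ci = g_co p_oi
        if z <= 0.0:
            raise ValueError("feature point lies behind the image plane")
        f.extend([focal_length * x / z, focal_length * y / z])  # perspective projection (2)
    return f

# Object 2 m in front of the camera with aligned axes (hypothetical values).
g_co = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 2.0],
        [0.0, 0.0, 0.0, 1.0]]
points = [(0.1, 0.2, 0.0), (-0.1, 0.2, 0.0), (-0.1, -0.2, 0.0), (0.1, -0.2, 0.0)]
f = project(g_co, points, focal_length=0.01)
print(f)  # 8 image coordinates, 2 per feature point
```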

2.3. Research objectives

For the present visual feedback system, the objectives of this paper are

(i) to propose a pose estimation mechanism for the relative pose $g_{co}$, and

(ii) to propose a pose regulation mechanism to drive $g_{co}$ to the fixed desired pose $g_d$ (see Figure (a)).

3. Sampled visual feedback pose estimation

We first propose a sampled visual feedback pose estimation mechanism taking account of camera frame rates for the objective (i).

3.1. Estimation error system

Since only the 2D visual measurements (2) are available, we consider the estimation of the 3D relative pose $g_{co}$ by a nonlinear observer. The estimate of $g_{co}$ is represented by $\bar g_{co} = (\bar p_{co}, e^{\hat\xi\bar\theta_{co}})$. Similarly to the Luenberger-type observer [Citation23], we build a copy model of the relative rigid body motion (1) as follows:

$$\dot{\bar g}_{co} = -\hat V^b_{wc}\,\bar g_{co} + \bar g_{co}\,\hat u_e \qquad (3)$$

Here, $u_e \in \mathbb{R}^6$ is the observer input for the estimation of $g_{co}$. We note that the model (3) does not include the target object velocity $V^b_{wo}$, because it is unavailable.Footnote2 Notice also that the estimated visual measurements $\bar f$ can be computed from $\bar g_{co}$ and (2).

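Let us define the estimation error between $\bar g_{co}$ and $g_{co}$ and its vector form $e_e$ as follows:

$$g_{ee} := \bar g_{co}^{-1}g_{co} = (p_{ee}, e^{\hat\xi\theta_{ee}}), \qquad e_e := \begin{bmatrix} p_{ee} \\ \mathrm{sk}(e^{\hat\xi\theta_{ee}})^\vee \end{bmatrix} \in \mathbb{R}^6$$

Here, $\mathrm{sk}(R) := \frac{1}{2}(R - R^\top)$. The estimation error vector has the important property that, for $\theta_{ee} \in (-\pi, \pi)$, $e_e = 0$ holdsFootnote3 if and only if $p_{ee} = 0$ and $e^{\hat\xi\theta_{ee}} = I_3$, which is equivalent to $\bar g_{co} = g_{co}$, i.e. objective (i) is achieved. It should also be noted that $e_e$ can be approximately reconstructed from the measurement error $f - \bar f$ (refer to [Citation13] for the details). Then, the time derivative of $g_{ee}$ along the trajectories of (1) and (3) yields the following estimation error system:

$$\dot g_{ee} = -\hat u_e\,g_{ee} + g_{ee}\,\hat V^b_{wo} \qquad (4)$$

This is also given in the vector form with the Adjoint transformation $\mathrm{Ad}_{(g)} := \begin{bmatrix} e^{\hat\xi\theta} & \hat p\,e^{\hat\xi\theta} \\ 0_{3\times3} & e^{\hat\xi\theta} \end{bmatrix}$ as

$$V^b_{ee} = -\mathrm{Ad}_{(g_{ee}^{-1})}\,u_e + V^b_{wo}, \qquad \hat V^b_{ee} := g_{ee}^{-1}\dot g_{ee} \qquad (5)$$

Then, it is shown in [Citation13] that if $V^b_{wo} = 0$, the estimation error system (4) has a passivity-like property from the input $u_e$ to the output $-e_e$ with the storage function $U_e$, i.e. $\int_0^T u_e^\top(-e_e)\,dt \ge -U_e(0)$ holds for all $T > 0$. Here, $U_e := \frac{1}{2}\|p_{ee}\|^2 + \varphi(e^{\hat\xi\theta_{ee}})$ with $\varphi(e^{\hat\xi\theta}) := \frac{1}{4}\|I_3 - e^{\hat\xi\theta}\|_F^2$, and notice that $U_e = 0$ is equivalent to $g_{ee} = I_4$, that is, the pose estimation (objective (i)) is achieved. ($\|\cdot\|_F$ represents the Frobenius norm.) Based on this property, Fujita et al. [Citation13] propose the following negative feedback law to achieve the pose estimation:

$$u_e = -k_e(-e_e) = k_e e_e, \qquad k_e > 0,$$

where the achievement of the estimation is proved by the direct use of the storage function $U_e$ as a potential function for the Lyapunov-based energy approach. However, this observer input assumes that the visual measurements f are continuously available, although general cameras deliver 60, 30, 15, or fewer fps once the image processing time to extract the feature points is included. Therefore, this paper tackles a new visual feedback pose estimation problem that explicitly takes the camera frame rate into account.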
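The error vector $e_e$, the potential $U_e$, and the passivity-like identity behind the continuous-time law can be sanity-checked numerically. In this sketch (ours; helper names and numbers are hypothetical), $g_{ee}$ is a 4×4 homogeneous matrix, $\mathrm{sk}(R)^\vee$ is read off the skew part of $R$, and $\varphi(R) = \tfrac14\|I_3 - R\|_F^2$. Along $\dot g_{ee} = -\hat u_e g_{ee}$ (i.e. (4) with $V^b_{wo} = 0$), the derivative of $U_e$ should equal $-e_e^\top u_e$, which is why $u_e = k_e e_e$ gives $\dot U_e = -k_e\|e_e\|^2$.

```python
import math

def hat(w):
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def twist_hat(V):
    """[v; w] -> [[hat(w), v], [0, 0]]."""
    v, W = V[:3], hat(V[3:])
    return [W[0] + [v[0]], W[1] + [v[1]], W[2] + [v[2]], [0.0, 0.0, 0.0, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def expm(A, terms=25):
    """Truncated Taylor series of the matrix exponential."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

def error_vector(g):
    """e = [p; sk(R)^vee] with sk(R) = (R - R^T) / 2."""
    p = [g[0][3], g[1][3], g[2][3]]
    s = [(g[2][1] - g[1][2]) / 2, (g[0][2] - g[2][0]) / 2, (g[1][0] - g[0][1]) / 2]
    return p + s

def potential(g):
    """U = ||p||^2 / 2 + ||I_3 - R||_F^2 / 4."""
    pos = 0.5 * sum(g[i][3] ** 2 for i in range(3))
    rot = 0.25 * sum((float(i == j) - g[i][j]) ** 2 for i in range(3) for j in range(3))
    return pos + rot

def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

# phi(Rz(theta)) = 1 - cos(theta), and sk(Rz(theta))^vee = sin(theta) * z-axis.
theta = 0.7
print(potential(rot_z(theta)), 1 - math.cos(theta))
# At theta = pi the rotational part of e vanishes although U = 2, hence theta_ee in (-pi, pi).
print(error_vector(rot_z(math.pi))[3:], potential(rot_z(math.pi)))

# Passivity-like identity: dU/dt = -e^T u along dg/dt = -hat(u) g.
g = expm(twist_hat([0.3, -0.2, 0.5, 0.4, -0.3, 0.2]))
u = [0.1, 0.4, -0.2, 0.3, -0.1, 0.2]
h = 1e-5
g_plus = matmul(expm(twist_hat([-c * h for c in u])), g)   # g(h)
g_minus = matmul(expm(twist_hat([c * h for c in u])), g)   # g(-h)
dU = (potential(g_plus) - potential(g_minus)) / (2 * h)
supply = -sum(a * b for a, b in zip(error_vector(g), u))
print(dU, supply)  # agree up to finite-difference error
```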

3.2. Pose estimation mechanism

Let us first consider the case in which the camera has a fixed frame rate τ [fps], which, for ease of representation, includes the image processing time to extract the feature points. The variable frame rate case is handled at the end of the main results. We assume that the computation time to calculate the estimation input is small enough to be neglected compared with the frame interval $1/\tau$. Then, based on the frame rate τ, we introduce the sampling time sequence $\{t_k\}$ such that $t_{k+1} - t_k = 1/\tau$ holds for all $k \in \mathbb{Z}_+$. ($\mathbb{Z}_+$ represents the union of the natural numbers and $\{0\}$.) Then, the visual measurements (2) are extracted at each time instant $t_k$.

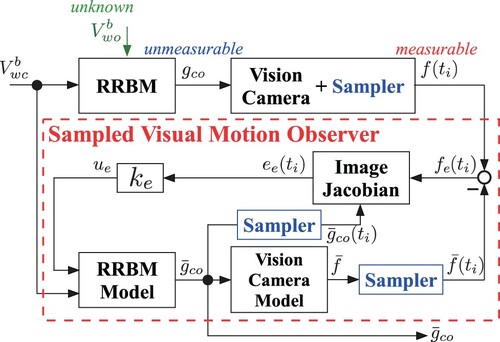

We now propose the following sampled observer input for the relative rigid body motion model (3):

$$u_e(t) = k_e\,e_e(t_k), \qquad t \in [t_k, t_{k+1}),\ k \in \mathbb{Z}_+ \qquad (6)$$

Notice here that the present input remains constant until it is re-computed. Figure illustrates the block diagram of the present estimation mechanism, called the sampled visual motion observer. In the present observer, since the image Jacobian consists of the estimates $\bar p_{co}$ and $e^{\hat\xi\bar\theta_{co}}$ as well as the visual measurements f [Citation13], we employ the same samplers for these estimates as for the camera.
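To see what the zero-order hold in (6) does, the following sketch (ours; helper names and numbers are hypothetical) simulates the estimation error system (4) for a stationary target under $u_e(t) = k_e e_e(t_k)$. Because $u_e$ is constant on each interval $[t_k, t_{k+1})$ and $V^b_{wo} = 0$, the exact flow over one frame is $g_{ee}(t_{k+1}) = e^{-\hat u_e/\tau}g_{ee}(t_k)$, so no ODE solver is needed. It records $U_e$ at the sampling instants for a moderate gain and for a gain that is large relative to the frame rate.

```python
import math

def hat(w):
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def twist_hat(V):
    v, W = V[:3], hat(V[3:])
    return [W[0] + [v[0]], W[1] + [v[1]], W[2] + [v[2]], [0.0, 0.0, 0.0, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def expm(A, terms=25):
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

def error_vector(g):
    """e_e = [p_ee; sk(R_ee)^vee]."""
    return [g[0][3], g[1][3], g[2][3],
            (g[2][1] - g[1][2]) / 2, (g[0][2] - g[2][0]) / 2, (g[1][0] - g[0][1]) / 2]

def potential(g):
    """U_e = ||p_ee||^2 / 2 + ||I_3 - R_ee||_F^2 / 4."""
    return (0.5 * sum(g[i][3] ** 2 for i in range(3))
            + 0.25 * sum((float(i == j) - g[i][j]) ** 2 for i in range(3) for j in range(3)))

def simulate_sampled_observer(g_ee0, k_e, tau, n_frames):
    """Return U_e(t_k), k = 0..n_frames, for u_e(t) = k_e e_e(t_k) held over each frame."""
    g, history = g_ee0, [potential(g_ee0)]
    for _ in range(n_frames):
        u = [k_e * c for c in error_vector(g)]                 # sampled at t_k, then held
        g = matmul(expm(twist_hat([-c / tau for c in u])), g)  # exact flow over 1/tau
        history.append(potential(g))
    return history

g0 = expm(twist_hat([0.3, -0.2, 0.5, 0.4, -0.3, 0.2]))  # generic initial estimation error
slow = simulate_sampled_observer(g0, k_e=1.0, tau=30.0, n_frames=600)

# Error along a common axis (p_ee on z, rotation about z) keeps the example one-dimensional:
# p_ee(t_{k+1}) = (1 - k_e / tau) p_ee(t_k), which diverges when k_e / tau > 2.
c, s = math.cos(0.5), math.sin(0.5)
g_axis = [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.5], [0.0, 0.0, 0.0, 1.0]]
fast_gain = simulate_sampled_observer(g_axis, k_e=100.0, tau=30.0, n_frames=20)
print(slow[0], slow[-1])            # U_e shrinks by many orders of magnitude
print(fast_gain[0], fast_gain[-1])  # U_e blows up: the gain is too large for 30 fps
```

The second run is a deliberately extreme case; the sufficient condition derived in Section 4 is what tells a designer where between these two regimes a given gain lies.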

4. Analysis of pose estimation

This section provides the convergence analysis for the stationary target object (i.e. $V^b_{wo} = 0$ holds) and the tracking performance analysis for the moving target.

4.1. Convergence analysis

Let us define the sampling error between the actual estimation error $e_e(t)$ and the sampled one $e_e(t_k)$ as

$$\epsilon(t) := e_e(t_k) - e_e(t), \qquad t \in [t_k, t_{k+1}).$$

Then, we obtain the following theorem:

Theorem 4.1

Suppose that the target object is static (i.e. $V^b_{wo} = 0$). Then, if the camera frame rate satisfies the condition

(7)

there exist a finite time and positive scalars such that

(8)

for the closed-loop system (4) and (6). In other words, the equilibrium point $e_e = 0$ is exponentially stable after that finite time.

Proof.

When $V^b_{wo} = 0$ holds, the time derivative of the potential function $U_e$ along the trajectories of (4) and (6) for $t \in [t_k, t_{k+1})$ yields

Therefore, if the inequality

(9)

is satisfied, we obtain

(10)

for $t \in [t_k, t_{k+1})$.

We next derive the frame rate condition to guarantee the inequality (9). Notice first that it is enough to consider each time interval $[t_k, t_{k+1})$, since ϵ becomes 0 at the next time step $t_{k+1}$. Motivated by the analysis of event-triggered control [Citation18–20], we investigate the dynamics of

for $t \in [t_k, t_{k+1})$ as follows:

(11)

Here, we use the fact that

for

. Let us now consider

. Then, we first get the following position term from the estimation error system (4) with $V^b_{wo} = 0$:

Before obtaining the orientation term, we note that the following equality holds for any vector

and any matrix

:

From this property and the estimation error system (4) with $V^b_{wo} = 0$, we get

Here, we use the notation

, and the representation

is also utilized hereafter. Therefore, using the properties

and

, and the estimation input (6), we obtain

(12)

Substituting this inequality into (11) gives

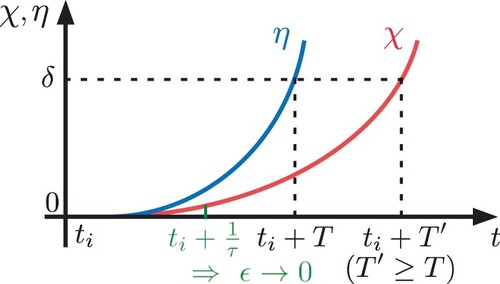

Let us now denote

by

, i.e. we consider

. Then, if

is the solution of

, we have

for all

. This means that the time it takes for χ to evolve from 0 to δ is larger than or equal to the time for η (see Figure ). The time for η is given by

which is obtained as the solution $T > 0$ of $\eta(T) = \delta$. Therefore, if

holds, (9) is guaranteed for all time because ϵ is reset to 0 before χ reaches δ (see Figure ).
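The comparison argument above can be illustrated with a hypothetical scalar bound. Assume, for this sketch only, that (11) and (12) combine into the standard event-triggered form $\dot\chi \le a(1+\chi)^2$ with a constant $a > 0$ (the actual constant in this paper depends on the gain and is not reproduced here). The comparison solution of $\dot\eta = a(1+\eta)^2$, $\eta(0) = 0$, is $\eta(t) = at/(1-at)$, which reaches δ at $T = \delta/(a(1+\delta))$; any frame interval $1/\tau \le T$, i.e. $\tau \ge a(1+\delta)/\delta$, then keeps χ at most δ between frames. The code checks the closed form against an RK4 integration; `comparison_time`, `eta_rk4`, and the values of `a` and `delta` are ours.

```python
def comparison_time(a, delta):
    """Time for eta' = a (1 + eta)^2, eta(0) = 0, to reach delta; eta(t) = a t / (1 - a t)."""
    return delta / (a * (1.0 + delta))

def eta_rk4(a, t_end, steps=10_000):
    """Integrate eta' = a (1 + eta)^2 from eta(0) = 0 with classical RK4."""
    f = lambda e: a * (1.0 + e) ** 2
    eta, h = 0.0, t_end / steps
    for _ in range(steps):
        k1 = f(eta)
        k2 = f(eta + 0.5 * h * k1)
        k3 = f(eta + 0.5 * h * k2)
        k4 = f(eta + h * k3)
        eta += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return eta

a, delta = 2.0, 0.5               # hypothetical growth constant and threshold
T = comparison_time(a, delta)     # 1/6 s for these values
tau_min = 1.0 / T                 # minimum frame rate [fps] under this assumed bound: 6 fps
print(T, eta_rk4(a, T), tau_min)  # eta(T) is numerically delta
```

The closed form shows the qualitative trade-off directly: a larger growth constant (larger gain) or a smaller threshold δ shortens $T$ and therefore demands a higher frame rate.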

We finally show the exponential stability of $e_e = 0$ after some time, using the Gronwall-Bellman inequality [Citation24]. Notice that if τ satisfies (7), then the inequality (10) holds, i.e. $\dot U_e$ is negative definite for all time. It should also be noted that $U_e$ is continuous and positive definite. Therefore, there exists a finite time

in the time sequence

satisfying

. Then,

holds for all

from the definition of

. The property

also holds for

.Footnote4 In summary, (10) provides the following inequality for every interval

:

Then, from the Gronwall-Bellman inequality, we obtain

(13)

We next consider

. Similarly to the above discussion, we obtain

Then, since

holds from (13) and the continuity of $U_e$, we get

Furthermore, the induction from time

gives

(14)

Finally, we derive the inequality (8) from (14). Remember that

holds after time

. Then, substituting these inequalities into (14) yields

Therefore, by taking the square root of both sides of this inequality and defining

as

and

, we obtain (8).
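The final step uses the fact that $U_e$ is sandwiched between quadratic functions of $\|e_e\|$. One admissible pair of constants, which we derive here and which need not coincide with the constants in (8), follows from $\varphi(e^{\hat\xi\theta}) := \tfrac14\|I_3 - e^{\hat\xi\theta}\|_F^2 = 1 - \cos\theta$ and $\|\mathrm{sk}(e^{\hat\xi\theta})^\vee\| = |\sin\theta|$: for $|\theta_{ee}| \le \pi/2$, $\tfrac12\|e_e\|^2 \le U_e \le \|e_e\|^2$. Since $U_e \ge 1 - \cos\theta_{ee}$, this range is entered for good once $U_e < 1$ and $U_e$ is non-increasing. A bound $\dot U_e \le -c\|e_e\|^2$ then gives $\dot U_e \le -cU_e$, hence $U_e(t) \le U_e(t_0)e^{-c(t-t_0)}$ by Gronwall-Bellman and $\|e_e(t)\| \le \sqrt{2}\,\|e_e(t_0)\|e^{-c(t-t_0)/2}$. The sketch checks the sandwich on random poses; the sampling scheme and helper names are our own.

```python
import math
import random

def rodrigues(axis, theta):
    """e^{hat(xi) theta} for a unit axis xi (Rodrigues' formula)."""
    x, y, z = axis
    K = [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]
    K2 = [[sum(K[i][k] * K[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    s, c = math.sin(theta), math.cos(theta)
    return [[float(i == j) + s * K[i][j] + (1 - c) * K2[i][j] for j in range(3)] for i in range(3)]

def e_norm_sq_and_U(p, R):
    """Return (||e||^2, U) with e = [p; sk(R)^vee] and U = ||p||^2 / 2 + ||I - R||_F^2 / 4."""
    s = [(R[2][1] - R[1][2]) / 2, (R[0][2] - R[2][0]) / 2, (R[1][0] - R[0][1]) / 2]
    p_sq = sum(c * c for c in p)
    e_sq = p_sq + sum(c * c for c in s)
    U = 0.5 * p_sq + 0.25 * sum((float(i == j) - R[i][j]) ** 2 for i in range(3) for j in range(3))
    return e_sq, U

rng = random.Random(0)
worst_low, worst_high = math.inf, -math.inf
for _ in range(1000):
    v = [rng.gauss(0.0, 1.0) for _ in range(3)]
    n = math.sqrt(sum(c * c for c in v))
    axis = [c / n for c in v]
    theta = rng.uniform(-math.pi / 2, math.pi / 2)
    p = [rng.uniform(-1.0, 1.0) for _ in range(3)]
    e_sq, U = e_norm_sq_and_U(p, rodrigues(axis, theta))
    worst_low = min(worst_low, U - 0.5 * e_sq)  # lower bound: should stay >= 0
    worst_high = max(worst_high, U - e_sq)      # upper bound: should stay <= 0
print(worst_low, worst_high)
```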

Remark 4.1

The condition (7) implies that the choice of a large feedback gain $k_e$ requires fast frame rates τ (i.e. small sampling intervals $1/\tau$). This property is intuitive because large gains amplify the influence of the sampling error ϵ, which might degrade the estimation. In other words, once a camera with a certain frame rate is chosen, the gain cannot be made arbitrarily large. Although the condition (7) is only sufficient, this analysis provides the significant insight that camera frame rates, or image processing times, are not negligible for pose estimation.
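The trade-off in Remark 4.1 already appears in a scalar toy analogue, which we use only as an illustration and not as a substitute for condition (7): for $\dot x = -k\,x(t_k)$ on $[t_k, t_{k+1})$, one frame maps $x(t_k)$ to $(1 - k/\tau)\,x(t_k)$. Hence $|x|$ decreases monotonically within each frame only if $\tau \ge k$, and the sampled loop is stable only if $\tau > k/2$. The function names and values below are hypothetical.

```python
def frame_factor(k, tau):
    """x(t_{k+1}) / x(t_k) for dx/dt = -k x(t_k) held over one frame of length 1/tau."""
    return 1.0 - k / tau

def simulate(k, tau, x0=1.0, n_frames=30):
    """Sampled values x(t_0), ..., x(t_n) of the zero-order-hold loop."""
    x, xs = x0, [x0]
    for _ in range(n_frames):
        x *= frame_factor(k, tau)
        xs.append(x)
    return xs

tau = 30.0  # frame rate [fps]
for k in (10.0, 30.0, 50.0, 90.0):
    q = frame_factor(k, tau)
    print(f"k={k:5.1f}  factor={q:+.3f}  |x| after 30 frames={abs(simulate(k, tau)[-1]):.3g}")
```

With τ fixed, increasing k first speeds up convergence, then makes the sampled trajectory overshoot (negative factor), and finally makes it diverge once $k > 2\tau$.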

4.2. Tracking performance analysis

We next analyse the tracking performance for the moving target. Suppose that $\|V^b_{wo}\| \le \kappa$ holds for a positive scalar κ, i.e. the target velocity is bounded. We also assume that the value of κ is known a priori, e.g. simply as prior information or due to hardware limitations of the target vehicle. Then, the time derivative of the potential function $U_e$ along the trajectories of (4) and (6) yields

Here, for ease of representation, we employ the following notation, differently from

in (5):

Let us now introduce a performance indicator γ to evaluate the sampling error ϵ. Then, if

is satisfied, we get

(15)

Since the right-hand side of (15) consists only of

, we get the following theorem by employing ultimate boundedness analysis [Citation24]:

Theorem 4.2

Suppose that the norm of the target object velocity is upper bounded by κ. Then, for every initial estimation error

, there exists

such that the solution

of the closed-loop system (4) and (6) satisfies

(16)

if

and the following frame rate condition hold:

(17)

Proof.

If is satisfied for all time, we get the following inequality for

from (15):

(18)

Therefore, from Theorem 4.18 of [Citation24] and the property that

holds for

, we can conclude that for every

, there exists

such that

satisfies (16).

We next derive the frame rate condition to guarantee the inequality . Similarly to the proof of Theorem 4.1, we investigate the dynamics of

in

as follows:

(19)

The differences from (12) are to handle $V^b_{wo}$, which appears in (4), and to consider not

but

to reduce the conservativeness. Let us now derive the upper bound of

. We first consider the case that

. Then, the following inequality holds from

and (18):

which also means

. We thus obtain

from (19) and the first frame rate condition in (17) via the same analysis as in Theorem 4.1.

On the other hand, when the initial estimation error satisfies ,

might increase and become larger than

. However, since (18) holds,

never goes beyond the value associated with

, denoted by

. Then, this fact provides

for

. Therefore, we obtain

from (19) and the second frame rate condition in (17).

Remark 4.2

The performance evaluation (16) can be rewritten as follows:

This means that smaller γ and larger $k_e$ achieve better performance. However, both of them require fast frame rates because the right-hand sides of (17) are monotonically decreasing in γ and monotonically increasing in $k_e$. Therefore, as expected, γ can be regarded as an indicator of both the tracking performance and the (sufficiently) allowable gains for a designer. For example, once a camera with a certain frame rate is chosen, $k_e$ can be designed for the desired performance related to γ from (16) and (17). A design example is provided in Section 7.
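The qualitative content of Remark 4.2 (with a sufficiently fast camera, a larger gain gives a smaller residual error) can be illustrated by simulation. This is our own sketch with hypothetical numbers, and it does not evaluate the bound (16) itself: the target body velocity $V^b_{wo}$ is held constant, so that on each frame the error system (4) under (6) has the exact solution $g_{ee}(t) = e^{-\hat u_e(t-t_k)}g_{ee}(t_k)e^{\hat V^b_{wo}(t-t_k)}$. The helper names are hypothetical.

```python
def hat(w):
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def twist_hat(V):
    v, W = V[:3], hat(V[3:])
    return [W[0] + [v[0]], W[1] + [v[1]], W[2] + [v[2]], [0.0, 0.0, 0.0, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def expm(A, terms=25):
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

def error_vector(g):
    return [g[0][3], g[1][3], g[2][3],
            (g[2][1] - g[1][2]) / 2, (g[0][2] - g[2][0]) / 2, (g[1][0] - g[0][1]) / 2]

def potential(g):
    return (0.5 * sum(g[i][3] ** 2 for i in range(3))
            + 0.25 * sum((float(i == j) - g[i][j]) ** 2 for i in range(3) for j in range(3)))

def tracking_run(k_e, tau, V_wo, seconds):
    """Sampled values U_e(t_k) for (4) under u_e(t) = k_e e_e(t_k) with constant V_wo."""
    g = expm(twist_hat([0.3, -0.2, 0.5, 0.4, -0.3, 0.2]))   # initial estimation error g_ee(0)
    target_step = expm(twist_hat([c / tau for c in V_wo]))  # e^{hat(V_wo) / tau}
    history = [potential(g)]
    for _ in range(int(seconds * tau)):
        u = [k_e * c for c in error_vector(g)]              # sampled at t_k, held for 1/tau
        observer_step = expm(twist_hat([-c / tau for c in u]))
        g = matmul(matmul(observer_step, g), target_step)   # exact flow over one frame
        history.append(potential(g))
    return history

V_wo = [0.1, 0.0, 0.0, 0.0, 0.0, 0.1]  # hypothetical constant target body velocity
tau = 30.0                             # hypothetical frame rate [fps]
steady = {k_e: max(tracking_run(k_e, tau, V_wo, seconds=10.0)[-30:]) for k_e in (2.0, 5.0)}
print(steady)  # the larger gain leaves a smaller residual U_e
```

Both gains here are far below the frame rate; pushing $k_e$ toward τ would reintroduce the sampling effects discussed in Remark 4.1.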

Remark 4.3

Theorem 4.2 provides two frame rate conditions depending on the initial estimation error. However, if we first run the present estimation law (6) before the target moves, we need to consider only the second condition.

5. Sampled visual feedback pose regulation

We next propose a sampled visual feedback pose regulation mechanism based on the present sampled visual motion observer for the objective (ii).

5.1. Control error system

Similarly to the estimation error system (4) presented in Section 3.1, we build the control error system. The control error

, and its vector form

are defined as follows:

Notice that for

,

holds if and only if

, i.e.

. Then, the time derivative of

along the trajectories of (3) provides the following control error system:

(20)

This is also written in the vector form as

Combining the estimation error system (4) with the control error system (20) yields the following total error system in the vector form:

(21)

It is shown in [Citation13] that if $V^b_{wo} = 0$, the total error system (21) also has a passivity-like property from the input

to the output

defined as

Here,

is the total control and estimation error vector, and the corresponding storage function

is defined as

which yields

, i.e. a passivity-like property from the input

to the output

[Citation13]. We note that U=0 is equivalent to

for

, and

means

, that is, the pose regulation (the objective (ii)) is achieved.

Based on the passivity-like property, Fujita et al. [Citation13] propose the following negative feedback law to achieve the pose regulation as in Figure (a):

Here,

is a positive definite gain matrix, and the achievement of the regulation is proved by the direct use of the storage function U as a potential function for the Lyapunov-based energy approach. However, this technique also assumes the continuous availability of the visual measurements f, which allows any positive definite matrix K.

5.2. Pose regulation mechanism

Consider the same settings as in Section 3, i.e. the fixed frame rate τ and the sampling time sequence $\{t_k\}$. Then, motivated by the passivity-like property of the total error system (21), we propose the following visual feedback pose regulation input based on the sampled visual motion observer:

(22)

Notice here that only the estimation error

is constant until the next sampling time since we consider the case that the computation time to obtain the estimate

is small enough to be neglected. This structure enables us to greatly reduce the conservativeness of the frame rate condition provided by the conference version of this paper [Citation22]. In [Citation22],

is also sampled, i.e. instead of

and

,

and

are used in the regulation input (22).

This paper assumes for better performance in the subsequent discussion. The reason to employ this gain relationship is that only the observer input for the estimation is sampled, and as a result, a large gain

increases the influence of the sampling error ϵ, which results in degraded control performance. The block diagram of the present sampled visual feedback system is illustrated in Figure .

6. Analysis of pose regulation

Similarly to Section 4, this section provides the convergence analysis for the stationary target object and the tracking performance analysis for the moving target.

6.1. Convergence analysis

Using the same definition of the sampling error as in Section 4, we have the following theorem:

Theorem 6.1

Suppose that the target object is static (i.e. $V^b_{wo} = 0$). Then, if the camera frame rate satisfies the condition

(23)

there exist a finite time

and positive scalars

such that

for the closed-loop system (21) and (22).

Proof.

When $V^b_{wo} = 0$ holds, the time derivative of the potential function U along the trajectories of (21) and (22) for

yields

Here, we use the property

. (

is the smallest eigenvalue for any symmetric matrix

.) Therefore, if the inequality

is satisfied, we obtain

for

.

Then, by the same techniques as in the proof of Theorem 4.1, we get

(24)

and

(25)

Substituting (25) into (24) provides

The remaining analysis to obtain the frame rate condition (23) is the same as in the proof of Theorem 4.1.

The condition (23) also implies that large feedback gains

and

require fast camera frame rates τ.

6.2. Tracking performance analysis

Under the same assumption as in Section 4.2, the time derivative of the potential function U along the trajectories of (21) and (22) is given as follows:

Here, we note that $V^b_{wo}$ appears only in the estimation part of the total error system (21). Therefore, if

is satisfied for a certain performance indicator γ, we get

(26)

Then, we have the following theorem similar to Theorem 4.2:

Theorem 6.2

Suppose that the norm of the target object velocity is upper bounded by κ. Then, for every initial control and estimation error

, there exists

such that the solution

of the closed-loop system (21) and (22) satisfies

(27)

if

and the following frame rate condition hold:

(28)

Proof.

If is satisfied for all time, the following inequality is obtained from (26):

Therefore, from Theorem 4.18 of [Citation24] and the property that

holds for

, we can conclude that for every

, there exists

such that

satisfies (27).

We next derive the frame rate condition to guarantee the inequality . We get the following dynamics of

from (21) and (25):

Then, by the same approach as in the proof of Theorem 4.2, we get the frame rate condition (28).

The condition (28) also implies that smaller γ and larger

require fast frame rates. Here, larger

also requires larger

from the gain relationship

.

6.3. Variable camera frame rate case

So far, we have considered the situation in which the visual measurements (2) can be extracted at every fixed sampling time

. However, the actual sampling interval, which includes the image processing time, is variable in practice. To deal with this issue, we consider the worst case (i.e. the maximum sampling interval).

Suppose that the visual measurements (2) are extracted at time instants

, where

. Instead of the fixed camera frame rate case, we now suppose that the worst-case (i.e. minimum) frame rate, denoted by

, is known a priori via preliminary image processing experiments. Then, by simply replacing τ with

in the conditions (7), (17), (23), and (28), the non-increasing properties of $U_e$ and U are always guaranteed. We thus have the following corollary:

Corollary 6.3

Suppose that is satisfied for all

. Then, the same statements as in Theorems 4.1, 4.2, 6.1, and 6.2 hold by replacing τ with

.
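In practice, the worst-case frame rate used in Corollary 6.3 can be estimated from timestamps recorded in the preliminary experiments. The sketch below (function names and timestamps are hypothetical) takes the largest observed inter-frame interval, and also compares an observer update period with the camera frame interval, mirroring the check reported for the experiment in Section 7.2 (an observer running at about 270 Hz versus an 18 fps camera).

```python
def worst_case_frame_rate(timestamps):
    """Minimum frame rate [fps] = 1 / (largest interval between consecutive measurements)."""
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if not intervals or min(intervals) <= 0.0:
        raise ValueError("need at least two strictly increasing timestamps")
    return 1.0 / max(intervals)

def period_ratio(camera_fps, observer_hz):
    """Number of observer update periods that fit into one camera frame interval."""
    return (1.0 / camera_fps) / (1.0 / observer_hz)

# Hypothetical times [s] at which feature extraction finished for successive frames.
stamps = [0.000, 0.048, 0.101, 0.150, 0.206, 0.255]
tau_min = worst_case_frame_rate(stamps)
print(f"worst-case frame rate: {tau_min:.1f} fps")
print(f"observer periods per camera frame (18 fps, 270 Hz): {period_ratio(18.0, 270.0):.1f}")
```

Using the largest interval makes the replacement of τ conservative: every actual frame arrives at least as fast as the worst case assumed in the conditions.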

7. Verification

This section demonstrates the effectiveness of the present sampled visual feedback pose regulation mechanism (22) via simulation and an experiment. Verification of the estimation alone is omitted because it has already been shown in our previous work [Citation21].

7.1. Simulation

Consider a pinhole camera pointing in the z-axis direction of with the focal length

[m], and suppose that its frame rate is variable with the minimum rate

[fps]. The initial relative pose of the target object to the camera is set as

[m] and

[rad], where we set

. The positions of four feature points in

are given by

,

,

, and

[m]. The target object velocity is set as follows:

where the units of

and

are [m/s] and [rad/s], respectively. We artificially set the velocity to 0 after 50s. This setting enables us to verify both tracking (until 50s) and convergence (after 50s) in a single demonstration.

The present sampled visual feedback pose regulation mechanism (22) with

,

,

,

,

[m], and

[rad] is applied to the camera to achieve the desired relative pose

[m] and

[rad], which yields

. In this setting, the frame rate condition (23) for convergence is

and (28) for tracking becomes

, that is, both frame rate conditions are satisfied. As a result, Corollary 6.3 (together with Theorem 6.2) guarantees the tracking performance that there exists

such that

.

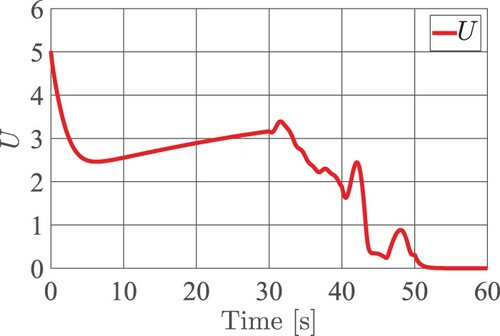

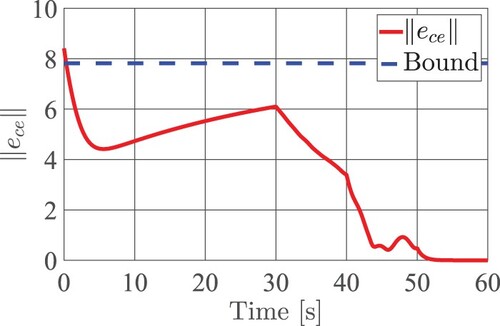

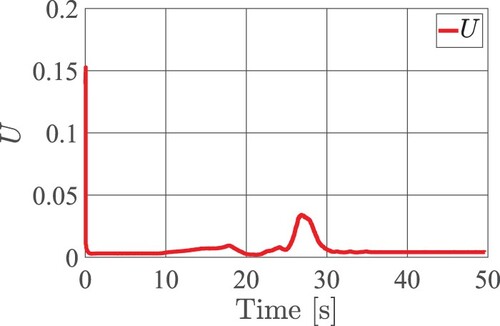

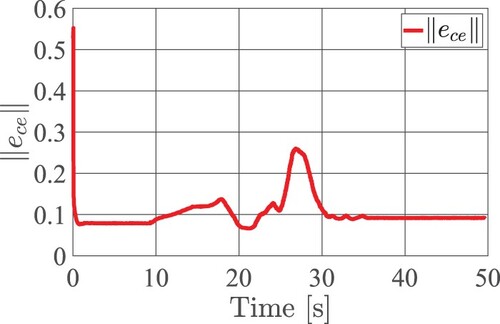

The simulation results are depicted in Figures and . Figure shows the time response of the potential function U, and that of the norm of the total control and estimation error is depicted in Figure . The exponential stability of

is seen from the behaviour after 50s. We also see good tracking performance from the behaviour until 50s, where the potential function U sometimes increases due to the non-zero target object velocity

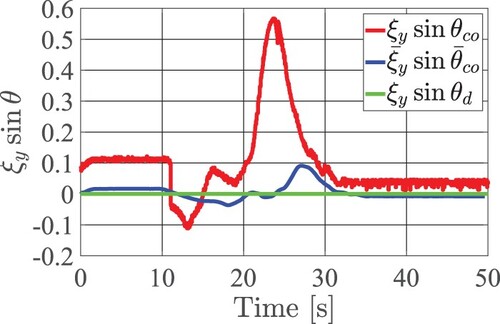

, but it occurs within the bound as shown in the present analysis. In summary, the present sampled visual feedback pose regulation mechanism works successfully.

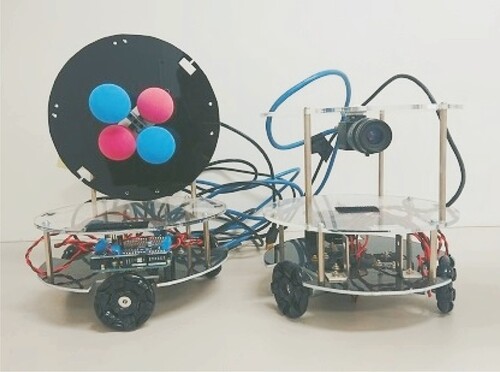

7.2. Experiment

We next show the validity of the present sampled visual feedback pose regulation mechanism (Equation22(22)

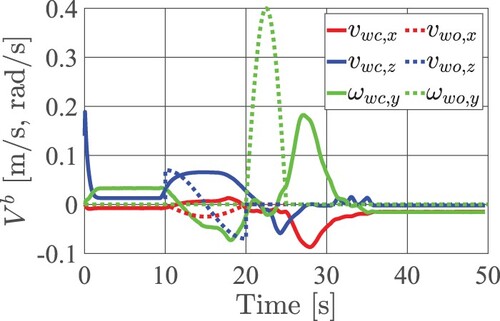

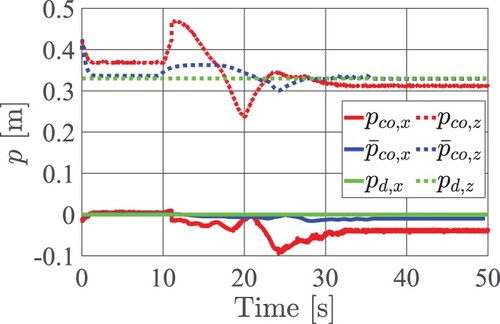

(22) ) via an experiment. Here, two self-developed 2D omnidirectional robots are employed as the camera robot and the target object robot (see Figure ). In this experiment, the pose estimation is conducted in three-dimensional space, but the pose regulation is demonstrated on two-dimensional plane (x–z plane) with the projection from 6D translational/angular velocity inputs onto 3D ones (i.e.

).

Each omnidirectional robot mainly consists of three motors (RA250100–58Y91 from Daisen Electronics Industrial, Co., Ltd.) with omnidirectional wheels (4571398310089 from Vstone, Co., Ltd.) and a single camera (FL3–U3–13S2C–CS from FLIR Systems, Inc.). The focal length of the camera is tuned as [m], and the camera's native frame rate is 120fps. However, when the image processing time to extract the four feature points attached to the target object robot (see Figure ) is explicitly taken into account, the actual minimum frame rate drops to 18fps in this experiment. We also introduce a motion capture camera system (OptiTrack Flex13 from NaturalPoint, Inc.) to obtain ground-truth experimental data for evaluating the convergence/tracking, but this information is not used for the control inputs of the camera robot.

The present sampled visual feedback pose regulation mechanism (22) with

,

,

,

[m], and

[rad] is applied to the camera robot to achieve the desired relative pose

[m] and

[rad]. Here, the initial relative pose is set as

[m] and

[rad], and the feature points are attached at

,

,

, and

[m] in

. The reference body velocity commands of the target robot are given as follows:

Here, we artificially set the velocity to 0 until 10s and after 25s to verify tracking and convergence in a single demonstration. We also confirmed that the observer ran at about 270Hz; its update period (about 3.7 ms) was small enough to be neglected compared with the camera frame interval at 18fps (about 56 ms).

The experimental results are shown in Figures . Figures and respectively depict the time responses of the potential function U and the norm of the total control and estimation error . Figures – show the individual input and state responses relevant to pose regulation on the 2D experimental field. These figures show that the proposed visual feedback pose regulation mechanism successfully tracks the moving target and almost achieves convergence to the desired relative pose while the target is stationary. The remaining convergence errors and the tracking delay are attributed to physical factors such as the actual dynamics of the robots, the friction between the wheels and the field, and camera image distortion.

8. Conclusion

This paper presented a vision-based pose observer and an associated control technique in a sampled-data setting induced by camera frame rates. In the convergence/tracking analysis of the proposed methodologies, we derived the relationship between the frame rates and the estimation/control gains. Specifically, we showed that the estimation and control errors are ultimately bounded by a function of the camera frame rate, the estimation/control gains, and the target object velocity, which provides guidelines for gain selection. The utility of the proposed technique was demonstrated via simulation and an experiment with real hardware.
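For reference, ultimate boundedness in the standard sense (see, e.g. [Citation24]) is recalled below for a generic system with state x. The specific ultimate bound b derived in this paper, which depends on the frame rate, the gains, and the target velocity, is not reproduced here.

```latex
\dot{x} = f(t, x), \qquad t \ge t_0 \ge 0 .
% Uniform ultimate boundedness with ultimate bound b:
\exists\, b > 0,\ c > 0 \ (\text{independent of } t_0) \ \text{such that}\ 
\forall a \in (0, c)\ \exists\, T = T(a, b) \ge 0 \ (\text{independent of } t_0):
\|x(t_0)\| \le a \;\Longrightarrow\; \|x(t)\| \le b, \quad \forall\, t \ge t_0 + T .
```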

One future direction is to incorporate robot dynamics. Previous works [Citation13,Citation16] have already presented passivity-based visual feedback pose regulation mechanisms that also account for rigid body dynamics in Euler-Lagrange and Newton-Euler form. We plan to extend the current results by building on these techniques.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information


Notes on contributors

Tatsuya Ibuki

Tatsuya Ibuki received his B.Eng., M.Eng., and Ph.D. degrees in mechanical and control engineering from Tokyo Institute of Technology in 2008, 2010, and 2013, respectively. He was a research fellow with the Japan Society for the Promotion of Science from 2012 to 2013, an assistant professor with the Department of Systems and Control Engineering, Tokyo Institute of Technology from 2013 to 2020, and a visiting scholar with the School of Electrical and Computer Engineering, Georgia Institute of Technology in 2019. Since 2020, he has been a senior assistant professor with the Department of Electronics and Bioinformatics, Meiji University. His research interests include cooperative control of robotic networks, fusion of control theory and machine learning, and vision-based estimation and control.

Satoshi Nakano

Satoshi Nakano received a B.Eng. degree from Nagoya Institute of Technology, Japan, in 2013 and the M.Eng. and Ph.D. degrees in mechanical and control engineering from Tokyo Institute of Technology, Japan, in 2015 and 2019, respectively. Since 2019, he has been an assistant professor in the Department of Engineering, Nagoya Institute of Technology, Japan. His research interests include nonlinear control, constrained control, and vision-based estimation and control.

Shunsuke Shigaki

Shunsuke Shigaki received his B.Eng., M.Eng., and Ph.D. degrees in mechanical and control system engineering from Tokyo Institute of Technology, Tokyo, Japan in 2013, 2015, and 2018, respectively. He was a JSPS Research Fellow for Young Scientists (DC1) from 2015 to March 2018, an assistant professor in the Division of Systems Research, Yokohama National University from 2018 to 2019, an assistant professor in the Department of System Innovation, Osaka University from 2019 to 2023, and a visiting scientist with the Max Planck Institute for Chemical Ecology in 2022. Since 2023, he has been an assistant professor in the Principles of Informatics Research Division, National Institute of Informatics, Japan. His research interests include bio-inspired robotics and algorithms, soft robotics, machine learning, and neuroethology.

Takeshi Hatanaka

Takeshi Hatanaka received a Ph.D. degree in applied mathematics and physics from Kyoto University in 2007. He then held faculty positions at Tokyo Institute of Technology and Osaka University. Since April 2020, he has been an associate professor at Tokyo Institute of Technology. He is the coauthor of “Passivity-Based Control and Estimation in Networked Robotics” (Springer, 2015) and the coeditor of “Economically-enabled Energy Management: Interplay between Control Engineering and Economics” (Springer Nature, 2020). His research interests include cyber-physical-human systems and networked robotics. He received the Kimura Award (2017), Pioneer Award (2014), Outstanding Book Award (2016), Control Division Conference Award (2018), Takeda Prize (2020), and Outstanding Paper Awards (2009, 2015, 2020, 2021, and 2023), all from SICE. He also received the 3rd IFAC CPHS Best Research Paper Award (2020) and the 10th Asian Control Conference Best Paper Prize Award (2015). He serves or has served as an Associate Editor for IEEE TCST, Advanced Robotics, and SICE JCMSI, and is a member of the Conference Editorial Board of IEEE CSS. He is a senior member of IEEE.

Notes

1 In this equality, we use the homogeneous representation .

2 Pose estimation/regulation problems with the target object velocity estimation have been tackled in [Citation16].

3 For ease of representation, we often simply use ‘0’ to denote zero vectors with appropriate dimensions.

4 This is easily shown by the properties that and can be respectively rewritten by and , and thus means . In this case, also holds.

References

- Ma Y, Soatto S, Košecká J. An invitation to 3-D vision: from images to geometric models. New York (NY): Springer; 2004.

- Hutchinson S, Hager GD, Corke PI. A tutorial on visual servo control. IEEE Trans Robot Autom. 1996 Oct;12(5):651–670. doi: 10.1109/70.538972

- Chaumette F, Hutchinson S. Visual servo control, part I: basic approaches. IEEE Robot Autom Mag. 2006 Dec;13(4):82–90. doi: 10.1109/MRA.2006.250573

- Chaumette F, Hutchinson S. Visual servo control, part II: advanced approaches. IEEE Robot Autom Mag. 2007 Mar;14(1):109–118. doi: 10.1109/MRA.2007.339609

- AlBeladi A, Ripperger E, Hutchinson S, et al. Hybrid eye-in-hand/eye-to-hand image based visual servoing for soft continuum arms. IEEE Robot Autom Lett. 2022 Oct;7(4):11298–11305. doi: 10.1109/LRA.2022.3194690

- Niu G, Yang Q, Gao Y, et al. Vision-based autonomous landing for unmanned aerial and ground vehicles cooperative systems. IEEE Robot Autom Lett. 2022 Jul;7(3):6234–6241. doi: 10.1109/LRA.2021.3101882

- Zhou S, Miao Z, Zhao H, et al. Vision-based control of an industrial vehicle in unstructured environments. IEEE Trans Control Syst Technol. 2022 Mar;30(2):598–610. doi: 10.1109/TCST.2021.3073003

- Guo D, Jin X, Shao D, et al. Image-based regulation of mobile robots without pose measurements. IEEE Control Syst Lett. 2022 Jan;6:2156–2161. doi: 10.1109/LCSYS.2021.3139288

- Rotithor G, Trombetta D, Kamalapurkar R, et al. Full- and reduced-order observers for image-based depth estimation using concurrent learning. IEEE Trans Control Syst Technol. 2021 Nov;29(6):2647–2653. doi: 10.1109/TCST.2020.3036369

- Bell ZI, Deptula P, Doucette EA, et al. Simultaneous estimation of Euclidean distances to a stationary object's features and the Euclidean trajectory of a monocular camera. IEEE Trans Autom Control. 2021 Sep;66(9):4252–4258. doi: 10.1109/TAC.2020.3035597

- Li Y, Wang H, Xie Y, et al. Adaptive image-space regulation for robotic systems. IEEE Trans Control Syst Technol. 2021 Mar;29(2):850–857. doi: 10.1109/TCST.87

- Keenan P, Janabi-Sharifi F, Assa A. Vision-based robotic traversal of textureless smooth surfaces. IEEE Trans Robot. 2020 Aug;36(4):1287–1306. doi: 10.1109/TRO.8860

- Fujita M, Kawai H, Spong MW. Passivity-based dynamic visual feedback control for three dimensional target tracking: stability and L2-gain performance analysis. IEEE Trans Control Syst Technol. 2007 Jan;15(1):40–52. doi: 10.1109/TCST.2006.883236

- Kawai H, Murao T, Fujita M. Passivity-based visual motion observer with panoramic camera for pose control. J Intell Robot Syst. 2011 May;64(3–4):561–583. doi: 10.1007/s10846-011-9557-5

- Ibuki T, Hatanaka T, Fujita M. Visual feedback pose synchronization with a generalized camera model. In: 50th IEEE Conference on Decision and Control and European Control Conference. Orlando (FL): IEEE; 2011. p. 4999–5004.

- Ibuki T, Hatanaka T, Fujita M. Passivity-based visual feedback pose regulation integrating a target motion model in three dimensions. SICE J Control Meas Syst Integr. 2013 Sep;6(5):322–330. doi: 10.9746/jcmsi.6.322

- Yamauchi J, Saito M, Omainska M, et al. Cooperative visual pursuit control with learning of target motion via distributed Gaussian processes under varying visibility. SICE J Control Meas Syst Integr. 2022 Dec;15(2):228–240. doi: 10.1080/18824889.2022.2155454

- Tabuada P. Event-triggered real-time scheduling of stabilizing control tasks. IEEE Trans Autom Control. 2007 Sep;52(9):1680–1685. doi: 10.1109/TAC.2007.904277

- Bemporad A, Heemels M, Johansson M. Networked control systems. London: Springer; 2010.

- Garcia E, Antsaklis PJ. Model-based event-triggered control for systems with quantization and time-varying network delays. IEEE Trans Autom Control. 2013 Feb;58(2):422–434. doi: 10.1109/TAC.2012.2211411

- Ibuki T, Namba Y, Hatanaka T, et al. Passivity-based discrete visual motion observer taking account of camera frame rates. In: 52nd IEEE Conference on Decision and Control. Florence (Italy): IEEE; 2013. p. 7660–7665.

- Ibuki T, Walter JR, Hatanaka T, et al. Frame rate-based discrete visual feedback pose regulation: a passivity approach. IFAC Proc Volumes. 2014;47(3):11171–11176. doi: 10.3182/20140824-6-ZA-1003.02768

- Luenberger DG. An introduction to observers. IEEE Trans Autom Control. 1971 Dec;AC-16(6):596–602. doi: 10.1109/TAC.1971.1099826

- Khalil HK. Nonlinear systems. 3rd ed. Upper Saddle River (NJ): Prentice Hall; 2002.