Abstract

Online cognitive tests have gained popularity in recent years, but their utility needs evaluation. We reviewed the available information on the reliability and validity measures of tests that were designed to be performed online without supervision. We then compared a newly developed web-based and self-administered memory test to traditional neuropsychological tests. We also studied if familiarity with computers affects the willingness to take the test or the test performance. Five hundred thirty-one healthy individuals, who have a history of a perinatal risk and who have been followed up since birth for the potential long-term consequences, participated in a traditional comprehensive neuropsychological assessment at the age of 40. Of them, 234 also completed an online memory test developed for follow-up. The online assessment and traditional neuropsychological tests correlated moderately (total r = .50, p < .001; subtests r = .21−.45). The mean sum scores did not differ between presentation methods (online or traditional) and there was no interaction between presentation method and sex or education. The experience in using computers did not affect the performance, but subjects who used computers often were more likely to take part in the voluntary online test. Our self-administered online test is promising for monitoring memory performance in the follow-up of subjects who have no major cognitive impairments.

1. Introduction

Neuropsychological tests using computers and hand-held devices are gaining popularity, especially in the field of cognitive decline and dementia (Shah et al., 2011). Traditional pen-and-paper tests still dominate clinical practice as well as research, even though they are labor-intensive and may not detect theoretically important new findings in neuroscience (Bilder & Reise, 2019; Price, 2018). Tests using a personal computer, tablet, smartphone, online video, or virtual reality are mostly non-standard and clinically unvalidated (Brearly et al., 2017; Germine et al., 2019; Jongstra et al., 2017; Koo & Vizer, 2019; Zygouris & Tsolaki, 2015). For example, the results of the computerized version of the Trail Making test depend on the tool, i.e., pen, mouse, or touch screen, used in the task (Germine et al., 2019). A benefit of computerized online tests in comparison to pen-and-paper tests is cost efficiency and better accessibility for individuals who may have difficulty traveling to laboratory or healthcare settings due to geographical distances (Casaletto & Heaton, 2017). Computerized tests, both on-site and online, may provide more standardized presentation methods and results with automated scoring (Iverson et al., 2009), and the measurement of reaction times is more accurate (Moore et al., 2016). Computerized bedside and outpatient online tests may be flexibly linked to patient and research databases (Bilder & Reise, 2019). There can also be situations, such as recent pandemic restrictions, where remote online testing becomes a necessity.

Several validated tests for screening and evaluation of circumscribed cognitive problems are available, e.g., Immediate Post-Concussion Assessment and Cognitive Testing (ImPACT) (Iverson et al., 2003; Mielke et al., 2015), Automated Neuropsychological Assessment Metrics (ANAM) (Levinson et al., 2005), and CNS Vital Signs (Gualtieri & Johnson, 2006). These tests have been successfully used for screening and follow-up of effects of traumatic brain injury, multiple sclerosis, human immunodeficiency virus, or psychiatric disorders (Arrieux et al., 2017; Biagianti et al., 2019; Kamminga et al., 2017; Lapshin et al., 2012; Levy et al., 2014). Commercial tablet or computer versions of many of the common neuropsychological tests also exist, and some authors have suggested that we are very close to the point at which administration of all subtests of the Wechsler Adult Intelligence Scale (WAIS) could be performed by a computer (Vrana & Vrana, 2017).

Most of the existing computerized cognitive test instruments are designed to be used in healthcare and research requiring trained personnel resources (Alsalaheen et al., 2016; Farnsworth et al., 2017), but online tests marketed for self-administered screening, especially of the aging population, have also emerged (Hansen et al., 2015, 2016; Kluger et al., 2009; Trustram Eve & de Jager, 2014). Some commercial internet sites for cognitive self-evaluation, e.g., The NeuroCognitive Performance Test (NCPT) (www.lumosity.com) and TestMyBrain (www.testmybrain.org), have shown reliability in initial evaluations (Germine et al., 2019; Morrison et al., 2015). The newest addition is the Great British Intelligence Test that, although not originally designed for the purpose, has been used in monitoring the consequences of the COVID-19 pandemic (Hampshire et al., 2021).

Computerized tests, and especially the online tests of cognition, must have comparable reliability and validity to a gold-standard method to be suited for clinical work. Reviews and meta-analyses mainly focus on the suitability of computerized tests in the follow-up of deviations from baseline measurements, especially in sports-related brain injuries and progressive diseases (Clionsky & Clionsky, 2014; Farnsworth et al., 2017; Maerlender et al., 2010; Zygouris & Tsolaki, 2015). The results have been contradictory. For example, in a meta-analysis of sports-related injuries (Farnsworth et al., 2017) the reliability was only moderate in 53% of the computerized tests. In a review of 17 off-line and online tests (Zygouris & Tsolaki, 2015) most of the reviewed tests were sufficiently valid and reliable for differentiating normal from abnormal. However, the psychometric properties of the reviewed tests were only partly documented, making comparisons difficult. Tasks of the computerized tests that evaluate memory and executive functions are prone to low repeatability (Hansen et al., 2016; Resch et al., 2018; Rijnen et al., 2018), but a learning effect has also been observed in tasks that measure processing speed and reaction time, especially in the first repeated measurement (Fredrickson et al., 2010; Jongstra et al., 2017; Rijnen et al., 2018).

Table 1 summarizes the reliability and validity measures of tests that were designed to be performed online without supervision. Two of these tests were designed for screening or short-term follow-up of the aged (Assmann et al., 2016; Jongstra et al., 2017), and several for use in a wider range of ages (Biagianti et al., 2019; Morrison et al., 2015). One test is designed for use in long-term follow-up (Ruano et al., 2016). Correlations in the validity and reliability studies with standard neuropsychological tests have produced coefficients ranging from r = 0.11 to r = 0.82 (Mielke et al., 2015; Wallace et al., 2017; Zakzanis & Azarbehi, 2014). The repeatability has mostly been acceptable with intra-class correlation (ICC) within 0.29 − 0.89, and Cronbach’s alpha within 0.73 − 0.93 (Feenstra et al., 2018; Hansen et al., 2016). The tests have been found to differentiate patients with progressive memory impairment from the healthy (Jacova et al., 2015; Mackin et al., 2018; Mielke et al., 2015; Morrison et al., 2015).

Table 1 Self-administered tests.

Several hurdles must be overcome to ensure competent and valid application of online assessment, such as quality of the internet connectivity, examinee difficulties with comprehension of instructions, inconsistent effort, or distractions during the test (Casaletto & Heaton, 2017). There has been some research on the effect of familiarity with computer use on test performance, but the results are mixed. Among healthy people, or adults with somatic diseases such as cancer, those who had used computers regularly performed better in tests of psychomotor speed, visual search, complex attentional regulation, and cognitive flexibility. They also had faster reaction times and more efficient keyboard use than those who only used the computer occasionally (Iverson et al., 2009; Zakzanis & Azarbehi, 2014). In contrast, studies of the elderly found that computer experience was not directly related to computer-assisted or online test performance when age and education level were controlled (Feenstra et al., 2018; Hansen et al., 2016; Rentz et al., 2015).

We developed a self-administered online memory test for research use in a cohort of Finnish-speaking middle-aged individuals who have a history of a perinatal risk and who have been followed up from birth for potential long-term consequences. To study convergent validity, we compared the test with standard neuropsychological tests, also administered in Finnish. We also assessed the degree to which demographic variables and reported use of information technology affected participation or performance in the online test.

2. Methods

2.1. Subjects

The subjects are part of the Perinatal Adverse Events and Special Trends in Cognitive Trajectory (PLASTICITY) study, a prospective follow-up cohort of healthy adults born in a single maternity unit in 1971 − 1974 (Hokkanen et al., 2013; Launes et al., 2014; Michelsson et al., 1978). This cohort of initially 1196 newborns with predefined perinatal risks, which typically caused no marked disability, is described in detail elsewhere (Launes et al., 2014). Inclusion required at least one of the following criteria: hyperbilirubinaemia (bilirubin ≥ 340 µmol/L or transfusion), Apgar score < 7 at 5 or 15 min, birthweight < 2000 g, maternal diabetes, marked neurological symptoms (e.g., rigidity or apnoea), hypoglycaemia (blood glucose ≤ 1.67 for full-term and ≤ 1.21 for preterm infants), mechanical ventilation due to poor oxygenation, or severe infection. From 5 years onwards, 845 cohort subjects participated in follow-up, and a control group without perinatal risks (n = 199) has also been followed from childhood. The subjects have been followed in order to study the long-term consequences of the perinatal risks (Immonen et al., 2020; Michelsson et al., 1984; Michelsson & Lindahl, 1993; Schiavone et al., 2019). Individuals with severe disabilities, e.g., cerebral palsy, brain malformations, sensory deficits, and intellectual disabilities, were excluded from further follow-up by the age of five years.

Around the age of 40, a total of 531 subjects participated in cognitive testing and a detailed neurological (JL) and neuroradiological evaluation. They were all community-dwelling adults with normal work history and an education level corresponding to the general population of Finland (Official Statistics of Finland (OSF), 2020). Of them, 234 subjects completed both the online test and a comprehensive neuropsychological test battery, 273 completed the traditional neuropsychological test only, and 24 completed the online test only. The subjects also filled in an extensive questionnaire concerning somatic and mental health, cognition, substance use, occupation, leisure activities, social background, education, social media activity, and information technology skills (Launes & Hokkanen, 2019). The age of the subjects was 42.1 ± 1.3 years. Table 2 gives a description of the whole sample and the subgroups.

Table 2 Demographic information (frequencies and percentages in parentheses) on the whole sample, those with both traditional neuropsychological tests (NPS) and online tests (OLT), those with NPS only, and those with OLT only.

The Ethical Review Board of the Hospital District of Helsinki and Uusimaa has approved the project (journal number: 147/13/03/00/13). Written informed consent was obtained from participants. No sensitive identifying information was stored in the online server during the test.

2.2. Online test

The online test (OLT) was designed to be completed at home, without supervision, using written instructions given on the screen. The test used the SoSci Survey platform (www.soscisurvey.de), which is scalable from smartphones to desktop computers. Desktop, laptop, and tablet computers and smartphones were allowed. Subjects were instructed to take the test without interruption in a quiet environment, but the conditions were not monitored. The SoSci Survey platform recorded a log of the progression times.

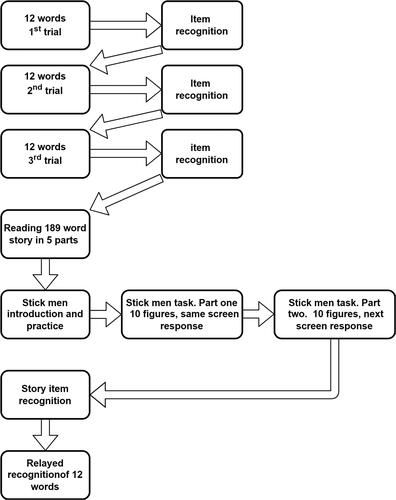

The online test consisted of four tasks focusing mainly on verbal memory: a twelve-item word list learning task with (1) immediate and (2) delayed recognition, (3) a story recognition task, and (4) a visuospatial mental rotation and working memory task. The test required no motor activity, except the use of a mouse or a keyboard. Completing the four tasks took approximately 15 minutes (range 9 − 23 minutes). Figure 1 presents the OLT flow.

Words of the word recognition task were unrelated two-syllable nouns from the 1000 most common words in the Finnish corpus (Saukkonen, 1979). Words were shown one at a time for two seconds, after which they were automatically replaced by the next one. The list of twelve words was presented three times in the same order. Immediate recognition was measured by selecting the correct word from a list of four words after each trial. Recognition order and the order of the alternatives were randomized. After completion, the subject was informed that delayed recall would be measured later. Delayed recognition was tested as the last task of the OLT, and the delay ranged from 7 to 22 minutes depending on the subject’s overall speed. See Appendix 1 for the list of words and their alternatives.

The story recognition test consisted of five short paragraphs (32 − 45 words), displayed on the screen, 189 words in total (see Appendix 2). No alternative forms were used; all subjects read the same story. The story was about shipwrecks in the Baltic Sea and the subjects were informed that parts of the story were factual, while others were imaginary. The Baltic Sea was chosen as the subject topic because at the time, pollution of the Baltic Sea was widely discussed in the media. Also, a well-preserved wreck of the brig ship Vrouw Maria (“Vrouw Maria,” 2018) was recently discovered near the Finnish coast, equally prominently reported in the media. We aimed to reduce the effect of good general knowledge by selecting current themes.

Following the story, the mental rotation (stickmen) task was presented. After this delay, twenty multiple-choice questions were presented about the story. The subject was asked to select one correct statement out of three alternatives, one of which in all cases was “information not given in the story”.

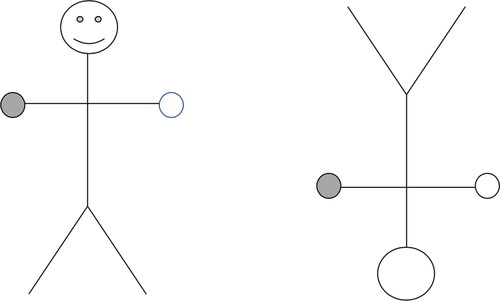

The visuospatial stickmen task was a modification of the Manikin Test (Ratcliff, 1979). There were two presentation types in the task. First, 10 pairs of stick figures were displayed on the screen, one pair at a time. The task was to assess whether the gray ball was held in the same hand by both figures, and the view changed automatically after the response. The subject was told that this was a speed task and should be done as quickly as possible. An example of a stick figure is displayed in Figure 2. In presentation type two, the subject was informed that the test would now require remembering the figures and was shown 10 new pairs for 1 sec at a time, each followed by a separate response screen for 5 sec. The scores of the two presentation types were added (maximum of 20 points).

2.3. Neuropsychological evaluation

The traditional, face-to-face neuropsychological assessment (NPS) involved a comprehensive battery of tests and lasted for approximately three hours. For the present study, we analyzed the tests best corresponding to the online tests. The OLT word list and story recognition tasks were compared with the Wechsler Memory Scale, third edition (WMS-III; Wechsler, 1997) Word list learning immediate and delayed conditions and Logical memory (story A) immediate recall. The visuospatial stickmen test was compared with the immediate recall of the Rey–Osterrieth Complex Figure Test (ROCFT) (Osterrieth, 1944; Rey, 1941). The full-scale intelligence quotient (FSIQ) of the Wechsler Adult Intelligence Scale, version IV (WAIS-IV) (Wechsler, 2012) was used as a measure of general cognitive ability.

2.4. Familiarity of computers and information technology

The frequency of social media use, including a social media addiction questionnaire, and the proficiency in using information technology were evaluated using a questionnaire (Launes & Hokkanen, 2019). We used three items to measure the subject’s frequency of computer use: social media use, computer gaming, and computer use including programming as a hobby. Items were presented on a five-step Likert scale (1–5, from “not at all” to “every day”) and the three items were combined for a total sum score with a maximum of 15 points. The frequency of computer use was re-categorized as low (0–3 points), medium (4–6 points), and high (7 points and above).
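The categorization described above can be illustrated with a minimal sketch. The function name and the 0–4 item coding are assumptions made for illustration only: the coding is chosen so that totals fall in the 0–12 range reported in the Results, and it is not taken from the questionnaire itself.

```python
def computer_use_category(social_media, gaming, hobby):
    """Sum three frequency items and map the total to low/medium/high.

    Assumption: each item is coded 0 ("not at all") to 4 ("every day"),
    so the total ranges from 0 to 12.
    """
    items = (social_media, gaming, hobby)
    if any(not 0 <= value <= 4 for value in items):
        raise ValueError("each item is assumed to be coded 0-4")
    total = sum(items)
    if total <= 3:
        label = "low"       # 0-3 points
    elif total <= 6:
        label = "medium"    # 4-6 points
    else:
        label = "high"      # 7 points and above
    return total, label
```

For example, a hypothetical subject answering 2, 1, and 0 on the three items would receive a total of 3 points and fall in the low category.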

The educational level of the participants was also collected using the questionnaire. The answers were categorized into 4 levels: 9 years or below (corresponds to the obligatory education), 9.5–11 years (corresponds to the vocational school or part of college), 12–15 years (corresponds to college and some years of higher education), and 16 or more years (corresponds to completed university or other higher education degrees).

2.5. Statistical methods

For comparison, all cognitive subtest scores were standardized into z-scores and a sum score was created as the sum of the z-scores of the four subtests. Correlations were estimated by calculating Pearson correlation coefficients. For interpretation, we use the effect size conventions: a coefficient of .10 represents a weak or small association, a coefficient of .30 a moderate, and a coefficient of .50 or above a strong or large correlation (Cohen, 1988). Sum score differences between presentation methods (NPS or OLT) and the interaction effects of sex and education were evaluated using a general linear model for repeated measures. Ceiling and floor effects were evaluated by comparing the means and standard deviations to the maximum and minimum values, respectively. For classification of the relative performance level in NPS and OLT, three groups corresponding to low, medium, and high performance were created using the cut-off points of the 25th and 75th percentile of the participants’ performance. As the distribution of information technology experience measures was skewed, we used three categories formed from the total score and nonparametric methods (Kruskal–Wallis H, one-way analysis of variance, and the χ2-test). The effect of computer use levels on cognitive tests was evaluated with univariate general linear models with sex and education included as covariates. IBM Statistical Package for the Social Sciences (SPSS), version 24.0, was used for the analyses.
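The scoring steps above (z-standardization, sum scores, Pearson correlation, effect size labels, and percentile-based performance groups) can be sketched as follows. This is an illustrative sketch using only the Python standard library, not the SPSS procedure used in the study. All function names are hypothetical, the use of the sample standard deviation is an assumption, the "negligible" label for coefficients below .10 is added for completeness, and the handling of scores that fall exactly on a percentile cut-off is an assumption.

```python
import math
from statistics import mean, quantiles, stdev


def z_scores(values):
    """Standardize raw scores (assumption: sample standard deviation)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def sum_scores(subtests):
    """Sum the z-scores across subtests; one list of raw scores per subtest."""
    standardized = [z_scores(scores) for scores in subtests]
    return [sum(per_subject) for per_subject in zip(*standardized)]


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    numerator = sum((a - mx) * (b - my) for a, b in zip(x, y))
    denominator = math.sqrt(
        sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)
    )
    return numerator / denominator


def cohen_label(r):
    """Label a coefficient using the .10 / .30 / .50 conventions."""
    size = abs(r)
    if size >= 0.50:
        return "strong"
    if size >= 0.30:
        return "moderate"
    if size >= 0.10:
        return "weak"
    return "negligible"  # label below .10 is an assumption


def performance_groups(scores):
    """Split scores at the 25th and 75th percentiles.

    Assumption: scores exactly on a cut-off are placed in the medium group.
    """
    q25, _, q75 = quantiles(scores, n=4)
    return [
        "low" if s < q25 else "high" if s > q75 else "medium" for s in scores
    ]
```

With these conventions, the reported subtest correlations of .21 to .45 would be labeled weak to moderate, and the sum score correlation of .50 would be labeled strong.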

3. Results

Raw score means in the subgroup that participated in both test presentation methods (n = 234) are given in Table 3. The online tests correlated significantly with corresponding traditional neuropsychological tests (r = .21−.45 depending on the subtest), see Table 4. The strongest correlations were found between the OLT word list recognition and the NPS WMS-III Word list, both immediately (r = .45, p < .001) and delayed (r = .41, p < .001).

Table 3 Raw scores of traditional neuropsychological (NPS) and online tests (OLT) in the sample with both NPS and OLT available (n = 234).

Table 4 Pearson product moment correlations of online test with traditional neuropsychological tests (n = 234).

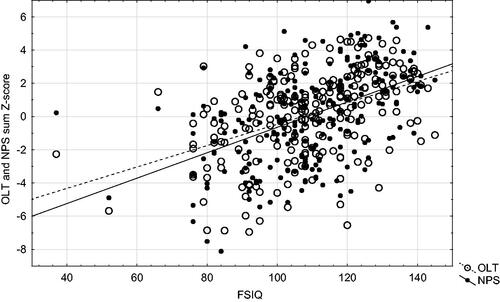

The NPS sum score correlated with the OLT sum score (r = .50, p < .001). In the OLT word list delayed recognition task, the mean was within one standard deviation of the maximum, indicating a ceiling effect. No other indications of floor or ceiling effects were found. The correlation between the OLT sum score and the FSIQ (r = .45, p < .001) was similar to the correlation between the NPS sum score and FSIQ (r = .49, p < .001), see Figure 3. The presentation method (OLT or NPS) did not affect the cognitive performance. There was no statistically significant difference between mean sum scores of OLT and NPS tests, F(1,230) = 0.118, p = .731. Neither sex, F(1,230) = 0.775, p = .380, nor education, F(1,230) = 0.324, p = .570, interacted with the presentation method in the model.

Figure 3 Association of the online (OLT) and traditional neuropsychological (NPS) test sum scores to Full Scale Intelligence Quotient (FSIQ).

When categorized for individual performance, subjects with low performance in NPS tests also tended to show low performance in the OLT, and high performers tended to show high scores in both tests (χ2 (4) = 48.62, p < .001), see Table 5. Two subjects had high performance in the OLT but low performance in the NPS tests. They had average intelligence (FSIQ 108 and 98) and neither reported high experience in computer use in the questionnaire (2/12 and 5/12 points). Seven subjects had high performance in NPS, but low performance in OLT. They had average or high FSIQ (mean 111, range 91 − 129). In all of these seven, fluctuation of performance was observed within the OLT subtests, and all but one spent less than the mean time on the test. These subjects had low experience in computer use (0 − 4/12 points). Seven of the nine subjects with inconsistent performance had a mood or anxiety disorder.
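The χ2 statistic for a cross-tabulation of performance groups can be computed as in the following sketch. The function name is hypothetical and the counts are invented for illustration; they are not the study data. A 3 × 3 table yields (3 − 1)(3 − 1) = 4 degrees of freedom, as in the test reported above.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


# Hypothetical counts (rows: NPS low/medium/high; columns: OLT low/medium/high).
counts = [
    [20, 15, 5],
    [15, 40, 15],
    [5, 15, 20],
]
statistic, dof = chi_square(counts)
print(f"chi2({dof}) = {statistic:.2f}")
```

The p-value would then be obtained from the χ2 distribution with the given degrees of freedom, which is not implemented here.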

Table 5 Performance groups of the traditional neuropsychological (NPS) and online tests (OLT).

In the whole sample, the median score of computer use frequency was 4 on a range of 0–12. A total of 231 (44%) subjects reported low (0–3 points), 196 (37%) medium (4–6 points), and 42 (8%) high use (7–12 points) of computers, see . Overall computer experience was related to taking the OLT, χ2 (4) = 17.91, p = .001. Education, χ2 (6) = 15.60, p = .016, was also associated with participation, but sex was not, χ2 (2) = 1.62, p = .444. Computer experience and education were associated, χ2 (6) = 13.11, p = .041.

When the three aspects of computer use were analyzed separately, social media use (H (2) = 7.76, p = 0.021) and computers as a hobby (H (2) = 16.92, p < 0.001) influenced participation in the OLT. Frequent gaming had no association with OLT participation (H (2) = 4.30, p = 0.117).

Computer use frequency was not associated with performance in the NPS, F (2,446) = 0.020, p = .980, or the OLT, F (2,240) = 1.370, p = .256. There were also no differences between the groups in models where sex and education were controlled for.

4. Discussion

The performance in the online test correlated moderately with the performance in the corresponding standard neuropsychological tests, suggesting convergent validity for the online and traditional memory testing. The online test also correlated with the Full-Scale Intelligence Quotient. The correlations between all subtests of the online test and the corresponding traditional tests were statistically significant. The mean sum scores did not differ between presentation methods and there was no interaction between presentation methods and sex or education. The familiarity of using computers did not affect the performance, but subjects who used computers often were more likely to take part in the voluntary online test.

Overall, performance in the online test corresponded to the performance in traditional neuropsychological tests and was not influenced by gender or education any more than the standard neuropsychological tests are. The correlations were only moderate but in line with previous validity studies of online tests: 0.30 − 0.75 (Trustram Eve & de Jager, 2014), 0.49 − 0.63 (Hansen et al., 2015), 0.40 − 0.70 (Jacova et al., 2015), and 0.17 − 0.51 (Rentz et al., 2015). Stronger correlations have been found in online tests that most resembled the traditional neuropsychological tests (Kluger et al., 2009; Morrison et al., 2015, 2018; Wallace et al., 2017). The correlations of both the OLT and NPS tasks with the FSIQ suggest that memory is also associated with general cognitive ability, potentially through the association between working memory and intelligence or the general g-factor (Colom et al., 2004; Süß et al., 2002).

The online word list learning task had the highest correlation coefficient with the corresponding Wechsler WMS-III item, and also moderate correlations with most other neuropsychological tasks. The only non-significant correlations for the OLT word list learning and delayed recognition tasks emerged with the NPS Rey visual memory task, which suggests an at least partially specific verbal memory element. Previous studies have also found that the computerized word list learning tasks agree best with standard neuropsychological tests (Feenstra et al., 2018; Hansen et al., 2016; Morrison et al., 2018). This suggests that word-learning tasks are useful when presented online. In our study, the online 12-word learning task had a ceiling effect, especially in the delayed recognition, suggesting that the online task should have been more demanding to enhance sensitivity. Other studies have also found ceiling effects in the online word list recognition tasks (Feenstra et al., 2018; Hansen et al., 2016; Trustram Eve & de Jager, 2014). In a longitudinal follow-up of the gradually ageing population, however, memory performance tends to decrease rather than increase (Rönnlund et al., 2005; Salthouse, 2019; Schaie, 2005), and despite the potential learning effects in repeated testing, performance that was at ceiling initially may not be so 10 or 20 years later. No floor effects were found. Increasing the length of the list would be an option, but more difficult and complex online tests might not optimally solve this problem, as subjects may be tempted to discontinue difficult tasks. Other solutions include presenting the words in an auditory manner. Word recall, in turn, could be accomplished online by writing or using a speech recognition program instead of recognition to increase difficulty (Feenstra et al., 2018; Morrison et al., 2015). However, more technically advanced methods of presentation and input may demand costly, complex, and difficult-to-operate equipment, which can be an obstacle for self-administration of tests.

The online story recognition task correlated significantly with the WMS-III Logical memory task, but had an even higher correlation coefficient with the WMS-III Word list learning task. As its correlation coefficient was the lowest with the NPS Rey immediate recall, the task appears to measure verbal rather than non-verbal memory. The relatively weak correlation between the two story recall tasks may be caused by the different presentation (reading versus auditory) and recall (recognition with a slight delay versus spontaneous immediate recall) modes used in the online test. Reading time was not controlled in the online test, and it cannot be excluded that the subjects repeated reading the paragraph before moving forward with the screens. A time limit would increase the similarity to the standard test. However, as subjects progressed at their own pace, the situation resembled more a natural situation where reading material needs to be memorized. Because we anticipated the online test to be easier than the WMS-III due to the way it was presented, we added questions that could not be answered based on the text and also the response option “information not given” in all items. The purpose of this was to complicate the task and to make room for the false memory phenomenon.

Another relatively low correlation between the online test and the standard neuropsychological test was found for the stickmen mental rotation task, which correlated only modestly with the Rey–Osterrieth Complex Figure task and not at all with the other tests. The stickmen task shares cognitive, especially visuospatial, elements with the ROCFT, but the two are not directly comparable. In the ROCFT, executive functions, visual perception, and figural memory have a greater role than in our stickmen task, which requires more visual mental rotation and working memory. Mental rotation ability has been linked to right hemisphere functions with a strong parietal involvement (Corballis, 1997; Hattemer et al., 2011; Morton & Morris, 1995), but our version added a working memory component that was not included in the original Manikin Test (Ratcliff, 1979), increasing and modifying the task demands. In previous studies of self-administered tests, visual memory has often been measured with tasks more closely resembling traditional neuropsychological tests, which likely explains higher correlations (Jacova et al., 2015; Wallace et al., 2017).

We observed that experience with computers did not affect the performance in either the online or the standard paper-and-pencil test, with or without sex and education being taken into account. A similar observation was made in previous studies among the elderly (Fazeli et al., 2013; Hansen et al., 2016; Jacova et al., 2015). Among healthy individuals, experience with computer use has been found to affect reaction times and processing speed (Iverson et al., 2009; Lee Meeuw Kjoe et al., 2021), but since our test focused on memory, speed was not directly evaluated. In individual cases, the discrepancy between high performance in standard tests and low performance in the online tests could perhaps be caused by low computer use. In previous studies, attitudes toward information technology have been found to affect task performance even more than the use of technology (Fazeli et al., 2013; Ruano et al., 2016). This was not studied here.

Unsurprisingly, the use of computers seemed to explain whether a subject is willing to participate in an online test. Subjects who use social media or program computers as a hobby were more likely to participate in the voluntary online test. Computer experience and education were associated, and also higher education was linked to higher online participation. The effect of age was not assessed in this study, as all the subjects in the cohort were generally the same age, around 42. The results of our study may therefore not be generalizable to younger or older generations. Our subjects differ from the present time adolescents and young adults, sometimes referred to as “diginatives,” as well as from older subjects who mostly have only little computer experience.

Computerized tests for assessing cognitive performance face many challenges that may lead to erroneous or completely invalid results, e.g., with regard to internet connectivity, inconsistent effort, distractions during the test, test standardization, and how instructions are given (Casaletto & Heaton, 2017; Gates & Kochan, 2015). In the present study, nine subjects were found to have inconsistent performance when the overall online and traditional performance levels were compared, and some of the relatively poor online performance may have been due to fluctuating attention. The reason for this could not be investigated because no monitoring of the environment or test-taking conditions was applied. Comparing test performance between supervised and non-supervised conditions has yielded conflicting results. Performance in the non-supervised test has been found to be better (Mielke et al., 2015), worse (Feenstra et al., 2018), or similar (Rentz et al., 2015) compared to the supervised conditions. Distracting factors like noise, interruptions, and fatigue cannot be controlled without observation. The subject’s inability to understand instructions may go unnoticed. The use of tests completed independently has been reported to be mostly successful, however, with a low frequency of discontinuations or failures to complete the test due to lack of skill or technical problems (Feenstra et al., 2018; Jongstra et al., 2017; Levy et al., 2014; Rentz et al., 2015; Tierney & Charles, 2014). A questionnaire confirming a successful test would be helpful after the online test.

There are limitations in the study that warrant consideration. No test-retest reliability analyses have been conducted, as we had no repeated measurements with the OLT. For a longitudinal study design, that should be evaluated separately. Also, in order to use the method for diagnostic or other purposes beyond the follow-up of a single longitudinal cohort, normative studies should be done. Our study was aimed at finding comparable traditional tests to compare the OLT with, but for a more detailed discriminant validity analysis a wider variety of traditional test methods could be used. As the OLT tasks were memory oriented, the traditional methods were memory oriented as well. The OLT stickmen task had very low correlations with the other tasks, which partly suggests that it, especially in its first presentation type, measured something different. Still, we cannot be certain whether other non-memory tests would have confirmed the memory specificity of the measure. Adding more measures and then conducting a factor analysis to confirm the structural elements of the test could be useful. Finally, the study population was a well-defined cohort based on consecutive deliveries in a single maternity unit with data collected prospectively since birth. We did not analyze the differences between risk and control subjects because that was not the focus of the present paper.
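As an illustration of the test-retest analysis mentioned above, the following is a minimal sketch that assumes two administrations of the OLT to the same subjects and uses a plain Pearson correlation as the reliability coefficient. The function name `pearson_r` and all scores are hypothetical and invented for illustration; no repeated OLT measurements exist in the present data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary within each session")
    return cov / sqrt(var_x * var_y)


# Hypothetical sum scores for six subjects (assumption, not study data)
first_session = [41, 35, 48, 29, 44, 38]
second_session = [43, 33, 47, 31, 45, 36]

retest_r = pearson_r(first_session, second_session)
print(f"test-retest r = {retest_r:.2f}")
```

A correlation of this kind ignores systematic practice effects between sessions; an intraclass correlation coefficient, which is commonly reported for test-retest reliability, may be preferable in an actual analysis.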

The speed of development of new hardware and, consequently, the speed at which hardware becomes obsolete present a hard challenge for software developers (Germine et al., Citation2019; Mielke et al., Citation2015). A desirable computerized test is generalizable to many ages (Casaletto & Heaton, Citation2017; Gates & Kochan, Citation2015). Almost all information technology hardware is currently designed to operate the online graphical user interface in a fairly uniform manner, reducing the distraction caused by hardware. Our online test was designed for a long-term follow-up study to be repeated at five- to ten-year intervals. The test is easy to present using several online platforms, and it measures memory and cognition, producing results comparable with those of traditional tests. While test-retest reliability needs to be evaluated separately, based on the results so far the method should be useful in monitoring memory performance if applied longitudinally in a healthy middle-aged sample with no major cognitive impairments. Computer experience, while being associated with the willingness to participate in the online test, did not affect test performance, but a wider evaluation of attitudes toward information technology should be included in future studies. Also, a questionnaire or other methods to confirm the test-taking conditions should be added.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Alsalaheen, B., Stockdale, K., Pechumer, D., & Broglio, S. P. (2016). Measurement Error in the Immediate Postconcussion Assessment and Cognitive Testing (ImPACT): Systematic review. Journal of Head Trauma Rehabilitation, 31(4), 242–251. https://doi.org/10.1097/HTR.0000000000000175

- Arrieux, J. P., Cole, W. R., & Ahrens, A. P. (2017). A review of the validity of computerized neurocognitive assessment tools in mild traumatic brain injury assessment. Concussion (London, England), 2(1), CNC31. https://doi.org/10.2217/cnc-2016-0021

- Assmann, K. E., Bailet, M., Lecoffre, A. C., Galan, P., Hercberg, S., Amieva, H., & Kesse-Guyot, E. (2016). Comparison between a self-administered and supervised version of a web-based cognitive test battery: Results from the NutriNet-Santé Cohort Study. Journal of Medical Internet Research, 18(4), e68. https://doi.org/10.2196/jmir.4862

- Biagianti, B., Fisher, M., Brandrett, B., Schlosser, D., Loewy, R., Nahum, M., & Vinogradov, S. (2019). Development and testing of a web-based battery to remotely assess cognitive health in individuals with schizophrenia. Schizophrenia Research, 208, 250–257. https://doi.org/10.1016/j.schres.2019.01.047

- Bilder, R. M., & Reise, S. P. (2019). Neuropsychological tests of the future: How do we get there from here? The Clinical Neuropsychologist, 33(2), 220–245. https://doi.org/10.1080/13854046.2018.1521993

- Brearly, T. W., Shura, R. D., Martindale, S. L., Lazowski, R. A., Luxton, D. D., Shenal, B. V., & Rowland, J. A. (2017). Neuropsychological test administration by videoconference: A systematic review and meta-analysis. Neuropsychology Review, 27(2), 174–186. https://doi.org/10.1007/s11065-017-9349-1

- Casaletto, K. B., & Heaton, R. K. (2017). Neuropsychological assessment: Past and future. Journal of the International Neuropsychological Society, 23(9/10), 778–790. https://doi.org/10.1017/S1355617717001060

- Clionsky, M., & Clionsky, E. (2014). Psychometric equivalence of a paper-based and computerized (iPad) version of the Memory Orientation Screening Test (MOST®). The Clinical Neuropsychologist, 28(5), 747–755. https://doi.org/10.1080/13854046.2014.913686

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge Academic.

- Colom, R., Rebollo, I., Palacios, A., Juan-Espinosa, M., & Kyllonen, P. C. (2004). Working memory is (almost) perfectly predicted by g. Intelligence, 32(3), 277–296. https://doi.org/10.1016/j.intell.2003.12.002

- Corballis, M. C. (1997). Mental rotation and the right hemisphere. Brain and Language, 57(1), 100–121. https://doi.org/10.1006/brln.1997.1835

- Farnsworth, J. L., Dargo, L., Ragan, B. G., & Kang, M. (2017). Reliability of computerized neurocognitive tests for concussion assessment: A meta-analysis. Journal of Athletic Training, 52(9), 826–833. https://doi.org/10.4085/1062-6050-52.6.03

- Fazeli, P. L., Ross, L. A., Vance, D. E., & Ball, K. (2013). The relationship between computer experience and computerized cognitive test performance among older adults. The Journals of Gerontology. Series B, Psychological Sciences and Social Sciences, 68(3), 337–346. https://doi.org/10.1093/geronb/gbs071

- Feenstra, H. E. M., Murre, J. M. J., Vermeulen, I. E., Kieffer, J. M., & Schagen, S. B. (2018). Reliability and validity of a self-administered tool for online neuropsychological testing: The Amsterdam Cognition Scan. Journal of Clinical and Experimental Neuropsychology, 40(3), 253–273. https://doi.org/10.1080/13803395.2017.1339017

- Fredrickson, J., Maruff, P., Woodward, M., Moore, L., Fredrickson, A., Sach, J., & Darby, D. (2010). Evaluation of the usability of a brief computerized cognitive screening test in older people for epidemiological studies. Neuroepidemiology, 34(2), 65–75. https://doi.org/10.1159/000264823

- Gates, N. J., & Kochan, N. A. (2015). Computerized and on-line neuropsychological testing for late-life cognition and neurocognitive disorders: Are we there yet? Current Opinion in Psychiatry, 28(2), 165–172. https://doi.org/10.1097/YCO.0000000000000141

- Germine, L., Reinecke, K., & Chaytor, N. S. (2019). Digital neuropsychology: Challenges and opportunities at the intersection of science and software. The Clinical Neuropsychologist, 33(2), 271–286. https://doi.org/10.1080/13854046.2018.1535662

- Gualtieri, C., & Johnson, L. (2006). Reliability and validity of a computerized neurocognitive test battery, CNS vital signs. Archives of Clinical Neuropsychology : The Official Journal of the National Academy of Neuropsychologists, 21(7), 623–643. https://doi.org/10.1016/j.acn.2006.05.007

- Hampshire, A., Trender, W., Chamberlain, S. R., Jolly, A. E., Grant, J. E., Patrick, F., Mazibuko, N., Williams, S. C., Barnby, J. M., Hellyer, P., & Mehta, M. A. (2021). Cognitive deficits in people who have recovered from COVID-19. EClinical Medicine, 39, 101044. https://doi.org/10.1016/j.eclinm.2021.101044

- Hansen, T. I., Haferstrom, E. C. D., Brunner, J. F., Lehn, H., & Håberg, A. K. (2015). Initial validation of a web-based self-administered neuropsychological test battery for older adults and seniors. Journal of Clinical and Experimental Neuropsychology, 37(6), 581–594. https://doi.org/10.1080/13803395.2015.1038220

- Hansen, T. I., Lehn, H., Evensmoen, H. R., & Håberg, A. K. (2016). Initial assessment of reliability of a self-administered web-based neuropsychological test battery. Computers in Human Behavior, 63, 91–97. https://doi.org/10.1016/j.chb.2016.05.025

- Hattemer, K., Plate, A., Heverhagen, J. T., Haag, A., Keil, B., Klein, K. M., Hermsen, A., Oertel, W. H., Hamer, H. M., Rosenow, F., & Knake, S. (2011). Determination of hemispheric dominance with mental rotation using functional transcranial Doppler sonography and FMRI. Journal of Neuroimaging: Official Journal of the American Society of Neuroimaging, 21(1), 16–23. https://doi.org/10.1111/j.1552-6569.2009.00402.x

- Hokkanen, L., Launes, J., & Michelsson, K. (2013). The Perinatal Adverse events and Special Trends in Cognitive Trajectory (PLASTICITY) - pre-protocol for a prospective longitudinal follow-up cohort study. F1000Research, 2, 50. https://doi.org/10.12688/f1000research.2-50.v1

- Immonen, S., Launes, J., Järvinen, I., Virta, M., Vanninen, R., Schiavone, N., Lehto, E., Tuulio-Henriksson, A., Lipsanen, J., Michelsson, K., & Hokkanen, L. (2020). Moderate alcohol use is associated with decreased brain volume in early middle age in both sexes. Scientific Reports, 10(1), 13998. https://doi.org/10.1038/s41598-020-70910-5

- Iverson, G. L., Brooks, B. L., Ashton, V. L., Johnson, L. G., & Gualtieri, C. T. (2009). Does familiarity with computers affect computerized neuropsychological test performance? Journal of Clinical and Experimental Neuropsychology, 31(5), 594–604. https://doi.org/10.1080/13803390802372125

- Iverson, G. L., Lovell, M. R., & Collins, M. W. (2003). Interpreting change on ImPACT following sport concussion. The Clinical Neuropsychologist, 17(4), 460–467. https://doi.org/10.1076/clin.17.4.460.27934

- Jacova, C., McGrenere, J., Lee, H. S., Wang, W. W., Le Huray, S., Corenblith, E. F., Brehmer, M., Tang, C., Hayden, S., Beattie, B. L., & Hsiung, G.-Y R. (2015). C-TOC (Cognitive Testing on Computer): Investigating the usability and validity of a novel self-administered cognitive assessment tool in aging and early dementia. Alzheimer Disease and Associated Disorders, 29(3), 213–221. https://doi.org/10.1097/WAD.0000000000000055

- Jongstra, S., Wijsman, L. W., Cachucho, R., Hoevenaar-Blom, M. P., Mooijaart, S. P., & Richard, E. (2017). Cognitive testing in people at increased risk of dementia using a Smartphone App: The iVitality proof-of-principle study. JMIR mHealth and uHealth, 5(5), e68. https://doi.org/10.2196/mhealth.6939

- Kamminga, J., Bloch, M., Vincent, T., Carberry, A., Brew, B. J., & Cysique, L. A. (2017). Determining optimal impairment rating methodology for a new HIV-associated neurocognitive disorder screening procedure. Journal of Clinical and Experimental Neuropsychology, 39(8), 753–767. https://doi.org/10.1080/13803395.2016.1263282

- Kluger, B. M., Saunders, L. V., Hou, W., Garvan, C. W., Kirli, S., Efros, D. B., Chau, Q.-A N., Crucian, G. P., Finney, G. R., Meador, K. J., & Heilman, K. M. (2009). A brief computerized self-screen for dementia. Journal of Clinical and Experimental Neuropsychology, 31(2), 234–244. https://doi.org/10.1080/13803390802317559

- Koo, B. M., & Vizer, L. M. (2019). Mobile technology for cognitive assessment of older adults: A scoping review. Innovation in Aging, 3(1), igy038. https://doi.org/10.1093/geroni/igy038

- Lapshin, H., O'Connor, P., Lanctôt, K. L., & Feinstein, A. (2012). Computerized cognitive testing for patients with multiple sclerosis. Multiple Sclerosis and Related Disorders, 1(4), 196–201. https://doi.org/10.1016/j.msard.2012.05.001

- Launes, J., & Hokkanen, L. (2019). PLASTICITY-study questionnaire. Zenodo, https://doi.org/10.5281/zenodo.3594674

- Launes, J., Hokkanen, L., Laasonen, M., Tuulio-Henriksson, A., Virta, M., Lipsanen, J., Tienari, P. J., & Michelsson, K. (2014). Attrition in a 30-year follow-up of a perinatal birth risk cohort: Factors change with age. PeerJ, 2, e480. https://doi.org/10.7717/peerj.480

- Lee Meeuw Kjoe, P. R., Agelink van Rentergem, J. A., Vermeulen, I. E., & Schagen, S. B. (2021). How to correct for computer experience in online cognitive testing? Assessment, 28(5), 1247–1255. https://doi.org/10.1177/1073191120911098

- Levinson, D., Reeves, D., Watson, J., & Harrison, M. (2005). Automated neuropsychological assessment metrics (ANAM) measures of cognitive effects of Alzheimer's disease. Archives of Clinical Neuropsychology, 20(3), 403–408. https://doi.org/10.1016/j.acn.2004.09.001

- Levy, B., Celen-Demirtas, S., Surguladze, T., Eranio, S., & Ellison, J. (2014). Neuropsychological screening as a standard of care during discharge from psychiatric hospitalization: The preliminary psychometrics of the CNS Screen. Psychiatry Research, 215(3), 790–796. https://doi.org/10.1016/j.psychres.2014.01.010

- Mackin, R. S., Insel, P. S., Truran, D., Finley, S., Flenniken, D., Nosheny, R., Ulbright, A., Comacho, M., Bickford, D., Harel, B., Maruff, P., & Weiner, M. W. (2018). Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: Results from the Brain Health Registry. Alzheimer's & Dementia: Diagnosis, Assessment & Disease Monitoring, 10(1), 573–582. https://doi.org/10.1016/j.dadm.2018.05.005

- Maerlender, A., Flashman, L., Kessler, A., Kumbhani, S., Greenwald, R., Tosteson, T., & McAllister, T. (2010). Examination of the construct validity of ImPACT™ computerized test, traditional, and experimental neuropsychological measures. The Clinical Neuropsychologist, 24(8), 1309–1325. https://doi.org/10.1080/13854046.2010.516072

- Michelsson, K., & Lindahl, E. (1993). Relationship between perinatal risk factors and motor development at the ages of 5 and 9 years. In A. F. Kalverboer, B. Hopkins, & R. Geuze (Eds.), Motor Development in Early and Later Childhood. (1st ed., pp. 266–285). Cambridge University Press. https://doi.org/10.1017/CBO9780511663284.019

- Michelsson, K., Lindahl, E., Parre, M., & Helenius, M. (1984). Nine-year follow-up of infants weighing 1 500 g or less at birth. Acta Paediatrica Scandinavica, 73(6), 835–841. https://doi.org/10.1111/j.1651-2227.1984.tb17784.x

- Michelsson, K., Ylinen, A., Saarnivaara, A., & Donner, M. (1978). Occurrence of risk factors in newborn infants. A study of 22359 consecutive cases. Annals of Clinical Research, 10(6), 334–336.

- Mielke, M. M., Machulda, M. M., Hagen, C. E., Edwards, K. K., Roberts, R. O., Pankratz, V. S., Knopman, D. S., Jack, C. R., & Petersen, R. C. (2015). Performance of the CogState computerized battery in the Mayo Clinic Study on Aging. Alzheimer's & Dementia : The Journal of the Alzheimer's Association, 11(11), 1367–1376. https://doi.org/10.1016/j.jalz.2015.01.008

- Moore, T. M., Reise, S. P., Roalf, D. R., Satterthwaite, T. D., Davatzikos, C., Bilker, W. B., Port, A. M., Jackson, C. T., Ruparel, K., Savitt, A. P., Baron, R. B., Gur, R. E., & Gur, R. C. (2016). Development of an itemwise efficiency scoring method: Concurrent, convergent, discriminant, and neuroimaging-based predictive validity assessed in a large community sample. Psychological Assessment, 28(12), 1529–1542. https://doi.org/10.1037/pas0000284

- Morrison, G. E., Simone, C. M., Ng, N. F., & Hardy, J. L. (2015). Reliability and validity of the NeuroCognitive Performance Test, a web-based neuropsychological assessment. Frontiers in Psychology, 6, 1652. https://doi.org/10.3389/fpsyg.2015.01652

- Morrison, R. L., Pei, H., Novak, G., Kaufer, D. I., Welsh‐Bohmer, K. A., Ruhmel, S., & Narayan, V. A. (2018). A computerized, self‐administered test of verbal episodic memory in elderly patients with mild cognitive impairment and healthy participants: A randomized, crossover, validation study. Alzheimer's & Dementia: Diagnosis, Assessment & Disease Monitoring, 10(1), 647–656. https://doi.org/10.1016/j.dadm.2018.08.010

- Morton, N., & Morris, R. G. (1995). Image transformation dissociated from visuospatial working memory. Cognitive Neuropsychology, 12(7), 767–791. https://doi.org/10.1080/02643299508251401

- Official Statistics of Finland (OSF). (2020). Educational structure of population [e-publication]. https://www.stat.fi/tup/suoluk/suoluk_koulutus_en.html

- Osterrieth, P. A. (1944). Le test de copie d’une figure complexe; contribution a l’etude de la perception et de la memoire. Archives de Psychologie, 30, 286–356.

- Price, C. J. (2018). The evolution of cognitive models: From neuropsychology to neuroimaging and back. Cortex; a Journal Devoted to the Study of the Nervous System and Behavior, 107, 37–49. https://doi.org/10.1016/j.cortex.2017.12.020

- Ratcliff, G. (1979). Spatial thought, mental rotation and the right cerebral hemisphere. Neuropsychologia, 17(1), 49–54. https://doi.org/10.1016/0028-3932(79)90021-6

- Rentz, D. M., Dekhtyar, M., Sherman, J., Burnham, S., Blacker, D., Aghjayan, S. L., Papp, K. V., Amariglio, R. E., Schembri, A., Chenhall, T., Maruff, P., Aisen, P., Hyman, B. T., & Sperling, R. A. (2015). The feasibility of at-home iPad cognitive testing for use in clinical trials. The Journal of Prevention of Alzheimer's Disease, 3, 8–12. https://doi.org/10.14283/jpad.2015.78

- Resch, J. E., Schneider, M. W., & Munro Cullum, C. (2018). The test-retest reliability of three computerized neurocognitive tests used in the assessment of sport concussion. International Journal of Psychophysiology, 132, 31–38. https://doi.org/10.1016/j.ijpsycho.2017.09.011

- Rey, A. (1941). L’examen psychologique dans les cas d’encéphalopathie traumatique.(Les problems.). Archives de Psychologie, 28, 218–285.

- Rijnen, S. J. M., van der Linden, S. D., Emons, W. H. M., Sitskoorn, M. M., & Gehring, K. (2018). Test–retest reliability and practice effects of a computerized neuropsychological battery: A solution-oriented approach. Psychological Assessment, 30(12), 1652–1662. https://doi.org/10.1037/pas0000618

- Rönnlund, M., Nyberg, L., Bäckman, L., & Nilsson, L.-G. (2005). Stability, growth, and decline in adult life span development of declarative memory: cross-sectional and longitudinal data from a population-based study. Psychology and Aging, 20(1), 3–18. https://doi.org/10.1037/0882-7974.20.1.3

- Ruano, L., Sousa, A., Severo, M., Alves, I., Colunas, M., Barreto, R., Mateus, C., Moreira, S., Conde, E., Bento, V., Lunet, N., Pais, J., & Tedim Cruz, V. (2016). Development of a self-administered web-based test for longitudinal cognitive assessment. Scientific Reports, 6(1), 19114. https://doi.org/10.1038/srep19114

- Salthouse, T. A. (2019). Trajectories of normal cognitive aging. Psychology and Aging, 34(1), 17–24. https://doi.org/10.1037/pag0000288

- Saukkonen, P. (1979). Suomen kielen taajuussanasto. Frequency dictionary of Finnish. WSOY.

- Schaie, K. W. (2005). Developmental influences on adult intelligence: The Seattle longitudinal study. Oxford University Press.

- Schiavone, N., Virta, M., Leppämäki, S., Launes, J., Vanninen, R., Tuulio-Henriksson, A., Immonen, S., Järvinen, I., Lehto, E., Michelsson, K., & Hokkanen, L. (2019). ADHD and subthreshold symptoms in childhood and life outcomes at 40 years in a prospective birth-risk cohort. Psychiatry Research, 281, 112574. https://doi.org/10.1016/j.psychres.2019.112574

- Shah, M. M., Hassan, R., & Embi, R. (2011). Technological changes and its relationship with computer anxiety in commercial banks. In Proceedings of the 2nd International Research Symposium in Service Management (pp. 288–295).

- Süß, H.-M., Oberauer, K., Wittmann, W. W., Wilhelm, O., & Schulze, R. (2002). Working-memory capacity explains reasoning ability—and a little bit more. Intelligence, 30(3), 261–288. https://doi.org/10.1016/S0160-2896(01)00100-3

- Tierney, M. C., & Charles, J. (2014). Feasibility and validity of the self-administered computerized assessment of mild cognitive impairment with older primary care patients. Alzheimer Disease and Associated Disorders, 28(4), 9. https://doi.org/10/f6q4d3

- Trustram Eve, C., & de Jager, C. A. (2014). Piloting and validation of a novel self-administered online cognitive screening tool in normal older persons: The cognitive function test: Online screening tool for cognitive impairment: the CFT. International Journal of Geriatric Psychiatry, 29(2), 198–206. https://doi.org/10.1002/gps.3993

- Vrana, S. R., & Vrana, D. T. (2017). Can a computer administer a Wechsler Intelligence Test? Professional Psychology: Research and Practice, 48(3), 191–198. https://doi.org/10.1037/pro0000128

- Vrouw Maria. (2018). In Wikipedia. https://en.wikipedia.org/w/index.php?title=Vrouw_Maria&oldid=870262643

- Wallace, S. E., Donoso Brown, E. V., Fairman, A. D., Beardshall, K., Olexsovich, A., Taylor, A., & Schreiber, J. B. (2017). Validation of the standardized touchscreen assessment of cognition with neurotypical adults. NeuroRehabilitation, 40(3), 411–420. https://doi.org/10.3233/NRE-161428

- Wechsler, D. (2012). Wechsler Adult Intelligence Scale. Fourth Edition (WAIS-IV) [Finnish version]. Psykologien Kustannus Oy.

- Wechsler, D. (1997). Wechsler Memory Scale (WMS-III). (Vol14). Psychological Corporation. TX.

- Zakzanis, K. K., & Azarbehi, R. (2014). Introducing BRAIN screen: Web-based real-time examination and interpretation of cognitive function. Applied Neuropsychology. Adult, 21(2), 77–86. https://doi.org/10.1080/09084282.2012.742994

- Zygouris, S., & Tsolaki, M. (2015). Computerized cognitive testing for older adults: A review. American Journal of Alzheimer's Disease and Other Dementias, 30(1), 13–28. https://doi.org/10.1177/1533317514522852

Appendix 1.

OLT word list, Finnish version with English translations by authors

Appendix 2.

OLT story, Finnish version with English translation by authors

Instruktio. Seuraavaksi näytetään kertomus, joka sinun tulisi lukea. Teksti on jaettu viiteen lyhyeen osaan. Lue kukin lyhyt teksti vain kerran, mutta lue huolellisesti. Osa kertomuksesta on totta, osa mielikuvituksen tuotetta. Tekstistä esitetään myöhemmin kysymyksiä.

Instruction. Next, you will be shown a story that you should read. The text is in five short paragraphs. Read each paragraph just once, but read carefully. Part of the story is true, part is fictional. Questions about the text will be asked later.

Maailman saastuneimmaksi sanotun meren, Itämeren pohjassa on arviolta satoja puisten laivojen hylkyjä. Yli tuhatvuotisesta Venäjän ja lännen välisestä kaupankäynnistä johtuen hylyt ja niiden mukana uponneet lastit ovat hyvin monipuolisia jopa pohjoisessa Suomenlahdella. Jopa hollantilaisten maalarimestarien töitä on säilynyt.

There are estimated to be hundreds of wooden shipwrecks at the bottom of the Baltic Sea, said to be the world's most polluted sea. As a result of more than a thousand years of trade between Russia and the West, the wrecks and the cargoes that sank with them are very diverse, even in the northern Gulf of Finland. Even works of Dutch master painters have survived.

Itämeri on vilkkaan kansainvälisen meriarkeologisen tutkimuksen kohde. Merkittävä osa hylyistä on säilynyt suhteellisen hyvässä kunnossa jopa viisisataa vuotta. Hyvänä esimerkkinä ovat Ruotsin kuningas Kustaa Adolfin sotalaivaston lippulaiva Wasan johdolla purjehtinut Borgå-laiva, ja venäläinen tykkivene “Москва”.

The Baltic Sea is the focus of intense international marine archaeological research. A significant number of shipwrecks have remained in relatively good condition for up to five hundred years. Good examples are the ship "Borgå", which sailed as an escort ship of the flagship “Wasa” of King Gustav Adolf of Sweden's navy, and the Russian gunboat "Москва".

Molemmat laivat upposivat vuonna 1631 Loviisan ja Haminan välillä olevan Pyhtään edustalla käydyssä meritaistelussa. Hyvä säilyminen johtuu osittain siitä, ettei puuta ravinnokseen käyttävää laivamatoa ole alhaisen suolapitoisuuden vuoksi esiintynyt Itämeressä. Myöskään merkittäviä leväkerrostumia ei ole hylkyjen päällä.

Both ships sank in 1631 in the sea battle off Pyhtää, between Loviisa and Hamina. The good preservation is partly due to the fact that the shipworm, which feeds on wood, has not been present in the Baltic Sea due to low salinity. There are also no significant layers of algae on the wrecks.

Laivamadon puutteen lisäksi ovat Itämeren hylkyjen säilymisen taustalla myös voimakkaiden merivirtojen ja vuorovesi-ilmiön puute. Itämeren syvänteissä rautarunkoisetkin hylyt säilyvät pitempään kuin muualla, sillä ne ruostuvat hitaasti happikadon takia vähähappisen veden ansiosta. Matalaan veteen uponneet hylyt ovat kuitenkin vaarassa rikkoutua, merenkulun, merenkäynnin ja etenkin pohjoisen Itämeren rantojen jäätyessä talvisin.

In addition to the lack of shipworms, wrecks in the Baltic Sea also survive because of the lack of strong ocean currents and tidal effects. Even iron-bodied wrecks in the deep waters of the Baltic Sea survive longer than elsewhere, as they corrode slowly due to environmental hypoxia in the low-oxygen water. However, wrecks sunk in shallow water are at risk of breaking up, due to shipping, sea traffic and, especially in the northern Baltic, the freezing of the shores in winter.

Itämereen uponneiden puurunkoisten hylkyjen tuhoutumisvaara kasvoi laivamadon saavuttua alueelle 1993 voimakkaan suolapulssin mukana. Talvimyrskyt työnsivät Tanskan salmista poikkeuksellisen paljon uutta suolaista vettä Pohjanmereltä Itämerelle, ja laivamadosta tuli osa eliöstöä ainakin eteläisellä Itämerellä.

The risk of destruction of wooden wrecks in the Baltic Sea increased with the arrival of shipworm in 1993 due to a strong salt pulse. The winter storms pushed an exceptional amount of strongly salinated water of the North Sea through the Danish Straits into the Baltic Sea, and the shipworm became part of the biota at least in the southern Baltic Sea.

The questions and alternatives given after the stickmen task