ABSTRACT

Videoconferencing is increasingly used in education as a way to support distance learning. This article contributes to the emerging interactional literature on video-mediated educational interaction by exploring how a telepresence robot is used to facilitate remote participation in university-level foreign language teaching. A telepresence robot differs from commonly used videoconferencing set-ups in that it allows mobility and remote camera control. A remote student can thus move a classroom-based robot from a distance in order to shift attention between people, objects and environmental structures during classroom activities. Using multimodal conversation analysis, we focus on how participants manage telepresent remote students’ visual access to classroom learning materials. In particular, we show how visibility checks are accomplished as a sequential and embodied practice in interaction between physically dispersed participants. Moreover, we demonstrate how participants conduct interactional work to make learning materials visible to the remote student by showing them and guiding the ‘seeing’ of materials. The findings portray some ways in which participants in video-mediated interaction display sensitivity to the possibility of intersubjective trouble and the recipient’s visual perspective. Besides increasing understanding of visual and interactional practices in technology-rich learning environments, the findings can be applied in the pedagogical design of such environments.

1. Introduction

Digital technology is an increasingly common part of pedagogical interactions. In particular, the rapid development of communications technology, including videoconferencing, has enabled new forms of synchronous telecollaboration (Dooly and O’Dowd 2018), hybrid teaching (Gleason and Greenhow 2017) and massive open online courses (Patru and Balaji 2016). Although there is still a tendency to conceptualise classroom interaction as something that takes place inside the bounded walls of a classroom, these developments attest to the possibilities of videoconferencing to extend ‘classrooms’ beyond the physical space and, perhaps, merge some of the dichotomies between co-present and virtual participation.

As Hutchby (2001, pp. 444–445) reminds us by taking the telephone as an example, the materiality of technology is not limited to the physical aspects of devices and tools, but it also manifests itself in less tangible form through the distribution of interactional space. Indeed, when digital technology is integrated into classroom activities, the material ecology of (inter)action, and interaction itself, is transformed. In so-called hybrid classrooms in which video-mediated remote students are instructed alongside face-to-face students, there may arise a need to use non-virtual learning materials. In the literature on language learning materials use (see e.g. Guerrettaz et al. 2018; introduction to this special issue), learning materials have been defined in different ways but typically as artefacts specifically designed or directly used for pedagogical purposes, such as textbooks and task sheets. Partly separate from this literature, there is by now a substantial body of conversation analytic (CA) studies investigating how participants use and interact around various material and technological artefacts in educational settings. These may include blackboards (Greiffenhagen 2014; Matsumoto 2019), print text and task sheets (Jakonen 2015; Majlesi 2015; Karvonen, Tainio, and Routarinne 2017), desktop computers and laptops (Cekaite 2009; Gardner and Levy 2010; Greiffenhagen and Watson 2009; Juvonen et al. 2019; Musk 2016), smartphones and tablets (Sahlström, Tanner, and Valasmo 2019; Asplund, Olin-Scheller, and Tanner 2018; Hellermann, Thorne, and Fodor 2017; Jakonen and Niemi 2020) and online environments (Balaman 2019; Hjulstad 2016). In brief, these studies show that learning materials constitute important resources for constructing and making sense of situated actions, which makes the co-ordination of mutual orientation on the materials a key task for the participants.

In this paper, we are interested in the lived sense of ‘materials use’ in an educational environment that brings together classroom and remote participants by way of a Double telepresence robot, which is a specific videoconferencing technology. In such a synchronous hybrid classroom, the use of learning materials is complicated by a need to ensure that remote students have sufficient (visual) access to those offline learning materials and objects that are physically located in the classroom and are part of the classroom’s material ecology of action. We aim to expand the emerging scholarship on materials use (see e.g. Garton and Graves 2014; Guerrettaz et al. 2018) by exploring how the participants in this setting, dispersed across two physical environments, coordinate mutual attention to routine classroom materials such as print texts, whiteboards, and laptops in and through robot-enabled video-mediated interaction. By exploring social practices related to the visibility of video-mediated learning materials through a multimodal CA lens, we hope to highlight not only some routine technological difficulties in video-based distance education but also participants’ emic solutions to overcome them, both of which are relevant for developing pedagogically sustainable forms of distance education.

2. Coordinating joint attention in the material ecology of video-mediated instructional interaction

The use of videoconferencing applications is rapidly gaining ground as educational institutions, particularly in higher education, offer various forms of distance and ‘blended’ learning and telecollaboration. Students’ reasons for remote participation can vary considerably, ranging from travel difficulties and geographical isolation to chronic illness or recovery from an acute injury. This means that videoconferencing is a potential tool for increasing the flexibility and accessibility of instruction, one that helped educational systems to cope with the COVID-19 pandemic during the past year (2020). However, studies of videoconferencing in Computer Assisted Language Learning (CALL) show that its sustainability depends on how participants adapt interactional practices to the limitations of the medium (e.g. Hampel and Stickler 2012; Guichon and Cohen 2014). These and other studies have explored videoconferencing practices both in interaction between dispersed individuals (Satar 2013; Guichon and Cohen 2014; Rusk and Pörn 2019) and between small groups or larger classroom cohorts (Austin, Hampel, and Kukulska-Hulme 2017; Satar and Wigham 2017).

CALL studies have thus far mainly investigated the use of desktop videoconferencing with programmes such as Skype or Adobe Connect, which tend to have particular affordances (Gibson 1979) – i.e. possibilities and constraints – for embodied interaction. As conversation analytic research on mediated interaction shows, video calls are often organised so that participants see each other’s face and parts of the upper body. Such a default multimodal organisation of ‘talking heads’ (Licoppe and Morel 2012) largely leaves out one’s physical surroundings, unless participants conduct specific work to move the camera and show objects in their surroundings to the interlocutor. The set-up heightens the relevance of the face and hands as multimodal resources for video-mediated action, and, incidentally, may partly explain the focus in existing multimodal CALL studies on the interactional role of gaze, facial expressions and hand gestures in educational videoconferencing (e.g. Cohen and Wigham 2019; Satar 2013; Wigham 2017).

CA studies of mediated interaction have shown that, in the absence of physical co-presence, there is a heightened need to check co-participants’ presence and ensure mutual orientation via different kinds of verification practices. For example, Jenks and Brandt (2013) demonstrate how participants in voice-based chat rooms deploy interrogatives such as ‘Are you there?’ to ascertain whether the other participant is present and available for interaction. When video is available, the scope of verification practices extends to the visibility of persons or objects. For instance, in examining participants’ visual appearance and displays of involvement in the openings of Skype conversations, Licoppe (2017b, pp. 357–360) analyses an instance of checking one’s own visibility to the interlocutor by way of a question (‘Do you see me there?’). Furthermore, Balaman (2019, pp. 517–518) illustrates how the remote co-participant’s online activity – which is not mutually visible – can constitute prerequisite knowledge for accomplishing an action (hinting), knowledge that needs to be clarified by way of a pre-sequence prior to the base action.

Beyond seeing whether the remote interlocutor is ‘there’, videoconferencing can involve interactional trouble because of the way the screen mediates and limits visual access to the interlocutor’s environment and objects therein. Luff et al. (2003) have used the term fractured ecology to describe how video-mediated social action becomes separated ‘from the environment in which it is produced and from the environment in which is received’ (55). Thus, while a participant may for example see her interlocutor’s pointing action on the screen, she might still be unable to see what exactly is being pointed at (Luff et al. 2016). Such a material feature of videoconferencing can make object-centred collaboration difficult. Its implication for video-mediated instructional activities is that standard desktop videoconferencing arrangements may be less ideal for typical classroom activities that require mutual attention on print texts or other material objects and involve their manual handling (e.g. Tanner, Olin-Scheller, and Tengberg 2017; Juvonen et al. 2019; Jakonen 2015).

In this study, we aim to extend existing research on learning materials use and the CALL literature on video-mediated classroom interaction, which has so far focused on videoconferencing tools with limited possibility for remote visual control. Thus, when a video call is made between two laptops, desktops or portable tablets, participant A does not have the ability to control what part of participant B’s environment she sees, because ultimately it depends on how participant B positions her device/webcam. This may seem like a trivial observation, but visual control has significant implications for the agency of remote students in a hybrid classroom: with a desktop or laptop-based videoconferencing tool, the remote student is positioned to see the classroom environment and make sense of action from the perspective that the classroom participants assign her. In contrast, the telepresence technology that we investigate in this study gives remote participants the ability to move and rotate the robot (and its camera) in the classroom, allowing shifts of attention between people, objects and environmental structures in the classroom in real time. The existing evidence from design-oriented and survey/interview-based studies of telepresence robots suggests that such robots can increase the agency, presence and social inclusion of remote students (e.g. Cha, Chen, and Mataric 2017; Fitter et al. 2018). However, there is an apparent research gap related to substantiating these kinds of claims with micro-level interactional evidence (but outside classrooms, see Liao et al. 2019). Thus, this article explores how dispersed participants – a remote student and the classroom teacher and students – manage the visibility of learning materials to the remote student with the help of the remote visual control provided by the telepresence robot. In the remainder of the article, we aim to shed light on how participants work towards what we refer to as perceptual intersubjectivity (see also Gallagher 2008) by checking what learning materials the remote student can see, and by resolving emerging problems when they do not see ‘enough’.

3. Data and method

Our multilingual data are c. 8 hours of video-recorded lessons from university-level foreign language classrooms (German, Finnish, English, and Swedish). The dataset consists of recordings made with 1–3 cameras in the classrooms and, during the English and Swedish lessons, recordings made with a screen capture application on the remote student’s laptop. The screen capture video data show the remote student’s point-of-view of the classroom when they operated the robot, including other simultaneous computer conduct such as online searches. Altogether, the data include both lessons with a real ‘need’ for videoconferencing due to a student having had to travel for work during a course requiring attendance (Finnish) and what can be described as ‘quasi-naturalistic’ interactions during which the teachers and students were testing the telepresence robot in an unplanned manner. In such lessons, the students went in turns to another campus location to participate remotely in otherwise ‘normal’ classroom instruction with the robot. All recordings show users who are relatively inexperienced in operating the robot.

Developed by Double Robotics, the telepresence robot in our data is a commercial videoconferencing tool equipped with an iPad, external video camera, microphone and speakers, and wheels enabling movement: an ‘iPad on wheels’, so to speak. The robot is controlled remotely either by using a computer to access the online interface (as in our data) or with a designated iPad app. By moving the robot (with arrow keys), the user of a telepresence robot can simulate walking in the classroom and control which parts of the environment, participants and learning materials she focuses her attention on.

The lessons had different kinds of material ecologies for action and interaction. Routinely used material artefacts included paper-based materials designed for teaching and learning (e.g. task sheets) and various digital devices that were treated as either public (e.g. classroom whiteboard, robot screen showing the remote participant’s face) or personal (classroom participants’ smartphones, the remote student’s home laptop). A typical function for personal devices was to access digitally available learning materials and virtual learning environments used in the instruction. The multiplicity of learning materials also presented a need to co-ordinate joint attention in situationally appropriate ways.

The hybrid setting foregrounded a particular asymmetry regarding participants’ visual access to each other’s environments and their sense of the other’s point-of-view. With the help of the external wide lens camera, the remote user could in fact see a relatively broad view of the classroom (see for an example). In contrast, the classroom students could typically only see the remote student’s ‘talking head’, to use Licoppe and Morel’s (2012) term, which the remote student’s laptop camera transmitted to them (for an example, see the top right-hand corner of Figure 2.2). This presented at least two constraints for situated action: First, classroom participants were not able to know but only assume which parts of the classroom environment the remote student saw at any given moment, and how well she saw them. Second, their sense of the remote student’s embodied actions in the remote space was largely based on a distinction between whether the remote student gazed towards her laptop camera/screen or away from it. The remote student’s gaze direction thus provided an indication of whether she was engaged in interaction with the classroom participants or, for example, taking notes on paper or looking at her phone. Our analytical focus in this article, the social practice of checking how well the classroom-based material artefacts are visible to the remote student, is a participants’ way of dealing with the first of these constraints.

In those parts of our dataset where both class camera footage and screen capture from the remote student’s laptop exist, we have analytical access to how actions unfold both in ‘real time’ in the classroom and on the remote student’s screen, sometimes in different ways due to, for example, delay (e.g. Rusk and Pörn 2019). While access to both perspectives can add considerably to the analysis, it presents a need for decision-making concerning data presentation. The extracts in this paper have been mainly transcribed from the classroom camera perspective because the focal phenomenon of visibility checks (as analysed in section 4.1) is, at least in our data, overwhelmingly a practice that is made relevant and initiated by the classroom participants. Thus, in order to understand why they treat the visibility of a certain classroom artefact as potentially problematic to the remote student, their perspective on the material ecology of action is paramount. However, part of this ecology is the ‘talking head’ of the remote student on the robot screen. This is why we have also added annotations describing the remote student’s gaze direction and facial expressions in those transcripts where it is available to us either via the remote students’ screen capture () or afforded by the placement of the classroom cameras (). We also show screenshots from the remote student’s footage to illustrate what the classroom and its material environment look like from the remote perspective. The data are transcribed following Jefferson’s (2004) conventions for talk and Mondada’s (2014) for embodied conduct.

4. Analysis

Our analysis is divided into two sections. Section 4.1 analyses visibility checks, typically formatted as ‘can you see X’ questions and directed to the remote student. In contrast to visibility checks inquiring about one’s own visibility to a remote interlocutor described by Licoppe (2017b, pp. 357–360), the checks we describe here address the visibility of a material object that has significance for the on-going pedagogical activity. We show how these questions allow classroom participants (especially the teacher) to ensure that the remote participant has sufficient visual access to learning materials relevant for a pedagogical activity, before starting the activity or moving to a next phase in it. The section exhibits three different responses to a visibility check. Section 4.2 focuses on ways of re-configuring the material ecology of action in order to make learning materials (more) visible; often, such work is made relevant by problems manifest through visibility checks. Using three examples, we argue that participants remedy insufficient visibility of the learning material by managing the affordances of the material organisation of action in situated ways. We conclude by discussing the implications of our findings with regard to learning materials, asymmetries and intersubjectivity in video-mediated interaction, and hybrid classroom pedagogies.

4.1. Visibility checks as a practice for managing remote access to classroom-based learning materials

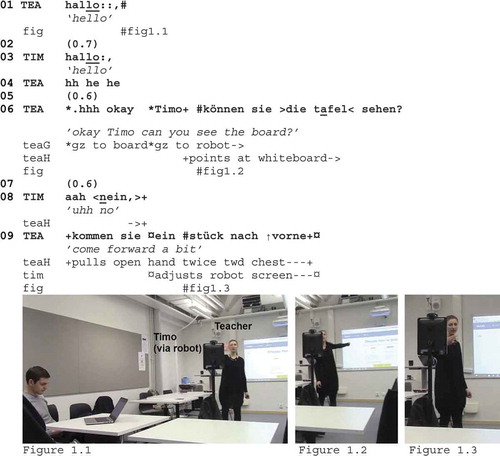

In Extract 1, a German teacher’s ‘can you see’ check receives a disaffirmative response. The check occurs during an activity transition when the class is beginning a group quiz activity using the Quizlet Live application. The extract begins as the teacher opens interaction with the remote student (line 1), Timo, and proceeds immediately to check the visibility of the whiteboard to him (line 6). Timo can only access the whiteboard through the video call, and seeing what it shows is crucial in this moment: the students need to pick up a code that the teacher will next project on the board and enter it into their own phones in order to join the quiz.

Extract 1. Können sie die tafel sehen? (DR_German_18122018_2935)

Having stepped in front of the robot and into its camera view, the teacher summons Timo by greeting him (line 1, see ). Timo reciprocates the greeting and thereby produces the second pair part of the opening sequence: the student’s ‘hello’ functions as proof of his attention and of a working video call connection. After brief laughter, the teacher asks if Timo can see the whiteboard (line 6), alternating her gaze between the board and the robot. The teacher’s pointing towards the whiteboard (), which she sustains until the end of line 8, and the alternation of her gaze between the robot and the whiteboard are integral aspects of the turn.

A question such as that in line 6 constitutes a first pair part of a checking sequence that treats the visibility of a particular material object to the remote student as uncertain. Similar to the case here, these kinds of questions typically co-occur with gestures that identify or depict the object that needs to be ‘seen’. In our data, visibility checks are only presented to video-mediated participants, not students who are co-present in the classroom; even if, as shows, one classroom student is seated in a less advantageous position, further away from the whiteboard than the robot. As a first pair part of a sequence, the question makes relevant an answer, which in our data can be a ‘yes’, ‘no’ or something in between.

As it turns out, Timo produces the second pair part by claiming that he cannot see the whiteboard (line 8). It seems evident that his disaffirmation is offered and received as a claim of inability to read the text instead of not ‘seeing’ the whiteboard itself, because both participants treat the location of the whiteboard as clear in the situation. Thus, there is a sense that the visibility check is a practice taking place via a pre-expansion sequence (Schegloff 2007) and geared to ensure that some subsequent activity can be accomplished without problems. Here, the visibility of the text on the whiteboard is secured as the teacher instructs the remote student to move (the robot) closer to the whiteboard (line 9), gesturing the robot towards herself () to show the direction of the requested movement. We will return to the follow-up instruction in more detail in .

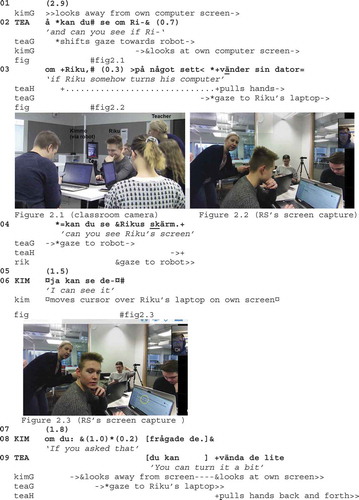

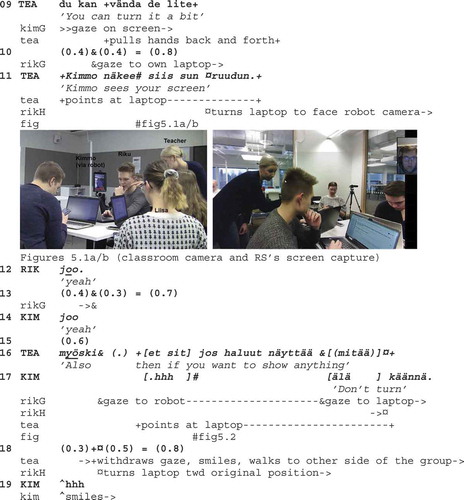

In contrast, Extract 2 shows an example of an affirmative response to a visibility check. The extract comes from a Swedish class where students are working on a peer feedback activity. Prior to this, the teacher has instructed a group of three classroom students and one remote student to comment on each other’s texts on the discussion forum of an online learning platform (Moodle). During the instruction, one of the classroom students (Riku) has opened the texts on his laptop. As the extract begins, the teacher checks whether the remote student (Kimmo) can see Riku’s screen via the robot camera (lines 2–4), and instructs Riku to turn his screen so that it would be more visible to Kimmo.

Extract 2. Kan du se Rikus skärm? (DR_Swedish_12112019_1420)

The formulation of the visibility check (‘if Riku somehow turns his computer, can you see Riku’s screen’, lines 2–4) treats Kimmo’s visual access to the learning material object (i.e. Riku’s screen) as even more uncertain than in Extract 1 by implying that the laptop’s current positioning is less than optimal. The turn is a ‘double barrelled’ action in that, besides the visibility check, it also indirectly suggests that Riku re-arrange the material ecology to better accommodate Kimmo’s video-mediated participation.

Similar to Extract 1, gaze and gesture are important resources in the accomplishment of the check. As the teacher begins the question, she shifts her gaze to the robot but finds Kimmo’s gaze away from the camera (see ). The teacher restarts the turn (line 3) after Kimmo has returned his gaze on screen, and then briefly glances at Riku’s laptop in the classroom when she mentions the device (line 3, vänder sin dator, ‘turns his computer’). Simultaneously, the teacher gestures the turning of the laptop by holding her hands in mid-air and pulling them back and forth (see ). As Lilja and Piirainen-Marsh (2019) have shown, such depictive gestures contribute to securing the recognisability of the ongoing action to the recipient(s). Here, recognition is potentially vulnerable not only because of the video-mediated nature of interaction but also because of the lack of mutual orientation at the beginning of the teacher’s turn.

Although Riku does not turn the laptop at this point, Kimmo confirms sufficient visual access by claiming that he ‘can see’ it (line 6). On his own computer screen, he also moves his mouse pointer over Riku’s laptop (visible as the yellow circle on the screen capture, ). Such an action is only visible to himself and not available to the classroom participants; nevertheless, it is a situated demonstration of his attention to the focal learning material. As the classroom participants only see Kimmo’s ‘talking head’ on the robot screen, their main resource for making sense of Kimmo’s attention is his gaze pattern.

There are signs of trouble related to the sense and purpose of the visibility check. Firstly, Kimmo’s increment to his response (line 8, ‘if you asked that’), which he produces after a lengthy silence, treats the teacher’s question as not entirely clear. As he utters the turn, he also looks away from the screen during the intra-turn silence. It is possible that he withdraws his gaze to look at the online texts on his phone.¹ Secondly, as already mentioned, Riku does not at first turn his laptop as the design of the check implies, and the teacher needs to make it explicit (line 9, ‘you can turn it a bit’). By doing so, the teacher treats herself as someone who can assess visibility better than the person whose visual access is in question (Kimmo).

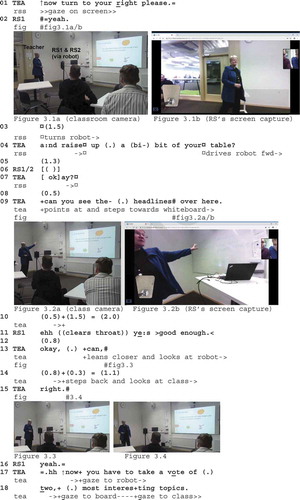

Extract 3 showcases an instance where a ‘can you see’ check queries visual access to whiteboard texts in an EFL class. Prior to the extract, the class have been brainstorming for group work topics, and the teacher has written suggested topics on the whiteboard in the corner of the classroom (see ). The teacher is about to proceed to voting on the topics when she checks whether two students co-participating via one robot can see what is on the board. Unlike in Extract 2, here the remote students’ gaze is on the screen throughout the extract, and the check occurs after an extensive pause to navigate the robot closer to the whiteboard. We join the situation as the robot is in front of the whiteboard but not yet oriented towards it.

Extract 3. Can you see the headlines (English)

When the extract begins, the remote students have turned the robot camera on the teacher (see ). The teacher segments the instruction to move closer to the whiteboard through two directives in lines 1 and 4, produced so that there is time to accomplish the first one (silence in line 3) before the second directive. The teacher’s ‘okay’ with rising intonation (line 7) works as a suggestion that the robot’s movement is complete, and that the remote students are now assumed to be located in a place that renders the whiteboard text visible.

In contrast to Extracts 1–2, here the visibility check (‘can you see the headlines’, line 9) specifies what the remote students need to see on the whiteboard. Similar to prior extracts, the teacher coordinates joint attention by pointing towards the board (). Here, the remote students’ response to the visibility check comes after a delay and is qualified (‘yes good enough’, line 11).

The qualified response is understandable if one looks at the screen capture on the remote students’ computer screen, on which the hand-written topics seem barely legible (). The teacher seems first to attend to this by beginning a turn (line 13), which she addresses to the remote students by leaning closer and looking at the robot. Given the sequential context, this could have been the beginning of an attempt to remedy the less than optimal visual access to the whiteboard. However, she aborts the turn and reorients her body towards the class during the silence in line 14 (compare and ). Both participants’ subsequent turns (‘right’, ‘yeah’, lines 15–16) are then a way to acknowledge that the access is workable even if not perfect: ‘good enough’ for the following activity.²

In this section, we have analysed how visibility checks are used to verify remote access to learning materials in situations involving a shift to a new pedagogical activity (Extracts 1–2) or a new phase within an on-going activity (in Extract 3, from collecting ideas to processing them on the whiteboard). Visibility checks are characteristically a classroom participants’ practice that treats the remote student’s seeing as vulnerable. If we consider intersubjectivity as a procedural accomplishment, visibility checks constitute one of the many ‘occasions and resources for understanding’ (Schegloff 1992, 1299) that social life provides, a procedure for securing and re-establishing intersubjectivity of perception between dispersed participants. Through visibility checks, participants negotiate the specifics of what needs to be ‘seen’ within an environment, how well it is and ought to be seen, and what the role of the ‘seeable’ is in an upcoming action sequence. In this sense, the objects of seeing, which are identified and negotiated verbally and in embodied ways, are situated and interactional accomplishments. What counts as ‘good enough’ visibility is not a pre-defined matter, but depends on local circumstances.

4.2. Showing learning materials and guiding their seeing

The previous examples showed how teachers treat remote access to classroom learning materials as uncertain and vulnerable. This section discusses in more detail how classroom participants make learning materials visible and ‘seeable’ on the screen to remote participants. Previous CA studies have distinguished between two routinely occurring video-mediated practices for making an object visible for a remote participant: either the camera is turned towards a showable object (Licoppe and Morel 2014) or an (easily movable or handheld) object is brought into a ‘show position’ in front of the camera (Licoppe 2017a). Both practices occur in our classroom data but with modifications in the division of labour of manual actions because, unlike in ‘traditional’ video-mediated settings, the movement capabilities of the robot afford remote visual control. We begin by discussing two cases in which a classroom participant shows a learning material to the remote student with no camera movement: a ‘simple’ showing (Extract 4) and one involving instruction by the remote student concerning the positioning of the object (). We then illustrate how the practice of turning the camera towards the target is accomplished in an instructed manner so that a classroom participant guides the remote student to move the robot into a location from which the learning material would be better visible (, consider also ).

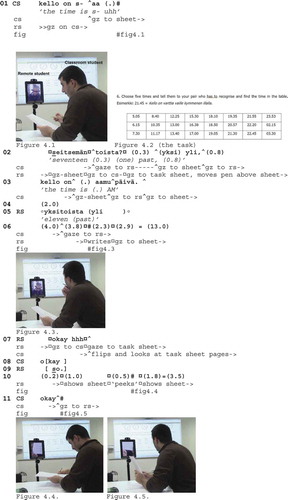

We begin with a relatively simple example of showing a completed task sheet in a Finnish class. In Extract 4, the students are working on a quite traditional language teaching activity, an information gap task in which pairs of students tell the time in Finnish. The students have a task sheet with several times of day written with numbers on the sheet (see ). The teacher has instructed the students to select and tell three times to their partner, who in turn has to circle the times on his own sheet. The following extract depicts how one pair proceeds to checking the correct times after the classroom student (CS) utters his third time. Prior to the extract, he has already had some trouble formulating the time 11.17 a.m. in Finnish, and the trouble continues here. Our main interest is in how the remote student (RS) manages the answer-checking by showing his task sheet to the classroom student.

Extract 4. Showing a task sheet_DR1_Finnish_2242

The classroom student tells the time (with some difficulty) and alternates his gaze between his own task sheet and the remote student (lines 1–3). The remote student initially alternates gaze similarly, but as soon as the first number is uttered in line 2, he withdraws gaze away from the screen to his task sheet on the desk, and moves his pen above the sheet. This is a visible demonstration of searching where the time of day that CS is producing is on the task sheet () in front of him. The embodied search continues well into the long silence (line 6) after the classroom student has completed his turn, and ends as the remote student begins to write on his sheet (see ).

The remote student’s writing is discernible to the classroom student as the movement of the pen as well as the remote student’s withdrawn gaze. Monitoring the remote student’s bodily conduct visually () allows the classroom student to gauge the progression of the activity of finding and circling the correct time, and anticipate the eventual transition to checking answers. The remote student initiates the transition by stopping writing and putting his pen down, shifting his gaze to the classroom student and uttering a transitional ‘okay’ (Beach 1993) in line 7. Such conduct displays a readiness to move to answer-checking, which the classroom student reciprocates with another ‘okay’.

The remote participant lifts the paper and brings it to a ‘show position’ (Licoppe 2017a) granting maximal visibility of the task sheet (line 10), and maintains the sheet there. When holding the paper in front of the camera, he monitors whether the classroom student is looking at the sheet (), and, noticing that the classroom student’s gaze is not yet on the screen, resumes the ‘show position’. The classroom student signals his beginning to visually inspect the remote student’s circled times with another ‘okay’ (line 11) and moves his gaze to the screen (). Checking that the times have been transmitted and understood correctly requires that the classroom student verify that the three times he has circled on his own task sheet are the same as on the remote student’s sheet. This requires the alternation of gaze between the two sheets (lines 11–12) before the closing of the showing sequence can be made relevant to the remote student (line 13) and the show position terminated (line 14).

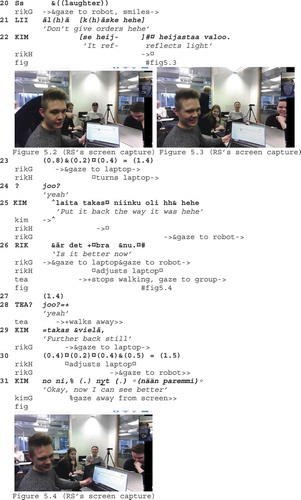

In , the learning material (task sheet) is shown and investigated without any overt signs of trouble. As a material object, a task sheet is highly portable, making it easy to manipulate and position in front of the camera. Other learning materials may be different, and in (continuation of ), text on a classroom-based laptop screen proves to be more difficult for the remote participant to see. Consequently, participants need to do more work to secure the visibility of the text. As we noted when analysing , in checking the remote student’s (Kimmo) seeing, the teacher simultaneously instructed the classroom student (Riku) to turn his laptop so that Kimmo would see the screen better. As the laptop is now turned (from line 11 onwards), the light reflecting from the screen makes it more difficult for Kimmo to see the screen in the new position. In what follows, he instructs Riku to turn the laptop back to the original position.

Extract 5. Du kan vända de lite_DR_Swedish (bold = Swedish; bold and italics = Finnish)

Riku shifts gaze to his laptop during the silence after the teacher’s instruction in line 9. He begins to move the laptop when the teacher explains to him in L1 (Finnish) that Kimmo ‘sees the screen’ (line 11), pointing at the laptop (see ). As illustrates, the screen is visible to the remote student in its original position at a narrow horizontal angle. Both Riku and Kimmo align with the teacher’s instruction to turn the laptop (joo, ‘yeah’, lines 12, 14).

While Riku is turning the laptop into a more direct angle, he moves his gaze to the robot (visible in ). This allows him to monitor Kimmo’s reactions to the turning. Kimmo’s response is a sudden request to stop turning (line 17), produced after a sharp in-breath. Riku duly obliges by stopping the movement and begins to turn the laptop back during the silence that follows. All participants mark the remote student’s request as an unusual and potentially transgressive action: the teacher turns away from the robot and smiles (line 18), Kimmo smiles (line 19), classroom students chuckle, and Riku reciprocates the smile into the robot camera (). One of the classroom students, Liisa, even jokingly reproaches Kimmo for ‘giving orders’ (line 21).

Riku turns the laptop back to its original position incrementally and alternates his gaze between the laptop and the robot while doing so. When Kimmo accounts for his request (line 22, ‘it reflects light’), Riku stops the movement for a brief moment and continues it during the following silence. He again stops the movement when Kimmo further instructs him to put it in the ‘original position’ (line 25). Riku then adjusts the laptop minimally and, changing the language back to L2 (Swedish), checks if the current position is ‘good’ (line 26). As demonstrates, the laptop is still not in its original place, and Kimmo asks for it to be moved further (line 29). With yet another minor adjustment of the laptop (line 30), it comes to a position that Kimmo treats as adequate (line 31). Thus, what began as the classroom members’ attempt to modify the material ecology so as to better accommodate the remote student led to the remote student claiming the deontic right to decide the position of the object being moved, and instructing its movement.

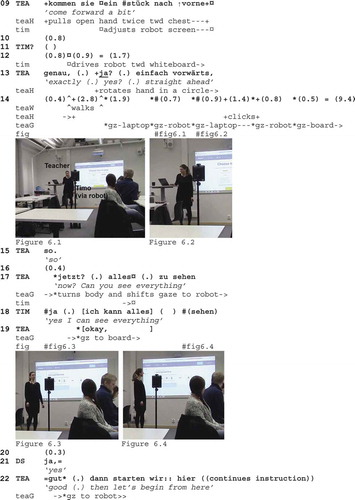

Besides showing and instructing showing, another way to remedy insufficient visual access is to take advantage of the mobility of the robot and navigate it into a location that affords better visibility, provided that the desks are organised so that the classroom space is navigable. shows how the German teacher continues the visibility check of by instructing the remote student (Timo) to move the robot closer to the whiteboard so that the camera can relay the numerical code on the board needed for the upcoming Quizlet Live group activity. This involves skilled multiactivity as the teacher simultaneously sets up the quiz activity on her laptop and monitors the robot’s changing position in the classroom space. In this way, she takes into account the remote student’s needs and maintains the progressivity of the on-going activity.

Extract 6. Kommen sie ein stück nach vorne (DR_German_18122018_2950)

Timo follows the teacher’s instruction and begins to drive the robot towards the whiteboard during line 12. The teacher monitors and assesses the progression of the movement (genau, ja, ‘exactly, yes’) and provides further instruction (einfach forwärts, ‘straight ahead’). As the robot is approaching the whiteboard, a long silence ensues (line 14). During the silence, the teacher goes behind her desk and sets up the quiz activity on her laptop (projected on the whiteboard). Notice how the teacher first alternates her gaze between her laptop and the robot (see and ), and, having initiated the quiz on her laptop, then moves her gaze via the robot to the whiteboard. The gaze shift to the whiteboard is a way to verify that the code (which the students need to join the group quiz on their personal smartphones) is indeed visible on the board.

As the code is visible on the whiteboard, the teacher returns her orientation to the moving robot. After indicating a transition (so, line 15), she turns towards the robot () and makes the ending of the movement relevant by enquiring about Timo’s visual access now that the robot is closer to the board. Timo stops the movement during the check and confirms that he can see ‘everything’ (line 18), giving the teacher a go-ahead to proceed instructing the activity to the entire class. The rapid progression from the check to continuing the activity (line 22) indexes the participants’ familiarity with the activity, its material organisation, and the objects that need to be seen in order to accomplish the activity. Indeed, it is not the first round of the quiz in this classroom.

5. Concluding discussion

In this article, we have explored how participants in hybrid classrooms orient to classroom learning materials and manage their visibility to remote video-mediated students. The visibility checks that we have described (–) exemplify the kinds of routine verification practices that are typical of video-mediated interaction (see Mondada 2007). By checking whether remote students can see a particular object in its current physical position and orientation within the material organisation of some on-going activity, classroom participants show sensitivity to the remote student’s visual perspective into the unfolding action. Such a sensitivity to potential asymmetries in visual access demonstrates a concern for constructing action that is designed to fit the recipient’s circumstances (Sacks 1992). Given that the visibility checks in our data typically occur in transitional moments, prior to action sequences in which the focal learning materials are used, their function seems to be to pre-empt subsequent intersubjective trouble in remote participation. We have analysed three kinds of type-conforming responses to visibility checks (affirmation, qualified affirmation and disaffirmation), and shown ways of remedying visual trouble by reconfiguring the material ecology of action. This can be done by changing the relative positions of the focal material object and the robot camera, for example by ‘showing’ the object () or by guiding and moving the robot closer to it ().

Different learning materials have different kinds of affordances and constraints for video-mediated interaction. For instance, the portability of a material object plays a role in how it can be made maximally visible in a video call – it is quicker and far more practical to reposition a laptop () or a task sheet () in front of the camera than it is to move a whiteboard (). The designed ability for remote movement and camera control of the telepresence robot can be seen to afford remote participants more autonomy for seeing objects. Interestingly, in our multilingual data corpus this does not translate into a remote participant’s responsibility for ensuring sufficient visual access to the classroom materials; instead, classroom participants and particularly teachers assume the bulk of the work in making things visible to remote students by way of checking, showing and guiding. As Hutchby (2001) argues, affordances are relational by nature and thus differ depending on, for example, a person’s prior experience. Given that our data display relatively inexperienced participants using and interacting with this particular videoconferencing technology, it seems likely that the affordances that the participants find in the robot could change as they learn its lived sense. Routinisation could in turn reconfigure some of the related social practices so that the other participant’s visual perspective becomes less opaque, and there is less need for a practice such as visibility checks. Equally so, the division of roles and responsibilities in managing visibility checks may also change through routinisation.

As video-mediated technologies become more sophisticated and applicable to classroom settings, hybrid classroom pedagogies will become an increasingly relevant area of teacher development, not least because of the increasing demand for distance education due to pandemics such as COVID-19. From teachers, the simultaneous management of ‘local’ and remote students’ participation requires classroom interactional competence (e.g. Walsh 2012) and sensitivity to heterogeneities and asymmetries within the physically dispersed student cohort. Part of this task involves balancing the occasionally competing demands of maintaining progressivity of the on-going activity and ensuring remote visibility of the classroom materials. As illustrates, teachers may need to weigh up what constitutes ‘good enough’ visual access, and find ways to secure it by co-ordinating the affordances and constraints of a learning material as part of the situated organisation of action.

All in all, our findings indicate that classroom participants are sensitive to troubles of intersubjectivity and visual asymmetries involved in video-based hybrid teaching. Ensuring visual access does not itself guarantee equal ability to, for example, edit a text as part of a classroom small group because remote students cannot (yet) touch a learning material located in the classroom. Indeed, even the relatively sophisticated technology that we have investigated here has apparent visual constraints relative to co-presence, including the lack of peripheral vision (objects are either on or off camera), an inability to zoom/focus (in this version of the robot), the relative slowness of ‘gaze shifts’ compared to turning the head, and the representation of a three-dimensional environment as 2D. Seeing is a very basic feature of many interactional situations, a ‘seen but unnoticed’ (Garfinkel 1967, 36) aspect of everyday life, and the kinds of checks we have described not only index participants’ expectations towards its constituting role as part of routine classroom activities but also make visible its social nature. We hope that this study has helped to increase understanding of the subtleties of interaction in technology-rich learning environments, and that it can be used as a springboard for designing pedagogically sensible activities and materials for such environments. It is evident that more research is needed on video-mediated classroom participation. Both longitudinal studies demonstrating changing practices involving novel technologies and studies exploring the nature of asymmetries between video-mediated and face-to-face participation could provide new insights into the sense and consequences of physical and remote presence.

Acknowledgements

We are grateful for the reviewers’ constructive suggestions for improving earlier versions of this article. We have also benefited greatly from feedback at data sessions at the Department of Applied Linguistics at Portland State University and at Tampere University. Any remaining errors in argumentation and shortcomings are our own.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes on contributors

Teppo Jakonen

Dr Teppo Jakonen works as Senior Lecturer at the Department of Language and Communication Studies, University of Jyväskylä, Finland. His research focuses on the role of the human body and physical materials as resources for learning and participation in classroom and other instructional settings.

Heidi Jauni

Dr Heidi Jauni works as a development manager at the Language Centre of Tampere University, Finland. Her research interests include conversation analysis, especially interaction in educational settings and mediated interaction.

Notes

1. Without a video-recording from the remote location, it is difficult to know the target of Kimmo’s gaze. However, we do know that during the lesson, he first attempted to access the online platform on his laptop, and when it turned out to be problematic, he began to use his mobile phone for that purpose instead, reserving the laptop for the video connection to the classroom.

2. Besides ensuring the progressivity of the voting activity, an additional reason for moving on may be related to the fact that these participants are experimenting with the technology. The ‘remote students’ were physically in the classroom during the earlier brainstorming before they went to another room in the campus building to participate via the robot. They are therefore likely to have at least a rough sense of what is written on the board (and what significance it has for the current activity).

References

- Asplund, S.-B., C. Olin-Scheller, and M. Tanner. 2018. “Under the Teacher’s Radar: Literacy Practices in Task-Related Smartphone Use in the Connected Classroom.” L1 Educational Studies in Language and Literature 18 (Running Issue): 1–26. doi:10.17239/L1ESLL-2018.18.01.03.

- Austin, N., R. Hampel, and A. Kukulska-Hulme. 2017. “Video Conferencing and Multimodal Expression of Voice: Children’s Conversations Using Skype for Second Language Development in a Telecollaborative Setting.” System 64: 87–103. doi:10.1016/j.system.2016.12.003.

- Balaman, U. 2019. “Sequential Organization of Hinting in Online Task-Oriented L2 Interaction.” Text and Talk 39 (4): 511–534. doi:10.1515/text-2019-2038.

- Beach, W. A. 1993. “Transitional Regularities for ‘Casual’ ‘Okay’ Usages.” Journal of Pragmatics 19 (4): 325–352. doi:10.1016/0378-2166(93)90092-4.

- Cekaite, A. 2009. “Collaborative Corrections with Spelling Control: Digital Resources and Peer Assistance.” International Journal of Computer-Supported Collaborative Learning 4 (3): 319–341.

- Cha, E., S. Chen, and M. J. Matarić. 2017. “Designing Telepresence Robots for K-12 Education.” RO-MAN 2017: 26th IEEE International Symposium on Robot and Human Interactive Communication, 683–688. doi:10.1109/ROMAN.2017.8172377.

- Cohen, C., and C. R. Wigham. 2019. “A Comparative Study of Lexical Word Search in an Audioconferencing and a Videoconferencing Condition.” Computer Assisted Language Learning 32 (4): 448–481. doi:10.1080/09588221.2018.1527359.

- Dooly, M., and R. O’Dowd, eds. 2018. In This Together: Teachers’ Experiences with Transnational, Telecollaborative Language Learning Projects. Bern: Peter Lang.

- Fitter, N., Y. Chowdhury, E. Cha, L. Takayama, and M. J. Matarić. 2018. “Evaluating the Effects of Personalized Appearance on Telepresence Robots for Education.” HRI ’18 Companion: 2018 ACM/IEEE International Conference on Human-Robot Interaction Companion, Chicago, IL, March 5–8. doi:10.1145/3173386.3177030.

- Gallagher, S. 2008. “Intersubjectivity in Perception.” Continental Philosophy Review 41 (2): 163–178.

- Gardner, R., and M. Levy. 2010. “The Coordination of Talk and Action in the Collaborative Construction of a Multimodal Text.” Journal of Pragmatics 42: 2189–2203. doi:10.1016/j.pragma.2010.01.006.

- Garfinkel, H. 1967. Studies in Ethnomethodology. Englewood Cliffs, N.J.: Prentice-Hall.

- Garton, S., and K. Graves. 2014. “Identifying a Research Agenda for Language Teaching Materials.” The Modern Language Journal 98 (2): 654–657. doi:10.1111/j.1540-4781.2014.12094.x.

- Gibson, J. 1979. The Ecological Approach to Visual Perception. London: Houghton Mifflin.

- Gleason, B. W., and C. Greenhow. 2017. “Hybrid Education: The Potential of Teaching and Learning with Robot-Mediated Communication.” Online Learning 21 (4): 159–176. doi:10.24059/olj.v21i4.1276.

- Greiffenhagen, C. 2014. “The Materiality of Mathematics: Presenting Mathematics at the Blackboard.” The British Journal of Sociology 65 (3): 502–528. doi:10.1111/1468-4446.12037.

- Greiffenhagen, C., and R. Watson. 2009. “Visual Repairables: Analysing the Work of Repair in Human–computer Interaction.” Visual Communication 8 (1): 65–90. doi:10.1177/1470357208099148.

- Guerrettaz, A. M., M. Grandon, S. Lee, C. Mathieu, A. Berwick, A. Murray, and M. Pourhaji. 2018. “Materials Use and Development: Synergetic Processes and Research Prospects.” Folio 18 (2): 37–44.

- Guichon, N., and C. Cohen. 2014. “The Impact of the Webcam on an Online L2 Interaction.” Canadian Modern Language Review 70 (3): 331–354. doi:10.3138/cmlr.2102.

- Hampel, R., and U. Stickler. 2012. “The Use of Videoconferencing to Support Multimodal Interaction in an Online Language Classroom.” ReCALL 24 (2): 116–137. doi:10.1017/S095834401200002X.

- Hellermann, J., S. Thorne, and P. Fodor. 2017. “Mobile Reading as Social and Embodied Practice.” Classroom Discourse 8 (2): 99–121. doi:10.1080/19463014.2017.1328703.

- Hjulstad, J. 2016. “Practices of Organizing Built Space in Videoconference-Mediated Interactions.” Research on Language and Social Interaction 49 (4): 325–341. doi:10.1080/08351813.2016.1199087.

- Hutchby, I. 2001. “Technologies, Texts and Affordances.” Sociology 35 (2): 441–456. doi:10.1177/S0038038501000219.

- Jakonen, T. 2015. “Handling Knowledge: Using Classroom Materials to Construct and Interpret Information Requests.” Journal of Pragmatics 89: 100–112. doi:10.1016/j.pragma.2015.10.001.

- Jakonen, T., and K. Niemi. 2020. “Managing Participation and Turn-Taking in Children’s Digital Activities: Touch in Blocking a Peer’s Hand.” Social Interaction 3 (1). doi:10.4324/9781315129556.

- Jefferson, G. 2004. “Glossary of Transcript Symbols with an Introduction.” In Conversation Analysis: Studies from the First Generation, edited by G. H. Lerner, 13–31. Philadelphia, PA: John Benjamins.

- Jenks, C. J., and A. Brandt. 2013. “Managing Mutual Orientation in the Absence of Physical Copresence: Multiparty Voice-based Chat Room Interaction.” Discourse Processes 50 (4): 227–248. doi:10.1080/0163853X.2013.777561.

- Juvonen, R., M. Tanner, C. Olin-Scheller, L. Tainio, and A. Slotte. 2019. “‘Being Stuck’. Analyzing Text-Planning Activities in Digitally Rich Upper Secondary School Classrooms.” Learning, Culture and Social Interaction 21: 196–213. doi:10.1016/j.lcsi.2019.03.006.

- Karvonen, U., L. Tainio, and S. Routarinne. 2017. “Uncovering the Pedagogical Potential of Texts: Curriculum Materials in Classroom Interaction in First Language and Literature Education.” Learning, Culture and Social Interaction, August: 1–18. doi:10.1016/j.lcsi.2017.12.003.

- Liao, J., X. Lu, K. Masters, J. Dudek, and Z. Zhou. 2019. “Telepresence-Place-Based Foreign Language Learning and Its Design Principles.” Computer Assisted Language Learning 1–26. doi:10.1080/09588221.2019.1690527.

- Licoppe, C., and J. Morel. 2014. “Mundane Video Directors in Interaction. Showing One’s Environment in Skype and Mobile Video Calls.” In Studies of Video Practices: Video at Work, edited by M. Broth, E. Laurier, and L. Mondada, 135–160. London: Routledge.

- Licoppe, C. 2017a. “Showing Objects in Skype Video-Mediated Conversations: From Showing Gestures to Showing Sequences.” Journal of Pragmatics 110: 63–82. doi:10.1016/j.pragma.2017.01.007.

- Licoppe, C. 2017b. “Skype Appearances, Multiple Greetings and ‘Coucou’: The Sequential Organization of Video-mediated Conversation Openings.” Pragmatics 27 (3): 351–386. doi:10.1075/prag.27.3.03lic.

- Licoppe, C., and J. Morel. 2012. “Video-in-Interaction: ‘Talking Heads’ and the Multimodal Organization of Mobile and Skype Video Calls.” Research on Language and Social Interaction 45 (4): 399–429. doi:10.1080/08351813.2012.724996.

- Lilja, N., and A. Piirainen-Marsh. 2019. “How Hand Gestures Contribute to Action Ascription.” Research on Language and Social Interaction 52 (4): 343–364. doi:10.1080/08351813.2019.1657275.

- Luff, P., C. Heath, H. Kuzuoka, J. Hindmarsh, K. Yamazaki, and S. Oyama. 2003. “Fractured Ecologies: Creating Environments for Collaboration.” Human-Computer Interaction 18 (1–2): 51–84. doi:10.1207/S15327051HCI1812_3.

- Luff, P., C. Heath, N. Yamashita, H. Kuzuoka, and M. Jirotka. 2016. “Embedded Reference: Translocating Gestures in Video-Mediated Interaction.” Research on Language and Social Interaction 49 (4): 342–361. doi:10.1080/08351813.2016.1199088.

- Majlesi, A. R. 2015. “Matching Gestures – Teachers’ Repetitions of Students’ Gestures in Second Language Learning Classrooms.” Journal of Pragmatics 76: 30–45. doi:10.1016/j.pragma.2014.11.006.

- Matsumoto, Y. 2019. “Material Moments: Teacher and Student Use of Materials in Multilingual Writing Classroom Interactions.” Modern Language Journal 103 (1): 179–204. doi:10.1111/modl.12547.

- Mondada, L. 2007. “Imbrications de La Technologie et de l’ordre Interactionnel: L’organisation de Vérifications et d’identifications de Problèmes Pendant La Visioconférence.” Réseaux 144 (5): 141–182. https://www.cairn.info/revue-reseaux1-2007-5-page-141.htm

- Mondada, L. 2014. “Conventions for Multimodal Transcription.” Basel: Romanisches Seminar der Universität. https://franz.unibas.ch/fileadmin/franz/user_upload/redaktion/Mondada_conv_multimodality.pdf

- Musk, N. 2016. “Correcting Spellings in Second Language Learners’ Computer-assisted Collaborative Writing.” Classroom Discourse 7 (1): 36–57. doi:10.1080/19463014.2015.1095106.

- Patru, M., and V. Balaji. 2016. Making Sense of MOOCs: A Guide for Policy-Makers in Developing Countries. Paris: UNESCO and Commonwealth of Learning.

- Rusk, F., and M. Pörn. 2019. “Delay in L2 Interaction in Video-Mediated Environments in the Context of Virtual Tandem Language Learning.” Linguistics and Education 50: 56–70. doi:10.1016/j.linged.2019.02.003.

- Sacks, H. 1992. Lectures on Conversation: Volume II. Oxford: Blackwell.

- Sahlström, F., M. Tanner, and V. Valasmo. 2019. “Connected Youth, Connected Classrooms. Smartphone Use and Student and Teacher Participation during Plenary Teaching.” Learning, Culture and Social Interaction 21 (January): 311–331. doi:10.1016/j.lcsi.2019.03.008.

- Satar, M. 2013. “Multimodal Language Learner Interactions via Desktop Videoconferencing within a Framework of Social Presence: Gaze.” ReCALL 25 (1): 122–142. doi:10.1017/S0958344012000286.

- Satar, M., and C. R. Wigham. 2017. “Multimodal Instruction-Giving Practices in Webconferencing-Supported Language Teaching.” System 70: 63–80. doi:10.1016/j.system.2017.09.002.

- Schegloff, E. 1992. “Repair after Next Turn: The Last Structurally Provided Defense of Intersubjectivity in Conversation.” American Journal of Sociology 97 (5): 1295–1345. doi:10.1086/229903.

- Schegloff, E. 2007. Sequence Organization in Interaction: A Primer in Conversation Analysis. Cambridge: Cambridge University Press.

- Tanner, M., C. Olin-Scheller, and M. Tengberg. 2017. “Material Texts as Objects in Interaction Constraints and Possibilities in Relation to Dialogic Reading Instruction.” Nordic Journal of Literacy Research 3: 83–103. doi:10.23865/njlr.v3.471.

- Walsh, S. 2012. “Conceptualising Classroom Interactional Competence.” Novitas-ROYAL (Research on Youth and Language) 6 (1): 1–14.

- Wigham, C. R. 2017. “A Multimodal Analysis of Lexical Explanation Sequences in Webconferencing-Supported Language Teaching.” Language Learning in Higher Education 7 (1): 81–108. doi:10.1515/cercles-2017-0001.