ABSTRACT

The COVID-19 pandemic has led to an unprecedented response in terms of clinical research activity. An important part of this research has been focused on randomized controlled clinical trials to evaluate potential therapies for COVID-19. The results from this research need to be obtained as rapidly as possible. This presents a number of challenges associated with considerable uncertainty over the natural history of the disease and the number and characteristics of patients affected, and the emergence of new potential therapies. These challenges make adaptive designs for clinical trials a particularly attractive option. Such designs allow a trial to be modified on the basis of interim analysis data or stopped as soon as sufficiently strong evidence has been observed to answer the research question, without compromising the trial’s scientific validity or integrity. In this article, we describe some of the adaptive design approaches that are available and discuss particular issues and challenges associated with their use in the pandemic setting. Our discussion is illustrated by details of four ongoing COVID-19 trials that have used adaptive designs.

1. Background and the Value and Need for Adaptive Designs

1.1. Introduction

Well conducted high quality randomized controlled clinical trials are the gold standard when evaluating the potential of a novel intervention, a standard that should not be compromised during a pandemic. They are an essential component of an outbreak response (National Academies of Sciences, Engineering, and Medicine Citation2017). Ideally, a rapidly conducted trial will provide definitive evidence about an intervention, allowing its immediate wide-spread deployment in the field. A vaccine that reduces risk of infection has the potential to end an outbreak. Alternatively, a treatment that improves outcomes in those who are ill may reduce transmissions by encouraging infected individuals to visit clinics (to receive treatment), thereby reducing community spread.

1.2. Context of Trials in a Pandemic

Studies must start quickly to track with the epidemic curve and enroll enough cases. This poses a particular challenge as trials therefore need to be initiated and started before the natural history of the disease is fully understood (Baucher and Fontanarosa Citation2020). In the West African Ebola outbreak, clinical trials were devised, funded, and initiated in record time. However, by the time most studies opened, the incidence of new cases was dropping and most studies failed to complete enrolment (Thielman et al. Citation2016). A similar experience is now repeated in COVID-19 where early trials in China (e.g., Wang et al. 2020) were stopped prior to reaching the preplanned sample sizes due to dwindling patient numbers and a large number of separate trials have been initiated investigating the same treatments. Such experience has led to some feeling that treatment trials conducted during one outbreak are about finding therapies to use during subsequent episodes of the disease, although this is certainly not always the case.

In the context of a novel infectious disease (such as Ebola or COVID-19), limited experience with treatment is common and heterogeneity of patient characteristics makes randomization an important tool for establishing intervention efficacy (Dodd et al. Citation2016, Citation2019; Proschan, Dodd, and Price Citation2016). Additionally, careful considerations about the endpoints used in the study are required (Dodd et al. Citation2020). Despite these challenges, there exist recent examples of trials that immediately changed practice such as the rVSV Ebola RING vaccine trial (Henao-Restrepo et al. Citation2017) and PALM in Ebola (Mulangu et al. Citation2019).

1.3. Aims and Remit of This Article

The recent experience in COVID-19 is that poorly conducted studies together with a certain degree of desperation mixed with endorsement of treatments by national and international leaders have meant that treatments have become widely used despite limited evidence of either benefit or safety.

This article seeks to make the case that scientific rigor is equally important during a pandemic outbreak as it usually is (London and Kimmelman Citation2020). We highlight the benefits provided by the flexibility of adaptive designs. Following the recent CONSORT extension for adaptive designs (Dimairo et al. Citation2020), we define an adaptive design as “A clinical trial design that offers preplanned opportunities to use accumulating trial data to modify aspects of an ongoing trial while preserving the validity and integrity of that trial.” We provide a guide to the literature on statistical methods for such designs, briefly describing the main approaches and giving key references. We seek to remind readers that one must ensure that possible adaptations are considered in the final trial analysis, as some critics will otherwise raise questions about the scientific integrity of the study, and definitive trials are meant to resolve, not stir, controversy. We provide examples of COVID-19 trials that use different adaptive features and finally discuss some of the more challenging aspects of implementing adaptive designs during a major disease outbreak.

2. Examples of COVID-19 Trials With Adaptive Designs

Adaptive designs have been used (and were planned to be used) during epidemic outbreaks in the past. During the 2014 Ebola virus disease outbreak in West Africa, for example, the PREVAIL II trial (Dodd et al. Citation2016) used a Bayesian sequential design with non-informative priors (termed “barely Bayesian design”), while the RAPIDE development platform (Cooper et al. Citation2015) used frequentist group-sequential approaches.

To illustrate some of the choices to be made when implementing an adaptive design, and the issues to be considered when making these choices, this section presents four different trials in COVID-19. These range in scope considerably, including an early (phase I/II) dose-finding platform, a confirmatory (phase III) trial, and two trials of COVID-19 patients embedded within previously-running trials. In all cases, an adaptive design has been employed, the aim of which is to obtain a meaningful result from the trial as quickly as possible without any loss of scientific integrity. Our aim in including these examples is not to give a comprehensive review of COVID-19 research, or even a full description of each of these four trials, details of which can be found via the trial registration numbers given, but to highlight the nature and purpose of the adaptive design approach used in each case. More detailed descriptions of the different methodological approaches used for the adaptive designs are then given in Section 3.

2.1. A Randomized Phase I/II Platform Trial Using a Bayesian Sequential Phase II Design: The AGILE-ACCORD Platform (EudraCT 2020-001860-27)

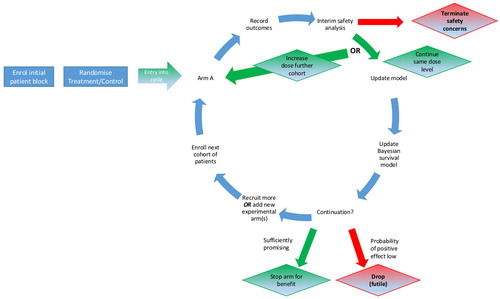

The first example is of a phase I/II dose-finding platform. Before investigating a new or existing compound in COVID-19 patients in a large efficacy trial, it is essential to establish safe doses and preliminary signs of activity. The AGILE-ACCORD (Accelerating COVID-19 Drug Development, EudraCT 2020-001860-27) trial is a randomized seamless phase I/II trial platform in which multiple different candidate treatments, potentially in different populations, can be evaluated. An illustration of the design is given in with the full protocol given by Griffiths et al. (2020). Each candidate compound entering the platform undergoes dose-escalation initially to establish its safety profile. Safe doses then progress to a Bayesian adaptive group-sequential (GS, Gsponer et al. Citation2014) phase in which the efficacy of the doses is established. For each compound, the trial is seamless in that the efficacy data observed during the dose-escalation phase of the trial are used in the efficacy phase, and similarly safety information from the efficacy phase contributes to the safety model.

The safety of the doses is defined in terms of the risk of a dose-limiting toxicity (DLT) in the first 7 days, with dose-escalation decisions based on a randomized Bayesian model-based design (Mozgunov, Jaki, and Paoletti Citation2019). Cohorts of patients are randomized between the control arm (standard of care, SoC) and the highest active dose that is safe according to the safety model. Patients within each cohort are randomized 2:1 in favor of the active dose. The inclusion of control in the dose-escalation phase is motivated by the potential overlap between DLTs and symptoms associated with COVID-19 and the need to avoid labeling potential treatments as unsafe due to misclassifying nontreatment related toxicities. Every dose that is found to be sufficiently safe can proceed to the efficacy evaluation phase so that it is possible to have several parallel efficacy evaluations of different doses of the same compound.

The efficacy of a given dose of a compound is established via a randomized Bayesian GS trial with a time-to-event primary endpoint. Patients are again randomized 2:1 in favor of the active dose being studied. The endpoint depends on the population under study. For mild and moderate cases, time to negative viral titers in nose and/or throat swab within 29 days is used while time to a 2-point improvement in the WHO clinical severity score (WHO Working Group on the Clinical Characteristics of COVID-19 infection 9-point ordinal scale) within 29 days is used for patients with severe disease. After the efficacy data are observed, the dose can be (i) dropped for futility; (ii) progressed to a definitive trial; (iii) be evaluated in an additional cohort of patients, again randomized 2:1. Stopping is based on predefined stopping boundaries that have been derived under a Bayesian GS design such that the Type I error for the evaluation of each dose is controlled to be below 10%. The GS trial gains further efficiency by allowing up to 20 concurrent control observations from other compounds to be shared. The final analysis for efficacy for each arm is based on a Bayesian Cox-model with an uninformative prior for the treatment effect.

2.2. A Multi-Arm Multi-Stage (MAMS) Trial: The RECOVERY-Respiratory Support Trial (ISRCTN16912075)

The second example is the RECOVERY-Respiratory Support trial (ISRCTN16912075). This is a multi-arm multi-stage (MAMS) randomized clinical trial to compare three noninvasive ventilation methods of standard care (oxygen given via face masks or nasal tubes), high flow nasal oxygen (HFNO), and continuous positive airway pressure (CPAP) for patients with confirmed or suspected COVID-19 who require oxygen (FiO2 ≥ 0.4 and SpO2 ≤ 94%). The primary endpoint is the requirement of intubation or death within 30 days.

The trial team acknowledged that not all centers would be able to randomize patients between all three arms, for example, due to the lack of availability of one of the interventions. To maximize recruitment to the trial it was decided to include centers provided they could randomize patients between standard care and at least one of HFNO and CPAP.

The inclusion of centers randomizing between different interventions gives the potential for bias, for example, due to differences between centers randomizing to all arms and those randomizing only to HFNO and standard care. To avoid this bias, analyses will only compare HFNO patients with standard care patients from centers that included HFNO patients, and only compare CPAP patients only with standard care patients from centers that included CPAP patients. The sample size will account for this.

The trial started in April 2020 with a target recruitment of 4002 patients from 40 centers. This would give 90% power to detect a reduction in the proportion of patients requiring intubation or dying from 15% to 10%. To achieve this sample size, the trial is expected to be completed within 18 months. Given the urgent need for effective treatments for COVID-19 patients and the potential long duration of the trial, the investigators wanted to stop the study early if either CPAP or HFNO was shown to be more effective than standard care, or to drop an arm or stop the study completely if one or both experimental arms was not sufficiently promising. This ability was achieved through the planning of interim analyses on a monthly basis. A formal GS stopping rule for efficacy of either experimental arm over standard care was proposed based on the alpha-spending function approach (Kim and Demets Citation1987), as described in Section 3.3.

The stopping rule is applied separately for each pairwise comparison with standard care. A conventional one-sided overall Type I error rate of 0.025 was used, and the final analysis will take account of the GS design using the approach proposed by J. Whitehead (Citation1997). No adjustment is planned for the multiple comparisons with standard care. No futility boundary was specified, though the trial data monitoring committee (DMC), who will monitor the data monthly, will be able to recommend that an experimental arm be dropped or the trial be stopped for futility at any interim analysis.

2.3. Embedding a Trial Within a Trial: The CAPE-Covid and the CAPE-Cod (Community-Acquired Pneumonia: Evaluation of Corticosteroids) Studies (NCT02517489)

The third example shows how an existing trial was modified to include an embedded trial of COVID-19 patients, with this embedded trial given a GS design. The CAPE-Cod trial (NCT02517489), assessing the efficacy of hydrocortisone to improve 28 day survival in intensive care units (ICU) for patients suffering severe community-acquired pneumonia (CAP), was active and recruiting patients at the beginning of the COVID-19 pandemic. As SARS-CoV-2 pneumonia was not an exclusion criterion of the CAPE-Cod trial, centers started to include COVID-19 infected patients in the study. As the clinical characteristics of the two indications differ, trial stakeholders have decided to study the COVID-19 patients by embedding a specific study considering the COVID-19 indication only, with the CAPE-Cod study interrupted temporarily to account for patients’ heterogeneity in disease characteristics and evolution.

A GS design using the alpha-spending approach (Kim and Demets Citation1987) was used for the embedded COVID-19 study. This was chosen for its simplicity in terms of training the local methodological team, availability of software and medical community acceptance of study results. A conservative stopping boundary was selected for efficacy with an aggressive boundary for futility; that is stopping for superiority only if the evidence is particularly strong while stopping early if the experimental treatment is not sufficiently promising. This will be adjusted for in the final analysis. A blinded sample size re-estimation was included in the study protocol because of uncertainty about the mortality rate for placebo patients with COVID-19, with estimates ranging from 26% to 73% (Grasselli et al. 2020; Ruan et al. Citation2020).

2.4. Evaluating COVID-19 Treatments in an Ongoing Adaptive Platform Trial: The REMAP-CAP Trial (NCT0273570)

The final example is of a trial that was designed to be able to adapt to an acute pandemic need. Motivated by the 2009 swine flu (H1N1) pandemic, the Randomized, Embedded, Multifactorial Adaptive Platform trial for Community-Acquired Pneumonia (REMAP-CAP, NCT0273570) seeks to: (i) evaluate multiple interventions to improve outcomes of patients admitted to an ICU with severe CAP, and (ii) provide a platform that can respond rapidly in the event of a respiratory pandemic.

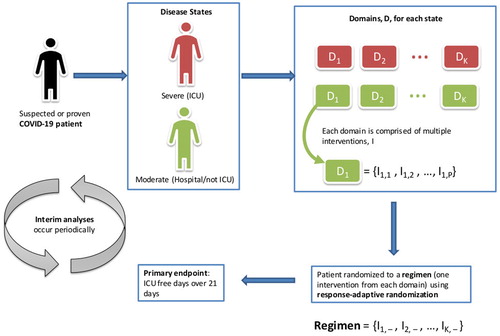

The primary endpoint for COVID-19 patients entering REMAP-CAP is a composite of in-hospital death and the number of ICU free days over 21 days, thus forming a 23-point ordinal scale, that is, −1 (death), 0, 1, …, 21. Adult patients with suspected or proven COVID-19 are enrolled into the COVID-19 stratum of REMAP-CAP, on an open-label basis, and classified into severe (in ICU) or moderate (hospitalized but not in ICU) disease states.

Within each disease state, there are multiple domains, each of which is a set of mutually exclusive interventions. An example is the antiviral domain comprising four interventions: (1) no antiviral; (2) lopinavir/ritonavir; (3) hydroxychloroquine; (4) lopinavir/ritonavir and hydroxychloroquine combination. The trial is adaptive so that new domains or interventions can be added at any time. Sites do not necessarily need to include all of the available domains or interventions.

Each patient is randomized to a regimen—one intervention from every domain—so that they receive multiple interventions simultaneously; this forms the multifactorial component of the trial design (). By randomizing patients to multiple interventions, only a few receive no active treatment. Response-adaptive randomization is used to allocate patients to regimens so that more patients receive the most promising regimen(s) as the trial progresses.

Figure 2. REMAP-CAP trial structure for COVID-19 patients (adapted from a webinar by Berry Consultants, https://www.youtube.com/watch?v=mhPsj1j3hlk).

Interim analyses occur frequently and are currently expected to take place weekly, although this will fluctuate with the rate of enrolment. They are also used to detect superiority, inferiority, or equivalence of interventions. Depending on regional differences or treatment availability, for example, an intervention may not necessarily be dropped if it is needed in the pandemic. All inferences in this trial are based on a Bayesian statistical model, which estimates the posterior probability of the primary endpoint for each regimen and takes into account the variation in outcomes by region, stratum, disease state, age group, and time since the start of the trial. The full statistical analysis plan is available at https://www.remapcap.org/protocol-documents.

3. Statistical Principles of Adaptive Design

3.1. Motivation for Using Adaptive Design Methods

Traditionally, clinical trials testing one or more null hypotheses have followed nonadaptive designs where all details of the design are specified ahead of time. In recent decades, however, there has been a rise of adaptive designs for clinical trials in all phases of drug development (Pallmann et al. Citation2018) and, in response, regulatory authorities have issued several guidelines for adaptive designs (European Medicines Agency 2007; Food and Drug Administration 2019).

Suppose we want to perform a clinical trial to test whether an experimental treatment is superior to control (placebo or current SoC). Measuring the benefit of the novel treatment over control by θ, we want to test the one-sided null hypothesis H0: θ ≤ 0 versus the alternative H1: θ > 0. To claim control of the Type I error rate for the trial, the probability of incorrectly rejecting H0 must not exceed a prespecified level α. The need to maintain strict control of the Type I error rate is well established, particularly in the context of late-phase studies (European Medicines Agency Citation1998) where a typical choice for α is 0.025. In many situations, however, clinical trials have several objectives. For example, trialists may want to compare several experimental treatments or active doses with control, or evaluate the effect of a novel treatment in several patient subgroups. In this setting, a trial begins testing m null hypotheses H0i : θi ≤ 0, for i = 1, …, m, and often is designed to maintain strong control of the family-wise error rate (FWER) at some level α, which is achieved if the probability of rejecting one or more true null hypotheses does not exceed α. The requirement to control the FWER is similar to that of controlling the Type I error rate in that it restricts the probability of making a false claim of efficacy.

Why should one use an adaptive design in the first place? When planning a clinical trial there might be (substantial) uncertainty about important design parameters, for example, which effect sizes and value of the outcome variance are realistic and in which study population. If the study population is set too broadly, or the assumed variance is too small, the study will be underpowered. To address such uncertainties, it is tempting to learn from accumulating data and adapt if necessary. Adaptations of interest for COVID-19 trials may include early stopping for futility or efficacy at an interim analysis, sample size reassessment, dose-group selection, change of allocation ratio or modification of the study population in a data dependent way. Emerging knowledge of the natural history of COVID-19 can also make the choice of a primary endpoint for a trial difficult (Dodd et al. Citation2020). Adaptive methods are available to enable a change in endpoint (Kieser, Bauer, and Lehmacher Citation1999), though it is important to ensure that the results of a trial will be sufficiently convincing to the scientific community when making such a change.

MAMS designs (e.g., Stallard and Friede Citation2008; Magirr, Jaki, and Whitehead Citation2012; Wason et al. Citation2016) are of great interest for COVID-19 trials. They increase efficiency by comparing multiple experimental treatments against a shared control group (Parmar, Carpenter, and Sydes Citation2014), while the adaptive design allows early stopping of non-promising (or highly efficacious) arms using prespecified decision rules (Wason and Jaki Citation2012). Platform trial designs (Meyer et al. Citation2020) allow new experimental treatments to be added as the trial progresses and often start as, or become, MAMS trials. Platform trials provide notable operational efficiency (Schiavone et al. 2019) since evaluation of a new treatment within an existing trial will typically be much quicker than setting up a new trial. This flexible approach enabled the REMAP-CAP trial described in Section 2.4 to rapidly be adapted to study COVID-19 patients. Platform trials are cited as one potential approach for evaluating drugs intended to treat or prevent COVID-19 in a recent guidance (Food and Drug Administration 2020) and several other platform trials, such as the SOLIDARITY trial (ISRCTN83971151) and the RECOVERY trial (ISRCTN50189673, www.recoverytrial.net), are currently running.

For a more detailed review of adaptive clinical trial designs, we refer the interested reader to Bauer et al. (Citation2016) and Pallmann et al. (Citation2018), and the references therein. For a technical description of the methods for confirmatory clinical trials, we refer to the book of Wassmer and Brannath (Citation2016). For an overview of Bayesian adaptive methods, which have attracted much attention in the context of exploratory trials, see Berry et al. (Citation2010).

For all adaptive design approaches, data dependent changes may introduce bias and, particularly for late stage trials, appropriate statistical methods must be used to preserve the Type I error rate. More details on methods to ensure error rate control is maintained are given in the next sections, with estimation methods discussed in Section 3.5.

3.2. Type I Error Rate Control in Adaptive Designs

All regulatory guidelines emphasize that, for confirmatory clinical trials following an adaptive design, control of the Type I error rate is paramount. This requirement remains for trials of COVID-19 treatments (Food and Drug Administration 2020). Elsäßer et al. (Citation2014) reviewed scientific advice given by the European Medicines Agency (EMA) on adaptive design proposals and their outcomes (Collignon et al. Citation2018). The authors showed that if adaptive designs are properly implemented, then such innovative designs are well accepted by health authorities.

Strict control of the Type I error rate can be achieved by using prespecified adaptation rules such as early stopping boundaries in GS designs (Jennison and Turnbull 1999) or blinded sample size reassessment rules when estimating nuisance parameters at an interim analysis (Friede and Kieser Citation2006). However, prespecified designs do not permit unplanned modifications. To allow for further flexibility, fully adaptive designs have been suggested which allow adaptations not completely specified in the adaptation rule while still controlling the Type I error rate. Many designs of this type use p-value combination tests (Bauer and Köhne Citation1994) to allow for more flexible decision making, while still preserving the integrity of the design. Alternatively, designs can follow the conditional error principle (Proschan and Hunsberger Citation1995; Müller and Schäfer Citation2004) to control the Type I error rate. The conditional error principle enables one to introduce flexibility to any type of predefined design, even if no interim analysis was originally planned (Müller and Schäfer Citation2004); the key is that the significance level of the adapted trial must not exceed the level of the initial design conditional on the data already observed. If data are combined across stages of the adaptive trial using the inverse normal function proposed by Lehmacher and Wassmer (Citation1999) and Cui, Hung, and Wang (Citation1999), and monitored against GS boundaries, the test decision should coincide with the study outcome had no unplanned adaptation been performed.

If more than one null hypothesis is to be tested, for example, in a multi-arm study, several extensions (Bauer and Kieser Citation1999; Hommel Citation2001) to the designs discussed above have been proposed which use adaptive designs within a closed testing framework (Marcus, Peritz, and Gabriel Citation1976). A closed testing procedure requires level α tests of each individual null hypothesis to be specified, as well as level α tests of all possible intersections of null hypotheses; if all tests involving a specific hypothesis are significant at level α, then that null hypothesis may be rejected globally at level α. Tests maintaining strong control of the FWER must (whether explicitly defined or not) be a closed testing procedure. For adaptive trials, for each null hypothesis, whether individual or an intersection, an adaptive test is prespecified.

The advantage of the fully adaptive design is that both internal and external information can be used to adapt the trial without fully specifying the adaptation rule. This is crucial in the pandemic situation as it means emerging data from parallel trials can be easily incorporated into the adaptation decision rule of an ongoing adaptive trial without compromising its integrity.

The methods discussed above focus on ensuring Type I error rate control in a frequentist setting. It is also possible to incorporate Bayesian methodology into the decision making process for adaptations without compromising error rate control, often using simulation methods to select critical values and other design parameters (Schmidli, Bretz, and Racine-Poon Citation2007; Brannath et al. Citation2009; Berry et al. 2011; Yu, Ramakrishnan, and Meinzer Citation2019; Stallard et al. Citation2020). Such an approach is illustrated by the examples in Sections 2.1 and 2.4.

3.3. GS Stopping Rules in Confirmatory Adaptive Design Trials

Incorporating a GS stopping rule into a confirmatory clinical trial allows for greater flexibility as compared to a standard trial design without any interim data looks. If an extraordinarily large benefit of the experimental treatment over control is observed at an interim analysis, it is desirable to have the option to stop the trial for efficacy with early rejection of the null hypothesis. If such an option is incorporated, a multiple testing problem emerges which needs to be handled by adjusting the local significance levels for the interim and final analysis to maintain the nominal Type I error rate. In GS trials with one or more interim looks, this can be done by using adjusted rejection regions tailored to prespecified interim analysis times (O’Brien and Fleming Citation1979; J. Whitehead Citation1997). In practice, many GS trials are run allowing flexibility with regards to the timing and number of interim analyses, using the alpha-spending function approach (Kim and Demets Citation1987) or flexible boundary methods (J. Whitehead Citation1997, 2011). The two examples described above in Sections 2.2 and 2.3 that are based on frequentist analyses both use the alpha-spending function approach to ensure that Type I error rates are controlled. GS stopping rules for efficacy can also be incorporated in adaptive designs using a combination test approach (Bauer and Köhne Citation1994; Lehmacher and Wassmer Citation1999) or the conditional error principle (Proschan and Hunsberger Citation1995; Müller and Schäfer Citation2004).

Alternatively, one might also consider stopping a confirmatory trial early for futility if data are consistent with clinically irrelevant or harmful treatment effects. Allowing stopping for futility can be particularly useful in confirmatory trials of COVID-19 treatments which, due to high levels of unmet medical need, may follow accelerated development programs, so that limited phase II data are available before the confirmatory study begins (Food and Drug Administration 2020). Stopping for futility also means that resources can be quickly diverted to test more promising interventions. If recruitment into a COVID-19 trial is rapid, many patients will already have been enrolled by the time the futility interim analysis is performed, and the reductions in expected sample size possible by testing group sequentially will be smaller than those typically cited (Hampson and Jennison Citation2013). To mitigate this issue, accrual could be paused in the run-up to a futility interim analysis (Food and Drug Administration 2020) although in practice restarting recruitment is very challenging. A futility rule can either be binding in the sense that it needs to be strictly adhered to at the interim analysis, or nonbinding, meaning that the rule may also not be followed. While binding futility rules allow for a relaxation of the local significance levels, nonbinding rules can be regarded as more advantageous from a practical perspective due to their increased flexibility. However, the Type II error rate increases with the use of a nonbinding futility rule, requiring an increased sample size to achieve the target power. The COVID-19 trial examples given above include both trials with binding (Section 2.3) and nonbinding (Section 2.2) futility stopping rules.

3.4. Multi-Arm Multi-Stage (MAMS) and Platform Trials

MAMS designs allow several experimental treatments to be assessed in a single trial with treatments dropped from the trial as soon as interim analysis data suggest they are ineffective. The design requires specification of stopping boundaries prior to the trial starting: these usually consist of futility stopping boundaries and, less commonly, efficacy stopping boundaries. At an interim analysis, each experimental arm is compared against control using all patients with outcome information up to that point: experimental arms with test statistics below the futility boundary have further recruitment stopped and experimental arms with test statistics above the efficacy boundary can be concluded to be effective (and the respective null hypothesis rejected). Setting stopping boundaries is not trivial, but can be done using analytical formulas (Royston, Parmar, and Qian Citation2003; Magirr, Jaki, and Whitehead Citation2012) or simulation in the frequentist (Wason and Jaki Citation2012) or Bayesian (Yu, Ramakrishnan, and Meinzer Citation2019) settings. Software exists such as the R package MAMS (Jaki, Pallmann, and Magirr Citation2019) and the Stata module nstage (Bratton, Choodari-Oskooei, and Royston Citation2015). The stopping and selection rules are preplanned, meaning that the trial must be conducted according to the prespecified rules. A frequentist MAMS design is being used in the COVID-19 RECOVERY-Respiratory Support trial described in Section 2.2. The design is efficient in that two experimental treatments are compared with a shared control group with the chance to stop as soon as sufficiently positive results have been observed or to drop an arm or stop the trial completely if results are not sufficiently promising.

The MAMS trial methodology can be generalized to a flexible confirmatory adaptive approach (Magirr, Stallard, and Jaki Citation2014) which is usually based on the combination testing principle (Bauer and Köhne Citation1994). This principle can be applied for testing multiple hypotheses by use of the closed testing principle (Bauer and Köhne Citation1994; Bretz et al. Citation2009; Bauer et al. Citation2016; Wassmer and Brannath Citation2016). An application is the multi-arm setting where, for example, treatment arms can be selected and sample sizes for the selected treatment arms can be recalculated based on the observed response (Bauer and Kieser Citation1999; Koenig et al. Citation2008; Magirr, Stallard, and Jaki Citation2014; Wassmer and Brannath Citation2016). For these designs, the adaptation rules, need not be prespecified. Consequently, this approach allows for testing in MAMS trials controlling the FWER in a strong sense. R packages are available for confirmatory adaptive designs in the multi-arm setting, such as asd (Friede, Stallard, and Parsons Citation2020) and rpact (rpact Citation2020), with MAMS (Jaki, Pallmann, and Magirr Citation2019) also having an extension for dealing with unexpected design modifications.

Some MAMS trials use adaptive randomization (AR) to guide allocation to better performing arms. In a multi-arm setting, if the shared control group is kept separate from the AR procedure, this maintains the power at a high level (Wason and Trippa Citation2014; Williamson and Villar Citation2020). Frequentist and Bayesian approaches to AR can be used, although in practice it is more common to use a Bayesian approach, as in the REMAP-CAP trial described in Section 2.4. AR has been criticized for being susceptible to temporal trends in the trial (Thall, Fox, and Wathen Citation2015; Proschan and Evans Citation2020), which may be possible in COVID-19 settings due to mutation of the virus. However, if the allocation to the shared control group is maintained, there is little impact on the statistical properties of the trial (Villar, Bowden, and Wason Citation2018).

Another type of MAMS design is the drop-the-losers design (Sampson and Sill Citation2005; Wason et al. Citation2017), which sets a fixed number of arms to progress at each interim analysis. An advantage of this is that the design has a fixed sample size (in contrast to a random sample size for the approach described above). The main disadvantage is that it may lead to dropping of promising arms if there are more than the design is permitted to take forward. Approaches that combine the drop-the-losers approach with prespecified stopping boundaries have been proposed (Friede and Stallard Citation2008) to allow early stopping for futility and efficacy.

Magirr, Jaki, and Whitehead (Citation2012), Wason et al. (Citation2017), and Robertson and Wason (2019) describe how FWER control can be achieve in a MAMS design. However, there has been debate about which type of multiplicity adjustment, for example, pair-wise or family-wise, is needed for a multi-armed design (e.g., Wason, Stecher, and Mander Citation2014; Stallard et al. Citation2019; Collignon et al. Citation2020). Generally, the decision will be determined by the relative importance of making a Type I error and a Type II error, as correcting for multiple testing reduces the power. In a platform setting, it is more difficult to formally control the FWER unless it is known in advance how many treatments will be tested. New methods for online control of error rates may provide a way of doing this if it is required (Robertson and Wason Citation2018).

Most methodology work in MAMS has focused on settings with a shared control group. In the case of no obvious control group, we would refer the reader to the Magaret et al. (Citation2016) and the motivating example in Whitehead, Desai, and Jaki (Citation2020).

When there is a shared control group, the relative allocation between control and experimental treatments can affect the power. A natural choice is to randomize patients equally between each available treatment. One can gain a small amount of power by increasing the allocation to the control group (Wassmer Citation2011; Wason et al. Citation2016), although in a MAMS study the optimal allocation depends on the likelihood of dropping arms only. Setting the allocation ratio of new arms in a platform study can also be optimized (Bennett and Mander Citation2020).

3.5. Estimation After an Adaptive Design Trial

Point estimates and confidence intervals (CIs) for the treatment effects assessed in a confirmatory clinical trial play a key role in deciding whether or not to recommend adoption of the new interventions in clinical practice. Therefore, the estimators used in confirmatory adaptive clinical trials need to have good accuracy. In some settings conventional estimation approaches may be used provided the bias can be shown to be small. In other settings naive point estimators that assume a two-arm single-stage design can be substantially biased for an adaptive trial because of the possibility of stopping early for futility or overwhelming effect (Whitehead 1986; Koopmeiners, Feng, and Pepe Citation2012) and/or selecting the most promising experimental treatments at an interim analysis (Bauer et al. Citation2010; Carreras and Brannath Citation2013; Kimani, Todd, and Stallard Citation2013). For two-stage trials, conditional on the trial continuing to stage 2, the selection rule and the treatments continuing to stage 2, several uniformly minimum variance conditional unbiased estimators (UMVCUEs) exist (Bowden and Glimm Citation2008; Kimani, Todd, and Stallard Citation2013; Robertson, Prevost, and Bowden Citation2016; Stallard and Kimani Citation2018). The UMVCUEs can have large mean squared errors (MSEs) and are computed if the trial continues to stage 2. Alternative shrinkage estimators that are based on empirical Bayes approaches tend to have smaller MSEs but they are not necessarily unbiased (Carreras and Brannath Citation2013; Brückner, Titman, and Jaki Citation2017). Estimators that also reduce bias when a trial stops in stage 1 exist (e.g., Stallard and Todd Citation2005; Carreras and Brannath Citation2013) as well as generalizations to multiple stages (Whitehead, Desai, and Jaki Citation2020).

Naive CIs can have incorrect coverage. When an adaptive design selects the most effective experimental treatment in stage 1, among the methods that adjust for adaptation (Sampson and Sill Citation2005; Stallard and Todd Citation2005; Wu, Wang, and Yang Citation2010; Magirr et al. Citation2013), the Sampson and Sill (Citation2005) method performs best in terms of coverage while the approach of Wu, Wang, and Yang (Citation2010) is the most straightforward for computing CIs (Kimani, Todd, and Stallard 2014). Methods for computing CIs with other selection rules are available (Neal et al. Citation2011; Magirr et al. Citation2013). One of these methods allows continuing with multiple experimental treatments in stage 2 but sometimes the computed CIs are non-informative (Magirr et al. Citation2013).

Most of the methods above assume normally distributed outcomes with known variance. Extensions to the case of unknown variance (Robertson and Glimm Citation2019) exist and, using asymptotic approximations, they can be extended to other outcomes. Point estimation with time-to-event outcomes has been considered separately as these outcomes pose specific challenges (Brückner, Titman, and Jaki Citation2017). One of the UMVCUEs is a generalization of the others enabling MAMS trials (Stallard and Kimani Citation2018).

4. Specific Statistical Issues in Adaptive Designs in COVID-19 Intervention Trials

4.1. Characterizing the Dose–Response Relationship

When studying a compound in a completely new disease population, such as COVID-19, it is essential to identify a therapeutic window, that is, a range of doses which simultaneously control the risk of adverse events while having a clinically meaningful efficacy effect. Importantly, the same requirement applies to repurposed medicines as the dose selected for the original indication is not guaranteed to be optimal for the COVID-19 population. Characterization of the dose–response relationship, however, poses several statistical challenges.

Both the toxicity and efficacy signals should be attributable to the compound rather than to the baseline toxicity and efficacy rates. Therefore, the dose–response relationship should be established accounting for outcomes on control, by quantifying differences between the active doses and control (SoC or placebo) for both toxicity and efficacy endpoints. Furthermore, to gain efficiency by using data across different doses of the compound, a model-based approach that stipulates a parametric relationship for the underlying differences should be used. This, in turn, poses a challenge in terms of how to select the dose–response model. To overcome this, an MCP-Mod approach which considers several candidate models (Bretz, Pinheiro, and Branson Citation2005; Pinheiro et al. Citation2014), or a model-averaging approach can be used. To establish the dose-response relationship, randomization of patients between doses is needed to allow for efficient dose-response estimation. Consequently, the safety of the dosing range must be established before the dose-response relationship can be reliable characterized. A two-stage procedure adjusting allocation probabilities to each dose could also be used (Mielke and Dragalin Citation2017).

To ensure the most efficient use of the data, the most informative yet clinically meaningful endpoint should be used. While it is common to dichotomize continuous or time-to-recovery endpoints to simplify the analysis, it is inevitable that this will result in the loss of some statistical information; specifically, this strategy has been found to result in noticeable losses when characterizing the dose–response relationship (Mozgunov, Jaki, and Paoletti 2020). While dose–response models for continuous and binary endpoints are more common, there are also parametric and semiparametric models available for time-to-event data with implementations available in standard statistical software.

In the AGILE-ACCORD trial described in Section 2.1 the risk of toxicity (yes/no) is modeled and excess risk of toxicity of the investigated treatment above control is used for dose-selection.

4.2. Choice of Primary Endpoint

Choosing the primary endpoint is often a challenge in clinical trials, but a novel disease with lots of uncertainty makes this choice is even more challenging. Whitehead and Horby (Citation2017) approached this challenge by recognizing that outcomes such as recovered versus not recovered are almost always of interest. In recent work discussing different choices of endpoints for COVID-19, Dodd et al. (Citation2020) also state that mortality is a desirable endpoint for COVID-19 that might be difficult to use in practice due to the large number of patients required. Other endpoints are therefore often used, but as knowledge about the disease increases, alternative endpoints might emerge that are deemed to be more suitable. In such a situation it is natural to want to adapt the primary endpoint in the light of this new information. Such an approach is possible within an adaptive design (Kieser, Bauer, and Lehmacher Citation1999), but challenges may arise in convincing end-users of the trial results that such a change have not been made with ill intent. A recent example of a trial that did change the primary endpoint is the ACTT trial (Beigel et al. 2020) that initially used the WHO ordinal scale at a fixed time point but later changed, on the basis of information external to the trial, to time-to-recovery.

4.3. Stopping a Trial Early Due to Recruitment Difficulties

The incidence of COVID-19 will vary over the course of the pandemic. A trial starting recruitment during the “post-peak” phase of the pandemic, as disease activity levels trail off, may struggle to reach the target information level before it ends. If a GS test is stopped early due to feasibility issues, steps can be taken to draw valid inferences about the target treatment effect. As mentioned in Section 3.3, many GS trials are monitored according to the alpha-spending function approach (Kim and Demets Citation1987), and accommodating early termination in this framework is relatively straightforward. At the final analysis, we choose the critical value of the test so as to “spend” all the remaining Type I error rate. Choosing the final critical value in this way preserves the Type I error rate of the trial, although there will be a drop in power relative to the originally planned design. CIs and p-values based on the stagewise ordering of the test’s outcome space can be calculated conditioning on the observed sequence of information levels using the methods described in Section 3.5. A similar idea can be used to reach a final hypothesis decision in a GS trial which does not follow an alpha-spending design: one finds the critical value for the final analysis so that the trial has Type I error rate α given the observed information levels and the test boundaries used at previous interim analyses. One additional caveat that applies to all GS trials stopped early for lack of feasibility: while the decision of when to truncate the GS test can (and should) be informed by trends in recruitment rates, the timing of the final analysis should not be influenced by previous treatment effect estimates (Jennison and Turnbull 1999). If, for example, we bring forward the final analysis because we were close to crossing a boundary at the last interim look, test statistics will not follow the anticipated canonical joint distribution used to calculate stopping boundaries (Jennison and Turnbull Citation1997), leading to deviations from the nominal Type I error rate.

If the pandemic ends earlier in some geographic regions than others, the sponsor of a global study can compensate by switching to recruit more patients at sites where the disease is still active. While this strategy would preserve the Type II error rate of the trial, one would still see perturbations in the power of region-specific subgroup analyses. In addition, the impact of this shift in recruitment on the interpretation of the trial should be carefully considered, particularly if the target estimand (ICH Citation2020) remains as the effect of treatment in a global patient population (which would still be pertinent if all regions remained at risk of subsequent waves of infection). To align with this target estimand, the analyst could perform a random effects meta-analysis of region-specific treatment effect estimates, where the mean of the random-effects distribution would be taken as the overall treatment effect of interest.

4.4. Heterogeneity of the Patient Population

The prognosis of COVID-19 is highly variable, ranging from non-symptomatic courses of the disease in the majority of patients, to severe clinical courses requiring hospitalization, intensive care and leading to death in many cases. A number of prognostic factors have been identified, including age, sex, chronic lung disease, diabetes, hypertension, and other cardiovascular comorbidities (Grasselli et al. 2020; Zhou et al. Citation2020). In addition, the time span and course of the disease up to the time of study inclusion is prognostic for the outcome. For the design of clinical trials this implies that the inclusion criteria have a strong impact on the distribution of the primary endpoint and the corresponding sample size planning. Furthermore, in the analysis of the clinical trial, the precision of treatment effect estimates can be substantially improved by adjusting for important covariates in the analysis. Also, stratified randomization according to the risk factors can improve the efficiency of the design.

An important question is to what extent the prognostic factors are also predictive for the efficacy of the treatment. For example, for anti-viral drugs it is usually assumed that efficacy is largest when treatment starts early after infection. However, comorbidities and demographic variables can also play a role in the mechanism of action or affect the safety profile. If the treatment effect varies across subgroups that are defined by baseline characteristics, treatment effect estimates are only meaningful for a well-defined patient population. In addition, subgroup analyses will be critical to better understand the treatment effect. Challenges are the typically small sample sizes in subgroups and the multiplicity problem connected with multiple analyses. Adaptive enrichment designs can be a useful tool in this context (see, e.g., Friede, Stallard, and Parsons Citation2020 for a recent overview). Based on interim results, they allow one to increase the sample size in specific subgroups or to restrict randomization to these populations, while controlling frequentist error rates (Ondra et al. Citation2016, Citation2019; Chiu et al. Citation2018). However, pre-specification of subgroups can be difficult, especially for quantitative predictive factors (e.g., time from the onset of the disease), where thresholds need to be prespecified to define the corresponding sub-populations. If there is not enough prior information to select a single threshold, subgroup analyses with multiple thresholds can be implemented if an appropriate adjustment for multiple testing is foreseen (Graf et al. Citation2020).

4.5. Dynamically Changing Standard of Care

SoC is likely to evolve quickly in the early stages of a pandemic, particularly of a previously unknown disease such as COVID-19, as clinicians’ understanding of the disease and experience treating it increases rapidly. Of course, many trials in an acute pandemic setting recruit quickly and measure short-term endpoints; see, for example, the primary endpoints of the RECOVERY and REMAP-CAP trials described in Section 2. This means that SoC would be expected to remain relatively stable over the course of an individual study. However, more substantial changes may be seen over the course of a trial measuring the long-term outcomes of patients with less severe disease at baseline. Alternatively, we may see changes in SoC across the course of several successive studies which we intend to combine via meta-analysis.

For trials of add-on treatments (comparing SoC + novel therapy vs. SoC alone), block-randomization or pre-specification of the allocation probabilities in a parallel group study will be sufficient to ensure control of the Type I error rate at the nominal level. If changes in SoC result in a time-varying treatment effect, the study investigators should consider what the target estimand is, that is, an aggregate treatment effect averaging across the course of the study, or the effect of treatment compared with the most recent version of SoC, and whether the target effect can be estimated.

Changes in SoC can present a particular challenge in a platform trial adding in new experimental treatments over time. In this setting, there is debate about whether one should use nonconcurrent control patients in the evaluation of experimental treatments. If a new treatment is added in part-way through the trial, concurrent control patients are those who are recruited from that time; nonconcurrent controls would be those patients recruited prior to the addition. If it is likely that there is strong potential for a temporal trend in the data, as may be the case in COVID-19 trials, it is likely best to use only concurrent controls as the primary analysis.

4.6. Leveraging Information Across Trials Using Meta-Analytic Approaches

Comparison-specific treatment effects may accumulate over the course of a pandemic as randomized controlled trials are replicated by different research groups, and as platform trials with common treatment arms report pairwise comparisons. While there may be substantial heterogeneity amongst the control groups of successive trials in a pandemic due to the evolving SoC (Dodd et al. Citation2019), two-group comparative effects may remain more stable. Combining evidence across studies in a meta-analysis will increase the statistical information available for estimating a target treatment effect. If we prospectively intend to update the meta-analysis as each new study is published, the cumulative results should be monitored using a GS test to avoid over-interpretation of random highs and lows in treatment effect estimates, and inflation of the Type I and Type II error rates. Different approaches have been proposed for monitoring a cumulative meta-analysis (Simmonds et al. Citation2017). Sequential meta-analysis approaches use GS boundaries to monitor standardized Z statistics obtained from random-effects meta-analyses. For example, boundaries could be from a triangular GS test (A. Whitehead Citation1997) or a restricted procedure (Higgins, Whitehead, and Simmonds Citation2011), or an alpha-spending test spending Type I error as a function of the estimated proportion of the target information level accumulated thus far. The DerSimonian and Laird (Citation1986) estimate of the between-study heterogeneity may be unstable in the early stages of a cumulative meta-analysis when few studies are available, so that estimated information levels may decrease between successive interim analyses (Higgins, Whitehead, and Simmonds Citation2011). With (very) few studies also Bayesian approaches with weakly informative priors for the between-study heterogeneity were found to be more robust than standard methods (Friede et al. Citation2017). It may be possible to reduce between-study heterogeneity and increase borrowing across studies by leveraging data on baseline covariates, either in a meta-regression approach if only aggregate data are available (Neuenschwander and Schmidli Citation2020), or in models for patient responses if individual patient data are available.

5. Practical Issues in Conducting Adaptive Designs

As highlighted in Section 1, time is essential when conducting clinical trials during a disease outbreak. When conducting an adaptive trial, one particular aspect, namely decision making about undertaking any of the (preplanned) adaptations, needs to be particularly considered in addition. This time-sensitivity means that the decision processes (which often involve review of unblinded data) are streamlined and decision making is clear before undertaking any interim data looks to avoid potential delays in implementing decisions. The DMC of the trial will often have a particularly crucial role to play in these decision processes.

5.1. Data Monitoring Committees for an Adaptive Trial

A DMC, also known as data safety monitoring board (DSMB) or data safety monitoring committee (DSMC), is “a committee created by a sponsor to provide independent review of accumulating safety and efficacy data” (Herson Citation2009). Therefore, the DMC is “responsible for […] protecting trial integrity and patient safety” (Herson Citation2009). Although all trials require safety monitoring, this does not mean that a DMC needs to be established for all trials. However, safety monitoring by a DMC is recommended “for any controlled trial of any size that will compare rates of mortality or major morbidity” or more specifically for a “population at elevated risk of death or other serious outcomes, even when the study objective addresses a lesser endpoint” (Food and Drug Administration Citation2006), which is likely to be the case for any COVID-19 trial. Compared to other studies, COVID-19 trials might be shorter with faster recruitment (given the pandemic situation) and shorter length of follow-up (e.g., restricted to length of stay in hospital). For safety monitoring by a DMC this, in turn, requires shorter review intervals, which might lead to weekly safety reviews in some instances. This has obvious consequences for logistics in terms of data management, statistical analyses, as well as the required availability and commitment of the DMC members. Given these demands it might be practical to set up committees to monitor not only an individual trial but to oversee several trials on a programme level. This would not be uncommon for trials investigating the same compound, but in COVID-19 might be applied also to settings where different compounds are being investigated by the same sponsor. This would also enable the DMC to consider data emerging from other ongoing trials in their decision making, allowing them to integrate rapidly increasing and changing information. This type of oversight committee tends to be larger than the standard DMC which typically comprises two physicians and one statistician, since a wider range of expertise might be required and several regions might need to be represented. In the case of adaptive designs or complex platform trials, the DMC might be charged with extra responsibilities beyond safety monitoring. For example, when performing a formal interim analysis, the DMC might make recommendations regarding treatment or subgroup selection, as well as futility stopping or sample size re-estimation. Since COVID-19 trials often use quickly observed endpoints, such adaptations might still be meaningful, although the trials might be quite short overall given rapid recruitment.

5.2. Regulatory Considerations

Basic regulatory requirements on adaptive designs to be used in confirmatory study are laid down by the Food and Drug Administration and EMA (European Medicines Agency 2007; Food and Drug Administration 2019). In general, regulatory concerns relate to the validity and robustness of the conclusions to be made with respect to a statement on the drug’s efficacy and a thorough benefit risk assessment. In statistical terms, proper Type I error control and unbiased effect estimates (or at least minimization of bias) are of major concern for the primary efficacy endpoint(s), whereas the potential lack of mature data in trials with interim decision relate to the totality of the data package. In addition to proper statistical methods to control Type I error and bias in estimation, issues with the integrity of the trial due to change in trial conduct following an interim evaluation (and leading to inconsistency between stages) play a major role in the assessment of the trial’s validity.

Referring to the specific pandemic setting, the request for rapid decisions, investigation of multiple treatments, and potentially changing controls could complicate the multiplicity issues but the assessment of the trial’s validity remains an overriding regulatory principle. In case the high medical need for effective drugs in a pandemic situation leads to a different appraisal of Type I error rate control to make new treatments available as fast as possible. It remains paramount to understand the trial’s operating characteristics to ensure a fully informed regulatory decision making.

In the specific setting of the COVID-19 pandemic with a number of highly relevant risk factors, high heterogeneity with respect to the severity of the disease and the onset of treatment, a good characterization of the population that would profit from treatment is crucial. Obviously, an extensive search for the optimal subgroup and subsequent confirmation may not be compatible with the need for rapid development. Regulatory principles of subgroup selection are given in the EMA guideline on the investigation of subgroups in confirmatory clinical trials (European Medicines Agency 2019), to be applied in case a positive effect has been established in the overall population but plausible heterogeneity with respect to efficacy and severe side effects asks for a refinement of the population.

6. Conclusions and Discussion

The examples provided in Section 2 give an indication of the range of adaptive design approaches available, and show how these methods have been embraced to advance research even within the challenging setting of a pandemic. In all cases, the objectives of the investigators were to choose an approach that would yield scientifically rigorous results in as rapid a timeframe as possible. The adaptive design framework provides sufficient flexibility to enable trials of COVID-19 patients to be embedded in ongoing trials, while MAMS and platform trial approaches enable rapid evaluation of a number of potential treatments, perhaps repurposed from other disease areas. Bayesian or frequentist methods are available, and in each case can, provide Type I error rate control, at least approximately, required in a confirmatory clinical trial. It is also important that trials are adequately powered, as control of the Type II error rate is particularly relevant in the COVID-19 setting in which there are no existing treatment options.

Although there can be no doubt that clinical research in the setting of a pandemic raises a number of unique challenges, the need for the evaluation of potential treatments in scientifically rigorous randomized controlled clinical trials is as great as in any other area of clinical research.

Whilst it is always desirable to conduct clinical trials of new medical interventions in a timely fashion, faced with a pandemic for which no vaccines or treatments are available, this need is particularly acute, both because of the urgent need for treatments and because of the limited time-window in which large-scale research may be possible in a particular location, or even globally.

Although trials that are unable to fully enroll in time to inform a current outbreak may provide crucial data for future outbreaks or may be analyzed in the context of a meta-analysis, the need for clinical trials that can yield a conclusion, either positive or negative, as quickly as possible, makes adaptive design methods particularly attractive, especially if they are flexible enough to allow modifications to a trial in light of emerging clinical knowledge and results from other ongoing or completed clinical trials. It should be possible to suspend recruitment to a trial during times when the disease is under control and to resume if it re-emerges (Dean et al. Citation2020). The use of adaptive platform designs, in particular for clinical trials in COVID-19, has been proposed by a number of authors (Baucher and Fontanarosa Citation2020) and, as illustrated by the examples given in Section 2, a range of sequential and adaptive design methods are being employed in ongoing clinical trials. Although not a focus of this article, adaptive design methods also provide valuable tools to allow for modification of trials in other diseases necessitated by the COVID-19 pandemic (Kunz et al. Citation2020).

To yield timely results, it is clear that treatment trials should start as soon as possible after an epidemic has begun. The time limiting factor should be identification of candidate treatments, and not the development of a statistical design. In the current COVID-19 pandemic, although adaptive designs were used for some trials, as indicated by the examples above, others which could have benefited from such an approach will have been planned with a fixed sample design because of the pressure to start trials rapidly. Hence, research on trial designs that are most suitable for the purposes of a pandemic should become a research topic that goes beyond the actual case to be better prepared for future pandemics.

A suitable design, or small suite of designs, should be available on the shelf ready for use at any time. Debates about trial design, including whether “best available care” can vary amongst centers and whether Bayesian or frequentist approaches are appropriate should be settled between pandemics, and not reopened when they strike. It might be supposed that such a generic approach is impossible and that the design cannot be created without details of the natural history of the disease or the specification of candidate treatments. However, in almost all cases the primary patient response will be status, a certain number of D days after randomization, classified as dead/severely ill/moderately ill/recovered. The magnitude of differences between treatments can be conveniently assessed in terms of odds-ratios, and an odds-ratio of 2 is almost always clinically important. A potential generic design has been suggested by Whitehead and Horby (Citation2017) that might be a starting point in the search for a suitable vehicle. Perhaps the COVID-19 experience will motivate the clinical trials community to devise and agree upon such a generic design once the current pandemic subsides. It should accommodate the evaluation of multiple treatments compared with best available care, with treatments entering the study as they are devised and are approved for experimental use, and leaving as they are demonstrated to be inferior to others. The design should be adaptive and capable of being used across many sites, preferably internationally.

Even if suitable trial designs are available, the planning and conduct of clinical trials remains difficult. This is true for any trial being conducted during a pandemic, when healthcare resources are overstretched and the possibility of collecting data from face-to-face assessments may be limited (Anker et al. 2020). However, in trials focusing on the pandemic itself, there are additional challenges in that the number of patients likely to be affected, as well as the duration of the pandemic, is unknown and may vary considerably across the globe. In addition, pressures on healthcare systems mean that real-time reporting of trial data is often difficult, reducing the information available at planned interim analyses. Given the importance of solid evidence on the effectiveness of treatments, however, we believe that every effort should be made to ensure that logistical challenges in data collection and transfer do not undermine otherwise high quality trials. For rigorous and quick progress in evaluating treatments, there is a need for scientific collaboration, and the necessary political cooperation to enable and facilitate this. A multi-center trial can recruit patients as quickly as possible by recruiting internationally, or from different countries at different times as the pandemic progresses. In a setting where scientific knowledge is rapidly changing and research studies are being set up quickly, data sharing among global scientific communities is key in allowing meta-analysis methods, such as those described in Section 4.5, to be used. Consequently, results from a number of studies can be combined to provide robust evidence of treatment effectiveness, thus preventing wastage of resources arising from the conduct of multiple similar trials, each of which are individually too small to yield definitive results.

Research Trainees Coordinating Centre;

Acknowledgments

We are grateful to an associate editor and three anonymous referees for their helpful comments that have improved the article.

Disclosure Statement

Martin Posch reports grants from Novartis Pharma AG, grants from Mediconomics GmbH, grants from Merck KGaA, grants from Abbott Laboratories, grants from Almirall, outside the submitted work. He is also a member of the IMI Project Consortium EU-PEARL.

Funding

This article was initiated by the Adaptive Designs and Multiple Testing Procedures Joint Working Group of the Austro-Swiss (ROeS) and the German (IBS-DR) Regions of the International Biometric Society, chaired by Rene Schmidt and Lisa Hampson, and with support from Werner Brannath and Andreas Faldum. J. Wason received funding from UK Medical Research Council (MC_UU_00002/6 and MR/N028171/1). T. Jaki received funding from UK Medical Research Council (MC_UU_00002/14). This report is independent research arising in part from Prof Jaki’s Senior Research Fellowship (NIHR-SRF-2015-08-001) supported by the National Institute for Health Research. The views expressed in this publication are those of the authors and not necessarily those of the NHS, the National Institute for Health Research or the Department of Health and Social Care (DHCS), or the Federal Institute for Drugs and Medical Devices (BfArM). Martin Posch and Franz Koenig (Medical University of Vienna) are members of the EU Patient-centric clinical trial platform (EU-PEARL). EU-PEARL has received funding from the Innovative Medicines Initiative 2 Joint Undertaking under grant agreement no. 853966. This Joint Undertaking receives support from the European Union’s Horizon 2020 Research and Innovation Programme and EFPIA.

References

- Anker, S. D., Butler, J., Khan, M. S., Abraham, W. T., Bauersachs, J., Bocchi, E., Bozkurt, B., Braunwald, E., Chopra, V. K., Cleland, J. G., Ezekowitz, J., Filippatos, G., Friede, T., Hernandez, A. F., Lam, C. S. P., Lindenfeld, J., McMurray, J. J. V., Mehra, M., Metra, M., Packer, M., Pieske, B., Pocock, S. J., Ponikowski, P., Rosano, G. M. C., Teerlink, J. R., Tsutsui, H., Van Veldhuisen, D. J., Verma, S., Voors, A. A., Wittes, J., Zannad, F., Zhang, J., Seferovic, P., and Coats, A. J. S. (2020), “Conducting Clinical Trials in Heart Failure During (and After) the COVID-19 Pandemic: An Expert Consensus Position Paper From the Heart Failure Association (HFA) of the European Society of Cardiology (ESC),” European Heart Journal, 41, 2109–2117. DOI: 10.1093/eurheartj/ehaa461.

- Baucher, H., and Fontanarosa, P. B. (2020), “Randomized Clinical Trials and COVID-19: Managing Expectations,” Journal of the American Medical Association, DOI: 10.1001/jama.2020.8115.

- Bauer, P., Bretz, F., Dragalin, V., König, F., and Wassmer, G. (2016), “Twenty-Five Years of Confirmatory Adaptive Designs: Opportunities and Pitfalls,” Statistics in Medicine, 35, 325–347.

- Bauer, P., and Kieser, M. (1999), “Combining Different Phases in the Development of Medical Treatments Within a Single Trial,” Statistics in Medicine, 18, 1833–1848. DOI: 10.1002/(SICI)1097-0258(19990730)18:14 < 1833::AID-SIM221 > 3.0.CO;2-3.

- Bauer, P., Koenig, F., Brannath, W., and Posch, M. (2010), “Selection and Bias—Two Hostile Brothers,” Statistics in Medicine, 29, 1–13.

- Bauer, P., and Köhne, K. (1994), “Evaluation of Experiments With Adaptive Interim Analyses,” Biometrics, 50:1029–1041, Corrigenda (1996) 52, 380. DOI: 10.2307/2533441.

- Beigel, J. H., Tomashek, K. M., Dodd, L. E., Mehta, A. K., Zingman, B. S., Kalil, A. C., Hohmann, E., Chu, H. Y., Luetkemeyer, A., Kline, S., de Castilla, D. L., Finberg, R. W., Dierberg, K., Tapson, V., Hsieh, L., Patterson, T. F., Paredes, R., Sweeney, D. A., Short, W. R., Touloumi, G., Lye, D. C., Ohmagari, N., Oh, M., Ruiz-Palacios, G. M., Benfield, T., Fätkenheuer, G., Kortepeter, M. G., Atmar, R. L., Creech, C. B., Lundgren, J., Babiker, A. G., Pett, S., Neaton, J. D., Burgess, T. H., Bonnett, T., Green, M., Makowski, M., Osinusi, A., Nayak, S., and Lane, H. C. (2020), “Remdesivir for the Treatment of Covid-19—Preliminary Report,” New England Journal of Medicine, DOI: 10.1056/NEJMoa2007764..

- Bennett, M., and Mander, A. P. (2020), “Designs for Adding a Treatment Arm to an Ongoing Clinical Trial,” Trials, 21, 251, DOI: 10.1186/s13063-020-4073-1.

- Berry, S. M., Carlin, B. P., Lee, J. J., and Müller, P. M. (2010), Bayesian Adaptive Methods for Clinical Trials, Boca Raton, FL: CRC Press.

- ———— (2011), Bayesian Adaptive Methods for Clinical Trials, Boca Raton, FL: CRC Press.

- Bowden, J., and Glimm, E. (2008), “Unbiased Estimation of Selected Treatment Means in Two-Stage Trials,” Biometrical Journal, 50, 515–527.

- Brannath, W., Zuber, E., Branson, M., Bretz, F., Gallo, P., Posch, M., and Racine-Poon, A. (2009), “Confirmatory Adaptive Designs With Bayesian Decision Tools for a Targeted Therapy in Oncology,” Statistics in Medicine, 28, 1445–1463.

- Bratton, D. J., Choodari-Oskooei, B., and Royston, P. (2015), “A Menu-Driven Facility for Sample-Size Calculation in Multiarm, Multistage Randomized Controlled Trials With Time-to-Event Outcomes: Update,” Stata Journal, 15, 350–368, DOI: 10.1177/1536867x1501500202.

- Bretz, F., Koenig, F., Brannath, W.,Glimm, E., and Posch, M. (2009), “Adaptive Designs for Confirmatory Clinical Trials,” Statistics in Medicine, 28, 1181–1217, DOI: 10.1002/sim.3538.

- Bretz, F., Pinheiro, J. C., and Branson, M. (2005), “Combining Multiple Comparisons and Modeling Techniques in Dose-Response Studies,” Biometrics, 61, 738–748.

- Brückner, M., Titman, A., and Jaki, T. (2017), “Estimation in Multi-Arm Two-Stage Trials With Treatment Selection and Time-to-Event Endpoint,” Statistics in Medicine, 36, 3137–3153.

- Carreras, M., and Brannath, W. (2013), “Shrinkage Estimation in Two-Stage Adaptive Designs With Mid-Trial Treatment Selection,” Statistics in Medicine, 32, 1677–1690.

- Chiu, Y. D., Koenig, F., Posch, M., and Jaki, T. (2018), “Design and Estimation in Clinical Trials With Subpopulation Selection,” Statistics in Medicine, 37, 4335–4352.

- Collignon, O., Gartner, C., Haidich, A. B., Hemmings, R. J., Hofner, B., Pétavy, F., Posch, M., Rantell, K., Roes, K., and Schiel, A. (2020), “Current Statistical Considerations and Regulatory Perspectives on the Planning of Confirmatory Basket, Umbrella and Platform Trials,” Clinical Pharmacology & Therapeutics. DOI: 10.1002/cpt.1804.

- Collignon, O., Koenig, F., Koch, A., Hemmings, R. J., Pétavy, F., Saint-Raymond, A., Papaluca-Amati, M., and Posch, M. (2018), “Adaptive Designs in Clinical Trials: From Scientific Advice to Marketing Authorisation to the European Medicine Agency,” Trials, 19, 642.

- Cooper, B. S., Boni, M. F., Pan-ngum, W., Day, N. P., Horby, P. W., Olliaro, P., Lang, T., White, N. J., White, L. J., and Whitehead, J. (2015), “Evaluating Clinical Trial Designs for Investigational Treatments of Ebola Virus Disease,” PLoS Medicine. 12. DOI: 10.1371/journal.pmed.1001815.

- Cui, L., Hung, H. M. J., and Wang, S.-J. (1999), “Modification of Sample Size in Group Sequential Clinical Trials,” Biometrics, 55, 853–857.

- Dean, N. E., Gsell, P. S., Brookmeyer, R., Crawford, F. W., Donnelly, C. A., Ellenberg, S. S., Fleming, T. R., Halloran, M. E., Horby, P., Jaki, T., Krause, P. R., Longini, I. M., Mulangu, S., Muyembe-Tamfum, J.-J., Nason, M. C., Smith, P. G., Wang, R., Henao-Restrepo, A. M., and De Gruttola, V. (2020), “Creating a Framework for Conducting Randomized Clinical Trials During Disease Outbreaks,” New England Journal of Medicine, DOI: 10.1056/NEJMsb1905390.

- DerSimonian, R., and Laird, N. (1986), “Meta-Analysis in Clinical Trials,” Controlled Clinical Trials, 7, 177–188. DOI: 10.1016/0197-2456(86)90046-2.

- Dimairo, M., Pallmann, P., Wason, J., Todd, S., Jaki, T., Julious, S. A., Mander, A. P., Weir, C. J., Koenig, F., Walton, M. K., Nicholl, J. P., Coates, E., Biggs, K., Hamasaki, T., Proschan, M. A., Scott, J. A., Ando, Y., Hind, D., and Altman, D. G. (2020), “The Adaptive Designs CONSORT Extension (ACE) Statement: A Checklist With Explanation and Elaboration Guideline for Reporting Randomized Trials That Use an Adaptive Design,” BMJ, 369, m115.

- Dodd, L. E., Follmann, D., Proschan, M., Wang, J., Malvy, D., van Griensven, J., Ciglenecki, I., Horby, P. W., Ansumana, R., Jiang, J. F., and Davey, R. T. (2019), “A Meta-Analysis of Clinical Studies Conducted During the West Africa Ebola Virus Disease Outbreak Confirms the Need for Randomized Control Groups,” Science Translational Medicine, 11, eaaw1049. DOI: 10.1126/scitranslmed.aaw1049.

- Dodd, L. E., Follmann, D., Wang, J., Koenig, F., Korn, L. L., Schoergenhofer, C., Proschan, M., Hunsberger, S., Bonnett, T., Makowski, M., Belhadi, D., Wang, Y., Cao, B., Mentré, F., and Jaki, T. (2020), “Endpoints for Randomized Controlled Clinical Trials for COVID-19 Treatments,” Clinical Trials.

- Dodd, L. E., Proschan, M. A., Neuhaus, J., Koopmeiners, J. S., Neaton, J., Beigel, J. D., Barrett, K., Lane, H. C., and Davey, R. T., Jr. (2016), “Design of a Randomized Controlled Trial for Ebola Virus Disease Medical Countermeasures: PREVAIL II, the Ebola MCM Study,” The Journal of Infectious Diseases, 213, 1906–1913. DOI: 10.1093/infdis/jiw061.

- Elsäßer, A., Regnstrom, J., Vetter, T., Koenig, F., Hemmings, R. J., Greco, M., Papaluca-Amati, M., and Posch, M. (2014), “Adaptive Clinical Trial Designs for European Marketing Authorization: A Survey of Scientific Advice Letters From the European Medicines Agency,” Trials, 15, 383. DOI: 10.1186/1745-6215-15-383.

- European Medicines Agency (1998), “ICH E9: Statistical Principles for Clinical Trials.”

- ——— (2007), “Reflection Paper on Methodological Issues in Confirmatory Clinical Trials Planned With an Adaptive Design.”

- ——— (2019), “Guideline on the Investigation of Subgroups in Confirmatory Clinical Trials.”

- Food and Drug Administration (2006), “Guidance for Clinical Trial Sponsors: Establishment and Operation of Clinical Trial Data Monitoring.”

- ——— (2019), “Guidance for industry: Adaptive Design Clinical Trials for Drugs and Biologics.”

- ——— (2020), “COVID-19: Developing Drugs and Biologic Products for Treatment or Prevention. Guidance for Industry.”

- Friede, T., and Kieser, M. (2006), “Sample Size Recalculation in Internal Pilot Study Designs: A Review,” Biometrical Journal, 48, 537–555. DOI: 10.1002/bimj.200510238.

- Friede, T., Röver, C., Wandel, S., and Neuenschwander, B. (2017), “Meta-analysis of Few Small Studies in Orphan Diseases,” Research Synthesis Methods, 8, 79–91. DOI: 10.1002/jrsm.1217.

- Friede, T., and Stallard, N. (2008), “A Comparison of Methods for Adaptive Treatment Selection,” Biometrical Journal, 50, 767–781, DOI: 10.1002/bimj.200710453.

- Friede, T., Stallard, N., and Parsons, N. (2020), “Adaptive Seamless Clinical Trials Using Early Outcomes for Treatment or Subgroup Selection: Methods, Simulation Model and Their Implementation in R,” Biometrical Journal, DOI: 10.1002/bimj.201900020.

- Graf, A. C., Magirr, D., Dmitrienko, A., and Posch, M. (2020), “Optimized Multiple Testing Procedures for Nested Sub-Populations Based on a Continuous Biomarker,” Statistical Methods in Medical Research, DOI: 10.1177/0962280220913071.

- Grasselli, G., Zangrillo, A., Zanella, A., Antonelli, M., Cabrini, L., Castelli, A., Cereda, D., Coluccello, A., Foti, G., Fumagalli, R., Iotti, G., Latronico, N., Lorini, L., Merler, S., Natalini, G., Piatti, A., Ranieri, M. V., Scandroglio, A. M., Storti, E., Cecconi, M., and Pesenti, A. (2020), “Baseline Characteristics and Outcomes of 1591 Patients Infected With SARS-CoV-2 Admitted to ICUs of the Lombardy Region, Italy,” Journal of the American Medical Association, 323, 1574–1581.