ABSTRACT

Optimization problems are becoming increasingly complicated and require new, efficient optimization techniques to solve them. Many bio-inspired meta-heuristic algorithms have emerged in the last decade to solve these complex problems; however, most of them can become trapped in local optima and cannot effectively solve all types of optimization problems. Hence, researchers are still trying to develop new and better optimization algorithms. This paper introduces a novel biologically based optimization algorithm called circulatory system-based optimization (CSBO). CSBO is modeled on the function of the body's blood vessels with two distinct circuits, i.e. the pulmonary and systemic circuits. The proposed CSBO algorithm is tested on a wide variety of complex real-world functions and compared with standard meta-heuristic algorithms. The results indicate that the CSBO algorithm successfully achieves the optimal solutions and avoids local optima. Note that the source code of the CSBO algorithm is publicly available at http://www.optim-app.com/projects/csbo.

1. Introduction

Optimizing the design process is one of the most critical concerns of engineers and inventors. A typical design can be optimized if its parameters are selected appropriately. Optimization is a mathematical tool that selects the best decision from the available set of possible solutions to achieve the desired goal. After the problem variables are defined, the goal is expressed as a function of these variables, called the objective function. Physical conditions are then expressed as constraints on the problem. Finally, the optimal solution is obtained by solving the resulting model with optimization methods (Radosavljević, Citation2018).

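Generally, an optimization problem, whether it is a minimization or a maximization problem, can be described as follows (Radosavljević, Citation2018):

$$\min_{X} \ (\text{or } \max_{X}) \ f(X), \qquad X = [x_1, x_2, \ldots, x_D] \tag{1}$$

Subject to:

$$g_i(X) \le 0, \qquad i = 1, 2, \ldots, n \tag{2}$$

$$h_j(X) = 0, \qquad j = 1, 2, \ldots, m \tag{3}$$

$$x_d^{\min} \le x_d \le x_d^{\max}, \qquad d = 1, 2, \ldots, D \tag{4}$$

In the above equations, f(X) represents the objective function of a typical optimization problem, and X is the vector of variables with dimension D, i.e. the algorithm inputs. The vectors g and h contain the inequality and equality constraints of the problem, respectively, n and m are the numbers of inequality and equality constraints, and x_d^min and x_d^max are the lower and upper bounds of the dth variable.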
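To make the formulation concrete, the following Python sketch checks whether a candidate vector X satisfies inequality constraints written as g_i(X) ≤ 0, equality constraints h_j(X) = 0, and box bounds on each variable. The ≤ 0 sign convention, the equality tolerance `tol`, and the toy constraints `g1` and `h1` are assumptions chosen for illustration; they are not taken from this paper.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]


def is_feasible(
    x: Vector,
    inequalities: Sequence[Constraint],
    equalities: Sequence[Constraint],
    lower: Vector,
    upper: Vector,
    tol: float = 1e-6,  # assumed tolerance for treating h_j(x) as zero
) -> bool:
    """True if x respects the bounds, every g_i(x) <= 0 and every |h_j(x)| <= tol."""
    in_bounds = all(lo <= xi <= hi for xi, lo, hi in zip(x, lower, upper))
    inequalities_ok = all(g(x) <= 0.0 for g in inequalities)
    equalities_ok = all(abs(h(x)) <= tol for h in equalities)
    return in_bounds and inequalities_ok and equalities_ok


# Hypothetical toy problem with D = 2, n = 1 inequality and m = 1 equality constraint.
def g1(x: Vector) -> float:
    return x[0] + x[1] - 1.0  # encodes x1 + x2 <= 1


def h1(x: Vector) -> float:
    return x[0] - x[1]  # encodes x1 == x2


print(is_feasible([0.25, 0.25], [g1], [h1], [0.0, 0.0], [1.0, 1.0]))  # True
print(is_feasible([0.75, 0.75], [g1], [h1], [0.0, 0.0], [1.0, 1.0]))  # False
```

Meta-heuristics such as those discussed below usually need some way to handle infeasible candidates (for example, penalties or repair); the check above only classifies a candidate and does not prescribe how CSBO treats constraints.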

From different application perspectives, optimization problems can be divided into the following categories:

Static and dynamic: If the objective function varies with time, the optimization problem is dynamic; otherwise, it is static.

Constrained and unconstrained: A constrained optimization problem involves variables restricted to a specific set or by constraints, whereas an unconstrained optimization problem involves variables that are not restricted.

Linear or non-linear: In a linear optimization problem, the objective function and constraints are linear functions of the design variables; otherwise, the problem is non-linear.

Discrete and continuous: A discrete optimization problem has some control variables that take only discrete values, whereas in a continuous problem the variables take continuous values.

Random and non-random: Depending on whether the variables of an optimization problem are real, binary, or random values, the problem is classified as real-valued, binary, or random.

Single and multi-objective: A problem can have one or several objective functions; in the multi-objective case, each objective may require the setting of its own specific control parameters.

Evolutionary algorithms (EAs): these algorithms are inspired by natural evolutionary processes or phenomena. Examples include the artificial flora algorithm (AFA), which uses the behavior of flora (L. Cheng et al., Citation2018); plant intelligence (Akyol & Alatas, Citation2017); the virulence optimization algorithm (VOA), an optimization strategy inspired by the process through which viruses attack bodily cells (Jaderyan & Khotanlou, Citation2016); and evolution strategies (ESs), a sub-class of nature-inspired direct search methods (Rechenberg, Citation1989). Genetic algorithms (GAs) are search heuristics inspired by Charles Darwin's theory of natural evolution (Holland, Citation1992). The unique feature of the opposition-based high dimensional optimization algorithm (OHDA) is its angular movement in response to very few samples, which enables a successful search in high dimensions (GhaemiDizaji et al., Citation2020). An artificial infectious disease algorithm based on the SEIQR epidemic model (G. Huang, Citation2016) relates the spread of an infectious disease to an optimization algorithm. The mouth brooding fish algorithm (Jahani & Chizari, Citation2018) models the symbiotic interaction tactics that organisms use to live and reproduce in an environment, and finds the optimal answer by using the movement, dispersion, and protection patterns of mouth brooding fish. Other examples are colonial competitive differential evolution (CCDE), which is based on mathematical modeling of socio-political evolution (Ghasemi et al., Citation2016); the tree growth algorithm (TGA), which replicates the competition for food and light among trees (Cheraghalipour et al., Citation2018); invasive tumor growth (ITGA), modeled on abnormal cells detaching from the tumor bulk because of a decrease in, or complete lack of, intercellular adhesion molecules (Tang et al., Citation2015); the slime mould algorithm (SMA), inspired by the foraging and diffusion behavior of slime mould (Li et al., Citation2020); and invasive weed optimization (IWO), which mimics the behavior of weed colonies (Mehrabian & Lucas, Citation2006).

Swarm Intelligence Algorithms (SIAs): simulating natural patterns and behaviors is one of the primary goals of SIAs. Examples include the fitness dependent optimizer (FDO), which simulates how a bee swarm locates better colonies (Abdullah & Ahmed, Citation2019); the lion optimization algorithm (LOA), inspired by the unique lifestyle and social behavior of lions (Yazdani & Jolai, Citation2016); monarch butterfly optimization (MBO), inspired by an idealized and simplified model of the migration of monarch butterflies (Wang et al., Citation2019); the yellow saddle goatfish algorithm, an optimization model motivated by the hunting behavior of yellow saddle goatfish involving chaser and blocker fish (Zaldívar et al., Citation2018); the Aquila optimizer (AO), inspired by the Aquila's strategies for catching prey in nature (Abualigah et al., Citation2021); the sailfish optimizer (SO), inspired by a group of hunting sailfish (Shadravan et al., Citation2019); the moth search algorithm (MSA), motivated by the Lévy flights and phototaxis of moths (Wang, Citation2018); ant colony optimization (ACO) (Dorigo & di Caro, Citation1999); the kidney-inspired algorithm (KA), a novel population-based algorithm informed by the mechanism of the human kidney (Jaddi et al., Citation2017); the artificial hummingbird algorithm (AHA), which mimics the intelligent foraging behaviors and flight skills of hummingbirds (Zhao et al., Citation2022); Harris hawks optimization (HHO), a nature-inspired approach (Heidari et al., Citation2019); artificial ecosystem-based optimization (AEO), a population-based optimizer that mimics three unique traits of living organisms (Zhao et al., Citation2020); the chameleon swarm algorithm (CSA), which mimics the dynamic skills of chameleons when hunting and navigating for food sources (Braik, Citation2021); the crow search algorithm (CSA), motivated by the smart social tendency of crows to hide food (Askarzadeh, Citation2016); the cooperation search algorithm (CSA), which takes cues from modern business teamwork (Feng et al., Citation2021); the grasshopper optimization algorithm (GOA), based on the natural foraging and swarming activity of grasshoppers (Saremi et al., Citation2017); the COOT algorithm, motivated by the dynamic behavior of a population of birds (Naruei & Keynia, Citation2021); the black widow optimization algorithm (BWOA), prompted by the mating rituals of black widow spiders (Hayyolalam & Kazem, Citation2020); the JAYA algorithm, a gradient-free optimization technique (R. Rao, Citation2016); the tunicate swarm algorithm (TSA), which mimics the jet propulsion and swarming behavior of tunicates during navigation and foraging (Kaur et al., Citation2020); the chimp optimization algorithm (COA), influenced by the individuality and sexual motivation of chimps (Khishe & Mosavi, Citation2020); the pity beetle algorithm (PBA), based on the aggregation behavior of beetles (Kallioras et al., Citation2018); the emperor penguin optimizer (EPO), which resembles the huddling behavior of emperor penguins (Dhiman & Kumar, Citation2018); ludo game-based meta-heuristics (P. R. Singh et al., Citation2019), which use two or four players imitating the game of ludo to update distinct swarm intelligence characteristics; the red fox optimization algorithm (RFO), which uses a mathematical model of red fox hunting, population development, food searching, and habits (Połap & Woźniak, Citation2021); galactic swarm optimization (GSO), based on the motion of stars (Muthiah-Nakarajan & Noel, Citation2016); the parasitism–predation algorithm (PPA), proposed to tackle slow convergence and the curse of dimensionality in large-scale data (A.-A. A. Mohamed et al., Citation2020); the earthworm optimization algorithm (EWA), inspired by the reproduction behavior of earthworms (Wang et al., Citation2018); the barnacles mating optimizer (BMO), inspired by the mating habits of barnacles in nature (Sulaiman et al., Citation2020); the squirrel search algorithm (SSA), a biologically inspired optimization algorithm (Jain et al., Citation2019); the colony predation algorithm (CPA), based on the cooperative predation behavior of animals (Tu et al., Citation2021); the wild geese algorithm (WGA), based on the natural life and death of wild geese (Ghasemi et al., Citation2021); hunger games search (HGS), which mimics the hunger-driven activities and behavioral choices of animals (Yang et al., Citation2021); the bald eagle search optimization algorithm (BESO), based on the hunting tactics and social behavior of bald eagles when searching for fish (Alsattar et al., Citation2020); phasor particle swarm optimization (PPSO), based on a phasor-theoretic model of the particle design variables with a phase angle (Ghasemi et al., Citation2019); elephant herding optimization (EHO), which mimics the herding behavior of elephants (Wang et al., Citation2015); and the butterfly optimization algorithm (BOA), which imitates the natural foraging and mating activities of butterflies (Sharma et al., Citation2021).

Physics-Inspired algorithms (PIAs): examples include the Yin-Yang-pair optimization (YYO) algorithm, inspired by a physical event or specific tool (Punnathanam & Kotecha, Citation2016); the CFA optimizer, based on Franklin's and Coulomb's laws (Ghasemi et al., Citation2018); the gradient-based optimizer (GBO), which uses Newton's method to explore the search domain with a set of vectors and two main operators (Ahmadianfar et al., Citation2020); electromagnetic field optimization (EFO), based on the behavior of electromagnets with different polarities and on a natural ratio called the golden ratio (Abedinpourshotorban et al., Citation2016); weIghted meaN oF vectOrs (INFO), which uses a robust structure to update the positions of vectors (Ahmadianfar et al., Citation2022); the wind driven optimization (WDO) algorithm, which updates the velocity and position of wind-controlled air parcels according to the physical equations that govern air motion (Bayraktar et al., Citation2013); Lévy flight distribution (LFD) (Houssein et al., Citation2020); the equilibrium optimizer (EO), inspired by control volume mass balance models (Faramarzi et al., Citation2020); simulated annealing (SA), inspired by the metalworking process of heating and cooling a material to change its physical properties (Kirkpatrick et al., Citation1983); the supernova optimizer (SO), motivated by supernova phenomena (Hudaib & Fakhouri, Citation2018); dynamic differential annealed optimization (DDAO) (Ghafil & Jármai, Citation2020); Henry gas solubility optimization (HGSO), inspired by Henry's law (Hashim et al., Citation2019); the artificial chemical reaction optimizer (ACRO), inspired by chemical reactions (Alatas, Citation2011); water evaporation optimization (WEO), which simulates the evaporation of water molecules on a solid surface with varying wettability (Kaveh & Bakhshpoori, Citation2016); rain-fall optimization, based on the behavior of raindrops (Kaboli et al., Citation2017); gases Brownian motion optimization (GBMO), motivated by the Brownian motion and turbulent rotational motion of gases (Abdechiri et al., Citation2013); atom search optimization (ASO), based on molecular dynamics (Zhao et al., Citation2019); turbulent flow of water-based optimization (TFWO), inspired by the whirlpools formed in turbulent water flow (Ghasemi et al., Citation2020); thermal exchange optimization (TEO), based on Newton's law of cooling (Kaveh & Dadras, Citation2017); heat transfer search (HTS), based on the laws of thermodynamics and heat transfer (Patel & Savsani, Citation2015); the RUNge Kutta algorithm (RUN), based on the Runge Kutta (RK) method and its mathematical foundations (Ahmadianfar et al., Citation2021); weighted superposition attraction (WSA), in which agents create a superposition that the other solution vectors follow (Baykasoğlu & Akpinar, Citation2017); and the gravitational search algorithm (GSA), based on the law of gravity and mass interactions (Rashedi et al., Citation2009).

Human/social-related Algorithms (HSAs): examples include the political optimizer (PO), which replicates the human political process (Askari et al., Citation2020); a very optimistic method (Vommi & Vemula, Citation2018) that employs two factors, luck and effort; the future search algorithm (FSA), which mimics a person's life (Elsisi, Citation2019); the volleyball premier league algorithm (VPLA), which works through the interaction and competition among volleyball teams (Moghdani & Salimifard, Citation2018); a path planning algorithm (PPA) that finds a sequence of valid configurations (Zhou et al., Citation2017); the pathfinder algorithm (PA), which tries to solve the shortest path problem of graph theory (Yapici & Cetinkaya, Citation2019); the teaching–learning-based optimization (TLBO) algorithm, which models the influence of a teacher on students (R.V. Rao et al., Citation2011); the imperialist competitive algorithm (ICA), an optimization method inspired by imperialism (Atashpaz-Gargari & Lucas, Citation2007); collective decision optimization (CDO), based on the decision-making traits of human social behavior (Q. Zhang et al., Citation2017); and the queuing search algorithm (QSA), inspired by human activities in the queuing process (J. Zhang et al., Citation2018).

On the other hand, time is a significant factor in many optimization problems. Therefore, a new, powerful, and robust algorithm that reaches an acceptable optimal solution at a reasonable speed is required. Besides, each optimization algorithm has several control parameters whose values the user must set based on experience, which is sometimes time-consuming and questionable. Therefore, a simple and robust algorithm that gives an optimal and reasonable result with fewer control parameters is required. In other words, the need for a comprehensive and robust algorithm is felt in all branches of science. This article introduces an algorithm called CSBO that aims to achieve these goals.

The CEC 2005, CEC 2014, and CEC 2017 standard test functions are used in this paper to demonstrate the effectiveness of CSBO. These standard test functions cover a wide range of function types, such as unimodal, multimodal, and hybrid functions. At each stage of the evaluation, we compare the results with several modern and standard algorithms to show the performance of the algorithm.

Briefly, the advantages of the proposed algorithm are as follows:

A new meta-heuristic algorithm inspired by the regular function of the body's circulatory system

The ability to perform effectively on a wide range of real-world functions

Competitive performance compared with modern and standard algorithms

2. Circulatory System Based Optimization (CSBO) algorithm

Modeling is one of the most important branches of engineering and a good way to study the behavior of a system (Ghasemi et al., Citation2021). Models are representations of different systems; with the help of a model, the effect of different factors on the system can be simulated. Of course, models must be able to predict the behavior of different systems and the functions of each problem under different conditions.

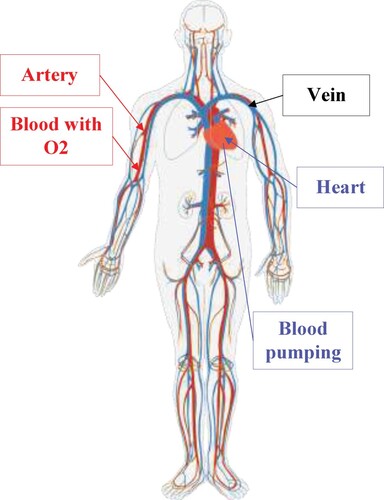

2.1. Regular circulatory system

This article presents a new, powerful optimizer based on a model of the circulatory system's function. The heart is a remarkable organ that pumps oxygen- and nutrient-rich blood through the human body to sustain life. The heart is an essential part of the cardiovascular system, also called the circulatory system, which also includes all the elastic, muscular tubes (vessels) that carry blood from the heart to the body and back to the heart.

Blood is vital for the body. In addition to carrying fresh oxygen from the lungs and nutrients to the body's tissues, it also carries the body's waste products, including carbon dioxide, away from the tissues. This circulation is necessary to sustain life and promote the health of all parts of the body.

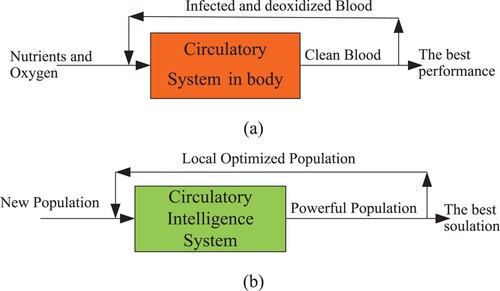

According to the simple model inspired by the regular performance of the body's circulatory system in Figure 1, the body's blood vessels are functionally divided into two distinct circuits: the pulmonary circuit and the systemic circuit. The pump for the pulmonary circuit, which circulates blood through the lungs, is the right ventricle. The left ventricle is the pump for the systemic circuit, which provides the blood supply to the body's tissue cells. Pulmonary circulation transports oxygen-poor blood from the right ventricle to the lungs, where the blood picks up oxygen, and then returns the oxygen-rich blood to the left atrium.

Figure 1. A simple inspiration model from the circulatory system for modeling CSBO (‘Pixabay.com,’ Citationn.d.).

In most cases, blood is considered a Newtonian fluid. The main variables of the circulatory system are flow, pressure, and volume. Pressure-flow modeling of the circulatory system can be examined from two perspectives, beating and non-beating; here, a model inspired by the beating perspective is considered.

Arteries and veins are considered cylindrical vessels whose walls have elastic properties. To simulate blood flow, most models use the continuity and momentum equations (Fan et al., Citation2009), known as the Navier-Stokes equations (Johnston et al., Citation2006), under the assumption of constant density and viscosity. Neglecting gravity, the equations for a Newtonian fluid can be expressed in the following general form:

$$\nabla \cdot \mathbf{u} = 0 \tag{5}$$

$$\rho \left( \frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\mathbf{u} \right) = -\nabla p + \nabla \cdot \boldsymbol{\tau} \tag{6}$$

where ρ is the fluid density, u is the velocity vector, p is the pressure, t represents time, and τ is the stress tensor.
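Under the constant-density assumption, mass conservation reduces to ∇·u = 0. For a Newtonian fluid with constant dynamic viscosity μ (a symbol introduced here for illustration and not used elsewhere in this paper), the stress tensor is commonly written as τ = μ(∇u + (∇u)ᵀ). Its divergence then simplifies as follows, which gives the familiar viscous form of the momentum equation and makes the constant-viscosity assumption concrete:

$$
\nabla\cdot\boldsymbol{\tau}
= \mu\left(\nabla^{2}\mathbf{u} + \nabla(\nabla\cdot\mathbf{u})\right)
= \mu\,\nabla^{2}\mathbf{u}
$$

$$
\rho\left(\frac{\partial\mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}\right)
= -\nabla p + \mu\,\nabla^{2}\mathbf{u}
$$

The middle step uses the identity ∇·((∇u)ᵀ) = ∇(∇·u), whose right-hand side vanishes for incompressible flow.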

The systemic circulation provides the functional blood supply to all body tissue. It carries oxygen and nutrients to the cells and picks up carbon dioxide and waste products. Systemic circulation carries oxygenated blood from the left ventricle, through the arteries, to the capillaries in the body's tissues. From the tissue capillaries, the deoxygenated blood returns through a system of veins to the right atrium of the heart. It then moves into the right ventricle, and the cycle repeats; one such cycle is equivalent to one iteration of the proposed algorithm.

In this algorithm, we model the pulmonary and systemic circuits as two separate groups with two different optimization cycles, each of which applies its own specific functions to a specific part of the population.

Here, this process of the circulatory system is equivalent to generating a stronger population and eliminating a weaker population in the optimization algorithm. The mathematical modeling of the CSBO optimization process is explained in the following sections.

2.2. Circulatory system regular performance as an intelligent systematic algorithm: CSBO

In the mathematical modeling of new meta-heuristic optimization algorithms, many hypotheses based on the inspiration of the phenomenon may be considered. In this section, we briefly explain how to model the circulatory system function as an optimizer and implement the proposed CSBO algorithm.

In the CSBO algorithm, like any other meta-heuristic optimization algorithm, an initial population is first generated with a random function within the problem range; here, this population represents the blood masses (droplets). The position of each blood droplet represents a possible solution to the optimization problem in the search space, and the circulatory system acts as an operator on this population to refine and strengthen it and to eliminate its weaker members. In other words, the solution (blood) quality in the search space (body) is improved through an iterative process based on the functionality of the body's blood circulatory system.

In the proposed algorithm, the pulmonary circulation deals with deoxygenated blood, which is equivalent to the weaker part of the population, and the systemic circulation deals with oxygenated blood, which is equivalent to the part of the population with better objective values. The ith blood mass (BMi), i.e. the ith individual of the population in CSBO, moves to a new position if that position is better; otherwise, it keeps its current position. Figure 2 shows how the evolutionary process of the blood in the circulatory system can be modeled as an optimizer system. Table 1 shows, in detail, how the elements or functions of the circulatory system are modeled in the proposed CSBO algorithm.

Table 1. The equivalent concepts of the circulatory system and CSBO algorithm.

2.3. The mathematical modeling of the CSBO algorithm

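At first, like any other meta-heuristic algorithm, the CSBO algorithm starts with an initial population of blood masses for a typical problem with D dimensions (d = 1:D). Each blood mass is generated randomly between the minimum (x_d^min) and maximum (x_d^max) values of the problem parameter ranges as follows:

$$BM_{i}^{d} = x_d^{\min} + \mathrm{rand} \times \left(x_d^{\max} - x_d^{\min}\right), \qquad i = 1, \ldots, N_{pop}, \quad d = 1, \ldots, D \tag{7}$$

where rand is a uniformly distributed random number in [0, 1].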

This initial population, as mentioned earlier, plays the same role as blood particles or masses in the body.
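A minimal NumPy sketch of this random initialization, assuming that every dimension is sampled uniformly within its bounds. The function name `init_population` and the use of NumPy's `Generator` are illustrative choices, not part of the published CSBO code.

```python
import numpy as np


def init_population(n_pop: int, lower, upper, rng: np.random.Generator) -> np.ndarray:
    """Sample n_pop blood masses uniformly inside the box [lower, upper], one per row."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # rng.random draws from [0, 1); broadcasting applies the same formula to every dimension d.
    return lower + rng.random((n_pop, lower.size)) * (upper - lower)


rng = np.random.default_rng(seed=0)
pop = init_population(45, [-100.0] * 30, [100.0] * 30, rng)
print(pop.shape)  # (45, 30)
```

Passing the generator in explicitly keeps repeated runs (e.g., the 30 runs per test function used later) reproducible when each run is given its own seed.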

2.3.1. Movement of blood mass in the veins

The ith blood mass in the veins, BMi, moves under the imposed force or pressure, always in a direction with more favorable conditions, so that the value of its objective function (the amount of force or pressure) decreases. Clogged arteries in the heart can be modeled as trapping in locally optimal solutions. As in the real body, this situation should be avoided, so that, as long as the body continues to work, the algorithm continues its optimization process effectively. This step of the circulatory cycle is modeled based on the particle positions and their objective function values as follows:

(8)

(9)

In fact,

determines the direction of movement of the ith blood mass (BMi) in the arteries. pi is a value between 0 and 1 that depends on the problem dimensions; it determines the amount of displacement toward a better value in each circulation cycle.

2.3.2. Population or blood mass flow in pulmonary circulation

As mentioned earlier, the pulmonary circulation deals with deoxygenated blood, which is equivalent to the weaker population in optimization. At each iteration of CSBO, the population is sorted, and the NR weakest members enter the pulmonary circulation and are directed to the lungs to gain oxygen:

(10)

In Eq. (10), randn denotes a normally distributed random number, it indicates the current iteration of the algorithm, randc denotes a random vector drawn from the Cauchy probability distribution, and D is the dimension of the optimization problem. The pulmonary circulation also changes the

for this population as follows:

(11)
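Eq. (10) itself is not reproduced here, so the sketch below only shows how the random ingredients it names could be drawn with NumPy: a standard normal number (randn) and a D-dimensional vector of standard Cauchy samples (randc). Treating randn as a scalar and randc as a length-D vector follows the wording above; the seed, sample count, and the threshold of 10 are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
D = 30

randn = rng.standard_normal()        # scalar standard normal number
randc = rng.standard_cauchy(size=D)  # D-dimensional standard Cauchy vector

# Heavy tails: large Cauchy jumps are far more frequent than large normal ones.
# This is a common general motivation for Cauchy perturbations in meta-heuristics,
# since occasional long jumps can help weak members leave a local basin.
samples = 100_000
big_normal = np.mean(np.abs(rng.standard_normal(samples)) > 10.0)
big_cauchy = np.mean(np.abs(rng.standard_cauchy(samples)) > 10.0)
print(randc.shape, big_normal, big_cauchy)
```

For a standard Cauchy variable, P(|X| > 10) = 1 − (2/π)·arctan(10) ≈ 0.064, whereas for a standard normal variable this probability is negligible, so `big_cauchy` should land near 0.06 while `big_normal` is essentially zero.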

2.3.3. Population or blood mass flow in systemic circulation

As mentioned, the NR weakest members of the sorted population enter the pulmonary circulation. The rest of the population (NL = Npop - NR), which have better fitness values, enter the systemic circulation and receive new positions as they circulate through the body, as modeled below:

(12)

The systemic circulation also corrects the

for this group of population as follows:

(13)

where

and

are the worst and best cost function values obtained up to the current iteration.
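The bookkeeping that Sections 2.3.2 and 2.3.3 rely on can be sketched as follows: sorting the population by cost, splitting off the NR weakest members for the pulmonary circulation, and keeping the worst and best costs seen so far. Minimization is assumed, the sphere function stands in for a real objective, and reading "obtained until the current iteration" as running extrema over all evaluated costs is our interpretation, not something this section states explicitly.

```python
import math

import numpy as np


def split_population(costs: np.ndarray, n_r: int):
    """Return (systemic, pulmonary) index arrays: the best NL = Npop - NR, then the NR weakest."""
    order = np.argsort(costs)  # ascending cost: best member first (minimization)
    n_l = costs.size - n_r
    return order[:n_l], order[n_l:]


class RunningExtrema:
    """Best (lowest) and worst (highest) cost seen up to the current iteration."""

    def __init__(self) -> None:
        self.best = math.inf
        self.worst = -math.inf

    def update(self, costs) -> None:
        self.best = min(self.best, float(np.min(costs)))
        self.worst = max(self.worst, float(np.max(costs)))


rng = np.random.default_rng(seed=2)
n_pop = 45
n_r = n_pop // 3  # NR = Npop / 3 = 15, the setting chosen later for D = 30
pop = rng.uniform(-100.0, 100.0, size=(n_pop, 30))
costs = np.sum(pop**2, axis=1)  # sphere function as a stand-in objective

systemic_idx, pulmonary_idx = split_population(costs, n_r)
tracker = RunningExtrema()
tracker.update(costs)
print(systemic_idx.size, pulmonary_idx.size)  # 30 15
```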

The optimization cycle continues for the specified number of iterations. As in other meta-heuristic algorithms, each member of the population accepts a new position only if it yields a better fitness value.
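The greedy acceptance rule described above can be written in vectorized form. This sketch assumes minimization and a strict comparison, so a candidate that only ties the current cost is rejected; the function name `greedy_select` is hypothetical.

```python
import numpy as np


def greedy_select(pop, costs, candidates, cand_costs):
    """Keep each candidate only if it improves on the current member (minimization)."""
    better = cand_costs < costs                          # one boolean per member
    new_pop = np.where(better[:, None], candidates, pop)  # broadcast the mask over dimensions
    new_costs = np.where(better, cand_costs, costs)
    return new_pop, new_costs


pop = np.array([[1.0, 1.0], [2.0, 2.0]])
costs = np.array([2.0, 8.0])
candidates = np.array([[0.5, 0.5], [3.0, 3.0]])
cand_costs = np.array([0.5, 18.0])
new_pop, new_costs = greedy_select(pop, costs, candidates, cand_costs)
print(new_costs)  # [0.5 8. ]
```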

The CSBO algorithm pseudo-code is summarized in Algorithm 1.
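The sketch below mirrors only the loop structure described in Section 2.3: initialization, movement in the veins, sorting and splitting into pulmonary and systemic groups, and greedy acceptance. The three update rules inside it are hypothetical placeholders of our own and are NOT Eqs. (8) to (13) of CSBO; the published equations (or the authors' source code at the URL given in the abstract) should be substituted before the sketch is used for anything beyond illustration. The function name `csbo_like_skeleton`, the step sizes, and the 10-dimensional sphere test are likewise assumptions.

```python
import numpy as np


def sphere(x: np.ndarray) -> float:
    """Stand-in objective: the sphere function (minimization)."""
    return float(np.sum(x**2))


def csbo_like_skeleton(f, lower, upper, n_pop=45, n_r=15, iters=200, seed=0):
    """Loop structure of Section 2.3 with HYPOTHETICAL update rules instead of Eqs. (8)-(13)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    d = lower.size
    pop = lower + rng.random((n_pop, d)) * (upper - lower)  # random initialization
    costs = np.array([f(x) for x in pop])

    def accept(i: int, cand: np.ndarray) -> None:
        # Greedy rule: member i moves only if the (clipped) candidate is strictly better.
        cand = np.clip(cand, lower, upper)
        c = f(cand)
        if c < costs[i]:
            pop[i] = cand
            costs[i] = c

    for _ in range(iters):
        # 1) Movement in the veins. PLACEHOLDER: random step toward another member.
        for i in range(n_pop):
            j = rng.integers(n_pop)
            accept(i, pop[i] + rng.random() * (pop[j] - pop[i]))

        # 2) Sort and split: NR weakest -> pulmonary, NL = Npop - NR best -> systemic.
        order = np.argsort(costs)
        n_l = n_pop - n_r
        best = pop[order[0]].copy()
        for i in order[n_l:]:
            # PLACEHOLDER pulmonary rule: Cauchy perturbation of a weak member.
            accept(i, pop[i] + 0.01 * (upper - lower) * rng.standard_cauchy(d))
        for i in order[:n_l]:
            # PLACEHOLDER systemic rule: per-dimension random step toward the best member.
            accept(i, pop[i] + rng.random(d) * (best - pop[i]))

    k = int(np.argmin(costs))
    return pop[k].copy(), float(costs[k])


x_best, f_best = csbo_like_skeleton(sphere, [-100.0] * 10, [100.0] * 10)
print(f"best cost found: {f_best:.3e}")
```

In this skeleton every member is evaluated twice per iteration (once in the vein step and once in the pulmonary or systemic step), which matters when an iteration count is derived from a fixed NFE budget.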

3. The competitive study based on the standard real-world benchmark functions: CEC 2005, CEC 2014, and CEC 2017 benchmarks


3.1. CEC2005 benchmark functions

In this section, the first fourteen popular CEC 2005 test functions (Y. Wang et al., Citation2011) are used to show the performance of the proposed algorithm. These functions, which have been used in a wide variety of optimization articles in recent years, are listed in Table 2.

Table 2. Summary of the selected 14 test functions from CEC 2005 (Y. Wang et al., Citation2011) for testing CSBO for global real-parameter optimization (Fmin = 0).

3.1.1. Population size in the CSBO algorithm

In this section, five different population sizes (30, 45, 60, 75, and 90) are tested for our algorithm. All simulations were run on the same computer, with 30 runs per test function, 300,000 function evaluations (NFEs) (Y. Wang et al., Citation2011), and D = 30.
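Because the budget is fixed in function evaluations rather than iterations, the number of iterations a run can afford depends on the population size and on how many times each member is evaluated per iteration. The helper below is a hypothetical illustration; the value of `evals_per_member` for CSBO (for example, 2 if each member is evaluated once after the vein movement and once after the pulmonary or systemic update) is an assumption, since this section does not state it.

```python
def iterations_for_budget(nfe_budget: int, n_pop: int, evals_per_member: int) -> int:
    """Number of complete iterations that fit within an NFE budget."""
    return nfe_budget // (n_pop * evals_per_member)


print(iterations_for_budget(300_000, 45, 1))  # 6666
print(iterations_for_budget(300_000, 45, 2))  # 3333
print(iterations_for_budget(500_000, 60, 2))  # 4166
```

Comparing population sizes under a fixed NFE budget therefore trades population diversity against the number of iterations: a larger population leaves fewer iterations for refinement.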

The simulation results with NR = 15 are given in Table 3. The last column shows three indexes: Nb (the number of best results) is the number of test functions on which a setting obtained the best result compared with the others studied, Nw (the number of worst results) is the number of test functions on which it obtained the worst result, and Mr (the mean rank) is its average rank over all the test functions. According to Table 3, the algorithm performs well with different population sizes; for example, a population size of 45 appears to be an appropriate choice for D = 30, and we use this value in the rest of our work.

Table 3. Summary of the results for CSBO from CEC2005 with D = 30 and NR = Npop/3.
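The Nb, Nw, and Mr indexes can be computed from a table of mean errors as sketched below. Ranking each function by mean error and letting tied entries share the better rank ("competition" ranking) are assumptions, because the paper does not state its tie-breaking rule; the data in `demo` are hypothetical and not taken from Table 3.

```python
from statistics import mean


def competition_ranks(values):
    """Rank one function's mean errors: 1 = lowest error; tied values share the better rank."""
    return [1 + sum(v < x for v in values) for x in values]


def summarize(results):
    """results maps a configuration name to its list of mean errors, one per test function."""
    names = list(results)
    n_funcs = len(results[names[0]])
    stats = {name: {"Nb": 0, "Nw": 0, "ranks": []} for name in names}
    for k in range(n_funcs):
        ranks = competition_ranks([results[name][k] for name in names])
        worst = max(ranks)
        for name, r in zip(names, ranks):
            stats[name]["ranks"].append(r)
            stats[name]["Nb"] += r == 1
            stats[name]["Nw"] += r == worst and worst > 1  # no "worst" if all entries tie
    return {n: (s["Nb"], s["Nw"], float(mean(s["ranks"]))) for n, s in stats.items()}


# Hypothetical mean errors on three test functions (not values from this paper).
demo = {
    "Npop=30": [1e-3, 5.0, 2.0],
    "Npop=45": [1e-4, 4.0, 2.0],
    "Npop=60": [1e-2, 6.0, 3.0],
}
print(summarize(demo))
```

Here "Npop=45" is best on all three functions (Nb = 3, Mr = 1.0), while "Npop=30" shares first place on the third function because of the tie.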

3.1.2. Effect of NR on CSBO performance analysis

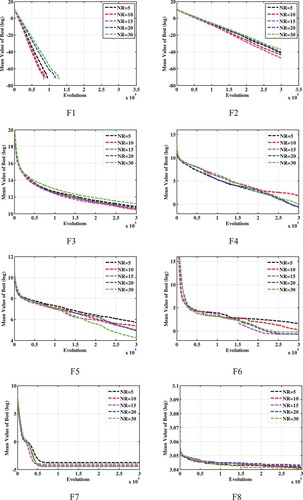

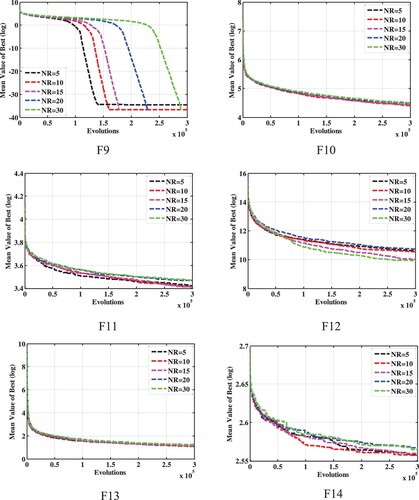

In this section, we consider different values of NR, i.e. 5, 10, 15, 20, and 30, to assess their effect on the algorithm's effectiveness. All simulations were performed with a population size of 45. The results show that, apart from NR = 5, the value of NR has only a small effect on CSBO. For F2, F3, F6, F9, F11, and F14, NR = 15 leads to better performance. However, when NR is decreased to 5, the efficiency of CSBO deteriorates quickly on most test functions (except F1 and F14). For the remaining values of NR (10, 20, and 30), CSBO performs approximately the same as with NR = 15. Therefore, according to Table 4, NR = Npop/3 (i.e. NR = 15 for Npop = 45) is an appropriate choice for our algorithm.

Table 4. Summary of the results CSBO for CEC2005 test functions for testing different NR with Npop = 45 and D = 30.

In Table 4, R denotes the rank obtained on the corresponding function relative to the other entries in the table, where 1 and 5 indicate the best and worst ranks, respectively; the same convention is used throughout this paper.

Figure 3 summarizes the CSBO convergence curves on the CEC2005 functions, based on the results given in Table 4. As these curves show, CSBO has a decent convergence speed for most functions. Although the curve for NR = 5 on F9 has the highest convergence rate, its final solution is inferior to those obtained with the other NR values. On the other hand, NR = 15 has the best convergence characteristic, and the convergence rates for NR = 10 and 30, and even for NR = 5, are also acceptable.

Figure 3. The convergence rates of CSBO for the different NR values of Table 4.

3.1.3. Comparison with other algorithms

This section compares the results obtained by the CSBO algorithm on the test functions with those of other standard algorithms reported in related articles, as shown in Table 5, with NFEs of 3.00E+05 for D = 30 and 500,000 for D = 50. The parameter settings of some of the competitor algorithms are given in Table 6. In Table 5, the plus sign (+) indicates that the other algorithm outperforms the proposed CSBO, the minus sign (−) indicates that it underperforms CSBO, and the equal sign (=) indicates similar performance.

Table 5. Summary of the results for CEC2005 test functions for different algorithms with D = 30 and NFEs = 3.00E + 05.

Table 6. The parameter setting of different algorithms.
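A minimal sketch of how the +/−/= markers could be assigned from mean errors. Comparing means with a near-equality tolerance is an assumption made for illustration; the paper does not say whether a tolerance or a statistical test (such as a rank-sum test) underlies its markers, and ASCII "-" stands in for the minus sign.

```python
import math


def compare_marker(other_mean: float, csbo_mean: float, rel_tol: float = 1e-9) -> str:
    """'+' if the other algorithm has a lower mean error than CSBO, '-' if higher, '=' if tied."""
    if math.isclose(other_mean, csbo_mean, rel_tol=rel_tol, abs_tol=1e-12):
        return "="
    return "+" if other_mean < csbo_mean else "-"


print([compare_marker(o, 1.0e-3) for o in (5.0e-4, 2.0e-3, 1.0e-3)])  # ['+', '-', '=']
```

Counting the markers per competitor then gives the usual "better/worse/similar" summary row found below such comparison tables.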

We compared our algorithm with GL-25 (global and local real-coded genetic algorithms based on parent-centric crossover operators) (Y. Wang et al., Citation2011), HRCGA (real-coded genetic algorithm) (C. Li et al., Citation2011), EPSDE (an ensemble of trial vector generation approaches and control parameters of DE) (Y. Wang et al., Citation2011), SaDE (DE with strategy adaptation) (Y. Wang et al., Citation2011), SLPSO (self-learning particle swarm optimizer) (C. Li et al., Citation2011), APSO (an adaptive PSO) (C. Li et al., Citation2011), and FIPS or FIPSO (a fully informed PSO) (C. Li et al., Citation2011). Although the proposed algorithm is in its basic form, it outperforms the other algorithms under the same conditions, which shows its effectiveness as a novel solution for optimization problems. The ‘–’, ‘+’, and ‘=’ signs denote that the performance of the corresponding algorithm is worse than, better than, and similar to that of CSBO, respectively.

From Table 5, it can be seen that SaDE and EPSDE generally rank next after CSBO, with similar performance to each other. FIPS has the worst performance among all the algorithms in this table. In addition, PSO and GA have the same average ranking. Moreover, SaDE performs most comparably to CSBO on F6 and F14. It should be noted that SaDE is an enhanced variant of DE, while CSBO is presented here in its first, basic version.

3.2. CEC 2014 benchmark functions

This section investigates the results of implementing the CSBO on CEC 2014 benchmark functions.

3.2.1. CSBO initial evaluation in comparison with original classical algorithms

In order to verify the performance of the proposed CSBO algorithm compared to other algorithms, we have selected the CEC2014 functions in this section. These are real-world modeled optimization functions. CEC 2014 functions (unimodal, simple multimodal, hybrid, and composition benchmark tests) (J. J. Liang et al., Citation2013) have been used successfully in many recent articles and, therefore, in this paper to test the performance of the proposed CSBO algorithm, we have used them. These functions are described (Liang et al., Citation2013).

In this section, we have selected two dimensions, 30 and 50, with 30 runs for each test function. The number of evolutions in all parts of the article is 300,000 and 500,000 for two dimensions, 30 and 50, respectively. Also, Npop and NR for these two dimensions are set at 45 and 15 and 60 and 20, respectively. The parameter setting of some of the competitor algorithms is given in Table .

Table 7. Parameters of some competitors for CEC2014 test functions.

The simulation results for D = 30 are given in Table 8, where CSBO is compared with the robust and modern algorithms reported in (X. Chen et al., Citation2017), i.e. LDWPSO (linearly decreasing inertia weight PSO), FIPSO (or FIPS), BLPSO (biogeography-based learning PSO), RCBBOG (real-coded biogeography-based optimization with Gaussian mutation), GL-25, and GBABC (Gaussian bare-bones artificial bee colony). The table shows that CSBO outperforms all the other algorithms on most functions (F1, F2, F3, F4, F13, F15, F17, F18, F20, F21, F22, F24, F25, F26, F28, and F30). Moreover, increasing the dimension does not significantly affect CSBO: in both dimensions (Tables 8 and 9) it never has the worst performance or rank among the algorithms, which indicates its robustness and reliability. It should be noted, however, that GBABC and BLPSO outperform CSBO on 7 and 8 test functions for D = 30, respectively, which is to be expected since no single algorithm is best on every function.

Table 8. Summary of the results for CEC2014 test functions for different algorithms with D = 30 and NFEs = 3.00E + 05.

Table 8 also shows that, although CSBO ranks relatively poorly (rank 4) on F9, F27 and F29, its mean results on these functions are not far from the best. For example, on F29 the best mean result, 1.02E+03, is obtained by the recent genetic algorithm GL-25, while CSBO obtains 1.34E+03, which is acceptable. CSBO's worst rank is 5, on F7, whereas it obtains the best solution on 16 test functions. Based on the last three rows of the table, CSBO can be considered a strong and promising new algorithm.

Table 9 contains the simulation results for D = 50 of LDWPSO, FIPSO, BLPSO, RCBBOG, GL-25 and GBABC, as reported in (X. Chen et al., Citation2017), and of CSBO (this study). The mean rank (Mr) of CSBO is 1.8 for D = 30 and 2.0333 for D = 50, which shows that its performance decreases slightly as the dimension increases. Nevertheless, CSBO still ranks first overall, obtains the best result on half of the test functions, and never has the worst result.
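The mean rank Mr is presumably obtained by ranking all algorithms on each function by their mean result and averaging these ranks over the functions. The sketch below illustrates this under two assumptions not stated in the text: a lower mean error is better (minimization), and tied algorithms share the average of their positions, as in the Friedman test. The names `average_ranks` and `mean_ranks` are hypothetical.

```python
from typing import Dict, List


def average_ranks(values: List[float]) -> List[float]:
    """Rank values ascending (1 = best for minimization); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over a block of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mean_ranks(results: Dict[str, List[float]]) -> Dict[str, float]:
    """results[alg][f] is the mean error of algorithm `alg` on function f; returns Mr per algorithm."""
    names = list(results)
    n_funcs = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ranks = average_ranks([results[name][f] for name in names])
        for name, r in zip(names, ranks):
            totals[name] += r
    return {name: totals[name] / n_funcs for name in names}
```

With 30 functions, for instance, Mr = 1.8 corresponds to a rank sum of 54.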

Table 9. Summary of the results for CEC 2014 test functions for different algorithms with D=50 and NFEs=5.00E + 05.

3.2.2. A comparison of CSBO with the state-of-the-art PSO algorithms (PSOs)

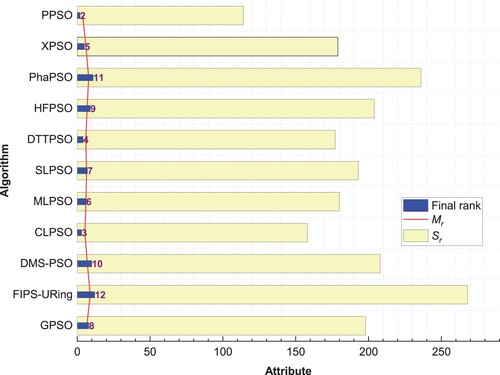

In this section, to further assess the performance of CSBO, we compare it with a recently introduced PSO variant, the promotional particle swarm optimizer (PPSO) (L. Zhang et al., Citation2021), and with ten other state-of-the-art and basic PSO algorithms from (L. Zhang et al., Citation2021): GPSO (global version PSO with inertia weight) (Eberhart & Kennedy, Citation1995), FIPS-URing (FIPS with URing topology) (Mendes et al., Citation2004), DMS-PSO (a dynamic multiswarm PSO) (J.-J. Liang & Suganthan, Citation2005), CLPSO (a comprehensive learning PSO) (J. J. Liang et al., Citation2006), MLPSO (a multilayer PSO) (L. Wang et al., Citation2014), SLPSO (a social learning PSO) (R. Cheng & Jin, Citation2015), DTTPSO (a dynamic tournament topology strategy in PSO) (L. Wang et al., Citation2016), HFPSO (a hybrid firefly and PSO algorithm) (Aydilek, Citation2018), PhaPSO (a phasor PSO) (Ghasemi et al., Citation2019), and XPSO (an expanded PSO) (Xia et al., Citation2020).

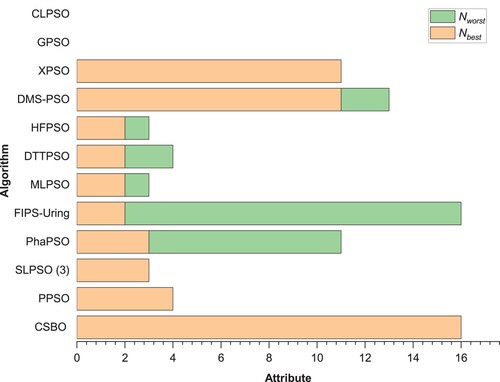

The results for D = 30, in terms of the mean and standard deviation, are given in Table 10. A plus sign (+) indicates that CSBO outperforms the competitor algorithm on that function, while a minus sign (–) and an equals sign (=) indicate that CSBO performs worse than or similarly to the competitor, respectively. Summary statistics are given in Figures and . On the first three (unimodal) functions and the simple multimodal function F4, CSBO is significantly better than the PSOs. On the other hand, on the last three functions, F28, F29 and F30, which are composition functions, its performance is only average and relatively poor compared with the PSOs: CSBO ranks 7th on these three functions, its worst rank in this comparison.
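The +/–/= markers can be tallied per competitor as sketched below. The text does not say how "similar" performance is decided; the hypothetical `sign_against` helper simply treats two mean values as equal when they agree within a relative tolerance, whereas many studies instead decide each function with a statistical test.

```python
import math
from typing import List, Tuple


def sign_against(csbo_mean: float, rival_mean: float, rel_tol: float = 1e-8) -> str:
    """'+' if CSBO's mean is lower (better when minimizing), '-' if higher, '=' if equal within rel_tol."""
    if math.isclose(csbo_mean, rival_mean, rel_tol=rel_tol, abs_tol=0.0):
        return "="
    return "+" if csbo_mean < rival_mean else "-"


def tally(csbo_means: List[float], rival_means: List[float]) -> Tuple[int, int, int]:
    """Return the counts of (+, -, =) over all test functions for one competitor."""
    signs = [sign_against(a, b) for a, b in zip(csbo_means, rival_means)]
    return signs.count("+"), signs.count("-"), signs.count("=")
```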

Table 10. A comparison of CSBO with the state-of-the-art PSOs for CEC 2014 functions with NFEs=3.00E + 05.

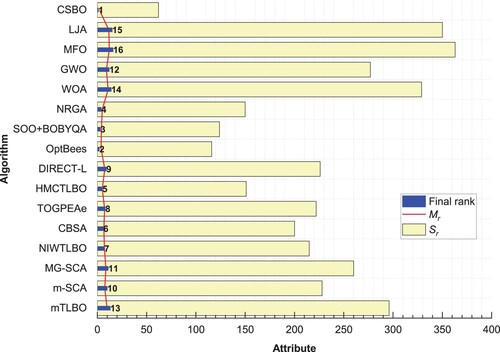

In Figure , where Sr is the sum of an algorithm's ranks over all functions, CSBO is the clear winner of this comparison, with an average rank of 2.6333. The closest competitor is PPSO, with an average rank of 3.80, a substantial gap. The figure also shows that FIPS-URing is the weakest algorithm. Figure also lists the algorithms in descending order of the number of functions on which they obtain the best value, i.e. rank 1 (Nbest), together with the number of times they obtain the worst rank (Nworst). CSBO ranks first on 16 functions, whereas its nearest competitor, PPSO, ranks first on only four. GPSO and CLPSO obtain neither the best nor the worst value on any function. CSBO, SLPSO and PPSO never obtain the worst value, which is a clear advantage. Conversely, although FIPS-URing obtains the best value on two functions, it obtains the worst value 14 times, which reveals its weakness.
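The rank statistics used in this figure (Sr, average rank, Nbest and Nworst) can be derived from a table of per-function ranks. A minimal sketch, assuming such a rank matrix is already available and that a tie among all algorithms counts as "best" rather than "worst"; `rank_summary` is a hypothetical helper.

```python
from typing import Dict, List


def rank_summary(ranks: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """ranks[alg][f] is the rank of algorithm `alg` on function f (1 = best).

    Returns Sr (sum of ranks), Mr (average rank), Nbest and Nworst for each algorithm.
    """
    names = list(ranks)
    n_funcs = len(ranks[names[0]])
    nbest = {name: 0 for name in names}
    nworst = {name: 0 for name in names}
    for f in range(n_funcs):
        column = [ranks[name][f] for name in names]
        best, worst = min(column), max(column)
        for name in names:
            if ranks[name][f] == best:
                nbest[name] += 1
            elif ranks[name][f] == worst:  # elif: a full tie is counted only as "best"
                nworst[name] += 1
    return {
        name: {
            "Sr": sum(ranks[name]),
            "Mr": sum(ranks[name]) / n_funcs,
            "Nbest": nbest[name],
            "Nworst": nworst[name],
        }
        for name in names
    }
```

With 30 functions, CSBO's average rank of 2.6333 corresponds to Sr = 2.6333 × 30 ≈ 79.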

We use Wilcoxon’s test to determine whether two algorithms perform significantly differently (Ghosh et al., Citation2012). The p-values obtained by applying Wilcoxon’s test to CSBO and each PSO are shown in Table 11; p-values below 0.05 (the significance level) are in boldface. The results indicate that CSBO performs better than all eleven PSO variants. Although its advantage over PPSO is not statistically significant, CSBO still has the better average rank.
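The text does not specify which form of Wilcoxon's test is used. A common choice for pairwise algorithm comparisons is the signed-rank test applied to the per-function mean results of two algorithms. The sketch below implements that variant with the large-sample normal approximation and no tie correction, which is a reasonable approximation for 30 functions; for exact small-sample p-values, a library routine such as `scipy.stats.wilcoxon` would be preferable. The function name and return layout are our own.

```python
import math
from typing import List, Tuple


def wilcoxon_signed_rank(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Two-sided Wilcoxon signed-rank test (normal approximation, no tie correction).

    Returns (R_plus, R_minus, p_value). R_plus sums the ranks of |d| with d = y - x > 0,
    i.e. functions on which x (e.g. CSBO) has the lower, better value when minimizing.
    """
    d = [b - a for a, b in zip(x, y) if b != a]  # zero differences are discarded
    n = len(d)
    if n == 0:
        return 0.0, 0.0, 1.0
    # rank |d| in ascending order, averaging the ranks of tied magnitudes
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    r_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    r_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(r_plus, r_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value, 2 * (1 - Phi(|z|))
    return r_plus, r_minus, p
```

A p-value below 0.05 would then be reported in boldface, and comparing R_plus with R_minus tells which algorithm is favoured.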

Table 11. The competitive results of Wilcoxon’s test and performance of CSBO versus PSOs.

A closer look at Table 10 shows that CSBO beats all the PSOs on most of the test functions. Its strongest competitor is PPSO, a very recent algorithm introduced in 2021. Although CSBO performs worse than DTTPSO on 8 test functions, it performs better on the remaining 22, which is a significant difference.

3.2.3. A comparison of CSBO with the popular inspired optimization algorithms (IOAs)

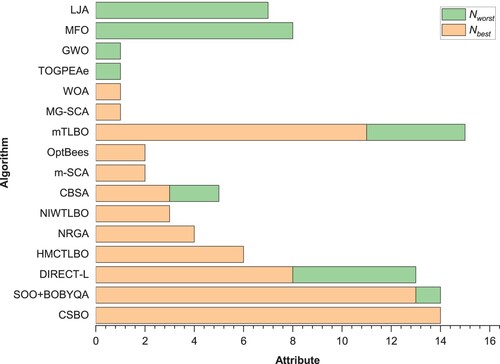

In this section, to compare CSBO with other inspired algorithms and their improved versions, we collected the results of several algorithms published in recent articles under conditions similar to ours (D = 30 on the same CEC 2014 functions). These results are shown in Table 12 and form the basis of the comparative study. The algorithms are: mTLBO (a modified TLBO) (X. Chen et al., Citation2018; Satapathy & Naik, Citation2014), m-SCA (a hybrid self-adaptive sine cosine algorithm with opposition-based learning) (Gupta & Deep, Citation2019), MG-SCA (a memory-guided SCA) (Gupta et al., Citation2020), NIWTLBO (a nonlinear inertia-weighted TLBO) (X. Chen et al., Citation2018; Wu et al., Citation2015), CBSA (a new constraint backtracking search optimization algorithm) (Dai et al., Citation2020), TOGPEAe (an improved grey prediction evolution algorithm based on topological opposition-based learning) (Dai et al., Citation2020), HMCTLBO (hierarchical multi-swarm cooperative TLBO) (F. Zou et al., Citation2017), DIRECT-L (the locally biased version of the DIRECT deterministic global search algorithm) (Gablonsky & Kelley, Citation2001), OptBees (a bee-inspired algorithm) (Maia et al., Citation2014), SOO + BOBYQA (simultaneous optimistic optimization with BOBYQA) (Preux et al., Citation2014), NRGA (non-uniform mapping in real-coded GAs) (Yashesh et al., Citation2014), WOA (whale optimization algorithm) (Mirjalili & Lewis, Citation2016), GWO (grey wolf optimizer) (Mirjalili et al., Citation2014), MFO (moth-flame optimization) (Mirjalili, Citation2015), and LJA (Jaya with Levy flight) (Iacca et al., Citation2021). The parameter settings of some of these algorithms are given in Table 13.

Table 12. A comparison of CSBO with the state-of-the-art IOAs for CEC 2014 functions with NFEs=3.00E + 05.

Table 13. The parameters of some state-of-the-art IOAs for CEC 2014 test functions.

As in the previous section, CSBO performs very well on the first four test functions compared with the other IOAs. Its worst rank, 7 (a mid-table position), occurs on F12, the shifted and rotated Katsuura function, where CSBO obtains 5.01E−01; the best and second-best values on this function, 3.00E−02 and 1.51E−01, are obtained by SOO + BOBYQA and NRGA, respectively. By contrast, the weakest results on F12, obtained by m-SCA, NIWTLBO, LJA and mTLBO, are 1.76E+00, 1.93E+00, 2.49E+00 and 2.50E+00, respectively. Given these results, the solutions obtained by CSBO are acceptable, although some modifications could make it still more robust.

Note for Table 12: The ‘–’, ‘+’, and ‘=’ denote that the performance of CSBO is worse than, better than, and similar to that of the corresponding algorithm, respectively.

Summary statistics for CSBO and the IOAs are given in Figures and . Figure shows that CSBO is the best and most reliable algorithm in this comparison. The closest algorithm to CSBO is OptBees, with an average rank of 3.8667, which is 1.8 higher than that of CSBO, a considerable difference. WOA, LJA and MFO (three recent and popular algorithms) have the worst average ranks, 10.9667, 11.6667 and 12.1000, respectively, which are weak results.

Looking more closely at Figure , CSBO obtains the best solution on 14 test functions and never has the worst rank; as mentioned, its worst rank is 7, which is within acceptable limits. The recent algorithms SOO + BOBYQA and DIRECT-L obtain the best solutions on 13 and 8 functions, respectively, but they also obtain the worst solution once and five times, respectively, whereas CSBO never does.

The figure also shows that LJA and MFO, which never obtain the best solution, perform worst: their solutions are the worst on most functions compared with the other algorithms.

Table 14 gives the p-values of Wilcoxon’s test comparing CSBO with the fifteen other optimization techniques; p-values below 0.05 (the significance level) are in boldface. The results show that CSBO significantly outperforms all the other algorithms except SOO + BOBYQA. Although CSBO is not statistically superior to SOO + BOBYQA, it has the better average rank. SOO + BOBYQA is thus the most competitive method: it is better than CSBO on 13 functions but worse on 17.

Table 14. The competitive results of Wilcoxon’s test and performance of CSBO versus IOAs.

3.3. CEC 2017 benchmark functions

To further verify the performance of CSBO against other algorithms, this section uses the CEC 2017 benchmark functions, which are designed to model real-world optimization problems. The CEC 2017 suite (unimodal, simple multimodal, hybrid, and composition functions) (Wu et al., Citation2017) has been used successfully in many recent articles, and we therefore use it here to test the performance of CSBO.

In this section, we use D = 30 with 30 independent runs for each test function. The maximum number of function evaluations is 300,000, and the population size (Npop) and NR are set to 45 and 15, respectively. The parameter settings of some of the competitors are given in Table 15.

Table 15. The parameter settings of some competitors for CEC 2017 test functions.

The simulation results for D = 30 are given in Table 16, where CSBO is compared with robust and modern algorithms from the recent literature, e.g. AWPSO (a sigmoid-function-based adaptive weighted PSO) (Wei et al., Citation2020), GSA and GWO (Lei et al., Citation2020), SCA, EHO and BOA (Li & Wang, Citation2021), BA (Alsalibi et al., Citation2021), HHO (Hu et al., Citation2022), GOMGBO (GBO with a Gaussian bare-bones mechanism) (Qiao et al., Citation2021) and HGS (Izci et al., Citation2022). The table shows that CSBO outperforms all the other algorithms on most functions (F1, F3, F4, F6, F7, F9, F11, F12, F13, F14, F15, F17, F18, F19, F21, F23, F24, F27, F28, and F30). Notably, CSBO never has the worst performance or rank on CEC 2017, which indicates its robustness and reliability. It should be noted, however, that HGS and GOMGBO outperform CSBO on 8 and 2 functions, respectively, which is to be expected.

Table 16. Summary of the results for CEC2017 test functions for different algorithms with D = 30 and NFEs = 3.00E + 05.

Although CSBO ranks only third on F10 and F22, the last three rows of Table 16 show that it is a robust and competitive new algorithm.

3.4. CSBO complexity

CSBO was implemented in MATLAB 7.6, and the simulations were run on a Pentium IV E5200 PC with 2 GB of RAM. CSBO was applied to all the test functions of the CEC 2014 competition (J. J. Liang et al., Citation2013), with 30 runs per test problem and a budget of 10,000 × D function evaluations. The computational complexity of CSBO is measured following the procedure given in (J. J. Liang et al., Citation2013). T0 denotes the execution time of the following test program in Table :

T1 is the time required for 200,000 evaluations of F18 alone, whereas T2 is the time required to run the proposed algorithm for 200,000 evaluations of the same D-dimensional F18. T2 is measured five times, and the mean of the five measurements is denoted by $\hat{T}_2$. Finally, the complexity of the algorithm is reported as $\hat{T}_2$, T1, and $(\hat{T}_2 - T_1)/T_0$.

Table 17. CSBO Complexity.
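The timing procedure described above can be sketched in Python as follows. The T0 loop follows the fixed arithmetic test program of the CEC 2014 technical report as we understand it; F18 and the optimizer are passed in as callables, and `sphere` and `random_search_budget` are hypothetical stand-ins used only to make the sketch runnable. Absolute timings depend on language and hardware, which is why the procedure normalizes by T0.

```python
import math
import random
import time
from typing import Callable, Dict, Sequence

Objective = Callable[[Sequence[float]], float]
Optimizer = Callable[[Objective, int, int], float]  # (f, dim, evals) -> best value found


def time_t0(loops: int = 1_000_000) -> float:
    """T0: run time of the fixed arithmetic test loop from the CEC 2014 procedure."""
    start = time.perf_counter()
    for i in range(1, loops + 1):
        x = 0.55 + i
        x = x + x
        x = x / 2
        x = x * x
        x = math.sqrt(x)
        x = math.log(x)
        x = math.exp(x)
        x = x / (x + 2)
    return time.perf_counter() - start


def time_t1(f: Objective, dim: int, evals: int = 200_000) -> float:
    """T1: time for `evals` evaluations of the benchmark function alone (F18 in CEC 2014)."""
    rng = random.Random(0)
    points = [[rng.uniform(-100.0, 100.0) for _ in range(dim)] for _ in range(10)]
    start = time.perf_counter()
    for k in range(evals):
        f(points[k % len(points)])
    return time.perf_counter() - start


def complexity(f: Objective, optimizer: Optimizer, dim: int, evals: int = 200_000,
               repeats: int = 5, t0_loops: int = 1_000_000) -> Dict[str, float]:
    """Return T0, T1, the mean T2 over `repeats` runs, and (T2_hat - T1) / T0."""
    t0 = time_t0(t0_loops)
    t1 = time_t1(f, dim, evals)
    t2_runs = []
    for _ in range(repeats):
        start = time.perf_counter()
        optimizer(f, dim, evals)  # complete run of the algorithm with the same evaluation budget
        t2_runs.append(time.perf_counter() - start)
    t2_hat = sum(t2_runs) / repeats
    return {"T0": t0, "T1": t1, "T2_hat": t2_hat, "(T2_hat-T1)/T0": (t2_hat - t1) / t0}


def sphere(x: Sequence[float]) -> float:
    """Hypothetical stand-in for CEC 2014 F18."""
    return sum(v * v for v in x)


def random_search_budget(f: Objective, dim: int, evals: int) -> float:
    """Hypothetical stand-in optimizer that spends exactly `evals` evaluations."""
    rng = random.Random(1)
    best = math.inf
    for _ in range(evals):
        best = min(best, f([rng.uniform(-100.0, 100.0) for _ in range(dim)]))
    return best
```

A full-size measurement would call `complexity(sphere, random_search_budget, dim=30)` with a real F18 and optimizer substituted for the stand-ins.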

In addition, the computational cost of CSBO is mainly determined by three processes: initialization of the blood particles, fitness evaluation, and updating of the blood particles. The initialization step costs O(Npop), where Npop is the number of blood particles. The update step costs O(Itermax × Npop) + O(Itermax × Npop × D), covering the search for the best position and the update of the position vectors of all blood particles, where Itermax is the maximum number of iterations and D is the dimension of the problem. Hence, the overall computational complexity of CSBO is O(Npop × (Itermax + Itermax × D + 1)).
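Adding the three contributions makes the final expression explicit. Because the last term dominates for D ≥ 1 and Itermax ≥ 1, the bound also simplifies asymptotically (a standard simplification, not stated in the original analysis):

$$
\underbrace{O(N_{pop})}_{\text{initialization}}
+ \underbrace{O(Iter_{max} \times N_{pop})}_{\text{best-position search}}
+ \underbrace{O(Iter_{max} \times N_{pop} \times D)}_{\text{position updates}}
= O\big(N_{pop}\,(Iter_{max} + Iter_{max} \times D + 1)\big)
= O(Iter_{max} \times N_{pop} \times D)
$$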

4. Application of CSBO algorithm for engineering optimization problems

In the second phase of this study, experiments were conducted to compare CSBO with the best results reported by other algorithms on several manufacturing parameter optimization problems: engineering design optimization, parameter estimation for frequency-modulated (FM) sound waves, and reliability maximization of engineering systems.

In recent years, population-based swarm intelligence and other evolutionary algorithms (EAs) have attracted considerable interest for finding optimal solutions to manufacturing parameter and engineering design optimization problems, with the aim of improving system features such as performance and cost. Many manufacturing problems can be formulated as optimization problems with nonlinear characteristics and linear or nonlinear, equality or inequality constraints; examples include product and process design, tuning of manufacturing parameters, scheduling, and production planning.

Optimization methods are therefore essential for achieving the desired product quality with high efficiency in manufacturing development. Because manufacturing processes are becoming more complex and product quality must meet high standards, improved methods for solving these problems remain an active research topic in today's competitive market (G. Zhang et al., Citation2013).

In recent years, various heuristic techniques and EAs have been applied to manufacturing parameter and engineering design optimization problems, such as harmony search (HS) (Lee & Geem, Citation2005); genetic algorithms (GAs) (Coello & Montes, Citation2002; Dhadwal et al., Citation2014; Pasandideh et al., Citation2013); differential evolution (DE) variants for constrained optimization, including hybrid DE algorithms (Liao, Citation2010), a cultural DE (CDE) (Becerra & Coello, Citation2006), DE with dynamic stochastic selection (DSS-MDE) (M. Zhang et al., Citation2008), co-evolutionary DE (CoDE) (F. Huang et al., Citation2007), a modified DE (COMDE) (A. W. Mohamed & Sabry, Citation2012), an evaluation of DE (de Melo & Carosio, Citation2012), a new hybrid DE (Yildiz, Citation2013a), a hybrid of DE and tissue membrane systems (DETPS) (C. Li et al., Citation2011), an improved constrained DE (Gong et al., Citation2014) and a dual-population DE with coevolution (Gao et al., Citation2014); PSO variants for constrained manufacturing parameter optimization, including unified PSO (UPSO) (Parsopoulos & Vrahatis, Citation2005), co-evolutionary PSO (CPSO) (Q. He & Wang, Citation2007b), a hybrid of PSO and DE (PSO-DE) (Liu et al., Citation2010) and an improved vector PSO (Sun et al., Citation2011); ABC algorithms (Brajevic et al., Citation2011; G. Li et al., Citation2012; Tsai, Citation2014; Brajevic & Tuba, Citation2013; A. Singh & Sundar, Citation2011; Brajevic, Citation2015); TLBO (Yildiz, Citation2013b; Yu et al., Citation2016; Maity & Mishra, Citation2018); cuckoo search (CS) (Gandomi, Yang, and Alavi, Citation2013); and the Jaya algorithm (R. V. Rao & Waghmare, Citation2017).

4.1. The constrained engineering design optimization using CSBO

To verify CSBO on constrained engineering design applications, three well-known problems are chosen: the optimal design of a tension/compression spring, a three-bar truss and a pressure vessel, whose objectives are to minimize weight, volume and total manufacturing cost, respectively. The population size is 45, and the maximum number of iterations is 400 for the three-bar truss and 5000 for the pressure vessel and tension/compression spring problems; CSBO is run 30 times independently on each problem. These problems have been studied extensively in the literature, and here each is considered with all of its original constraints and conditions. The problems are described below.

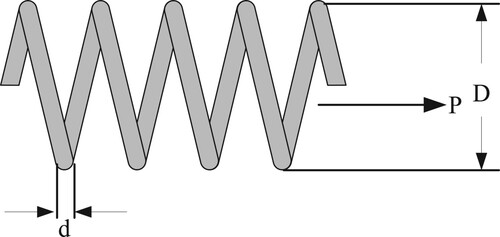

4.1.1. Problem 1: the tension/compression spring design problem

The tension/compression spring problem aims to minimize the spring's weight subject to constraints on the minimum deflection, shear stress, surge frequency and diameter, as shown in Figure (Arora, Citation2004). The problem has one linear and three nonlinear inequality constraints and three continuous design variables: the wire diameter x1 (d), with 0.05 ≤ x1 ≤ 2; the mean coil diameter x2 (D), with 0.25 ≤ x2 ≤ 1.3; and the number of active coils x3 (P), with 2 ≤ x3 ≤ 15 (Akay & Karaboga, Citation2012; Coello & Montes, Citation2002).

The mathematical formulation of the tension/compression spring optimal design problem can be given as follows (Q. He & Wang, Citation2007b) and (Yu et al., Citation2016):

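Minimize:

$$F_1(X) = (x_3 + 2)\,x_2\,x_1^{2} \tag{14}$$

Subject to:

$$g_1(X) = 1 - \frac{x_2^{3}\,x_3}{71785\,x_1^{4}} \le 0 \tag{15}$$

$$g_2(X) = \frac{4x_2^{2} - x_1x_2}{12566\,(x_2x_1^{3} - x_1^{4})} + \frac{1}{5108\,x_1^{2}} - 1 \le 0 \tag{16}$$

$$g_3(X) = 1 - \frac{140.45\,x_1}{x_2^{2}\,x_3} \le 0 \tag{17}$$

$$g_4(X) = \frac{x_1 + x_2}{1.5} - 1 \le 0 \tag{18}$$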
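For readers who want to reproduce this comparison, a minimal Python encoding of the standard spring design formulation (the objective and four constraints numbered (14) to (18)) is sketched below. The constraint handling is an assumption: the text does not state how CSBO treats constraints, so a static quadratic penalty with a hypothetical weight `rho` is used purely for illustration. The check point (0.051689, 0.356718, 11.288966), with weight ≈ 0.012665, is the best solution widely reported in the literature for this problem, not a result taken from this paper.

```python
from typing import List, Sequence


def spring_weight(x: Sequence[float]) -> float:
    """Objective (x3 + 2) * x2 * x1^2 with x = [d, D, P]."""
    d, D, P = x
    return (P + 2.0) * D * d ** 2


def spring_constraints(x: Sequence[float]) -> List[float]:
    """The four inequality constraints, each written as g_i(x) <= 0 (undefined when D == d)."""
    d, D, P = x
    g1 = 1.0 - (D ** 3 * P) / (71785.0 * d ** 4)                # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                      # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * P)                        # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                    # outside diameter (linear)
    return [g1, g2, g3, g4]


def penalized_spring(x: Sequence[float], rho: float = 1e6) -> float:
    """Objective plus a static quadratic penalty (hypothetical weight rho) on violated constraints."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + rho * violation
```

Any population-based optimizer, CSBO included, could minimize `penalized_spring` within the stated bounds; other constraint-handling schemes (e.g. feasibility rules) would plug in the same way.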

The results obtained by CSBO are compared in Table 18 with those of GA (Coello & Montes, Citation2002), CPSO (Q. He & Wang, Citation2007b), CDE (F. Huang et al., Citation2007), DELC (L. Wang & Li, Citation2010), ABC (Akay & Karaboga, Citation2012), UABC (Brajevic & Tuba, Citation2013), ITLBO (Yu et al., Citation2016), CSA (Askarzadeh, Citation2016), EO (Faramarzi et al., Citation2020), the water cycle algorithm (WCA) (Eskandar et al., Citation2012), the bat algorithm (BA) (Gandomi, Yang, Alavi, and Talatahari, Citation2013), HEA-ACT (Y. Wang et al., Citation2009), the spotted hyena optimizer (SHO) (Dhiman et al., Citation2021), the chaotic water cycle algorithm (CWCA III) (Heidari et al., Citation2017), and virus colony search (VCS) (Jain et al., Citation2019). The results show that CSBO is better and more robust than these algorithms on the tension/compression spring design problem.

Table 18. Comparison of the best results for tension/compression spring problem by algorithms.

In particular, CSBO achieves a standard deviation of 4.38E−14, which is an outstanding result. Although the other algorithms can also reach the global optimum, all of them have larger standard deviations than CSBO.

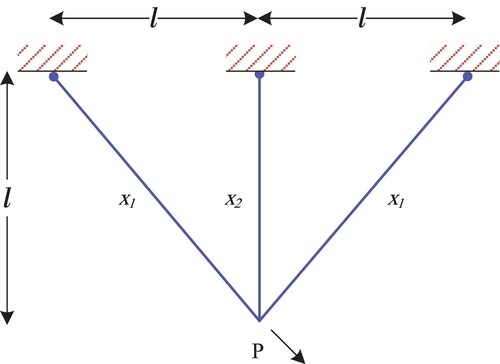

4.1.2. Problem 2: the three-bar truss design problem

The three-bar truss design problem shown in Figure is a continuous, constrained, nonlinear optimization problem whose objective is to minimize the volume of the structure. It has two continuous design variables, x1 and x2, with 0 ≤ x1 ≤ 1 and 0 ≤ x2 ≤ 1, and three nonlinear inequality constraints. The mathematical formulation of the problem is as follows (Brajevic & Tuba, Citation2013):

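Minimize:

$$F_2(X) = \left(2\sqrt{2}\,x_1 + x_2\right) l \tag{19}$$

Subject to:

$$g_1(X) = \frac{\sqrt{2}\,x_1 + x_2}{\sqrt{2}\,x_1^{2} + 2x_1x_2}\,P - \sigma \le 0 \tag{20}$$

$$g_2(X) = \frac{x_2}{\sqrt{2}\,x_1^{2} + 2x_1x_2}\,P - \sigma \le 0 \tag{21}$$

$$g_3(X) = \frac{1}{\sqrt{2}\,x_2 + x_1}\,P - \sigma \le 0 \tag{22}$$

where l = 100 cm and P = σ = 2 kN/cm².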
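A minimal Python encoding of the standard three-bar truss formulation (objective (19) and constraints (20) to (22)) is sketched below, using l = 100 cm and P = σ = 2 kN/cm² as in the usual statement of this benchmark. The point x = (0.788675, 0.408248), with volume ≈ 263.8958, is the optimum commonly reported in the literature and serves only as a sanity check; note that g1 is active there. `is_feasible` and its tolerance are our own illustrative choices.

```python
import math
from typing import List, Sequence

BAR_LENGTH = 100.0   # l, in cm
LOAD = 2.0           # P, in kN/cm^2
STRESS_LIMIT = 2.0   # sigma, in kN/cm^2
SQRT2 = math.sqrt(2.0)


def truss_volume(x: Sequence[float]) -> float:
    """Objective (2*sqrt(2)*x1 + x2) * l."""
    x1, x2 = x
    return (2.0 * SQRT2 * x1 + x2) * BAR_LENGTH


def truss_constraints(x: Sequence[float]) -> List[float]:
    """Stress constraints g1..g3, each written as g_i(x) <= 0."""
    x1, x2 = x
    denom = SQRT2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (SQRT2 * x1 + x2) / denom * LOAD - STRESS_LIMIT
    g2 = x2 / denom * LOAD - STRESS_LIMIT
    g3 = 1.0 / (SQRT2 * x2 + x1) * LOAD - STRESS_LIMIT
    return [g1, g2, g3]


def is_feasible(x: Sequence[float], tol: float = 1e-4) -> bool:
    """True if 0 <= xi <= 1 holds and every constraint is satisfied within tol."""
    return all(0.0 <= v <= 1.0 for v in x) and max(truss_constraints(x)) <= tol
```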

The best results obtained by CSBO are listed in Table 19 and compared with those of earlier algorithms, including SBO (Ray & Liew, Citation2003), DSS-MDE (M. Zhang et al., Citation2008), HEA-ACT (Y. Wang et al., Citation2009), PSO-DE (Liu et al., Citation2010), COMDE (F. Huang et al., Citation2007), UABC (Brajevic & Tuba, Citation2013), CSA (Askarzadeh, Citation2016), WCA (Eskandar et al., Citation2012), the water strider algorithm (WSA) (Kaveh & Eslamlou, Citation2020), ICA (Atashpaz-Gargari & Lucas, Citation2007; Kaveh & Eslamlou, Citation2020), the salp swarm algorithm (SSA) (Kaveh & Eslamlou, Citation2020; Mirjalili et al., Citation2017), the neural network algorithm (NNA) (Kaveh & Eslamlou, Citation2020; Sadollah et al., Citation2018), and biogeography-based optimization (BBO) (Kaveh & Eslamlou, Citation2020; Simon, Citation2008). The results indicate that CSBO is competitive and effective for the optimal design of the three-bar truss. Note, however, that this is a simple two-dimensional problem that most algorithms solve easily.

Table 19. Comparison of the best results for the three-bar truss structure optimal design problem.

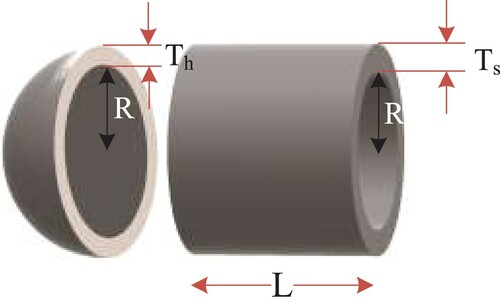

4.1.3. Problem 3: the pressure vessel optimization problem

In the pressure vessel design problem shown in Figure , the objective is to minimize the total cost F3(X) of a cylindrical vessel, comprising the material, forming and welding costs. There are two discrete design variables, the shell thickness (x1 or Ts), with 1 ≤ x1 ≤ 99, and the head thickness (x2 or Th), with 1 ≤ x2 ≤ 99, both of which must be integer multiples of 0.0625. The other two variables are continuous: the inner radius (x3 or R), with 10 ≤ x3 ≤ 200, and the length of the cylindrical section of the vessel, excluding the head (x4 or L), with 10 ≤ x4 ≤ 200 (Q. He & Wang, Citation2007b; Brajevic & Tuba, Citation2013). The optimal design problem can therefore be expressed as follows (Q. He & Wang, Citation2007b):

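Minimize:

$$F_3(X) = 0.6224\,x_1x_3x_4 + 1.7781\,x_2x_3^{2} + 3.1661\,x_1^{2}x_4 + 19.84\,x_1^{2}x_3 \tag{23}$$

Subject to:

$$g_1(X) = -x_1 + 0.0193\,x_3 \le 0 \tag{24}$$

$$g_2(X) = -x_2 + 0.00954\,x_3 \le 0 \tag{25}$$

$$g_3(X) = -\pi x_3^{2}x_4 - \frac{4}{3}\pi x_3^{3} + 1296000 \le 0 \tag{26}$$

$$g_4(X) = x_4 - 240 \le 0 \tag{27}$$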
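The mixed discrete-continuous structure of this problem can be handled by snapping the two thicknesses to the nearest multiple of 0.0625 before each evaluation, as in the sketch below of the standard formulation (objective (23) and constraints (24) to (27)). This rounding scheme and the helper names are assumptions for illustration, not necessarily how CSBO handles the discrete variables. The check point (0.8125, 0.4375, 42.0984, 176.6366), with cost ≈ 6059.71, is the solution most often reported in the literature.

```python
import math
from typing import List, Sequence

STEP = 0.0625  # Ts and Th must be integer multiples of 0.0625


def snap_thickness(value: float) -> float:
    """Round a thickness to the nearest multiple of STEP (Python's round() is round-half-to-even)."""
    return round(value / STEP) * STEP


def decode(x: Sequence[float]) -> List[float]:
    """Map a continuous search vector [Ts, Th, R, L] to a design with discrete thicknesses."""
    ts, th, r, length = x
    return [snap_thickness(ts), snap_thickness(th), r, length]


def vessel_cost(x: Sequence[float]) -> float:
    """Objective F3: material, forming and welding cost."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def vessel_constraints(x: Sequence[float]) -> List[float]:
    """Constraints g1..g4, each written as g_i(x) <= 0."""
    ts, th, r, length = x
    g1 = -ts + 0.0193 * r
    g2 = -th + 0.00954 * r
    g3 = -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1_296_000.0
    g4 = length - 240.0
    return [g1, g2, g3, g4]
```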

The best results achieved by CSBO are listed in Table 20 and compared with those of GA (Coello & Montes, Citation2002), CPSO (Q. He & Wang, Citation2007b), CDE (F. Huang et al., Citation2007), CB-ABC (Brajevic, Citation2015), ABC (Akay & Karaboga, Citation2012), UABC (Brajevic & Tuba, Citation2013), CSA (Askarzadeh, Citation2016), EO (Faramarzi et al., Citation2020), BA (Gandomi, Yang, Alavi, & Talatahari, Citation2013), Gaussian quantum-behaved particle swarm optimization (G-QPSO) (Eskandar et al., Citation2012; dos Santos Coelho, Citation2010), hybrid particle swarm optimization (HPSO) (Eskandar et al., Citation2012; Q. He & Wang, Citation2007a), moth-flame optimization (MFO) (Mirjalili, Citation2015), and GSA (Mirjalili, Citation2015; Rashedi et al., Citation2009). The results demonstrate that CSBO is competitive and robust for optimal design problems. CSBO obtains identical best, worst and mean values across runs, i.e. it reaches the same global optimal solution in every run. By contrast, although several other methods also find the best solution, their large standard deviations indicate less consistent performance across runs.

Table 20. Comparison of the best results for the pressure vessel optimal design problem.

4.2. Parameter estimation for FM sound waves

In this section, the proposed CSBO algorithm with Itermax = 5000 and the population size of Npop = 45 is used to estimate the optimal parameters of an FM sound wave synthesis.

The highly complex, multimodal FM sound synthesis problem is important in many modern music systems (Das & Suganthan, Citation2010). Estimating the optimal parameters of an FM sound wave synthesizer is a D-dimensional optimization problem; following (Das & Suganthan, Citation2010), this paper considers D = 6. The six-dimensional vector is X = [x1(a1), x2(ω1), x3(a2), x4(ω2), x5(a3), x6(ω3)], with −6.5 ≤ xi ≤ 6.35 for all variables. The estimated and target sound waves are evaluated at t = 1, …, 100 and are given as follows (C. Li et al., Citation2011):

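$$y(t) = a_1 \sin\!\big(\omega_1 t\theta + a_2 \sin(\omega_2 t\theta + a_3 \sin(\omega_3 t\theta))\big) \tag{28}$$

$$y_0(t) = 1.0 \sin\!\big(5.0\,t\theta - 1.5 \sin(4.8\,t\theta + 2.0 \sin(4.9\,t\theta))\big) \tag{29}$$

where θ = 2π/100.

The objective function is the sum of squared errors between the estimated wave y(t) and the target wave y0(t), with optimum value F4(X) = 0:

$$F_4(X) = \sum_{t=1}^{100} \big(y(t) - y_0(t)\big)^{2} \tag{30}$$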
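The FM objective translates directly into code. The sketch below evaluates F4(X) over t = 1, …, 100 as stated in the text; the target parameters (1.0, 5.0, −1.5, 4.8, 2.0, 4.9) are those of the standard FM benchmark target wave, so F4 is exactly zero at that point. The names `fm_wave`, `fm_objective` and `TARGET` are our own.

```python
import math
from typing import Sequence

THETA = 2.0 * math.pi / 100.0
# (a1, w1, a2, w2, a3, w3) of the target wave y0(t)
TARGET = (1.0, 5.0, -1.5, 4.8, 2.0, 4.9)


def fm_wave(params: Sequence[float], t: int) -> float:
    """y(t) = a1*sin(w1*t*theta + a2*sin(w2*t*theta + a3*sin(w3*t*theta)))."""
    a1, w1, a2, w2, a3, w3 = params
    inner = a3 * math.sin(w3 * t * THETA)
    middle = a2 * math.sin(w2 * t * THETA + inner)
    return a1 * math.sin(w1 * t * THETA + middle)


def fm_objective(x: Sequence[float]) -> float:
    """F4(X): sum of squared errors between the estimated and target waves for t = 1..100."""
    return sum((fm_wave(x, t) - fm_wave(TARGET, t)) ** 2 for t in range(1, 101))
```

The landscape is highly multimodal because of the nested sines, which is why small changes in the frequencies ω can produce large changes in F4.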

Table 21 compares the best results of CSBO with those reported in (C. Li et al., Citation2011) for SLPSO, APSO, CLPSO, CPSOH, FIPS, SPSO, JADE, HRCGA, FPSO, TRIBES-D, and G-CMA-ES. CSBO outperforms all of these algorithms on all four criteria (best, worst, mean and Std.). Furthermore, Table 22 compares the best solution obtained by CSBO with those of very recent algorithms, the gorilla troops optimizer (GTO) (Abdollahzadeh et al., Citation2021) and the tunicate swarm algorithm (TSA) (Kaur et al., Citation2020), which confirms the efficient performance of CSBO relative to TSA and GTO.

Table 21. Comparison of the CSBO best results with the best results reported in (C. Li et al., Citation2011).

Table 22. The best solution results from the CSBO and state-of-the-art algorithms for F4(X).

4.3. Reliability-redundancy allocation optimization (RRAO) problem

The goal of the reliability-redundancy allocation problem, a constrained nonlinear optimization problem, is to maximize the overall system reliability by optimizing the vector of component reliabilities, r = (r1, r2, … , rm), and the vector of redundancy allocation numbers, n = (n1, n2, … , nm), of the subsystems, X = [r, n]. The problem can be formulated as a nonlinear mixed-integer program whose objective, the system reliability, is maximized subject to multiple nonlinear constraints:

$$\max\; R_s = F(r, n) \tag{31}$$

$$\text{subject to}\quad g(r, n) \le l,\qquad 0 \le r_i \le 1,\quad n_i \in \mathbb{Z}^{+},\quad 1 \le i \le m \tag{32}$$

where Rs is the system reliability, and F(·) and g(·) are the objective and constraint functions of the RRAO problem for the overall parallel-series system, respectively. The constraint function g(·) is usually associated with system cost, volume and weight. r = (r1, r2, … , rm) and n = (n1, n2, … , nm) are the component-reliability and redundancy-allocation vectors of a system with m subsystems, and l is the system resource limit.

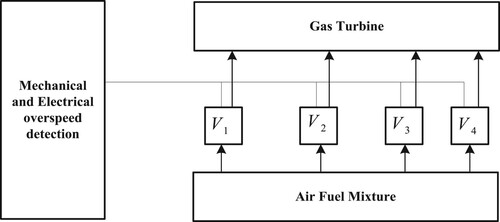

4.3.1. A real-world example: maximizing the reliability of the over-speed protection system of a gas turbine

Over-speed detection plays an important role in mechanical and electrical systems. When over-speed occurs, the fuel supply must be shut off using control valves (V1 to V4). Figure 11 shows the over-speed protection system of a gas turbine, which is formulated as a mixed-integer nonlinear RRAO problem. The input parameters of the system are summarized in Table 23 (T.-C. Chen, Citation2006).

Figure 11. The diagram block for the over-speed protection system of a gas turbine (T.-C. Chen, Citation2006; Ghavidel et al., Citation2018).

Table 23. Data of the fourth test system (T.-C. Chen, Citation2006; Ghavidel et al., Citation2018).

This reliability optimization (maximization) problem can be formulated as follows:

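$$\max\; R_s(r, n) = \prod_{d=1}^{4}\left[1 - (1 - r_d)^{n_d}\right] \tag{33}$$

The system constraints include:

The combination of weight, volume and redundancy allocation constraints:

$$g_1(r, n) = \sum_{d=1}^{4} v_d\, n_d^{2} - V \le 0 \tag{34}$$

where $v_d$ is the volume of each component in the dth subsystem and V is the upper volume limit of the system.

The system cost constraint:

$$g_2(r, n) = \sum_{d=1}^{4} C(r_d)\left[n_d + \exp\!\left(\frac{n_d}{4}\right)\right] - C \le 0,\qquad C(r_d) = \alpha_d\left(\frac{-T}{\ln r_d}\right)^{\beta_d} \tag{35}$$

where C is the upper cost limit of the system, $C(r_d)$ is the cost of each component with reliability $r_d$ in the dth subsystem, $\alpha_d$ and $\beta_d$ are the cost parameters of that subsystem, and T is the operating time during which the components must work.

The system weight constraint:

$$g_3(r, n) = \sum_{d=1}^{4} w_d\, n_d \exp\!\left(\frac{n_d}{4}\right) - W \le 0 \tag{36}$$

where $w_d$ is the weight of each component in the dth subsystem and W is the upper weight limit of the system.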
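The over-speed model combines a series-parallel reliability function with volume, cost and weight constraints. The sketch below encodes the standard form of this benchmark: a product over subsystems of 1 − (1 − r_d)^(n_d), with component cost α_d(−T/ln r_d)^β_d. The numerical data of Table 23 are not reproduced here, so all data are passed in as arguments (the values in the tests are toy numbers), and the helper `decode`, which rounds the redundancy numbers of a continuous search vector to integers, is a hypothetical encoding choice rather than the paper's method.

```python
import math
from typing import List, Sequence, Tuple


def system_reliability(r: Sequence[float], n: Sequence[int]) -> float:
    """Subsystems in series, each with n_d identical components of reliability r_d in parallel."""
    rs = 1.0
    for r_d, n_d in zip(r, n):
        rs *= 1.0 - (1.0 - r_d) ** n_d
    return rs


def component_cost(r_d: float, alpha_d: float, beta_d: float, T: float) -> float:
    """C(r_d) = alpha_d * (-T / ln r_d) ** beta_d; the cost grows quickly as r_d approaches 1."""
    return alpha_d * (-T / math.log(r_d)) ** beta_d


def overspeed_constraints(r, n, v, w, alpha, beta, V, C, W, T) -> List[float]:
    """Volume, cost and weight constraints, each written as g(r, n) <= 0."""
    g_volume = sum(v_d * n_d ** 2 for v_d, n_d in zip(v, n)) - V
    g_cost = sum(
        component_cost(r_d, a_d, b_d, T) * (n_d + math.exp(n_d / 4.0))
        for r_d, n_d, a_d, b_d in zip(r, n, alpha, beta)
    ) - C
    g_weight = sum(w_d * n_d * math.exp(n_d / 4.0) for w_d, n_d in zip(w, n)) - W
    return [g_volume, g_cost, g_weight]


def decode(x: Sequence[float], m: int = 4) -> Tuple[List[float], List[int]]:
    """Split a continuous vector X = [r, n] and round the redundancy numbers to integers >= 1."""
    r = list(x[:m])
    n = [max(1, int(round(v))) for v in x[m:2 * m]]
    return r, n
```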

Table 24. Comparison of the best results obtained by CSBO with some previously reported results.

4.4. Discussion and future prospects

The proposed CSBO algorithm has been compared with other well-known nature-inspired algorithms on the CEC 2005, CEC 2014 and CEC 2017 benchmarks and on five popular real-world engineering problems. The statistical analysis of the benchmark results shows that CSBO produces promising and competitive results. CSBO performs well in both exploration and exploitation on real-parameter unimodal functions and on (shifted) multimodal and expanded multimodal functions. The results on the real-parameter hybrid and composition functions further show that CSBO strikes an appropriate balance between exploration and exploitation. In addition, CSBO's mean results and standard deviations are comparable to those of the other optimization methods, and the convergence-speed comparisons demonstrate its fast convergence. In the future, it would be interesting to apply CSBO to optimization problems in other areas of science and engineering. Several directions can be suggested for future work. First, the influence of various spirals could be investigated. Second, a binary version of CSBO could be developed. Third, operators could be designed to enable CSBO to solve multi-objective problems. Finally, further research on the NR parameter could allow its value to be set automatically rather than by the user.

5. Conclusion

In this article, we presented a new meta-heuristic optimization algorithm, named Circulatory System Based Optimization (CSBO), inspired by the function of the circulatory system in the human body. The mathematical model of CSBO and its performance as an optimizer were presented. CSBO was tested on a wide variety of complex real-world functions and compared with many well-known optimization algorithms. Various test functions, including the unimodal, multimodal, hybrid, and composition benchmarks of CEC 2005, CEC 2014 and CEC 2017, were used to evaluate the proposed algorithm. The results showed that CSBO performed better than the state-of-the-art algorithms in terms of exploration, exploitation, local optima avoidance, and convergence behavior. A dimensional scalability analysis was also conducted for CSBO on the 30- and 50-dimensional CEC 2014 functions, and the results indicated that CSBO can efficiently search the feasible space to find optimal or near-optimal solutions. Finally, CSBO was applied to several practical engineering problems: the tension/compression spring design, the three-bar truss design, the pressure vessel design, parameter estimation for FM sound waves, and reliability-redundancy allocation optimization. The simulation results indicated that CSBO is competitive and robust on these design problems compared with many modern and advanced optimization algorithms from the recent literature. Therefore, CSBO can be considered a modern and robust algorithm for future studies and optimization applications.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abdechiri, M., Meybodi, M. R., & Bahrami, H. (2013). Gases Brownian motion optimization: An algorithm for optimization (GBMO). Applied Soft Computing, 13(5), 2932–2946. https://doi.org/10.1016/j.asoc.2012.03.068

- Abdollahzadeh, B., Soleimanian Gharehchopogh, F., & Mirjalili, S. (2021). Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. International Journal of Intelligent Systems, 36(10), 5887–5958. https://doi.org/10.1002/int.22535

- Abdullah, J. M., & Ahmed, T. (2019). Fitness dependent optimizer: Inspired by the bee swarming reproductive process. IEEE Access, 7, 43473–43486. https://doi.org/10.1109/ACCESS.2019.2907012

- Abedinpourshotorban, H., Shamsuddin, S. M., Beheshti, Z., & Jawawi, D. N. A. (2016). Electromagnetic field optimization: A physics-inspired metaheuristic optimization algorithm. Swarm and Evolutionary Computation, 26, 8–22. https://doi.org/10.1016/j.swevo.2015.07.002

- Abualigah, L., Yousri, D., Abd Elaziz, M., Ewees, A. A., Al-Qaness, M. A., & Gandomi, A. H. (2021). Aquila optimizer: A novel meta-heuristic optimization algorithm. Computers & Industrial Engineering, 157, 107250. https://doi.org/10.1016/j.cie.2021.107250

- Afonso, L. D., Mariani, V. C., & dos Santos Coelho, L. (2013). Modified imperialist competitive algorithm based on attraction and repulsion concepts for reliability-redundancy optimization. Expert Systems with Applications, 40(9), 3794–3802. https://doi.org/10.1016/j.eswa.2012.12.093

- Ahmadianfar, I., Bozorg-Haddad, O., & Chu, X. (2020). Gradient-based optimizer: A new metaheuristic optimization algorithm. Information Sciences, 540, 131–159. https://doi.org/10.1016/j.ins.2020.06.037

- Ahmadianfar, I., Heidari, A. A., Gandomi, A. H., Chu, X., & Chen, H. (2021). RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Systems with Applications, 181, 115079. https://doi.org/10.1016/j.eswa.2021.115079

- Ahmadianfar, I., Heidari, A. A., Noshadian, S., Chen, H., & Gandomi, A. H. (2022). INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Systems with Applications, 116516. https://doi.org/10.1016/j.eswa.2022.116516

- Akay, B., & Karaboga, D. (2012). Artificial bee colony algorithm for large-scale problems and engineering design optimization. Journal of Intelligent Manufacturing, 23(4), 1001–1014. https://doi.org/10.1007/s10845-010-0393-4

- Akyol, S., & Alatas, B. (2017). Plant intelligence based metaheuristic optimization algorithms. Artificial Intelligence Review, 47(4), 417–462. https://doi.org/10.1007/s10462-016-9486-6

- Alatas, B. (2011). ACROA: Artificial chemical reaction optimization algorithm for global optimization. Expert Systems with Applications, 38(10), 13170–13180. https://doi.org/10.1016/j.eswa.2011.04.126

- Alsalibi, B., Abualigah, L., & Khader, A. T. (2021). A novel bat algorithm with dynamic membrane structure for optimization problems. Applied Intelligence, 51(4), 1992–2017. https://doi.org/10.1007/s10489-020-01898-8

- Alsattar, H. A., Zaidan, A. A., & Zaidan, B. B. (2020). Novel meta-heuristic bald eagle search optimisation algorithm. Artificial Intelligence Review, 53(3), 2237–2264. https://doi.org/10.1007/s10462-019-09732-5

- Arora, J. (2004). Introduction to optimum design. Elsevier.

- Askari, Q., Younas, I., & Saeed, M. (2020). Political optimizer: A novel socio-inspired meta-heuristic for global optimization. Knowledge-Based Systems, 195, 105709. https://doi.org/10.1016/j.knosys.2020.105709

- Askarzadeh, A. (2016). A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Computers & Structures, 169, 1–12. https://doi.org/10.1016/j.compstruc.2016.03.001

- Atashpaz-Gargari, E., & Lucas, C. (2007). Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In 2007 IEEE congress on evolutionary computation (pp. 4661–4667). IEEE. https://doi.org/10.1109/CEC.2007.4425083

- Aydilek, I. B. (2018). A hybrid firefly and particle swarm optimization algorithm for computationally expensive numerical problems. Applied Soft Computing, 66, 232–249. https://doi.org/10.1016/j.asoc.2018.02.025

- Baykasoğlu, A., & Akpinar, Ş. (2017). Weighted superposition attraction (WSA): A swarm intelligence algorithm for optimization problems – Part 1: Unconstrained optimization. Applied Soft Computing, 56, 520–540. https://doi.org/10.1016/j.asoc.2015.10.036

- Bayraktar, Z., Komurcu, M., Bossard, J. A., & Werner, D. H. (2013). The wind driven optimization technique and its application in electromagnetics. IEEE Transactions on Antennas and Propagation, 61(5), 2745–2757. https://doi.org/10.1109/TAP.2013.2238654

- Becerra, R. L., & Coello, C. A. C. (2006). Cultured differential evolution for constrained optimization. Computer Methods in Applied Mechanics and Engineering, 195(33–36), 4303–4322. https://doi.org/10.1016/j.cma.2005.09.006

- Braik, M. S. (2021). Chameleon swarm algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Systems with Applications, 174, 114685. https://doi.org/10.1016/j.eswa.2021.114685

- Brajevic, I. (2015). Crossover-based artificial bee colony algorithm for constrained optimization problems. Neural Computing and Applications, 26(7), 1587–1601. https://doi.org/10.1007/s00521-015-1826-y

- Brajevic, I., & Tuba, M. (2013). An upgraded artificial bee colony (ABC) algorithm for constrained optimization problems. Journal of Intelligent Manufacturing, 24(4), 729–740. https://doi.org/10.1007/s10845-011-0621-6

- Brajevic, I., Tuba, M., & Subotic, M. (2011). Performance of the improved artificial bee colony algorithm on standard engineering constrained problems. International Journal of Mathematics and Computers in Simulation, 5(2), 135–143.

- Chen, T.-C. (2006). IAs based approach for reliability redundancy allocation problems. Applied Mathematics and Computation, 182(2), 1556–1567. https://doi.org/10.1016/j.amc.2006.05.044

- Chen, X., Tianfield, H., Mei, C., Du, W., & Liu, G. (2017). Biogeography-based learning particle swarm optimization. Soft Computing, 21(24), 7519–7541. https://doi.org/10.1007/s00500-016-2307-7

- Chen, X., Xu, B., Yu, K., & Du, W. (2018). Teaching-learning-based optimization with learning enthusiasm mechanism and its application in chemical engineering. Journal of Applied Mathematics, 2018, 1–19. https://doi.org/10.1155/2018/1806947

- Cheng, L., Wu, X., & Wang, Y. (2018). Artificial flora (AF) optimization algorithm. Applied Sciences, 8(3), 329. https://doi.org/10.3390/app8030329

- Cheng, R., & Jin, Y. (2015). A social learning particle swarm optimization algorithm for scalable optimization. Information Sciences, 291, 43–60. https://doi.org/10.1016/j.ins.2014.08.039

- Cheraghalipour, A., Hajiaghaei-Keshteli, M., & Paydar, M. M. (2018). Tree growth algorithm (TGA): A novel approach for solving optimization problems. Engineering Applications of Artificial Intelligence, 72, 393–414. https://doi.org/10.1016/j.engappai.2018.04.021

- Coello, C. A. C., & Montes, E. M. (2002). Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Advanced Engineering Informatics, 16(3), 193–203. https://doi.org/10.1016/S1474-0346(02)00011-3

- Dai, C., Hu, Z., Li, Z., Xiong, Z., & Su, Q. (2020). An improved grey prediction evolution algorithm based on topological opposition-based learning. IEEE Access, 8, 30745–30762. https://doi.org/10.1109/ACCESS.2020.2973197

- Das, S., & Suganthan, P. N. (2010). Problem definitions and evaluation criteria for CEC 2011 competition on testing evolutionary algorithms on real world optimization problems. Jadavpur University, Nanyang Technological University, Kolkata, 341–359.

- de Melo, V. V., & Carosio, G. L. C. (2012). Evaluating differential evolution with penalty function to solve constrained engineering problems. Expert Systems with Applications, 39(9), 7860–7863. https://doi.org/10.1016/j.eswa.2012.01.123

- Dhadwal, M. K., Jung, S. N., & Kim, C. J. (2014). Advanced particle swarm assisted genetic algorithm for constrained optimization problems. Computational Optimization and Applications, 58(3), 781–806. https://doi.org/10.1007/s10589-014-9637-0

- Dhiman, G., Garg, M., Nagar, A., Kumar, V., & Dehghani, M. (2021). A novel algorithm for global optimization: Rat swarm optimizer. Journal of Ambient Intelligence and Humanized Computing, 12(8), 8457–8482. https://doi.org/10.1007/s12652-020-02580-0

- Dhiman, G., & Kumar, V. (2018). Emperor penguin optimizer: A bio-inspired algorithm for engineering problems. Knowledge-Based Systems, 159, 20–50. https://doi.org/10.1016/j.knosys.2018.06.001

- Dorigo, M., & di Caro, G. (1999). Ant colony optimization: A new meta-heuristic. In Proceedings of the 1999 congress on evolutionary computation-CEC99 (Cat. No. 99TH8406) (Vol. 2, pp. 1470–1477). IEEE.

- dos Santos Coelho, L. (2010). Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Systems with Applications, 37(2), 1676–1683. https://doi.org/10.1016/j.eswa.2009.06.044

- Eberhart, R., & Kennedy, J. (1995). A new optimizer using particle swarm theory. In MHS'95: Proceedings of the sixth international symposium on micro machine and human science (pp. 39–43). IEEE.

- Elsisi, M. (2019). Future search algorithm for optimization. Evolutionary Intelligence, 12(1), 21–31. https://doi.org/10.1007/s12065-018-0172-2

- Eskandar, H., Sadollah, A., Bahreininejad, A., & Hamdi, M. (2012). Water cycle algorithm – A novel metaheuristic optimization method for solving constrained engineering optimization problems. Computers & Structures, 110–111, 151–166. https://doi.org/10.1016/j.compstruc.2012.07.010

- Fan, Y., Jiang, W., Zou, Y., Li, J., Chen, J., & Deng, X. (2009). Numerical simulation of pulsatile non-Newtonian flow in the carotid artery bifurcation. Acta Mechanica Sinica, 25(2), 249–255. https://doi.org/10.1007/s10409-009-0227-9

- Faramarzi, A., Heidarinejad, M., Stephens, B., & Mirjalili, S. (2020). Equilibrium optimizer: A novel optimization algorithm. Knowledge-Based Systems, 191, 105190. https://doi.org/10.1016/j.knosys.2019.105190

- Feng, Z., Niu, W., & Liu, S. (2021). Cooperation search algorithm: A novel metaheuristic evolutionary intelligence algorithm for numerical optimization and engineering optimization problems. Applied Soft Computing, 98, 106734. https://doi.org/10.1016/j.asoc.2020.106734

- Gablonsky, J. M., & Kelley, C. T. (2001). A locally-biased form of the DIRECT algorithm. Journal of Global Optimization, 21(1), 27–37. https://doi.org/10.1023/A:1017930332101

- Gandomi, A. H., Yang, X.-S., & Alavi, A. H. (2013). Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Engineering with Computers, 29(1), 17–35. https://doi.org/10.1007/s00366-011-0241-y

- Gandomi, A. H., Yang, X.-S., Alavi, A. H., & Talatahari, S. (2013). Bat algorithm for constrained optimization tasks. Neural Computing and Applications, 22(6), 1239–1255. https://doi.org/10.1007/s00521-012-1028-9

- Gao, W.-F., Yen, G. G., & Liu, S.-Y. (2014). A dual-population differential evolution with coevolution for constrained optimization. IEEE Transactions on Cybernetics, 45(5), 1108–1121. https://doi.org/10.1109/TCYB.2014.2345478

- GhaemiDizaji, M., Dadkhah, C., & Leung, H. (2020). OHDA: An opposition based high dimensional optimization algorithm. Applied Soft Computing, 91, 106185. https://doi.org/10.1016/j.asoc.2020.106185

- Ghafil, H. N., & Jármai, K. (2020). Dynamic differential annealed optimization: New metaheuristic optimization algorithm for engineering applications. Applied Soft Computing, 93, 106392. https://doi.org/10.1016/j.asoc.2020.106392

- Ghasemi, M., Akbari, E., Rahimnejad, A., Razavi, S. E., Ghavidel, S., & Li, L. (2019). Phasor particle swarm optimization: A simple and efficient variant of PSO. Soft Computing, 23(19), 9701–9718. https://doi.org/10.1007/s00500-018-3536-8