ABSTRACT

It is not yet clear which teaching methods are most effective for improving critical thinking (CT) skills, especially the ability to avoid biased reasoning. Two experiments (laboratory: N = 85; classroom: N = 117) investigated the effect of practice schedule (interleaved/blocked) on students’ learning and transfer of unbiased reasoning, and whether this effect interacts with practice-task format (worked examples/problems). After receiving CT-instructions, participants practiced in: (1) a blocked schedule with worked examples, (2) an interleaved schedule with worked examples, (3) a blocked schedule with problems, or (4) an interleaved schedule with problems. In both experiments, learning outcomes improved after instruction/practice. Surprisingly, there were no indications that interleaved practice led to better learning/transfer than blocked practice, irrespective of task format. The practice-task format did matter for novices’ learning: worked examples were more effective than low-assistance practice problems, which demonstrates, for the first time, that the worked-example effect also applies to novices’ learning to avoid biased reasoning.

Every day, we make many decisions based on previous experiences and existing knowledge. This happens almost automatically, as we rely on a number of heuristics (i.e. mental shortcuts) that ease reasoning processes (Tversky & Kahneman, 1974). Heuristic reasoning is typically useful, especially in routine situations. But it can also produce systematic deviations from rational norms (i.e. biases; Kahneman & Tversky, 1972, 1973; Tversky & Kahneman, 1974) with far-reaching consequences, particularly in the complex professional environments in which the majority of higher education graduates are employed (e.g. medicine: Ajayi & Okudo, 2016; Elia et al., 2016; Mamede et al., 2010; law: Koehler et al., 2002). Our primary tool for avoiding bias in reasoning and decision-making (hereafter referred to as unbiased reasoning; e.g. Flores et al., 2012; West et al., 2008) is critical thinking (CT). CT-skills are key to effective communication, problem solving, and decision-making in both daily life and professional environments (e.g. Billings & Roberts, 2014; Darling-Hammond, 2010; Kuhn, 2005). Consequently, people who have difficulty with CT are more susceptible to making illogical and biased decisions that can have serious consequences. Given the importance of CT for successful functioning in today’s society, it is worrying that many students struggle with several aspects of CT. It is therefore not surprising that helping students to become critically thinking professionals is a major aim of higher education. However, it is not yet clear which teaching methods are most effective, especially for establishing transfer (e.g. Van Peppen et al., 2018; Heijltjes et al., 2014a, 2014b, 2015), that is, the ability to apply acquired knowledge and skills in new situations (Halpern, 1998; Perkins & Salomon, 1992).

Contextual interference in instruction

According to the contextual interference effect, greater transfer is established when materials are presented and learned under conditions of high contextual interference (Schneider et al., 2002). High contextual interference can be created by varying practice-tasks from trial to trial (e.g. Battig, 1978). This task variability induces reflection on the procedures to be used and can help learners to recognise distinctive characteristics of different problem types (i.e. inter-task comparison) and to develop more elaborate cognitive schemata. These schemata, in turn, support selecting and using a learned procedure when solving similar problems (evidencing learning) and new problems (evidencing transfer; Barreiros et al., 2007; Moxley, 1979).

High contextual interference can be achieved by interleaved practice as opposed to blocked practice. Whereas blocked practice involves practicing one task category at a time before moving on to the next (e.g. AAABBBCCC), interleaved practice mixes practice of several categories together (e.g. ABCBACBCA). To illustrate, a blocked schedule of mathematics tasks first offers practice tasks on volumes of cubes and thereafter practice tasks on volumes of cylinders, whereas an interleaved schedule offers a mix of practice tasks on volumes of cubes and cylinders. It has been suggested that reflection on the procedures to be used is what causes the beneficial effect of interleaved practice (e.g. Barreiros et al., 2007; Rau et al., 2010). Therefore, distinctiveness between task categories should be high enough to signal which strategy is required, but not so high that learners immediately recognise which procedure to apply. Additionally, Sequential Attention Theory (Carvalho & Goldstone, 2019) states that an interleaved schedule highlights differences between items, whereas a blocked schedule highlights similarities between items. Thus, interleaved practice is assumed to be beneficial when differences between categories are crucial for acquiring the category structure. Hence, for beneficial effects of interleaved practice to occur, distinctiveness between categories should be high, whereas distinctiveness within task categories should be low (Zulkiply & Burt, 2013).

Research on interleaved practice has frequently demonstrated positive learning effects (for a recent meta-analysis, see Brunmair & Richter, 2019), for example in laboratory studies with troubleshooting tasks (De Croock et al., 1998; De Croock & van Merriënboer, 2007; Van Merriënboer et al., 1997, 2002), drawing tasks (Albaret & Thon, 1998), foreign language learning (Abel & Roediger, 2017; Carpenter & Mueller, 2013; Schneider et al., 2002), category induction tasks (Kornell & Bjork, 2008; Sana et al., 2018; Wahlheim et al., 2011), and learning of logical rules (Schneider et al., 1995). Furthermore, several classroom experiments found positive effects of interleaved practice in mathematics learning (e.g. Rau et al., 2013; Rohrer et al., 2014, 2015, 2019) and in astronomy learning (Richland et al., 2005).

The effect of interleaved practice on performance on reasoning tasks has received scant attention in the literature. However, it has been demonstrated with complex judgment tasks that interleaved practice enhanced not only learning but also transfer performance (Helsdingen et al., 2011a, 2011b). In these tasks, participants had to identify relevant cues in case descriptions (e.g. of crimes) to estimate priorities of urgency for the police. Although this type of task differs from the tasks typically used to assess unbiased reasoning (i.e. “heuristics-and-biases tasks”, which we describe in the Materials subsection), both rely on evaluating and interpreting the available information to make appropriate judgments. As such, interleaved practice may have similar effects on learning and transfer of unbiased reasoning.

It is important to note, however, that interleaved practice is usually more cognitively demanding than blocked practice; that is, it places a higher demand on limited working memory resources. Given that it also usually results in better (long-term) learning, interleaved practice seems to impose germane cognitive load (Sweller et al., 2011), or to create “desirable difficulties” (Bjork, 1994). Desirable difficulties are techniques that are effortful during learning and may seem to temporarily hold back performance gains, but that benefit long-term performance. Nevertheless, there is a risk that learners, and especially novices, will experience excessively high cognitive load when engaging in interleaved practice, which may hinder learning because it leaves the learner unable to process and compare all relevant information across tasks (Paas & Van Merriënboer, 1994). Using a practice-task format that reduces unnecessary cognitive load, such as worked examples (i.e. step-by-step demonstrations of the problem solution; Paas et al., 2003; Renkl, 2014; Sweller, 1988; Van Gog et al., 2019; Van Gog & Rummel, 2010), may help novices benefit from high contextual interference. The high level of guidance provided by worked examples allows learners to devote attention to the processes stimulated by interleaved practice that are directly relevant for learning. As such, learners can use the freed-up cognitive capacity to reflect on the procedures to be used and to develop cognitive schemata that support selecting and using a learned procedure when solving similar and novel problems (Kalyuga, 2011; Renkl, 2014). Paas and Van Merriënboer (1994) indeed found that high variability during practice produced transfer test performance benefits (geometrical problem solving) when students studied worked examples, but not when they solved practice problems. Moreover, students who had studied worked examples reported investing less mental effort in solving the transfer tasks than did students who had solved practice problems.

The present study

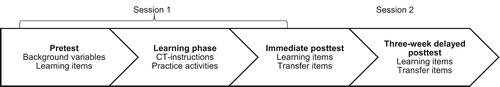

The aim of the present study was to investigate whether interleaved practice with heuristics-and-biases tasks (e.g. Tversky & Kahneman, 1974) would affect experienced cognitive load, learning outcomes, and transfer performance, and whether this effect would interact with the format of the practice-tasks (i.e. worked examples or practice problems). We simultaneously conducted two experiments: Experiment 1 was conducted in a laboratory setting with university students, and Experiment 2 served as a conceptual replication in a real classroom setting with students of a university of applied sciences.Footnote1 Participants received instructions on CT and heuristics-and-biases tasks, followed by practice with these tasks. Figure 1 displays an overview of the study design: performance was measured on practiced tasks (learning) and non-practiced tasks (transfer), on a pretest, an immediate posttest, and a delayed posttest (two weeks later).

Figure 1. Overview of the study design. The four conditions differed in practice activities during the learning phase.

In line with previous findings (Van Peppen et al., 2018, submitted; Heijltjes et al., 2014a, 2014b, 2015), we hypothesised that students would benefit from the CT-instructions and practice activities, as evidenced by pretest to immediate posttest gains in performance on practiced items (i.e. learning; Hypothesis 1). Regarding our main question (see the schematic overview in Table 1), we expected a main effect of interleaved practice: interleaved practice would require more effort during the practice phase (Hypothesis 2), but would also lead to larger performance gains on practiced items (i.e. learning; Hypothesis 3a) and higher performance on non-practiced items (i.e. transfer; Hypothesis 3b) than blocked practice. We also expected a main effect of practice-task format: in line with the worked-example effect, we expected that studying worked examples would be less effortful during the practice phase (Hypothesis 4) and would lead to larger performance gains on practiced items (i.e. learning; Hypothesis 5a) and higher performance on non-practiced items (i.e. transfer; Hypothesis 5b) than solving problems. Finally, we expected an interaction effect, such that the beneficial effect of interleaved practice would be larger with worked examples than with practice problems, on both practiced (i.e. learning; Hypothesis 6a) and non-practiced (i.e. transfer; Hypothesis 6b) items. We also included a delayed posttest (two weeks later), on which we expected these effects (Hypotheses 1–6) to persist. Because the effects of generative processing (relative to non-generative learning strategies; Dunlosky et al., 2013), and of interleaved practice specifically (Rohrer et al., 2015), sometimes increase over time, they may be even greater after a delay.

Table 1. Schematic overview of Hypotheses 2–6.

Although we had no specific expectations, the mental effort data collected during the tests can provide additional insight into the effects of interleaved practice and worked examples on learning (Questions 7a/8a) and transfer (Questions 7b/8b). As people gain expertise, they can often attain an equal level of performance with less effort, or a higher level of performance with equal effort. As such, a decrease in the effort invested in instructed and practiced test items (without a loss in performance) would indicate higher cognitive efficiency (Hoffman & Schraw, 2010; Van Gog & Paas, 2008).Footnote2
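One common way to combine performance and effort into a single efficiency score is the instructional-efficiency measure discussed by Van Gog and Paas (2008), E = (zP − zE)/√2, where zP and zE are standardised performance and effort scores. The sketch below illustrates that measure under our own assumptions (function names, standardising with the population SD, hypothetical data); it is not necessarily the operationalisation used in this study.

```python
import math
from statistics import mean, pstdev

def z_scores(values: list[float]) -> list[float]:
    """Standardise values against their own mean and population SD."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

def efficiency(performance: list[float], effort: list[float]) -> list[float]:
    """Efficiency E = (zP - zE) / sqrt(2) per participant.

    Positive E: more performance for the effort invested than average;
    negative E: less performance for the effort invested than average.
    """
    zp, ze = z_scores(performance), z_scores(effort)
    return [(p - e) / math.sqrt(2) for p, e in zip(zp, ze)]

# Hypothetical posttest % scores and mean effort ratings (1-9 scale)
performance = [60.0, 60.0, 80.0]
effort = [5.0, 3.0, 5.0]
print([round(e, 2) for e in efficiency(performance, effort)])
```

In this toy data, the first two participants perform equally, but the second invests less effort and therefore receives the higher efficiency score, which is the "equal performance with less effort" pattern mentioned above.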

Experiment 1

Materials and methods

We created an Open Science Framework (OSF) page for this project, where detailed descriptions of the experimental design and procedures are provided and where all data and materials (in Dutch) can be found (osf.io/a9czu).

Participants

Participants were 112 first-year Psychology students of a Dutch university. Of these, 104 students (93%) were present at both experimental sessions (see the Procedure subsection), and only their data were analysed. Participants were excluded from the analyses when test or practice sessions were not completed or when instructions were not adhered to, i.e. when more than half of the practice tasks were not read seriously. Because fast readers can read no more than 350 words per minute (e.g. Trauzettel-Klosinski & Dietz, 2012), and the words in these tasks additionally require understanding, we assumed that participants who spent less than 0.17 s per word (i.e. 60 s/350 words) on a task did not read it seriously. This criterion excluded more participants from the worked examples conditions than from the practice problems conditions and resulted in a final sample of 85 students (Mage = 19.84, SD = 2.41; 14 males). Based on this sample size, we calculated the power of our analyses using the G*Power software (Faul et al., 2009). The power of Experiment 1, under a fixed alpha level of .05 and with a correlation between measures of .3 (e.g. Van Peppen et al., 2018), is estimated at .24 for detecting a small interaction effect (η²p = .01), .96 for a medium interaction effect (η²p = .06), and > .99 for a large interaction effect (η²p = .14). Thus, the power of our experiment should be sufficient to pick up medium-sized interaction effects, which is in line with the moderate overall positive effect of interleaved practice found in previous studies, as indicated in a recent meta-analysis (g = 0.42; Brunmair & Richter, 2019).Footnote3
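The reading-time exclusion criterion can be made concrete with a short sketch. It assumes per-task logs of time-on-task (in seconds) and word counts; the function names and data layout are hypothetical, and it uses the unrounded threshold 60/350 ≈ 0.171 s per word rather than the rounded 0.17 reported in the text.

```python
MAX_READING_RATE_WPM = 350                        # fast-reader ceiling cited in the text
MIN_SECONDS_PER_WORD = 60 / MAX_READING_RATE_WPM  # ~0.171 s per word (unrounded)

def read_seriously(seconds_on_task: float, word_count: int) -> bool:
    """A task counts as read seriously if time per word reaches the threshold."""
    return seconds_on_task / word_count >= MIN_SECONDS_PER_WORD

def is_compliant(practice_tasks: list[tuple[float, int]]) -> bool:
    """Keep a participant unless more than half of the tasks were read too fast.

    practice_tasks holds one (seconds_on_task, word_count) pair per practice task.
    """
    too_fast = sum(not read_seriously(s, w) for s, w in practice_tasks)
    return too_fast <= len(practice_tasks) / 2

# Hypothetical participant: 5 of 9 tasks read at 0.2 s/word, 4 skimmed at 0.05 s/word
tasks = [(40.0, 200)] * 5 + [(10.0, 200)] * 4
print(is_compliant(tasks))  # True
```

With nine practice tasks, "more than half too fast" means five or more, so the participant above (four skimmed tasks) is retained, whereas one with five skimmed tasks would be excluded.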

Design

The experiment consisted of four phases (see Figure 1): pretest, learning phase (CT-instructions plus practice), immediate posttest, and delayed posttest. A 3 × 2 × 2 design was used, with Test Moment (pretest, immediate posttest, and delayed posttest) as within-subjects factor and Practice Schedule (interleaved and blocked) and Practice-task Format (worked examples and practice problems) as between-subjects factors. After completing the pretest on learning items (i.e. items instructed and practiced during the learning phase), participants received instructions and were randomly assigned to one of four practice conditions: (1) Blocked Schedule with Worked Examples Condition (n = 18); (2) Blocked Schedule with Practice Problems Condition (n = 28); (3) Interleaved Schedule with Worked Examples Condition (n = 17); and (4) Interleaved Schedule with Practice Problems Condition (n = 22). Subsequently, participants completed the immediate posttest and, two weeks later, the delayed posttest, each on learning items and on transfer items (i.e. items not instructed or practiced during the learning phase).

Materials

All materials were delivered in a computer-based environment (Qualtrics platform) that was created for this study.

CT-skills tests

The CT-skills pretest consisted of nine classic heuristics-and-biases items across three categories (e.g. West et al., 2008). We refer to these as learning items because (isomorphs of) these items were instructed and practiced during the learning phase (example items in the Appendix): (1) Base-rate items, which measured the tendency to overweight individual-case evidence, that is, specific information (e.g. from personal experience, a single case, or prior beliefs), and to undervalue statistical information (Stanovich et al., 2016; Stanovich & West, 2000; Tversky & Kahneman, 1974); (2) Conjunction items, which measured to what extent the conjunction rule (P(A&B) ≤ P(B)) is neglected; this fundamental rule of probability theory states that the probability of Events A and B both occurring cannot exceed the probability of Event A or Event B occurring alone (adapted from Tversky & Kahneman, 1983); (3) Syllogistic reasoning items, which examined the tendency to be influenced by the believability of a conclusion when evaluating the logical validity of arguments (Evans, 2003). As mentioned previously, for interleaved practice effects to occur, distinctiveness between categories should be high enough to signal which strategy is required but not so high that learners immediately recognise which procedure to apply (see, for example, Brunmair & Richter, 2019; Carvalho & Goldstone, 2019). Therefore, we combined less distinctive task categories (i.e. both requiring only knowledge and rules of statistics: base-rate vs. conjunction) with more distinctive task categories (i.e. requiring knowledge and rules of statistics versus logic: base-rate vs. syllogistic reasoning and conjunction vs. syllogistic reasoning).
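The conjunction rule follows directly from the monotonicity of probability: every outcome in which both A and B occur is also an outcome in which B occurs (and one in which A occurs), so the conjunction can never be more probable than either conjunct. Writing A & B as A ∩ B:

```latex
A \cap B \subseteq B \;\Rightarrow\; P(A \cap B) \le P(B),
\qquad
A \cap B \subseteq A \;\Rightarrow\; P(A \cap B) \le P(A),
\qquad\text{hence}\qquad
P(A \cap B) \le \min\{P(A),\, P(B)\}.
```

Equality P(A ∩ B) = P(B) holds only when B essentially never occurs without A; judging the conjunction to be strictly more probable than one of its conjuncts, as in the classic conjunction fallacy, is never coherent.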

The immediate and delayed posttests contained parallel versions of the nine pretest learning items across the three categories (base-rate, conjunction, and syllogism), designed to be structurally equivalent but with different surface features. To illustrate, an immediate posttest item contained the same wording as the respective pretest item but, for instance, described a different company. In addition, the immediate and delayed posttests contained four transfer items from two task categories that had not been instructed or practiced during the learning phase. The transfer items shared features with the learning items, namely, requiring knowledge and rules of logic (i.e. syllogistic rules) or of statistics (i.e. probability and data interpretation), respectively: (1) Wason selection items, which measured the tendency to confirm a hypothesis rather than to falsify it (adapted from Evans, 2002; Gigerenzer & Hug, 1992); and (2) contingency items, which measured the tendency to weigh the information in a contingency table unequally, based on previously experienced evidence (Heijltjes et al., 2014a; Stanovich & West, 2000; Wasserman et al., 1990).

In the interleaved schedule, all items were presented in random order; in the blocked schedule, the items were presented in random order within the blocks. A multiple-choice (MC) format with different numbers of alternatives per item was used, with only one correct alternative per item, which evidenced unbiased reasoning. The incorrect alternatives were intuitive (and incorrect) responses or the results of incomplete reasoning processes. The surface features (cover stories) of all test items were adapted to the participants’ study domain. All conditions were pilot-tested for difficulty, duration, and representativeness of content (for the study programme) by some students from a university of applied sciences (not partaking in the main experiments). Moreover, several tasks were taken from previous studies conducted in similar contexts (i.e. within an existing CT-course with first-year or second-year students of a university of applied sciences; Heijltjes et al., 2014a, 2014b, 2015) and even within the same study domain (Van Peppen et al., 2018).

CT-instructions

The video-based instruction consisted of a general instruction on CT and explicit instructions on three heuristics-and-biases tasks. In the general instruction, the features of CT and the attitudes and skills that are needed to think critically were described. Thereafter, participants received explicit instructions on how to avoid base-rate fallacies, conjunction fallacies, and biases in syllogistic reasoning. These instructions consisted of a worked example of each category that not only showed the correct line of reasoning but also included possible problem-solving strategies. The worked examples provided solutions to the tasks seen in the pretest, which allowed participants to mentally correct initially erroneous responses.

CT-practice

The CT-practice phase consisted of nine practice tasks, presented in random order, from the three task categories of the pretest and the explicit instructions: base-rate (Br), conjunction (C), and syllogistic reasoning (S). Depending on the assigned condition, participants practiced either in an interleaved schedule (e.g. Br-C-S-C-S-Br-S-Br-C) or in a blocked schedule (e.g. Br-Br-Br-C-C-C-S-S-S), and either with worked examples or with practice problems. Participants in the practice problems conditions were instructed to read the tasks thoroughly and to choose the best answer option. After each task, they received a prompt asking them to explain how they had obtained the answer. They then received feedback indicating whether the given answer was correct or incorrect (i.e. “your answer to this assignment was correct” or “your answer to this assignment was incorrect”). Participants in the worked examples conditions were first told that they would not have to solve the problems themselves, but would receive a worked-out solution to each problem. They were instructed to read each worked-out example thoroughly. The worked examples consisted of a problem statement and a solution to this problem (i.e. the strategy information provided during the CT-instructions was repeated in the worked examples). The line of reasoning and underlying principles were explained in steps, sometimes clarified with a visual representation. The explanations given in the worked examples were based on the explanations from the original literature on the tasks (e.g. “to solve this problem you should … ”) and were rewritten so that they appeared to have been written by another student completing the task (e.g. “to solve this problem, I am … ”). Thus, the worked examples contained more elaborate information than the practice problems.
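The two practice schedules can be sketched as follows. This is a hypothetical illustration, not the authors' Qualtrics configuration: it assumes three tasks per category (as in the example sequences), a fixed Br, C, S block order, and, for the interleaved schedule, an added constraint that no two consecutive tasks share a category (as in the example sequence), enforced by reshuffling.

```python
import random

CATEGORIES = ("Br", "C", "S")  # base-rate, conjunction, syllogistic reasoning
TASKS_PER_CATEGORY = 3         # assumption: 3 x 3 = nine practice tasks

def all_tasks() -> list[tuple[str, int]]:
    """Label each practice task as (category, index within category)."""
    return [(cat, i) for cat in CATEGORIES for i in range(1, TASKS_PER_CATEGORY + 1)]

def blocked_schedule(rng: random.Random) -> list[tuple[str, int]]:
    """Blocked: all tasks of one category before the next, shuffled within each block."""
    schedule = []
    for cat in CATEGORIES:  # assumption: fixed block order Br, C, S
        block = [task for task in all_tasks() if task[0] == cat]
        rng.shuffle(block)
        schedule.extend(block)
    return schedule

def interleaved_schedule(rng: random.Random, max_attempts: int = 10_000) -> list[tuple[str, int]]:
    """Interleaved: all tasks shuffled together, reshuffling until no two
    consecutive tasks share a category (hypothetical constraint)."""
    tasks = all_tasks()
    for _ in range(max_attempts):
        rng.shuffle(tasks)
        if all(a[0] != b[0] for a, b in zip(tasks, tasks[1:])):
            return tasks
    raise RuntimeError("no interleaved order found; increase max_attempts")

rng = random.Random(42)  # seeded so a given schedule can be reproduced
print("-".join(cat for cat, _ in blocked_schedule(rng)))  # Br-Br-Br-C-C-C-S-S-S
print("-".join(cat for cat, _ in interleaved_schedule(rng)))
```

Without the no-repeat constraint, a plain shuffle would occasionally produce same-category runs that partly resemble a blocked schedule. Rejection sampling is a simple way to avoid this; with three categories of three tasks, roughly one shuffle in ten satisfies the constraint, so it terminates quickly.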

Mental effort

Invested mental effort was measured with the subjective rating scale developed by Paas (1992). After each practice task and each test item, participants reported how much mental effort they had invested in completing that task or item on a 9-point scale ranging from (1) very, very low effort to (9) very, very high effort.

Procedure

The study was run in two sessions, both of which took place in the university’s computer lab. Participants signed an informed consent form at the start of the experiment. Before participants arrived, an A4 sheet was placed in each cubicle (one participant per cubicle) containing some general rules and a link to the Qualtrics environment of session 1, where all materials were delivered. Participants could work at their own pace, and time-on-task was logged during all phases. Furthermore, participants were allowed to use scrap paper during the practice phase and the CT-tests.

In session 1 (ca. 75 min), participants first filled out a demographic questionnaire and then completed the pretest, indicating after each test item how much mental effort they had invested in it. Subsequently, participants entered the learning phase, in which they first viewed the video (10 min), including the general CT-instruction and the explicit instructions. Thereafter, the Qualtrics programme randomly assigned the participants to one of the four practice conditions. After each practice task, participants rated how much mental effort they had invested. After the learning phase, participants completed the immediate posttest and again rated their invested mental effort after each test item. The second session took place two weeks later and lasted ca. 20 min. Participants again received an A4 sheet containing some general rules and a link to the Qualtrics environment of session 2. This time, participants completed the delayed posttest and again rated their mental effort after each test item. One experimenter (the first or third author of this paper) was present during all phases of the experiment.

Data analysis

Of the nine learning items of the CT-skills tests, seven were MC-only questions (with more than two alternatives) and two were MC-plus-motivation questions (with two MC alternatives; one conjunction and one base-rate item), in which participants also had to explain their answer to prevent them from guessing. The transfer items consisted of two MC-only and two MC-plus-motivation questions (the two contingency items). Performance on the pretest, immediate posttest, and delayed posttest was scored by assigning 1 point to each correct alternative (i.e. the one reflecting unbiased reasoning) on the MC-only questions. For items with only two MC alternatives, scoring was based on the explanation provided, so that no points were assigned for correct guesses. For these MC-plus-motivation questions, participants could earn 1 point for a correct explanation, 0.5 point for a partially correct explanation,Footnote4 and 0 points for an incorrect explanation (score form developed by the first author). As a result, participants could earn a maximum score of 9 on the learning items and a maximum total score of 4 on the transfer items. Two raters, blind to student identity and condition, independently scored 25% of the explanations on the open questions of the immediate posttest. The intra-class correlation coefficient was .991 for the learning test items and .986 for the transfer test items. Because of this high inter-rater reliability, the remainder of the tests was scored by one rater (the first author), and this rater’s scores were used in the analyses.
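The scoring rules can be summarised in a short sketch. The function names, the explanation labels, and the example responses are hypothetical. Only the point values (1 for a correct MC-only answer; 1, 0.5, or 0 for a correct, partially correct, or incorrect explanation) and the maximum scores of 9 and 4 come from the text.

```python
EXPLANATION_POINTS = {"correct": 1.0, "partial": 0.5, "incorrect": 0.0}

def score_mc_only(chose_unbiased_alternative: bool) -> float:
    """MC-only items: 1 point for the alternative reflecting unbiased reasoning."""
    return 1.0 if chose_unbiased_alternative else 0.0

def score_mc_plus_motivation(explanation_rating: str) -> float:
    """Two-alternative items are scored on the explanation only,
    so a correct guess without a correct explanation earns nothing."""
    return EXPLANATION_POINTS[explanation_rating]

def percentage_score(item_scores: list[float], max_score: float) -> float:
    """Convert a total score into a percentage of the maximum score."""
    return 100 * sum(item_scores) / max_score

# Hypothetical learning-item responses: 7 MC-only items + 2 MC-plus-motivation items
learning_scores = [score_mc_only(c) for c in [True, True, False, True, False, True, True]]
learning_scores += [score_mc_plus_motivation("partial"), score_mc_plus_motivation("correct")]
print(round(percentage_score(learning_scores, max_score=9), 1))  # 72.2
```

Converting to percentages lets learning (maximum 9) and transfer (maximum 4) scores be placed on a common scale, although, as the text notes, the different maxima still warrant caution when comparing them.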

For comparability, we computed percentage scores on the learning and transfer items instead of total scores. Even with percentage scores, however, caution is warranted in interpreting differences between learning and transfer outcomes because the maximum scores differed. The mean score on the posttest learning items was 59.9% (SD = 20.22), and the reliability of these items (Cronbach’s alpha) was .24 on the pretest, .57 on the immediate posttest, and .51 on the delayed posttest. The low reliability on the pretest might be explained by the fact that a lack of prior knowledge forces participants to guess answers, which keeps inter-item correlations low and thereby results in a low Cronbach’s alpha. Moreover, these reliabilities should be interpreted with caution because samples of the size used in studies like this one do not seem to produce sufficiently precise alpha coefficients (e.g. Charter, 2003). The mean score on the posttest transfer items was 36.2% (SD = 22.31). Reliability of these items was low (Cronbach’s alpha of .25 on the immediate posttest and .43 on the delayed posttest), which can probably be partly explained by floor effects on both tests for one of our transfer task categories (i.e. Wason selection). Therefore, we decided not to report the test statistics of the analyses on transfer performance. Descriptive statistics can be found in Tables 2 and 3.
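To see why guessing depresses alpha, recall the standard formula: alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. Because the variance of total scores equals the sum of the item variances plus twice the sum of the inter-item covariances, unrelated items make the two variances nearly equal and push alpha towards zero. A minimal sketch with toy data (not the study's data; the function name is our own):

```python
from statistics import variance

def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a participants x items score matrix."""
    k = len(scores[0])                # number of items
    item_columns = list(zip(*scores)) # transpose: one tuple of scores per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Toy data: items that move together vs. items that are unrelated (e.g. guessed)
consistent = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
unrelated = [[1, 0], [0, 1], [1, 1], [0, 0]]
print(round(cronbach_alpha(consistent), 2))  # 1.0
print(round(cronbach_alpha(unrelated), 2))   # 0.0
```

Sample variances are used throughout; because the same estimator appears in the numerator and denominator, using population variances instead would give the same alpha.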

Table 2. Means (SD) of Test performance (multiple-choice % score) and Invested Mental Effort (1-9) per Condition of Experiment 1.

Table 3. Means (SD) of Test performance per task (max. score 1) per Condition of Experiment 1.

Results

In all analyses reported below, a significance level of .05 was used. Partial eta-squared (η²p) is reported as a measure of effect size for the ANOVAs, for which .01 is considered small, .06 medium, and .14 large (Cohen, 1988). On our OSF project page, we present the intention-to-treat analyses (i.e. including all participants who entered the study), which did not reveal noteworthy differences from the compliant-only analyses reported below (i.e. including all participants who met the criterion of spending more than 0.17 s per word on at least half of the practice tasks).
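For an ANOVA effect with its own error term, partial eta-squared, η²p = SS_effect/(SS_effect + SS_error), can also be recovered from a reported F statistic as η²p = (F × df_effect)/(F × df_effect + df_error). The sketch below (function names are our own) applies that identity together with the Cohen (1988) benchmarks listed above. For example, the pretest to immediate posttest contrast reported below, F(1, 81) = 267.66, gives η²p ≈ .77.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta-squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

def effect_size_label(eta_p2: float) -> str:
    """Benchmarks from the text: .01 small, .06 medium, .14 large (Cohen, 1988)."""
    if eta_p2 >= 0.14:
        return "large"
    if eta_p2 >= 0.06:
        return "medium"
    if eta_p2 >= 0.01:
        return "small"
    return "below small"

eta = partial_eta_squared(267.66, df_effect=1, df_error=81)
print(round(eta, 2), effect_size_label(eta))  # 0.77 large
```

Recomputing η²p this way also offers a quick consistency check on reported degrees of freedom, because the same F value combined with a different df_effect yields a different η²p.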

Check on condition equivalence and time-on-task

Following the drop-out of some participants, we checked whether the conditions were still equivalent. Preliminary analyses confirmed that the conditions did not differ in educational background, χ²(15) = 15.68, p = .403; performance on the pretest, F(3, 81) = 1.68, p = .178; time spent on the pretest, F(3, 81) = 1.75, p = .164; or average mental effort invested in the pretest items, F(3, 81) = 0.78, p = .510. We did find a gender difference between the conditions, χ²(3) = 11.03, p = .012. However, gender did not correlate significantly with learning performance (minimum p = .108) and was therefore unlikely to be a confounding variable.

A 2 (Practice Schedule: interleaved vs. blocked) × 2 (Practice-task Format: worked examples vs. practice problems) factorial ANOVA showed no significant difference in time-on-task during practice between the interleaved and blocked conditions, F(1, 81) = 3.05, p = .085, η²p = .04, but a significant difference between the worked examples conditions (M = 577.48, SE = 37.93) and the practice problems conditions (M = 737.61, SE = 31.96), F(1, 81) = 10.42, p = .002, η²p = .11. This difference would need to be taken into account if the practice problems conditions turned out to outperform the worked examples conditions. No significant interaction between Practice Schedule and Practice-task Format was found, F(1, 81) = 1.00, p = .320, η²p = .01.Footnote5

Performance on learning items

Performance data are presented in Tables 2 and 3, and all omnibus test statistics can be found in Table 4 (statistics of follow-up analyses are presented in the text). A 3 × 2 × 2 mixed ANOVA on the items that assessed learning, with Test Moment (pretest, immediate posttest, and delayed posttest) as within-subjects factor and Practice Schedule (interleaved and blocked) and Practice-task Format (worked examples and practice problems) as between-subjects factors, showed a main effect of Test Moment. In line with Hypothesis 1, repeated contrasts revealed that participants performed better on the immediate posttest (M = 61.07, SE = 2.10) than on the pretest (M = 24.30, SE = 1.46), F(1, 81) = 267.66, p < .001, η²p = .77. There was no significant difference between performance on the immediate and delayed posttest (M = 63.76, SE = 1.93), F(1, 81) = 2.90, p = .092, η²p = .04.

Table 4. Results Mixed ANOVAs Experiment 1.

In contrast to Hypothesis 3a (see Table 1 for a schematic overview of the hypotheses), we found neither a significant main effect of Practice Schedule nor an interaction between Practice Schedule and Test Moment on performance on learning items. However, the analysis did reveal a main effect of Practice-task Format, with worked examples resulting in better performance (M = 54.56, SE = 2.21) than practice problems (M = 44.87, SE = 1.86). This was qualified by an interaction between Practice-task Format and Test Moment: in line with Hypothesis 5a, repeated contrasts revealed a larger pretest to immediate posttest performance gain for worked examples (Mpre = 23.82, SE = 2.23; Mimmediate = 68.66, SE = 3.21) than for practice problems (Mpre = 24.78, SE = 1.88; Mimmediate = 53.48, SE = 2.70), F(1, 81) = 12.90, p = .001, η²p = .14. Contrary to Hypothesis 6a, there was no interaction between Practice Schedule and Practice-task Format, nor an interaction between Practice Schedule, Practice-task Format, and Test Moment.

Mental effort during learning

Mental effort data are presented in and all omnibus test statistics can be found in . Contrary to Hypotheses 2 and 4, respectively, a 2 (Practice Schedule: interleaved and blocked) × 2 (Practice-task Format: worked examples and practice problems) factorial ANOVA on the mental effort during practice data revealed no main effects of Practice Schedule or Practice-task Format. Moreover, no interaction between Practice Schedule and Practice-task Format was found.

Mental effort during test

We analysed the mental effort during test data in an exploratory manner, with a 3 × 2 × 2 mixed ANOVA on mental effort invested in learning items and a 2 × 2 × 2 mixed ANOVA on mental effort invested in transfer items (the latter has only two test moments because transfer items were not included in the pretest). Mental effort data during test are presented in and all test statistics can be found in .

Regarding effort invested in the learning items, there was no main effect of Practice Schedule (Question 7a). However, there was a main effect of Practice-task Format (Question 8a): less effort on learning items was reported in the worked examples conditions (M = 3.57, SE = .13) than in the practice problems conditions (M = 3.92, SE = .11). There was also an interaction effect between Test Moment and Practice-task Format. Repeated contrasts revealed that effort investment increased over time in the practice problems conditions, with a significant difference between the immediate and delayed posttest (Mpretest = 3.74, SE = .11; Mimmediate = 3.89, SE = .14; Mdelayed = 4.14, SE = .13), F(1, 48) = 6.08, p = .017, ηp² = .11, whereas there were no significant differences for the worked examples conditions, F(2, 66) = .38, p = .683, ηp² = .01. The results revealed no main effect of Test Moment and no other interaction effects.

Regarding invested mental effort in the transfer items, the results revealed a main effect of Practice Schedule (Question 7b), with higher effort investment after practice in an interleaved schedule (M = 4.78, SE = .15) than in a blocked schedule (M = 4.33, SE = .14). Furthermore, there was a main effect of Practice-task Format (Question 8b): higher effort investment was reported in the practice problems conditions (M = 4.80, SE = .13) than in the worked examples conditions (M = 4.31, SE = .16). No main effect of Test Moment and no interaction effects were found.

Interim summary

Taken together, there were no indications that interleaved practice – either in itself or as a function of task format – contributed to better learning. However, interleaved practice resulted in higher effort investment on transfer items than blocked practice, which may indicate that interleaved practice stimulated analytical and effortful reasoning (i.e. Type 2 processing, e.g. Stanovich, 2011) more than blocked practice, yet without resulting in replacement of the incorrect intuitive response (i.e. Type 1 processing) with the more analytical correct response. Alternatively, this finding may indicate a lower cognitive efficiency (Hoffman & Schraw, 2010; Van Gog & Paas, 2008) of interleaved practice as opposed to blocked practice. Furthermore, in line with the worked example effect (e.g. Sweller et al., 2011), studying worked examples was more effective for learning than solving problems, as well as more efficient (i.e. higher test performance reached in less practice time and with less mental effort investment during the test phase; Van Gog & Paas, 2008). We will further elaborate on and discuss the findings of Experiment 1 in the General Discussion.
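Cognitive efficiency, mentioned above, is often quantified with the likelihood measure of Paas and Van Merriënboer (1993), which relates standardised performance (zP) to standardised invested effort (zE) as E = (zP − zE)/√2. The experiments reported here do not state that this index was computed, so the following Python sketch is only an illustration under assumptions: the function names and the three participants are hypothetical, and z-scores are taken against the mean and population standard deviation of the whole sample.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardise values against the sample's own mean and population SD."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def efficiency(performance, effort):
    """Likelihood efficiency measure E = (zP - zE) / sqrt(2).

    Positive E: higher performance than expected from the invested effort;
    negative E: lower performance for the effort invested.
    """
    zp = z_scores(performance)
    ze = z_scores(effort)
    return [(p - e) / sqrt(2) for p, e in zip(zp, ze)]


# Hypothetical participants: test score (%) and mean effort rating (1-9).
scores = [60, 70, 80]
efforts = [5, 4, 3]
print([round(e, 2) for e in efficiency(scores, efforts)])  # [-1.73, 0.0, 1.73]
```

A participant with average performance and average effort obtains E = 0; because z-scores are centred, the E values of a sample always sum to zero.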

Experiment 2

We simultaneously conducted a replication experiment in a classroom setting to assess the robustness of our findings and to increase ecological validity. All test and practice items were the same but, if necessary, adapted to the domain of the participants to meet the requirements of the study programme (see for example the conjunction item in the appendix).

Materials and methods

Participants and design

The design of Experiment 2 was the same as that of Experiment 1. Participants were 157 second-year “Safety and Security Management” students from two locations of a Dutch university of applied sciences. Students from the first location (n = 83) had some prior knowledge, as they had participated in a study that included similar heuristics-and-biases tasks in the first year of their curriculum, followed by some lessons on this topic; students from the second location (n = 74) had not. Since the level of prior knowledge may be relevant (Likourezos et al., 2019), the factor Site was included in the main analyses. Of the 157 students, 117 (75%) were present at both sessions. As a large number of students missed the second session, we decided to conduct two separate analyses on performance and mental effort on learning items (transfer items were only included in the immediate and delayed posttest): pretest to immediate posttest analyses for all students present during session 1, and immediate posttest to delayed posttest analyses for all students present at both sessions. As in Experiment 1, participants who did not read the instructions seriously were excluded from the analyses. This resulted in a final subsample of 117 students (Mage = 20.05, SD = 1.76; 70 males; 60 higher-knowledge) for the pretest-immediate posttest analyses and a final subsample of 89 students (Mage = 19.92, SD = 1.78; 46 males; 51 higher-knowledge) for the immediate posttest-delayed posttest analyses. Participants were randomly assigned to the Blocked Schedule with Worked Examples (n = 20; n = 15), Blocked Schedule with Practice Problems (n = 43; n = 33), Interleaved Schedule with Worked Examples (n = 15; n = 8), and Interleaved Schedule with Practice Problems (n = 39; n = 32) conditions (first n: pretest-immediate posttest analyses; second n: immediate posttest-delayed posttest analyses). Based on these two sample sizes, we calculated the power of Experiment 2 using the G*Power software (Faul et al., 2009), including the factor Site. The power of analysis 1 (n = 117), under a fixed alpha level of .05 and with a correlation between measures of .3 (e.g. Van Peppen et al., 2018), is estimated at .20 for detecting a small interaction effect (ηp² = .01), .93 for a medium interaction effect (ηp² = .06), and > .99 for a large interaction effect (ηp² = .14). Under the same assumptions, the power of analysis 2 (n = 89) is estimated at .17 for detecting a small interaction effect (ηp² = .01), .82 for a medium interaction effect (ηp² = .06), and > .99 for a large interaction effect (ηp² = .14). Thus, our experiment should be sufficient to detect medium-sized interaction effects, which could be expected given the moderate overall positive effect of interleaved practice found in previous studies (Brunmair & Richter, 2019) (Footnote 6).

Materials, procedure, and scoring

All data, materials, and detailed descriptions of the procedures and scoring are provided on the OSF page of this project. The same materials were used as in Experiment 1, but where the original surface features (cover stories) did not reflect realistic situations for these participants, their content was adapted to the participants’ domain, in order to keep the level of difficulty approximately equal to Experiment 1 and to meet the requirements of the study programme (i.e. the final exam was based on these materials). A teacher working in the domain evaluated the content of all materials, including the equivalence of information, and approved it.

The main difference from Experiment 1 was that Experiment 2 was run in a real educational setting, namely during the lessons of a CT-course. Experiment 2 was conducted in a computer classroom at the participants’ school with an entire class of students present. Participants came from eight different classes (of 25–31 participants) and were randomly distributed among the four conditions within each class. The two sessions of Experiment 2 took place during the first two lessons, and no CT-instruction was given between these lessons. Before the first session, students were informed about the experiment by their teacher. When entering the classroom, participants were instructed to sit down at one of the desks and read an A4 sheet containing some general instructions and a link to the Qualtrics environment of session 1, where they first signed an informed consent form. Again, participants could work at their own pace and could use scrap paper, and time-on-task was logged during all phases. Participants had to wait (in silence) until the last participant had finished the posttest before they were allowed to leave the classroom. The experiment leader and the teacher of the CT-course (the first and third authors of this paper) were both present during all phases of the experiment, and one of them explained the nature of the experiment afterwards.

The same test items and scoring form for the open questions were used as in Experiment 1. Again, participants could attain a maximum score of 9 on the learning items and a maximum total score of 4 on the transfer items, and we computed percentage scores on the learning and transfer items instead of total scores. It is important to realise that, even though we used percentage scores, caution is warranted in interpreting differences between learning and transfer outcomes because the maximum scores differed. Two raters independently scored 25% of the open questions of the immediate posttest, blind to student identity and condition. Because the intra-class correlation coefficients were high (.931 for learning test items; .929 for transfer test items), the remainder of the tests was scored by one rater (the third author), and this rater’s scores were used in the analyses.
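The paper does not specify which form of the intra-class correlation was used for the double-scored subset. As an illustration only, the following sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single rater; Shrout & Fleiss, 1979) and computes it from a matrix with one row per scored answer and one column per rater; the function name is hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` has one row per scored answer (target) and one column per rater.
    """
    n = len(ratings)      # number of targets
    k = len(ratings[0])   # number of raters
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares (two-way ANOVA without replication).
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Example data from Shrout and Fleiss (1979): 6 targets rated by 4 judges.
example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
           [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2_1(example), 2))  # 0.29
```

Because this form counts systematic differences between raters as disagreement, it is lower than a consistency-type ICC on the same data; which form is appropriate depends on whether one rater's scores are later used on their own, as was the case here.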

The mean score on the posttest learning items was 62.5% (SD = 19.06), and the reliability of these items was .36 on the pretest, .45 on the posttest, and .52 on the delayed posttest (Cronbach’s alpha). Again, the low reliability on the pretest might be explained by the fact that a lack of prior knowledge requires guessing of answers, resulting in low inter-item correlations and subsequently a low Cronbach’s alpha. Moreover, caution is warranted in interpreting these reliabilities because sample sizes such as ours do not seem to produce precise alpha coefficients (e.g. Charter, 2003). The mean score on the posttest transfer items was 32.2% (SD = 25.55), and the reliability of these items was .36 on the posttest and .30 on the delayed posttest (Cronbach’s alpha). In view of this low reliability, which can probably partly be explained by floor effects at both tests for one of our transfer task categories (i.e. Wason selection), we decided not to report the test statistics of the analyses on transfer performance. Descriptive statistics can be found in and .
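The explanation above (guessing yields low inter-item correlations, hence a low alpha) follows directly from the formula for Cronbach’s alpha, α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜ), where k is the number of items, σ²ᵢ the variance of item i, and σ²ₜ the variance of the total score. When items do not covary, the total-score variance equals the sum of the item variances and α falls to zero. A minimal sketch, assuming a participants × items matrix of item scores; the function name and data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items matrix of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])                    # number of items
    items = list(zip(*scores))            # one tuple of scores per item
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Two hypothetical items answered by four participants. The items share no
# covariance (as when answers are guessed), so alpha drops to zero.
unrelated = [[1, 1], [1, 0], [0, 1], [0, 0]]
print(round(cronbach_alpha(unrelated), 2))  # 0.0
```

Items that covary perfectly (every participant scoring identically on all items) yield α = 1 under the same function.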

Table 5. Means (SD) of Test performance (multiple-choice % score) and Invested Mental Effort (1-9) per condition and analysis of Experiment 2.

Table 6. Means (SD) of Test performance per task (max. score 1) per Condition of Experiment 2.

Results

In all analyses reported below, a significance level of .05 was used. Partial eta-squared (ηp²) is reported as a measure of effect size for the ANOVAs, with .01 considered small, .06 medium, and .14 large. On our OSF project page, we present the intention-to-treat analyses (i.e. including all participants who entered the study), which did not reveal noteworthy differences from the compliant-only analyses. Because half of the students had some prior knowledge, having participated in a study that included similar heuristics-and-biases tasks in the first year of their curriculum, we included the factor Site in all analyses.
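Because ηp² = SSeffect/(SSeffect + SSerror) and F = (SSeffect/df1)/(SSerror/df2), the effect size can be recovered from any reported F statistic and its degrees of freedom as ηp² = F·df1/(F·df1 + df2). The sketch below uses this standard identity to check reported values against the benchmarks above; it is not the authors’ analysis code, and the function names (and the "negligible" label below .01) are assumptions.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared recovered from an F test:
    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2).
    """
    return f_value * df_effect / (f_value * df_effect + df_error)


def benchmark(eta_p2):
    """Label an effect using the .01 / .06 / .14 benchmarks used in the paper."""
    if eta_p2 >= 0.14:
        return "large"
    if eta_p2 >= 0.06:
        return "medium"
    if eta_p2 >= 0.01:
        return "small"
    return "negligible"


# Test Moment x Practice-task Format interaction, Experiment 2: F(1, 109) = 22.18
eta = partial_eta_squared(22.18, 1, 109)
print(round(eta, 2), benchmark(eta))  # 0.17 large
```

Applied to F(1, 81) = 267.66 and F(1, 81) = 12.90 from Experiment 1, the identity likewise returns the reported .77 and .14 after rounding.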

Check on condition equivalence and time-on-task

Preliminary analyses confirmed that there were no significant differences between the conditions in educational background, χ²(9) = 10.00, p = .350; gender, χ²(3) = .318, p = .957; or performance on the pretest, time spent on the pretest, and mental effort invested in the pretest items (maximum F = 1.30, maximum ηp² = .03). A one-way ANOVA indicated that there were no significant differences in time-on-task (in seconds) spent on practice of the instruction tasks, F(3, 116) = 1.73, p = .165, d = .016 (Footnote 7).

Performance on learning items

Performance data are presented in and and omnibus test statistics in (statistics of follow-up analyses are presented in text). The data on learning items were analysed with two 2 × 2 × 2 × 2 mixed ANOVAs with Test Moment (analysis 1: pretest and immediate posttest; analysis 2: immediate posttest and delayed posttest) as within-subjects factor and Practice Schedule (interleaved and blocked), Practice-task Format (worked examples and practice problems), and Site (low prior knowledge and higher prior knowledge learners) as between-subjects factors. In line with Hypothesis 1, the pretest-immediate posttest analysis showed a main effect of Test Moment on learning outcomes: participants performed better on the immediate posttest (M = 61.40, SE = 1.49) than on the pretest (M = 46.13, SE = 1.59).

Table 7. Results Mixed ANOVAs experiment 2

Contrary to Hypothesis 3a (see for a schematic overview of the hypotheses), the results did not reveal a significant main effect of Practice Schedule, nor an interaction with Test Moment, indicating that interleaved practice had no differential effect. We did find an interaction effect between Test Moment and Practice-task Format: in line with Hypothesis 5a, there was a larger pretest to immediate posttest performance gain for worked examples (Mpre = 38.79; Mimmediate = 71.96) than for practice problems (Mpre = 41.71; Mimmediate = 58.24), F(1, 109) = 22.18, p < .001, ηp² = .17. In contrast to Hypothesis 6a, the results did not reveal an interaction between Practice Schedule and Practice-task Format, nor an interaction between Practice Schedule, Practice-task Format, and Test Moment.

However, there was a main effect of Site, with higher-knowledge learners performing better (M = 60.95, SE = 2.00) than low-knowledge learners (M = 44.39, SE = 1.97). Moreover, we found an interaction between Test Moment and Site, with a larger increase in learning outcomes for low-knowledge learners (Mpre = 29.36, SE = 2.25; Mimmediate = 59.43, SE = 2.31) than for higher-knowledge learners (Mpre = 51.14, SE = 2.38; Mimmediate = 70.77, SE = 2.34). Interestingly, our results revealed an interaction between Test Moment, Practice-task Format, and Site. Follow-up analyses revealed that low-knowledge learners showed a larger increase in learning outcomes when they practiced with worked examples (Mpre = 27.58, SE = 2.83; Mimmediate = 70.30, SE = 4.28) than with practice problems (Mpre = 31.14, SE = 2.63; Mimmediate = 48.55, SE = 2.94), F(1, 53) = 22.17, p < .001, ηp² = .30. For higher-knowledge learners, the differences in learning gains between the worked examples and practice problems conditions were not significant, F(1, 56) = 3.00, p = .089, ηp² = .05.

The second analysis – to test whether our results were still present after two weeks – showed a significant main effect of Test Moment: participants’ performance on learning items improved from the immediate (M = 63.13, SE = 2.19) to the delayed (M = 67.71, SE = 2.31) posttest. In contrast to Hypotheses 3a, 5a, and 6a, respectively, there was no main effect of Practice Schedule, no main effect of Practice-task Format, no interaction between Practice Schedule and Practice-task Format, and no interactions with Test Moment. Again, there was a main effect of Site: higher-knowledge learners performed better on learning items (M = 72.73, SE = 2.49) than low-knowledge learners (M = 58.11, SE = 3.26). Furthermore, an interaction between Practice Schedule, Practice-task Format, and Site was found. Follow-up analyses revealed that, for low-knowledge learners, practice in a blocked schedule worked better with worked examples than with practice problems (MWE = 69.14, SE = 5.78; MPS = 47.57, SE = 4.34), whereas in an interleaved schedule practice problems were more beneficial (MWE = 52.78, SE = 12.27; MPS = 62.96, SE = 5.01), F(1, 35) = 4.43, p = .043, ηp² = .11. There was no significant interaction between Practice Schedule and Practice-task Format for higher-knowledge learners, F(1, 45) = 1.87, p = .178, ηp² = .04. No other interaction effects were found.

Mental effort during learning

Mental effort data are presented in and omnibus test statistics in . Contrary to Hypotheses 2 and 4, respectively, a 2 (Practice Schedule: interleaved and blocked) × 2 (Practice-task Format: worked examples and practice problems) × 2 (Site: low prior knowledge learners and higher prior knowledge learners) factorial ANOVA on the mental effort during practice data revealed no main effects of Practice Schedule or Practice-task Format, and no interaction between Practice Schedule and Practice-task Format. Moreover, no main effect of Site and no interactions among Practice Schedule, Practice-task Format, and Site were found.

Mental effort during test

Our pretest-immediate posttest analyses on effort invested in learning items showed no main effects of Practice Schedule (Question 7a) or Practice-task Format (Question 8a), and no interaction between Practice Schedule and Practice-task Format. The results did reveal a significant interaction between Test Moment, Practice Schedule, and Site, but follow-up analyses revealed no significant interaction between Test Moment and Practice Schedule at either site (maximum F = 3.47, maximum ηp² = .06). No main effects of Test Moment or Site, and no other significant interactions, were found.

Our second analysis – to test whether our results were still present after two weeks – showed no main effects of Practice Schedule (Question 7b) or Practice-task Format (Question 8b), and no interaction between Practice Schedule and Practice-task Format. However, a three-way interaction between Test Moment, Practice Schedule, and Practice-task Format was found. Follow-up analyses revealed that interleaved practice with worked examples resulted in an increase in effort investment from the immediate to the delayed posttest (Mimmediate = 3.58; Mdelayed = 3.97), whereas interleaved practice with practice problems resulted in a decrease (Mimmediate = 4.45; Mdelayed = 4.07), F(1, 36) = 4.21, p = .047, ηp² = .11. There was no significant difference in immediate posttest to delayed posttest effort investment between the practice-task format conditions when practiced in a blocked schedule, F(1, 43) = 2.74, p = .105, ηp² = .06. No main effects of Test Moment or Site, and no other interactions, were found.

Our analyses on effort invested in transfer items revealed no main effects of Practice Schedule, Practice-task Format, Test Moment, or Site. Moreover, there were no significant interaction effects.

Interim summary

The results of Experiment 2 provide converging evidence with Experiment 1. Again, we did not find any indications that interleaved practice would be more beneficial than blocked practice for learning, either in itself or as a function of task format. There was again a benefit of studying worked examples over solving problems, but – as was to be expected – this was limited to participants who had low prior knowledge (i.e. had not participated in a study that included similar heuristics and biases tasks in the first year of their curriculum).

General discussion

Previous research has demonstrated that providing students with explicit CT-instructions combined with practice on domain-relevant tasks is beneficial for learning to reason in an unbiased manner (e.g. Heijltjes et al., 2015), but not for transfer to new tasks. Therefore, the present experiments investigated whether creating contextual interference in instruction through interleaved practice, which has proven effective in other, similar domains, would promote both learning and transfer of reasoning skills.

In line with our expectations and consistent with earlier research (e.g. Van Peppen et al., 2018; Heijltjes et al., 2015), both experiments support the finding that explicit instructions combined with practice improve learning of unbiased reasoning (Hypothesis 1): we found pretest to immediate posttest gains on practiced tasks in all conditions, which remained stable on the delayed posttest after two weeks. This is in line with the idea of Stanovich (2011) that providing students with relevant mindware (i.e. knowledge bases, rules, procedures and strategies; Perkins, 1995) and stimulating them to inhibit incorrectly used intuitive responses (i.e. Type 1 processing, e.g. Evans, 2008; Kahneman & Klein, 2009; Stanovich, 2011; Stanovich et al., 2016) and to replace these with more analytical and effortful reasoning (i.e. Type 2 processing) is useful to prevent biases in reasoning and decision-making. However, the scores were not particularly high (i.e. up to 73% accuracy), so there is still room for improvement. The performance gain on practiced tasks suggests that having learners repeatedly retrieve to-be-learned material (i.e. repeated retrieval practice: e.g. Karpicke & Roediger, 2007) may be a promising method to further enhance learning to avoid biased reasoning.

Contrary to our hypotheses, we did not find any indications that interleaved practice would improve learning more than blocked practice (Hypothesis 3a), regardless of whether participants practiced with worked examples or problem-solving tasks (Hypothesis 6a). These findings contrast with previous studies demonstrating that interleaved practice is effective for establishing both learning and transfer in other domains and with other complex judgment tasks (e.g. Likourezos et al., 2019). Moreover, they run counter to the finding of Paas and Van Merriënboer (1994) that high variability during practice with geometrical problems produced test performance benefits when students studied worked examples, but not when they solved practice problems. Unfortunately, we were not able to test our hypotheses regarding transfer performance (Hypotheses 3b/6b). Therefore, it is unknown whether interleaved practice – either in itself or as a function of task format – would be beneficial for transfer of unbiased reasoning. However, given that transfer scores were rather low overall, the overall effect of instruction and practice on transfer (if present at all) seems limited.

One of the more interesting findings to emerge from this study, however, is that the worked example effect (e.g. Paas & Van Gog, 2006; Renkl, 2014) also applies to CT-tasks. Moreover, this was found even though the instructions that preceded the practice tasks already included two worked examples. Most studies on the worked example effect used pure practice conditions or gave minimal instructions prior to practice; in our study, the examples in the instructions could have helped students in the problem-solving conditions perform better on the practice problems, and yet we still found a worked example effect. To the best of our knowledge, the results of Experiment 1 demonstrated for the first time in CT-instruction a benefit of studying worked examples over solving problems on learning outcomes, reached with less effort during the tests (i.e. more effective and efficient; Van Gog & Paas, 2008). Experiment 2 replicated the worked example effect (i.e. more effective than solving problems) and demonstrated that this was the case for novices, but not for learners with relatively more prior knowledge. This observation supports findings regarding the expertise reversal effect (e.g. Kalyuga, 2007; Kalyuga et al., 2003, 2012), which shows that instructional strategies that assist learners in developing cognitive schemata, while effective for low-knowledge learners, are often not effective (or may even be detrimental) for higher-knowledge learners. As far as we know, our second experiment was the first to vary both level of guidance (i.e. practice-task format) and level of expertise along with practice schedule; thus, our study provides a first step in exploring the interactions between these factors. However, caution is warranted in interpreting this finding, since our sample size was relatively small. It would be interesting in future research to manipulate students’ level of expertise in order to demonstrate a causal relationship between expertise and the effect of studying worked examples on learning outcomes in CT-instruction.

Admittedly, our explanation for the worked example effect would have been more compelling if we had included measures of the separate types of cognitive load (although distinguishing between types of cognitive load is very challenging, and available instruments, such as the rating scale developed by Leppink et al., 2013, would be too long to administer after each task). There are theoretical reasons to assume that the amount of strategy information given in the worked examples resulted in lower extraneous load and higher germane load compared to solving problems, but in that case, the total load experienced by the learners (as reflected in their invested mental effort) may not differ between conditions. Moreover, studies in which worked examples were compared to practice problems with feedback consisting of or resembling the supportive features of worked examples still showed a worked example effect. Paas and Van Merriënboer (1994), for instance, showed that training with worked examples was more beneficial for learning than training with practice problems that were followed by correct-answer feedback and worked examples. Moreover, training with worked examples required less time and was perceived as less effortful. In line with these findings, both McLaren et al. (2016) and Schwonke et al. (2009) demonstrated that worked examples were less time-consuming, without a loss or even with a gain in learning outcomes, compared to tutored learning by problem solving (i.e. clear efficiency benefits of worked example study). It is important to note that the worked example effect applies to learners who have little prior knowledge, whereas it disappears for learners with high prior knowledge (cf. expertise reversal effect). In the current study, learners were provided with prior knowledge during the initial CT-instructions, and we nevertheless found a worked example effect. Hence, participants apparently did not develop enough expertise for the positive effect of worked examples to disappear, which allowed them to still take advantage of the information provided in the worked examples. Even if learners had not learned anything from the initial instructions (which is unlikely given previous research), the elaborate information in the worked examples may have helped them grasp the required problem-solving approach, whereas the information in the practice problems and the subsequent feedback on whether the answer was correct could at best hint at what might be a reasonable approach.

Finally, one could argue that the unequal cell distribution (i.e. higher exclusion in the worked examples conditions than in the practice problems conditions, based on reading time of the instructions) indicates that students’ motivation may have been the basis for the worked example effect. However, our intention-to-treat analyses still revealed a worked example effect, so this explanation does not seem convincing. Yet this points to another remarkable finding: worked examples were more beneficial for learning than problems even when the examples were only minimally read; possibly, students quickly located and processed the relevant information in the examples.

Although we have to speculate, a possible explanation for the absence of an interleaved practice effect on learning outcomes might lie in the distinctiveness between the task categories, which may have been greater than in previous studies. Effects of interleaved practice only occur if task categories differ and require different problem-solving procedures. However, as reflection on the to-be-used procedures is what causes the beneficial effect of interleaved practice (e.g. Barreiros et al., 2007; Rau et al., 2010), distinctiveness between categories should not be too high, because learners then immediately recognise which procedure to apply. It seems possible that the task categories used in the present study were the same at a high level but that the mindware needed for each category differed too much. If so, determining the nature of each task was relatively easy, and comparing tasks with one another was not necessary. It should be noted, though, that this was not expected in advance, and that arguing after the fact that the distinctiveness between task categories was too high is risky because of hindsight bias (Fischhoff, 1975).

Another, again speculative, explanation for the absence of an interleaving effect on learning outcomes might be that the surface characteristics within the practice-task categories were so different (especially for the base-rate items) that students in the blocked practice condition did not realise that strategies could be reused in subsequent tasks of that category. This suggestion is supported by the performance differences on base-rate and syllogistic reasoning items, although the latter is more likely due to differences in difficulty. To reiterate, in the blocked practice condition, participants practiced with three tasks of one category at a time before moving on to the next (e.g. AAABBBCCC), whereas in the interleaved practice condition, participants practiced with the nine heuristics-and-biases tasks in a mixed sequence (e.g. ABCBACBCA). As such, students in the blocked practice condition might have been stimulated as much as students in the interleaved practice condition to stop and think about new problem-solving strategies, especially in base-rate tasks. It seems possible that interleaved practice is useful for practice within a task category in which surface characteristics are similar to each other and problem-solving procedures differ slightly (e.g. syllogistic reasoning tasks), but further research should be undertaken to investigate this.
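The two practice schedules described above can be made concrete with a short sketch. The paper gives only example sequences (AAABBBCCC and ABCBACBCA), not the procedure used to order the nine tasks, so the round-based shuffle below is an assumption: each round presents every category once in random order, and a round is rotated if it would repeat the category that ended the previous round. Category labels and function names are hypothetical.

```python
import random


def blocked_schedule(categories, reps):
    """Blocked practice: all tasks of one category before the next (AAABBBCCC)."""
    return [c for c in categories for _ in range(reps)]


def interleaved_schedule(categories, reps, seed=None):
    """Interleaved practice: each round presents every category once in a
    shuffled order; a round is rotated if it would start with the category
    that ended the previous round, so no category appears twice in a row
    (assuming at least two distinct categories)."""
    rng = random.Random(seed)
    order = []
    for _ in range(reps):
        cycle = list(categories)
        rng.shuffle(cycle)
        if order and cycle[0] == order[-1]:
            cycle = cycle[1:] + cycle[:1]
        order.extend(cycle)
    return order


# Hypothetical category labels A, B, and C, three tasks each.
print("".join(blocked_schedule("ABC", 3)))  # AAABBBCCC
print("".join(interleaved_schedule("ABC", 3, seed=7)))
```

The example sequence ABCBACBCA from the text is one possible output of this procedure (rounds ABC, BAC, and BCA). A fully random permutation with a no-repeat constraint would be an equally reasonable design choice; the round-based version additionally keeps each category evenly spread across the practice phase.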

Additionally, a recent meta-analysis (Brunmair & Richter, 2019) has shown that the strength of interleaved practice effects varies widely between types of learning materials. Interleaved practice seems to work well in inductive learning, when the stimuli are complex, when categories are difficult to discriminate, and when the similarity of exemplars within categories is low. Given the pervasiveness of induction, it is surprising that educationally relevant materials are clearly underrepresented in the interleaved practice research. The present study was the first to address interleaved practice effects with heuristics-and-biases tasks, and it seems to indicate that interleaved practice is not beneficial for learning this type of task. Hence, it would be interesting for future studies to investigate the generalizability of interleaved practice effects and whether they are restricted to specific types and combinations of learning materials. More generally, our findings raise questions about the preconditions of instructional strategies that are known to foster generative processing (e.g. desirable difficulties; Bjork, 1994). The effectiveness of instructional strategies such as interleaved practice depends highly on the implementation, the measure of learning outcomes, and the specific characteristics of the learning materials. Further research on the exact boundary conditions is therefore recommended to accurately inform educational practice.

Moreover, the relatively low reliabilities of our learning-test items, which imply a large amount of measurement error, may have played a crucial role, as low reliability substantially reduces the power to detect intervention effects (Cleary et al., 1970; Kanyongo et al., 2007; Schmidt & Hunter, 1996). Although samples of the size used in studies like this one may be too small to produce sufficiently precise alpha coefficients (e.g. Charter, 2003), we should also consider the possibility that the items were not sufficiently related, or that students did not see the overlap between them. In this study, the low levels of reliability can probably be explained by the multidimensionality of the tests, which encompassed several heuristics-and-biases tasks; this factor is often ignored in current research. Performance on these tasks depends not only on the extent to which a task elicits a bias (resulting from heuristic reasoning), but also on the extent to which one possesses the requisite mindware (e.g. rules of logic or probability). Thus, systematic variance in performance on such tasks can be explained either by a person's use of heuristics or by his or her available mindware. If the extent to which a correct answer depends on these two aspects differs across items, there may be no common factor that explains all interrelationships between the items. Future research should therefore find ways to improve CT measures (i.e. reduce random measurement error) or use measures known to have acceptable levels of reliability (LeBel & Paunonen, 2011). The latter option seems challenging, however, as multiple studies have reported rather low reliabilities for tests consisting of heuristics-and-biases tasks (Aczel et al., 2015; Bruine de Bruin et al., 2007; West et al., 2008) and have raised concerns about the reliability of widely used standardised CT tests, particularly with regard to subscales (Bernard et al., 2008; Ku, 2009; Leppa, 1997; Liu et al., 2014).
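The two mechanisms discussed above can be illustrated with classical test theory. The sketch below uses hypothetical toy scores, not the study's data. It computes Cronbach's alpha, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₓ), for two error-free toy tests: one in which all items reflect a single factor, and one in which two pairs of items reflect two unrelated factors (for instance, susceptibility to a heuristic versus available mindware). The second test reaches only α ≈ .67 although it contains no random error at all, which shows how multidimensionality alone can depress alpha. The last function applies the standard attenuation result: if a reliability r reflects random error only, a true standardised difference d is observed as roughly d · √r, which is why unreliable measures lose power.

```python
from math import sqrt
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of scores (one per participant)."""
    k = len(items)
    n_participants = len(items[0])
    # Each participant's total test score is the sum of their item scores.
    totals = [sum(item[p] for item in items) for p in range(n_participants)]
    sum_item_variances = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_variances / pvariance(totals))


def attenuated_d(true_d, reliability):
    """Observed standardised mean difference when the outcome measure has the given reliability."""
    return true_d * sqrt(reliability)


if __name__ == "__main__":
    # Hypothetical, error-free scores for four participants.
    factor_x = [1, 1, 0, 0]
    factor_y = [1, 0, 1, 0]  # uncorrelated with factor_x
    one_factor_test = [factor_x, factor_x, factor_x, factor_x]
    two_factor_test = [factor_x, factor_x, factor_y, factor_y]
    print(round(cronbach_alpha(one_factor_test), 2))  # 1.0
    print(round(cronbach_alpha(two_factor_test), 2))  # 0.67
    print(round(attenuated_d(0.5, 0.5), 2))           # 0.35
```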

One could question to what extent the tests accurately assessed the more general cognitive capacity of avoiding bias in reasoning (i.e. unbiased reasoning). Bias refers to systematic deviations from a norm when choosing actions or estimating probabilities (Stanovich et al., 2016; Tversky & Kahneman, 1974). In the current study, unbiased reasoning was operationalised as performance on classical heuristics-and-biases tasks, in which an intuitively cued heuristic response conflicts with normative models of CT as set by formal logic and probability theory. Heuristics-and-biases tasks have been used for decades to measure unbiased reasoning (e.g. Baron, 2008; Evans, 2003; Gigerenzer & Hug, 1992; Heijltjes et al., 2014a; Heijltjes et al., 2014b, 2015; Stanovich et al., 2016; Stanovich & West, 2000; Tversky & Kahneman, 1974, 1983; Van Brussel et al., 2020; Wasserman et al., 1990; West et al., 2008). Several studies have demonstrated associations between people's performance on heuristics-and-biases tasks and how they reason in more realistic settings (e.g. medical decision making: Arkes, 2013), as well as other real-world correlates (e.g. risk behaviours: Toplak et al., 2017). Hence, participants' performance on these tasks presumably offers a realistic view of everyday reasoning (see, for example, Gilovich et al., 2002). Relevant next steps would be to investigate how bias in reasoning can be prevented in daily settings and what effects instruction and practice have on other aspects of CT.
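As an illustration of how a heuristics-and-biases item is scored against a normative model, the sketch below checks responses to a conjunction problem of the kind studied by Tversky and Kahneman (1983). It is a hypothetical scoring rule, not the coding scheme used in this study: it assumes participants give probability estimates for a single event A and for the conjunction "A and B", and it flags a response as biased when the conjunction is rated as more probable than A, which violates the rule P(A and B) ≤ P(A).

```python
def violates_conjunction_rule(p_a, p_a_and_b):
    """True if the estimated P(A and B) exceeds the estimated P(A), which probability theory forbids."""
    return p_a_and_b > p_a


def score_conjunction_item(p_a, p_a_and_b):
    """Hypothetical scoring: 1 for a normatively consistent answer, 0 for a conjunction error."""
    return 0 if violates_conjunction_rule(p_a, p_a_and_b) else 1


if __name__ == "__main__":
    # Hypothetical estimates for "Linda is a bank teller" (A) and
    # "Linda is a bank teller and active in the feminist movement" (A and B).
    print(score_conjunction_item(p_a=0.2, p_a_and_b=0.4))  # 0: heuristic (representativeness) answer
    print(score_conjunction_item(p_a=0.2, p_a_and_b=0.1))  # 1: normative answer
```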

To conclude, the present experiments provide evidence that worked examples can be effective for novices' learning to avoid biased reasoning. However, there were no indications that practice in an interleaved schedule, with either worked examples or practice problems, enhances performance on heuristics-and-biases tasks. These findings suggest that the nature or the combination of the task categories may be a boundary condition for effects of interleaved practice on learning and transfer. Further research should investigate the exact boundary conditions of interleaved practice effects and provide more insight into the expertise-reversal effect in CT-instruction. Moreover, future research could investigate whether other types of (generative) activities are beneficial for establishing learning and transfer of unbiased reasoning, and whether it is feasible at all to teach students to inhibit Type 1 processing and to recognise when Type 2 processing is needed. It is important to continue the search for effective methods to foster transfer, because biased reasoning can have serious negative consequences in both daily life and complex professional environments.

Data deposition

The datasets and script files are stored on the Open Science Framework (OSF) page for this project: osf.io/a9czu.

Acknowledgments

This work was supported by the Netherlands Organisation for Scientific Research under Grant 409-15-203.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes

1 The Dutch education system distinguishes between research-oriented higher education (i.e. offered by research universities) and profession-oriented higher education (i.e. offered by universities of applied sciences).

2 We also conducted exploratory analyses of students' global judgments of learning (JOLs) after practice to gain insight into how informative the students themselves found the different practice types. These analyses added little to this paper and are therefore not reported here; they are available on our OSF page.

3 In response to a reviewer, we conducted post hoc power analyses. At a fixed alpha level of .05, the power of the comparison between interleaved and blocked practice is estimated at .15, .62, and .95 for detecting a small (d = .2), medium (d = .5), and large (d = .8) effect, respectively. The power of the comparison between worked examples and practice problems is estimated at .15, .60, and .95 for detecting a small, medium, and large effect, respectively. Thus, the power of our experiment should be sufficient to detect medium-to-large effects. However, the power to detect a differential effect of interleaved practice with worked examples compared to practice problems seems relatively low, namely .09, .33, and .67 for a small, medium, or large effect, respectively.
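Power values of this kind can be approximated by hand. The sketch below is not necessarily how the reported values were computed (dedicated software such as G*Power, Faul et al., 2009, uses the noncentral t or F distribution). It assumes a two-sided, two-sample comparison with the 85 laboratory participants split into hypothetical groups of 42 and 43, and uses the normal approximation power ≈ Φ(δ − z₁₋α/₂) + Φ(−δ − z₁₋α/₂), with δ = d · √(n₁n₂ / (n₁ + n₂)). Under these assumptions it yields roughly .15, .63, and .96 for d = .2, .5, and .8, close to the values reported in this note; the normal approximation slightly overstates power in samples of this size.

```python
from math import sqrt
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def two_sample_power(d, n1, n2, alpha=0.05):
    """Approximate power of a two-sided, two-sample test of a standardised difference d (normal approximation)."""
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    # Noncentrality: expected value of the test statistic under the alternative hypothesis.
    delta = d * sqrt(n1 * n2 / (n1 + n2))
    # Probability of exceeding the critical value in either tail.
    return STANDARD_NORMAL.cdf(delta - z_crit) + STANDARD_NORMAL.cdf(-delta - z_crit)


if __name__ == "__main__":
    # Assumed group sizes for Experiment 1 (N = 85); the actual split may differ.
    for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
        print(label, round(two_sample_power(d, 42, 43), 2))
```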

4 That is, when half of the necessary information was given. To illustrate, a correct explanation of a contingency-table task involves correct consideration of the information presented in both the rows and the columns, whereas a partially correct explanation involves consideration of only the information in the rows or only the information in the columns.
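To make the contingency-table example concrete, the sketch below contrasts the normative answer with a shortcut that uses only part of the table, and encodes the full/partial credit distinction. It is a hypothetical illustration: the cell counts, the 1 / 0.5 / 0 point values, and the function names are assumptions, not the study's materials or scoring key. The index ΔP = a/(a + b) − c/(c + d) compares the improvement rate across both rows; looking only at the first row (far more treated patients improved than did not) suggests that the treatment works, whereas ΔP shows that improvement is slightly more likely without it.

```python
def delta_p(a, b, c, d):
    """Contingency index for a 2x2 table.

    Rows: treatment / no treatment; columns: improved / not improved.
    a, b = treated patients who improved / did not improve
    c, d = untreated patients who improved / did not improve
    """
    return a / (a + b) - c / (c + d)


def first_row_only_judgement(a, b):
    """Shortcut that ignores the control row: 'works' if most treated patients improved."""
    return a > b


def explanation_score(considers_rows, considers_columns):
    """Hypothetical scoring key: full credit for both dimensions, half credit for one."""
    return (int(considers_rows) + int(considers_columns)) / 2


if __name__ == "__main__":
    a, b, c, d = 200, 75, 50, 15  # hypothetical counts
    print(first_row_only_judgement(a, b))  # True: the shortcut says the treatment works
    print(round(delta_p(a, b, c, d), 3))   # -0.042: improvement is more likely without it
    print(explanation_score(True, False))  # 0.5: partially correct explanation
```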

5 The relatively low reliabilities of the learning items should be taken into account.

6 In response to a reviewer, we conducted post hoc power analyses. At a fixed alpha level of .05, the power of the comparison between interleaved and blocked practice is estimated at .19, .76, and >.99 (analysis 1) and .15, .64, and .96 (analysis 2) for detecting a small (d = .2), medium (d = .5), and large (d = .8) effect, respectively. The power of the comparison between worked examples and practice problems is estimated at .17, .69, and .98 (analysis 1) and .13, .53, and .90 (analysis 2) for detecting a small, medium, and large effect, respectively. Thus, the power of our experiment should be sufficient to detect medium-to-large effects of interleaved versus blocked practice and large effects of worked examples versus practice problems. However, the power to detect a differential effect of interleaved practice with worked examples compared to practice problems seems relatively low, namely .10, .37, and .73 (analysis 1) and .08, .23, and .50 (analysis 2) for a small, medium, or large effect, respectively.
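A normal approximation to power can also be inverted to ask which effect size a design detects with a conventional power of .80: d_min ≈ (z₁₋α/₂ + z_power) / √(n₁n₂ / (n₁ + n₂)). The sketch below assumes that the 117 classroom participants are split into hypothetical groups of 58 and 59, and ignores the negligible probability of rejecting in the wrong tail. Under these assumptions d_min comes out at about .52, broadly in line with the statement that this design can detect medium-to-large effects but not small ones. The actual group sizes in each analysis may differ.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_d(n1, n2, alpha=0.05, power=0.80):
    """Smallest standardised difference detectable with the given power (two-sided, normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value, about 1.96 for alpha = .05
    z_power = z.inv_cdf(power)          # about 0.84 for power = .80
    return (z_alpha + z_power) / sqrt(n1 * n2 / (n1 + n2))


if __name__ == "__main__":
    # Assumed split of Experiment 2 (N = 117); the real group sizes may differ.
    print(round(minimum_detectable_d(58, 59), 2))
```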

7 The relatively low reliabilities of the learning items should be taken into account.

References

- Abel, M., & Roediger, H. L. (2017). Comparing the testing effect under blocked and mixed practice: The mnemonic benefits of retrieval practice are not affected by practice format. Memory & Cognition, 45(1), 81–92. https://doi.org/10.3758/s13421-016-0641-8

- Aczel, B., Bago, B., Szollosi, A., Foldes, A., & Lukacs, B. (2015). Measuring individual differences in decision biases: Methodological considerations. Frontiers in Psychology, 6. https://doi.org/10.3389/fpsyg.2015.01770

- Ajayi, T., & Okudo, J. (2016). Cardiac arrest and gastrointestinal bleeding: A case of medical heuristics. Case Reports in Medicine, 2016. https://doi.org/10.1155/2016/9621390

- Albaret, J. M., & Thon, B. (1998). Differential effects of task complexity on contextual interference in a drawing task. Acta Psychologica, 100(1–2), 9–24. https://doi.org/10.1016/S0001-6918(98)00022-5

- Arkes, H. R. (2013). The consequences of the hindsight bias in medical decision making. Current Directions in Psychological Science, 22(5), 356–360. https://doi.org/10.1177/0963721413489988

- Baron, J. (2008). Thinking and deciding (4th ed.). Cambridge University Press.

- Barreiros, J., Figueiredo, T., & Godinho, M. (2007). The contextual interference effect in applied settings. European Physical Education Review, 13(2), 195–208. https://doi.org/10.1177/1356336X07076876

- Battig, W. F. (1978). The flexibility of human memory. In L. S. Cermak & F. I. M. Craik (Eds.), Levels of processing and human memory (pp. 23–44). Erlbaum.

- Bernard, R. M., Zhang, D., Abrami, P. C., Sicoly, F., Borokhovski, E., & Surkes, M. A. (2008). Exploring the structure of the Watson–Glaser critical thinking appraisal: One scale or many subscales? Thinking Skills and Creativity, 3(1), 15–22. https://doi.org/10.1016/j.tsc.2007.11.001

- Billings, L., & Roberts, T. (2014). Teaching critical thinking: Using seminars for 21st century literacy. Routledge.

- Bjork, R. A. (1994). Memory and metamemory considerations in the training of human beings. In J. Metcalfe & A. Shimamura (Eds.), Metacognition: Knowing about knowing (pp. 185–205). MIT Press.

- Bruine de Bruin, W., Parker, A. M., & Fischhoff, B. (2007). Individual differences in adult decision-making competence. Journal of Personality and Social Psychology, 92(5), 938–956. https://doi.org/10.1037/0022-3514.92.5.938

- Brunmair, M., & Richter, T. (2019). Similarity matters: A meta-analysis of interleaved learning and its moderators. Psychological Bulletin, 145(11), 1029–1052. https://doi.org/10.1037/bul0000209

- Carpenter, S. K., & Mueller, F. E. (2013). The effects of interleaving versus blocking on foreign language pronunciation learning. Memory & Cognition, 41(5), 671–682. https://doi.org/10.3758/s13421-012-0291-4

- Carvalho, P. F., & Goldstone, R. L. (2019). When does interleaving practice improve learning? In J. Dunlosky & K. Rawson (Eds.), The Cambridge handbook of cognition and education (pp. 183–208). Cambridge University Press.

- Charter, R. A. (2003). Study samples are too small to produce sufficiently precise reliability coefficients. The Journal of General Psychology, 130(2), 117–129. https://doi.org/10.1080/00221300309601280

- Cleary, T. A., Linn, R. L., & Walster, G. W. (1970). Effect of reliability and validity on power of statistical tests. Sociological Methodology, 2, 130–138. https://doi.org/10.2307/270786

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed., reprint). Psychology Press.

- Darling-Hammond, L. (2010). Teacher education and the American future. Journal of Teacher Education, 61(1–2), 35–47. https://doi.org/10.1177/0022487109348024

- De Croock, M. B., & van Merriënboer, J. J. (2007). Paradoxical effects of information presentation formats and contextual interference on transfer of a complex cognitive skill. Computers in Human Behavior, 23(4), 1740–1761. https://doi.org/10.1016/j.chb.2005.10.003

- De Croock, M. B., van Merriënboer, J. J., & Paas, F. G. (1998). High versus low contextual interference in simulation-based training of troubleshooting skills: Effects on transfer performance and invested mental effort. Computers in Human Behavior, 14(2), 249–267. https://doi.org/10.1016/S0747-5632(98)00005-3

- Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4–58. https://doi.org/10.1177/1529100612453266

- Elia, F., Apra, F., Verhovez, A., & Crupi, V. (2016). “First, know thyself”: Cognition and error in medicine. Acta Diabetologica, 53(2), 169–175. https://doi.org/10.1007/s00592-015-0762-8

- Evans, J. S. B. T. (2002). Logic and human reasoning: An assessment of the deduction paradigm. Psychological Bulletin, 128(6), 978–996. https://doi.org/10.1037/0033-2909.128.6.978

- Evans, J. S. B. T. (2003). In two minds: Dual-process accounts of reasoning. Trends in Cognitive Sciences, 7(10), 454–459. https://doi.org/10.1016/j.tics.2003.08.012

- Evans, J. S. B. T. (2008). Dual-processing accounts of reasoning, judgment, and social cognition. Annual Review of Psychology, 59(1), 255–278. https://doi.org/10.1146/annurev.psych.59.103006.093629

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

- Fischhoff, B. (1975). Hindsight ≠ foresight: The effect of outcome knowledge on judgment under uncertainty. Journal of Experimental Psychology: Human Perception and Performance, 1(3), 288–299. https://doi.org/10.1037/0096-1523.1.3.288

- Flores, K. L., Matkin, G. S., Burbach, M. E., Quinn, C. E., & Harding, H. (2012). Deficient critical thinking skills among college graduates: Implications for leadership. Educational Philosophy and Theory, 44(2), 212–230. https://doi.org/10.1111/j.1469-5812.2010.00672.x