ABSTRACT

Participatory systems approaches are readily used in multi- and inter-disciplinary exploration of shared processes, but are less commonly applied in transdisciplinary efforts to elicit principles that generalise across contexts. The authors were charged with developing a transdisciplinary framework for prospectively or retrospectively assessing initiatives to improve education and training within a multifaceted organisation. A common System Impact Model (SIM) was developed in a series of workshops involving thirty participants from different disciplines, clinical specialisms, and organisations. The model provided a greater understanding of the interrelationships between factors influencing the benefits of education, training, and development, as seen from various stakeholder perspectives. It was used to create a system for assessing the impact of initiatives on service-users/patients, trainees, and organisations. It was shown to enable a range of participants to connect on common challenges, to maximise cross-, multi-, and inter-disciplinary learning, and, as system designers, to uncover new strategies for delivering value.

1. Introduction

The aim of the work described herein was to use a participatory systems approach to develop a framework for assessing initiatives to improve education and training within a multifaceted organisation, both prospectively, for strategic prioritisation, and retrospectively, for learning. There are examples of outcome frameworks (D. E. Moore et al., 2009) and conceptual frameworks (D. E. Moore et al., 2018) to this end, but these stop short of developing assessment frameworks.

The stakeholders who approached the authors were members of the “Education Academy” of King’s Health Partners (KHP). KHP is an Academic Health Science Centre made up of three NHS Foundation Trusts and a University, providing education and training for a wide range of students, healthcare professionals, and administrative and support staff. In this instance, members of KHP’s Education Academy from a range of professional disciplines were interested in improving their understanding of how various initiatives or types of training had impacted services, and in using this knowledge of likely impact to decide which prospective initiatives to invest in. Additionally, they hoped that by tracking impact, more robust cases could be made for increased overall investment in this area.

1.1. Strategic investment in workforce education and training as a transdisciplinary challenge

Staff development is a common and fundamental component within each specialism and discipline of healthcare. This assessment of initiatives to improve education and training was framed as a transdisciplinary challenge because education and training are subject to certain common dynamics and may have common tenets (Lehane et al., 2019; Mantzourani et al., 2019) and goals (Browne et al., 2021); in practice, however, they are implemented and experienced in isolation within different disciplinary contexts, and are often navigated with practices specific to each discipline. Transdisciplinarity is further defined below, and its precedents, challenges, and opportunities are briefly described, both in general and in the context of education and training.

1.2. Participatory systems approaches in transdisciplinary challenges

Participatory approaches for developing a shared representation of a system and its dynamics have been shown to be readily and fruitfully applicable in numerous interventions. Predominantly, these approaches convene participants from multiple disciplines: participants generally contribute their disciplinary knowledge or perspective, as in multidisciplinary collaboration; they may collectively establish an interdisciplinary understanding of how their disciplines are linked and interact; but they rarely develop a generalisable, transdisciplinary understanding, which is holistic and “transcends their traditional boundaries” (Choi & Pak, 2006). The tendency towards the multidisciplinary and interdisciplinary in participatory exercises is natural, since in healthcare these exercises often relate to the management of a particular condition or business process with which each discipline interacts as a stakeholder (Kang et al., 2017; Kiekens et al., 2022; Kotiadis et al., 2014; Marchal et al., 2021). This does, however, mean that the use of participatory methods in transdisciplinary efforts is relatively untested.

Transdisciplinary frameworks are less amenable to subsequent analytical modelling than their multidisciplinary and interdisciplinary counterparts (Kotiadis et al., 2014). Those who have attempted to apply a participatory systems approach in transdisciplinary exercises report challenges due to greater diversity in participants’ communication styles and motivations (G. Moore et al., 2021), and report that the heterogeneity of applications was difficult to accommodate within the resulting frameworks (Landa-Avila et al., 2022).

In spite of the inherent challenges of transdisciplinary frameworks, they are nonetheless considered valuable: for example, in supporting the integration of knowledge (Picard et al., 2011), in addressing global and fundamental challenges (Swinburn et al., 2019; Wardani et al., 2022), and in governing distributed functions such as education and training (Appel & Kim-Appel, 2018; Foadi et al., 2021). Since diverse and specialised healthcare services often share support structures and a common ethos, it stands to reason that common frameworks might enable shared learning, cohesiveness, and improvement in general. By framing challenges as transdisciplinary in design approaches, it is possible to co-opt diverse contributions “beyond the limiting confines of traditional disciplines” and to enable the mixing of practices (Dorst, 2018). This knowledge may then be readily mobilised as situation- and discipline-agnostic transdisciplinary frameworks that are usable within and across an entire organisation (Kornevs, 2019; Kornevs et al., 2018).

When the task is to support the improvement of disparate activities collectively and from a single budget, a transdisciplinary framework can be desirable: for example, for solidarity (Appel & Kim-Appel, 2018), for efficiency (Nayna Schwerdtle et al., 2020), and for the transferability of interventions (Foadi et al., 2021). The methodology applied in this article has previously been applied in multidisciplinary and interdisciplinary projects (Akinluyi, 2017; Akinluyi et al., 2019); here, the authors reflect on its application in transdisciplinary work to understand the value of workforce education and training initiatives.

2. Background: system impact modelling approach

The participatory method used to develop the envisioned transdisciplinary framework is explicitly a systems approach, and one which focuses on the emergence of value from the modelled system. The rationale for applying a systems approach, the concept of value, and their embodiment in the “System Impact Model” are described below.

2.1. Importance of systems considerations in workforce education and training initiatives

In healthcare, work is rarely undertaken in isolation. Effective service delivery depends on the collaboration of multiple staff working in different teams, with the resources and technology available, and in the environment in which services operate (Greenhalgh & Papoutsi, 2018; Sturmberg & Lanham, 2014; Wilson et al., 2001). As in many organisational initiatives, this complexity confounds planning for the strategic delivery of education and training (Bleakley & Cleland, 2015; Hamman, 2014).

A new programme, project, or strategic change may, for example, be frustrated by unforeseen and often unobserved factors, anything from poor engagement with cross-disciplinary partners (Lindgren et al., 2013; Padgett et al., 2019) to a lack of time to engage (Fletcher, 2007). Similarly, opportunities to greatly improve the situation may go undetected without a rigorous exploration of the system, confounders and all. A failure to comprehend interdependencies makes it very difficult to predict accurately how an initiative will impact various stakeholders, which fundamentally limits our ability to design for and measure success in health systems. It is broadly accepted that “a collective systems thinking exercise among an inclusive set of health system stakeholders is critical to designing more robust interventions and their evaluations” (De Savigny & Adam, 2009, p. 52).

The stakeholders who approached the authors in this scenario were anecdotally aware of many factors affecting whether training achieves its desired result. They cited, for example, that members of a team might gain a new competence but then be unable to put it fully into practice because of other factors, such as a lack of equipment or limited clinic time. The group wanted to understand and invest effectively in the system as a whole, considering areas such as staff support, team-working, and workplace design, all of which might unlock the full value of spending on training and development. It was therefore understood from the outset that a systems approach with a diverse participant group would be necessary in the proposed exercise, not only for identifying assessment indicators but also for identifying common determinants or predictors of effectiveness and “value” in training and education.

2.2. Conceptualisation of Value

Before establishing any assessment framework, the multidisciplinary group would need to reach some level of consensus on what their education and training endeavours are intended to achieve. The concept of “value” describes just this: it is “the overall desirability of outcomes, delivered by a course of action or design decision” (Akinluyi, 2017). It is important to engage stakeholders who affect and participate in a system, to draw out their understanding of how it works; but to understand value in a system, questions must be asked about which outcomes matter to the stakeholders whom the system is designed to impact. Are the outcomes desirable or undesirable to them? How important are they?

Sometimes it is difficult to measure how desirable something is. In reality, it depends on who you ask and when you ask it. Even though a healthcare system has a complex mix of stakeholders involved in making the most of training opportunities, the methodology described in this report can help to reconcile stakeholder perspectives and capture the complexity of the system they are participating in.

2.3. The System Impact Model

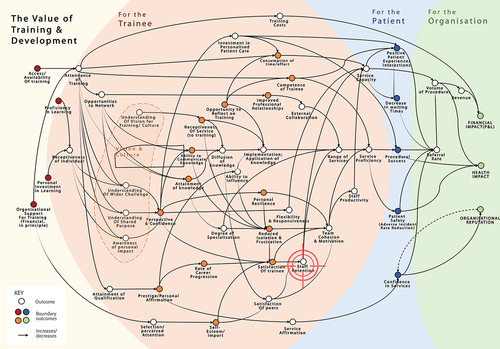

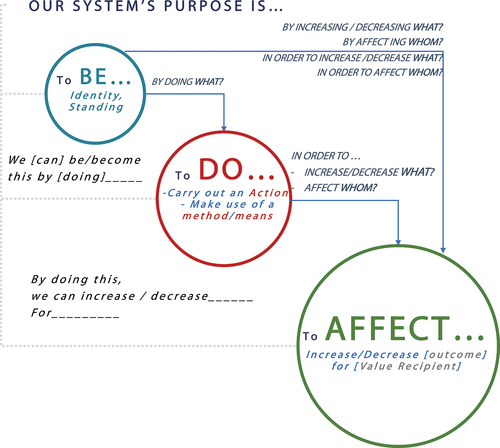

The participatory system and value analysis approach applied here is based on the development of a System Impact Model (SIM): a network of outcomes that act as influences within a situation. By the convention of the SIM, outcomes are defined as attributes that can be increased or decreased. A generic picture of a SIM is illustrated in Figure 1. It is a form of causal mapping whose conventions and extensions can highlight stakeholder perspectives and indicate specific interventions for system improvement.

Figure 1. A generic picture of a system impact model, from Akinluyi et al.’s (2019) paper on outcome identification (with permission).
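To make the SIM convention concrete, a SIM can be held as a directed graph in which each node is an outcome (an attribute that can be increased or decreased) and each edge records only that one outcome affects another, not how. The sketch below is a minimal illustration under stated assumptions: it uses plain Python dictionaries, and the outcome names and links are hypothetical, loosely echoing the training anecdote in Section 2.1 rather than taken from any of the workshop models. It answers a typical SIM question: which outcomes lie upstream of (and so may help or hinder) a given outcome?

```python
from collections import deque

# Hypothetical SIM: each key "affects" the outcomes in its list.
# Edges are unsigned: a SIM records *which* outcomes affect others,
# not *how* they interact.
SIM: dict[str, list[str]] = {
    "trainee competence": ["application of new competence in practice"],
    "availability of equipment": ["application of new competence in practice"],
    "available clinic time": ["application of new competence in practice"],
    "receptiveness of service to training": ["application of new competence in practice"],
    "application of new competence in practice": ["service-user outcomes"],
    "service-user outcomes": [],
}


def antecedents(sim: dict[str, list[str]], target: str) -> set[str]:
    """Return every outcome with a directed path to `target`."""
    # Invert the edges so the walk can go upstream from the target.
    affected_by: dict[str, list[str]] = {node: [] for node in sim}
    for source, targets in sim.items():
        for t in targets:
            affected_by.setdefault(t, []).append(source)

    # Breadth-first search over the inverted edges.
    found: set[str] = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in affected_by.get(current, []):
            if parent not in found:
                found.add(parent)
                queue.append(parent)
    return found


print(sorted(antecedents(SIM, "service-user outcomes")))
```

Keeping edges unsigned mirrors the SIM's deliberate choice to show only which outcomes affect others; a signed or weighted variant would be an extension, not part of the convention described here.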

2.4. The process of developing a system impact model

The SIM and the process for developing it have been described by Akinluyi et al. (2019). The process involves asking oneself, or workshop participants, a sequence of questions in different ways; in doing so, participants’ understanding can be drawn out and captured. In line with the insights of Clarkson et al.’s (2017) “Engineering Better Care” report, it is a process in which “the layout of the system, defining all the elements and interconnections and integrating people, systems, design and risk perspectives” is considered.

The process is supported by various tools. For example, the “core purpose translation” tool (Figure 2) may be applied to take participants’ statements about the core purpose of the (transdisciplinary) challenge and convert them into outcomes in line with the SIM convention. Similarly, the questions used to develop the SIM form a “loop” which allows facilitators and participants to continue to elaborate the system description insofar as time and interest allow. The loop is illustrated in Figure 3.

Figure 2. Core Purpose Translation Tool (with permission). Developed by Akinluyi (11) based on a cross-examination of Statements of Core Purpose from leading Non-Profits, Consumer Companies and the NHS.

Figure 3. Developing an Impact Model Systematically, using a Sequence of Questions from the Outcome Identification Loop, from Akinluyi et al.’s (2019) paper on outcome identification (with permission).
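The questioning loop lends itself to a sketch as an iterative procedure. This is a minimal illustration under stated assumptions, not the published tool: participants’ answers are represented by a hypothetical `ask` callback, each outcome is put to the two questions used in the workshops (“what else might affect this [outcome]?” and “what [else] might this affect?”), and a fixed `budget` of examined outcomes stands in for “insofar as time and interest allow”. The scripted answers are hypothetical.

```python
from collections import deque
from typing import Callable

# Hypothetical callback: given a question and an outcome, return the
# outcomes participants name in reply (in a workshop, sticky notes).
Ask = Callable[[str, str], list[str]]

AFFECTS_THIS = "what else might affect this [outcome]?"
THIS_AFFECTS = "what [else] might this affect?"


def identify_outcomes(seeds: list[str], ask: Ask, budget: int) -> set[tuple[str, str]]:
    """Grow a set of (cause, effect) links outwards from seed outcomes.

    Each outcome taken from the queue is put to both questions; newly
    named outcomes join the queue. `budget` caps how many outcomes are
    examined, standing in for limited workshop time.
    """
    links: set[tuple[str, str]] = set()
    seen = set(seeds)
    queue = deque(seeds)
    examined = 0
    while queue and examined < budget:
        outcome = queue.popleft()
        examined += 1
        for cause in ask(AFFECTS_THIS, outcome):
            links.add((cause, outcome))
            if cause not in seen:
                seen.add(cause)
                queue.append(cause)
        for effect in ask(THIS_AFFECTS, outcome):
            links.add((outcome, effect))
            if effect not in seen:
                seen.add(effect)
                queue.append(effect)
    return links


# Scripted answers standing in for a workshop (hypothetical content).
ANSWERS = {
    (AFFECTS_THIS, "staff retention"): ["staff engagement"],
    (AFFECTS_THIS, "staff engagement"): ["availability, clarity of information"],
    (THIS_AFFECTS, "staff retention"): ["continuity of care"],
}

links = identify_outcomes(["staff retention"], lambda q, o: ANSWERS.get((q, o), []), budget=10)
for cause, effect in sorted(links):
    print(f"{cause} -> {effect}")
```

Lowering `budget` stops the elaboration earlier, which is one way to picture a session that ends before the loop is exhausted.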

2.5. Utility of this approach in principle

This approach can help to elicit the relevant aspects of a situation, including people, resources, opportunities, and challenges, and the relationships between them. It connects proposed interventions to the workings and purpose of the system as a whole, enabling “system designers” to achieve a desired impact more effectively. As such, it is faithful to a definition of a designed system as “an integrated set of elements, subsystems, or assemblies that accomplish a defined objective” (INCOSE, 2015). The SIM as described by Akinluyi et al. (2019) stops short of representing how different outcomes interact and only indicates which outcomes affect others. For the purposes of analysing transdisciplinary phenomena, however, this level of detail in describing causal relationships may be appropriate, since the nuance of these relationships is anticipated to manifest differently in different disciplinary contexts.

The SIM diagram can be used to gather and present knowledge about the impact of initiatives (shown as “QI/Design activities” in Figure 1), including considerations of which factors may determine success or failure. As a tool for systems thinking, it encourages users to consider the situation as it is and to judge how best to act. It can also help to answer crucial questions about who the relevant stakeholders are and what value means to them. The process of generating the SIM has been reported previously (Akinluyi et al., 2019), but it is applied here in the context of healthcare training and education. An account of how this process was carried out is given in the method section.

2.6. Applying this approach: case study in training and education impact

This case study considers a pair of workshops held by King’s Health Partners (KHP) entitled “Identifying the value of investment in education and training”. This exercise had a broader organisational remit than the technical disciplines’ workshops run for SIM case studies reported previously (Akinluyi et al., 2019), and was distinct in that its goal was transdisciplinary. The aim was to use the SIM approach to produce a transdisciplinary measurement framework that could be used to quantify impact and, therefore, to provide insight for prioritising initiatives.

At the time the workshops were carried out, applying and studying the tool was secondary to the goal of producing this framework; the purpose of the activities reported here can be described as assessing the quality (and principally the feasibility) of this approach. Quality was reviewed retrospectively, using the criteria developed and applied by Kotiadis et al. (2014).

3. Method: generating a system impact model for training and education initiatives

As summarised in Table 1, a SIM and assessment framework were developed over the course of two two-hour workshops. The goal was to explore how investment in education and training might be most usefully directed and evaluated; the key output was the assessment framework (a system of measures and indicators for impact assessment) derived from the SIM. These two workshops are described below, and an additional follow-up workshop is briefly described in Section 3.4.

Table 1. Workshop content in overview.

By way of an initial evaluation, the “quality” of the SIM and its application in these case studies is discussed in terms of the criteria of validity, credibility, utility, feasibility, and creativity, as applied by Kotiadis et al. (2014); experience is also considered here. This evaluation is initial and reflective in nature. Acknowledged limitations and indications for subsequent validation are included in the discussion section.

3.1. Assembling of participants

The 30 participants described in Table 2 were invited through KHP’s Education Academy and came from various disciplines and clinical specialisms within one university (King’s College London) and three NHS Trusts (Guy’s & St Thomas’, King’s College Hospital, and South London and the Maudsley NHS Foundation Trusts). Table 2 represents participants’ specialisms at the time of the workshop and does not account for prior roles and experience, which might also have enriched contributions. Participants were not specifically selected by the authors, as might otherwise be the case (“Step 1: Assembling the Team” in the SIM process outline of the background section).

Table 2. Participant Backgrounds.

3.2. Workshop 1 (Building the SIM)

The overall structure of the workshop was:

Pre-work

Introduction to the concepts of systems thinking and value.

Identification of desired outcomes and value perspectives (based on pre-work)

Group application of the SIM method to relevant case studies

Feedback and identification of key issues

Next steps

3.2.1. Pre-work: establishing core purpose (Step 2)

Prior to the workshop, participants were asked to prepare a short answer to the question: “what are we hoping to achieve through education, training and development?” This served to prime the discussion and to prepare content for “seeding the SIM”.

3.2.2. Seeding the system impact model (SIM) (Step 3)

Whilst introducing themselves, participants presented their answers to the pre-work question; approximately fifteen minutes were then spent translating these “statements of purpose” into desired outcomes. These outcomes were recorded on sticky notes and grouped into key themes on a board to form an affinity diagram. These themes formed the high-level outcomes that particularly represented value to senior staff in the organisation; they would seed the SIM and act as “boundary” outcomes.

3.2.3. Developing the SIM (Step 4)

Participants were then divided into four groups. Each group was assigned a predetermined “persona” to work with. These personas were based on discussions with various stakeholders prior to the workshop. They were:

an Allied Health Professional on rotation, looking to develop leadership capabilities.

a Doctor (specialist registrar), working within a multidisciplinary clinical team, with research interests, looking to attend a conference.

a Nurse, studying for a Master’s degree while working and looking to progress.

a Manager, considering taking up a professional qualification.

Each group spent time developing their personas. These then formed the focal point for further SIM development.

Participants were also asked to identify further trainee boundary outcomes by considering “what else might affect [the persona]?” in the context of the individual challenges and development paths faced by their group’s persona. They then identified antecedent outcomes by discussing factors that could help or hinder the delivery of the boundary outcomes identified, addressing the question “what else might affect this [outcome]?”

Up to this point, the outcomes that participants had identified were recorded in one column of a table on a worksheet. Participants were then asked to reflect on the impact of the outcomes they had listed on a number of stakeholders: the persona developed within their group, their department, and associated service users. In doing this, they considered the question “what [else] might this affect?”

3.2.4. Arranging the impact model (initiating Step 5)

By addressing each of the outcome-identification questions (Figure 3), participants had built up the content of the SIM and recorded it in tables of outcomes. They then attempted to arrange these outcomes into SIMs of their own. Given time constraints, it was not possible to collate all of the insights into a single SIM; instead, each of the four groups developed its own SIM, with mixed levels of success. These were then presented and discussed. This provoked a discussion about the importance of colleagues “buying in” to what individuals have learned in their continuing professional development (i.e., the “receptiveness of service to training” outcome in the final SIM). The content of the outcome tables was then arranged into a draft SIM, ready for review at a second workshop.
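Arranging several groups’ outcome tables into one draft SIM is, in effect, a merge of small graphs whose outcome wording differs. The sketch below is a minimal illustration, assuming each group’s output can be written as a list of (cause, effect) pairs and that facilitators agree a hypothetical synonym map to reconcile wording; all names are illustrative, and the plain set union shown here is not the authors’ documented procedure.

```python
# Hypothetical outputs from two groups, as (cause, effect) pairs.
group_a = [("team buy-in", "use of new skills"), ("use of new skills", "patient experience")]
group_b = [("receptiveness of service to training", "application of learning")]

# Hypothetical wording agreed by facilitators: variant -> canonical outcome.
SYNONYMS = {
    "team buy-in": "receptiveness of service to training",
    "use of new skills": "application of learning",
}


def canonical(outcome: str) -> str:
    """Normalise case and whitespace, then map agreed variants to one name."""
    key = " ".join(outcome.lower().split())
    return SYNONYMS.get(key, key)


def merge(*groups: list[tuple[str, str]]) -> set[tuple[str, str]]:
    """Union the groups' links after renaming, dropping duplicates and self-loops."""
    merged: set[tuple[str, str]] = set()
    for links in groups:
        for cause, effect in links:
            c, e = canonical(cause), canonical(effect)
            if c != e:  # renaming can collapse a link onto a single outcome
                merged.add((c, e))
    return merged


for cause, effect in sorted(merge(group_a, group_b)):
    print(f"{cause} -> {effect}")
```

Most of the real effort lies in agreeing the synonym map, which is a judgement call for participants rather than something code can settle.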

3.3. Workshop 2 (Completing the SIM and deriving outcome indicators)

This second workshop was convened as a meeting to review the draft SIM and was attended by a smaller group of 12 people. It began with a summary of, and feedback from, the first workshop. The draft SIM was then presented and discussed in three groups. The reception was positive, and participants immediately set about interrogating, elaborating, and suggesting changes to the working model.

3.3.1. Drawing system boundaries (completing Step 5)

To complete the working SIM, participants focused first on the patient/service-user perspective, highlighting boundary outcomes that were directly experienced by patients and service users. These outcomes are highlighted in blue in the final SIM. This process of boundary identification was then repeated from the perspectives of trainees (orange) and corporate staff (green).

The model stimulated a discussion about its implications for investment in training. It raised questions such as, “if ‘receptiveness of service to training’ is such a crucial factor, do we need to invest effort in dealing with this rather than creating more and more training programmes?” Participants also noted a set of key outcomes relating to “Vision and Culture” which have the potential to unlock downstream benefits. These could be supported, for example, through leadership development, corporate communications, and networking opportunities. Another crucial factor, “staff retention”, was also highlighted, and this became the subject of a further workshop (see Section 3.4).

3.3.2. Deriving measures and indicators (Step 6)

Participants in the second workshop were asked to set aside those boundary outcomes in the final SIM that were, by comparison with the others, of lower priority where investment is concerned. They were then asked to consider ways in which they might measure and monitor the remaining key boundary outcomes. Three groups separately considered the boundary outcomes affecting key stakeholders: service-users/patients, trainees, and organisational systems. The resulting boundary outcomes and suggested system measures are summarised in Table 3. If the right stakeholders are engaged, and each stakeholder group is involved in developing the SIM, this system of measures represents a holistic system of measurement and captures key considerations for those the system serves. It is, however, questionable whether participants of the second workshop fully represented those of the first workshop, and this will have influenced the output in Table 3.

Table 3. Outcome measures/indicators suggested in the workshops.

One of the key challenges identified in this part of the exercise was the need to capture and reconcile qualitative measures for key outcomes, such as staff engagement. It was helpful to focus on these more challenging indicators as a group. In just a short time, participants were able to consider a range of novel approaches, including various psychometric and review methods such as engagement (Jagannathan, 2014) and resilience (Ng & Nicholas, 2015) surveys. Moreover, these were considered alongside quantitative measures, such as those derived from the analysis of coded procedural data. Although qualitative and quantitative measures can be difficult to reconcile, the SIM provides a context for considering different indicators alongside one another and can therefore guide discussions about what these measures mean for stakeholders.

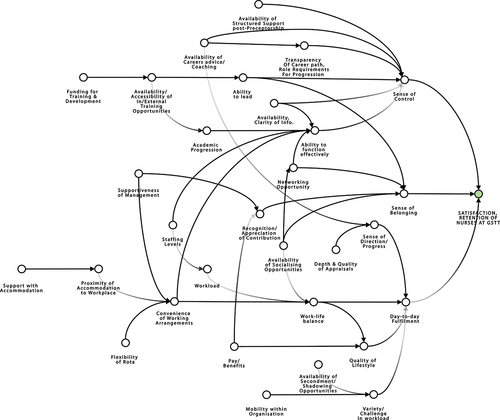

3.4. Additional workshop: elaborating the SIM, to target nurse retention

In an unplanned addition to the two main workshops, one of the participants, a Nursing placement Development Facilitator, approached the authors to apply the same approach elsewhere: to the challenge of increasing “staff retention” in the pool of trainee nurses at Guy’s & St Thomas’. Nursing staff retention was a widely recognised concern (Finlayson et al., 2002), which had recently been exacerbated by the removal of NHS bursaries from nursing, midwifery, and most allied health students and their replacement by student loans. The authors were asked to contribute to an existing set of workshops being run as part of the Trust’s “preceptorship” programme. Using the final SIM as a starting point, and by successively asking the question “what [else] might affect this [outcome]?” in small groups, the 20 nursing trainees in attendance were asked to design system interventions that would appeal to them.

A number of detailed interventions and ideas were elicited from, and discussed by, the group. Insights from this discussion were taken away and put together by a small group, who translated them into outcomes. The outcomes were then presented to the next group of trainees the following week, so that a second cohort could refine the work and identify any important issues missing from the picture. The results of this two-stage process were used to produce the nurse retention impact model. Although participants were asked to reflect on how these outcomes would subsequently impact service users and the organisation as a whole, their focus was, unsurprisingly, on nursing staff retention, which is why no system boundaries are drawn. By contrast with the two planned workshops, this impact model was drafted by the Nursing placement Development Facilitator with limited direct facilitation; it was subsequently revised and refined with some support, but only at the level of consultation and peer review.

In subsequent discussions about the application of the nurse retention impact model, it was seen as a potential springboard for developing new initiatives to retain staff. Outcomes on the left-hand side of the impact model correspond to “triggers” that can be directly influenced to effect changes in the wider system of which the model forms a part. These outcomes form what engineers refer to as “(solution-neutral) problem statements” (Pahl & Wallace, 2002), which are particularly useful for seeding design exercises as part of a creative problem-solving process. For example, the problem statement “devise a means for [supporting staff with accommodation]” was considered in detail by the nursing trainees. This prompted a lively and creative discussion about potential interventions, including suggestions such as “pursue corporate support from nearby hotels and facilitating staff to stay close to work cheaply during periods of high service demand”. In another discussion about how the Trust could “devise a means for increasing [availability, clarity of information]”, trainees highlighted that, on a practical level, increasing their awareness of, and access to, the Trust’s intranet pages from mobile devices would help them engage with the organisation and improve their training experience. These exercises illustrated how the systems approach and the tools presented here can be used to stimulate the design of complex systems and facilitate continuous improvement.
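Reading the left-hand “triggers” off a model and phrasing them as solution-neutral problem statements is mechanical enough to sketch. The sketch below assumes the model is held as (cause, effect) links and treats triggers as outcomes that no other outcome in the model affects (no incoming link). That is an interpretation of “left-hand side”, not a rule stated by the method. The template follows the “devise a means for increasing [...]” wording quoted above, and the links are hypothetical.

```python
# Hypothetical fragment of a retention impact model as (cause, effect) links.
LINKS = [
    ("support with accommodation", "staff wellbeing"),
    ("availability, clarity of information", "engagement with the organisation"),
    ("staff wellbeing", "staff retention"),
    ("engagement with the organisation", "staff retention"),
]


def triggers(links: list[tuple[str, str]]) -> list[str]:
    """Outcomes that appear as causes but are never affected by another outcome."""
    causes = {c for c, _ in links}
    effects = {e for _, e in links}
    return sorted(causes - effects)


def problem_statements(links: list[tuple[str, str]]) -> list[str]:
    """Phrase each trigger as a solution-neutral problem statement."""
    return [f"devise a means for increasing [{t}]" for t in triggers(links)]


for statement in problem_statements(LINKS):
    print(statement)
```

Because SIM outcomes are attributes that can be increased or decreased, a single “increasing” template fits them uniformly, though facilitators may prefer more natural wording for individual statements, as in the accommodation example above.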

3.5. Assessing the quality of the SIM

The aim of this work was to develop a framework for the assessment of initiatives to improve education and training; as such, the feedback from participants described in the next section was informal. In the discussion section, the authors reflect on the SIM’s “quality” based on the experience and outputs of the exercise and on feedback from KHP participants. Quality is discussed in terms of validity, credibility, utility, feasibility, and creativity, as applied by Kotiadis et al. (2014) in their assessment of a framework “tailored to healthcare that supports interaction of simulation modellers with a group of stakeholders to arrive at a common conceptual model”. Since their task also involved bringing multiple disciplines to consensus, it was judged that their requirements and assessment criteria might usefully guide reflections here. These reflections are presented in the discussion section, along with indications for subsequent method validation.

4. Discussion

The “quality” of the SIM and its application in these case studies is discussed in terms of the criteria of validity, credibility, utility, feasibility, and creativity, as applied by Kotiadis et al. (2014), albeit with differences in application, since the goal of this exercise was to generate a transdisciplinary assessment framework rather than a computer simulation, as theirs was. Experience is also considered here.

4.1. Feasibility

Where evaluating the approach was concerned, establishing feasibility was the main purpose of the exercise. In this context, “feasibility” relates primarily to the ability of the modellers and clients (i.e., participants) to use the approach described to generate the transdisciplinary SIM and framework. The account of the workshops given in the previous section, and the resulting outputs (the final SIM and the measures in Table 3), demonstrate that it was possible to produce these “hard” deliverables using this approach, albeit with facilitation. The next step in assessing feasibility would be to consider if and how a SIM might be produced with limited or no facilitation. The nurse retention impact model was initially drafted with limited facilitation, which is an indication of feasibility, but a more rigorous feasibility study is desirable.

The workshops demonstrated that it is feasible to coordinate and combine diverse contributions from a variety of stakeholders (see Table 2) in a transdisciplinary exercise. Although one might argue that these participants have similar demographic backgrounds, their experiences and practices come from organisationally and clinically distinct contexts, and the exercise is still considered to be transdisciplinary. The challenges (Kornevs, 2019; Kornevs et al., 2018) of aligning efforts in spite of differences in communication style and motivation (G. Moore et al., 2021) can be said to have been met in the production of the SIM. A significant factor in this success was the centrality of “value” to the SIM approach. The decision to align on a sufficiently abstract, shared purpose at the outset of the exercise, when “seeding the SIM”, meant that discussion was focussed on a common idea. The rest of the SIM-generation process (see Figure 3) and its questioning approach are designed to maintain focus on the factors that matter most, and its conventions mean that the outcomes within the SIM are expressed in an abstract and transferable way.

The decision to use personas to elicit discussion was another factor that focussed discussions while allowing individual specialists to express themselves. It might be possible to expand on this with a toolkit of methods that pose questions about the system in different ways, but the personas alone achieved considerable success.

4.1.1. Difficulties in SIM amalgamation/transcription

Perhaps the main shortcoming of the approach described in this paper was the effort required to transcribe the contributions of many participants into a single SIM. These diagrams are intended to capture and rationalise the complexity of the situations that they model; it is both important and challenging to achieve this in an orderly way. On reflection, the authors would recommend that, where possible, workshop outputs be collated directly into draft SIMs as the final part of a workshop. Where this is not possible, mapping system impact post hoc is enlightening but intensive, and necessitates follow-up appointments with participants to check the model and gain further insights. In either case, enlisting a subgroup of participants to review and curate the final SIM proved valuable. This is primarily an issue of efficiency: the efficacy of the approach for assimilating the views of diverse participants and disciplines was promising. The method may offer some improvement on the experiences reported with similar approaches (Landa-Avila et al., 2022; G. Moore et al., 2021), but a more detailed concurrent validation exercise would be needed to explore this in depth.

4.1.2. Bias in the SIM

The relative detail and density of the SIM at the “trainee” boundary indicates a bias towards elaborating those parts of the SIM that were most relevant to the interests of trainees, as opposed to those of service users. This was largely because of the profile of participants. Although the participant make-up was sufficiently diverse to test the methodology as a tool for delivering challenging transdisciplinary exercises (Kornevs, 2019; Kornevs et al., 2018), patients were conspicuously absent from the workshops. The nature of the workshops, and the way they were set up, inherently placed the focus on educators and trainers trying to deliver value to trainees.

Further bias in the workshop output emerges from asymmetry in attendance between workshop one and workshop two. In the selection of boundary outcomes for measurement, outcomes highlighted by participants of workshop one have been discounted or deprioritised by participants of the smaller, follow-up workshop. While having the same participants throughout the exercise may not be practically achievable, it is important to ensure that the participant group remains representative. Furthermore, it might be possible to mitigate the risk of unduly excluding outcomes from the SIM altogether by replacing the discounting/discarding process with rank prioritisation.
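
As a minimal sketch of what rank prioritisation could look like, assuming each workshop produces an ordered list of candidate boundary outcomes, a Borda count can merge the lists so that outcomes favoured by only one group move down the priority order rather than being removed. The choice of a Borda count, the function name `borda_aggregate` and the outcome labels are illustrative assumptions, not part of the SIM method as reported.

```python
from collections import defaultdict


def borda_aggregate(rankings: list[list[str]]) -> list[tuple[str, float]]:
    """Merge several ranked lists of outcomes into a single priority order.

    Hypothetical helper using a Borda count: in a ranking of length n, the
    outcome in position i (0-based) earns n - i points. An outcome that a
    group did not rank earns nothing from that group, so it is deprioritised
    rather than discarded from the SIM.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        n = len(ranking)
        for position, outcome in enumerate(ranking):
            scores[outcome] += n - position
    # Highest score first; ties broken alphabetically so the order is stable.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Illustrative (made-up) outcome labels, not taken from the workshops.
workshop_one = ["patient safety", "staff retention", "trainee confidence"]
workshop_two = ["staff retention", "trainee confidence"]
print(borda_aggregate([workshop_one, workshop_two]))
# [('staff retention', 4.0), ('patient safety', 3.0), ('trainee confidence', 2.0)]
```

Here “patient safety”, ranked only by the first (larger) group, stays in second place instead of being dropped. Where attendance differs markedly between sessions, each ranking's points could also be weighted by group size; that weighting would be a further design assumption.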

With these biases considered, the development of the SIM is fundamentally an iterative process, and the SIM presented here could be applied in further work to better understand how training ultimately affects patient experience. This might involve a review of patient feedback or, better still, the direct engagement of patient groups and representatives. Developing and testing this approach with patients is likely to stretch it in different ways. For example, it might affect the power dynamics of interactions, which may have implications for how break-out groups and workshop stages should be organised to retain openness while increasing breadth of participation (Geuens et al., 2018; Greenhalgh et al., 2019).

4.2. Validity and credibility

By some definitions, validity is the fidelity of the SIM to the situations faced by participants in their professional practice. Credibility, here, is taken to mean whether the accuracy of the SIM is perceived to be sufficient for its intended purpose (Kotiadis et al., 2014).

One area of challenge in this exercise, and a likely challenge in other complex transdisciplinary exercises (G. Moore et al., 2021), was representing the interrelationships between various stakeholder groups in the context of the system. The marking of boundaries in the SIM helpfully illustrates this. The SIM presents a series of nested systems of value delivery, each pertaining to a different stakeholder group. The range of stakeholders considered can be expanded by adding and adapting boundaries; for example, with slight modification, the SIM could incorporate the considerations of the quadruple aim (Sikka et al., 2015) in its bands and boundaries.

The focus of the exercise was on the delivery of outputs and on feasibility assessment. A more rigorous evaluation would require additional, more formal data collection to establish validity and credibility than the informal, open-form feedback survey that was used. For example, it might be appropriate to consider the concurrent validity of the SIM with the Kirkpatrick (1954) model. Within the SIM, participants organically elicited indicators of training impact that align with the Kirkpatrick model. Kirkpatrick’s “level 3: behaviour” indicators, for instance, might map to outcomes such as “Implementation: application of knowledge” and “receptiveness of service”, while “level 4: results” indicators might encompass all the outcomes to the right of the trainee boundary. Being primarily a method for assessing the impact of training itself, the Kirkpatrick model is not directly equivalent in scope to the SIM (which can also represent initiatives to improve training), but there is sufficient overlap for the Kirkpatrick model to provide a benchmark of validity. Insofar as there is an increasing need for transdisciplinary participatory methods (Swinburn et al., 2019; Wardani et al., 2022), it would be valuable to re-apply this method and evaluate validity and credibility with greater rigour.

Similarly, it may be worthwhile to consider the concurrent validity of the SIM with a Theory of Change, which is increasingly used to evaluate the impact of interventions in healthcare (Breuer et al., 2015). Although the models that the two methods develop are distinct (a Theory of Change being closer to a chain or tree of events, and the SIM closer to a network of factors), they are similar enough to warrant cross-validation: both approaches consider a form of outcomes and the causal relationships between them, and the “backwards mapping” of Theories of Change appears to correspond to the “design” question (Question 3) of the SIM.

4.3. Utility

“Utility” relates to the perception that the SIM can ultimately be used to improve decision-making and system design. Further work would be required to evaluate quality improvements made as a result of the SIM workshops described in this report, but provisionally the approach shows promise in a number of areas. Perhaps most notably, the workshops and SIM promote systems enquiry: they appeared to empower participants to question practice and proactively seek solutions. For example, the SIM built in the first two workshops inspired the follow-up exercise on nursing retention. As well as empowering system designers, the SIM could be said to equip them for system analysis and creative problem-solving, as discussed below.

In interpreting the SIM, understanding the causal relationships between outcomes remains a challenge; however, this diagramming technique frames these challenges coherently. More information could be added to SIMs by assigning ± signs to links (as is common in causal mapping more generally) (Maani & Cavana, 2007; Sterman, 2000), weightings, or other descriptors that indicate the strength of causal relationships, or the degree of complexity, by modelling nonlinear relationships between outcomes. A quantitative trade-off of outcomes of this kind has been considered in more detail by Akinluyi (2017). Such approaches are useful in some applications, but the type of SIM described in this report represents the relationships between outcomes and value sufficiently well to guide investment in system improvement.
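
To make the idea of signed, weighted links concrete, the following is a minimal sketch, assuming a SIM fragment is stored as a directed graph whose links carry a polarity (±1) and a participant-assigned weight. It follows the usual causal-loop convention that the polarity of a path is the product of its link signs; multiplying weights to obtain a path strength is an additional simplifying assumption, and all node labels, class names and function names are hypothetical.

```python
from dataclasses import dataclass
from math import prod
from typing import Iterator


@dataclass(frozen=True)
class Link:
    target: str
    sign: int      # +1: more of the source gives more of the target; -1: less
    weight: float  # hypothetical strength in [0, 1], e.g. elicited from participants


Path = list[tuple[str, Link]]  # sequence of (source node, outgoing link)


def simple_paths(graph: dict[str, list[Link]], source: str, goal: str,
                 visited: frozenset[str] = frozenset()) -> Iterator[Path]:
    """Yield every cycle-free path from source to goal (depth-first)."""
    visited = visited | {source}
    for link in graph.get(source, []):
        if link.target == goal:
            yield [(source, link)]
        elif link.target not in visited:
            for rest in simple_paths(graph, link.target, goal, visited):
                yield [(source, link)] + rest


def path_effect(path: Path) -> tuple[int, float]:
    """Polarity = product of link signs; strength = product of weights (assumed)."""
    polarity = prod(link.sign for _, link in path)
    strength = prod(link.weight for _, link in path)
    return polarity, strength


# Hypothetical SIM fragment: extra training hours improve knowledge but
# reduce time on the ward, so they reach "value" by two paths of opposite sign.
sim = {
    "training hours": [Link("staff knowledge", +1, 0.8), Link("time on ward", -1, 0.5)],
    "staff knowledge": [Link("patient outcomes", +1, 0.6)],
    "time on ward": [Link("patient outcomes", +1, 0.7)],
    "patient outcomes": [Link("value", +1, 1.0)],
}

for path in simple_paths(sim, "training hours", "value"):
    nodes = [src for src, _ in path] + [path[-1][1].target]
    print(" -> ".join(nodes), path_effect(path))
```

The first path printed has positive polarity and the second negative, which is the kind of trade-off an unsigned SIM leaves implicit. Combining the two path strengths into a single net effect would need further assumptions about how effects interact, which is where nonlinear modelling would come in.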

4.4. Creativity

Creativity in the context of system modelling is “seeing a problem in an unusual way, seeing a relationship in a situation that other people fail to see, ability to define a problem well, or the ability to ask the right questions” (Büyükdamgaci, 2003; Kotiadis et al., 2014). The SIM shows promise as a tool for signalling design interventions. Insofar as design is driven by representation (Simon, 1973), the SIM provides another mapping that can guide would-be system designers about where to intervene in a system to improve things. When applied to the workforce challenges explored here, interventions can be identified at an organisational level, for example where targeted outcomes in the SIM are used to form solution-neutral problem statements such as “devise a means of improving variety and challenge in nursing workload”; or at an individual level, where the SIM becomes a reflective tool. An individual with a systems view can identify their own goals and see how these fit with those of others working alongside them. Any mismatch between individual and organisational outcome boundaries will create a tension that may adversely affect the definition and achievement of an overall shared purpose. This could be used to identify where individuals and teams might need to invest in their own development.

SIMs can also highlight areas for investigation, for example how “personal resilience” interacts with “team cohesion and motivation”. Drawing out this relationship from the SIM identifies an area of study that is of interest and that could be addressed, for example, by correlating the output of feedback surveys with other indicators. The relationships that link most strongly to the overall value agenda are those where further investment in understanding the system might provide the best insights.
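
As a hedged illustration of the correlation step, assuming team-level mean scores for “personal resilience” and “team cohesion and motivation” are available from feedback surveys, a Pearson coefficient gives a first indication of whether the two move together. The function name and the example scores below are invented for illustration; they are not data from this study.

```python
from math import sqrt
from statistics import fmean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two paired series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two paired series with at least two points")
    mean_x, mean_y = fmean(xs), fmean(ys)
    # Sums of cross-products and squared deviations; the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Made-up team-level survey means (1-5 scale), for illustration only.
resilience = [3.1, 3.8, 2.9, 4.2, 3.5]
cohesion = [3.0, 3.9, 3.2, 4.0, 3.4]
print(round(pearson_r(resilience, cohesion), 2))
```

A strong coefficient on its own would not show which way the influence runs, and with only a handful of teams it would be very uncertain; it would mainly flag the corresponding SIM relationship as worth closer study.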

4.5. Experience

Feedback from the workshops confirmed that time spent reflecting on shared purpose and system complexity produces benefits in terms of cohesiveness; it created a “shared understanding” and “an appreciation of commonalities”. This was evident from the level of engagement and the rich outputs of the workshops. Participants also gave constructive informal feedback.

The authors note that the workshop configuration could have been improved for the benefit of the participants. Feedback was largely positive, but there was criticism that not all participants from the first workshop could see the fruits of their work directly. For some, discussions held in the workshop were of interest and seeded ideas about how they might apply this approach (this was the trigger for the exercise to explore nurse retention); for others, the process instead drew out existing frustrations about training effectiveness and provided little immediate pay-off. Given the complexity of the situation being modelled, it is important to manage participants’ expectations. A two-hour workshop can provoke interesting discussion and even elicit new ideas for interventions, but it is unlikely to yield an immediate “solution” to the challenges faced in a complex situation. With the benefit of hindsight, it would have been advantageous to advertise the workshop as a two-part event from the outset. Attendees of the second workshop/review meeting were the ones who could most appreciate the output of the first.

5. Concluding remarks

The approach described here is a participatory approach to healthcare system design that uses value as a key principle. It was shown to be particularly useful in multidisciplinary collaborative exercises with transdisciplinary, framework- and policy-level outputs. Such frameworks become necessary when dealing with challenges that manifest in different, siloed settings but are, to some degree, ‘global’, whether within an organisation or systemic in a broader sense (Swinburn et al., 2019; Wardani et al., 2022).

There is scope for this SIM-building method to develop further, but the participants involved and the questions asked represented a “true systems approach that successfully integrated people, systems, design, and risk perspectives in an ordered and well executed manner” (Clarkson et al., 2017). As a whole, this approach considers, through open-ended systems appraisal, what is important to various stakeholders and extracts an understanding of what needs to be considered to deliver the desired impact. It does so in a sufficiently abstract way to retain transferability. As a causal map, the SIM works at a level of abstraction that accommodates modelling of systems both as-is and to-be; but it is a specific type of causal map, emphasising boundary definition for multiple levels of stakeholders.

In terms of systematic output, this approach identifies opportunities for strategic improvement and transformation. It therefore shows potential to complement and structure subsequent quality improvement and design initiatives. Additionally, the approach’s underlying conceptualisation of value and shared purpose is engendered in participants throughout the exercise, to positive effect.

To develop this approach further, the next step is to update the SIM methodology based on the reflections and feedback gathered herein. Subsequently, a more rigorous evaluation might be carried out. It is of particular interest to explore the feasibility of generating a SIM with limited facilitation, especially with the involvement of a wider group of system stakeholders, including patient groups. In the specific application of the SIM to create an assessment framework for education and training initiatives, it would be valuable to carry out a concurrent validation exercise of the output, using other existing frameworks as a benchmark. That said, the participant feedback received and the manner in which the SIM was used in follow-up demonstrate the promise of this type of participatory systems approach.

Contributor Statement

EA led the practical development of the methodology described, with contributions from PJC. The workshop was delivered by KI, AG and EA. EA and AG prepared the first draft of the publication. AG developed the content of the publication. All authors contributed to the final version of this paper.

Acknowledgements

The following are thanked for their contributions to the workshops:

Deirdre Gibbons is thanked for her secretarial support and administrative input.

Rachael Jarvis (Education Academy Administration Manager, KHP).

Ruth Sivanesan (Nursing Placement Development Facilitator, Guy’s & St Thomas’).

Workshop attendees from KHP’s Education Academy are thanked for their contributions to the education, training and development SIM.

Workshop attendees from Guy’s & St Thomas’ Trainee Nurse Cohort are thanked for their contributions to the staff retention SIM.

Sarah Underhill-Hurst (Medical Physics Administrator, Guy’s & St Thomas’), for her help in transcribing the workshop output.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Akinluyi, E. A. (2017). The modelling of value for the systematic design of medical technological infrastructure [PhD thesis].

- Akinluyi, E. A., Ison, K., & Clarkson, P. J. (2019). Mapping outcomes in quality improvement and system design activities: The outcome identification loop and system impact model. BMJ Open Quality, 8, e000439. https://doi.org/10.1136/bmjoq-2018-000439

- Appel, J., & Kim-Appel, D. (2018). Towards a transdisciplinary view: Innovations in higher education. International Journal of Teaching and Education, 6(2), 61–74. https://doi.org/10.20472/TE.2018.6.2.004

- Bleakley, A., & Cleland, J. (2015). Sticking with messy realities: How thinking with complexity can inform healthcare education research. Researching Medical Education, 1, 81–92. https://doi.org/10.1002/9781118838983.ch8

- Breuer, E., Lee, L., De Silva, M., & Lund, C. (2015). Using theory of change to design and evaluate public health interventions: A systematic review. Implementation Science, 11(1), 1–17. https://doi.org/10.1186/s13012-016-0422-6

- Browne, J., Bullock, A., Parker, S., Poletti, C., Jenkins, J., & Gallen, D. (2021). Educators of healthcare professionals: Agreeing a shared purpose (p. 180). Cardiff University Press.

- Büyükdamgaci, G. (2003). Process of organizational problem definition: How to evaluate and how to improve. Omega, 31(4), 327–338. https://doi.org/10.1016/S0305-0483(03)00029-X

- Choi, B. C., & Pak, A. W. (2006). Multidisciplinarity, interdisciplinarity and transdisciplinarity in health research, services, education and policy: 1. Definitions, objectives, and evidence of effectiveness. Clinical and Investigative Medicine, 29(6), 351–364. PMID: 17330451.

- Clarkson, P. J., Bogle, D., Dean, J., Tooley, M., Trewby, J., Vaughan, L., Adams, E., Dudgeon, P., Platt, N., & Shelton, P. (2017). Engineering better care: A systems approach to health and care design and continuous improvement. Royal Academy of Engineering.

- De Savigny, D., & Adam, T. (Eds.). (2009). Systems thinking for health systems strengthening. Alliance for Health Policy and Systems Research, World Health Organization.

- Dorst, C. (2018). Mixing practices to create transdisciplinary innovation: A design-based approach. Technology Innovation Management Review, 8(8), 60–65. https://doi.org/10.22215/timreview/1179

- Finlayson, B., Dixon, J., Meadows, S., & Blair, G. (2002). Mind the gap: The extent of the NHS nursing shortage. BMJ, 325(7363), 538–541. https://doi.org/10.1136/bmj.325.7363.538

- Fletcher, M. (2007). Continuing education for healthcare professionals: Time to prove its worth. Primary Care Respiratory Journal: Journal of the General Practice Airways Group, 16(3), 188–190. https://doi.org/10.3132/pcrj.2007.00041

- Foadi, N., Koop, C., Mikuteit, M., Paulmann, V., Steffens, S., & Behrends, M. (2021). Defining learning outcomes as a prerequisite of implementing a longitudinal and transdisciplinary curriculum with regard to digital competencies at Hannover Medical School. Journal of Medical Education and Curricular Development, 8, 23821205211028347. https://doi.org/10.1177/23821205211028347

- Geuens, J., Geurts, L., Swinnen, T. W., Westhovens, R., Van Mechelen, M., & Abeele, V. V. (2018). Turning tables: A structured focus group method to remediate unequal power during participatory design in health care. In Proceedings of the 15th Participatory Design Conference: Short Papers, Situated Actions, Workshops and Tutorial - Volume 2 (PDC ’18). Association for Computing Machinery, New York, NY, USA, Article 4, 1–5. https://doi.org/10.1145/3210604.3210649

- Greenhalgh, T., Hinton, L., Finlay, T., Macfarlane, A., Fahy, N., Clyde, B., & Chant, A. (2019). Frameworks for supporting patient and public involvement in research: Systematic review and co‐design pilot. Health Expectations, 22(4), 785–801. https://doi.org/10.1111/hex.12888

- Greenhalgh, T., & Papoutsi, C. (2018). Studying complexity in health services research: Desperately seeking an overdue paradigm shift. BMC Medicine, 16(1), 95. https://doi.org/10.1186/s12916-018-1089-4

- Hamman, W. R. (2014). The complexity of team training: What we have learned from aviation and its applications to medicine. BMJ Quality & Safety, 13(suppl_1), i72–i79. https://doi.org/10.1136/qshc.2004.009910

- INCOSE. (2015). International Council on Systems Engineering (INCOSE) Systems Engineering Handbook. John Wiley & Sons.

- Jagannathan, A. (2014). Determinants of employee engagement and their impact on employee performance. International Journal of Productivity & Performance Management, 63(3), 308–323. https://doi.org/10.1108/IJPPM-01-2013-0008

- Kang, H., Nembhard, H. B., Curry, W., Ghahramani, N., & Hwang, W. (2017). A systems thinking approach to prospective planning of interventions for chronic kidney disease care. Health Systems, 6(2), 130–147. https://doi.org/10.1057/hs.2015.17

- Kiekens, A., Dierckx de Casterlé, B., Vandamme, A. M., & Cremonini, M. (2022). Qualitative systems mapping for complex public health problems: A practical guide. PLoS ONE, 17(2), e0264463. https://doi.org/10.1371/journal.pone.0264463

- Kirkpatrick, D. L. (1954). Evaluating human relations programs for industrial foremen and supervisors. University of Wisconsin–Madison.

- Kornevs, M. (2019). Assessment of application of participatory methods for complex adaptive systems in the public sector [PhD dissertation]. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-243022

- Kornevs, M., Baalsrud Hauge, J., & Meijer, S. (2018). Perceptions of stakeholders in project procurement for road construction. Cogent Business & Management, 5(1), 1520447. https://doi.org/10.1080/23311975.2018.1520447

- Kotiadis, K., Tako, A., & Vasilakis, C. (2014). A participative and facilitative conceptual modelling framework for discrete event simulation studies in healthcare. The Journal of the Operational Research Society, 65(2), 197–213. https://doi.org/10.1057/jors.2012.176

- Landa-Avila, I. C., Escobar-Tello, C., Jun, G. T., & Cain, R. (2022). Multiple outcome interactions in healthcare systems: A participatory outcome mapping approach. Ergonomics, 65(3), 362–383. https://doi.org/10.1080/00140139.2021.1961018

- Lehane, E., Leahy-Warren, P., O’Riordan, C., Savage, E., Drennan, J., O’Tuathaigh, C., O’Connor, M., Corrigan, M., Burke, F., Hayes, M., Lynch, H., Sahm, L., Heffernan, E., O’Keeffe, E., Blake, C., Horgan, F., & Hegarty, J. (2019). Evidence-based practice education for healthcare professions: An expert view. BMJ Evidence-Based Medicine, 24(3), 103–108. https://doi.org/10.1136/bmjebm-2018-111019

- Lindgren, Å., Bååthe, F., & Dellve, L. (2013). Why risk professional fulfilment: A grounded theory of physician engagement in healthcare development. The International Journal of Health Planning and Management, 28(2), e138–e157. https://doi.org/10.1002/hpm.2142

- Maani, K., & Cavana, R. (2007). Systems thinking, system dynamics: Managing change and complexity (2nd ed.). Pearson Education.

- Mantzourani, E., Desselle, S., Le, J., Lonie, J. M., & Lucas, C. (2019). The role of reflective practice in healthcare professions: Next steps for pharmacy education and practice. Research in Social & Administrative Pharmacy, 15(12), 1476–1479. https://doi.org/10.1016/j.sapharm.2019.03.011

- Marchal, B., Abejirinde, I. O., Sulaberidze, L., Chikovani, I., Uchaneishvili, M., Shengelia, N., Diaconu, K., Vassall, A., Zoidze, A., Giralt, A. N., & Witter, S. (2021). How do participatory methods shape policy? Applying a realist approach to the formulation of a new tuberculosis policy in Georgia. BMJ Open, 11(6), e047948. https://doi.org/10.1136/bmjopen-2020-047948

- Moore, D. E., Chappell, K., Sherman, L., & Vinayaga-Pavan, M. (2018). A conceptual framework for planning and assessing learning in continuing education activities designed for clinicians in one profession and/or clinical teams. Medical Teacher, 40(9), 904–913. https://doi.org/10.1080/0142159X.2018.1483578

- Moore, D. E., Green, J. S., & Gallis, H. A. (2009). Achieving desired results and improved outcomes by integrating planning and assessment throughout a learning activity. The Journal of Continuing Education in the Health Professions, 29(1), 1–15. https://doi.org/10.1002/chp.20001

- Moore, G., Michie, S., Anderson, J., Belesova, K., Crane, M., Deloly, C., Dimitroulopoulou, S., Gitau, H., Hale, J., Lloyd, S. J., Mberu, B., Muindi, K., Niu, Y., Pineo, H., Pluchinotta, I., Prasad, A., Roue Le Gall, A., Shrubsole, C., Turcu, C., Tsoulou, I., & Davies, M. (2021). Developing a programme theory for a transdisciplinary research collaboration: Complex urban systems for sustainability and health. Wellcome Open Research, 6, 35. https://doi.org/10.12688/wellcomeopenres.16542.1

- Nayna Schwerdtle, P., Horton, G., Kent, F., Walker, L., & McLean, M. (2020). Education for sustainable healthcare: A transdisciplinary approach to transversal environmental threats. Medical Teacher, 42(10), 1102–1106. https://doi.org/10.1080/0142159X.2020.1795101

- Ng, W., & Nicholas, H. (2015). iResilience of science pre-service teachers through digital storytelling. Australasian Journal of Educational Technology, 31(6), 736–751. https://doi.org/10.14742/ajet.1699

- Padgett, J., Cristancho, S., Lingard, L., Cherry, R., & Haji, F. (2019). Engagement: What is it good for? The role of learner engagement in healthcare simulation contexts. Advances in Health Sciences Education, 24(4), 811–825. https://doi.org/10.1007/s10459-018-9865-7

- Pahl, G., & Wallace, K. (2002). Using the concept of functions to help synthesise solutions. In A. Chakrabarti (Ed.), Engineering design synthesis. Springer. https://doi.org/10.1007/978-1-4471-3717-7_7

- Picard, M., Sabiston, C. M., & McNamara, J. K. (2011). The need for a trans-disciplinary, global health framework. The Journal of Alternative and Complementary Medicine, 17(2), 179–184. https://doi.org/10.1089/acm.2010.0149

- Sikka, R., Morath, J. M., & Leape, L. (2015). The quadruple aim: Care, health, cost and meaning in work. BMJ Quality & Safety, 24, 608–610. https://doi.org/10.1136/bmjqs-2015-004160

- Simon, H. A. (1973). The structure of ill-structured problems. Artificial Intelligence, 4(3–4), 181–201. https://doi.org/10.1016/0004-3702(73)90011-8

- Sterman, J. (2000). Business dynamics: Systems thinking and modeling for a complex world (1st ed.). Irwin/McGraw-Hill.

- Sturmberg, J., & Lanham, H. (2014). Understanding health care delivery as a complex system: Achieving best possible health outcomes for individuals and communities by focusing on interdependencies. Journal of Evaluation in Clinical Practice, 20(6), 1005–1009. https://doi.org/10.1111/jep.12142

- Swinburn, B. A., Kraak, V. I., Allender, S., Atkins, V. J., Baker, P. I., Bogard, J. R., Brinsden, H., Calvillo, A., De Schutter, O., Devarajan, R., & Ezzati, M. (2019). The global syndemic of obesity, undernutrition, and climate change: The Lancet Commission report. The Lancet, 393(10173), 791–846. https://doi.org/10.1016/S0140-6736(18)32822-8

- Wardani, J., Bos, J. J., Ramirez‐Lovering, D., & Capon, A. G. (2022). Enabling transdisciplinary research collaboration for planetary health: Insights from practice at the environment‐health‐development nexus. Sustainable Development, 30(2), 375–392. https://doi.org/10.1002/sd.2280

- Wilson, T., Holt, T., & Greenhalgh, T. (2001). Complexity and clinical care. BMJ, 323(7314), 685–688. https://doi.org/10.1136/bmj.323.7314.685