ABSTRACT

This study aimed to: (1) examine the influence of working memory capacity on the ability of experienced hearing aid users to recognize speech in noise using new noise reduction settings, and (2) investigate whether male and female hearing aid users differ in their hearing sensitivity and in their ability to recognize aided speech in noisy environments. A total of 195 experienced hearing aid users (113 males and 82 females, age range: 33–80 years) from the n200 project were investigated. The Hagerman test (capturing speech recognition in noise) was administered using an experimental hearing aid with two digital signal processing settings: (1) linear amplification without noise reduction (NoP), and (2) linear amplification with noise reduction (NR). Pure-tone thresholds at 500, 1000, 2000, and 4000 Hz (PTA4) were measured for the left and right ears, and gender differences in these thresholds and in Hagerman sentence scores were analysed using a series of independent-samples t-tests. Working memory capacity (WMC) was measured using a reading span test. WMC grouping (high and low) was included as a between-subjects factor in a mixed ANOVA with NR setting (NoP, NR), noise type (steady-state noise, four-talker babble), and level of performance (50%, 80%) as within-subjects factors. Male listeners had better pure-tone thresholds than female listeners at 500 and 1000 Hz, whereas female listeners had better pure-tone thresholds at 4000 Hz. Female listeners showed significantly better speech recognition than male listeners on the Hagerman test with NR, but not with NoP. This gender difference was more pronounced at the 80% performance level than at the 50% level. WMC had a significant effect on speech recognition, and there was a two-way interaction between WMC grouping and level of performance. Examination of simple main effects revealed superior performance by listeners with higher WMC at the 80% level when the new NR settings were used.
WMC, rather than background noise, was the main factor influencing performance at the 80% level, whereas background noise was the main factor at the 50% level. WMC was associated with speech recognition performance even after accounting for hearing sensitivity (PTA4). This is the first study to demonstrate that experienced male and female hearing aid users differ significantly in hearing sensitivity and in the ability to recognize aided speech in noise. The average female listener thus has greater speech recognition ability than the average male listener when linear amplification with NR is applied, but not when NoP is activated. At higher frequencies, the average female listener also hears a given sound with greater sensitivity than the average male listener. WMC is an important factor in speech recognition in more challenging listening conditions (i.e., lower signal-to-noise ratio) for experienced hearing aid users using new NR settings. Further investigation is needed to understand how gender affects the ability of listeners less experienced with hearing aids (such as younger and elderly hearing-impaired listeners) to recognize speech amplified with different signal processing, as gender differences may vary with numerous factors, including the speaker’s gender and age.

Introduction

With the advent of Cognitive Hearing Science during the last decade, the number of empirical studies investigating the importance of cognitive abilities such as working memory in speech recognition has rapidly increased, particularly when speech is presented in noisy environments or when listeners have hearing loss (Akeroyd, Citation2008; Lunner, Citation2003; Ng, Rudner, Lunner, Pedersen, & Rönnberg, Citation2013; Rönnberg, Rudner, Foo, & Lunner, Citation2008; Rönnberg et al., Citation2013, Citation2016; Rönnberg, Holmer, & Rudner, Citation2019; Souza, Arehart, & Neher, Citation2015; Yumba, Citation2017, Citation2019). Working memory (WM) is defined as the ability to process and temporarily store information (Daneman & Carpenter, Citation1980). It has been found that once audibility has been accounted for, working memory capacity (WMC), particularly as measured by reading span tests, is the most important predictor of speech recognition in noise performance (Akeroyd, Citation2008; Besser, Koelewijn, Zekveld, Kramer, & Festen, Citation2013; Foo, Rudner, Rönnberg, & Lunner, Citation2007).

In addition, listeners’ ability to benefit from signal processing algorithms implemented in hearing aids has been demonstrated to be associated with cognitive abilities such as working memory capacity (Lunner, Citation2003; Ng et al., Citation2013; Rönnberg et al., Citation2016, Citation2019; Souza et al., Citation2015).

Working memory capacity and speech recognition in noise

Working memory refers to a limited-capacity cognitive system that enables a person to simultaneously store and maintain information while executing a task (Daneman & Carpenter, Citation1980). Working memory capacity varies among individuals, placing those with smaller working memory capacity at a disadvantage in terms of speech recognition in noise (Souza et al., Citation2015). Indeed, listeners with smaller working memory capacity have been shown to perform more poorly than listeners with larger working memory capacity in speech recognition tasks when speech signals are degraded because of hearing loss or the presence of noise (Akeroyd, Citation2008; Rönnberg et al., Citation2008, Citation2013). According to the Ease of Language Understanding (ELU) model outlined by Rönnberg et al. (Citation2008, Citation2013), when speech signals are degraded or altered (as a result of hearing loss, background noise, or other types of distortion related to hearing aid signal processing), the listener is expected to draw on working memory capacity to a greater extent to match speech signal inputs to lexical and phonological representations stored in long-term memory. However, when speech signals are easily audible (e.g., in quiet), working memory capacity is engaged to a lesser extent because speech signal input can be rapidly matched to lexical and phonological representations stored in long-term memory. Evidence from several empirical studies has supported this view, demonstrating a more robust link between working memory and speech recognition in noise than in quiet among individuals with hearing loss (Akeroyd, Citation2008; Lunner, Rudner, Rosenbom, & Ågren, Citation2016).
Working memory capacity may be measured using several types of span test paradigms, such as simple span tests (which depend more on storage than on processing of information) and complex span tests (which depend on both storage and processing of information; Akeroyd, Citation2008; Besser et al., Citation2013; Rönnberg et al., Citation2016). Previous studies have also distinguished between visuospatial and verbal span tasks. Visuospatial span tasks use objects or colours (e.g., the visuospatial working memory test; see Rönnberg et al., Citation2016), whereas verbal span tasks use linguistic information (e.g., the reading span test; see Akeroyd, Citation2008; Rönnberg et al., Citation2016).

Ng et al. (Citation2013) investigated the effects of noise and noise reduction (NR) on the recall of speech presented in noise in 26 middle-aged hearing aid users, and whether such effects depended on individual variability in working memory capacity. The results showed a main effect of working memory capacity on recall, with better memory performance associated with higher working memory capacity (as measured by the reading span test). An interaction between working memory capacity and noise reduction was also found, indicating that the effect of noise reduction signal processing on recall depended on working memory capacity, with listeners with lower capacity performing worse than those with higher capacity. In another study by Ng, Rudner, Lunner, and Rönnberg (Citation2015) using a similar setup, word recall performance was related to working memory capacity, but the individual benefit obtained from noise reduction was not. The results also revealed an interaction between working memory capacity, noise reduction, and serial word position: listeners with poorer working memory capacity showed better recall with noise reduction for the final word position only, whereas listeners with better working memory capacity performed better on memory recall regardless of the position of the word in a sentence.

In a study by Foo et al. (Citation2007), the link between working memory capacity (as measured by the reading span test) and aided speech recognition in noise (using the Hagerman and HINT sentence tests) was examined in 32 hearing aid users using new compression release settings. Reading span correlated significantly with speech recognition when the new compression release settings were used, in both unmodulated and modulated background noise. Furthermore, reading span remained a good predictor of performance on Hagerman sentences even when hearing sensitivity was controlled for and, to some extent, even when both hearing sensitivity and age were accounted for. However, apart from the predictive role of working memory, little is known about the influence of gender on aided speech recognition performance in the presence of noise. Rather than solely investigating the role of working memory capacity, the present study therefore also examines the effect of gender on listeners’ ability to hear and recognize speech in noise. This factor has not been considered in previous studies and may prove important in guiding the selection of different types of hearing aids in rehabilitation programmes for hearing-impaired individuals.

Gender differences in speech recognition in noise

Another key factor that has received even less attention in the literature is possible gender differences in speech recognition in noise performance among hearing-impaired hearing aid users. It is important to understand this possible gender difference, particularly as studies on speech recognition in noise generally fail to equalize the distribution of male and female listeners, which frequently skews strongly towards one sex (e.g., Alm & Behne, Citation2015; D’Alessandro & Norwich, Citation2007; Healy, Yoho, & Apoux, Citation2013; Irwin, Whalen, & Fowler, Citation2006; Klasner & Yorkston, Citation2005; Rogers, Harkrider, Burchfield, & Nabelek, Citation2003). Furthermore, potential gender difference in speech recognition in noise performance also needs to be considered because it may play an important role in determining the benefit of hearing aid signal processing algorithms (Akeroyd, Citation2008; Hagerman & Kinnefors, Citation1995; Souza et al., Citation2015) and developing programmes for clinical rehabilitation and speech recognition counselling.

Although some psychoacoustic and neuroimaging studies have found significant differences between male and female subjects in processing of non-auditory and auditory speech cues, there is no firm consensus on the subject. That is, the idea of gender differences in processing of visual, audio-visual, and auditory speech remains controversial, as many studies have reported an absence of gender differences in both psychoacoustical performance (Alm & Behne, Citation2015; Dancer, Krain, Thompson, Davis, & Glen, Citation1994; Irwin et al., Citation2006; Strelinikov et al., Citation2009) and neuroimaging measures (Kansaku, Yamaura, & Kitazawa, Citation2001; Pugh et al., Citation1996; Ruytjens, Albers, Van Dijk, Wit, & Willemsen, Citation2006, Citation2007; Shaywitz et al., Citation1995). However, several psychoacoustical studies have observed gender differences in speech reading tasks, suggesting that female subjects were better speech-readers than males (Alm & Behne, Citation2015; Dancer et al., Citation1994; Irwin et al., Citation2006; Strelinikov et al., Citation2009). Alm and Behne (Citation2015), performing a study on young adults, showed that female listeners had better speech-reading performance than male listeners, whereas no gender differences were found in audio-visual benefit or visual influence in the group. Irwin et al. (Citation2006) found that female listeners displayed significantly greater visual influence on heard speech than male listeners did for brief visual stimuli. This observed variability in visual influence on heard speech has been attributed to the influence of speaker gender and attentional differences between male and female listeners in the processing of audio-visual speech, which have been shown to modulate visual influence on heard speech.

Rogers and colleagues also investigated the effects of listener gender on the most comfortable listening level and the acceptable background noise level in 50 normal-hearing individuals (25 male, 25 female) aged 19–25 years. Male listeners had higher most comfortable listening levels than female listeners (a 6 dB difference), suggesting that the average male listener may prefer speech about six decibels louder than the average female listener does. Female listeners also had lower acceptable background noise levels than their male counterparts (a difference of about seven decibels), indicating that male listeners tolerated significantly louder background noise than female listeners (Rogers et al., Citation2003).

D’Alessandro and Norwich (Citation2007) examined individual variability in the identification of the loudness of tones at different intensities in order to estimate, for each participant, the exponent of the power function relating sound pressure level to loudness. The estimated loudness exponent was higher for female listeners than for male listeners, suggesting that female listeners were more sensitive to a given physical range of tones than male listeners were.
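The power function in question is usually written as L = k·P^θ, where L is loudness, P is sound pressure, k is a scaling constant, and θ is the exponent; a larger θ means that loudness grows more steeply over the same physical range. As a hedged illustration only (a generic log-log regression, not necessarily the procedure used by D’Alessandro and Norwich, with a hypothetical helper name and synthetic data), the exponent can be estimated as the slope of log L regressed on log P:

```python
import math

def fit_power_exponent(pressures, loudness):
    """Fit L = k * P**theta by ordinary least squares on log L versus log P.

    Hypothetical helper for illustration; returns (k, theta).
    """
    if len(pressures) != len(loudness) or len(pressures) < 2:
        raise ValueError("need at least two paired observations")
    xs = [math.log(p) for p in pressures]
    ys = [math.log(l) for l in loudness]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    theta = sxy / sxx                 # slope in log-log space is the exponent
    log_k = mean_y - theta * mean_x   # intercept in log-log space is log k
    return math.exp(log_k), theta

# Synthetic, noise-free data generated with k = 2 and theta = 0.6
pressures = [0.002, 0.02, 0.2, 2.0]
loudness = [2.0 * p ** 0.6 for p in pressures]
k, theta = fit_power_exponent(pressures, loudness)
print(round(k, 3), round(theta, 3))  # 2.0 0.6
```

With real loudness judgements the points would scatter around the log-log line, and the exponent would be an estimate with its own uncertainty.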

Crowley and Nabelek (Citation1996) examined the effects of gender on acceptable noise level in 31 male and 15 female hearing-impaired subjects. They found a gender difference in acceptable noise level, with male hearing-impaired listeners having a 2 dB lower acceptable noise level than their female counterparts. However, when the analysis was repeated on the scores of 15 randomly selected male and 15 female subjects, no significant gender difference was observed. Similarly, Markham and Hazan (Citation2004) reported no difference in speech intelligibility, measured as percent words correct, as a function of listener gender. A few studies have also failed to find gender differences in speech processing in word production tasks (Knecht et al., Citation2000) and in language comprehension tasks (Frost et al., Citation1999). In contrast to Markham and Hazan (Citation2004) and Frost et al. (Citation1999), Kwon (Citation2010) investigated gender differences in 20 normal-hearing young adults to examine the validity of objective parameters associated with speech intelligibility, and found that female subjects performed better than their male counterparts on speech intelligibility tasks and on most acoustic parameters.

Several functional magnetic resonance imaging (fMRI) studies have observed effects of gender on activation in the inferior frontal gyrus (IFG) during auditory tasks involving phonological processing: male listeners showed more strongly left-lateralized activation, whereas activation in female listeners was more bilaterally balanced (Pugh et al., Citation1996; Shaywitz et al., Citation1995). In another study, by Baxter et al. (2003), female listeners showed stronger activation in the IFG, the superior temporal gyrus (STG), and cingulate regions during semantic tasks. Some functional neuroimaging studies have also suggested that gender differences may arise because female listeners engage the right perisylvian cortex more than male listeners do when tested on phonologically based language tasks (Kansaku et al., Citation2001). Furthermore, previous neurophysiological studies (Ruytjens et al., Citation2006, Citation2007) have shown that female listeners had greater activation in brain areas related to speech recognition than their male counterparts.

However, there is ample evidence that several factors may be associated with these reported gender differences in speech intelligibility in noise, including the speaker’s gender. Ellis and colleagues examined the effects of gender on listeners’ judgements of intelligibility in 30 listeners. They found no significant gender difference in magnitude estimation scaling responses, but a significant difference between male and female listeners’ overall impressions of the speakers’ intelligibility: female listeners reported that the voice of the male speaker was more understandable than that of the female speaker, while male listeners reported that the voice of the female speaker was more understandable (Ellis, Fucci, Reynolds, & Benjamin, Citation1996). Furthermore, a recent investigation by Yoho, Borrie, Barrett, and Whittaker (Citation2019) found that the spoken productions of female speakers were more intelligible than those of male speakers. Moreover, male listeners were more distracted by the voice of a female speaker than by that of a male speaker (see also Bradlow, Torretta, & Pisoni, Citation1996). The question of why one sex may perform better in aided speech recognition in noise remains unclear.

Gender differences in hearing sensitivity

While there has been limited investigation into the direct impact of a listener’s gender on hearing impairment in older adults, some data show that male and female listeners may differ in hearing sensitivity as measured by audiometric threshold tests (i.e., pure-tone air-conduction thresholds). Gender differences in hearing sensitivity also need to be considered because previous studies have shown that a listener’s peripheral hearing sensitivity is the primary factor predicting the variability observed in speech recognition in noisy environments (Akeroyd, Citation2008). In addition, a listener’s gender may help explain why hearing aid users weight various speech-like dimensions differently and exhibit different degrees of susceptibility to distorted speech signals (Akeroyd, Citation2008; Mattys, Davis, Bradlow, & Scott, Citation2012). For example, in a large, long-term longitudinal study, Pearson and colleagues (Pearson et al., Citation1995) investigated gender differences in the rate of change of hearing thresholds, after minimizing the effects of factors such as clear noise-induced hearing loss and otological disorders, in 681 male participants (followed for 23 years) and 416 female participants (followed for 13 years) aged 20–90 years. Hearing sensitivity declined more than twice as rapidly in male participants as in female participants at almost all ages and frequencies. Female participants also had more sensitive hearing than male participants at frequencies above 1000 Hz, whereas male participants had more sensitive hearing than female participants at lower frequencies.

Jerger et al. (Citation1993) reported that female participants had better hearing thresholds than their male counterparts at frequencies above 1000 or 2000 Hz, although some studies have found that male listeners may have better hearing thresholds than female listeners at 1000 or 2000 Hz. Chung and colleagues also found that female listeners, on average, had better hearing thresholds than male listeners from 2000 to 8000 Hz, suggesting better auditory sensitivity in this range (Chung, Mason, Gannon, & Willson, Citation1983).

Current study

The aims of the current study were (1) to examine the impact of working memory capacity on speech recognition in noise in habitual hearing aid users using new NR settings, and (2) to investigate whether male and female hearing aid users differ in their hearing sensitivity and in their ability to recognize aided speech in noisy environments. Working memory and gender were treated as entirely separate variables, because the focus of the present study was primarily on the possible existence of gender differences. Based on previous studies (Chung et al., Citation1983; Jerger et al., Citation1993; Pearson et al., Citation1995) reporting that female listeners had better hearing thresholds than their male counterparts at higher frequencies (e.g., 3000–8000 Hz), females in the present study were expected to have better hearing thresholds than males at higher frequencies, and males were expected to have better thresholds at lower frequencies. Furthermore, on the basis of studies that have found gender differences in favour of female listeners in speech intelligibility tasks (Kwon, Citation2010), significant gender differences in aided speech recognition in noise were also expected in favour of female hearing aid users. To the best of the author’s knowledge, no previous investigation has examined the role of gender in aided speech recognition in noise with noise reduction algorithms in a large sample of hearing aid users.

To investigate the effect of working memory capacity (WMC) on speech recognition in noise with and without noise reduction, the participants were divided into two cognitive groups (higher WMC and lower WMC) using a median split of their reading span test (RST) scores (see Ng et al., Citation2013).
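A minimal sketch of such a median split, assuming reading span scores are stored per participant ID; the function name and IDs are hypothetical. As reported in the Results, participants scoring exactly at the median are excluded rather than assigned to a group:

```python
from statistics import median

def median_split(scores):
    """Split participants into higher- and lower-WMC groups at the median.

    scores: dict mapping a participant ID to a reading span score.
    Returns (median, higher_ids, lower_ids, excluded_ids); participants
    scoring exactly at the median are excluded because they cannot be
    assigned unambiguously to either group.
    """
    cut = median(scores.values())
    higher = {pid for pid, s in scores.items() if s > cut}
    lower = {pid for pid, s in scores.items() if s < cut}
    excluded = {pid for pid, s in scores.items() if s == cut}
    return cut, higher, lower, excluded

# Hypothetical scores, not study data
cut, higher, lower, excluded = median_split(
    {"p1": 12, "p2": 16, "p3": 19, "p4": 16, "p5": 21}
)
print(cut, sorted(higher), sorted(lower), sorted(excluded))
# 16 ['p3', 'p5'] ['p1'] ['p2', 'p4']
```

With an even number of participants, `statistics.median` averages the two middle scores, so if that average is not an attainable integer score, nobody is excluded.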

Materials and methods

Ethical considerations

All participants, in accordance with the Declaration of Helsinki, were fully informed about the study and its purpose and gave written consent before participating. They were also informed that they were free to choose to terminate their participation at any time during the testing without any explanation and were compensated for their time. The study was approved by the Linköping regional ethics board (Dnr: 55-09 T122-09).

Participants

A group of 195 native Swedish speakers (113 women and 82 men) with hearing impairment, aged 33–80 years (mean = 61.10 years, SD = 8.20) were included in the study. They were recruited to the longitudinal n200 study (see Rönnberg et al., Citation2016 for further details), randomly selected from the hearing clinic patient registry at the University Hospital of Linköping, and invited to participate by letter. The tests were performed at the same hospital. All participants had bilateral, symmetrical, and mild to moderate sensorineural hearing loss. The pure-tone average hearing threshold for both ears at frequencies 500, 1000, 2000, and 4000 Hz (PTA4) was 40.36 dB HL (SD = 18.43). The participants fulfilled the following criteria: they were all bilaterally fitted with digital hearing aids with common features such as wide dynamic range compression, noise reduction, and directional microphones; they had used the aids for at least one year at the time of testing; and they did not have a history of otological problems or psychological disorders.
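PTA4 is the mean of the air-conduction thresholds at the four listed frequencies. A minimal sketch, assuming thresholds are stored per frequency in dB HL; the function names are hypothetical, and averaging the two ears’ PTA4 values is only one plausible way of obtaining a single value for both ears:

```python
FREQS_HZ = (500, 1000, 2000, 4000)

def pta4(thresholds_db_hl):
    """Pure-tone average (PTA4) of air-conduction thresholds in dB HL.

    thresholds_db_hl: dict mapping frequency in Hz to threshold in dB HL.
    """
    missing = [f for f in FREQS_HZ if f not in thresholds_db_hl]
    if missing:
        raise KeyError(f"missing thresholds at {missing} Hz")
    return sum(thresholds_db_hl[f] for f in FREQS_HZ) / len(FREQS_HZ)

def pta4_both_ears(left, right):
    """Mean of left- and right-ear PTA4 (an assumed way of combining the ears)."""
    return (pta4(left) + pta4(right)) / 2

# Hypothetical audiogram, not study data
left = {500: 20, 1000: 30, 2000: 45, 4000: 55}
right = {500: 25, 1000: 35, 2000: 45, 4000: 60}
print(pta4(left), pta4(right), pta4_both_ears(left, right))  # 37.5 41.25 39.375
```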

Cognitive measures

Reading span test

A Swedish version of the reading span test (Daneman & Carpenter, Citation1980) was used to measure participants’ working memory capacity. The test was designed to tax memory storage and processing simultaneously. The reading span test developed by Rönnberg and colleagues is an extension of the test developed by Daneman and Carpenter (Citation1980). During the test, 28 sentences were presented on the computer screen at a rate of one word or word pair every 800 ms. Half of the sentences were absurd (e.g. ‘The car drinks milk’), and half of the sentences were normal (e.g. ‘The farmer builds his house’). After an entire sentence was shown, the participants were instructed to determine whether the sentence made sense or not. The test included two blocks each of two, three, four, and five sentences per block. After each block was shown, the participants were asked to recall the first or the last words of the presented set of sentences. It was expected that the words would be more difficult to recall as the number of sentences in the successive blocks increased. The test results were recorded as the total number of items correctly recalled, irrespective of their serial order.
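The scoring rule above (total number of items recalled, irrespective of serial order) can be sketched as follows. This is a hypothetical helper under stated assumptions: recall is scored block by block, matching is case-insensitive, and each target word can be credited at most once. The English example words merely stand in for the Swedish test items.

```python
def score_reading_span(presented_blocks, recalled_blocks):
    """Count target words recalled correctly, ignoring serial order.

    presented_blocks: one list of target words (first or last words of the
        sentences) per block, in presentation order.
    recalled_blocks: the words the participant recalled for each block.
    """
    total = 0
    for targets, recalled in zip(presented_blocks, recalled_blocks):
        remaining = [w.lower() for w in targets]
        for word in recalled:
            w = word.lower()
            if w in remaining:       # order-free, case-insensitive match
                remaining.remove(w)  # credit each target at most once
                total += 1
    return total

# Hypothetical two-block example with English stand-ins for Swedish items
presented = [["car", "farmer"], ["milk", "house", "dog"]]
recalled = [["Farmer", "car"], ["dog", "cat", "dog"]]
print(score_reading_span(presented, recalled))  # 3
```

With the 28 sentences described above, the maximum possible score under this rule is 28.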

Outcome measure

Speech recognition in noise test

The present study used the Hagerman matrix sentence test (Hagerman & Kinnefors, Citation1995). Three lists of ten sentences each were used; the sentences are highly constrained in structure and have low semantic redundancy. Each sentence consisted of five Swedish words in the following fixed order: proper noun, verb, number, adjective, and object (e.g., ‘Petronella had seven red boxes’). The sentences were presented in either steady-state noise or four-talker babble. Because the data of the present study are part of a wider project (n200; see Rönnberg et al., Citation2016 for further details), three signal processing settings were originally implemented for the Hagerman test in experimental hearing aids adapted to match each participant’s audiogram: linear amplification without NR (NoP, baseline), linear amplification with NR (NR), and non-linear amplification with fast-acting compression (Fast, NR not activated). Only the two linear amplification settings, with and without NR, were included in the present analysis and are reported here. The four-talker babble consisted of recordings of two male and two female native Swedish speakers reading different paragraphs of a newspaper text, and the steady-state noise was stationary speech-shaped noise with the same long-term average spectrum as the speech material.
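Because every matrix sentence has the same five-slot structure, responses can be scored word by word; the 50% and 80% performance levels reported in the Results are expressed as SNRs, i.e., the SNR at which a listener repeats that proportion of words correctly. A minimal sketch of slot-by-slot word scoring, assuming responses are transcribed into the same five slots (the helper names are hypothetical, and the SNR-tracking procedure itself is not shown):

```python
# Slot order of a Hagerman-style matrix sentence
SLOTS = ("proper noun", "verb", "number", "adjective", "object")

def words_correct(target, response):
    """Number of slots in which the response word matches the target word."""
    if len(target) != len(SLOTS):
        raise ValueError("matrix sentences have exactly five words")
    # zip stops at the shorter sequence, so omitted words count as errors
    return sum(1 for t, r in zip(target, response) if t.lower() == r.lower())

def proportion_correct(targets, responses):
    """Proportion of words repeated correctly across a list of sentences."""
    total = sum(words_correct(t, r) for t, r in zip(targets, responses))
    return total / (len(SLOTS) * len(targets))

target = ("Petronella", "had", "seven", "red", "boxes")
wrong_adjective = ("Petronella", "had", "seven", "green", "boxes")
print(words_correct(target, wrong_adjective))                        # 4
print(proportion_correct([target, target], [wrong_adjective, target]))  # 0.9
```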

Procedure

The data were collected in three sessions of approximately three hours each as part of a larger investigation (the n200 project; see Rönnberg et al., Citation2016 for further details). In the current study, data from the first and third sessions were used. In the first session, background data were recorded, PTA4 (left and right ear) was measured, and the Swedish version of the reading span test was administered as a measure of working memory capacity; this test was chosen because it has been shown to predict aided speech recognition in noise (see Foo et al., Citation2007; Rönnberg et al., Citation2008, Citation2013, Citation2016). The Hagerman test (a measure of speech recognition in noise) was administered in the third session. The Hagerman sentences were presented in steady-state noise and four-talker babble using an experimental hearing aid with two digital signal processing settings: (1) linear amplification without noise reduction (NoP, baseline) and (2) linear amplification with noise reduction (NR).

Testing took place in a sound-treated booth. The participant sat in a chair at a distance of 1 m from a single loudspeaker. The experimental hearing aid was implemented in an anechoic test box (Brüel & Kjær, type 4232). To ensure audibility and control of the target signal processing settings, the experimental hearing aid was adapted to each participant’s audiogram. The order of conditions was randomized, and the settings were programmed by the experimenter before testing (see Rönnberg et al., Citation2016 for further details).

Results

After checking that the data were normally distributed, a series of independent-samples t-tests were carried out to examine gender differences in hearing sensitivity (PTA4) and in the ability to recognize aided speech in noise (Hagerman sentence test). To investigate the effect of WMC on speech recognition in noise with the two settings [linear amplification without noise reduction (NoP) and linear amplification with noise reduction (NR)], two cognitive groups (higher WMC and lower WMC) were created by a median split of the RST scores (n = 94 in each group; see Ng et al., Citation2013). The mean aggregate reading span score was 16.07 (SD = 3.83), and the median score was 16. The nine participants who scored exactly 16 were excluded because they could not be assigned to either group; this exclusion may reduce statistical power, making the analysis more conservative. Cognitive grouping (higher vs. lower RST scores) was included as a between-subjects factor in a mixed ANOVA with noise reduction setting (linear amplification without noise reduction, linear amplification with noise reduction), noise type (steady-state noise, four-talker babble), and level of performance (50%, 80%) as within-subjects factors. The relation between working memory and speech recognition in noise with NoP and NR was examined using Pearson correlation analysis. The mean SNRs (dB) and standard deviations for speech recognition in noise in the various conditions are shown in Table 1. Lower SNRs indicate better speech recognition performance: a low SNR shows that the participant correctly identified the speech signal despite a high level of background noise, whereas a high SNR indicates that the participant correctly repeated the sentences only at relatively low noise levels (Hagerman & Kinnefors, Citation1995).
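Because lower SNRs indicate better performance, a positive association between working memory and speech recognition shows up as a negative Pearson correlation between reading span scores and SNRs. A minimal sketch with hypothetical data (not the study’s) illustrates this sign convention; the helper name is an assumption:

```python
import math

def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (n >= 2)")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: higher reading span paired with lower (better) SNRs
rst = [12, 14, 16, 18, 20]
snr_db = [-4.0, -4.5, -5.5, -6.0, -7.0]
r = pearson_r(rst, snr_db)
print(round(r, 3))  # -0.993
```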

Table 1. Mean scores and standard deviations (SD) for all speech recognition in noise conditions (SNR, in dB).

Gender differences in hearing sensitivity

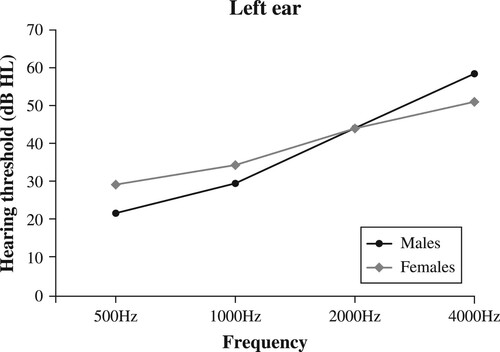

Hearing sensitivity was measured as the pure-tone average hearing threshold for both ears at 500, 1000, 2000, and 4000 Hz (PTA4); the mean was 39.23 dB HL (SD = 19.64). Table 2 lists the means and standard deviations for hearing sensitivity (dB HL) in male and female participants for both left and right ears. Air-conduction thresholds were measured at 500, 1000, 2000, and 4000 Hz for both ears. As the data were normally distributed, a series of independent-samples t-tests were carried out to examine gender differences in hearing sensitivity and in speech recognition in noise performance ( and ). Effect sizes were classified according to Cohen’s (Citation1988) conventions. For the left ear, there was a significant difference between male and female listeners at 500 Hz, t(193) = −3.85, p < .001, d = .55, a medium effect: male listeners had better pure-tone thresholds (M = 21.51 dB HL, SD = 13.68) than female listeners (M = 29.32 dB HL, SD = 14.44). At 1000 Hz, a significant difference was also obtained, t(193) = −2.04, p < .05, d = .29, again with male listeners having better hearing thresholds (M = 30.05 dB HL, SD = 16.18) than female listeners (M = 34.49 dB HL, SD = 14.28). At 2000 Hz, female listeners had a slightly better threshold (M = 44.50 dB HL, SD = 11.35) than male listeners (M = 45.05 dB HL, SD = 16.78), but the difference was not significant (p > .05). At 4000 Hz, by contrast, there was a significant difference, t(193) = 3.66, p < .001, d = .52, with female listeners having better hearing sensitivity (M = 51.36 dB HL, SD = 12.92) than male listeners (M = 59.17 dB HL, SD = 16.99).
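The effect sizes above are Cohen’s d values (the mean difference divided by the pooled standard deviation), and for an equal-variance independent-samples t-test, t = d·√(n₁n₂/(n₁ + n₂)) with n₁ + n₂ − 2 degrees of freedom. A minimal sketch computing both from summary statistics; the helper names are hypothetical, and the group sizes in the example (113 male and 82 female listeners) are taken from the Abstract:

```python
import math

def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))

def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d: difference in means divided by the pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)

def student_t(m1, sd1, n1, m2, sd2, n2):
    """Equal-variance independent-samples t statistic and its degrees of freedom."""
    d = cohens_d(m1, sd1, n1, m2, sd2, n2)
    return d * math.sqrt(n1 * n2 / (n1 + n2)), n1 + n2 - 2

def cohen_label(d):
    """Cohen's (1988) benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    a = abs(d)
    if a >= 0.8:
        return "large"
    if a >= 0.5:
        return "medium"
    if a >= 0.2:
        return "small"
    return "negligible"

# Left ear, 500 Hz: males (group 1) vs females (group 2); n assumed 113 vs 82
d = cohens_d(21.51, 13.68, 113, 29.32, 14.44, 82)
t, df = student_t(21.51, 13.68, 113, 29.32, 14.44, 82)
print(f"d = {d:.2f} ({cohen_label(d)}), t({df}) = {t:.2f}")
```

For the left ear at 500 Hz this gives d ≈ −0.56 and t(193) ≈ −3.84, close to the reported values; small discrepancies are expected because the published means and SDs are rounded.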

Table 2. Mean values (M) and standard deviations (SD), the statistical significance and the effect size (Cohen’s d) for PTA4 (left and right ear in dB HL) in male and female participants.

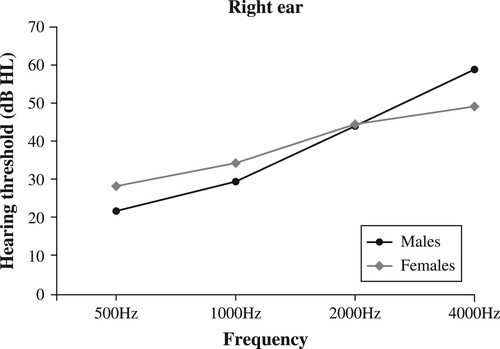

The results for the right ear likewise showed a significant gender difference at 500 Hz, t(193) = −3.02, p < .01, d = .43, with male participants (M = 21.74 dB HL, SD = 15.19) having better hearing thresholds than female participants (M = 28.18 dB HL, SD = 14.60). A significant difference was also found at 1000 Hz, t(193) = −2.04, p < .05, d = .29: male listeners (M = 30.18 dB HL, SD = 17.32) had better pure-tone thresholds than female listeners (M = 34.38 dB HL, SD = 14.40). There was no significant gender difference at 2000 Hz, t(193) = −0.44, p > .05. At 4000 Hz, however, there was a significant difference, t(193) = 4.63, p < .001, d = .66, a medium effect by Cohen’s (Citation1988) convention: female listeners had better hearing thresholds (M = 49.38 dB HL, SD = 13.08) than male listeners (M = 59.68 dB HL, SD = 17.23) (see Table 2).
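
Cohen’s (1988) benchmarks used throughout this section can be written as a small helper. The cut-offs 0.2, 0.5 and 0.8 are Cohen’s conventional values; treating them as lower bounds of each band, and calling |d| < 0.2 “negligible”, are assumptions of this sketch.

```python
def cohen_label(d: float) -> str:
    """Label |d| using Cohen's benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"

# Right-ear effect sizes reported above (frequency in Hz -> Cohen's d)
right_ear = {500: 0.43, 1000: 0.29, 4000: 0.66}
for freq, d in right_ear.items():
    print(f"{freq} Hz: d = {d:.2f} ({cohen_label(d)})")
```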

Gender differences in the Hagerman sentences test

A series of independent-samples t-tests compared male and female listeners’ speech recognition in noise with linear amplification with NR and without NR (NoP) at two performance levels (50% and 80%); all participants were habitual hearing aid users fitted with the new NR settings (see Rönnberg et al., Citation2016; Yumba, Citation2017 for technical details). The results are presented in Table 3. Because Hagerman scores are SNR thresholds, lower (more negative) values indicate better performance. In SSN background noise with NR at the 80% performance level, there was a significant gender difference, t(193) = 2.50, p < .05, d = .35, a small-to-medium effect: female listeners reached criterion at lower SNRs (M = −6.31 dB SNR, SD = 2.61) than male listeners (M = −5.18 dB SNR, SD = 3.43). A significant difference was also obtained in 4TB background noise with NR at the 80% performance level, t(193) = 2.35, p < .05, d = .33, with female listeners again reaching lower SNRs (M = −8.93 dB SNR, SD = 1.34) than male listeners (M = −7.06 dB SNR, SD = 1.73). In SSN background noise with NR at the 50% performance level, there was a marginally significant difference, t(193) = 1.67, p = .05, d = .25, a small effect: female listeners (M = −11.62 dB SNR, SD = 1.28) had slightly lower SNRs than male listeners (M = −11.04 dB SNR, SD = 1.89). There were no significant gender differences in any of the four conditions when NR was not activated in the hearing aids (see Table 3).
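
Because the group SDs differ (e.g., 3.43 vs. 2.61 dB in SSN with NR at the 80% level), a Welch test, which does not assume equal variances, is a useful robustness check. The sketch below is an illustration based on the published summary statistics, not the analysis reported above; group 1 is the male group, so a positive t means that female listeners reached criterion at a lower (better) SNR.

```python
import math

def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t and Welch-Satterthwaite df from group summary statistics."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df

# SSN, NR, 80% level: males (n = 113) vs. females (n = 82), SNR thresholds in dB.
t, df = welch_t(-5.18, 3.43, 113, -6.31, 2.61, 82)
print(f"Welch t({df:.1f}) = {t:.2f}")
```

The Welch statistic (about 2.6) is close to the pooled-variance value reported above, so the conclusion for this condition does not hinge on the equal-variance assumption.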

Table 3. Mean values and standard deviations (SD) of Hagerman sentence test scores (dB SNR) for male and female participants.

Effect of working memory capacity on aided speech recognition in noise

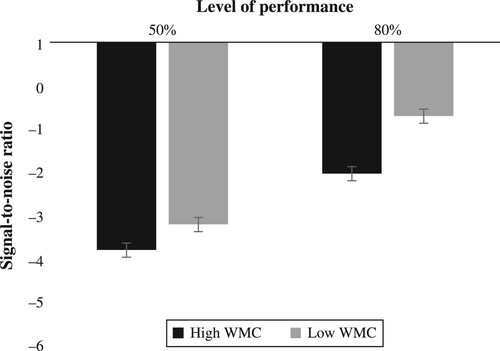

The ANOVA including cognitive (WMC) grouping as a between-subjects factor showed a main effect of cognitive grouping, F(1, 186) = 4.86, p < .05, ηp² = 0.025, with listeners with higher WMC performing better than those with lower WMC. There was also an interaction between level of performance and cognitive grouping, F(1, 186) = 3.52, p < .05, ηp² = 0.123. Post hoc t-tests with Bonferroni adjustment for multiple comparisons showed that the high-WMC group outperformed the low-WMC group at the 80% performance level, t(184) = 5.3, p < .05, d = .78, and that the difference was smaller at the 50% performance level, t(184) = 2.6, p < .05, d = .38. This may indicate that WMC, rather than noise type, is the key factor influencing performance at the 80% performance level, whereas noise type is the key factor at the 50% performance level (see Figure 3).
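
Partial eta squared can be recovered from a reported F statistic and its degrees of freedom, and the Bonferroni adjustment mentioned above multiplies each p-value by the number of comparisons. This is a minimal sketch; the two post hoc p-values in the example are hypothetical placeholders, not results of this study.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)

def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply each p by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Main effect of WMC group reported above: F(1, 186) = 4.86
print(round(partial_eta_squared(4.86, 1, 186), 3))  # 0.025
# Two hypothetical post hoc p-values (80% and 50% levels)
print(bonferroni([0.001, 0.010]))
```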

Relationship between working memory and speech recognition in noise

Pearson correlations between reading span test scores and Hagerman sentence scores were computed (Table 4). Reading span performance correlated significantly with speech recognition in noise using linear amplification with NR in all four conditions, but with speech recognition using linear amplification without NR in only one of the four conditions (4TB). This suggests a possible relationship between working memory and noise reduction during aided speech recognition in noise, particularly with respect to the benefit obtained from NR (Ng et al., Citation2013; Souza et al., Citation2015; Yumba, Citation2017). Partial correlations controlling for better-ear PTA4 are presented in Table 5. The pattern of these correlations may suggest that cognitive capacity is important in aided speech recognition in noise (Rönnberg et al., Citation2008, Citation2013).
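
The partial correlations in Table 5 remove the shared influence of hearing sensitivity from the relationship between reading span and Hagerman scores. Below is a minimal sketch of the first-order partial correlation formula; the three input correlations are hypothetical, chosen so that higher reading span goes with lower (better) SNR thresholds.

```python
import math

def partial_r(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))

# Hypothetical values: x = reading span (%), y = Hagerman SNR (dB),
# z = better-ear PTA4 (dB HL).
print(round(partial_r(-0.30, -0.20, 0.40), 3))
```

When the control variable is uncorrelated with both x and y, the partial correlation equals the ordinary Pearson r.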

Table 4. Pearson correlations between reading span test scores (%) and Hagerman sentence scores (SNR, in dB).

Table 5. Partial correlations (Pearson’s r) between reading span test scores (%) and Hagerman sentence scores (SNR, in dB), controlling for better-ear PTA4 (dB HL).
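
PTA4 is the mean of the air-conduction thresholds at the four test frequencies, and the better ear is the ear with the lower PTA4. This is a minimal sketch, assuming thresholds are stored per ear in a dictionary keyed by frequency; the audiogram values are hypothetical.

```python
from statistics import mean

PTA4_FREQS = (500, 1000, 2000, 4000)  # test frequencies in Hz

def pta4(thresholds: dict) -> float:
    """Pure-tone average (dB HL) over 500, 1000, 2000 and 4000 Hz."""
    return mean(thresholds[f] for f in PTA4_FREQS)

def better_ear_pta4(left: dict, right: dict) -> float:
    """Better-ear PTA4: the lower (better) of the two ears' averages."""
    return min(pta4(left), pta4(right))

# Hypothetical audiogram of one listener (dB HL)
left = {500: 25, 1000: 30, 2000: 45, 4000: 60}
right = {500: 20, 1000: 30, 2000: 40, 4000: 55}
print(better_ear_pta4(left, right))
```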

Discussion

This study had two purposes: (1) to examine the impact of working memory capacity on speech recognition in noise in habitual hearing aid users fitted with new NR settings (linear amplification with noise reduction, and linear amplification without noise reduction), and (2) to investigate whether male and female hearing aid users differ in hearing sensitivity and in the ability to recognize aided speech in noisy environments. The study was motivated by earlier investigations of gender differences in hearing sensitivity (Chung et al., Citation1983; Dubno, Lee, Matthews, & Mills, Citation1997; Pearson et al., Citation1995) and in comfort level and acceptable background noise tests (Crowley & Nabelek, Citation1996; Rogers et al., Citation2003), as well as by the predictive power of working memory for aided and unaided speech recognition with different types of signal processing in listeners with normal hearing and with hearing impairment (Akeroyd, Citation2008; Rönnberg et al., Citation2008, Citation2013, Citation2016, Citation2019; Yumba, Citation2017, Citation2019).

The main findings of this investigation are that, among hearing aid users fitted with the new NR settings, a listener’s gender affected hearing sensitivity and aided speech recognition in noise, the latter only when NR was applied. These findings are in line with earlier studies that found significant gender differences in hearing sensitivity at different ages (Chung et al., Citation1983; Pearson et al., Citation1995) and effects of listener gender on background noise tolerance tests (Crowley & Nabelek, Citation1996; Rogers et al., Citation2003). However, they provide only partial evidence of the role that gender plays in listeners’ ability to hear sounds and to recognize speech in noise, as effect sizes were small to moderate across almost all analyses. For example, female listeners showed better speech recognition in noise than male listeners when NR was used, but not when NR was not activated (NoP). These results agree with a previous study by Kwon (Citation2010) that reported gender differences in speech intelligibility in younger adults with normal hearing. The current study appears to be the first to show a gender difference in aided speech recognition in noise among hearing-impaired habitual hearing aid users, rather than among listeners with normal hearing. With regard to hearing sensitivity, female listeners had more sensitive hearing than male listeners at frequencies above 2000 Hz.
The pattern of these findings is consistent with previous results that found gender differences in hearing sensitivity (Chung et al., Citation1983; Crowley & Nabelek, Citation1996; Pearson et al., Citation1995), and in speech intelligibility in noise (Kwon, Citation2010; Rogers et al., Citation2003), even though different test materials were used.

Gender differences in hearing sensitivity

Humes and Roberts (Citation1990) observed that a listener’s peripheral hearing sensitivity was the most important predictor of speech recognition performance in noise. Similarly, in a survey of 20 studies, Akeroyd (Citation2008) reported that hearing sensitivity was the dominant predictor of speech recognition performance and that cognitive ability was the second most important predictor. Lower audiometric thresholds indicate better hearing sensitivity (Corso, Citation1959; McGuinness, Citation1974). The present results show that female listeners had better hearing sensitivity than their male counterparts at frequencies above 2000 Hz in both ears (see Table 2), and these gender differences were moderate in size. These findings are consistent with previous studies (Jerger et al., Citation1993; Pearson et al., Citation1995). One implication is that, because male listeners have higher (poorer) thresholds than female listeners at high frequencies, an average male listener may choose to listen to a tone at a higher level than an average female listener, particularly when the background noise level is high. This interpretation is supported by Corso (Citation1959), who found that female listeners have superior auditory acuity (i.e., lower thresholds) compared with male listeners, especially at test frequencies above 2000 Hz. In addition, the Canadian Association of Speech-Language Pathologists and Audiologists has reported that female listeners’ average maximum comfortable loudness level for loud tones is consistently about 8 dB lower than that of male listeners (CASLPA, Citation2006). At 1000 Hz and below, however, male listeners had better hearing sensitivity than female listeners in both ears (see Table 2), and the mean differences were small to moderate in magnitude.
This may be noteworthy given that the sample was heterogeneous (i.e., it included both younger and elderly hearing aid users), which could have affected the results. The current findings replicate and extend the frequently reported finding that male listeners have more sensitive hearing at lower frequencies (Pearson et al., Citation1995).

Gender differences in aided speech recognition in noise

Noise reduction (NR) algorithms are designed to reduce the masking effects of background noise on speech recognition and sound quality by improving the SNR for persons with hearing impairment. This is done by estimating the SNR in each frequency band of the hearing aid from an estimate of the noise level in the listener’s environment (Corso, Citation1959). On the Hagerman test, a lower SNR threshold indicates better speech recognition in noise than a higher one: a low SNR means that the listener correctly identified the speech despite a high level of background noise relative to the speech, whereas a high SNR means that the sentences could only be repeated correctly when the noise level was relatively low (Hagerman & Kinnefors, Citation1995).
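
The band-wise logic described above can be sketched as follows. This is a generic illustration, not the algorithm in the experimental hearing aid: it assumes that the power in each band and a noise estimate for that band are already available (the noise estimator itself is not shown), and it uses a binary-mask rule, in keeping with the binary-masking NR described in this study, that passes bands whose local SNR exceeds a criterion and attenuates the rest. The criterion (0 dB) and the attenuation floor (−10 dB) are hypothetical parameters.

```python
import math

def band_snr_db(band_power: float, noise_estimate: float) -> float:
    """Local SNR (dB) in one band, treating power above the noise estimate as speech."""
    speech = max(band_power - noise_estimate, 1e-12)  # avoid log of zero or negatives
    return 10 * math.log10(speech / noise_estimate)

def binary_mask_gains(band_powers, noise_estimates, criterion_db=0.0, floor_db=-10.0):
    """Gain per band: pass bands whose local SNR exceeds the criterion, attenuate the rest."""
    gains = []
    for p, n in zip(band_powers, noise_estimates):
        keep = band_snr_db(p, n) > criterion_db
        # Amplitude gain: 1.0 (unchanged) or the floor converted from dB
        gains.append(1.0 if keep else 10 ** (floor_db / 20))
    return gains

# Three hypothetical bands with equal noise estimates
gains = binary_mask_gains([4.0, 1.2, 10.0], [1.0, 1.0, 1.0])
print([round(g, 3) for g in gains])
```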

The results show that female listeners performed better than male listeners on speech recognition in noise when NR was used, but not when NR was not activated (NoP). This may suggest that female listeners had a greater capacity than male listeners to understand speech in background noise when NR was applied. The findings also suggest that this female advantage in identifying speech in adverse listening conditions was larger at the 80% performance level than at the 50% performance level.
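
The fact that 80% thresholds lie at higher SNRs than 50% thresholds follows from the shape of a psychometric function. This is a minimal sketch, assuming a logistic function of SNR; the 50% point (−11 dB SNR, close to the group means at the 50% level) and the slope are hypothetical values, not fitted to the Hagerman data.

```python
import math

def snr_at(p: float, snr50: float, slope: float) -> float:
    """SNR (dB) at which a logistic psychometric function reaches proportion p correct.

    Assumed model: p(snr) = 1 / (1 + exp(-slope * (snr - snr50))), slope in 1/dB.
    """
    return snr50 + math.log(p / (1 - p)) / slope

# Hypothetical listener: 50% point at -11 dB SNR, slope 0.28 per dB
for target in (0.5, 0.8):
    print(f"{target:.0%}: {snr_at(target, -11.0, 0.28):.1f} dB SNR")
```

Under this assumption, a steeper slope brings the 80% point closer to the 50% point, so listeners with equal 50% thresholds can still differ at 80%.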

This finding is consistent with Moore et al. (Citation2014), who found that male listeners reported greater difficulty in hearing speech in noise than female listeners. However, even though previous research has regarded 80% correct word recognition as a relatively easy listening condition, male listeners in the present study did not reach the 80% threshold at SNRs as low as those of female listeners. Another possible reason for this finding is that male listeners have higher comfortable listening levels and accept higher levels of background noise than female listeners, as suggested by Rogers et al. (Citation2003); this ability may have been compromised by the NR activated in their hearing aids. Consistent with previous reports of a lack of gender difference, our study also found that male and female listeners did not differ in speech intelligibility when NR was not activated (NoP). This was a surprising finding, although it agrees with a previous study by Crowley and Nabelek (Citation1996) that used different tests. A significant gender difference in favour of male listeners was expected because males seem to be less sensitive than females to loud background noise (D’Alessandro & Norwich, Citation2007) and to differences in SNR; the Canadian Association of Speech-Language Pathologists and Audiologists suggests that sensitivity to SNR differs between male and female listeners by approximately 3–5 dB (CASLPA, Citation2006). Moreover, when NR was not activated, no noise reduction processing was applied to the speech signal, which leads to relatively higher SNR thresholds than when NR is applied (Humes & Roberts, Citation1990; Yumba, Citation2017).

Working memory and aided speech recognition in noise

This study demonstrates that working memory plays a key role in aided speech recognition in noise with new binary-masking NR settings for persons with hearing loss who are accustomed to hearing aids. Our findings support and extend previous studies reporting that listeners with larger working memory capacity benefit more from NR in speech recognition in noise (Lunner et al., Citation2016; Ng et al., Citation2013, Citation2015; Rönnberg et al., Citation2016, Citation2019; Yumba, Citation2017). For example, they are in line with the results of Ng et al. (Citation2013, Citation2015), who found that recall of speech processed with a binary-masking NR algorithm was related to working memory, suggesting that listeners with better working memory performed better on auditory recall of words presented with NR.

It is interesting to note that the present study found significant correlations between working memory and speech recognition in noise with linear amplification with and without NR even after hearing sensitivity (PTA4) was taken into account. Some of the correlation coefficients found for the new binary-masking NR settings are relatively low compared with those previously found between reading span and aided speech recognition in noise with habitual NR settings (Ng et al., Citation2013, Citation2015). However, these findings are consistent with the ELU model (Rönnberg et al., Citation2008, Citation2013), which postulates that working memory capacity is deployed to a greater extent in aided speech recognition in noise when listeners use new hearing aid signal processing settings than when they use settings they are accustomed to (Lunner et al., Citation2016). The interaction between working memory capacity group and level of performance (50%, 80%) further supports the importance of working memory capacity in aided speech recognition in noise: listeners with higher working memory capacity recognized speech in noise better at the 80% threshold level than those with lower working memory capacity (see Figure 3).

Figure 3. The significant two-way interaction between working memory capacity scores (high, low, in % correct) and level of performance (50%, 80%), measured in terms of signal-to-noise ratios in the Hagerman test (the error bars show standard error).

The positive effect of working memory capacity on Hagerman sentence recognition at the 80% performance level may also suggest that habitual hearing aid users wearing new hearing instruments rely on their working memory capacity or other cognitive abilities when a speech recognition task is more challenging (Lunner, Citation2003; Rönnberg et al., Citation2008, Citation2013). Working memory capacity, rather than background noise, appears to be the most important factor at the 80% performance level, whereas background noise is the more important factor at the 50% performance level. This may indicate that when the SNR is favourable (i.e., higher), the positive effects of working memory capacity on aided speech recognition outweigh the effects of background noise, an outcome in line with the predictions of the ELU model (Rönnberg et al., Citation2008, Citation2013). This finding was expected and agrees with previous studies demonstrating that the simultaneous maintenance and semantic processing ability measured by the reading span test is crucial for interpreting the meaning of sentences such as the Hagerman sentences in the presence of noise, particularly at favourable SNRs with hearing aids (Lunner, Citation2003; Lunner et al., Citation2016; Rönnberg et al., Citation2008, Citation2013, Citation2019).

Limitations and future considerations

Methodological differences

In addition to the differences between experienced male and female hearing aid users in hearing sensitivity and speech intelligibility, and the individual differences in working memory capacity that affected aided speech recognition, important methodological factors could have influenced these findings. Current knowledge of the effect of listener gender on hearing sensitivity and speech intelligibility among experienced hearing aid users is based primarily on cross-sectional rather than longitudinal studies. Evidence from longitudinal research (Pearson et al., Citation1995) shows a significant association between chronological age and gender differences in hearing sensitivity, suggesting that the effect of listener gender changes with age. For example, Pearson et al. (Citation1995) found that among male listeners, hearing sensitivity at 500 Hz declines significantly beyond the age of 20 years, and sensitivity at other frequencies declines from the age of 30 years. In addition, the long-term rate of change in hearing level at higher frequencies is greater among older male individuals than among younger male individuals. Longitudinal research may therefore reveal that factors such as age modulate gender differences in hearing sensitivity and in speech perception in quiet and in noise. Future investigations should consider these methodological differences (i.e., cross-sectional vs. longitudinal designs) to better understand the effect of gender on hearing sensitivity and speech intelligibility among persons with hearing impairment.

Lack of control group

The lack of a control group is another limitation: all participants in the present study were hearing-impaired, experienced hearing aid users. Because they had been using hearing aids for some years, they may have been exposed to different types of signal processing algorithms implemented in their hearing aids (e.g., fast-acting compression, NR), which may have given them better skills for coping with various signal processing algorithms (Lunner, Citation2003). This familiarization may have enabled them over time to learn the effects of these algorithms and to develop better strategies for listening with hearing aids, which may in turn have affected the present results regarding listener gender and the link between working memory and speech recognition in noise (Ng et al., Citation2014). Future studies should include a control group of new hearing aid users, matched for age and working memory capacity, who are not yet accustomed to listening with hearing aids, because previous studies have shown that hearing-impaired listeners unaccustomed to hearing instruments tend to draw more on their cognitive resources to recognize processed speech signals (Lunner, Citation2003; Ng et al., Citation2014).

Magnitude of effect

Finally, the magnitude of the effects observed in the present study has both strengths and limitations that should be acknowledged. Overall, effect sizes were moderate. However, some effects were weaker, with small differences between means (Cohen’s d ≈ .2), which may raise concern about the strength of the effect of listener gender on hearing sensitivity and speech intelligibility among hearing-impaired hearing aid users. Given that the present study was exploratory, more studies are required to elucidate the impact of listener gender on hearing sensitivity and speech intelligibility, including rates of change in hearing sensitivity and intelligibility among experienced and inexperienced hearing aid users.
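
The concern about small effects can be made concrete with an approximate power calculation. This is a minimal sketch, assuming a two-sided two-sample comparison at α = .05 with 80% power and using the normal approximation, which slightly underestimates the exact t-based sample size; for comparison, the present study had 113 male and 82 female participants.

```python
import math
from statistics import NormalDist

def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n per group for a two-sided, two-sample comparison (normal approximation)."""
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)

for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))
```

With d = .2, roughly 393 listeners per group would be needed, well above the group sizes here, whereas d = .5 requires about 63 per group.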

Conclusions

To the best of the author’s knowledge, this is the first study to demonstrate that experienced male and female hearing aid users differ significantly in hearing sensitivity and in the ability to recognize aided speech in noise. These findings suggest that an average female hearing aid user, compared with an average male hearing aid user, has greater speech recognition ability when speech is linearly amplified with NR, but not when speech is linearly amplified without NR. Likewise, at higher frequencies an average female hearing aid user hears the same sound with greater sensitivity than an average male hearing aid user. Furthermore, hearing aid users with higher working memory capacity had better aided speech recognition performance. The effect of individual variability was more pronounced at the 80% performance level than at the 50% performance level, and was stronger when speech signals were linearly amplified without NR than when NR was used. Taken together, these findings provide further evidence of the roles of listener gender and working memory capacity, suggesting that clinical rehabilitation programmes and counselling may benefit from taking the listener’s gender and working memory skill level into account. Further investigation is needed to better understand how listener gender may affect listening to speech amplified with different hearing aid signal processing in younger and elderly hearing-impaired people unaccustomed to hearing aids, as gender differences may be associated with numerous factors, including the speaker’s gender and age.

Acknowledgements

The author thanks Rachel Jane Ellis for her insightful comments on the earlier version of this manuscript; Mathias Hällgren (from the Department of Technical Audiology, Linköping University) for his technical support; Tomas Bjuvmar and Helena Torlofson (from the Hearing Clinic, University Hospital, Linköping); Elaine Ng (from the Swedish Institute for Disability Research, Linköping University) for her assistance in data collection; and Thomas Karlsson, Marie Rudner and Emil Holmer for their support. The author would also like to thank the reviewers for their helpful and insightful comments on this manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Akeroyd, M. A. (2008). Are individual differences in speech perception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. International Journal of Audiology, 47(Suppl. 2), S125–S143.

- Alm, M., & Behne, D. (2015). Do gender differences in audio-visual benefit and visual influence in audio-visual speech perception emerge with age? Frontiers in Psychology, 6, 1014. doi:https://doi.org/10.3389/fpsyg.2015.01014.

- Besser, J., Koelewijn, T., Zekveld, A. A., Kramer, S. E., & Festen, J. M. (2013). How linguistic closure and verbal working memory relate to speech recognition in noise: A review. Trends in Amplification, 17, 75–93. doi:https://doi.org/10.1044/2014_JSLHR-H-13-0054.

- Bradlow, A., Torretta, G., & Pisoni, D. (1996). Intelligibility of normal speech I: Global and fine-grained acoustic-phonetic talker characteristics. Speech Communication, 20, 255–272. doi:https://doi.org/10.1016/s0167-6393(96)00063-5.

- Canadian Association of Speech Language Pathologists and Audiologists, CASLPA. (2006). Fact sheet: Noise-induced hearing loss.

- Chung, D. Y., Mason, K., Gannon, R. P., & Willson, G. N. (1983). The ear effect as a function of age and hearing loss. Journal of the Acoustical Society of America, 73, 1277–1282.

- Cohen, J. (1988). Statistical power analysis for the behavioural sciences (2nd ed.). Hillsdale, NJ: Erlbaum.

- Corso, J. F. (1959). Age and sex differences in pure-tone thresholds. Journal of the Acoustical Society of America, 31, 498–507.

- Crowley, H. J., & Nabelek, I. (1996). Estimation of client assessed hearing aid performance based upon unaided variables. Journal of Speech and Hearing Research, 39, 19–27.

- D’Alessandro, S. A., & Norwich, K. H. (2007). Identification variability as measure of loudness: An adaptation to gender differences. Canadian Journal of Experimental Psychology, 61(1), 64–70.

- Dancer, J., Krain, M., Thompson, C., Davis, P., & Glen, J. (1994). A cross-sectional investigation of speechreading in adults: Effects of age, gender, practice, and education. The Volta Review, 96, 31–40.

- Daneman, M., & Carpenter, P. A. (1980). Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior, 19(4), 450–466. doi:https://doi.org/10.1016/S0022-5371(80)90312-6.

- Dubno, J. R., Lee, F., Matthews, L. J., & Mills, J. H. (1997). Age-related and gender-related changes in monaural speech recognition. Journal of Speech, Language and Hearing Research, 40, 444–452.

- Ellis, L., Fucci, D., Reynolds, L., & Benjamin, B. (1996). Effects of gender on listeners’ judgments of speech intelligibility. Perceptual and Motor Skills, 83(3), 771–775.

- Foo, C., Rudner, M., Rönnberg, J., & Lunner, T. (2007). Recognition of speech in noise with new hearing instrument compression release settings requires explicit cognitive storage and processing capacity. Journal of the American Academy of Audiology, 18, 553–556. doi:https://doi.org/10.3766/jaaa.18.7.8

- Frost, J. A., Binder, J. R., Springer, J. A., Hammeke, T. A., Bellgowan, P. S. F., Rao, S. M., & Cox, R. W. (1999). Language processing is strongly left-lateralized in both sexes: Evidence from functional MRI. Brain, 122, 199–208.

- Hagerman, B., & Kinnefors, V. (1995). Efficient adaptive methods for measurements of speech perception thresholds in quiet and noise. Scandinavian Journal of Audiology, 24, 71–77.

- Healy, E. W., Yoho, S. E., & Apoux, F. (2013). Band importance for sentences and words re-examined. The Journal of the Acoustical Society of America, 133(1), 463–473.

- Humes, L. E., & Roberts, L. (1990). Speech recognition difficulties of the hearing-impaired elderly: The contributions of audibility. Journal of Speech and Hearing Research, 33, 726–735.

- Irwin, J. R., Whalen, D. H., & Fowler, C. A. (2006). A sex difference in visual influence on heard speech. Perception & Psychophysics, 68, 582–592. doi:https://doi.org/10.3758/BF03208760.

- Jerger, J., Chmiel, R., Stach, B., & Maureen, S. (1993). Gender affects audiometric shape in presbycusis. Journal of the American Academy of Audiology, 4, 42–49.

- Kansaku, K., Yamaura, A., & Kitazawa, S. (2001). Imaging studies on sex differences in lateralization of language areas. Neuroscience Research, 41, 333–337.

- Klasner, E. R., & Yorkston, K. M. (2005). Speech intelligibility in ALS and HD dysarthria: The everyday listener’s perspective. The Journal of Medical Speech Language Pathology, 13(2), 127–140.

- Knecht, S., Drager, B., Deppe, M., Bode, L., Lohmann, H., Floel, A., & Henningsen, H. (2000). Handedness and hemispheric dominance in healthy humans. Brain, 123, 2512–2518.

- Kwon, H. B. (2010). Gender differences in speech intelligibility using speech intelligibility tests and acoustic analyses. The Journal of Advanced Prosthodontics, 2(3), 71–76.

- Lunner, T. (2003). Cognitive function in relation to hearing aid use. International Journal of Audiology, 42(Suppl. 1), S49–S58. doi:https://doi.org/10.3109/14992020309074624.

- Lunner, T., Rudner, M., Rosenbom, T., & Ågren, J. (2016). Using speech recall in hearing aid fitting and outcome evaluation under ecological test conditions. Ear and Hearing, 37(1), 145S–154S. doi:https://doi.org/10.1097/AUD.0000000000000294.

- Markham, D., & Hazan, V. (2004). The effects of talker and listener-related factors on intelligibility for real word, open set perception test. Journal of Speech, Language, and Hearing Research, 47(4), 725–737.

- Mattys, S. L., Davis, M. H., Bradlow, A. R., & Scott, S. K. (2012). Speech recognition in adverse conditions: A review. Language and Cognitive Processes, 27(7–8), 953–978.

- McGuinness, D. (1974). Equating individual differences for auditory input. Psychophysiology, 11, 115–120.

- Moore, D. R., Edmondson-Jones, M., Dawes, P., Fortnum, H., McCormack, A., Pierzycki, R. H., … Snyder, J. (2014). Relation between speech-in-noise threshold, hearing loss and cognition from 40–69 years of age. PLoS ONE, 9(9), e107720.

- Ng, E. H. N., Rudner, M., Lunner, T., & Rönnberg, J. (2015). Noise reduction improves memory for target language speech in competing native but not foreign language speech. Ear and Hearing, 36(1), 82–91. doi:https://doi.org/10.1097/AUD.0000000000000080.

- Ng, E. H. N., Classon, E., Larsby, B., Arlinger, S., Lunner, T., Rudner, M., & Rönnberg, J. (2014). Dynamic relation between working memory capacity and speech recognition in noise during the first six months of hearing aid use. Trends in Hearing, 18, 1–10. doi:https://doi.org/10.1177/2331216514558688.

- Ng, E. H. N., Rudner, M., Lunner, T., Pedersen, M. S., & Rönnberg, J. (2013). Effects of noise and working memory capacity on memory processing of speech for hearing-aid users. International Journal of Audiology, 52(7), 433–441.

- Pearson, J. D., Morrell, C. H., Gordon-Salant, S., Brant, L. J., Metter, E. J., Klein, L. L., et al. (1995). Gender differences in a longitudinal study of age-associated hearing loss. Journal of the Acoustical Society of America, 97, 1196–1205. doi:https://doi.org/10.1121/1.412231.

- Pugh, K. R., Shaywitz, B. A., Shaywitz, S. E., Fulbright, R. K., Byrd, D., Skudlarski, P., … Gore, J. C. (1996). Auditory selective attention: An fMRI investigation. Neuroimage, 4, 159–173. doi:https://doi.org/10.1006/nimg.1996.0067.

- Rogers, D. S., Harkrider, A. W., Burchfield, S. B., & Nabelek, A. K. (2003). The influence of listener’s gender on the acceptance of background noise. Journal of the American Academy of Audiology, 14, 372–382.

- Rönnberg, J., Holmer, E., & Rudner, M. (2019). Cognitive hearing science and ease of language understanding. International Journal of Audiology, 58(5), 247–261. doi:https://doi.org/10.1080/14992027.2018.1551631.

- Rönnberg, J., Lunner, T., Ng, E. H. N., Lidestam, B., Zekveld, A. A., Sörqvist, P., … Stenfelt, S. (2016). Hearing loss, cognition and speech understanding: The n200 study. International Journal of Audiology, 55, 623–642. doi:https://doi.org/10.1080/14992027.2016.1219775.

- Rönnberg, J., Lunner, T., Zekveld, A. A., Sörqvist, P., Danielsson, H., Lyxell, B., … Rudner, M. (2013). The Ease of Language Understanding (ELU) model: theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience, 7, 31. doi:https://doi.org/10.3389/fnsys.2013.00031.

- Rönnberg, J., Rudner, M., Foo, C., & Lunner, T. (2008). Cognition counts: a working memory system for ease of language understanding (ELU). International Journal of Audiology, 47(2), S99–S105. doi:https://doi.org/10.1080/14992020802301167.

- Ruytjens, L., Albers, F., Van Dijk, P., Wit, H., & Willemsen, A. (2006). Neural responses to silent lipreading in normal hearing male and female subjects. European Journal of Neuroscience, 24, 1835–1844. doi:https://doi.org/10.1111/j.1460-9568.2006.05072.x.

- Ruytjens, L., Georgiadis, J. R., Holstege, G., Wit, H. P., Albers, F. W., & Willemsen, A. T. (2007). Functional sex differences in human primary auditory cortex. European Journal of Nuclear Medicine and Molecular Imaging, 34, 2073–2081. doi:https://doi.org/10.1007/s00259-007-0517-z.

- Shaywitz, B. A., Shaywitz, S. E., Pugh, K. R., Constable, R. T., Skudlarski, P., Fulbright, R. K., … Gore, J. C. (1995). Sex differences in functional organisation of the brain for language. Nature, 373, 607–609. doi:https://doi.org/10.1038/373607a0.

- Souza, P. E., Arehart, K., & Neher, T. (2015). Working memory and hearing aid processing: Literature findings, future directions, and clinical applications. Frontiers in Psychology, 6, 1894. doi:https://doi.org/10.3389/fpsyg.2015.01894.

- Strelnikov, K., Rouger, J., Lagleyre, S., Fraysse, B., Deguine, O., & Barone, P. (2009). Improvement in speech reading ability by auditory training: Evidence from gender differences in normally hearing, deaf and cochlear implanted subjects. Neuropsychologia, 47, 972–979. doi:https://doi.org/10.1016/j.neuropsychologia.2008.10.017.

- Yoho, S. E., Borrie, S. A., Barrett, T. S., & Whittaker, D. B. (2019). Are there sex effects for speech intelligibility in American English? Examining the influence of talker, listener, and methodology. Attention, Perception & Psychophysics, 81, 558–570. doi:https://doi.org/10.3758/s13414-018-1635-3.

- Yumba, K. W. (2017). Cognitive processing speed, working memory and the intelligibility of hearing aid-processed speech in persons with hearing impairment. Frontiers in Psychology, 8, 1308. doi:https://doi.org/10.3389/fpsyg.2017.01308.

- Yumba, K. W. (2019). Selected cognitive factors associated with individual variability in clinical measures of speech recognition in noise amplified by fast-acting compression among hearing aid users. Noise and Health, 21, 7–16. doi:https://doi.org/10.4103/nah.NAH_59_18.