ABSTRACT

The need to access historical Earth Observation (EO) data series has increased strongly in the last ten years, particularly for long-term science and environmental monitoring applications. This trend is likely to intensify, driven by the growing interest in global change monitoring, which leads users to request time series of data spanning 20 years and more, and by the need to support the United Nations Framework Convention on Climate Change (UNFCCC). While many satellite observations are accessible from different data centers, analyzing measurements collected by various instruments as a time series is both difficult and critical. Climate research is a big data problem that involves high volumes of measurements, methods for on-the-fly extraction and reduction to keep up with the speed and volume of the data, and the ability to address uncertainties arising from data collection, processing, and analysis. The content of EO data archives is extending from a few years to decades, and their value as a scientific time series is therefore continuously increasing. Hence there is a strong need to preserve EO space data without time constraints and to keep them accessible and exploitable. The preservation of EO space data can also be considered a responsibility of the Space Agencies or data owners, as the data constitute an asset for humankind. This publication describes the activities supported by the European Space Agency relating to Long Time Series generation, together with the relevant best practices and models needed to organise and measure the preservation and stewardship processes. The Data Stewardship Reference Model has been defined to give an overview and to help data owners and space agencies preserve and curate space datasets so that they are ready for long time data series composition and analysis.

1. European Space Agency (ESA) Heritage Data Programme

The Earth Observation part of the Heritage Data Programme covers data from ESA missions and Third Party Missions (TPM) available at ESA through specific agreements nominally starting five years after the effective end of life or five years after end of the agreement.

The ESA Long-Term Data Preservation (LTDP+) Programme consists of three main streams of activities in Earth Observation:

Earth Observation LTDP International Coordination and Cooperation Activities;

Earth Observation Heritage Missions Datasets Consolidation, Access, Preservation and Curation;

Earth Observation Heritage Missions Specific Developments and Evolutions.

The last two areas focus particularly on the rescue, preservation and valorization of ESA historical datasets according to the needs of user communities. The results of user consultations aimed at capturing and understanding Earth Science users’ needs in terms of long-term preservation (including accessibility and exploitability aspects) have highlighted that the preservation of Earth Science data and associated knowledge is vital to support their research and application activities. The same need was also identified in the frame of discussions and cooperation ongoing between the European space members of the Data Access and Preservation (DAP) Working Group and ESA Climate Change Initiative (CCI) representatives and projects (http://cci.esa.int/). The definitions of the datasets composing the Fundamental Climate Data Records (FCDR) (e.g. processing levels and algorithms, formats, quality, orthorectification, etc.) have a major impact on the CCI projects’ products and results and need to be clearly identified and documented.

2. Big data and LTDP+

“Big Data” indirectly addresses long-term data preservation issues: the handling of very large datasets, their curation, valorization, retrieval, manipulation and finally visualization. One of the most relevant “Big Data” aspects is a new way of carrying out scientific research. Increasingly, scientific breakthroughs will be powered by advanced computing capabilities that help researchers manipulate and explore massive datasets. Following experimental, theoretical, and computational science, a “Fourth Paradigm” (The Fourth Paradigm: Data-Intensive Scientific Discovery, 2011) is emerging in scientific research. This refers to the data management techniques and the computational systems needed to manipulate, visualize, and manage large amounts of scientific data.

The main challenge is not only the volume of data, but also its diversity, e.g. in format and type (Granshaw, 2018). Other major challenges are data structure and “data on the move”, i.e. transferring data through networks. The latter is a major inhibitor to jointly using data across distributed archives. Older Science and EO data are recorded on various devices and in different formats. Recovering, reformatting and reprocessing such data is a huge task, as is transcribing the various associated information necessary to understand and use the data. Challenges include capture, curation, storage, search, sharing, transfer, analysis, and visualization. A large proportion of users are no longer domain experts; therefore, data discovery tools, documentation and support are also needed.

3. Long time data series generation

The management of ESA EO data holdings (including data from TPMs archived at ESA) is formally covered within the ESA LTDP Programme starting 5 years after their end of life. The following set of activities is being implemented on the ESA Historical Data Holdings in accordance with the LTDP Core standard documents (e.g. EO Data Preservation Guidelines Best Practices (CEOS, 2019b), EO Preserved Dataset Content (PDSC) (CEOS, 2015a), the Long-Term Preservation of Earth Observation Space Data: Preservation Workflow (CEOS, 2015c)) defined in cooperation with international partners and organizations:

Assessment of archived data and information holdings versus “PDSC” for all ESA and ESA managed TPMs.

Consolidation of Level-0 datasets (and associated information), and generation of two copies to be stored in two different locations.

Ingestion in robotic tape libraries in the format of Open Archival Information System (OAIS) (CCSDS, 2012) standard Submission Information Packages (SIPs).

Full datasets reprocessing, when feasible, for alignment to the most recent or future missions.

Ensuring basic discoverability and dissemination through ESA standard systems.

Progressive historical archives rationalization.

As part of the above activities, a particular focus is put on the generation of long time series of coherent data in support of the Climate Change Initiative requirements and related applications.

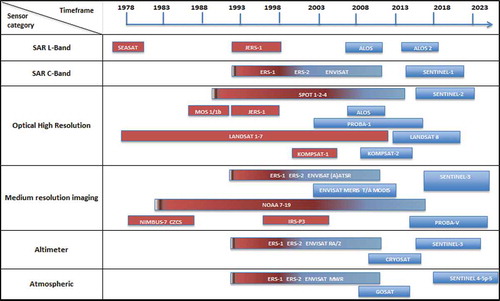

The Long Time Series of data being generated at ESA are shown in Figure 1, where the Historical missions covered in the LTDP Programme are highlighted in red.

4. Data stewardship reference model

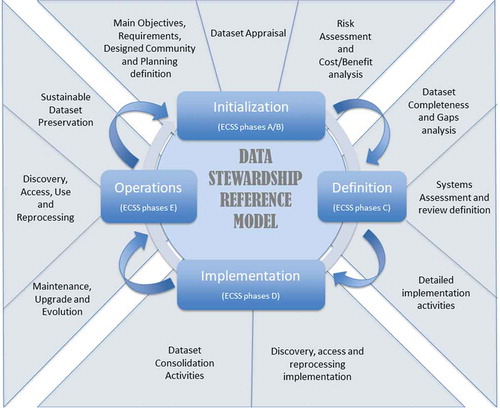

Earth Science data curation and preservation should be addressed during all mission stages: from the initial mission planning, throughout the entire mission lifetime, and during the post-mission phase. The Data Stewardship Reference Model (Figure 2) gives a high-level overview of the steps useful for implementing curation and preservation rules on mission datasets, from initial conceptualization or receipt through the iterative curation cycle. The Data Stewardship Reference Model describes a series of space assets to be applied and used before, during, and/or after the end of an Earth observation mission, in order to ensure that Earth observation space datasets are preserved and valorized. The Stewardship process starts during the initialization phase and continues until the Operations and Maintenance phase. The process needs to be restarted for each new data reprocessing.

The Data Stewardship Reference Model implementation allows the mapping, for each step, with the relevant space assets and Best Practices/Guidelines. An excerpt of the Best Practices, White Papers and Guidelines defined and developed for this scope is listed below:

EO Data Preservation Guidelines (CEOS, 2019b)

Preserved Dataset Content (CEOS, 2015a)

Long-Term Preservation of Earth Observation Space Data: Preservation Workflow (CEOS, 2015c)

WGISS Data Stewardship Maturity Matrix (CEOS, 2020)

EO Data Glossary of Acronyms and Terms (CEOS, 2017b)

Generic EO Dataset Consolidation Process (CEOS, 2015b)

CEOS Persistent Identifiers (CEOS, 2019a)

Associated Knowledge Preservation (CEOS, 2017a)

EO Data Purge Alert Procedure (CEOS, 2016)

Measuring of Earth Observation Data Usage (CEOS, 2018)

Final, consistent, consolidated, and validated “data records” are obtained by applying a consolidation process that consists of the following main steps:

Data collection

Cleaning/pre-processing

Completeness analysis

Processing/reprocessing

In parallel to the data records consolidation process, the data records knowledge, associated information and processing software are also collected and consolidated.
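As an illustration of the completeness analysis step listed above, the following minimal Python sketch reports the time gaps left uncovered by a set of product sensing intervals. It is not the ESA consolidation procedure: the function name `find_gaps`, the `tolerance` parameter and the representation of products as `(start, end)` pairs are assumptions made for this example, and intervals are assumed to lie within the mission time span.

```python
from datetime import datetime, timedelta


def find_gaps(intervals, mission_start, mission_end, tolerance=timedelta(0)):
    """Return (gap_start, gap_end) pairs not covered by any product interval.

    intervals: iterable of (start, end) datetime pairs, in any order.
    tolerance: gaps no longer than this are ignored (hypothetical parameter).
    """
    gaps = []
    cursor = mission_start  # end of the coverage confirmed so far
    for start, end in sorted(intervals):
        if start - cursor > tolerance:
            gaps.append((cursor, start))
        # Overlapping or nested products must not move the cursor backwards.
        cursor = max(cursor, end)
    if mission_end - cursor > tolerance:
        gaps.append((cursor, mission_end))
    return gaps


if __name__ == "__main__":
    t0 = datetime(1991, 8, 1)
    hour = timedelta(hours=1)
    products = [(t0, t0 + hour), (t0 + 2 * hour, t0 + 3 * hour)]
    for gap_start, gap_end in find_gaps(products, t0, t0 + 4 * hour):
        print(gap_start.isoformat(), "->", gap_end.isoformat())
```

A gap list of this kind could then drive the gap-filling activities (for example, re-transcription from heritage media) before reprocessing starts.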

Data stewardship implements and verifies, for the relevant preserved datasets, a set of preservation and curation activities on the basis of requirements defined during the initial phase of the curation exercise. Data preservation activities focus on the long-term preservation of Earth observation datasets and are tailored to mission-specific preservation/curation requirements. They consist of all activities required to ensure the bit integrity of the “preserved dataset” over time, its discoverability and accessibility, and to valorize its (re)-use in the long term (e.g. through metadata/catalogue improvement, processor improvement for algorithm and/or auxiliary data changes and related (re)-processing, linking and improvement of context/provenance information, quality assurance). Preservation activities for digital data records acquired from the space segment and processed on the ground include ensuring the continued availability, confidentiality, integrity and authenticity of data records as legal evidence, to guarantee that data records are not changed or manipulated after generation and reception over the whole continuum of data preservation (archival media technology migration, input/output format alignment, etc.), valorization and curation activities. The use of persistent identifiers for citation is part of the agency's long-term data preservation best practices.

Data curation activities aim at establishing and increasing the value of “preserved datasets” over their lifecycle, at favouring their exploitation, possibly through combination with other data records, and at extending the communities using the datasets. These include activities such as primitive feature extraction, exploitation improvement, data mining, and generation/management of long time data series and collections (e.g. from the same sensor family) in support of specific applications and in cooperation with international partners.

Data stewardship activities refer to the management of an EO dataset throughout its mission life cycle phases and include preservation and curation activities. They include all the processes that involve data management (ingestion, dissemination and provision of access for the designated community) and dataset certification.
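The bit-integrity requirement mentioned above is commonly checked with checksums ("fixity" checks). The sketch below is one possible illustration using SHA-256 from the Python standard library; the function names and the manifest layout (relative path mapped to hex digest) are assumptions for this example and do not describe the ESA archive implementation.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map every file under root (relative path) to its digest."""
    root = Path(root)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root, manifest):
    """Return the sorted names of files that are missing or whose bits changed."""
    root = Path(root)
    failures = []
    for name, expected in manifest.items():
        target = root / name
        if not target.is_file() or sha256_of(target) != expected:
            failures.append(name)
    return sorted(failures)
```

In such a scheme, the manifest would be built once at ingestion and `verify_manifest` re-run after each media migration or copy between locations; an empty result indicates that every recorded file is still bit-identical.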

5. Data management and stewardship maturity matrix

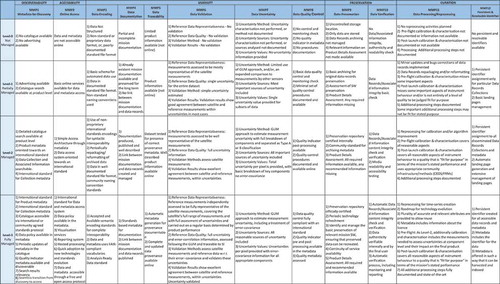

The ongoing definition of the Data Management and Stewardship Maturity Matrix (CEOS, 2020) aims to measure the overall preservation lifecycle and to verify the implemented activities needed to preserve and improve the information content, quality, accessibility, and usability of data and metadata. It can be used to create a stewardship maturity scoreboard of dataset(s) and a roadmap for scientific data stewardship improvement, or to provide data quality and usability information to users, stakeholders, and decision-makers.

In the extended environment of Maturity Matrices and Models, the Maturity Matrix for “Long-Term Scientific Data Stewardship” (WMO, 2015) represents a systematic assessment model for measuring the status of individual datasets.

In general, it provides information on all aspects of the data records, including all activities needed to preserve and improve the information content, quality, accessibility, and usability of data and metadata. This has been used as a starting point for the Data Management and Stewardship Maturity Matrix (Figure 3). In parallel, the Group on Earth Observations (GEO) Data Management Principles Task Force has been tasked with defining a common set of Global Earth Observation System of Systems (GEOSS) Data Management Principles – Implementation Guidelines (DMP-IG). These principles address the need for discovery, accessibility, usability, preservation, and curation of the resources made available through GEOSS.

The content of the Data Management and Stewardship Maturity Matrix represents the result of a combined analysis performed on the DMP-IG and a consultation at European level, with the Long-Term Data Preservation Working Group. The rationales for applying the Data Management and Stewardship Maturity Matrix are:

Providing data quality and usability information to users, stakeholders, and decision-makers;

Providing a reference model for stewardship planning and resource allocation;

Allowing the creation of a roadmap for scientific data stewardship improvement;

Providing detailed guidelines and recommendations for preservation;

Evaluating if the preservation follows best practices;

Giving a technical evaluation of the level of preservation and helping with self-assessment of preservation;

Providing a status of the preservation, without offering information on numbers or averages related to preservation;

Helping to break down problems related to preservation, and to understand the costs associated with each preservation level;

Allowing funding agencies to define target levels to be reached.
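To make the scoreboard and roadmap rationales above more concrete, the following Python sketch compares assessed maturity levels against target levels and lists the components that fall short, largest shortfall first. The component names, the integer level scale and the function `stewardship_roadmap` are hypothetical and are not taken from the CEOS matrix. In keeping with the point that the matrix gives a status rather than averages, the sketch reports per-component results only.

```python
def stewardship_roadmap(assessed, targets):
    """List components below their target level, largest shortfall first.

    assessed: dict of component name -> assessed maturity level (int).
    targets:  dict of component name -> target level set by the funding agency.
    Components missing from `assessed` are treated as level 0 (not assessed).
    Returns a list of (component, assessed_level, target_level) tuples.
    """
    shortfalls = []
    for component, target in targets.items():
        level = assessed.get(component, 0)
        if level < target:
            shortfalls.append((component, level, target))
    # Sort by shortfall (most negative difference first), then by name.
    shortfalls.sort(key=lambda item: (item[1] - item[2], item[0]))
    return shortfalls


if __name__ == "__main__":
    # Hypothetical component names and levels, for illustration only.
    assessed = {"preservation": 3, "accessibility": 1, "usability": 2}
    targets = {"preservation": 3, "accessibility": 3, "usability": 3}
    for component, level, target in stewardship_roadmap(assessed, targets):
        print(f"{component}: level {level}, target {target}")
```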

6. Long-term series preservation pillars and key drivers

The Heritage Data programme embraces five main pillars: Preservation, Discovery, Access, Valorization and Exploitation.

The climate change communities are requesting time series of data spanning 40 years and more to support the United Nations Framework Convention on Climate Change (UNFCCC). Guidelines have been defined by the Global Climate Observing System (GCOS) to help all producers of climate-relevant datasets in the generation, processing, documentation and quality assessment of climate datasets and derived products (i.e. Fundamental Climate Data Records (FCDRs) and the derived Essential Climate Variable (ECV) products) based on observations from surface-based, airborne and satellite-based instruments. The GCOS requirements for data quality, completeness and transparency cover accuracy, stability, and temporal and spatial resolution, and are based on a broad consensus in the international climate community. For the lower-level data sensed by Earth observation space missions, the Quality Assurance Framework for Earth Observation (QA4EO) has been developed through the Committee on Earth Observation Satellites (CEOS) to define a quality assurance strategy based on the fundamental principle that “all Earth observation data and derived product should be associated with a documented and fully traceable documentation”.

Key drivers for the long-term data preservation are:

Data Records: these include raw data and/or Level-0 data, higher-level products, browse images, auxiliary and ancillary data, calibration and validation datasets, and descriptive metadata;

Associated Information: this includes all the Tools used in the Data Records generation, quality control, visualization and value adding, and all the Information needed to make the Data Records understandable and usable by the Designated Community (e.g. mission architecture, products specifications, instruments characteristics, algorithms description, calibration and validation procedures, mission/instruments performances reports, quality-related information). It includes all Data Records Representation Information, Packaging Information and Preservation Descriptive Information according to the OAIS information model (part of this information might be included in the descriptive metadata depending on the specific implementation);

Completeness and coherency among all elements to be preserved to ensure present and future exploitability;

Certifying the quality of data, context and provenance information, data citation;

Assure harmonized data accessibility (from catalogue harvesting to output format alignment);

Valorize the dataset exploitation and multi-sensor cross-fertilization (via data mining and big data analysis exploitation platform and tools).

7. Long time data series projects

The Sentinel missions, along with the Copernicus Contributing Missions, the Earth Explorers and Third Party Missions, are providing routine monitoring of our environment at the global scale, thereby delivering an unprecedented amount of data. This volume also poses a major challenge to achieving the full potential of the data in terms of exploitation. The emergence of large volumes of data (Petabytes era) raises new issues in terms of discovery, access, exploitation, and visualization of data, with profound implications on how users do “data-intensive” Earth Science. In line with LTDP framework guidelines (CEOS, 2015a, 2015b, 2019) for the preservation of long time series, and to foster the exploitation of the datasets with new emerging technologies for big data analysis and reprocessing, various projects were started by the Agency; they are described in detail below.

7.1. ERS-1/2 & ENVISAT Missions

The REAPER (RE-processing of Altimeter Products for ERS mission) project is performing a full reprocessing of both the ERS-1 and the ERS-2 Altimetry missions. The reprocessed dataset spans from the start of the ERS-1 mission in July 1991 to June 2003, when the loss of the ERS-2 on-board data storage capability occurred causing the end of the ERS-2 global mission coverage.

The ERS-1/2 Low Bit Rate (LBR) data consolidation, gap filling and master dataset generation project (to be completed in mid-2015) is further refining the existing Level 0 master datasets for all ERS-1/2 LBR instruments. This activity also requires the re-transcription of data from heritage media to fill identified gaps. The consolidated datasets will then form the basis of future reprocessing campaigns. A similar project is addressing the consolidation of the ERS-1/2 SAR Level 0 master dataset, including the repatriation of SAR sensor data from national and foreign stations in order to complete the master dataset available at ESA facilities.

A follow-on of the REAPER project is the generation of updated orbit solutions for the full ERS-1 and ERS-2 mission periods. New orbit solutions for the ERS-1 and ERS-2 satellites are provided as an update to the solutions generated in the framework of the REAPER project (Reprocessing of Altimeter Products for ERS, funded by ESA). The new solutions cover the full mission period for ERS-2, i.e. the period from June 2003 to July 2011 is taken into account as well. As a result, the ERS-1 and ERS-2 orbit solutions cover, respectively, August 1991 – July 1996 and May 1995 – July 2011. The initial orbit solutions are a combination of two orbit solutions by ESA/ESOC (ESA in Germany) and Delft University of Technology (The Netherlands), using a combination of Satellite Laser Ranging (SLR) and altimeter crossover observations, augmented with Precise Range and Range-Rate Equipment (PRARE) tracking observations when available. In a second step, a final combination is generated that also includes the solution of the German Geodetic Research Institute (DGFI), which has been kindly made available to the REAPER project for inclusion in the combination process (the DGFI solution for ERS-2 ends in February 2006).

The (A)ATSR SWIR Calibration and Cloud Masking project aims at investigating the long-term stability of the Along-Track Scanning Radiometer (ATSR) instrument series, by building on early work on ATSR data and analysing the complete dataset to be made available to the final users. The correct radiometric calibration of the SWIR channel is of crucial importance for any ATSR cloud-masking algorithm, and therefore for SST reprocessing and numerous CCI projects. A review of the SWIR calibration procedures and related parameters, and their extension to ATSR-1 and ATSR-2, is thus of great importance to these activities.

The project will provide a SWIR channel correction option and a set of calibration correction functions applicable to the currently available ATSR dataset.

The ESA Fundamental Data Record for ALTimetry (FDR4ALT) project is part of the ESA Heritage Data Programme (LTDP+), which is aimed at the preservation of ESA’s assets in science data from space, including Earth Observation (EO) data. The ESA LTDP programme funds the preservation, valorization and accessibility of data from ESA and Third Party Missions (TPM) EO “Heritage Missions”, starting 5 years after the end of the satellite nominal operations.

Following the successful decades of ERS and Envisat Altimetry mission operations and data exploitation, the FDR4ALT project shall revisit the long-standing series of Altimetry observations (i.e. associated Level 1 and Level 2 processing, dataset validation and traceability according to the QA4EO standards, provision of uncertainties, generation of Fundamental Data Record time series and Thematic Data Products, etc.) with the objective of improving the performance of the ESA heritage datasets and their continuity with current (e.g. CryoSat-2, Sentinel-3, Jason) and future missions for multi-disciplinary applications and broader data use.

The ERS/Envisat MWR sensor recalibration project aims at deriving a homogeneous and fully error-characterized water vapour Thematic Climate Data Record (TCDR) based on the entire time series of available data for ERS-1, ERS-2 and Envisat. This dataset will provide the backbone for atmospheric correction for ESA’s critical altimetry missions. As such, the revised dataset will have a positive impact on the long-term stability and accuracy of radar altimetry products and will provide uncertainty estimates on the tropospheric correction used in the mean sea level retrievals. High accuracy and stability are especially crucial for sea level trend analysis. This dataset will also provide a unique resource for climate research reaching back 20 years. With the launch of the Sentinel-3 altimetry instrument suite, a long-term perspective exists for extending the dataset.

7.2. JERS-1/SEASAT/ALOS

The JERS-1 and SEASAT mission projects aimed at aligning the respective L-band SAR datasets to the ALOS PALSAR sensor dataset, in terms of processing chain and output format.

The project delivers as output the complete SEASAT and JERS-1 SAR reprocessed datasets in the form of OAIS standard EO Submission Information Packages (SIPs), which will be directly ingested at ESA facilities for archiving and disseminated via the ESA infrastructure.

7.3. AVHRR timeline

Data from the Advanced Very High Resolution Radiometer (AVHRR), flown onboard many National Oceanic and Atmospheric Administration (NOAA) satellites and, since 2006, on the EUMETSAT MetOp satellites, are the only source of long time series based on an almost unchanged sensor over the last 40 years, with daily availability and 1.1 km spatial resolution. This long period fulfils the requirements of the World Meteorological Organization for a statistically sound analysis of climate-change-induced shifts in Essential Climate Variables (ECV). Besides the central NOAA CLASS archive, there are many local archives, some of which are not accessible or whose data holdings are not maintained well enough to be used by research teams. A few archives in Europe have data holdings covering Europe on a daily basis, but these are not homogenized and consolidated. In the frame of ESA’s Long-Term Data Preservation (LTDP) programme, the data holdings of the University of Bern (Switzerland), the European Space Agency and the Dundee Satellite Receiving Station (UK) were combined, redundancy was removed, and the AVHRR LAC (1 km resolution) Level 1b data were validated to prove their readability. In a final step, metafiles and quick looks were generated to be included in a data container (SIP). The metafiles include some quality indicators to guarantee the usability of the dataset, which may help users select the files needed for their own investigations. Finally, the homogenized dataset was transferred to ESA to keep the unique AVHRR images alive for the next 50+ years and to make them accessible to all interested parties via ESA web interfaces.
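The combination of several archive holdings with removal of redundancy, as described above, can be sketched in Python as follows. This is a simplified illustration, not the procedure used in the project: the record fields (`satellite`, `start`, `size`, `archive`), the use of satellite and exact acquisition start time as the duplicate key, and the rule of keeping the largest file among duplicates are all assumptions made for this example. In practice, start times recorded by different receiving stations may differ, so a real procedure would need a more tolerant matching rule.

```python
from datetime import datetime


def merge_holdings(*holdings):
    """Merge catalogue records from several archives, dropping duplicates.

    Each holding is an iterable of dicts with the hypothetical keys
    'satellite', 'start' (datetime), 'size' (bytes) and 'archive'.
    Records sharing (satellite, start) are treated as the same acquisition;
    the largest file is kept, on the assumption that it is the most complete.
    """
    best = {}
    for holding in holdings:
        for record in holding:
            key = (record["satellite"], record["start"])
            current = best.get(key)
            if current is None or record["size"] > current["size"]:
                best[key] = record
    # Return one record per acquisition, ordered by satellite then time.
    return [best[key] for key in sorted(best)]


if __name__ == "__main__":
    t = datetime(1990, 1, 1, 12, 0)
    archive_a = [{"satellite": "NOAA-11", "start": t, "size": 100, "archive": "A"}]
    archive_b = [{"satellite": "NOAA-11", "start": t, "size": 120, "archive": "B"}]
    print(merge_holdings(archive_a, archive_b))
```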

The four to six band multi-spectral AVHRR data constitute a valuable data source for deriving time series of surface parameters, such as snow cover, land surface temperature or vegetation indices for monitoring global change. AVHRR data are residing in various archives worldwide, including several ESA facilities. The AVHRR Curation project aims at consolidating the AVHRR ESA Level 0 data holdings and at defining the technical approaches and solutions for the possible generation of a coherent European AVHRR dataset consisting of the combination of datasets from different organizations. In this context, an AVHRR Level 0 dataset consolidation procedure was defined in coordination with AVHRR data users and experts, to ensure consistency and homogeneity of the datasets. The project has outlined the importance of assuring that the long-term series datasets are:

homogeneous (data gap consolidation procedure shall be in place during operations or at mission end);

consistent (inter-sensor consistency is one of the main requirements from the user community using long-term data series, i.e. preserving the data and the knowledge of the consistency and evolution of a sensor family, together with cross-validation assessment within the same family of sensors, such as AVHRR);

inclusive of a measure of the uncertainty (it is of utmost importance to foster practices for measuring sensor degradation, to preserve the measure of uncertainty in the product with respect to the quality and performance expectations of the original mission requirements, and to keep track of sensor performance degradation).

The whole process for consolidation of the AVHRR data was in line with the recommendations of the CEOS Working Group on Information Systems and Services (WGISS) to fulfil the needs for data management and stewardship maturity.

7.4. MERIS data processing

The 4th MERIS data reprocessing is being finalized and the products should be available in the near future. It focuses on aligning, as far as possible, the MERIS and Sentinel-3/OLCI sensor data processing chains in order to ensure continuity of the products derived from these two instruments.

A major evolution of this reprocessing relates to the data format: the Envisat data format (.N1) is abandoned in favour of the Sentinel-3 Standard Archive Format for Europe (SAFE) format, based on a folder of netCDF files including an XML manifest file.

Moreover, following recommendations by the MERIS QWG, a number of algorithm and auxiliary data evolutions have been implemented.

The source of the meteorological data included in the L1 and L2 products is now ECMWF ERA-Interim.

At level 1:

The L1 calibration is updated based on a reanalysis of the complete mission in-flight calibration dataset. It includes in particular a revised diffuser ageing methodology accounting also for the ageing of the reference diffuser.

The geolocation is improved: parallax and orographic corrected latitude, longitude and altitude are given per pixel.

The a priori surface classification masks (land/sea, tidal areas and in-land-waters) are significantly upgraded and are in line with those used by the OLCI data processing.

At level 2, a large number of algorithm evolutions have also been implemented:

The computation of the surface pressure over land and inland waters is improved taking into account the per-pixel altitude and an improved relationship of barometric pressure with the altitude.

The computations of H2O, O2 and O3 transmissions have been revised and the NO2 absorption has been added in the total gaseous atmospheric transmittance.

The smile correction is performed through the pressure adjustment by using an equivalent Rayleigh optical thickness shift.

The radiometric land/water reclassification has been revised following upgrades of the a priori masks: (1) Reclassified pixels outside the tidal areas remain in their original branch; (2) The Flood and Dry Fallen masks have been added.

Cloud screening is improved thanks to the use of a neural network (NN) derived from the ESA Climate Change Initiative (CCI) programme, and enriched with semi-transparent cirrus cloud detection using O2 absorption.

The Water Vapour retrieval has been upgraded through the use of the 1D-var algorithm.

Handling of molecular scattering is improved for both Marine and Land processing by the use of accurate pixel elevation, better modelling of the relationship between pressure and elevation, better modelling of the Rayleigh optical thickness and improved correction of meteorological variation of atmospheric pressure.

For the Marine processing: (1) the atmospheric correction over coastal waters is better handled thanks to the improvement of the Bright Pixel Atmospheric Correction (BPAC); (2) new aerosol models have been implemented in the LUTs, which have now been extended to several pressure levels for use over inland as well as oceanic waters; (3) vicarious gains in the VIS have been recomputed to account for the modified L1 calibration, atmospheric correction upgrades and updated in-situ measurement datasets, while the vicarious adjustment in the NIR (relative to one band) is discarded because the new BPAC is more robust to errors in the NIR and spectrally aligns the path reflectance for each pixel; (4) the Kd_490 product has been added; (5) the Case 2 Ocean Colour algorithm has been upgraded, taking into account a new bio-optical model derived from the NOMAD dataset and extended for Case 2 water with 5 components, and an atmospheric correction based on the atmosphere model of CoastColour, which includes a variable ground pressure.

For the Land processing, aerosol retrieval over land has been improved with a better modelling of the surface BRDF; the aerosol Angstrom exponent climatology has been updated, and a new product (T442_ALPHA) and a quality index Q have been introduced.

Uncertainties have been added to ocean and land products.

8. Cooperation activities

ESA is cooperating in the Long-Term Data Preservation domain in Earth observation with European partners through the DAP Working Group, formed within the Ground Segment Coordination Body (GSCB), and with other international partners, through participation in various working groups and initiatives.

The LTDP core documents have also been reviewed and approved at international level within CEOS and GEO. A review of the Preserved Dataset Content (CEOS, 2015a) document is currently ongoing in the frame of the Consultative Committee for Space Data Systems (CCSDS) Data Archive Ingestion (DAI) working group, and the relevant processes have already started to formalize it as an ISO standard.

9. Conclusions

The emergence of large volumes of data raises new issues in terms of discovery, access, exploitation, and visualization of data, with profound implications on how users do “data-intensive” Earth Science. In line with the LTDP framework guidelines and strategy for the preservation of long time series, and to foster the exploitation of the datasets with new emerging technologies for big data analysis and reprocessing, various projects were started by the Agency. These projects have outlined the importance of assuring that long-term series datasets are homogeneous (a data gap consolidation procedure shall be in place during operations or at mission end), consistent, and inclusive of a measure of the uncertainty (inter-sensor performance degradation). They have also demonstrated that data and/or metadata must be broken out of silos in order to be mined, and that proximity of data to the streaming processing chain and data locality are vital for cross-fertilization and for the discovery of new products and services for the EO community.

Acknowledgments

The views expressed in the paper are those of the authors and do not necessarily reflect the views of the institutions they belong to. The authors would like to thank the European Space Agency (ESA) for its financial support of the Open Access Article Publishing Charge (APC).

Disclosure statement

No potential conflict of interest was reported by the authors.

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request to the referred email address.

Additional information

Notes on contributors

Iolanda Maggio

Iolanda Maggio has a computer science background with a specialization in Data Science. She has extensive work experience in the space and defence operations environment and in the space project development and operations service industries. She currently holds a Senior Project Manager/Engineering and Operation of Space Mission Support position at the ESA establishment in Frascati (Rome), with the following responsibilities: coordinating a team as Team Leader to manage specific projects relating to mission data operation, preservation, reprocessing and exploitation (SEASAT, JERS-1, AVHRR/NOAA, ERS, ENVISAT, MOS, etc.), and supporting the activities and initiatives of international cooperation and standardization committees for space missions. She also acts as Service Manager, with responsibility for service improvements, KPI management and risk management. In particular, she provides engineering and operations services support to ESA and Third Party Earth Observation missions, supporting the Heritage Data Programme (HDP) in proposal definition. Regarding project management skills, she recently renewed her PMP certification (expiration date 30 January 2021), completed the ITIL certification, and completed a Master Programme in Data Science with honors.

References

- CCSDS. 2012. Reference model for open archival information system. Retrieved from https://public.ccsds.org/pubs/650x0m2.pdf

- CEOS. 2015a. EO preserved dataset content. Retrieved from http://ceos.org/ourwork/workinggroups/wgiss/current-activities/data-stewardship/

- CEOS. 2015b. Generic EO dataset consolidation process. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Interest_Groups/Data_Stewardship/Best_Practices/GenericEarthObservationDataSetConsolidationProcess_v1.0.pdf

- CEOS. 2015c. Long term preservation of earth observation space data: Preservation workflow. Retrieved from http://ceos.org/ourwork/workinggroups/wgiss/current-activities/data-stewardship/

- CEOS. 2016. EO data purge alert procedure. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Interest_Groups/Data_Stewardship/Recommendations/WGISS_DSIG_Data%20Purge%20Alert_WP.pdf

- CEOS. 2017a. CEOS associated knowledge preservation. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Documents/WGISS%20Best%20Practices/CEOS%20Associated%20Knowledge%20Preservation%20Best%20Practices_v1.0.pdf

- CEOS. 2017b. EO data glossary of acronyms and terms. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Interest_Groups/Data_Stewardship/White_Papers/EO-DataStewardshipGlossary_v1.2.pdf

- CEOS. 2018. Measuring of earth observation data usage. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Interest_Groups/Data_Stewardship/Best_Practices/Measuring%20Earth%20Observation%20Data%20Usage%20-%20Best%20Practices_v1.0.docx

- CEOS. 2019a. CEOS persistent identifiers. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Documents/WGISS%20Best%20Practices/CEOS%20Persistent%20Identifier%20Best%20Practices_v1.2.pdf

- CEOS. 2019b. EO data preservation guidelines best practices. Retrieved from http://ceos.org/ourwork/workinggroups/wgiss/current-activities/data-stewardship/

- CEOS. 2020. WGISS data management and stewardship maturity matrix. Retrieved from http://ceos.org/document_management/Working_Groups/WGISS/Interest_Groups/Data_Stewardship/White_Papers/WGISS%20Data%20Management%20and%20Stewardship%20Maturity%20Matrix.pdf

- Granshaw, S. I. 2018. Big data and the fourth paradigm. Retrieved from https://onlinelibrary.wiley.com/doi/10.1111/phor.12255

- The Fourth Paradigm: Data-Intensive Scientific Discovery. 2011, August. Retrieved from https://www.researchgate.net/publication/260686305_The_Fourth_Paradigm_Data-Intensive_Scientific_Discovery_Point_of_View

- WMO. 2015. A unified framework for measuring stewardship practices applied to digital environmental datasets. Ge Peng, Jeffrey L. Privette, et al. Retrieved from https://www.researchgate.net/publication/272827811_A_Unified_Framework_for_Measuring_Stewardship_Practices_Applied_to_Digital_Environmental_Datasets