?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

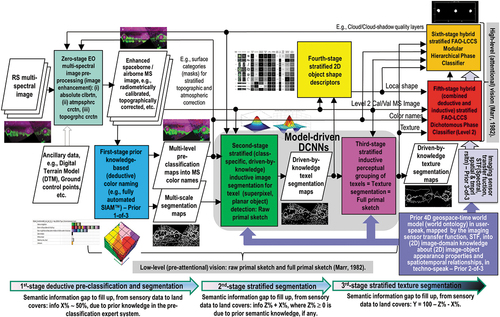

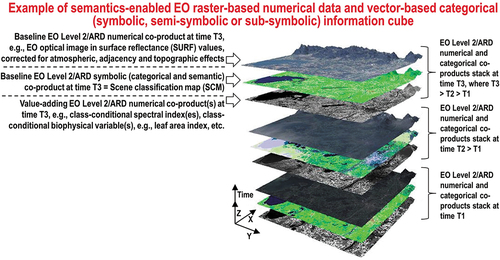

Aiming at the convergence between Earth observation (EO) Big Data and Artificial General Intelligence (AGI), this two-part paper identifies an innovative, but realistic EO optical sensory image-derived semantics-enriched Analysis Ready Data (ARD) product-pair and process gold standard as linchpin for success of a new notion of Space Economy 4.0. To be implemented in operational mode at the space segment and/or midstream segment by both public and private EO big data providers, it is regarded as necessary-but-not-sufficient “horizontal” (enabling) precondition for: (I) Transforming existing EO big raster-based data cubes at the midstream segment, typically affected by the so-called data-rich information-poor syndrome, into a new generation of semantics-enabled EO big raster-based numerical data and vector-based categorical (symbolic, semi-symbolic or subsymbolic) information cube management systems, eligible for semantic content-based image retrieval and semantics-enabled information/knowledge discovery. (II) Boosting the downstream segment in the development of an ever-increasing ensemble of “vertical” (deep and narrow, user-specific and domain-dependent) value–adding information products and services, suitable for a potentially huge worldwide market of institutional and private end-users of space technology. For the sake of readability, this paper consists of two parts. In the present Part 1, first, background notions in the remote sensing metascience domain are critically revised for harmonization across the multi-disciplinary domain of cognitive science. In short, keyword “information” is disambiguated into the two complementary notions of quantitative/unequivocal information-as-thing and qualitative/equivocal/inherently ill-posed information-as-data-interpretation. Moreover, buzzword “artificial intelligence” is disambiguated into the two better-constrained notions of Artificial Narrow Intelligence as part-without-inheritance-of AGI. Second, based on a better-defined and better-understood vocabulary of multidisciplinary terms, existing EO optical sensory image-derived Level 2/ARD products and processes are investigated at the Marr five levels of understanding of an information processing system. To overcome their drawbacks, an innovative, but realistic EO optical sensory image-derived semantics-enriched ARD product-pair and process gold standard is proposed in the subsequent Part 2.

KEYWORDS:

- Analysis Ready Data

- Artificial General Intelligence

- Artificial Narrow Intelligence

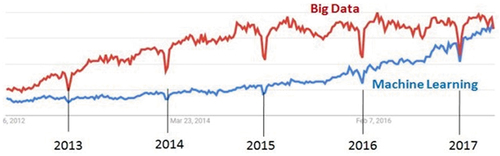

- big data

- cognitive science

- computer vision

- Earth observation

- essential climate variables

- Global Earth Observation System of (component) Systems

- inductive/ deductive/ hybrid inference

- Scene Classification Map

- Space Economy 4.0

- radiometric corrections of optical imagery from atmospheric, topographic, adjacency and bidirectional reflectance distribution function effects

- semantic content-based image retrieval

- 2D spatial topology-preserving/retinotopic image mapping

- world ontology (synonym for conceptual/ mental/ perceptual model of the world)

1. Introduction

For the sake of readability, this methodological and survey paper consists of two parts. The present Part 1 is preliminary to the subsequent Part 2, proposed as (Baraldi et al., Citation2022).

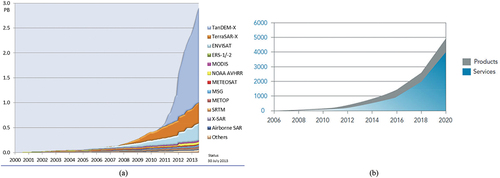

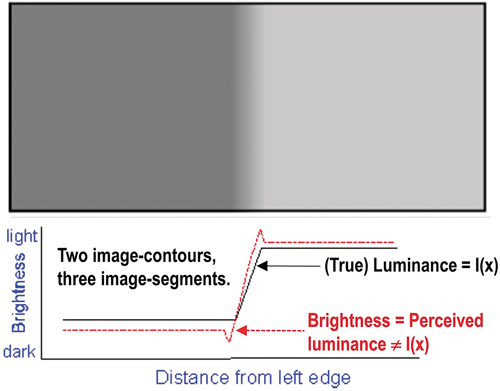

By definition, big data are characterized by the six Vs of volume, variety, veracity, velocity, volatility and value (Metternicht, Mueller, & Lucas, Citation2020), to be coped with by big data management and processing systems. A special case of big data is large image database. An image is a 2D gridded data set, belonging to a (2D) image-plane (Baraldi, Citation2017; Matsuyama & Hwang, Citation1990; Van der Meer & De Jong, Citation2011; Victor, Citation1994). An obvious observation is that all images are data, but not all data are imagery. Hence, not all big data management and processing (understanding) system solutions are expected to perform “well” when input with imagery, but computer vision (CV) systems.

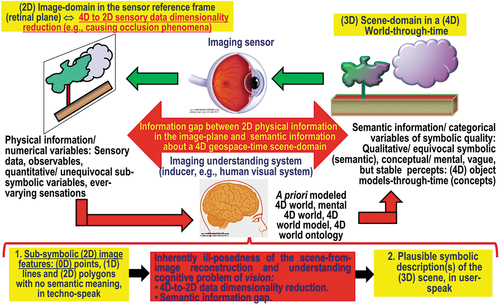

Encompassing both biological vision and CV, vision is an inherently ill-posed cognitive task (sensory data interpretation problem), whose goal is 4D geospace-time scene-from-(2D) image reconstruction and understanding (Matsuyama & Hwang, Citation1990). It requires to fill a so-called semantic information gap, from ever-varying sensory data (subsymbolic numerical variables, sensations) in the (2D) image-domain to stable percepts, equivalent to discrete and finite symbolic concepts, provided with meaning/semantics (Ball, Citation2021; Capurro & Hjørland, Citation2003; Sowa, Citation2000), in a conceptual/ mental/ perceptual model of the observed scene, belonging to a 4D geospace-time physical world-domain (Baraldi, Citation2017; Matsuyama & Hwang, Citation1990).

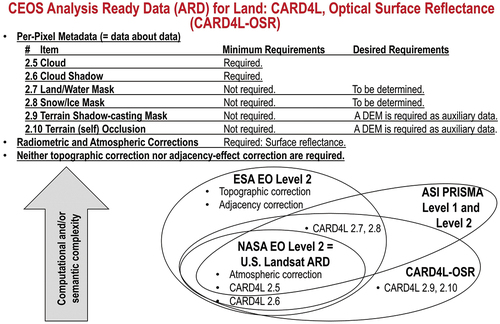

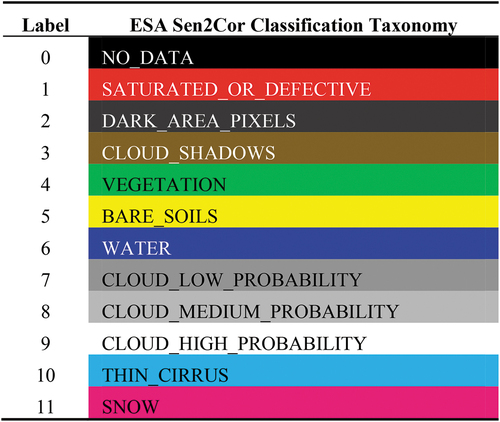

Proposed by the remote sensing (RS) meta-science community in recent years, the notion of Analysis Ready Data (ARD) aims at enabling expert and non-expert end-users of space technology to access/retrieve Earth observation (EO) big data, specifically, EO big 2D gridded data (imagery), ready for use in quantitative EO image analysis of scientific quality, without requiring laborious low-level EO image pre-processing for geometric and radiometric data enhancement and quality assurance, including Cloud and Cloud-shadow quality layers detection in EO optical imagery, preliminary to high-level EO image processing (analysis, interpretation, understanding) (CEOS – Committee on Earth Observation Satellites, Citation2018; Dwyer et al., Citation2018; Helder et al., Citation2018; NASA – National Aeronautics and Space Administration, Citation2019; Qiu et al., Citation2019; USGS – U.S. Geological Survey, Citation2018a, Citation2018c).

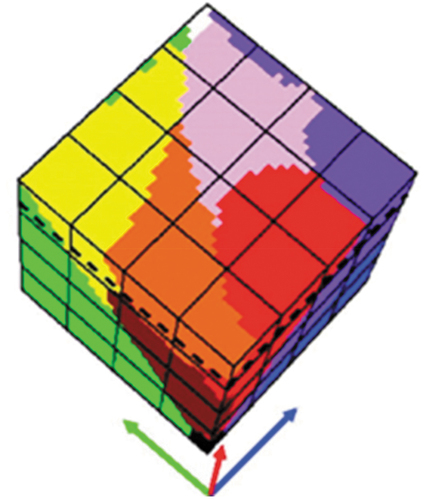

The notion of ARD has been strictly coupled with the concept of EO (raster-based) big data cube, proposed as innovative midstream EO technology by the RS community in recent years (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS – Committee on Earth Observation Satellites, Citation2020; Giuliani et al., Citation2017; Giuliani, Chatenoux, Piller, Moser, & Lacroix, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017).

Unfortunately, a community-agreed definition of EO big data cube does not exist yet, although several recommendations and implementations have been proposed (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS – Committee on Earth Observation Satellites, Citation2020; Giuliani et al., Citation2017, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017). A community-agreed definition of ARD, to be adopted as standard baseline in EO data cubes, does not exist either. As a consequence, in common practice, many EO (raster-based) data cube definitions and implementations do not require ARD to run and, vice versa, an ever-increasing ensemble of new (supposedly better) ARD definitions and/or ARD software implementations is proposed by the RS community, independently of a standardized/harmonized definition of EO big data cube management system.

Aiming at the convergence between EO big data and Artificial General Intelligence (AGI), not to be confused with Artificial Narrow Intelligence (ANI), regarded herein as part-without-inheritance-of AGI in the multi-disciplinary domain of cognitive science (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis, Kumaran, Summerfield, & Botvinick, Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro, Lampinen, Mathewson, Lillicrap, & Raposo, Citation2021; Serra & Zanarini, Citation1990; Varela, Thompson, & Rosch, Citation1991; Wikipedia, Citation2019), this paper identifies an innovative ambitious, but realistic EO optical sensory image-derived semantics-enriched ARD product-pair and process gold standard as linchpin for success of a new notion of Space Economy 4.0, envisioned in 2017 by Mazzucato and Robinson in their original work for the European Space Agency (ESA) (Mazzucato & Robinson, Citation2017).

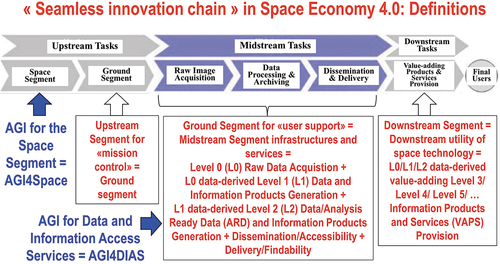

Provided with a relevant survey value in the multi-disciplinary domain of cognitive science, this methodological paper is of potential interest to those relevant portions of the RS meta-science community working with EO imagery (2D gridded data) at any stage of the “seamless innovation chain” required by a new notion of Space Economy 4.0 (Mazzucato & Robinson, Citation2017), ranging from EO image acquisition to low-level EO image pre-processing (enhancement), EO image quality assurance and high-level EO image processing (analysis, interpretation, understanding).

To ease the understanding of the conceptual relationships between topics dealt with by sections located within and across the present Part 1 and the subsequent Part 2 (Baraldi et al., Citation2022) of the two-part paper, a numbered list is provided below as summary of content.

Our original problem description, opportunity recognition, working project’s secondary and primary objectives are presented in Section 2 of the present Part 1.

Problem identification and opportunity recognition: How increasingly popular, but inherently vague/equivocal keywords and buzzwords, including Artificial Intelligence, ARD, EO big data cube and new Space Economy 4.0 (Mazzucato & Robinson, Citation2017), are interrelated in the RS meta-science domain? Before investigating their relationships (dependencies), these inherently vague notions must be better defined (disambiguated, to become better behaved and better understood) as preliminary (secondary) objective of our work. In short, first, keyword “information”, which is inherently ambiguous, although widely adopted in a so-called era of Information and Communications Technology (ICT) (Wikipedia, Citation2009), is disambiguated into the two complementary not-alternative (co-existing) notions of quantitative/unequivocal information-as-thing and inherently ill-posed/ qualitative/ equivocal information-as-data-interpretation.

The notion of quantitative/unequivocal information-as-thing is typical of the Shannon data communication/transmission theory (Baraldi, Citation2017; Baraldi, Humber, Tiede, & Lang, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003; Santoro et al., Citation2021; Shannon, Citation1948).

Investigated by disciplines like philosophy (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Fodor, Citation1998; Peirce, Citation1994), semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e) and linguistics (Ball, Citation2021; Berlin & Kay, Citation1969; Firth, Citation1962; Rescorla, Citation2019; Saba, Citation2020a, Citation2020c), the concept of inherently ill-posed/ qualitative/ equivocal information-as-data-interpretation means there is no meaning/semantics in numbers/ (sensory) data/ numerical variables, i.e. meaning/semantics of a data message is provided by the message receiver/interpretant (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e), where meaning/semantics is always intended as meaning-by-convention/semantics-in-context (Ball, Citation2021; Santoro et al., Citation2021).

If this philosophical premise holds as first principle (axiom, postulate), then a natural question to ask might be: if there is no meaning in numbers, what can machines, specifically, programmable data-crunching machines, a.k.a. computers, learn from numbers, either labeled (supervised, structured, annotated) data or unlabeled (unsupervised, unstructured, without annotation) data (Ball, Citation2021; Rowley, Citation2007; Stanford University, Citation2020; Wikipedia, Citation2020a)?

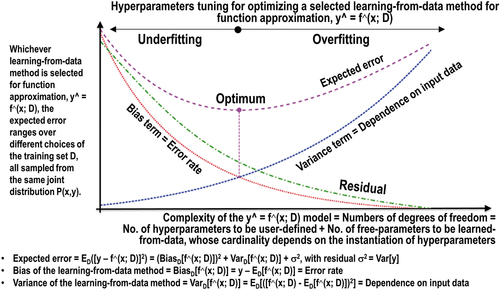

This is tantamount to asking: if there is no meaning in numbers, what does a machine learning-from-data (ML) (Bishop, Citation1995; Cherkassky & Mulier, Citation1998) can learn from data/numbers, together with its special case, the increasingly popular deep-learning-from-data (DL) paradigm (Claire, Citation2019; Copeland, Citation2016; Krizhevsky, Sutskever, & Hinton, Citation2012), where ML is superset-with-inheritance-of DL, whose special case in the CV application domain is the well-known inductive end-to-end learned-from-data deep convolutional neural network (DCNN) framework (Cimpoi et al., Citation2014), such that semantic relationship ‘DCNN ⊂ DL ⊂ ML’ holds?

Our answer would be: A machine, specifically, a computer, synonym for programmable data-crunching machine, whose being is not in-the-world of humans (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020), can learn from numbers, either labeled (supervised, structured, annotated) data or unlabeled (unsupervised, unstructured, without annotation) data, no meaning/semantics because there is none, but statistical data properties exclusively. For example, given a discrete and finite sample of an (input X, output Y) numerical variable pair as supervised reference data set where, by definition, a numerical/quantitative variable is either discrete/countable or continuous/uncountable, to be never confused with a categorical/nominal variable, which is always qualitative, discrete and finite, ML-from-supervised data algorithms can accomplish either data memorization (Bishop, Citation1995; Zhang, Bengio, Hardt, Recht, & Vinyals, Citation2017; Krueger et al., Citation2017), statistical cross-variable correlation estimation or multivariate function regression (Bishop, Citation1995; Cherkassky & Mulier, Citation1998), known that “cross-correlation does not imply causation” and vice versa (Baraldi, Citation2017; Baraldi & Soares, Citation2017; Fjelland, Citation2020; Gonfalonieri, Citation2020; Heckerman & Shachter, Citation1995; Kreyszig, Citation1979; Lovejoy, Citation2020; Pearl, Citation2009; Pearl, Glymour, & Jewell, Citation2016; Pearl & Mackenzie, Citation2018; Schölkopf et al., Citation2021; Sheskin, Citation2000; Tabachnick & Fidell, Citation2014; Varando, Fernandez-Torres, & Camps-Valls, Citation2021; Wolski, Citation2020a, Citation2020b).

If this deduction (modus ponens) rule of inference or forward chaining: (P; P → R) ⇒ R holds, meaning that “if fact P is true and the rule if P then fact R is also true, then we derive by deduction that fact R is also true” (Laurini & Thompson, Citation1992; Peirce, Citation1994), then it means that ML is synonym for “very advanced statistics” and DL as subset-with-inheritance-of ML, ‘DL ⊂ ML’, is synonym for “very, very advanced statistics” (Bills, Citation2020).

If observation/true-fact [‘ML ⊃ DL ⊃ DCNN’ algorithms are capable of “very, very advanced statistical data analysis”, exclusively] holds as premise, then consequence is that, per se, these inductive learning-from-data algorithms are incapable of human-level Artificial Intelligence (Bills, Citation2020; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jordan, Citation2018; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Perez, Citation2017; Practical AI, Citation2020; Russell & Norvig, Citation1995; Saba, Citation2020c; Thompson, Citation2018; Varela et al., Citation1991), whatever Artificial Intelligence might mean (Jordan, Citation2018), in addition to statistical analytics.

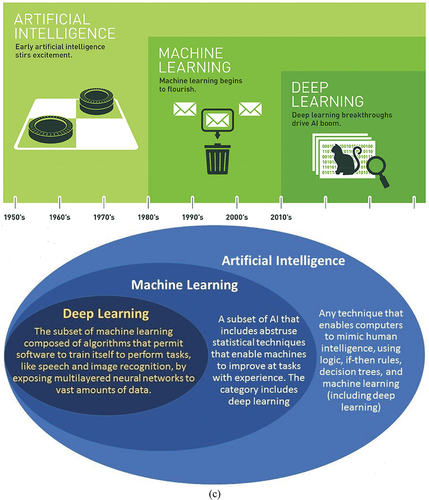

This conjecture is in contrast with the increasingly popular postulate (axiom) that semantic relationship ‘A(G/N)I ⊃ ML ⊃ DL ⊃ DCNN’ = EquationEquation (7)

(7)

(7) holds (Claire, Citation2019; Copeland, Citation2016).

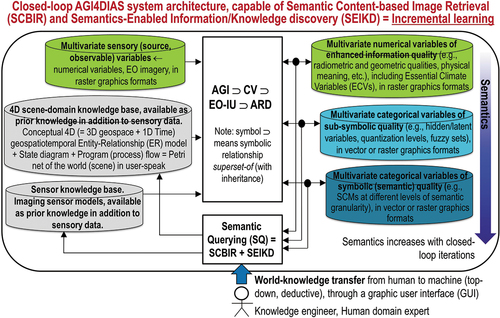

In support of our first conjecture, buzzword “Artificial Intelligence” is disambiguated, as our original second contribution, into the two better-constrained notions of ANI as part-without-inheritance-of AGI. Semantic relationships

EquationEquation (6)

(6)

(6) = ‘[DCNN ⊂ DL ⊂ ML logical-OR Traditional deductive Artificial Intelligence (static expert systems, non-adaptive to data, also known as Good Old-Fashioned Artificial Intelligence, GOFAI)] = ANI’, where ‘ANI → AGI ⊃ CV ⊃ EO image understanding (EO-IU) ⊃ ARD’ = EquationEquation (5)

(5)

(5) ,

are proposed as original working hypotheses, where symbol ‘→’ denotes semantic relationship part-of (without inheritance) pointing from the supplier to the client, not to be confused with semantic relationship subset-of, meaning specialization with inheritance from the superset to the subset, whose symbol is ‘⊃’ (where superset is at left), in agreement with symbols adopted by the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software (Fowler, Citation2003). These postulates (axioms, first principles) contradict the increasingly popular belief that semantic relationship ‘A(G/N)I ⊃ ML ⊃ DL ⊃ DCNN’ = EquationEquation (7)

(7)

(7) holds (Claire, Citation2019; Copeland, Citation2016).

Next, as primary objective of our working project, the better-defined, better-behaved and better-understood notions of ‘DCNN ⊂ DL ⊂ ML → ANI → AGI ⊃ CV ⊃ EO-IU ⊃ ARD’ = EquationEquation (5)

(5)

(5) are investigated for implementation in operational mode as background (enabling) condition, necessary-but-not-sufficient for a new notion of Space Economy 4.0, envisioned in (Mazzucato & Robinson, Citation2017), to become realistic/ feasible/ doable.

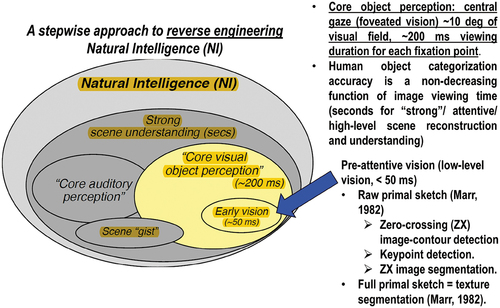

In agreement with Section 2 (summarized above), Section 3 of the present Part 1 investigates the open problem of ‘AGI ← ANI’ across the multidisciplinary domain of cognitive science, where ‘AGI ← ANI’ is regarded as background knowledge of the RS meta-science. The RS meta-science community is expected to transform EO big data into value-adding information products and services (VAPS), suitable for pursuing the United Nations (UN) Sustainable Development Goals (SDGs) at global scale (UN – United Nations, Department of Economic and Social Affairs, Citation2021) in a new notion of Space Economy 4.0 (Mazzucato & Robinson, Citation2017). Provided with a relevant survey value, Section 3 is organized as follows.

Subsection 3.1 – Instantiation of an original minimally dependent and maximally informative (mDMI) set of outcome and process (OP) quantitative quality indicators (Q2Is) (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Baraldi, Boschetti, & Humber, Citation2014; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b), eligible for use in the quantitative quality assessment of ‘AGI ⊃ CV ⊃ EO-IU ⊃ ARD’ products and processes.

Subsection 3.2 – Presentation of the Marr five levels of system understanding (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Marr, Citation1982; Quinlan, Citation2012; Sonka, Hlavac, & Boyle, Citation1994).

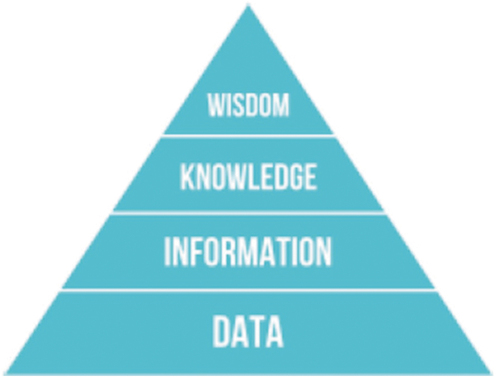

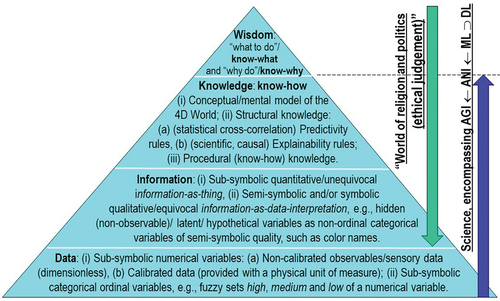

Subsection 3.3 – Augmented Data-Information-Knowledge-Wisdom (DIKW) hierarchical conceptualization (Rowley, Citation2007; Rowley & Hartley, Citation2008; Wikipedia, Citation2020a; Zeleny, Citation1987, Citation2005; Zins, Citation2007), eligible for use in ‘AGI ⊃ CV ⊃ EO-IU ⊃ ARD’ tasks.

Subsection 3.4 – Research and technological development (RTD) of big data cube management systems and AGI as closely related problems that cannot be separated.

Subsection 3.5 – Required by inherently ill-posed AGI systems to become better conditioned for numerical solution, Bayesian inference constraints are proposed.

In Section 4 of the present Part 1, the ‘EO-IU ⊂ CV’ cognitive subproblem of AGI is investigated in detail. In relation to the previous Section 2 and Section 3, Section 4 is organized as follows.

Subsection 4.1. ‘CV ⊃ EO-IU’ is discussed across the multi-disciplinary domain of cognitive science.

Subsection 4.2. Required by inherently ill-posed ‘CV ⊃ EO-IU’ systems to become better conditioned for numerical solution, Bayesian inference constraints are proposed as a specialized version of (subset-of, with-inheritance) those proposed for AGI, as superset-of ‘CV ⊃ EO-IU’, in Subsection 3.5.

Section 5 of the present Part 1 proposes an original multi-objective quality assessment and comparison of existing EO optical image-derived Level 2/ARD product definitions and software implementations. It is organized as follows.

Subsection 5.1. Existing EO optical sensory image-derived Level 2/ARD product definitions and software implementations are critically compared at the Marr five levels of system abstraction, in agreement with the previous Section 2 to Section 4 of the present Part 1.

Subsection 5.2. To overcome limitations of existing EO optical sensory image-derived Level 2/ARD product definitions and software implementations, an innovative semantics-enriched ARD outcome and process gold standard is recommended as a specialized version of (subset-of, with-inheritance) an inherently ill-posed ‘EO-IU ⊂ CV ⊂ AGI’ system, required to become better conditioned for numerical solution as proposed in Subsection 4.2 of the present Part 1.

In relation to the previous Section 2 to Section 5, conclusions of the present Part 1, about the methodological and practical relevance of a new semantics-enriched ARD product-pair and process gold standard as precondition of a new Space Economy 4.0, are reported in Section 6.

For the sake of completeness, Appendix I to Appendix V provide quotes of interest about the increasing disillusionment on ‘DL ⊂ ML → ANI → AGI’ solutions (Bartoš, Citation2017; Bills, Citation2020; Bourdakos, Citation2017; Brendel, Citation2019; Brendel & Bethge, Citation2019; Chollet, Citation2019; Crawford & Paglen, Citation2019; Deutsch, Citation2012; Dreyfus, Citation1965, Citation1991, Citation1992; Etzioni, Citation2017; Expert.ai, Citation2020; Fjelland, Citation2020; Geman, Bienenstock, & Doursat, Citation1992; Gonfalonieri, Citation2020; Hao, Citation2019; Hassabis et al., Citation2017; Hawkins, Citation2021; Ideami, Citation2021; Jordan, Citation2018; Langley, Citation2012; LeVine, Citation2017; Lohr, Citation2018; Lukianoff, Citation2019; Mahadevan, Citation2019; Marcus, Citation2018, Citation2020; Marks, Citation2021; Mindfire Foundation, Citation2018; Mitchell, Citation2019, Citation2021; Nguyen, Yosinski, & Clune, Citation2014; Pearl & Mackenzie, Citation2018; Peng, Citation2017; Perez, Citation2017; Pfeffer, Citation2018; Practical AI, Citation2020; Rahimi, Citation2017; Romero, Citation2021; Russell & Norvig, Citation1995; Saba, Citation2020c; Santoro et al., Citation2021; Strubell, Ganesh, & McCallum, Citation2019; Sweeney, Citation2018a, Citation2018b; Szegedy et al., Citation2013; Thompson, Citation2018; U.S. DARPA – Defense Advanced Research Projects Agency, Citation2018; Wolpert, Citation1996; Wolpert & Macready, Citation1997; Wolski, Citation2020a, Citation2020b; Ye, Citation2020; Yuille & Liu, Citation2019; Zador, Citation2019) mainly stemming from portions of the ML community pre-dating the recent popularity of DL (Claire, Citation2019; Copeland, Citation2016; Krizhevsky et al., Citation2012), but largely ignored to date by meta-sciences like engineering, ML and RS.

Appendix VI provides a list of acronyms, typically found in the existing literature and employed in the present Part 1.

Based on the original contributions of the present Part 1 of this two-part paper, the subsequent Part 2 (Baraldi et al., Citation2022) focuses on an innovative semantics-enriched ARD product-pair and process gold standard, to be investigated for instantiation at the Marr five levels of system understanding, ranging from product and process requirements specification to software implementation. These working project’s final goals are discussed in Section 7 of the Part 2.

At the Marr five levels of system understanding, a novel ambitious, but realistic semantics-enriched ARD product-pair and process gold standard is proposed in Section 8 of the Part 2, based on takeaways about an innovative ARD product and process recommended in Subsection 5.2 of the present Part 1. Section 8 of the Part 2 is organized as follows.

Subsection 8.1. An innovative ARD co-product pair requirements specification is proposed at the Marr first level of abstraction, specifically, product requirements specification.

ARD symbolic (categorical and semantic) co-product requirements specification.

ARD numerical co-product requirements specification.

Subsection 8.2. In relation to Subsection 8.1, ARD software system (process) solutions are investigated at the Marr five levels of information processing system understanding.

ARD-specific software system (process) solutions are investigated at the Marr first to the third level of processing system understanding, namely, process requirements specification, information/knowledge representation and information processing system architecture (design).

As proof of feasibility in addition to proven suitability, existing ARD software subsystem solutions, ranging from software subsystem design to algorithm and implementation, are selected from the scientific literature to benefit from their technology readiness level (TRL) (Wikipedia, Citation2016), at the Marr third to the fifth (shallowest) level of abstraction.

Existing solutions eligible for use in the RTD of a new generation of semantics-enabled EO big raster-based numerical data and vector-based categorical (symbolic, semi-symbolic or subsymbolic) information cube management systems, empowered by a new notion of semantics-enriched ARD product-pair and process gold standard, are discussed in Section 9 of the Part 2.

Conclusions of this two-part paper, focused on an innovative ambitious, but realistic semantics-enriched ARD product-pair and process gold standard, regarded as enabling technology, at the space segment and/or midstream segment, of a new notion of Space Economy 4.0, are summarized in the final Section 10 of the Part 2.

2. Problem identification and opportunity recognition

Findable Accessible Interoperable and Reusable (FAIR) are popular guiding principles for scholarly/scientific digital data and non-data (e.g. analytical pipelines) management (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016). The FAIR principles “highlight the need to embrace good practice by defining essential characteristics of [scholarly/scientific digital data and non-data research objects] to ensure their [transparency, scientific reproducibility and reusability] by both humans and machines: [scholarly/scientific digital data and non-data research objects] should be FAIR” (Lin et al., Citation2020; Wilkinson et al., Citation2016). To stress the paramount difference between digital product/outcome and digital process/analytical pipeline, it is worth mentioning that “the FAIR principles apply not only to ‘data’ in the conventional sense, but also to the algorithms, tools, and workflows [(analytical pipelines)] that led to that data” (Wilkinson et al., Citation2016), see .

Table 1. The Findable Accessible Interoperable and Reusable (FAIR) Guiding Principles for manual (human-driven) and automated (machine-driven) activities attempting to find and/or process scholarly/scientific digital data and non-data research objects – from data in the conventional sense to analytical pipelines (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016). Quoted from (Wilkinson et al., Citation2016), “four foundational principles – Findability, Accessibility, Interoperability, and Reusability (FAIR) – serve to guide [contemporary digital, e.g. web-based] producers and publishers” involved with “both manual [human-driven] and automated [machine-driven] activities attempting to find and process scholarly digital [data and non-data] research objects – from data [in the conventional sense] to analytical pipelines … An example of [scholarly/scientific digital] non-data research objects are analytical workflows, to be considered a critical component of the scholarly/scientific ecosystem, whose formal publication is necessary to achieve [transparency, scientific reproducibility and reusability]”. All scholarly/scientific digital data and non-data research objects, ranging from data in the conventional sense, either numerical or categorical variables as outcome (product), to analytical pipelines (processes), synonym for data and information processing systems, “benefit from application of these principles, since all components of the research process must be available to ensure transparency, [scientific] reproducibility and reusability”. Hence, “the FAIR principles apply not only to ‘data’ in the conventional sense, but also to the algorithms, tools, and workflows that led to that data … While there have been a number of recent, often domain-focused publications advocating for specific improvements in practices relating to data management and archival, FAIR differs in that it describes concise, domain-independent, high-level principles that can be applied to a wide range of scholarly [digital data and non-data] outputs. The elements of the FAIR Principles are related, but independent and separable. The Principles define characteristics that contemporary data resources, tools, vocabularies and infrastructures should exhibit to assist discovery and reuse by third-parties … Throughout the Principles, the phrase ‘(meta)data’ is used in cases where the Principle should be applied to both metadata and data.”

Precondition to make scholarly/scientific digital data and non-data research objects FAIR while preserving them over time is availability of trustworthy digital repositories with sustainable governance and organizational frameworks, reliable infrastructure, and comprehensive policies supporting community-agreed practices. For example, promoted by the Research Data Alliance (RDA – Research Data Alliance, Citation2021), the principles of Transparency, Responsibility, User focus, Sustainability and Technology (TRUST) offer guidance for maintaining the trustworthiness of digital repositories, required to demonstrate essential and enduring capabilities necessary to enable access and reuse of data over time for the communities they serve (Lin et al., Citation2020).

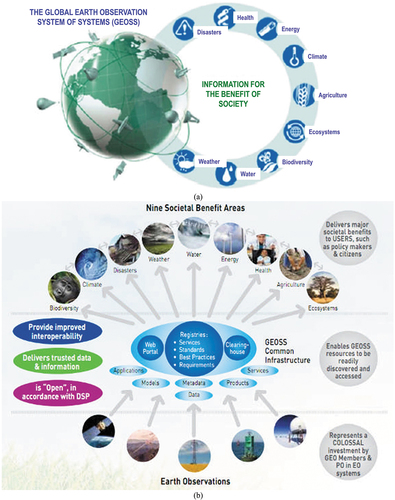

Analogous to the Research Data Alliance community effort (RDA – Research Data Alliance, Citation2021) is the intergovernmental Group on Earth Observations (GEO) exertion to encourage the implementation of Earth observation (EO) data management principles (GEO – Group on Earth Observations, Citation2021) by organizations contributing to the GEO visionary goal of a Global Earth Observation System of (component) Systems (GEOSS) implementation plan for years 2005–2015 (EC – European Commission and GEO – Group on Earth Observations, Citation2014; GEO – Group on Earth Observations, Citation2005, Citation2019; Mavridis, Citation2011), unaccomplished to date (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015; Nativi, Santoro, Giuliani, & Mazzetti, Citation2020), see . In short, the GEOSS objective is “to exploit the growing potential of EO to support decision making” (Nativi et al., Citation2015, p. 21). In 2014, GEO expressed the utmost recommendation that, for the next 10 years 2016–2025 (GEO – Group on Earth Observations, Citation2015), the second mandate of GEOSS is to evolve from an EO big data sharing infrastructure, intuitively referred to as data-centric approach (Nativi et al., Citation2020), to an expert EO data-derived information and knowledge system (Nativi et al., Citation2015, pp. 7, 22), intuitively referred to as knowledge-driven approach (Nativi et al., Citation2020), capable of supporting decision-making by successfully coping with challenges along all six community-agreed degrees (dimensionalities, axes) of complexity of big data (vice versa, equivalent to “high management capabilities” required by big data) (Guo, Goodchild, & Annoni, Citation2020, p. 1), known as the six Vs of volume, variety, veracity, velocity, volatility and value (Metternicht et al., Citation2020).

Figure 1. Group on Earth Observations (GEO)’s implementation plan for years 2005–2015 of the Global Earth Observation System of (component) Systems (GEOSS) (EC – European Commission and GEO – Group on Earth Observations, Citation2014; GEO – Group on Earth Observations, Citation2005, Citation2019; Mavridis, Citation2011), unaccomplished to date and revised by a GEO’s second implementation plan for years 2016–2025 of a new GEOSS, regarded as expert EO data-derived information and knowledge system (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015, Citation2020; Santoro et al., Citation2017). (a) Adapted from (EC – European Commission and GEO – Group on Earth Observations, Citation2014; Mavridis, Citation2011). Graphical representation of the visionary goal of the GEOSS applications. Nine “Societal Benefit Areas” are targeted by GEOSS: disasters, health, energy, climate, water, weather, ecosystems, agriculture and biodiversity. (b) Adapted from (EC – European Commission and GEO – Group on Earth Observations, Citation2014; Mavridis, Citation2011). GEO’s vision of a GEOSS, where interoperability of interconnected component systems is an open problem, to be accomplished in agreement with the Findable Accessible Interoperable and Reusable (FAIR) criteria for scientific data (product and process) management (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016), see . In the terminology of GEOSS, a digital Common Infrastructure is required to allow end-users to search for and access to the interconnected component systems. In practice, the GEOSS Common Infrastructure must rely upon a set of interoperability standards and best practices to interconnect, harmonize and integrate data, applications, models, and products of heterogeneous component systems (Nativi et al., Citation2015, p. 3). In 2014, GEO expressed the utmost recommendation that, for the next 10 years 2016–2025 (GEO – Group on Earth Observations, Citation2015), the second mandate of GEOSS is to evolve from an EO big data sharing infrastructure, intuitively referred to as data-centric approach (Nativi et al., Citation2020), to an expert EO data-derived information and knowledge system (Nativi et al., Citation2015, pp. 7, 22), intuitively referred to as knowledge-driven approach (Nativi et al., Citation2020). The formalization and use of the notion of Essential (Community) Variables and related instances (see ) contributes to the process of making GEOSS an expert information and knowledge system, capable of EO sensory data interpretation/transformation into Essential (Community) Variables in support of decision making (Nativi et al., Citation2015, p. 18, Citation2020). By focusing on the delivery to end-users of EO sensory data-derived Essential (Community) Variables as information sets relevant for decision-making, in place of delivering low-level EO big sensory data, the Big Data requirements of the GEOSS digital Common Infrastructure are expected to decrease (Nativi et al., Citation2015, p. 21, Citation2020).

Table 2. Essential climate variables (ECVs) defined by the World Climate Organization (WCO) (Bojinski et al., Citation2014), pursued by the European Space Agency (ESA) Climate Change Initiative’s parallel projects (ESA – European Space Agency, Citation2017b, Citation2020a, Citation2020b) and by the Group on Earth Observations (GEO)’s second implementation plan for years 2016–2025 of the Global Earth Observation System of (component) Systems (GEOSS) (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015, Citation2020; Santoro et al., Citation2017), see . In the terrestrial layer, ECVs are: River discharge, water use, groundwater, lakes, snow cover, glaciers and ice caps, ice sheets, permafrost, albedo, land cover (including vegetation types), fraction of absorbed photosynthetically active radiation, leaf area index (LAI), above-ground biomass, soil carbon, fire disturbance, soil moisture. All these ECVs can be estimated from Earth observation (EO) imagery by means of either physical or semi-empirical data models, e.g. Clever’s semi-empirical model for pixel-based LAI estimation from multi-spectral (MS) imagery (Van der Meer & De Jong, Citation2011).

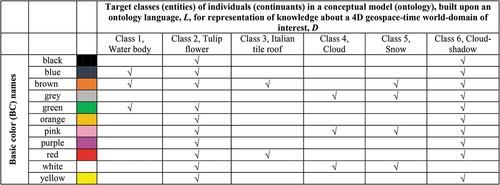

For example, in GEOSS, an important aspect related to property Value listed among the six Vs featured by EO big data is the identification, formalization and use of Essential (Community) Variables (Nativi et al., Citation2015, Citation2020; Santoro, Nativi, Maso, & Jirka, Citation2017). The Horizon 2020 ConnectinGEO project proposed a broad definition of Essential (Community) Variables (Santoro et al., Citation2017): “a minimal set of variables that determine the system’s state and developments, are crucial for predicting system developments, and allow us to define metrics that measure the trajectory of the system”. Intuitively, Essential (Community) Variables can be defined as EO sensory data-derived highly informative (high-level) variables, either numerical (continuous or discrete) or categorical (subsymbolic, semi-symbolic or symbolic, refer to this Section below), required for study, reporting, and management of real-world phenomena, related to any of the various components of the system Earth (e.g. oceans, land surface, solid Earth, biosphere, cryosphere, atmosphere and ionosphere) and their interactions (Nativi et al., Citation2020), in a specific scientific community and/or specific societal domain (Nativi et al., Citation2015, p. 18), including any of the nine “Societal Benefit Areas” targeted by GEOSS, namely, disasters, health, energy, climate, water, weather, ecosystems, agriculture and biodiversity, see .

A popular community-specific ensemble of Essential (Community) Variables is that identified, formalized and adopted by the World Climate Organization (WCO) (Bojinski et al., Citation2014), whose Essential Climate Variables (ECVs) are either numerical or categorical, see . If numerical, then ECVs are always provided with a physical meaning, a physical unit of measure and a physical range of change, such as biophysical variables, e.g. leaf area index, above-ground biomass, etc. If categorical, then ECVs, specifically, land cover, are discrete, finite and symbolic (i.e. provided with meaning/semantics in a conceptual world model, refer to this Section below).

The ongoing GEO activity on the identification, formalization and use of EO sensory data-derived Essential (Community) Variables (such as ECVs, see ) contributes to the process of making GEOSS an expert information and knowledge system, capable of EO sensory data interpretation/transformation into Essential (Community) Variables in support of decision making (Nativi et al., Citation2015, p. 18, Citation2020). It means that only high-level Essential (Community) Variables, rather than low-level EO big sensory data, should be delivered by GEOSS to end-users of spaceborne/airborne EO technologies for decision-making purposes. By focusing on the delivery to end-users of EO sensory data-derived Essential (Community) Variables as information sets relevant for decision-making, in place of delivering low-level EO big sensory data, the Big Data requirements of the GEOSS digital Common Infrastructure are expected to decrease (Nativi et al., Citation2015, p. 21, Citation2020), see .

To take into account the fundamental difference between product/outcome and process/analytical pipeline, it is worth observing that term reusability in the FAIR principles for scientific digital data and non-data research objects management (see ) is conceptually equivalent to tenet regularity adopted by the popular engineering principles of structured (data and information processing) system design, encompassing modularity, hierarchy and regularity (Lipson, Citation2007), considered neither necessary nor sufficient, but highly recommended for system scalability (Page-Jones, Citation1988).

To the same extent, term interoperability (opposite of heterogeneity) in the FAIR principles for scholarly/scientific digital data and non-data research objects (see ) becomes, in the domain of analytical pipelines/processes, the tenet of data and information processing system (and/or component system, as functional unit) interoperability. System interoperability is typically defined as “the ability of systems to provide services to and accept services from other systems and to use the services so exchanged to enable them to operate effectively together” (Wikipedia, Citation2018). According to (ISO/IEC – International Organization for Standardization and International Electrotechnical Commission, Citation2015), system (and/or functional unit) interoperability is “the capability to communicate, execute programs, or transfer data among various functional units [component systems] in a manner that requires the user to have little or no knowledge of the unique characteristics of those units”; in short, it is “the capability of two or more functional units [component systems] to process data cooperatively”.

For example, the notion of “System of [component] Systems” proposed in GEOSS (see ) and the related “System of [component] Systems Engineering” process, “emerged in many fields of applications to address the common problem of [connecting and] integrating many [already existing or yet to be developed complex] independent autonomous systems, frequently of large dimensions, [each with its own objectives, requirements, mandate and governance], in order to satisfy a global [large-scale] goal [where component systems can leverage each other so that the overall System of Systems becomes much more than the sum of its component systems], while keeping [component systems] autonomous. System of [component] Systems can be usefully described as large-scale integrated systems that are heterogeneous and consist of [component] subsystems that are independently operable on their own, but are networked together for a common goal. System of [component] Systems Engineering solutions are necessary to address important challenges, such as: (i) Large-scale: in a System of [component] Systems, directing subsystems to a central task often introduces new challenging problems that for small systems may reduce the advantage of participating in a System of [component] Systems. Therefore, for small systems other solutions may be more effective and efficient. (ii) Heterogeneity: homogeneous/harmonized systems may be merged in an integrated system without the need of System of [component] Systems Engineering actions. (iii) Independent operability: the realization of a System of [component] Systems must not affect the normal and usual working of the composing systems. The System of [component] Systems Engineering needs to implement interoperability agreements supplementing without supplanting the existing ones. (iv) Effective networking: the composing [component] systems need to intercommunicate to achieve the common goal” (Nativi et al., Citation2015, p. 2).

To accomplish effective networking, in the terminology of GEOSS, a digital Common Infrastructure is required to allow end-users to search for and access to the interconnected component systems. To reach its goal, the GEOSS Common Infrastructure must rely upon a set of interoperability standards and best practices to interconnect, harmonize and integrate data, applications, models, and products of heterogeneous component systems (Nativi et al., Citation2015, p. 3), see .

According to the existing literature, there are three levels of system interoperability (opposite of heterogeneity), corresponding to three generations of data and information processing systems.

First lexical/communication level of system interoperability, involving computer system and data communication protocols, data types and formats, operating systems, transparency of location, distribution and replication of data, etc. (Sheth, Citation2015; Wikipedia, Citation2018).

Second syntax/structural level of system interoperability. “Syntactic interoperability only focuses on the technical ability of systems to exchange data” (Hitzler et al., Citation2012). Intuitively, it is related to form, not content/ meaning/ semantics. According to Yingjie Hu, the term syntactics is in contrast with “the term semantics, which refers to the meaning of expressions in a language” (Hu, Citation2017). Syntactic interoperability of component systems is involved with query languages and user interfaces (Sheth, Citation2015; Wikipedia, Citation2018) at the two Marr levels of abstraction of an information processing system known as information/knowledge representation and structured system design (architecture) (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Marr, Citation1982; Quinlan, Citation2012; Sonka et al., Citation1994) (refer to the farther Subsection 3.2). For example, in traditional syntactic analysis (syntactic pattern recognition), a syntactic classifier assigns an input pattern (a word) to an appropriate class (of words) depending on whether or not that word can be generated by a class-specific grammar. When a class description grammar is regular, also known as type 3, then syntactic analysis is simple, because the grammar can be instantiated by a finite non-deterministic automaton (Sonka et al., Citation1994).

Third semantic/ontological level of system interoperability (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Bittner, Donnelly, & Winter, Citation2005; Green, Bean, & Hyon Myaeng, Citation2002; Hitzler et al., Citation2012; Kuhn, Citation2005; Laurini & Thompson, Citation1992; Matsuyama & Hwang, Citation1990; Nativi et al., Citation2015, Citation2020; Obrst, Whittaker, & Meng, Citation1999; Sheth, Citation2015; Sonka et al., Citation1994; Sowa, Citation2000; Stock, Hobona, Granell, & Jackson, Citation2011; Wikipedia, Citation2018; Hu, Citation2017), which is increasingly domain-specific.

In Kuhn (Citation2005), semantic/ontological interoperability is considered the technical analogue to human communication and cooperation.

As reported above, “the term semantics refers to the meaning of expressions in a language and is in contrast with the term syntactics” (Hu, Citation2017).

For example, geospatial semantics is a recognized subfield in geographic information science (GIScience) (Buyong, Citation2007; Couclelis, Citation2010, Citation2012; Ferreira, Camara, & Monteiro, Citation2014; Fonseca, Egenhofer, Agouris, & Camara, Citation2002; Goodchild, Yuan, & Cova, Citation2007; Hitzler et al., Citation2012; Kuhn, Citation2005; Longley, Goodchild, Maguire, & Rhind, Citation2005; Maciel et al., Citation2018; Sheth, Citation2015; Sonka et al., Citation1994; Stock et al., Citation2011; Hu, Citation2017). In more detail, “geospatial semantics adds the adjective geospatial in front of semantics, and this addition both restricts and extends the initial applicable area of semantics. On one hand, geospatial semantics focuses on the expressions that have a connection with geography rather than any general expressions; on the other hand, geospatial semantics enables studies on not only linguistic expressions, but also the meaning/semantics of geographic places [in the physical world-domain] and geospatial data [in the domain of digital machines, like in Geographic Information Systems (GIS)]. Kuhn defines geospatial semantics as ‘understanding GIS contents, and capturing this understanding in formal theories’ (Kuhn, Citation2005). This definition can be divided into two parts: understanding and formalization. The understanding part triggers the question: who is supposed to understand the GIS content, people or machines? When the answer is “people”, research in geospatial semantics involves human cognition of geographic concepts and spatial relations; whereas when the answer is “machines”, it can involve research on the semantic interoperability of distributed systems, digital gazetteers, and geographic information retrieval. The second part of the definition proposes to capture this understanding through formal theories. Ontologies, as formal specifications of concepts and relations, have been widely studied and applied in geospatial semantics and formal logics, such as first-order logic and description logics, are often employed to define the concepts and axioms in an ontology [conceptual/ mental/ perceptual model of the world-domain of interest]. While Kuhn’s definition includes these two parts, research in geospatial semantics is not required to have both – one study can focus on understanding, while another one examines formalization” (Hu, Citation2017).

Intuitively, “beyond the ability of two or more computer systems to exchange information [in compliance with the first lexical/communication level and the second syntax/structural level of system interoperability], semantic interoperability is the ability to automatically interpret the information exchanged meaningfully and accurately [i.e. to automatically and correctly understand the meaning/semantics of an exchanged message] in order to produce useful results as defined by the end users of both systems. To achieve semantic interoperability, both sides must refer to a common information exchange reference model” (Wikipedia, Citation2018), such as a world ontology, synonym for conceptual/ mental/ perceptual model of the 4D geospace-time physical world, to be agreed upon by members of a community before use by the community (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Green et al., Citation2002; Hitzler et al., Citation2012; Laurini & Thompson, Citation1992; Matsuyama & Hwang, Citation1990; Obrst et al., Citation1999; Sonka et al., Citation1994; Sowa, Citation2000; Stock et al., Citation2011). In other words, “[in a system of component systems, say, in an enterprise,] each system (or object of a system) can map from its own conceptual model [mental model of the world, world ontology] to the conceptual model of other systems, thereby ensuring that the meaning [semantics] of their information is transmitted, accepted, understood, and used across the enterprise” (Obrst et al., Citation1999). “The content [meaning, semantics] of the information exchange requests are unambiguously defined: what is sent is the same as what is understood” (Wikipedia, Citation2018).

To guarantee semantic/ontological interoperability of component systems, “the subject of ontology is the study of the categories of things that exist or may exist in some domain. The product of such a study, called an ontology, is a catalog of the types of things that are assumed to exist in a domain of interest D from the perspective of a person who uses a language L for the purpose of talking about D“ (Sowa, Citation2000, p. 492). In an ontology, which is function of pair (D, L), “categories of things, expressed in L, that exist or may exist in an application domain, D, represent the ontological commitments of the ontology designer or knowledge engineer” (Sowa, Citation2000, p. 134). In practice, “ontologies are tools for specifying the semantics of terminology systems in a well-defined and unambiguous manner [community-agreed upon]. They are used to improve communication either between humans or computers by specifying the semantics [meaning] of the symbolic apparatus used in the communication process” (Bittner et al., Citation2005).

In essence, “high-level semantic information availability reduces the problem of knowing the contents and structure of many sensory data and low-level information sources [at the first lexical/communication level or second syntax/structural level of system interoperability] to the problem of knowing the content of intuitive-to-use, high-level domain-specific (specialized) ontologies, which a [human] end-user familiar with the application domain is likely to know or understand easily” (Sheth, Citation2015), see .

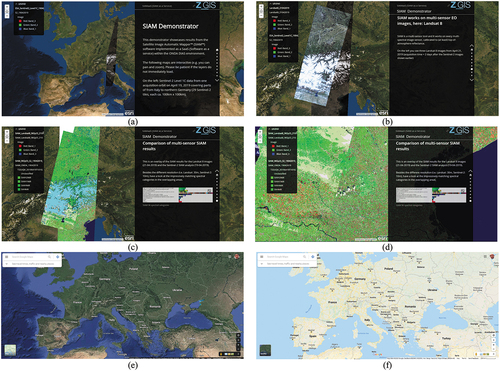

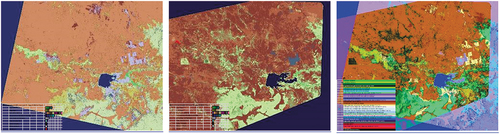

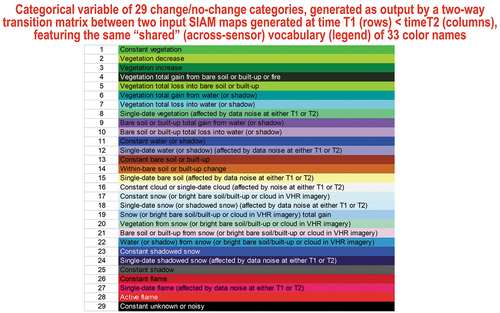

Figure 2. Two examples of semantic/ontological interoperability over heterogeneous sensory data sources (true-facts, observables). The first example is provided by the demonstrator of the Satellite Image Automatic Mapper™ (SIAM™) lightweight computer program (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi, Puzzolo, Blonda, Bruzzone, & Tarantino, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b), installed onto the Serco ONDA Data and Information Access Services (DIAS) Marketplace (Baraldi, Citation2019a). This demonstrator works as proof-of-concept of an Artificial General Intelligence (AGI) (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC – European Commission, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Jordan, Citation2018; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Practical AI, Citation2020; Saba, Citation2020c; Santoro et al., Citation2021; Sweeney, Citation2018a; Thompson, Citation2018; Wolski, Citation2020a, Citation2020b), suitable for Earth observation (AGI4EO) applications, namely, ‘AGI for Data and Information Access Services (DIAS) = AGI4DIAS = AGI-enabled DIAS = Semantics-enabled DIAS 2.0 (DIAS 2nd generation) = AGI + DIAS 1.0 + Semantic content-based image retrieval (SCBIR) + Semantics-enabled information/knowledge discovery (SEIKD)’ = EquationEquation (1)

(1)

(1) , envisioned by portions of the existing literature (Augustin, Sudmanns, Tiede, & Baraldi, Citation2018; Augustin, Sudmanns, Tiede, Lang, & Baraldi, Citation2019; Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi, Tiede, Sudmanns, Belgiu, & Lang, Citation2016; Baraldi, Tiede, Sudmanns, & Lang, Citation2017; Dhurba & King, Citation2005; FFG – Austrian Research Promotion Agency, Citation2015, Citation2016, Citation2018, Citation2020; Planet, Citation2018; Smeulders, Worring, Santini, Gupta, & Jain, Citation2000; Sudmanns, Augustin, van der Meer, Baraldi, and Tiede, Citation2021; Sudmanns, Tiede, Lang, & Baraldi, Citation2018; Tiede, Baraldi, Sudmanns, Belgiu, & Lang, Citation2017). In the present work, an integrated AGI4DIAS infrastructure = ‘AGI + DIAS 1.0 + SCBIR + SEIKD = DIAS 2.0ʹ is proposed as viable alternative to, first, traditional metadata text-based image retrieval systems, such as popular EO (raster-based) data cubes (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS – Committee on Earth Observation Satellites, Citation2020; Giuliani et al., Citation2017, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017), including the European Commission (EC) DIAS 1st generation (DIAS 1.0) (EU – European Union, Citation2017, Citation2018), and, second, prototypical content-based image retrieval (CBIR) systems, whose queries are input with text information, summary statistics or by either image, object or multi-object examples (Datta, Joshi, Li, & Wang, Citation2008; Kumar, Berg, Belhumeur, & Nayar, Citation2011; Ma & Manjunath, Citation1997; Shyu et al., Citation2007; Smeulders et al., Citation2000; Smith & Chang, Citation1996; Tyagi, Citation2017). Typically, existing DIAS and prototypical CBIR systems are affected by the so-called data-rich information-poor (DRIP) syndrome (Ball, Citation2021; Bernus & Noran, Citation2017). Figure captions are as follows. (a) Mosaic of twenty-nine 12-band Sentinel-2 images, acquired across Europe on 19 April, 2019, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values, depicted in true-colors, 10 m resolution, equivalent to a QuickLook™ technology. (b) Mosaic of three 11-band Landsat-8 images acquired across Europe on 21 April, 2019, radiometrically calibrated into TOARF values, depicted in true-colors, 30 m resolution, equivalent to a QuickLook technology. (c) Overlap between two SIAM’s output maps in semi-symbolic color names (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b), automatically generated in near real-time from, respectively, the input 10 m resolution Sentinel-2 image mosaic, shown in Figure 2(a), and the 30 m resolution Landsat-8 image mosaic shown in Figure 2(b). The same map legend applies to the two input data sets. Semantic (symbolic) interoperability is accomplished by SIAM, independent of the input data source. In practice, SIAM instantiates a QuickMap™ technology, where a categorical map, provided with its discrete and finite map legend, is sensory data-derived automatically, without human-machine interaction and in near real-time, either on-line or off-line. (d) Zoom-in of the two SIAM maps, featuring the same semantics, independent of changes in the input sensory data source. In practice, SIAM accomplishes semantic interoperability by featuring robustness to changes in input data and scalability to changes in sensor specifications. (e) Second example of semantic interoperability over heterogeneous sensory data sources. Taken from Google Earth = Google Maps_Satellite View, it is equivalent to a QuickLook technology. It shows multi-source sensory data (quantitative/unequivocal information-as-thing) (Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003), equivalent to subsymbolic numerical variables, to be interpreted by users. (f) Taken from Google Maps_Map View (qualitative/equivocal information-as-data-interpretation) (Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003), equivalent to a symbolic interpretation of Google Maps_Satellite View data. In practice, Google Maps_Map View works as QuickMap technology. To be run automatically and in near real-time, either on-line or off-line, a QuickMap technology accomplishes data compression through discretization/categorization, together with interpretation of a continuous or discrete numerical variable belonging to a (2D) image-plane into a discrete and finite categorical variable provided with semantics, belonging to an ontology (mental model, conceptual model) of the 4D geospace-time real-world (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990).

For example, “in a multidisciplinary environment such as GEOSS, the semantic interoperability aspects acquire a particular importance. Different [component] systems may describe their resources according to different domain semantics. GEOSS addresses the resulting semantic heterogeneity consulting a set of interconnected and autonomous semantic assets, i.e. thesauri, controlled vocabularies and taxonomies” (Nativi et al., Citation2015, p. 13). In this regard, starting from 2016 as part of the new GEO’s task of developing an innovative GEOSS information and knowledge base, built upon the pre-existing GEOSS big data base, ”the definition, estimation and management of Essential (Community) Variables (see ) helps semantic interoperability development in a significant way, by contributing to introduce a common lexicon and an unambiguous formalization of the features and behaviors of these variables for a given scope” (Nativi et al., Citation2020).

To ease the understanding of the aforementioned three levels of system interoperability, let us observe that the third semantic/ontological level of system interoperability appears related to the interoperability levels I1 and I2 of the FAIR guiding principles for scientific data (product and process) management (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016), see .

Moreover, it is worth recalling here that, in 2017, to create a Digital Single Market in Europe, where public administrations can collaborate digitally to develop seamless digital services and data flows, the European Commission (EC) proposed a European Interoperability Framework (EC – European Commission, Citation2021). The conceptual model of a European Interoperability Framework for an integrated public service governance, promoting the idea of “interoperability-by-design” as a standard approach for the design and operation of European public services, comprises four levels of interoperability: (i) legal interoperability, (ii) organizational interoperability, (iii) technical interoperability and (iv) semantic interoperability.

Intuitively, the third technical interoperability level of governance for an integrated public service promoted by the European Interoperability Framework encompasses the aforementioned first lexical/communication level and second syntax/structural level of system interoperability (Sheth, Citation2015; Wikipedia, Citation2018), while the fourth semantic interoperability level of governance of the European Interoperability Framework is one-to-one related to the aforementioned third semantic/ontological level of system interoperability (Bittner et al., Citation2005; Kuhn, Citation2005; Sheth, Citation2015; Sonka et al., Citation1994; Sowa, Citation2000; Wikipedia, Citation2018).

Independently of existing “concise, domain-independent, high-level principles [like FAIR or TRUST] that can be applied to a wide range of [scholarly/scientific digital data and non-data] research objects/outputs – from data [in the conventional sense] to analytical pipelines” (Wilkinson et al., Citation2016), the scientific attention of the present paper is totally focused on the semantic/ontological third-level of system interoperability to be accomplished by the ongoing research and technological development (RTD) of an innovative EO system of component systems, like GEOSS (see ), undertaken by the remote sensing (RS) meta-science community at the intergovernamental level of GEO in the last twenty years (refer to references listed in this Section above).

Like engineering, RS is a meta-science, i.e. it is an “applied” science of “basic” sciences (Wikipedia, Citation2020b). Its goal is to transform observations (sensory data, true-facts) of the physical 4D geospace-time real-world, together with information and knowledge about the world provided by other scientific disciplines, into useful (value-adding) user- and context-dependent information about the world and/or solutions in the world (Couclelis, Citation2012; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020).

According to Amit Sheth (Citation2015), future data and information processing systems can become increasingly user- and domain-specific/ specialized/ “vertical”/ deep and narrow “minded” while accomplishing a third semantic/ontological level of system interoperability by linking (relating, associating, matching) automatically value-adding information, inferred from ever-varying sensory data, with a stable/hard-to-vary (Matsuyama & Hwang, Citation1990; Sweeney, Citation2018a) ontology (Bittner et al., Citation2005) of the 4D geospace-time physical world, also known as world model, mental model of the world or conceptual world model, available a priori and community-agreed upon (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Green et al., Citation2002; Laurini & Thompson, Citation1992; Matsuyama & Hwang, Citation1990; Sonka et al., Citation1994; Sowa, Citation2000).

By definition, a priori knowledge is any knowledge available before looking at sensory data/ observations/ true-facts, i.e. it is available in addition to (not as alternative to) sensory data or sensory data-derived information (Cherkassky & Mulier, Citation1998).

To the best understanding of the present authors, a “vertical”/ deep and narrow/ user- and application domain-specific stable/hard-to-vary conceptual/ mental/ perceptual model/ ontology of the 4D geospace-time physical world can be considered hierarchically built upon a more stable/harder-to-vary “horizontal”/ general-purpose/ user- and domain-independent commonsense knowledge base (Etzioni, Citation2017; Expert.ai, Citation2020; Thompson, Citation2018; U.S. DARPA – Defense Advanced Research Projects Agency, Citation2018; Wikipedia, Citation2021c), where the notion of commonsense knowledge can be one-to-one related to the notion of collective unconscious mind, which co-exists with the personal conscious mind in Jungian psychoanalysis. The former exists in all places, at all times, while the latter only exists in certain places at specific times (Hehe, Citation2021).

According to the existing literature, commonsense knowledge is referred to as “commonsense assumptions or default assumptions. It consists of facts about the everyday world that all humans are expected to know [without need for debate, such as ‘Lemons are sour’]” (U.S. DARPA – Defense Advanced Research Projects Agency, Citation2018). Oren Etzioni describes commonsense knowledge as all the weird, invisible, implied knowledge about the world that we all possess and take for granted, but rarely state out loud explicitly (Etzioni, Citation2017; Thompson, Citation2018). “It helps [humans] to solve [cognitive] problems in the face of incomplete information … As more knowledge of the world is discovered or learned over time, [the commonsense knowledge base of assumptions] can be revised, according to a truth maintenance process” (Wikipedia, Citation2021c). According to the U.S. Defense Advanced Research Projects Agency (DARPA), “possessing this essential background knowledge could significantly advance the symbiotic partnership between humans and machines [to be accomplished by a future Artificial General Intelligence, AGI, regarded by DARPA as human-level Artificial Intelligence, yet to be developed]. But articulating and encoding this obscure-but-pervasive capability is no easy feat … The absence of common sense prevents an intelligent system from understanding [the 4D geospace-time physical world of humans], communicating naturally with people, behaving reasonably in unforeseen situations, and learning from new experiences. This absence is perhaps the most significant barrier between the narrowly focused Artificial Intelligence applications [a.k.a. Artificial Narrow Intelligence, ANI = EquationEquation (6)(6)

(6) , refer to this Section below] we have today and the more general Artificial Intelligence applications [a.k.a. Artificial General Intelligence, AGI = EquationEquation (5)

(5)

(5) , refer to this Section below] we would like to create in the future” (U.S. DARPA – Defense Advanced Research Projects Agency, Citation2018).

One special case of present and/or future data and information processing systems (Sheth, Citation2015), required to link value-adding information, extracted from ever-varying sensory data, with a stable/hard-to-vary ontology of the 4D geospace-time physical world, which incorporates a commonsense knowledge base of assumptions, are computer vision (CV) systems, whose input data are a special case of sensory data, namely, imagery, synonym for 2D gridded data, belonging to a (2D) image-plane (Baraldi, Citation2017; Matsuyama & Hwang, Citation1990; Van der Meer & De Jong, Citation2011; Victor, Citation1994).

Encompassing both CV and biological vision, vision is an inherently ill-posed cognitive problem (sensory data interpretation task), whose goal is 4D geospace-time scene-from-(2D) image reconstruction and understanding (Baraldi, Citation2017; Matsuyama & Hwang, Citation1990), which requires, first, to cope with a data dimensionality reduction problem, from 4D of the world-domain to 2D of the image-domain, and, second, to fill the so-called semantic information gap, from ever-varying sensory data (numerical variables, specifically, sub-symbolic sensations) in the (2D) image-plane to stable percepts, equivalent to a discrete and finite set of concepts, provided with meaning/semantics (Ball, Citation2021; Capurro & Hjørland, Citation2003; Sowa, Citation2000) in a conceptual/ mental/ perceptual model/ ontology of the 3D observed scene (when acquisition time is fixed, in still imagery), which belongs to a 4D geospace-time physical world-domain (Augustin et al., Citation2018, Citation2019; Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2016, Citation2017; FFG – Austrian Research Promotion Agency, Citation2015, Citation2016, Citation2018, Citation2020; Matsuyama & Hwang, Citation1990; Sudmanns, Augustin, et al., Citation2021; Sudmanns et al., Citation2018; Tiede et al., Citation2017).

In a conceptual/ mental/ perceptual world model, dimensionality of the perceived 4D geospace-time real-world, observed and mapped (projected) onto a 2D image-plane by an imaging sensor involved with a cognitive visual process, is, apparently, 3D for geographic space (e.g. parameterized as latitude, longitude and height) (Hathaway, Citation2021) plus 1D for time (Ferreira et al., Citation2014; Fonseca et al., Citation2002; Galton & Mizoguchi, Citation2009; Maciel et al., Citation2018).

Actually, the 1D physical time dimension, synonym for 1D numerical variable of time, can be thought of/ perceived/ intellectually interpreted by humans (Buonomano, Citation2018; Harari, Citation2017; Hoffman, Citation2014; Otap, Citation2019). For example, in psychophysics, the well-known cold-water-through-time experiment conducted on human subjects apparently reveal that, in humans, two selves co-exist: every time our narrating self (verbal/symbolic left-brain hemisphere, which retrieves memories, provides meaning/semantics to experience/sensory data and makes decisions) evaluates/interprets our experiences sensed by the experiencing self (non-verbal/subsymbolic right-brain hemisphere, working as moment-to-moment sensoristic consciousness, which remembers nothing), the former is duration-blind. “Usually, it waves an experience using only peak moments and end results. The value of the whole experience is determined by averaging peaks with ends. It discounts [the experience] duration and adopts the peak-end rule” (Harari, Citation2017) (refer to this Section below).

Intuitively, a 1D physical time variable can be intellectually (mentally) discretized (categorized, stratified) into a discrete and finite categorical (nominal) variable (refer to the farther Subsection 3.3.1), consisting of three categorical values (categories, bins, strata, layers): past, present and future time, whose importance weights are different indeed. Typically, they are monotonically non-decreasing, from past to future. The future is never certain and can always change while the past remains the same. Actually, the past becomes increasingly fuzzy (foggy) and plastic in the memory through time. Recent series of experiments by psychologists show that people value events in the future more than they value equivalent events in the equidistant past, that they do so even when they consider this asymmetry irrational, and that one reason why they make these asymmetrical valuations is that contemplating future events produces greater affect than does contemplating past events (APS – Association for Psychological Science, Citation2008). While new studies in psychology explain why the future is more important than the past, as far as the power to create, the present is the most important time category. Actually, the future is collectively perceived as the most important time of the three time categories. For example, in recent years, the slogan of a political campaign of great success at national scale was “Hope” (for a better future). Intuitively, if I am subject to a dental treatment at present time, my endurance to an ever-varying sensation of pain benefits from my stable mental concept of hope in a better future.

From the previous paragraphs our conjecture is that, intuitively, whereas the sensory data dimensionality of an observed geospace-time scene-domain, belonging to the real (physical) world, is 3D for geographic space + 1D for time = 4D, the typical dimensionality of a stable/hard-to-vary (Matsuyama & Hwang, Citation1990; Sweeney, Citation2018a), but plastic (Baraldi & Alpaydin, Citation2002a, Citation2002b; Fritzke, Citation1997; Langley, Citation2012; Mermillod, Bugaiska, & Bonin, Citation2013; Wolski, Citation2020a, Citation2020b) conceptual/ mental/ perceptual model of the 4D geospace-time physical world is 7D overall, specifically:

3D for geographic space, e.g. Latitude, Longitude and Height. Plus

3D for time, partitioned for weighting purposes into past, present and future. Plus

1D for meaning-by-convention/semantics-in-context (Ball, Citation2021; Santoro et al., Citation2021; Sowa, Citation2000, p. 181), where meaning-by-convention/semantics-in-context is regarded as the conceptual/ mental/ intellectual meaning of observations of the real-world, provided by an interpretant. In agreement with philosophical hermeneutics (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020), observation is synonym for true-fact (Matsuyama & Hwang, Citation1990), sensory data, sensation (Matsuyama & Hwang, Citation1990) or quantitative/unequivocal information-as-thing (Capurro & Hjørland, Citation2003). In agreement with philosophical hermeneutics (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020) and semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e), meaning-by-convention/semantics-in-context is synonym for percept (as mental result or product of perceiving) (Matsuyama & Hwang, Citation1990) or inherently ill-posed/ qualitative/ equivocal information-as-data-interpretation (Capurro & Hjørland, Citation2003) (for further details about meaning-by-convention/semantics-in-context as inherently ill-posed sign/ symbol/ data interpretation by an interpretant, refer to this Section below).

For the sake of completeness, let us observe that the proposed conceptualization of a mental world model as 7-dimensional cognitive space can be regarded as a simplification of the 11th dimension (Wikipedia, Citation2021f), which is a characteristic of spacetime that has been proposed as a possible answer to questions that arise in superstring theory/supergravity (Smolin, Citation2003), which involves the existence of 10 dimensions of space and 1 dimension of time. We see only three spatial dimensions and one time dimension, and the remaining seven spatial dimensions are “compactified”. According to Joshua Hehe, “I have found that by applying Ed Witten’s M-theory cosmology (Witten, Citation2001), which requires an 11-dimensional spacetime continuum, it becomes apparent to me that the three dimensions of open external space [geographic space] contain [physical] bodies while the seven dimensions of closed (‘compactified’) internal space must contain [the mind, related to the notion of personal conscious mind in Jungian psychoanalysis, which exists in certain geographic spaces at specific times, refer to this Section above]. What this means is that, along with these physical and mental state-spaces, I personally think that time serves as the dimension of interaction, thereby solving the mind-body problem. So, assuming my hypothesis is tenable, then the mechanism by which the mind affects the body is temporal in nature. This is true for the body affecting the mind, as well. In other words, the 3-dimensional objects in physical [external] space (dimensions 1–3) and the 7-dimensional [‘compactified’] subjects in metaphysical [mental, internal] space (dimensions 5–11) interact by way of time (dimension 4)” (Hehe, Citation2021).

If the physical dimension of time is threefold in human perception, then a natural question to ask might be the following (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020).

In existing so-called Artificial Intelligence systems, expected to mimic and/or replace human behaviors and decision-making processes (EC - European Commission, Citation2019), what is the adopted model of time?

Interestingly enough, beyond their physical time, there is no (abstract, conceptual) model of time in typical artificial data and/or information processing systems, including so-called Artificial Intelligence systems.

The obvious observation (unquestionable true-fact) about these artificial systems/digital machines is that their being-in-the-world is by no means human-like (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020). Hence, a natural question to ask might be the following.

If they are not in-the-world (of humans), how can artificial systems/digital machines reach human-level intelligence (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Hassabis et al., Citation2017; Hawkins, Citation2021; Ideami, Citation2021; Jordan, Citation2018; Langley, Citation2012; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Perez, Citation2017; Practical AI, Citation2020; Russell & Norvig, Citation1995; Saba, Citation2020c; Thompson, Citation2018; U.S. DARPA - Defense Advanced Research Projects Agency, Citation2018; Varela et al., Citation1991)?

According to Hubert Dreyfus, they cannot (Dreyfus, Citation1965, Citation1991, Citation1992), unless embodied cognition is pursued (Fjelland, Citation2020; Ideami, Citation2021; Mitchell, Citation2021; Perez, Citation2017; Varela et al., Citation1991) in the multi-disciplinary domain of cognitive science (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis et al., Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro et al., Citation2021; Serra & Zanarini, Citation1990; Varela et al., Citation1991; Wikipedia, Citation2019).