ABSTRACT

Aiming at the convergence between Earth observation (EO) Big Data and Artificial General Intelligence (AGI), this paper consists of two parts. In the previous Part 1, existing EO optical sensory image-derived Level 2/Analysis Ready Data (ARD) products and processes are critically compared, to overcome their lack of harmonization/standardization/interoperability and suitability in a new notion of Space Economy 4.0. In the present Part 2, original contributions comprise, at the Marr five levels of system understanding: (1) An innovative, but realistic EO optical sensory image-derived semantics-enriched ARD co-product pair requirements specification. First, in the pursuit of third-level semantic/ontological interoperability, a novel ARD symbolic (categorical and semantic) co-product, known as Scene Classification Map (SCM), adopts an augmented Cloud versus Not-Cloud taxonomy, whose Not-Cloud class legend complies with the standard fully-nested Land Cover Classification System’s Dichotomous Phase taxonomy proposed by the United Nations Food and Agriculture Organization. Second, a novel ARD subsymbolic numerical co-product is proposed, specifically, a panchromatic or multi-spectral EO image whose dimensionless digital numbers are radiometrically calibrated into a physical unit of radiometric measure, ranging from top-of-atmosphere reflectance to surface reflectance and surface albedo values, in a five-stage radiometric correction sequence. (2) An original ARD process requirements specification. (3) An innovative ARD processing system design (architecture), where stepwise SCM generation and stepwise SCM-conditional EO optical image radiometric correction are alternated in sequence. (4) An original modular hierarchical hybrid (combined deductive and inductive) computer vision subsystem design, provided with feedback loops, where software solutions at the Marr two shallowest levels of system understanding, specifically, algorithm and implementation, are selected from the scientific literature, to benefit from their technology readiness level as proof of feasibility, required in addition to proven suitability. To be implemented in operational mode at the space segment and/or midstream segment by both public and private EO big data providers, the proposed EO optical sensory image-derived semantics-enriched ARD product-pair and process reference standard is highlighted as linchpin for success of a new notion of Space Economy 4.0.

KEYWORDS:

- Analysis Ready Data

- Artificial General Intelligence

- Artificial Narrow Intelligence

- big data

- cognitive science

- computer vision

- Earth observation

- essential climate variables

- Global Earth Observation System of (component) Systems

- inductive/ deductive/ hybrid inference

- Scene Classification Map

- Space Economy 4.0

- radiometric corrections of optical imagery from atmospheric, topographic, adjacency and bidirectional reflectance distribution function effects

- semantic content-based image retrieval

- 2D spatial topology-preserving/retinotopic image mapping

- world ontology (synonym for conceptual/ mental/ perceptual model of the world)

7. Introduction

This is the second part of a methodological paper, provided with a relevant survey value, consisting of two parts for the sake of readability. On the one hand, to stress the legacy of the present Part 2 from the previous Part 1 (Baraldi et al., Citation2022), sections, figures and tables in the present Part 2 are numbered in sequence to those in the Part 1. In more detail, in the present Part 2, numbered sections range from Section 7 to Section 10, figures are numbered from to and tables from to . On the other hand, the present Part 2 includes all its references in its own reference list. This means that a reference can be included in either one of the two reference lists or in both.

Figure 45. National Oceanic and Atmospheric Administration (NOAA)’s Geostationary Operational Environmental Satellites (GOES)-16 Advanced Baseline Imager (ABI), 16-band spectral resolution. Temporal resolution: 5–15 minutes. Spatial resolution: Band 1: 500 m (0.5 km); Bands 2 to 5: 1000 m (1 km); Bands 6–16: 2000 m (2 km). Table legend: Visible green/red channels: G, R. Near/Middle Infrared channels: NIR, MIR. Far/Thermal Infrared: TIR, see Figure 7 in the Part 1. Top-of-atmosphere reflectance, in range [0.0, 1.0]: TOARF. Kelvin degrees, in range ≥ 0: K°.

![Figure 45](/cms/asset/dbb1ba66-025c-40d6-ae74-6344b117f519/tbed_a_2017582_f0001_c.jpg)

Figure 46. Active fire land cover (LC) class-specific (LC class-conditional) sample of 311 pixels selected world-wide, National Oceanic and Atmospheric Administration (NOAA)’s Geostationary Operational Environmental Satellites (GOES)-16 imaging sensor’s bands 1 to 6 (see Figure 45), radiometrically calibrated into top-of-atmosphere (TOA) reflectance (TOARF) values, belonging to the physical range of change [0.0, 1.0] (Rocha de Carvalho, Citation2019). (a) Active fire LC class-specific family (envelope) of spectral signatures, including Band 4 – Cirrus band, consisting of, first, a multivariate shape information component and, second, a multivariate intensity information component, see Figure 30 in the Part 1. To be modeled as a hyperpolyhedron, belonging to a multi-spectral (MS), specifically, a 6-dimensional, color data hypercube in TOARF values, see Figure 29 in the Part 1. (b) Same as (a), without Band 4 – Cirrus band.

![Figure 46](/cms/asset/867c8492-64d4-4ccc-9b95-e78be3f47533/tbed_a_2017582_f0002_c.jpg)
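To make the notion of a class-specific envelope of spectral signatures more concrete, the following minimal sketch models the envelope as an axis-aligned hyperbox, i.e. per-band minimum and maximum values estimated from class-specific samples. This is only the simplest possible hyperpolyhedron and is an assumption made for illustration: it does not separate the shape and intensity information components mentioned in the caption, and the function names and sample values are hypothetical, not taken from the paper.

```python
from typing import List, Sequence, Tuple

Signature = Sequence[float]          # one TOARF value per spectral band
Envelope = List[Tuple[float, float]]  # per-band (min, max) bounds


def fit_envelope(samples: Sequence[Signature]) -> Envelope:
    """Estimate per-band (min, max) bounds from class-specific samples."""
    if not samples:
        raise ValueError("at least one sample signature is required")
    bands = list(zip(*samples))  # transpose: one tuple of values per band
    return [(min(band), max(band)) for band in bands]


def inside_envelope(envelope: Envelope, signature: Signature) -> bool:
    """True if the signature falls within the bounds in every band."""
    if len(envelope) != len(signature):
        raise ValueError("signature and envelope must have the same bands")
    return all(lo <= v <= hi for (lo, hi), v in zip(envelope, signature))


# Hypothetical 3-band TOARF samples (made-up values, for illustration only).
toy_samples = [
    (0.10, 0.20, 0.60),
    (0.12, 0.25, 0.70),
    (0.08, 0.22, 0.65),
]
toy_envelope = fit_envelope(toy_samples)
```

A pixel whose signature lies inside the hyperbox in every band is a candidate member of the class; a true hyperpolyhedron would typically impose additional inter-band constraints.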

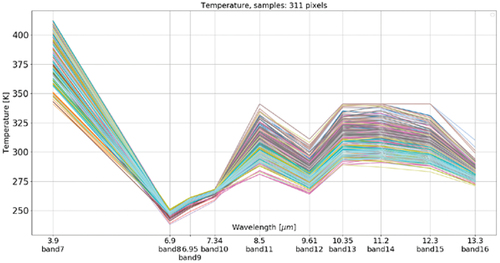

Figure 47. Active fire land cover (LC) class-specific (LC class-conditional) sample of 311 pixels selected world-wide, National Oceanic and Atmospheric Administration (NOAA)’s Geostationary Operational Environmental Satellites (GOES)-16 imaging sensor’s bands 7 to 16 (see Figure 45), radiometrically calibrated into top-of-atmosphere (TOA) Temperature (TOAT) values, where the adopted physical unit of measure is the Kelvin degree, whose domain of variation is ≥ 0 (Rocha de Carvalho, Citation2019). This Active fire LC class-specific family (envelope) of spectral signatures consists of, first, a multivariate shape information component and, second, a multivariate intensity information component, see Figure 30 in the Part 1. To be modeled as a hyperpolyhedron, belonging to a multi-spectral (MS), specifically, a 10-dimensional, color data hypercube in Kelvin degree values, see Figure 29 in the Part 1.

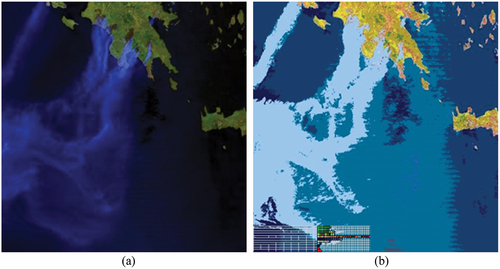

Figure 48. (a) Moderate Resolution Imaging Spectroradiometer (MODIS) image acquired on August 23, 2007, at 9.35 (CEST), covering Greece, depicted in false colors (monitor-typical channel R = MODIS band 6 in the Middle Infrared, channel G = MODIS band 2 in the Near Infrared, channel B = MODIS band 3 in the Visible Blue), spatial resolution: 1 km, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values, where an Environment for Visualizing Images (ENVI, by L3Harris Geospatial) standard histogram stretching was applied for visualization purposes. (b) Satellite Image Automatic Mapper (SIAM™)’s map of color names (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b), generated from the MODIS image shown in (a), consisting of 83 spectral categories, depicted in pseudo-colors, refer to the SIAM map legend shown in and .

Figure 49. At left, portion of the image shown in Figure 48(a): Moderate Resolution Imaging Spectroradiometer (MODIS) image acquired on August 23, 2007, at 9.35 (CEST), covering Greece, depicted in false colors (monitor-typical channel R = MODIS band 6 in the Middle Infrared, channel G = MODIS band 2 in the Near Infrared, channel B = MODIS band 3 in the Visible Blue), spatial resolution: 1 km, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values, where an Environment for Visualizing Images (ENVI, by L3Harris Geospatial) standard histogram stretching was applied for visualization purposes. At right, Map Legend – Red: fire pixel detected by both the traditional MODIS Fire Detection (MOFID) algorithm and the SOIL MAPPER-based Fire Detection (SOMAFID) algorithm, an expert system for thermal anomalies detection (Pellegrini, Natali, & Baraldi, Citation2008). White: fire pixel detected by SOMAFID, exclusively. Green: fire pixel detected by MOFID, exclusively.

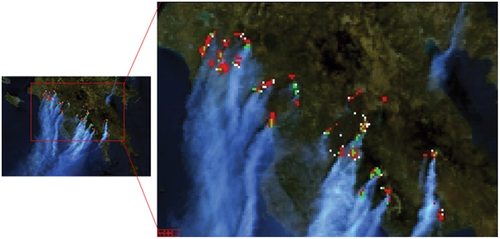

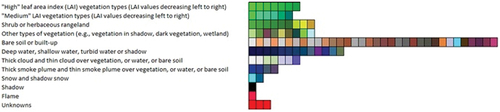

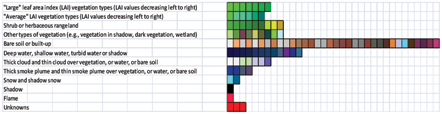
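The three-color map legend of Figure 49 amounts to a per-pixel comparison of two binary fire masks. The sketch below shows one way such an agreement map could be built; the function names and toy masks are hypothetical, and the MOFID and SOMAFID detectors themselves are not reproduced here.

```python
from collections import Counter
from typing import List, Optional, Sequence

# Legend labels follow the colors named in the Figure 49 caption.
BOTH = "red"             # detected by both MOFID and SOMAFID
SOMAFID_ONLY = "white"   # detected by SOMAFID, exclusively
MOFID_ONLY = "green"     # detected by MOFID, exclusively


def agreement_label(mofid_fire: bool, somafid_fire: bool) -> Optional[str]:
    """Map one pixel's pair of detections onto a legend color (None = no fire)."""
    if mofid_fire and somafid_fire:
        return BOTH
    if somafid_fire:
        return SOMAFID_ONLY
    if mofid_fire:
        return MOFID_ONLY
    return None


def agreement_map(
    mofid_mask: Sequence[Sequence[bool]],
    somafid_mask: Sequence[Sequence[bool]],
) -> List[List[Optional[str]]]:
    """Combine two same-sized binary fire masks into a legend-coded map."""
    if len(mofid_mask) != len(somafid_mask):
        raise ValueError("masks must have the same number of rows")
    result = []
    for mofid_row, somafid_row in zip(mofid_mask, somafid_mask):
        if len(mofid_row) != len(somafid_row):
            raise ValueError("masks must have the same number of columns")
        result.append(
            [agreement_label(m, s) for m, s in zip(mofid_row, somafid_row)]
        )
    return result


# Hypothetical 2 x 3 masks (made-up values, for illustration only).
toy_mofid = [[True, False, True], [False, False, False]]
toy_somafid = [[True, True, False], [False, True, False]]
toy_map = agreement_map(toy_mofid, toy_somafid)
toy_counts = Counter(label for row in toy_map for label in row)
```

Counting the labels, as in `toy_counts`, gives a quick summary of how often the two detectors agree or disagree.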

Figure 50. Legend (vocabulary) of hyperspectral color names adopted by the prior knowledge-based Landsat-like Satellite Image Automatic Mapper™ (L-SIAM™, release 88 version 7, see ) lightweight computer program for multi-spectral (MS) reflectance space hyperpolyhedralization (see Figures 29 and 30 in the Part 1), superpixel detection (see Figure 31 in the Part 1) and object-mean view (piecewise-constant input image approximation) quality assessment (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b). Notably, hyperspectral color names do not exist in human languages. Since humans employ a visible RGB imaging sensor as visual data source, human languages employ eleven basic color (BC) names, investigated by linguistics (Berlin & Kay, Citation1969), to partition an RGB data space into polyhedra, which are intuitive to think of and easy to visualize, see Figure 29 in the Part 1. On the contrary, hyperspectral color names (see Figure 30 in the Part 1) must be made up with (invented as) new words, not yet existing in human languages, to be community-agreed upon for correct (conventional) interpretation before use by members of a community (refer to Subsection 4.2 in the Part 1). For the sake of representation compactness, pseudo-colors associated with the 96 color names/spectral categories, corresponding to a partition of the MS reflectance hyperspace into a discrete and finite ensemble of mutually exclusive and totally exhaustive hyperpolyhedra, equivalent to 96 envelopes/families of spectral signatures (see Figures 29 and 30 in the Part 1), are gathered along the same row if they share the same parent spectral category (parent hyperpolyhedron) in the prior knowledge-based (static, non-adaptive to data) SIAM decision tree, e.g. “strong” vegetation, equivalent to a spectral end-member (Adams et al., Citation1995). The pseudo-color of a spectral category (color name) is chosen to mimic natural RGB colors of pixels belonging to that spectral category (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b).

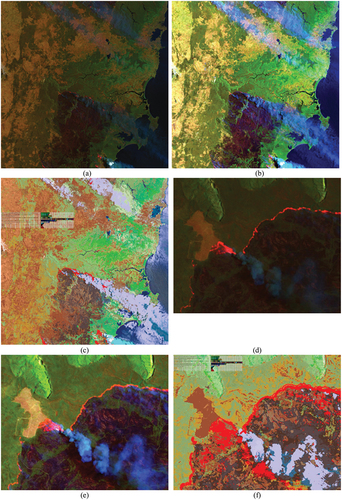

Figure 51. (a) False color quick-look Red-Green-Blue (RGB) Sentinel-2A Multi-Spectral Instrument (MSI) image Level-1C, calibrated into top-of-atmosphere reflectance (TOARF) values, depicting a surface area in SE Australia, acquired on 2019-12-31. In particular: R = Middle Infrared (MIR) = Band 11, G = Near Infrared (NIR) = Band 8, B = Visible Blue = Band 2. Spatial resolution: 10 m. No histogram stretching is applied for visualization purposes. (b) Same as Figure (a), followed by an Environment for Visualizing Images (ENVI, by L3Harris Geospatial) standard histogram stretching, applied for visualization purposes. (c) SIAM’s map of color names generated from Figure (a), consisting of 96 spectral categories (color names) depicted in pseudo-colors. Map of color names legend:

Figure 52. Multi-spectral (MS) signature in top-of-atmosphere reflectance (TOARF) values in range [0, 1], byte-coded into range {0, 255}, such that TOARF_byte = BYTE(TOARF_float * 255.0 + 0.5), where TOARF_byte ∈ {0, 255} is affected by a discretization (quantization) error = (TOARF_Max – TOARF_Min)/255 bins/2 (due to rounding to the closest integer, either above or below) = (1.0–0.0)/255.0/2.0 = 0.002 = 0.2%, to be considered negligible (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b) (refer to Subsection 3.3.2 in the Part 1). (a) Active fire samples (region of interest, ROI), MS signature in TOARF_byte values in range {0, 255}, Sentinel-2A Multi-Spectral Instrument (MSI) image Level-1C, of Australia, acquired on 2019-12-31 and shown in Figure 51, with bands 1 to 6 equivalent to Landsat-7 ETM+ bands 1 to 5 and 7, respectively. No thermal channel is available in Sentinel-2 MSI imagery, equivalent to the Landsat 7 ETM+ channels 61 and/or 62. (b) For comparison purposes with Figure (a), active fire samples, whose MS signature in TOARF_byte values belongs to range {0, 255}, are selected from a Landsat 7 ETM+ image of Senegal, Path: 203, Row: 051, acquisition date: 2001-01-11. Thermal band ETM62 in kelvin degrees in interval [−100, 155], linearly shifted into range {0, 255}. The two sensor-specific active fire spectral signatures in TOARF values shown in Figure (a) and Figure (b) should be compared with those collected by the geostationary GOES-16 ABI imaging sensor, shown in Figures 46 and 47.

![Figure 52](/cms/asset/51c644e8-a12a-42f6-9464-58ecd2c91320/tbed_a_2017582_f0008_c.jpg)
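The byte-coding rule and the quantization error quoted in the caption of Figure 52 can be checked numerically. The following is a minimal sketch, assuming that BYTE(·) truncates a non-negative float to an integer; the function names are hypothetical.

```python
def toarf_to_byte(toarf: float) -> int:
    """Byte-code a TOARF value in [0.0, 1.0] into an integer in 0..255."""
    if not 0.0 <= toarf <= 1.0:
        raise ValueError("TOARF is expected in the physical range [0.0, 1.0]")
    # Adding 0.5 before truncation rounds to the closest integer.
    return int(toarf * 255.0 + 0.5)


def byte_to_toarf(code: int) -> float:
    """Decode a byte-coded value back into an approximate TOARF value."""
    return code / 255.0


def max_roundtrip_error(steps: int = 10_000) -> float:
    """Largest absolute encode/decode error over a regular grid of TOARF values."""
    return max(
        abs(byte_to_toarf(toarf_to_byte(i / steps)) - i / steps)
        for i in range(steps + 1)
    )


# Half a quantization bin: (1.0 - 0.0) / 255 / 2, about 0.002 (0.2%).
HALF_BIN = (1.0 - 0.0) / 255.0 / 2.0
```

Over the whole [0.0, 1.0] range, the round-trip error never exceeds half a quantization bin, which is the 0.2% figure stated in the caption.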

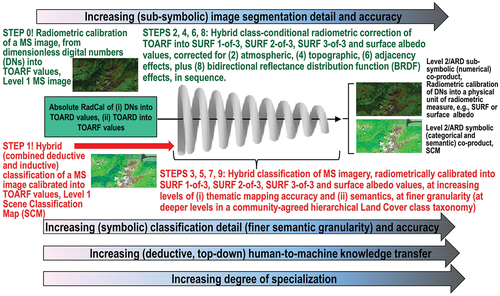

Figure 53. Ideal Earth observation (EO) optical sensory data-derived Level 2/Analysis Ready Data (ARD) product generation system design as a hierarchical alternating sequence of: (A) hybrid (combined deductive and inductive) class-conditional radiometric enhancement of EO Level 1 multi-spectral (MS) top-of-atmosphere reflectance (TOARF) values into EO Level 2/ARD surface reflectance (SURF) 1-of-3, SURF 2-of-3, SURF 3-of-3 and surface albedo values (EC – European Commission, Citation2020; Li et al., Citation2012; Malenovsky et al., Citation2007; Schaepman-Strub et al., Citation2006; Shuai et al., Citation2020) corrected in sequence for (4) atmospheric, (5) topographic, (6) adjacency and (7) bidirectional reflectance distribution function (BRDF) effects (Egorov, Roy, Zhang, Hansen, & Kommareddy, Citation2018), and (B) hybrid (combined deductive and inductive) classification of TOARF, SURF 1-of-3 to SURF 3-of-3 and surface albedo values into a stepwise sequence of EO Level 2/ARD scene classification maps (SCMs), whose legend (taxonomy) of community-agreed land cover (LC) class names, in addition to quality layers Cloud and Cloud–shadow, increases stepwise in mapping accuracy and/or in semantics, i.e. stepwise, it reaches deeper semantic levels/finer semantic granularities in a hierarchical LC class taxonomy, see Figure 3 in the Part 1. An instantiation of this EO image pre-processing system design for EO Level 2/ARD symbolic and subsymbolic co-products generation is depicted in Figure 54. In comparison with this desirable system design, the existing ESA Sen2Cor software system design (see Figure 38 in the Part 1) adopts no hierarchical alternating approach between MS image classification and MS image radiometric enhancement. In more detail, it accomplishes, first, one SCM generation from TOARF values based on a per-pixel (2D spatial context-insensitive) prior spectral knowledge-based decision-tree classifier (synonym for static/non-adaptive-to-data decision-tree for MS color naming, see Figures 29 and 30 in the Part 1). Next, in the ESA Sen2Cor workflow, a stratified/class-conditional MS image radiometric enhancement of TOARF into SURF 1-of-3 up to SURF 3-of-3 values corrected for atmospheric, topographic and adjacency effects is accomplished in sequence, stratified (class-conditioned) by (the haze map and cirrus map of) the same SCM product generated at first stage from TOARF values. In summary, the ESA Sen2Cor SCM co-product is TOARF-derived; hence, it is not “aligned” with data in the ESA Sen2Cor output MS image co-product, consisting of TOARF values radiometrically corrected into SURF 3-of-3 values (refer to Subsection 3.3.2 in the Part 1), where, typically, SURF ≠ TOARF holds, see Equation (9) in the Part 1.
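The control flow of the hierarchical alternating sequence described in the caption of Figure 53 can be sketched as a loop in which every class-conditional correction is followed by a re-classification of the corrected values, so that the final SCM is derived from the final numerical co-product. The code below is a toy illustration under strong assumptions: images are flat lists of reflectance values, and the classifier and correction functions are hypothetical placeholders, not the algorithms selected in the paper.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[float]    # toy stand-in: one reflectance value per pixel
ClassMap = List[str]   # toy stand-in for a Scene Classification Map (SCM)
Classifier = Callable[[Image], ClassMap]
Correction = Callable[[Image, ClassMap], Image]


def alternate_classification_and_correction(
    toarf: Image,
    classify: Classifier,
    corrections: Sequence[Tuple[str, Correction]],
) -> Tuple[Image, ClassMap, List[Tuple[str, ClassMap]]]:
    """Alternate SCM generation with SCM-conditional radiometric correction."""
    image = list(toarf)
    scm = classify(image)                 # first SCM, derived from TOARF
    history = [("TOARF", scm)]
    for stage_name, correct in corrections:
        image = correct(image, scm)       # class-conditional correction
        scm = classify(image)             # SCM re-derived from corrected data
        history.append((stage_name, scm))
    return image, scm, history


def toy_classify(image: Image) -> ClassMap:
    """Hypothetical two-class classifier with a made-up threshold."""
    return ["Cloud" if v > 0.6 else "Not-Cloud" for v in image]


def toy_atmospheric_correction(image: Image, scm: ClassMap) -> Image:
    """Hypothetical correction: rescale Not-Cloud pixels, leave Cloud untouched."""
    return [v if label == "Cloud" else v * 0.9 for v, label in zip(image, scm)]


surf, final_scm, history = alternate_classification_and_correction(
    [0.90, 0.30, 0.05],
    toy_classify,
    [("SURF 1-of-3", toy_atmospheric_correction)],
)
```

In a workflow where classification runs only once on TOARF values, the loop body would lack the re-classification step, which is the misalignment between co-products that the caption points out for Sen2Cor.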

Figure 54. The well-known engineering principles of modularity, hierarchy and regularity, recommended for system scalability (Lipson, Citation2007), characterize a single-date EO optical image processing system design for state-of-the-art multi-sensor EO data-derived Level 2/Analysis Ready Data (ARD) product generation, encompassing an ARD-specific symbolic co-product, known as Scene Classification Map (SCM) (refer to Subsection 8.1.1), and an ARD-specific numerical co-product (refer to Subsection 8.1.2) to be estimated alternately and hierarchically, see Figure 53. Stage 1: Absolute radiometric calibration (Cal) of dimensionless Digital Numbers (DNs) into top-of-atmosphere (TOA) radiance (TOARD) values ≥ 0. Stage 2: Cal of TOARD into TOA reflectance (TOARF) values ∈ [0.0, 1.0]. Stage 3: EO image classification by an automatic computer vision (CV) system, based on a convergence of spatial with colorimetric evidence (refer to Subsection 4.1 in the Part 1). Stage 4: Class-conditional/Stratified atmospheric correction of TOARF into surface reflectance (SURF) 1-of-3 values ∈ [0, 1]. Stage 5: Class-conditional/Stratified Topographic Correction (STRATCOR) of SURF 1-of-3 into SURF 2-of-3 values. Stage 6: Class-conditional/Stratified adjacency effect correction of SURF 2-of-3 into SURF 3-of-3 values. Stage 7: Class-conditional/Stratified bidirectional reflectance distribution function (BRDF) effect correction of SURF 3-of-3 values into surface albedo values (Bilal et al., Citation2019; EC – European Commission, Citation2020; Egorov, Roy, Zhang, Hansen, & Kommareddy, Citation2018; Franch et al., Citation2019; Li et al., Citation2012; Malenovsky et al., Citation2007; Qiu et al., Citation2019; Schaepman-Strub et al., Citation2006; Shuai et al., Citation2020). This original ARD system design is alternative to, for example, the EO image processing system design and implementation proposed in (Qiu et al., Citation2019) where, to augment the temporal consistency of USGS Landsat ARD imagery, neither topographic correction nor BRDF effect correction is land cover (LC) class-conditional. Notably, both SCM (referred to as land cover) and surface albedo (referred to as albedo) are included in the list of terrestrial Essential Climate Variables (ECVs) defined by the World Climate Organization (WCO) (Bojinski et al., Citation2014) (see Table 2 in the Part 1), which complies with the Group on Earth Observations (GEO)’s second implementation plan for years 2016–2025 of a new Global Earth Observation System of (component) Systems (GEOSS) as expert EO data-derived information and knowledge system (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015, Citation2020; Santoro et al., Citation2017), see Figure 1 in the Part 1.

![Figure 54](/cms/asset/e0975773-9d6d-4c18-a2da-1a711c614766/tbed_a_2017582_f0010_c.jpg)
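Stages 1 and 2 of Figure 54 can be illustrated with the linear DN-to-radiance model and the TOA reflectance formula commonly used for optical sensors, ρ = π · L · d² / (ESUN · cos θs). This is a hedged sketch, not the paper's implementation: the function names are hypothetical, and the gain, offset, band-specific exo-atmospheric solar irradiance (ESUN), Earth–Sun distance and Sun zenith angle are assumed to come from the image metadata.

```python
import math


def dn_to_toard(dn: float, gain: float, offset: float) -> float:
    """Stage 1: linear calibration of a digital number into TOA radiance."""
    return gain * dn + offset


def toard_to_toarf(
    radiance: float,
    esun: float,
    sun_zenith_deg: float,
    earth_sun_distance_au: float = 1.0,
) -> float:
    """Stage 2: TOA radiance into TOA reflectance (dimensionless).

    radiance and esun must share the same spectral units, e.g.
    W / (m^2 sr um) and W / (m^2 um), respectively.
    """
    cos_zenith = math.cos(math.radians(sun_zenith_deg))
    if cos_zenith <= 0.0:
        raise ValueError("the Sun must be above the horizon")
    return (
        math.pi * radiance * earth_sun_distance_au ** 2 / (esun * cos_zenith)
    )


# Made-up calibration coefficients and geometry, for illustration only.
toy_radiance = dn_to_toard(dn=100, gain=0.5, offset=1.0)
toy_toarf = toard_to_toarf(toy_radiance, esun=1500.0, sun_zenith_deg=30.0)
```

With real metadata, a resulting TOARF value is expected to fall in the physical range [0.0, 1.0]; values outside that range usually hint at a calibration or metadata problem.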

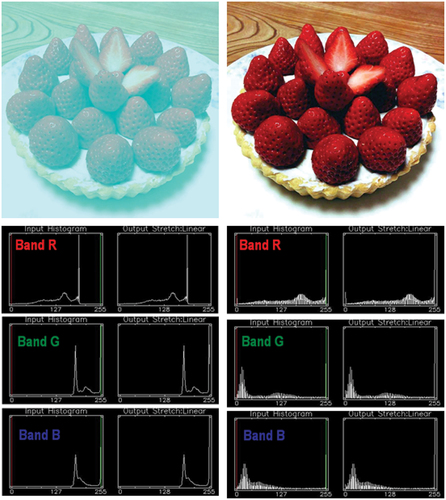

Figure 55. Top left: Red-Green-Blue (RGB) image, source: Akiyoshi Kitaoka @AkiyoshiKitaoka, web page: http://nymag.com/selectall/2017/02/strawberries-look-red-without-red-pixels-color-constancy.html. Strawberries appear to be reddish, though the pixels are not, refer to the monitor-typical RGB input-output histograms shown at bottom left. No histogram stretching is applied for visualization purposes, see the monitor-typical RGB input-output histograms shown at bottom left. Top right: Output of the self-organizing statistical model-based color constancy algorithm, as reported in (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2017; Vo et al., Citation2016), input with the image shown top left. No histogram stretching is applied for visualization purposes, see the monitor-typical RGB input-output histograms shown at bottom right.

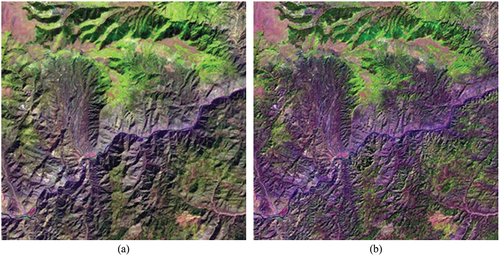

Figure 56. Fully automated two-stage stratified (class-conditional) topographic correction (STRATCOR) (Baraldi et al., Citation2010). (a) Zoomed area of a Landsat 7 ETM+ image of Colorado, USA (path: 128, row: 021, acquisition date: 2000-08-09), depicted in false colors (R: band ETM5, G: band ETM4, B: band ETM1), 30 m resolution, radiometrically calibrated into TOARF values. (b) STRATCOR applied to the Landsat image shown at left, with data stratification based on the Shuttle Radar Topography Mission (SRTM) Digital Elevation Model (DEM) and an 18-class preliminary spectral map generated at coarse granularity by the 7-band Landsat-like Satellite Image Automatic Mapper (L-SIAM) software toolbox (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b), see .

Figure 57. (a) Meteosat Second Generation (MSG) Spinning Enhanced Visible and InfraRed Imager (SEVIRI) image acquired on 2012-05-30, radiometrically calibrated into TOARF values and depicted in false colors (R: band MIR, G: band NIR, B: band Blue), spatial resolution: 3 km. No histogram stretching is applied for visualization purposes. (b) Advanced Very High Resolution Radiometer (AVHRR)-like SIAM (AV-SIAM™, release 88 version 7) hyperpolyhedralization of the MS reflectance hyperspace and prior knowledge-based mapping of the input MS image into a vocabulary of hypercolor names. The AV-SIAM map legend, consisting of 83 spectral categories (see ), is depicted in pseudo-colors, similar to those shown in :

Figure 58. (a) Airborne 10 cm resolution true-color Red-Green-Blue (RGB) orthophoto of Trento, Italy, 4017 × 4096 pixels in size × 3 bands, acquired in 2014 and provided with no radiometric calibration metadata file. No histogram stretching is applied for visualization purposes. (b) Same RGB orthophoto, subject to self-organizing statistical color constancy (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2017; Vo et al., Citation2016). No histogram stretching is applied for visualization purposes. (c) RGBIAM’s polyhedralization of the RGB color space and prior knowledge-based map of RGB color names generated from the RGB image, pre-processed by a color constancy algorithm (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2017; Vo et al., Citation2016). The RGBIAM legend of RGB color names, consisting of 50 spectral categories at fine discretization granularity, is depicted in pseudo-colors. Map legend, shown in :

Figure 59. Legend (vocabulary) of RGB color names adopted by the prior knowledge-based Red-Green-Blue (RGB) Image Automatic Mapper™ (RGBIAM™, release 6 version 2) lightweight computer program for RGB data space polyhedralization (see Figure 29 in the Part 1), superpixel detection (see Figure 31 in the Part 1) and object-mean view (piecewise-constant input image approximation) quality assessment (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b). Before running RGBIAM to map onto a deterministic RGB color name each pixel value of an input 3-band RGB image, encoded in either true- or false-colors, the RGB image should be pre-processed (enhanced) for normalization/harmonization/calibration (Cal) purposes, e.g. by means of a color constancy algorithm (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2017; Boynton, Citation1990; Finlayson et al., Citation2001; Gevers et al., Citation2012; Gijsenij et al., Citation2010; Vo et al., Citation2016), see and . The RGBIAM implementation (release 6 version 2) adopts 12 basic color (BC) names (polyhedra) as coarse RGB color space partitioning, consisting of the eleven BC names adopted by human languages (Berlin & Kay, Citation1969), specifically, black, white, gray, red, orange, yellow, green, blue, purple, pink and brown (refer to Subsection 4.2 in the Part 1), plus category “Unknowns” (refer to Section 2 in the Part 1), and 50 color names as fine color quantization (discretization) granularity, featuring parent-child relationships from the coarse to the fine quantization level. For the sake of representation compactness, pseudo-colors associated with the 50 color names (spectral categories, corresponding to a mutually exclusive and totally exhaustive partition of the RGB data space into polyhedra, see Figure 29 in the Part 1) are gathered along the same row if they share the same parent spectral category (parent polyhedron) in the prior knowledge-based (static, non-adaptive to data) RGBIAM decision tree. The pseudo-color of a spectral category (color name) is chosen to mimic natural RGB colors of pixels belonging to that spectral category (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi et al., Citation2017; Vo et al., Citation2016).

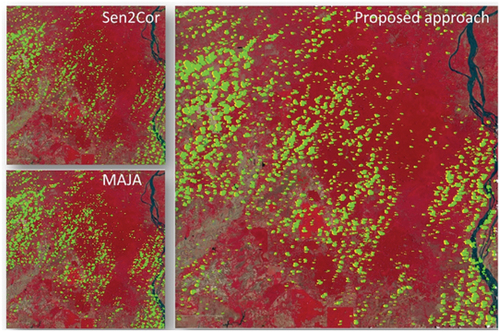

Figure 60. First test image of a Cambodia site (Baraldi & Tiede, Citation2018b). Final 3-level Cloud/Cloud–shadow/Others maps generated by the three algorithms under comparison, specifically, the single-date AutoCloud+ (Baraldi & Tiede, Citation2018a, Citation2018b), the single-date Sen2Cor (DLR - Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA - European Space Agency, Citation2015) and the multi-date MAJA (Hagolle et al., Citation2017; Main-Knorn et al., Citation2018), where class “Others” is overlaid with the input Sentinel-2A Multi-Spectral Instrument (MSI) Level 1C image, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values and depicted in false-colors: monitor-typical Red-Green-Blue (RGB) channels are selected as R = Near Infrared (NIR) channel, G = Visible Red channel, B = Visible Green channel. Histogram stretching is applied for visualization purposes. Output class Cloud is shown in a green pseudo-color, class Cloud–shadow in a yellow pseudo-color.

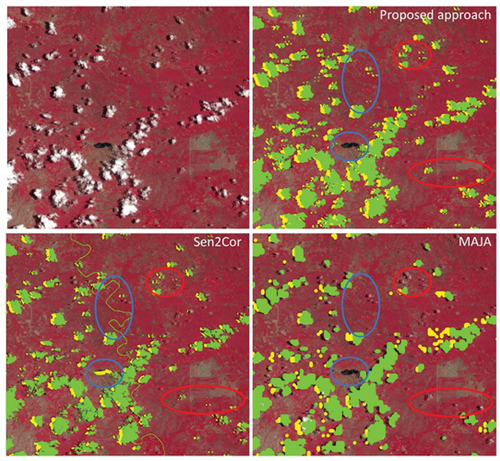

Figure 61. First test image of a Cambodia site (Baraldi & Tiede, Citation2018b). Zoom-in of the final 3-level Cloud/Cloud–shadow/Others maps generated by the three algorithms under comparison, where class “Others” is overlaid with the input Sentinel-2A Multi-Spectral Instrument (MSI) Level 1C image, radiometrically calibrated into top-of-atmosphere (TOA) reflectance (TOARF) values and depicted in false-colors: monitor-typical RGB channels are selected as R = Near InfraRed (NIR) channel, G = Visible Red channel, B = Visible Green channel. Histogram stretching is applied for visualization purposes. Output class Cloud is shown in a green pseudo-color, class Cloud–shadow in a yellow pseudo-color. Based on qualitative photointerpretation, the single-date Sen2Cor algorithm (DLR - Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA - European Space Agency, Citation2015) appears to underestimate Cloud–shadows, although some Water areas are misclassified as Cloud–shadow. In addition, some River/river beds are misclassified as Cloud. These two cases of Cloud false positives and Cloud–shadow false positives are highlighted in blue circles. The multi-date MAJA algorithm (Hagolle et al., Citation2017; Main-Knorn et al., Citation2018) overlooks some Cloud instances small in size (in relative terms), as highlighted in red circles.

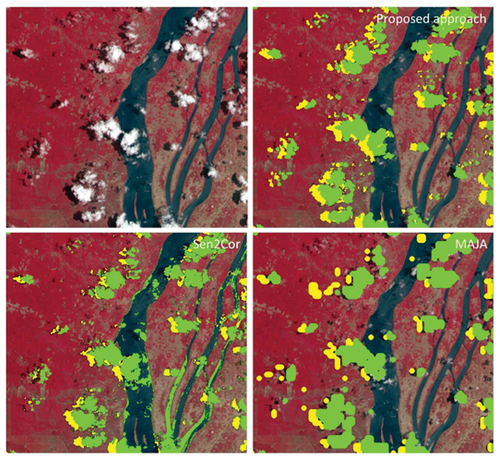

Figure 62. First test image of a Cambodia site (Baraldi & Tiede, Citation2018b). Extra zoom-in of the final 3-level Cloud/Cloud–shadow/Others maps generated by the three algorithms under comparison, where class “Others” is overlaid with the input Sentinel-2A Multi-Spectral Instrument (MSI) Level 1C image, radiometrically calibrated into top-of-atmosphere (TOA) reflectance (TOARF) values and depicted in false colors: monitor-typical RGB channels are selected as R = Near InfraRed (NIR) channel, G = Visible Red channel, B = Visible Green channel. Histogram stretching is applied for visualization purposes. Output class Cloud is shown in a green pseudo-color, class Cloud–shadow in a yellow pseudo-color. Based on qualitative photointerpretation, the single-date Sen2Cor algorithm (DLR - Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA - European Space Agency, Citation2015) appears to underestimate class Cloud–shadow, although some detected Cloud–shadow instances are false positives because of misclassified Water areas. In addition, some River/river beds are misclassified as Cloud. To reduce false positives in Cloud–shadow detection, MAJA adopts a multi-date approach. Nevertheless, the multi-date MAJA algorithm (Hagolle et al., Citation2017; Main-Knorn et al., Citation2018) misses some instances of Cloud-over-water. Overall, MAJA’s Cloud and Cloud–shadow results look more “blocky” (affected by artifacts in localizing true boundaries of target image-objects).

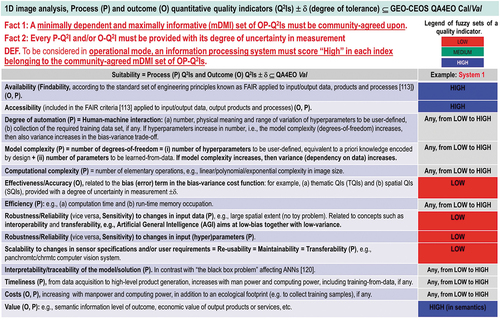

Figure 63. Typical values of a minimally dependent maximally informative (mDMI) set of outcome and process quantitative quality indicators (OP-Q2Is) featured by a pixel-based (2D spatial context-insensitive) 1D image analysis algorithm (see Figure 18 in the Part 1) for Cloud and Cloud-shadow detection in MS imagery (see Figure 22 in the Part 1), such as Fmask (Zhu et al., Citation2015), ESA Sen2Cor (DLR – Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA – European Space Agency, Citation2015) and CNES-DLR MAJA (Hagolle et al., Citation2017; Main-Knorn et al., Citation2018) (see ), considered a mandatory EO image understanding (classification) task for quality layers detection in Analysis Ready Data (ARD) workflows.

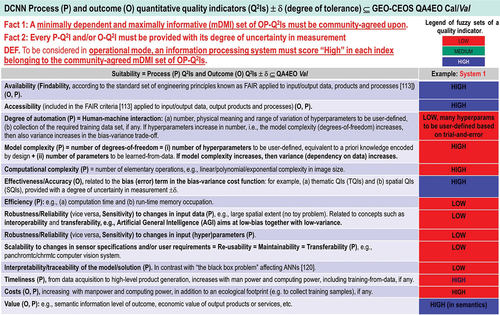

Figure 64. Typical values of a minimally dependent maximally informative (mDMI) set of outcome and process quantitative quality indicators (OP-Q2Is) featured by a deep convolutional neural network (DCNN) (Cimpoi et al., Citation2014) involved with Cloud and Cloud-shadow detection in MS imagery (Bartoš, Citation2017; EOportal, Citation2020; Wieland et al., Citation2019) (see ), considered a mandatory EO image understanding (classification) task for quality layers detection in Analysis Ready Data (ARD) workflows.

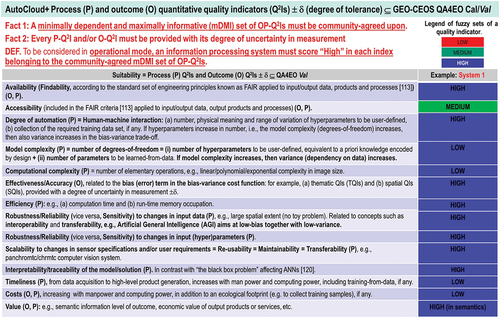

Figure 65. Expected values of a minimally dependent maximally informative (mDMI) set of outcome and process quantitative quality indicators (OP-Q2Is) featured by a “universal” multi-sensor automatic AutoCloud+ algorithm for Cloud and Cloud-shadow detection in multi-spectral (MS) imagery (Baraldi & Tiede, Citation2018a, Citation2018b) (see ), considered a mandatory EO image understanding (classification) task for quality layers detection in Analysis Ready Data (ARD) workflows. By scoring “high” in each indicator of an mDMI set of OP-Q2Is, a “universal” multi-sensor automated AutoCloud+ algorithm for Cloud and Cloud-shadow quality layers detection in MS imagery is expected to be considered in operational mode (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b), as necessary-but-not-sufficient precondition of ARD workflows.

Table 4. The Satellite Image Automatic Mapper™ (SIAM™) lightweight computer program (release 88 version 7) for multi-spectral (MS) reflectance space hyperpolyhedralization (see Figures 29 and 30 in the Part 1), superpixel detection (see Figure 31 in the Part 1) and object-mean view quality assessment (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b). SIAM is an Earth observation (EO) system of systems scalable to any past, present or future MS imaging sensor, provided with radiometric calibration metadata parameters for radiometric calibration (Cal) of digital numbers into top-of-atmosphere reflectance (TOARF), surface reflectance (SURF) or surface albedo values, where relationship ‘TOARF ⊇ SURF ⊇ Surface albedo’ = Equation (8) in the Part 1 holds. SIAM is an expert system (deductive/ top-down/ prior knowledge-based static decision tree, non-adaptive to input data) for mapping each color value in the MS reflectance space into a color name belonging to the SIAM vocabulary of hypercolor names. It consists of the following subsystems. (i) 7-band Landsat-like SIAM™ (L-SIAM™), with Landsat-like input channels Blue (B) = Blue 0.45÷0.50 μm, Green (G) = Green 0.54÷0.58 μm, Red (R) = Red 0.65÷0.68 μm, Near Infrared (NIR) = NIR 0.78÷0.90 μm, Middle Infrared 1 (MIR1) = MIR 1.57÷1.65 μm, Middle Infrared 2 (MIR2) = MIR 2.08÷2.35 μm, and Thermal Infrared (TIR) = TIR 10.4÷12.5 μm, see Figure 7 and Table 3 in the Part 1. (ii) 4-band (channels G, R, NIR, MIR1) SPOT-like SIAM™ (S-SIAM™). (iii) 4-band (channels R, NIR, MIR1, and TIR) Advanced Very High Resolution Radiometer (AVHRR)-like SIAM™ (AV-SIAM™). (iv) 4-band (channels B, G, R, and NIR) QuickBird-like SIAM™ (Q-SIAM™) (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2006, Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b).
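To make the four channel configurations listed in this caption easier to compare, the following minimal sketch encodes them as a lookup table and lists which subsystems a given sensor could feed. The channel sets are taken from the caption. The function name and the rule of preferring the subsystem with more input channels are hypothetical, since the caption does not describe how SIAM itself selects a subsystem.

```python
# Channel sets are taken from the table caption; everything else is illustrative.
SIAM_SUBSYSTEMS = {
    "L-SIAM": ("B", "G", "R", "NIR", "MIR1", "MIR2", "TIR"),
    "S-SIAM": ("G", "R", "NIR", "MIR1"),
    "AV-SIAM": ("R", "NIR", "MIR1", "TIR"),
    "Q-SIAM": ("B", "G", "R", "NIR"),
}


def candidate_subsystems(available_channels):
    """Return subsystems whose input channels are all available, richest first."""
    available = set(available_channels)
    matches = [
        name for name, chans in SIAM_SUBSYSTEMS.items() if set(chans) <= available
    ]
    # Assumed tie-break: prefer the subsystem using more channels (sort is stable).
    return sorted(matches, key=lambda name: -len(SIAM_SUBSYSTEMS[name]))


# A sensor with only visible and NIR channels matches the QuickBird-like subsystem.
vnir_only = candidate_subsystems(["B", "G", "R", "NIR"])
# A Landsat-like sensor matches all four; the 7-band subsystem is listed first.
landsat_like = candidate_subsystems(["B", "G", "R", "NIR", "MIR1", "MIR2", "TIR"])
```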

Table 5. Conceptual/methodological comparison of existing algorithms for Cloud and Cloud-shadow quality layers detection in spaceborne MS imagery for EO Level 2/ARD-specific symbolic output co-product generation.

The preceding Part 1 (Baraldi et al., Citation2022) of the present Part 2 offers relevant survey value in the multi-disciplinary domain of cognitive science (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis, Kumaran, Summerfield, & Botvinick, Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro, Lampinen, Mathewson, Lillicrap, & Raposo, Citation2021; Serra & Zanarini, Citation1990; Varela, Thompson, & Rosch, Citation1991; Wikipedia, Citation2019), encompassing disciplines like philosophy (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Fodor, Citation1998; Peirce, Citation1994), semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e), linguistics (Ball, Citation2021; Berlin & Kay, Citation1969; Firth, Citation1962; Rescorla, Citation2019; Saba, Citation2020a, Citation2020c), anthropology (Harari, Citation2011, Citation2017; Wikipedia, Citation2019), neuroscience (Barrett, Citation2017; Buonomano, Citation2018; Cepelewicz, Citation2021; Daniels, Citation2021; Hathaway, Citation2021; Hawkins, Citation2021; Hawkins, Ahmad, & Cui, Citation2017; Kaufman, Churchland, & Ryu et al., Citation2014; Kosslyn, Citation1994; Libby & Buschman, Citation2021; Mason & Kandel, Citation1991; Salinas, Citation2021a, Citation2021b; Slotnick, Thompson, & Kosslyn, Citation2005; Zador, Citation2019), which is focused on the study of the brain machinery in the mind-brain problem (Hassabis et al., Citation2017; Hoffman, Citation2008; Serra & Zanarini, Citation1990; Westphal, Citation2016), computational neuroscience (Beniaguev, Segev, & London, Citation2021; DiCarlo, Citation2017; Gidon et al., Citation2020; Heitger, Rosenthaler, von der Heydt, Peterhans, & Kubler, Citation1992; Pessoa, Citation1996; Rodrigues & du Buf, Citation2009), psychophysics (Benavente, Vanrell, & Baldrich, Citation2008; Bowers & Davis, Citation2012; Griffin, Citation2006; Lähteenlahti, Citation2021; Mermillod, Bugaiska, & Bonin, Citation2013; Parraga, Benavente, Vanrell, & Baldrich, Citation2009; Vecera & Farah, Citation1997), psychology (APS, Citation2008; Hehe, Citation2021), computer science, formal logic (Laurini & Thompson, Citation1992; Sowa, Citation2000), mathematics, physics, statistics and (the meta-science of) engineering (Langley, Citation2012; Santoro et al., Citation2021; Wikipedia, Citation2019), which includes knowledge engineering (Laurini & Thompson, Citation1992) and geographic information science (GIScience) (Buyong, Citation2007; Couclelis, Citation2010, Citation2012; Ferreira, Camara, & Monteiro, Citation2014; Fonseca, Egenhofer, Agouris, & Camara, Citation2002; Goodchild, Yuan, & Cova, Citation2007; Hitzler et al., Citation2012; Hu, Citation2017; Kuhn, Citation2005; Longley, Goodchild, Maguire, & Rhind, Citation2005; Maciel et al., Citation2018; Sheth, Citation2015; Sonka, Hlavac, & Boyle, Citation1994; Stock, Hobona, Granell, & Jackson, Citation2011). In the preceding Part 1, the multi-disciplinary domain of cognitive science is regarded as background knowledge of the remote sensing (RS) meta-science (science of sciences) community (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Couclelis, Citation2012), see Figure 11(a) in the Part 1.

In agreement with the intergovernmental Group on Earth Observations (GEO)-Committee on Earth Observation Satellites (CEOS) Quality Assurance Framework for Earth Observation (QA4EO) Calibration/Validation (Cal/Val) requirements (Baraldi, Citation2009, Citation2017, Citation2019b; Baraldi, Humber, Tiede, & Lang, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; GEO-CEOS, Citation2010; Schaepman-Strub, Schaepman, Painter, Dangel, & Martonchik, Citation2006; Shuai et al., Citation2020) (refer to Section 2 in the Part 1), overarching goal of the RS meta-science is to transform multi-source Earth observation (EO) big sensory data, characterized by the six Vs of volume, variety, veracity, velocity, volatility and value (Metternicht, Mueller, & Lucas, Citation2020), into value-adding information products and services (VAPS) in operational mode, suitable for coping with the United Nations (UN) Sustainable Development Goals (SDGs) from year 2015 to 2030 (UN, Citation2021), at regional to global spatial extents, in a new era of Space Economy 4.0 (Mazzucato & Robinson, Citation2017), see Figure 10 in the Part 1.

In 2017, a new notion of Space Economy 4.0 was proposed by Mazzucato and Robinson to the European Space Agency (ESA) (Mazzucato & Robinson, Citation2017). According to these authors, in the “seamless innovation chain” required by a new Space Economy 4.0 (see Figure 10 in the Part 1), first-stage “horizontal” (enabling) capacity building, coping with background conditions necessary to specialization, is preliminary to second-stage “vertical” (deep and narrow, specialized) policies, suitable for coping with a potentially huge worldwide market of institutional and private end-users of space technology, encompassing grand societal challenges, such as the UN SDGs from year 2015 to 2030 (UN – United Nations, Department of Economic and Social Affairs, Citation2021).

In the preceding Part 1, the first original contribution is formalization of semantic relationship

‘Deep Convolutional Neural Network (DCNN) ⊂ Deep Learning-from-data (DL) ⊂ Machine Learning-from-data (ML) → Artificial Weak/Narrow Intelligence (ANI) → Artificial General/Strong Intelligence (AGI) ⊃ Computer Vision (CV) ⊃ Earth Observation Image Understanding (EO-IU) ⊃ Analysis Ready Data (ARD)’ = Eq. (5) in the Part 1,

where semantic relationship ‘ARD ⊂ EO-IU ⊂ CV ⊂ AGI ⊂ Cognitive science’ = Eq. (3) in the Part 1 holds (see Figure 11(a) in the Part 1), while ANI is formulated as

‘ANI = [DCNN ⊂ DL ⊂ ML logical-OR Traditional deductive Artificial Intelligence (static expert systems, non-adaptive to data, also known as Good Old-Fashioned Artificial Intelligence, GOFAI)]’ = Eq. (6) in the Part 1.

In these semantic relationships, adopted herein as working hypotheses (postulates, axioms), symbol ‘→’ denotes semantic relationship part-of (without inheritance), pointing from the supplier to the client, not to be confused with semantic relationship subset-of, ‘⊃’, meaning specialization with inheritance from the superset (at left) to the subset (at right), in agreement with symbols adopted by the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software (Fowler, Citation2003), see Figure 11(a) and Figure 11(b) in the Part 1.
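The difference between relationship subset-of (with inheritance) and relationship part-of (without inheritance) can be illustrated with a minimal object-oriented sketch. The class names mirror the symbols of Equations (5) and (6) in the Part 1. Mapping subset-of onto class inheritance and part-of onto object composition is an illustrative assumption, and the method `learns_from_data` is hypothetical.

```python
class ML:
    """Machine Learning-from-data."""

    def learns_from_data(self):
        return True


class DL(ML):
    """Deep Learning: subset-of ML, so it inherits the properties of ML."""


class DCNN(DL):
    """Deep Convolutional Neural Network: subset-of DL."""


class ANI:
    """Artificial Narrow Intelligence: ML is a part (supplier), not a superclass."""

    def __init__(self, parts):
        self.parts = list(parts)


class AGI:
    """Artificial General Intelligence: ANI is part-of AGI, without inheritance."""

    def __init__(self, parts):
        self.parts = list(parts)


ani = ANI(parts=[DCNN()])
agi = AGI(parts=[ani])
```

In this sketch a DCNN instance is also an ML instance, whereas an AGI instance merely contains an ANI instance and inherits none of its component properties, which is the distinction drawn between symbols ‘⊃’ and ‘→’.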

In these original equations, buzzword “Artificial Intelligence”, increasingly adopted by the scientific community together with the general public in recent years, is disambiguated into the two better-constrained (better-defined, to be better understood) notions of ANI and AGI, where ANI = Equation (6) in the Part 1 is regarded as part-without-inheritance-of AGI, such that semantic relationship ‘AGI ← ANI ← ML ⊃ DL ⊃ DCNN’ = Equation (5) in the Part 1 holds, in agreement with the notions of AGI ≠ ANI increasingly promoted by relevant portions of the existing literature (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Jordan, Citation2018; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Practical AI, Citation2020; Saba, Citation2020c; Santoro et al., Citation2021; Sweeney, Citation2018a; Thompson, Citation2018; Wolski, Citation2020a, Citation2020b; Zawadzki, Citation2021).

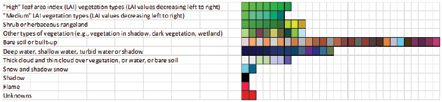

Worth mentioning, AGI = Equation (5) and ANI = Equation (6) in the Part 1 are inconsistent with (alternative to) relationship (Claire, Citation2019; Copeland, Citation2016)

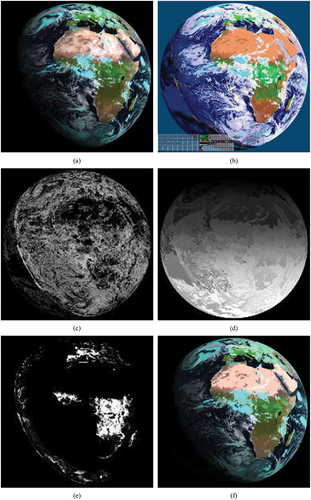

‘A(G/N)I ⊃ ML ⊃ DL ⊃ DCNN’ = Equation (7) in the Part 1,

adopted as postulate by increasing portions of the ML, CV and RS meta-sciences in recent years, see Figure 11(c) in the Part 1. For example, in (Copeland, Citation2016), it is reported that: “since an early flush of optimism in the 1950s, smaller subsets of Artificial Intelligence – first machine learning, then deep learning, a subset of machine learning – have created even larger disruptions.” Moreover, starting from 2012, when the ‘DCNN ⊂ DL ⊂ ML’ paradigm was successfully proposed by the CV community (Krizhevsky, Sutskever, & Hinton, Citation2012), DL enthusiasts, practitioners and scientists have been promoting DL as synonym for A(G/N)I (Claire, Citation2019; Copeland, Citation2016), see Figure 11(c) in the Part 1.

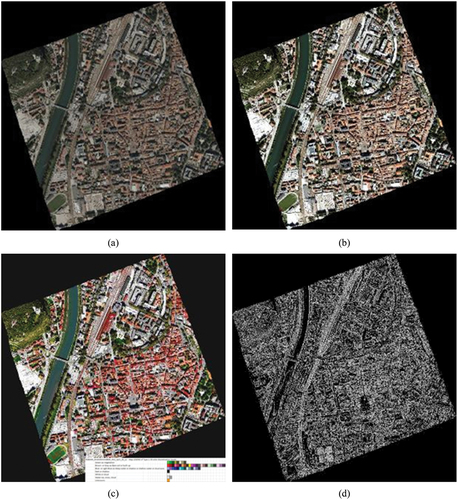

Alternative to Equation (7) in the Part 1, the two semantic relationships adopted herein as working hypotheses, specifically, AGI = Equation (5) and ANI = Equation (6) in the Part 1, are endorsed by the European Commission (EC), when it acknowledges (at least in words) that “currently deployed Artificial Intelligence systems are examples of ANI” (EC – European Commission, Citation2019), in agreement with relevant portions of the scientific literature (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Jordan, Citation2018; Mindfire Foundation, Citation2018; Practical AI, Citation2020; Romero, Citation2021; Santoro et al., Citation2021; Sweeney, Citation2018a; Wolski, Citation2020a, Citation2020b; Zawadzki, Citation2021).

Moreover, in spite of its recent popularity, Equation (7) in the Part 1 is in contrast with the early days of the ML meta-science, when scientists never confused ML with A(G/N)I, i.e. relationship ‘ML ⊂ A(G/N)I’ was never promoted (Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Geman, Bienenstock, & Doursat, Citation1992; Mahadevan, Citation2019; Russell & Norvig, Citation1995; Wolpert, Citation1996; Wolpert & Macready, Citation1997).

Increasing disillusionment on ‘DL ⊂ ML → ANI → AGI’ mainly stems from portions of the ML community (Bartoš, Citation2017; Bills, Citation2020; Bourdakos, Citation2017; Brendel, Citation2019; Brendel & Bethge, Citation2019; Chollet, Citation2019; Crawford & Paglen, Citation2019; Daniels, Citation2021; Deutsch, Citation2012; Dreyfus, Citation1965, Citation1991, Citation1992; Etzioni, Citation2017; Expert.ai, Citation2020; Fjelland, Citation2020; Geman et al., Citation1992; Gonfalonieri, Citation2020; Hao, Citation2019; Hassabis et al., Citation2017; Hawkins, Citation2021; Ideami, Citation2021; Jordan, Citation2018; Langley, Citation2012; LeVine, Citation2017; Lohr, Citation2018; Lukianoff, Citation2019; Mahadevan, Citation2019; Marcus, Citation2018, Citation2020; Marks, Citation2021; Mindfire Foundation, Citation2018; Mitchell, Citation2019, Citation2021; Nguyen, Yosinski, & Clune, Citation2014; Pearl & Mackenzie, Citation2018; Peng, Citation2017; Perez, Citation2017; Pfeffer, Citation2018; Practical AI, Citation2020; Rahimi, Citation2017; Romero, Citation2021; Russell & Norvig, Citation1995; Saba, Citation2020c; Santoro et al., Citation2021; Strubell, Ganesh, & McCallum, Citation2019; Sweeney, Citation2018a, Citation2018b; Szegedy et al., Citation2013; Thompson, Citation2018; U.S. DARPA, Citation2018; Wolpert, Citation1996; Wolpert & Macready, Citation1997; Wolski, Citation2020a, Citation2020b; Ye, Citation2020; Yuille and Chenxi Liu, Citation2019; Zador, Citation2019) pre-dating the recent hype on DL (Claire, Citation2019; Copeland, Citation2016; Krizhevsky et al., Citation2012).

For the sake of completeness, in contrast with the recent popularity of Equation (7) in the Part 1, Appendix I of the Part 1 presents a few quotes of interest in the multi-disciplinary domain of cognitive science, by Alberto Romero (Romero, Citation2021), Walid Saba (Saba, Citation2020c), Michael Jordan (Jordan, Citation2018), Oren Etzioni (Etzioni, Citation2017), Stuart Russell (Bills, Citation2020; Practical AI, Citation2020), Pat Langley (Langley, Citation2012), EC (EC – European Commission, Citation2019), Melanie Mitchell (Mitchell, Citation2019), Geoffrey Hinton (LeVine, Citation2017), Ali Rahimi (Pfeffer, Citation2018; Rahimi, Citation2017), Maciej Wolski (Wolski, Citation2020a, Citation2020b) and Karen Hao (Hao, Citation2019; Strubell et al., Citation2019). Unfortunately, these critical contributions have been largely ignored, to date, by meta-sciences like engineering and RS, where Artificial Intelligence is typically coped with as a problem in statistics, see Equation (7) in the Part 1.

In Figure 11(a) of the Part 1, semantic relationship

‘ARD ⊂ EO-IU ⊂ CV ⊂ AGI ⊂ Cognitive science’ = Equation (3) in the Part 1

holds. In line with this semantic relationship, EO image (2D gridded data)-derived ARD generation is not regarded as a data pre-processing task, suitable for data enhancement preliminary to data processing (analysis). In data pre-processing (enhancement), an input numerical variable, either sensory data or sensory data-derived, is transformed into an output numerical variable of augmented quality (e.g. radiometric quality, geometric quality, etc.), somehow more useful, hence, considered more informative. Intuitively, quality enhancement of a numerical variable pertains to the problem domain of quantitative/unequivocal information-as-thing (Capurro & Hjørland, Citation2003), typical of the Shannon data communication/transmission theory (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003; Santoro et al., Citation2021; Sarkar, Citation2018; Shannon, Citation1948) (refer to Subsection 3.3.3 in the Part 1).

Rather, the aforementioned Equation (3) in the Part 1 regards EO image (2D gridded data)-derived ARD generation as a cognitive problem in the CV sub-domain of cognitive science, see Figure 11(a) in the Part 1.

Encompassing both CV and biological vision (DiCarlo, Citation2017; Dubey, Agrawal, Pathak, Griffiths, & Efros, Citation2018; Heitger et al., Citation1992; Kosslyn, Citation1994; Marr, Citation1982; Mason & Kandel, Citation1991; Mély, Linsley, & Serre, Citation2018; Öğmen & Herzog, Citation2010; Perez, Citation2018; Pessoa, Citation1996; Piasini et al., Citation2021; Rappe, Citation2018; Rodrigues & du Buf, Citation2009; Slotnick et al., Citation2005; Vecera & Farah, Citation1997; Victor, Citation1994), the notion of vision is synonym for inherently ill-posed scene-from-image reconstruction and understanding (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990), see Figure 20 in the Part 1. Vision is a cognitive task (refer to Subsection 4.1 in the Part 1), pertaining to the problem domain of inherently ill-posed/ qualitative/ equivocal information-as-data-interpretation tasks (Capurro & Hjørland, Citation2003) (refer to Subsection 3.3.3 in the Part 1), which are typically investigated by disciplines like philosophy (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020; Fodor, Citation1998; Peirce, Citation1994), semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e) and linguistics (Ball, Citation2021; Berlin & Kay, Citation1969; Firth, Citation1962; Rescorla, Citation2019; Saba, Citation2020a, Citation2020c), encompassed by the multi-disciplinary domain of cognitive science, see Figure 11(a) in the Part 1.

In quantitative data analysis of scientific quality, sensory data/numerical variables must be better constrained, to be better behaved and better understood, than data typically employed in qualitative data analysis, which is inherently subjective and non-replicable, as in artworks.

Proposed by the RS meta-science community in recent years (CEOS, Citation2018; Dwyer et al., Citation2018; Helder et al., Citation2018; NASA, Citation2019; Qiu et al., Citation2019; USGS – U.S. Geological Survey, Citation2018a, Citation2018c), the notion of ARD aims at enabling expert and non-expert end-users to access/retrieve EO big data ready for use in quantitative data analysis of scientific quality, without requiring laborious EO data pre-processing for geometric and radiometric data enhancement, preliminary to EO data processing (analysis, interpretation) (Dwyer et al., Citation2018). For example, in the ensemble of alternative EO optical image-derived Level 2/ARD product definitions and software implementations existing to date (CEOS – Committee on Earth Observation Satellites, Citation2018; Dwyer et al., Citation2018; Gómez-Chova, Camps-Valls, Calpe-Maravilla, Guanter, & Moreno, Citation2007; Helder et al., Citation2018; Houborga & McCabe, Citation2018; NASA – National Aeronautics and Space Administration, Citation2019; OHB, Citation2016; Tiede, Sudmanns, Augustin, & Baraldi, Citation2020; Tiede, Sudmanns, Augustin, & Baraldi, Citation2021; USGS, Citation2018a, Citation2018b; USGS – U.S. Geological Survey, Citation2018c; Vermote & Saleous, Citation2007) (see Figures 35 and 36 in the Part 1), Cloud and Cloud-shadow quality layers (strata, masks) are typically considered necessary to model data uncertainty in EO optical imagery to be considered suitable for quantitative data analysis of scientific quality.
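The role of Cloud and Cloud-shadow quality layers in quantitative analysis can be sketched as follows, assuming a reflectance band and a co-registered 3-level quality layer stored as NumPy arrays. The integer class codes and the function name are hypothetical and do not correspond to the legend of any specific Level 2/ARD product.

```python
import numpy as np

# Hypothetical integer codes for a 3-level quality layer (not an official legend).
OTHERS, CLOUD, CLOUD_SHADOW = 0, 1, 2


def clear_sky_mean(reflectance, quality_layer):
    """Mean reflectance over pixels labeled Others, ignoring Cloud and Cloud-shadow."""
    contaminated = np.isin(quality_layer, (CLOUD, CLOUD_SHADOW))
    masked = np.ma.masked_array(reflectance, mask=contaminated)
    return float(masked.mean())


# Toy 2 x 2 scene: one bright Cloud pixel and one dark Cloud-shadow pixel.
reflectance = np.array([[0.10, 0.90], [0.02, 0.20]])
quality_layer = np.array([[OTHERS, CLOUD], [CLOUD_SHADOW, OTHERS]])
mean_clear = clear_sky_mean(reflectance, quality_layer)
```

In this toy scene the unmasked mean is biased by the bright Cloud pixel, whereas the masked statistic uses the two clear-sky pixels only.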

In contrast with the ARD policy of pursuing multi-source EO data-through-time harmonization/ standardization/ interoperability in support of scholarly/scientific digital data understanding (GO FAIR, Citation2021; ISO/IEC, Citation2015; Wikipedia, Citation2018; Wilkinson, Dumontier, Aalbersberg et al., Citation2016), a popular example of qualitative data analysis of non-scientific quality, equivalent to subjective not-replicable artworks, is provided by the Google Earth Engine Timelapse application (ESA, Citation2021; Google Earth Engine, Citation2021), showing videos of multi-year time-series of spaceborne multi-sensor EO optical images whose radiometric quality, if any, is heterogeneous/non-harmonized. In practice, the Google Earth Engine Timelapse application is suitable for gaining a “wow” effect in qualitative human photointerpretation exclusively, equivalent to manmade works of art. Unfortunately, it is completely inadequate for quantitative big data analysis of scientific quality, which would require a (super) human-level ‘EO-IU ⊂ CV ⊂ AGI’ system in operational mode, which does not exist yet (refer to Section 2 in the Part 1), suitable for coping with the six Vs of big data (Metternicht et al., Citation2020) involved with multi-sensor EO big 2D gridded data interpretation tasks.

The potential impact of the cognitive problem of ‘ARD ⊂ EO-IU ⊂ CV ⊂ AGI’ on the RS community is highlighted by recalling here that the notion of ARD has been strictly coupled with the concept of EO (raster-based) data cube, proposed as innovative midstream EO technology by the RS community in recent years (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS, Citation2020; Giuliani et al., Citation2017; Giuliani, Chatenoux, Piller, Moser, & Lacroix, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017).

Synonym for EO (raster-based) big data cube in the 4D geospace-time physical world-domain, Digital (Twin) Earth is yet another buzzword of increasing popularity (Craglia et al., Citation2012; Goodchild, Citation1999; Gore, Citation1999; Guo, Goodchild, & Annoni, Citation2020; ISDE, Citation2012; Loekken, Le Saux, & Aparicio, Citation2020; Metternicht et al., Citation2020), stemming from Al Gore’s 1998 insight that “we need a Digital Earth, a multi-resolution, 3D representation of the planet, into which we can embed vast quantities of geo-referenced data” (Craglia et al., Citation2012; Gore, Citation1999; Loekken et al., Citation2020). A Digital (Twin) Earth is “an interactive digital replica of the entire planet that can facilitate a shared understanding of the multiple relationships between the physical and natural environments and society” (Guo & Annoni, Citation2020). It applies to planet Earth (Loekken et al., Citation2020) the pre-existing concept of Digital Twin of a complex system, defined as “a set of virtual information constructs that fully describes a potential or actual physical system from the micro atomic level to the macro geometrical level” (Grieves & Vickers, Citation2017).

Unfortunately, a community-agreed definition of EO (raster-based) data cube does not exist yet, although several recommendations and implementations have been made (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS – Committee on Earth Observation Satellites, Citation2020; Giuliani et al., Citation2017, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017). A community-agreed definition of ARD, to be adopted as standard baseline in EO data cube implementations, does not exist either. As a consequence, in common practice, many EO (raster-based) data cube definitions and implementations do not require ARD and, vice versa, an ever-increasing ensemble of new (supposedly better) ARD definitions and/or ARD-specific software implementations is proposed by the RS community, independently of a standardized/harmonized definition of EO big data cube (refer to Section 2 in the Part 1).

To foster innovation across the global value chain required by a new notion of Space Economy 4.0 (Mazzucato & Robinson, Citation2017) (see Figure 10 in the Part 1), the second original contribution of the Part 1, preliminary to the present Part 2, is to promote system interoperability/ standardization/ harmonization, encompassing third-level semantic/ontological interoperability (refer to Section 2 in the Part 1), among existing EO optical sensory image-derived Level 2/ARD product definitions and software implementations (see Figures 35 and 36 in the Part 1), while overcoming their limitations, investigated at the Marr five levels of understanding of an information processing system.

In short, the Marr five levels of understanding of an information processing system are identified as follows (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Marr, Citation1982; Quinlan, Citation2012; Sonka et al., Citation1994) (refer to Subsection 3.2 in the Part 1).

(i) Outcome and process requirements specification.

(ii) Information/knowledge representation.

(iii) System design (architecture).

(iv) Algorithm.

(v) Implementation.

Noteworthy, among the five Marr levels of system understanding, the three more abstract levels, namely, outcome and process requirements specification, information/knowledge representation and system design, are typically considered the linchpin of success of an information processing system, rather than algorithm and implementation (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Marr, Citation1982; Quinlan, Citation2012; Sonka et al., Citation1994) (refer to Subsection 3.2 in the Part 1). Although based on commonsense knowledge (Etzioni, Citation2017; Expert.ai, Citation2020; Thompson, Citation2018; U.S. DARPA – Defense Advanced Research Projects Agency, Citation2018; Wikipedia, Citation2021c) (refer to Section 2 in the Part 1), this observation (true-fact) is overlooked in large portions of the RS and CV literature, where ‘CV ⊃ EO-IU ⊃ ARD’ system analysis, assessment and inter-comparison are typically focused on algorithm and implementation, e.g. refer to works like (Foga et al., Citation2017) and (Ghosh & Kaabouch, Citation2014) as negative examples not to be imitated in the inter-comparison of CV systems.

To be achieved across existing EO optical sensory image-derived Level 2/ARD product definitions and software implementations (see Figures 35 and 36 in the Part 1), the pursuit of interoperability/ standardization/ harmonization, encompassing third-level semantic/ontological interoperability (refer to Section 2 in the Part 1), is a cognitive (information-as-data-interpretation) task (see Equation (3) in the Part 1), inherently ill-posed in the Hadamard sense (Hadamard, Citation1902), which requires Bayesian constraints (Bowers & Davis, Citation2012; Ghahramani, Citation2011; Hunt & Tyrrell, Citation2012; Lähteenlahti, Citation2021; Quinlan, Citation2012; Sarkar, Citation2018) to become better posed for numerical solution (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Dubey et al., Citation2018) (refer to Section 2 in the Part 1). Constraints adopted in the Part 1 are standard product and process quality criteria, selected from the existing literature. They are summarized as follows.

The popular Findable Accessible Interoperable and Reusable (FAIR) guiding principles for scholarly/scientific digital data and non-data (e.g. analytical pipelines) management (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson, Dumontier, Aalbersberg et al., Citation2016), see Table 1 in the Part 1. Accounting for the fundamental difference between outcome (product, e.g. sensory data-derived product) and process, it is worth observing that term reusability in the FAIR data principles (see Table 1 in the Part 1) is conceptually equivalent to tenet regularity adopted by the popular engineering principles of structured (data processing) system design, encompassing modularity, hierarchy and regularity (Lipson, Citation2007), considered neither necessary nor sufficient, but highly recommended for system scalability (Page-Jones, Citation1988). Term interoperability in the FAIR data principles (see Table 1 in the Part 1) becomes, in the process domain, the tenet of system interoperability. It is typically defined as “the ability of systems to provide services to and accept services from other systems and to use the services so exchanged to enable them to operate effectively together” (Wikipedia, Citation2018). According to (ISO/IEC, Citation2015), system (functional unit) interoperability is “the capability to communicate, execute programs, or transfer data among various functional units [systems] in a manner that requires the user to have little or no knowledge of the unique characteristics of those units”; in short, it is “the capability of two or more functional units [systems] to process data cooperatively”. In more detail, there are three levels of system interoperability (opposite of heterogeneity), corresponding to three generations of information processing systems (Sheth, Citation2015; Wikipedia, Citation2018), reported hereafter for the sake of completeness (refer to Section 2 in the Part 1).

First, the lexical/communication level of system interoperability, involving computer system and data communication protocols, data types and formats, operating systems, transparency of location, distribution and replication of data, etc. (Sheth, 2015; Wikipedia, 2018).

Second, the syntax/structural level of system interoperability. “Syntactic interoperability only focuses on the technical ability of systems to exchange data” (Hitzler et al., 2012). Intuitively, it is related to form, not content. According to Yingjie Hu, the term syntactics is in contrast with “the term semantics, which refers to the meaning of expressions in a language” (Hu, 2017). Syntactic interoperability of component systems involves the two Marr levels of abstraction of an information processing system known as information/knowledge representation and structured system design (architecture) (Baraldi, 2017; Baraldi et al., 2018a, 2018b; Baraldi & Tiede, 2018a, 2018b; Marr, 1982; Quinlan, 2012; Sonka et al., 1994) (refer to Subsection 3.2 in the Part 1), query languages and interfaces, etc. (Sheth, 2015; Wikipedia, 2018).

Third, the semantic/ontological level of system interoperability, increasingly domain-specific (Baraldi, 2017; Baraldi & Tiede, 2018a, 2018b; Bittner, Donnelly, & Winter, 2005; Green, Bean, & Myaeng, 2002; Hitzler et al., 2012; Hu, 2017; Kuhn, 2005; Laurini & Thompson, 1992; Matsuyama & Hwang, 1990; Nativi et al., 2015; Nativi, Santoro, Giuliani, & Mazzetti, 2020; Obrst, Whittaker, & Meng, 1999; Sheth, 2015; Sonka et al., 1994; Sowa, 2000; Stock et al., 2011; Wikipedia, 2018).

The visionary goal of the intergovernmental GEO’s implementation plan for years 2005–2015 of a Global Earth Observation System of (component) Systems (GEOSS) (EC and GEO, 2014; GEO, 2005, 2019; Mavridis, 2011), unaccomplished to date (GEO, 2015; Nativi et al., 2015, 2020; Santoro, Nativi, Maso, & Jirka, 2017), see Figure 1 in the Part 1. In 2014, GEO expressed the utmost recommendation that, for the next 10 years 2016–2025 (GEO – Group on Earth Observations, 2015), the second mandate of GEOSS is to evolve from an EO big data sharing infrastructure, intuitively referred to as data-centric approach (Nativi et al., 2020), to an expert EO data-derived information and knowledge system (Nativi et al., 2015, pp. 7, 22), intuitively referred to as knowledge-driven approach (Nativi et al., 2020), capable of supporting decision-making by successfully coping with challenges along all six community-agreed degrees (dimensionalities, axes) of complexity of big data (Guo, Goodchild, & Annoni, 2020, p. 1), known as the six Vs of volume, variety, veracity, velocity, volatility and value (Metternicht et al., 2020). The ongoing GEO activity on the identification, formalization and use of Essential (Community) Variables and related instances (see Table 2 in the Part 1) contributes to the process of making GEOSS an expert EO sensory data-derived information and knowledge system, capable of EO sensory data interpretation/transformation into Essential (Community) Variables in support of decision-making (Nativi et al., 2015, p. 18, 2020; Santoro et al., 2017). It means that only high-level Essential (Community) Variables, rather than low-level EO big sensory data, should be delivered by GEOSS to end-users of spaceborne/airborne EO technologies for decision-making purposes.
Focusing on the delivery to end-users of EO sensory data-derived Essential (Community) Variables as information sets relevant for decision-making (Santoro et al., 2017), in place of delivering low-level EO big sensory data, would reduce the Big Data requirements of the GEOSS digital Common Infrastructure (Nativi et al., 2015, p. 21, 2020) (see Figure 1 in the Part 1), in agreement with the increasingly popular Data-Information-Knowledge-Wisdom (DIKW) conceptual hierarchy where, typically, information is defined in terms of data, knowledge in terms of information and wisdom in terms of knowledge (Rowley, 2007; Rowley & Hartley, 2008; Wikipedia, 2020; Zeleny, 1987, 2005; Zins, 2007), see Figures 12 and 16 in the Part 1.

The ambitious, but realistic goals of the GEO-CEOS QA4EO Cal/Val guidelines (refer to references listed in this Section above). According to the intergovernmental GEO-CEOS QA4EO Cal/Val guidelines, the following requirements hold.

Timely, operational and comprehensive transformation of multi-sensor EO big sensory data into VAPS requires joint multi-objective optimization of: (a) Suitability, which implies Availability, Findability and Accessibility of product and/or process, together with product accuracy, process efficiency, process robustness to changes in input data, process robustness to changes in input hyperparameters to be user-defined based on heuristics, process transferability, process scalability, process interpretability, product and process cost in manpower, product and process cost in computer power, etc. (refer to Section 2 in the Part 1), and (b) Feasibility, a synonym for viability/practicality/doableness (refer to Section 2 in the Part 1).

Each step in a data processing workflow must be validated, by an independent third party (GEO-CEOS, 2015), for quantitative quality assurance (QA)/quality traceability (vice versa, for error propagation and backtracking), where quality and/or error estimates must be provided with a degree of uncertainty in measurement. A synonym for error (uncertainty) propagation through an information processing chain, the general-purpose garbage in, garbage out (GIGO) principle is intuitive to deal with (Baraldi, 2017; Geiger et al., 2021; Thompson, 2018). Its formal version is the process of uncertainty estimation, based on a combination of the propagation law of uncertainty with the mathematical model of causality for the input-output data mapping (data link) at hand (Ma, Jia, Schaepman, & Zhao, 2020), according to the Guide to the Expression of Uncertainty in Measurement (JCGM, 2008) and the International Vocabulary of Metrology (JCGM, 2012) criteria issued by the Joint Committee for Guides in Metrology. Unfortunately, the large majority of works published in the RS literature presents outcome and process (OP) quantitative quality indicator (Q2I) estimates, such as statistical estimates of thematic Q2Is (T-Q2Is), provided with no degree of uncertainty in measurement, ±δ (Lunetta & Elvidge, 1999). It is important to stress that OP-Q2Is published in the RS literature featuring no uncertainty estimate, ±δ, are in contrast with the principles of statistics (GEO-CEOS – Group on Earth Observations and Committee on Earth Observation Satellites, 2010; Holdgraf, 2013; JCGM – Joint Committee for Guides in Metrology, 2008, 2012; Lunetta & Elvidge, 1999), i.e. they do not feature any statistical meaning (Baraldi, 2017; Baraldi, Boschetti, & Humber, 2014; Baraldi & Tiede, 2018a, 2018b).
If this observation holds true as premise, then another fact holds true as consequence: since large portions of OP-Q2Is published in the RS literature feature no degree of uncertainty in measurement, ±δ, in disagreement with intergovernmental GEO-CEOS QA4EO Val requirements (GEO-CEOS – Group on Earth Observations and Committee on Earth Observation Satellites, 2010), the statistical quality of a large portion of outcomes and/or processes published in the RS literature remains unknown to date (refer to Subsection 3.1 in the Part 1).
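As a minimal sketch of how an estimate can be delivered together with its uncertainty, ±δ, the following Python fragment applies the first-order law of propagation of uncertainty to a generic input-output model, under the simplifying assumption of uncorrelated inputs. The function name `propagate_uncertainty`, the linear gain/offset model and all numerical values are hypothetical and chosen for illustration only; they are not taken from the Part 1 or from any specific sensor.

```python
import math
from typing import Callable, List, Sequence, Tuple


def propagate_uncertainty(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    u: Sequence[float],
    rel_step: float = 1e-6,
) -> Tuple[float, float]:
    """Return (y, u_y) for y = model(x), assuming uncorrelated inputs.

    First-order law of propagation of uncertainty:
        u_y^2 = sum_i (dy/dx_i)^2 * u_i^2
    Partial derivatives are estimated by central finite differences.
    """
    y = model(x)
    variance = 0.0
    for i, (xi, ui) in enumerate(zip(x, u)):
        h = rel_step * max(abs(xi), 1.0)  # finite-difference step
        upper: List[float] = list(x)
        lower: List[float] = list(x)
        upper[i] = xi + h
        lower[i] = xi - h
        sensitivity = (model(upper) - model(lower)) / (2.0 * h)
        variance += (sensitivity * ui) ** 2
    return y, math.sqrt(variance)


def linear_calibration(params: Sequence[float]) -> float:
    """Hypothetical data link: radiance = gain * DN + offset."""
    gain, dn, offset = params
    return gain * dn + offset


# Hypothetical input values and standard uncertainties (illustration only).
values = [0.01, 500.0, 0.0]          # gain, DN, offset
uncertainties = [0.0002, 1.0, 0.0]   # u(gain), u(DN), u(offset)

radiance, u_radiance = propagate_uncertainty(
    linear_calibration, values, uncertainties
)
print(f"radiance = {radiance:.3f} +/- {u_radiance:.3f}")
```

When inputs are correlated, the covariance terms of the propagation law would have to be added; the sketch deliberately omits them.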

In the RS meta-science domain (Couclelis, 2012) (see Figure 11(a) in the Part 1), radiometric Cal (refer to Section 2 in the Part 1) is the process of transforming EO sensory data, typically encoded as non-negative dimensionless digital numbers (DNs, with DN ≥ 0) provided with no physical meaning at EO Level 0, into a physical variable, i.e. a numerical variable provided with a community-agreed radiometric unit of measure (Baraldi, 2009, 2017; Baraldi et al., 2018a, 2018b; Baraldi & Tiede, 2018a, 2018b; DigitalGlobe, 2017; EC - European Commission, 2020; GEO-CEOS – Group on Earth Observations and Committee on Earth Observation Satellites, 2010; Malenovsky et al., 2007; Pacifici, Longbotham, & Emery, 2014; Schaepman-Strub et al., 2006; Shuai et al., 2020), such as top-of-atmosphere radiance (TOARD) values, with TOARD ≥ 0, top-of-atmosphere reflectance (TOARF) values belonging to the physical domain of change [0.0, 1.0], surface reflectance (SURF) values in the physical range of change [0.0, 1.0] and surface albedo values in the physical range [0.0, 1.0]. Notably, surface albedo is included (referred to as albedo) in the list of terrestrial Essential Climate Variables (ECVs) defined by the Global Climate Observing System (GCOS) (Bojinski et al., 2014) (see Table 2 in the Part 1), which complies with requirements of the GEO second implementation plan for years 2016–2025 of a new GEOSS, regarded as expert EO data-derived information and knowledge system (GEO - Group on Earth Observations, 2015; Nativi et al., 2015, 2020; Santoro et al., 2017) (see Figure 1 in the Part 1), in agreement with the well-known DIKW hierarchical conceptualization (refer to references listed in this Section above), see Figures 12 and 16 in the Part 1.
Intuitively, any (calibrated) physical variable, provided with a physical meaning, a physical unit of measure and a physical range of change, is better behaved and better understood than uncalibrated data (Baraldi, 2009; Pacifici et al., 2014). In particular, calibrated data can be input to:

Deductive/physical model-based inference systems (traditional static if-then decision trees, non-adaptive to data, known as expert systems) (Bishop, 1995; Cherkassky & Mulier, 1998; Laurini & Thompson, 1992; Sonka et al., 1994), often referred to as Good Old-Fashioned Artificial Intelligence (GOFAI) (Dreyfus, 1965, 1991, 1992; Santoro et al., 2021);

Inductive/statistical model-based inference systems (Laurini & Thompson, 1992; Peirce, 1994; Salmon, 1963); and

Hybrid (combined deductive and inductive) inference systems (Baraldi, 2017; Baraldi & Tiede, 2018a, 2018b; Bishop, 1995; Cherkassky & Mulier, 1998; Chomsky, 1957; Expert.ai, 2020; Hassabis et al., 2017; Laurini & Thompson, 1992; Liang, 2004; Marcus, 2018, 2020; Matsuyama & Hwang, 1990; Mindfire Foundation, 2018; Nagao & Matsuyama, 1980; Parisi, 1991; Piaget, 1970; Sonka et al., 1994; Sweeney, 2018b; Thompson, 2018; Zador, 2019), encompassed by AGI solutions in the multi-disciplinary domain of cognitive science, see Figure 11(a) in the Part 1.
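For illustration, the first two steps of the radiometric Cal described above, from dimensionless DNs to TOARD and from TOARD to TOARF, can be sketched in Python as follows. The sketch assumes a linear gain/offset sensor model and the widely used relation TOARF = π · TOARD · d² / (ESUN · cos θs), where d is the Earth-Sun distance in astronomical units, ESUN is the band-specific mean exoatmospheric solar irradiance and θs is the sun zenith angle. Function names, the clipping of TOARF to [0.0, 1.0] and all numerical values are hypothetical choices made for this example, not calibration parameters of any real sensor.

```python
import math


def dn_to_toard(dn: int, gain: float, offset: float) -> float:
    """Convert a non-negative digital number into TOA radiance (TOARD >= 0).

    Assumes a linear sensor model: TOARD = gain * DN + offset.
    """
    if dn < 0:
        raise ValueError("DN must be non-negative")
    return max(gain * dn + offset, 0.0)


def toard_to_toarf(
    toard: float,
    esun: float,
    sun_zenith_deg: float,
    earth_sun_distance_au: float = 1.0,
) -> float:
    """Convert TOA radiance into TOA reflectance (TOARF in [0.0, 1.0]).

    TOARF = pi * TOARD * d^2 / (ESUN * cos(sun zenith)).
    Clipping to [0.0, 1.0] is an assumption of this sketch.
    """
    cos_zenith = math.cos(math.radians(sun_zenith_deg))
    if cos_zenith <= 0.0:
        raise ValueError("sun must be above the horizon")
    toarf = math.pi * toard * earth_sun_distance_au ** 2 / (esun * cos_zenith)
    return min(max(toarf, 0.0), 1.0)


# Hypothetical calibration parameters (illustration only).
example_toard = dn_to_toard(dn=200, gain=0.5, offset=0.0)
example_toarf = toard_to_toarf(example_toard, esun=1000.0, sun_zenith_deg=60.0)
print(f"TOARD = {example_toard:.1f}, TOARF = {example_toarf:.4f}")
```

The further steps of the correction sequence, from TOARF to SURF and surface albedo, depend on atmospheric, topographic and surface anisotropy models and are not covered by this sketch.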

In general, statistical model-based systems can be input with either uncalibrated or calibrated data, i.e. they do not require as input numerical variables provided with a physical meaning. Nevertheless, when input with calibrated data, which are better behaved than uncalibrated data, statistical model-based systems typically gain in accuracy, robustness, transferability and scalability (refer to Subsection 3.1 in the Part 1).

Moreover, EO sensory data radiometrically calibrated into either TOARF, SURF or surface albedo values, all belonging to the physical range of change [0.0, 1.0], can be encoded as unsigned byte, affected by a negligible quantization error of 0.2% (refer to Subsection 3.3.2 in the Part 1). This property is largely overlooked by the RS community to date, because EO Level 1 TOARF values and Level 2/ARD SURF values are typically encoded as 16-bit unsigned short integer, such as in the Planet Surface Reflectance Product (Planet, 2019), the U.S. Landsat ARD format (Dwyer et al., 2018; Helder et al., 2018; NASA - National Aeronautics and Space Administration, 2019; USGS - U.S. Geological Survey, 2018a, 2018c) and the Level 1 TOARF and Level 2 SURF value formats adopted by the ground segment of the Italian Space Agency (ASI) Hyperspectral Precursor and Application Mission (PRISMA) (ASI, 2020; OHB, 2016). These multi-source EO big data archives, calibrated into TOARF or SURF values and encoded as 16-bit unsigned short integer, can be seamlessly transcoded into an 8-bit unsigned char, affected by a quantization error as low as 0.2%, with a 50% saving in memory storage.
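The 0.2% figure can be checked with a short Python sketch, assuming a uniform linear mapping of the range [0.0, 1.0] onto the 256 codes of an unsigned byte and rounding to the nearest code. Under this assumption the quantization step is 1/255 and the maximum rounding error is half a step, i.e. 0.5/255 ≈ 0.00196, about 0.2% of the full range. The function names and the sampling grid are hypothetical and serve illustration only.

```python
from array import array


def encode_uint8(reflectance: float) -> int:
    """Map a reflectance value in [0.0, 1.0] onto an 8-bit code in 0..255."""
    clipped = min(max(reflectance, 0.0), 1.0)
    return int(round(clipped * 255))


def decode_uint8(code: int) -> float:
    """Map an 8-bit code back onto a reflectance value in [0.0, 1.0]."""
    return code / 255.0


# Sample the range [0.0, 1.0] densely and measure the round-trip error.
samples = [k / 10000 for k in range(10001)]
max_error = max(abs(decode_uint8(encode_uint8(s)) - s) for s in samples)
print(f"maximum quantization error: {100 * max_error:.3f}% of full range")

# Storage per pixel value: 8-bit unsigned char versus 16-bit unsigned short.
bytes_per_value_8bit = array("B").itemsize
bytes_per_value_16bit = array("H").itemsize
storage_ratio = bytes_per_value_8bit / bytes_per_value_16bit
print(f"8-bit storage relative to 16-bit storage: {storage_ratio:.0%}")
```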