Abstract

Extended Reality (XR) systems, such as Virtual Reality (VR) and Augmented Reality (AR), provide a digital simulation either of a complete environment or of particular objects within the real world. Today, XR is used in a wide variety of settings, including gaming, design, engineering, and the military. In addition, XR has been introduced into psychology, cognitive sciences, and biomedicine, both for basic research and for diagnosing or treating neurological and psychiatric disorders. In the context of XR, the simulated ‘reality’ can be controlled, and people may safely learn to cope with their feelings and behavior. XR also makes it possible to simulate environments that cannot easily be accessed or created otherwise. Therefore, XR systems are thought to be a promising tool in the resocialization of criminal offenders, more specifically for purposes of risk assessment and treatment of forensic patients. Employing XR in forensic settings raises ethical and legal intricacies that do not arise in most other healthcare applications. Whereas a variety of normative issues of XR have been discussed in the context of medicine and consumer usage, the debate on XR in forensic settings is, as yet, lagging behind. By discussing two general arguments in favor of employing XR in criminal justice, and two arguments calling for caution in this regard, the present paper aims to broaden the current ethical and legal debate on XR applications to their use in the resocialization of criminal offenders, mainly focusing on forensic patients.

INTRODUCTION

Extended reality (XR) systems, such as virtual reality (VR) and augmented reality (AR), provide a digital simulation either of a complete environment or of particular objects within the real world (Kaplan et al. 2020; Cipresso et al. 2018). For example, VR can simulate a complete city center, and AR can conjure up a spider crawling over your desk. Using an XR headset, often combined with handheld motion controllers, haptic gloves, and other accessories, the user can act, feel, and react in the (partly) simulated environment as if it were real, including in relation to other persons represented as avatars.

In the last decade, the simulation provided by XR systems has improved considerably. As a result, users increasingly experience being “absorbed” by the simulated environment (Latoschik et al. 2017), to the extent that they “forget” their actual physical environment, a phenomenon called “immersion.” An immersed user will respond physically and emotionally to the simulated environment as if it were reality.

Today, XR is used in a wide variety of settings, including gaming, design, engineering, and the military (Cornet and Van Gelder 2020). In addition, XR has been introduced into psychology, cognitive sciences, and biomedicine, both for basic research and for diagnosing or treating neurological and psychiatric disorders such as dementia, psychosis, posttraumatic stress disorder, and pedophilia (Kellmeyer 2018; Kellmeyer, Biller-Andorno, and Meynen 2019; Renaud et al. 2014; Rizzo, Thomas Koenig, and Talbot 2019). Three significant advantages of XR in this regard are (1) that the simulated “reality” can be controlled, so patients can learn to deal with challenges in a stepwise manner; (2) that it can create contexts in which people may safely learn to cope with their feelings and behavior: nobody is harmed when the patient responds, e.g., aggressively (Kellmeyer, Biller-Andorno, and Meynen 2019); and (3) that it can simulate environments that cannot easily be accessed or created otherwise, for instance, a café where a visitor starts to behave angrily toward the user.

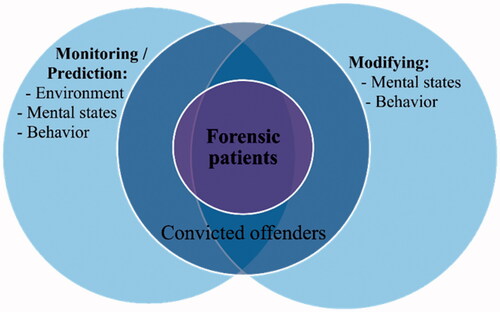

In view of these unique characteristics (in particular feature 2 and, perhaps most importantly, feature 3), XR is thought to be a promising tool in the resocialization of criminal offenders[^1] as well, more specifically for purposes of risk assessment and treatment of forensic patients. Moreover, several forensic psychiatric applications of XR have already been used in a research context (Cornet and Van Gelder 2020; Klein Tuente et al. 2018, 2020; Ticknor 2019). For example, XR may allow for safely monitoring sexual offenders in a virtual supermarket where they encounter a child (Fromberger, Jordan, and Müller 2018); it may enable researchers to observe burglars reenacting a burglary in a virtual environment (Nee et al. 2019); and it may potentially contribute to training impulse control for aggression regulation (Klein Tuente et al. 2018, 2020) and to improving the empathy of offenders convicted of domestic violence (Seinfield 2018). In other words, these techniques may, on the one hand, be employed to monitor and predict a person’s mental states and behavioral traits in relation to a particular environment and, on the other hand, be used to bring about mental and behavioral change (see Figure 1). In doing so, XR might facilitate the resocialization of forensic psychiatric patients and thereby contribute to combating crime, which often comes with serious physical and mental harm for both victims and offenders. Note that the use of VR to prepare inmates for their reentry into society has already been reported in U.S. prisons (Dolven and Fidel 2017; Melnick 2018).

Figure 1. The relationship between monitoring, predicting, and modifying behavior and mental states in forensic patients and convicted offenders.

In forensic psychiatric settings, unlike in regular healthcare settings, XR interventions might be offered on behalf of the State primarily for security reasons, rather than (solely) for the purpose of health care. Although such employment of forensic XR may in principle be consensual (the offender can either accept or decline the offer), there are significant differences between the choice faced by a patient offered XR in an ordinary healthcare context and that faced by an offender offered XR in a forensic setting (cf. Ligthart et al. 2021). For example, parole may be made conditional on XR use. Before agreeing to the application of XR, does the offender know what XR exactly entails? Should he be able to discuss the possibilities and perils of XR use with a lawyer? Are lawyers equipped to advise their clients in this respect?

Coercion is often not a clear-cut phenomenon; it characteristically involves gray areas (Szmukler and Appelbaum 2008). Using technologies within a forensic setting often raises specific concerns, even when it does not involve complete compulsion: the setting and the choices an offender is faced with arguably tend to be of an intrinsically coercive nature (Pugh 2018; Ryberg 2020, Ch. 2). Consider, for instance, the offer “Would you like treatment instead of prison time?” This type of offer clearly differs from the normal healthcare situation. In this article, we do not aim to take a position in the discussion about (arguably coercive) offers and consent. What we do want to stress here is that the arguably “coercive” nature of the forensic setting entails that offenders who are offered XR technologies should be considered “vulnerable” subjects, for both institutional reasons (by virtue of their confinement) and medical reasons (their underlying psychiatric condition) (Kellmeyer, Biller-Andorno, and Meynen 2019). Therefore, forensic XR raises questions that differ from those raised by XR use in medicine and consumer usage.

Altogether, deploying XR in forensic settings raises ethical and legal intricacies that do not arise in usual healthcare applications. In fact, whereas a variety of ethical and legal issues of XR have been discussed in the context of medicine and consumer usage (Marloth, Chandler, and Vogeley 2020; Barfield and Blitz 2018; Kellmeyer 2018; Kellmeyer, Biller-Andorno, and Meynen 2019; Lemley and Volokh 2018; Slater et al. 2020; Wassom 2015), the debate on XR in forensic settings is, as yet, lagging behind (Cornet and Van Gelder 2020).

By discussing two general arguments in favor of employing XR in criminal justice, and two arguments calling for caution in this regard, the present article aims to broaden the current ethical and legal debate on XR applications to their use in the resocialization of criminal offenders, mainly focusing on forensic patients.

As to the legal part of our analysis, the normative framework is provided by European and international human rights, which are arguably closely related to moral rights (Cruft, Liao, and Renzo 2015, 4).[^2] For example, as the European Union notes with regard to its Charter of Fundamental Rights, this Charter establishes ethical principles and rights for EU citizens and residents that relate to, inter alia, dignity, liberty, and justice, protecting civil and political rights and covering bioethics.[^3] The ethical analysis focuses on moral capabilities and on XR as a “moral prosthesis.”

The first argument in favor of forensic XR is based on the State’s legal obligation to facilitate resocialization. From that perspective, a powerful tool such as XR should not be withheld from offenders to help them reintegrate into society. The second argument in favor is ethical in nature and directly related to shaping one’s life in society: while respecting human dignity, XR may increase offenders’ autonomy and moral agency, enabling them to take control over their own lives (cf. Douglas et al. 2013).

Meanwhile, human rights law provides not only an obligation to facilitate resocialization, but also an obligation to respect freedom of thought and mental integrity. Even though XR does not directly intervene in the human body, it aims to affect mind and behavior, which raises concerns, as we will discuss.[^4] From an ethical perspective, concerns arise regarding vulnerability, dependency, stigma, and authenticity.

Altogether, we argue that although XR simulations can, in principle, take us anywhere, certain normative boundaries should be respected. The precise determination of those boundaries deserves close attention in future research.

TWO ARGUMENTS IN FAVOR OF FORENSIC XR USE

In this section, we discuss two normative frameworks—human rights and ethical principlism—and offer arguments defending the employment of XR in forensic settings. First, we develop an argument from a human rights perspective, focusing on the obligation of States to facilitate the resocialization of criminal offenders, including forensic patients, concentrating on the European legal context. Second, we argue that XR interventions may strengthen the autonomy and moral agency of forensic patients by enabling them to learn to control problematic behavioral impulses under safe conditions.

Human Rights: The Obligation to Facilitate Resocialization

According to the Grand Chamber of the European Court of Human Rights (ECtHR/the Court), a prison sentence pursues a variety of objectives. Whereas retributivism[^5] remains one of the important aims of a prison sentence, the emphasis in European penal policy today is on the rehabilitative objective of imprisonment, especially toward the end of a long prison sentence.[^6] “Resocialization,” in this context, implies the reintegration of convicted offenders into society, inter alia in order to prevent reoffending and thus also to protect society.[^7] This concept of resocialization has become a mandatory factor that member States of the Council of Europe should take into account when designing their penal policies.[^8] States have a duty to provide prisoners with a “real opportunity” to rehabilitate themselves.[^9] According to the Grand Chamber, this duty entails a “positive obligation” to secure prison regimes with the aim of resocialization, and to enable (life) prisoners to make progress in this regard, especially where it is the prison regime or the conditions of detention that obstruct resocialization[^10] (Ligthart et al. 2019a; Meijer 2017). This obligation is one of means, not of result: the actual resocialization of individual offenders should be achieved through fostering their personal responsibility.[^11] As to life prisoners with mental health problems, the Grand Chamber has considered that providing such prisoners with a real opportunity of resocialization may require enabling them to take part in treatments or therapies (be they medical, psychological, or psychiatric) adapted to their situation with a view to facilitating rehabilitation. This entails that mentally ill detainees should be allowed to take part in occupational or other activities where these may be considered beneficial to resocialization.[^12]

Similarly, Article 10(3) of the International Covenant on Civil and Political Rights (ICCPR) prescribes that penitentiary systems shall comprise treatment of prisoners with the aim of their reformation and social rehabilitation. The importance of facilitating resocialization is reflected in several other international legal instruments as well, such as the European Prison Rules and the (international) Mandela Rules, which contain non-binding standards setting out generally accepted good principles and practices in the treatment of detainees and the management of penal institutions.[^13] For example, according to Rule 6 of the European Prison Rules, all detention shall be managed so as to facilitate the reintegration into free society of persons who have been deprived of their liberty. Rule 102.1 of the European Prison Rules prescribes that prison regimes should be designed to enable convicted offenders to lead a responsible and crime-free life, and Rule 5 of the European Prison Rules denotes that life in prison shall approximate as closely as possible the positive aspects of life in the free community. Similarly, Rule 4 of the Mandela Rules states that, with a view to resocialization, prison administrators should offer detainees a range of differentiated activities. In addition, Rule 5(1) of the Mandela Rules prescribes that the prison regime should seek to minimize any differences between prison life and life at liberty that tend to lessen the responsibility of the prisoners or the respect due to their dignity as human beings.

It has been argued that the legal obligation to facilitate resocialization is primarily based on the principle of respect for human dignity.[^14] As Meijer writes, “[r]ecognising rehabilitation as a positive obligation—grounded in human dignity—is important, because it makes clear that rehabilitation is at all times to be taken into account and cannot be set aside by other concerns such as the effectiveness of rehabilitative efforts and prison authorities concerns such as cuts or staff shortage” (Meijer 2017, 161). In addition, the rehabilitative purpose of criminal justice also follows from consequentialist ethical and legal theory, according to which the imposition of criminal sanctions on convicted offenders is justified primarily by the aim of preventing crime.

On the assumption that XR is effective in facilitating successful resocialization, employing forensic XR can be based on considerations about the offender’s dignity as well as about crime prevention.[^15] Moreover, one could argue that the legal obligation to secure prison regimes with the aim of resocialization should serve as an incentive, or at least a justification, to deploy XR in the execution of criminal sanctions. After all, because of the deprivation of liberty inherent in imprisonment and for security reasons, the possibilities to actively treat and rehabilitate convicted offenders during a prison sentence are limited. The majority of detainees spend most of their time in their cells, doing virtually nothing. One should bear in mind that prison is a deliberately impoverished environment: regardless of the imposed regime or security level, it entails the physical, mental, and social inactivity of detainees (Ligthart et al. 2019a). Various studies have documented the negative effects that an impoverished environment has on human brain functions and human flourishing. For example, two recent studies, conducted in a Dutch and a U.S. prison respectively, investigated the potential negative effects of the impoverished prison environment on detainees’ neurocognitive functioning. In line with those findings and earlier hypotheses, both studies showed that brain functions connected with self-regulation declined after 3–4 months of imprisonment (Meijers et al. 2018; Umbach, Raine, and Leonard 2018).

In addition, prisoners will normally only be granted probation or parole when their risk of recidivism is considered acceptably low. To overcome such limitations that hamper resocialization, XR provides new and potentially highly valuable opportunities: in a controlled environment, offenders can safely learn to cope with their feelings and behavior in a simulated outside world, all accessible from the prisoner’s own cell. Think of simulating a bar where a visitor starts to behave angrily toward the user, or of the possibility XR offers to safely monitor sexual offenders in a virtual environment where they encounter stimuli that could trigger sexual violence in real-life settings. In fact, employing XR in prison opens up the possibility to adapt to the challenges of the outside world from inside the prison walls, minimizing relevant differences between prison life and life at liberty (cf. Rule 5 of the European Prison Rules and Rule 5(1) of the Mandela Rules). The tricky transition between prison life and life in the free community can be facilitated by a stepwise XR program, providing the means for successful reintegration into a (simulated) free society, as is inter alia prescribed by the Mandela Rules, the European Prison Rules, and the European Court of Human Rights.

Moral Capabilities: XR as a Moral Prosthesis for Autonomy, Moral Agency and Dignity

Autonomy, agency, and dignity are important normative concepts for moral philosophy, biomedical ethics, and international human rights law. In (Western) biomedical ethics, for example, the most widely used normative framework is the principlist account by Beauchamp and Childress (2001), in which autonomy is one of the four key principles (together with beneficence, non-maleficence, and justice). Traditional philosophical and biomedical accounts of the principle of autonomy mostly center on the individual person as a self-contained moral agent who has the capacity for self-governance, which entails the right to make their own choices. In a health care setting, the most common questions around personal autonomy concern informed consent: a patient’s capacity and right to make decisions about their mental and bodily health and related medical interventions. Within such a principlist framework, ethical tensions and dilemmas typically arise in cases in which certain capacities are diminished, for example a patient with advanced dementia who cannot understand the scope and consequences of a particular medical intervention, or in cases in which two (or more) principles conflict with each other. In the context of forensic science and medicine, ethical tensions involving a forensic patient’s autonomy may arise, for example, when institutions and attending forensic psychiatrists have to balance the patient’s autonomy against the risk to others (Urheim et al. 2011).

Moral agency, in our interpretation, is a person’s capacity to act and to make moral judgements in accordance with certain norms, such as internal moral sentiments, convictions, or beliefs, but also external norms such as codes of conduct, rules, or laws. Whereas early concepts of moral agency propagated a rather dichotomous view (either an entity, usually a natural person, has the capacity for moral agency or not), more recent and nuanced accounts acknowledge the graded nature of agency (Mackenzie and Stoljar 2000; Skalko and Cherry 2016). Deficiencies in moral agency tend to be central to historical (and contemporary) models of psychopathy and antisocial personality disorder, a common finding in forensic patients. Indeed, in the most widely used psychological assessment tool for psychopathy, the Psychopathy Checklist (now in revised form) (Hare, Hart, and Harpur 1991), dimensions such as “lack of remorse or guilt” or “callous/lack of empathy” are directly linked to moral affects, reflecting the finding that violence, deception, and other delinquent behavior in psychopaths is often not accompanied by feelings of remorse or guilt. Here, we use a broad conception of moral agency that includes capacities enabling one to control tendencies toward violence and other harmful and illegal behavior. It therefore encompasses relevant cognitive and emotional capacities as well as the ability to guide and regulate one’s impulses (which is also reflected in the literature on moral enhancement; Savulescu and Persson 2012).

Human dignity, again in our interpretation of current conceptualizations, refers to the fundamental philosophical, theological, and anthropological notion of recognizing a human being as a person and treating this person respectfully. It is a fundamental conceptual foundation of international human rights and, in some countries, also an important constitutional right (Barak 2015). In the 20th century, as a consequence of the horrific crimes against humanity committed by Nazi Germany, including biomedical experimentation on human prisoners, human dignity also became an important and foundational concept in international soft-law declarations and treaties on biomedicine, such as the Declaration of Helsinki of the World Medical Association on Ethical Principles for Medical Research and the Universal Declaration on Bioethics and Human Rights by UNESCO (Andorno 2018). In forensic psychiatry, the impact of therapeutic interventions, for instance by means of emerging technologies like XR, on the dignity of forensic patients is intricately connected to both autonomy and moral agency.

Another important notion in biomedical ethics that we want to acknowledge here is vulnerability, i.e., a person’s propensity to suffer psychological or physical harm in relation to specific internal dispositions or external factors. Specifically, we base our analysis on contemporary models that stress the multidimensional and layered character of vulnerability, i.e., that a person can be vulnerable in several, yet specific, ways (e.g., through social disadvantage as well as current disease) (Ganguli-Mitra and Biller-Andorno 2011; Mackenzie, Rogers, and Dodds 2013).

For our subsequent discussion, we propose to use the notion of “moral capabilities” to refer to the combined set of an individual’s (in this context, a forensic patient’s) capacities for autonomous decision-making regarding her health, the degree of her moral agency, her vulnerability, and her dignity.

But how could XR technologies realistically help forensic patients to preserve or enhance their moral capabilities, particularly autonomy and moral agency while at the same time protecting their dignity and respecting their vulnerabilities?

Consider a hypothetical, yet technologically already feasible, treatment scenario: a closed-loop system that combines biosignal sensing (for example, heart rate and skin conductance, but also neurophysiological measures such as bioelectric brain activity recorded with electroencephalography) as correlates of certain affective states with an XR simulation environment and display. In this system, the biosignals serve as a control signal that indicates certain affects or levels of stress to the patient while he is immersed in a virtual simulation that is salient to his psychopathology.

In such a scenario, both the patient and their doctors would be able to glean highly personalized insights into the relationship between social and environmental context (e.g., the role of trigger stimuli) and the patient’s behavior. Primarily, such a system would likely be used in typical therapeutic settings in forensic institutions or outpatient services in the context of behaviorally oriented psychotherapy (e.g., to support cognitive behavioral therapy, CBT). Many patients who gain sufficient control over their behavioral impulses in the course of this XR-assisted behavioral therapy may then be at a sufficiently low risk of recidivism to allow for reintegration into the community, with regular CBT-XR therapy sessions. Other patients might not respond sufficiently to XR-assisted psychotherapy to sustainably control their behavior with regular therapy sessions, but might attain a sufficient level of behavioral control (and thus a lower recidivism risk) by permanently wearing the assistive XR system. Perhaps these patients could wear a set of glasses that regularly augment their view in response to environmental cues. Over time, they could learn to use the XR system outside the therapeutic context as well, to help them navigate situations that put them at risk of recidivist behavior. Such an adaptive patient-XR system would not only have an early warning function (for patients, but potentially also for forensic health care providers) but could also act as a form of “moral prosthesis” that supports patients in overcoming their diminished moral capabilities and moral agency and in being reintegrated into the community.
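To make the closed-loop scenario more concrete, the following minimal Python sketch shows one way the biosignal-to-feedback loop could be structured. It is purely illustrative and rests on assumptions not made in the text: the names (`BiosignalSample`, `stress_index`, `feedback_for`, `closed_loop`), the restriction to heart rate and skin conductance, the use of z-scores against the patient’s own resting baseline as a proxy for stress, and the “warn”/“alert” thresholds are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class BiosignalSample:
    """One reading from the (hypothetical) sensors worn during an XR session."""
    heart_rate_bpm: float
    skin_conductance_us: float  # skin conductance in microsiemens


def _z(value: float, reference: list[float]) -> float:
    """Standardize a value against the patient's own resting baseline."""
    sd = stdev(reference)  # requires at least two baseline readings
    if sd == 0:
        raise ValueError("baseline has no variance")
    return (value - mean(reference)) / sd


def stress_index(sample: BiosignalSample, baseline: list[BiosignalSample]) -> float:
    """Average z-score of heart rate and skin conductance (an assumed arousal proxy)."""
    z_hr = _z(sample.heart_rate_bpm, [b.heart_rate_bpm for b in baseline])
    z_sc = _z(sample.skin_conductance_us, [b.skin_conductance_us for b in baseline])
    return (z_hr + z_sc) / 2


def feedback_for(index: float, warn: float = 1.5, alert: float = 3.0) -> str:
    """Map the stress index to the cue shown in the XR display (illustrative thresholds)."""
    if index >= alert:
        return "alert"  # e.g. a strong cue, possibly also an early warning to care providers
    if index >= warn:
        return "warn"   # e.g. a subtle visual cue overlaid in the headset
    return "none"


def closed_loop(stream: list[BiosignalSample], baseline: list[BiosignalSample]) -> list[str]:
    """Process a stream of readings and return the feedback cue for each one."""
    return [feedback_for(stress_index(s, baseline)) for s in stream]


if __name__ == "__main__":
    resting = [BiosignalSample(60, 2.0), BiosignalSample(62, 2.5), BiosignalSample(64, 3.0)]
    session = [BiosignalSample(62, 2.5), BiosignalSample(66, 3.5), BiosignalSample(70, 4.5)]
    print(closed_loop(session, resting))  # ['none', 'warn', 'alert']
```

Standardizing against each patient’s own baseline mirrors the “highly personalized” character of the scenario; whether such signals are valid correlates of the relevant affective states, and where thresholds should lie, are empirical questions this sketch does not settle.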

Used as a “moral prosthesis,” an XR system would also enable the patient to operate at a level of moral agency that would ideally allow for holding her morally responsible for her actions while preserving her autonomy over those actions. Overall, this restoration of moral capabilities (or, in the case of psychopathy, more accurately, their therapeutic enhancement) would also respect the patient’s dignity, as it would enable her to be recognized as a full (or at least functional) moral agent and a person. While we have focused the discussion here on a use case involving a forensic patient with clear psychopathology that diminishes her moral capabilities, many of the same positive effects could be envisioned for corrective scenarios involving criminal offenders without discernible psychopathology but with deficits in impulse control or moral decision-making.

TWO ARGUMENTS FOR CAUTION REGARDING FORENSIC XR

In this section, we discuss two arguments that call for caution in deploying XR in forensic settings. First, we present an argument from human rights, focusing on the right to freedom of thought and the right to mental integrity. Surely, other rights that aim to protect against unwanted intrusions can be relevant as well,[^16] but the “elegance” of XR treatment is that it does not by itself interfere with one’s body, so concerns about bodily integrity or direct physical harm are at least less relevant than in, e.g., pharmaceutical, deep brain stimulation, or transcranial magnetic stimulation treatment.[^17] Yet, as we will discuss, mental integrity and freedom of thought clearly deserve attention. Second, we discuss the moral issues of vulnerability, dependency, stigma, and authenticity.

Human Rights: Freedom of Thought and Mental Integrity

One aspect of XR that is currently the subject of ethical consideration is that virtual embodiment can entail emotional, cognitive, and behavioral changes (Slater et al. 2020). For example, VR can be an effective tool for changing attitudes through persuasion (Chittaro and Zangrando 2010). As Slater and colleagues write, “XR technology is highly persuasive—that is the whole point and that is how it exerts its benefits (e.g. training for disaster response in a virtual setting is a form of persuasion)” (Slater et al. 2020, 2).

In fact, inducing emotional, cognitive, and, eventually, behavioral alterations is the primary aim of XR use in forensic settings, for instance by treating mental disorders (Ticknor 2019), by training empathy and altering the user’s perception of domestic violence (Seinfield 2018), and by improving impulse control in order to prevent aggression (Klein Tuente et al. 2020). Since such use of XR intends to change what the user thinks or feels, the question arises whether such forensic XR infringes a fundamental right to mental integrity, i.e., the freedom from certain kinds of interference with one’s mind (cf. Ligthart, Meynen, and Douglas 2021). In addition, apart from intended mental alterations, XR experiences may also involve psychological and emotional side effects (Cornet and Van Gelder 2020).

In view of advances in digital and neuroscientific technologies that make it possible to access, alter, and manipulate mental states, scholars have been debating the case for introducing novel fundamental rights over the mind, such as rights to cognitive liberty, mental integrity, and mental self-determination (Boire 2001; Sententia 2004; Bublitz and Merkel 2014; Ienca and Andorno 2017; Lavazza 2018; Bublitz 2020a). At the same time, others have argued that such rights, protecting against interference with the mind, are already enshrined in existing human rights law, mainly in the right to mental integrity and the right to freedom of thought (Michalowski 2020; Ligthart, Meynen, and Douglas 2021). Below, we briefly discuss both rights and consider their implications for forensic XR.

The right to freedom of thought is a generally accepted fundamental right, guaranteed inter alia by Article 9 ECHR, Article 10 of the Charter of Fundamental Rights of the European Union (ECFR), Article 18 ICCPR, and Article 18 of the Universal Declaration of Human Rights (UDHR). According to these provisions, everyone has the right to freedom of thought, conscience, and religion, including the freedom to change one’s religion or belief and the freedom to manifest one’s religion or belief. This right has an internal and an external dimension (the forum internum and forum externum). The freedom of (changing) thought, conscience, and religion is protected under the internal dimension. The external dimension covers the right to manifest one’s religion and belief. Whereas infringements of the external dimension can be lawful under certain circumstances, the internal dimension is absolute: it may never be restricted, and any infringement will imply a violation of the right.

As to deploying XR in forensic settings, which involves intentional emotional and cognitive alterations (perhaps aiming at changing offense-supportive cognitions; Hempel 2013), questions may arise under the internal dimension of freedom of thought, as it seeks, at its most basic level, to prevent state indoctrination of individuals. It guarantees that every person is free to have, develop, refine, and change their thought, conscience, and religion, and not to reveal them (Harris et al. 2018; Ligthart 2020; Murdoch 2012; Taylor 2005; Vermeulen and Roosmalen 2018). Admittedly, employing XR in treating and rehabilitating criminal offenders will normally not alter the offender’s religious or moral adherence, but such XR use might perhaps affect “thoughts” or behavioral dispositions.

Whereas much case law and scholarship exist on the right to freedom of religion, belief, and conscience, freedom of thought is still an under-elaborated notion (Bublitz 2020a; Ligthart 2020). Since no human rights court has undertaken to define “thoughts,” the precise scope of freedom of thought is still open for debate (Ligthart et al. 2020).

On the one hand, there are good reasons to argue that, at present, the notion of “thought” should be understood in a rather narrow sense, comprising essentially only those thoughts that have a major impact on one’s way of living, such as scientific, philosophical, and political thoughts (Ligthart 2020). On the other hand, a broader understanding of “thought” has also been advocated, in order to adequately protect people from technological advances that enable us to access and alter our brains and minds (Alegre 2017; Bublitz 2014; McCarthy-Jones 2019). For example, Bublitz suggests understanding the notion of thought as comprising any mental state that has content or meaning (Bublitz 2014). McCarthy-Jones, in turn, argues that core mental processes that enable mental autonomy, such as attentional and cognitive agency, should be placed at the center of freedom of thought. He further suggests expanding the domain of freedom of thought to cover external actions that are arguably constitutive of thought, such as reading, writing, and many forms of internet search behavior (McCarthy-Jones 2019).

Under these broader interpretations, the right to freedom of thought encompasses basically any thought, opinion, idea, and emotion. As Alegre puts it, “[t]he concept of ‘thought’ is potentially broad including things such as emotional states, political opinions, and trivial thought processes. My decision on what color socks to wear, how I feel about Monday mornings or my thoughts on capital punishment are all capable of coming within the scope of freedom of thought” (Alegre 2017, 224).

In this article, we do not take a position in the debate on how to understand the right to freedom of thought. What we do want to stress is that this fundamental right may have far-reaching implications for interventions in forensic settings that aim to alter the subject’s way of thinking, such as the use of forensic XR. After all, if one understands freedom of thought as covering basically any thought, idea, opinion, and emotion, and, as McCarthy-Jones suggests, as embracing external actions such as reading, writing, and internet search behavior, it will most probably apply to the forensic employment of XR systems as well, since such systems both change mental states and externalize them in the (partially) virtual environment. Because freedom of thought, as part of the forum internum, is an absolute right, any such interference with an offender’s mental states (without valid consent) would constitute a violation of the right.

The right to mental integrity is explicitly enshrined in Article 3(1) ECFR, which states that “[e]veryone has the right to respect for his or her physical and mental integrity.” In drafting this fundamental right, mental integrity was introduced alongside physical integrity to provide comprehensive protection of the person, especially against novel technologies (Bublitz 2020a). Together, the rights to mental and physical integrity protect the notion of “human integrity,”Footnote18 which is also the general objective of the Convention on Human Rights and Biomedicine (“Oviedo Convention,” see Article 1). A somewhat similar right, namely the right to psychological and moral integrity, has been recognized under the right to respect for private life pursuant to Article 8 ECHR.Footnote19 In addition, Article 17 of the Convention on the Rights of Persons with Disabilities provides that every person with disabilities has a right to respect for his or her physical and mental integrity on an equal basis with others.

As with the right to freedom of thought, the precise meaning and scope of the right to mental integrity remain unclear. Some authors understand this right merely as a right to mental health, while others suggest that it comprises a right to mental self-determination, that is, a right to control the content of one’s mental life (Ienca and Andorno 2017; Bublitz 2020a; Michalowski 2020; Marshall 2009). Whereas the former understanding may align best with how the right is applied in the present (scarce) case law, the latter may well evolve into the dominant legal interpretation in the near future. For example, as the Committee on Bioethics of the Council of Europe writes in its Strategic Action Plan on Human Rights and Technologies in Biomedicine (2020–2025):

“Technological developments in the field of biomedicine create new possibilities for intervention in individual behaviour. For instance, certain technologies raise the prospect of increased understanding, monitoring, and control of the human brain, while other developments allow for the permanent health monitoring of individuals. These developments raise novel questions relating to autonomy, privacy, and even freedom of thought. (…) In the light of these developments, the third pillar of the Strategic Action Plan addresses concerns for physical and mental integrity. Guaranteeing respect for a person’s integrity in the sphere of biomedicine is one of the central tenets of the Oviedo Convention. This is understood as the ability of individuals to exercise control over what happens to them with regard to, inter alia, their body, their mental state, and the related personal data.” [paragraphs 21–22]

Following this interpretation, the right to mental integrity, as enshrined in the Oviedo Convention and encompassed by the right to respect for private life pursuant to Article 8 ECHR, is not confined to issues of mental health in a narrow sense, but also guarantees individuals the ability to exercise control over what happens to their mental states, that is, a right to mental self-determination (cf. Michalowski 2020). On such an understanding, the right covers (almost) any interference by the State with the individual’s mind, at least any interference that changes or controls personal mental states and content.

Since the employment of XR in forensic settings typically aims both to monitor and to alter the offender’s mental states and behavior, for example by identifying and improving empathy, impulse control, and perceptions about domestic violence, forensic XR without valid consent will, under this interpretation, most probably infringe the right to mental integrity.

Unlike the right to freedom of thought, the right to mental integrity, as it currently stands, is not absolute: certain infringements may be lawful. Under Article 8 ECHR, for example, an infringement of mental integrity could be justified if it is necessary and proportionate to the legitimate aim of preventing crime; forensic psychiatric treatment is a case in point. Nonetheless, although the right to mental integrity may not as such prohibit XR use in forensic settings, it does impose certain legal restrictions that should be respected in this regard. Discussing the normative boundaries that follow from human rights is therefore essential from the very outset of introducing XR into forensic settings. As the Committee on Bioethics of the Council of Europe notes in its aforementioned strategic plan:

“The role of governance in biomedicine is often restricted to facilitating the applications of technology and to containing the risks that come to light. In this way, human rights considerations will only come into play at the end of the process, when the technological applications are already established, and the technological pathways often have become irreversible. To overcome this problem, there is a pressing need to embed human rights in technologies which have an application in the field of biomedicine. This implies that technological developments are from the outset oriented towards protecting human rights. For that reason, governance arrangements need to be considered, which seek to steer the innovation process in a way which connects innovation and technologies with social goals and values.” [paragraph 15]

Hence, from the perspective of human rights law, we should exercise caution in introducing novel technologies into the field of biomedicine and should orient these technologies toward the protection of human rights. As to the introduction of XR into forensic settings for risk assessment, treatment, and resocialization, such alignment with human rights should at least encompass fundamental rights over the mind, such as the rights to freedom of thought and mental integrity.

Of note, changing convicted offenders’ ways of thinking and behaving is not an exclusive feature of emerging technologies such as XR systems. In fact, criminal justice aims to alter how convicted offenders think and behave all the time. Think, for example, of mandatory participation in treatment programs for sexual offenders or drug addicts. In addition, imprisonment is often intended (at least in part) to stimulate self-reflection and to deter future offending, and it may involve unintended changes in brain and mental states as well (Umbach, Raine, and Leonard 2018; Meijers et al. 2018). Although these traditional interventions appear to induce mental changes, it is open to debate whether they should be considered as infringing fundamental rights over the mind (Bublitz 2020b; Douglas 2018).Footnote20 As argued elsewhere (Ligthart, Meynen, and Douglas 2021), anyone who contends that introducing novel mind-altering technologies such as XR into criminal justice infringes or even violates the subject’s right to mental integrity or to freedom of thought must either identify compelling distinctions between XR and existing criminal interventions that change how convicted offenders think and behave, or concede that many of the latter infringe or violate these rights as well. Identifying such distinctions is beyond the scope of the present paper but deserves further research. As this section illustrates, the human rights concerns raised by forensic XR are arguably not limited to privacy rights, but may extend to the rights to freedom of thought and mental integrity as well.

XR as a Moral Prosthesis: An Infinite Loop of Vulnerability, Dependency, Stigma, and the Problem of Authenticity

We have discussed above how an XR system, as a moral prosthesis, could have positive effects on a forensic patient’s or criminal offender’s moral capabilities, broadly conceived. However, apart from these potential benefits for such individuals (and for society), introducing moral prosthetic XR technology would create substantial ethical challenges, some of which are considered here.

First, the forensic patient is vulnerable with respect to the possible application of far-reaching interventions. Concerning her dignity, the patient is vulnerable to: (a) harmful or demeaning forms of psychological and/or physical treatment that pursue the (unrealistic) goal of getting her to sincerely regret her behavior; and (b) unrealistic prospects for resocialization and recidivism risk assessment. By employing unrealistic models of patients’ capacity to overcome their diminished moral capabilities, forensic psychiatry might fail in the continued evaluation and recidivism assessment of patients. For instance, studies show that personality traits associated with psychopathy remain remarkably stable over time, even across dynamic developmental phases such as the transition from adolescence to young adulthood (Andershed 2010; Salekin 2008).

Because of this vulnerability, the patient may come to depend on the supportive effect of the XR system to such a degree that this dependency adds another layer of vulnerability on top of her dispositional/pathogenic vulnerability (owing to the psychopathic personality and/or associated mental health problems) and her institutional vulnerability (being confined in a forensic institution). Furthermore, the specific design of XR systems for such scenarios could have stigmatizing effects: if identifiable as XR moral prostheses by outside observers, they would make the patient’s medical and psychosocial situation visible to others. Most patients would conceivably prefer their assistive technology to operate in the background, ambiently and unobtrusively. In the nascent field of closed-loop medical technology, research on the psychosocial effects of closed-loop devices, for example in patients with diabetes who use closed-loop systems for glycemic control, suggests that patients are indeed concerned with the operational design, appearance, and visibility of these devices (Farrington 2018; Muñoz-Velandia et al. 2019).

These interrelated potential negative effects of increased vulnerability, dependency, and stigma could be further compounded by the problem of the authenticity of the moral agency that a forensic patient (or a criminal offender) can exercise with the assistance of an XR system. If, for example, a forensic patient becomes particularly adept at displaying functionally moral behavior without ever experiencing the corresponding moral sentiments, she may be able to achieve a positive evaluation and a low recidivism risk score, and perhaps also be allowed to be released into the community. While the patient may over time learn to associate her behavior with the moral implications expected by others, it could be argued that this does not constitute real moral learning but merely a simulation of moral behavior. Probing this problem further invariably leads deeper into the contested territory of the philosophical nature of phenomenological consciousness and its relationship to moral agency and to the ascription of responsibility or culpability (Skalko and Cherry 2016). This illustrates that the specific ethical demands we place on using XR as an assistive technology for forensic treatment and corrective resocialization need to be connected to accounts that integrate philosophical, anthropological (Mattingly and Throop 2018), psychological (Greene 2017; Langdon and Mackenzie 2012), and neuroscientific (Garrigan, Adlam, and Langdon 2016; Greene and Paxton 2009) aspects of moral agency and decision-making.

ALIGNMENT WITH FUNDAMENTAL RIGHTS AND VALUES: TOWARD A USER-CENTERED APPROACH

Crime places a significant financial burden on society and often involves serious physical and mental harm. Hence, if the use of XR in the resocialization of forensic patients and, more broadly, of non-pathological convicted offenders were to contribute to reducing recidivism, then preventing harm and protecting society would already be forceful arguments for introducing XR into forensic settings. Interestingly, however, the legal and moral arguments in favor of forensic XR discussed in the “Two arguments in favor of forensic XR use” section lie not so much in protecting society against dangerous individuals, but rather in supporting the forensic patients themselves, enabling them to (re)gain and train those moral, mental, and behavioral capabilities that are essential for participating successfully in a free society. In this regard, XR can be very helpful, both as a treatment tool or moral prosthesis and, more practically, as a welcome tool for learning to adapt to the challenges of the outside world from inside the prison walls. Both types of application can facilitate resocialization, as human rights law demands.

At the same time, however, both law and ethics call for caution in introducing XR into forensic settings. Again, the arguments for this claim relate directly to the personal legal and moral interests of individual forensic patients. As discussed in the “Two arguments for caution regarding forensic XR” section, employing XR in forensic settings may increase the vulnerability and dependency of its users, stigmatize patients, and lead only to functional moral behavior, adversely affecting authenticity and moral agency. As we argued, these interrelated potential negative effects of forensic XR illustrate the need to connect the specific ethical demands that we place on using XR in forensic treatment and resocialization to accounts that integrate philosophical, anthropological, psychological, and neuroscientific aspects of moral agency and decision-making.

However, such a multidimensional, empirically grounded theoretical account of human moral agency is currently lacking. Therefore, the introduction of complex technologies such as XR, which can modulate behavior and moral agency and will very likely soon be coupled with powerful auxiliary technologies such as deep learning and sensor technology, may warrant a precautionary approach to technology governance and regulation (Vogel 2012): that is, an approach that favors a gradual and incremental model of user-centered responsible research and innovation (Demers-Payette, Lehoux, and Daudelin 2016) over a disruptive, technology-driven model. Yet medical technology is usually not developed with significant end-user input in the design phase; elsewhere, we have therefore argued that considering the ethical dimensions of such technology should include the lens of human-technology interaction, design thinking, and related fields, to ensure an ethically viable user-centered approach to medical technology (Kellmeyer, Biller-Andorno, and Meynen 2019).

Such a user-centered approach should take the users’ fundamental rights into account and embed them into the technology, especially since offering XR programs in forensic settings may violate the right to freedom of thought and infringe the right to mental integrity. As the Committee on Bioethics of the Council of Europe has stressed, technological developments in biomedicine must from the outset be oriented toward protecting human rights. Hence, whereas close collaboration between bioethics and law is already an effective step forward (Ligthart et al. 2019b), the technology itself should be brought into this effort as well, preferably from the design phase onward, while novel applications are being developed: in this case, while XR is being developed for introduction into the resocialization of forensic patients and convicted offenders.

CONCLUSION

The grounding of forensic assessment and intervention in scientific evidence and best practices is an obligation not only to society, particularly to past and potential future victims and their families, but also to forensic patients themselves. As the European Court of Human Rights has stressed, providing mentally ill detainees with a real opportunity for resocialization may require enabling them to undergo treatments or therapies (be they medical, psychological, or psychiatric) adapted to their situation, with a view to facilitating their social rehabilitation and reintegration into society. XR appears to be a promising tool in this regard. Focusing on the legal and moral interests of forensic patients, we identified two general arguments in favor of introducing XR into forensic settings. At the same time, we argued that offering XR in forensic treatment and resocialization should be approached with caution, since it could potentially infringe fundamental rights over the mind, increase users’ vulnerability and dependency, stigmatize them, and adversely affect their authenticity and moral agency. Preferably, the required caution should take the form of an ethically and legally viable user-centered approach to forensic XR: developing the technology toward its application in criminal justice while orienting it toward respecting moral concepts and fundamental rights.

Additional information

Funding

Notes

1 Sometimes also referred to as correctional or social rehabilitation.

2 Within the context of this article, we will not address the relationship between ethics and law more generally; we will focus on human rights, where a close connection exists between legal and ethical principles and values, as well as scholarly discussions about them.

4 Note that questions arise regarding data collection and privacy as well. Yet, since these issues have been much debated in the current literature, and employing XR in forensic settings does not raise distinctive intricacies in this regard, the present paper focuses on freedom of thought and mental integrity.

5 That is, punishment for the sake of punishment, solely by reference to one's past criminal offense.

6 Dickson v UK App no 44362/04 (ECHR, 4 December 2007), para. 75; Vinter and others v UK App nos. 66069/09, 130/10 and 3896/10 (ECHR, 9 July 2013), para. 115; Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 101. See also Harakchiev and Tolumov v Bulgaria App nos 15018/11 and 61199/12 (ECHR, 8 July 2014), para. 264.

7 Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 102.

8 Khoroshenko v Russia App no 41418/04 (ECHR, 30 June 2015), para. 121; Hutchinson v UK App no 57592/08 (ECHR, 17 January 2017), para. 43; Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 104.

9 Harakchiev and Tolumov v Bulgaria App nos 15018/11 and 61199/12 (ECHR, 8 July 2014), para. 264; Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 104.

10 Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 104.

11 Harakchiev and Tolumov v Bulgaria App nos 15018/11 and 61199/12 (ECHR, 8 July 2014), para. 264.

12 Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 109.

13 Although the European Prison Rules and the Mandela Rules are non-binding “soft law,” they do play an important role in the interpretation of binding norms of human rights law, such as those enshrined in the European Convention on Human Rights (ECHR) and the ICCPR. See e.g. Vinter and others v UK App nos 66069/09, 130/10 and 3896/10 (ECHR, 9 July 2013), paras 115–116; Harakchiev and Tolumov v Bulgaria App nos 15018/11 and 61199/12 (ECHR, 8 July 2014), para. 264. See also Abels 2012, pp. 33, 41.

14 Cf. Murray v The Netherlands App no 10511/10 (ECHR, 26 April 2016), para. 101; Vinter and others v UK App nos. 66069/09, 130/10 and 3896/10 (ECHR, 9 July 2013), para. 113.

15 Note that such a view need not conflict with a retributivist approach to criminal justice (Ryberg 2018).

16 As noted in the introduction, we will not discuss the (more general) issues on data collection and privacy.

17 Motion sickness, however, is a well-known impediment to VR use.

18 Explanations relating to the Charter of Fundamental Rights (2007/C 303/02).

19 Bédat v Switzerland App no 56925/08 (ECHR, 29 March 2016), para. 72; Nada v Switzerland App no 10593/08 (ECHR, 12 September 2012), para. 151.

20 Cf. the following cases of the ECHR where obligatory courses of sex education did not amount to indoctrination: Dojan and others v. Germany App no 319/08 (ECHR, 13 September 2011); Kjeldsen and others v. Denmark App nos 5095/71, 5920/72, 5926/72 (ECHR, 7 December 1976).

REFERENCES

- Abels, D. 2012. Prisoners of the international community. The legal position of persons detained at international criminal tribunals. The Hague: Asser Press.

- Alegre, S. 2017. Rethinking freedom of thought for the 21st century. European Human Rights Law Review (3):221–33.

- Andershed, H. 2010. Stability and change of psychopathic traits: What do we know? In Handbook of child and adolescent psychopathy, by R. T. Salekin and D. R. Lynam. New York: The Guilford Press.

- Andorno, R. 2018. The role of UNESCO in promoting universal human rights: From 1948 to 2005. In International biolaw and shared ethical principles. The universal declaration on bioethics and human rights, by C. Caporale and I. Pavone. Oxford: Routledge.

- Barak, A. 2015. Human dignity: The constitutional value and the constitutional right. Cambridge, UK: Cambridge University Press.

- Barfield, W., and M. J. Blitz. 2018. Research handbook on the law of virtual and augmented reality. Cheltenham: Edward Elgar.

- Beauchamp, T. L., and J. F. Childress. 2001. Principles of biomedical ethics. New York: Oxford University Press.

- Boire, R. G. 2001. On cognitive liberty. Journal of Cognitive Liberties 2 (1):7–22.

- Bublitz, J. C. 2014. Freedom of thought in the age of neuroscience. Archiv Für Rechts- Und Sozialphilosophie 100 (1):1–25.

- Bublitz, J. C. 2020a. The nascent right to psychological integrity and mental self-determination. In The Cambridge handbook of new human rights: Recognition, novelty, rhetoric, by A. Von Arnauld, K. Von der Decken, and M. Susi. Padstow: Cambridge University Press.

- Bublitz, J. C. 2020b. Why means matter: Legally relevant differences between direct and indirect interventions into other minds. In Neurointerventions and the law: Regulating human mental capacity, by N. A. Vincent, T. Nadelhoffer and A. McCay. New York: Oxford University Press.

- Bublitz, J. C., and R. Merkel. 2014. Crimes against minds: On mental manipulations, harms and a human right to mental self-determination. Criminal Law and Philosophy 8 (1):51–77. doi:https://doi.org/10.1007/s11572-012-9172-y.

- Chittaro, L., and N. Zangrando. 2010. The persuasive power of virtual reality: Effects of simulated human distress on attitudes towards fire safety. In Persuasive Technology. PERSUASIVE 2010, by T. Ploug, P. Hasle, and H. Oinas-Kukkonen. Berlin: Springer.

- Cipresso, P., I. A. C. Giglioli, M. A. Raya, and G. Riva. 2018. The past, present, and future of virtual and augmented reality research: A network and cluster analysis of the literature. Frontiers in Psychology 9:2086. doi:https://doi.org/10.3389/fpsyg.2018.02086.

- Cornet, L., and J.-L. Van Gelder. 2020. Virtual reality: A use case for criminal justice practice. Psychology, Crime & Law 26 (7):631–47. doi:https://doi.org/10.1080/1068316X.2019.1708357.

- Cruft, R., M. Liao, and M. Renzo. 2015. The philosophical foundations of human rights. New York: Oxford University Press.

- Demers-Payette, O., P. Lehoux, and G. Daudelin. 2016. Responsible research and innovation: A productive model for the future of medical innovation. Journal of Responsible Innovation 3 (3):188–208. doi:https://doi.org/10.1080/23299460.2016.1256659.

- Dolven, T., and E. Fidel. 2017. This prison is using VR to teach inmates how to live on the outside. Vice News, 27 December.

- Douglas, T. 2018. Neural and environmental modulation of motivation: What’s the moral difference? In Treatment for crime, by D. Birks and T. Douglas. New York: Oxford University Press.

- Douglas, T., P. Bonte, F. Focquaert, K. Devolder, and S. Sterckx. 2013. Coercion, incarceration, and chemical castration: An argument from autonomy. Journal of Bioethical Inquiry 10 (3):393–405. doi:https://doi.org/10.1007/s11673-013-9465-4.

- Farrington, C. 2018. Psychosocial impacts of hybrid closed-loop systems in the management of diabetes: A review. Diabetic Medicine 35 (4):436–49. doi:https://doi.org/10.1111/dme.13567.

- Fromberger, P., K. Jordan, and J. L. Müller. 2018. Virtual reality applications for diagnosis, risk assessment and therapy of child abusers. Behavioral Sciences & the Law 36 (2):235–44. doi:https://doi.org/10.1002/bsl.2332.

- Ganguli-Mitra, A., and N. Biller-Andorno. 2011. Vulnerability in healthcare and research ethics. In The SAGE Handbook of Health Care Ethics, by R. Chadwick, H. Ten Have and E. M. Meslin. Los Angeles, London: SAGE.

- Garrigan, B., A. L. R. Adlam, and P. E. Langdon. 2016. The neural correlates of moral decision-making: A systematic review and meta-analysis of moral evaluations and response decision judgements. Brain and Cognition 108:88–97. doi:https://doi.org/10.1016/j.bandc.2016.07.007.

- Greene, J. D. 2017. The Rat-a-Gorical imperative: Moral intuition and the limits of affective learning. Cognition 167:66–77. doi:https://doi.org/10.1016/j.cognition.2017.03.004.

- Greene, J. D., and J. M. Paxton. 2009. Patterns of neural activity associated with honest and dishonest moral decisions. Proceedings of the National Academy of Sciences of the United States of America 106 (30):12506–11. doi:https://doi.org/10.1073/pnas.0900152106.

- Hare, R. D., S. D. Hart, and T. J. Harpur. 1991. Psychopathy and the DSM-IV criteria for antisocial personality disorder. Journal of Abnormal Psychology 100 (3):391–8. doi:https://doi.org/10.1037/0021-843X.100.3.391.

- Harris, D. J., et al. 2018. Harris, O’Boyle, and Warbrick: Law of the European convention on human rights. New York: Oxford University Press.

- Hempel, I. S. 2013. Sexualized minds: Child sex offenders’ offense-supportive cognitions and interpretations (diss.). Rotterdam: Erasmus Universiteit Rotterdam.

- Ienca, M., and R. Andorno. 2017. Towards new human rights in the age of neuroscience and neurotechnology. Life Sciences, Society and Policy 13 (1):5. doi:https://doi.org/10.1186/s40504-017-0050-1.

- Kaplan, A. D, et al. 2020. The effects of virtual reality, augmented reality, and mixed reality as training enhancement methods: A meta-analysis. Human Factors

- Kellmeyer, P. 2018. Neurophilosophical and ethical aspects of virtual reality therapy in neurology and psychiatry. Cambridge Quarterly of Healthcare Ethics 27 (4):610–27. doi:https://doi.org/10.1017/S0963180118000129.

- Kellmeyer, P., N. Biller-Andorno, and G. Meynen. 2019. Ethical tensions of virtual reality treatment in vulnerable patients. Nature Medicine 25 (8):1185–8. doi:https://doi.org/10.1038/s41591-019-0543-y.

- Klein Tuente, S., S. Bogaerts, E. Bulten, M. Keulen-de Vos, M. Vos, H. Bokern, S. v IJzendoorn, C. N. W. Geraets, and W. Veling. 2020. Virtual reality aggression prevention therapy (VRAPT) versus waiting list control for forensic psychiatric inpatients: A multicenter randomized controlled trial. Journal of Clinical Medicine 9 (7):2258. doi:https://doi.org/10.3390/jcm9072258.

- Klein Tuente, S., S. Bogaerts, S. van IJzendoorn, and W. Veling. 2018. Effect of virtual reality aggression prevention training for forensic psychiatric patients (VRAPT): Study protocol of a multi-center RCT. BMC Psychiatry 18 (1):251. doi:https://doi.org/10.1186/s12888-018-1830-8.

- Langdon, R., and C. Mackenzie, eds. 2012. Emotions, imagination, and moral reasoning. Psychology Press.

- Latoschik, M. E., D. Roth, D. Gall, J. Achenbach, T. Waltemate, and M. Botsch. 2017. The effect of avatar realism in immersive social virtual realities. VRST '17: Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology, vol. 39, pp. 1–10. doi:https://doi.org/10.1145/3139131.3139156.

- Lavazza, A. 2018. Freedom of thought and mental integrity: The moral requirements for any neural prosthesis. Front Neurosci 12 (82):82. doi:https://doi.org/10.3389/fnins.2018.00082.

- Lemley, M. A., and E. Volokh. 2018. Law, virtual reality, and augmented reality. University of Pennsylvania Law Review 166:1051–138.

- Ligthart, S. 2020. Freedom of thought in Europe: Do advances in brain-reading technology call for revision? Journal of Law and the Biosciences.

- Ligthart, S., T. Kooijmans, T. Douglas, and G. Meynen. 2021. Closed-loop brain devices in offender rehabilitation: Autonomy, human rights, and accountability. Cambridge Quarterly of Healthcare Ethics.

- Ligthart, S., T. Douglas, C. Bublitz, T. Kooijmans, and G. Meynen. 2020. Forensic brain-reading and mental privacy in European Human Rights Law: Foundations and challenges. Neuroethics 30(4).

- Ligthart, S., G. Meynen, and T. Douglas. 2021. Persuasive technologies and the right to mental liberty: The “Smart Rehabilitation” of criminal offenders. In Cambridge Handbook of Life Science, Information Technology and Human Rights, by M. Ienca. Cambridge, UK: Cambridge University Press.

- Ligthart, S., L. van Oploo, J. Meijers, G. Meynen, and T. Kooijmans. 2019a. Prison and the brain: Neuropsychological research in light of the European Convention on Human Rights. New Journal of European Criminal Law 10 (3):287–300. doi:https://doi.org/10.1177/2032284419861816.

- Ligthart, S., T. Douglas, C. Bublitz, and G. Meynen. 2019b. The future of neuroethics and the relevance of the law. AJOB Neuroscience 10 (3):120–1. doi:https://doi.org/10.1080/21507740.2019.1632961.

- Mackenzie, C., and N. Stoljar. 2000. Relational autonomy: Feminist perspectives on autonomy, agency, and the social self. New York: Oxford University Press.

- Mackenzie, C., W. Rogers, and S. Dodds. 2013. Vulnerability: New essays in ethics and feminist philosophy. New York: Oxford University Press.

- Marloth, M., J. Chandler, and K. Vogeley. 2020. Psychiatric interventions in virtual reality: Why we need an ethical framework. Cambridge Quarterly of Healthcare Ethics 29 (4):574–84. doi:https://doi.org/10.1017/S0963180120000328.

- Marshall, J. 2009. Personal freedom through human rights law? Autonomy, identity and integrity under the European Convention on Human Rights. Leiden: Martinus Nijhoff.

- Mattingly, C., and J. Throop. 2018. The anthropology of ethics and morality. Annual Review of Anthropology 47 (1):475–92. doi:https://doi.org/10.1146/annurev-anthro-102317-050129.

- McCarthy-Jones, S. 2019. The autonomous mind: The right to freedom of thought in the twenty-first century. Frontiers in Artificial Intelligence 2:19. doi:https://doi.org/10.3389/frai.2019.00019.

- Meijer, S. 2017. Rehabilitation as a positive obligation. European Journal of Crime, Criminal Law and Criminal Justice 25 (2):145–62. doi:https://doi.org/10.1163/15718174-25022110.

- Meijers, J., J. M. Harte, G. Meynen, P. Cuijpers, and E. J. A. Scherder. 2018. Reduced self-control after 3 months of imprisonment: A pilot study. Frontiers in Psychology 9:69. doi:https://doi.org/10.3389/fpsyg.2018.00069.

- Melnick, K. 2018. Inmates use VR to prepare for life on the outside. VRscout 2.

- Michalowski, S. 2020. Critical reflections on the need for a right to mental self-determination. In The Cambridge handbook of new human rights: Recognition, novelty, rhetoric, by A. von Arnauld, K. von der Decken, and M. Susi. Padstow: Cambridge University Press.

- Murdoch, J. 2012. Protecting the right to freedom of thought, conscience and religion under the European Convention on Human Rights. Strasbourg: Council of Europe.

- Muñoz-Velandia, O., G. Guyatt, T. Devji, Y. Zhang, S.-A. Li, P. E. Alexander, D. Henao, A.-M. Gomez, and Á. Ruiz-Morales. 2019. Patient values and preferences regarding continuous subcutaneous insulin infusion and artificial pancreas in adults with type 1 diabetes: A systematic review of quantitative and qualitative data. Diabetes Technology & Therapeutics 21 (4):183–200. doi:https://doi.org/10.1089/dia.2018.0346.

- Nee, C., J.-L. van Gelder, M. Otte, Z. Vernham, and A. Meenaghan. 2019. Learning on the job: Studying expertise in residential burglars using virtual environments. Criminology 57 (3):481–511. doi:https://doi.org/10.1111/1745-9125.12210.

- Pugh, J. 2018. Coercion and the neurocorrective offer. In Treatment for crime: Philosophical essays on neurointerventions in criminal justice, by D. Birks and T. Douglas. New York, NY: Oxford University Press.

- Renaud, P., D. Trottier, J.-L. Rouleau, M. Goyette, C. Saumur, T. Boukhalfi, and S. Bouchard. 2014. Using immersive virtual reality and anatomically correct computer-generated characters in the forensic assessment of deviant sexual preferences. Virtual Reality 18 (1):37–47. doi:https://doi.org/10.1007/s10055-013-0235-8.

- Rizzo, A., S. T. Koenig, and T. B. Talbot. 2019. Clinical results using virtual reality. Journal of Technology in Human Services 37 (1):51–74. doi:https://doi.org/10.1080/15228835.2019.1604292.

- Ryberg, J. 2018. Neuroscientific treatment of criminals and penal theory. In Treatment for crime: Philosophical essays on neurointerventions in criminal justice, by D. Birks and T. Douglas. New York, NY: Oxford University Press.

- Ryberg, J. 2020. Neurointerventions, crime, and punishment: Ethical considerations. New York: Oxford University Press.

- Salekin, R. T. 2008. Psychopathy and recidivism from mid-adolescence to young adulthood: Cumulating legal problems and limiting life opportunities. Journal of Abnormal Psychology 117 (2):386–95. doi:https://doi.org/10.1037/0021-843X.117.2.386.

- Savulescu, J., and I. Persson. 2012. Moral enhancement, freedom and the god machine. The Monist 95 (3):399–421. doi:https://doi.org/10.5840/monist201295321.

- Seinfeld, S., et al. 2018. Offenders become the victim in virtual reality: Impact of changing perspective in domestic violence. Scientific Reports 8:2692.

- Sententia, W. 2004. Neuroethical considerations: Cognitive liberty and converging technologies for improving human cognition. Annals of the New York Academy of Sciences 1013 (1):221–8. doi:https://doi.org/10.1196/annals.1305.014.

- Skalko, J., and M. J. Cherry. 2016. Bioethics and moral agency: On autonomy and moral responsibility. Journal of Medicine and Philosophy 41 (5):435–43. doi:https://doi.org/10.1093/jmp/jhw022.

- Slater, M., C. Gonzalez-Liencres, P. Haggard, C. Vinkers, R. Gregory-Clarke, S. Jelley, Z. Watson, G. Breen, R. Schwarz, W. Steptoe, et al. 2020. The ethics of realism in virtual and augmented reality. Frontiers in Virtual Reality 1:1. doi:https://doi.org/10.3389/frvir.2020.00001.

- Szmukler, G., and P. S. Appelbaum. 2008. Treatment pressures, leverage, coercion, and compulsion in mental health care. Journal of Mental Health 17 (3):233–44. doi:https://doi.org/10.1080/09638230802052203.

- Taylor, P. M. 2005. Freedom of religion. New York, NY: Cambridge University Press.

- Ticknor, B. 2019. Virtual reality and correctional rehabilitation: A game changer. Criminal Justice and Behavior 46 (9):1319–36. doi:https://doi.org/10.1177/0093854819842588.

- Umbach, R., A. Raine, and N. L. Leonard. 2018. Cognitive decline as a result of incarceration and the effects of a CBT/MT intervention: A cluster-randomized controlled trial. Criminal Justice and Behavior 45 (1):31–55. doi:https://doi.org/10.1177/0093854817736345.

- Urheim, R., K. Rypdal, T. Palmstierna, and A. Mykletun. 2011. Patient autonomy versus risk management: A case study of change in a high security forensic psychiatric ward. International Journal of Forensic Mental Health 10 (1):41–51. doi:https://doi.org/10.1080/14999013.2010.550983.

- Vermeulen, B., M. van Roosmalen, et al. 2018. Freedom of thought, conscience and religion. In Theory and practice of the European Convention on Human Rights, by P. van Dijk. Cambridge: Intersentia.

- Vogel, D. 2012. The politics of precaution: Regulating health, safety, and environmental risks in Europe and the United States. Princeton, NJ: Princeton University Press.

- Wassom, B. 2015. Augmented reality law, privacy, and ethics: Law, society, and emerging AR technologies. Waltham: Syngress.