ABSTRACT

The purpose of most citizen science projects is to engage citizens in providing data to scientists, not to support citizen learning about science. Any citizen learning that does occur in most projects is normally a by-product of the project rather than a stated aim. This study examines the learning outcomes of citizens participating in online citizen science communities purposely designed for inquiry learning. The ‘Citizen inquiry’ approach adopted in this study prioritises and scaffolds learning, and engages people in all stages of the scientific process by involving them in inquiry-based learning while they participate in citizen science activities. The exploratory research involved an intervention with two design studies of adult volunteer participants engaging in citizen-led inquiries, employing a mixed-methods approach. The findings indicated that, in both design studies, participants engaged in an inquiry process and practised inquiry skills, alongside other kinds of knowledge and skills not directly related to science. Differences between the outcomes of the two studies highlighted the importance of design for learning impact. In particular, participants’ understanding of research processes and methods, and their development of more experimental studies, were more evident with the support of a dynamic representation of the inquiry process. Furthermore, in the second study, which was hosted in a more engaging and interactive learning environment, participants perceived science as fun, were involved in inquiry discussions, and showed progress in scientific vocabulary. The paper concludes with some considerations for science educators and citizen science facilitators to enhance the learning outcomes of citizen inquiry and similar online communities for science inquiries.

Introduction

Citizen Science (CS) usually refers to the voluntary participation of the general public in different phases of the scientific process, often data collection or analysis, in projects run by scientists (Bonney et al., 2009). Citizen science projects have been characterised as contributory, collaborative, or co-created, according to the level of collaboration between scientists and citizens. In contributory projects, participants mainly take part in the data collection and analysis phases; in collaborative projects, they also help to design the study and to interpret or disseminate the data; and in co-created projects, participants collaborate with scientists in all stages of the project, including defining the research question, discussing results and planning further work (Bonney et al., 2009). In collaborative and co-created projects, citizen participants may learn incidentally about the design and conduct of scientific investigations, but learning is not an explicit aim of these projects, and the projects may assume a high level of scientific knowledge.

Although some research has focused on measuring learning outcomes in CS projects, few CS projects have been designed primarily to improve citizens’ understanding of the scientific approach and their confidence around science and inquiry. For example, in contributory projects, participants are only engaged in data collection and analysis activities, without access to the big picture of the scientific approach. In response to this design challenge, citizen-led inquiry connects inquiry learning and CS as an innovative way of engaging the public in science learning (Herodotou et al., 2018). Inquiry learning plays a critical role in science inquiry, as it can transform CS participants into learners and enable them to pose thoughtful questions, make sense of information, and develop new understanding about a science topic and the world around them. Through inquiry learning, CS participants can develop the skills and attitudes needed to become self-directed, lifelong learners (National Library of New Zealand, n.d.). This paper reports the learning outcomes of participants in two online CS inquiry communities, designed specifically to support science inquiry in citizen-led investigations, and presents considerations for future CS projects.

Citizen science and science learning

Participation in CS has been viewed as an opportunity for learning: volunteers can gain a better understanding of science processes and methods, appreciate nature, and support local and global science initiatives (Bonney et al., 2016; Freitag et al., 2013; Herodotou et al., 2017). CS projects promote social inclusion by welcoming people of all ages and backgrounds, including those without access to formal education. Only a few projects have been evaluated in terms of science and learning outcomes (Geoghegan et al., 2016) or used directly in formal education settings (Kelemen-Finan et al., 2018), but with the rising popularity of CS, demand has increased for indicator-based evaluation frameworks, and several guides have been developed to evaluate the outputs and outcomes of CS projects. For instance, the Cornell Lab of Ornithology, a leading institution in CS activities, developed a framework for evaluating CS learning outcomes (Phillips et al., 2014) based on Friedman’s (2008) Informal Science Education framework. The guide includes measurements such as interest in science and the environment, self-efficacy, motivation, knowledge of the nature of science, skills of science inquiry, and behaviour and stewardship. The latest version extends the framework by adding data interpretation skills to the set of learning outcomes (Bonney et al., 2016).

Beyond the field-based CS projects that take place in the physical world, many CS projects require the use of web-based or mobile technology. These ‘online’ projects either develop their own technologies (e.g. websites, social networks and mobile applications) or they are hosted on CS platforms. For example, iNaturalist (https://www.inaturalist.org) and iSpot (https://www.ispotnature.org) are CS social network sites involving people in exploring and mapping biodiversity, while Zooniverse (https://www.zooniverse.org) is a CS crowdsourcing platform engaging people in projects on many topics across the sciences and humanities.

Evaluating science learning in online settings reveals outcomes not encountered in field-based programmes (Aristeidou & Herodotou, 2020). For example, collecting and analysing data through mobile and other devices encourages learning related to digital literacy (Jennett et al., 2016; Kloetzer et al., 2013, 2016). Further, the online collaboration that usually characterises online CS programmes promotes communication skills, such as English language and social skills, as well as writing skills and community learning (Jennett et al., 2016; Kloetzer et al., 2016). Consequently, the nature of online programmes requires additional learning evaluation methods, including user and community observation via learning metrics and observation protocols (e.g. Amsha et al., 2016).

Yet science learning in CS projects is often an incidental product of training aimed at achieving the project’s scientific goals, rather than of educational design intended to improve learning outcomes.

Citizen inquiry

Citizen inquiry addresses the challenge of whether to prioritise learning in citizen science projects by emphasising the active involvement of the public in initiating and implementing their own CS projects (Herodotou et al., 2017), engaging them in all stages of the scientific process of inquiry: conceiving a project, defining research objectives, selecting data collection and analysis methods, and implementing the research (Aristeidou, Scanlon & Sharples, 2017b; Herodotou et al., 2017). In citizen inquiry, members of the public define their own agenda of personally meaningful scientific investigations and, guided by models of scientific inquiry and by co-investigators, produce identifiable learning outcomes. Structured scaffolding, based on the inquiry-based learning approach, helps investigators manage their inquiries and encourages self-expression and reflection (Quintana et al., 2004). It has been suggested that, by involving inquiry-based learning in science inquiries, the general public are more likely to experience real science (de Jong, 2006) and develop thinking competences similar to those of scientists (Edelson et al., 1999). Citizen inquiry therefore starts from an explicit pedagogy, inquiry-based learning, combined with a science practice, CS. The intention is that this new pedagogy-based science practice may enable both good scientific outcomes (from mass engagement in data collection and/or analysis) and good learning outcomes, which include learning about the process of scientific investigation, learning about the topic under investigation and gaining digital literacy.

Aim and research question

To explore learning outcomes in CS communities specifically designed to support inquiry, two citizen science communities were designed using the citizen inquiry approach, then implemented and evaluated. This paper addresses the following questions:

(Q1) What do citizens learn when participating in online citizen science communities designed for learning?

(Q2) What are the implications of online citizen science for science education?

Materials and methods

Study design

Design-based mixed-methods approach

A design-based research (DBR) methodology (Cobb et al., 2003) was employed. The aim was to improve learning in online CS participation communities through interventions developed in collaboration with the participating citizens. Adopting DBR allowed ongoing revisions of the technology and engagement techniques according to their observed success, involved other researchers in the design, and identified aspects that may affect the situation, rather than manipulating specific variables (Collins et al., 2004). A mixed-methods approach was used to measure learning and engagement quantitatively and qualitatively, drawing on the strengths and balancing the weaknesses of both approaches (Symonds & Gorard, 2010).

The main motivations for participants to join the citizen inquiry communities were ‘interest in the topic’, followed by ‘friends’ who had already joined the community. Although ‘interest in the topic’ was a very popular motivation for both science expert and non-expert participants, ‘friends’ was stated as a motivation only by non-experts, who probably joined the community following their friends’ invitations and recommendations. Several members, mainly non-experts, were also attracted by the community or the technology used. A more thorough analysis of participant engagement indicated that extrinsic engagement factors, such as community features and the software, attracted and activated participants, while intrinsic factors, such as interest in the topic, were the main reasons for remaining in the community for a longer period. Furthermore, compared with science participation communities that require lower levels of citizen participation, participants of a citizen inquiry community seemed to show both loyal participation behaviour (long stays and high levels of activity) and lurking, non-active contribution (observing, following and liking other contributions).
Detailed results on participants’ motivation and engagement in the projects have been presented elsewhere (Aristeidou et al., 2017a, 2017b); this paper describes the learning outcomes and discusses implications for designers and citizen science researchers developing CS communities designed for learning. To address the research questions, we adopted the following methods of assessing learning in the two design studies: completion rates, observation, examination of the investigations for cognitive presence, self-reported learning, and assessment of vocabulary and sensor plots. The main features of the two design studies, named Rock Hunters and Weather-it, are shown in Table 1.

Table 1. Main aspects of Rock Hunters and Weather-it design studies.

Design study 1: Rock Hunters

The first design study, Rock Hunters, lasted three weeks and aimed to involve geology experts and non-experts as participants in creating and conducting their own investigations into rocks. Recruitment involved creating a blog and a Facebook page for the project, a leaflet shared online and in hard copy, and advertisements on web pages whose visitors were interested in education, geology or citizen science. The invitation message urged people who wanted to answer topic-specific, science-related questions in collaboration with scientists to join the study. Email invitations were also sent to expert geologists (via the university’s geological society and geology department), inviting them to join the community as participants rather than facilitators, although they were asked to provide help when needed. A sequence of emails to people who indicated interest in the study included further explanations about the study aims, instructions on how to join, important dates, and a consent form. An invitation to the online platform hosting the study was sent to the 24 participants who consented to join Rock Hunters. The lead researcher in Rock Hunters (the first author of this paper) had a ‘facilitator’ and ‘helper’ role rather than participating in the project.

The main focus of the Rock Hunters study was to understand how citizens learn through participation in online scientific investigations; thus, it explored the investigations created by participants, the collaboration between participants, and the experience participants gained from their participation. The hosting software was the nQuire v1 platform. nQuire was initially developed within the Personal Inquiry project to support inquiry-based activities for secondary school science learning (Sharples et al., 2014). It was then further developed to support inquiry and self-managed learning without teacher input. ‘nQuire’ in this paper refers to the first version of the platform.

Technological affordances in nQuire included a dynamic representation of the inquiry process (Scanlon et al., 2017) that enabled storing data in a structured format, discussions and dissemination of results. This dynamic representation guided Rock Hunters through the inquiry stages of their investigations, starting with a theme and research question and concluding with their findings. Participants could design the sequence of their research activities, revise their research and publish it at any stage in the ‘journal’, a space where other participants could view and comment on the published inquiries. Other technologies that supported Rock Hunters during their research were text and video tutorials on using the nQuire platform, informational text on the dynamic representation for better guidance, and integrated research tools such as Google Maps, a virtual microscope and spreadsheets. Furthermore, asynchronous forum threads and live chat for synchronous interaction with other participants, in discussion spaces alongside the published investigations, supported communication.

The rock inquiries created by the Rock Hunters had mainly location-based and colour-related questions: For example, what types of rocks are in their area and whether some rocks have specific colours inside or outside them. Then, the participants planned their methods, conducted their investigations, and posted their conclusions.

The Rock Hunters study highlighted issues related to the usability of the hosting platform and a lack of participant engagement and interaction (Aristeidou et al., 2014). These outcomes led to recommendations that informed the design of the second study, Weather-it, including investigating recruitment methods based on participants’ backgrounds and behaviour, improving platform usability, using gamification features to enhance participation, creating personal profiles and communication features for better collaboration, and creating collaborative investigations with an easier flow of discussion.

Design study 2: Weather-it

Weather-it ran for 14 weeks and aimed to explore the engagement and learning of citizens in communities conducting online scientific weather investigations. In contrast to Rock Hunters, recruitment was continuous. Initially, a group of ten people from around Europe who were interested in weather was recruited to form the community’s core before other participants arrived and to help recruit others. Then, an invitation to Weather-it was circulated to learning, CS and weather communities, social networks and mailing lists (e.g. the Royal Meteorological Society (RMetS) and the UK Weather Watch community). The advertising involved a printed and posted leaflet explaining the aim of establishing a Weather-it community, the hosting software features and the registration procedure. Moreover, a Weather-it Facebook page was created to promote community activities and attract participants. The 101 registered participants completed a consent form containing information about the study and the use of their data, and provided their email address and username so that they were identifiable and available for contact. The lead researcher in Weather-it, also the lead author of this paper, had a more active and interventionist role than in Rock Hunters, moderating and sustaining engagement in the community while also contributing as a participant.

The hosting software for Weather-it was the nQuire-it toolkit, which included the nQuire-it platform and the Sense-it app. nQuire-it was a new version of nQuire v1 whose main design objectives were to simplify the inquiry process, create social mechanisms and allow a number of ways of conducting research (Herodotou et al., 2018).

In Weather-it, people of all levels of weather expertise could start or participate in weather missions (investigations) in relation to everyday life weather questions, weather phenomena and climate change. The three available formats, based on the data collection method, were:

Sense-it missions: the data collection process was facilitated by the Sense-it app, which activates the existing sensors of Android mobile devices (e.g. light, humidity and pressure sensors) and allows participants to take measurements, store and visualise them, and upload them automatically to the nQuire-it platform. The app produces graphs of the measurements over time, showing the variation in readings.

Spot-it missions: the participants could capture images and spot things around them. The images were used for the data collection.

Win-it missions: a challenging research question was set by a participant, requiring text responses from other participants. The questions usually involved summarising current research and the answers had to be creative as they were rated by the other participants.

The main focus of this study was to investigate how to create active and sustainable online learning communities working on scientific investigations. Therefore, ongoing changes to the software focused on improving the engagement of participants and supporting learning through interaction (Aristeidou, Scanlon & Sharples, 2017). The main technological affordances developed prior to and during Weather-it, beyond the different types of inquiry and mobile technologies for sensor measurements, were a simplified and more attractive interface, investigation goals and processes open for all to view, collaborative research with contributions from other participants, mapping of collected data location, comments alongside the data collection items, downloading collected data in a spreadsheet, the use of forums for weather-related discussion and as learning centres, and viewing other people’s profiles (online users and the most recently active missions).

As engagement in the community and the study was one of the issues faced during Rock Hunters, Weather-it participants were further supported and encouraged to contribute in a number of ways. These included ‘get started’ steps, an ‘exemplar mission’ for newcomers, daily updates and announcements via the forum and the Facebook page, a weekly mailing list with new activities, email notifications of new responses, and communication with inactive participants. Awards and prizes were also given to the participants with the best performance: monthly prizes for the top contributor (most responses) and the best photographer (most liked picture), prizes for the most-voted Win-it responses, and final prizes for the top contributor and sharer. Identifying the prize winners was possible because participants consented to access to their activity data: the number of missions joined and/or created, comments and data added, forum posts, and the number of shares and likes given and received. These engagement techniques aimed to sustain the study by enhancing participant commitment to the online learning community.

An example of an inquiry created by a Weather-it participant is the mission ‘Air pressure and rainfall’, in which the creator encouraged his co-participants to investigate the question ‘Does it rain when the pressure is low?’ by using the Sense-it app with their mobile devices’ pressure sensors, or barometers, and adding information about the level of rainfall to each contribution. The inquiry recorded 16 contributions over five weeks. The participant concluded that, in most cases, the lower the pressure, the rainier the weather. However, 18 additional contributions were recorded later, and another participant’s analysis showed no clear relation between air pressure and rainfall.

Data collection and analysis methods

Investigations

The participants of both design studies were given the opportunity to conduct their own investigations. The data from the investigations were collected and analysed in order to provide information for the following:

Participation in investigations: In Rock Hunters, the number of inquiries created by participants was counted and their completion status checked. In Weather-it, the participation volume was calculated based on the number of registrations in the project, investigations, total memberships in investigations, created data, comments, forum posts and average members per investigation.

Examining the investigations: The investigations and the associated online discussions were manually evaluated for evidence of cognitive presence, built through personal understanding and shared dialogue. The evaluation involved detecting critical thinking via conversations defining a problem, exploration of different ideas, conversation about proposed solutions and their meaning, and ideas on how to apply the developed knowledge. These four cognitive phases for evaluating the nature and quality of critical discourse in online discussions were inspired by the theoretical framework of Garrison et al. (2001). Evidence was then grouped into categories according to the type of scientific or topic learning.

Sensor plots: In Weather-it, the plots produced by the sensor recordings were examined to confirm whether the uploaded measurements were valid (i.e. collected using the correct method, with readings within a plausible range).
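The range part of this validity check can be illustrated with a short Python sketch. It assumes each Sense-it recording is available as a list of numeric readings tagged with a sensor type, and it covers only the ‘readings within range’ criterion, not the data collection method. The sensor names, the `Recording` type and the numeric bounds are hypothetical choices for illustration, not values used in the study.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical plausible ranges per sensor type; these bounds are
# illustrative assumptions, not thresholds reported in the study.
PLAUSIBLE_RANGES = {
    "pressure_hpa": (870.0, 1085.0),  # roughly the recorded sea-level extremes
    "humidity_pct": (0.0, 100.0),     # relative humidity is a percentage
    "light_lux": (0.0, 130_000.0),    # up to roughly direct sunlight
}


@dataclass
class Recording:
    """A hypothetical uploaded sensor recording."""
    sensor: str
    values: list[float]


def out_of_range(rec: Recording) -> list[int]:
    """Return the indices of readings outside the sensor's plausible range."""
    low, high = PLAUSIBLE_RANGES[rec.sensor]
    return [i for i, v in enumerate(rec.values) if not (low <= v <= high)]


def is_valid(rec: Recording) -> bool:
    """A recording passes if it is non-empty and every reading is in range."""
    return bool(rec.values) and not out_of_range(rec)
```

For example, a pressure recording of `[1012.3, 1011.8, 1009.5]` passes, while a humidity recording of `[45.0, 120.0]` is flagged at index 1. Keeping the bounds in a single table makes it easy to adjust them per sensor as facilitators learn what real devices report.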

Vocabulary: In Rock Hunters, a geology-specialised vocabulary, provided by an expert geologist, was used to explore whether participants adopted field-specific language. The qualitative data analysis software ‘nVivo’ (Version 10) was used to compare the two vocabularies (geology-specialised and participant-generated). In Weather-it, the vocabulary was not compared with a weather-specialised glossary, as the results from Rock Hunters showed that Google Copy Paste Syndrome (GCPS) (Weber, 2007) reduces the credibility of such comparisons. Further, a word frequency query in nVivo was used in both Rock Hunters and Weather-it to identify the most frequently used words. Finally, the word frequency results were used in Weather-it to generate weekly word clouds (containing words used at least twice in a given week) to visualise the progress of participant-generated vocabulary.
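The weekly word-cloud filter can be sketched in Python as a stand-in for the nVivo word frequency query; the study used nVivo, not this code. The sketch assumes forum and mission text is available as (week, text) pairs. The tokeniser, the small stop-word list and the function name `weekly_cloud_terms` are illustrative assumptions, and nVivo’s own tokenisation and stop words will differ.

```python
import re
from collections import Counter, defaultdict

# A tiny illustrative stop-word list (assumption; nVivo uses its own list).
STOP_WORDS = {"the", "a", "an", "and", "of", "is", "it", "in", "to"}


def weekly_cloud_terms(posts, min_count=2):
    """Map each week to the words used at least `min_count` times that week.

    `posts` is an iterable of (week_number, text) pairs.
    """
    per_week = defaultdict(Counter)
    for week, text in posts:
        # Lower-case words, allowing internal hyphens (e.g. "weather-it").
        words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
        per_week[week].update(w for w in words if w not in STOP_WORDS)
    # Keep only words that meet the weekly threshold.
    return {
        week: {w: n for w, n in counts.items() if n >= min_count}
        for week, counts in sorted(per_week.items())
    }
```

For example, with the hypothetical posts `[(1, "Low pressure today and rain"), (1, "Pressure dropped, more rain"), (2, "Cumulus clouds and humidity")]`, week 1 keeps `pressure` and `rain` (each used twice), while week 2 keeps no words because nothing is repeated.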

Interviews

Semi-structured interviews (Bryman, 2012) were conducted with participants in both design studies. In Rock Hunters, an invitation was sent to all participants, and a face-to-face or online (Facebook, Google Hangouts) interview took place with those who accepted. In Weather-it, some participants had characteristics of particular interest to the research, so extreme/deviant case sampling (Teddlie & Tashakkori, 2009) was employed as a type of purposive sampling to select cases of interest. Eight interviewees were selected: one with outstanding participation, one lurker, two dropouts, two experts and two participants of both studies. The aim of the interviews was to understand reasons for dropping out and lurking, provide insight into participants’ experience with the investigations, and allow a comparison between the two design studies by participants who joined both. Seven of the eight invited Weather-it participants accepted the invitation; the member with lurking behaviour declined due to time constraints. The interviews were recorded and transcribed and, where not conducted in English, translated by the first author of this paper. The interviews in both studies were analysed through thematic analysis, using a hybrid approach of deductive and inductive coding and theme development (Braun & Clarke, 2008). Thus, the interview themes focused on the data suggested by the research questions of each design study, while themes emerging from the data and offering new information were also identified. This paper reports comments related to the ‘learning experience’ theme only.

Questionnaires

The participants in both design studies were invited by email to complete online questionnaires. The questionnaires included a question about participants’ learning experiences. In Rock Hunters, the question was open-ended and more exploratory, while in Weather-it it was a closed-ended question with some predetermined responses. The qualitative feedback from the online questionnaires was subjected to either thematic or content analysis: thematic analysis for open-ended questions, and content analysis for closed-ended questions that had an open field for participants to fill in when a predetermined response was inappropriate.

Ethics and Rigour

The Open University Human Research Ethics Committee (HREC) authorised this study. The names of participants used on the platforms were changed to RH1 to RH24 in design study 1 (RH as Rock Hunters) and names inspired by clouds and winds in design study 2 (e.g. Cumulus, Zephyros).

To assess the reliability of the interview coding, the list of codes produced by the first author was given to a qualitative researcher, who was instructed to reapply the codes to part of the data set (two interviews). The percentage agreement between the two coders was then calculated by dividing the number of agreed codings by the total number of code comparisons. At 75% for Rock Hunters and 80% for Weather-it, the inter-rater reliability exceeds the ‘rule of thumb’ threshold of 70% (Guest et al., 2011).
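The percentage agreement calculation can be written as a small Python function. It assumes both coders’ codes are aligned segment by segment, so that position i in each list refers to the same piece of interview data; the function name and the example codes are hypothetical.

```python
def percentage_agreement(coder_a, coder_b):
    """Share of code comparisons on which two coders agree, as a percentage.

    Each argument is the sequence of codes one coder assigned to the same
    ordered list of data segments.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must rate the same non-empty set of segments")
    # Count segments where both coders applied the same code.
    agreed = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * agreed / len(coder_a)


RULE_OF_THUMB = 70.0  # threshold cited from Guest et al. (2011)
```

With hypothetical figures, 16 agreements out of 20 comparisons give 16 / 20 = 80%, which is above the 70% rule of thumb.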

To authenticate questionnaire respondents, the invitations included a log-in code or identification number with which to complete the questionnaire; reminders were sent before the survey closed. Cross-checking of the generated data was further strengthened by the complementary nature of the methods used, for example when the outcomes of the investigation analysis corresponded to those of the questionnaire. In addition to using multiple methods, the design studies were conducted within two different scientific domains, each with its own culture (scientific language and methods).

Results

Rock Hunters

In Rock Hunters, of the 24 participants (six experts and 18 non-experts), 12 published their inquiry into rocks in the ‘journal’ space of the platform: seven with conclusions (complete) and five without conclusions (incomplete).

Inquiry completion and phases

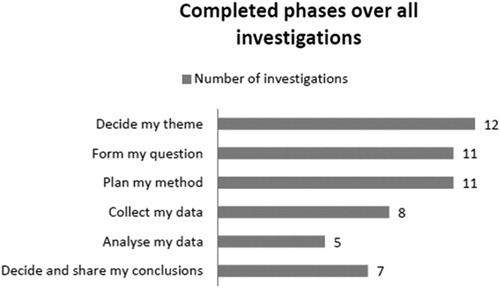

Figure 1 shows the number of published investigations (available in the ‘journal’) for which each phase was completed.

Data from the survey (n = 20) and interviews (n = 9) provided some insight into participation in Rock Hunters. Six respondents (30%) found it difficult to form a question for their investigation, and one (5%) needed help to revise their question. Rock Hunters referred to a variety of methods for researching their questions, including seeking geological resources; examining geological maps; observing, comparing and describing rock samples; and tabulating findings. Four participants conducted experimental studies, in which they formed hypotheses, tested samples or examined geological maps, and decided on their answer after comparing and analysing the data they had collected. One participant stated: ‘I got excited and carried away at that point. I thought I could find some more rocks and top them all on a graph’ (RH. 18, non-expert). In analysing the data, non-experts described names, shapes, dates and locations, while experts used more geology-specialised methods and tools, such as collating graphic logs and testing samples. One participant stated that the data analysis phase was their favourite, as ‘it makes you think in a more scientific and specialised way on the method you chose to follow […] It brings you closer to the tools if you are a beginner’ (RH. 16, non-expert). One interviewee’s explanation for not finishing their investigation was ‘lack of time’ (RH. 20, non-expert), which, in the interviews, appears to be the main reason given for many other incomplete tasks and activities. In addition, one interviewee gave an interesting explanation: being ‘afraid of the data analysis phase’ because ‘it might lead me to wrong conclusions’ or ‘will use the knowledge received by the experts without understanding the conclusion’ (RH. 21, non-expert).

Feedback from community members was given on three investigations, but the inquiry authors did not reply, so there was no follow-up discussion. One possible reason is that participants were not notified when somebody had viewed and given feedback on their published investigation. Some interactions between participants took place in the forum and in the chat. A total of 60 messages were posted in twelve forum topics, focusing mainly on nQuire use and rock identification. Although two owners of incomplete investigations had received feedback and found their answers through the forum, they did not add their analysis and conclusions to their investigations. Finally, 14 participants asked the lead researcher for help via email, Facebook or chat.

Vocabulary

The vocabulary analysis indicated that the use of scientific, geology-based vocabulary depended on the data collection sources, the length of the text, the type of investigation (e.g. literature, field-work, rock identification), the level of geology expertise, the background of the participant and the investigation’s completion status. An examination of the published investigations showed an apparent tendency among some participants conducting literature investigations to copy and paste digital material instead of using their own language. This ‘culture’ affected the quality of the investigations and the results of the discourse analysis, as the inflated counts of specialised terms did not reflect the individual’s own knowledge and vocabulary.

Self-reported learning

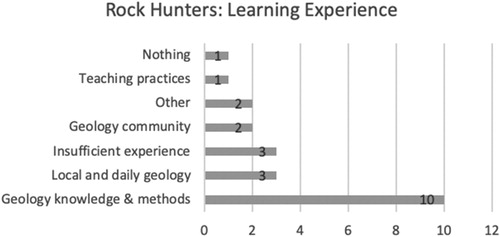

One of the open-ended survey questions asked about the learning experience Rock Hunters participants gained from taking part in this study. The figure above shows the main learning categories and the number of responses associated with each category. Some participants reported more than one learning gain.

The majority of responses reported geology knowledge and methods as the main learning experience, while four respondents said they gained nothing from their participation or felt that they did not have enough experience to proceed with an investigation. Other responses focused on local and everyday examples of geology, the different forms of rock transformation, the specific route for conducting an investigation, how to organise data, and where to look for resources:

I learned stuff about the methodology of research; that some rocks have isolated minerals inside them and they are called metal ores, about the types of rocks. I had no idea on what’s happening in earth’s depths! (RH. 21, non-expert)

I learned that there were useful tools on the web – the one with the rock ID. Also it made me follow a specific route to my investigations: start, hypothesis, plan, collect, etc. (RH. 11, non-expert)

Weather-it

Thirteen missions were created within the Weather-it project, but participants contributed to a total of 24 missions, as eleven more missions (e.g. noise maps), created by non-participants, were available on the nQuire-it platform. Of the 13 Weather-it missions, seven were created by participants other than the moderators (the first and second authors of this paper). provides an overview of participation in Weather-it, including contributions to Weather-it and other missions hosted on the nQuire-it platform.

Weather-it members joined between 1 and 17 missions each (mean = 3.2, SD = 3.5), a wide spread ranging from 19 members who joined a single mission to one who joined 17.

Evidence of learning in online discussions

Examining the investigations for cognitive presence provided evidence of what participants in Weather-it learned. Content knowledge developed as participants explored different ideas and discussed proposed solutions and their meaning. However, the main cognitive phase detected in discussions around the investigations was gaining and applying knowledge and skills, as evident in data annotation and identification. Problem definition conversations were very rare, as few participants started their own investigation.

Weather knowledge (content knowledge): Content learning in Weather-it depended on participants' existing weather knowledge. Some participants learned basic weather content, while more advanced participants furthered their existing knowledge. For instance, member Cumulus (non-expert) was trying to figure out why deserts are not found at the equator, where it might be expected to be hottest. She ventured a guess but chose to post it as a mission comment rather than compete with the other posted ideas: ‘Because earth rotates so that equator is not vertical to the sun but diagonal?’ (Cumulus, non-expert, basic knowledge). Although her comment was incorrect and received no response, Cumulus remained interested and continued to discuss the other proposed answers and their meaning.

Fremantle (non-expert) was an example of a participant interested in furthering existing knowledge. He showed interest in a single mission, ‘Why are there two tides?’, and his participation centred on it. Although his comments demonstrated prior understanding of the role of centrifugal force, he responded with further questions, evaluated the posted ideas and called for further inquiry.

Annotating weather data: Several participants in Weather-it attempted to annotate their data to describe the conditions under which it was collected. Data annotation is an important part of communication among scientists, especially in collaborative research (Bose et al., 2006). The nQuire-it platform does not provide an automatic system for data annotation, so participants had to annotate manually, following the mission instructions and other participants' example descriptions. For instance, in ‘Snowflake Spotting’, a mission that aimed to collect pictures of snowflakes and explore how air temperature affects their shape and size, Ostria, an expert in weather but not in snowflakes, initially uploaded her items without comments. Then, following Borea's (expert) example and applying her recently developed knowledge, she started adding the date, time, temperature and a description to her recorded snowflake observations.

Identification methods: Some investigations (mainly the Spot-it ones) required participants to identify and further classify the inquiry items. Participants had to follow an identification guide and/or do their own research, applying their developed knowledge. For example, Boreas (non-expert) commented with a guess about the type of cloud in a picture: ‘I would say that one big grey cloud is a cumulonimbus’. His identification seems to follow inductive reasoning, as he had recognised that big grey clouds are usually cumulonimbus.

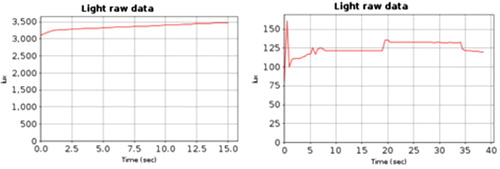

Sensor plots

Evaluating Sense-it participation in the ‘Record the sunlight’ mission (146 contributions by 16 participants) showed that the majority of sunlight measurements (95%) were valid, with the right time (midday), duration, label and data collection method (non-wavy plots). Eight invalid measurements (5%) were identified and removed from the data set. Figure 4 shows examples of a valid and an invalid sensor plot. The low light levels in the invalid plot (right) indicate that the participant probably took the reading indoors rather than directly under the sun, as instructed.

Figure 4. Sense-it ‘Record the sunlight’ mission: valid sensor plot (left) and invalid sensor plot (right).

Despite these exceptions, the valid measurements were recorded at the right time of day, had reasonably steady plots and fell within the expected range of outdoor light levels. This finding suggests that participants in ‘Record the sunlight’ mastered a data collection skill that allowed them to collectively map sunlight levels around Europe for a month.
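The validity checks described above (midday timing, duration, a label, a steady plot and plausibly outdoor light levels) can be expressed as a simple filter. The Python sketch below is a hypothetical illustration, not the procedure used in the study: the `SunlightReading` structure, the 11:00 to 13:00 midday window, the 5-second minimum duration, the 1000 lux outdoor threshold and the 0.2 coefficient-of-variation cut-off for a ‘wavy’ plot are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import time
from statistics import mean, pstdev

# All thresholds are hypothetical illustrations, not values reported in the study.
MIDDAY_START = time(11, 0)
MIDDAY_END = time(13, 0)
MIN_DURATION_S = 5.0
MIN_OUTDOOR_LUX = 1000.0  # lower average levels suggest the reading was taken indoors
MAX_VARIATION = 0.2       # coefficient of variation above which a plot counts as "wavy"


@dataclass
class SunlightReading:
    label: str                # participant-supplied label (e.g. location)
    start: time               # time of day the recording started
    duration_s: float         # length of the recording in seconds
    lux_samples: list[float]  # light-sensor samples from the smartphone


def is_valid(r: SunlightReading) -> bool:
    """Apply the label, timing, duration, light-level and steadiness checks."""
    if not r.label.strip():
        return False
    if not (MIDDAY_START <= r.start <= MIDDAY_END):
        return False
    if r.duration_s < MIN_DURATION_S or not r.lux_samples:
        return False
    avg = mean(r.lux_samples)
    if avg < MIN_OUTDOOR_LUX:
        return False
    # A steady plot has little spread relative to its mean level.
    return pstdev(r.lux_samples) / avg <= MAX_VARIATION


def split_valid(
    readings: list[SunlightReading],
) -> tuple[list[SunlightReading], list[SunlightReading]]:
    """Partition readings into (valid, invalid) lists, preserving order."""
    valid = [r for r in readings if is_valid(r)]
    invalid = [r for r in readings if not is_valid(r)]
    return valid, invalid
```

Keeping each criterion as a separate, named check makes the rules transparent, which may help when explaining to a participant why a reading was removed. As a worked figure, 8 invalid readings out of 146 gives 8/146 ≈ 5.5%, which the text reports as 5%.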

Community vocabulary progress

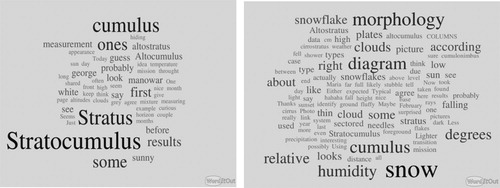

The figure above shows the vocabulary of the Weather-it community in the first (left) and last (right) week. The darker and larger a word, the more important it was during that period. The dominant words in the first week were types of cloud, such as ‘stratocumulus’, while in the last week words such as ‘degrees’, ‘morphology’, ‘diagram’ and ‘humidity’ were among the largest, giving the community vocabulary a more scientific character. The gradual arrival of participants with a more scientific interest in weather may also explain the increase in scientific, weather-specific words.

The difference in participation levels between the two weeks (and thus in the number of words) is also apparent in the figure, as only words recorded at least twice in a week were included in each cloud.
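The inclusion rule for the word clouds (only words recorded at least twice in a week) can be illustrated with a short frequency count. The Python sketch below is a hypothetical illustration, not the tool the authors used: the tokenisation (lower-case alphabetic runs), the `STOP_WORDS` list and the function name `weekly_cloud_terms` are assumptions invented for the example.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the study does not report which words were excluded.
STOP_WORDS = {"the", "a", "an", "and", "is", "of", "in", "it", "to", "i"}
MIN_WEEKLY_COUNT = 2  # "only words recorded at least twice in a week"


def weekly_cloud_terms(posts: list[str]) -> Counter:
    """Count words across one week's posts and keep those used at least twice."""
    # Lower-case and keep alphabetic runs only, so numbers such as "80%" are dropped.
    words = re.findall(r"[a-z]+", " ".join(posts).lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return Counter({w: n for w, n in counts.items() if n >= MIN_WEEKLY_COUNT})
```

The resulting counts could then set each word's size and shade in the cloud. Because the threshold is applied per week, a quieter week with fewer posts yields fewer qualifying words, which is one way differences in participation become visible in the clouds.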

Self-reported learning

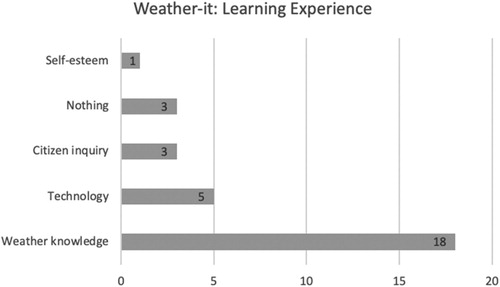

Questionnaire respondents (n = 28) chose one or more answers from a list, with options borrowed from the Rock Hunters study, about what, if anything, they had learned that was new or interesting during their participation in Weather-it (see the figure above). The majority of responses (18) focused on knowledge related to the domain of the project and the mission topics. Two of these 18 responses also mentioned aspects related to ‘technology’, and three further respondents stated ‘technology’ as their only learning experience. Three participants stated that they had learned nothing, nothing specific or nothing yet. Finally, three participants identified the citizen inquiry approach in Weather-it as their learning experience.

Over half of the respondents stated ‘weather knowledge’ as an outcome of their participation in the Weather-it community. These responses mainly concerned clouds (cloud formations, names and identification): ‘I have learned that clouds can give us weather predictions!’ (Tramontana, non-expert). Several respondents described learning about more than one topic because they had joined several missions: ‘That there are mixed types of clouds and some more interesting information from the forum about waves and severe weather’ (Levanto, non-expert); ‘I learned about clouds and atmosphere on Earth and Mars’ (Sundowner, non-expert).

Five participants mentioned technology, mainly to express surprise at its potential in scientific research: ‘I’ve learned about the sense information monitored in a smartphone’ (Fremantle, non-expert). Other participants focused on the big picture and the philosophy behind Weather-it. Three respondents, all experts, reflected on citizen-led citizen science, its existence and its potential: ‘People who have no meteorology degree can take initiatives too:) and maybe, in the future, they will have some good ideas about questions or solutions’ (Euros, expert). One participant reflected on her fear of participating in the community: ‘I was concerned that I wouldn't make a valuable contribution!’ (Sumatra, non-expert).

Finally, a member who also participated in Rock Hunters provided a comparison of the learning experience in the two communities:

It would be nice to have a variety of types of Missions - some that need to be planned more like the Rock Hunter and some that can be done from inside. This may then encourage people to extend themselves and do ‘deeper’ research like the R[ock] H[unters] one. I liked the RH one as it gave you headings, tests etc. to choose and so guided you. However, the Weather-it was very enjoyable as it made me think and find answers to things I might not have known. (Brisa, non-expert)

Discussion

What do citizens learn when participating in online science communities designed for learning?

As a first general remark, participation in the two online communities led to some common and some different learning outcomes, which underlines the importance of embedded scaffolding and communication mechanisms in supporting learning.

Participants in both communities engaged in micro-learning activities (Kloetzer et al., 2013) related to the inquiry process: forming a research question and creating an investigation, deciding on research methods and tools, collecting and analysing data, drawing conclusions, and discussing and reflecting on those conclusions. Creating an investigation involved participants in developing a research question and/or hypothesis and articulating their thoughts about a problem encountered in their everyday life. As a result, they ‘translated’ their questions into research projects (Bonney et al., 2009, 2016). Some non-experts in both communities, however, found it difficult to form a research question or lacked the confidence to create an investigation. Finally, Weather-it participants stated that they had gained knowledge and skills not directly related to the science topic, such as digital literacy (e.g. monitoring via smartphones), communication and writing skills (e.g. building an argument), and self-reflection (e.g. on whether they were making valuable contributions).

Because investigations in these communities were citizen-led and their content was not known in advance, it was not possible to develop a context-specific instrument for evaluating scientific literacy (e.g. a pre/post-test of knowledge items). The evaluation of scientific literacy in this study was therefore based on participants' self-reported changes in knowledge and on observation of cognitive presence. Measuring attitudes in online communities that are monitored for only a short time was also a challenge.

Comparing the two communities, a much larger percentage of participants created an inquiry in Rock Hunters than in Weather-it (50% against 7%). A participant in both communities suggested that the dynamic representation of the inquiry process in Rock Hunters was useful for ‘deeper’ research. This representation may also explain why Rock Hunters participants reported gaining knowledge about how to approach an investigation, while Weather-it participants made no reference to research methods and processes. On the other hand, in contrast to surveys of public attitudes to science in which the public perceives science and scientists as serious (Ipsos MORI, 2014), Weather-it participants considered the community a fun way to spend their free time while engaging with science. This ‘imbalance’ between gains in scientific literacy and fun has also been noticed in other citizen science projects, where participants were more interested in learning about birds than about scientific processes (Brossard et al., 2005; Cronje et al., 2010). Regarding the discussions, there is evidence that the scientific vocabulary on Weather-it progressed between the first and last week of the project, while in Rock Hunters the detection of Google Copy Paste Syndrome (Weber, 2007) indicated the use of language that did not represent participants' personal knowledge. Furthermore, Weather-it participants seemed more confident in interacting with scientists and other non-experts, imitating, opposing and acknowledging their techniques and knowledge.

The different designs of the two communities may have affected the level of participation and the type of learning gains. The greater guidance provided by the scaffolding system for each phase of inquiry in Rock Hunters led to the creation of more inquiries, references to learning about research methods and processes, and the development of experimental studies. Weather-it participants, by contrast, found participation more fun, engaged in inquiry discussions with more confidence, and gradually developed a more scientific vocabulary. The comparison of these two spaces highlights the extent to which design affects learning outcomes.

What are the implications for science education of online citizen science?

The following design considerations, drawn from the failures and successes of both online communities, aim to improve the design of CS and similar online communities and to facilitate the adoption of good science and learning practices.

Design explicit inquiry activities as part of a complete scientific process: engaging participants in several phases, or in the entire scientific process, requires preparing instructions on the aim, activities, tools and research methods for each phase. Furthermore, information about the entire scientific process, and where each inquiry phase lies within it, may facilitate scientific literacy to a greater extent.

Aim to balance fun and gains in scientific literacy: the community design should deliver a pleasant and fun environment that sustains engagement, while also promoting scientific literacy. This requires doing ‘good’ citizen-led citizen science by ensuring the correct use of scientific tools (e.g. calibration of sensors) and supporting the analysis of findings.

Collaborate with experts to make on-topic culture and learning available: many participants join projects to learn more about the science topic. Providing access to the culture of the science topic would sustain their interest and increase inquiry outcomes, as suggested by the interviewed experts. This involves knowledge of content and research methods, and access to the vocabulary and field tools of the science topic. Science experts are the appropriate people to convey this culture, by participating in the design of inquiries and tools. Additionally, tools that support online, topic-related glossaries could extend the scientific culture further. The development of scientific language, and of an understanding of how to carry out citizen science, could also be reinforced by peer facilitation. For example, in school settings, teachers act as facilitators and moderators in CS. Collaboration with universities and university students could bridge the gap between school students and science, and facilitate knowledge exchange between ‘experts’ and ‘amateurs’.

Scaling and sustaining online communities: since citizen inquiry depends on interaction and knowledge exchange between participants, a key issue is how to grow and maintain a community of citizen-led investigations while preserving its effectiveness for science learning. This could be facilitated in two complementary ways: automation and individualisation (Clarke et al., 2006). For instance, a better scaffolding system could support, guide and inform participants at every step of the inquiry process (automation), and a recommendation system could deliver to-do lists to participants according to their interests (individualisation). A later version of nQuire (v2) has been developed to support large-scale interactive investigations in the physical and social sciences (see Sharples et al., 2019). Current work with nQuire v2 attempts to scale up participation while supporting good science practices by embedding built-in consent forms and an approvals process for developing and launching missions.
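The paper does not specify how the proposed recommendation system would match missions to interests. As one hypothetical illustration of individualisation, the Python sketch below ranks missions a participant has not yet joined by the Jaccard overlap between the participant's interest tags and each mission's tags, and returns a short to-do list. The `Mission` and `Participant` types, the tag vocabulary and the scoring rule are assumptions made for the example, not features of nQuire.

```python
from dataclasses import dataclass, field


@dataclass
class Mission:
    title: str
    tags: set[str]  # hypothetical topic tags attached to a mission


@dataclass
class Participant:
    name: str
    interests: set[str]                            # hypothetical interest tags
    joined: set[str] = field(default_factory=set)  # titles of missions already joined


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two tag sets, from 0 (disjoint) to 1 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def to_do_list(p: Participant, missions: list[Mission], k: int = 3) -> list[str]:
    """Suggest up to k unjoined missions whose tags best match p's interests."""
    candidates = [m for m in missions if m.title not in p.joined]
    scored = [(jaccard(p.interests, m.tags), m.title) for m in candidates]
    # Highest score first, ties broken alphabetically by title.
    scored.sort(key=lambda s: (-s[0], s[1]))
    # Drop missions with no tag overlap at all, then keep the top k.
    return [title for score, title in scored if score > 0][:k]
```

Jaccard similarity is used here only because it is simple and easy to explain to participants; a deployed system might instead weight tags, learn from participation history, or combine both.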

Limitations

Many of the investigations that participants in our studies initiated and maintained cannot be considered ‘genuine’ research that contributes to scientific knowledge; rather, they were intended to get people interested in practical science. The lessons we learned when trying to combine inquiry learning with the advancement of scientific knowledge, together with the design considerations above, have contributed to the creation of the nQuire v2 platform. This latest version can host large-scale CS projects with publishable scientific outcomes while offering both communities and individuals a platform to design citizen inquiry missions.

Conclusions

This study investigated what people learn in online citizen science communities designed for inquiry. Components of inquiry-based learning and citizen science were synthesised, and a design-based approach was used to design and explore two different citizen science communities for inquiry. The use of inquiry-based learning in citizen-led investigations and the development of scaffolding mechanisms for participation were based on the notion that involvement in all aspects of the scientific process increases learning outcomes (Bonney et al., 2009).

Findings showed that the two communities engaged participants in science learning through the entire inquiry process, from forming a research question based on their everyday experience of science to drawing conclusions. Participants gained content knowledge and practised science skills, such as observation and identification, data collection and annotation, and developed transferable skills, such as digital literacy, writing and self-efficacy. The different levels of guidance in the two studies affected the level of participation and the type of learning.

Reflection on the findings has led to some design suggestions for enhancing good science and learning practices by improving the design of citizen science and similar communities. These include facilitating an understanding of inquiry activities as part of a complete scientific process, balancing project enjoyment with gains in scientific literacy, promoting science culture by motivating science experts and employing glossaries, and scaling up the community and the interaction between participants.

These guidelines could be useful to science education researchers, to practitioners who might use student-led citizen science for their classes, and to citizen science project designers who prioritise participant learning gains or want to balance scientific and learning outcomes within a project.

Acknowledgements

The authors would like to thank The OpenScience Laboratory and the Nominet Trust (DUCY1343737172) for funding the software development, Dr Christothea Herodotou and Dr Eloy Villasclaras-Fernández for their contributions to the project, and all the Rock Hunters and Weather-it participants for taking part in this study.

Disclosure statement

No potential conflict of interest was reported by the authors.

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

References

- Amsha, A. O., Schneider, D., Fernandez-Marquez, J. L., Da Costa, J., Fuchs, B., & Kloetzer, L. (2016). Data analytics in citizen cyberscience: Evaluating participant learning and engagement with analytics. Human Computation, 3(1), 69–97. https://doi.org/10.15346/hc.v3i1.5

- Aristeidou, M., & Herodotou, C. (2020). Online citizen science: A systematic review of effects on learning and scientific literacy. Citizen Science: Theory & Practice, 5(1), 11. https://doi.org/10.5334/cstp.224

- Aristeidou, M., Scanlon, E., & Sharples, M. (2014). Inquiring rock hunters. In C. Rensing, S. de Freitas, T. Ley, & P. J. Muñoz-Merino (Eds.), Open learning and teaching in educational communities, proceedings of the 9th European conference on technology enhanced learning, EC-TEL 2014 (pp. 546–547). Springer International Publishing.

- Aristeidou, M., Scanlon, E., & Sharples, M. (2017a). Profiles of engagement in online communities of citizen science participation. Computers in Human Behavior, 74, 246–256. https://doi.org/10.1016/j.chb.2017.04.044

- Aristeidou, M., Scanlon, E., & Sharples, M. (2017b). Design processes of a citizen inquiry community. In C. Herodotou, M. Sharples, & E. Scanlon (Eds.), Citizen inquiry: Synthesising science and inquiry learning (pp. 210–229). Routledge.

- Bonney, R., Cooper, C. B., Dickinson, J., Kelling, S., Phillips, T., Rosenberg, K. V., & Shirk, J. (2009). Citizen science: A developing tool for expanding science knowledge and scientific literacy. BioScience, 59(11), 977–984. https://doi.org/10.1525/bio.2009.59.11.9

- Bonney, R., Phillips, T. B., Ballard, H. L., & Enck, J. W. (2016). Can citizen science enhance public understanding of science? Public Understanding of Science, 25(1), 2–16. https://doi.org/10.1177/0963662515607406

- Bose, R., Buneman, P., & Ecklund, D. (2006). Annotating scientific data: Why it is important and why it is difficult. In Proceedings of the 2006 UK e-Science All Hands Meeting, Nottingham, UK, 18–21 September (pp. 739–747). National e-Science Centre.

- Braun, V., & Clarke, V. (2008). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Brossard, D., Lewenstein, B., & Bonney, R. (2005). Scientific knowledge and attitude change: The impact of a citizen science project. International Journal of Science Education, 27(9), 1099–1121. https://doi.org/10.1080/09500690500069483

- Bryman, A. (2012). Integrating quantitative and qualitative research: How is it done? Qualitative Research, 6(1), 97–113. https://doi.org/10.1177/1468794106058877

- Clarke, J., Dede, C., Ketelhut, D., & Nelson, J. (2006). A design-based research strategy to promote scalability for educational innovations. Educational Technology, 46(3), 27–36. http://www.jstor.org/stable/44429300

- Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9–13. https://doi.org/10.3102/0013189X032001009

- Collins, A., Joseph, D., & Bielaczyc, K. (2004). Design research: Theoretical and methodological issues. Journal of the Learning Sciences, 13(1), 15–42. https://doi.org/10.1207/s15327809jls1301_2

- Cronje, R., Rohlinger, S., Crall, A., & Newman, G. (2010). Does participation in citizen science improve scientific literacy? A study to compare assessment methods. Applied Environmental Education and Communication, 10(3), 135–145. https://doi.org/10.1080/1533015X.2011.603611

- de Jong, T. (2006). Computer simulations – technological advances in inquiry learning. Science, 312(5773), 532–533. https://doi.org/10.1126/science.1127750

- Edelson, D., Gordin, D., & Pea, R. (1999). Addressing the challenges of inquiry-based learning through technology and curriculum design. Journal of the Learning Sciences, 8(3–4), 391–450. https://doi.org/10.1207/s15327809jls0803&4_3

- Freitag, A., Pfeffer, M. J., & Nardini, C. (2013). Process, not product: Investigating recommendations for improving citizen science ‘success’. PLoS ONE, 8(5), 1–5. https://doi.org/10.1371/journal.pone.0064079

- Friedman, A. J. (2008). Framework for evaluating impacts of informal science education projects: Report from a National Science Foundation workshop. http://www.informalscience.org/sites/default/files/Eval_Framework

- Garrison, D. R., Anderson, T., & Archer, W. (2001). Critical thinking, cognitive presence, and computer conferencing in distance education. American Journal of Distance Education, 15(1), 7–23. https://doi.org/10.1080/08923640109527071

- Geoghegan, H., Dyke, A., Pateman, R., West, S., & Everett, G. (2016). Understanding motivations for citizen science. Final report. http://www.ukeof.org.uk/resources/citizen-scienceresources/MotivationsforCSREPORTFINALMay2016.pdf

- Guest, G., MacQueen, K., & Namey, E. (2011). Applied thematic analysis. Sage.

- Herodotou, C., Aristeidou, M., Sharples, M., & Scanlon, E. (2018). Designing citizen science tools for learning: Lessons learnt from the iterative development of nQuire. Research and Practice in Technology Enhanced Learning, 13(1), 4. https://doi.org/10.1186/s41039-018-0072-1

- Herodotou, C., Sharples, M., & Scanlon, E. (2017). Citizen inquiry: Synthesising science and inquiry learning. Routledge.

- Ipsos MORI. (2014). Public attitudes to science 2014 (Report). Department for Business Innovation and Skills. Retrieved June 1, 2015, from https://www.ipsosmori.com/Assets/Docs/Polls/pas-2014-main-report.pdf

- Jennett, C., Kloetzer, L., Schneider, D., Iacovides, I., Cox, A., Gold, M., Fuchs, B., Eveleigh, A., Mathieu, K., Ajani, Z., & Talsi, Y. (2016). Motivations, learning and creativity in online citizen science. Journal of Science Communication, 15(3). https://doi.org/10.22323/2.15030205

- Kelemen-Finan, J., Scheuch, M., & Winter, S. (2018). Contributions from citizen science to science education: An examination of a biodiversity citizen science project with schools in Central Europe. International Journal of Science Education, 40(17), 2078–2098. https://doi.org/10.1080/09500693.2018.1520405

- Kloetzer, L., Da Costa, J., & Schneider, D. K. (2016). Not so passive: Engagement and learning in volunteer computing projects. Human Computation, 3(1), 25–68. https://doi.org/10.15346/hc.v3i1.4

- Kloetzer, L., Schneider, D., Jennett, C., Iacovides, I., Eveleigh, A., Cox, A., & Gold, M. (2013). Learning by volunteer computing, thinking and gaming: What and how are volunteers learning by participating in virtual citizen science? In Proceedings of the 2013 European research conference of the network of access, learning careers and identities, European Society for Research on the Education of Adults (ESREA) (pp. 73–92), Linköping, Sweden, 28–30 November 2013.

- National Library New Zealand. (n.d.). Understanding inquiry learning. https://natlib.govt.nz/schools/school-libraries/library-services-for-teaching-and-learning/supporting-inquiry-learning/understanding-inquiry-learning

- Phillips, T. B., Ferguson, M., Minarchek, M., Porticella, N., & Bonney, R. (2014). User’s guide for evaluating learning outcomes in citizen science. Cornell Lab of Ornithology. http://www.birds.cornell.edu/citscitoolkit/evaluation

- Quintana, C., Reiser, B. J., Davis, E. A., Krajcik, J., Fretz, E., Duncan, R. G., Kyza, E., Edelson, D., & Soloway, E. (2004). A scaffolding design framework for software to support science inquiry. Journal of the Learning Sciences, 13(3), 337–386. https://doi.org/10.1207/s15327809jls1303_4

- Scanlon, E., Anastopoulou, S., & Kerawalla, L. (2017). Inquiry learning reconsidered: Contexts, representations and challenges. In K. Littleton, E. Scanlon, & M. Sharples (Eds.), Orchestrating inquiry learning (pp. 16–39). Routledge.

- Sharples, M., Aristeidou, M., Herodotou, C., McLeod, K., & Scanlon, E. (2019). Inquiry learning at scale: Pedagogy informed design of a platform for citizen inquiry. In Proceedings of the sixth (2019) ACM conference on Learning@Scale, Chicago, Illinois, USA, 24–25 June (Article 29). ACM.

- Sharples, M., Scanlon, E., Ainsworth, S., Anastopoulou, S., Collins, T., Crook, C., Jones, A., Kerawalla, L., Littleton, K., Mulholland, P., & O’Malley, C. (2014). Personal inquiry: Orchestrating science investigations within and beyond the classroom. Journal of the Learning Sciences, 24(2), 308–341. https://doi.org/10.1080/10508406.2014.944642

- Symonds, J. E., & Gorard, S. (2010). Death of mixed methods? Or the rebirth of research as craft. Evaluation & Research in Education, 23(2), 121–136. https://doi.org/10.1080/09500790.2010.483514

- Teddlie, C., & Tashakkori, A. (2009). Foundations of mixed methods research: Integrating quantitative and qualitative approaches in the social and behavioral sciences. Sage Publications, Inc.

- Weber, S. (2007). Das Google-Copy-Paste-Syndrom: Wie Netzplagiate Ausbildung und Wissen gefährden. Heise. http://www.heise.de/tp/buch/buch_25.html