ABSTRACT

When reading scientific information on the Internet, laypersons frequently encounter conflicting claims. However, they usually lack the prior knowledge needed to resolve such scientific conflicts on their own. This study investigated how differences in the trustworthiness and/or expertise of the sources putting forward conflicting claims affect laypersons' explanation and resolution of the scientific conflict. We sequentially presented 144 participants with two conflicting scientific claims regarding the safety of nanoparticles in sunscreen and manipulated whether the scientists putting forward the claims differed in their trustworthiness and/or expertise. After reading the claims on a computer at their own pace, participants rated their subjective explanations for the conflicting claims, rated their personal agreement with each claim, and completed a source memory task. We examined how differences in source trustworthiness and source expertise affected these measures. Compared with conditions without the respective differences, trustworthiness differences led participants to attribute the conflict more strongly to motivational explanations, and expertise differences led them to attribute it more strongly to competence explanations. Furthermore, trustworthiness differences and expertise differences each had a main effect on readers' claim agreement, with participants agreeing more with claims from sources of higher trustworthiness or expertise.

Introduction

Laypersons frequently need to find answers to complex and conflicting science-related questions that may affect their daily lives and personal decisions. Examples include 'Is the sun helpful or harmful to my health?' or 'Is this ingredient in sunscreen safe?'. However, laypersons by definition lack the prior knowledge needed to answer such questions adequately without the help of expert sources (Bromme & Goldman, Citation2014). The Internet has simplified access to scientific information; however, it often presents multiple perspectives and discrepant claims put forward by various sources. For example, one website may claim that sunscreen containing nanoparticles provides better protection from UV radiation and is safer than conventional products, while another may point out potential health risks from nanoparticles entering the body through the skin barrier. Thus, laypersons frequently need to decide not only whether to believe a scientific claim, but also which claim (if any) to believe, and the evaluation of multiple documents or perspectives plays a central role in scientific literacy (Britt et al., Citation2014; Halverson et al., Citation2010; Lang et al., Citation2020; Sharon & Baram-Tsabari, Citation2020). This is a difficult task, since laypersons often lack prior knowledge and may rely on fragmentary understandings of complex scientific issues.

One potential way to overcome this challenge is to evaluate the sources providing the claims (Bromme et al., Citation2010, Citation2015; Stadtler & Bromme, Citation2014). In line with this, Kolstø (Citation2001) identified the evaluation of information sources' interests, neutrality, and competence as important strategies students use to resolve a socio-scientific conflict (i.e. 'to decide who and what to trust', p. 877). These strategies address aspects of source trustworthiness and source expertise, which are commonly identified as dimensions of source credibility (Hovland & Weiss, Citation1951; see also e.g. Bråten et al., Citation2010; Pornpitakpan, Citation2004; Rouet et al., Citation2020; Werner da Rosa & Otero, Citation2018). In this context, trustworthiness refers to the extent to which a source is perceived to be willing to provide accurate and unbiased information, and expertise to the extent to which a source is perceived to be able, and thus competent, to provide accurate and valid information (Danielson, Citation2006; Sperber et al., Citation2010). For example, readers may perceive an expert who works for a university as more trustworthy than one who works for a company, and therefore regard the former as the more credible source. Likewise, they may perceive a professor as having more expertise in his or her field of research than a junior scientist, and therefore as a more credible source.

To our knowledge, previous studies that investigated the influence of these source features on conflict evaluation have varied either trustworthiness or expertise differences, while holding the other dimension of source credibility constant (e.g. Gottschling et al., Citation2019; Thomm et al., Citation2015; Thomm & Bromme, Citation2016). The goal of the present study was to examine how differences in both source trustworthiness and source expertise (as indicated by available source information) affect readers’ subjective explanations for the conflicting claims, their personal agreement with the claims, and their source memory, as compared to situations in which sources do not differ in their trustworthiness and/or expertise.

With this study, we thus aimed to contribute to the growing body of research on how laypersons reconcile discrepant scientific accounts based on source information. The results may help to identify and specify the skills needed to critically evaluate information and information sources. Such skills are becoming increasingly relevant in laypersons' everyday lives and thus also need to be addressed in school science education (Sharon & Baram-Tsabari, Citation2020).

Processing of conflicting scientific claims and the use of source information

While a number of theoretical models on the use and representation of source information when reading multiple texts have been introduced in recent years (Braasch et al., Citation2012; Britt et al., Citation2013; List & Alexander, Citation2017; Perfetti et al., Citation1999; Rouet et al., Citation2017; Rouet & Britt, Citation2011), the present research is mainly based on the content-source integration (CSI) model proposed by Stadtler and Bromme (Citation2014), which specifically addresses the use of source information to explain (or regulate) and resolve conflicting scientific claims. The CSI model proposes three stages that readers can go through when processing conflicting scientific claims. The first stage, conflict detection, in which readers need to detect the lack of coherence between claims, is a prerequisite for engaging in the subsequent stages. In the present research, however, we focus on the second stage, conflict regulation, and the third stage, conflict resolution, because source information plays an essential role in these stages.

Conflict regulation

During the conflict regulation stage, readers try to re-establish coherence either (a) by ignoring the conflict or disputing its importance, (b) by reconciling the conflict through additional inferences, or (c) by accepting the conflict and explaining it as due to different sources (Stadtler & Bromme, Citation2014). Ignoring a conflict, while arguably the easiest option, will generally not lead to its resolution and is therefore not regarded as desirable in this context. The second option, reconciling the conflicting claims by drawing additional inferences, refers to searching the document(s) for explanations of the conflict, generating one's own explanations, or explaining the conflict away (Otero & Campanario, Citation1990).

Finally, the third option, accepting the conflict as due to different sources, is a first way in which source information can affect conflict regulation. While this process does not necessarily involve specific explanations for why the two sources might differ in their claims, it requires the understanding that different sources and perspectives can lead to conflicting claims (Bromme et al., Citation2015). Based on this understanding, readers can integrate the conflicting information into a global, coherent mental representation, provided that the contents are indexed to their respective sources. This process is also described by the Documents Model framework (Britt & Rouet, Citation2012; Perfetti et al., Citation1999), on which the CSI model builds, and specifically by its documents-as-entities assumption (Britt et al., Citation2013).

In addition, available source information can in some cases be used to explain not only that a conflict emerged, but also why it might have emerged (Braasch & Scharrer, Citation2020). For example, two scientists might disagree about whether sunscreen containing nanoparticles is safe to use. If background information about the scientists (i.e. source information) indicates that one scientist is independent while the other works for a company producing nano products, readers might use this information as a subjective explanation for the conflict, in this case differences in the scientists' motivations (i.e. whether or not they have potential vested interests).

Prior studies have shown that differences in source trustworthiness (operationalized by potential vested interests) or source expertise (operationalized by the extent of professional experience) increased readers' attribution of the conflict to the scientists' motivations or competence, respectively, as subjective explanations for the conflict, compared with situations without such differences (Gottschling et al., Citation2019; Thomm et al., Citation2015; Thomm & Bromme, Citation2016). In these studies, readers were presented with two conflicting claims regarding scientific topics. While the source of one claim was a scientist of high expertise and trustworthiness (i.e. a university professor), the source of the second claim was either of the same standing (i.e. another university professor) or inferior in terms of trustworthiness or expertise (i.e. a professor working for a company or a junior scientist, respectively). Furthermore, Gottschling et al. (Citation2019) recorded participants' eye movements while they read the conflicting claims and found more attention to source information when the sources differed in trustworthiness than when they did not. As an indirect indication of increased processing of source information, Thomm and Bromme (Citation2016) found better memory for source information when differences in source trustworthiness and source expertise were present than when the sources were of equal trustworthiness and expertise (i.e. when both sources were university professors). Gottschling et al. (Citation2019), however, did not find an effect on source memory. Yet, as we will elaborate next, differences in source trustworthiness and source expertise may affect not only conflict regulation, but also readers' conflict resolution.

Conflict resolution

To resolve a scientific conflict and, thus, to develop a personal stance toward it, readers need to not only explain the conflict but also judge the validity of the conflicting claims (cf. Braasch & Scharrer, Citation2020). According to Stadtler and Bromme (Citation2014), there are two major pathways to resolve conflicting scientific claims: a first-hand approach and a second-hand approach. The first-hand approach implies that readers evaluate the validity of a claim based on their own knowledge and beliefs, and, hence, assess directly what appears to be true. Laypersons, however, may not be able to reliably judge claim validity directly due to their bounded understanding (Bromme & Goldman, Citation2014). Instead, they may engage in a second-hand approach and evaluate claim validity based on the perceived credibility of their sources. Consequently, they may assess whom to believe instead of what to believe.

In line with this reasoning, an interview study by Bromme et al. (Citation2015) showed that laypersons relied mainly on second-hand evaluation strategies when asked to resolve and decide on conflicting scientific claims about a medical topic. Similarly, in a qualitative observational study by Halverson et al. (Citation2010), source credibility was one of the most prevalent criteria students used when choosing and evaluating websites for a report on a controversial biotechnology subject. Further experimental research revealed that readers confronted with conflicting claims agreed more with the position of sources that appeared more trustworthy (Gottschling et al., Citation2019; Paul et al., Citation2019) or more competent (Kobayashi, Citation2014), although one other study did not find such effects on claim agreement (Thomm & Bromme, Citation2016). Readers have also been shown to rate the arguments of a source with potential vested interests as less convincing than those of a neutral source (Kammerer et al., Citation2016) and to cite sources they perceive as more trustworthy more often in their written argumentation about a conflicting scientific issue (Bråten et al., Citation2015; List et al., Citation2017). In contrast, if source information does not indicate any differences in source trustworthiness or source expertise, conflicts between scientific claims cannot be resolved by means of a second-hand approach (cf. Gottschling et al., Citation2019; for a similar argument, see Richter & Maier, Citation2017).

While previous research has varied the presence of differences in either source trustworthiness or source expertise when laypersons face conflicting scientific claims, the present study examines whether the respective effects on conflict regulation and conflict resolution are additive (i.e. main effects of both trustworthiness differences and expertise differences and no interaction) or interactive (e.g. over-additive, such that trustworthiness differences and expertise differences in combination would have an even stronger effect than either alone).

Present study

The main goal of the present study is to replicate and extend previous findings on the effects of differences in source trustworthiness and source expertise on readers' conflict regulation and conflict resolution (Gottschling et al., Citation2019; Thomm & Bromme, Citation2016). This will help to better understand how laypersons use cues to the sources' credibility to explain unfamiliar conflicting scientific claims and to decide which claim to agree with more.

To this end, we varied whether or not the two sources putting forward two conflicting scientific claims differed in trustworthiness and in expertise. Specifically, unlike in previous research, differences in source trustworthiness and source expertise were manipulated independently of each other, resulting in four experimental conditions. In each condition, one claim was said to stem from a university professor (baseline source), while the source information of the second claim was varied according to the condition (comparison source; cf. Gottschling et al., Citation2019; Thomm & Bromme, Citation2016). The scientific conflict used in our study addressed a topic from the domain of nanotechnology, specifically nanosafety, namely the question of whether nanoparticles in sunscreen are safe. We expected prior knowledge on this topic to be lower than on many other socioscientific issues (Pillai & Bezbaruah, Citation2017), which, in turn, should facilitate the examination of effects associated with the second-hand approach to evaluation (Stadtler & Bromme, Citation2014). Regarding our dependent variables, we distinguished between conflict explanation as part of conflict regulation and claim agreement as part of conflict resolution.

While some previous studies presented both claims simultaneously (Braasch et al., Citation2012; Saux et al., Citation2017; Thomm & Bromme, Citation2016), we presented the conflicting claims sequentially (Gottschling et al., Citation2019; Kobayashi, Citation2014), which is typical of situations on the Internet, where opposing claims are often found on different websites. We ensured that source information was no longer present when participants were asked to explain the conflict and to judge their agreement with the claims. Effects of differences in source trustworthiness or source expertise on conflict explanation or claim agreement would therefore indicate that readers had integrated source information into their mental representation, as suggested by the Documents Model framework (Britt & Rouet, Citation2012; Perfetti et al., Citation1999).

Hypotheses

Based on our theoretical and empirical background analysis, we examined the following hypotheses: First, regarding conflict regulation, we assumed that perceived differences in source trustworthiness should lead readers to attribute the conflict more strongly to motivational explanations than when confronted with sources without differences in trustworthiness (H1a). Likewise, perceived differences in source expertise should lead readers to attribute the conflict more strongly to competence explanations than when confronted with sources without differences in expertise (H1b).

Second, regarding conflict resolution, we expected that perceived differences in source trustworthiness and source expertise should affect readers' agreement with the two claims, as described in the CSI model, because these differences can be used to evaluate the validity of the claims indirectly. Accordingly, agreement should be lower for the claim put forward by the less trustworthy source than for the claim of the more trustworthy source, whereas agreement with the claims of two sources without differences in trustworthiness should be comparable (H2a). Likewise, agreement should be lower for the claim put forward by the less expert source than for the claim of the more expert source, whereas agreement with the claims of two sources without differences in expertise should be comparable (H2b).

Third, we also expected better memory for source information in conditions with differences in source trustworthiness and/or source expertise compared to conditions without the respective differences, due to a deeper processing of source information to regulate and resolve the conflict in the former case. Accordingly, source memory should be higher with differences in source trustworthiness than without such differences (H3a). Likewise, source memory should be higher with differences in source expertise than without such differences (H3b).

In addition to these hypotheses, we explored potential interactions between differences in source trustworthiness and differences in source expertise on conflict explanation, claim agreement, and source memory; however, we had no directed hypotheses regarding such interaction effects. Furthermore, we explored how differences in source trustworthiness and source expertise affect process measures such as reading times of the claims and revisits to the claims.

Materials and methods

Participants

Participants were recruited via a local web-based recruitment system and had the chance to win one of twenty €10 Amazon vouchers. The study was approved by the local ethics committee. Overall, data from N = 178 participants were collected. However, 22 datasets were excluded for the following reasons: (a) participants studied psychology and might have participated in the pretest reported below (n = 5); (b) participants finished the questionnaire in too little time to have read all of the material (less than eight minutes; n = 10); or (c) participants interrupted their participation for at least 20 min (n = 7). Of the remaining participants, we included only the first 36 per experimental group, ordered by the date on which they finished the questionnaire (for details, see Section 'Experimental Design'), to ensure a completely counterbalanced design regarding the combination and sequence of claims and sources. Accordingly, the final sample consisted of N = 144 participants (68.75% female, 96.53% university students) from a variety of majors (42.36% from social sciences and humanities, 43.06% from natural sciences, 10.42% from psychology, and 4.17% unspecified), with an average age of 26.36 years (SD = 9.52). On average, participants reported moderate interest (M = 3.13, SD = 1.07) and low prior topic knowledge (M = 1.97, SD = 1.10) concerning nanotechnology, as assessed at the beginning of the study with two single self-report items on 5-point Likert scales from 1 ('very low') to 5 ('very high').
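The exclusion and selection steps can be sketched as a small filtering pipeline. This is a minimal Python sketch, not the authors' procedure: the `Record` layout, its field names, and the functions `is_excluded` and `select_sample` are hypothetical, and only the thresholds (eight minutes, 20 minutes, 36 per group) come from the text above.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import groupby

# Thresholds taken from the exclusion criteria described above.
MIN_DURATION_MIN = 8.0  # finishing in less than eight minutes -> excluded
MAX_PAUSE_MIN = 20.0    # interruption of at least 20 minutes -> excluded
PER_GROUP = 36          # first 36 completers retained per experimental group


@dataclass
class Record:
    # Hypothetical record layout; field names are illustrative only.
    pid: str
    group: str                          # experimental group label
    possible_pretest_participant: bool  # psychology student who may have done the pretest
    duration_min: float                 # total time spent on the questionnaire
    longest_pause_min: float            # longest interruption during participation
    finished_at: datetime               # completion timestamp


def is_excluded(r: Record) -> bool:
    """Apply exclusion criteria (a) to (c)."""
    return (
        r.possible_pretest_participant
        or r.duration_min < MIN_DURATION_MIN
        or r.longest_pause_min >= MAX_PAUSE_MIN
    )


def select_sample(records):
    """Drop excluded records, then keep the first PER_GROUP completers per group."""
    # groupby only merges consecutive items, so sort by group first, then by time.
    kept = sorted(
        (r for r in records if not is_excluded(r)),
        key=lambda r: (r.group, r.finished_at),
    )
    sample = []
    for _, members in groupby(kept, key=lambda r: r.group):
        sample.extend(list(members)[:PER_GROUP])
    return sample
```

Exclusion is applied before the per-group cut-off, so an excluded early finisher never occupies one of the 36 slots.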

Material

All materials were presented in German. The study was conducted online using the survey platform Qualtrics (Qualtrics, Provo, UT) and was designed to take approximately fifteen minutes to complete.

Scenario and claims

Participants were presented with a conflict scenario from the field of nanosafety. We chose a topic expected to be personally relevant to a large proportion of participants, namely the use of nanoparticles in sunscreen. First, participants were given introductory information on the use of nanoparticles as UV blockers in sunscreen and were informed about the controversy over whether these nanoparticles can penetrate human skin and might therefore pose health risks. Participants were then told that they would be presented with information from the websites of two scientists putting forward opposing claims on this topic (Gottschling et al., Citation2019; Thomm & Bromme, Citation2016). They were asked to carefully read the two opposing positions in order to answer questions on the controversy afterwards. The two claims presented as part of the scenario stated either that studies have shown nanoparticles to be unable to penetrate human skin (Claim A) or that studies have shown nanoparticles to be able to penetrate human skin (Claim B). These claims were based on authentic reports and adapted for use in this study. Both claims were of similar length, structure, and readability (for detailed information on the claims, see Table 1; for the translated claims, see Appendix A). The two claims were presented on separate HTML pages.

Table 1. Information on claim material (without source information).

The claims were pretested (without source information) in an independent sample regarding perceived comprehensibility and convincingness. A total of 32 undergraduate psychology students (Mage = 21.38, SDage = 2.95, 27 female) rated both variables on a seven-point Likert scale (1, 'very low' to 7, 'very high'). Paired t-tests showed no significant difference in the perceived comprehensibility of the claims (t(31) = 1.09, p = .282), whereas Claim B was perceived as somewhat more convincing than Claim A (t(31) = −2.33, p = .027). To ensure that this possible difference in claim convincingness could not affect the results of this study, the combination of claims and source information was counterbalanced.
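The pretest comparisons are paired t-tests on each rater's two ratings. The Python sketch below shows only the test statistic. It assumes that differences are taken as Claim A minus Claim B, which would make the reported negative t for convincingness correspond to higher ratings for Claim B. The function name `paired_t` and the ratings are invented for illustration; they are not the pretest data. A p-value additionally requires the t distribution with n − 1 degrees of freedom (for instance via SciPy's `scipy.stats.ttest_rel`). With 32 raters this gives df = 31, as reported.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for ratings x vs. y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]  # per-rater difference x - y
    n = len(diffs)
    se = stdev(diffs) / sqrt(n)  # standard error of the mean difference
    if se == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / se, n - 1


# Toy ratings (not the pretest data): four raters, Claim A vs. Claim B.
t, df = paired_t([5, 4, 6, 5], [6, 6, 6, 7])
print(f"t({df}) = {t:.2f}")
```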

Manipulation of differences in trustworthiness and expertise

To manipulate differences in source trustworthiness and source expertise, source information was added to each of the two claims (23 additional words per claim). One claim was consistently said to stem from a professor of nanoscience working at a university, being publicly funded, and having 10 years of experience in the research field (‘baseline source’ in every experimental group, i.e. high trustworthiness and high expertise). The opposing claim (put forward by the ‘comparison source’) was said to stem from (a) a professor of nanoscience working for a company, being industrially funded, and having 10 years of experience in the research field (i.e. low trustworthiness, but high expertise; trustworthiness-difference group), (b) a junior scientist of nanoscience working at a university, being publicly funded, and having one year of experience in the research field (i.e. high trustworthiness, but low expertise; expertise-difference group), (c) a junior scientist of nanoscience working for a company, being industrially funded, and having one year of experience in the research field (i.e. low trustworthiness and low expertise; combined-difference group), or (d) another professor of nanoscience working at a university, being publicly funded, and also having ten years of experience in the research field (i.e. high trustworthiness and high expertise; control group).
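The four groups follow from crossing two binary factors. The expertise factor sets position and years of experience. The trustworthiness factor sets employer and funding. The Python sketch below makes this mapping explicit. The dictionary keys and the names `comparison_source` and `GROUP_NAMES` are hypothetical. The attribute values are those listed above.

```python
from itertools import product

# Baseline source, identical in every group (high trustworthiness, high expertise).
BASELINE = {"title": "professor", "employer": "university", "funding": "public", "years": 10}

GROUP_NAMES = {
    (True, False): "trustworthiness-difference",
    (False, True): "expertise-difference",
    (True, True): "combined-difference",
    (False, False): "control",
}


def comparison_source(trust_diff: bool, expertise_diff: bool) -> dict:
    """Derive the comparison source from the two between-subjects factors."""
    return {
        # Expertise factor: position and years of experience.
        "title": "junior scientist" if expertise_diff else "professor",
        "years": 1 if expertise_diff else 10,
        # Trustworthiness factor: employer and funding.
        "employer": "company" if trust_diff else "university",
        "funding": "industrial" if trust_diff else "public",
    }


for trust_diff, expertise_diff in product((False, True), repeat=2):
    print(GROUP_NAMES[(trust_diff, expertise_diff)], comparison_source(trust_diff, expertise_diff))
```

In the control group, the comparison source has exactly the baseline attributes. Each factor changes only its own two attributes.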

The source information used was pretested with an independent sample regarding perceived trustworthiness and expertise. A total of 17 undergraduate psychology students (Mage = 21.35, SDage = 2.62, 15 female) rated both variables on a seven-point Likert scale (1, 'very low' to 7, 'very high'). The results of this pretest showed that scientists working at a university were rated as significantly more trustworthy than scientists working for a company, F(1, 48) = 14.95, p < .001, and that professors were rated as significantly more competent than junior scientists, F(1, 48) = 22.20, p < .001.

Measures

Prior domain knowledge and attitudes (control variables)

To ascertain comparability across experimental conditions, we used adapted versions of the Public Knowledge in Nano Technology (PKNT) and the Public Attitudes towards Nano Technology (PANT) questionnaires (Lin et al., Citation2013) to measure participants’ prior knowledge regarding nanotechnology and their attitudes towards risks of nanotechnology.

For prior domain knowledge, participants had to answer eight multiple-choice questions on nanotechnology (Cronbach’s α = .63, correlation with self-reported prior knowledge r = .53). Each question was followed by four possible answers from which only one was correct. The sum of correct answers was used as a measure of prior domain knowledge.

For attitudes towards risks of nanotechnology, participants were asked to rate their agreement with four statements on possible risks of nanotechnology (sample item, ‘The toxicity of nanoparticles may be even higher than that of large-size particles.’) on a five-point Likert scale (1, ‘very much disagree’ to 5, ‘very much agree’; Cronbach’s α = .86).

Conflict explanation

Conflict explanation was measured with the Explaining Conflicting Scientific Claims (ECSC) questionnaire (Thomm et al., Citation2015). The ECSC measures four different dimensions of explanations, capturing two knowledge-related explanations (i.e. differences in research process and topic complexity) and two source-related explanations (i.e. differences in researchers’ motivations and differences in researchers’ competence). Each dimension is assessed by five to six explanatory statements (e.g. ‘The scientists are qualified to varying degrees.’ for the scale differences in researchers’ competence), resulting in a total set of 23 items. In the present study, the 23 statements of the ECSC were presented to the participants as possible explanations for the previously read conflict. Participants were asked to rate the extent to which each explanatory statement may provide a potential reason for the specific conflict, from 1 (‘very much disagree’) to 6 (‘very much agree’). Internal consistency (indicated by Cronbach’s alpha) of the ECSC dimensions in the present study was α = .83 for differences in research process, α = .72 for topic complexity, α = .91 for differences in researchers’ motivations, and α = .75 for differences in researchers’ competence.
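The internal consistencies reported for the knowledge test, the attitude scale, and the ECSC dimensions are Cronbach's alpha values. Alpha is computed as α = k/(k − 1) · (1 − Σ s²ᵢ / s²ₓ), where k is the number of items, s²ᵢ is the variance of item i, and s²ₓ is the variance of the summed scale score. The Python sketch below implements this formula using sample variances throughout. The function name and the toy data are illustrative.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of k columns, each holding one score per respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need scores from the same respondents")
    totals = [sum(col[i] for col in items) for i in range(n)]  # scale score per respondent
    item_var = sum(variance(col) for col in items)             # sum of item variances
    return k / (k - 1) * (1 - item_var / variance(totals))


# Toy data: two items answered by four respondents.
alpha = cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]])
```

Using the same variance estimator for items and totals matters. With that choice, the n versus n − 1 denominator cancels in the ratio.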

Claim agreement

Participants were asked to rate their agreement with each of the two claims on a seven-point Likert scale (1, ‘very much disagree’ to 7, ‘very much agree’), with the claims being presented without source information, and in the original presentation order.

Source memory

To measure source memory, participants answered one multiple-choice question per claim, in which they had to choose the correct source of the claim from four options: 'professor at a university', 'professor at a company', 'junior scientist at a university', and 'junior scientist at a company'. Each claim was shown again together with these options, without source information and in the original presentation order. Source memory was scored as correct only if the correct source was selected for both claims.
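The scoring rule yields one binary outcome per participant, which is the variable later modeled with logistic regression. The Python sketch below implements the rule using the four options listed above. The function name is hypothetical. The chance-level constant assumes independent, uniform guessing on both questions. Under that assumption, a guesser would be scored correct with probability (1/4)² = 1/16.

```python
OPTIONS = (
    "professor at a university",
    "professor at a company",
    "junior scientist at a university",
    "junior scientist at a company",
)


def source_memory_correct(chosen, actual) -> int:
    """Return 1 only if the chosen source matches the actual source for both claims."""
    if len(chosen) != 2 or len(actual) != 2:
        raise ValueError("expected one answer per claim (two claims)")
    for answer in (*chosen, *actual):
        if answer not in OPTIONS:
            raise ValueError(f"unknown option: {answer!r}")
    return int(all(c == a for c, a in zip(chosen, actual)))


# Chance level under independent uniform guessing on both four-option questions.
CHANCE = (1 / len(OPTIONS)) ** 2
```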

Ratings of source trustworthiness and source expertise (Manipulation check)

Finally, as a manipulation check, the claims including source information (as displayed during the experimental part of the study) were presented again, and participants rated the trustworthiness and the expertise of each source (two items each). The questions for source trustworthiness were 'How trustworthy is this scientist in your opinion?' and 'How honest is this scientist in your opinion?' (Cronbach's α = .84 – .91). The questions for source expertise were 'How competent is this scientist in your opinion?' and 'How much domain knowledge has this scientist in your opinion?' (Cronbach's α = .90 – .92). Each question was answered on a seven-point Likert scale (from 1 = 'not at all' to 7 = 'very'). To compute one score each for source trustworthiness and source expertise, we averaged the two trustworthiness items and the two expertise items, respectively, separately for each source.
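The manipulation-check scores are simple two-item means computed per source. The Python sketch below makes this explicit. The item keys (`trustworthy`, `honest`, `competent`, `knowledge`) and the function name are hypothetical stand-ins for the four questions quoted above. The function is applied once to the baseline source's ratings and once to the comparison source's ratings.

```python
from statistics import mean

TRUST_ITEMS = ("trustworthy", "honest")       # hypothetical item keys
EXPERTISE_ITEMS = ("competent", "knowledge")  # hypothetical item keys


def credibility_scores(ratings: dict) -> dict:
    """Average the two items per dimension for ONE source (ratings on a 1-7 scale)."""
    for key in TRUST_ITEMS + EXPERTISE_ITEMS:
        if not 1 <= ratings[key] <= 7:
            raise ValueError(f"rating for {key!r} outside 1-7")
    return {
        "trustworthiness": mean(ratings[k] for k in TRUST_ITEMS),
        "expertise": mean(ratings[k] for k in EXPERTISE_ITEMS),
    }


baseline = credibility_scores({"trustworthy": 6, "honest": 5, "competent": 7, "knowledge": 6})
```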

Experimental design

For the dependent variables conflict explanation and source memory, the study was realized as a 2 × 2 between-subjects design with the two factors differences in trustworthiness (differences vs. no differences) and differences in expertise (differences vs. no differences). For the dependent variables claim agreement and trustworthiness and expertise ratings (manipulation check), which were all obtained separately for the two claims, the additional within-subjects factor source (baseline source vs. comparison source) completed a 2 × 2 × 2 mixed design.
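The between-subjects cells are further counterbalanced with respect to the combination and sequence of claims and sources. The Python sketch below assumes this means two things: (i) which claim is attributed to the baseline source, and (ii) which source's page is shown first. Under that assumption, each group splits into four versions, and 36 participants per group give nine per version. This interpretation and the function names are assumptions for illustration.

```python
from itertools import product


def claim_versions():
    """Assumed counterbalancing versions: claim-source pairing x presentation order."""
    versions = []
    for baseline_claim, baseline_first in product(("A", "B"), (True, False)):
        comparison_claim = "B" if baseline_claim == "A" else "A"
        pages = [("baseline", baseline_claim), ("comparison", comparison_claim)]
        if not baseline_first:
            pages.reverse()  # comparison source's page is shown first
        versions.append(tuple(pages))
    return versions


def design_cells():
    """Cross the 2 x 2 between-subjects factors with the assumed versions."""
    return [
        (trust_diff, expertise_diff, version)
        for trust_diff, expertise_diff in product((False, True), repeat=2)
        for version in claim_versions()
    ]


cells = design_cells()
per_cell = 144 // len(cells)  # participants per cell if the N = 144 sample is fully balanced
```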

Procedure

After giving informed consent, participants reported their interest in and prior knowledge of nanotechnology and completed the PKNT multiple-choice knowledge test (Lin et al., Citation2013). Subsequently, participants received a short introduction to the topic and were instructed to carefully read the material that followed. The claims were then presented on two separate HTML pages, and participants could navigate freely back and forth between them by clicking on navigation buttons. There was no restriction on reading time or navigation between the two claims. Once participants decided to proceed, they were asked to complete the ECSC questionnaire (Thomm et al., Citation2015). Subsequently, they rated their personal agreement with the two claims, which were presented in the same order as in the reading phase but without source information. Then, participants' source memory was assessed for both claims, also in the original presentation order. Finally, as a manipulation check, participants rated the perceived trustworthiness and expertise of the two scientists. To this end, they were again presented with the claims together with the source information in the same order as in the reading phase.

Analytic approach

To test H1a and H1b, analyses of variance (ANOVAs) were conducted to investigate the effects of differences in trustworthiness and differences in expertise on participants’ conflict explanations. To test H2a and H2b, multilevel linear regression analyses with random intercepts were conducted to investigate the effects of differences in trustworthiness and differences in expertise on claim agreement depending on the source (baseline or comparison). To test H3a and H3b, a logistic regression model was fitted to investigate the effects of differences in trustworthiness and differences in expertise on the likelihood of correctly remembering both sources. All analyses were conducted in R (R Core Team).
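The logic of the 2 × 2 between-subjects ANOVAs can be sketched in plain Python for the balanced case. This is an illustration under the assumption of equal cell sizes (with 36 participants per cell, the error degrees of freedom would be 4 × 35 = 140), not the authors’ R code; the function name and the example scores are hypothetical. In a balanced design, the different types of sums of squares coincide, so the hand computation below is unambiguous.

```python
from statistics import mean


def anova_2x2(cells):
    """Two-way between-subjects ANOVA for a balanced 2 x 2 design.

    `cells` maps (a, b), with a and b in {0, 1}, to the scores of one cell.
    Returns the F value of each effect (all with 1 df) and the error df.
    """
    n = len(cells[(0, 0)])
    if any(len(scores) != n for scores in cells.values()):
        raise ValueError("this sketch assumes equal cell sizes")
    grand = mean(x for scores in cells.values() for x in scores)
    cell_mean = {key: mean(scores) for key, scores in cells.items()}
    # Marginal means of each factor (each based on 2n observations)
    a_mean = {a: (cell_mean[(a, 0)] + cell_mean[(a, 1)]) / 2 for a in (0, 1)}
    b_mean = {b: (cell_mean[(0, b)] + cell_mean[(1, b)]) / 2 for b in (0, 1)}
    ss_a = 2 * n * sum((a_mean[a] - grand) ** 2 for a in (0, 1))
    ss_b = 2 * n * sum((b_mean[b] - grand) ** 2 for b in (0, 1))
    ss_cells = n * sum((m - grand) ** 2 for m in cell_mean.values())
    ss_ab = ss_cells - ss_a - ss_b  # interaction = between-cells SS minus main effects
    ss_error = sum((x - cell_mean[key]) ** 2 for key, scores in cells.items() for x in scores)
    df_error = 4 * (n - 1)
    ms_error = ss_error / df_error
    return {"A": ss_a / ms_error, "B": ss_b / ms_error, "AxB": ss_ab / ms_error, "df_error": df_error}


# Hypothetical scores; keys are (trustworthiness difference, expertise difference)
example = {(0, 0): [1, 3], (0, 1): [2, 4], (1, 0): [3, 5], (1, 1): [6, 8]}
print(anova_2x2(example))  # {'A': 9.0, 'B': 4.0, 'AxB': 1.0, 'df_error': 4}
```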

Results

Comparability of experimental conditions

Two-factorial ANOVAs with the factors differences in trustworthiness and differences in expertise were conducted to assess the comparability of the experimental groups in terms of age, self-reported topic interest, self-reported prior knowledge, prior domain knowledge (PKNT score), and attitudes towards perceived risks of nanotechnologies (PANT score). No significant differences were found for any of these measures, all F(1, 140) < 1.09, all p > .298. Means (and standard deviations) per group for these measures are shown in Table 2.

Table 2. Means (and SD) for control variables as a function of trustworthiness differences (differences, no differences) and expertise differences (differences, no differences).

Conflict explanation (H1)

For the ECSC dimension differences in researchers’ motivations, in line with H1a, the ANOVA showed a significant main effect of the factor differences in trustworthiness, F(1, 140) = 5.67, p = .019, η² = .04: participants agreed more strongly with motivations as an explanation for the conflict when the sources differed in trustworthiness (M = 4.11, SD = 1.12) than when they did not (M = 3.61, SD = 1.34). There was neither a significant main effect of differences in expertise, F(1, 140) = 1.62, p = .206, nor a significant interaction between the two factors, F(1, 140) = 1.76, p = .190. Means (and standard deviations) per group for all ECSC dimensions are shown in Table 3.

Table 3. Means (and SD) for ECSC dimensions and for source memory and revisiting claims as a function of trustworthiness differences (differences, no differences) and expertise differences (differences, no differences).

For the ECSC dimension differences in researchers’ competence, in line with H1b, the ANOVA showed a significant main effect of the factor differences in expertise, F(1, 140) = 6.18, p = .014, η² = .04, with participants agreeing more strongly with competence explanations when the sources differed in expertise (M = 3.07, SD = 0.83) than when they did not (M = 2.72, SD = 0.89). There was also a significant main effect of the factor differences in trustworthiness, F(1, 140) = 5.10, p = .025, η² = .03, with participants agreeing more strongly with competence explanations when the sources differed in trustworthiness (M = 3.06, SD = 0.89) than when they did not (M = 2.74, SD = 0.84). The interaction between the two factors was not significant, F(1, 140) = 0.04, p = .833.

For the ECSC dimension differences in research process, the ANOVA showed no significant main effects of differences in expertise, F(1, 140) = 1.35, p = .247, or differences in trustworthiness, F(1, 140) = 0.48, p = .490, but a significant interaction between these factors, F(1, 140) = 4.84, p = .029, η² = .03. Descriptively, attribution to this explanation was highest when neither differences in trustworthiness nor differences in expertise were present (i.e. the control group); however, Tukey-corrected pairwise comparisons did not reveal any significant differences between groups, all p > .08.

Finally, for the ECSC dimension topic complexity, the ANOVA showed neither a significant main effect of differences in expertise, F(1, 140) = 0.16, p = .686, nor of differences in trustworthiness, F(1, 140) = 0.68, p = .410, nor a significant interaction between the two factors, F(1, 140) = 1.18, p = .279.
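The effect sizes reported alongside these F tests (for example .04 and .03 for the competence dimension) can be related back to the F values. The sketch below assumes equal cell sizes, so that each effect’s sum of squares equals F × MS_error and the total sum of squares equals MS_error × (sum of all F values + error df). It computes both classical η² and partial η² so the two indices can be compared; only the F values come from the text, and the function names are hypothetical.

```python
def eta_squared(f_values, effect, df_error):
    """Classical eta squared (SS_effect / SS_total) recovered from F values.

    Assumes a balanced design in which every effect has 1 df, so that
    SS_effect = F * MS_error and SS_total = MS_error * (sum of all F + df_error).
    """
    return f_values[effect] / (sum(f_values.values()) + df_error)


def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared: SS_effect / (SS_effect + SS_error)."""
    return f * df_effect / (f * df_effect + df_error)


# F values reported for the competence dimension, F(1, 140)
competence = {"expertise": 6.18, "trustworthiness": 5.10, "interaction": 0.04}
print(round(eta_squared(competence, "expertise", 140), 3))        # 0.041
print(round(eta_squared(competence, "trustworthiness", 140), 3))  # 0.034
print(round(partial_eta_squared(5.10, 1, 140), 3))                # 0.035
```

Under these assumptions, classical η² rounds to .04 and .03 for the two competence effects, whereas partial η² for the trustworthiness effect would round to .04.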

Agreement with claims (H2)

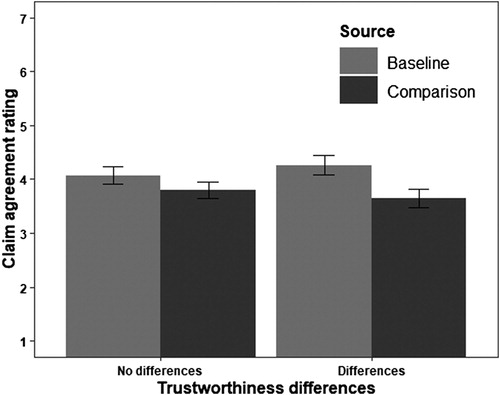

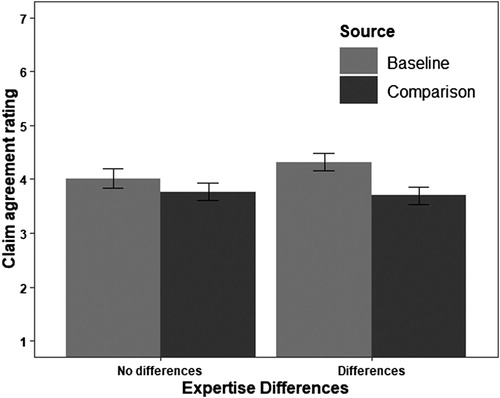

The multilevel linear regression model for claim agreement showed no significant variance in intercepts across participants, χ² = 0.00, p > .999. Thus, random intercepts for participants were dropped from the model. Regarding fixed effects, there were no significant main effects of differences in source trustworthiness, differences in source expertise, or source (baseline vs. comparison). However, there were significant interactions between differences in trustworthiness and source, b = −1.00 (95% CI: −1.94, −0.11), t(140) = −2.13, p = .035, as expected by H2a (see Figure 1), and between differences in expertise and source, b = −1.02 (95% CI: −1.91, −0.09), t(140) = −2.19, p = .030, as expected by H2b (see Figure 2). Tukey-corrected contrasts showed that with differences in trustworthiness, claim agreement was significantly higher for the baseline source than for the comparison source, b = 0.611 (95% CI: 0.00, 1.22), t(140) = 2.61, p = .049. In contrast, without differences in trustworthiness, claim agreement did not differ significantly between sources, b = 0.26 (95% CI: −0.35, 0.87), t(140) = 1.13, p = .675. Similarly, with differences in expertise, claim agreement was significantly higher for the baseline source than for the comparison source, b = 0.625 (95% CI: 0.02, 1.24), t(140) = 2.66, p = .042, whereas without differences in expertise, claim agreement did not differ significantly between sources, b = 0.25 (95% CI: −0.36, 0.86), t(140) = 1.07, p = .711. The three-way interaction between differences in trustworthiness, differences in expertise, and source pointed in the direction of a less than additive effect of the two source features but was not significant, b = 1.31 (95% CI: 0.01, 2.60), t(140) = 1.97, p = .051. Group means (and standard deviations) by source for claim agreement are shown in Table 4.

Figure 1. Agreement ratings for claims of baseline and comparison sources as a function of trustworthiness differences. Note. Error bars represent the 95%-confidence intervals.

Figure 2. Agreement ratings for claims of baseline and comparison sources as a function of expertise differences. Note. Error bars represent the 95%-confidence intervals.

Source memory (H3)

In total, 75.69% of the participants remembered both sources correctly. Contrary to H3a and H3b, the logistic regression model showed no significant main effects of differences in source trustworthiness, Z = −0.54, p = .587, or differences in source expertise, Z = −0.80, p = .424, on the likelihood of correctly remembering the two sources, and no significant interaction between the two factors, Z = 1.37, p = .171. The mean percentages for source memory by group are shown in Table 3.
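The p values of a logistic regression are usually based on Wald Z statistics, which are compared against the standard normal distribution. A minimal sketch of that conversion, using only the Python standard library, is shown below; the function names are hypothetical, and the assumption is that the reported p values are two-sided Wald tests.

```python
from math import erf, sqrt


def normal_cdf(x):
    """Standard normal cumulative distribution function via the error function."""
    return 0.5 * (1 + erf(x / sqrt(2)))


def wald_p_two_sided(z):
    """Two-sided p value for a Wald Z statistic (e.g. a logistic regression coefficient)."""
    return 2 * (1 - normal_cdf(abs(z)))


# Z values reported for the source memory model
for label, z in [("trustworthiness", -0.54), ("expertise", -0.80), ("interaction", 1.37)]:
    print(label, round(wald_p_two_sided(z), 3))  # approx. 0.589, 0.424, 0.171
```

The small gap between 0.589 and the reported .587 is consistent with the Z values having been rounded to two decimals before reporting.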

Trustworthiness and expertise ratings of the sources (manipulation check)

The multilevel linear regression model for source trustworthiness ratings showed significant variance in intercepts across participants, SD = 0.68 (95% CI: 0.54, 0.86), χ² = 21.35, p < .001. Regarding the fixed effects, there were no significant main effects of differences in source trustworthiness, differences in source expertise, or source (baseline vs. comparison) on trustworthiness ratings. The only significant interaction was the expected interaction between differences in trustworthiness and source, b = −1.31 (95% CI: −1.89, −0.72), t(140) = −4.36, p < .001. Tukey-corrected pairwise comparisons showed that with differences in trustworthiness, the comparison source was rated as significantly less trustworthy than the baseline source, b = 1.55 (95% CI: 1.16, 1.94), t(140) = 12.20, p < .001. In contrast, without differences in trustworthiness, trustworthiness ratings for the comparison and the baseline source did not differ significantly, b = 0.08 (95% CI: −0.30, 0.47), t(140) = 0.56, p = .945. Thus, the trustworthiness manipulation can be considered successful.

The multilevel linear regression model for source expertise ratings showed significant variance in intercepts across participants, SD = 0.48 (95% CI: 0.33, 0.71), χ² = 7.29, p = .007. Again, there were no significant main effects of differences in trustworthiness, differences in expertise, or source on expertise ratings. The only significant interaction was the expected interaction between differences in expertise and source, b = −1.21 (95% CI: −1.81, −0.61), t(140) = −3.94, p < .001. Tukey-corrected contrasts showed that with differences in expertise, the comparison source was rated as significantly less expert than the baseline source, b = 1.55 (95% CI: 1.15, 1.95), t(140) = 10.11, p < .001. In contrast, without differences in expertise, expertise ratings for the comparison and the baseline source did not differ significantly, b = 0.18 (95% CI: −0.22, 0.58), t(140) = 1.18, p = .641. Thus, the expertise manipulation can also be considered successful. Group means (and standard deviations) by source for trustworthiness and expertise ratings are shown in Table 4.

Table 4. Mean scores (and SD) for reading time, claim agreement, and trustworthiness and expertise ratings for baseline and comparison source as a function of trustworthiness differences (differences, no differences) and expertise differences (differences, no differences).

Additional exploratory analyses

We explored the reading times of the claims as a measure that could give insight into how deeply readers processed the presented claims. We fitted a multilevel linear regression model for the (log-transformed) reading time of each claim with the three predictors differences in trustworthiness, differences in expertise, and source. This exploratory analysis showed main effects of differences in trustworthiness, b = −0.28 (95% CI: −0.54, −0.01), t(140) = −2.04, p = .043, and differences in expertise, b = −0.35 (95% CI: −0.61, −0.08), t(140) = −2.57, p = .011, but no effect of source, b = −0.12 (95% CI: −0.28, 0.03), t(140) = −1.48, p = .140, and no significant interaction effects (all p > .103). For both differences in source trustworthiness and differences in source expertise, reading times were shorter with differences than without. Additionally, we explored whether participants used the option to navigate back and forth between the claims. Only 20.83% of the participants revisited the previously read claim at least once. A logistic regression model showed no significant main effects of, or interactions between, the factors on revisit likelihood (all p > .50). The revisit likelihood (in percent) by group is shown in Table 3.
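Because reading time was log-transformed, a coefficient b corresponds to multiplying reading time by exp(b) rather than adding b seconds. The sketch below converts the two reported coefficients into approximate percent changes. It assumes a natural-log transformation (the default of R’s `log()`); whether these coefficients describe main effects or simple effects at the reference level of the other factors depends on the coding scheme, which the text does not state. The function name is hypothetical.

```python
from math import exp


def percent_change(b):
    """Percent change in reading time implied by a coefficient on the natural-log scale.

    A coefficient b multiplies the (geometric mean) reading time by exp(b).
    """
    return 100 * (exp(b) - 1)


# Coefficients reported for the exploratory reading-time model
for label, b in [("trustworthiness differences", -0.28), ("expertise differences", -0.35)]:
    print(f"{label}: {percent_change(b):.1f}%")  # approx. -24.4% and -29.5%
```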

Discussion

The goal of this study was to gain further insights into the effects of differences in perceived source trustworthiness and source expertise on laypersons’ conflict regulation and resolution when facing scientific conflicts. To this end, we presented university students with two conflicting claims about an unfamiliar topic from the area of nanosafety, while varying information on the sources’ workplace and work experience in the field. The results of our manipulation check suggest that participants in our sample were able to identify and interpret these source features as intended. That is, when the sources differed in their trustworthiness (university vs. company) and/or their expertise (professor vs. junior scientist), readers perceived the comparison source as less trustworthy or less expert, respectively, than the baseline source.

More importantly, we expected these differences in source trustworthiness and/or source expertise to affect conflict regulation as well as conflict resolution, as predicted by the CSI model (Stadtler & Bromme, Citation2014). Participants’ subjective conflict explanations were measured as indicators of conflict regulation, and participants’ agreement with the two claims as an indicator of conflict resolution.

Subjective conflict explanation based on source information

Regarding our hypotheses on readers’ subjective explanations for the conflict, the present study corroborates prior research showing that source information affects readers’ regulation of scientific conflicts (Gottschling et al., Citation2019). As expected, differences in source trustworthiness increased readers’ attribution of the conflict to differences in the scientists’ motivations, and differences in source expertise increased their attribution of the conflict to differences in the scientists’ competence. This is in line with the assumption of the CSI model that one way to restore coherence is to accept the scientific conflict as due to different sources and to use source information to explain why the conflict might have emerged. Additionally, we found an effect of differences in source trustworthiness on participants’ endorsement of explanations through scientists’ competence. Though we did not expect this effect, it appears plausible. The items of the ECSC questionnaire capturing competence explanations partly frame competence as the appropriate use of one’s expertise as a scientist (e.g. being thorough in one’s research work). Such facets could be interpreted as being connected to scientists’ willingness to provide accurate knowledge and might therefore also be affected by source trustworthiness.

While the focus of this study is on source-related explanations of conflicting claims (i.e. differences in researchers’ motivations and differences in researchers’ competence), it is important to note that research-related explanations for the conflict (i.e. differences in the research process and topic complexity) also received high agreement from the readers. This could be explained by readers’ low prior domain knowledge about nanotechnology and by how they perceived the topic at stake: they may have considered it highly complex and the subject of cutting-edge research. Interestingly, our exploratory results also indicate that participants allocated more time to reading when the sources were of equally high trustworthiness and expertise. Thus, participants possibly spent additional time searching for and reasoning about explanations when no immediate explanation for the conflict was at hand. However, this interpretation remains speculative, and more research is needed to clarify this observation.

Finally, it should be noted that the effects on conflict explanation were small. Nonetheless, we believe that our results still have value for research on science education, since they point to laypersons’ ability to explain a scientific conflict based on source information, which is a critical skill for scientific literacy (Aikenhead, Citation2003; Bos, Citation2000; Bromme & Goldman, Citation2014; Kolstø, Citation2001). Furthermore, following the rationale of the CSI model (also see Braasch & Scharrer, Citation2020; Stadtler & Bromme, Citation2014), the effects on source-related conflict explanations lay the foundation for the more substantial effects of source differences on conflict resolution (as measured, e.g. by claim agreement), which we discuss in the following section.

Source credibility affecting claim agreement

In line with our expectations regarding claim agreement, both differences in source trustworthiness and differences in source expertise led to reduced agreement with the claim of the source that was perceived as less trustworthy or as less expert. This also corroborates previous findings regarding the influence of differences in source trustworthiness on claim agreement (Gottschling et al., Citation2019) and expands them by showing that the same effect can be triggered by differences in source expertise. Therefore, our quantitative results complement conclusions drawn from qualitative findings in science education (Kolstø, Citation2001). Moreover, in the present study, participants gave their ratings for conflict explanation and claim agreement without the source information being presented again. Thus, the observation that differences in source trustworthiness and/or source expertise still affected these dependent measures can be regarded as further evidence for the integration of source information into readers’ mental representation, as proposed by the Documents Model framework (Britt & Rouet, Citation2012; Perfetti et al., Citation1999).

Our findings also have practical implications for science education. One potential application is refutation texts, which aim to stimulate knowledge revision regarding common scientific misconceptions held by the public. Refutation texts try to accomplish this by presenting the misconception along with an explanation of why it is false, together with correct information on the topic (Kendeou et al., Citation2016; Tippett, Citation2010). Given that this resembles the information environment of our study with two conflicting positions, providing additional source information for both positions can be expected to further increase agreement with the correct information and thereby foster knowledge revision. A first study on the role of source information in refutation texts already showed that source information can affect successful knowledge revision (Van Boekel et al., Citation2017). In that study, however, only the credibility of the source of the whole refutation text (i.e. professor vs. celebrity) was manipulated rather than the sources of the individual positions within the text.

No effects of differences in source credibility on source memory

It is important to note that even though differences in source trustworthiness and/or differences in expertise affected readers’ subjective conflict explanations as well as their claim agreement, we did not find any effects on source memory. While this is in line with previous findings by Gottschling et al. (Citation2019), it contradicts the results by Thomm and Bromme (Citation2016). The absence of effects on source memory in the present study might be explained by a ceiling effect, since source memory was high in all conditions, which may be due to the relatively simple multiple-choice format used. Thus, future research could use free or cued recall questions to assess source memory, in order to get deeper and more accurate insights into what parts of source information are remembered by readers who are confronted with conflicting scientific information.

Interactions of multiple dimensions of source credibility

Finally, an additional goal of this study was to investigate possible interactions between differences in source trustworthiness and source expertise on conflict regulation and conflict resolution. While we did not find any significant interactions on our dependent variables, there was one trend regarding claim agreement that should be considered for further research: When both differences in trustworthiness and differences in expertise were present, effects on claim agreement seemed to be less than additive. That is, the effect of the combined source differences tended to be smaller than the sum of both main effects. This could indicate that as soon as there is one reason to question the credibility of a source as compared to another source with a conflicting claim (either because of differences in trustworthiness or differences in expertise being present), this is sufficient to resolve the conflict based on a second-hand approach to evaluation (Thomm et al., Citation2017). However, since this interaction effect did not reach significance, further research is needed to explore this possibility.
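The idea of a less than additive combination can be made concrete as an interaction contrast (a difference of differences) over the four between-subjects conditions. The sketch below is a hypothetical illustration: the condition labels, the function name, and the example values are invented and are not estimates from this study, whose model tests the same idea as a three-way interaction term.

```python
def additivity_gap(source_effect):
    """Combined effect minus the sum of the two single effects.

    `source_effect` maps each between-subjects condition to the difference in
    claim agreement between the baseline and the comparison source. Each effect
    is taken relative to the condition without any source differences.
    A negative gap means the two source differences combine less than additively.
    """
    none = source_effect["none"]
    trust_only = source_effect["trust"] - none
    expertise_only = source_effect["expertise"] - none
    both = source_effect["both"] - none
    return both - (trust_only + expertise_only)


# Hypothetical baseline-minus-comparison agreement differences (not the study's data)
example = {"none": 0.2, "trust": 0.9, "expertise": 0.9, "both": 1.0}
print(round(additivity_gap(example), 2))  # -0.6
```

In this invented example, each source difference alone shifts agreement by 0.7 points, but both together shift it by only 0.8 rather than 1.4, which is the pattern described above as one reason to doubt a source being sufficient.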

Limitations and outlook

This study does not come without limitations. First, because the sample consisted of undergraduate students, it is unclear how well the findings generalize to other samples. Undergraduate students might be more sensitive to source information than the general public because of their ongoing academic training and their high level of education. Yet, they may also represent a population that frequently searches for scientific information. Still, future research should investigate whether the effects observed in the present study can also be found in other populations (e.g. younger students or individuals without academic education). This might be particularly relevant in light of the current COVID-19 pandemic, which may drive individuals of all ages and educational backgrounds to search for scientific information.

A second limitation of this study is that we used only one scientific topic. Previous studies have shown that subjective explanations for scientific conflicts might vary across topics or domains (Johnson & Dieckmann, Citation2018; Thomm & Bromme, Citation2016). Third, our sample’s low prior knowledge about nanotechnology likely resulted in a particularly high dependence on source information for claim evaluation. While this was intended, future studies could examine whether and how effects of differences in source trustworthiness and source expertise are moderated by readers’ prior domain knowledge.

Finally, while one strength of the present research is the well-controlled and standardized material, this comes at the cost of external validity. Although we took measures to make the material comparable to natural information environments (e.g. by using information based on real online articles and a sequential presentation of information), situations on actual websites are generally more complex. In many cases source information would not be limited to references in text, but could also be related to the article type, the author, or the website’s general reputation (Bråten et al., Citation2010), or would need to be actively sought out, for instance, by accessing ‘about us’ sections (Kammerer et al., Citation2016; Stadtler et al., Citation2015). Additionally, texts found during online inquiry are often longer than the ones used in this study and conflicting claims might not be as clear and easy to detect. Future research, thus, should gradually approach more realistic information materials in order to increase external validity and to inform educational interventions that can support laypersons in their assessment of scientific conflicts. Furthermore, by means of controlled experimental designs, such as the one used in the present study, interventions on the assessment of scientific conflicts then should also be evaluated regarding their effects on the use of source information in readers’ conflict regulation and resolution.

In summary, the present study showed how laypersons can use source information to explain and resolve a scientific conflict on a topic about which they possess low prior knowledge. Given the vast amount of conflicting scientific information and outright misinformation on the Internet, this is a skill of growing importance for scientific literacy.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Aikenhead, G. (2003). Review of research on humanistic perspectives in science curricula. European science education research association (ESERA) conference, Noordwijkerhout, The Netherlands, August 19-23.

- Bos, N. (2000). High school students’ critical evaluation of scientific resources on the world wide web. Journal of Science Education and Technology, 9(2). https://doi.org/10.1023/A:1009426107434

- Braasch, J. L. G., Rouet, J.-F., Vibert, N., & Britt, M. A. (2012). Readers’ use of source information in text comprehension. Memory & Cognition, 40(3), 450–465. https://doi.org/10.3758/s13421-011-0160-6

- Braasch, J. L. G., & Scharrer, L. (2020). The role of cognitive conflict in understanding and learning from multiple perspectives. In P. Van Meter, A. List, D. Lombardi, & P. Kendeou (Eds.), Handbook of learning from multiple representations and perspectives (pp. 205–222). Routledge. https://doi.org/10.4324/9780429443961-15

- Bråten, I., Braasch, J. L. G., Strømsø, H. I., & Ferguson, L. E. (2015). Establishing trustworthiness when students read multiple documents containing conflicting scientific evidence. Reading Psychology, 36(4), 315–349. https://doi.org/10.1080/02702711.2013.864362

- Bråten, I., Strømsø, H. I., & Salmerón, L. (2010). Trust and mistrust when students read multiple information sources about climate change. Learning and Instruction, 21(2), 180–192. https://doi.org/10.1016/j.learninstruc.2010.02.002

- Britt, M. A., Richter, T., & Rouet, J.-F. (2014). Scientific literacy: The role of goal-directed reading and evaluation in understanding scientific information. Educational Psychologist, 49(2), 104–122. https://doi.org/10.1080/00461520.2014.916217

- Britt, M. A., & Rouet, J.-F. (2012). Learning with multiple documents: Component skills and their acquisition. In J. R. Kirby, & M. J. Lawson (Eds.), Enhancing the quality of learning: Dispositions, instruction, and learning processes (pp. 276–314). Cambridge University Press. https://doi.org/10.1017/CBO9781139048224.017

- Britt, M. A., Rouet, J.-F., & Braasch, J. L. G. (2013). Documents as entities: Extending the situation model theory of comprehension. In M. A. Britt, S. R. Goldman, & J.-F. Rouet (Eds.), Reading – From words to multiple texts (pp. 174–193). Routledge. https://doi.org/10.4324/9780203131268

- Bromme, R., & Goldman, S. R. (2014). The public’s bounded understanding of science. Educational Psychologist, 49(2), 59–69. https://doi.org/10.1080/00461520.2014.921572

- Bromme, R., Kienhues, D., & Porsch, T. (2010). Who knows what and who can we believe? Epistemological beliefs are beliefs about knowledge (mostly) to be attained from others. In L. D. Bendixen, & F. C. Feucht (Eds.), Personal epistemology in the classroom: Theory, research, and implications for practice (pp. 163–193). Cambridge University Press. https://doi.org/10.1017/CBO9780511691904

- Bromme, R., Thomm, E., & Wolf, V. (2015). From understanding to deference: Laypersons’ and medical students’ views on conflicts within medicine. International Journal of Science Education, Part B, 5(1), 68–91. https://doi.org/10.1080/21548455.2013.849017

- Danielson, D. R. (2006). Web credibility. In C. Ghaoui (Ed.), Encyclopedia of human computer interaction (pp. 713–721). IGI Global. https://doi.org/10.4018/978-1-59140-562-7

- Gottschling, S., Kammerer, Y., & Gerjets, P. (2019). Readers’ processing and use of source information as a function of its usefulness to explain conflicting scientific claims. Discourse Processes, 56(5–6), 429–446. https://doi.org/10.1080/0163853X.2019.1610305

- Halverson, K. L., Siegel, M. A., & Freyermuth, S. K. (2010). Non-science majors’ critical evaluation of websites in a biotechnology course. Journal of Science Education and Technology, 19(6), 612–620. https://doi.org/10.1007/s10956-010-9227-6

- Hovland, C. I., & Weiss, W. (1951). The influence of source credibility on communication effectiveness. Public Opinion Quarterly, 15(4), 635–650. https://doi.org/10.1086/266350

- Johnson, B. B., & Dieckmann, N. F. (2018). Lay Americans’ views of why scientists disagree with each other. Public Understanding of Science, 27(7), 824–835. https://doi.org/10.1177/0963662517738408

- Kammerer, Y., Kalbfell, E., & Gerjets, P. (2016). Is this information source commercially biased? How contradictions between web pages stimulate the consideration of source information. Discourse Processes, 53(5–6), 430–456. https://doi.org/10.1080/0163853X.2016.1169968

- Kendeou, P., Braasch, J. L. G., & Bråten, I. (2016). Optimizing conditions for learning: Situating refutations in epistemic cognition. Journal of Experimental Education, 84(2), 245–263. https://doi.org/10.1080/00220973.2015.1027806

- Kobayashi, K. (2014). Students’ consideration of source information during the reading of multiple texts and its effect on intertextual conflict resolution. Instructional Science, 42(2), 183–205. https://doi.org/10.1007/s11251-013-9276-3

- Kolstø, S. D. (2001). “To trust or not to trust, … ” -pupils’ ways of judging information encountered in a socio-scientific issue. International Journal of Science Education, 23(9), 877–901. https://doi.org/10.1080/09500690010016102

- Lang, F., Kammerer, Y., Oschatz, K., Stürmer, K., & Gerjets, P. (2020). The role of beliefs regarding the uncertainty of knowledge and mental effort as indicated by pupil dilation in evaluating scientific controversies. International Journal of Science Education, 42(3), 350–371. https://doi.org/10.1080/09500693.2019.1710875

- Lin, S. F., Lin, H. S., & Wu, Y. Y. (2013). Validation and exploration of instruments for assessing public knowledge of and attitudes toward nanotechnology. Journal of Science Education and Technology, 22(4), 548–559. https://doi.org/10.1007/s10956-012-9413-9

- List, A., & Alexander, P. A. (2017). Cognitive affective engagement model of multiple source use. Educational Psychologist, 52(3), 182–199. https://doi.org/10.1080/00461520.2017.1329014

- List, A., Alexander, P. A., & Stephens, L. A. (2017). Trust but verify: Examining the association between students’ sourcing behaviors and ratings of text trustworthiness. Discourse Processes, 54(2), 83–104. https://doi.org/10.1080/0163853X.2016.1174654

- Otero, J. C., & Campanario, J. M. (1990). Comprehension evaluation and regulation in learning from science texts. Journal of Research in Science Teaching, 27(5), 447–460. https://doi.org/10.1002/tea.3660270505

- Paul, J., Stadtler, M., & Bromme, R. (2019). Effects of a sourcing prompt and conflicts in reading materials on elementary students’ use of source information. Discourse Processes, 56(2), 155–169. https://doi.org/10.1080/0163853X.2017.1402165

- Perfetti, C. A., Rouet, J.-F., & Britt, M. A. (1999). Toward a theory of documents representation. In H. van Oostendorp & S. R. Goldman (Eds.), The construction of mental representations during reading (pp. 88–108). Lawrence Erlbaum Associates Publishers.

- Pillai, R. G., & Bezbaruah, A. N. (2017). Perceptions and attitude effects on nanotechnology acceptance: An exploratory framework. Journal of Nanoparticle Research, 19(2), 41–54. https://doi.org/10.1007/s11051-016-3733-2

- Pornpitakpan, C. (2004). The persuasiveness of source credibility: A critical review of five decades’ evidence. Journal of Applied Social Psychology, 34(2), 243–281. https://doi.org/10.1111/j.1559-1816.2004.tb02547.x

- Richter, T., & Maier, J. (2017). Comprehension of multiple documents with conflicting information: A two-step model of validation. Educational Psychologist, 52(3), 148–166. https://doi.org/10.1080/00461520.2017.1322968

- Rouet, J.-F., & Britt, M. A. (2011). Relevance processes in multiple document comprehension. In M. T. McCrudden, J. P. Magliano, & G. Schraw (Eds.), Text relevance and learning from text (pp. 19–52). Information Age Publishing.

- Rouet, J.-F., Britt, M. A., & Durik, A. M. (2017). RESOLV: Readers’ representation of reading contexts and tasks. Educational Psychologist, 52(3), 200–215. https://doi.org/10.1080/00461520.2017.1329015

- Rouet, J.-F., Saux, G., Ros, C., Stadtler, M., Vibert, N., & Britt, M. A. (2020). Inside document models: Role of source attributes in readers’ integration of multiple text contents. Discourse Processes, 1–20. https://doi.org/10.1080/0163853X.2020.1750246

- Saux, G., Britt, M. A., Le Bigot, L., Vibert, N., Burin, D., & Rouet, J.-F. (2017). Conflicting but close: Readers’ integration of information sources as a function of their disagreement. Memory and Cognition, 45(1), 151–167. https://doi.org/10.3758/s13421-016-0644-5

- Sharon, A. J., & Baram-Tsabari, A. (2020). Can science literacy help individuals identify misinformation in everyday life? Science Education, 104(5), 873–894. https://doi.org/10.1002/sce.21581

- Sperber, D., Clément, F., Heintz, C., Mascaro, O., Mercier, H., Origgi, G., & Wilson, D. (2010). Epistemic vigilance. Mind and Language, 25(4), 359–393. https://doi.org/10.1111/j.1468-0017.2010.01394.x

- Stadtler, M., & Bromme, R. (2014). The content–source integration model: A taxonomic description of how readers comprehend conflicting scientific information. In D. N. Rapp & J. L. G. Braasch (Eds.), Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences (pp. 379–402). MIT Press.

- Stadtler, M., Paul, J., Globoschütz, S., & Bromme, R. (2015). Watch out! – An instruction raising students’ epistemic vigilance augments their sourcing activities. In D. C. Noelle, R. Dale, A. S. Warlaumont, J. Yoshimi, T. Matlock, C. D. Jennings, & P. P. Maglio (Eds.), Proceedings of the 37th Annual Conference of the Cognitive Science Society (pp. 2278–2283). Cognitive Science Society.

- Thomm, E., Barzilai, S., & Bromme, R. (2017). Why do experts disagree? The role of conflict topics and epistemic perspectives in conflict explanations. Learning and Instruction, 52, 15–26. https://doi.org/10.1016/j.learninstruc.2017.03.008

- Thomm, E., & Bromme, R. (2016). How source information shapes lay interpretations of science conflicts: Interplay between sourcing, conflict explanation, source evaluation, and claim evaluation. Reading and Writing, 29(8), 1629–1652. https://doi.org/10.1007/s11145-016-9638-8

- Thomm, E., Hentschke, J., & Bromme, R. (2015). The explaining conflicting scientific claims (ECSC) questionnaire: Measuring laypersons’ explanations for conflicts in science. Learning and Individual Differences, 37, 139–152. https://doi.org/10.1016/j.lindif.2014.12.001

- Tippett, C. D. (2010). Refutation text in science education: A review of two decades of research. International Journal of Science and Mathematics Education, 8(6), 951–970. https://doi.org/10.1007/s10763-010-9203-x

- Van Boekel, M., Lassonde, K. A., O’Brien, E. J., & Kendeou, P. (2017). Source credibility and the processing of refutation texts. Memory & Cognition, 45(1), 168–181. https://doi.org/10.3758/s13421-016-0649-0

- Werner da Rosa, C., & Otero, J. (2018). Influence of source credibility on students’ noticing and assessing comprehension obstacles in science texts. International Journal of Science Education, 40(13), 1653–1668. https://doi.org/10.1080/09500693.2018.1501168

Appendices

Appendix A. Introduction to the scientific conflict and the task, as presented to the study participants (translated from German)

___________________________________________________________________________

Information about a controversy in the field of nanotechnology is presented below. Please read this information carefully.

Introduction to the topic ‘Nanoparticles in sunscreen’

Nanoparticles of zinc oxide and titanium dioxide have been used in the production of sunscreens for some time. The advantage of these particles is that they effectively reflect a broad UV spectrum. Thus, chemical UV filters can be avoided. Chemical UV filters convert UV radiation into heat on the skin and can trigger allergies or have unwanted hormonal side effects. Such side effects are not known to occur with UV filters containing mineral nanoparticles that block and reflect UV radiation. Furthermore, particularly high sun protection factors can be achieved by using nanoparticles. However, it is controversial whether the tiny nanoparticles can enter the body through the skin, where they could have unknown and undesirable effects on our health.

In the following, statements by two scientists on this controversy are presented, which can be found on their respective websites. Please read both statements carefully and then answer some questions about the controversy.

___________________________________________________________________________

Appendix B. Components of the claims presented in the study, based on the manipulation of trustworthiness and expertise and on the position taken in the scientific conflict (translated from German)

___________________________________________________________________________

Component 1: First part of source information

A state-funded professor working in the field of nanoscience at a university assumes that …

An industry-funded professor working in a nanoscience company assumes that …

A state-funded junior scientist working in the field of nanoscience at a university assumes that …

An industry-funded junior scientist working in a nanoscience company assumes that …

Component 2: Position

1) nanoparticles do not penetrate the upper layers of the skin and therefore cannot have an undesirable effect on our health.

2) nanoparticles can penetrate deep into the skin and can have undesirable effects on our health.

Component 3: Second part of source information

This professor has been researching this topic at his university for about ten years and writes on his website: …

This professor has been researching this topic at his company for about ten years and writes on his website: …

This junior scientist has been researching this topic at his university for about a year and writes on his website: …

This junior scientist has been researching this topic at his company for about a year and writes on his website: …

Component 4: Quote

‘The results of our study indicate that the nanoparticles used cannot penetrate the upper layers of skin and therefore cannot come into contact with living cells.’

‘The results of our study indicate that the nanoparticles used can penetrate deep into the skin layers and thus come into contact with living cells and the bloodstream.’

___________________________________________________________________________

The two conflicting claims presented to each participant were built from these components according to the experimental condition for source expertise and trustworthiness, following this logic:

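Source information

State-funded professor at a university, researching the topic for about ten years = high expertise / high trustworthiness

Industry-funded professor in a company, researching the topic for about ten years = high expertise / low trustworthiness

State-funded junior scientist at a university, researching the topic for about a year = low expertise / high trustworthiness

Industry-funded junior scientist in a company, researching the topic for about a year = low expertise / low trustworthiness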

Position within the conflict

1) = in favour of nanoparticles in sunscreen

2) = against nanoparticles in sunscreen
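For readers who wish to reproduce the materials, the assembly of a single claim from Components 1 to 4 can be sketched in Python. This is a hypothetical illustration, not the software used in the study: the dictionary names and the function `build_claim` are assumptions, and the sketch covers only how one claim is composed, not how the two claims were paired within each experimental condition.

```python
# Hypothetical sketch: compose one claim from the Appendix B components.
# Names (SOURCE_PART_1, build_claim, ...) are illustrative assumptions.

# Components 1 and 3, keyed by (expertise, trustworthiness)
SOURCE_PART_1 = {
    ("high", "high"): "A state-funded professor working in the field of nanoscience at a university assumes that",
    ("high", "low"): "An industry-funded professor working in a nanoscience company assumes that",
    ("low", "high"): "A state-funded junior scientist working in the field of nanoscience at a university assumes that",
    ("low", "low"): "An industry-funded junior scientist working in a nanoscience company assumes that",
}
SOURCE_PART_2 = {
    ("high", "high"): "This professor has been researching this topic at his university for about ten years and writes on his website:",
    ("high", "low"): "This professor has been researching this topic at his company for about ten years and writes on his website:",
    ("low", "high"): "This junior scientist has been researching this topic at his university for about a year and writes on his website:",
    ("low", "low"): "This junior scientist has been researching this topic at his company for about a year and writes on his website:",
}

# Components 2 and 4, keyed by position (1 = in favour, 2 = against)
POSITION = {
    1: "nanoparticles do not penetrate the upper layers of the skin and therefore cannot have an undesirable effect on our health.",
    2: "nanoparticles can penetrate deep into the skin and can have undesirable effects on our health.",
}
QUOTE = {
    1: "‘The results of our study indicate that the nanoparticles used cannot penetrate the upper layers of skin and therefore cannot come into contact with living cells.’",
    2: "‘The results of our study indicate that the nanoparticles used can penetrate deep into the skin layers and thus come into contact with living cells and the bloodstream.’",
}


def build_claim(expertise: str, trustworthiness: str, position: int) -> str:
    """Concatenate Components 1, 2, 3 and 4 into one claim text."""
    source = (expertise, trustworthiness)
    return " ".join([
        SOURCE_PART_1[source],  # Component 1: who the source is
        POSITION[position],     # Component 2: the position taken
        SOURCE_PART_2[source],  # Component 3: research duration and setting
        QUOTE[position],        # Component 4: the quote
    ])


if __name__ == "__main__":
    # e.g. an industry-funded professor arguing that nanoparticles are safe
    print(build_claim("high", "low", 1))
```

Keying source texts by (expertise, trustworthiness) mirrors the 2 × 2 source manipulation directly, so each of the four source profiles can be combined with either position.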

Appendix C. Item used for the measurement of source memory with the instructions given to the participants of the study (translated from German)

___________________________________________________________________________

Memory questions

In the following, we are interested in how well you remember where each statement came from. For each of the two statements, please select the source you consider to be correct.

First claim

_____ assumes that nanoparticles do not penetrate the upper layers of the skin and therefore cannot have an undesirable effect on our health. He writes on his website: ‘The results of our study indicate that the nanoparticles used cannot penetrate the upper layers of skin and therefore cannot come into contact with living cells.’

Who provided this statement? (the four alternatives were presented in random order)

o an industry-funded junior scientist working in a nanoscience company for one year

o a state-funded junior scientist working in the field of nanoscience at a university for one year

o an industry-funded professor working in a nanoscience company for ten years

o a state-funded professor working in the field of nanoscience at a university for ten years

___________________________________________________________________________