ABSTRACT

When individuals in our knowledge society assess the extent of their own knowledge, they may overestimate what they actually know. However, this knowledge illusion can be reduced when people are prompted to explain the content. To investigate whether this holds true for written self-explanations about science phenomena, this study transfers the Illusion of explanatory depth (IOED) paradigm to learning from a written science-related text. In an experimental group design, individuals (N = 155) first read information on artificial-intelligence-supported weather forecasting and then either did or did not produce a written explanation on the topic. Afterwards, they rated their own knowledge on the topic, rated experts’ knowledge on the topic, answered questions on their strategies for handling scientific information, and rated their own topic-specific intellectual humility. Results show that participants in all experimental conditions rated their own knowledge significantly lower than that of experts; however, providing the written explanation about predicting severe weather events did not significantly affect the dependent measures. Implications address how giving explanations may influence judgements of one’s own and scientists’ knowledge in the context of reading science-related texts.

Introduction

In our knowledge society we depend on scientific information and the experts who provide it. For instance, adult individuals report that they often inform themselves about science and search for scientific information online (e.g. Wissenschaft im Dialog/Kantar Emnid, Citation2019). In doing so, individuals are confronted with different types of information, ranging from information that is simplistic and oriented towards laypeople to information that uses highly specialized jargon and is geared towards experts (Bromme & Jucks, Citation2018).

Understanding complex scientific claims is a highly demanding task, also because a person’s depth of understanding depends on the level of their prior knowledge (Bromme & Goldman, Citation2014). The more details we request to know when trying to understand a scientific topic, the more the explanation about that topic becomes specific and interconnected with other pieces of information (Keil, Citation2006). For instance, a simple answer to how meteorological scientists predict the weather is that they compile data from weather stations at different locations and compute and analyse models based on that data. If we inquire further, we may learn about various forecasting tools and differential equation models that differ in what they predict depending on certain spatial and temporal variables (e.g. current weather, climate change, sea ice and glacier cover, severe weather events) and depending on which type of data is used (e.g. wind, air density, temperature, pressure). Essentially, as complexity expands, each new concept is related to other concepts at a similar level of depth (Keil, Citation2006).

While experts working on specific problems are equipped with the knowledge and skills to understand and evaluate the complexities and uncertainties of science, everyone else may only be able to gain a simplified and thus bounded perspective on how science works (Bromme & Goldman, Citation2014). In contrast to a typical educational context, where students are instructed to further their metacognitive understanding of scientific topics by elaborating and self-evaluating their knowledge, adults in an informal setting commonly do not experience such scaffolding. The realities of a high distribution of scientific knowledge can therefore pose further challenges for their engagement with science.

The role of explanations

Empirical studies suggest that when people are prompted to explain something, they can make better sense of causal mechanisms, can better understand their complexity, and can gain metacognitive awareness of their own knowledge (Lachner et al., Citation2020). According to Lombrozo (Citation2006), explanations are not mere summarizations, because explaining something allows the explainer to filter out some facts to fit the logic of the causal mechanism. Due to their communicative and elaborative nature, explanations provide an opportunity for individuals to identify the strengths and weaknesses of their own understanding (Duschl & Osborne, Citation2002). Moreover, dialogic practices such as argumentation and explanation include verbal and social aspects of reasoning (Asterhan & Schwarz, Citation2016). Discussing, explaining, and arguing about scientific topics is a learning activity that is related to taking different perspectives and elaborating one’s own knowledge, especially outside of the traditional educational setting (Jucks & Mayweg-Paus, Citation2016). While prior studies have focused on the relationship between explaining and gaining knowledge in an educational context, the role of explaining scientific topics in an informal context has not been extensively investigated.

The illusion of explanatory depth

Studies investigating the Illusion of explanatory depth (IOED) (Rozenblit & Keil, Citation2002) have demonstrated how explanations affect people’s perception of their own knowledge. Because of the IOED effect, people seem to be prone to overestimating their own understanding of natural phenomena and mechanical objects before they are requested to explain them. Namely, their initial confidence in their ability to give a detailed explanation of a phenomenon is significantly higher than after they had actually experienced the struggle of giving one.

Sloman and Rabb (Citation2016) suggest that the illusion of explanatory depth occurs because people mistake their own knowledge for the perceived knowledge of experts. Rabb et al. (Citation2019) have proposed three varieties of knowledge representation in our society: (1) detailed knowledge about a small number of things (experts’ knowledge), (2) superficial knowledge about many things (laypeople’s knowledge), and (3) markers for knowledge that can be found elsewhere (on the Internet, from a colleague, in a notebook). Interestingly, and without fully realizing it, people seem to consider their own individual knowledge as being on par with society’s collective knowledge. Indeed, a study by Alter et al. (Citation2010) indicated that the IOED is more likely to emerge when people mistake their knowledge about abstract (superficial) characteristics of an object for knowledge about the concrete (detailed) characteristics of that object. Along those lines, participants who initially estimated their own knowledge more accurately, or were prompted to do so in an experiment, were less likely to overestimate their knowledge because they had a more detailed understanding of what was required of them.

Epistemic dependence

Everyone in our knowledge society is epistemically dependent on other people, albeit on different people for different issues (Origgi, Citation2012). The example of mistaking one’s own knowledge for the knowledge of experts in our community of knowledge (Rabb et al., Citation2019) illustrates how interconnected our knowledge judgments are with what we assume experts to know. This works as long as we have cognitive mechanisms in place that make us epistemically vigilant about the credibility and the source of information, and even about ourselves (Origgi, Citation2012). The reality of a high distribution of knowledge implies that other strategies, such as distinguishing between experts and non-experts, being intellectually humble, and being able to cope and engage with science in a practical manner, may be as important as gaining content knowledge about scientific issues (Feinstein, Citation2011; Vaupotič et al., Citation2021). Studies have shown that individuals are able to successfully differentiate between more and less reliable experts (Bromme & Thomm, Citation2016). Moreover, when individuals evaluate conflicting claims, they agree more with those from sources of higher trustworthiness or expertise (Gottschling et al., Citation2020).

Individuals’ knowledge judgments

Making accurate judgments about one’s own knowledge and about experts’ knowledge is a complex task, especially without scaffolding (e.g. monitoring and control) and particularly for tasks in which external criteria for successful performance are not clearly defined (Pieschl, Citation2009). Prior beliefs and contextual factors influence people’s knowledge judgments. For instance, in an experimental study that asked participants about their understanding of how foods are genetically modified, those who thought they knew the most about the topic actually knew the least (Fernbach et al., Citation2019). In another empirical study, students were presented with the same amount of information in either a high-fluency video lecture or a low-fluency video lecture (Carpenter et al., Citation2013). While both groups displayed the same amount of learned information, the students exposed to the high-fluency lecture were significantly more confident in how much they learned. Similarly, Scharrer et al. (Citation2014) have shown that people are prone to judge themselves as more competent if they receive causally complex and fictitious information presented in a highly comprehensible manner (vs. a less comprehensible manner). Along these lines, Rabb et al. (Citation2019) suggested that people may feel that they understand certain concepts better if they are told that experts deeply understand them or if they can easily find them online.

One of the main dangers of overconfidence is that laypeople may be more fixed in their beliefs than would be rational, considering that they can only realistically process relatively superficial knowledge (Fernbach & Light, Citation2020). Moreover, overconfidence affects people’s effort regulation, in the sense that they will stop learning when they think they have obtained an adequate understanding of the material (e.g. Fiedler et al., Citation2019). This seems to be especially salient in digital environments, where individuals not only seem to stop learning earlier than in analog environments (Delgado et al., Citation2018), but they also are more likely to over-assume the amount of knowledge they possess (Fisher et al., Citation2015). Finally, overconfidence precludes deference to experts, because people may not recognize that they lack the necessary understanding of a concept (Scharrer et al., Citation2014).

Individuals’ strategies for dealing with the division of cognitive labour

Understanding one’s knowledge limits is practically important in situations where people need to reason about scientific issues and make corresponding decisions. When faced with these knowledge limits, people generally use two different strategies to cope with complex scientific issues: People either try to make knowledge judgments on ‘what is true’ or to make source judgments on ‘whom to trust’ (Bromme & Goldman, Citation2014). Based on this distinction, people can use first-hand, ‘what is true’ strategies (e.g. evaluate scientific reliability, search for further information), when they possess enough knowledge to make an informed decision, and they can use second-hand, ‘whom to trust’ strategies (e.g. evaluate the source, defer to further experts) when they do not. Another strategy people use is to simply rely on their own judgments (Kienhues et al., Citation2019). This strategy emphasises people’s reliance on their own non-epistemic intuitions and opinions as opposed to scrutinizing the content of information or the characteristics of the source that provided information. In an interview study by Bromme et al. (Citation2015), laypersons as well as intermediately knowledgeable people reported validation and deference to other experts as common strategies for resolving conflicting science-based knowledge claims.

Through the above-mentioned strategies, people try to achieve their epistemic aims, such as making decisions about scientific issues or forming opinions about societal and technological developments as competent outsiders (Feinstein, Citation2011). Considering how epistemic cognition is traditionally conceptualised, the notion of ‘trusting’ as a basis for making judgements may seem unsophisticated; however, due to our limitations, it may be the most reasonable strategy to adopt (Sinatra et al., Citation2014).

Domain-specific intellectual humility

To productively deal with complex scientific issues, it is also important to appropriately attend to one’s own cognitive limitations. This means revising one’s own beliefs in light of new information and productively coping with and admitting to the gaps in one’s own knowledge; this is what Whitcomb et al. (Citation2017) define as intellectual humility. Moreover, studies have shown that greater intellectual humility is linked to more accurate estimation of one’s own knowledge, more intrinsic motivation, and greater acquisition of new knowledge (Krumrei-Mancuso et al., Citation2020). In the context of domain-specific knowledge, intellectual humility is accompanied by an appropriate attentiveness to the limits of the actual evidence behind that knowledge and to one’s own limitations in obtaining and evaluating information relevant to it (Hoyle et al., Citation2016). Studies have shown that individuals display context-specific levels of intellectual humility, meaning that in different contexts, people show different levels of readiness to change or adapt their opinions in light of new viewpoints and evidence.

Present study

In the present work, we wished to investigate how adults explain a scientific topic without the scaffolding that is typical in educational settings. We specifically aimed to study a common everyday situation in which people interact with scientific information: reading about science online. We added one further element: some participants were asked to explain, in writing, their newly acquired knowledge to another person. We used a 1 × 2 experimental group design and split participants into a ‘reading group’ and an ‘explanation group’. All participants were provided with an online science news article about the use of artificial intelligence to predict severe weather events. After reading the article, participants in the explanation group were asked to explain to an acquaintance (in writing) what they had read about. Participants were instructed to generate an explanation without specific training or an example. The mode of writing the explanation enabled more control and provided an insight into participants’ complex reasoning. Participants in the reading group read the text only; they did not produce an explanation. We investigated how the different experimental conditions affected participants’ own assumed knowledge and topic experts’ assumed knowledge. Furthermore, we were interested in participants’ anticipated strategies for dealing with the division of cognitive labour and their topic-specific intellectual humility.

First, we investigated the effect of the experimental group on participants’ assumed knowledge by asking them to rate the extent of their own topic knowledge and knowledge of the scientific method, as well as the extent of field experts’ topic knowledge and knowledge of the scientific method. The ratings were given before and after participants completed the tasks (reading or reading + explaining). We expected that all participants, regardless of their experimental group, would assume to possess less knowledge than the field experts at all times, as we expected participants to be aware of the division of cognitive labour (H1). Further, we expected that all participants would assume their own knowledge to be significantly higher after reading than before reading (H2). In addition, we hypothesized the following difference between the two experimental groups: After writing the explanation, participants of the explanation group, who likely struggled to provide an elaborate explanation, would assume to possess less knowledge than the reading group (H3).

Second, after both groups finished the tasks, all participants reported what strategies they would use to gain more knowledge on the topic. Through this dependent measure, we captured the practical aspect of dealing with the division of cognitive labour. We expected participants in the explanation group to report more second-hand strategies than participants in the reading group due to the realization that their knowledge was not as thorough as they had thought (H4).

Third, after participants finished the tasks, we investigated the degree to which they perceive that their own topic-specific knowledge is fallible and limited. Through this dependent measure, we captured the attitude of participants towards their own knowledge (limits). As the participants in the explanation group would likely realize the limits of their own knowledge, we expected them to report greater topic-specific intellectual humility than participants in the reading group (H5).

The study was preregistered ahead of the data collection (https://aspredicted.org/blind.php?x=8nb5i5).

Methods

Participants

Altogether, 155 adult participants (79 female) aged between 18 and 67 (M = 30.34, SD = 9.14) were included in the analysis. Initially, 192 participants completed the survey online through the Prolific platform, a participant pool for research in the social and behavioural sciences (Palan & Schitter, Citation2018). Assuming a relatively small effect size (Cohen’s d = 0.3), a power analysis conducted with the G*Power software suggested a sample size of 147 in order to find a significant difference between the two groups (alpha error at 0.05 and power, 1 − β, at 0.95). Participation in the study was fully anonymous. All participants gave informed consent to participate in the study at the beginning of the survey and again at the end, after being debriefed about the purpose of the study. All participants were native German speakers. Participants were excluded if they failed to give a correct reply to at least two out of four knowledge control questions, provided no or nonsensical explanations, reported studying meteorology or computer science, reported a substantial amount of knowledge on the topic at the time of the first rating (5 or more on a scale from 1 (no knowledge) to 7 (a lot of knowledge)) or took more than one standard deviation less time than the average of their experimental group to complete the survey. Participants reported having a university degree in 47% of cases. The reading group took on average 12 min 48 s (SD = 4 min 19 s), and the explanation group took on average 16 min 49 s (SD = 5 min 36 s) to complete the online survey. Participants received a 4.8 € reward for their participation. On a scale from 1 (not at all) to 5 (very much), participants reported a rather high general interest in science (M = 4.14; SD = 0.73); furthermore, on a scale from 1 (never) to 5 (very often), they reported that they quite often inform themselves about science (M = 3.49; SD = 0.85), and they quite often do so with the help of online sources (M = 3.32; SD = 1.42).
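The exclusion rules described above can be sketched as a simple filter. This is a minimal illustration and not the authors’ screening script: the record fields (`correct_controls`, `valid_explanation`, `studies_related_field`, `pre_rating`, `duration_s`) are hypothetical names, the threshold of three correct control answers follows the reading-control description in the Measures section, and computing the speed cutoff over all participants of a group before any exclusion is an assumption.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Participant:
    group: str                   # "reading" or "explanation"
    correct_controls: int        # correct reading-control answers (0-4)
    valid_explanation: bool      # set True for the reading group (nothing to write)
    studies_related_field: bool  # studies meteorology or computer science
    pre_rating: int              # first self-rated topic knowledge, 1-7
    duration_s: float            # survey completion time in seconds


def apply_exclusions(participants, min_correct=3, max_pre_rating=4):
    """Return the participants who pass all (assumed) screening rules."""
    # Speed cutoff per group: group mean minus one standard deviation.
    # stdev() needs at least two participants in each group.
    durations = {}
    for p in participants:
        durations.setdefault(p.group, []).append(p.duration_s)
    cutoff = {g: mean(d) - stdev(d) for g, d in durations.items()}

    kept = []
    for p in participants:
        if p.correct_controls < min_correct:
            continue  # too few control questions answered correctly
        if not p.valid_explanation:
            continue  # missing or nonsensical explanation
        if p.studies_related_field:
            continue  # likely domain expertise
        if p.pre_rating > max_pre_rating:
            continue  # rated own prior knowledge as 5 or more
        if p.duration_s < cutoff[p.group]:
            continue  # implausibly fast completion
        kept.append(p)
    return kept
```

Keeping the thresholds as parameters makes it easy to check how sensitive the final sample is to each rule.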

Materials

Science news article

Participants were given a science news article about predicting severe weather events based on artificial intelligence algorithms. The article was taken from a well-known science news webpage and translated from English into the participants’ native language. Text difficulty indices (e.g. LIX = 63; Lenhard & Lenhard, Citation2011) were used to ensure that the comprehensibility was comparable to other science news texts aimed at people without specialized knowledge on the topic. The chosen topic was neutral in the sense that no prior belief or strong group commitment was assumed. The underlying scientific process of algorithm-based weather predictions was explained in a simplified manner and with a storyline highlighting its positive role in society. Specifically, the article included a mechanistic explanation of how satellite images of dynamic cloud formations are collected and used for identifying machine-learning classifiers that make it easier to detect severe weather events with a higher degree of certainty. Some scientific jargon was used, such as comma-shaped clouds, learning linear classifiers, supercomputer and so forth. Moreover, the article made the distinction that the algorithms could detect comma-shaped clouds with a 99% accuracy but could predict severe weather events with a 64% accuracy. The complexity of the datasets was briefly described. The online science article was retrieved from the Science Direct web page (Penn State, Citation2019; see Appendix 1 for the original text and Appendix 2 for the translated version used in the study).
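For readers unfamiliar with the LIX readability index mentioned above, the following sketch shows how it is commonly defined: the average sentence length in words plus the percentage of long words (more than six letters). The tokenisation rules here (letter runs as words, `.`, `!` and `?` as sentence boundaries) are simplifying assumptions and may differ from the tool the authors used.

```python
import re


def lix(text: str) -> float:
    """Approximate LIX: words per sentence + percentage of words longer than 6 letters."""
    # Words are runs of letters (Unicode-aware, so umlauts count as letters).
    words = re.findall(r"[^\W\d_]+", text)
    # Sentences are split on ., ! and ?; empty fragments are ignored.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words or not sentences:
        raise ValueError("text must contain at least one word and one sentence")
    long_words = [w for w in words if len(w) > 6]
    return len(words) / len(sentences) + 100 * len(long_words) / len(words)
```

Higher values indicate more demanding text; the value of 63 reported for the article would therefore reflect long sentences, many long words, or both.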

Explanation task

After reading the article, participants in the explanation group were told that an uninformed acquaintance wanted to know what they had just read about with the exact instruction to explain:

Please explain to him how artificial intelligence is used in the field of meteorology and how it supports the prediction of extreme weather events. Please write your explanation as detailed as possible and in reference to the article you just read.

Measures

As indicators of how capable the participants perceived themselves to be with regard to their knowledge about the specific topic, we measured three different aspects:

Assumed knowledge

Participants were asked to rate the extent of their own assumed knowledge on the topic as well as the extent of topic experts’ assumed knowledge on it. The knowledge rating question was split into two parts: general knowledge (How much do you/field experts generally know about using artificial intelligence algorithms for predicting severe weather events?) and knowledge about the scientific methods (How much do you/field experts know about the scientific methods underlying artificial intelligence algorithms for predicting severe weather events?). The ratings were given on a scale from 1 (no knowledge) to 7 (a lot of knowledge). Knowledge assumption ratings were given before (pre-rating) and after (post-rating) the tasks (reading or reading + explaining).

Strategies for dealing with scientific knowledge

We used the STRATEGI questionnaire (Kienhues et al., Citation2019) to determine which strategies participants use when coping with scientific knowledge claims. Ratings on 15 topic-specific items were given on a scale from 1 (I would never do this) to 5 (I would definitely do this). Based on the distinction between first-hand ‘what is true?’ strategies and second-hand ‘whom to trust?’ strategies (Bromme & Goldman, Citation2014), the instrument captures five factors: Two relate to participants’ anticipated use of first-hand strategies (‘Evaluation of scientific reliability’, ‘Searching for further information’), two relate to the anticipated use of second-hand strategies (‘Evaluation of the source’, ‘Deference to further experts’), and one refers to ‘Reliance on one’s own judgments’. The Cronbach’s α for the five factors ranges between 0.6 and 0.8. The items are attached in Appendix 3.
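As a reminder of what the reported reliability coefficient expresses, here is a minimal sketch of Cronbach’s α for a multi-item scale, computed from the item variances and the variance of the sum score. The data layout (one list of item ratings per respondent) is an assumption made for illustration; it is not the authors’ analysis code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for `responses`: one list of k item ratings per respondent."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

Values between 0.6 and 0.8, as reported for the five factors, indicate moderate to acceptable internal consistency for short three-item subscales.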

Topic-specific intellectual humility

We measured participants’ topic-specific intellectual humility with an instrument developed and validated by Hoyle et al. (Citation2016). This nine-item instrument captures fallibility, attentiveness to limitations of one’s view, and one’s ability to determine the veracity of the scientific topic on a single factor, using a scale from 1 (completely agree) to 5 (completely disagree). The Cronbach’s α for the nine items is 0.7.

Control measures

To control for potential differences between groups before the intervention, we included four items about participants’ attitudes towards science; this allowed us to control for participants who generally distrust scientific institutions and current scientific consensus about evolution, climate change, and vaccination (attitudes towards science). The items were taken from the Science Barometer (Wissenschaft im Dialog/Kantar Emnid).

Additionally, we included four multiple-choice questions about the content of the news article; these items primarily served as a control to verify that participants actually read the article and comprehended the main message (reading control). Participants who answered at least 3 out of 4 questions correctly were kept in the study (see Appendix 4).

Further, to gauge their interest in the topic, participants were asked whether they would like to receive further information about it (interest). If they answered positively, they received three additional texts about the use of artificial intelligence after completing the survey.

After writing an explanation on how artificial intelligence algorithms are used to predict severe weather events, participants of the explanation group were asked to rate how satisfied they were with the explanation they wrote (assumed explanation quality) on a scale from 1 (not satisfied at all) to 7 (very satisfied).

Procedure

Participants first reported their demographic data and gave ratings about their interests and attitudes towards science. All participants were then asked for their assumed knowledge pre-ratings. Subsequently, participants were split into two experimental groups, namely a reading group and an explanation group. Participants in both groups were asked to thoroughly read the online science article, which was followed by the assumed knowledge post-rating for the reading group and the knowledge control rating for the explanation group. Afterwards, participants in the explanation group were asked to explain the topic in writing. Finally, they rated their assumed explanation quality and gave their assumed knowledge post-ratings. Participants had an unlimited amount of time for both reading the article and writing the explanation. In the next part of the survey, all participants were requested to think about their anticipated strategies for informing themselves further about the topic by answering the items of the STRATEGI questionnaire. They next answered the intellectual humility scale. Lastly, participants answered the four reading control questions. Before finishing, participants indicated whether they would like to receive additional similar articles to inform themselves further about the topic.

Results

Assumed knowledge

To compare changes in the experimental groups with regard to assumed knowledge (hypotheses 1–3), we fitted a mixed-effects model with the fixed ‘between factor’ being the group (reading group vs. explanation group) and the two fixed ‘within factors’ being (1) the pre-rating and post-rating, and (2) participants’ own assumed knowledge and field experts’ assumed knowledge. We calculated two models, one for the topic knowledge and one for the scientific methods knowledge. In both, we accounted for the dependencies between ratings made by the same participant as the random effect factor; however, the random effects were not interpreted. All effects are reported as significant at p < .05. As a measure of the effect size we used partial eta squared. The main data analyses were done in the R software using the package afex (Singmann et al., Citation2015).
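To make the structure of this design concrete, the sketch below reshapes one participant’s four ratings per model (pre/post × own/expert) into the long format that mixed-effects software typically expects, with one row per rating and the participant identifier available as the grouping variable for the random effect. The field names (`pre_own`, `post_expert`, and so on) are hypothetical and chosen only for illustration.

```python
from itertools import product


def to_long(records):
    """Turn wide per-participant records into one row per (time, target) rating."""
    rows = []
    for rec in records:
        for time, target in product(("pre", "post"), ("own", "expert")):
            rows.append({
                "id": rec["id"],        # participant: grouping for the random effect
                "group": rec["group"],  # between factor: reading vs. explanation
                "time": time,           # within factor 1: pre- vs. post-rating
                "target": target,       # within factor 2: own vs. experts' knowledge
                "rating": rec[f"{time}_{target}"],
            })
    return rows
```

With 155 participants this yields 620 rows per model, four for each participant.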

The means and standard deviations of the ratings for assumed topic-specific knowledge and scientific method knowledge are shown in Table 1, separated according to the experimental group and the time at which the rating was taken. Of 155 participants, 76 were randomly allocated to the reading group and 79 were randomly allocated to the explanation group. Prior to the main analyses, we conducted an independent samples t-test to compare content knowledge scores between both groups. We found no significant difference between the reading and explanation group (t = −0.73, df = 142, p = .47). Moreover, we tested whether the groups differed regarding their interest in receiving further literature about the topic. We conducted a chi-square test and found no significant difference, χ²(1, N = 155) = 0.05, p = .82.

Table 1. Means and standard deviations of assumed knowledge ratings, split up by experimental group as well as by pre-rating and post-rating.

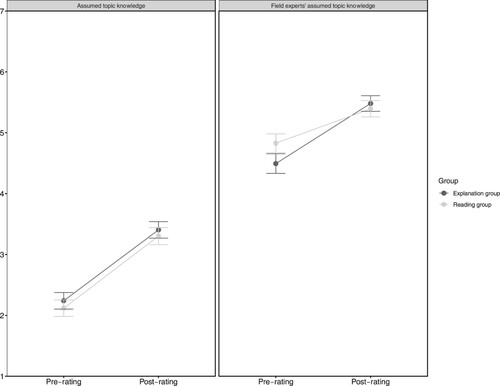
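The chi-square comparison of the two groups’ interest in further literature can be illustrated with a small sketch. It computes the Pearson chi-square statistic for a 2 × 2 table (without continuity correction) and the p-value for one degree of freedom via the complementary error function. The cell counts of the study are not reported, so any table passed to the function is hypothetical; only the reported statistic of 0.05 is taken from the text.

```python
import math


def chi_square_p_df1(statistic: float) -> float:
    """Upper-tail p-value of a chi-square statistic with one degree of freedom."""
    return math.erfc(math.sqrt(statistic / 2))


def chi_square_2x2(table):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    n = a + b + c + d
    margins = (a + b, c + d, a + c, b + d)
    if min(margins) == 0:
        raise ValueError("every row and column needs at least one observation")
    statistic = n * (a * d - b * c) ** 2 / math.prod(margins)
    return statistic, chi_square_p_df1(statistic)
```

Applied to the reported statistic, `chi_square_p_df1(0.05)` gives a p-value of roughly .82, in line with the value stated above.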

Assumed topic knowledge

For assumed topic knowledge, we found no significant main effect of the experimental group, β = 0.01, SE = 0.12, z(153) = 0.05. There was a significant main difference between participants’ own assumed topic knowledge and field experts’ assumed topic knowledge, β = 2.50, SE = 0.12, z(462) = 20.53, p < .001, ηp² = .36, as well as a significant difference between pre-ratings and post-ratings, β = 1.12, SE = 0.16, z(672) = 6.67, p < .001, ηp² = .10. There was a significant interaction effect between both within factors; namely, the increase between pre-rating and post-rating was significantly larger for assumed own topic knowledge in comparison to the increase between pre-rating and post-rating of field experts’ assumed topic knowledge, β = −.58, SE = 0.23, z(672) = −2.46, p = .01, ηp² = .01. The assumed knowledge ratings before and after the tasks are shown in the figure below.

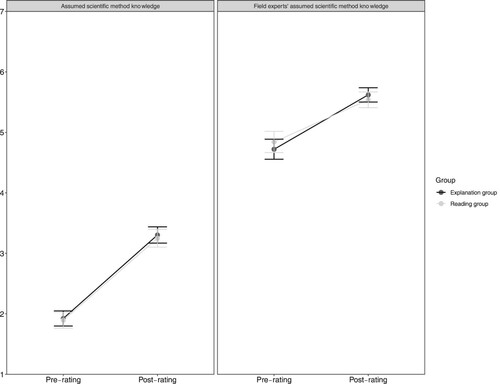

Assumed scientific method knowledge

Regarding participants’ assumed knowledge on the scientific method, we found no significant main effect of the experimental group, β = 0.01, SE = 0.13, z(153) = −0.08. There was a significant main difference between participants’ own assumed knowledge about the scientific method and field experts’ assumed knowledge about the scientific method, β = 2.8, SE = 0.12, z(462) = 23.36, p < .01, ηp² = .52, as well as a significant main difference between pre-ratings and post-ratings, β = 1.37, SE = 0.12, z(462) = 11.12, p < .01, ηp² = .16. There was a significant interaction effect between both within factors; namely, the increase between pre-rating and post-rating was significantly larger for participants’ own assumed knowledge about the scientific method in comparison to field experts’ assumed knowledge about the scientific method, β = −.56, SE = 0.17, z(462) = −3.266, p < .01, ηp² = .01. The assumed knowledge ratings for the scientific method knowledge before and after the tasks are shown in the corresponding figure.

Reported strategies and intellectual humility

Regarding hypotheses 4 and 5, we conducted a multivariate analysis of variance for reported strategies and intellectual humility between the reading group and the explanation group. The multivariate result was not significant for the experimental group, Pillai’s Trace = .05, F = 1.43, df = (6,148). Box’s homogeneity of covariance matrices test was not statistically significant (χ² = 18.4, p = 0.66). The univariate F tests with Bonferroni corrections showed a significant difference between the reading group and the explanation group for the factor ‘Reliance on one’s own judgments’ (F(1,153) = 4.57, p = .03, η² = 0.03). The descriptive statistics for the use of strategies and for intellectual humility are reported in Table 2.

Table 2. Descriptive statistics for reported strategies and intellectual humility by experimental group.
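The effect size reported for the univariate test can be recovered from the F statistic and its degrees of freedom. The sketch below uses the standard conversion for a single effect, F · df_effect / (F · df_effect + df_error); it is offered as a plausibility check and is not taken from the authors’ analysis script.

```python
def eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """(Partial) eta squared implied by an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    return f_value * df_effect / (f_value * df_effect + df_error)
```

For the reported F(1,153) = 4.57, the function returns approximately 0.029, which rounds to the stated η² of 0.03.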

Further analyses

In addition to testing our five hypotheses, we were interested in the relationship between participants’ ratings of their own assumed knowledge, ratings of field experts’ assumed knowledge, reported strategies and intellectual humility. We also had a deeper look into the explanations provided by the explanation group.

Correlations between assumed knowledge, reported strategies, and intellectual humility

We analysed correlations between assumed knowledge post-ratings, reported strategies, and intellectual humility. A significant positive correlation was found between assumed own knowledge (general and method) and reported ‘Deference to further experts’ (r(153) = 0.17, p < .05; r(153) = 0.19, p < .05) and a significant negative correlation to intellectual humility (r(153) = −0.21). A positive correlation was found between participants’ ratings of field experts’ assumed knowledge and the strategies ‘Source evaluation’ (r(153) = 0.16, p < .05) and ‘Searching for further information’ (r(153) = 0.19, p < .05). A perceived gap between participants’ own and field experts’ assumed knowledge of the scientific method was negatively related to ‘Reliance on one’s own judgments’ (r(153) = −0.20). Furthermore, strong and significant correlations (p < .001) between 0.35 and 0.32 were found for ratings of participants’ own assumed knowledge about the topic and the scientific method as well as for experts’ assumed knowledge about the topic and the scientific method. Topic-specific intellectual humility was significantly and negatively correlated with ‘Reliance on one’s own judgments’ (r(153) = −0.33, p < .001) and significantly and positively correlated with ‘Content evaluation’ (r(153) = 0.37, p < .001) and with ‘Deference to further experts’ (r(153) = 0.22, p < .01). The correlation matrix is shown in Table 3.

Table 3. Correlations between assumed (scientific method) knowledge, strategies for coping with the division of cognitive labour and intellectual humility.
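To illustrate how the significance thresholds attached to the correlations above relate to the sample size, the sketch below approximates two-sided p-values with the Fisher z transformation. This is an assumption made for illustration: the original analysis presumably used the exact t-based test, and the function name and labels are hypothetical. The sample size N = 155 follows from the reported degrees of freedom (df = N − 2 = 153).

```python
import math


def approx_p_from_r(r: float, n: int) -> float:
    """Approximate two-sided p-value for a Pearson r using the Fisher z transformation."""
    z = math.atanh(r) * math.sqrt(n - 3)  # approximately standard normal under H0
    return math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability


N = 155  # r(153) implies df = N - 2

# A few of the coefficients reported in the text (labels shortened for display)
reported = [
    ("own knowledge (general) x deference to experts", 0.17),
    ("experts' knowledge x source evaluation", 0.16),
    ("intellectual humility x content evaluation", 0.37),
]

for label, r in reported:
    print(f"{label}: r = {r:.2f}, approx. p = {approx_p_from_r(r, N):.4f}")
```

With N = 155, coefficients as small as 0.16 already fall below the .05 threshold, so statistical significance here should not be read as an indication of a large association.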

Exploratory analyses of participants’ explanations

To gain a better impression of what the participants of the explanation group wrote when they were asked to explain the issue, we analysed their explanations regarding length and content. We analysed to what extent participants wrote simplified and easy texts, and whether they made references to scientific uncertainty and complexity. Coding was done by the first author of the paper. To evaluate the reliability, a second rater analysed the first 50 explanations.

The average length of participants’ written explanations was 56.67 (SD = 28.17) words. The length of the explanations was positively correlated with participants’ confidence in providing a good explanation (r(77) = 0.456, p < .001), and positively but not significantly with participants’ own knowledge rating (r(77) = 0.214, p = .058). Regarding the content of the explanations, factual information such as specific numbers, percentages, names of research institutions, or technical terminology was mentioned on average 2.5 times (SD = 1.2) per provided explanation. Inter-rater agreement (ICC = 0.88) between two raters was sufficiently high (Koo & Li, 2016). All in all, participants’ explanations were frequently summaries of the article rather than actual explanations. Out of 79 provided explanations, 62 were written in a conclusive and causally simple manner (both raters agreed in 91% of cases), in the sense that participants mentioned clear causal mechanisms and absolutistic scientific solutions, such as in the following excerpt:

Meteorologists have developed an algorithm based on weather data that recognizes and evaluates weather in real time and detects upcoming weather disturbances. These storms all develop according to the same pattern and have a comma-shaped appearance. The artificial intelligence searches for such patterns. Thus, the people in the affected areas can be warned, and the risk of damage due to unexpected severe weather events can be reduced.

Based on a science news article, a computer program was developed by researchers. This program detects a certain shape of clouds (in this case comma-shaped) based on a lot of data that was analysed beforehand. These are often the cause of storms or more severe weather events. The AI is sometimes apparently able to recognize cloud formations in the beginning, which humans do not yet recognize. I do not know much more about it.
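The double-coding check described above can be sketched as follows. The example computes the raw percentage of agreement between two raters on a binary code (causally simple or not) and, as an addition that is not reported in the study, Cohen's kappa as a chance-corrected counterpart. All codes in the example are hypothetical and serve only to show the calculation; the function names are likewise illustrative.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which both raters assigned the same code."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement for two raters and nominal codes."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    # Agreement expected if both raters coded independently at their own base rates
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes for ten explanations (1 = causally simple, 0 = not)
rater_a = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]
rater_b = [1, 1, 1, 0, 1, 1, 1, 1, 0, 1]

print(f"agreement = {percent_agreement(rater_a, rater_b):.2f}")
print(f"kappa     = {cohens_kappa(rater_a, rater_b):.2f}")
```

Raw agreement tends to look high when one code dominates, as it does when most explanations are classified as causally simple, which is why a chance-corrected index can be a useful complement. Note that the kappa function is undefined when expected agreement equals 1, that is, when both raters use a single identical code throughout.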

Discussion

In the present study, we wished to investigate the influence of engaging with a science news text in different ways, namely either by just reading it or by reading it and explaining the topic to another person. We focused on how these different engagements influenced participants’ ratings of their own and of experts’ knowledge about a science topic, their reported strategies for coping with the division of cognitive labour and their topic-specific intellectual humility.

In line with our first two hypotheses (H1 & H2), results showed that participants, regardless of the experimental group, perceived their own knowledge (both topic and method knowledge) as lower than experts’ knowledge at all times, meaning that they were aware of the division of cognitive labour. Furthermore, after engaging with the science topic in either of the two ways, participants rated their knowledge as well as the knowledge of experts as being significantly higher than before reading the science news article. The perceived increase in knowledge was significantly larger for their ratings of their own knowledge than for their ratings of scientists’ knowledge, although the perception of their own knowledge was still rather low. The pattern of ratings for general knowledge and method knowledge was almost identical.

Regarding the third hypothesis (H3), results unexpectedly showed that participants who additionally had to prepare a written explanation did not rate their knowledge significantly differently after doing so than the participants who only read the science news article. Regarding H4, we found that participants in the explanation group reported a higher reliance on their own judgments, which indicates that they saw themselves as more capable than the reading group of making up their own minds about the science topic. While we anticipated that participants would actually become more critical about their own knowledge, our results showed the opposite. Nonetheless, the strategy of ‘relying on one’s own knowledge’ was the least endorsed strategy overall, and no other significant differences were found with regard to reported strategies for coping with the division of cognitive labour. Contrary to H5, we did not find significant differences between the two experimental groups concerning topic-specific intellectual humility.

To gain a better understanding of these results, we qualitatively investigated how participants explained to their uninformed friend what they read about. We found that the explanations given by participants were more often summaries of what they read in the text than actual explanations of how algorithms were used to predict severe weather events. While previous studies have indicated that explanation can be especially useful for learning when individuals integrate their previous knowledge with new knowledge (O’Reilly et al., 1998), this was not the case in our study. Given that participants’ explanations contained factual information, participants may have experienced an increased feeling of knowing, as being able to recall specific factual information is related to a higher feeling of knowing (Koriat, 1995). Moreover, most of the participants did not go into detail about how artificial intelligence algorithms are informed by visual data; instead, they focused on the simple storyline of how experts are more accurately able to predict severe weather events. Overall then, as participants did not actually explain the complex mechanism outlined in the science news article, they likely were not disillusioned by the limited amount of knowledge they actually possessed. Quite the contrary, because participants were able to recall some information, this might have increased their confidence (Baker & Dunlosky, 2006). Participants also did not adhere to the structure of explanation as it is defined in the literature (e.g. Lombrozo, 2006). The relatively high satisfaction with their explanations demonstrates that participants were probably rather ignorant of the fact that they lacked significant depth of detail. Taken together, the characteristics of the explanations the participants provided further add to the evidence that individuals without expertise seek simple causal explanations of scientific findings (Scharrer et al., 2014).

The IOED phenomenon is more likely to emerge when people mistake their mastery of abstract characteristics about a concept for an assumed understanding of the concrete details of a concept (Alter et al., 2010). Here, participants may have mistaken their simplified explanation for a detailed mechanistic explanation. However, this does not necessarily mean that people actually have no knowledge when they think they do. We found a reasonable pattern in how participants’ ratings of their own knowledge related to their ratings of scientists’ knowledge as well as their anticipated strategies for coping with the division of cognitive labour: Participants who gave higher ratings of experts’ knowledge also tended to use the strategy of source evaluation, implying that the more scientists are perceived as knowledgeable, the more people are likely to try to evaluate their credibility as the providers of information. Moreover, participants’ ratings of their own knowledge were positively related to reported deference to further experts. Therefore, the more knowledge participants perceived they had, the more likely they were to defer to experts as a useful strategy for coping with the division of cognitive labour.

Further, as mentioned above, we found that participants’ ratings of their own and scientists’ knowledge were positively correlated. This is in line with what Rabb et al. (2019) described as the difficulty in distinguishing between one’s own knowledge, community knowledge, and markers of knowledge: People may perceive themselves as more knowledgeable about a scientific phenomenon if they think that scientists understand it and are able to explain it. As we identified a robust correlation between the participants’ ratings of their own knowledge and scientists’ knowledge, this indicates that the more the participants rated themselves as knowledgeable, the more they also rated scientists as knowledgeable, thus keeping the knowledge gap rather stable. These results further imply that reading the science news article also enhanced participants’ perception of what experts know, which can be seen as a positive outcome of understanding the community of knowledge (Rabb et al., 2019).

It was not our aim to problematize the fact that participants in the explanation group were rather satisfied with the explanations they provided, even though the explanations were often simplified and not very detailed. This is because from reading the text, they actually did gain some knowledge regarding algorithm-based predictions of severe weather events, and they did in fact receive information about what scientists currently know. These knowledge gains were also evidenced by participants’ accurate responses to the reading control questions. Apart from content knowledge, participants also likely gained knowledge about the nature of science and scientists’ work in the field of weather forecasting. As all participants perceived a clear gap between their knowledge as individuals without expertise and the knowledge of scientists with presumed expertise, we can assume that their views on their own knowledge gains are not overly confident. Moreover, participants’ anticipated strategies for dealing with the division of cognitive labour demonstrate that they rely much less on their intuition about a topic than on other strategies, such as credibility evaluation or looking for further information. In a similar vein, people who rated their own knowledge as higher also actually reported that they would defer to other experts to obtain further information. This suggests that as long as individuals perceive the gap between themselves and the scientists and anticipate using first-hand and second-hand strategies for coping with the division of cognitive labour, the results do not need to be interpreted as problematic.

Limitations

Our study is not a ‘pure’ IOED study but was rather inspired by this effect. As our aim was to study a potential IOED effect in learning about science, we had to diverge from the original IOED study design (Rozenblit & Keil, 2002). In the original design, people are initially not given any material about the topic they have to explain. In contrast, in our study participants actually learned something by reading the text we provided and did indeed have more knowledge than at the beginning of the study. While gaining new knowledge is indeed a likely positive consequence of reading about science (McClune & Jarman, 2012), it was not our aim to measure learning gains. We intended to study laypeople as competent outsiders (Feinstein, 2011) and have focused on specific dependent measures that better reflect the practical aspects of coping with the realities of highly specialized knowledge. In this context, other variables such as engagement with science, interest in science, or transfer to other learning situations could also be interesting to examine.

Furthermore, we did not specify how detailed and mechanistic the explanation needed to be. This made it much more difficult to show the effect of knowledge rating dynamics, but at the same time, the design better reflected people’s natural behaviour when they inform themselves about science. All in all, the effects that we were trying to show were rather indirect: We were trying to induce higher intellectual humility, more critical perception of participants’ own knowledge and more sophisticated strategies for coping with the high division of cognitive labour by using the explanation task. Perhaps a simpler and more direct design could have had a stronger effect on participants’ ratings of their own knowledge. Likewise, an intervention using argumentation (e.g. Fisher et al., 2015) may have induced a stronger knowledge recalibration and a more direct focus on what one does not know.

For similar reasons, our study has only limited implications for educational settings. The decision to omit the instruction on how to generate a ‘correct’ explanation has perhaps resulted in less complex reasoning. Studies on explaining in an educational setting have indeed found positive effects on students’ understanding and generalization. We cannot conclude that our participants have furthered their understanding in a meaningful way and cannot claim that explaining was more beneficial for them than reading. However, these results should be acknowledged critically in the frame of laypeople’s engagement with science. Laypeople commonly receive information about science from media outlets; however, merely reading or even discussing may not be enough for reflecting about the nature of science (Maier et al., 2014). Future studies should investigate how understanding of complexities about science can be triggered outside of educational environments, for instance through science communication and public engagement with science.

Furthermore, the writing modality may have influenced our participants. Empirical evidence points to the differential role of the mode of explaining on students’ learning (Hoogerheide et al., 2016; Lachner et al., 2018). That is, explaining orally, in writing or on video creates a different context as well as cognitive load demands. Moreover, explaining itself may be more effective if it occurs in an interactive setting. In a similar vein, to know that one will be asked to generate an explanation may also have an effect on the nature of the engagement with the text material (e.g. Fiorella & Mayer, 2014). Further studies could investigate how communication goals (in written and oral form) differently affect laypeople’s reasoning about science.

The topic we chose was, in essence, very scientific and not controversial; hence it is likely that participants were more aware of the division of cognitive labour and did view their initial and post-explanation knowledge position as inferior to scientists’ knowledge position. Moreover, scientists and their research were portrayed in a positive manner. A more controversial scientific topic or a topic closer to people’s everyday discourse might have led to different ratings, e.g. participants’ perceptions of their own knowledge perhaps would have been more biased and less realistic. This was illustrated in studies on political policies or genetically modified foods (Fernbach et al., 2013; Fernbach et al., 2019). Therefore, further studies on different scientific topics are needed, as people may reason differently about different academic domains and more controversial topics (climate change, abortion), which may induce stronger emotional reactions. For the same reason, caution must be exercised when generalizing the results of our study to other domains.

Lastly, participants in our sample were generally interested in science and educated at an above-average level (49% of participants had attained higher education, while 33.5% of the German population has attained that level of education; Statistisches Bundesamt, 2019), which coincides with what other researchers have noted about the Prolific participant pool (Palan & Schitter, 2018). This could mean that our sample was somewhat biased for the purpose of studying laypeople’s understanding of science. On the other hand, the chosen sample is well suited for investigating phenomena related to seeking scientific information online, which is a particularly common activity for the sample of people we used. Furthermore, participation in the experimental study was anonymous. While we qualitatively analysed all explanations and used screening questions to check that participants had read the material, we cannot guarantee that their effort matches the real-life effort put into a similar task.

Conclusions

The study set out to investigate how people’s understanding of science and ways of coping with scientific information are influenced by active communication about the science topic. More specifically, we investigated the effects of explaining a scientific topic – in contrast to merely reading about it – on how people rate their own knowledge, how they rate scientists’ knowledge, which strategies they use to cope with the division of cognitive labour, and their intellectual humility. The findings reported here shed new light on how individuals without expertise cope with information about science that is communicated to them in an informal context, especially when they then communicate it to other people. This has been more commonly studied in controlled educational settings and rarely looked at through the lens of how adults cope with science in their everyday lives, although seeking information about science and making sense of it is a fairly common behaviour for adults (Wissenschaft im Dialog/Kantar Emnid, 2019). In terms of participants’ ratings of their own and scientists’ knowledge, we found no significant differences between participants who only read the science text and those who read it and subsequently explained it. However, we found that after either way of engaging with the text, participants perceived their own and scientists’ knowledge as higher. Moreover, they were less prone to rely on their own knowledge than to use other strategies for coping with the division of cognitive labour, such as credibility evaluation and deference to further experts. Considering the specific topic in our study, our results demonstrate that even though the information was presented in a simplified manner, people were able to clearly distinguish between their own knowledge and the knowledge of scientists in the field.
Engaging with science news texts through reading and/or explaining may therefore have a positive effect on how people perceive scientists’ knowledge. Likewise, the strategies they see as useful for gaining further information indicate that they tend not to rely on themselves but rather to use first-hand and second-hand strategies to cope with the division of cognitive labour.

Acknowledgements

We thank Celeste Brennecka for language editing and Hannah Meinert for her research assistance.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The datasets generated and analysed during the current study are available in the ZPID repository (https://www.psycharchives.org/handle/20.500.12034/2778).

References

- Alter, A. L., Oppenheimer, D. M., & Zemla, J. C. (2010). Missing the trees for the forest: A construal level account of the illusion of explanatory depth. Journal of Personality and Social Psychology, 99(3), 436–451. https://doi.org/10.1037/a0020218

- Asterhan, C. S. C., & Schwarz, B. B. (2016). Argumentation for learning: Well-trodden paths and unexplored territories. Educational Psychologist, 51(2), 164–187. https://doi.org/10.1080/00461520.2016.1155458

- Baker, J. M. C., & Dunlosky, J. (2006). Does momentary accessibility influence metacomprehension judgments? The influence of study—Judgment lags on accessibility effects. Psychonomic Bulletin & Review, 13(1), 60–65. https://doi.org/10.3758/BF03193813

- Bromme, R., & Goldman, S. R. (2014). The public’s bounded understanding of science. Educational Psychologist, 49(2), 59–69. https://doi.org/10.1080/00461520.2014.921572

- Bromme, R., & Jucks, R. (2018). Discourse and expertise: The challenge of mutual understanding between experts and laypeople. In M. F. Schober, D. N. Rapp, & M. A. Britt (Eds.), The Routledge handbook of discourse processes (pp. 222–246). Routledge/Taylor & Francis Group.

- Bromme, R., & Thomm, E. (2016). Knowing who knows: Laypersons’ capabilities to judge experts’ pertinence for science topics. Cognitive Science, 40(1), 241–252. https://doi.org/10.1111/cogs.12252

- Bromme, R., Thomm, E., & Wolf, V. (2015). From understanding to deference: Laypersons’ and medical students’ views on conflicts within medicine. International Journal of Science Education, Part B, 5(1), 68–91. https://doi.org/10.1080/21548455.2013.849017

- Carpenter, S. K., Wilford, M. M., Kornell, N., & Mullaney, K. M. (2013). Appearances can be deceiving: Instructor fluency increases perceptions of learning without increasing actual learning. Psychonomic Bulletin & Review, 20(6), 1350–1356. https://doi.org/10.3758/s13423-013-0442-z

- Delgado, P., Vargas, C., Ackerman, R., & Salmerón, L. (2018). Don’t throw away your printed books: A meta-analysis on the effects of reading media on reading comprehension. Educational Research Review, 25, 23–38. https://doi.org/10.1016/j.edurev.2018.09.003

- Duschl, R. A., & Osborne, J. (2002). Supporting and promoting argumentation discourse in science education. Studies in Science Education, 38(1), 39–72. https://doi.org/10.1080/03057260208560187

- Feinstein, N. (2011). Salvaging science literacy. Science Education, 95(1), 168–185. https://doi.org/10.1002/sce.20414

- Fernbach, P. M., & Light, N. (2020). Knowledge is shared. Psychological Inquiry, 31(1), 26–28. https://doi.org/10.1080/1047840X.2020.1722601

- Fernbach, P. M., Light, N., Scott, S. E., Inbar, Y., & Rozin, P. (2019). Extreme opponents of genetically modified foods know the least but think they know the most. Nature Human Behaviour, 3(3), 251–256. https://doi.org/10.1038/s41562-018-0520-3

- Fernbach, P. M., Rogers, T., Fox, C. R., & Sloman, S. A. (2013). Political extremism is supported by an illusion of understanding. Psychological Science, 24(6), 939–946. https://doi.org/10.1177/0956797612464058

- Fiedler, K., Ackerman, R., & Scarampi, C. (2019). Metacognition: Monitoring and controlling one’s own knowledge, reasoning and decisions. In R. J. Sternberg & J. Funke (Eds.), The psychology of human thought (pp. 89–111). Heidelberg University Publishing. https://doi.org/10.17885/HEIUP.470.C6669

- Fiorella, L., & Mayer, R. E. (2014). Role of expectations and explanations in learning by teaching. Contemporary Educational Psychology, 39(2), 75–85. https://doi.org/10.1016/j.cedpsych.2014.01.001

- Fisher, M., Goddu, M. K., & Keil, F. C. (2015). Searching for explanations: How the internet inflates estimates of internal knowledge. Journal of Experimental Psychology: General, 144(3), 674–687. https://doi.org/10.1037/xge0000070

- Gottschling, S., Kammerer, Y., Thomm, E., & Gerjets, P. (2020). How laypersons consider differences in sources’ trustworthiness and expertise in their regulation and resolution of scientific conflicts. International Journal of Science Education, Part B, 10(4), 335–354. https://doi.org/10.1080/21548455.2020.1849856

- Hoogerheide, V., Deijkers, L., Loyens, S. M. M., Heijltjes, A., & van Gog, T. (2016). Gaining from explaining: Learning improves from explaining to fictitious others on video, not from writing to them. Contemporary Educational Psychology, 44-45, 95–106. https://doi.org/10.1016/j.cedpsych.2016.02.005

- Hoyle, R. H., Davisson, E. K., Diebels, K. J., & Leary, M. R. (2016). Holding specific views with humility: Conceptualization and measurement of specific intellectual humility. Personality and Individual Differences, 97, 165–172. https://doi.org/10.1016/j.paid.2016.03.043

- Jucks, R., & Mayweg-Paus, E. (2016). Learning through communication: How arguing about scientific information contributes to learning. Zeitschrift Für Pädagogische Psychologie, 30(2-3), 75–77. https://doi.org/10.1024/1010-0652/a000170

- Keil, F. C. (2006). Explanation and understanding. Annual Review of Psychology, 57(1), 227–254. https://doi.org/10.1146/annurev.psych.57.102904.190100

- Kienhues, D., Hendriks, F., Jucks, R., & Bromme, R. (2019, August). Identifying strategies in dealing with science-based information. Paper presentation. European Association for Research on Learning and Instruction (EARLI) Biennial Conference 2019, Aachen.

- Koo, T. K., & Li, M. Y. (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine, 15(2), 155–163. https://doi.org/10.1016/j.jcm.2016.02.012

- Koriat, A. (1995). Dissociating knowing and the feeling of knowing: Further evidence for the accessibility model. Journal of Experimental Psychology: General, 124(3), 311–333. https://doi.org/10.1037/0096-3445.124.3.311

- Krumrei-Mancuso, E. J., Haggard, M. C., LaBouff, J. P., & Rowatt, W. C. (2020). Links between intellectual humility and acquiring knowledge. The Journal of Positive Psychology, 15(2), 155–170. https://doi.org/10.1080/17439760.2019.1579359

- Lachner, A., Backfisch, I., Hoogerheide, V., van Gog, T., & Renkl, A. (2020). Timing matters! Explaining between study phases enhances students’ learning. Journal of Educational Psychology, 112(4), 841–853. https://doi.org/10.1037/edu0000396

- Lachner, A., Ly, K.-T., & Nückles, M. (2018). Providing written or oral explanations? Differential effects of the modality of explaining on students' conceptual learning and transfer. The Journal of Experimental Education, 86(3), 344–361. https://doi.org/10.1080/00220973.2017.1363691

- Lenhard, W., & Lenhard, A. (2011). Berechnung des Lesbarkeitsindex LIX nach Björnson [Calculation of the readability index LIX after Björnson]. http://www.psychometrica.de/lix.html

- Lombrozo, T. (2006). The structure and function of explanations. Trends in Cognitive Sciences, 10(10), 464–470. https://doi.org/10.1016/j.tics.2006.08.004

- Maier, M., Rothmund, T., Retzbach, A., Otto, L., & Besley, J. C. (2014). Informal learning through science media usage. Educational Psychologist, 49(2), 86–103. https://doi.org/10.1080/00461520.2014.916215

- McClune, B., & Jarman, R. (2012). Encouraging and equipping students to engage critically with science in the news: What can we learn from the literature? Studies in Science Education, 48(1), 1–49. https://doi.org/10.1080/03057267.2012.655036

- O’Reilly, T., Symons, S., & MacLatchy-Gaudet, H. (1998). A comparison of self-explanation and elaborative interrogation. Contemporary Educational Psychology, 23(4), 434–445. https://doi.org/10.1006/ceps.1997.0977

- Origgi, G. (2012). Epistemic injustice and epistemic trust. Social Epistemology, 26(2), 221–235. https://doi.org/10.1080/02691728.2011.652213

- Palan, S., & Schitter, C. (2018). Prolific.ac—A subject pool for online experiments. Journal of Behavioral and Experimental Finance, 17, 22–27. https://doi.org/10.1016/j.jbef.2017.12.004

- Penn State. (2019, July 2). Using artificial intelligence to better predict severe weather: Researchers create AI algorithm to detect cloud formations that lead to storms. ScienceDaily. Retrieved August 22, 2021 from www.sciencedaily.com/releases/2019/07/190702160115.htm

- Pieschl, S. (2009). Metacognitive calibration—An extended conceptualization and potential applications. Metacognition and Learning, 4(1), 3–31. https://doi.org/10.1007/s11409-008-9030-4

- Rabb, N., Fernbach, P. M., & Sloman, S. A. (2019). Individual representation in a community of knowledge. Trends in Cognitive Sciences, 23(10), 891–902. https://doi.org/10.1016/j.tics.2019.07.011

- Rozenblit, L., & Keil, F. (2002). The misunderstood limits of folk science: An illusion of explanatory depth. Cognitive Science, 26(5), 521–562. https://doi.org/10.1207/s15516709cog2605_1

- Scharrer, L., Stadtler, M., & Bromme, R. (2014). You’d better ask an expert: Mitigating the comprehensibility effect on laypeople’s decisions about science-based knowledge claims. Applied Cognitive Psychology, 28(4), 465–471. https://doi.org/10.1002/acp.3018

- Sinatra, G. M., Kienhues, D., & Hofer, B. K. (2014). Addressing challenges to public understanding of science: Epistemic cognition, motivated reasoning, and conceptual change. Educational Psychologist, 49(2), 123–138. https://doi.org/10.1080/00461520.2014.916216

- Singmann, H., Bolker, B., Westfall, J., Aust, F., & Ben-Shachar, M. S. (2015). Afex: Analysis of factorial experiments R Package Version 0.24-1. https://CRAN.R-project.org/package=afex

- Sloman, S. A., & Rabb, N. (2016). Your understanding is my understanding: Evidence for a community of knowledge. Psychological Science, 27(11), 1451–1460. https://doi.org/10.1177/0956797616662271

- Statistisches Bundesamt. (2019, October 30). Bildung. Statistisches Bundesamt. https://www.destatis.de/DE/Themen/Gesellschaft-Umwelt/Bildung-Forschung-Kultur/Bildungsstand/_inhalt.html;jsessionid=42B759F33726B5577C4BA0C06E682170.live742

- Vaupotič, N., Kienhues, D., & Jucks, R. (2021). Trust in science and scientists: Implications for (higher) education. In B. Blöbaum (Ed.), Trust and communication: findings and implications of trust research (pp. 207–220). Springer International Publishing. https://doi.org/10.1007/978-3-030-72945-5_10

- Whitcomb, D., Battaly, H., Baehr, J., & Howard-Snyder, D. (2017). Intellectual humility: Owning our limitations. Philosophy and Phenomenological Research, 94(3), 509–539. https://doi.org/10.1111/phpr.12228

- Wissenschaft im Dialog/Kantar Emnid. (2019). Science Barometer 2019. Berlin. https://www.wissenschaft-im-dialog.de/en/our-projects/science-barometer/science-barometer-2019/