ABSTRACT

The purpose of the study reported here was to observe the effects of examination practices on the extent to which university students procrastinate. These examination practices were: (1) limiting the number of resits, (2) compensatory rather than conjunctive decision-making about student progress, and (3) restricting the time available for completing the first bachelor year. Study success in the first academic year (successful completion within one year, delay, or dropout) of 12,432 students entering a Dutch university before the introduction of the new examination practices was compared with that of 17,036 students admitted after their introduction. After the implementation of the new examination practices, successful completion increased by 23 percentage points and delay decreased by 25 percentage points. The data were collected using an interrupted time series design. Three attempts were made to deal with possible threats to its internal validity. (1) Potential confounding variables were demonstrated not to play a role in explaining the effect of the new examination practices. (2) Interrupted time series regression demonstrated that the intervention, not other changes over time, contributed to study success. And (3), extraneous events interfering with the effect of the intervention were shown to be unlikely. In conclusion, the study presented here is the first to demonstrate the effect of examination rules on study delay. The findings indicate that delays, as usually observed in higher education, are not necessarily the result of lack of ability. Nor are they necessarily the effect of some inherent personality disorder.

Introduction

Procrastination of students in higher education, here defined as postponement of preparing adequately for examinations, presents a serious problem. According to some authors, 80% to 95% of college students procrastinate to some extent (Steel 2007). In a study among a population of such students, 32% were found to be severe procrastinators (Day, Mensink, and O'Sullivan 2000). In a study by Meeuwisse, van Wensveen, and Severiens (2011), business administration students were found to spend around three hours per day on study, including attending lectures and practicals. By contrast, they were spending almost four and a half hours on recreation, such as watching television, chatting, being on the internet, and visiting with family and friends. Some studies suggest that, not surprisingly, procrastination is a precursor of dropout: most students who drop out do so after an extended period in which they delay their studies (Diver and Martinez 2015; Kim and Seo 2015; Munoz-Olano and Hurtado-Parrado 2017).

Explanations for the procrastination phenomenon tend to focus on personal characteristics of students (Watson 2001). Researchers seek its causes in maladaptive implicit beliefs (Howell and Buro 2009), in self-regulation failure (Senécal, Koestner, and Vallerand 1995; Van Eerde 2000; Wolters 2003), in low self-efficacy (Klassen, Krawchuk, and Rajani 2008), or in unproductive achievement motives (Ferrari and Tice 2000; Howell and Watson 2007). For overviews, see Ferrari, Johnson, and McCown (1995) and Steel (2007).

By contrast, studies of possible curricular causes of procrastination are limited. Van Den Berg and Hofman (2005) studied the study progress of more than 9000 students in 60 curricula. Study progress was measured by the amount of time needed to complete the first year and the bachelor. They found that as the number of courses in a particular curriculum year increased, study progress decreased. The more courses were taught in parallel, the less progress students made. Similar findings were reported by others (Jansen 2004; Van der Hulst and Jansen 2002).

The study reported in this article focuses on the influence of examination practices on procrastination. Unlike in US college education, students on the European continent are generally required to pass all their examinations to be able to graduate. If they fail some of these, they have the opportunity to repeat or resit these examinations. Decisions about progress of students based on such an approach are called conjunctive decisions, as opposed to decisions based on compensation (Haladyna and Hess 1999; Rekveld and Starren 1994). Under the latter, students are allowed to compensate insufficient performance on one achievement test with better performances on other tests. Decisions with regard to study progress are here not made based on conjunction, but on the mean score of all tests taken together: the grade-point average or GPA. Since often one-third of students fail an examination, extensive remedial tests are needed under a conjunctive decision approach. These resits are, however, often used by students to delay their studies, possibly arguing: ‘If I do not pass this examination at this time, I will have the opportunity to do it at a later stage’. Ostermaier, Beltz, and Link (2013) demonstrated that the more often students were enabled to resit an examination, the lower their achievement and the more likely their failure.

So, resits, meant to help students progress, in fact enable them to procrastinate. These effects are by no means limited: Bruinsma and Jansen (2009), for instance, in a study of 565 students in four curricula, demonstrated that 69% of these students failed to pass all examinations of their first year within 12 months. After two years, only 50% had completed all their first-year examinations. In response to this undesirable state of affairs, most Dutch universities require students to obtain 40 out of 60 ECTS credits (Footnote 1) in their first year, allowing them to acquire the remaining first-year credits in their second year. If they fail to do so, they have to leave the particular curriculum.

Interestingly, in the literature on resits (McManus 1992; Pell, Boursicot, and Roberts 2009; Ricketts 2010) few traces are found of this obvious negative effect of enabling students to repeat an examination. The only studies hinting at the adverse effect of resits, albeit without further exploration, are studies by Arnold (2017) and Ostermaier, Beltz, and Link (2013). Since enabling students to do resits for failed examinations is such an important potential source of procrastination, an obvious measure to limit procrastination is to limit opportunities for resits or even abandon them. However, to do so, it is necessary to allow students to compensate insufficient marks on one examination with high marks on another examination: conjunctive decisions would be unfair under such an examination regimen.

In this article, we describe the effects of an attempt by a Dutch university to implement such an examination policy. In this university, students have only limited resit opportunities and have to complete their first bachelor year in one year rather than in two. If they fail, they have to leave the particular program. In popular parlance among staff and students, the approach became known as ‘Nominal = Normal’, indicating that it should be normal for students to complete a first year in the nominally available time of 12 months. The shorthand Nominal = Normal, summarizing changes in examination practices to diminish procrastination, will be used throughout the manuscript. We will report on the effects of this approach on study success of students taught at this particular university, comparing progress of first-year bachelor students before and after introduction of the measures. The data were collected using a quasi-experimental interrupted time series design.

Method

Context

This study was conducted at a medium-size university in the Netherlands. The data of 12 bachelor programs were included, representing all of the university’s programs, with one exception (Footnote 2). The 12 bachelor programs implemented Nominal = Normal at different moments in time. Psychology, sociology, and public administration introduced the new examination strategy in 2011 as a pilot study. Economics, law, history, cultural studies, communication and media, health policy and management, criminology, and business administration followed in 2012, and medicine in 2014.

Participants

Table 1 shows the numbers and background characteristics of students admitted in the last years prior to the introduction of Nominal = Normal and in the first years after its introduction. The study included the seven cohorts admitted between 2009 and 2015: 12,432 students who were admitted before its introduction, and 17,036 students admitted after its introduction. The background characteristics of the students enrolled before and after introduction of the new examination rules are largely similar, attesting to the similarity of the two populations, with one exception: the GPAs of Dutch students passing the national end-of-secondary-school examination were noticeably lower after the introduction of Nominal = Normal.

Table 1. Background characteristics of all full-time students enrolled at the Dutch university under study between 2009 and 2015 (with the exception of those studying philosophy), expressed in frequency counts and percentages before and after the introduction of ‘Nominal = Normal’.

The intervention

Before Nominal = Normal was implemented, first-year students were obliged to have acquired at least 40 ECTS-credits (one credit equals 28 hours of study) out of the 60 belonging to the first-year program to be able to continue their studies. After the introduction of Nominal = Normal, students were obliged to have acquired all 60 credits belonging to the first-year bachelor program at the end of the first year. There was one exception: students who had demonstrably suffered from adverse personal circumstances, such as a serious disease or the death of a close relative, were still allowed to continue their studies. Upon the university-wide implementation of this approach to examination, the total number of admissible resits was set to a maximum of two. This implied that students could, at the end of the year, repeat not more than two out of the total number of examinations taken in the first year, and only if they had completed these examinations earlier with an insufficient grade. The Dutch marking system consists of grades varying from 1 to 10, 5.5 being sufficient (Footnote 3). Students who complied with these requirements were allowed to continue their studies in the second year. Those who did not had to leave the program.

Measurements

The following measures were taken: (I) Enrolment of students. The data with regard to the enrolment of students in the period 2009–2015 were obtained from the central administration of the particular university. (II) Study progress in the first year. Based on their performance, students were assigned to the following categories: (1) Successful completion of the first year: at the end of the first year, these students had successfully completed all first-year courses and had thus obtained all 60 credits belonging to the first-year program; (2) Study delay: this category consisted of (a) students who – before the implementation of Nominal = Normal – had obtained at least the minimum number of 40 credits required (but thus not all 60 credits) at the end of the first year and (b) students who had not obtained this minimal number of credits required at the end of the first year but had suffered from adverse personal circumstances, such as serious illness or death of a family member. These latter students were still allowed to continue their studies; (3) Drop-out: this group contained (a) no-show students (students who finalized their registration, but never showed up), (b) early dropouts (students who dropped out within the first four months of the program), and (c) students who had not obtained the minimal required number of credits at the end of the first year and had not suffered from adverse personal circumstances.

Data analysis

All the above-mentioned data were collected and then linked based on student ID. After linking the data, the privacy of the students was ensured by removing the student ID from the database. All cohorts entering the university before the implementation of Nominal = Normal, taken together, were compared with all cohorts entering after its implementation on (a) the percentage of students who had successfully completed the first-year program within a year after the start, (b) the percentage of students who had suffered from study delay in the first year, but were still allowed to continue their studies, and (c) the percentage of students who dropped out in the first year of study. Differences in the percentages of successful students, delayed students, and dropouts between the cohorts before and after the implementation of Nominal = Normal were tested for significance by Pearson Chi-square tests. Since the number of tests conducted was rather large, differences were assessed at the p < .001 level. Cramer’s V was used to calculate the effect size of the Chi-square tests. This statistic varies between 0 and 1.

The study was a quasi-experimental interrupted time series design. Unlike a randomized experiment, such an approach requires controlling for threats to its internal validity, that is, checking for extraneous variables that may have influenced the findings (Cook and Campbell 1979). First, we made an attempt to control for confounding factors. The literature suggests that at least three variables are important in explaining study success in higher education: prior performance in secondary education, gender, and ethnicity. The best single predictor of study success in higher education is generally considered to be the student’s GPA in secondary education (Harackiewicz et al. 2002; McKenzie and Schweitzer 2001; Zeegers 2004); women generally perform better than men in higher education (Goldin, Katz, and Kuziemko 2006; Jacob 2002), and ethnic minorities tend to underperform compared to other groups (Dekkers, Bosker, and Driessen 2000; Roscigno and Ainsworth-Darnell 1999). To assess the influence of these variables on study success relative to the influence of the new examination practices, we conducted two multinomial logistic regression analyses using IBM's Statistical Package for the Social Sciences (SPSS). Multinomial logistic regression allows one to study the relative influences of multiple categorical variables on a dependent variable, akin to multiple regression. Since we had secondary-education achievement information only from part of our students, one analysis was conducted without secondary-education GPA, and one with GPA. The dependent variable in the analyses was study success, expressed as either successful completion of the first year (scored as 1), delay (scored as 2), or dropout (scored as 3). The independent categorical variables were Nominal = Normal (a dummy variable scored as before introduction = 0, versus after introduction = 1), gender, and ethnicity (as expressed in Table 1). Secondary education GPA was treated as a covariate.
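As a reading aid, the structure of such a model can be written out. This is a generic textbook sketch rather than the specification actually estimated: it assumes that dropout (category 3) serves as the reference category, which the text does not state, and the symbols $NN_i$, $G_i$, $\mathbf{E}_i$, and $GPA_i$ are labels introduced here for the Nominal = Normal dummy, gender, the ethnicity dummies, and secondary-education GPA of student $i$.

```latex
% Assumed reference category: dropout (Y = 3); k = 1 (completion), k = 2 (delay)
\ln\frac{P(Y_i = k)}{P(Y_i = 3)}
  = \alpha_k + \beta_{k,1}\,NN_i + \beta_{k,2}\,G_i
    + \boldsymbol{\beta}_{k,3}^{\top}\mathbf{E}_i + \beta_{k,4}\,GPA_i,
  \qquad k \in \{1, 2\}

% Implied category probabilities, with \eta_{i,k} the right-hand side above
P(Y_i = k) = \frac{\exp(\eta_{i,k})}{1 + \exp(\eta_{i,1}) + \exp(\eta_{i,2})},
\qquad
P(Y_i = 3) = \frac{1}{1 + \exp(\eta_{i,1}) + \exp(\eta_{i,2})}
```

Under this formulation, each predictor has one coefficient per non-reference outcome, and the analysis without secondary-education GPA simply omits the $\beta_{k,4}$ term.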

Second, interrupted time series regression was applied to test the likely causal influence of the new examination practices on study success as a function of the timing of their introduction (Bernal, Cummins, and Gasparrini 2017).

A quasi-experimental design such as that used in the present study is particularly vulnerable to events unrelated to, but happening in parallel with, the experimental treatment that may affect the findings. For instance, if national policy changes coincided with the introduction of the experimental treatment, they would compete with it as an explanation of possible effects. In fact, in 2012, the Dutch government concluded a covenant with all universities detailing measures to increase study progress and decrease dropout. Effects of such measures may have interfered with the introduction of the new examination practices. Nominal = Normal was, however, introduced in the different bachelor programs at three different points in time. If its effects could be observed only directly after its introduction at each of these three points in time, and not before or somewhat later, this would provide evidence that extraneous events did not cause the effect. Therefore, to study the influence of extraneous events as a threat to the internal validity of our study, we plotted completion rates (in percentages) for the different bachelor programs on the timeline between 2009 and 2015 and looked for evidence of influences other than the introduction of Nominal = Normal.

Results and discussion

Table 2 shows overall study progress of students in the first year of the university under study before and after the introduction of Nominal = Normal. It displays counts and percentages of students who ended up in one of the three categories of interest: successful completion of the first year, delays, and dropout.

Table 2. Study progress in year 1 as frequency counts and percentages of all full-time students enrolled at the Dutch university under study between 2009 and 2015 (with the exception of those studying philosophy) before and after the introduction of ‘Nominal = Normal’.

We conducted Chi-square tests on each of the variables involved. The difference between percentages of students completing the first year in one year before and after the introduction of Nominal = Normal is statistically significant: Chi-square (1, 14,596) = 1593.41, p < .0001, Cramer’s V = 0.23; the same applies to the percentages of students showing study delays: Chi-square (1, 6388) = 2532.11, p < .0001, Cramer’s V = 0.29. The percentages of students dropping out are not significantly different before and after introduction of Nominal = Normal: Chi-square (1, 8472) = 2.97, p = .09, Cramer’s V = 0.01. To what extent, however, could these observed effects be attributed to the changes in the examination practices?
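The reported effect sizes can be checked against the reported Chi-square values. The sketch below is illustrative only: it assumes that each test is based on the full sample of 12,432 + 17,036 students and on a 2 × 2 table (before/after by in-category/not-in-category), neither of which is stated explicitly in the text. The function name `cramers_v` is introduced here for illustration.

```python
import math


def cramers_v(chi2: float, n: int, n_rows: int = 2, n_cols: int = 2) -> float:
    """Cramér's V = sqrt(chi2 / (n * (min(rows, cols) - 1)))."""
    k = min(n_rows, n_cols) - 1
    return math.sqrt(chi2 / (n * k))


# Assumption: N is the whole sample (students admitted before plus after).
N_TOTAL = 12_432 + 17_036

# Chi-square values as reported in the text.
reported_chi2 = {
    "completion": 1593.41,
    "delay": 2532.11,
    "dropout": 2.97,
}

effect_sizes = {
    outcome: round(cramers_v(chi2, N_TOTAL), 2)
    for outcome, chi2 in reported_chi2.items()
}
print(effect_sizes)
```

Under these assumptions the computed values agree with the reported Cramer’s V of 0.23, 0.29, and 0.01.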

Control for potential confounders

First, two multinomial logistic regression analyses were conducted to study the influence of possible confounders relative to the influence of the new examination practices. In the first multinomial logistic regression, the dependent variable was study success. Gender and ethnic background were included as independent variables in the analysis. Nominal = Normal was included among the independent variables as a dummy variable. The first analysis was conducted including all students in the sample (implying the exclusion of the secondary-education GPA variable). When including all other variables in the analysis, the full model represented a significant gain in the predictability of study success, compared with the basic (null) model: Chi-square (8, 29,377) = 3684.70, p < .0001. Table 3 contains the likelihood ratio tests, delineating unique contributions of the variables in the model to the prediction of study success. Table 4 contains the parameter estimates for this analysis.

Table 3. Likelihood ratio tests for three predictors of study success in the first academic year (successful completion, study delays, dropout): Nominal = Normal, gender, and ethnicity.

Table 4. Parameter estimates resulting from multinomial logistic regression involving study success in the first academic year (successful completion, study delays, dropout) and three predictors: Nominal = Normal, gender, and ethnicity.

All variables contributed significantly. However, the influence of the changes in the examination practices, embodied by Nominal = Normal, is larger than the influences of the other variables combined.

The second multinomial logistic regression included secondary-school GPA as a predictor along with the other variables of relevance. This analysis was conducted on a subgroup of our sample because GPA data were available only for the 65% of students (19,155) who completed their pre-university education in the Netherlands. The full model, not surprisingly, again represented a significant gain in predictability of study success: Chi-square (10, 19,145) = 4086.99, p < .0001. The likelihood ratio tests in Table 5 demonstrated that Nominal = Normal as an independent source of variation again contributed most to the prediction of study success. In conclusion, these findings suggest that the examination practices aimed at decreasing procrastination were the best predictor of whether a student would bring her or his first year successfully to completion, would delay her or his study, or would drop out. Table 6 contains the parameter estimates for this analysis.

Table 5. Likelihood ratio tests for four predictors of study success in the first academic year (successful completion, study delays, dropout): Nominal = Normal, secondary-education GPA, gender, and ethnicity.

Table 6. Parameter estimates resulting from multinomial logistic regression involving study success in the first academic year (successful completion, study delays, dropout) and four predictors: Nominal = Normal, secondary-education GPA, gender, and ethnicity.

Interrupted time series regression

Second, we employed interrupted time series regression. Interrupted time series regression is a useful approach to test whether the introduction of an intervention (and not other factors) at a particular point in time has been effective (Bernal, Cummins, and Gasparrini 2017; Kontopantelis et al. 2015). Since Nominal = Normal was introduced in different programs at different points in time, the data were first centered around the moment of introduction of the new examination practices in each program. Note that here successful completion is not a nominal variable but a percentage and that analysis takes place, not at the individual student level, but at the cohort level.

Minimally three variables are required for an interrupted time series analysis (Bernal, Cummins, and Gasparrini 2017): the dependent variable, here study success expressed as the percentage of students who successfully completed their first year, and two independent variables: the time elapsed since the beginning of the study (here expressed in years), and the intervention (here Nominal = Normal). The appropriate single-group model to be tested is

$$Y_t = \beta_0 + \beta_1 T_t + \beta_2 X_t + \beta_3 X_t T_t + \epsilon_t \qquad (1)$$

where $Y_t$ is the outcome at time $t$, $T_t$ is the time elapsed since the beginning of the study, $X_t$ is a dummy variable representing the intervention (0 before, 1 after its introduction), $X_t T_t$ is their interaction, and $\epsilon_t$ is the error term; $\beta_0$ is the pre-intervention intercept; $\beta_1$ is the pre-intervention slope; $\beta_2$ is the change in level in the period immediately following intervention initiation; and $\beta_3$ is the difference between pre-intervention and post-intervention slopes. The interrupted time series was analysed using the itsa command developed for the Stata program (Linden 2015; StataCorp 2019). Table 7 contains the parameter estimates.

Table 7. Parameter estimates for single group interrupted time series regression with Newey-West standard errors.

These findings can be interpreted as follows: The starting level of study success in the first curriculum year, as expressed by the percentage of students that received 60 credits in one year, was estimated at 39.58%. That percentage decreased non-significantly by 0.68 percentage points every year prior to the intervention. In the first year of the intervention, there appeared to be a significant increase in study success of 24.58 percentage points, followed by a non-significant increase (relative to the pre-intervention trend) of 0.10 percentage points per year. In summary, Nominal = Normal, not other changes over time, turned out to be the significant predictor of the changes in study success.
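The interpretation above can be made concrete with a small sketch that plugs the reported estimates into a single-group interrupted time series model. Several things here are assumptions rather than facts from the study: the year index at which the intervention starts (`HYPOTHETICAL_START = 3`) is invented for illustration, the function names are hypothetical, and the post-intervention slope term is computed with time counted from the start of the intervention so that the level change applies at the moment of introduction.

```python
# Reported estimates (percentage points): intercept, pre-intervention slope,
# level change at introduction, and change in slope after introduction.
B0, B1, B2, B3 = 39.58, -0.68, 24.58, 0.10

# Assumption for illustration only: the intervention starts at year index 3.
HYPOTHETICAL_START = 3


def predicted_completion(t: int, start: int = HYPOTHETICAL_START) -> float:
    """Model-implied completion percentage at year index t."""
    x = 1 if t >= start else 0  # intervention dummy
    return B0 + B1 * t + B2 * x + B3 * x * (t - start)


def counterfactual_completion(t: int) -> float:
    """Completion percentage had the pre-intervention trend simply continued."""
    return B0 + B1 * t


def intervention_effect(t: int, start: int = HYPOTHETICAL_START) -> float:
    """Gap between the model prediction and the continued pre-intervention trend."""
    return predicted_completion(t, start) - counterfactual_completion(t)


for year in range(HYPOTHETICAL_START, HYPOTHETICAL_START + 3):
    print(year, round(intervention_effect(year), 2))
```

In this sketch the estimated gap equals the level change in the first year of the intervention and then grows by the slope change each year, which is why a non-significant slope change leaves the level change as the substantive finding.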

External threats

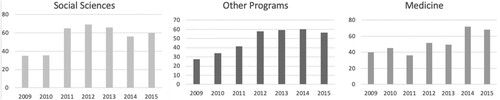

A final test of the influence of external threats on the internal validity of our study was the plotting of completion rates (in percentages) on the timeline between 2009 and 2015 and looking for evidence of influences other than the introduction of Nominal = Normal. The examination practices were introduced in the various programs at three different points in time. In Figure 1, we collapsed the data only for those bachelor programs that introduced Nominal = Normal at the same point in time.

Figure 1. First-year completion rates (in percentages) of all full-time students enrolled at the Dutch university under study between 2009 and 2015, before and after the introduction of ‘Nominal = Normal.’ The social sciences (psychology, public administration, sociology) introduced the new examination practices in 2011; the other bachelor programs in 2012; and medicine in 2014.

The figure suggests that whenever Nominal = Normal is implemented, completion rates increase by more than any other observed change, and that these increases coincide with the points in time at which the examination practices were introduced. This makes it rather unlikely that unrelated events caused the effect.

General discussion

In the Introduction section, we have argued that study delays are a serious problem in higher education and that one possible – and hitherto unexplored – source of procrastination of students is the extent to which they are enabled to delay their studies using resits of examinations that they did not pass initially. A conceivable remedy would then be to restrict the number of resits available to them and to limit the time window within which a sufficient performance should be demonstrated. However, such a tactic would only be fair if students are allowed to compensate poor performance on one achievement test with better performance on another, and if study success is measured by GPA. If students are encouraged not to postpone their studies, requiring them to finalize their first year of studies within 12 months is a necessary consequence.

This combination of restricting resits and deciding about study progress based on GPA, without giving students the possibility of pushing forward some examinations to a subsequent year, was applied by one Dutch university under the heading Nominal = Normal, indicating that it should be normal for students to complete the first year in the nominal time available. In a quasi-experimental interrupted time series design, we compared the percentages of students completing their first year before and after the introduction of Nominal = Normal. The results were significant. In the bachelor programs surveyed, completion within one year rose from 36% to 59%, whereas study delay showed the reverse: a decrease of 25 percentage points.

Of course, these findings cannot without reservation be attributed to the new examination rules. Data collected through an interrupted time series design are vulnerable to confounding variables. In the context of higher education, particularly pre-university GPA (Harackiewicz et al. 2002; McKenzie and Schweitzer 2001; Zeegers 2004), gender (Goldin, Katz, and Kuziemko 2006; Jacob 2002), and ethnic background (Dekkers, Bosker, and Driessen 2000; Roscigno and Ainsworth-Darnell 1999) are known to affect study success. Therefore, we conducted two multinomial logistic regression analyses in which we also included Nominal = Normal as an independent categorical variable. These analyses demonstrated that the new examination rules aimed at decreasing procrastination were the best predictor of whether a student would bring her or his first year successfully to completion, would delay her or his study, or would drop out.

Attribution of causation to an intervention is problematic if the data were not collected in a randomized experiment. However, when data are gathered sequentially over time, and when the intervention is clearly located in time, then an interrupted time series regression approach is second best (Bernal, Cummins, and Gasparrini 2017; Kontopantelis et al. 2015). The regression analysis using study success as the dependent variable, and Nominal = Normal and time elapsed as independent variables, found an R-square of .87, while Nominal = Normal was the only significant contributor to study success. These findings seem to imply that the new examination practices were the likely causal agent in the increase of study success among students.

Finally, we asked whether extraneous events, events unrelated to the experimental manipulation but affecting outcomes, such as changing government policy, may have played a role. The new examination practices were introduced in the various bachelor programs at different points in time. Inspection of the completion rates of these programs demonstrates that as soon as Nominal = Normal is implemented, completion rates increase more than other observed changes, making it unlikely that unrelated events may have caused the effect.

The question is then how these improvements came about. A tentative answer is provided by a study among first-year psychology students in 2011, the year that Nominal = Normal was introduced in their bachelor program. Adriaans et al. (2013) studied changes in examination marks for the eight courses of the first-year psychology curriculum over a period of four years, the last being the year during which the new examination rules were introduced. They found that the GPA significantly increased under Nominal = Normal from 6.45 to 6.86 (on a 10-point scale), and that the percentage of students who failed examinations was cut almost by half. In addition, mean self-study time increased by approximately 2 hours per week. Similar findings were reported for the first-year medical curriculum before and after introduction of Nominal = Normal. Mean scores on achievement tests increased here significantly from 6.06 to 6.57 (Kickert et al. 2018).

There are obvious benefits of the approach to procrastination described in this article. Most important perhaps is the effect on students’ perception of themselves as learners. In a study in the medical curriculum of the particular university, comparing scores of students before and after the introduction of the new examination rules, self-efficacy, lecture attendance, time management, and effort regulation all significantly increased. In addition, deep learning strategies also became more prevalent (Kickert et al. 2018). Second, by completing the first year within the timeframe allotted, students prove to themselves that they are able to appropriately respond to the requirements set by a university education, thereby boosting their self-confidence. In addition, they enter graduate training at a younger age and will take up their first job earlier. And third, in the particular period studied, between 2009 and 2015, students in the Netherlands had to invest approximately 10,550 euros in tuition fees and living expenses for each additional year in university. Therefore, decreasing procrastination serves the students’ budget.

A potential downside is that students may have less time available to engage in extracurricular activities while studying. This was at least one of the main concerns of their representatives in the university council when Nominal = Normal was first discussed. One student, in an interview with the university newspaper, indicated that he would continue his studies elsewhere. He feared that because of the stricter examination requirements he could not go skiing in the winter. The study by Adriaans et al. (2013), however, already indicated that the additional time requirements are limited and would leave sufficient time for extracurricular enjoyment.

What do these findings imply for the theory of procrastination? Interestingly, psychological theories about the subject have little to say about methods to curb procrastination because they tend to interpret procrastination as a maladaptive personality characteristic (Howell and Buro 2009; Klassen, Krawchuk, and Rajani 2008; Senécal, Koestner, and Vallerand 1995; Van Eerde 2000). A perhaps more appropriate approach to procrastination, Temporal Motivation Theory, has its roots in economics (Ainslie 1992; Steel 2007; Steel and König 2006). It has at its core the idea that time is central to the choices people make. According to Ainslie (1992), humans have a tendency to prefer and pursue immediate but often poorer goals, over more valuable long-term goals. This explains why many students postpone studying until it cannot any longer be avoided; more attractive short-term rewards need to be pursued first. Steel and König (2006) interpret procrastination as a utility function. The motivation to avoid procrastination can be expressed as

(2)

From this utility function it can be deduced that, all other things being equal, the shorter the delay between action required and goal reached, the higher the motivation to pursue that goal. By removing the possibility to resit examinations and by requiring students to complete their first year of study within twelve months, Nominal = Normal curtails delays of reward and, consequently, increases motivation to avoid procrastination, leading to higher completion rates.
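For orientation, Temporal Motivation Theory is often summarized in the simplified form given by Steel (2007). The symbols used here are an assumption for illustration and may not match the notation of Equation 2 exactly: $E$ is the expectancy of success, $V$ the value of the outcome, $\Gamma$ the person's sensitivity to delay, and $D$ the delay until the outcome is realized.

```latex
\[
\text{Utility} = \frac{E \times V}{\Gamma \times D}
\]
```

With $E$, $V$, and $\Gamma$ held constant, halving $D$ doubles the utility, which is the sense in which a shorter delay between action and reward raises motivation.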

There are several potential limitations to these interpretations of the findings. First, the examination policy changes were shared with prospective students during the bachelor ‘open days’, in the four months prior to the intervention. It is possible that these students anticipated the effects of the changes and modified their behaviours along dimensions not necessarily related to the new policy itself, the most obvious being the decision not to study at the particular university. Since there were initially some worries among staff and administrators that students would shun the university because of the new examination practices, 112 students were approached who initially signed up but then decided to join another university. Only two of them mentioned Nominal = Normal as the reason not to join (Baars et al. 2012). In addition, in pre-university education in the Netherlands resits are largely absent, and pass/fail decisions are made based on GPA. Therefore, first-year students, confronted with the intervention, had little difficulty adapting.

Second, it could be argued that it is not so much the abandonment of resits, or the introduction of a one-year deadline, but the introduction of compensatory decision practices that increases the likelihood that unfit students nevertheless proceed. However, compensatory decisions tend to be more accurate than conjunctive decisions (Chester 2003; Haladyna and Hess 1999; Yocarini et al. 2018) and the number of dropouts remained the same.

A third potential limitation of our study concerns our emphasis on limiting resits in battling procrastination in university students. We emphasized this hypothesis because there is direct evidence that students use resits to postpone studying (Ostermaier, Beltz, and Link 2013). Strictly speaking, however, Nominal = Normal does not only limit resits, but also changes the way decisions are made about student progress (compensatory rather than conjunctive), and confronts students with the one-year deadline within which their achievement should meet a minimum standard. There is evidence that setting a minimum performance standard in itself has effects on students’ progress. For instance, programs in which the minimum required GPA was raised from 5.5 to 6 saw improved performance on achievement tests (Adriaans et al. 2013; Kickert et al. 2018), whereas in a program that did not raise the bar, no such increase in performance was observed (Rol 2014). When the previous standard of 40 credits (out of 60) was introduced, Arnold (2012) demonstrated that students put effort into their studies until that standard was reached and then largely stopped further efforts. In the psychology curriculum, students on average collected more than 50 credits before the 40-credits practice was introduced. After the introduction of the 40-credits standard, the mean number of credits acquired decreased significantly (De Koning et al. 2014). Students seem to aim at the minimum level of performance required from them.
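The difference between conjunctive and compensatory decision rules referred to above can be illustrated with a minimal sketch. It rests on assumptions that are not taken from the study: the per-course pass mark of 5.5, the grade list, and the function names are hypothetical, and the actual Nominal = Normal regulations may include further conditions (for example, limits on how low a compensated grade may be). Only the GPA threshold of 6.0 is mentioned in the text.

```python
from statistics import mean

PASS_MARK = 5.5      # assumed per-course pass mark on the Dutch 1-10 scale
REQUIRED_GPA = 6.0   # minimum GPA mentioned in the text for most programs


def conjunctive_pass(grades, pass_mark=PASS_MARK):
    """Conjunctive rule: every course must be passed on its own."""
    return all(g >= pass_mark for g in grades)


def compensatory_pass(grades, required_gpa=REQUIRED_GPA):
    """Compensatory rule (simplified): only the mean grade counts,
    so a weak result can be offset by stronger ones."""
    return mean(grades) >= required_gpa


# Hypothetical first-year record: eight courses, one insufficient grade (5.0).
grades = [7.0, 6.5, 5.0, 8.0, 6.0, 6.5, 7.5, 5.5]

print("conjunctive:", conjunctive_pass(grades))
print("compensatory:", compensatory_pass(grades))
```

In this hypothetical record the single 5.0 blocks progress under the conjunctive rule, whereas the mean of 6.5 satisfies the compensatory rule without a resit.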

In conclusion, the studies presented here are the first to demonstrate the effect of changes in examination rules on study delay. Our findings are also the first to indicate that delays, as usually observed in higher education, are not necessarily the result of lack of ability. Nor are they the effect of some inherent personality disorder (Ferrari, Johnson, and McCown 1995; Steel 2007). When confronted with the new examination rules, a great majority of students who, under previous examination practices, would have delayed their studies proved able to rise to the occasion and to respond successfully to the challenge. It would be interesting to see what happens when an even higher standard is introduced in a curriculum, be it a higher GPA or a limitation of the time in which a minimum performance is required. These questions require further research. Answers would almost certainly contribute to a better understanding of the sources of procrastination in university students.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Henk G. Schmidt

Henk Schmidt is a professor of psychology at Erasmus University Rotterdam. Previously, he was the rector magnificus of this university. His areas of expertise include problem-based learning, long-term memory and expertise development. In addition, he is interested in student success in higher education.

Gerard J. A. Baars

Gerard Baars is director of the Netherlands Initiative of Education Research (NRO). NRO enhances the connection between education research on the one hand and educational practice and policy on the other hand. The research of Gerard Baars focuses on student success in higher education.

Peter Hermus

Peter Hermus is the manager of the High Tech Data Unit at Risbo, the research, training and consultancy center of Erasmus University Rotterdam. His work ranges from the use and analysis of existing data to complex data collections. He specializes in the application of registration systems use in (higher) education for research purposes.

Henk T. van der Molen

Henk van der Molen is professor of psychology at Erasmus University Rotterdam. Previously he was the Dean of the Erasmus School of Social and Behavioural Sciences at this university. His areas of expertise are professional communication skills training, problem-based learning, and educational assessment.

Ivo J. M. Arnold

Ivo Arnold is professor of economics education at Erasmus University Rotterdam and professor of monetary economics at Nyenrode Business Universiteit. In the past 15 years, he has been in charge of the educational programs at the Erasmus School of Economics. His main research interests are economics education and European monetary and financial integration.

Guus Smeets

Guus Smeets is a professor of psychology at Erasmus University Rotterdam. Previously he was director of education and chairman of the department of psychology, education and child studies. He has a special interest in assessment in higher education, problem-based learning, and effective learning strategies.

Notes

1 European universities use the European Credit Transfer System (ECTS) to enable students to acquire credits while studying elsewhere. By passing all examinations during a curriculum year, students receive 60 ECTS. Assume that the first curriculum year of a Dutch university’s bachelor curriculum consists of eight courses. The student will in such case receive 7.5 ECTS credits for each successfully completed course. If the student does not pass a particular course examination, he or she will not receive credits. However, there is the possibility to resit such examination. At the end of the first year, a minimum of 40 ECTS credits is required to be allowed to acquire the remaining 20 credits in a second year. If the student fails to reach the 40 credits limit, he or she has to leave the program. Different universities in the Netherlands use slightly different credit limits.

2 The bachelor of philosophy was not included because of the small number of students involved.

3 A majority of the programs, however, required a GPA of 6.0, as a precaution against false positives (Smits, Kelderman, and Hoeksma 2015).

References

- Adriaans, M., G. Baars, H. Van der Molen, and G. Smeets. 2013. “Betere studieresultaten dankzij ‘Nominaal is normaal’.” Thema Hoger Onderwijs 20 (1): 30–34.

- Ainslie, G. 1992. Picoeconomics: The Strategic Interaction of Successive Motivational States Within the Person. Cambridge, UK: Cambridge University Press.

- Arnold, I. J. M. 2012. “De BSA-norm: minimumeis of streefwaarde?” Tijdschrift voor Hoger Onderwijs & Management 3: 4–8.

- Arnold, I. 2017. “Resitting or Compensating a Failed Examination: Does It Affect Subsequent Results?” Assessment & Evaluation in Higher Education 42 (7): 1103–1117.

- Baars, G., M. Adriaans, B. Godor, P. Hermus, and P. van Wensveen. 2012. “Pilot ‘Nominaal = Normaal’ bij de Faculteit der Sociale Wetenschappen aan de Erasmus Universiteit Rotterdam.” Eindrapport. Retrieved from Rotterdam, the Netherlands: https://www.risbo.nl/r_expertise.php?n=publicaties.

- Bernal, J. L., S. Cummins, and A. Gasparrini. 2017. “Interrupted Time Series Regression for the Evaluation of Public Health Interventions: A Tutorial.” International Journal of Epidemiology 46 (1): 348–355.

- Bruinsma, M., and E. P. W. A. Jansen. 2009. “When Will I Succeed in My First-Year Diploma? Survival Analysis in Dutch Higher Education.” Higher Education Research & Development 28 (1): 99–114. doi:https://doi.org/10.1080/07294360802444396.

- Chester, M. D. 2003. “Multiple Measures and High-Stakes Decisions: A Framework for Combining Measures.” Educational Measurement: Issues and Practice 22 (2): 32–41. doi:https://doi.org/10.1111/j.1745-3992.2003.tb00126.x.

- Cook, T. D., and D. T. Campbell. 1979. Quasi-Experimentation: Design and Analysis for Field Settings. Vol. 3. Chicago, IL: Rand McNally.

- Day, V., D. Mensink, and M. O'Sullivan. 2000. “Patterns of Academic Procrastination.” Journal of College Reading and Learning 30 (2): 120–134.

- Dekkers, H. P., R. J. Bosker, and G. W. Driessen. 2000. “Complex Inequalities of Educational Opportunities. A Large-Scale Longitudinal Study on the Relation Between Gender, Social Class, Ethnicity and School Success.” Educational Research and Evaluation 6 (1): 59–82.

- De Koning, B. B., S. M. M. Loyens, R. M. J. P. Rikers, G. Smeets, and H. T. Van der Molen. 2014. “Impact of Binding Study Advice on Study Behavior and Pre-University Education Qualification Factors in a Problem-Based Psychology Bachelor Program.” Studies in Higher Education 39 (5): 835–847.

- Diver, P., and I. Martinez. 2015. “MOOCs as a Massive Research Laboratory: Opportunities and Challenges.” Distance Education 36 (1): 5–25. doi:https://doi.org/10.1080/01587919.2015.1019968.

- Ferrari, J. R., J. L. Johnson, and W. G. McCown. 1995. Procrastination and Task Avoidance: Theory, Research, and Treatment. Boston, MA: Springer.

- Ferrari, J. R., and D. M. Tice. 2000. “Procrastination as a Self-Handicap for Men and Women: A Task-Avoidance Strategy in a Laboratory Setting.” Journal of Research in Personality 34 (1): 73–83. doi:https://doi.org/10.1006/jrpe.1999.2261.

- Goldin, C., L. F. Katz, and I. Kuziemko. 2006. “The Homecoming of American College Women: The Reversal of the College Gender Gap.” Journal of Economic Perspectives 20 (4): 133–156.

- Haladyna, T., and R. Hess. 1999. “An Evaluation of Conjunctive and Compensatory Standard-Setting Strategies for Test Decisions.” Educational Assessment 6 (2): 129–153.

- Harackiewicz, J. M., K. E. Barron, J. M. Tauer, and A. J. Elliot. 2002. “Predicting Success in College: A Longitudinal Study of Achievement Goals and Ability Measures as Predictors of Interest and Performance from Freshman Year Through Graduation.” Journal of Educational Psychology 94 (3): 562.

- Howell, A. J., and K. Buro. 2009. “Implicit Beliefs, Achievement Goals, and Procrastination: A Mediational Analysis.” Learning and Individual Differences 19: 151–154. doi:https://doi.org/10.1016/j.lindif.2008.08.006.

- Howell, A. J., and D. C. Watson. 2007. “Procrastination: Associations with Achievement Goal Orientation and Learning Strategies.” Personality and Individual Differences 43 (1): 167–178. doi:https://doi.org/10.1016/j.paid.2006.11.017.

- Jacob, B. A. 2002. “Where the Boys Aren't: Non-Cognitive Skills, Returns to School and the Gender Gap in Higher Education.” Economics of Education Review 21 (6): 589–598.

- Jansen, E. P. W. A. 2004. “The Influence of the Curriculum Organization on Study Progress in Higher Education.” Higher Education 47 (4): 411–435. doi:https://doi.org/10.1023/b:high.0000020868.39084.21.

- Kickert, R., K. M. Stegers-Jager, M. Meeuwisse, P. Prinzie, and L. R. Arends. 2018. “The Role of the Assessment Policy in the Relation Between Learning and Performance.” Medical Education 52 (3): 324–335. doi:https://doi.org/10.1111/medu.13487.

- Kim, K. R., and E. H. Seo. 2015. “The Relationship Between Procrastination and Academic Performance: A Meta-Analysis.” Personality and Individual Differences 82: 26–33. doi:https://doi.org/10.1016/j.paid.2015.02.038.

- Klassen, R. M., L. L. Krawchuk, and S. Rajani. 2008. “Academic Procrastination of Undergraduates: Low Self-Efficacy to Self-Regulate Predicts Higher Levels of Procrastination.” Contemporary Educational Psychology 33 (4): 915–931. doi:https://doi.org/10.1016/j.cedpsych.2007.07.001.

- Kontopantelis, E., T. Doran, D. A. Springate, I. Buchan, and D. Reeves. 2015. “Regression Based Quasi-Experimental Approach when Randomisation Is Not an Option: Interrupted Time Series Analysis.” British Medical Journal 350: h2750.

- Linden, A. 2015. “Conducting Interrupted Time-Series Analysis for Single-and Multiple-Group Comparisons.” The Stata Journal 15 (2): 480–500.

- McKenzie, K., and R. Schweitzer. 2001. “Who Succeeds at University? Factors Predicting Academic Performance in First Year Australian University Students.” Higher Education Research & Development 20 (1): 21–33.

- McManus, I. C. 1992. “Does Performance Improve When Candidates Resit a Postgraduate Examination?” Medical Education 26 (2): 157–162.

- Meeuwisse, M., P. van Wensveen, and S. E. Severiens. 2011. “Tijd om te studeren. Een onderzoek naar tijdbesteding en studiesucces in leeromgevingen.” In Studiesucces in de Bachelor: Drie Onderzoeken Naar Factoren die Studiesucces in de Bachelor Verklaren, 88–123. The Hague: Ministerie van Onderwijs, Cultuur en Wetenschap.

- Munoz-Olano, J. F., and C. Hurtado-Parrado. 2017. “Effects of Goal Clarification on Impulsivity and Academic Procrastination of College Students.” Revista Latinoamericana De Psicologia 49 (3): 173–181. doi:https://doi.org/10.1016/j.rlp.2017.03.001.

- Ostermaier, A., P. Beltz, and S. Link. 2013. “Do University Policies Matter? Effects of Course Policies on Performance.” Paper presented at the Beiträge zur Jahrestagung des Vereins für Socialpolitik 2013: Wettbewerbspolitik und Regulierung in einer globalen Wirtschaftsordnung.

- Pell, G., K. Boursicot, and T. Roberts. 2009. “The Trouble with Resits … .” Assessment & Evaluation in Higher Education 34 (2): 243–251.

- Rekveld, I. J., and J. Starren. 1994. “Een examenregeling zonder compensatie in het Nederlandse hoger onderwijs? Een vergelijking tussen compensatie en conjunctie.” Tijdschrift voor Hoger Onderwijs 12 (4): 210–219.

- Ricketts, C. 2010. “A New Look at Resits: Are They Simply a Second Chance?” Assessment & Evaluation in Higher Education 35 (4): 351–356.

- Rol, M. 2014. Het effect van de ‘Nominaal is Normaal’-regeling op studieprestaties in het eerste studiejaar. Rotterdam.

- Roscigno, V. J., and J. W. Ainsworth-Darnell. 1999. “Race, Cultural Capital, and Educational Resources: Persistent Inequalities and Achievement Returns.” Sociology of Education, 158–178.

- Senécal, C., R. Koestner, and R. J. Vallerand. 1995. “Self-Regulation and Academic Procrastination.” Journal of Social Psychology 135: 607–619.

- Smits, N., H. Kelderman, and J. B. Hoeksma. 2015. “Een vergelijking van compensatoir en conjunctief toetsen in het hoger onderwijs.” Pedagogische Studiën 92: 275–286.

- StataCorp. 2019. Stata Statistical Software: Release 16. College Station, TX: StataCorp LLC.

- Steel, P. 2007. “The Nature of Procrastination: A Meta-Analytic and Theoretical Review of Quintessential Self-Regulatory Failure.” Psychological Bulletin 133 (1): 65–94. doi:https://doi.org/10.1037/0033-2909.133.1.65.

- Steel, P., and C. J. König. 2006. “Integrating Theories of Motivation.” Academy of Management Review 31 (4): 889–913.

- Van Den Berg, M. N., and W. H. A. Hofman. 2005. “Student Success in University Education: A Multi-Measurement Study of the Impact of Student and Faculty Factors on Study Progress.” Higher Education 50 (3): 413–446. doi:https://doi.org/10.1007/s10734-004-6361-1.

- Van der Hulst, M., and E. Jansen. 2002. “Effects of Curriculum Organisation on Study Progress in Engineering Studies.” Higher Education 43 (4): 489–506. doi:https://doi.org/10.1023/a:1015207706917.

- Van Eerde, W. 2000. “Procrastination: Self-Regulation in Initiating Aversive Goals.” Applied Psychology-an International Review-Psychologie Appliquee-Revue Internationale 49 (3): 372–389. doi:https://doi.org/10.1111/1464-0597.00021.

- Watson, D. C. 2001. “Procrastination and the Five-Factor Model: A Facet Level Analysis.” Personality and Individual Differences 30 (1): 149–158. doi:https://doi.org/10.1016/s0191-8869(00)00019-2.

- Wolters, C. A. 2003. “Understanding Procrastination from a Self-Regulated Learning Perspective.” Journal of Educational Psychology 95 (1): 179–187. doi:https://doi.org/10.1037/0022-0663.95.1.179.

- Yocarini, I. E., S. Bouwmeester, G. Smeets, and L. R. Arends. 2018. “Systematic Comparison of Decision Accuracy of Complex Compensatory Decision Rules Combining Multiple Tests in a Higher Education Context.” Educational Measurement: Issues and Practice 37: 24–39. doi:https://doi.org/10.1111/emip.12186.

- Zeegers, P. 2004. “Student Learning in Higher Education: A Path Analysis of Academic Achievement in Science.” Higher Education Research & Development 23 (1): 35–56.