ABSTRACT

A growing number of interdisciplinary degree programmes offered at comprehensive research universities aim to ensure that students gain interdisciplinary understanding, defined as knowledge and skills that provide them with the means to produce cognitive enhancements that would not be possible through monodisciplinary programmes. Previous studies suggest that interdisciplinary understanding comprises six main elements: knowledge of different disciplinary paradigms, knowledge of interdisciplinarity, reflection skills, critical reflection skills, communication skills, and collaboration skills. However, empirical evidence to support this conceptualised model is lacking. The current study therefore proposes an Interdisciplinary Understanding Questionnaire (24 items) to assess this model. Its construct validity and measurement invariance were tested among 505 first-year Bachelor’s students from different academic disciplines (e.g. humanities, science, social sciences). A (multigroup) confirmatory factor analysis confirmed the conceptualised model of interdisciplinary understanding, as well as measurement invariance across academic disciplines. Implications for educational practice, for instance regarding student assessment and quality assurance, are discussed.

Introduction

In Europe and the United States, the number of interdisciplinary study programmes at research universities has increased in recent years, at both the module and degree programme level, and in undergraduate and graduate studies (Borrego and Newswander 2010; Kurland et al. 2010; Lyall et al. 2015). Many research universities have explicitly included interdisciplinary education in their formal institutional strategies, and this number is still increasing (Lyall 2019; Lyall et al. 2015). The shift from monodisciplinary to multi- or interdisciplinary programmes in higher education is often motivated by arguments related to professional demands, but also by the need to prepare students to deal with complex societal issues (Blackmore and Kandiko 2012; Lyall et al. 2015; Manathunga, Lant, and Mellick 2006), or wicked problems, that mutate and evolve over time, creating vast uncertainty regarding both causality and effective solutions (Dentoni and Bitzer 2015; Roberts 2000). Some notable examples of wicked problems include the search for alternative energy sources, coping with climate change, and options for reducing social injustice. The complexity of these problems requires professionals with the capabilities to address them.

In our view, interdisciplinary education is one promising teaching approach that prepares students for participating in a complicated world. For example, the COVID-19 pandemic demonstrates that medical knowledge alone is not enough to deal with this situation. Social scientists could provide insight into human behaviours that contribute (or not) to a way out of this pandemic. People with a background in, for instance, law or economics are also needed to understand both the societal and the human consequences of pandemic-related policy. Aside from interdisciplinary approaches, detailed disciplinary academic knowledge is still needed, for example to carry out particular analyses or to develop (technical) solutions.

From an institutional perspective, interdisciplinary education is also a way to educate future interdisciplinary (research) leaders, in response to critiques that research universities tend to specialise highly (Manathunga, Lant, and Mellick 2006). Not surprisingly, many research universities have added interdisciplinary education to their institutional strategy (Lyall et al. 2015). The research university we included in our research project has also designated interdisciplinary education as one of its strategic goals. Several small-scale interdisciplinary education programmes were set up to develop and test interdisciplinary teaching approaches, to stimulate the professional development of lecturers, and to further examine the practical implications of interdisciplinary education within this comprehensive research university, which is organised mainly along disciplinary lines.

Given the increasing focus on interdisciplinary education, research universities need specific interdisciplinary teaching approaches that aim to teach students interdisciplinary understanding, defined as an ability to integrate knowledge from different academic fields and thereby contribute to complex problem-solving abilities (Boix Mansilla 2005; Boix Mansilla, Miller, and Gardner 2000; Graybill et al. 2006; Ivanitskaya et al. 2002; Klein and Newell 1997; Lattuca, Voigt, and Fath 2004; Weber and Khademian 2008).

Considering its prevalence, popularity, and potential to contribute to the social good, interdisciplinary education demands ongoing research consideration. However, thorough evaluations of the effects of interdisciplinary education programmes on student outcomes have not been commonly conducted, partly because a convenient instrument to measure students’ generic interdisciplinary progress is lacking. Accordingly, the current article seeks to take generic notions of interdisciplinary understanding as input to develop and validate an instrument to measure it, in the context of a comprehensive research university.

Specifically, we report on the validity and measurement invariance of an instrument to measure interdisciplinary understanding in the context of a Dutch comprehensive research university. Within this research university, high-performing students are allowed to pursue an interdisciplinary excellence programme, requiring 1260 hours of study over 2.5 years, on top of their enrolment in a regular Bachelor’s degree programme. The interdisciplinary excellence curriculum consists of interdisciplinary thematic and skills modules, and the student population within each module is multidisciplinary, so students from various disciplines are challenged to look beyond their own field of study to solve complex scientific and social issues. That is, the programme explicitly aims to ensure that students attain interdisciplinary learning outcomes. Until now, no well-supported method has been available to determine whether and to what degree they actually do so. With the instrument we propose, we suggest an effective measure of the success of interdisciplinary programmes.

Interdisciplinary understanding

Definition

In higher education, interdisciplinary learning outcomes have been conceptualised in several ways. Most conceptualisations share an emphasis on the use and integration of concepts, knowledge, and methods from more than one academic discipline, to enhance students’ understanding of some phenomenon or problem (e.g. Boix Mansilla, Miller, and Gardner 2000; Boix Mansilla 2005; Graybill et al. 2006; Ivanitskaya et al. 2002; Klein and Newell 1997; Lattuca, Voigt, and Fath 2004). We adopted the interdisciplinary understanding concept as a learning outcome of interdisciplinary education, defined by Boix Mansilla, Miller, and Gardner (2000, 17) as:

The capacity to integrate knowledge and modes of thinking in two or more disciplines or established areas of expertise to produce a cognitive enhancement—such as explaining a phenomenon, solving a problem, or creating a product—in ways that would have been impossible or unlikely through single disciplinary means.

Conceptualisation

In their review, Spelt et al. (2009) explore how interdisciplinary understanding has been conceptualised in previous research, which they then use to suggest its constitution. In particular, they argue that knowledge and skills components help students become proficient in interdisciplinary understanding. The first component consists of having knowledge of (1) academic disciplines, (2) different disciplinary paradigms, and (3) interdisciplinarity. For example, Spelt et al. (2009) propose that students need a substantive grounding in some academic discipline (i.e. knowledge of academic disciplines) to be able to recognise theory development. Other research, however, is more ambiguous about the importance of disciplinary grounding. Although some studies concur that students should be fluent in a particular academic discipline, as a foundation to continue into interdisciplinary education (Davies and Devlin 2007; Derrick et al. 2011), others recommend exposing students to various fields early in their education, to avoid the risk that they might get too stuck in one particular discipline (Lyall et al. 2015; MacKinnon, Hine, and Barnard 2013).

It would thus be interesting to assess whether early disciplinary grounding – i.e. having acquired the knowledge and skills of a specific academic discipline (Bachelor’s programme) – would be an advantage or disadvantage for the development of interdisciplinary understanding. However, the population we studied consisted of students who were all enrolled in a specific monodisciplinary Bachelor’s programme supplemented with an (optional) interdisciplinary programme. Therefore, we could not compare early disciplinary grounding to a situation in which students started in a broad interdisciplinary (Bachelor’s) programme.

Moreover, our focus on generic interdisciplinary learning outcomes prevented us from assessing substantive knowledge of disciplines, for example students’ knowledge of a certain theory used in a certain academic field. We therefore excluded the knowledge of disciplines component from our proposed model. Instead, we included the component knowledge of different disciplinary paradigms. This component refers to how knowledge is valued in the different academic disciplines. For example, in social sciences, knowledge can be negotiated while in physics knowledge is ‘fixed’ and needed to construct theories further. We propose that students need to be familiar with such different scientific paradigms to be able to make fruitful connections across disciplines and identify their similarities and dissimilarities.

In addition, we included the existing sense of the knowledge of interdisciplinarity component, which refers to an ability to integrate knowledge from different perspectives, such as by actively combining theories or methods from distinct academic disciplines (Spelt et al. 2009).

With regard to the skills component of interdisciplinary understanding, Spelt et al. (2009) offer theoretical arguments for the importance of (1) reflection skills, (2) critical reflection skills, and (3) communication skills as preconditions of interdisciplinary understanding. Although Spelt et al. refer to higher order skills, we prefer reflection skills and critical reflection skills as more adequate labels for the ability to evaluate beliefs and knowledge critically (Kember et al. 2000), explore experiences in ways that lead to broader understanding (Boyd and Fales 1983; Kember et al. 2000), and pursue deeper levels of thinking (Kember et al. 2000). This conceptualisation resonates with the prediction that cognitive enhancement results from interdisciplinary understanding (Boix Mansilla and Dawes Duraisingh 2007; Ivanitskaya et al. 2002; Woods 2007). Thus, (critical) reflection skills are important to attain innovative directions in thought or solutions, and communication skills are key for transferring such knowledge.

We also conducted a further literature review, beyond Spelt et al.’s (2009) effort, which led us to add collaboration skills to the skills dimension of interdisciplinary understanding. Increased calls for interdisciplinary education often cite the idea that interdisciplinary programmes should teach students to work together with people from different backgrounds, such that collaboration skills may be a relevant outcome of interdisciplinary education (e.g. Boni, Weingart, and Evenson 2009; Curşeu and Pluut 2013; Finlay et al. 2019; Little and Hoel 2011; MacLeod 2018; Richter, Paretti, and McNair 2009; Rienties and Héliot 2018). However, interdisciplinary courses do not automatically result in interdisciplinary collaboration among students (Rienties and Héliot 2018), because students tend to prefer collaborating with friends or students with a similar disciplinary background. We therefore added collaboration skills to our conceptualisation of interdisciplinary understanding.

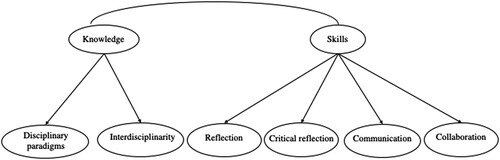

Despite the appeal of these theoretical arguments, empirical data to support this classification and definition are lacking (Spelt et al. 2009). We know of a few studies that attempted to develop an instrument that can track students’ progression in gaining interdisciplinary understanding (e.g. Lattuca, Knight, and Bergom 2012; Lattuca et al. 2017a, 2017b). However, most of those studies offer a relatively narrow perspective, focusing on a particular category of learning outcomes, such as assessing knowledge without considering skill-related learning outcomes. With our study, we aimed to develop a measurement instrument that comprises both knowledge and skills. Figure 1 presents a simplified version of our proposed conceptual model of the knowledge and skills components that constitute interdisciplinary understanding.

In addition to proposing this model, we validated it in the real-world setting of a comprehensive research university. In contrast to most prior research into interdisciplinary higher education, which concentrated on science and engineering students (e.g. Calatrava Moreno and Danowitz 2016; Lattuca et al. 2017a; Lyall et al. 2015; Mawdsley and Willis 2018; Spelt 2015; You, Marshall, and Delgado 2019), our study included students of all disciplinary backgrounds, spanning languages, law, medical sciences, psychology, science, and so on. In this manner, we were able to test whether our instrument is measurement invariant across disciplines and thus is applicable in the context of a comprehensive research university.

Method

Setting and participants

The current study was conducted in one of the largest comprehensive research universities in the Netherlands, where 32,000 students attend Bachelor’s and Master’s degree programmes covering all academic disciplines. In spring 2019, we administered the proposed Interdisciplinary Understanding Questionnaire (IUQ) (which we detail in the Instruments section) to 1020 first-year students, as part of a longitudinal research project dedicated to the academic development of high-performing Bachelor’s students. These students (best 10% of their monodisciplinary Bachelor’s programme) may apply to join an interdisciplinary excellence programme, in parallel with their Bachelor’s programme (1260 extracurricular hours, over a period of 2.5 years).

In 2019, 320 students started the interdisciplinary excellence programme, and they all received a paper-and-pencil questionnaire upon doing so. A group of 700 high-performing students who were not participating in the interdisciplinary excellence programme received an online invitation to complete the same questionnaire. In total, 505 students filled out at least one of the scales of the test battery. Students receiving the paper-and-pencil questionnaire were more inclined to complete it (response rate = 81%, n = 259) than students approached online (response rate = 35%, n = 246). Although the response rates are not equal, we consider both sufficient for the purpose of our validation analysis.
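The reported response rates follow directly from the counts given above. The short Python sketch below reproduces that arithmetic; the variable and function names are illustrative assumptions, and only the four counts come from the text.

```python
# Counts taken from the text: questionnaires distributed and returned per mode.
distributed = {"paper": 320, "online": 700}
returned = {"paper": 259, "online": 246}


def response_rate(n_returned: int, n_invited: int) -> float:
    """Return the response rate as a percentage."""
    return 100.0 * n_returned / n_invited


# Response rate per administration mode.
rates = {mode: response_rate(returned[mode], distributed[mode]) for mode in distributed}

# Pooled figures across both modes.
total_respondents = sum(returned.values())
overall_rate = response_rate(total_respondents, sum(distributed.values()))

for mode, rate in rates.items():
    print(f"{mode}: {rate:.0f}%")
print(f"total respondents: {total_respondents}, overall: {overall_rate:.1f}%")
```

The pooled rate is not reported in the text; it is computed here only as a by-product of the same counts.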

Among the respondents we included in our analysis, 51 percent were about to start the interdisciplinary excellence programme, and 49 percent were not. Furthermore, 62 percent of the participants were women, 37 percent were men, and 1 percent identified as non-binary. These students came from a wide range of academic disciplines (i.e. their Bachelor’s degree programme). For convenience, we created three clusters of academic disciplines, in accordance with a common division in the Netherlands, including humanities (arts, philosophy, theology and religious studies), science (natural sciences, engineering, medical sciences), and social sciences (economics, business, behavioural and social sciences, law, spatial sciences) (Table 1). The majority of the students were 19 (38%) or 20 (25%) years of age. Most of them had obtained their secondary school degree in the Netherlands (52%), Germany (18%), or elsewhere in Europe (19%), though some obtained it outside Europe (11%). With regard to gender and study background, the sample adequately reflects the wider student population of this research university. However, the sample included more international students than the general student population at this research university.

Table 1. Disciplinary background of respondents.

Instrument

To measure the level of interdisciplinary understanding among first-year Bachelor’s students, we developed the Interdisciplinary Understanding Questionnaire (IUQ), on the basis of our literature review and the previously discussed components that theoretically constitute the concept. The IUQ items measured students’ knowledge of different disciplinary paradigms, knowledge of interdisciplinarity, (critical) reflection skills, communication skills, and collaboration skills. Some items were derived from existing scales, but others were newly developed, as we detail next. For the 27 self-reported items, respondents indicated, on a 5-point Likert scale from (1) ‘very inaccurate’ to (5) ‘very accurate’, how accurately each item described them. The IUQ also included several background questions.

Knowledge of different disciplinary paradigms

Students indicated their knowledge of different disciplinary paradigms with three items derived from the Recognizing Disciplinary Perspectives Scale (Lattuca, Knight, and Bergom 2012; α = .68), which initially was developed to assess interdisciplinary competencies in undergraduate engineering education. An example item is: ‘I have a good understanding of the strengths and limitations of academic disciplines’. We rephrased one item to support its applicability to a wide range of academic disciplines (original item: ‘I’m good at figuring out what experts in different fields have missed in explaining a problem or proposing a solution’, revised to ‘I am good at figuring out what students in another field of study have missed in explaining a problem or solution’).

Knowledge of interdisciplinarity

The measure of knowledge of interdisciplinarity includes seven items obtained from the Interdisciplinary Skills Scale (Lattuca, Knight, and Bergom 2012; eight items, α = .79) that reveal the extent to which students integrate knowledge of other academic disciplines and of their own discipline while solving academic problems. This scale initially was developed in the field of engineering, so we rephrased all the items to ensure they apply to students regardless of their disciplinary background. For example, we revised the original item ‘I value reading about topics outside of engineering’ to ‘I often read about topics outside my own academic discipline’. We dropped one of the original eight items because it was impossible to formulate a version that could be used across disciplines (i.e. ‘Not all engineering problems have purely technical solutions’).

(Critical) Reflection skills

Kember et al.’s (2000) Reflection Scale provides the items to measure this skills-related dimension. The original scale sought to measure students’ tendency to consider their own beliefs and knowledge, actively and carefully, as well as their tendency to explore experiences in ways that support their broader understanding (Boyd and Fales 1983; Kember et al. 2000). This scale included four items (original α = .63). An example of an item we used is: ‘I often reflect on my actions to see whether I could have improved on what I did’.

In addition, we integrated the Critical Reflection Scale (Kember et al. 2000), which was designed to measure higher-level reflective thinking. That is, critical reflection goes beyond the Reflection Scale, by measuring a more thorough reflection process that leads to more profound thinking (Kember et al. 2000). This scale also consists of four items (original α = .68). For all eight items, we revised the stem, which previously read ‘As a result of this course’, to read ‘As a result of university education’. For example, we included this item: ‘As a result of university education, I have changed the way I look at myself’.

Communication skills

To measure communication skills, we integrated existing instruments with some self-developed items to create a five-item scale. For example, we rephrased one item that had been used to measure Master’s students’ ability to communicate academic theories to students outside their own academic field (McEwen et al. 2009). A second item came from a study of the learning outcomes of an interdisciplinary team-teaching method (Little and Hoel 2011), which asked students to rate their confidence in their ability to communicate effectively. We reformulated it to refer to a competency (‘I can communicate effectively about scientific theories with students outside my own field of study’). The newly developed items pertain to communication with non-experts and the ability to communicate discipline-specific content.

Collaboration skills

We measured students’ collaboration skills with an adapted version of a teamwork scale (α = .86), originally developed for interdisciplinary undergraduate engineering education (Knight 2012; Lattuca et al. 2011). The five items from the original scale were rephrased to fit the university-wide setting of our research. For example, ‘Please rate your ability to work in teams that include people from fields outside engineering’ became ‘I am good at working in teams that include students from outside my own field of study’.
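The α values quoted for the source scales are internal-consistency coefficients. As a minimal sketch, assuming they refer to Cronbach's alpha, the coefficient can be computed from a respondents-by-items table as k/(k − 1) × (1 − Σ item variances / variance of the sum score). The function name and the toy answers below are hypothetical and are not data from this study.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a table with one row per respondent, one column per item."""
    k = len(responses[0])                      # number of items in the scale
    items = list(zip(*responses))              # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])  # variance of sum scores
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of five respondents to a four-item scale (1-5 Likert).
toy = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.2f}")
```

Sample variances (n − 1 in the denominator) are used for both the items and the sum score, which is the usual convention; the toy data are deliberately very consistent, so the resulting value is much higher than the coefficients reported for the original scales.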

Background variables

Finally, we collected information about the respondents’ gender, age, education nationality (i.e. country where students obtained secondary school degree), the discipline of their Bachelor’s degree programme, and enrolment in the interdisciplinary programme.

Procedure

Before the data collection began, we gained ethical approval from the ethics committee of the primary researcher’s research department. All participants were informed about the research aim and provided explicit, informed consent before they started filling in the questionnaire. In accordance with the European Union General Data Protection Regulation, a privacy statement explained all privacy-related issues and informed participants about data storage and security.

Prior to the main study, we conducted a pilot study. First, we discussed a previous version of the IUQ through a think-out-loud procedure with two Bachelor’s students. Second, in fall 2018, we conducted a pilot study among 120 second-year Bachelor’s students to test both the data collection procedure and the questionnaire. The feedback led us to make some slight adjustments to several questions. Neither the think-out-loud procedure nor the pilot study among second-year students is presented further in the current article.

The data for the main study were collected in two ways. Students enrolled in the interdisciplinary excellence programme were visited by the principal investigator during a lecture at the start of the programme. She told students about the purpose of the study and invited them to participate. They received a paper questionnaire and could complete it on site or return it to the research institute later. A second group of respondents, who were not enrolled in the interdisciplinary excellence programme, received an invitation by e-mail and filled in the questionnaire digitally. They were engaged in more than 45 different Bachelor’s programmes, so it would have been impossible to solicit their participation in person.

Data analysis

The data analysis was conducted in SPSS Statistics 25 and R (version 4.0.2), using the R packages BDgraphs, haven, lavaan (0.6–7), semPlot, and zip. With SPSS, we organised the data set, reversed scores as needed, obtained cluster grouping variables, and calculated descriptive statistics. The (multigroup) confirmatory factor analyses were conducted in R/lavaan. Due to the length of the test battery, some participants did not complete the full IUQ. We did not want to delete respondents from our data set, so we chose to use the full information maximum likelihood (FIML) option, a relevant technique for handling missing data (Beaujean 2014). With FIML, each respondent contributes to the likelihood through the items he or she actually answered, and these casewise contributions are combined to obtain the final parameter estimates.
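Reversing a negatively worded item on the 5-point scale amounts to mirroring the answer around the scale midpoint. The Python sketch below illustrates that arithmetic only; it is not the SPSS syntax used in the study, and the function name and the choice to pass unanswered items through as `None` (so that they remain missing for a later FIML analysis) are assumptions.

```python
from typing import Optional

SCALE_MIN, SCALE_MAX = 1, 5  # 5-point Likert scale used by the IUQ


def reverse_score(value: Optional[int]) -> Optional[int]:
    """Mirror a Likert answer (1<->5, 2<->4, 3 stays 3); keep missing answers missing."""
    if value is None:
        return None  # unanswered item: leave as missing rather than imputing
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"answer {value} is outside the {SCALE_MIN}-{SCALE_MAX} scale")
    return SCALE_MIN + SCALE_MAX - value


# Hypothetical answers to one reversed item, including a skipped answer.
raw_answers = [1, 4, None, 3, 5]
reversed_answers = [reverse_score(a) for a in raw_answers]
print(reversed_answers)
```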

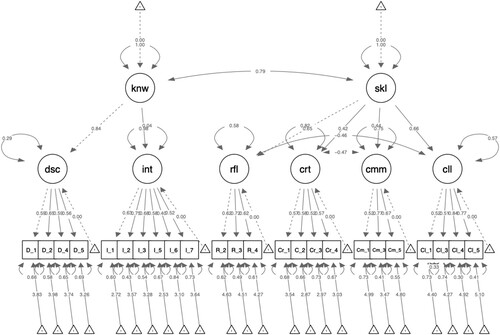

We conducted both a confirmatory factor analysis (CFA) and a multigroup confirmatory factor analysis (MGCFA) to assess the conceptualised model of interdisciplinary understanding.

Confirmatory factor analysis

We first established a satisfactory first-order model and then proceeded to a higher-order model. We preferred a modelling approach over a statistical approach for this evaluation because χ² values are sensitive to sample size (Beaujean 2014; Meade, Johnson, and Braddy 2008). We applied four fit indices (Bentler 1990; Brown 2015; Hu and Bentler 1999; Kline 2011): the Tucker–Lewis index (TLI), which should be greater than .95; the comparative fit index (CFI), which preferably exceeds .90; and the standardised root mean square residual (SRMR) and root mean square error of approximation (RMSEA), both of which should be smaller than .08 and preferably smaller than .05. For completeness, we also report the model χ². Furthermore, we evaluated each modification in two steps. First, we inspected the factor loadings and removed non-significant indicators and/or indicators with a standardised factor loading smaller than .40. Second, we added covariances between error terms, based on post hoc modification indices.
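The four cut-offs listed above can be read as a simple checklist. The Python sketch below encodes them as stated in the text; the class, the function, and the example values are hypothetical illustrations and do not reproduce the fit of any model in this study.

```python
from dataclasses import dataclass


@dataclass
class FitIndices:
    """Fit indices of one fitted CFA model."""
    cfi: float
    tli: float
    rmsea: float
    srmr: float


def evaluate_fit(fit: FitIndices) -> dict[str, bool]:
    """Check each index against the cut-offs named in the text."""
    return {
        "CFI > .90": fit.cfi > 0.90,
        "TLI > .95": fit.tli > 0.95,
        "RMSEA < .08": fit.rmsea < 0.08,
        "SRMR < .08": fit.srmr < 0.08,
    }


# Hypothetical model: acceptable on every index except the TLI.
example = FitIndices(cfi=0.93, tli=0.94, rmsea=0.045, srmr=0.050)
checks = evaluate_fit(example)
for criterion, passed in checks.items():
    print(f"{criterion}: {'met' if passed else 'not met'}")
print("all criteria met:", all(checks.values()))
```

Treating the cut-offs as strict pass/fail rules is a simplification; the text itself distinguishes between values that are required and values that are merely preferred.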

Measurement invariance

We conducted MGCFA to examine measurement invariance by testing ‘whether or not under different conditions of observing and studying phenomena, measurements yield measures of the same attributes’ (Horn and McArdle 1992, 117). If invariance holds, differences between groups or individuals can be interpreted as actual differences, not artefacts caused by, for example, their different interpretations of the instrument (Beaujean 2014). MGCFA is related to construct bias, because it is a method to verify that a questionnaire measures the same construct in different groups (Kline 2011). We checked the model’s measurement invariance by using respondents’ disciplinary background, namely humanities (i.e. arts, philosophy, theology and religious studies), science (i.e. natural sciences, engineering, medical sciences), and social sciences (i.e. economics, business, behavioural and social sciences, law, spatial sciences), as a grouping variable.

We tested four levels of measurement invariance: configural, metric, scalar and error variance invariance (Vandenberg and Lance 2000). Configural invariance indicates that the same latent variable model applies to all three disciplinary groups: both the number of factors and the pattern of factor-indicator relationships are equal (Kline 2011). Next, to test for metric invariance (also called ‘weak invariance’ [Beaujean 2014]), we constrained the indicator loadings to be equal across groups (Vandenberg and Lance 2000). In the scalar invariance (also called ‘strong invariance’ [Beaujean 2014]) model, the intercepts are forced to be identical across groups too (Vandenberg and Lance 2000). Scalar invariance is a requirement to allow score comparison across groups. Finally, the test of error variance invariance (also called ‘strict invariance’ [Beaujean 2014]) constrains the error variances to be equivalent as well (Vandenberg and Lance 2000).
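The four levels can be summarised as successively stricter equality constraints on a standard multigroup factor model. The notation below is an assumption introduced for illustration (it does not appear in the original): for group g, x denotes the vector of item scores, τ the intercepts, Λ the loading matrix, ξ the latent factors, and Θ the covariance matrix of the error terms δ.

```latex
\begin{align*}
  &\text{Measurement model in group } g:
    && x^{(g)} = \tau^{(g)} + \Lambda^{(g)} \xi^{(g)} + \delta^{(g)},
       \qquad \Theta^{(g)} = \operatorname{Cov}\bigl(\delta^{(g)}\bigr) \\
  &\text{Configural:}
    && \Lambda^{(1)}, \Lambda^{(2)}, \Lambda^{(3)}
       \text{ share the same pattern of zero and non-zero loadings} \\
  &\text{Metric (weak):}
    && \Lambda^{(1)} = \Lambda^{(2)} = \Lambda^{(3)} \\
  &\text{Scalar (strong):}
    && \text{metric constraints and } \tau^{(1)} = \tau^{(2)} = \tau^{(3)} \\
  &\text{Error variance (strict):}
    && \text{scalar constraints and } \Theta^{(1)} = \Theta^{(2)} = \Theta^{(3)}
\end{align*}
```

Here g = 1, 2, 3 stands for the humanities, science, and social sciences clusters. Each level keeps the constraints of the previous one, which is why the models are compared sequentially.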

Invariance model testing entails hierarchical modelling, so each step needs to be compared with the previous level of tested invariance (Beaujean 2014). In our modelling approach, we used the RMSEA to evaluate adequate fit and the change in the CFI to compare models against one another (Meade, Johnson, and Braddy 2008). Any changes in the RMSEA should be within a margin of .015 (Chen 2007); a change in the CFI that is equal to or less than .01 suggests the model is invariant relative to the previous version (Cheung and Rensvold 2002).
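The comparison rule described above can be expressed as a small decision function. The Python sketch below is an illustration under the stated margins (.01 for the CFI, .015 for the RMSEA); the function name, the use of absolute changes, and the example values are assumptions.

```python
def is_invariant(
    delta_cfi: float,
    delta_rmsea: float,
    cfi_margin: float = 0.01,
    rmsea_margin: float = 0.015,
) -> bool:
    """Return True when both changes relative to the previous model stay within the margins."""
    return abs(delta_cfi) <= cfi_margin and abs(delta_rmsea) <= rmsea_margin


# Hypothetical changes between two nested invariance models.
print(is_invariant(delta_cfi=0.004, delta_rmsea=0.001))  # small changes: constraints tenable
print(is_invariant(delta_cfi=0.020, delta_rmsea=0.001))  # CFI drops too much: not invariant
```

Because the models are nested, the function would be applied once per step (configural to metric, metric to scalar, scalar to strict), stopping at the first step that fails.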

Results

Confirmatory factor analysis

We started by building the hypothesised first-order model and its six factors (knowledge of different disciplinary paradigms, knowledge of interdisciplinarity, reflection skills, critical reflection skills, communication skills, collaboration skills), measured by 27 items. The results showed that the data did not adequately fit the model (see Table 2). Three indicators had low factor loadings (below .40): one of the reflection items (‘I sometimes question the way others do something and try to think of a better way’, λ = .21), one item from the knowledge of interdisciplinarity scale (‘I can use what I have learned in an academic course in another non-academic setting’, λ = .31), and one communication scale item (‘I find it difficult to explain what my Bachelor’s study is about to students outside my own field of study’ (reversed), λ = .30).

Table 2. Fit indices for subsequent models.

We deleted the reflection item and reran the CFA, but the model fit indices again suggested that it could be further improved. All factor loadings were significant, but the two previously mentioned communication and interdisciplinarity items still had low loadings. We therefore eliminated the communication item and ran a third model. It approached the thresholds for sufficient model fit, with the exception of the TLI scores. We therefore deleted the interdisciplinarity item in a fourth run. With this version of the model, all factor loadings exceeded the cut-off score of .40. However, when we assessed the covariances across error terms, the modification indices of this fourth model suggested adding covariance between the errors of two collaboration skills items. With this alteration, we created and tested a fifth version of the first-order model. Its TLI score exceeded .95, so it met all the model fit criteria.
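The screening of weak indicators can be illustrated with the three loadings reported for the first model. The Python sketch below flags items under the .40 cut-off and lists them from weakest to strongest; the short item labels are assumptions, and the loadings are those of the initial model only (they change once the model is re-estimated after each removal).

```python
LOADING_CUTOFF = 0.40

# Standardised loadings of the three weak indicators in the initial first-order model.
initial_loadings = {
    "reflection item": 0.21,
    "interdisciplinarity item": 0.31,
    "communication item (reversed)": 0.30,
}

# Flag every indicator below the cut-off, weakest first.
flagged = sorted(
    (name for name, loading in initial_loadings.items() if loading < LOADING_CUTOFF),
    key=initial_loadings.get,
)

for name in flagged:
    print(f"{name}: lambda = {initial_loadings[name]:.2f}")
```

In the analysis itself the items were removed one at a time, with the model refitted in between, rather than all at once.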

We continued the testing procedure by adding two higher-order factors for knowledge and skills. The fit indices of this higher-order model affirmed that the data fitted the proposed model quite well (i.e. CFI reached the cut-off score of .90, the TLI value approached the .95 threshold, and both RMSEA and SRMR remained below .08). However, the factor loading of the first-order critical reflection factor turned out to be low, scoring less than .40. After examining the modification indices, we tried to increase its loading by adding covariance between critical reflection and the communication factor, and the altered model met most goodness-of-fit criteria. However, we did not attain .95 for the TLI. In another attempt to improve the model, we added covariance between the collaboration and reflection factors, as suggested by the modification indices. This third version of our higher-order model resulted in a model that reached the preferred TLI cut-off threshold.

The final higher-order model confirmed the presence of two higher-order latent factors (knowledge and skills) and six underlying latent factors (knowledge of different disciplinary paradigms, knowledge of interdisciplinarity, reflection skills, critical reflection skills, communication skills, and collaboration skills). We also note two covariances between the error terms of latent factors, between critical reflection and communication and then between collaboration and reflection. The model parameters are displayed in Table A4, Appendix A, and we offer a graphical representation of the model in Figure A2, Appendix B.

Measurement invariance

We tested the model’s measurement invariance through MGCFA. The test for configural invariance indicates good model fit (CFI = .911; RMSEA = .049), such that the same latent variable model applies to all three education domains (Table 3). In support of metric invariance, the change in CFI and RMSEA remained within the change margins (ΔCFI = .010; ΔRMSEA = .001). The indicator loadings thus appear equal across groups. We also confirm identical intercepts with the scalar invariance test (ΔCFI = .004; ΔRMSEA < .001). The model passes the error variance invariance test too (ΔCFI = .009; ΔRMSEA = .001), so the error variances are equivalent. The CFI of this final model is close to the threshold (CFI = .888), and the RMSEA indicates good model fit (RMSEA = .051). In summary, the measurement invariance tests affirm that the construct interdisciplinary understanding is measured equally for the three academic fields (humanities, science, social sciences).
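The sequence of invariance tests can be retraced from the reported values. The sketch below is illustrative Python, not the software used for the MGCFA. It assumes that each reported ΔCFI is a decrease and each ΔRMSEA an increase relative to the preceding model, that the scalar step's ΔRMSEA (reported only as < .001) can be set to 0 for the arithmetic, and that the change margins are ΔCFI ≤ .010 and ΔRMSEA ≤ .015, which are commonly used values and may differ from the exact margins applied in this study. The function and variable names are hypothetical.

```python
def invariance_steps(baseline_cfi, baseline_rmsea, steps,
                     max_dcfi=0.010, max_drmsea=0.015):
    """Accumulate reported changes and flag whether each step stays
    within the assumed change margins."""
    rows = []
    cfi, rmsea = baseline_cfi, baseline_rmsea
    for name, dcfi, drmsea in steps:
        cfi = round(cfi - dcfi, 3)        # assumed: CFI drops as constraints are added
        rmsea = round(rmsea + drmsea, 3)  # assumed: RMSEA rises as constraints are added
        within = dcfi <= max_dcfi and drmsea <= max_drmsea
        rows.append((name, cfi, rmsea, within))
    return rows


# Values reported in the text; 0.000 stands in for "< .001".
reported_steps = [
    ("metric", 0.010, 0.001),
    ("scalar", 0.004, 0.000),
    ("error variance", 0.009, 0.001),
]

for row in invariance_steps(0.911, 0.049, reported_steps):
    print(row)
# The last row gives CFI = 0.888 and RMSEA = 0.051, matching the final model.
```

Under these assumptions, all three steps stay within the margins, and the accumulated values reproduce the fit of the final model reported above.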

Table 3. Fit indices for measurement invariance.

Discussion

We report on the careful validation of an instrument to measure research university students’ level of interdisciplinary understanding. As our first research aim, we sought to develop and validate an effective, self-reported instrument, in the context of a comprehensive research university. In addition, we tested its measurement invariance across students who focus on humanities, science, and social sciences domains.

In line with Spelt et al.’s (2009) review findings regarding the knowledge and skills dimensions of interdisciplinary understanding, we compiled a measurement instrument with previously used items (Kember et al. 2000; Knight 2012; Lattuca et al. 2011, 2012; Little and Hoel 2011; McEwen et al. 2009) and newly developed items, which we tested among 505 first-year Bachelor’s students. Confirmatory factor analyses support our conceptualised model of interdisciplinary understanding. That is, we identify two higher-order latent factors, knowledge and skills. In addition, we specify six underlying latent factors. The knowledge higher-order factor comprises, as expected, knowledge of different disciplinary paradigms and knowledge of interdisciplinarity. The skills higher-order factor in turn consists of reflection skills, critical reflection skills, communication skills, and collaboration skills. The final version of the IUQ includes 24 items. To the best of our knowledge, this article offers the first attempt to confirm theoretical claims about the structure of interdisciplinary understanding with empirical data.

Our check of whether this proposed measurement instrument can be unambiguously interpreted across groups also demonstrates its configural, metric, scalar, and error variance invariance across students from three different groups of academic disciplines. That is, differences that arise across groups of individuals are likely caused by existing group differences, and not by ambiguous interpretations of the questionnaire or other unobserved effects (Beaujean 2014).

Limitations and further research

Several limitations mark this study. We collected study data from high-achieving students. Although we have no evidence to suggest that interdisciplinary understanding is structured differently among students with varying levels of achievement, it would be pertinent to conduct complementary research with a more diverse population consisting of students with different achievement levels.

Our participants were all first-year students, reflecting the goals of a broader project in which the current study will serve as a baseline measure for longitudinal research. Students who participated had not had any substantial experience in interdisciplinary education at the university level, so their answers to the interdisciplinary understanding questionnaire indicate their likely propensity for this ability. For this reason, only the structure of the underlying model and its measurement invariance are discussed in the current article.

In future research, we will administer the IUQ among more advanced students and also track student progression over the course of their study programme. Insight into students’ maturation will contribute to a better understanding of students’ interdisciplinary literacy; the repeated measurement also might provide insight into which aspects of interdisciplinary understanding students have developed to greater or lesser extents. In turn, we plan to provide teachers and administrators with relevant information for improving the curriculum.

Another potential concern relates to the self-reported questionnaire. Such data collection efforts are appealing because they take less time, compared with standardised testing or observations. However, it might be helpful to develop additional instruments to measure students’ interdisciplinary understanding. Observations of students’ actions in interdisciplinary course work or serious games might be informative, though these methods also are difficult to deploy on a large scale, time-consuming, and costly.

In our conceptual model we excluded students’ knowledge of academic disciplines, which we conceive as a substantive grounding in some academic discipline. In the context of our study, it was impossible to measure grounding in a particular discipline since our population was enrolled in 45 different Bachelor’s programmes spanning languages, law, medical sciences, psychology, science, and so on. Moreover, at the time of our study, students had only had courses in their Bachelor’s discipline for six months. Nevertheless, we suggest investigating whether early disciplinary grounding – i.e. gaining knowledge and skills in a specific academic discipline (Bachelor’s programme) – would be an advantage or disadvantage for the development of interdisciplinary understanding, for instance in students’ Master’s phase. This could be done, for example, by adding a measurement instrument that determines students’ level of disciplinary knowledge within a particular academic field.

Finally, the aim of the current study was to validate the Interdisciplinary Understanding Questionnaire and to test its measurement invariance. The actual scores participants obtained on the questionnaire were not reported, because they were outside the scope of our validation research. In future research, we plan to use our questionnaire to explore students’ interdisciplinary achievement in subsequent years and to analyse whether and how student characteristics influence scores on interdisciplinary understanding.

Practical implications

Our research supports the hypothesis that interdisciplinary understanding as a learning outcome entails both knowledge-related and skills-related components. Both should therefore be addressed in interdisciplinary teaching and learning practice. We suggest incorporating them in the learning goals of interdisciplinary education programmes and in their teaching approaches. Thus, an interdisciplinary degree programme should not only focus on topics that require an interdisciplinary approach, but should also explicitly pay attention to knowledge outcomes regarding differences between research paradigms, research methods and approaches used in diverse academic fields, and how these could be actively used in the interdisciplinary classroom. In addition, skills-related learning outcomes – for instance communication and collaboration in interdisciplinary settings – should have their place within the interdisciplinary curriculum.

The IUQ might be a useful instrument to assess students’ progression in interdisciplinary study programmes. It could be used as a formative assessment tool to measure students’ generic interdisciplinary knowledge and skills each year. In addition, the questionnaire might generate useful insight for interdisciplinary programme evaluation. It can provide insight into the extent to which students reach the goals of interdisciplinary study programmes and which components of the programme might be further improved. Lecturers and academic management staff could in this way review whether interdisciplinary knowledge and skills are adequately taught throughout the curriculum.

Conclusion

On the basis of our effort to establish an instrument to measure students’ interdisciplinary understanding in the context of a comprehensive research university, we present the Interdisciplinary Understanding Questionnaire, consisting of 24 items with good construct validity. The instrument achieves measurement invariance across groups of students from three categories of academic disciplines. Any differences found between these groups are therefore unlikely to stem from the instrument itself or from varying interpretations of its items, so the groups can be compared meaningfully. Thus, our study expands the current knowledge base by providing an empirical validation of an existing conceptual model of interdisciplinary understanding.

The resulting measurement instrument offers a good starting point for continued research into the learning outcomes of interdisciplinary education in general. More specifically, it is a promising starting point for our longitudinal research into the effectiveness of an interdisciplinary excellence programme in a comprehensive research university. We believe our research contributes to the continued effort to educate students who can take interdisciplinary approaches to the wicked problems that confront today's complex society.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Jennifer E. Schijf

Jennifer (J.E.) Schijf MSc is a PhD candidate at the Department of Teacher Education of the University of Groningen, the Netherlands. She graduated from the University of Amsterdam with degrees in Educational Sciences (BSc, Research MSc) and Sociology (BSc). Her research interests lie in higher education, interdisciplinary education and student success. Besides her research activities, she has worked as a policy officer at several Dutch research universities.

Greetje P.C. van der Werf

Greetje (M.P.C.) van der Werf PhD is Professor Emeritus of the University of Groningen, the Netherlands. Before her retirement in June 2019, she was Full Professor of Learning and Instruction at the department GION education/research. During her working life, she coordinated several national large-scale, multilevel longitudinal studies in primary and secondary education, as well as the Dutch part of one of the IEA studies on Civics and Citizenship Education. She published a large number of articles on the effects of schooling on students’ achievement and long-term attainment. Her main research expertise is the influence of students’ psychological attributes, including personality traits, achievement motivation, social comparison and peer relations, on students’ achievement and success in their school career. Currently, she is still Editor-in-Chief of the international journal Educational Research and Evaluation.

Ellen P.W.A. Jansen

Ellen (E.P.W.A.) Jansen PhD holds the position of Associate Professor in Higher Education within the Department of Teacher Education at the University of Groningen in Groningen, the Netherlands. Her expertise relates to the fields of teaching and learning, curriculum development, factors related to excellence and study success, social (policy) research, and quality assurance in higher education. She has supervised national and international PhD students in the field of higher education.

References

- Beaujean, A. A. 2014. Latent Variable Modelling Using R: A Step-by-Step Guide. Routledge. doi:10.4324/9781315869780.

- Bentler, P. M. 1990. “Comparative Fit Indexes in Structural Models.” Psychological Bulletin 107 (2): 238–246. doi:10.1037/0033-2909.107.2.238.

- Blackmore, P., and C. B. Kandiko. 2012. Strategic Curriculum Change in Universities: Global Trends. Routledge. doi:10.4324/9780203111628.

- Boix Mansilla, V. 2005. “Assessing Student Work at Disciplinary Crossroads.” Change: The Magazine of Higher Learning 37 (1): 14–21. doi:10.3200/CHNG.37.1.14-21.

- Boix Mansilla, V., and E. Dawes Duraisingh. 2007. “Targeted Assessment of Students’ Interdisciplinary Work: An Empirically Grounded Framework Proposed.” Journal of Higher Education 78 (2): 215–237. doi:10.1353/jhe.2007.0008.

- Boix Mansilla, V., W. C. Miller, and H. Gardner. 2000. “On Disciplinary Lenses and Interdisciplinary Work.” In Interdisciplinary Curriculum: Challenges to Implementation, edited by S. S. Wineburg, and P. Grossman, 17–38. New York, NY: Teachers College Press.

- Boni, A. A., L. R. Weingart, and S. Evenson. 2009. “Innovation in an Academic Setting: Designing and Leading a Business Through Market-Focused, Interdisciplinary Teams.” Academy of Management Learning & Education 8 (3): 407–417. doi:10.5465/amle.8.3.zqr407.

- Borrego, M., and L. K. Newswander. 2010. “Definitions of Interdisciplinary Research: Toward Graduate-Level Interdisciplinary Learning Outcomes.” Review of Higher Education 34 (1): 61–84. https://muse.jhu.edu/article/395602.

- Boyd, E. M., and A. W. Fales. 1983. “Reflective Learning: Key to Learning from Experience.” Journal of Humanistic Psychology 23 (2): 99–117. doi:10.1177/0022167883232011.

- Brown, T. A. 2015. Confirmatory Factor Analysis for Applied Research. New York, NY: Guilford Publications.

- Calatrava Moreno, M. D. C., and M. A. Danowitz. 2016. “Becoming an Interdisciplinary Scientist: An Analysis of Students’ Experiences in Three Computer Science Doctoral Programmes.” Journal of Higher Education Policy and Management 38 (4): 448–464. doi:10.1080/1360080X.2016.1182670.

- Chen, F. F. 2007. “Sensitivity of Goodness of Fit Indexes to Lack of Measurement Invariance.” Structural Equation Modelling 14 (3): 464–504. doi:10.1080/10705510701301834.

- Cheung, G. W., and R. B. Rensvold. 2002. “Evaluating Goodness-of-Fit Indexes for Testing Measurement Invariance.” Structural Equation Modelling 9 (2): 233–255. doi:10.1207/S15328007SEM0902_5.

- Curşeu, P. L., and H. Pluut. 2013. “Student Groups as Learning Entities: The Effect of Group Diversity and Teamwork Quality on Groups’ Cognitive Complexity.” Studies in Higher Education 38 (1): 87–103. doi:10.1080/03075079.2011.565122.

- Davies, M., and M. T. Devlin. 2007. Interdisciplinary Higher Education: Implications for Teaching and Learning. Melbourne: Centre for the Study of Higher Education. https://www.academia.edu/download/4745290/davies-and-devlin_interdisciplinary_higher_ed__implications_2007.pdf.

- Dentoni, D., and V. Bitzer. 2015. “The Role(s) of Universities in Dealing with Global Wicked Problems Through Multi-Stakeholder Initiatives.” Journal of Cleaner Production 106: 68–78. doi:10.1016/j.jclepro.2014.09.050.

- Derrick, E. G., H. J. Falk-Krzesinski, M. R. Roberts, and S. Olson. 2011. “Facilitating Interdisciplinary Research and Education: A Practical Guide.” Report from the ‘Science on FIRE: Facilitating Interdisciplinary Research and Education’ workshop of the American Association for the Advancement of Science. http://www.aaas.org/sites/default/files/reports/Interdisciplinary%20Resarch%20Guide.pdf.

- Finlay, J. M., H. Davila, M. O. Whipple, E. M. McCreedy, E. Jutkowitz, A. Jensen, and R. A. Kane. 2019. “What We Learned Through Asking about Evidence: A Model for Interdisciplinary Student Engagement.” Gerontology & Geriatrics Education 40 (1): 90–104. doi:10.1080/02701960.2018.1428578.

- Graybill, J. K., S. Dooling, V. Shandas, J. Withey, A. Greve, and G. L. Simon. 2006. “A Rough Guide to Interdisciplinarity: Graduate Student Perspectives.” BioScience 56 (9): 757–763. doi:10.1641/0006-3568(2006)56[757:ARGTIG]2.0.CO;2.

- Horn, J. L., and J. J. McArdle. 1992. “A Practical and Theoretical Guide to Measurement Invariance in Aging Research.” Experimental Aging Research 18 (3): 117–144. doi:10.1080/03610739208253916.

- Hu, L. T., and P. M. Bentler. 1999. “Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria versus New Alternatives.” Structural Equation Modelling: A Multidisciplinary Journal 6 (1): 1–55. doi:10.1080/10705519909540118.

- Ivanitskaya, L., D. Clark, G. Montgomery, and R. Primeau. 2002. “Interdisciplinary Learning: Process and Outcomes.” Innovative Higher Education 27 (2): 95–111. doi:10.1023/A:1021105309984.

- Kember, D., D. Y. Leung, A. Jones, A. Y. Loke, J. McKay, K. Sinclair, T. Harrison, et al. 2000. “Development of a Questionnaire to Measure the Level of Reflective Thinking.” Assessment & Evaluation in Higher Education 25 (4): 381–395. doi:10.1080/713611442.

- Klein, J. T., and W. H. Newell. 1997. “Advancing Interdisciplinary Studies.” In Handbook of the Undergraduate Curriculum: A Comprehensive Guide to Purposes, Structures, Practices, and Change, edited by J. G. Gaff, and J. L. Ratcliff, 393–415. San Francisco, CA: Jossey-Bass Publishers.

- Kline, R. B. 2011. Principles and Practice of Structural Equation Modelling. 3rd ed. New York, NY: Guilford Press.

- Knight, D. B. 2012. “In Search of the Engineers of 2020: An Outcomes-Based Typology of Engineering Undergraduates.” In 2012 ASEE Annual Conference & Exposition, 25–757. https://peer.asee.org/in-search-of-the-engineers-of-2020-an-outcomes-based-typology-of-engineering-undergraduates.pdf.

- Kurland, N. B., K. E. Michaud, M. Best, E. Wohldmann, H. Cox, K. Pontikis, and A. Vasishth. 2010. “Overcoming Silos: The Role of an Interdisciplinary Course in Shaping a Sustainability Network.” Academy of Management Learning & Education 9 (3): 457–476. doi:10.5465/AMLE.2010.53791827.

- Lattuca, L. R., D. Knight, and I. Bergom. 2012. “Developing a Measure of Interdisciplinary Competence.” International Journal of Engineering Education 29 (3): 726–739. https://peer.asee.org/developing-a-measure-of-interdisciplinary-competence-for-engineers.

- Lattuca, L. R., D. B. Knight, H. K. Ro, and B. J. Novoselich. 2017a. “Supporting the Development of Engineers’ Interdisciplinary Competence.” Journal of Engineering Education 106 (1): 71–97. doi:10.1002/jee.20155.

- Lattuca, L. R., D. Knight, T. A. Seifert, R. D. Reason, and Q. Liu. 2017b. “Examining the Impact of Interdisciplinary Programmes on Student Learning.” Innovative Higher Education 42 (4): 337–353. doi:10.1007/s10755-017-9393-z.

- Lattuca, L. R., L. C. Trautvetter, S. L. Codd, D. B. Knight, and C. M. Cortes. 2011. “Working as a Team: Enhancing Interdisciplinarity for the Engineer of 2020.” In 2011 ASEE Annual Conference & Exposition, 22–1711. https://peer.asee.org/working-as-a-team-enhancing-interdisciplinarity-for-the-engineer-of-2020.

- Lattuca, L. R., L. J. Voigt, and K. Q. Fath. 2004. “Does Interdisciplinarity Promote Learning? Theoretical Support and Researchable Questions.” Review of Higher Education 28 (1): 23–48. doi:10.1353/rhe.2004.0028.

- Little, A., and A. Hoel. 2011. “Interdisciplinary Team Teaching: An Effective Method to Transform Student Attitudes.” Journal of Effective Teaching 11 (1): 36–44.

- Lyall, C. 2019. Being an Interdisciplinary Academic: How Institutions Shape University Careers. Springer. doi:10.1007/978-3-030-18659-3.

- Lyall, C., L. Meagher, J. Bandola, and A. Kettle. 2015. “Interdisciplinary Provision in Higher Education.” University of Edinburgh. https://www.research.ed.ac.uk/portal/en/publications/interdisciplinary-provision-in-higher-education(b30a7652-0af5-47e7-a9ac-11db0b3392bd).html.

- MacKinnon, P. J., D. Hine, and R. T. Barnard. 2013. “Interdisciplinary Science Research and Education.” Higher Education Research & Development 32 (3): 407–419. doi:10.1080/07294360.2012.686482.

- MacLeod, M. 2018. “What Makes Interdisciplinarity Difficult? Some Consequences of Domain Specificity in Interdisciplinary Practice.” Synthese 195 (2): 697–720. doi:10.1007/s11229-016-1236-4.

- Manathunga, C., P. Lant, and G. Mellick. 2006. “Imagining an Interdisciplinary Doctoral Pedagogy.” Teaching in Higher Education 11 (3): 365–379. doi:10.1080/13562510600680954.

- Mawdsley, A., and S. Willis. 2018. “Exploring an Integrated Curriculum in Pharmacy: Educators’ Perspectives.” Currents in Pharmacy Teaching and Learning 10 (3): 373–381. doi:10.1016/j.cptl.2017.11.017.

- McEwen, L., R. Jennings, R. Duck, and H. Roberts. 2009. Students’ Experiences of Interdisciplinary Masters’ Courses. Interdisciplinary Teaching and Learning Group, Subject Centre for Languages, Linguistics and Area Studies, School of Humanities, University of Southampton. https://www.advance-he.ac.uk/knowledge-hub/students-experiences-interdisciplinary-masters-courses.

- Meade, A. W., E. C. Johnson, and P. W. Braddy. 2008. “Power and Sensitivity of Alternative Fit Indices in Tests of Measurement Invariance.” Journal of Applied Psychology 93 (3): 568–592. doi:10.1037/0021-9010.93.3.568.

- Richter, D. M., M. C. Paretti, and L. D. McNair. 2009. “Teaching Interdisciplinary Collaboration: Learning Barriers and Classroom Strategies.” In 2009 ASEE Southeast Section Conference. ASEE. https://vtechworks.lib.vt.edu/bitstream/handle/10919/82444/McNairTeachingCollaboration2009.PDF?sequence=1&isAllowed=y.

- Rienties, B., and Y. Héliot. 2018. “Enhancing (In)formal Learning Ties in Interdisciplinary Management Courses: A Quasi-Experimental Social Network Study.” Studies in Higher Education 43 (3): 437–451. doi:10.1080/03075079.2016.1174986.

- Roberts, N. 2000. “Wicked Problems and Network Approaches to Resolution.” International Public Management Review 1 (1): 1–19.

- Spelt, E. J. H. 2015. Teaching and Learning of Interdisciplinary Thinking in Higher Education in Engineering. Wageningen University. https://library.wur.nl/WebQuery/wurpubs/fulltext/358332.

- Spelt, E. J., H. J. Biemans, H. Tobi, P. A. Luning, and M. Mulder. 2009. “Teaching and Learning in Interdisciplinary Higher Education: A Systematic Review.” Educational Psychology Review 21 (4): 365–378. doi:10.1007/s10648-009-9113-z.

- Vandenberg, R. J., and C. E. Lance. 2000. “A Review and Synthesis of the Measurement Invariance Literature: Suggestions, Practices, and Recommendations for Organizational Research.” Organizational Research Methods 3 (1): 4–70. doi:10.1177/109442810031002.

- Weber, E. P., and A. M. Khademian. 2008. “Wicked Problems, Knowledge Challenges, and Collaborative Capacity Builders in Network Settings.” Public Administration Review 68 (2): 334–349. doi:10.1111/j.1540-6210.2007.00866.x.

- Woods, C. 2007. “Researching and Developing Interdisciplinary Teaching: Towards a Conceptual Framework for Classroom Communication.” Higher Education 54 (6): 853–866. doi:10.1007/s10734-006-9027-3.

- You, H. S., J. A. Marshall, and C. Delgado. 2019. “Toward Interdisciplinary Learning: Development and Validation of an Assessment for Interdisciplinary Understanding of Global Carbon Cycling.” Research in Science Education, 1–25. doi:10.1007/s11165-019-9836-x.