?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

The beetle antennae search (BAS) algorithm is a new meta-heuristic algorithm which has been shown to be very useful in many applications. However, the algorithm itself still has some problems, such as low precision and easy to fall into local optimum when solving complex problems, and excessive dependence on parameter settings. In this paper, an algorithm called beetle antennae search algorithm based on Lévy flights and adaptive strategy (LABAS) is proposed to solve these problems. The algorithm turns the beetle into a population and updates the population with elite individuals' information to improve the convergence rate and stability. At the same time, Lévy flights and scaling factor are introduced to enhance the algorithm's exploration ability. After that, the adaptive step size strategy is used to solve the problem of difficult parameter setting. Finally, the generalized opposition-based learning is applied to the initial population and elite individuals, which makes the algorithm achieve a certain balance between global exploration and local exploitation. The LABAS algorithm is compared with 6 other heuristic algorithms on 10 benchmark functions. And the simulation results show that the LABAS algorithm is superior to the other six algorithms in terms of solution accuracy, convergence rate and robustness.

1. Introduction

Heuristic algorithm is a hotspot in the field of optimization algorithms because of its simple implementation, good scalability and high efficiency. And is widely used in computer vision, medical, power systems and other fields (Zeng et al., Citation2018, Citation2019; Zeng, Zhang, Liu, Liang, & Alsaadi, Citation2017). In recent years, many new heuristic algorithms have been proposed. For example, the firefly algorithm (FA) has been proposed in Yang (Citation2008), which mimics the biological characteristics of fireflies in nature that transmit information or attract food through luminescence. The cuckoo search (CS) algorithm has been proposed in Yang and Deb (Citation2009), which simulates the parasitic brooding behaviour of cuckoos, and innovatively introduces the Lévy flights mechanism, enabling the algorithm to effectively achieve global and local searches. The chicken swarm optimization (CSO) algorithm has been proposed in Meng, Liu, Gao, and Zhang (Citation2014), which is a stochastic optimization method to simulate the hierarchy and foraging behaviour of chickens. The whale optimization algorithm (WOA) has been proposed in Mirjalili and Lewis (Citation2016), which mimics the hunting behaviour of humpback whales, and uses the shrinking encircling mechanism and the spiral updating position to optimize. In addition to the aforementioned algorithms, there are many other heuristic algorithms (see e.g. Abedinia, Amjady, & Ghasemi, Citation2016; Faris, Aljarah, Al-Betar, & Mirjalili, Citation2018; Li, Zhang, & Yin, Citation2014; Passino, Citation2002; Salcedo-Sanz, Pastor-Sanchez, Gallo-Marazuela, & Portilla-Figueras, Citation2013; Tan & Zhu, Citation2010; Zheng, Citation2015).

The algorithms described above have a common problem, that is, the amount of calculation is large. To this end, a new bio-heuristic intelligent algorithm has been proposed in Jiang and Li (Citation2017, Citation2018), which is the beetle antennae search (BAS) algorithm. BAS requires only one individual in the optimization, and the optimization mechanism is simple, so the amount of calculation is small. In Jiang and Li (Citation2018), the effectiveness of BAS for optimization problems is proved by simulation experiments on typical test functions. Currently, BAS has also been successfully applied to some optimization problems. For example, in Song (Citation2018), BAS has been combined with the particle swarm optimization algorithm to solve the problem of wireless sensor network coverage. Furthermore, in Zhu et al. (Citation2018), BAS has been applied to the energy management of microgrid with constrained, multi-objective, nonlinear and mixed integer programming forms. Besides, in Wu, Ma, Xu, Li, and Chen (Citation2019), BAS has been used to optimize the weights between the hidden layer and the output layer of the neural network, which effectively improves the calculation speed and classification accuracy of the classifier. In addition, there have been other successful applications of BAS algorithm (see e.g. Chen, Wang, & Wang, Citation2018; Wang & Liu, Citation2018). All these applications show that the BAS algorithm has potential research value.

Although BAS has been applied successfully to practical engineering problems, the algorithm itself still has some shortcomings. For example, an individual has limited ability in optimizing, and the algorithm does not utilize the information of the current optimal value, which also leads to some unnecessary iterations. In addition, when solving the optimization problem with complex functions and high dimensionality of variables, the BAS algorithm's convergence accuracy becomes low and it is easy to fall into local optimum. Furthermore, BAS relies heavily on the parameter settings during optimization. Therefore, the convergence and accuracy of the BAS algorithm are closely related to the parameters used. These shortcomings need to be overcome. However, the improvement of the algorithm is very challenging, and the related research has been few relatively.

In response to the above discussion, this paper proposes an algorithm called beetle antennae search algorithm based on Lévy flights and adaptive strategy (LABAS), which puts forward four improvements to the above shortcomings. The main contributions of this paper are summarized as follows. Firstly, a novel population update strategy is adopted instead of the traditional individual's optimization. When updating, the elite individuals' information is used and the optimal solution is searched in the vicinity of some of the best individuals that obtained so far. As such, the global optimization ability, stability and exploitation capability of the algorithm are improved. Secondly, Lévy flights are used in the search process to improve the exploration ability of the algorithm and the local optimum issue is hence avoided. Thirdly, the adaptive step size strategy is used to solve the problem of parameter setting of the original algorithm, and the current position of the beetle is updated by using the variables with better fitness value. Fourthly, the generalized opposition-based learning strategy is applied to the initial population and elite individuals in order to increase the diversity of the population and simultaneously the convergence rate of the algorithm is increased. Experimental results on 10 benchmark functions indicate that the proposed LABAS algorithm has better performance than the original BAS algorithm and 5 other representative heuristic algorithms.

The rest of this paper is organized as follows. In Section 2, the original BAS algorithm is explained. The proposed LABAS algorithm is presented in Section 3, and experimental results are shown in Section 4. As a final, conclusions about this paper are presented in Section 5.

2. An overview of BAS algorithm

The BAS algorithm is a new optimization algorithm inspired by the foraging behaviour of the beetle. The beetle has two antennae, which can detect the smell of food. If the odour intensity detected by the left antenna is larger than the right side, the beetle will fly to the left next time, otherwise it will fly to the right. This simple principle gives the beetle the direction information of the food, and the food can be found in that direction.

In the BAS algorithm, the function to be optimized is regarded as food, and the variables of the function can be considered as the position of the beetle. Beetle uses a random search method for unknown areas. In order to simulate this search behaviour, a random direction is generated by the following formula:

(1)

(1) where

represents a function that produces a random number in

, d can be regarded as the dimension of space and variable at the same time,

indicates the norm. Then the spatial coordinates of the two antennae on the left and right sides of the beetle are generated as follows:

(2)

(2) where

and

respectively represent the position coordinates of the left and right antennae of the beetle at time t,

indicates the position of the beetle at the tth iteration, and

is the length of the antennae at the tth iteration. Then, the concentration of odour on the left and right sides is calculated, denoted by

and

, where

is the fitness function. After that, the position of the beetle is updated with the following formula:

(3)

(3) where

represents the step size at the tth iteration,

is a sign function. Therefore, the beetle moves the

length in a direction in which the fitness value is better. Notably, with the number of iterations increases, the beetle's antennae length d and step size δ need to slowly decrease, and they can be expressed as

(4)

(4)

(5)

(5) where the initial values of d and δ and the corresponding attenuation coefficients

and

need to be selected according to the variables' ranges of the specific function.

3. The proposed LABAS algorithm

In this section, a new LABAS algorithm is proposed to improve the performance of the BAS algorithm. There are four main innovations. The first is to group the beetles and use the elite individuals to participate in the population update. The second is to introduce Lévy flights and scaling factor in the search process. The third is to adopt an adaptive strategy in the step size, and update the position of the beetle with variables with a better fitness value after detection. The fourth is to use the generalized opposition-based learning strategy for the initial population and elite individuals to increase the diversity of the population. These strategies make the LABAS algorithm reduce unnecessary iterations compared with BAS algorithm. At the same time, it can improve the stability of the algorithm, and also make the algorithm achieve a certain balance between global exploration and local exploitation. In addition, LABAS does not have complicated parameter setting problem.

3.1. Group composition and population update method involving elite individuals

BAS uses an individual to optimize, so the amount of computation is small. For some simple optimization problems, BAS can also get a satisfactory approximate solution. Once the function to be optimized becomes more complex and the dimension of the variable increases, the optimization accuracy of the BAS becomes lower and the performance deteriorates. Therefore, this paper changes the individual's optimization to the optimization of the population, and refers to and improves the processing method of the Moth-Flame optimization (MFO) algorithm on the population structure (Mirjalili, Citation2015; Savsani & Tawhid, Citation2017; Vikas & Nanda, Citation2016). At the same time, the simple and effective optimization mechanism of BAS is still retained. Such improvements can improve the optimization ability and stability of the algorithm.

The beetle population can be represented by a matrix as follows:

(6)

(6) where n represents the number of beetles, d represents the dimension of the variables to be optimized. The fitness values corresponding to these beetles can be represented by the following vector:

(7)

(7) where n represents the number of the beetles, and the value of each row in

is the fitness value of the individual in the corresponding row in the beetles matrix

.

The BAS algorithm does not utilize the information obtained from the current superior values. To this end, the LABAS algorithm uses the information of these elite individuals in the population update process. Elite individuals are represented by the following matrix:

(8)

(8) where

represents the number of optimal individuals obtained so far and d represents the dimension of the variable of the problem to be optimized. Thus each row of matrix

represents the elite individual's position that has been obtained so far. Similarly, the fitness values corresponding to these elite individuals are represented by a vector as follows:

(9)

(9) It should be noted that

and

are updated simultaneously with the population, and the elite individuals are the best

solutions obtained so far. In this paper, we make

equal to n.

In order to better illustrate the LABAS algorithm proposed in this paper, the following assumptions are made:

each individual in matrix

is sorted in increasing order of fitness values. Because the fitness value is as small as possible for the minimization problem.

is also arranged in the same order as

. So the first row in matrix

is the best individual's position so far, and the first one in

is its corresponding fitness value;

each beetle will update its position according to the corresponding elite individual in the matrix

. Therefore, the first beetle always updates its position relative to the current optimal solution.

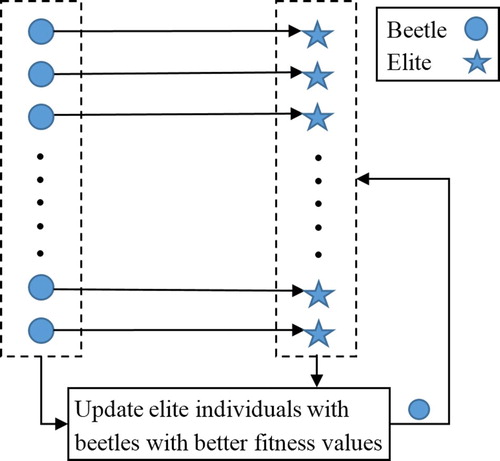

The above assumptions ensure that the beetles can exploit around the optimal solutions. At the same time, because these individuals in matrix are constantly updated, so the beetles will not always search around fixed solutions. This increases the exploration of the search space and therefore avoids falling into local optimum. Figure shows the distribution relationship with the elite individuals when the beetles update their positions, and how the elite individuals are updated.

3.2. Search method based on Lévy flights

When solving the complex optimization problem, the BAS algorithm has low convergence precision, and it is easy to fall into local optimum. To solve this problem, the Lévy flights strategy is introduced into the LABAS algorithm. Lévy flights (Ali, Awad, Reynolds, & Suganthan, Citation2018; Heidari & Pahlavani, Citation2017; Ling, Zhou, & Luo, Citation2017) is a Markov process proposed by the French mathematician Lévy. After that, Benoit Mandelbrot gave a detailed description. This is a random walk mode in which the step size of the walk follows the stable distribution of Lévy and can be expressed by the following formula:

(10)

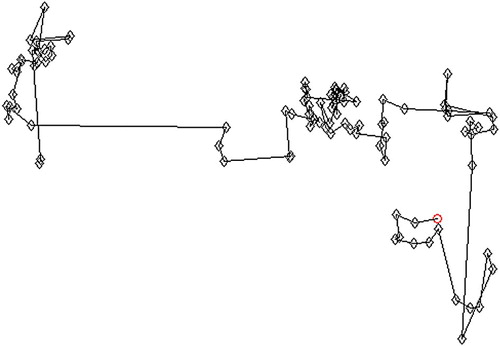

(10) It can be seen from the above equation that the distribution has an infinite mean and an infinite variance. Numerous studies have shown that many living things in nature have the characteristics of Lévy flights (Edwards et al., Citation2007; Marell, Ball, & Hofgaard, Citation2002; Reynolds & Frye, Citation2007; Reynolds, Smith, Reynolds, Carreck, & Osborne, Citation2007; Viswanathan et al., Citation1996), such as bees, fruit flies, albatrosses, reindeers, etc. At the same time, Lévy flights can maximize the efficiency of resource search in uncertain environments, and many optimization algorithms using Lévy flights also show excellent performance. Lévy flights is characterized by frequent short-range local searches, but occasionally a longer jump occurs and the direction of motion changes dramatically. These characteristics can avoid the convergence of the algorithm to the local optimal to a certain extent. Figure is a trajectory simulation of a two-dimensional Lévy flights.

A symmetric Lévy stable distribution is usually generated using the Mantegna algorithm (Jensi & Jiji, Citation2016), where symmetry means that the step size can be positive or negative. The random step size is expressed as follows:

(11)

(11) where

, μ and v obey the following Gaussian distribution:

(12)

(12) where

(13)

(13) where

is the standard gamma function, and

is used in this paper. The symmetric Lévy stable distribution may result in a larger step size after a series of small steps, and the direction will change several times. This allows beetle to speed up local searches to approaching the optimal solution. In addition, a few long-distance jump-type walks are beneficial to expand the search range of beetle, which makes it easier escape from the local optimum. Therefore, in this paper, Lévy flights is used to generate direction and step size, and is combined with the adaptive strategy in Section 3.3. Accordingly, the specific implementation is described in Section 3.3.

3.3. Adaptive step size strategy

BAS relies heavily on the setting of four parameters, namely the initial antennae length and step size and their corresponding attenuation coefficients. The performance of the algorithm is closely related to the parameters used. Therefore, for different optimization problems, it is necessary to adjust the parameters reasonably to get a better solution. This undoubtedly adds inconvenience to the use of the algorithm. In response to this problem, the proposed LABAS adopts the adaptive step size strategy in terms of parameters, and directly updates the current position of the beetle with the variables with better fitness value after detection.

First, the distance between the beetle and the elite individual needs to be calculated. The formula for each dimension is as follows:

(14)

(14) where

represents the jth dimensional coordinate of the ith beetle at time t,

is the jth dimension of the ith optimal value currently obtained at time t, and

represents the distance of the ith beetle from the ith elite individual in the jth dimension at time t.

Then, the distance is multiplied by a random scaling factor:

(15)

(15) where

represents a random number in

. This allows the beetle to move to any position between the corresponding elite individual when updating, which is more conducive to the algorithm's search for the promising optimal solution. At the same time, since

is automatically calculated and adjusted according to the distance between the beetle and the elite individual. Therefore it is an adaptive strategy. In order to allow beetle to balance local exploitation and global exploration while searching, the Lévy flights strategy introduced in Section 3.2 is added to Equation (Equation15

(15)

(15) ). At the same time, a constant 0.1 is multiplied. Hence the step size is expressed as follows:

(16)

(16) where

is symmetrical, that is, both positive and negative numbers can be generated, and its size is a random number obeying the Lévy distribution.

is therefore used to generate the required direction and step size. The step size represented by Equation (Equation16

(16)

(16) ) herein can be understood as the jth dimension of

in the Equation (Equation2

(2)

(2) ), including both the size and the direction.

After the distances of all dimensions are calculated, the antennae on the left and right sides of the beetle are used for detection. Here we change Equation (Equation2(2)

(2) ) to the following expression:

(17)

(17) where

represents the position of the ith beetle at time t,

indicates the step size or antennae length of the ith beetle at time t,

and

respectively indicate the position of the left and right antennae of the ith beetle at time t. Calculate the fitness values

and

at the two antennae, and then update the position of the beetle using the following formula:

(18)

(18) where

denotes the smaller one of

and

, and

is the one with better fitness value in

and

. The above formula indicates that the position of an antenna with a better fitness value after the detection is directly used to update the current position of the beetle, which avoids the extra need to calculate the δ in Equation (Equation5

(5)

(5) ) to update the individual. And the step size here is adaptively adjusted according to the distance between the beetle and the elite individual.

3.4. Generalized opposition-based learning

The opposition-based learning (OBL) has been proposed in Tizhoosh (Citation2005), which is a new technology in the field of computational intelligence. At present, the OBL strategy has been applied to a variety of optimization algorithms (Ahandani & Alavi-Rad, Citation2015; Dong, Kang, & Zhang, Citation2017; Ewees, Elaziz, & Houssein, Citation2018; Park & Lee, Citation2016; Rahnamayan, Tizhoosh, & Salama, Citation2008; Zhou, Hao, & Duval, Citation2017), and has achieved satisfactory optimization results. The main idea of OBL is to simultaneously evaluate the candidate solution and its opposite solution, and choose a better solution from them. However, the OBL uses fixed interval boundaries, which may result in loss of information of the currently converged region. Therefore, the generalized opposition-based learning (GOBL) has been proposed in Wang, Wu, Rahnamayan, Liu, and Ventresca (Citation2011), which replaces fixed boundaries with dynamically updated interval boundaries (El-Abd, Citation2012; Feng, Liu, Sun, Yue, & Liu, Citation2018). This strategy is also more conducive to the convergence of the algorithm. Some definitions of OBL and GOBL are given below.

Definition

opposite number

Let x be a real number defined in , then the opposite number of x is defined as follows:

(19)

(19) Similarly, it can be extended to high dimensional space.

Definition

opposite point

Let be a point in the d-dimensional space, where

, and

. Then the opposite point

of

is defined as follows:

(20)

(20) After the opposite point is defined, the opposition-based learning can be defined as follows.

Definition

opposition-based learning

Let be a point of the d-dimensional space (i.e. a candidate solution). The opposite point

is calculated based on the definition of the opposite point. Let

be a fitness function and be used to evaluate the appropriateness of the solution. If

is better than

, then the point

is replaced by its opposite point

, otherwise

remains unchanged. Therefore, this point and its opposite point are evaluated simultaneously, and only the better one will continue to be used for optimization.

Definition

generalized opposition-based learning

Let be the ith candidate solution at time t, where d is the dimension of the variables and n is the number of individuals in the population. Then the jth dimension of its generalized opposite point

can be defined as follows:

(21)

(21)

(22)

(22)

(23)

(23) where

and

represent the minimum and maximum values of the jth dimension of all individuals in the population at time t, and k is a random number in

.

From the above definitions, we can find that the interval boundaries in GOBL are dynamically updated, and the scope of the search space is small. Note that by using the GOBL, the diversity of the population could be increased and the convergence of the algorithm may be speeded up. That's why we introduce the GOBL into the LABAS algorithm. It should be noted that the LABAS algorithm proposed in this paper uses GOBL in two places. First, the GOBL is performed on randomly generated candidate solutions at the time of population initialization. This makes the initial individuals have better fitness, so it provides a good start for the algorithm. Second, the LABAS algorithm performs GOBL for elite individuals. This makes the algorithm have more opportunities to explore better solutions, and also increases the diversity of the population. Thereby the convergence rate of the algorithm is improved.

3.5. Algorithm flow

In order to make the interpretation of the LABAS algorithm proposed in this paper more clear, the basic steps are represented by the pseudo code Algorithm 1.

First, the algorithm randomly initializes the beetle population and performs GOBL (Line 1). At the same time, the number of iterations is initialized to 1 (Line 2). Then calculate the fitness values of the initial population (Lines 3–5). During each iteration, it is necessary to update the elite individuals (Lines 7–11) and perform GOBL on the elite individuals (Line 12). Then, sort the elite individuals in increasing order of fitness values (Line 13). When each beetle updates its position, first calculate its distance from the corresponding elite individual (Line 15). The step size is then generated based on the Lévy flights and scaling factor (Lines 16–17), and the positions of the two antennae on the left and right sides of the beetle are calculated using this step size (Line 18). Then update the position of the beetle according to the antennae (Line 19). The number of iterations needs to be updated after all beetles finish updating their positions (Line 21). Finally, the relevant information of the optimal solution is returned (Line 23).

4. Experimental design and results analysis

4.1. Experimental design

4.1.1. Experimental operation platform

The simulation environment of this paper is: CPU Intel Core i7-4710MQ, 2.50 GHz, 8 GB RAM, Windows 7 OS, Matlab R2013a.

4.1.2. Benchmark functions

In this paper, 10 benchmark functions on CEC2005 are selected for simulation experiments. The expressions, dimensions, search ranges, and theoretical optimal values of the benchmark functions are shown in Table . Among them, –

are continuous unimodal functions, which are used to test the optimization precision, convergence rate and local exploitation ability of the algorithm.

–

are continuous multimodal functions, and their local extreme points increase exponentially as the function's dimension increases. Therefore, they are often used to test the convergence rate, local optimal avoidance ability and global optimization ability of the algorithm.

Table 1. Benchmark functions.

4.1.3. Experimental parameter setting

In order to compare and analyse the performance of the proposed LABAS algorithm, this paper selects six other representative algorithms for comparative experiments, including: cuckoo search (CS) algorithm, particle swarm optimization (PSO) algorithm, differential evolution (DE) algorithm, artificial bee colony (ABC) algorithm, moth-flame optimization (MFO) algorithm and beetle antennae search (BAS) algorithm. The above algorithms can be divided into four categories. The CS algorithm also uses Lévy flights, so it is used to compare the performance of the algorithm that also uses this strategy. PSO, DE, and ABC are representative algorithms in heuristic optimization algorithms, and they have good optimization capabilities. The MFO algorithm is a relatively new optimization algorithm, which has been proposed in 2015. BAS is the algorithm to be improved in this paper, which is used to compare whether the improved LABAS is superior to the original algorithm. It should be noted that since the LABAS algorithm adopts an adaptive step size strategy, there is no need to tune its parameters.

The dimensions of the variables for all benchmark functions in this experiment are set to 30. And in order to compare the results more fairly, the population size and number of iterations of each algorithm are set the same. The specific parameter settings of each algorithm are shown in Table .

Table 2. Algorithm parameter table.

4.2. Experimental results and analysis

In order to prevent the error caused by the contingency of the algorithm, the 7 algorithms run independently 30 times under each benchmark function, and record the minimum (Min), maximum (Max), mean (Mean) and standard deviation (Std). These performance indicators are used to evaluate the optimization performance of the algorithm. The simulation results are shown in Tables and . It should be noted that the bold values indicate the optimal data of these algorithms under the corresponding evaluation indexes. The Mean reflects the average convergence accuracy that the algorithm can achieve for a given number of iterations. The Std reflects the stability and robustness of the algorithm.

Table 3. Comparison of optimization results of seven algorithms for unimodal benchmark functions.

Table 4. Comparison of optimization results of seven algorithms for multimodal benchmark functions.

First analyse the data under the unimodal functions. Taking the function in Table as an example, the precision of the optimal value of the LABAS algorithm reaches

. Moreover, the accuracy in the worst case is better than the best accuracy that other algorithms can achieve. The average solution accuracy is three orders of magnitude higher than the suboptimal DE algorithm, and is five orders of magnitude higher than the original BAS algorithm. At the same time, the standard deviation of the algorithm is very small. Therefore, LABAS algorithm has better robustness and stability. It can be seen from the results in Table that the performance of the LABAS algorithm under most functions is optimal when optimizing the unimodal benchmark functions. Among them, the accuracy of the optimal solution solved by LABAS on

is obviously higher than other algorithms, and the average solution accuracy is also the best, which reflects the excellent optimization ability of the algorithm. When optimizing

, the LABAS algorithm also achieved better average solution accuracy and standard deviation. For

, the LABAS algorithm fails to achieve the best accuracy, but its average solution accuracy is second only to the ABC algorithm that obtains the optimal solution. Therefore, the LABAS algorithm is still very competitive. For

, the optimal solution accuracy of the MFO algorithm is higher than that of the LABAS algorithm, but its other indicators are worse than the LABAS algorithm. When comparing LABAS with BAS, it can be found that the accuracy and stability of LABAS under each function are better than that of BAS, which shows the effectiveness of the improved algorithm. It can be seen from the above comparison that the solution accuracy of the LABAS algorithm is superior to other algorithms in most cases, which also shows that the algorithm has better local exploitation ability. In addition, the LABAS algorithm also has good robustness.

From the comparison results in Table , the LABAS algorithm also has good performance for the multimodal benchmark functions, which have an exponential number of local solutions. Among them, LABAS obtains the global optimal solution when optimizing function , and the average solution accuracy is also the best. For function

, the average solution accuracy of LABAS is not optimal, but it is also the fourth best. In addition, for the functions

, the values of the LABAS algorithm under each index are optimal. This also shows that the LABAS algorithm has superior optimization ability. When comparing LABAS with BAS, it can be found that LABAS is also significantly outperforms BAS for the performance of multimodal functions' optimization. The above comparison shows that the LABAS algorithm also has well solution accuracy for multimodal functions with many local minima. This indicates that the algorithm has a good ability to explore the unknown region, and its local optimal avoidance ability is strong.

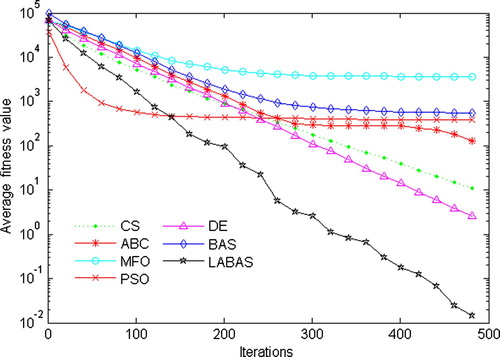

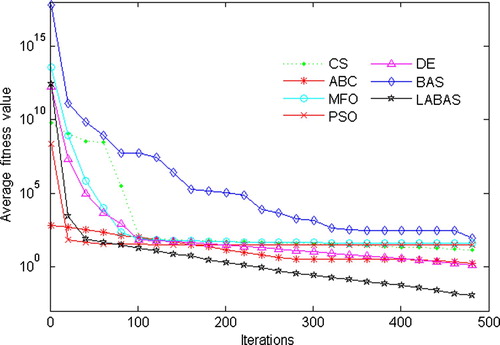

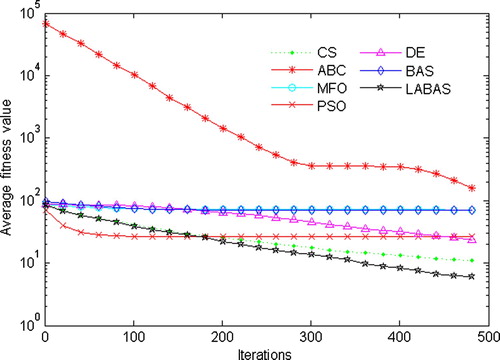

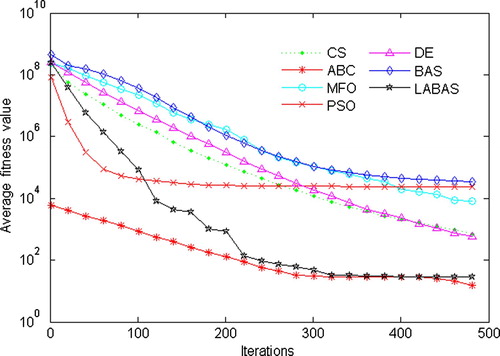

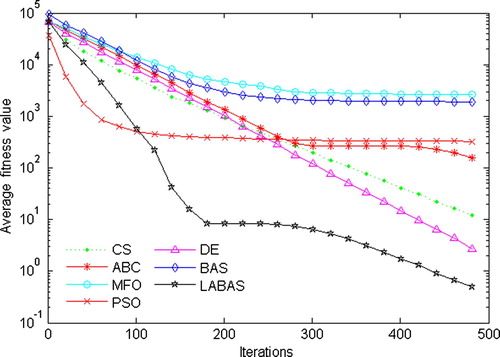

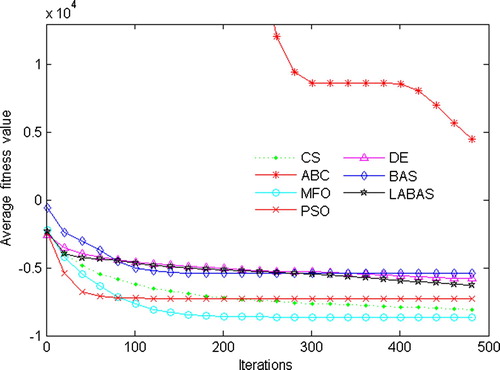

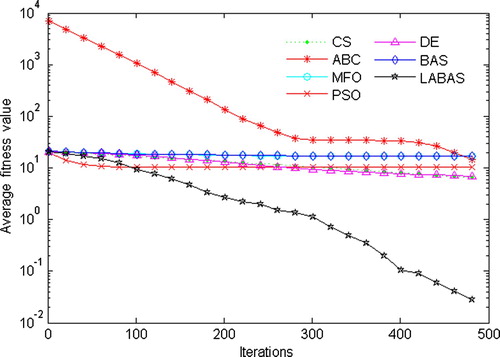

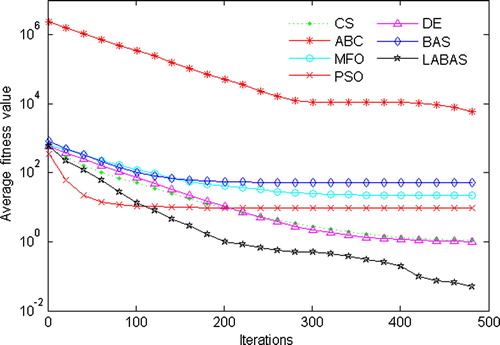

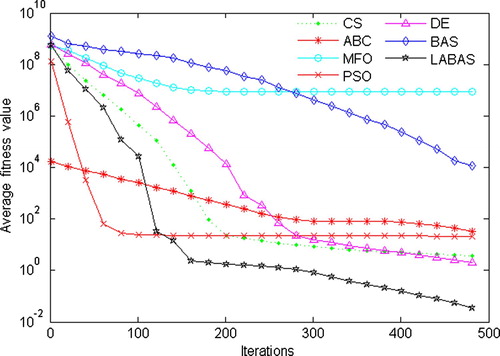

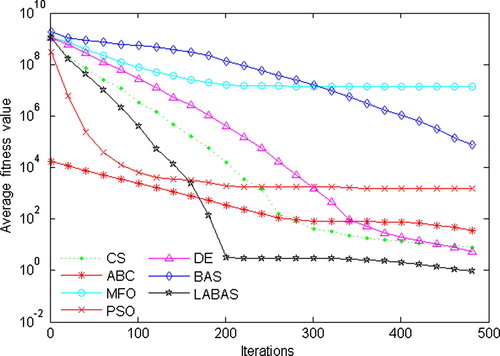

In order to reflect and compare the optimization precision and convergence rate of each algorithm more intuitively, the average convergence curves of the seven algorithms under each benchmark function are plotted, as shown in Figures –. It should be noted that the average fitness values of the 7 algorithms are plotted against the base 10 logarithm, except for . It can be seen from Figures to that the convergence rate of the LABAS algorithm is the fastest under each benchmark function, except for

. In addition, observing the change of the convergence curve, other algorithms are easy to fall into the local optimum. In this case, the algorithm can not find the theoretical optimal value by performing more iterations. However, the LABAS algorithm in this paper adopts Lévy flights, step size adaptive strategy and GOBL, so it can effectively avoid convergence to the local optimal value.

5. Conclusion

In view of the shortcomings of BAS algorithm when solving complex optimization problems, such as low convergence precision, easy to fall into local optimum, and excessive dependence on parameter settings. This paper proposes an algorithm called beetle antennae search algorithm based on Lévy flights and adaptive strategy (LABAS). Firstly, the population used by the algorithm and the corresponding strategy of updating the population using elite individual information enhance its optimization ability, stability and exploitation ability. Secondly, the Lévy flights and the scaling factor improve the ability of the algorithm to explore the region of the global optimal solution, avoiding falling into local optimum and converge to the global optimal value more quickly. Thirdly, the adaptive step size strategy avoids the difficulty of parameter setting and can be automatically adjusted according to the type and size of the problem. Fourthly, GOBL enhances the diversity of the population and also makes the algorithm has better ability to find the optimal solution. The above improvements balance the global exploration and local exploitation of the algorithm to some extent. Simulation experiments and comparative analysis show that the LABAS algorithm is superior to the BAS algorithm and other comparison algorithms in terms of accuracy, convergence rate, stability, robustness and local optimal value avoidance. In our future research work, the LABAS algorithm will be applied in the optimization problems in the textiles, carbon fibre production and other fields.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Abedinia, O., Amjady, N., & Ghasemi, A. (2016). A new metaheuristic algorithm based on shark smell optimization. Complexity, 21(5), 97–116. doi: 10.1002/cplx.21634

- Ahandani, M. A., & Alavi-Rad, H. (2015). Opposition-based learning in shuffled frog leaping: An application for parameter identification. Information Sciences, 291, 19–42. doi: 10.1016/j.ins.2014.08.031

- Ali, M. Z., Awad, N. H., Reynolds, R. G., & Suganthan, P. N. (2018). A balanced fuzzy cultural algorithm with a modified Levy flight search for real parameter optimization. Information Sciences, 447, 12–35. doi: 10.1016/j.ins.2018.03.008

- Chen, J., Wang, C., & Wang, S. (2018). Research on evaluation method of spatial straightness for variable step beetle antennae search algorithm. Tool Engineering, 8, 136–138.

- Dong, W., Kang, L., & Zhang, W. (2017). Opposition-based particle swarm optimization with adaptive mutation strategy. Soft Computing, 21(17), 5081–5090. doi: 10.1007/s00500-016-2102-5

- Edwards, A. M., Phillips, R. A., Watkins, N. W., Freeman, M. P., Murphy, E. J., Afanasyev, V., … Viswanathan, G. M. (2007). Revisiting Levy flight search patterns of wandering albatrosses, bumblebees and deer. Nature, 449(7165), 1044–1048. doi: 10.1038/nature06199

- El-Abd, M. (2012). Generalized opposition-based artificial bee colony algorithm. In 2012 IEEE congress on evolutionary computation, Brisbane, Australia (pp. 1–4).

- Ewees, A. A., Elaziz, M. A., & Houssein, E. H. (2018). Improved grasshopper optimization algorithm using opposition-based learning. Expert Systems with Applications, 112, 156–172. doi: 10.1016/j.eswa.2018.06.023

- Faris, H., Aljarah, I., Al-Betar, M. A., & Mirjalili, S. (2018). Grey wolf optimizer: A review of recent variants and applications. Neural Computing and Applications, 30(2), 413–435. doi: 10.1007/s00521-017-3272-5

- Feng, X., Liu, A., Sun, W., Yue, X., & Liu, B. (2018). A dynamic generalized opposition-based learning fruit fly algorithm for function optimization. In 2018 IEEE congress on evolutionary computation (CEC), Rio de Janeiro, Brazil (pp. 1–7).

- Heidari, A. A., & Pahlavani, P. (2017). An efficient modified grey wolf optimizer with Lévy flight for optimization tasks. Applied Soft Computing, 60, 115–134. doi: 10.1016/j.asoc.2017.06.044

- Jensi, R., & Jiji, G. W. (2016). An enhanced particle swarm optimization with levy flight for global optimization. Applied Soft Computing, 43, 248–261. doi: 10.1016/j.asoc.2016.02.018

- Jiang, X., & Li, S. (2017). Beetle antennae search without parameter tuning (BAS-WPT) for multi-objective optimization. arXiv:1711.02395v1 [cs.NE].

- Jiang, X., & Li, S. (2018). BAS: Beetle antennae search algorithm for optimization problems. International Journal of Robotics and Control, 1(1), 1–5. doi: 10.5430/ijrc.v1n1p1

- Li, X., Zhang, J., & Yin, M. (2014). Animal migration optimization: An optimization algorithm inspired by animal migration behavior. Neural Computing and Applications, 24(7-8), 1867–1877. doi: 10.1007/s00521-013-1433-8

- Ling, Y., Zhou, Y., & Luo, Q. (2017). Lévy flight trajectory-based whale optimization algorithm for global optimization. IEEE Access, 5, 6168–6186. doi: 10.1109/ACCESS.2017.2695498

- Marell, A., Ball, J. P., & Hofgaard, A. (2002). Foraging and movement paths of female reindeer: Insights from fractal analysis, correlated random walks, and Levy flights. Canadian Journal of Zoology, 80(5), 854–865. doi: 10.1139/z02-061

- Meng, X., Liu, Y., Gao, X., & Zhang, H. (2014). A new bio-inspired algorithm: Chicken swarm optimization. In Fifth international conference on swarm intelligence, Hefei, China (pp. 86–94).

- Mirjalili, S. (2015). Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowledge-Based Systems, 89, 228–249. doi: 10.1016/j.knosys.2015.07.006

- Mirjalili, S., & Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95, 51–67. doi: 10.1016/j.advengsoft.2016.01.008

- Park, S., & Lee, J. (2016). Stochastic opposition-based learning using a beta distribution in differential evolution. IEEE Transactions on Cybernetics, 46(10), 2184–2194. doi: 10.1109/TCYB.2015.2469722

- Passino, K. M. (2002). Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Systems Magazine, 22(3), 52–67. doi: 10.1109/MCS.2002.1004010

- Rahnamayan, S., Tizhoosh, H. R., & Salama, M. M. A. (2008). Opposition-based differential evolution. IEEE Transactions on Evolutionary Computation, 12(1), 64–79. doi: 10.1109/TEVC.2007.894200

- Reynolds, A. M., & Frye, M. A. (2007). Free-flight odor tracking in drosophila is consistent with an optimal intermittent scale-free search. PLoS One, 2(4), 1–9. doi: 10.1371/journal.pone.0000354

- Reynolds, A. M., Smith, A. D., Reynolds, D. R., Carreck, N. L., & Osborne, J. L. (2007). Honeybees perform optimal scale-free searching flights when attempting to locate a food source. Journal of Experimental Biology, 210(21), 3763–3770. doi: 10.1242/jeb.009563

- Salcedo-Sanz, S., Pastor-Sanchez, A., Gallo-Marazuela, D., & Portilla-Figueras, A. (2013). A novel coral reefs optimization algorithm for multi-objective problems. In 14th international conference on intelligent data engineering and automated learning (IDEAL), Hefei, China (pp. 326–333).

- Savsani, V., & Tawhid, M. A. (2017). Non-dominated sorting moth flame optimization (NS-MFO) for multi-objective problems. Engineering Applications of Artificial Intelligence, 63, 20–32. doi: 10.1016/j.engappai.2017.04.018

- Song, D. (2018). Application of particle swarm optimization based on beetle antennae search strategy in wireless sensor network coverage. In International conference on network, communication, computer engineering (NCCE 2018), Chongqing, China (pp. 1051–1054).

- Tan, Y., & Zhu, Y. (2010). Fireworks algorithm for optimization. In 1st international conference on swarm intelligence, Beijing, China (pp. 355–364).

- Tizhoosh, H. R. (2005). Opposition-based learning: A new scheme for machine intelligence. In International conference on computational intelligence for modelling, control and automation/international conference on intelligent agents web technologies and international commerce, Vienna, Austria (pp. 695–701).

- Vikas, & Nanda, S. J. (2016). Multi-objective moth flame optimization. In 2016 international conference on advances in computing, communications and informatics (ICACCI), Jaipur (pp. 2470–2476).

- Viswanathan, G. M., Afanasyev, V., Buldyrev, S. V., Murphy, E. J., Prince, P. A., & Stanley, H. E. (1996). Levy flight search patterns of wandering albatrosses. Nature, 381(6581), 413–415. doi: 10.1038/381413a0

- Wang, T., & Liu, Q. (2018). The assessment of storm surge disaster loss based on BAS-BP model. Marine Environmental Science, 37(3), 457–463.

- Wang, H., Wu, Z., Rahnamayan, S., Liu, Y., & Ventresca, M. (2011). Enhancing particle swarm optimization using generalized opposition-based learning. Information Sciences, 181(20), 4699–4714. doi: 10.1016/j.ins.2011.03.016

- Wu, Q., Ma, Z., Xu, G., Li, S., & Chen, D. (2019). A novel neural network classifier using beetle antennae search algorithm for pattern classification. IEEE Access, 7, 64686–64696. doi: 10.1109/ACCESS.2019.2917526

- Yang, X. (2008). Nature-inspired metaheuristic algorithms. Frome: Luniver Press.

- Yang, X., & Deb, S. (2009). Cuckoo search via Lévy flights. In World congress on nature and biologically inspired computing, Coimbatore, India (pp. 210–214).

- Zeng, N., Qiu, H., Wang, Z., Liu, W., Zhang, H., & Li, Y. (2018). A new switching-delayed-PSO-based optimized SVM algorithm for diagnosis of Alzheimer's disease. Neurocomputing, 320, 195–202. doi: 10.1016/j.neucom.2018.09.001

- Zeng, N., Wang, Z., Zhang, H., Kim, K., Li, Y., & Liu, X. (2019). An improved particle filter with a novel hybrid proposal distribution for quantitative analysis of gold immunochromatographic strips. IEEE Transactions on Nanotechnology, 18, 819–829. doi: 10.1109/TNANO.2019.2932271

- Zeng, N., Zhang, H., Liu, W., Liang, J., & Alsaadi, F. E. (2017). A switching delayed PSO optimized extreme learning machine for short-term load forecasting. Neurocomputing, 240, 175–182. doi: 10.1016/j.neucom.2017.01.090

- Zheng, Y. (2015). Water wave optimization: A new nature-inspired metaheuristic. Computers and Operations Research, 55, 1–11. doi: 10.1016/j.cor.2014.10.008

- Zhou, Y., Hao, J., & Duval, B. (2017). Opposition-based memetic search for the maximum diversity problem. IEEE Transactions on Evolutionary Computation, 21(5), 731–745. doi: 10.1109/TEVC.2017.2674800

- Zhu, Z., Zhang, Z., Man, W., Tong, X., Qiu, J., & Li, F. (2018). A new beetle antennae search algorithm for multi-objective energy management in microgrid. In 13th IEEE conference on industrial electronics and applications (ICIEA), Wuhan, China (pp. 1599–1603).